Enhancing Breast Cancer Detection through Advanced AI-Driven Ultrasound Technology: A Comprehensive Evaluation of Vis-BUS

Abstract

1. Introduction

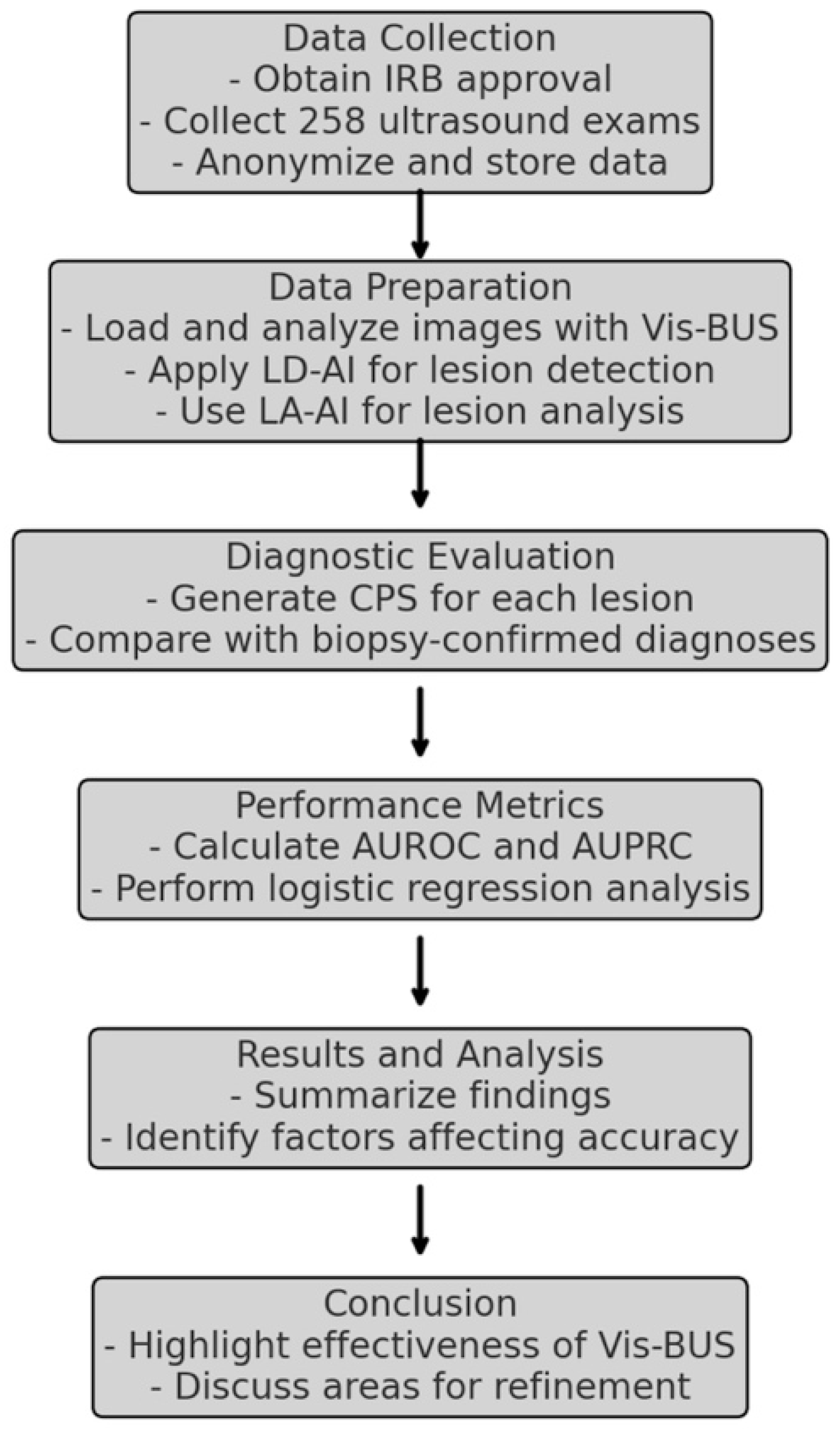

2. Materials and Methods

2.1. Background and Theoretical Framework

2.1.1. Breast Imaging-Reporting and Data System (BI-RADS)

- BI-RADS 0: Incomplete; additional imaging evaluation needed.

- BI-RADS 1: Negative; no findings of concern.

- BI-RADS 2: Benign findings.

- BI-RADS 3: Probably benign; <2% risk of malignancy, with a recommendation for short-term follow-up.

- BI-RADS 4: Suspicious abnormality; subdivided into 4A, 4B, and 4C, with increasing likelihood of malignancy (2–95%).

- BI-RADS 5: Highly suggestive of malignancy; >95% risk, with biopsy recommended.

- BI-RADS 6: Known biopsy-proven malignancy.
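
The categories above can be held in a small lookup table, for example when stratifying results by BI-RADS category. The following Python sketch is illustrative only: the names `BIRADS` and `describe` are hypothetical and not part of Vis-BUS, and the risk bounds are simply those stated in the list.

```python
# Illustrative lookup of the BI-RADS categories listed above.
# Risk bounds (percent) are copied from the list; None means the list
# states no malignancy risk for that category.
BIRADS = {
    0: ("Incomplete; additional imaging evaluation needed", None),
    1: ("Negative", None),
    2: ("Benign findings", None),
    3: ("Probably benign", (0.0, 2.0)),
    4: ("Suspicious abnormality (4A, 4B, 4C)", (2.0, 95.0)),
    5: ("Highly suggestive of malignancy", (95.0, 100.0)),
    6: ("Known biopsy-proven malignancy", None),
}


def describe(category: int) -> str:
    """Return a one-line description of a BI-RADS category."""
    label, risk = BIRADS[category]
    if risk is None:
        return f"BI-RADS {category}: {label}"
    low, high = risk
    return f"BI-RADS {category}: {label} ({low:g}-{high:g}% risk)"
```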

2.1.2. Artificial Intelligence in Medical Imaging

2.1.3. Diagnostic Performance Metrics

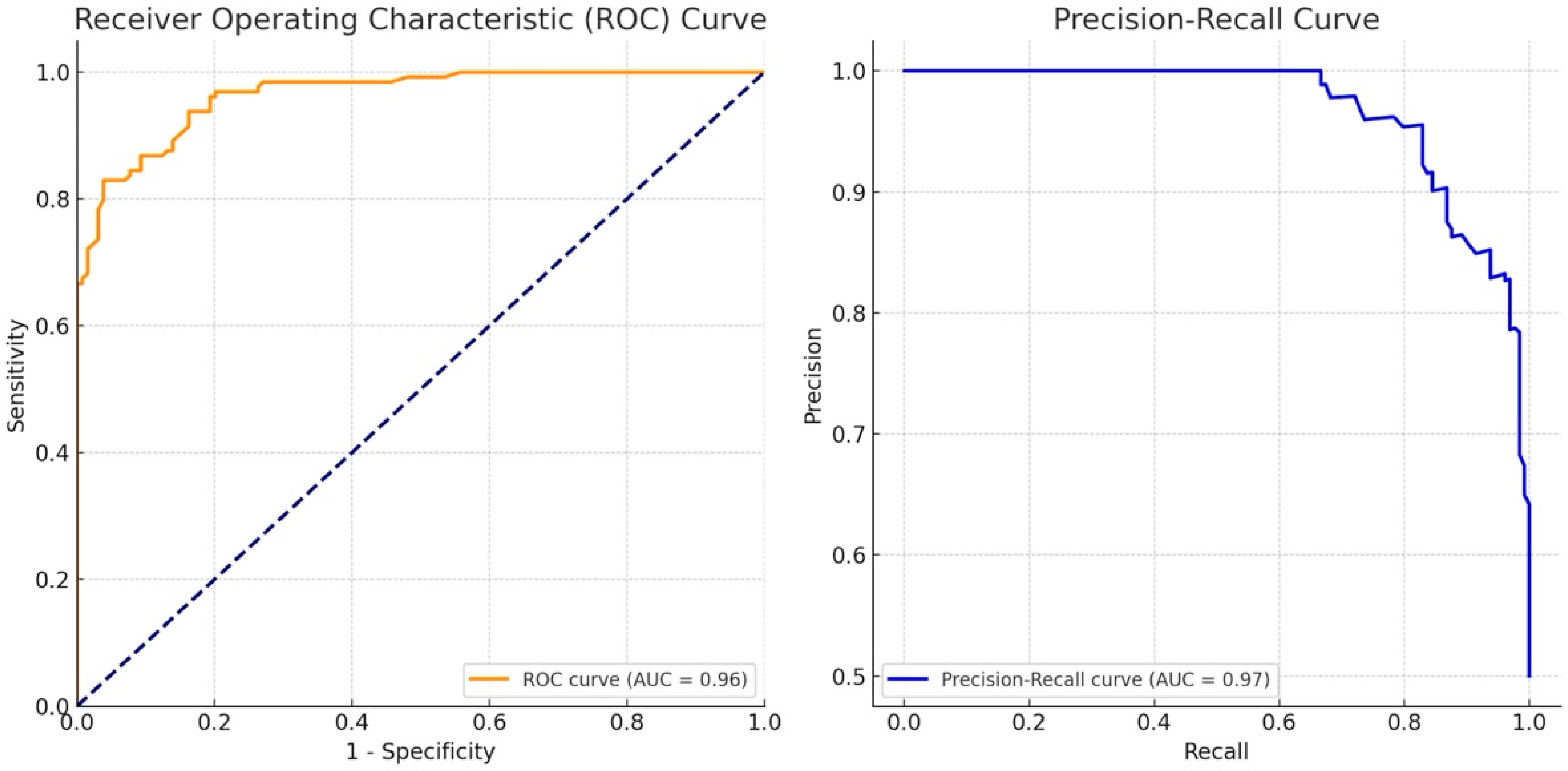

- Area Under the Receiver Operating Characteristic Curve (AUROC): A measure of a classifier's ability to distinguish between classes. The AUROC ranges from 0 to 1, where 0.5 corresponds to random guessing and 1.0 to perfect classification.

- Area Under the Precision–Recall Curve (AUPRC): Focuses on the trade-off between precision (positive predictive value) and recall (sensitivity), especially important in cases where one class is much less frequent than the other.
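
Both curves are built from the same per-threshold quantities: sensitivity (recall), specificity, and precision. The sketch below computes them at a single cut-off. It is a minimal illustration; the function names and the toy labels and scores are invented for this example and are not study data.

```python
def confusion_counts(labels, scores, threshold):
    """Count TP, FP, TN, FN when score >= threshold is called malignant."""
    tp = fp = tn = fn = 0
    for y, s in zip(labels, scores):
        predicted_malignant = s >= threshold
        if predicted_malignant and y == 1:
            tp += 1
        elif predicted_malignant and y == 0:
            fp += 1
        elif y == 0:
            tn += 1
        else:
            fn += 1
    return tp, fp, tn, fn


def metrics_at(labels, scores, threshold):
    """Sensitivity, specificity and precision at one threshold."""
    tp, fp, tn, fn = confusion_counts(labels, scores, threshold)
    return {
        "sensitivity": tp / (tp + fn) if tp + fn else 0.0,  # recall, TPR
        "specificity": tn / (tn + fp) if tn + fp else 0.0,  # 1 - FPR
        "precision": tp / (tp + fp) if tp + fp else 0.0,    # PPV
    }


# Toy data (invented): 1 = malignant, 0 = benign; scores on a -100..100 scale.
labels = [1, 1, 1, 0, 0, 0, 0, 1]
scores = [80, 35, -10, -60, 20, -90, -30, 55]
print(metrics_at(labels, scores, threshold=0))
```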

2.1.4. Cancer Probability Score (CPS)

- Calculation Process:

- Input Data: Ultrasound images of breast lesions are processed by the AI system.

- Feature Extraction: The AI system extracts and analyzes key features of the lesions, including morphological and textural characteristics.

- Score Generation: The AI uses the extracted features to generate the CPS, which reflects the probability of the lesion being malignant. The score is derived through a combination of lesion detection (using Lesion Detection AI) and lesion analysis (using Lesion Analysis AI).

- Output: The CPS is presented on a scale from −100 to 100, aiding clinicians in assessing the malignancy risk of the lesion.

- CPS formula: [equation missing], where the two network symbols denote the LD-AI and LA-AI neural networks, respectively.
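
The calculation steps above can be sketched as a two-stage pipeline. This is a sketch under stated assumptions, not the authors' formula: `detect` and `analyze` are hypothetical stand-ins for LD-AI and LA-AI, and the linear rescaling of a malignancy probability in [0, 1] to the −100 to 100 display scale is assumed here purely for illustration.

```python
def probability_to_cps(p: float) -> float:
    """Map a probability in [0, 1] to [-100, 100] (ASSUMED linear rescaling)."""
    if not 0.0 <= p <= 1.0:
        raise ValueError("probability must lie in [0, 1]")
    return 200.0 * p - 100.0


def cps_to_probability(cps: float) -> float:
    """Inverse of the assumed rescaling."""
    if not -100.0 <= cps <= 100.0:
        raise ValueError("CPS must lie in [-100, 100]")
    return (cps + 100.0) / 200.0


def score_image(image, detect, analyze):
    """Run detection, then analysis, then rescale to the display range.

    detect(image)       -> (box, confidence) or None   (hypothetical LD-AI stand-in)
    analyze(image, box) -> probability in [0, 1]       (hypothetical LA-AI stand-in)
    """
    detection = detect(image)
    if detection is None:
        return None  # no lesion found, so no score is produced
    box, _confidence = detection
    return probability_to_cps(analyze(image, box))
```

Under this assumed mapping a score of 0 corresponds to a probability of 0.5, which is one reason a symmetric display scale is convenient.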

2.1.5. CPS and Its Relationship with AUPRC and AUROC

- AUROC (Area Under the Receiver Operating Characteristic Curve): The AUROC measures the ability of the CPS to discriminate between benign and malignant lesions across various threshold settings. It is calculated by plotting the true positive rate (sensitivity) against the false positive rate (1-specificity) at different thresholds. An AUROC of 1 indicates perfect discrimination, while an AUROC of 0.5 indicates no better performance than random guessing.

- AUPRC (Area Under the Precision–Recall Curve): The AUPRC focuses on the trade-off between precision (positive predictive value) and recall (sensitivity), particularly in datasets where the classes (malignant vs. benign) are imbalanced. The AUPRC is calculated by plotting precision against recall at various threshold levels. A higher AUPRC indicates that the CPS maintains good precision and recall, even when the threshold is varied.
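
The threshold sweep described above can be written out directly. The sketch below is a minimal pure-Python illustration: the AUROC is integrated with the trapezoidal rule, and the AUPRC is estimated by average precision (a step-wise sum over the precision-recall points), which is one common estimator among several. Function names and the toy data are invented for this example.

```python
def curve_points(labels, scores):
    """ROC points (FPR, TPR) and PR points (recall, precision) per threshold."""
    pos = sum(labels)
    neg = len(labels) - pos
    if pos == 0 or neg == 0:
        raise ValueError("both classes must be present")
    roc, pr = [(0.0, 0.0)], []
    # Lower the threshold step by step; score >= threshold is called malignant.
    for t in sorted(set(scores), reverse=True):
        tp = sum(1 for y, s in zip(labels, scores) if s >= t and y == 1)
        fp = sum(1 for y, s in zip(labels, scores) if s >= t and y == 0)
        roc.append((fp / neg, tp / pos))
        pr.append((tp / pos, tp / (tp + fp)))
    return roc, pr


def auroc(labels, scores):
    """Trapezoidal area under the ROC curve."""
    roc, _ = curve_points(labels, scores)
    return sum((x1 - x0) * (y0 + y1) / 2.0
               for (x0, y0), (x1, y1) in zip(roc, roc[1:]))


def average_precision(labels, scores):
    """Step-wise estimate of the area under the precision-recall curve."""
    _, pr = curve_points(labels, scores)
    ap, prev_recall = 0.0, 0.0
    for recall, precision in pr:
        ap += (recall - prev_recall) * precision
        prev_recall = recall
    return ap


# Toy data (invented): 1 = malignant, 0 = benign.
labels = [1, 1, 1, 0, 0, 0, 0, 1]
scores = [80, 35, -10, -60, 20, -90, -30, 55]
print(auroc(labels, scores), average_precision(labels, scores))
```

On this toy set only one malignant case (score −10) ranks below a benign case (score 20), so 15 of the 16 malignant-benign pairs are ordered correctly and the AUROC is 15/16.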

2.2. Study Design

2.3. Development of Vis-BUS

2.3.1. System Architecture and Components

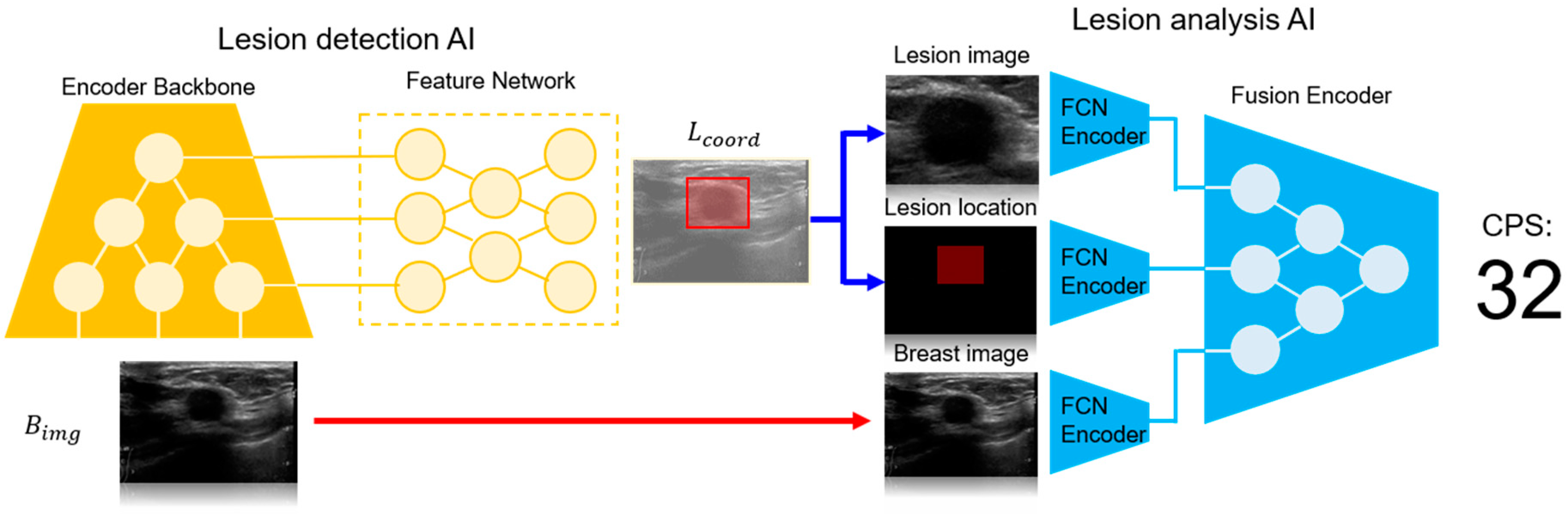

- Lesion Detection AI (LD-AI): This module is responsible for detecting the location of the breast lesions in ultrasound images.

- Lesion Analysis AI (LA-AI): This module further analyzes the detected lesions to assess their malignancy and generates the Cancer Probability Score (CPS).

- LD-AI (Lesion Detection AI): The LD-AI module was developed based on a state-of-the-art object detection framework, leveraging deep convolutional neural networks (CNNs) to identify lesions within B-mode ultrasound images. It is implemented on the object detection framework of [10], in which the neural network outputs the lesion position and size together with a corresponding confidence score. The LD-AI consists of a convolutional encoder backbone and a feature network. The encoder backbone is based on EfficientNet [11] for parameter-efficient feature analysis of the input B-mode image. A bi-directional feature network [10] is employed as the feature network. The lesion location is trained to minimize the mean squared error loss between the ground truth and the LD-AI output, while the confidence score uses focal loss [12] as its learning objective. AdamW [13] with a learning rate of 1 × 10⁻⁴ is used as the network optimizer. The LD-AI component includes the following key elements:

- Convolutional Encoder Backbone: The backbone of LD-AI is based on EfficientNet, a highly efficient CNN architecture known for its balance between performance and computational cost. EfficientNet was chosen for its ability to effectively capture the complex features of ultrasound images with fewer parameters.

- Feature Network: A bi-directional feature network is employed, allowing the system to analyze features from multiple scales, improving the detection of lesions of varying sizes and shapes.

- Training Process: The LD-AI was trained using a large dataset of 19,000 annotated ultrasound images. The ground truth for lesion locations was provided by expert radiologists. The network was optimized with a learning rate set to 1 × 10⁻⁴, focusing on minimizing the mean squared error between the predicted and actual lesion coordinates.
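
The two LD-AI training objectives named above, mean squared error for the lesion location and focal loss for the confidence score, can be written out as follows. This is a minimal sketch: the focal-loss form is the standard binary one from [12], while the values `gamma=2.0` and `alpha=0.25` are the defaults suggested in that paper and are an assumption here, since the settings used for Vis-BUS are not stated.

```python
import math


def mse(predicted, target):
    """Mean squared error between predicted and ground-truth box values."""
    return sum((p - t) ** 2 for p, t in zip(predicted, target)) / len(predicted)


def focal_loss(p, y, gamma=2.0, alpha=0.25, eps=1e-7):
    """Binary focal loss for one confidence p in (0, 1) and label y in {0, 1}.

    FL = -alpha_t * (1 - p_t)^gamma * log(p_t), where p_t is the probability
    assigned to the true class. gamma and alpha are ASSUMED values.
    """
    p = min(max(p, eps), 1.0 - eps)  # avoid log(0)
    p_t = p if y == 1 else 1.0 - p
    alpha_t = alpha if y == 1 else 1.0 - alpha
    return -alpha_t * (1.0 - p_t) ** gamma * math.log(p_t)


# A confident correct prediction contributes far less than an uncertain one.
easy = focal_loss(0.95, 1)
hard = focal_loss(0.55, 1)
print(easy < hard)
```

The `(1 - p_t) ** gamma` factor is what down-weights easy examples, which matters in detection because most candidate locations are easy background.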

- LA-AI (Lesion Analysis AI): Following the detection of a lesion, the LA-AI module processes the identified lesion to determine its malignancy, outputting a CPS. The LA-AI takes the B-mode image, the detected lesion coordinates, and the lesion image as inputs for analyzing lesion malignancy. Features of each input are interpreted using a fully convolutional neural network (FCN encoder). These features are then concatenated channel-wise into a latent vector, which serves as the input to the fusion encoder, producing the CPS as the neural network output. The fusion encoder architecture is based on EfficientNet. The LA-AI is optimized with the AdamW optimizer (learning rate = 1 × 10⁻³), with a learning objective of minimizing the binary cross-entropy between the CPS and the ground truth. The LA-AI component includes:

- Fully Convolutional Network (FCN) Encoder: The FCN processes the B-mode images and the coordinates of the detected lesions. This encoder extracts features that are crucial for malignancy assessment.

- Fusion Encoder: The output from the FCN is concatenated channel-wise into a latent vector, which is then passed through the fusion encoder. This architecture, also based on EfficientNet, integrates the features extracted from the lesion to generate a CPS.

- Optimization: The LA-AI was trained at a learning rate of 1 × 10⁻³, with the objective of minimizing binary cross-entropy between the CPS and the actual malignancy status as determined by biopsy.
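
Two operations in the LA-AI description lend themselves to a short illustration: channel-wise concatenation of the encoder outputs and the binary cross-entropy objective. The sketch below uses plain nested lists in `[channel][row][col]` layout instead of a tensor library; the function names and shapes are hypothetical and chosen only to show the mechanics.

```python
import math


def concat_channels(*feature_maps):
    """Concatenate [channel][row][col] feature maps along the channel axis."""
    height = len(feature_maps[0][0])
    width = len(feature_maps[0][0][0])
    fused = []
    for fmap in feature_maps:
        for channel in fmap:
            # Channel-wise concatenation requires matching spatial sizes.
            if len(channel) != height or any(len(row) != width for row in channel):
                raise ValueError("all feature maps must share height and width")
            fused.append(channel)
    return fused


def binary_cross_entropy(p, y, eps=1e-7):
    """BCE for one predicted probability p and biopsy label y in {0, 1}."""
    p = min(max(p, eps), 1.0 - eps)  # avoid log(0)
    return -(y * math.log(p) + (1 - y) * math.log(1.0 - p))


def zeros(channels, height, width):
    """Helper that builds an all-zero feature map (illustration only)."""
    return [[[0.0] * width for _ in range(height)] for _ in range(channels)]


# Three encoder outputs with 2, 1 and 3 channels on the same 4 x 4 grid.
fused = concat_channels(zeros(2, 4, 4), zeros(1, 4, 4), zeros(3, 4, 4))
print(len(fused), len(fused[0]), len(fused[0][0]))
```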

2.3.2. Training and Data Preparation

- Data Sources: The training dataset consisted of ultrasound images acquired from machines by leading manufacturers such as Philips (Amsterdam, The Netherlands), GE (Boston, MA, USA), and Fujifilm (Tokyo, Japan). This diversity in data sources was crucial to ensure that Vis-BUS could perform reliably across different imaging systems.

- Annotation and Ground Truth: Each ultrasound image was meticulously annotated by expert radiologists at Seoul National University Bundang Hospital (SNUBH), providing the ground truth for lesion location and malignancy. This rigorous annotation process was critical for training the AI models to achieve high accuracy in real-world clinical settings.

- Model Validation and Testing: To ensure the robustness of Vis-BUS, the models were validated on a separate set of ultrasound images not used in training. The performance of the system was evaluated using key metrics such as the Area Under the Receiver Operating Characteristic Curve (AUROC) and the Area Under the Precision–Recall Curve (AUPRC).

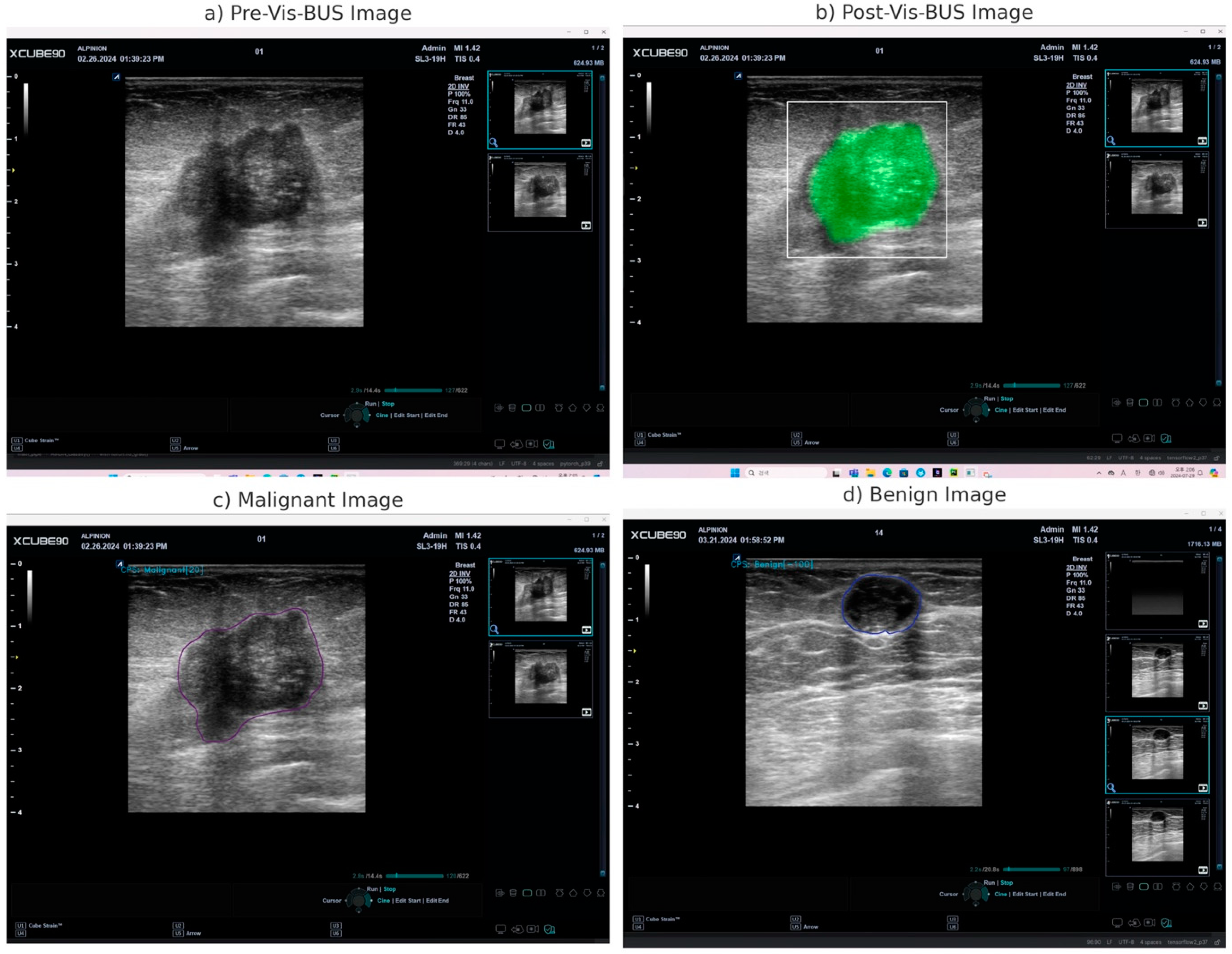

2.3.3. Implementation and Integration

- Real-Time Analysis: The AI processes the video feed in real-time, providing immediate diagnostic insights as the ultrasound examination is being conducted.

- Freeze-Frame Analysis: Clinicians can pause the ultrasound scan and perform detailed analysis on static images using the AI tool, which continues to offer diagnostic information without losing any capabilities.

- User Interface: The tablet interface is designed to be intuitive, allowing clinicians to easily navigate through diagnostic results, adjust settings, and generate detailed reports.

2.3.4. Cancer Probability Score (CPS)

2.3.5. Performance and Optimization

2.4. Explanation of Vis-BUS Operation

2.5. Patients Dataset

2.6. Evaluation Metrics and Statistical Analysis

3. Results

3.1. Vis-BUS Operational Outcomes

3.2. Patient Characteristics

3.3. Cancer Probability Score (CPS) Performance in Diagnosing Breast Masses

3.4. Logistic Regression Analysis of Diagnostic Accuracy

4. Discussion

5. Conclusions

Supplementary Materials

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

- Bray, F.; Ferlay, J.; Soerjomataram, I.; Siegel, R.L.; Torre, L.A.; Jemal, A. Global cancer statistics 2018: GLOBOCAN estimates of incidence and mortality worldwide for 36 cancers in 185 countries. CA Cancer J. Clin. 2018, 68, 394–424.

- Berg, W.A.; Blume, J.D.; Cormack, J.B.; Mendelson, E.B.; Lehrer, D.; Böhm-Vélez, M.; Pisano, E.D.; Jong, R.A.; Evans, W.P.; Morton, M.J. Combined screening with ultrasound and mammography vs mammography alone in women at elevated risk of breast cancer. JAMA 2008, 299, 2151–2163.

- Giger, M.L.; Karssemeijer, N.; Schnabel, J.A. Breast image analysis for risk assessment, detection, diagnosis, and treatment of cancer. Annu. Rev. Biomed. Eng. 2013, 15, 327–357.

- Litjens, G.; Kooi, T.; Bejnordi, B.E.; Setio, A.A.A.; Ciompi, F.; Ghafoorian, M.; Van Der Laak, J.A.; Van Ginneken, B.; Sánchez, C.I. A survey on deep learning in medical image analysis. Med. Image Anal. 2017, 42, 60–88.

- Cheng, J.-Z.; Ni, D.; Chou, Y.-H.; Qin, J.; Tiu, C.-M.; Chang, Y.-C.; Huang, C.-S.; Shen, D.; Chen, C.-M. Computer-aided diagnosis with deep learning architecture: Applications to breast lesions in US images and pulmonary nodules in CT scans. Sci. Rep. 2016, 6, 24454.

- Becker, A.S.; Marcon, M.; Ghafoor, S.; Wurnig, M.C.; Frauenfelder, T.; Boss, A. Deep learning in mammography: Diagnostic accuracy of a multipurpose image analysis software in the detection of breast cancer. Investig. Radiol. 2017, 52, 434–440.

- Villa-Camacho, J.C.; Baikpour, M.; Chou, S.-H.S. Artificial intelligence for breast US. J. Breast Imaging 2023, 5, 11–20.

- Mango, V.L.; Sun, M.; Wynn, R.T.; Ha, R. Should we ignore, follow, or biopsy? Impact of artificial intelligence decision support on breast ultrasound lesion assessment. Am. J. Roentgenol. 2020, 214, 1445–1452.

- Amir, T.; Coffey, K.; Sevilimedu, V.; Fardanesh, R.; Mango, V.L. A role for breast ultrasound artificial intelligence decision support in the evaluation of small invasive lobular carcinomas. Clin. Imaging 2023, 101, 77–85.

- Tan, M.; Pang, R.; Le, Q.V. EfficientDet: Scalable and efficient object detection. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 14–19 June 2020; pp. 10781–10790.

- Tan, M.; Le, Q. EfficientNet: Rethinking model scaling for convolutional neural networks. In Proceedings of the International Conference on Machine Learning, Long Beach, CA, USA, 9–15 June 2019; pp. 6105–6114.

- Lin, T.-Y.; Goyal, P.; Girshick, R.; He, K.; Dollár, P. Focal loss for dense object detection. In Proceedings of the IEEE International Conference on Computer Vision, Venice, Italy, 22–29 October 2017; pp. 2980–2988.

- Loshchilov, I.; Hutter, F. Decoupled weight decay regularization. arXiv 2017, arXiv:1711.05101.

- Uzun Ozsahin, D.; Ikechukwu Emegano, D.; Uzun, B.; Ozsahin, I. The systematic review of artificial intelligence applications in breast cancer diagnosis. Diagnostics 2022, 13, 45.

- Xu, Y.; Wang, Y.; Yuan, J.; Cheng, Q.; Wang, X.; Carson, P.L. Medical breast ultrasound image segmentation by machine learning. Ultrasonics 2019, 91, 1–9.

- Sickles, E.A. ACR BI-RADS® Atlas, Breast Imaging Reporting and Data System; American College of Radiology: Reston, VA, USA, 2013.

- Wadhwa, A.; Sullivan, J.R.; Gonyo, M.B. Missed breast cancer: What can we learn? Curr. Probl. Diagn. Radiol. 2016, 45, 402–419.

- Youk, J.H.; Kim, E.-K.; Kim, M.J.; Lee, J.Y.; Oh, K.K. Missed breast cancers at US-guided core needle biopsy: How to reduce them. Radiographics 2007, 27, 79–94.

- Burnside, E.S.; Davis, J.; Chhatwal, J.; Alagoz, O.; Lindstrom, M.J.; Geller, B.M.; Littenberg, B.; Shaffer, K.A.; Kahn, C.E., Jr.; Page, C.D. Probabilistic computer model developed from clinical data in national mammography database format to classify mammographic findings. Radiology 2009, 251, 663–672.

- Rodríguez-Ruiz, A.; Krupinski, E.; Mordang, J.-J.; Schilling, K.; Heywang-Köbrunner, S.H.; Sechopoulos, I.; Mann, R.M. Detection of breast cancer with mammography: Effect of an artificial intelligence support system. Radiology 2019, 290, 305–314.

- Lehman, C.D.; Wellman, R.D.; Buist, D.S.; Kerlikowske, K.; Tosteson, A.N.; Miglioretti, D.L.; Breast Cancer Surveillance Consortium. Diagnostic accuracy of digital screening mammography with and without computer-aided detection. JAMA Intern. Med. 2015, 175, 1828–1837.

- Brunetti, N.; Calabrese, M.; Martinoli, C.; Tagliafico, A.S. Artificial intelligence in breast ultrasound: From diagnosis to prognosis—A rapid review. Diagnostics 2022, 13, 58.

- O’Connell, A.M.; Bartolotta, T.V.; Orlando, A.; Jung, S.H.; Baek, J.; Parker, K.J. Diagnostic performance of an artificial intelligence system in breast ultrasound. J. Ultrasound Med. 2022, 41, 97–105.

| Experiment/Outcome | Description | Key Findings |
|---|---|---|
| Real-time Diagnostic Insights | Vis-BUS processes live ultrasound images and provides immediate diagnostic feedback. | High-resolution quantitative analysis with real-time feedback, effectively highlighting pathological features. |
| Post-Analysis on Freeze Frames | Detailed examination of static ultrasound images using the AI tool. | Maintains diagnostic precision even when analyzing static images, ensuring thorough evaluation of specific frames. |
| Cancer Probability Score (CPS) | Quantitative assessment of the likelihood of a lesion being malignant or benign, displayed on a scale from −100 to 100. | Accurate differentiation between benign and malignant lesions, aiding in informed clinical decision-making. |
| Diagnostic Performance Across Ultrasound Machines | Evaluation of Vis-BUS performance on ultrasound images obtained from various machines. | Consistent performance across different ultrasound equipment, validating the tool’s versatility and reliability. |
| Lesion Characterization | Analysis of key pathological features such as tissue texture, echo patterns, and microcalcifications. | Precise identification of lesion characteristics, contributing to the accurate classification of breast lesions. |

| Variable | Category | Benign (n = 129) | Malignancy (n = 129) | p-Value |
|---|---|---|---|---|
| Age, mean (SD) | | 44.91 (9.99) | 51.57 (10.9) | <0.001 |
| Lesion size, mean (SD) | | 1.02 (0.64) | 2.41 (1.29) | <0.001 |
| T-stage | T1 | 123 | 70 | <0.001 |
| | T2 | 6 | 48 | |
| | T3 | 0 | 11 | |
| Density | Dense | 116 | 104 | <0.001 |
| | Fatty | 13 | 3 | |
| | Unknown | 0 | 22 | |
| Microcalcification | Yes | 13 | 66 | <0.001 |
| | No | 116 | 63 | |
| Ultrasound shape | Irregular | 66 | 124 | <0.001 |
| | Oval | 58 | 4 | |
| | Round | 5 | 1 | |
| Ultrasound margin | Independent | 85 | 91 | <0.001 |
| | Circumscribed | 41 | 2 | |
| | Microlobulated | 3 | 3 | |
| | Angular | 0 | 8 | |
| Ultrasound echo pattern | Hypoechoic | 96 | 114 | <0.001 |
| | Isoechoic | 25 | 3 | |
| | Heterogeneous | 9 | 9 | |
| | Complex | 0 | 3 | |
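
As a worked check of one row, the microcalcification counts in the table (13 of 129 benign, 66 of 129 malignant) can be run through a Pearson chi-square test on the 2 × 2 table. This is a sketch under an assumption: the statistical test actually used for the table is not stated in this section, so the chi-square test is chosen here only for illustration. The function names are hypothetical.

```python
import math


def chi_square_2x2(a, b, c, d):
    """Pearson chi-square statistic (no continuity correction) for
    the table [[a, b], [c, d]]."""
    n = a + b + c + d
    numerator = n * (a * d - b * c) ** 2
    denominator = (a + b) * (c + d) * (a + c) * (b + d)
    return numerator / denominator


def p_value_df1(chi2):
    """Upper-tail probability of a chi-square variable with 1 degree of
    freedom: P(Z^2 > x) = erfc(sqrt(x / 2))."""
    return math.erfc(math.sqrt(chi2 / 2.0))


# Rows: benign, malignant. Columns: microcalcification present, absent.
chi2 = chi_square_2x2(13, 116, 66, 63)
p = p_value_df1(chi2)
print(round(chi2, 2), p < 0.001)
```

The statistic comes out at roughly 51.2, so the p-value is far below 0.001, in line with the table.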

| Variable | Odds Ratio (95% CI) | p-Value |
|---|---|---|
| Lesion Density | | |
| Fatty | 27.7781 (0.00, 1.00 × 10⁶) | 1.0000 |
| Unknown | 0.3185 (0.123, 0.774) | 0.0162 |
| Lesion Size | | |
| Medium | 0.7891 (0.451, 1.376) | 0.3980 |
| Large | 0.8014 (0.388, 1.658) | 0.5530 |
| Microcalcifications | | |
| Present | 1.360 (0.860, 2.160) | 0.1941 |
| BI-RADS Categories | | |
| C3 | 2.548 (0.755, 8.602) | 0.1318 |
| C4a | 0.7977 (0.289, 2.198) | 0.6622 |
| C4b | 1.086 (0.242, 4.873) | 0.9138 |
| C4c | 1.778 (0.186, 17.024) | 0.6177 |
| C5 | 10.173 (2.526, 40.962) | 0.0011 |
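
The odds ratios, confidence intervals, and p-values in the table are linked through the underlying logistic regression coefficient: OR = exp(β) and, for a Wald interval, CI = exp(β ± z·SE). The sketch below recovers β and SE from the reported C5 row and rebuilds the interval and p-value. It assumes Wald-type intervals and a two-sided Wald test, which this section does not confirm; the function names are hypothetical.

```python
import math

Z95 = 1.959964  # two-sided 95% quantile of the standard normal


def coef_from_or(odds_ratio, ci_low, ci_high):
    """Recover the coefficient and its standard error from OR and 95% CI
    (ASSUMES a Wald interval, symmetric on the log scale)."""
    beta = math.log(odds_ratio)
    se = (math.log(ci_high) - math.log(ci_low)) / (2.0 * Z95)
    return beta, se


def or_with_ci(beta, se):
    """Odds ratio and Wald 95% CI from a coefficient and standard error."""
    return (math.exp(beta),
            math.exp(beta - Z95 * se),
            math.exp(beta + Z95 * se))


def wald_p(beta, se):
    """Two-sided p-value of the Wald z statistic beta / se."""
    return math.erfc(abs(beta / se) / math.sqrt(2.0))


# BI-RADS C5 row of the table: OR 10.173, 95% CI (2.526, 40.962).
beta, se = coef_from_or(10.173, 2.526, 40.962)
print(or_with_ci(beta, se), wald_p(beta, se))
```

For the C5 row the rebuilt interval matches the reported one to rounding, and the Wald p-value comes out near the reported 0.0011, which suggests the table is at least consistent with Wald-type inference.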

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Kwon, H.; Oh, S.H.; Kim, M.-G.; Kim, Y.; Jung, G.; Lee, H.-J.; Kim, S.-Y.; Bae, H.-M. Enhancing Breast Cancer Detection through Advanced AI-Driven Ultrasound Technology: A Comprehensive Evaluation of Vis-BUS. Diagnostics 2024, 14, 1867. https://doi.org/10.3390/diagnostics14171867