Abstract

Respiratory infections, including COVID-19, remain a global health crisis that places substantial stress on healthcare infrastructure and underscores the need for better diagnostic methods to support early treatment. This study introduces a two-stage data analytics framework that combines deep learning models through combinatorial fusion, with the aim of improving the accuracy of early-stage diagnosis of such infections. Using a dataset compiled from publicly available lung X-ray images, the research employs pre-trained deep learning models for disease classification and addresses inherent data imbalance through systematic validation. The core contribution of this work is its application of combinatorial fusion, which integrates selected models to raise diagnostic accuracy. The approach demonstrates the adaptability of deep learning for medical imaging and represents a step forward in the use of artificial intelligence to improve healthcare diagnostics. The findings point toward more accurate diagnosis and, ultimately, more timely and effective treatment of respiratory diseases.

1. Introduction

Respiratory infections, including COVID-19, SARS, and pneumonia, present significant global health challenges. The widespread use of RT-PCR, acknowledged as the gold standard for SARS-CoV-2 diagnosis, faces limitations such as supply shortages and complexity, leading to delays in test results [1]. This highlights the need for complementary diagnostic approaches, especially valuable in later stages of the infection where imaging becomes crucial [2]. Additionally, the challenges in the supply and implementation of RT-PCR testing underscore the urgency for alternative methods [3].

Radiological imaging, particularly chest X-rays, is pivotal in diagnosing COVID-19 pneumonia. The interpretation of these images, however, is complicated due to their similarity to other respiratory conditions, coupled with the high workload and potential for diagnostic errors among radiologists [4]. Furthermore, the need for a standard diagnostic method becomes crucial in mitigating patient congestion and healthcare bottlenecks [5]. Misdiagnoses not only affect patient care but also increase the risk of exposure and add to the overall burden on healthcare systems [6]. The uncertainty in distinguishing COVID-19 from other viral pneumonia types can lead to delayed treatment and increased healthcare strain [7].

- In addressing the diagnostic challenges of respiratory infections, the application of artificial intelligence (AI) and deep learning is gaining prominence. Specifically, convolutional neural networks (CNNs) are employed to enhance the accuracy and efficiency of medical imaging diagnoses [8]. This study aims to leverage AI and deep learning to develop a more effective diagnostic tool for COVID-19 pneumonia, addressing the gaps and challenges in current diagnostic methodologies [9].

- The field of medical imaging has extensively adopted CNNs due to their utility in symptom identification and learning [10]. Furthermore, with the advent of deep CNNs and their successful application in various areas, the use of deep learning techniques with chest X-rays is becoming increasingly popular. This is bolstered by the availability of vast data sets to train deep-learning algorithms [11].

- The deep learning model significantly simplifies the diagnostic process by enabling rapid retraining of CNNs with minimal data [12]. Additionally, the idea of feature transfer within a machine-learning framework has been utilized effectively to distinguish pneumonia from other infections [13].

- Given the ongoing development of vaccines and treatments for COVID-19, deep learning-based techniques that assist radiologists in diagnosing this disease could potentially enable faster and more accurate assessments especially in remote areas [14].

2. Related Works

COVID-19 is an epidemic disease that the World Health Organization (WHO) declared a worldwide pandemic because it spread across the globe and caused many deaths. Deep learning techniques applied to clinical images have proven helpful for detecting this novel virus [15]. In the literature we analyzed, researchers used chest X-ray datasets to identify COVID-19 and other respiratory infections as follows.

A. Ranjan et al. [16] employed a custom CNN approach based on VGG16 for lung region recognition and the categorization of various types of pneumonia. Wang et al. [17] used large hospital-scale X-ray image sets to categorize and localize affected regions. Ronneberger et al. [18] used compact, augmented datasets to train a feature transfer scheme for image segmentation and improve its performance. Rajpurkar et al. [19] described the deep learning model CheXNet, a 121-layer network applied to chest X-ray images to detect 14 pathologies, including pneumonia, drawing on several CNN variants. P. Lakhani et al. [20] employed transfer learning to classify a set of 1427 chest X-ray images, including 224 COVID-19, 700 bacterial pneumonia, and 504 normal X-rays, with accuracy, sensitivity, and specificity of 96.78%, 98.66%, and 96.46%, respectively. Many individual pre-trained deep learning CNNs were analyzed, but the reported outcomes were based on a limited set of images. Afshar et al. [21] reported a capsule network, COVID-CAPS, as an alternative to a conventional neural network, trained on a smaller amount of image data. COVID-CAPS achieved an accuracy of 95.7%, a sensitivity of 90%, and a specificity of 95.8%. Abbas et al. [22] introduced a pre-trained CNN model (DeTraC: Decompose, Transfer, and Compose) that was applied to a small database of 105 COVID-19, 80 normal, and 11 SARS X-ray images to detect COVID-19. They indicated that it helps form more homogeneous classes and reduces memory requirements, and reported accuracy, sensitivity, and specificity of 95.12%, 97.91%, and 91.87%, respectively. Wang et al. [23] produced COVID-Net, a deep learning network for detecting COVID-19 cases from approximately 14k chest X-ray images, and obtained 83.5% accuracy. Ucar et al. [24] fine-tuned a pre-trained SqueezeNet model with Bayesian optimization to analyze COVID-19 images and obtained encouraging results on a small image dataset. To confirm that this approach achieves promising accuracy, it should be evaluated on a larger set of COVID and non-COVID images. Khan et al. [25] applied a feature detection process to 310 normal, 330 bacterial pneumonia, 327 viral pneumonia, and 284 COVID-19 pneumonia images. However, only a few deep learning approaches were examined, and the empirical requirements of that study remain unclear. In summary, a few recent studies have reported on feature transfer approaches for classifying COVID-19 X-ray images from limited datasets with encouraging outcomes; however, these approaches still need to be investigated on larger image sets. Some approaches modified pre-trained deep learning models to improve their accuracy and efficiency, and some studies used capsule networks [26]. We also examined additional approaches and other researchers’ perspectives on the impact of respiratory disease, as shown in Table 1.

Table 1.

Key Findings.

In this study, we organized an extensive CXR database of normal, SARS, COVID-19, and abnormal images from openly accessible data so that other researchers can benefit from this work. Two different datasets were used to train, test, and validate five pre-trained CNN models. The motivation for this investigation is to classify COVID-19, SARS, normal, and abnormal chest X-ray images by combining deep learning with combinatorial fusion analysis, enabling early-stage detection of these diseases, which is of great importance during the pandemic. CXR images are known to be noisy, low-density grayscale pictures, which introduces errors when predicting disease [31]. Consequently, the contrast between CXR images acquired from specific radiology equipment and borderline depictions may be weak [32], and extracting features from such images is challenging. The quality of these CXRs can be improved by applying contrast enhancement procedures and enlarging the image dataset, so that feature extraction can be carried out more effectively [33]. Our study centers on addressing the imbalance in CXR datasets and improving the accuracy of deep learning models. We augmented the data using histogram equalization, an image processing method for contrast enhancement, implemented in Python. This resulted in two distinct datasets: the original and the enhanced (augmented) dataset. We then applied prominent deep learning models to these datasets to extract feature vectors. A driving force behind our research is the gap in existing studies on data imbalance and the scarcity of research that uses feature extraction via deep learning models to increase accuracy, particularly through combinatorial fusion analysis.
Our study therefore contributes in these areas, offering new insights and methodologies for enhancing diagnostic accuracy in medical imaging. Our research contributions can be summarized as follows:

- Classified the diseases from CXR images using five state-of-the-art pre-trained convolutional neural networks.

- Addressed data imbalance with a fivefold cross-validation approach, ensuring balanced data representation and consistent model evaluation.

- Improved the testing accuracy of the deep learning models using combinatorial fusion analysis.

The remainder of this paper is organized as follows. Section 3 describes the datasets and the models used, and Section 4 presents the materials and methods. Section 5 covers the performance and evaluation metrics. Section 6 discusses the training and testing results for the models, along with future scope. Finally, Section 7 concludes this work.

3. Datasets and Model

3.1. Datasets

This study used two different CXR datasets to diagnose COVID-19 and SARS and to separate normal from abnormal cases. Among these databases, the COVID-19 database was developed from publicly available research articles, while the others were generated from publicly available Kaggle and GitHub datasets.

Three primary sources were used to create the COVID-19 dataset. The first is the Novel Corona Virus 2019 Dataset: Joseph Paul Cohen, Paul Morrison, and Lan Dao created a public database on GitHub by collecting 319 radiographic images of COVID-19, Middle East respiratory syndrome (MERS), severe acute respiratory syndrome (SARS), and ARDS from published articles and online resources. The second is the Italian Society of Medical and Interventional Radiology (SIRM). SIRM presents 384 COVID-19-positive radiographic images (CXR and CT) with commuting motion. In addition, we collected 60 COVID-19-positive chest X-ray images from various recently published articles and 30 positive chest X-ray images from Radiopaedia that were not listed in the GitHub repository. Images from the RSNA-Pneumonia-Detection-Challenge database and the Kaggle CXR Images database were used to create the normal and abnormal sub-databases. For the RSNA-Pneumonia-Detection-Challenge, the Radiological Society of North America (RSNA) organized an artificial intelligence (AI) challenge to detect pneumonia using CXR images; this database includes normal chest X-ray images and non-COVID pneumonia images. The third source is Chest X-ray Images (Pneumonia): the Kaggle CXR database is a popular database containing more than 5000 chest X-ray images of normal, viral, and bacterial pneumonia cases collected from different subjects. The original dataset that we prepared from GitHub, Kaggle, and other publicly available resources was then used to generate an augmented dataset containing a larger number of chest X-ray images; both datasets are summarized in Table 2 and Table 3.

Table 2.

Distribution of chest X-ray original dataset over training and testing for all rounds in this study.

Table 3.

Distribution of chest X-ray augmented (correct) dataset over training and testing for all rounds in this study.

3.2. Original Dataset

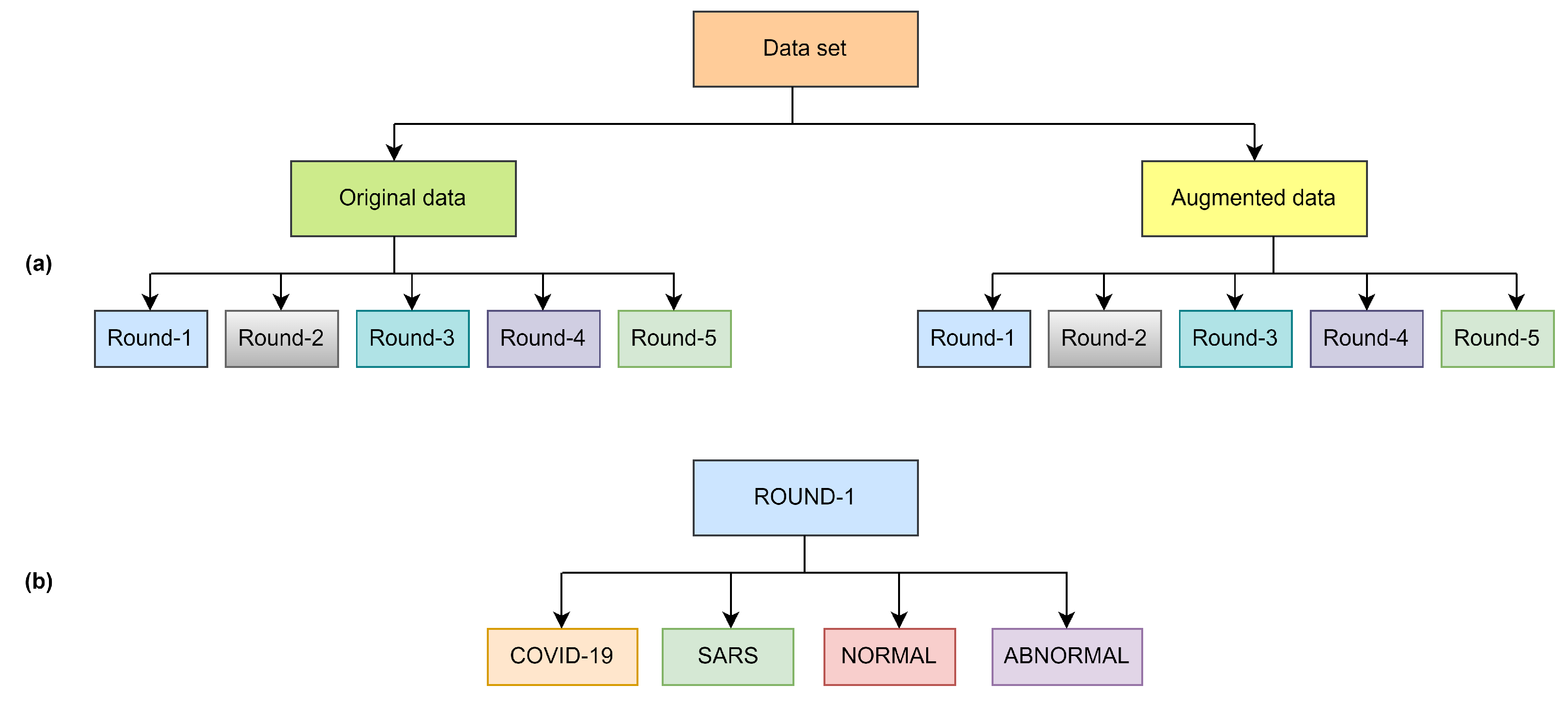

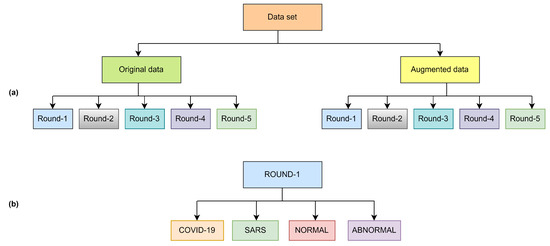

The original dataset was organized into five rounds of sub-datasets with equal numbers of images. Every round included four classes, as shown in Figure 1 and Figure 2: the COVID-19 class contains 113 images for training and 28 for testing, the normal class contains 1073 images for training and 286 for testing, the SARS class contains seven images for training and one for testing, and the abnormal class contains 1103 images for training and 275 for testing, as shown in Table 2. Because COVID-19 is a novel virus, finding a publicly available dataset is difficult; the CXR images from openly available datasets were therefore combined to create the original dataset.

Figure 1.

Overview of dataset structure (a) and class distribution per round (b).

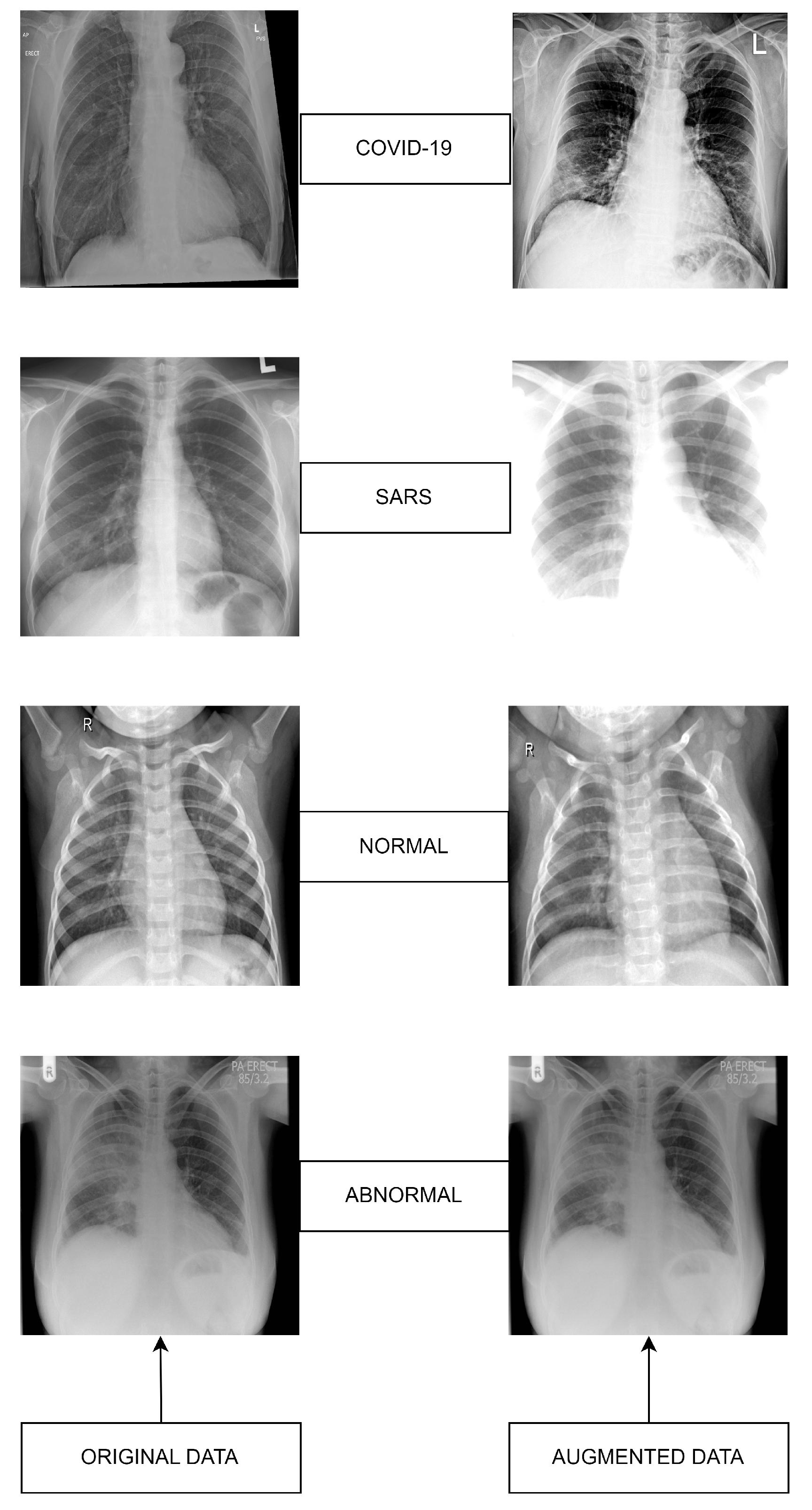

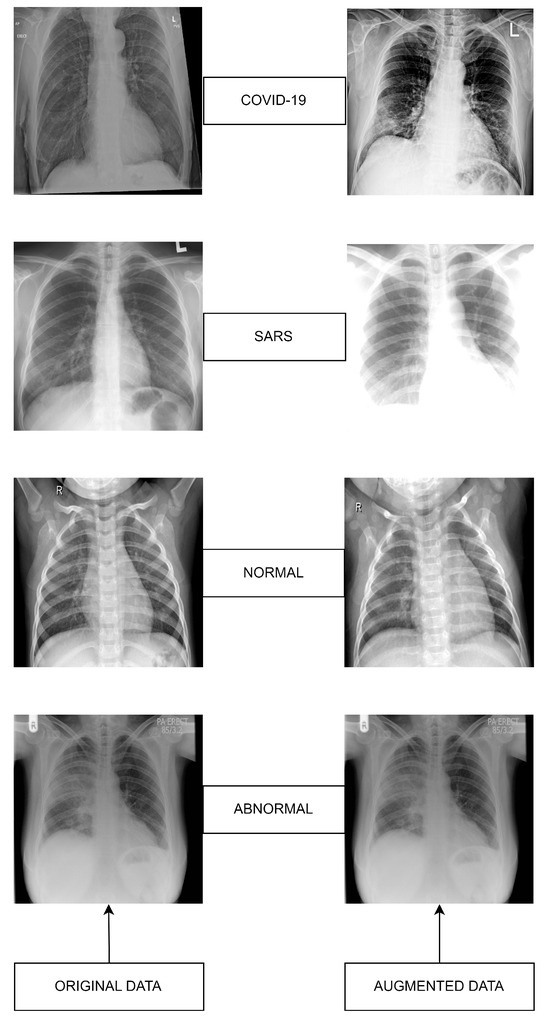

Figure 2.

Datasets sample images: Original (left) versus Augmented (right).

3.3. Augmented Dataset

Given the shortage of publicly available data, and to improve model performance, we enlarged our dataset through augmentation. Data augmentation is a technique for increasing the size and diversity of a dataset by generating modified versions of its samples. It is commonly used in machine learning to mitigate class imbalance, reduce overfitting in deep learning, and improve convergence, which leads to better final results. The number of images in the dataset after augmentation is given in Table 3.

3.4. Deep Learning CNN Model Selection

In this study, we evaluated five deep learning networks (VGG 16, VGG 19, ResNet 50, GoogleNet, and AlexNet) to assess their suitability for our research objectives. Our aim was to compare the performance of shallow and deep networks, and to examine two variants of the VGG architecture in order to isolate the impact of network depth within a similar framework. To ensure a fair and consistent comparison across these models, we applied a uniform set of optimization parameters. Specifically, each model was trained using an image size of 224 × 224 pixels and the Adam optimization algorithm (adaptive moment estimation) for efficient network updates. Training was conducted with a batch size of 10 and the same learning rate for 100 epochs. This methodological consistency was chosen to isolate the impact of architectural differences on performance, thereby giving clearer insight into the capabilities and limitations of each architecture. The structure of these pre-trained CNN models and their performance metrics are detailed in Table 4.

Table 4.

Structure of Models.

3.5. VGG-16

VGG-16 is a convolutional neural network from the Visual Geometry Group, presented by K. Simonyan and A. Zisserman of the University of Oxford in the paper “Very Deep Convolutional Networks for Large-Scale Image Recognition”. The VGG-16 network achieves 92.7% top-5 test accuracy on ImageNet, a dataset of over 14 million images in 1000 classes [34]. In VGG-16, large kernels are replaced with stacks of 3 × 3 filters to extract complex features cheaply. The network is a sequence of five convolutional blocks (13 convolutional layers) followed by three fully connected layers [35].
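The cost advantage of stacking small filters can be made concrete with a short weight count. The sketch below compares a single large convolution with a stack of 3 × 3 convolutions that covers the same receptive field, assuming equal input and output channel counts, stride 1, and no bias terms. The function names and the channel count of 64 are illustrative choices, not values taken from this study.

```python
def conv_weights(kernel_size: int, channels: int) -> int:
    """Weights in one conv layer with `channels` inputs and outputs (no bias)."""
    return kernel_size * kernel_size * channels * channels


def stacked_3x3_weights(num_layers: int, channels: int) -> int:
    """Weights in a stack of `num_layers` 3x3 conv layers."""
    return num_layers * conv_weights(3, channels)


def receptive_field(num_layers: int) -> int:
    """Side length of the receptive field of stacked 3x3 convs with stride 1."""
    return 1 + 2 * num_layers


channels = 64  # illustrative channel count (assumption)
for layers in (2, 3):
    side = receptive_field(layers)
    single = conv_weights(side, channels)
    stacked = stacked_3x3_weights(layers, channels)
    print(f"{side}x{side} field: single conv = {single} weights, "
          f"{layers} stacked 3x3 convs = {stacked} weights")
```

Under these assumptions, the stacked layers reach the same receptive field with fewer weights while adding extra non-linearities between layers.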

3.6. VGG-19

The Visual Geometry Group network VGG-19 is also based on a convolutional neural network architecture. It was entered in the 2014 ImageNet Large Scale Visual Recognition Challenge (ILSVRC2014), where VGG Net was evaluated on millions of images [36]. To improve feature extraction, VGG Net uses smaller 3 × 3 filters, compared with the 11 × 11 filter of AlexNet. VGG-19 is deeper than VGG-16; however, it is larger and therefore more expensive to train [37].

3.7. AlexNet

AlexNet is an 8-layer CNN that was introduced in 2012 as an entry in the ImageNet contest. The contest demonstrated that image features learned by CNN architectures can improve on features obtained with traditional methods. The network uses the Rectified Linear Unit (ReLU) to introduce non-linearity, which improves the network. AlexNet has five convolutional layers followed by three fully connected layers, including the output layer, and contains 62.3 million parameters [38].

3.8. ResNet-50

The Microsoft Research team developed the deep convolutional neural network ResNet, which won the 2015 ImageNet Large Scale Visual Recognition Challenge (ILSVRC) with a 3.57% error rate [39]. In ResNet, each layer comprises several blocks. With the residual layer structure in place, the number of parameters computed is lower than in other deep learning CNN models [40].

3.9. GoogleNet

In 2015, a new CNN model named GoogleNet was introduced, built on the idea that neural networks should go deeper. The model is composed of modules known as inception modules, each of which combines several convolution and max-pooling layers. Although the network, with a total of nine inception blocks, is computationally complex, these improvements enhanced its performance [41].

4. Materials and Methods

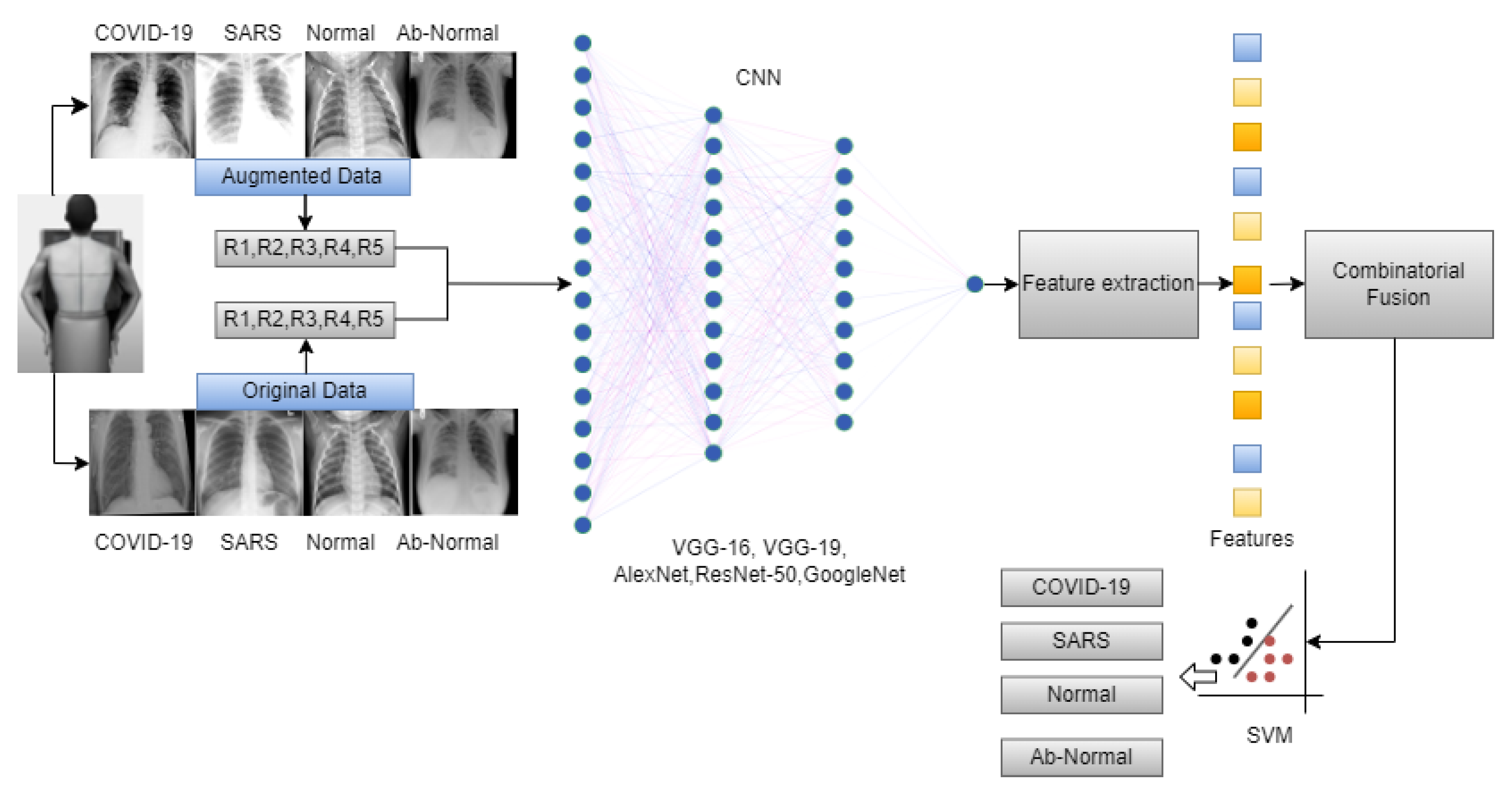

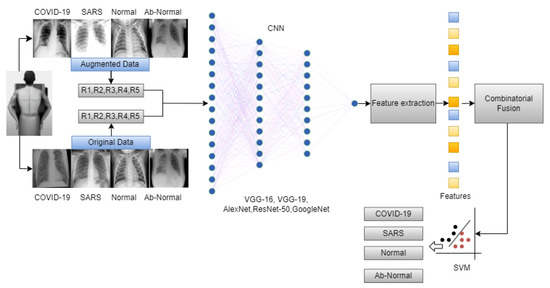

The overall workflow of this study is provided in Figure 3. In this work, we have used five deep-learning models for our experiment due to their interoperability and ease of use. Furthermore, this methodology section discusses the data preprocessing process for the deep learning models and the overall workflow of the system architecture and combinatorial fusion with support vector machine. The dataset structure is given in Figure 1 while the dataset images are shown in Figure 2.

Figure 3.

Overall workflow of the proposed work.

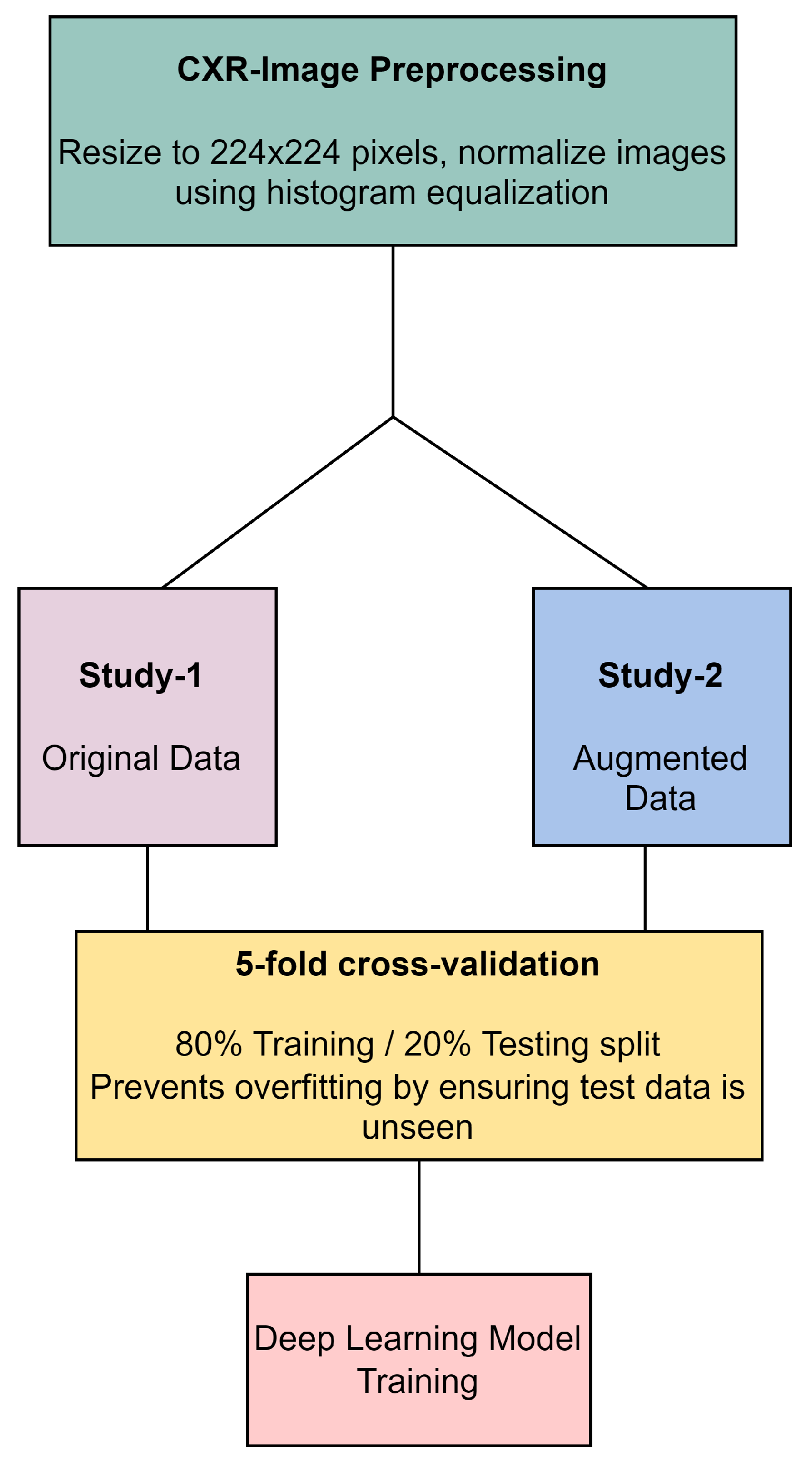

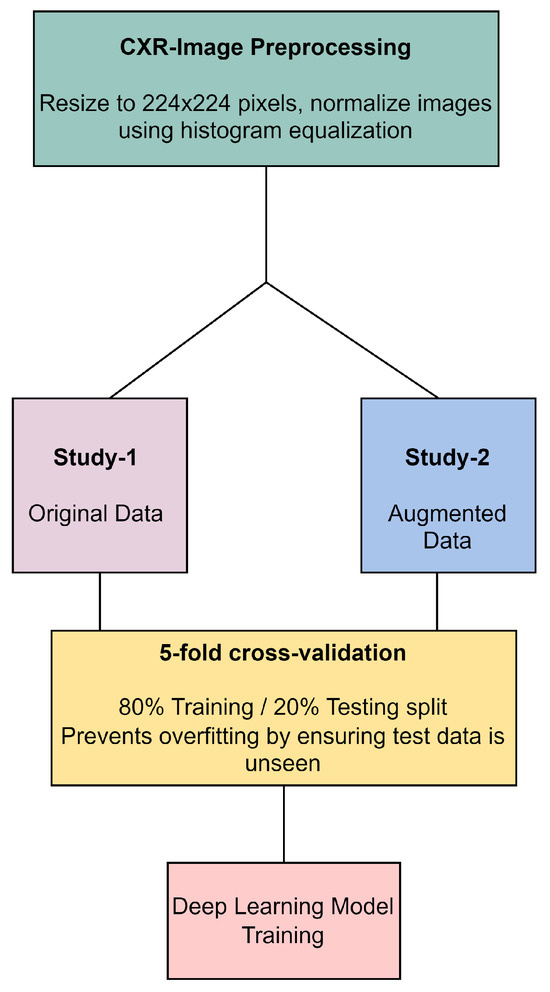

In our methodology, all CXR images underwent preprocessing, which included resizing to 224 × 224 pixels and normalization through histogram equalization, as illustrated in Figure 4. The original dataset, referred to as Study-1, did not undergo image augmentation and consisted of a specific number of images per category: 113 COVID-19, 1073 normal, 7 SARS, and 1103 abnormal images. In contrast, the Study-2 dataset was enriched with augmented data to create a balanced training set that included 1243 COVID-19, 1073 normal, 1267 SARS, and 1103 abnormal images. Both studies were subjected to a stratified 5-fold cross-validation process, ensuring an 80% training and 20% testing split to prevent overfitting. This split was carefully maintained, with augmented data from Study-2 being strictly utilized for training purposes to improve model robustness, while ensuring that the validation and testing phases were conducted with unseen, original images only. This approach underscores our commitment to methodological rigor and the validity of our results in the development of deep learning models for CXR image analysis.
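The contrast normalization step described above can be illustrated with a minimal NumPy sketch of global histogram equalization on an 8-bit grayscale image. This is an assumption-laden illustration rather than the study's own preprocessing code: the function name `equalize_histogram` is hypothetical, the input is a synthetic low-contrast array standing in for a CXR image already resized to 224 × 224, and the resizing step itself is omitted.

```python
import numpy as np


def equalize_histogram(image: np.ndarray) -> np.ndarray:
    """Apply global histogram equalization to a 2-D uint8 grayscale image."""
    if image.dtype != np.uint8 or image.ndim != 2:
        raise ValueError("expected a 2-D uint8 grayscale image")
    # Count how many pixels take each of the 256 possible intensities.
    hist = np.bincount(image.ravel(), minlength=256)
    cdf = hist.cumsum()
    # Smallest non-zero CDF value, so the darkest present level maps to 0.
    cdf_min = cdf[cdf > 0][0]
    total = image.size
    if total == cdf_min:
        # Constant image: there is no contrast to spread out.
        return image.copy()
    # Lookup table that maps each input intensity to its equalized value.
    lut = np.round((cdf - cdf_min) / (total - cdf_min) * 255.0)
    lut = np.clip(lut, 0, 255).astype(np.uint8)
    return lut[image]


# Synthetic low-contrast image (intensities 100..140) standing in for a CXR.
rng = np.random.default_rng(0)
low_contrast = rng.integers(100, 141, size=(224, 224), dtype=np.uint8)
equalized = equalize_histogram(low_contrast)
print(low_contrast.min(), low_contrast.max(), equalized.min(), equalized.max())
```

After equalization, the narrow intensity band of the input is stretched across the full 0 to 255 range, which is the effect the preprocessing step relies on.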

Figure 4.

CXR image preprocessing and preparation workflow.
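The stratified 5-fold protocol can be sketched in plain Python as follows. This is an illustrative sketch, not the study's code: the helper names and the toy class counts are hypothetical, and the comment on augmentation only marks where training-only augmentation would be applied.

```python
import random
from collections import defaultdict


def stratified_folds(labels, k=5, seed=0):
    """Return k lists of indices with each class spread evenly across folds."""
    by_class = defaultdict(list)
    for idx, label in enumerate(labels):
        by_class[label].append(idx)
    rng = random.Random(seed)
    folds = [[] for _ in range(k)]
    for label in sorted(by_class):
        indices = by_class[label]
        rng.shuffle(indices)
        # Deal indices round-robin so per-class fold sizes differ by at most one.
        for position, idx in enumerate(indices):
            folds[position % k].append(idx)
    return folds


def cross_validation_rounds(labels, k=5, seed=0):
    """Yield (train_indices, test_indices) for each of the k rounds."""
    folds = stratified_folds(labels, k, seed)
    for held_out in range(k):
        test_idx = sorted(folds[held_out])
        train_idx = sorted(
            i for f, fold in enumerate(folds) if f != held_out for i in fold
        )
        # Augmentation, if used, would be applied to train_idx images only,
        # so that test_idx always refers to unseen original images.
        yield train_idx, test_idx


# Toy labels with hypothetical class counts (not the study's counts).
labels = ["covid"] * 20 + ["normal"] * 50 + ["sars"] * 5 + ["abnormal"] * 50
rounds = list(cross_validation_rounds(labels))
for number, (train_idx, test_idx) in enumerate(rounds, start=1):
    print(f"round {number}: {len(train_idx)} train, {len(test_idx)} test")
```

Each round holds out one fold (20% of the images) for testing and trains on the remaining four, with every class represented in both parts.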

Combinatorial Fusion

Combinatorial fusion is a methodology that combines different data sources or decision-making strategies to achieve better performance than any individual source or strategy [42]. It seeks the optimal combination among the possible combinations and draws on the strengths of multiple deep learning architectures, such as VGG16, VGG19, ResNet50, GoogLeNet, and AlexNet [43]. It enhances our two-stage data analysis by merging the advantages of the individual models (VGG-16, VGG-19, AlexNet, ResNet-50, GoogleNet) to boost classification accuracy. We extract feature vectors from each trained model, representing crucial image features. The procedure begins by separately loading each of these architectures, each pre-trained on an augmented dataset, and then using these models for feature extraction. For each image $i \in I$ (where $I$ represents the set of all images), every architecture $A_j$ (with $j \in \{1, \dots, 5\}$) is employed to extract a respective feature vector, represented as $F_{i,j}$.

Thus, for every image, we obtain a set of feature vectors as shown in Equation (1):

$$F_i = \{F_{i,1}, F_{i,2}, F_{i,3}, F_{i,4}, F_{i,5}\} \tag{1}$$

The combinatorial fusion technique is applied to amalgamate the strengths of each model. The feature vectors from all the models are concatenated to produce a combined feature vector for each image, represented mathematically as

$$F_i^{\mathrm{comb}} = F_{i,1} \oplus F_{i,2} \oplus F_{i,3} \oplus F_{i,4} \oplus F_{i,5}$$

where ⊕ denotes the concatenation operation.
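The concatenation step can be sketched in a few lines of NumPy. The example below is illustrative only: the function name `fuse_features` is hypothetical, the feature matrices are random stand-ins for the outputs of the five networks, and the feature dimensions are small made-up values rather than the real layer sizes.

```python
import numpy as np


def fuse_features(per_model_features):
    """Concatenate per-model feature matrices along the feature axis.

    per_model_features maps a model name to an array of shape
    (n_images, d_model); row i of every array belongs to image i.
    """
    names = list(per_model_features)
    image_counts = {per_model_features[name].shape[0] for name in names}
    if len(image_counts) != 1:
        raise ValueError("all models must provide features for the same images")
    return np.concatenate([per_model_features[name] for name in names], axis=1)


# Random stand-ins for extracted features; dimensions are illustrative.
rng = np.random.default_rng(0)
feature_dims = {"vgg16": 8, "vgg19": 8, "resnet50": 6, "googlenet": 4, "alexnet": 8}
features = {name: rng.normal(size=(3, dim)) for name, dim in feature_dims.items()}

fused = fuse_features(features)
print(fused.shape)
```

Each row of `fused` is the combined feature vector of one image, with the per-model vectors laid end to end in a fixed model order.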

With this combined feature representation, a Support Vector Machine (SVM) classifier is trained on the combined feature vector of each image. The SVM is chosen for its proven efficacy in high-dimensional spaces and its ability to handle both linear and non-linear data distributions [44]. It was configured with a Radial Basis Function (RBF) kernel, chosen for its effectiveness in handling the nonlinear characteristics of our dataset. The regularization parameter and the gamma value of the RBF kernel were optimized via grid search to balance model complexity and generalization, thus limiting overfitting. This strategy aimed to maximize cross-validation accuracy, enabling reliable classification of images into COVID-19, SARS, normal, or abnormal categories.
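The classifier configuration described above can be sketched with scikit-learn. This is a minimal illustration under stated assumptions, not the study's implementation: synthetic Gaussian blobs stand in for the fused feature vectors, and the candidate values for `C` and `gamma` are hypothetical, since the tuned values are not reproduced here.

```python
import numpy as np
from sklearn.model_selection import GridSearchCV, StratifiedKFold
from sklearn.svm import SVC

# Synthetic stand-in for fused feature vectors: one Gaussian blob per class.
rng = np.random.default_rng(0)
class_names = ["COVID-19", "SARS", "normal", "abnormal"]
n_per_class, n_features = 30, 20
X = np.vstack([
    rng.normal(loc=3.0 * c, scale=1.0, size=(n_per_class, n_features))
    for c in range(len(class_names))
])
y = np.repeat(np.arange(len(class_names)), n_per_class)

# Hypothetical search grid over the regularization parameter and RBF gamma.
param_grid = {"C": [0.1, 1, 10], "gamma": ["scale", 0.01, 0.1]}

search = GridSearchCV(
    SVC(kernel="rbf"),
    param_grid,
    cv=StratifiedKFold(n_splits=5, shuffle=True, random_state=0),
    scoring="accuracy",
)
search.fit(X, y)

print("best parameters:", search.best_params_)
print("best cross-validation accuracy:", round(search.best_score_, 3))
```

Selecting the parameter pair by cross-validated accuracy, rather than training accuracy, is what ties the grid search to generalization instead of fit on the training folds.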

5. Performance and Evaluation Metrics

The application developed for the study was implemented in the Python environment. The computer running the application has features such as 16 GB RAM, an I7 processor, and a GeForce 1070 graphics card. Performance metrics are calculated from the confusion matrix obtained in the experimental results. These metrics include Sensitivity (Se), Specificity (Sp), F-score (F-Scr), Precision (Pre), and Accuracy (Acc). True Positive (TP), False Positive (FP), True Negative (TN), and False Negative (FN) values are used to calculate these metrics.
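The metrics listed above follow from the four confusion-matrix counts using their standard definitions. The sketch below computes them for one class in a one-vs-rest fashion from a multi-class confusion matrix. The function names are hypothetical, and the 4 × 4 matrix is made-up example data, not a result from this study.

```python
def safe_div(numerator, denominator):
    """Divide, returning 0.0 when the denominator is zero."""
    return numerator / denominator if denominator else 0.0


def binary_metrics(tp, fp, tn, fn):
    """Standard metrics computed from TP, FP, TN and FN counts."""
    sensitivity = safe_div(tp, tp + fn)
    specificity = safe_div(tn, tn + fp)
    precision = safe_div(tp, tp + fp)
    accuracy = safe_div(tp + tn, tp + fp + tn + fn)
    f_score = safe_div(2 * precision * sensitivity, precision + sensitivity)
    return {
        "sensitivity": sensitivity,
        "specificity": specificity,
        "precision": precision,
        "accuracy": accuracy,
        "f_score": f_score,
    }


def per_class_metrics(confusion, class_index):
    """One-vs-rest metrics; rows are true classes, columns are predictions."""
    total = sum(sum(row) for row in confusion)
    tp = confusion[class_index][class_index]
    fn = sum(confusion[class_index]) - tp
    fp = sum(row[class_index] for row in confusion) - tp
    tn = total - tp - fn - fp
    return binary_metrics(tp, fp, tn, fn)


# Made-up confusion matrix; class order: COVID-19, SARS, normal, abnormal.
confusion = [
    [8, 0, 1, 1],
    [0, 4, 0, 1],
    [1, 0, 17, 2],
    [1, 1, 2, 16],
]
covid_metrics = per_class_metrics(confusion, 0)
for name, value in covid_metrics.items():
    print(f"{name}: {value:.4f}")
```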

6. Results

In this study, we classified lung X-ray images into four categories (COVID-19, SARS, normal, and abnormal) using deep learning models and combinatorial fusion, with the primary goal of improving classification accuracy. The performance of the models across five-fold cross-validation is presented in Table 5. As shown in Table 6 and Table 7, GoogleNet and ResNet provided better results than the other models, with average training accuracies of approximately 98.41% and 99% across all five rounds on both the original and enhanced datasets.

Table 5.

Performance Metrics Across 5-Fold Cross-Validation.

Table 6.

Training accuracy of the original data set.

Table 7.

Training Accuracy of Correct data set.

6.1. Discussion

This study’s integration of deep learning models with combinatorial fusion in a two-stage analytical approach represents a significant stride in diagnosing respiratory infections from lung X-ray images. The comparative analysis across 5-fold cross-validation in Table 5 shows ResNet50 and GoogleNet to be the strongest models, with ResNet50 achieving accuracy of up to 95.4%, precision of up to 92.9%, recall of up to 91.8%, and F1-scores of up to 92.2%. GoogleNet follows closely, with top scores slightly lower in accuracy and precision but consistently high across all metrics. In contrast, VGG16, VGG19, and AlexNet perform less well; VGG19 peaks at 92.3% accuracy, which falls short of the benchmark set by ResNet50 and GoogleNet. These results position ResNet50 and GoogleNet as the preferred choices for applications where accuracy is critical. On the original dataset, the average training losses of ResNet and GoogleNet are 0.08624 and 0.05854, respectively, which is relatively high because of data ambiguity, as shown in Table 8. On the correct dataset (Table 9), ResNet and GoogleNet achieve lower average training losses of 0.0174 and 0.0397 across all rounds, and the other models also perform better in all rounds than on the original dataset. As shown in Table 10, GoogleNet and VGG-19 performed well with average accuracies of 93.61% and 93.99%, while ResNet and the other models were satisfactory. On the correct dataset (Table 11), GoogleNet and ResNet also perform well in validation, with average accuracies above 95% and 96%, higher than on the original dataset, while VGG-19 and the other models perform reasonably well. The average testing loss of VGG-19 on the original data is 0.1809, lower than that of the other models, as shown in Table 12.

Table 8.

Training Loss of Original data set.

Table 9.

Training Loss of Correct data set.

Table 10.

Round-wise Performance Metrics of Different Models.

Table 11.

Testing accuracy of correct data set.

Table 12.

Testing Loss of original data set.

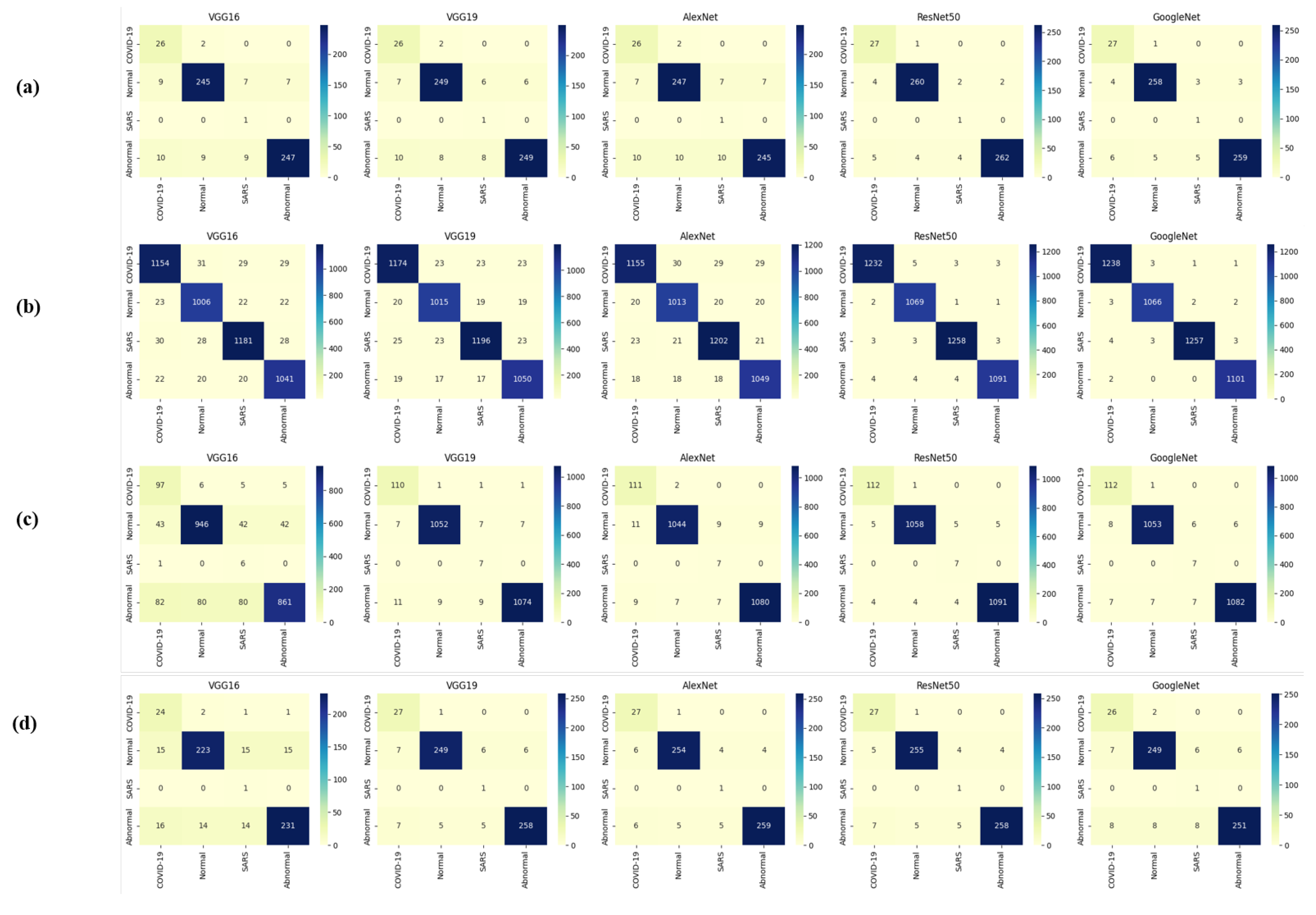

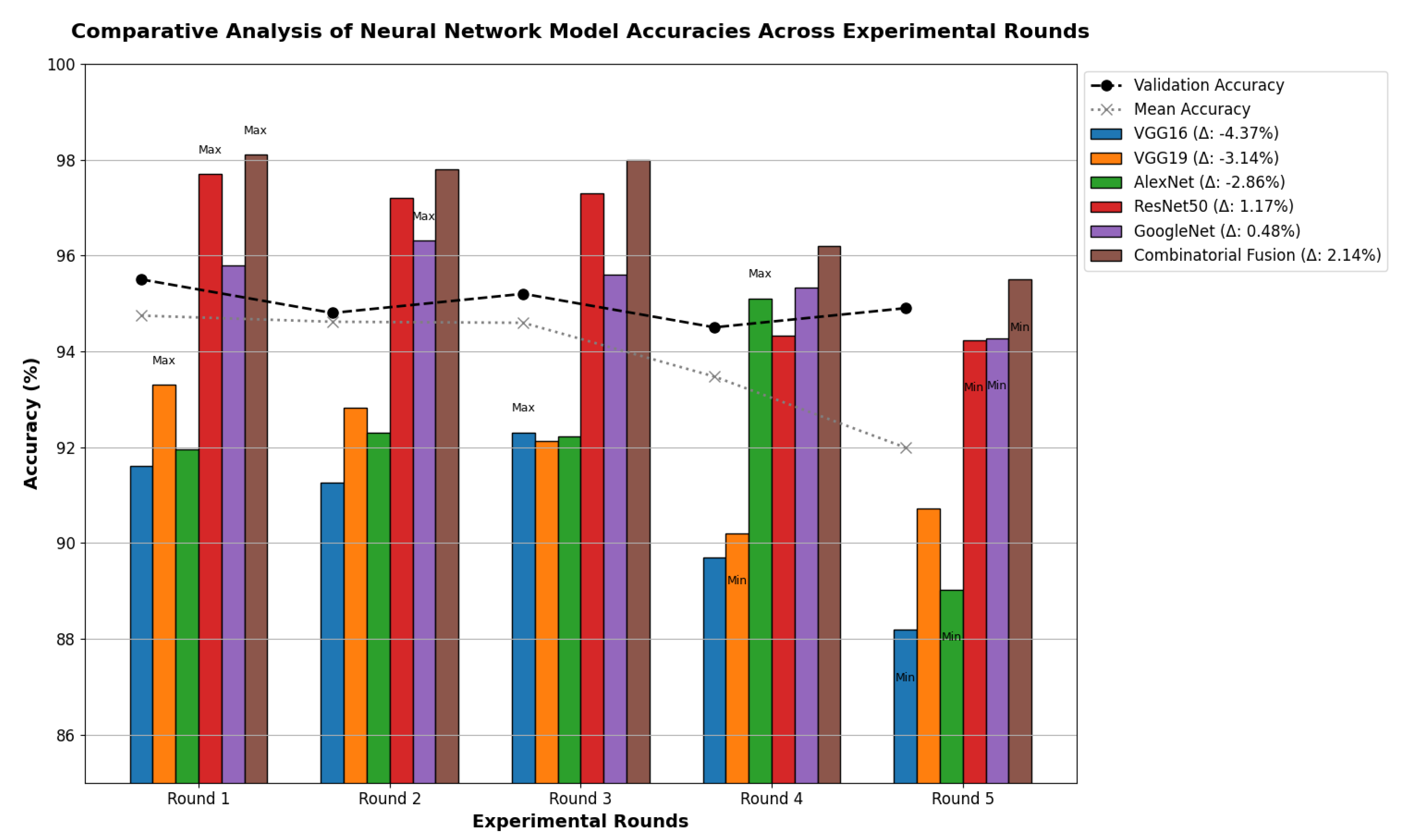

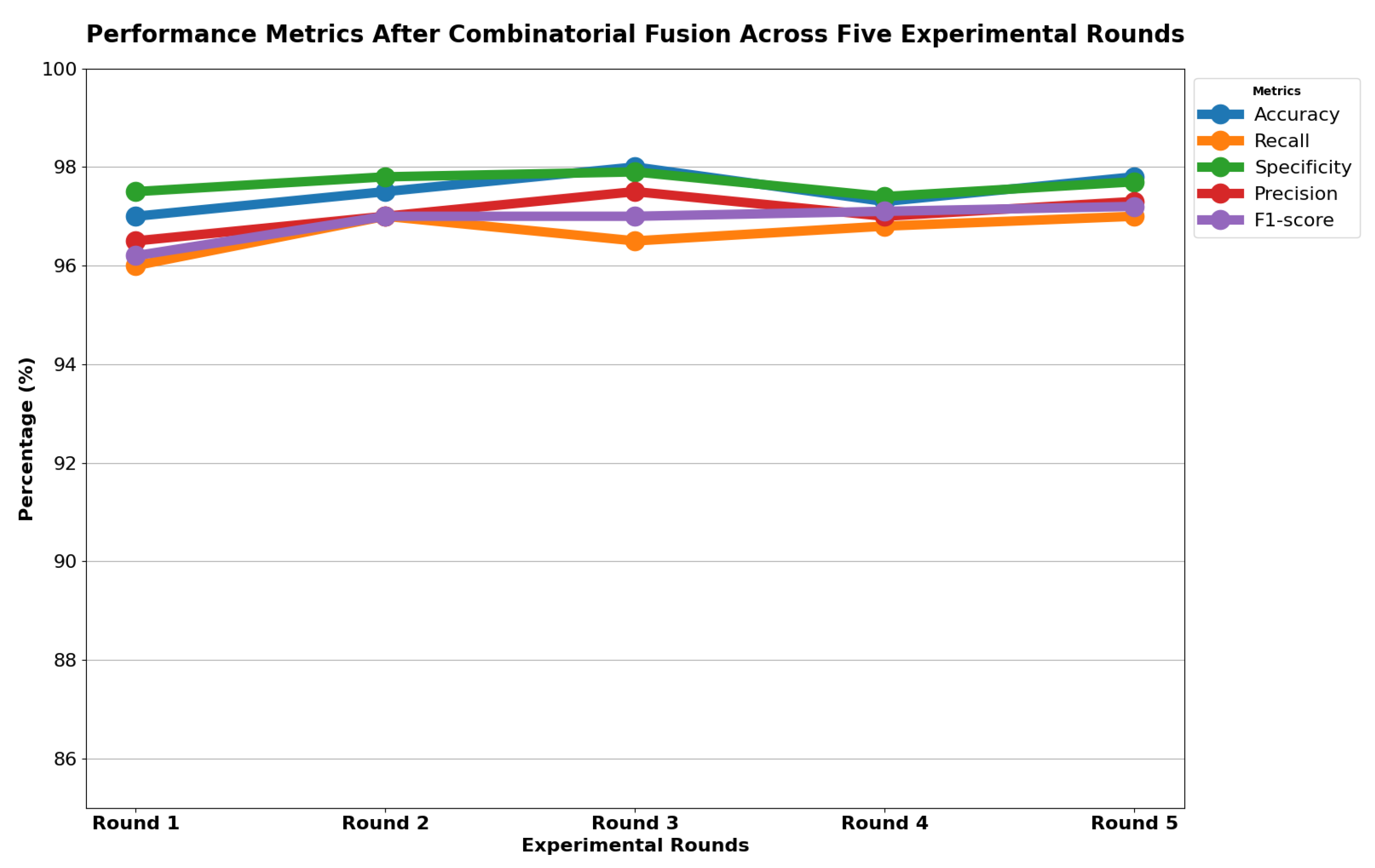

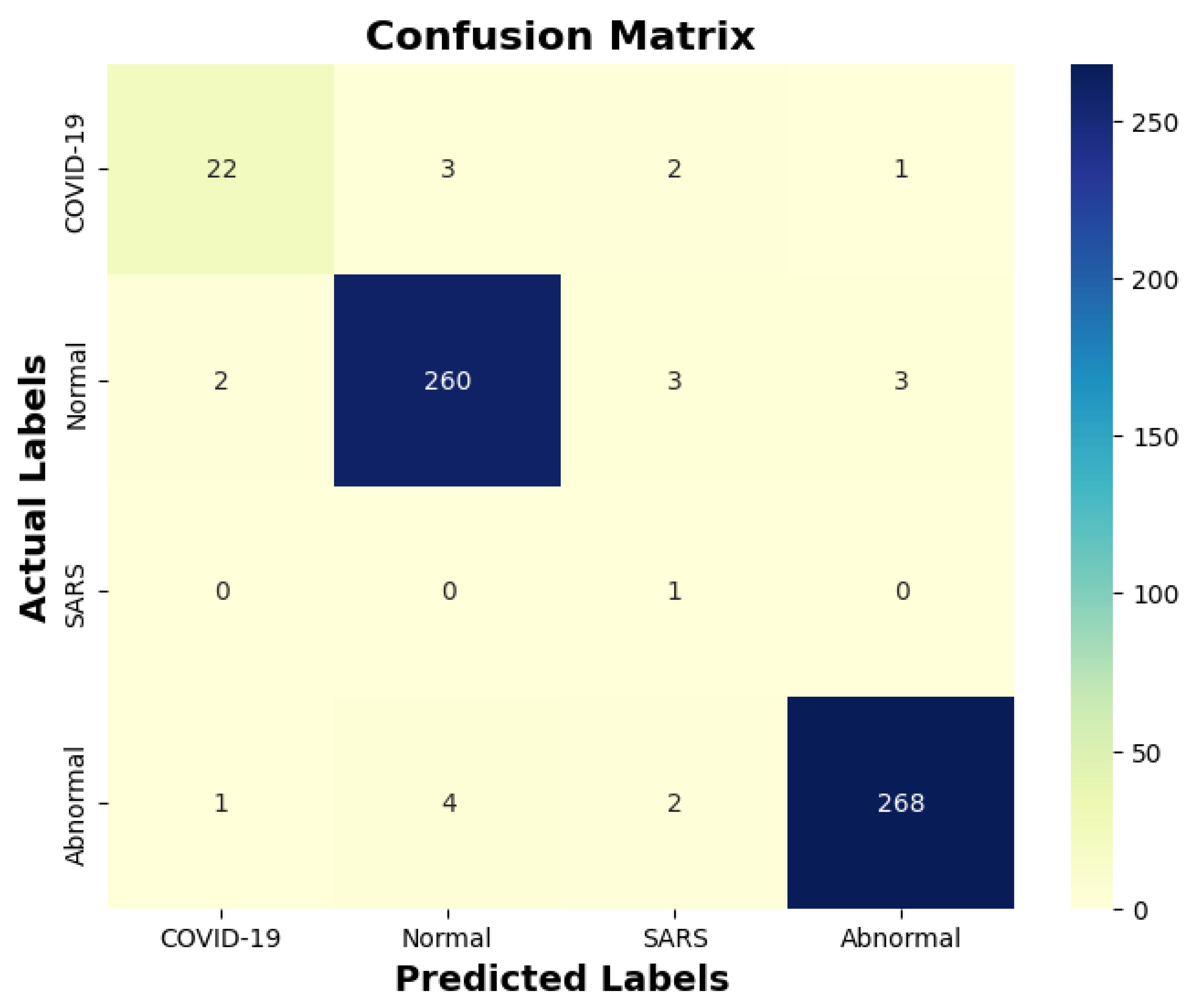

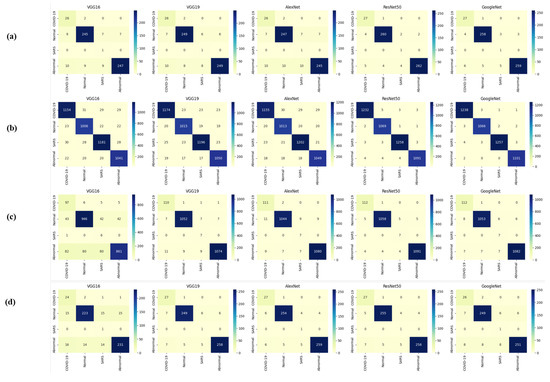

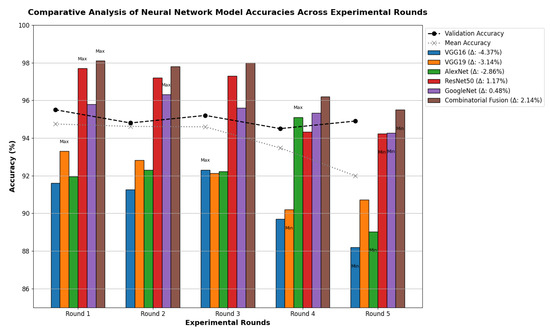

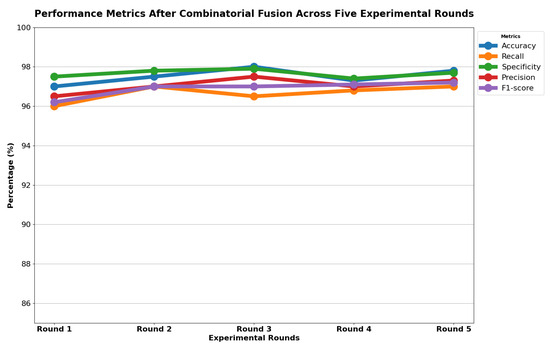

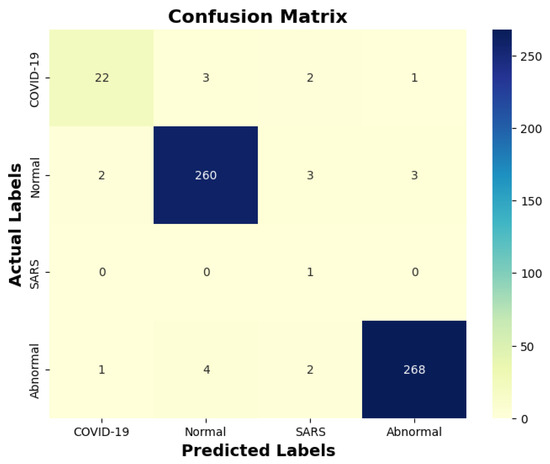

Table 13 indicates that, on the correct dataset, the testing loss is 0.2235 for ResNet and 0.3507 for GoogleNet. Table 14 shows the performance metrics for the correct (augmented) dataset, while Table 15 shows those for the original dataset. In both cases, GoogleNet performed exceptionally well. When we compare the initial results, approximately 94% using the features obtained from the AlexNet and VGG-19 models, with the results obtained with the approach we propose, there was not much change for the individual models. Figure 5 shows confusion matrices for the five models (VGG-16, VGG-19, AlexNet, ResNet50, and GoogleNet) on both the original and correct datasets when classifying medical images into four categories: COVID-19, Normal, SARS, and Abnormal. Each matrix shows the number of correct and incorrect predictions for each category, averaged over all rounds. These matrices offer a concise way to evaluate each model’s performance. However, when the features extracted from all these models were combined using Combinatorial Fusion Analysis and then classified using a Support Vector Machine (as shown in Figure 6), a notable increase in the success rate was observed; the most compelling features were identified through Combinatorial Fusion Analysis. The visual representation highlights the performance of the various neural network architectures across five experimental rounds. While individual models such as VGG16, VGG19, AlexNet, ResNet50, and GoogleNet vary in performance, the Combinatorial Fusion model stands out. This method combines features and strengths from the base architectures, and its effect on accuracy is evident: across the experimental rounds, Combinatorial Fusion consistently posts accuracies in the high 90s, reaching 98.1% in Round 1.
In many instances, this fusion approach outperforms the individual models and closely parallels or surpasses the validation accuracy, which points to its reliability and robustness. This trend suggests that fusing models can yield a synergistic improvement, capitalizing on collective strengths and mitigating individual model weaknesses. Figure 7 visualizes the effect of Combinatorial Fusion on key performance metrics across the five experimental rounds. Each metric (Accuracy, Recall, Specificity, Precision, and F1-score) is represented by a distinct colored line. The x-axis represents the experimental rounds (‘Round 1’ through ‘Round 5’), while the y-axis indicates the percentage value of each metric, ranging from 85% to 100%. The lines depict the trajectory of each metric across rounds after applying combinatorial fusion. The fusion consistently improves the metric values: Accuracy remains high at 97.8%, and Recall, Specificity, Precision, and F1-score also remain robust and show consistent improvement, which supports the effectiveness of the methodology and is reflected in the confusion matrix predictions. Figure 8 shows the confusion matrix of the combination score.

Table 13.

Testing Loss of Correct data set.

Table 14.

The obtained results for the correct dataset on different models for k = 5 using performance metrics.

Table 15.

The obtained results for the original dataset on different models for k = 5 using performance metrics.

Figure 5.

Confusion matrices (a,b) are derived from correct testing and training data, while (c,d) are from the original data across all models.

Figure 6.

Combination score performance augmented correct data.

Figure 7.

Combination score performance graph of all rounds.

Figure 8.

Combination score performance confusion matrix.

6.2. Comparison

Our study’s use of combinatorial fusion with deep learning models for lung X-ray image analysis achieved testing accuracies of approximately 98% for the augmented dataset and around 96.15% for the original dataset. This is a significant advancement compared with the findings in the existing literature. For example, the work of Asmaa Abbas using the DeTraC classifier attained 95.12% accuracy, the new CNN model by Kesim and Dokur reported 86% accuracy, and Aras M. Ismael’s research with various ResNet and VGG models achieved 94% accuracy, all of which are lower than our results. Yujin Oh’s ResNet-18 based model had an accuracy of 76.9%, and Zhang’s use of ResNet-18 reached 95.18% accuracy, both trailing our study’s performance. Importantly, our approach addresses a critical issue highlighted in recent reports, such as the one by Ulinici M et al., regarding the limitations of X-ray as a diagnostic tool for interstitial pneumonia, a common manifestation of COVID-19. Traditional X-ray imaging can be challenging for diagnosing this condition because of its subtlety in the early stages. The integration of AI tools in our study offers a promising enhancement to this diagnostic method. By applying deep learning techniques, our method potentially improves the sensitivity and specificity of X-ray imaging for detecting such complex conditions, thus contributing to more accurate and timely diagnoses. Overall, the results of our study demonstrate considerable improvements in lung X-ray image classification accuracy and suggest a pivotal role for AI-enhanced imaging in addressing inherent limitations of conventional methods, especially for challenging respiratory diseases like COVID-19.

6.3. Limitations and Future Recommendations

Our study represents a significant advancement in the application of combinatorial fusion and deep learning for medical imaging diagnostics. However, we acknowledge certain limitations that provide directions for future research. Firstly, the reliance on publicly available X-ray datasets, while invaluable for initial model training and validation, may not capture the full spectrum of clinical variability. This limitation underscores the need for a more diverse dataset that reflects a wider range of patient demographics and disease manifestations. Furthermore, by focusing exclusively on X-ray imaging, our current model may miss critical diagnostic information available through other modalities, such as CT scans, which can offer complementary insights into respiratory conditions. Addressing these gaps, future efforts will aim to integrate a broader array of imaging data, including CT, MRI, and ultrasound, to enrich our model’s diagnostic capability and generalizability across different clinical scenarios.

In addition to enhancing data diversity and model comprehensiveness, a key area of our future work will concentrate on the computational aspects of our methodology. Recognizing the importance of scalability and efficiency in clinical applications, we are committed to a rigorous evaluation of time and space complexity. This endeavor will involve not only a detailed performance analysis under various computational conditions but also the pursuit of advanced optimization strategies to refine our model’s efficiency without detracting from its accuracy. Moreover, to bridge the gap between theoretical innovation and practical utility, we plan to undertake pilot deployment studies in clinical environments. These studies will assess the real-world applicability of our diagnostic tool, focusing on its integration into clinical workflows, user acceptance, and the impact of computational demands on operational feasibility. Such real-world evaluations are crucial for ensuring that our AI-driven diagnostic solutions are not only technologically advanced but also pragmatically viable and adaptable to the evolving landscape of medical diagnostics.

By addressing these limitations and setting a clear roadmap for future work, we are poised to significantly enhance the relevance, effectiveness, and sustainability of AI tools in medical imaging diagnostics. Our commitment to continuous improvement and adaptation promises to keep our methodology at the forefront of the field, ready for widespread adoption in diverse healthcare settings.
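As one possible starting point for the planned time and space evaluation, the sketch below measures the wall-clock time and peak Python memory of a single call using only the standard library. It is an assumption-laden illustration rather than the study's protocol: `profile_call` and `dummy_inference` are hypothetical names, and the dummy workload merely stands in for a real model forward pass.

```python
import statistics
import time
import tracemalloc


def profile_call(fn, *args, repeats=5):
    """Return (median wall-clock seconds, peak traced bytes) for fn(*args)."""
    timings = []
    peak_bytes = 0
    for _ in range(repeats):
        tracemalloc.start()
        start = time.perf_counter()
        fn(*args)
        timings.append(time.perf_counter() - start)
        _, peak = tracemalloc.get_traced_memory()
        tracemalloc.stop()
        peak_bytes = max(peak_bytes, peak)
    return statistics.median(timings), peak_bytes


def dummy_inference(n_pixels):
    """Hypothetical stand-in for a model forward pass on one image."""
    pixels = [i % 256 for i in range(n_pixels)]
    return sum(pixels) / n_pixels


if __name__ == "__main__":
    # Repeat the measurement for growing input sizes to see how cost scales.
    for n_pixels in (64 * 64, 128 * 128, 224 * 224):
        seconds, peak = profile_call(dummy_inference, n_pixels)
        print(f"{n_pixels} pixels: {seconds:.6f} s, peak {peak} bytes")
```

Note that `tracemalloc` only sees memory allocated through Python's allocator and adds overhead to the timings, so a real evaluation would likely time and trace in separate runs and use framework-specific tools for GPU memory.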

7. Conclusions

COVID-19, a disease that has spread rapidly across the world, will continue to affect our lives for a long time if vaccine studies do not succeed soon. Researchers therefore continue to investigate methods for its diagnosis and treatment, and the primary purpose of our study is to contribute to this research. To this end, we created a four-class dataset comprising COVID-19, pneumonia, normal, and abnormal lung X-ray images obtained from open sources. This dataset was preprocessed to obtain a new one. The deep learning models AlexNet, VGG-16, VGG-19, GoogleNet, and ResNet, trained with this dataset, were used for feature extraction. The most informative of the extracted features were then selected with the help of combinatorial fusion, and the selected features were classified using the same technique. The features of the highest-performing models were combined with one another, as were the features of the lowest-performing models. The best overall accuracy was obtained by selecting and classifying the features from the ResNet and GoogleNet models. The combination of AlexNet and VGG-19 also performed well. Since the approach proved reliable under several different criteria, we expect that it can offer experts a second opinion during the diagnosis of COVID-19. In future studies, we plan to continue contributing to this field using image processing and different deep learning models.
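The fusion step summarized above can be illustrated with a minimal sketch of score combination and rank combination, the two basic operations usually associated with combinatorial fusion. This is a generic illustration under stated assumptions and may differ from the exact procedure used in this study: the function names, the min-max normalization, the equal weighting of models, and the toy score matrices are all hypothetical.

```python
import numpy as np


def normalize_scores(scores):
    """Min-max scale each row of a (samples, classes) score matrix to [0, 1]."""
    scores = np.asarray(scores, dtype=float)
    low = scores.min(axis=1, keepdims=True)
    high = scores.max(axis=1, keepdims=True)
    span = np.where(high > low, high - low, 1.0)  # avoid division by zero
    return (scores - low) / span


def score_to_rank(scores):
    """Rank the classes of each sample; rank 1 is the highest score."""
    order = np.argsort(-np.asarray(scores, dtype=float), axis=1, kind="stable")
    ranks = np.empty_like(order)
    rows = np.arange(order.shape[0])[:, None]
    ranks[rows, order] = np.arange(1, order.shape[1] + 1)
    return ranks


def score_combination(score_list):
    """Average the normalized score matrices of several models."""
    return np.mean([normalize_scores(s) for s in score_list], axis=0)


def rank_combination(score_list):
    """Average the rank matrices of several models (lower is better)."""
    return np.mean([score_to_rank(s) for s in score_list], axis=0)


def predict(score_list, method="score"):
    """Pick one class per sample from the fused scores or the fused ranks."""
    if method == "score":
        return np.argmax(score_combination(score_list), axis=1)
    return np.argmin(rank_combination(score_list), axis=1)


# Toy class scores from two hypothetical models: 2 samples, 4 classes.
model_a = [[0.7, 0.1, 0.1, 0.1], [0.2, 0.5, 0.2, 0.1]]
model_b = [[0.4, 0.3, 0.2, 0.1], [0.1, 0.2, 0.6, 0.1]]

if __name__ == "__main__":
    print("score combination:", predict([model_a, model_b], "score"))
    print("rank combination: ", predict([model_a, model_b], "rank"))
```

On the second toy sample the two models disagree, and score combination and rank combination select different classes. This kind of divergence is why both combination types are typically evaluated when deciding which models to fuse.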

Author Contributions

Conceptualization, R.K.; Methodology, R.K.; Software, C.-T.P.; Validation, C.-T.P. and Z.-H.W.; Formal analysis, R.K. and C.-H.W.; Investigation, C.-T.P. and C.-H.W.; Resources, C.-T.P. and C.-Y.C.; Data curation, R.K.; Visualization, Z.-H.W. and Y.-L.S.; Supervision, Y.-L.S.; Project administration, C.-Y.C.; Funding acquisition, C.-Y.C. All authors have read and agreed to the published version of the manuscript.

Funding

This work was supported by the Kaohsiung Armed Forces General Hospital, Kaohsiung (KAFGH_A_113001).

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

All the data and codes used in this work are available upon a reasonable request from the corresponding authors.

Acknowledgments

The authors extend their profound appreciation to Kaohsiung Armed Forces General Hospital, Kaohsiung, and National Sun Yat-sen University, Kaohsiung, for their substantial support throughout this project. This support was crucial to the project’s success, offering essential resources and valuable inspiration that significantly influenced our research. We recognize their critical contribution to our work’s progress and are deeply thankful for their dedication to fostering scientific advancement.

Conflicts of Interest

The authors declare that they have no known competing financial interests or personal relationships that could have appeared to influence the work reported in this paper.

References

- Ulinici, M.; Covantev, S.; Wingfield-Digby, J.; Beloukas, A.; Mathioudakis, A.G.; Corlateanu, A. Screening, diagnostic and prognostic tests for COVID-19: A comprehensive review. Life 2021, 11, 561.

- Abdullah, A.; Sam, I.C.; Ong, Y.J.; Theo, C.H.; Pukhari, M.H.; Chan, Y.F. Comparative Evaluation of a Standard M10 Assay with Xpert Xpress for the Rapid Molecular Diagnosis of SARS-CoV-2, Influenza A/B Virus, and Respiratory Syncytial Virus. Diagnostics 2023, 13, 3507.

- Lassmann, S.; Bauer, M.; Soong, R.; Schreglmann, J.; Tabiti, K.; Nährig, J.; Rüger, R.; Höfler, H.; Werner, M. Quantification of CK20 gene and protein expression in colorectal cancer by RT-PCR and immunohistochemistry reveals inter-and intratumour heterogeneity. J. Pathol. 2002, 198, 198–206.

- Yin, Z.; Kang, Z.; Yang, D.; Ding, S.; Luo, H.; Xiao, E. A comparison of clinical and chest CT findings in patients with influenza A (H1N1) virus infection and coronavirus disease (COVID-19). Am. J. Roentgenol. 2020, 215, 1065–1071.

- Jaegere, T.M.H.D.; Krdzalic, J.; Fasen, B.A.C.M.; Kwee, R.M.; COVID-19 CT Investigators South-East Netherlands (CISEN) Study Group. Radiological Society of North America chest CT classification system for reporting COVID-19 pneumonia: Interobserver variability and correlation with reverse-transcription polymerase chain reaction. Radiol. Cardiothorac. Imaging 2020, 2, e200213.

- Pasa, F.; Golkov, V.; Pfeiffer, F.; Cremers, D.; Pfeiffer, D. Efficient deep network architectures for fast chest X-ray tuberculosis screening and visualization. Sci. Rep. 2019, 9, 6268.

- Shah, F.M.; Joy, S.K.S.; Ahmed, F.; Hossain, T.; Humaira, M.; Ami, A.S.; Paul, S.; Jim, M.A.R.K.; Ahmed, S. A comprehensive survey of COVID-19 detection using medical images. SN Comput. Sci. 2021, 2, 434.

- Shkolyar, E.; Jia, X.; Chang, T.C.; Trivedi, D.; Mach, K.E.; Meng, M.Q.H.; Xing, L.; Liao, J.C. Augmented bladder tumor detection using deep learning. Eur. Urol. 2019, 76, 714–718.

- Ahsan, M.; Based, M.A.; Haider, J.; Kowalski, M. COVID-19 detection from chest X-ray images using feature fusion and deep learning. Sensors 2021, 21, 1480.

- Kumar, A.; Vashishtha, G.; Gandhi, C.P.; Zhou, Y.; Glowacz, A.; Xiang, J. Novel convolutional neural network (NCNN) for the diagnosis of bearing defects in rotary machinery. IEEE Trans. Instrum. Meas. 2021, 70, 3510710.

- Sevi, M.; Aydin, İ. COVID-19 Detection Using Deep Learning Methods. In Proceedings of the 2020 International Conference on Data Analytics for Business and Industry: Way Towards a Sustainable Economy (ICDABI), Sakheer, Bahrain, 26–27 October 2020; pp. 1–6.

- Mahmud, T.; Rahman, M.A.; Fattah, S.A. CovXNet: A multi-dilation convolutional neural network for automatic COVID-19 and other pneumonia detection from chest X-ray images with transferable multi-receptive feature optimization. Comput. Biol. Med. 2020, 122, 103869.

- Gupta, A.; Gupta, S.; Katarya, R. InstaCovNet-19: A deep learning classification model for the detection of COVID-19 patients using Chest X-ray. Appl. Soft Comput. 2021, 99, 106859.

- Fusco, R.; Grassi, R.; Granata, V.; Setola, S.V.; Grassi, F.; Cozzi, D.; Pecori, B.; Izzo, F.; Petrillo, A. Artificial intelligence and COVID-19 using chest CT scan and chest X-ray images: Machine learning and deep learning approaches for diagnosis and treatment. J. Pers. Med. 2021, 11, 993.

- Jebril, N. World Health Organization Declared a Pandemic Public Health Menace: A Systematic Review of the Coronavirus Disease 2019 “COVID-19”. Available online: https://ssrn.com/abstract=3566298 (accessed on 1 April 2020).

- Ranjan, A.; Kumar, C.; Gupta, R.K.; Misra, R. Transfer Learning Based Approach for Pneumonia Detection Using Customized VGG16 Deep Learning Model. In Internet of Things and Connected Technologies; Misra, R., Kesswani, N., Rajarajan, M., Veeravalli, B., Patel, A., Eds.; Springer International Publishing: Cham, Switzerland, 2022; pp. 17–28.

- Yang, T.; Wang, Y.C.; Shen, C.F.; Cheng, C.M. Point-of-care RNA-based diagnostic device for COVID-19. Diagnostics 2020, 10, 165.

- Ronneberger, O.; Fischer, P.; Brox, T. U-Net: Convolutional Networks for Biomedical Image Segmentation. arXiv 2015, arXiv:1505.04597.

- Rajpurkar, P.; Irvin, J.; Ball, R.L.; Zhu, K.; Yang, B.; Mehta, H.; Duan, T.; Ding, D.; Bagul, A.; Langlotz, C.P.; et al. Deep learning for chest radiograph diagnosis: A retrospective comparison of the CheXNeXt algorithm to practicing radiologists. PLoS Med. 2018, 15, e1002686.

- Lakhani, P.; Sundaram, B. Deep learning at chest radiography: Automated classification of pulmonary tuberculosis by using convolutional neural networks. Radiology 2017, 284, 574–582.

- Afshar, P.; Heidarian, S.; Naderkhani, F.; Oikonomou, A.; Plataniotis, K.N.; Mohammadi, A. Covid-caps: A capsule network-based framework for identification of COVID-19 cases from X-ray images. Pattern Recognit. Lett. 2020, 138, 638–643.

- Abbas, A.; Abdelsamea, M.M.; Gaber, M.M. Classification of COVID-19 in chest X-ray images using DeTraC deep convolutional neural network. Appl. Intell. 2021, 51, 854–864.

- Wang, L.; Lin, Z.Q.; Wong, A. Covid-net: A tailored deep convolutional neural network design for detection of COVID-19 cases from chest X-ray images. Sci. Rep. 2020, 10, 19549.

- Ucar, F.; Korkmaz, D. COVIDiagnosis-Net: Deep Bayes-SqueezeNet based diagnosis of the coronavirus disease 2019 (COVID-19) from X-ray images. Med. Hypotheses 2020, 140, 109761.

- Khan, A.I.; Shah, J.L.; Bhat, M.M. CoroNet: A deep neural network for detection and diagnosis of COVID-19 from chest X-ray images. Comput. Methods Programs Biomed. 2020, 196, 105581.

- Zammit, S.C.; Sidhu, R. Capsule Endoscopy–Recent Developments and Future Directions. Expert Rev. Gastroenterol. Hepatol. 2021, 15, 127–137.

- Kesim, E.; Dokur, Z.; Olmez, T. X-ray Chest Image Classification by A Small-Sized Convolutional Neural Network. In Proceedings of the 2019 Scientific Meeting on Electrical-Electronics & Biomedical Engineering and Computer Science (EBBT), Istanbul, Turkey, 24–26 April 2019; pp. 1–5.

- Demir, F.; Ismael, A.M.; Sengur, A. Classification of Lung Sounds with CNN Model Using Parallel Pooling Structure. IEEE Access 2020, 8, 105376–105383.

- Park, S.; Kim, G.; Oh, Y.; Seo, J.B.; Lee, S.M.; Kim, J.H.; Moon, S.; Lim, J.K.; Ye, J.C. Multi-task vision transformer using low-level chest X-ray feature corpus for COVID-19 diagnosis and severity quantification. Med. Image Anal. 2022, 75, 102299.

- Zhang, X.; Lu, S.; Wang, S.H.; Yu, X.; Wang, S.J.; Yao, L.; Pan, Y.; Zhang, Y.D. Diagnosis of COVID-19 Pneumonia via a Novel Deep Learning Architecture. J. Comput. Sci. Technol. 2022, 37, 330–343.

- Makris, A.; Kontopoulos, I.; Tserpes, K. COVID-19 detection from chest X-ray images using Deep Learning and Convolutional Neural Networks. In Proceedings of the 11th Hellenic Conference on Artificial Intelligence, Athens, Greece, 2–4 September 2020; pp. 60–66.

- Pereira, R.M.; Bertolini, D.; Teixeira, L.O.; Silla, C.N., Jr.; Costa, Y.M.G. COVID-19 identification in chest X-ray images on flat and hierarchical classification scenarios. Comput. Methods Programs Biomed. 2020, 194, 105532.

- Dey, A.; Chattopadhyay, S.; Singh, P.K.; Ahmadian, A.; Ferrara, M.; Senu, N.; Sarkar, R. MRFGRO: A hybrid meta-heuristic feature selection method for screening COVID-19 using deep features. Sci. Rep. 2021, 11, 24065.

- Basha, S.H.; Farazuddin, M.; Pulabaigari, V.; Dubey, S.R.; Mukherjee, S. Deep Model Compression Based on the Training History. arXiv 2021, arXiv:2102.00160.

- Geng, L.; Zhang, S.; Tong, J.; Xiao, Z. Lung Segmentation Method with Dilated Convolution Based on VGG-16 Network. Comput. Assist. Surg. 2019, 24, 27–33.

- Bagaskara, A.; Suryanegara, M. Evaluation of VGG-16 and VGG-19 Deep Learning Architecture for Classifying Dementia People. In Proceedings of the 2021 4th International Conference of Computer and Informatics Engineering (IC2IE), Depok, Indonesia, 14–15 September 2021; pp. 1–4.

- Mohammadi, R.; Salehi, M.; Ghaffari, H.; Reiazi, R. Transfer Learning-Based Automatic Detection of Coronavirus Disease 2019 (COVID-19) from Chest X-ray Images. J. Biomed. Phys. Eng. 2020, 10, 559.

- Alom, M.Z.; Taha, T.M.; Yakopcic, C.; Westberg, S.; Sidike, P.; Nasrin, M.S.; Van Esesn, B.C.; Awwal, A.A.S.; Asari, V.K. The history began from alexnet: A comprehensive survey on deep learning approaches. arXiv 2018, arXiv:1803.01164.

- Reddy, A.S.B.; Juliet, D.S. Transfer Learning with ResNet-50 for Malaria Cell-Image Classification. In Proceedings of the 2019 International Conference on Communication and Signal Processing (ICCSP), Chennai, India, 4–6 April 2019; pp. 0945–0949.

- Al-Haija, Q.A.; Adebanjo, A. Breast Cancer Diagnosis in Histopathological Images Using ResNet-50 Convolutional Neural Network. In Proceedings of the 2020 IEEE International IOT, Electronics and Mechatronics Conference (IEMTRONICS), Vancouver, BC, Canada, 9–12 September 2020; pp. 1–7.

- Anand, R.; Shanthi, T.; Nithish, M.S.; Lakshman, S. Face Recognition and Classification Using GoogleNET Architecture. In Soft Computing for Problem Solving; Springer: Berlin/Heidelberg, Germany, 2020; pp. 261–269.

- Foo, P.H.; Ng, G.W. High-level information fusion: An overview. J. Adv. Inf. Fusion 2013, 8, 33–72.

- Mohandes, M.; Deriche, M.; Aliyu, S.O. Classifiers combination techniques: A comprehensive review. IEEE Access 2018, 6, 19626–19639.

- Gu, Q.; Han, J. Clustered support vector machines. In Proceedings of the 16th International Conference on Artificial Intelligence and Statistics (AISTATS), Scottsdale, AZ, USA, 29 April–1 May 2013; pp. 307–315.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).