Accuracy of Artificial Intelligence Models in Dental Implant Fixture Identification and Classification from Radiographs: A Systematic Review

Abstract

1. Introduction

2. Materials and Methods

2.1. Registration

2.2. Inclusion and Exclusion Criteria

2.3. Exposure and Outcome

- P: Human X-rays with dental implants.

- I: Artificial intelligence tools.

- C: Expert opinions and reference standards.

- O: Accuracy of detection of the dental implant.
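
The outcome (O) is reported in the included studies through metrics such as accuracy, precision, recall, specificity, and F1 score. As a minimal sketch, assuming one-vs-rest confusion counts for a single implant class, these metrics could be computed as follows. The `Counts` class and the function names are illustrative assumptions and are not taken from any included study.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Counts:
    """One-vs-rest confusion counts for a single implant class (hypothetical)."""
    tp: int  # implants of this class correctly identified
    fp: int  # other implants wrongly assigned to this class
    tn: int  # other implants correctly rejected
    fn: int  # implants of this class that were missed


def _ratio(num: float, den: float) -> float:
    # Return 0.0 instead of raising when the denominator is zero.
    return num / den if den else 0.0


def accuracy(c: Counts) -> float:
    return _ratio(c.tp + c.tn, c.tp + c.fp + c.tn + c.fn)


def precision(c: Counts) -> float:
    return _ratio(c.tp, c.tp + c.fp)


def recall(c: Counts) -> float:
    # Recall is the same quantity as sensitivity.
    return _ratio(c.tp, c.tp + c.fn)


def specificity(c: Counts) -> float:
    return _ratio(c.tn, c.tn + c.fp)


def f1_score(p: float, r: float) -> float:
    # Harmonic mean of precision p and recall r.
    return _ratio(2 * p * r, p + r)
```

As a consistency check, the precision of 0.963 and recall of 0.961 reported by Kong [58] give `f1_score(0.963, 0.961)` of approximately 0.962, which agrees with the F1 score listed for that study.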

2.4. Information Sources and Search Strategy

2.5. Screening, Selection of Studies, and Data Extraction

2.6. Quality Assessment of Included Studies

3. Results

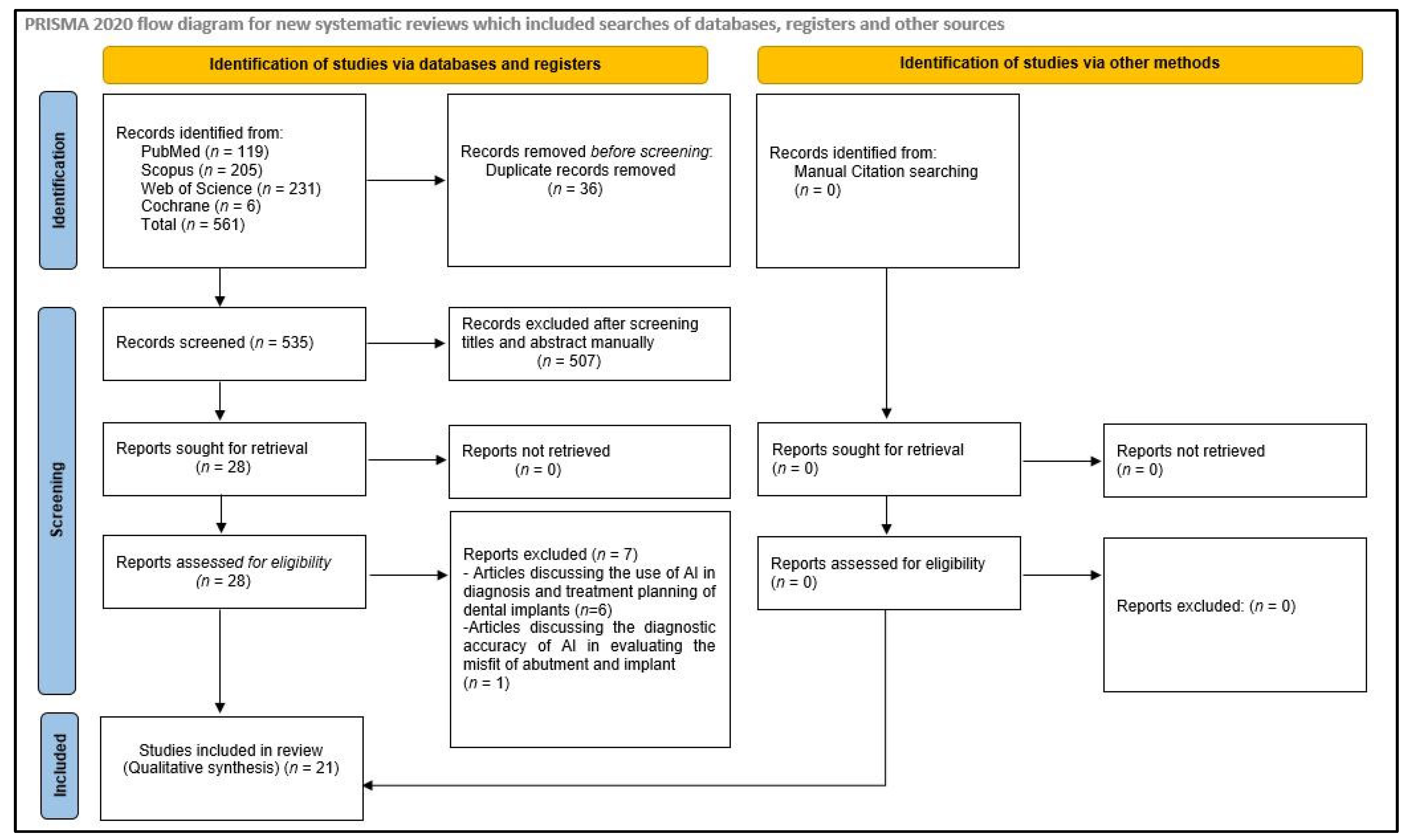

3.1. Identification and Screening

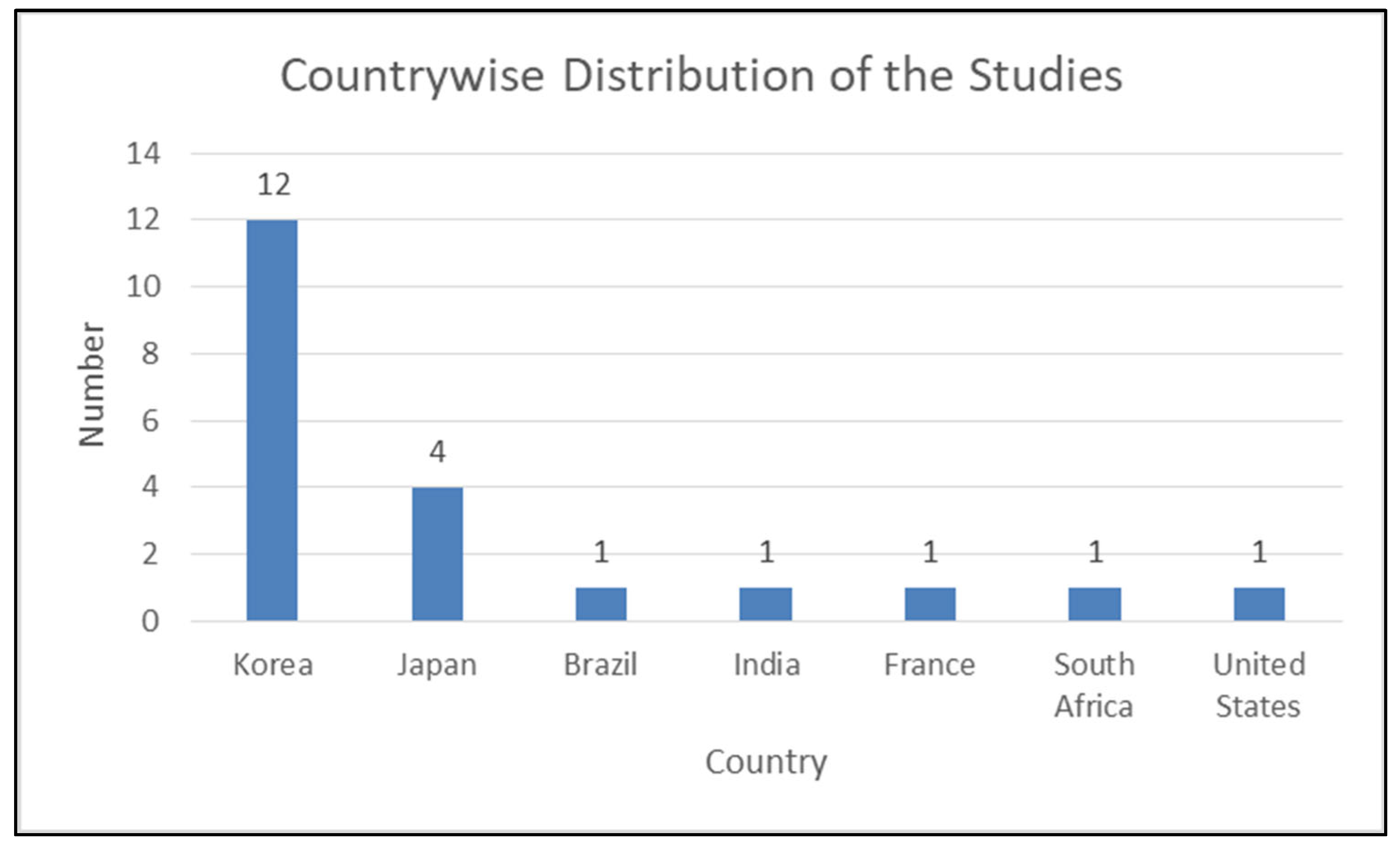

3.2. Study Characteristics

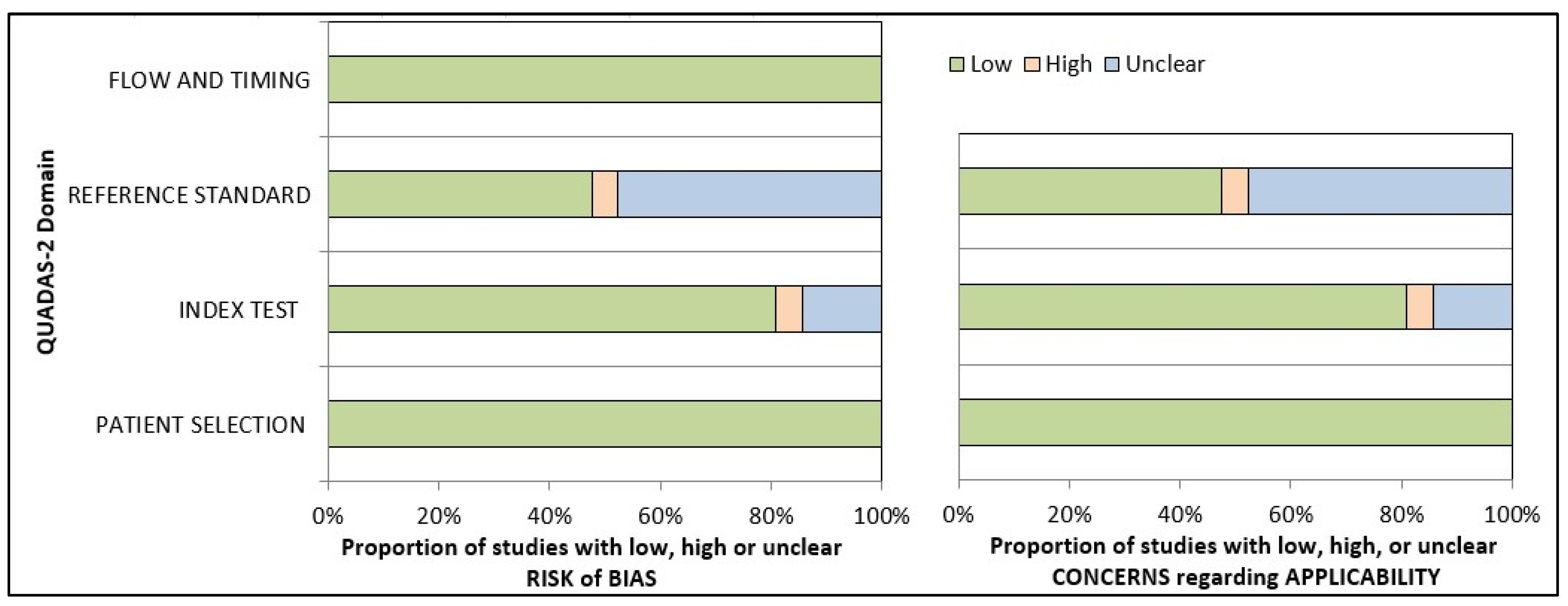

3.3. Quality Assessment of Included Studies

3.4. Accuracy Assessment

4. Discussion

4.1. Inferences and Future Directions

4.2. Limitations

5. Conclusions

Supplementary Materials

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

- Abad-Coronel, C.; Bravo, M.; Tello, S.; Cornejo, E.; Paredes, Y.; Paltan, C.A.; Fajardo, J.I. Fracture Resistance Comparative Analysis of Milled-Derived vs. 3D-Printed CAD/CAM Materials for Single-Unit Restorations. Polymers 2023, 15, 3773. [Google Scholar] [CrossRef] [PubMed]

- Martín-Ortega, N.; Sallorenzo, A.; Casajús, J.; Cervera, A.; Revilla-León, M.; Gómez-Polo, M. Fracture resistance of additive manufactured and milled implant-supported interim crowns. J. Prosthet. Dent. 2022, 127, 267–274. [Google Scholar] [CrossRef] [PubMed]

- Jain, S.; Sayed, M.E.; Shetty, M.; Alqahtani, S.M.; Al Wadei, M.H.D.; Gupta, S.G.; Othman, A.A.A.; Alshehri, A.H.; Alqarni, H.; Mobarki, A.H.; et al. Physical and mechanical properties of 3D-printed provisional crowns and fixed dental prosthesis resins compared to CAD/CAM milled and conventional provisional resins: A systematic review and meta-analysis. Polymers 2022, 14, 2691. [Google Scholar] [CrossRef] [PubMed]

- Gad, M.M.; Fouda, S.M.; Abualsaud, R.; Alshahrani, F.A.; Al-Thobity, A.M.; Khan, S.Q.; Akhtar, S.; Ateeq, I.S.; Helal, M.A.; Al-Harbi, F.A.; et al. Strength and surface properties of a 3D-printed denture base polymer. J. Prosthodont. 2021, 31, 412–418. [Google Scholar] [CrossRef]

- Al Wadei, M.H.D.; Sayed, M.E.; Jain, S.; Aggarwal, A.; Alqarni, H.; Gupta, S.G.; Alqahtani, S.M.; Alahmari, N.M.; Alshehri, A.H.; Jain, M.; et al. Marginal Adaptation and Internal Fit of 3D-Printed Provisional Crowns and Fixed Dental Prosthesis Resins Compared to CAD/CAM-Milled and Conventional Provisional Resins: A Systematic Review and Meta-Analysis. Coatings 2022, 12, 1777. [Google Scholar] [CrossRef]

- Wang, D.; Wang, L.; Zhang, Y.; Lv, P.; Sun, Y.; Xiao, J. Preliminary study on a miniature laser manipulation robotic device for tooth crown preparation. Int. J. Med. Robot. Comput. Assist. Surg. 2014, 10, 482–494. [Google Scholar] [CrossRef] [PubMed]

- Toosi, A.; Arbabtafti, M.; Richardson, B. Virtual reality haptic simulation of root canal therapy. Appl. Mech. Mater. 2014, 666, 388–392. [Google Scholar] [CrossRef]

- Jain, S.; Sayed, M.E.; Ibraheem, W.I.; Ageeli, A.A.; Gandhi, S.; Jokhadar, H.F.; AlResayes, S.S.; Alqarni, H.; Alshehri, A.H.; Huthan, H.M.; et al. Accuracy Comparison between Robot-Assisted Dental Implant Placement and Static/Dynamic Computer-Assisted Implant Surgery: A Systematic Review and Meta-Analysis of In Vitro Studies. Medicina 2024, 60, 11. [Google Scholar] [CrossRef] [PubMed]

- Bellman, R. Artificial Intelligence: Can Computers Think? Thomson Course Technology; Boyd & Fraser: Boston, MA, USA, 1978; 146p. [Google Scholar]

- Akst, J. A primer: Artificial intelligence versus neural networks. Inspiring Innovation. The Scientist Exploring Life, 1 May 2019; 65802. [Google Scholar]

- Kozan, N.M.; Kotsyubynska, Y.Z.; Zelenchuk, G.M. Using the artificial neural networks for identification unknown person. IOSR J. Dent. Med. Sci. 2017, 1, 107–113. [Google Scholar]

- Khanagar, S.B.; Al-ehaideb, A.; Maganur, P.C.; Vishwanathaiah, S.; Patil, S.; Baeshen, H.A.; Sarode, S.C.; Bhandi, S. Developments, application, and performance of artificial intelligence in dentistry—A systematic review. J. Dent. Sci. 2021, 16, 508–522. [Google Scholar] [CrossRef] [PubMed]

- Sikka, A.; Jain, A. Sex determination of mandible: A morphological and morphometric analysis. Int. J. Contemp. Med. Res. 2016, 3, 1869–1872. [Google Scholar]

- Kaladhar, D.; Chandana, B.; Kumar, P. Predicting Cancer Survivability Using Classification Algorithms. Int. J. Res. Rev. Comput. Sci. 2011, 2, 340–343. [Google Scholar]

- Kalappanavar, A.; Sneha, S.; Annigeri, R.G. Artificial intelligence: A dentist’s perspective. Pathol. Surg. 2018, 5, 2–4. [Google Scholar] [CrossRef]

- Krishna, A.B.; Tanveer, A.; Bhagirath, P.V.; Gannepalli, A. Role of artificial intelligence in diagnostic oral pathology-A modern approach. J. Oral Maxillofac. Pathol. 2020, 24, 152–156. [Google Scholar] [CrossRef] [PubMed]

- Katne, T.; Kanaparthi, A.; Gotoor, S.; Muppirala, S.; Devaraju, R.; Gantala, R. Artificial intelligence: Demystifying dentistry—The future and beyond. Int. J. Contemp. Med. Surg. Radiol. 2019, 4, 4. [Google Scholar] [CrossRef]

- Tuzoff, D.V.; Tuzova, L.N.; Bornstein, M.M.; Krasnov, A.S.; Kharchenko, M.A.; Nikolenko, S.I.; Sveshnikov, M.M.; Bednenko, G.B. Tooth detection and numbering in panoramic radiographs using convolutional neural networks. Dentomaxillofac. Radiol. 2019, 48, 20180051. [Google Scholar] [CrossRef]

- Murata, M.; Ariji, Y.; Ohashi, Y.; Kawai, T.; Fukuda, M.; Funakoshi, T.; Kise, Y.; Nozawa, M.; Katsumata, A.; Fujita, H.; et al. Deep-learning classification using convolutional neural network for evaluation of maxillary sinusitis on panoramic radiography. Oral Radiol. 2019, 35, 301–307. [Google Scholar] [CrossRef] [PubMed]

- Ekert, T.; Krois, J.; Meinhold, L.; Elhennawy, K.; Emara, R.; Golla, T.; Schwendicke, F. Deep Learning for the Radiographic Detection of Apical Lesions. J. Endod. 2019, 45, 917–922.e5. [Google Scholar] [CrossRef]

- Deif, M.A.; Attar, H.; Amer, A.; Elhaty, I.A.; Khosravi, M.R.; Solyman, A.A.A. Diagnosis of Oral Squamous Cell Carcinoma Using Deep Neural Networks and Binary Particle Swarm Optimization on Histopathological Images: An AIoMT Approach. Comput. Intell. Neurosci. 2022, 2022, 6364102. [Google Scholar] [CrossRef]

- Yang, S.Y.; Li, S.H.; Liu, J.L.; Sun, X.Q.; Cen, Y.Y.; Ren, R.Y.; Ying, S.C.; Chen, Y.; Zhao, Z.H.; Liao, W. Histopathology-Based Diagnosis of Oral Squamous Cell Carcinoma Using Deep Learning. J. Dent. Res. 2022, 101, 1321–1327. [Google Scholar] [CrossRef]

- Lee, K.S.; Jung, S.K.; Ryu, J.J.; Shin, S.W.; Choi, J. Evaluation of transfer learning with deep convolutional neural networks for screening osteoporosis in dental panoramic radiographs. J. Clin. Med. 2020, 9, 392. [Google Scholar] [CrossRef]

- Saghiri, M.A.; Garcia-Godoy, F.; Gutmann, J.L.; Lotfi, M.; Asgar, K. The reliability of artificial neural network in locating minor apical foramen: A cadaver study. J. Endod. 2012, 38, 1130–1134. [Google Scholar] [CrossRef] [PubMed]

- Saghiri, M.A.; Asgar, K.; Boukani, K.K.; Lotfi, M.; Aghili, H.; Delvarani, A.; Karamifar, K.; Saghiri, A.M.; Mehrvarzfar, P.; Garcia-Godoy, F. A new approach for locating the minor apical foramen using an artificial neural network. Int. Endod. J. 2012, 45, 257–265. [Google Scholar] [CrossRef] [PubMed]

- Hatvani, J.; Horváth, A.; Michetti, J.; Basarab, A.; Kouamé, D.; Gyöngy, M. Deep learning-based super-resolution applied to dental computed tomography. IEEE Trans. Rad. Plasma Med. Sci. 2019, 3, 120–128. [Google Scholar] [CrossRef]

- Hiraiwa, T.; Ariji, Y.; Fukuda, M.; Kise, Y.; Nakata, K.; Katsumata, A.; Fujita, H.; Ariji, E. A deep-learning artificial intelligence system for assessment of root morphology of the mandibular first molar on panoramic radiography. Dentomaxillofac. Radiol. 2019, 48, 20180218. [Google Scholar] [CrossRef]

- Vila-Blanco, N.; Carreira, M.J.; Varas-Quintana, P.; Balsa-Castro, C.; Tomas, I. Deep neural networks for chronological age estimation from OPG images. IEEE Trans. Med. Imaging 2020, 39, 2374–2384. [Google Scholar] [CrossRef]

- Vishwanathaiah, S.; Fageeh, H.N.; Khanagar, S.B.; Maganur, P.C. Artificial Intelligence Its Uses and Application in Pediatric Dentistry: A Review. Biomedicines 2023, 11, 788. [Google Scholar] [CrossRef]

- Sukegawa, S.; Yoshii, K.; Hara, T.; Tanaka, F.; Yamashita, K.; Kagaya, T.; Nakano, K.; Takabatake, K.; Kawai, H.; Nagatsuka, H.; et al. Is attention branch network effective in classifying dental implants from panoramic radiograph images by deep learning? PLoS ONE 2022, 17, e0269016. [Google Scholar] [CrossRef]

- Kong, H.J.; Eom, S.H.; Yoo, J.Y.; Lee, J.H. Identification of 130 Dental Implant Types Using Ensemble Deep Learning. Int. J. Oral Maxillofac. Implants 2023, 38, 150–156. [Google Scholar] [CrossRef] [PubMed]

- Kohlakala, A.; Coetzer, J.; Bertels, J.; Vandermeulen, D. Deep learning-based dental implant recognition using synthetic X-ray images. Med. Biol. Eng. Comput. 2022, 60, 2951–2968. [Google Scholar] [CrossRef]

- Kurt Bayrakdar, S.; Orhan, K.; Bayrakdar, I.S.; Bilgir, E.; Ezhov, M.; Gusarev, M.; Shumilov, E. A deep learning approach for dental implant planning in cone-beam computed tomography images. BMC Med. Imaging 2021, 21, 86. [Google Scholar] [CrossRef] [PubMed]

- Moufti, M.A.; Trabulsi, N.; Ghousheh, M.; Fattal, T.; Ashira, A.; Danishvar, S. Developing an artificial intelligence solution to autosegment the edentulous mandibular bone for implant planning. Eur. J. Dent. 2023, 17, 1330–1337. [Google Scholar] [CrossRef] [PubMed]

- Howe, M.S.; Keys, W.; Richards, D. Long-term (10-year) dental implant survival: A systematic review and sensitivity meta-analysis. J. Dent. 2019, 84, 9–21. [Google Scholar] [CrossRef] [PubMed]

- Simonis, P.; Dufour, T.; Tenenbaum, H. Long-term implant survival and success: A 10-16-year follow-up of non-submerged dental implants. Clin. Oral Implants Res. 2010, 21, 772. [Google Scholar] [CrossRef] [PubMed]

- Romeo, E.; Lops, D.; Margutti, E.; Ghisolfi, M.; Chiapasco, M.; Vogel, G. Long-term survival and success of oral implants in the treatment of full and partial arches: A 7-year prospective study with the ITI dental implant system. Int. J. Oral Maxillofac. Implants 2004, 19, 247–249. [Google Scholar] [PubMed]

- Papaspyridakos, P.; Mokti, M.; Chen, C.J.; Benic, G.I.; Gallucci, G.O.; Chronopoulos, V. Implant and prosthodontic survival rates with implant fixed complete dental prostheses in the edentulous mandible after at least 5 years: A systematic review. Clin. Implant. Dent. Relat. Res. 2014, 16, 705–717. [Google Scholar] [CrossRef] [PubMed]

- Jokstad, A.; Braegger, U.; Brunski, J.B.; Carr, A.B.; Naert, I.; Wennerberg, A. Quality of dental implants. Int. Dent. J. 2003, 53, 409–443. [Google Scholar] [CrossRef]

- Sailer, I.; Karasan, D.; Todorovic, A.; Ligoutsikou, M.; Pjetursson, B.E. Prosthetic failures in dental implant therapy. Periodontol. 2000 2022, 88, 130–144. [Google Scholar] [CrossRef]

- Lee, D.W.; Kim, N.H.; Lee, Y.; Oh, Y.A.; Lee, J.H.; You, H.K. Implant fracture failure rate and potential associated risk indicators: An up to 12-year retrospective study of implants in 5124 patients. Clin. Oral Implants Res. 2019, 30, 206–217. [Google Scholar] [CrossRef] [PubMed]

- Tabrizi, R.; Behnia, H.; Taherian, S.; Hesami, N. What are the incidence and factors associated with implant fracture? J. Oral Maxillofac. Surg. 2017, 75, 1866–1872. [Google Scholar] [CrossRef] [PubMed]

- Srinivasan, M.; Meyer, S.; Mombelli, A.; Müller, F. Dental implants in the elderly population: A systematic review and meta-analysis. Clin. Oral Implants Res. 2017, 28, 920–930. [Google Scholar] [CrossRef]

- Al-Wahadni, A.; Barakat, M.S.; Abu Afifeh, K.; Khader, Y. Dentists’ most common practices when selecting an implant system. J. Prosthodont. 2018, 27, 250–259. [Google Scholar] [CrossRef] [PubMed]

- Tyndall, D.A.; Price, J.B.; Tetradis, S.; Ganz, S.D.; Hildebolt, C.; Scarfe, W.C.; American Academy of Oral and Maxillofacial Radiology. Position statement of the American Academy of Oral and Maxillofacial Radiology on selection criteria for the use of radiology in dental implantology with emphasis on cone beam computed tomography. Oral Surg. Oral Med. Oral Pathol. Oral Radiol. 2012, 113, 817–826. [Google Scholar] [CrossRef] [PubMed]

- Hadj Saïd, M.; Le Roux, M.K.; Catherine, J.H.; Lan, R. Development of an Artificial Intelligence Model to Identify a Dental Implant from a Radiograph. Int. J. Oral Maxillofac. Surg. 2020, 36, 1077–1082. [Google Scholar] [CrossRef]

- Takahashi, T.; Nozaki, K.; Gonda, T.; Mameno, T.; Wada, M.; Ikebe, K. Identification of dental implants using deep learning-pilot study. Int. J. Implant. Dent. 2020, 6, 53. [Google Scholar] [CrossRef]

- Park, W.; Huh, J.K.; Lee, J.H. Automated deep learning for classification of dental implant radiographs using a large multi-center dataset. Sci. Rep. 2023, 13, 4862. [Google Scholar] [CrossRef]

- Da Mata Santos, R.P.; Vieira Oliveira Prado, H.E.; Soares Aranha Neto, I.; Alves de Oliveira, G.A.; Vespasiano Silva, A.I.; Zenóbio, E.G.; Manzi, F.R. Automated Identification of Dental Implants Using Artificial Intelligence. Int. J. Oral Maxillofac. Implants 2021, 36, 918–923. [Google Scholar] [CrossRef] [PubMed]

- Sukegawa, S.; Yoshii, K.; Hara, T.; Yamashita, K.; Nakano, K.; Yamamoto, N.; Nagatsuka, H.; Furuki, Y. Deep Neural Networks for Dental Implant System Classification. Biomolecules 2020, 10, 984. [Google Scholar] [CrossRef] [PubMed]

- Lee, J.H.; Jeong, S.N. Efficacy of deep convolutional neural network algorithm for the identification and classification of dental implant systems, using panoramic and periapical radiographs: A pilot study. Medicine 2020, 99, e20787. [Google Scholar] [CrossRef]

- Sukegawa, S.; Yoshii, K.; Hara, T.; Matsuyama, T.; Yamashita, K.; Nakano, K.; Takabatake, K.; Kawai, H.; Nagatsuka, H.; Furuki, Y. Multi-Task Deep Learning Model for Classification of Dental Implant Brand and Treatment Stage Using Dental Panoramic Radiograph Images. Biomolecules 2021, 11, 815. [Google Scholar] [CrossRef] [PubMed]

- Lee, J.-H.; Kim, Y.-T.; Lee, J.-B.; Jeong, S.-N. A Performance Comparison between Automated Deep Learning and Dental Professionals in Classification of Dental Implant Systems from Dental Imaging: A Multi-Center Study. Diagnostics 2020, 10, 910. [Google Scholar] [CrossRef] [PubMed]

- Lee, D.-W.; Kim, S.-Y.; Jeong, S.-N.; Lee, J.-H. Artificial Intelligence in Fractured Dental Implant Detection and Classification: Evaluation Using Dataset from Two Dental Hospitals. Diagnostics 2021, 11, 233. [Google Scholar] [CrossRef] [PubMed]

- Kim, J.-E.; Nam, N.-E.; Shim, J.-S.; Jung, Y.-H.; Cho, B.-H.; Hwang, J.J. Transfer Learning via Deep Neural Networks for Implant Fixture System Classification Using Periapical Radiographs. J. Clin. Med. 2020, 9, 1117. [Google Scholar] [CrossRef] [PubMed]

- Benakatti, V.B.; Nayakar, R.P.; Anandhalli, M. Machine learning for identification of dental implant systems based on shape—A descriptive study. J. Indian Prosthodont. Soc. 2021, 21, 405–411. [Google Scholar] [CrossRef] [PubMed]

- Jang, W.S.; Kim, S.; Yun, P.S.; Jang, H.S.; Seong, Y.W.; Yang, H.S.; Chang, J.S. Accurate detection for dental implant and peri-implant tissue by transfer learning of faster R-CNN: A diagnostic accuracy study. BMC Oral Health 2022, 22, 591. [Google Scholar] [CrossRef] [PubMed]

- Kong, H.J. Classification of dental implant systems using cloud-based deep learning algorithm: An experimental study. J. Yeungnam Med. Sci. 2023, 40 (Suppl.), S29–S36. [Google Scholar] [CrossRef] [PubMed]

- Kim, H.S.; Ha, E.G.; Kim, Y.H.; Jeon, K.J.; Lee, C.; Han, S.S. Transfer learning in a deep convolutional neural network for implant fixture classification: A pilot study. Imaging Sci. Dent. 2022, 52, 219–224. [Google Scholar] [CrossRef] [PubMed]

- Lee, J.H.; Kim, Y.T.; Lee, J.B.; Jeong, S.N. Deep learning improves implant classification by dental professionals: A multi-center evaluation of accuracy and efficiency. J. Periodontal Implant Sci. 2022, 52, 220–229. [Google Scholar] [CrossRef]

- Kong, H.J.; Yoo, J.Y.; Lee, J.H.; Eom, S.H.; Kim, J.H. Performance evaluation of deep learning models for the classification and identification of dental implants. J. Prosthet. Dent. 2023, in press. [CrossRef] [PubMed]

- Park, W.; Schwendicke, F.; Krois, J.; Huh, J.K.; Lee, J.H. Identification of Dental Implant Systems Using a Large-Scale Multicenter Data Set. J. Dent. Res. 2023, 102, 727–733. [Google Scholar] [CrossRef] [PubMed]

- Hsiao, C.Y.; Bai, H.; Ling, H.; Yang, J. Artificial Intelligence in Identifying Dental Implant Systems on Radiographs. Int. J. Periodontics Restor. Dent. 2023, 43, 363–368. [Google Scholar]

- Moher, D.; Shamseer, L.; Clarke, M.; Ghersi, D.; Liberati, A.; Petticrew, M.; Shekelle, P.; Stewart, L.A.; PRISMA-P Group. Preferred reporting items for systematic review and meta-analysis protocols (PRISMA-P) 2015 statement. Syst. Rev. 2015, 4, 1. [Google Scholar] [CrossRef] [PubMed]

- Whiting, P.F.; Rutjes, A.W.; Westwood, M.E.; Mallett, S.; Deeks, J.J.; Reitsma, J.B.; Leeflang, M.M.; Sterne, J.A.; Bossuyt, P.M.; QUADAS-2 Group (2011). QUADAS-2: A revised tool for the quality assessment of diagnostic accuracy studies. Ann. Intern. Med. 2011, 155, 529–536. [Google Scholar] [CrossRef] [PubMed]

| Inclusion Criteria | Exclusion Criteria |
|---|---|
| Literature in English language | Literature in a language other than English |
| Human clinical studies | Animal studies, cadaver studies, technical reports, case reports, posters, case series, reports, commentaries, reviews, unpublished abstracts and dissertations, incomplete trials, and non-peer reviewed articles |
| Articles published between January 2008 and December 2023 | Articles published prior to January 2008 |
| Studies evaluating the diagnostic accuracy of artificial intelligence tools in the identification and classification of dental implants | Studies evaluating the accuracy of artificial intelligence tools in identification of other dental/oral structures |
| Studies in which three or more implant models were identified | Studies having only the abstract and not the full text |
| | Studies in which fewer than three implant models were identified |
| | Studies discussing artificial intelligence tools under trial |
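
The eligibility criteria above can be read as a set of checks applied to each screened record. The following is a minimal sketch under stated assumptions: the `Record` fields, the design labels, and the reason strings are hypothetical and only illustrate how the language, study design, publication window, topic, implant-model count, and full-text criteria might be encoded. It is not the screening procedure used by the authors.

```python
from dataclasses import dataclass
from datetime import date

# Publication window stated in the criteria (January 2008 to December 2023).
WINDOW_START = date(2008, 1, 1)
WINDOW_END = date(2023, 12, 31)

# Abbreviated, illustrative labels for the excluded study designs.
EXCLUDED_DESIGNS = {
    "animal study", "cadaver study", "technical report", "case report",
    "case series", "poster", "commentary", "review", "dissertation",
}


@dataclass(frozen=True)
class Record:
    """A screened record; all field names are hypothetical."""
    language: str
    design: str
    published: date
    evaluates_implant_ai: bool  # AI accuracy for implant identification/classification
    implant_models: int         # number of implant models identified
    has_full_text: bool


def exclusion_reasons(r: Record) -> list[str]:
    """Return every exclusion criterion the record meets (empty if eligible)."""
    reasons = []
    if r.language.lower() != "english":
        reasons.append("language other than English")
    if r.design.lower() in EXCLUDED_DESIGNS:
        reasons.append("excluded study design")
    if not (WINDOW_START <= r.published <= WINDOW_END):
        reasons.append("published outside January 2008 to December 2023")
    if not r.evaluates_implant_ai:
        reasons.append("does not evaluate AI accuracy for dental implants")
    if r.implant_models < 3:
        reasons.append("fewer than three implant models")
    if not r.has_full_text:
        reasons.append("abstract only")
    return reasons


def is_eligible(r: Record) -> bool:
    return not exclusion_reasons(r)
```

Returning the list of reasons, rather than a single boolean, keeps the record of why each study was excluded, which is what a screening flow diagram usually needs.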

| Database | Combination of Search Terms and Strategy | Number of Titles |
|---|---|---|
| MEDLINE-PubMed | (((((((dental implants[MeSH Terms]) OR (dental implantation[MeSH Terms])) OR (dental implant*)) OR (dental implant system*)) OR (Dental Implant System Classification)) OR (dental implant fixture)) OR (dental implant fixture classification)) AND ((((((((((((((dental diagnostic imaging) OR (dental digital radiography[MeSH Terms])) OR (dental radiography[MeSH Terms])) OR (oral digital radiography) OR (dental Digital radiograph))) OR (Panoramic image*)) OR (panoramic radiography[MeSH Terms])) OR (Periapical images)) OR (dental radiology)) OR (periapical radiograph*)) OR (dental X-ray image)) OR (synthetic dental X-ray image)) OR (OPG)) OR (Orthopantomogram)) OR (Intro oral radiograph) AND ((((((((((((((((artificial intelligence[MeSH Terms]) OR (machine learning[MeSH Terms])) OR (neural networks computer[MeSH Terms])) OR (algorithms[MeSH Terms])) OR (deep learning)) OR (supervised machine learning)) OR (Automated deep learning)) OR (Object detection)) OR (Yolov3)) OR (object detection algorithm)) OR (convolutional neural network*)) OR (Deep Neural Network*)) OR (multi-task learning)) OR (deep convolutional neural network)) OR (Transfer Learning)) OR (attention branch network)) OR (Ensemble Deep Learning) AND (((((((((sensitivity and specificity[MeSH Terms]) OR (Accuracy)) OR (sensitivity)) OR (specificity)) OR (Positive Predictive Value*)) OR (Negative Predictive Value*)) OR (Precision)) OR (Recall)) OR (F1 score)) OR (Area under receiver operating characteristics curve) Filters: Humans, English, from 1 January 2008 to 31 December 2023 | 119 |
| Scopus | (“dental implants” OR “dental implantation” OR “dental implant*” OR “dental implant system*” OR “Dental Implant System Classification” OR “dental implant fixture” OR “dental implant fixture classification”) AND (“dental diagnostic imaging” OR “dental digital radiography” OR “dental radiography” OR “oral digital radiography” OR “dental Digital radiograph” OR “Panoramic image*” OR “panoramic radiography” OR “Periapical images” OR “dental radiology” OR “periapical radiograph*” OR “dental X-ray image” OR “synthetic dental X-ray image” OR “OPG” OR “Orthopantomogram” OR “Intro oral radiograph”) AND (“artificial intelligence” OR “machine learning” OR “neural networks computer” OR “algorithms” OR “deep learning” OR “supervised machine learning” OR “Automated deep learning” OR “Object detection” OR “Yolov3” OR “object detection algorithm” OR “convolutional neural network*” OR “Deep Neural Network*” OR “multi-task learning” OR “deep convolutional neural network” OR “Transfer Learning” OR “attention branch network” OR “Ensemble Deep Learning”) AND (“sensitivity and specificity” OR “Accuracy” OR “sensitivity” OR “specificity” OR “Positive Predictive Value*” OR “Negative Predictive Value*” OR “Precision” OR “Recall” OR “F1 score” OR “Area under receiver operating characteristics curve”) AND PUBYEAR > 2008 AND PUBYEAR <2023 AND (LIMIT-TO (SUBJAREA, “DENT”)) AND (LIMIT-TO (DOCTYPE, “ar”)) AND (LIMIT-TO (LANGUAGE, “English”)) AND (LIMIT-TO(SRCTYPE, “j”)) | 205 |
| Web of Science | #1 (P) TS = (‘dental implants’ OR ‘dental implantation’ OR ‘dental implant*’ OR ‘dental implant system*’ OR ‘Dental Implant System Classification’ OR ‘dental implant fixture’ OR ‘dental implant fixture classification’ OR ‘dental diagnostic imaging’ OR ‘dental digital radiography’ OR ‘dental radiography’ OR ‘oral digital radiography’ OR ‘dental Digital radiograph’ OR ‘Panoramic image*’ OR ‘panoramic radiography’ OR ‘Periapical images’ OR ‘dental radiology’ OR ‘periapical radiograph*’ OR ‘dental X-ray image’ OR ‘synthetic dental X-ray image’ OR ‘OPG’ OR Orthopantomogram OR ‘Intro oral radiograph’) #2 (I) TS = (‘artificial intelligence’ OR ‘machine learning’ OR ‘neural networks computer’ OR algorithms OR ‘deep learning’ OR ‘supervised machine learning’ OR ‘Automated deep learning’ OR ‘Object detection’ OR ‘Yolov3’ OR ‘object detection algorithm’ OR ‘convolutional neural network*’ OR ‘Deep Neural Network*’ OR ‘multi-task learning’ OR ‘deep convolutional neural network’ OR ‘Transfer Learning’ OR ‘attention branch network’ OR ‘Ensemble Deep Learning’) #3 (O) TS = (‘sensitivity and specificity’ OR Accuracy OR sensitivity OR specificity OR ‘Positive Predictive Value*’ OR ‘Negative Predictive Value*’ OR Precision OR Recall OR ‘F1 score’ OR ‘Area under receiver operating characteristics curve’) #3 AND #2 AND #1 Indexes = SCI-EXPANDED, SSCI, A&HCI, CPCI-S, CPCI-SSH, ESCI, CCR-EXPANDED, IC Timespan = January 2008 to December 2023 and English (Languages) | 8 |
| Cochrane Library | #1 MeSH descriptor: [Dental Implants] explode all trees #2 MeSH descriptor: [Dental Implantation] explode all trees #3 dental implant* #4 dental implant system* #5 Dental Implant System Classification #6 dental implant fixture #7 dental implant fixture classification #8 dental diagnostic imaging #9 MeSH descriptor: [Radiography, Dental, Digital] explode all trees #10 MeSH descriptor: [Radiography, Dental] explode all trees #11 oral digital radiography #12 dental Digital radiograph #13 Panoramic image* #14 panoramic radiography #15 Periapical images #16 dental radiology #17 periapical radiograph* #18 dental X-ray image #19 synthetic dental X-ray image #20 OPG #21 Orthopantomogram #22 Intro oral radiograph #23 MeSH descriptor: [Artificial Intelligence] explode all trees #24 MeSH descriptor: [Machine Learning] explode all trees #25 MeSH descriptor: [Neural Networks, Computer] explode all trees #26 MeSH descriptor: [Algorithms] explode all trees #27 deep learning #28 supervised machine learning #29 Automated deep learning #30 Object detection #31 Yolov3 #32 object detection algorithm #33 convolutional neural network* #34 Deep Neural Network* #35 multi-task learning #36 deep convolutional neural network #37 Transfer Learning #38 attention branch network #39 Ensemble Deep Learning #40 MeSH descriptor: [Sensitivity and Specificity] explode all trees #41 Accuracy #42 sensitivity #43 specificity #44 Positive Predictive Value* #45 Negative Predictive Value* #46 Precision #47 Recall #48 F1 score #49 Area under receiver operating characteristics curve #50 #1 OR #2 OR #3 OR #4 OR #5 OR #6 OR #7 #51 #8 OR #9 OR #10 OR #11 OR #12 OR #13 OR #14 OR #15 OR #16 OR #17 OR #18 OR #19 OR #20 OR #21 OR #22 #52 #23 OR #24 OR #25 OR #26 OR #27 OR #28 OR #29 OR #30 OR #31 OR #32 OR #33 OR #34 OR #35 OR #36 OR #37 OR #38 OR #39 #53 #40 OR #41 OR #42 OR #43 OR #44 OR #45 OR #46 OR #47 OR #48 OR #49 #54 #50 AND #51 AND #52 AND #53 | 6 |
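
The search strings above share one structure: synonyms within a concept block are joined with OR, and the four blocks (implants, imaging, AI method, accuracy outcome) are joined with AND. A minimal sketch of that assembly is shown below. The helper names `or_block` and `build_query` are hypothetical, the term lists are abbreviated from the table, and the output uses straight quotes in a Scopus-like style without any database-specific filters.

```python
from typing import Iterable, Sequence


def or_block(terms: Iterable[str]) -> str:
    """Join the synonyms of one concept with OR, quoting each phrase."""
    return "(" + " OR ".join(f'"{t}"' for t in terms) + ")"


def build_query(blocks: Sequence[Iterable[str]]) -> str:
    """Join the concept blocks with AND."""
    return " AND ".join(or_block(b) for b in blocks)


# Abbreviated term lists taken from the table; the full lists are longer.
POPULATION = ["dental implants", "dental implantation", "dental implant*"]
IMAGING = ["dental radiography", "panoramic radiography", "periapical radiograph*"]
INTERVENTION = ["artificial intelligence", "machine learning", "deep learning"]
OUTCOME = ["sensitivity and specificity", "Accuracy", "F1 score"]

query = build_query([POPULATION, IMAGING, INTERVENTION, OUTCOME])
print(query)
```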

| Author, Year, Country | Algorithm Network Architecture and Name | Architecture Depth (Number of Layers), Number of Training Epochs, and Learning Rate | Type of Radiographic Image | Patient Data Collection/X-ray Collection Duration | Number of X-rays/Implant Images Evaluated (N) | Number and Names of Implant Brands and Models Evaluated | Comparator | Test Group and Training/Validation Number and Ratio | Accuracy Reported | Authors' Suggestions/Conclusions |
|---|---|---|---|---|---|---|---|---|---|---|
| Kong et al., 2023, Republic of Korea [61] | - 2 DL - YOLOv5 - YOLOv7 | - Training Epochs: YOLOv5: 146, 184, 200 YOLOv7: 200 | Pano | 2001 to 2021 | N = 14,037 | Implant models: N = 130 * Implant design classification: 1. Coronal one-third 2. Middle one-third 3. Apical part | EORS | Test Group: 20% Training Group: 80% | mAP (area under the precision–recall curve): YOLOv5: Implant-dataset-1: 0.929 Implant-dataset-2: 0.940 Implant-dataset-3: 0.873 YOLOv7: Implant-dataset-1: 0.931 Implant-dataset-2: 0.984 Implant-dataset-3: 0.884 YOLOv7 Implant-dataset-1: IPA: 0.986 IPA + Magnification ×2: 0.988 IPA + Magnification ×4: 0.986 | mAP: YOLOv7 > YOLOv5 The tested DL has a high accuracy |
| Kong, 2023, Republic of Korea [58] | - Fine-tuned CNN - Google automated machine learning (AutoML) Vision | Training: 32 node hours | PA | January 2005 to December 2019 | N = 4800 | Implant Brands: N = 3 (A) Osstem Implant (B) Biomet 3i LLC (C) Dentsply Sirona Implant models: N = 4 (1) Osstem TSIII (25%) (2) Osstem USII (25%) (3) Biomet 3i Osseotite External (25%) (4) Dentsply Sirona Xive (25%) | EORS | Test Group: 10% Training Group: 80% Fine-tuning Group:10% | Overall Accuracy: 0.981 Precision: 0.963 Recall: 0.961 Specificity: 0.985 F1 score: 0.962 | Tested fine-tuned CNN showed high accuracy in the classification of DISs |
| Park et al., 2023, Republic of Korea [62] | - Fine-tuned and pretrained DL - ResNet-50 | - Depth: 50 layers | PA and Pano | NM | N = 150,733 PA (24.8%) and Pano (75.2%) | Implant Brands: N = 10 (A) Neobiotech (n = 14.1%) (B) NB (n = 2.41%) (C) Dentsply (n = 10.14%) (D) Dentium (n = 27.26%) (E) Dio (n = 1.01%) (F) Megagen (n = 5.17%) (G) ST (n = 3.30%) (H) Shinhung (n = 2.23%) (I) Osstem (n = 28.47%) (J) Warantec (n = 5.86%) Implant Models: N = 25 (A) Neobiotech: (1) IS I 1, (2) IS II, (3) IS III, (4) EB; (B) NB: (1) Branemark; (C) Dentsply: (1) Astra, (2) Xive; (D) Dentium: (1) Implantium, (2) Superline; (E) Dio: (1) UF, (2) UF II; (F) Megagen: (1) Any ridge, (2) Anyone internal, (3) Anyone external, (4) Exfeel external; (G) ST: (1) TS standard, (2) TS standard plus, (3) Bone level; (H) Shinhung: (1) Luna; (I) Osstem: (1) GS II, (2) SS II, (3) TS III, (4) US II, (5) US III; (J) Warantec: (1) Hexplant | DL vs. 28 dental professionals (9 dentists specialized in implantology and 19 dentists not specialized in implantology) | Training Group: 80% Validation Group: 10% Test Group: 10% | Accuracy (1) DL: (a) Both Pano and PA: 82.3% (95% CI, 78.0–85.9%) (b) PA: 83.8% (95% CI, 79.6–87.2%) (c) Pano: 73.3% (95% CI, 68.5–77.6%) (2) All dental professionals: (a) Both Pano and PA: 23.5% ± 18.5 (b) PA: 26.2% ± 18.2 (c) Pano: 24.5% ± 19.0 (3) Dentist specialized in Implantology: (a) Both Pano and PA: 43.3% ± 20.4 (b) PA: 43.3% ± 19.7 (c) Pano: 43.2% ± 21.2 (4) Dentist not specialized in Implantology: (a) Both Pano and PA: 16.8% ± 9 (b) PA: 18.1% ± 9.9 (c) Pano: 15.6% ± 8.5 Deep learning (For both Pano and PA) AUC: 0.823 Sensitivity: 80.0% Specificity: 84.5% PPV: 83.8% NPV: 80.9% | Classification accuracy performance of DL was significantly superior to that of the dental professionals |
| Hsiao et al., 2023, USA [63] | - 10 CNN architectures (1) MnasNet (2) ShuffleNet (3) MobileNet (4) AlexNet (5) VGG (6) ResNet (7) DenseNet (8) SqueezeNet (9) ResNeXt (10) Wide ResNet | - Learning rate: 0.001 - For training accuracy, the CNN assessed data 90 times per image | PA | January 2011 to January 2019 | N = 788 | Implant Brands: N = 3 (A) BioHorizons (22.84%) (B) ST (34.51%) (C) NB (42.63%) Implant Models (A) BioHorizons: (1) Legacy implant Tapered Pro; (B) ST: (1) Bone Level, Bone Level Tapered, Standard Straumann, Tapered Effect; (C) NB: (1) Active, (2) Parallel, (3) Replace, (4) Replace Select Straight, (5) Replace Select Tapered, (6) Speedy Groovy, (7) Speedy Replace | EORS | Training Group: 75% Test Group: 25% | Overall implant-identification Accuracy: >90% Test accuracy (1) MnasNet: 81.89% (2) ShuffleNet: 96.85% (3) MobileNet: 92.68% (4) AlexNet: 94.35% (5) VGG: 92.94% (6) ResNet: 96.43% (7) DenseNet: 96.41% (8) SqueezeNet: 91.55% (9) ResNeXt: 93.90% (10) Wide ResNet: 92.01% | Tested CNN has high accuracy and speed |
| Park et al., 2023, Republic of Korea [48] | - Customized automatic DL - Neuro-T version 3.0.1 | - Training epochs: 500 | PA and Pano | NM | N = 156,965 (Pano: 116,756; PA: 40,209) | Implant Brands: N = 10 (A) Neobiotech, (B) NB, (C) Dentsply, (D) Dentium, (E) Dioimplant, (F) Megagen, (G) ST, (H) Shinhung, (I) Osstem, (J) Warantec Implant models: N = 27 1. IS I (Neobiotech) (5%); 2. IS II (Neobiotech) (1.83%); 3. IS III (Neobiotech) (5.18%); 4. EB (Neobiotech) (1.53%); 5. Branemark (NB) (2.32%); 6. Astra (Dentsply) (8.90%); 7. Xive (Dentsply) (0.84%); 8. Implantium (Dentium) (12.20%); 9. Superline (Dentium) (13.98%); 10. UF (Dioimplant) (0.51%); 11. UF II (Dioimplant) (0.47%); 12. Any ridge (Megagen) (0.22%); 13. Anyone internal (Megagen) (2.43%); 14. Anyone external (Megagen) (1.63%); 15. Exfeel external (Megagen) (0.69%); 16. TS standard (Straumann) (0.85%); 17. TS standard plus (Straumann) (0.66%); 18. Bone level (Straumann) (1.66%); 19. Luna (Shinhung) (2.15%); 20. GS II (Osstem) (1.10%); 21. SS II (Osstem) (0.53%); 22. TS III (Osstem) (18.96%); 23. US II (Osstem) (6.15%); 24. US III (Osstem) (0.60%); 25. Hexplant (Warantec) (5.63%); 26. Internal (Warantec) (3.68%); 27. IT (Warantec) (0.28%) | EORS | Test Group: 10% Training Group: 80% Validation Group: 10% | Overall 1. Accuracy: 88.53% 2. Precision: 85.70% 3. Recall: 82.30% 4. F1 score: 84.00% Using Pano: 1. Accuracy: 87.89% 2. Precision: 85.20% 3. Recall: 81.10% 4. F1 score: 83.10% Using PA: 1. Accuracy: 86.87% 2. Precision: 84.40% 3. Recall: 81.70% 4. F1 score: 83.00% | - DL has reliable classification accuracy - No statistically significant difference in accuracy performance between the Pano and PA - Suggestion: Additional dataset needed for confirming clinical feasibility of DL |
| Kong et al., 2023, Republic of Korea [31] | 3 DLs (1) EfficientNet (2) Res2Next (3) Ensemble model | NM | Pano | March 2001 to April 2021 | N = 45,909 | Implant Brands: N = 20 (A) Bicon; (B) BioHorizons; (C) BIOMET 3i; (D) Biotem; (E) Dental Ratio; (F) Dentis; (G) Dentium; (H) Dentsply Sirona; (I) Dio Implant; (J) Hiossen Implant; (K) IBS Implant; (L) Keystone Dental; (M) MegaGen Implant; (N) Neobiotech; (O) NB; (P) Osstem Implant; (Q) Point Implant; (R) ST; (S) Thommen Medical; (T) Zimmer Dental Implant models: N = 130 * | EORS | Test Group: 20% Training Group: 80% | Top-1 accuracy (ratio that the nearest class was predicted, and the answer was correct) (a) EfficientNet: 73.83 (b) Res2Next: 73.09 (c) Ensemble model: 75.27 Top-5 accuracy (ratio in which the five nearest classes were predicted, and the answer was among them) (a) EfficientNet: 93.84 (b) Res2Next: 93.60 (c) Ensemble model: 95.02 Precision: (a) EfficientNet: 74.61 (b) Res2Next: 77.79 (c) Ensemble model: 78.84 Recall: (a) EfficientNet: 73.83 (b) Res2Next: 73.08 (c) Ensemble model: 75.27 F1 score: (a) EfficientNet: 72.02 (b) Res2Next: 73.55 (c) Ensemble model: 74.89 | Accuracy: Ensemble model > EfficientNet > Res2Next |

| Jang et al., 2022, Republic of Korea [57] | Faster R-CNN Resnet 101 | - Training epochs: 1000 | PA | January 2016 to June 2020 | N = 300 | NM | EORS | Test Group: 20% Training Group: 80% | Classification: Precision: 0.977 Recall: 0.992 F1 score: 0.984 | Faster R-CNN model provided high-quality object detection for dental implants and peri-implant tissues |

| Kohlakala et al., 2022, South Africa [32] | DL (1) FCN-1 (2) FCN-2 | - Training epochs: 1000 | Artificially generated (simulated) X-ray images | NM | NM | Implant brands: N = 1 MIS Dental implant Implant models: N = 9 (1) Conical narrow platform V3 (2) Conical narrow platform C1 (3) Conical standard platform V3 (4) Conical wide platform C1 Internal diameter 4.00 mm (5) Internal hex narrow platform SEVEN (6) Internal hex standard platform SEVEN (7) Internal hex wide platform SEVEN (8) External hex standard platform LANCE (9) External hex wide platform | EORS | Test Group: 17–18% Training Group: 82–83% Validation: 12–13% | Semi-automated system (human jaws) Full precision: 70.52% Recall: 69.76% Accuracy: 69.76% F1 score: 69.70% Fully automated system (human jaws) Full precision: 70.55% Recall: 68.67% Accuracy: 68.67% F1 score: 67.60% | Proposed fully automated system displayed promising results for implant classification |

| Sukegawa et al., 2022, Japan [30] | CNN and CNN + ABN (1) ResNet18 (2) ResNet18 + ABN (3) ResNet50 (4) ResNet50 + ABN (5) ResNet152 (6) ResNet152 + ABN | - Depth: (1 and 2) 18 layers (3 and 4) 50 layers (5 and 6) 152 layers - Training epochs: 100 - Learning rate: 0.001 | Pano | NM | N = 10,191 | Implant brands: N = 5 (A) ZB (4.19%) (B) NB (25.21%) (C) Kyocera Co. (7.07%) (D) ST (8.94%) (E) Dentsply IH AB (54.16%) Implant models: N = 13 1. Full OSSEOTITE 4.0 (4.19%) 2. Astra EV 4.2 (8.29%) 3. Astra TX 4.0 (24.73%) 4. Astra TX 4.5 (10.93%) 5. Astra Micro Thread 4.0 (6.91%) 6. Astra Micro Thread 4.5 (3.73%) 7. Branemark Mk III 4.0 (3.48%) 8. FINESIA 4.2 (3.33%) 9. POI EX 42 (3.74%) 10. Replace Select Tapered 4.3 (6.04%) 11. Nobel Replace CC 4.3 (15.69%) 12. Straumann Tissue 4.1 (6.43%) 13. Straumann Bone Level 4.1 (2.51%) | EORS | Test dataset split Training: validation: 8:2. | Test accuracy (95% CI): (a) ResNet18: 0.9486 (b) ResNet18 + ABN: 0.9719 (c) ResNet50: 0.9578 (d) ResNet50 + ABN: 0.9511 (e) ResNet152: 0.9624 (f) ResNet152 + ABN: 0.9564 Precision: (a) ResNet18: 0.9441 (b) ResNet18 + ABN: 0.9686 (c) ResNet50: 0.9546 (d) ResNet50 + ABN: 0.9477 (e) ResNet152: 0.9575 (f) ResNet152 + ABN: 0.9514 Recall: (a) ResNet18: 0.9333 (b) ResNet18 + ABN: 0.9627 (c) ResNet50: 0.9471 (d) ResNet50 + ABN: 0.9382 (e) ResNet152: 0.9509 (f) ResNet152 + ABN: 0.9450 F1 score: (a) ResNet18: 0.9382 (b) ResNet18 + ABN: 0.9652 (c) ResNet50: 0.9498 (d) ResNet50 + ABN: 0.9416 (e) ResNet152: 0.9530 (f) ResNet152 + ABN: 0.9470 AUC: (a) ResNet18: 0.9979 (b) ResNet18 + ABN: 0.9993 (c) ResNet50: 0.9983 (d) ResNet50 + ABN: 0.9975 (e) ResNet152: 0.9985 (f) ResNet152 + ABN: 0.9955 | ResNet18 showed very high compatibility in the ABN model Accuracy: ResNet18 + ABN > ResNet152 > ResNet50 > ResNet152 + ABN > ResNet50 + ABN > ResNet18 |

| Kim et al., 2022, Republic of Korea [59] | - DCNN - YOLOv3 (Darknet-53) | - Depth: 53 layers - Training epochs: 100, 200, and 300 | PA | April 2020 to July 2021 | N = 355 | Implant models: N = 3 (1) Superline (Dentium Co. Ltd., Seoul, Republic of Korea) (34.08%) (2) TS III (Osstem Implant Co. Ltd., Seoul, Republic of Korea) (32.39%) (3) Bone Level Implant (Institut ST AG, Basel, Switzerland) (33.52%) | EORS | Test Group: 20% Training Group: 80% (10% used for validation) | At 200 epochs training: Accuracy: 96.7% Sensitivity: 94.4% Specificity: 97.9% Confidence score: 0.75 | High performance could be achieved with YOLOv3 DCNN |

| Lee et al., 2022, Republic of Korea [60] | - Automated DL - Neuro-T version 2.0.1, Neurocle Inc., Seoul, Republic of Korea | NM | Pano | NM | N = 180 | Implant models: N = 6 (1) Astra OsseoSpeed® TX (16.66%) (2) Dentium Implantium® (16.66%) (3) Dentium Superline® (16.66%) (4) Osstem TSIII® (16.66%) (5) Straumann SLActive® BL (16.66%) (6) Straumann SLActive® BLT (16.66%) | DL vs. 44 dental professionals (5 board-certified periodontists, 8 periodontology residents, 17 conservative and pediatric dentistry residents, and 14 interns) | Training Group: 80% Validation Group: 20% | Mean Accuracy - Automated DL algorithm: 80.56% - All participants (without DL assistance): 63.13% - All participants (with DL assistance): 78.88% | The DL algorithm significantly helps improve the classification accuracy of dental professionals Average accuracy: board-certified periodontists with DL > Automated DL |

| Benakatti et al., 2021, India [56] | 4 machine learning algorithms: (1) Support vector machine (SVM) (2) Logistic regression (3) K-nearest neighbor (KNN) (4) X boost classifiers | NM | Pano | January 2021 to April 2021 | NM | Implant models: N = 3 1. Osstem TS III SA Regular, 2. Osstem TS III SA Medium, 3. Noris Medical Tuff. | EORS | Test Group: 20% Training Group: 80% | Average accuracy overall: 0.67 Accuracy based on Hu moments (a) SVM:0.47 (b) Logistic regression: 0.33 (c) KNN: 0.50 (d) X boost classifiers: 0.33 Accuracy based on eigenvalues (a) SVM: 0.67 (b) Logistic regression: 0.17 (c) KNN: 0.67 (d) X boost classifiers: 0.67 | The machine learning models tested are proficient enough to identify DISs Accuracy: logistic regression > SVM > KNN > X boost |

| Santos et al., 2021, Brazil [49] | - DCNN - Stochastic Gradient Descent optimization algorithm | - Depth: 5 convolutional layers + 5 dense layers - Training epochs: 25 - Learning rate: 0.005 | PA | 2018–2020 | N = 1800 | Implant Brands and Model: N = 3 (A) ST (internal-connection) (33.33%) (B) Neodent (Neodent) (33.33%) (C) SIN Implante (SIN Morse taper with prosthetic platform) (33.33%) | EORS | Test Group: 20% Training Group: 80% | 1. Accuracy = 85.29% (78.4% to 90.5%) 2. Sensitivity = 89.9% (81.1% to 95.6%) 3. Specificity = 82.4% (73.7% to 87.3%) 4. PPV = 82.6% (74.1% to 86.6%) 5. NPV = 88.5% (79.8% to 93.9%) | - DCNN has high degree of accuracy for implant identification - Suggestion: Need for more comprehensive database |

| Sukegawa et al., 2021, Japan [52] | 5 CNNs 1. ResNet18 2. ResNet34 3. ResNet50 4. ResNet101 5. ResNet152 | - Depth: 1. 18 layers 2. 34 layers 3. 50 layers 4. 101 layers 5. 152 layers - Training epochs: 50 - Learning rate: 0.001 | Pano | January 2005 to December 2020 | N = 9767 | Implant brands: N = 5 (A) ZB, (B) Dentsply, (C) NB, (D) Kyocera, (E) ST Implant models: N = 12 1. Full OSSEOTITE 4.0 (ZB) (4.37%); 2. Astra EV 4.2 (Dentsply) (8.65%); 3. Astra TX 4.0 (Dentsply) (25.80%); 4. Astra MicroThread 4.0 (Dentsply) (7.20%); 5. Astra MicroThread 4.5 (Dentsply) (3.89%); 6. Astra TX 4.5 (Dentsply) (11.40%); 7. Brånemark Mk III 4.0 (NB) (3.63%); 8. FINESIA 4.2 (Kyocera) (3.39%); 9. Replace Select Tapered 4.3 (NB) (6.30%); 10. Nobel CC 4.3 (NB) (16.37%); 11. Straumann Tissue 4.1 (ST) (6.70%); 12. Straumann Bone Level 4.1 (ST) (2.25%) | EORS | Validation Group: 20% Training Group: 80% | Single task: accuracy: (a) ResNet18: 0.9787 (b) ResNet34: 0.9800 (c) ResNet50: 0.9800 (d) ResNet101: 0.9841 (e) ResNet152: 0.9851 Precision: (a) ResNet18: 0.9737 (b) ResNet34: 0.9790 (c) ResNet50: 0.9816 (d) ResNet101: 0.9822 (e) ResNet152: 0.9839 Recall: (a) ResNet18: 0.9726 (b) ResNet34: 0.9743 (c) ResNet50: 0.9746 (d) ResNet101: 0.9789 (e) ResNet152: 0.9809 F1 score: (a) ResNet18: 0.9724 (b) ResNet34: 0.9762 (c) ResNet50: 0.9776 (d) ResNet101: 0.9805 (e) ResNet152: 0.9820 AUC: (a) ResNet18: 0.9996 (b) ResNet34: 0.9997 (c) ResNet50: 0.9996 (d) ResNet101: 0.9997 (e) ResNet152: 0.9998 | - CNNs conferred high validity in the classification of DISs - The larger the number of parameters and the deeper the network, the better the performance for classifications |

| Lee et al., 2021, Republic of Korea [54] | - 3 DCNN (1) VGGNet-19 (2) GoogLeNet Inception-v3 (3) Automated DCNN (Neuro-T version 2.1.1) | - Depth: (1) 19 layers (2) 22 layers (3) 18 layers - Training epochs: 2000 - Learning rate: 0.0001 | PA and Pano | January 2006 to December 2019 | 251 intact and 198 fractured dental implants images (Pano: 45.2%, PA: 54.8%) | Not mentioned Intact and fractured dental implants were identified and classified | EORS | Test Group: 20% Training Group: 60% Validation Group: 20% | Overall: AUC: - VGGNet-19: 0.929 (95% CI: 0.854–0.972) - GoogLeNet Inception-v3: 0.967 (95% CI: 0.906–0.993) - Automated DCNN: 0.972 (95% CI: 0.913–0.995) Sensitivity: - VGGNet-19: 0.933 - GoogLeNet Inception-v3: 1.00 -Automated DCNN: 0.866 Specificity: - VGGNet-19: 0.933 - GoogLeNet Inception-v3: 0.866 -Automated DCNN: 0.966 Youden index: - VGGNet-19: 0.866 - GoogLeNet Inception-v3: 0.866 - Automated DCNN: 0.833 | - All tested DCNNs showed acceptable accuracy in the detection and classification of fractured dental implants - Best accuracy: Automated DCNN architecture using only PA images |

| Hadj Saïd et al., 2020, France [46] | - DCNN - Pretrained GoogLeNet Inception v3 | - Depth: 22 layers deep (27 including the pooling layers) - Training epochs: 1000 - Learning rate: 0.02 | PA and Pano | NM | N = 1206 | Implant brands: N = 3 (A) NB (49.4%), (B) ST (25.5%), (C) ZB (25%) Implant models: N = 6 1. NobelActive (21.64%); 2. Brånemark system (21.77%); 3. Straumann Bone Level (12.43%); 4. Straumann Tissue Level (13.1%); 5. Zimmer Biomet Dental Tapered Screw-Vent (12.6%); 6. SwissPlus (Zimmer) (12.43%) | EORS | Test Group: 19.9% Training and Validation Group: 80% | 1. Diagnostic accuracy = 93.8% (87.2% to 99.4%) 2. Sensitivity = 93.5% (84.2% to 99.3%) 3. Specificity = 94.2% (83.5% to 99.4%) 4. PPV = 92% (83.9% to 97.2%) 5. NPV = 91.5% (80.2% to 97.1%) | - Good performance of DCNN in implant identification - Suggestion: Creation of a giant database of implant radiographs |

| Lee et al., 2020, Republic of Korea [53] | - Automated DCNN - Neuro-T version 2.0.1 (Neurocle Inc., Republic of Korea) | - Depth: 18 layers | PA and Pano | January 2006 to May 2019 | N = 11,980 (Pano: 59.6% and PA: 40.4%) | Implant brands: N = 4 (A) Osstem implant system (46.9%) (B) Dentium implant system (40.7%) (C) Institut ST implant system (9.2%) (D) Dentsply implant system (3.2%) Implant models: N = 6 1. Astra OsseoSpeed TX (Dentsply) (3.2%) 2. Implantium (Dentium) (21%) 3. Superline (Dentium) (19.7%) 4. TSIII® (Osstem) (46.9%) 5. SLActive BL (Institut ST) (4.5%) 6. SLActive BLT (Institut ST) (4.7%) | DCNN vs. 25 dental professionals (board-certified periodontist, periodontology residents, other specialty residents) | Test Group: 20% Training Group: 80% | DCNN overall (based on 180 Images): Accuracy (AUC): 0.954 Youden index: 0.808 Sensitivity: 0.955 Specificity: 0.853 Using Pano images: AUC: 0.929 Youden index: 0.804 Sensitivity: 0.922 Specificity: 0.882 Using PA images: AUC: 0.961 Youden index: 0.802 Sensitivity: 0.955 Specificity: 0.846 AUC Dental Professionals: i. Board-certified periodontist: 0.501–0.968 ii. Periodontology residents: 0.503–0.915 iii. Other specialty residents: 0.544–0.915 | Accuracy: DCNN > Dental professionals |

| Takahashi et al., 2020, Japan [47] | - DL - Fine-tuned Yolo v3 | - Training epochs: 1000 - Learning rate: 0.01 | Pano | Feb. 2000–2020 | N = 1282 | Implant brands: N = 3 (A) NB, (B) ST, (C) GC Implant models: N = 6 1. MK III (NB); 2. MK III Groovy (NB); 3. Bone level implant (ST); 4. Genesio Plus ST (Genesio) (GC); 5. MK IV (NB); 6. Speedy Groovy (NB) | EORS | Test Group: 20% Training Group: 80% | 1. True-positive ratio: 0.50 to 0.82 2. Average precision: 0.51 to 0.85 3. Mean average precision: 0.71 4. Mean intersection over union: 0.72 | - Implants can be identified by using DL - Suggestion: More images of other implant systems will be necessary to increase the learning performance |

| Sukegawa et al., 2020, Japan [50] | - 5 DCNNs 1. Basic CNN 2. VGG16 transfer-learning model 3. Finely tuned VGG16 4. VGG19 transfer-learning model 5. Finely tuned VGG19 | - Depth: 1: 3 convolution layers, 2 and 3: 16 layers (13 convolutional layers + 3 fully connected layers), 4 and 5: 19 layers (16 convolutional layers + 3 fully connected layers) - Training epochs: 700 - Learning rate: 0.0001 | Pano | January 2005 to December 2019 | N = 8859 | Implant brands: N = 5 (A) ZB, (B) Dentsply, (C) NB, (D) Kyocera, (E) ST Implant models: N = 11 1. Full OSSEOTITE 4.0 (ZB) (4.81%); 2. Astra EV 4.2 (Dentsply) (4.79%); 3. Astra TX 4.0 (Dentsply) (28.45%); 4. Astra MicroThread 4.0 (Dentsply) (12.28%); 5. Astra MicroThread 4.5 (Dentsply) (7.87%); 6. Astra TX 4.5 (Dentsply) (4.36%); 7. Brånemark Mk III 4.0 (NB) (4.77%); 8. FINESIA 4.2 (Kyocera) (2.63%); 9. Replace Select Tapered 4.3 (NB) (5.48%); 10. Nobel CC 4.3 (NB) (18.97%); 11. Straumann Tissue 4.1 (ST) (5.53%) | EORS | Test Group: 25% Training Group: 75% | 1. Basic CNN: i. Accuracy: 0.860 ii. Precision: 0.842 iii. Recall: 0.802 iv. F1 score: 0.819 2. VGG16-transfer learning: i. Accuracy: 0.899 ii. Precision: 0.888 iii. Recall: 0.864 iv. F1 score: 0.874 3. Finely tuned VGG16: i. Accuracy: 0.935 ii. Precision: 0.928 iii. Recall: 0.907 iv. F1 score: 0.916 4. VGG19 transfer-learning: i. Accuracy: 0.880 ii. Precision: 0.873 iii. Recall: 0.840 iv. F1 score: 0.853 5. Finely tuned VGG19: i. Accuracy: 0.927 ii. Precision: 0.913 iii. Recall: 0.894 iv. F1 score: 0.902 | High accuracy demonstrated by all tested DCNNs Accuracy: Finely tuned VGG16 > Finely tuned VGG19 > VGG16-transfer learning > VGG19 transfer-learning > Basic CNN |

| Lee and Jong, 2020, Republic of Korea [51] | - DCNN - GoogLeNet Inception v3 | - Depth: 22 layers deep, 2 fully connected layers - Training epochs: 1000 | PA and Pano | January 2010 to December 2019 | N = 10,770 (Pano: 5390, PA: 5380) | Implant brands: N = 3 (A) Osstem TSIII implant system (42.71%) (B) Dentium Superline implant system (40.57%) (C) Straumann BLT implant system (16.71%) | DCNN vs. board-certified periodontist | Test Group: 20% Training Group: 60% Validation Group: 20% | i. AUC: 1. Overall: - DCNN: 0.971 (95% CI: 0.963–0.978) - Periodontist: 0.925 (95% CI: 0.913–0.935) 2. Pano: - DCNN: 0.956 (95% CI: 0.942–0.967) - Periodontist: 0.891 (95% CI: 0.871–0.909) 3. PA - DCNN: 0.979 (95% CI: 0.969–0.987) - Periodontist: 0.959 (95% CI: 0.945–0.970) ii. Sensitivity and specificity 1. Overall: - DCNN: 95.3% and 97.6% - Periodontist: 88.7% and 87.1% 2. Pano: - DCNN: 93.6% and 95.7% - Periodontist: 82.9% and 90.3% 3. PA - DCNN: 97.1% and 99.5% - Periodontist: 94.2% and 95.8% | DCNN is useful for the identification and classification of DISs Accuracy: DCNN > Periodontist (both are reliable) |

| Kim et al., 2020, Republic of Korea [55] | 5 different CNNs (1) SqueezeNet (2) GoogLeNet (3) ResNet-18 (4) MobileNet-v2 (5) ResNet-50 | - Depth: (1) 18 layers (2) 22 layers (3) 18 layers (4) 54 layers (5) 50 layers - Training epochs: 500 | PA | 2005 to 2019 | N = 801 | Implant models: N = 4 1. Brånemark Mk TiUnite 2. Dentium Implantium 3. Straumann Bone Level 4. Straumann Tissue Level | EORS | NM | Test accuracy: (a) SqueezeNet: 96% (b) GoogLeNet: 93% (c) ResNet-18: 98% (d) MobileNet-v2: 97% (e) ResNet-50: 98% Precision: (a) SqueezeNet: 0.96 (b) GoogLeNet: 0.92 (c) ResNet-18: 0.98 (d) MobileNet-v2: 0.96 (e) ResNet-50: 0.98 Recall: (a) SqueezeNet: 0.96 (b) GoogLeNet: 0.94 (c) ResNet-18: 0.98 (d) MobileNet-v2: 0.96 (e) ResNet-50: 0.98 F1 score: (a) SqueezeNet: 0.96 (b) GoogLeNet: 0.93 (c) ResNet-18: 0.98 (d) MobileNet-v2: 0.96 (e) ResNet-50: 0.98 | CNNs can classify implant fixtures with high accuracy |

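Several of the metrics tabulated above are simple functions of one another, so reported values can be sanity-checked by hand. The following is a minimal sketch, not part of any included study's method: the helper names (`f1_score`, `youden_index`, `top_k_accuracy`) and the toy label lists are assumptions made for illustration. It uses the standard definitions: F1 as the harmonic mean of precision and recall, the Youden index as sensitivity + specificity − 1, and top-k accuracy as the share of samples whose true class is among the k highest-ranked predictions.

```python
def f1_score(precision: float, recall: float) -> float:
    """Harmonic mean of precision and recall (same scale as the inputs)."""
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)


def youden_index(sensitivity: float, specificity: float) -> float:
    """Youden's J statistic: sensitivity + specificity - 1."""
    return sensitivity + specificity - 1.0


def top_k_accuracy(ranked_predictions, true_labels, k: int) -> float:
    """Fraction of samples whose true label is among the k top-ranked classes."""
    hits = sum(
        1
        for ranked, truth in zip(ranked_predictions, true_labels)
        if truth in ranked[:k]
    )
    return hits / len(true_labels)


# Values taken from the table: Park et al. [48] (overall) and Jang et al. [57].
park_f1 = f1_score(85.70, 82.30)   # table reports 84.00%
jang_f1 = f1_score(0.977, 0.992)   # table reports 0.984

# Values taken from the table: Lee et al. [54], VGGNet-19 and automated DCNN.
vgg_j = youden_index(0.933, 0.933)    # table reports 0.866
auto_j = youden_index(0.866, 0.966)   # table reports 0.833 (rounding of inputs)

# Hypothetical toy data (not from any study): class rankings for three images.
toy_ranked = [["A", "B", "C"], ["B", "A", "C"], ["C", "B", "A"]]
toy_truth = ["A", "A", "A"]
toy_top1 = top_k_accuracy(toy_ranked, toy_truth, 1)
toy_top2 = top_k_accuracy(toy_ranked, toy_truth, 2)
```

Small discrepancies are expected because the table reports rounded inputs. In multi-class studies that report macro-averaged metrics, the F1 score is typically averaged per class and need not equal the harmonic mean of the averaged precision and recall, so a mismatch there does not by itself indicate an error.
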
Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the author. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Ibraheem, W.I. Accuracy of Artificial Intelligence Models in Dental Implant Fixture Identification and Classification from Radiographs: A Systematic Review. Diagnostics 2024, 14, 806. https://doi.org/10.3390/diagnostics14080806
