Abstract

Digital twin technology significantly enhances construction site management efficiency; however, dynamically reconstructing site activities presents a considerable challenge. This study introduces a methodology that leverages camera data for the 3D reconstruction of construction site activities. The methodology was initiated using 3D scanning to reconstruct the construction scene and dynamic elements, forming a model base. It further integrates deep learning algorithms to identify static and dynamic elements in obstructed environments. An enhanced semi-global block-matching algorithm was then applied to derive depth information from the imagery, facilitating accurate element localization. Finally, a near-real-time projection method was introduced that utilizes the spatial relationships among elements to dynamically incorporate models into a 3D base, enabling a multi-perspective view of site activities. Validated by simulated construction site experiments, this methodology achieved a reconstruction accuracy of up to 95%, which underscores its potential to improve the efficiency of creating a dynamic digital twin model.

1. Introduction

The construction phase, recognized as the most critical stage of a project, accounts for the bulk of time and economic costs, primarily owing to the stringent requirements of safety, quality, and schedule management [1]. Digital twin technology, known for its seamless integration of physical and virtual spaces, is regarded as one of the most effective methods for achieving the precise management of construction sites [2]. As digital twin technology continues to advance, its integration into construction management has become increasingly critical, highlighting the urgent need for a dynamic three-dimensional (3D) digital twin model capable of accurately reflecting the real-time activities and processes of a construction site. Such comprehensive models are crucial for maximizing the benefits of digital twins, enabling efficient information exchange and monitoring management in construction projects [3,4]. However, challenges persist in the application of this technology, particularly regarding the complexities of the modeling process and the latency in model interactions [5]. Consequently, enhancing the efficiency of digital twin modeling, including all elements of construction sites, is of utmost importance.

To enhance the efficiency of digital twin models, recent research has focused on developing three-dimensional (3D) reconstruction techniques for static elements at construction sites. Zhu et al. [6] introduced a method combining laser scanning with binocular vision to accelerate the 3D point cloud reconstruction process. Sani et al. [7] utilized dense point clouds gathered from Unmanned Aerial Vehicles (UAVs) to create high-quality, photorealistic 3D building models. The application of UAV technology further extends to comprehensive areas, such as large-scale construction scene 3D reconstruction [8,9], detection [10], mapping [11,12], and building condition monitoring [9]. However, the above-mentioned reconstruction equipment has limitations such as high investment costs and the loss of local reconstruction details. Moreover, 3D reconstruction for digital twin models must encompass both the geometric and physical attributes of the construction elements. In subsequent studies, some scholars used camera measurement technology and deep learning models to carry out ensemble reconstruction. Liu et al. [13] integrated the Direct Sparse Odometry with Loop Closure (LDSO) algorithm and deep learning models to facilitate the near real-time reconstruction of static elements, capturing their geometric and physical information using a monocular camera. This methodology offers a technological framework for the advancement of digital twin modeling and the virtual oversight of construction processes. Nonetheless, the fidelity of the reconstruction algorithms still needs to be improved.

Recent research trends in digital twin technology for construction management have highlighted the critical need to integrate static infrastructure elements with dynamic construction activities to capture the evolving characteristics of construction processes within digital twin models. An et al. [14] present a significant contribution with the Moving Objects in Construction Sites (MOCS) image dataset, encompassing 41,668 images from 174 construction sites, which facilitated the development of 15 deep neural network-based detectors demonstrating exceptional precision and robustness in detecting dynamic elements. Concurrently, technological strides in safety management for detecting safety gear and monitoring personnel behavior have bolstered onsite safety protocols [15,16]. A notable innovation by Liu et al. [17] is the GM-APD LiDAR algorithm, which facilitates the accurate and efficient dynamic 3D reconstruction of moving objects. Despite these advancements, the predominant reliance on two-dimensional camera images for feature detection presents a significant impediment to the seamless integration of dynamic elements within digital twin models for the holistic virtual management of construction projects. To surmount this challenge, an innovative, low-cost, high-precision modeling approach is needed that can encapsulate the complex dynamic properties of construction sites, paving the way for enhanced schedule and safety management paradigms.

Given the state of the research and the field’s practical needs, a near-real-time dynamic 3D reconstruction method for construction sites is proposed here. More specifically, this method uses projection technology to integrate the dynamic model into the static model base in near real time, so as to achieve full-view safety monitoring of the construction site.

The remainder of this paper is organized as follows: Section 2 reviews the research on techniques related to dynamic reconstruction; Section 3 describes the improvement of techniques related to dynamic reconstruction and accuracy analysis; Section 4 describes the experimental verification of simulated construction scenarios; and finally, a conclusion is drawn in Section 5.

2. Related Works

2.1. The 3D Reconstruction of Static Construction Scenes and Elements

The reconstruction of the geometric features of construction scenes and elements is critical for the reconstruction of dynamic activities at construction sites, thereby facilitating the establishment of dynamic digital twin models. The principal methods for capturing the 3D geometric features of these elements include laser scanning and camera photography. Laser scanning, a non-contact method, is highly effective in generating detailed point clouds for spatial delineation and has been extensively used in research to achieve the accurate reconstruction of building surfaces through sophisticated optimization algorithms [18,19,20,21,22,23]. Concurrently, the adoption of monocular cameras is on the rise, driven by their cost-effectiveness and operational convenience. Recent algorithm advancements have enabled these cameras to achieve a reconstruction precision that rivals laser scanning, as evidenced by recent studies [24,25].

To accurately position elements within construction scenes, it is essential to extract depth information from camera images. Binocular cameras that leverage stereo vision technology have become instrumental in enhancing depth information retrieval for the 3D reconstruction of construction elements. By simultaneously capturing images from two distinct perspectives, these cameras enable the analysis of disparities between the views captured by the left and right cameras. Such disparity analysis is integral to generating detailed point clouds using the Structure from Motion (SfM) technique, ultimately facilitating the creation of precise 3D models [26,27,28]. Studies have underscored the benefits of binocular cameras in the reconstruction process, particularly noting improvements in speed, accuracy, and robustness in capturing depth information [7,29,30,31,32,33]. Consequently, binocular cameras have been recognized as an efficacious technological approach for 3D reconstruction that encompasses depth information.

2.2. Detection of Static and Dynamic Elements at Construction Sites

Based on the 3D reconstruction of construction scenes and elements, the establishment of dynamic digital twin models requires accurate element detection using camera image data. Deep neural networks (DNNs) have recently demonstrated exceptional performance in object detection tasks compared to traditional methods. DNN-based detectors can learn detailed features from large datasets, improving object-detection robustness significantly [34]. In the construction field, DNNs have been applied to scenarios such as noncertified work detection [35] and the recognition of construction activities [36]. It has been observed that the detection accuracy of DNNs is intrinsically linked to both the quality and the scale of the data resources used for detection [37]. However, the detectors in the above studies were trained and tested on their own datasets, which could be small-scale (i.e., only a few thousand images) or customized (i.e., targeted at specific objects) [34,37]. Therefore, benchmarks for DNN-based detectors on large-scale datasets are required to improve the generalization performance of the algorithm.

Image datasets for element detection at construction sites are relatively limited [37]. Roberts and Golparvar-Fard introduced a dataset focused on detecting, tracking, and analyzing earthmoving equipment, featuring 479 videos capturing the atomic activities of excavators and dump trucks [37]. Annotations within these videos identify two objects per frame, categorized by the specific activity of the equipment. Wu et al. released the GDUT-HWD dataset aimed at hard hat wear detection, comprising 3174 images with 18,893 hardhats annotated for bounding box types [16]. Kim et al. developed a dataset from ImageNet consisting of 2920 instances across five distinct categories [38]. Addressing the need for enhanced generalization in element detection at construction sites, An et al. released the MOCS dataset, a large-scale, diverse dataset designed for construction object detection [14]. The MOCS dataset, annotating 13 types of moving elements over 41,668 images, establishes a benchmark with 15 different DNN-based detectors. This dataset underpins the efficient and accurate detection of construction elements in varied scenarios, providing a robust data foundation for advancing construction site detection technologies.

2.3. Spatial Information Perception of Static and Dynamic Elements

The accurate perception of spatial information from 2D camera images for static and dynamic elements is essential for the 3D reconstruction of dynamic construction activities. Stereo-matching algorithms are computational techniques that derive the 3D coordinates of scene elements by analyzing the disparity between binocular camera setups [39]. These algorithms have recently shown marked improvements in their ability to accurately perceive spatial information, with notable advancements in efficiency and accuracy documented [40,41,42,43,44]. Cai et al. [45] introduced the BConTri algorithm, which synergizes a boundary condition method with a prototype Radio Frequency Identification (RFID) system for precise object location tracking on construction sites. In addition, Chen et al. [46] developed a near real-time localization method for construction elements using an integrated sensor system combining cameras and 3D LiDAR with the YOLOv4-Tiny network, facilitating comprehensive model training. Yang et al. [47] utilized video data from a tower crane camera for image recognition via the MASK R-CNN method to enhance safety operations for tower crane operators. The precise and rapid perception of spatial information for construction site elements underpins the near real-time projection of dynamic element models and the formulation of dynamic digital twin models.

2.4. Brief Summary

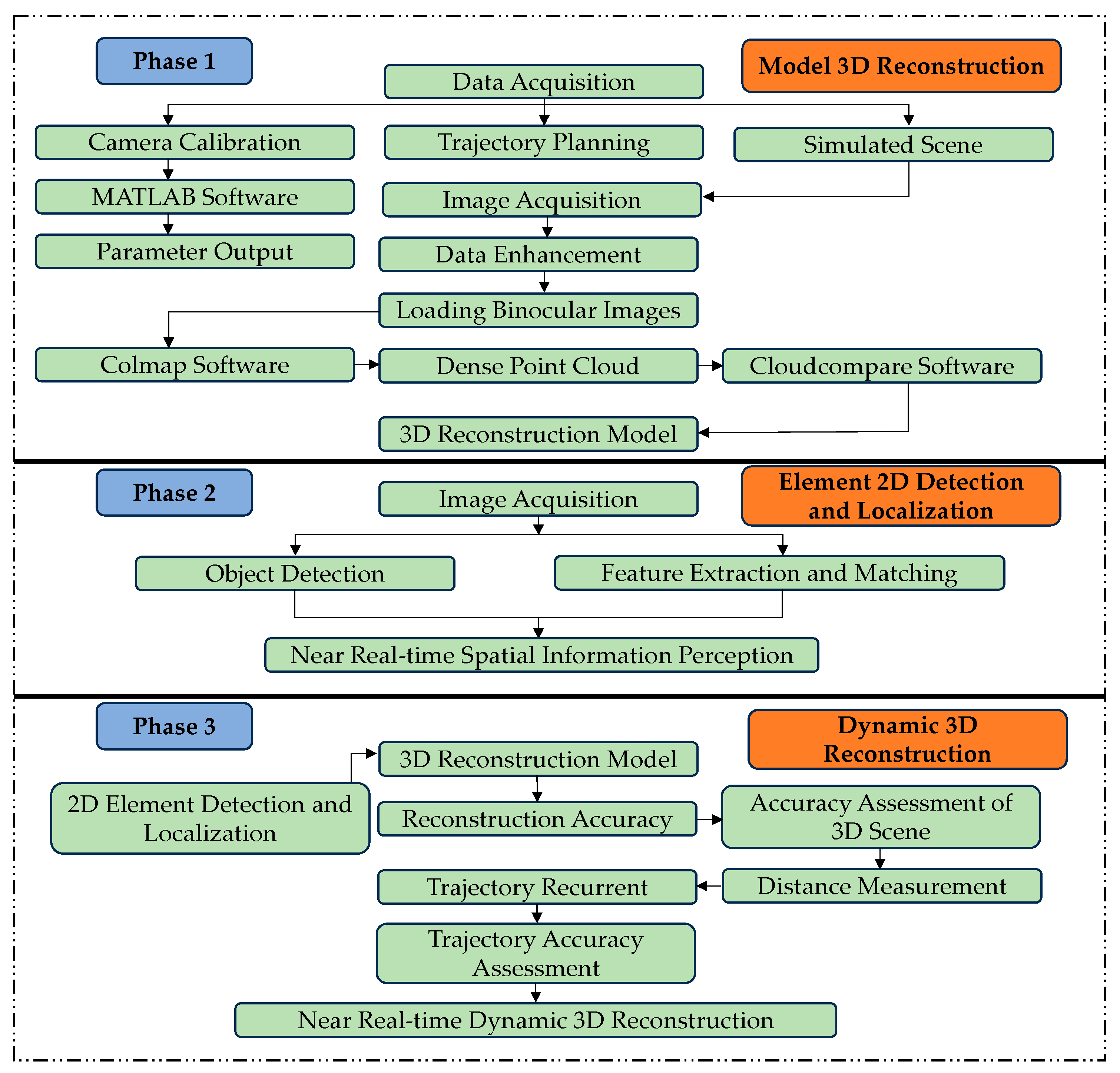

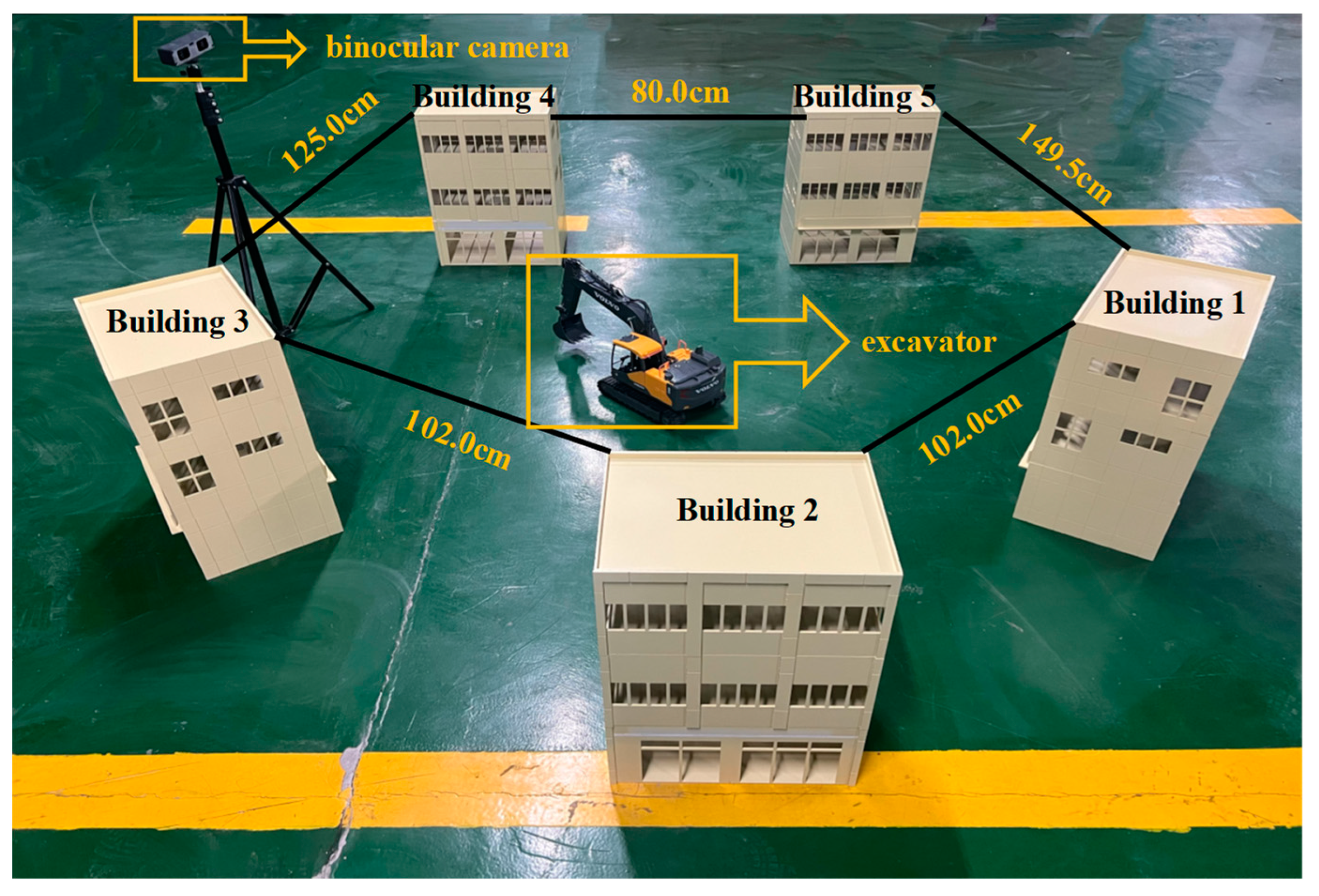

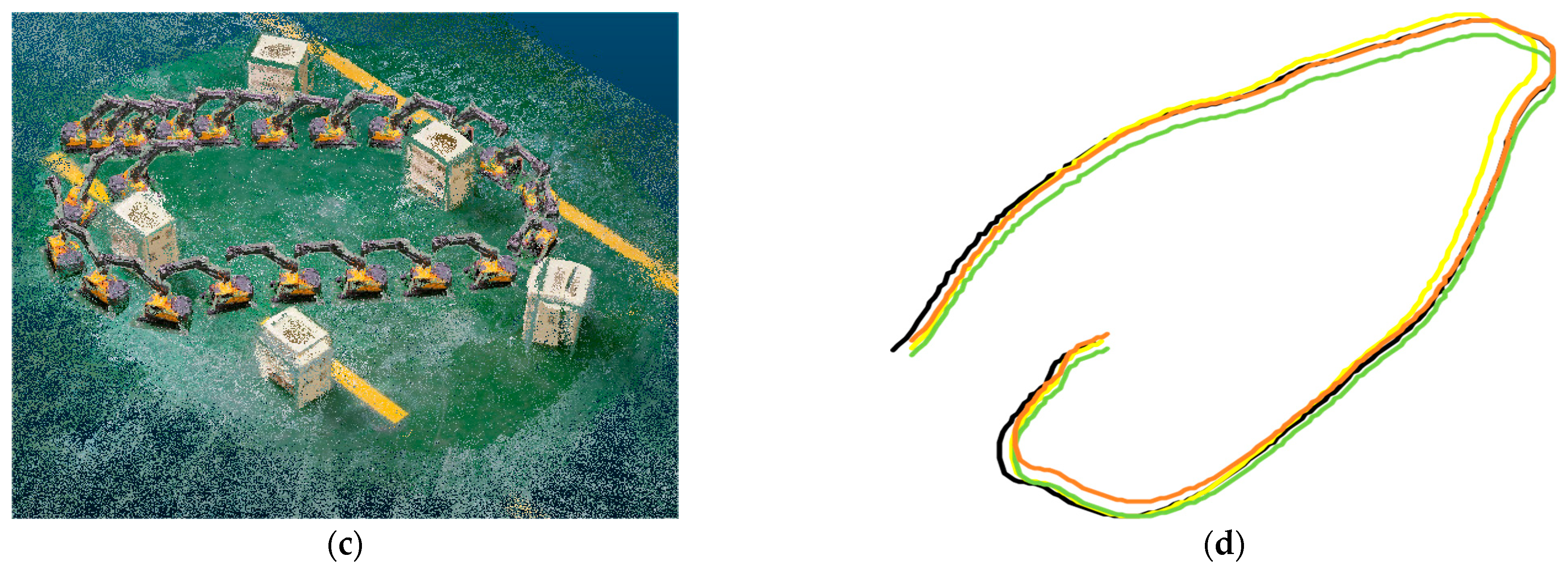

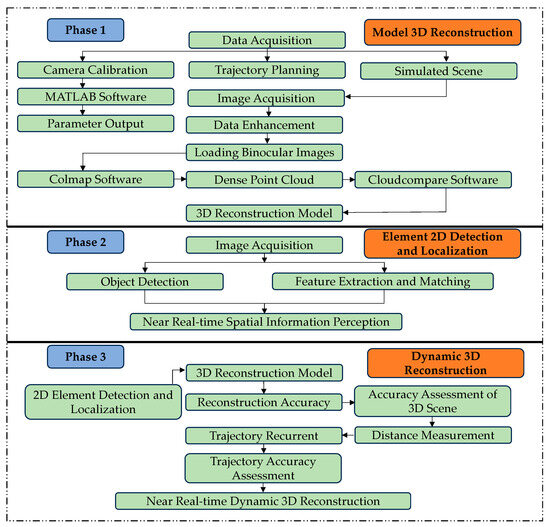

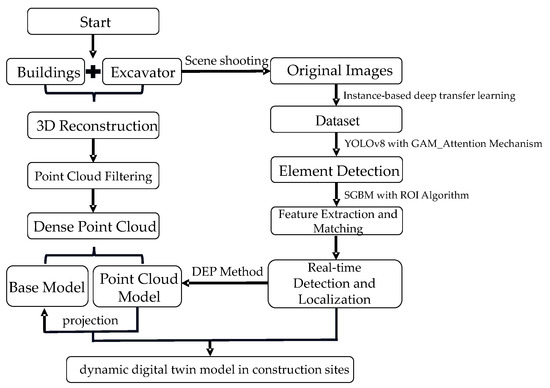

An overview of related works shows that recent advancements have facilitated the 3D reconstruction of the geometric characteristics of construction elements and their detection and localization through image information. However, a notable gap exists in effectively integrating image-based detection and localization information into the 3D reconstruction model base, which is crucial for establishing dynamic digital twin models for construction sites. This paper proposes a methodology to bridge this gap by emphasizing the synchronization of attributes between 3D models and 2D image data to reconstruct dynamic construction site activities accurately. The methodology was demonstrated using scale models, including buildings and an electric excavator, to represent static and dynamic construction elements, as shown in Figure 1.

Figure 1.

Details of the methodology.

3. The 3D Reconstruction of the Dynamic Digital Twin Model

3.1. Geometric Features of the 3D Reconstruction of Construction Elements

3.1.1. Reconstruction Techniques Based on a Binocular Camera

The binocular camera in this study was a 5-megapixel, frame-synchronized camera with a resolution of 1280 × 480 pixels at 30 fps and a focusing range of 5 cm to infinity. The device was used to scan the excavator and the building in 3D to obtain the image dataset, and the images were enhanced using Histogram Equalization [48], Gray Level Transformation [49], and similar techniques to help ensure the fidelity of the subsequent reconstruction work.

The high-precision 3D reconstructions of the excavator and building models were achieved using a binocular camera-based reconstruction technique [50]. Initially, local feature algorithms, namely the scale-invariant feature transform (SIFT) and speeded up robust features (SURF), were utilized to extract feature points from images and create their descriptors [51,52]. These algorithms enable the robust detection and characterization of image features across various scales and rotations. Subsequently, a nearest-neighbor-based feature-matching algorithm was applied to establish the correspondence between images [53]. The camera pose was determined using a triangulation-based localization method, facilitating the reconstruction of the 3D point cloud structure of the construction scene using multiview geometry techniques [54]. Finally, to achieve a seamless 3D model, the Poisson reconstruction algorithm was used to convert the sparse point cloud data into a continuous surface [53].
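The nearest-neighbor matching step described above can be illustrated with a minimal sketch. It assumes descriptors are plain lists of floats and adds a ratio test to reject ambiguous matches; the function name `match_descriptors`, the ratio value of 0.75, and the toy descriptors are illustrative assumptions, not details taken from this study.

```python
import math

def match_descriptors(desc_a, desc_b, ratio=0.75):
    """Nearest-neighbour matching with a ratio test.

    desc_a, desc_b: lists of equal-length float vectors (e.g. SIFT or SURF descriptors).
    Returns (index_in_a, index_in_b) pairs whose best match is clearly
    closer than the second-best candidate.
    """
    matches = []
    for i, da in enumerate(desc_a):
        # Distance from this descriptor to every descriptor of the other image.
        dists = sorted((math.dist(da, db), j) for j, db in enumerate(desc_b))
        if len(dists) < 2:
            continue
        (best, j), (second, _) = dists[0], dists[1]
        # Keep only unambiguous matches (the ratio threshold is an assumed value).
        if best < ratio * second:
            matches.append((i, j))
    return matches

# Toy 3-D "descriptors" for illustration; real SIFT descriptors have 128 dimensions.
left = [[0.0, 0.0, 1.0], [1.0, 0.0, 0.0], [0.5, 0.5, 0.5]]
right = [[1.0, 0.1, 0.0], [0.0, 0.1, 1.0], [5.0, 5.0, 5.0]]
pairs = match_descriptors(left, right)
```

In this toy case the third left descriptor is equally close to two candidates, so the ratio test discards it, which is the behavior one would want for repetitive textures such as facade elements.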

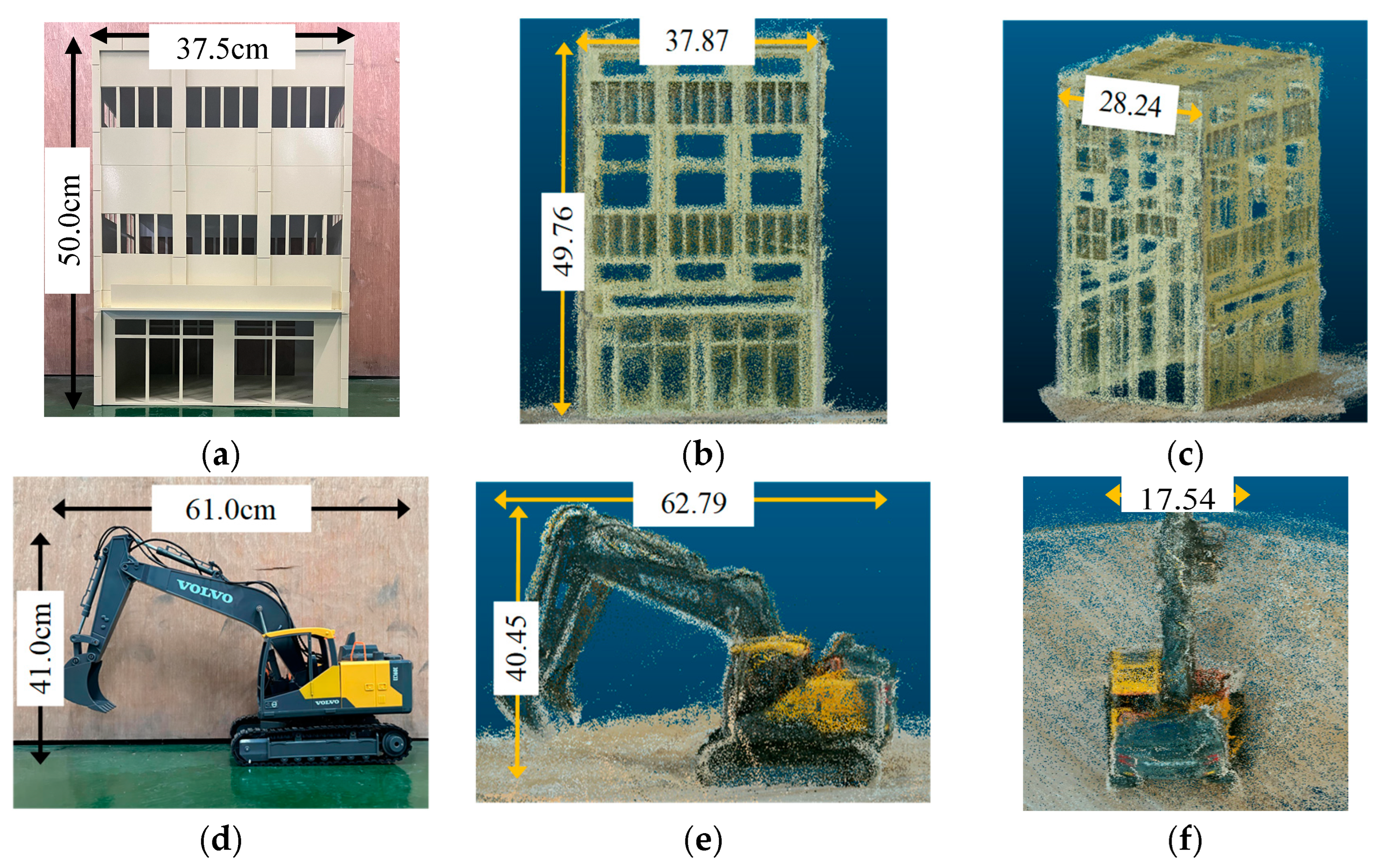

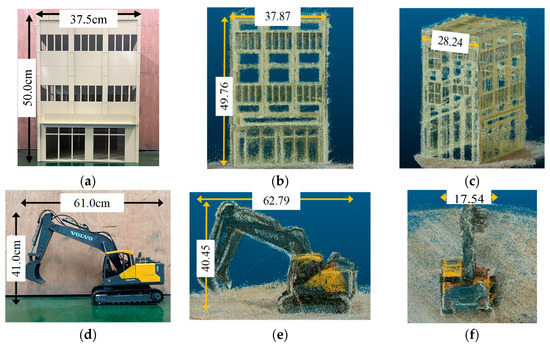

3.1.2. Accuracy Analysis of Geometric Features Reconstruction

Figure 2 shows a comparison between the actual and reconstructed dimensions of the building and excavator. It should be noted that the reconstructed point cloud models are non-dimensional. Therefore, the accuracy of the reconstruction process was ascertained by calculating the proportional relative error between the actual element sizes and their respective point cloud dimensions. The formula for this calculation and the results are given in Equation (1) and Table 1, respectively. The results show that the relative errors for the building and excavator in terms of the actual-to-point cloud size ratios were 1.5% and 4.5%, respectively. These results indicate a high level of reconstruction accuracy.

Figure 2.

Comparison between the actual dimensions and the reconstructed point cloud dimensions: (a) actual size of the building; (b) pixel dimensions of the reconstructed building’s point cloud model (perspective 1); (c) pixel dimensions of the reconstructed building’s point cloud model (perspective 2); (d) actual size of excavator; (e) pixel dimensions of the reconstructed excavator’s point cloud model (perspective 1); (f) pixel dimensions of the reconstructed excavator’s point cloud model (perspective 2).

Table 1.

Relative error between the actual size and reconstructed point cloud.

The quantities in Equation (1) are defined as follows:

1. the proportional relative error between the actual size of the elements and their point cloud dimensions;
2. the ratio of the actual dimensions of length, width, and height for both the excavator and building;
3. the ratio of the reconstructed point cloud dimensions of length, width, and height.
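Because the point cloud has no physical scale, the comparison has to be made between dimension ratios rather than absolute sizes. The sketch below shows one way such a proportional relative error could be computed. The pairwise-ratio formulation, the function name `proportional_relative_errors`, and the sample dimensions are assumptions made for illustration and are not values from Table 1.

```python
from itertools import combinations

def proportional_relative_errors(actual, cloud):
    """Compare dimension ratios of a physical object and its point cloud.

    actual: (length, width, height) measured on the physical model.
    cloud:  (length, width, height) measured on the point cloud, in pixels.
    Returns {(i, j): relative error of dimension_i / dimension_j}.
    """
    errors = {}
    for i, j in combinations(range(3), 2):
        r_actual = actual[i] / actual[j]   # ratio in the real world
        r_cloud = cloud[i] / cloud[j]      # same ratio in the point cloud
        errors[(i, j)] = abs(r_actual - r_cloud) / r_actual
    return errors

# Hypothetical dimensions, chosen only to exercise the function.
errs = proportional_relative_errors((30.0, 20.0, 10.0), (300.0, 204.0, 100.0))
worst = max(errs.values())  # largest ratio error over the three dimension pairs
```

A uniformly scaled point cloud yields zero error for every pair, so this measure isolates shape distortion from the unknown scale factor.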

3.2. Detection of Construction Elements under Occlusion Scenarios

3.2.1. Expanded Dataset and YOLOv8 with GAM_Attention Mechanism

The methodology presented in this study aims to reconstruct dynamic construction-site activities using a single binocular camera. Thus, overcoming the occlusions inherent to a singular viewpoint is essential for the accurate detection of construction elements. An instance-based deep transfer learning approach was introduced to expand the MOCS dataset [55] to address this issue. This approach employs a weight adjustment strategy whereby specific instances from the source domain are selected to augment the training dataset in the target domain with appropriate weights allocated to these instances. A total of 3000 images from the MOCS dataset resembling our camera image data were selected as the source domain (S). Furthermore, 400 images captured using a binocular camera were added as enhancements to constitute the target domain (T). These were incorporated into the source domain data to enhance the generalization capacity of the model.

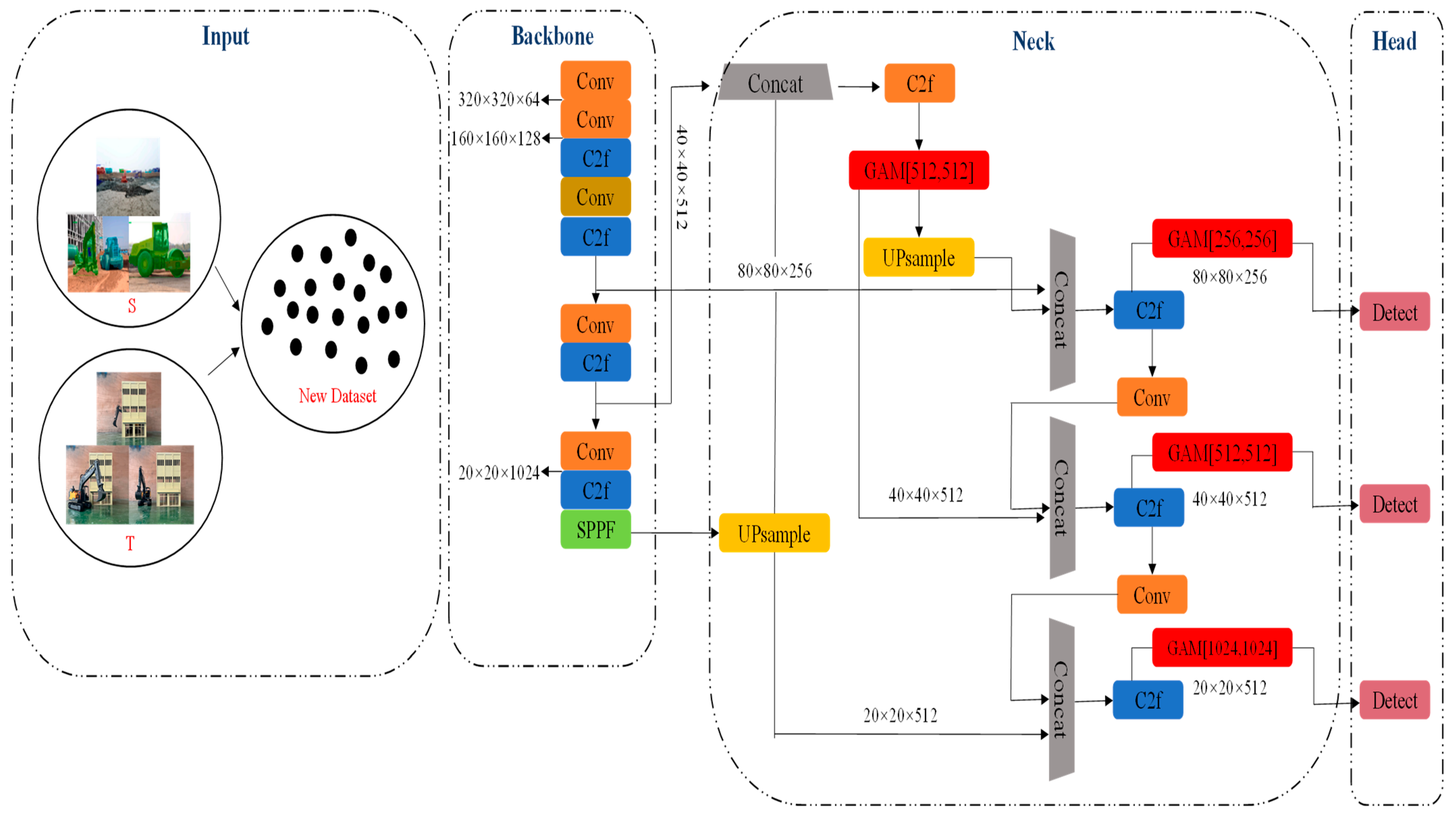

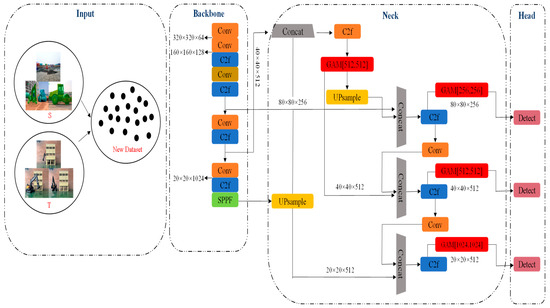

Based on the expanded dataset, this study adopted the YOLOv8n model [56], the latest advancement in YOLO-based object detection. The YOLOv8n model stands out for its multi-scale feature fusion and non-maximum suppression techniques, which are pivotal for improving detection performance. To address construction scene challenges such as obstacles and occlusions, the model incorporates the GAM_Attention Mechanism, as detailed in Figure 3. This mechanism integrates Channel Attention (CAM) and Spatial Attention Mechanisms (SAM), where CAM applies a multilayer perceptron (MLP) and sigmoid activation to feature maps for dimensional consistency, and SAM adjusts the channel numbers, integrating both into YOLOv8n’s neck module. The synergy between the CAM and SAM enhances the global feature detection capabilities of the model.

Figure 3.

Principle of the YOLOv8n model enhanced with the GAM_Attention mechanism.

3.2.2. Accuracy Analysis of Construction Elements Detection

In this study, the accuracy of construction element detection was validated using spatial scenarios involving buildings and excavators. The dataset was strategically divided into three segments: 3000 images from the MOCS dataset for training, 100 images from the augmented dataset for validation, and 300 images for testing. The training process was configured with 100 epochs and a batch size of 16. This expanded dataset was then imported into the YOLOv8n model containing the Attention Mechanism module.

Table 2 presents the performance of the YOLOv8n model for various Intersection over Union (IOU) thresholds and Attention Mechanism configurations. The results indicated that the YOLOv8n model integrated with the GAM_Attention Mechanism consistently achieved superior accuracy at the same IOU values. A notable peak in detection accuracy was observed at an IOU threshold of 0.6, where the model achieved a mAP@0.5 score of 0.995. This finding underscores the impact of IOU on detection confidence, as IOU quantifies the overlap between the predicted and actual labeled frames. A decline in the average accuracy at higher IOUs (0.8, 0.9, and 1.0) relative to 0.6 was noted. This trend is attributed to the increased overlap in higher IOUs, which inadvertently incorporates more non-target negative samples, such as incorrectly identified ground surfaces, into the analysis.

Table 2.

Test results with different IOU thresholds and Attention Mechanisms.
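For reference, IOU as used above is the area of intersection of two boxes divided by the area of their union. The following is a minimal sketch for axis-aligned boxes in `(x_min, y_min, x_max, y_max)` form; the helper names and the example boxes are illustrative and not part of the detector used in this study.

```python
def iou(box_a, box_b):
    """Intersection over Union of two (x_min, y_min, x_max, y_max) boxes."""
    # Corners of the overlapping rectangle (may be empty).
    ix_min = max(box_a[0], box_b[0])
    iy_min = max(box_a[1], box_b[1])
    ix_max = min(box_a[2], box_b[2])
    iy_max = min(box_a[3], box_b[3])
    inter = max(0.0, ix_max - ix_min) * max(0.0, iy_max - iy_min)

    area_a = (box_a[2] - box_a[0]) * (box_a[3] - box_a[1])
    area_b = (box_b[2] - box_b[0]) * (box_b[3] - box_b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0

def is_true_positive(predicted, labeled, threshold=0.5):
    """A prediction counts as correct when its IOU with the label reaches the threshold."""
    return iou(predicted, labeled) >= threshold

# Two 10 x 10 boxes offset by 5 pixels in each direction.
overlap = iou((0, 0, 10, 10), (5, 5, 15, 15))
```

Raising the threshold makes the matching criterion stricter, so fewer predictions count as correct for the same set of boxes.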

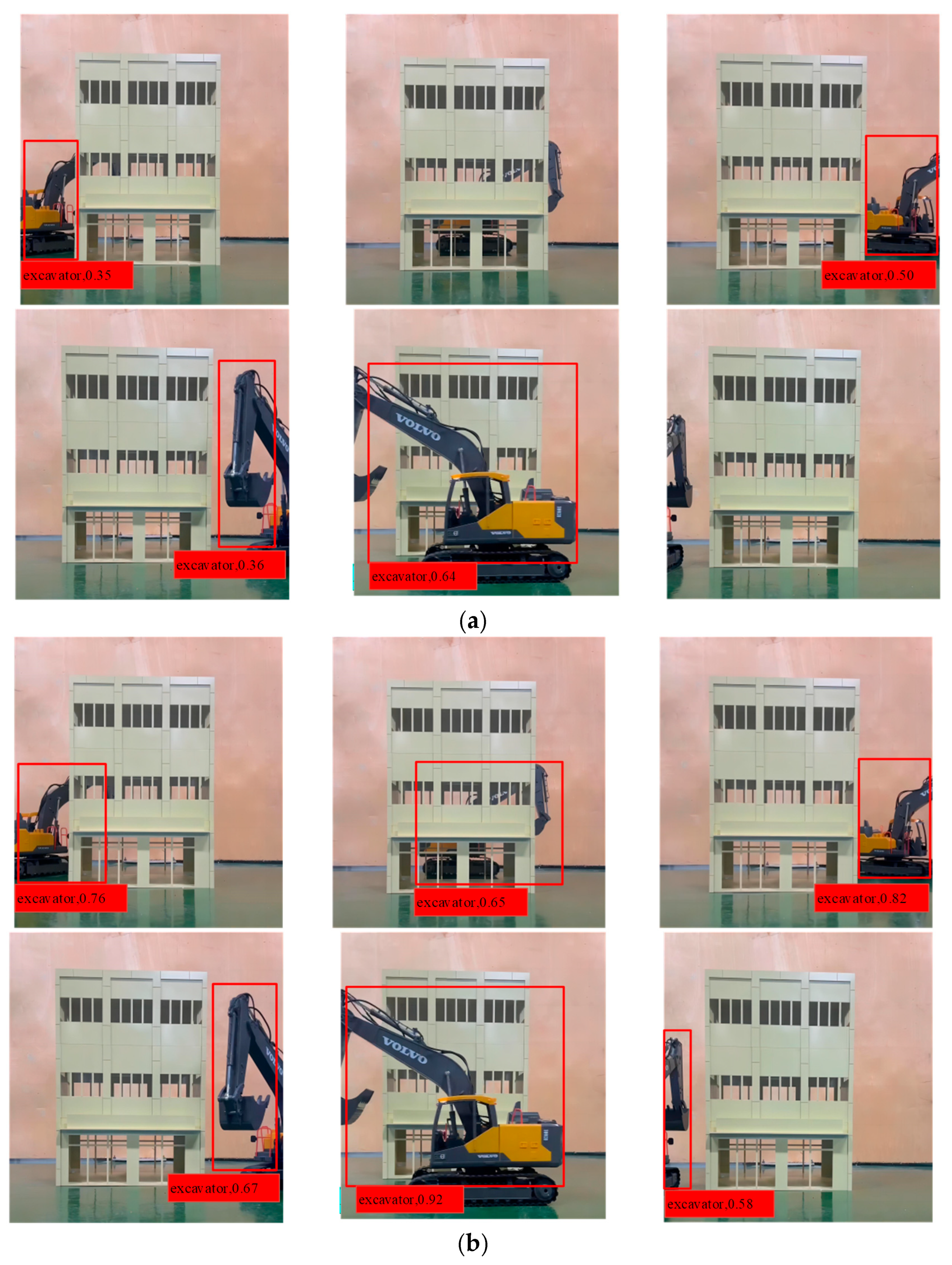

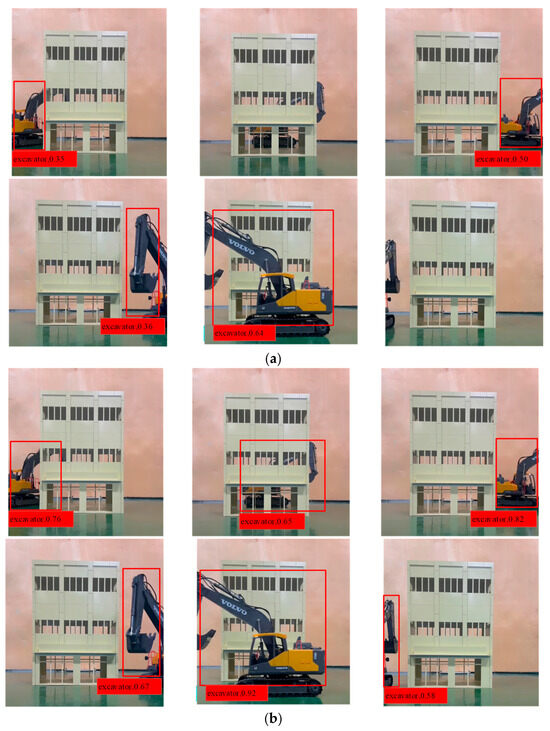

Furthermore, the influence of the dataset extension on detection performance was analyzed. Figure 4a shows the object detection results without the MOCS dataset, whereas Figure 4b presents the results obtained with it. This comparison shows a substantial improvement in object detection when the MOCS dataset is used, notably reducing target loss in scenarios with obstructions.

Figure 4.

Influence of dataset extension on detection performance: (a) without the use of the MOCS dataset; (b) with the use of the MOCS dataset.

3.3. Spatial Information Perception of Construction Elements

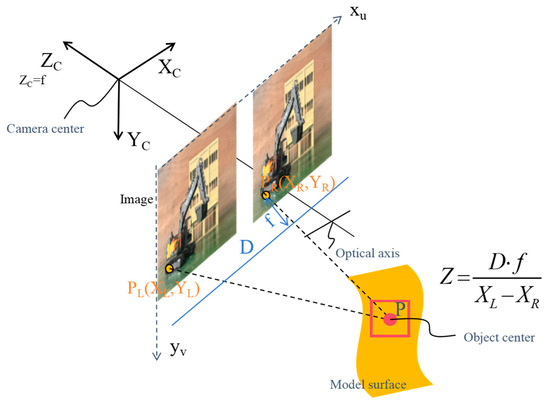

3.3.1. Principles of the Spatial Stereo Matching Algorithm Adopted

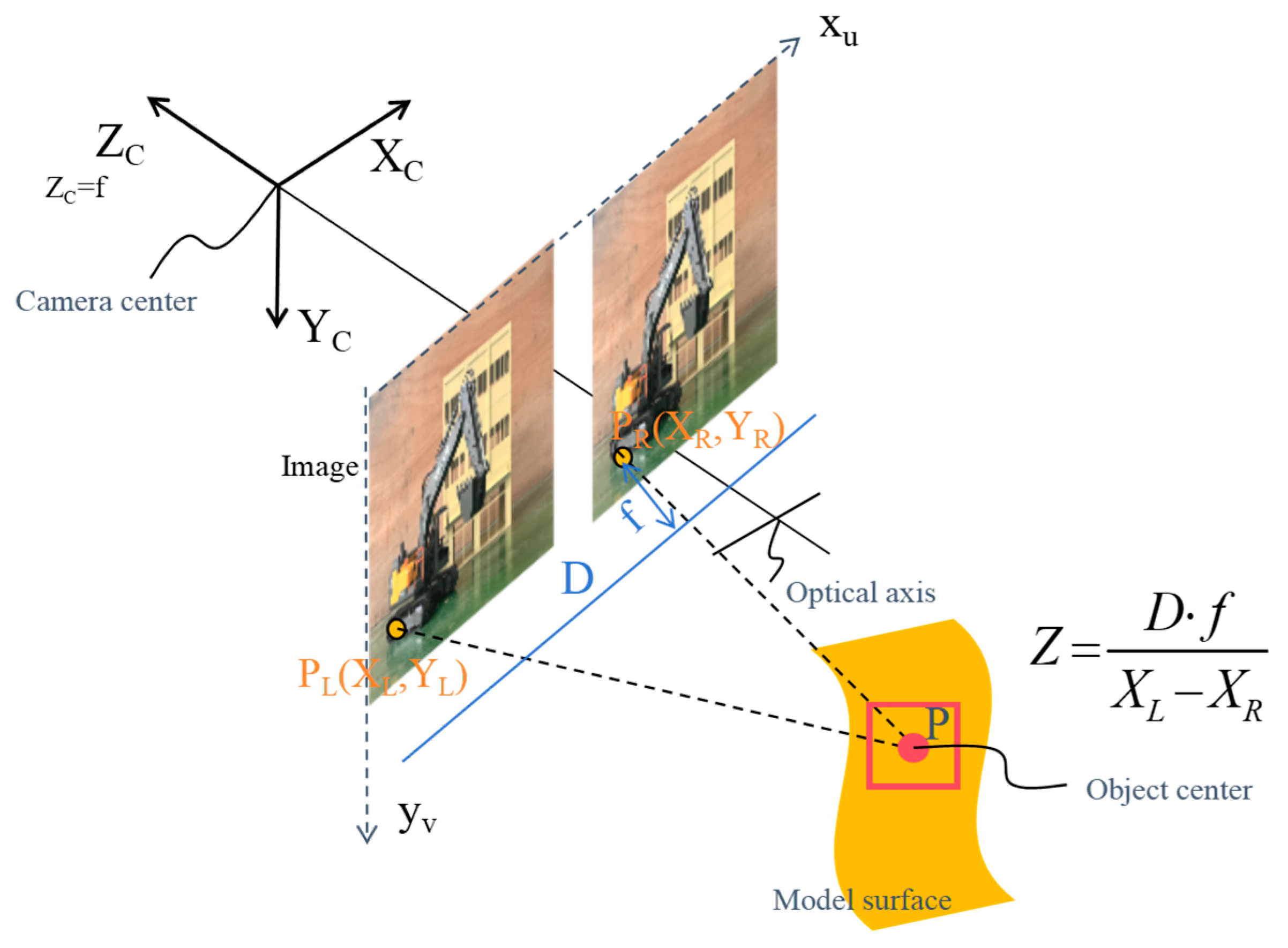

Building on the detection of construction elements, the reconstruction of dynamic construction site activities requires an enhanced perception of the spatial information of these elements based on image data, facilitating the spatial localization of static and dynamic construction elements. This study employed the Semi-Global Block Matching (SGBM) algorithm, renowned for its superior matching accuracy, edge preservation capabilities, and computational efficiency, to extract relative spatial information between excavators and buildings from binocular camera imagery [56]. The SGBM algorithm, as a semi-global stereo-matching method, capitalizes on parallax computation intrinsic to binocular vision. It proficiently generates image depth information through sophisticated methods such as uniqueness detection, sub-pixel interpolation, and left-right consistency checks. This approach is particularly effective in conducting detailed spatial analyses of static and dynamic construction elements. As illustrated in Figure 5, the 3D coordinates of any given spatial point P can be determined precisely using the depth information obtained from the binocular camera images. The relative spatial positioning between buildings and excavators was effectively discerned by accurately mapping these 3D coordinates for specific feature points.

Figure 5.

Principles of the SGBM algorithm.
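The geometric relationship behind Figure 5 is the standard rectified-stereo model: depth is inversely proportional to disparity, Z = f·B/d, and the remaining coordinates follow from the pinhole model. The sketch below illustrates this relationship only; the focal length, baseline, and principal point values are hypothetical and are not the calibration parameters of the camera used in this study.

```python
def depth_from_disparity(disparity_px, focal_px, baseline_m):
    """Depth Z = f * B / d for a rectified stereo pair; None if disparity is invalid."""
    if disparity_px <= 0:
        return None
    return focal_px * baseline_m / disparity_px

def back_project(u, v, disparity_px, focal_px, baseline_m, cx, cy):
    """3D coordinates (X, Y, Z) of pixel (u, v) in the left-camera frame."""
    z = depth_from_disparity(disparity_px, focal_px, baseline_m)
    if z is None:
        return None
    x = (u - cx) * z / focal_px
    y = (v - cy) * z / focal_px
    return (x, y, z)

# Hypothetical calibration: f = 700 px, baseline = 0.06 m, principal point (320, 240).
point_p = back_project(u=390, v=240, disparity_px=42.0,
                       focal_px=700.0, baseline_m=0.06, cx=320.0, cy=240.0)
```

Because depth varies with 1/d, a fixed disparity error of one pixel costs far more accuracy for distant points than for near ones, which is one reason sub-pixel interpolation matters.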

3.3.2. Accuracy Analysis of Spatial Information Perception

The performance of the Semi-Global Block Matching (SGBM) algorithm was evaluated by comparing spatial data derived from the binocular imagery of buildings and excavators with their actual positional relationships. For this validation experiment, identical models of a building and excavator were employed. Stereoscopic image correction, a prerequisite for an accurate spatial analysis, was achieved through camera calibration using Zhang Zhengyou’s checkerboard technique [57]. Mounted on a tripod, the binocular camera system captured video sequences that were subsequently segmented into 150 pairs of left and right frames. Supervised data enhancement methods, including color correction, cropping, and smoothing, were applied to improve the quality of these frames, which resulted in an expanded dataset of 200 images per viewpoint. Subsequently, the enhanced images were processed using the SGBM algorithm, which incorporated the camera calibration parameters, to produce synthetic depth maps and RGB images, ensuring the fidelity of the spatial analysis.

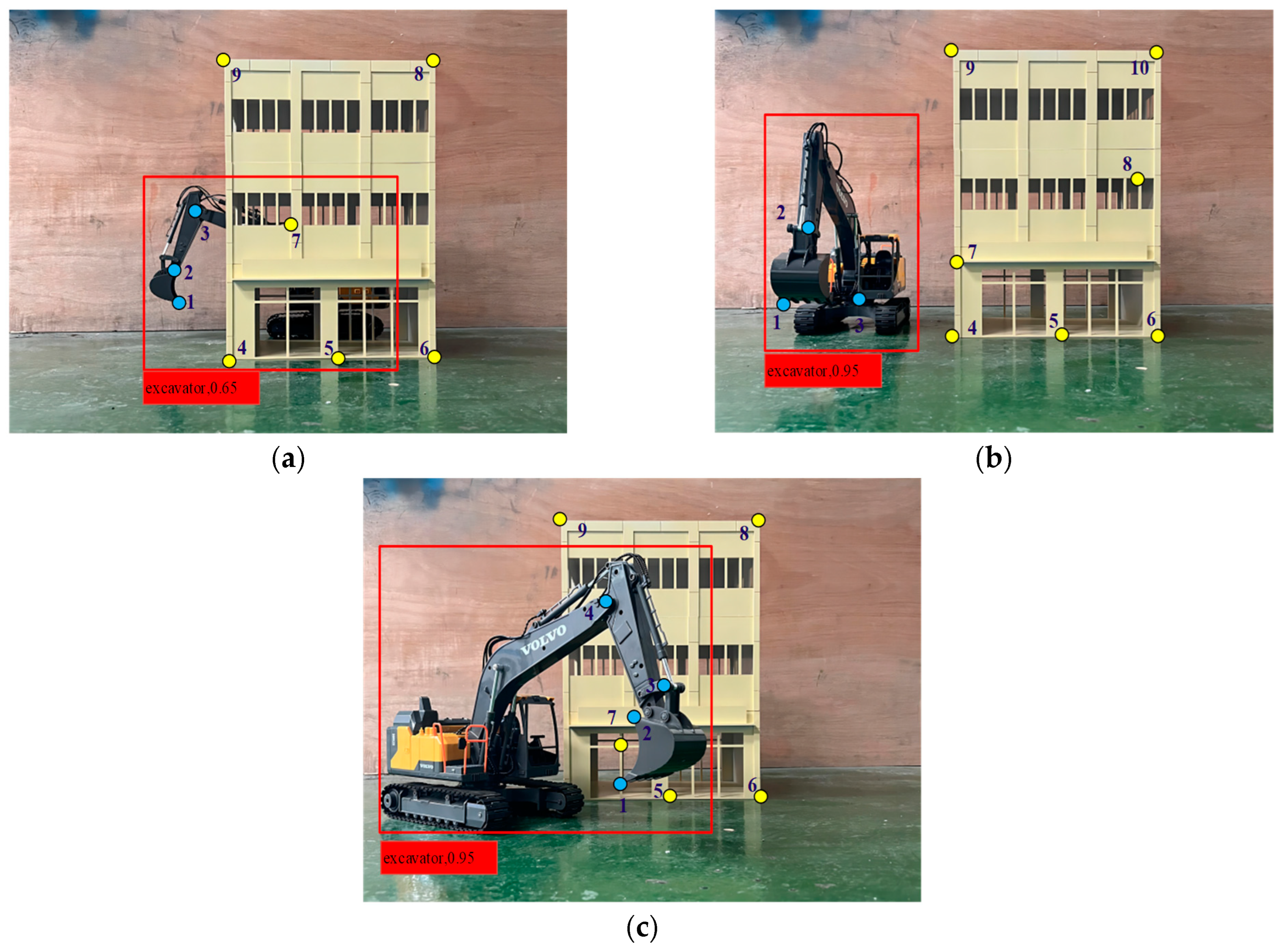

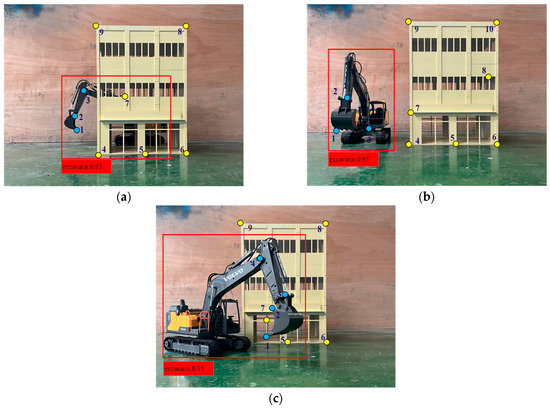

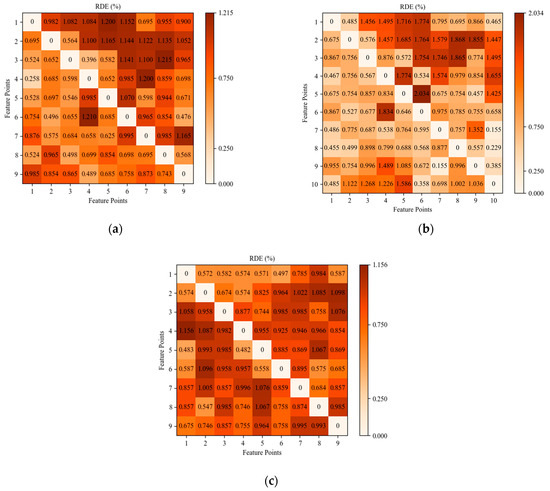

In this study, three spatial scenarios were analyzed: an unobstructed view, partial obstruction of the excavator by the building, and partial obstruction of the building by the excavator. Given that both the excavator and building are rigid bodies, their shapes and sizes remain constant during movement. Hence, by employing the principle of rigid-body invariance, their forms and positions were determined by measuring the relative distances between the designated feature points. These feature points were systematically identified using a region of interest (ROI) extraction algorithm [58]. Variations in the quantity and arrangement of feature points across scenarios were noted, reflecting the varying degrees of obstruction between the excavator and building, as illustrated in Figure 6.

Figure 6.

Feature points extracted under three spatial scenarios: (a) partial obstruction of the excavator by the building; (b) unobstructed view; and (c) partial obstruction of the building by the excavator.
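The rigid-body invariance principle used here amounts to the fact that distances between feature points on the same object do not change when the object rotates or translates. A minimal sketch of that check follows; the function names, the tolerance, and the sample points are illustrative assumptions.

```python
import math
from itertools import combinations

def pairwise_distances(points):
    """Distances between every pair of 3D feature points, in a fixed order."""
    return [math.dist(p, q) for p, q in combinations(points, 2)]

def is_rigid_motion(before, after, tol=1e-6):
    """True if all inter-point distances are preserved between two frames."""
    return all(abs(a - b) <= tol
               for a, b in zip(pairwise_distances(before), pairwise_distances(after)))

def move(points, yaw_rad, shift):
    """Rotate points about the vertical (y) axis, then translate them."""
    c, s = math.cos(yaw_rad), math.sin(yaw_rad)
    return [(c * x + s * z + shift[0], y + shift[1], -s * x + c * z + shift[2])
            for x, y, z in points]

# Hypothetical feature points on a model excavator (metres).
excavator = [(0.0, 0.0, 0.0), (0.3, 0.0, 0.0), (0.3, 0.15, 0.1), (0.0, 0.2, 0.05)]
moved = move(excavator, yaw_rad=0.7, shift=(1.0, 0.0, 2.0))
stretched = [(2 * x, 2 * y, 2 * z) for x, y, z in excavator]
```

Here `moved` passes the check while `stretched` does not, which shows how distance preservation can flag feature points that were mislocalized in a frame.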

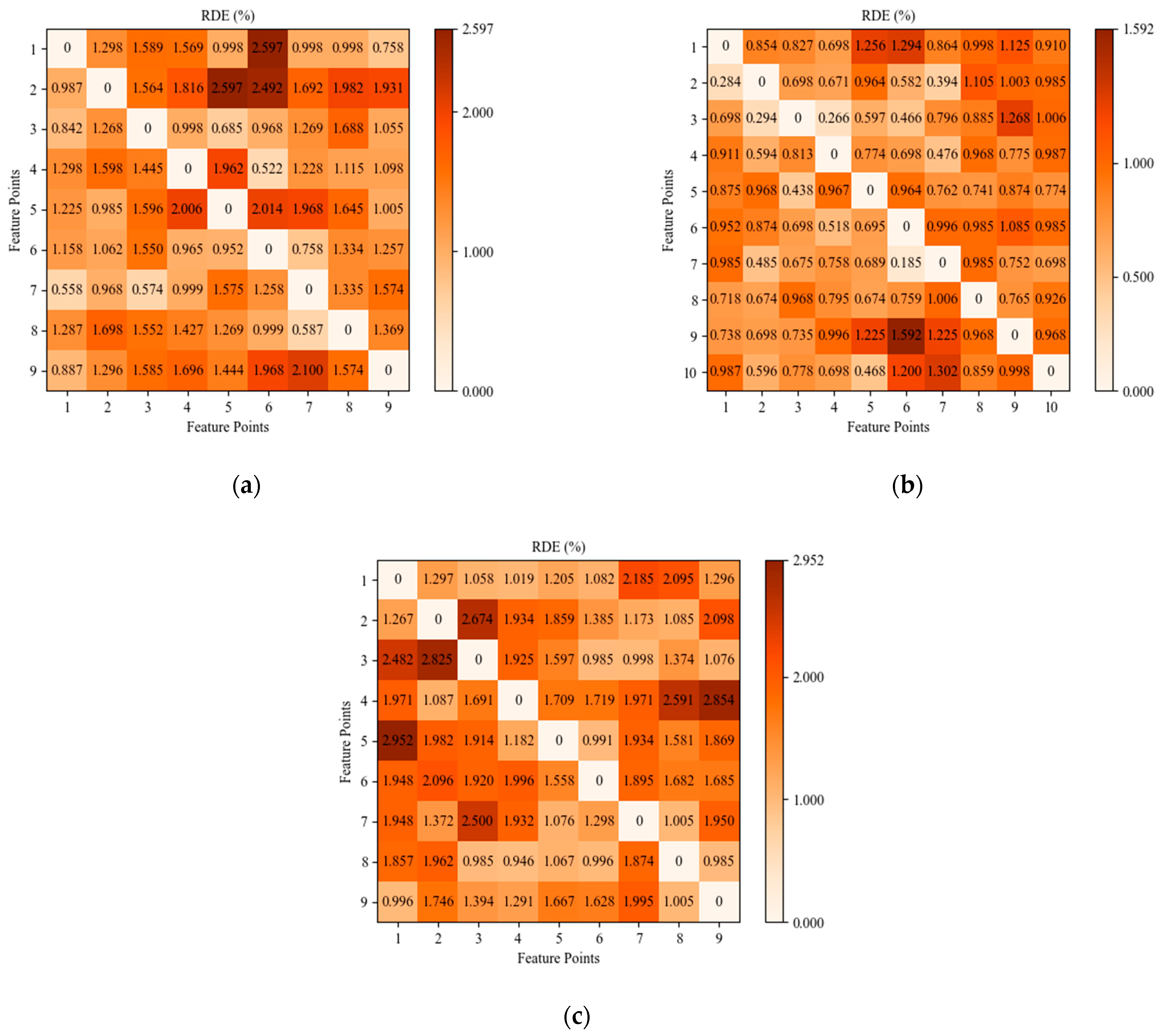

The extracted feature points were analyzed using the SGBM algorithm, and the relative distance error (RDE) between the actual and pixel distances of these points was calculated, as detailed in Equation (2).

where the quantities in Equation (2) are, in order:

1. the numerical identifier assigned to the feature points extracted from the excavator;
2. the numerical identifier assigned to the feature points extracted from the structure;
3. the actual distance between the feature points of the excavator and building;
4. the pixel distance between the feature points of the excavator and building.
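As an illustration of how a relative distance error of this kind can be evaluated, the sketch below compares a measured actual distance with the distance recovered from stereo-derived 3D coordinates. It assumes both distances are expressed in the same unit and that the error is normalized by the actual distance; the function names and numbers are hypothetical.

```python
import math

def relative_distance_error(actual_distance, recovered_distance):
    """|actual - recovered| / actual, as a fraction (multiply by 100 for percent)."""
    return abs(actual_distance - recovered_distance) / actual_distance

def rde_between_points(excavator_point, building_point, actual_distance):
    """RDE for one excavator/building feature-point pair given their 3D coordinates."""
    recovered = math.dist(excavator_point, building_point)
    return relative_distance_error(actual_distance, recovered)

# Hypothetical pair: tape-measured distance 1.00 m, stereo-derived points 1.01 m apart.
rde = rde_between_points((0.0, 0.0, 0.0), (1.01, 0.0, 0.0), actual_distance=1.00)
```

Computing this value for every excavator and building feature-point pair and taking the maximum gives a single worst-case figure per scenario.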

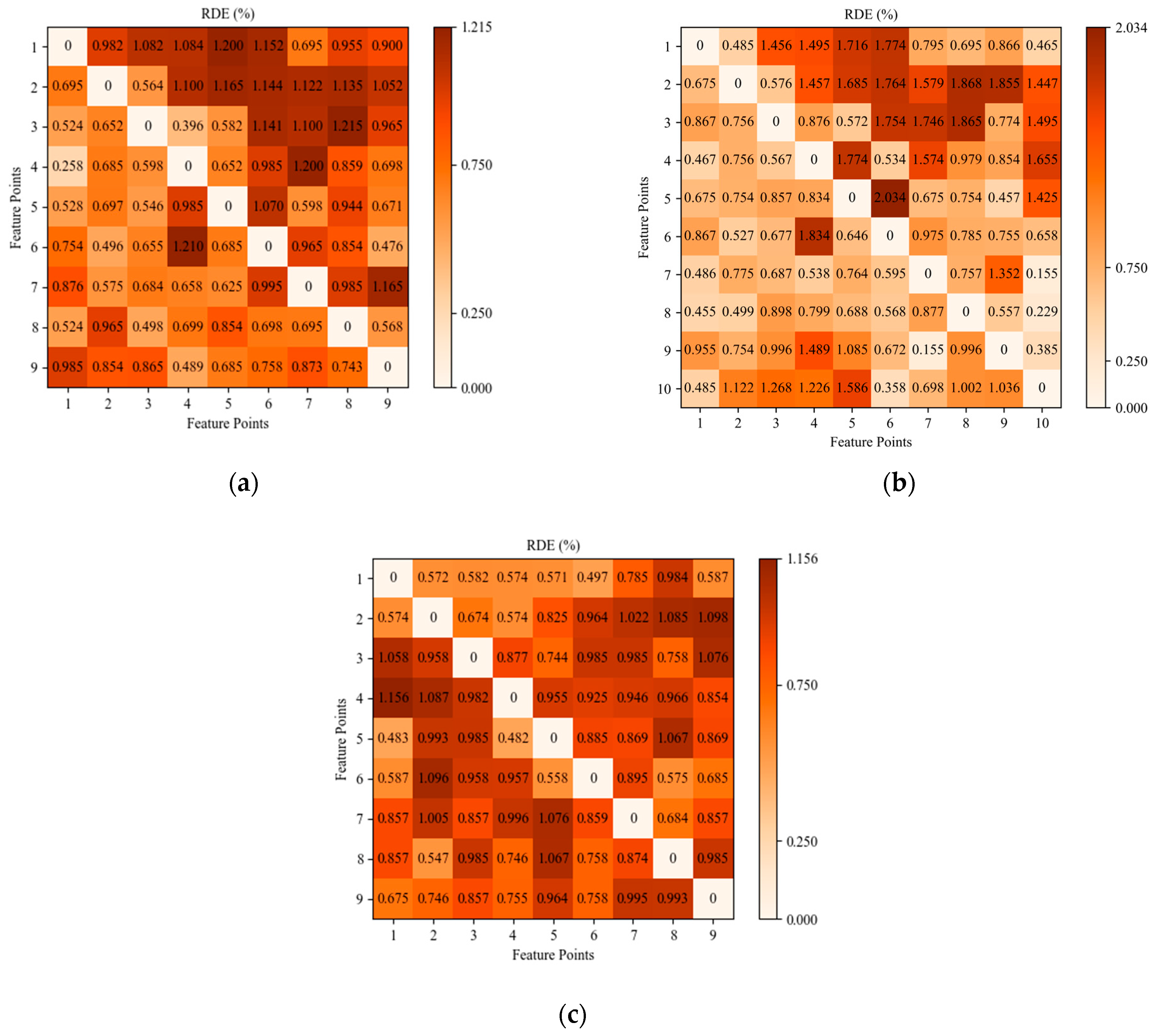

Figure 7 presents a comparative analysis between the actual distances and pixel-relative distance errors among the feature points in the three distinct scenarios. The maximum recorded error was 1.22%. These results suggest that spatial information perception facilitated by the SGBM algorithm in this study exhibits high accuracy.

Figure 7.

RDE among feature points in the three scenarios: (a) partial obstruction of the excavator by the building; (b) unobstructed view; and (c) partial obstruction of the building by the excavator.

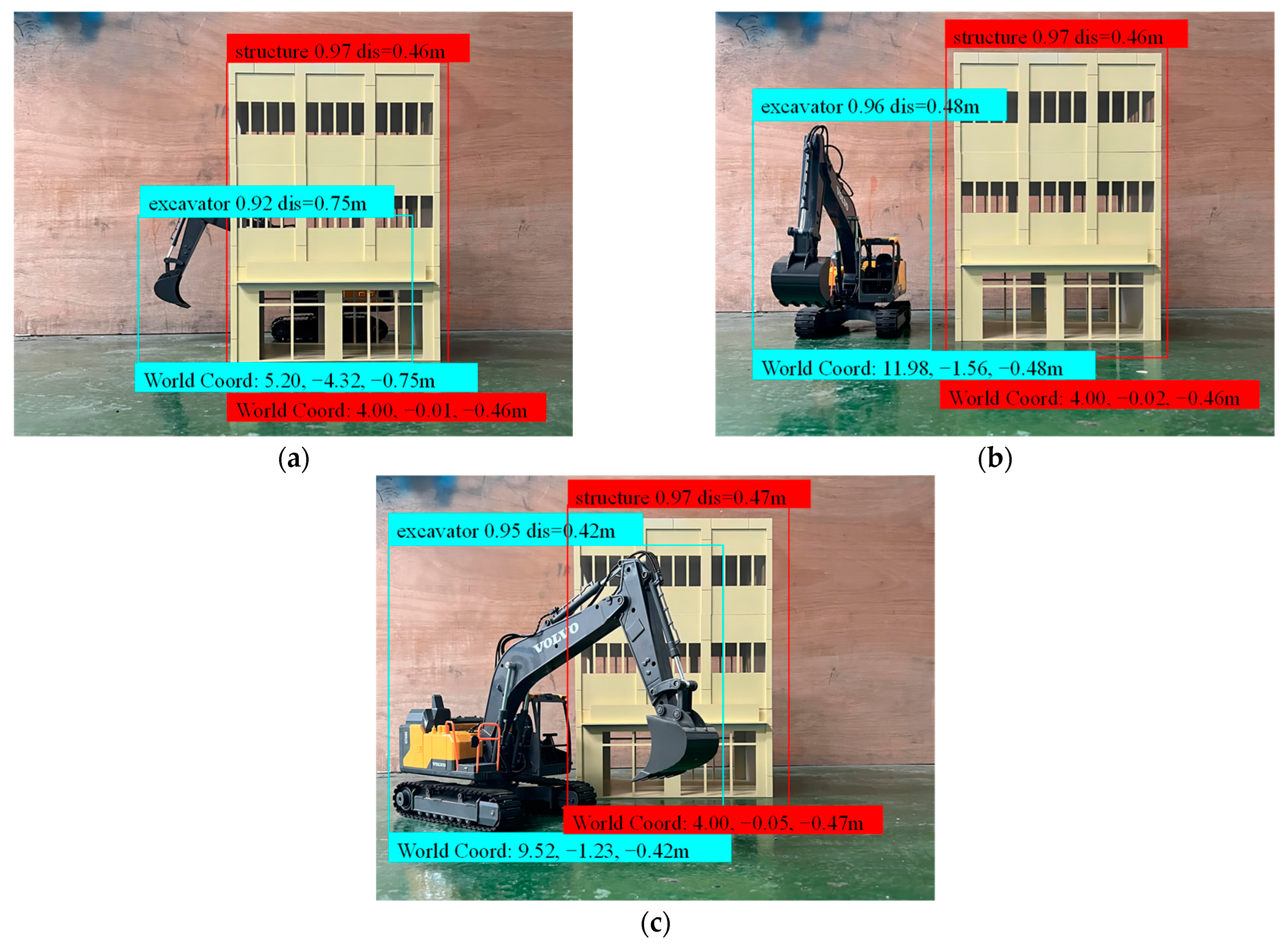

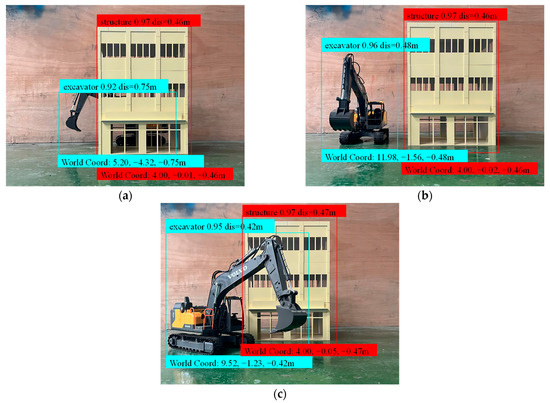

3.3.3. Synchronous Detection and Localization of Construction Elements

Based on spatial information perception, this study enhances the synchronous detection and localization of construction elements by integrating the SGBM algorithm with YOLOv8. Specifically, the YOLOv8 model detects excavators and buildings, whereas the SGBM algorithm automatically pinpoints the feature points of these objects, thereby facilitating the extraction of depth information and three-dimensional coordinates. This integrated approach enables the efficient image-based synchronous detection and localization of construction elements. It should be noted that the depth and three-dimensional coordinates shown in Figure 8 correspond to the center points of the detection boxes.

Figure 8.

Synchronous detection and localization of target objects under different scenarios: (a) partial obstruction of the excavator by the building; (b) unobstructed view; and (c) partial obstruction of the building by the excavator.
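The coupling of detection and localization can be sketched as follows: take the center of a detection box, read the disparity there, and convert it to 3D coordinates. This is a simplified illustration rather than the pipeline used in this study. The median over a small window, the function name `locate_detection`, and all calibration values are assumptions.

```python
from statistics import median

def locate_detection(box, disparity_map, focal_px, baseline_m, cx, cy, half_window=1):
    """Return (X, Y, Z) for the centre of a detection box, or None if no valid disparity.

    box: (x_min, y_min, x_max, y_max) in pixels.
    disparity_map: list of rows of disparities in pixels; values <= 0 mean "invalid".
    """
    u = int((box[0] + box[2]) / 2)
    v = int((box[1] + box[3]) / 2)
    # Collect valid disparities around the centre to reduce the effect of holes.
    samples = []
    for row in range(max(0, v - half_window), min(len(disparity_map), v + half_window + 1)):
        for col in range(max(0, u - half_window), min(len(disparity_map[0]), u + half_window + 1)):
            d = disparity_map[row][col]
            if d > 0:
                samples.append(d)
    if not samples:
        return None
    z = focal_px * baseline_m / median(samples)   # depth from disparity
    return ((u - cx) * z / focal_px, (v - cy) * z / focal_px, z)

# Hypothetical 6 x 8 disparity map with an invalid value exactly at the box centre.
disp = [[40.0] * 8 for _ in range(6)]
disp[3][4] = 0.0
position = locate_detection((2, 1, 6, 5), disp, focal_px=800.0, baseline_m=0.05, cx=2.0, cy=3.0)
```

Sampling a window instead of a single pixel keeps the localization usable when the disparity map has a hole at the box center, which is common near occlusion boundaries.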

3.4. Near Real-Time Projection Method for Integrating Dynamic Elements into the Static Model Base

3.4.1. Near Real-Time Projection Method

As previously identified, accurately representing the spatial relationships and temporal dynamics of construction site elements in real time within a 3D model space presents a significant challenge to the establishment of dynamic digital twin models. Therefore, a novel near-real-time projection method that merges 2D image data with 3D models was introduced. This approach uses point cloud models of the construction environment and dynamic elements as the bases for model integration. First, the trajectory of the dynamic elements is recorded by binocular camera systems. Next, using the segmented video feed, the enhanced YOLOv8 detection framework, in conjunction with the SGBM algorithm, is employed for the near-real-time detection and localization of dynamic elements. This process automates the extraction and matching of feature points, thereby determining the three-dimensional coordinates of the dynamic elements within each frame. The spatial coordinates of the point cloud of the underlying three-dimensional model are also obtained. Based on the principle of rigid-body geometric invariance, the dynamic elements are then projected onto the static model base. This projection captures positional data across the full field of view of each frame. The result is a dynamic digital twin model that encapsulates the spatial relationship between dynamic and static elements within the construction environment. The projection process is shown in Figure 9.

Figure 9.

Projection of excavator into the building model base.
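One simple way to picture the projection step is as a coordinate transfer: static feature points visible in the camera frame are also known in the model base, so they can anchor a transform that carries the excavator position from one frame to the other. The sketch below is restricted to the ground plane and uses two anchor points with complex arithmetic. It is an illustrative simplification under these assumptions and is not the method implemented in this study; all names and coordinates are hypothetical.

```python
def fit_ground_plane_similarity(cam_a, cam_b, model_a, model_b):
    """Fit p' = s * p + t mapping two camera-frame anchors onto their model-frame positions.

    Points are (x, z) ground-plane coordinates. The complex factor s encodes
    rotation and uniform scale; t is the translation. Assumes no mirroring.
    """
    ca, cb = complex(*cam_a), complex(*cam_b)
    ma, mb = complex(*model_a), complex(*model_b)
    s = (mb - ma) / (cb - ca)
    t = ma - s * ca
    return s, t

def project_to_model(point, s, t):
    """Apply the fitted transform to a camera-frame ground-plane point."""
    p = s * complex(*point) + t
    return (p.real, p.imag)

# Two building corners seen by the camera and their coordinates in the model base.
s, t = fit_ground_plane_similarity((0.0, 0.0), (1.0, 0.0), (10.0, 10.0), (10.0, 12.0))
excavator_in_model = project_to_model((0.5, 0.5), s, t)
```

With more than two anchors, or with full 3D motion, a least-squares rigid or similarity fit would be the natural extension of this idea.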

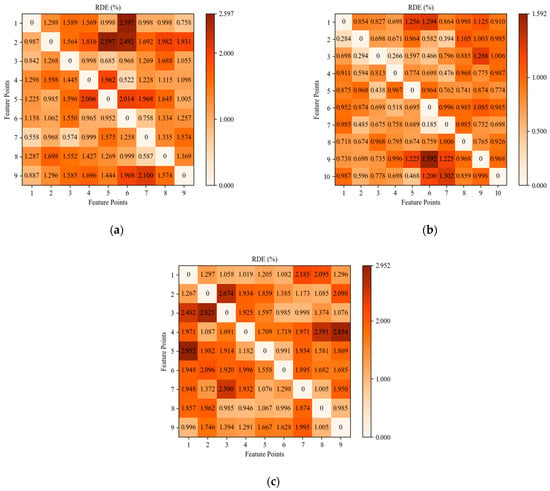

3.4.2. Accuracy Analysis of the Projection Method

To assess the accuracy of the projection method employed in this study, the three scenarios depicted in Figure 10 were analyzed. The analysis involved comparing the actual distances to the pixel distances between the feature points on the excavator and the building models. Given the non-dimensional nature of pixel distances in the 3D point cloud model, the relative error was calculated based on the ratio of the distances between feature points in both the actual scene and the 3D model. The distance ratios in the scene were calculated using Equation (3), whereas those in the 3D model were determined using Equation (4). Subsequently, Equation (5) was used to calculate the relative error within the reconstructed 3D model, measuring the method’s precision. Figure 10 presents the relative errors observed in the 3D models generated using the projection method in the three scenarios, demonstrating that the highest error recorded was only 3%. This finding corroborated the substantial accuracy of the projection method.

Figure 10.

RDE in 3D models generated using the projection method across three distinct scenarios: (a) partial obstruction of the excavator by the building; (b) unobstructed view; and (c) partial obstruction of the building by the excavator.

The quantities in Equations (3) to (5) are defined as follows:

1. the relative error of the DEP method;
2. the ratio of distances between any two feature points in real space;
3. the ratio of pixel distances between any two feature points in the 3D model;
4. the spatial coordinates of the feature points in the real world and in the 3D model;
5. the identifiers of the feature points.
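Based on the definitions above, one form that Equations (3) to (5) could plausibly take is given below. The notation is assumed for illustration: unprimed coordinates refer to the real scene, primed coordinates to the 3D model, and i, j, and k are feature point identifiers. The choice of which two point pairs enter each ratio is also an assumption.

```latex
\begin{align}
R_{\mathrm{real}} &= \frac{\sqrt{(x_i - x_j)^2 + (y_i - y_j)^2 + (z_i - z_j)^2}}
                          {\sqrt{(x_j - x_k)^2 + (y_j - y_k)^2 + (z_j - z_k)^2}} \tag{3} \\
R_{\mathrm{model}} &= \frac{\sqrt{(x'_i - x'_j)^2 + (y'_i - y'_j)^2 + (z'_i - z'_j)^2}}
                           {\sqrt{(x'_j - x'_k)^2 + (y'_j - y'_k)^2 + (z'_j - z'_k)^2}} \tag{4} \\
\delta &= \frac{\left| R_{\mathrm{real}} - R_{\mathrm{model}} \right|}{R_{\mathrm{real}}} \times 100\% \tag{5}
\end{align}
```

Because both ratios are dimensionless, the unknown scale of the point cloud cancels, which is why a ratio-based error is suitable for a non-dimensional model.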

4. Experiment and Verification

4.1. Experimental Design and Procedure

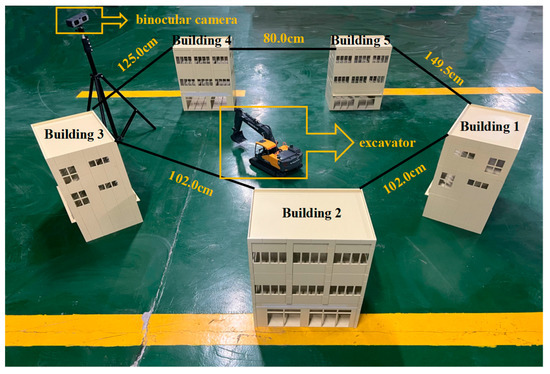

To evaluate the efficacy of the proposed 3D reconstruction methodology, an experimental setup was established under daylight conditions using a binocular camera system alongside a calibration plate. The system, equipped with a 5-megapixel synchronized camera, captured images at a resolution of 1280 × 480 pixels at 30 fps, with a focusing range from 5 cm to infinity. As depicted in Figure 11, the experimental configuration included five miniature building models and an excavator model, with dimensions aligned with those outlined in earlier sections of this study. The spatial arrangement placed the buildings at defined distances: 102 cm between buildings 1 and 2, 102 cm between buildings 2 and 3, 125 cm between buildings 3 and 4, 107 cm between buildings 4 and 5, and 149.5 cm between buildings 5 and 1. The excavator model was operated remotely within this setup, and its movements were recorded using the binocular camera system.

Figure 11.

Experimental scene and instruments.

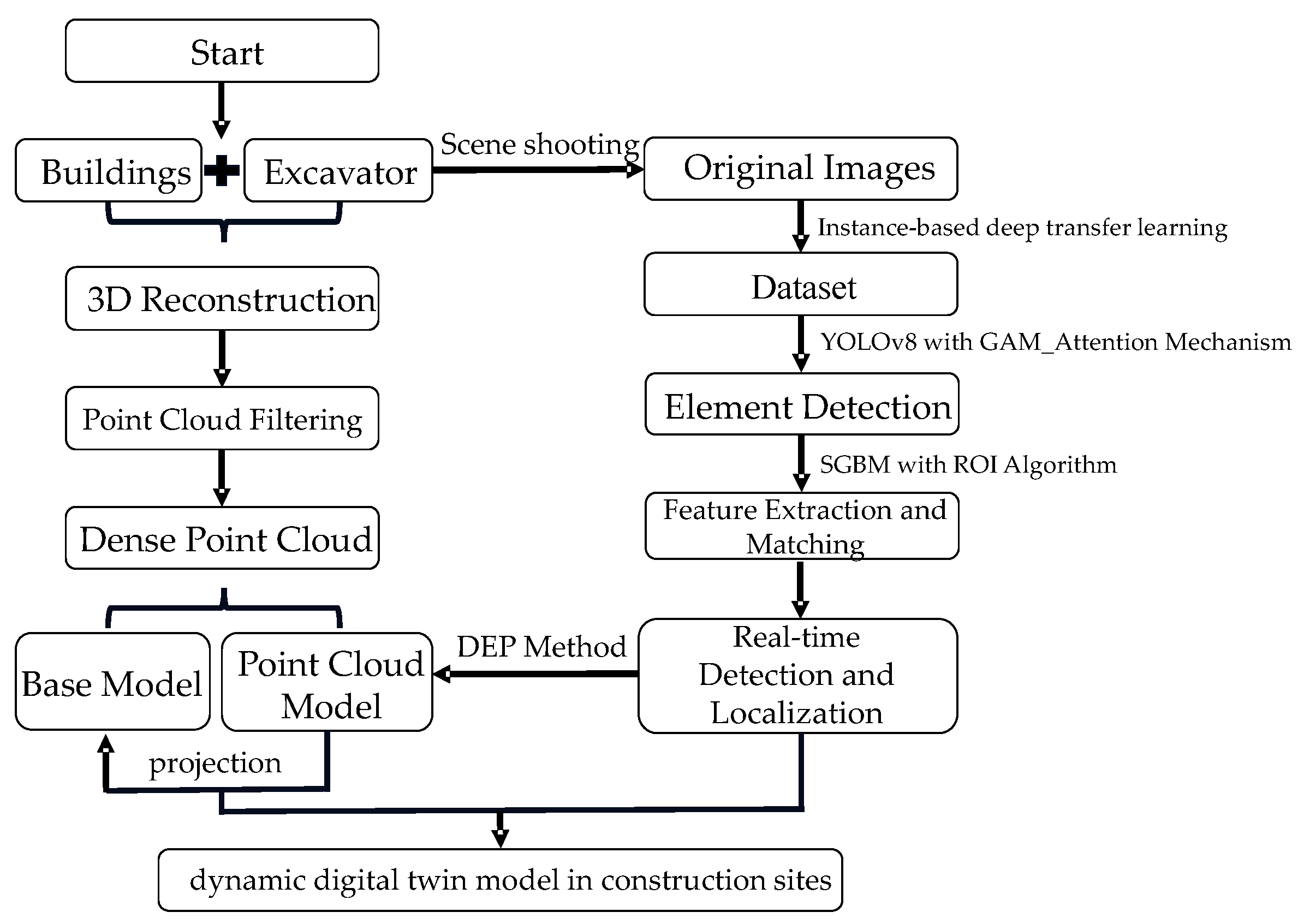

As depicted in Figure 12, the experimental workflow was initiated with the 3D reconstruction of both the construction site and excavator by employing a static element reconstruction technique. This phase yielded a point cloud model, laying the foundation for subsequent modeling processes. The binocular camera system captured the movements of the excavator within the experimental setup and produced 440 images. This initial dataset was expanded to 3440 images by integrating 3000 images from the MOCS dataset. Subsequently, the YOLOv8n model, enhanced by the GAM_Attention Mechanism, was trained using this comprehensive dataset. The SGBM algorithm was applied to these images to extract depth information from the detected feature points to achieve the synchronous detection and localization of the excavator and buildings. The final step entailed the integration of the excavator point cloud model into the base model of the construction scene using the specified near-real-time projection method, effectively demonstrating the application of the proposed methodology in a test environment.

Figure 12.

Experimental procedure.

4.2. Results and Discussion

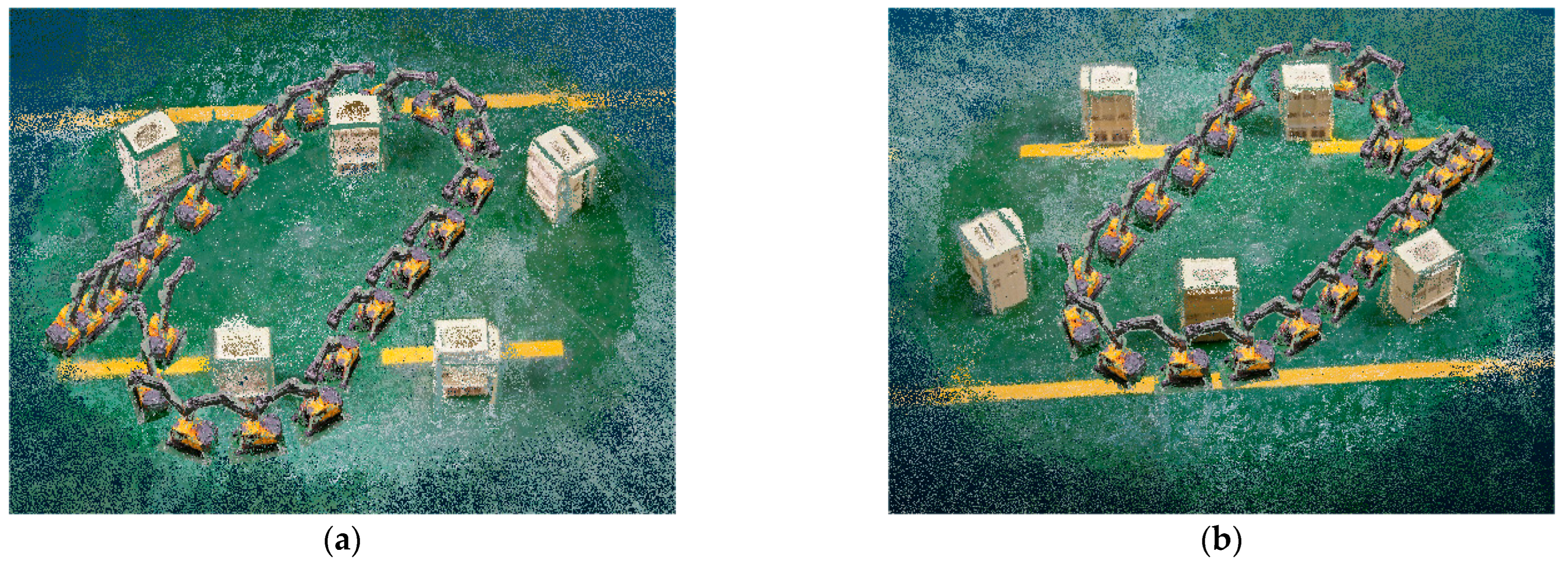

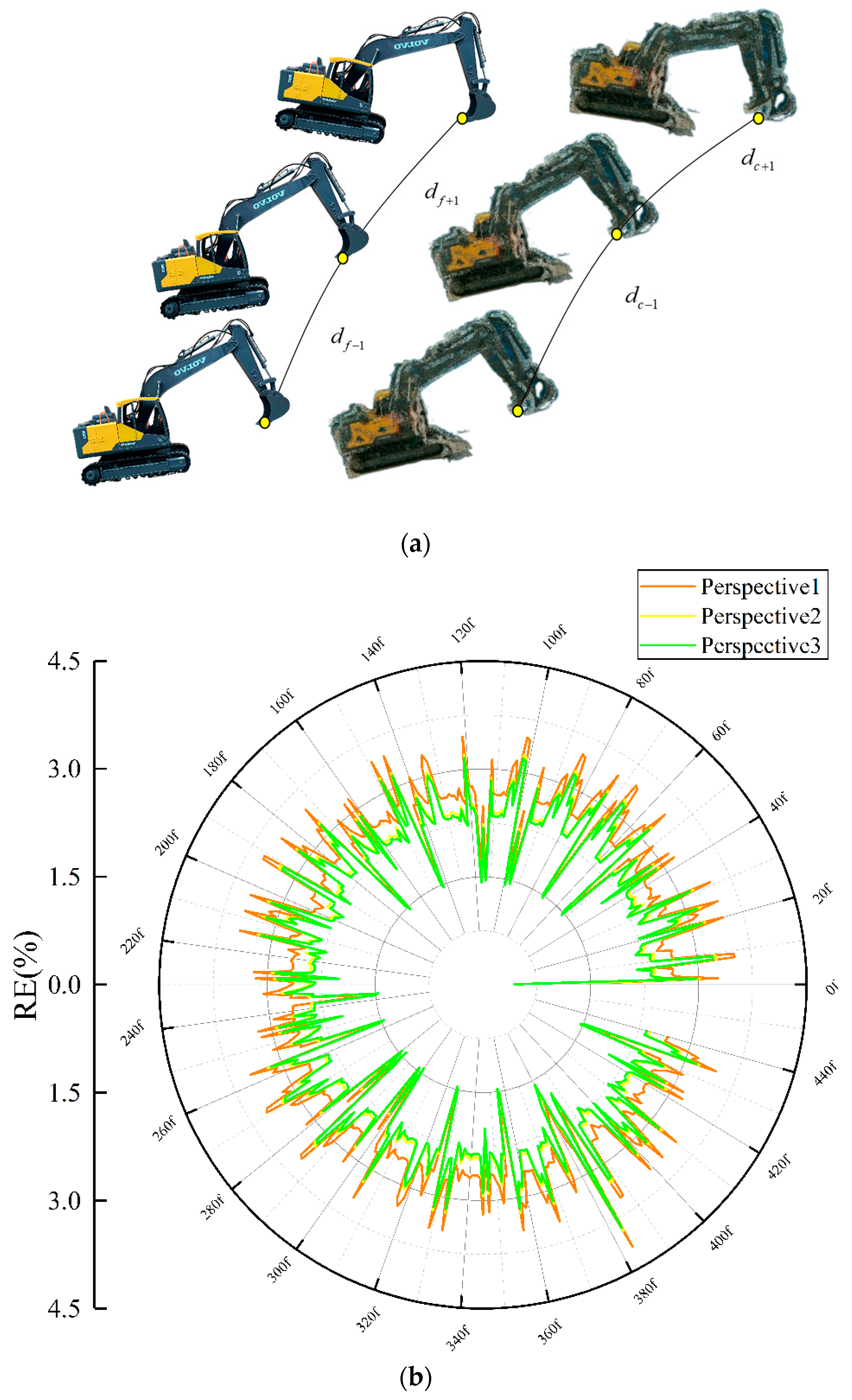

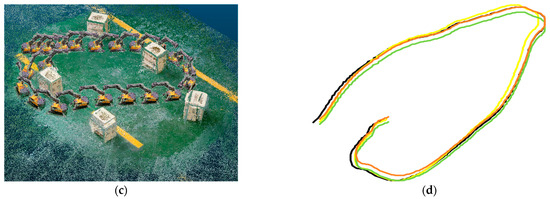

A 44 s video of the excavator navigating through the series of buildings was recorded with the binocular camera, and 440 frames were extracted from it to evaluate the excavator’s dynamic trajectory within the digital twin framework. Figure 13a–c illustrate the reconstructed digital twin model from three different viewpoints, with the movement trajectory of the excavator highlighted in each. Figure 13d compares the motion trajectories extracted from these viewpoints and shows considerable consistency across the three angles. This finding supports the efficacy of the proposed methodology for the 3D reconstruction of dynamic environments: it converts 2D video data captured by a binocular camera into a 3D virtual model space that can be viewed from multiple angles.
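The figures quoted here imply 440 / 44 = 10 extracted frames per second of video. A minimal sketch of one way such a subset could be drawn from a 30 fps recording, assuming uniform sampling (the source does not say how the frames were chosen); the function name `sample_frame_indices` is hypothetical:

```python
def sample_frame_indices(duration_s, capture_fps, n_frames):
    """Return evenly spaced frame indices covering a recording.

    Assumes a constant capture rate and a fixed integer stride between samples.
    """
    total_frames = int(round(duration_s * capture_fps))
    if n_frames <= 0 or n_frames > total_frames:
        raise ValueError("n_frames must be between 1 and the number of captured frames")
    stride = total_frames // n_frames
    return list(range(0, stride * n_frames, stride))

# Values taken from the text: 44 s video, 30 fps capture, 440 extracted frames.
indices = sample_frame_indices(duration_s=44, capture_fps=30, n_frames=440)
stride = indices[1] - indices[0]
```

Under these assumptions every third captured frame is kept, so consecutive extracted frames are 0.1 s apart.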

Figure 13.

Trajectory of the excavator in the reconstructed model from three different perspectives: (a) Perspective 1; (b) Perspective 2; (c) Perspective 3; (d) comparison of excavator’s travel trajectories from three different perspectives.

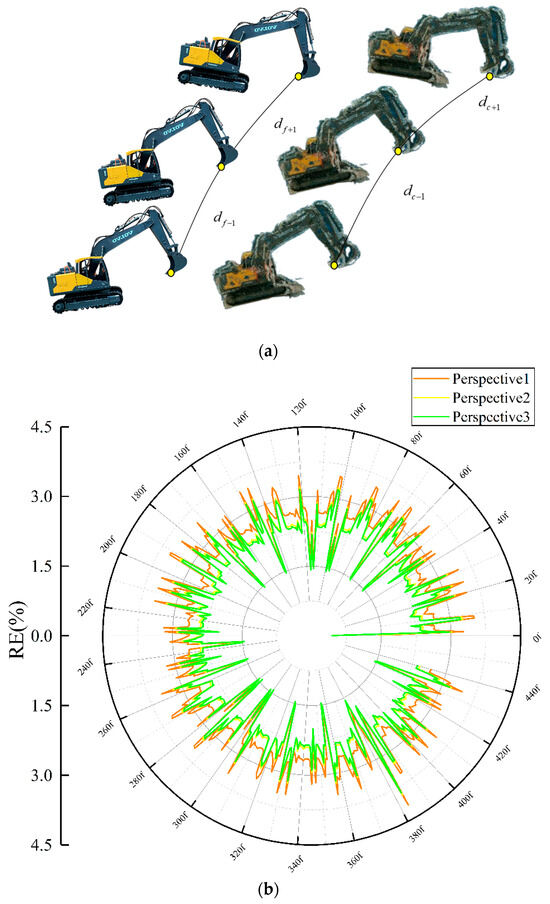

To rigorously assess the precision of the proposed reconstruction methodology, this study utilized the movement distance of the excavator between consecutive frames as a metric for precision evaluation. Figure 14a shows the process of calculating the actual spatial and pixel distances within the point cloud model for feature points across three consecutive frames. Equations (6) and (7) were applied to compute the ratios of the movement distances between the first pair of frames and the subsequent pair in both the real environment and the model space. The relative error in the reconstructed model was analyzed from different viewpoints by comparing the movement distance ratios, as shown in Equation (8). Figure 14b shows the distribution of reconstruction errors over 440 frames for the feature points of the excavator. The maximum relative error observed in the movement trajectories from three distinct perspectives was 3.5%. This outcome verifies the capability of the developed reconstruction method to achieve the high-precision replication of dynamic activities at construction sites from 2D camera images, enabling the comprehensive transformation of 2D image data into a 3D spatial model and significantly enhancing the generation process of digital twin models.

Figure 14.

Accuracy analysis of 3D reconstruction: (a) methodology for measuring excavator travel distances; (b) relative error distribution of excavator travel distances from three perspectives in 3D reconstruction models.

The quantities used in Equations (6)–(8) are as follows:

- the ratio of movement distances between the initial and subsequent frames in the real scene;

- the pixel-based movement distances of feature points across frames in the model space;

- the actual distances between feature points in the first and last two frames, respectively, in the real scene;

- the corresponding pixel-based distances in the model space;

- N, the total count of feature points;

- the relative error in the model’s dynamic reconstruction.
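The ratio-based accuracy check can be sketched in code. The exact form of Equations (6)–(8) is not reproduced in this text, so the following is an assumed reading rather than the authors' formulas: each ratio is taken as the mean, over the N feature points, of the distance moved between the first pair of frames divided by the distance moved between the second pair, and the relative error is $|R_{model} - R_{real}| / R_{real}$. All function names, symbols, and sample values are hypothetical.

```python
import numpy as np

def distance_ratio(first_pair, second_pair):
    """Mean over feature points of (distance in frames 1-2) / (distance in frames 2-3)."""
    first = np.asarray(first_pair, dtype=np.float64)
    second = np.asarray(second_pair, dtype=np.float64)
    if first.shape != second.shape or first.size == 0:
        raise ValueError("both pairs need the same non-zero number of feature points")
    if np.any(second <= 0):
        raise ValueError("second-pair distances must be positive")
    return float(np.mean(first / second))

def relative_error(real_ratio, model_ratio):
    """Relative deviation of the model-space ratio from the real-scene ratio."""
    return abs(model_ratio - real_ratio) / abs(real_ratio)

# Hypothetical measurements for N = 3 feature points (not data from the study).
real_d12 = [10.0, 10.0, 10.0]    # real-scene distances, frames 1-2 (cm)
real_d23 = [5.0, 5.0, 5.0]       # real-scene distances, frames 2-3 (cm)
model_p12 = [41.0, 41.0, 41.0]   # model-space distances, frames 1-2 (pixels)
model_p23 = [20.0, 20.0, 20.0]   # model-space distances, frames 2-3 (pixels)

r_real = distance_ratio(real_d12, real_d23)
r_model = distance_ratio(model_p12, model_p23)
error = relative_error(r_real, r_model)
```

Because each ratio is dimensionless, real-scene distances in centimetres and model-space distances in pixels can be compared directly without first calibrating a scale factor between the two spaces.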

5. Conclusions

This research introduces a methodological framework for the three-dimensional reconstruction of dynamic digital twin models at construction sites, allowing the construction environment to be monitored from multiple perspectives within a 3D model space. The proposed methodology integrates geometric element 3D reconstruction, object detection, and spatial information perception, thereby improving the fidelity and utility of the digital twin models. A near-real-time projection technique is also introduced, which is central to the dynamic reconstruction of digital twin models. It streamlines the process of establishing dynamic digital twins and enables the multi-perspective management and oversight of dynamic sequences captured in video imagery within the digital twin environment, improving the operational efficiency of construction site management. The principal findings of this study are summarized as follows:

- (1) The accuracy of the 3D reconstruction technique based on binocular vision exceeds 95%.

- (2) The elemental localization error of the improved SGBM algorithm is less than 1.22%.

- (3) The YOLOv8 model is fused with the improved SGBM algorithm to realize near-real-time detection and localization of target objects; mAP@0.5 was over 0.995.

- (4) The accuracy of the near-real-time 2D-to-3D projection technique was over 0.97.

The proposed methodology improved reconstruction cost, detection accuracy, and positioning time. First, the acquisition cost of binocular cameras is less than half that of conventional reconstruction apparatus. Second, the detection precision of the enhanced model increased by 7.2%. Third, the system was designed to operate in a parallel processing framework, allowing reconstruction at near-real-time speed.

This study has certain limitations, which future work will address as follows: (1) the technique’s practical applicability will be demonstrated by implementing it in a real-world, large-scale construction project; (2) additional dynamic components, such as personnel and machinery, will be incorporated to demonstrate the technique’s versatility; (3) the algorithms will be further enhanced and the technology integrated with a safety alert system, enabling comprehensive, real-time safety surveillance of the construction site.

Author Contributions

Conceptualization, P.L.; methodology, X.A. and P.L.; validation, J.H. and P.L.; formal analysis, J.H. and P.L.; investigation, C.W. and X.A.; resources, X.A. and P.L.; data curation, J.H.; writing—original draft preparation, J.H. and P.L.; writing—review and editing, P.L., X.A. and C.W.; visualization, J.H.; supervision, P.L., X.A. and C.W.; project administration, P.L., X.A. and C.W.; funding acquisition, P.L. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by the National Natural Science Foundation of China, grant number 52379115, the Chongqing Natural Science Foundation of China, grant number CSTB2022NSCQ-MSX0509, and the Research and Innovation Program for Graduate Students in Chongqing, grant 2024S0046.

Data Availability Statement

All data, models, or code that support the findings of this study are available from the corresponding author upon reasonable request. The data are not publicly available due to site privacy.

Conflicts of Interest

The authors declare no conflicts of interest.

References

- Luo, R.; Sheng, B.; Lu, Y.; Huang, Y.; Fu, G.; Yin, X. Digital Twin Model Quality Optimization and Control Methods Based on Workflow Management. Appl. Sci. 2023, 13, 2884.

- Tuhaise, V.V.; Tah, J.H.M.; Abanda, F.H. Technologies for digital twin applications in construction. Autom. Constr. 2023, 152, 104931.

- Yang, C.; Lin, J.-R.; Yan, K.-X.; Deng, Y.-C.; Hu, Z.-Z.; Liu, C. Data-Driven Quantitative Performance Evaluation of Construction Supervisors. Buildings 2023, 13, 1264.

- Zhong, B.; Gan, C.; Luo, H.; Xing, X. Ontology-based framework for building environmental monitoring and compliance checking under BIM environment. Build. Environ. 2018, 141, 127–142.

- Kavaliauskas, P.; Fernandez, J.B.; McGuinness, K.; Jurelionis, A. Automation of Construction Progress Monitoring by Integrating 3D Point Cloud Data with an IFC-Based BIM Model. Buildings 2022, 12, 1754.

- Zhu, J.; Zeng, Q.; Han, F.; Jia, C.; Bian, Y.; Wei, C. Design of laser scanning binocular stereo vision imaging system and target measurement. Optik 2022, 270, 169994.

- Sani, N.H.; Tahar, K.N.; Maharjan, G.R.; Matos, J.C.; Muhammad, M. 3D reconstruction of building model using UAV point clouds. Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. 2022, 43, 455–460.

- Dabetwar, S.; Kulkarni, N.N.; Angelosanti, M.; Niezrecki, C.; Sabato, A. Sensitivity analysis of unmanned aerial vehicle-borne 3D point cloud reconstruction from infrared images. J. Build. Eng. 2022, 58, 105070.

- Zhao, S.; Kang, F.; Li, J.; Ma, C. Structural health monitoring and inspection of dams based on UAV photogrammetry with image 3D reconstruction. Autom. Constr. 2021, 130, 103832.

- Kim, H.; Kim, H. 3D reconstruction of a concrete mixer truck for training object detectors. Autom. Constr. 2018, 88, 23–30.

- Guan, J.; Yang, X.; Lee, V.C.; Liu, W.; Li, Y.; Ding, L.; Hui, B. Full field-of-view pavement stereo reconstruction under dynamic traffic conditions: Incorporating height-adaptive vehicle detection and multi-view occlusion optimization. Autom. Constr. 2022, 144, 104615.

- Jiang, W.; Zhou, Y.; Ding, L.; Zhou, C.; Ning, X. UAV-based 3D reconstruction for hoist site mapping and layout planning in petrochemical construction. Autom. Constr. 2020, 113, 103137.

- Liu, Z.; Kim, D.; Lee, S.; Zhou, L.; An, X.; Liu, M. Near Real-Time 3D Reconstruction and Quality 3D Point Cloud for Time-Critical Construction Monitoring. Buildings 2023, 13, 464.

- An, X.; Zhou, L.; Liu, Z.; Wang, C.; Li, P.; Li, Z. Dataset and benchmark for detecting moving objects in construction sites. Autom. Constr. 2021, 122, 103482.

- Wu, H.; Zhao, J. An intelligent vision-based approach for helmet identification for work safety. Comput. Ind. 2018, 100, 267–277.

- Wu, J.; Cai, N.; Chen, W.; Wang, H.; Wang, G. Automatic detection of hardhats worn by construction personnel: A deep learning approach and benchmark dataset. Autom. Constr. 2019, 106, 102894.

- Liu, D.; Sun, J.; Lu, W.; Li, S.; Zhou, X. 3D reconstruction of the dynamic scene with high-speed targets for GM-APD lidar. Opt. Laser Technol. 2023, 161, 109114.

- Chu, C.-C.; Nandhakumar, N.; Aggarwal, J. Image segmentation using laser radar data. Pattern Recognit. 1990, 23, 569–581.

- Verykokou, S.; Ioannidis, C. An Overview on Image-Based and Scanner-Based 3D Modeling Technologies. Sensors 2023, 23, 596.

- Hosamo, H.H.; Hosamo, M.H. Digital Twin Technology for Bridge Maintenance using 3D Laser Scanning: A Review. Adv. Civ. Eng. 2022, 2022, 2194949.

- Liu, T.; Wang, N.; Fu, Q.; Zhang, Y.; Wang, M. Research on 3D Reconstruction Method Based on Laser Rotation Scanning. In Proceedings of the 2019 IEEE International Conference on Mechatronics and Automation (ICMA), Tianjin, China, 4–7 August 2019; pp. 1600–1604.

- Yu, Q.; Helmholz, P.; Belton, D. Semantically Enhanced 3D Building Model Reconstruction from Terrestrial Laser-Scanning Data. J. Surv. Eng. 2017, 143, 04017015.

- Pérez, G.; Escolà, A.; Rosell-Polo, J.R.; Coma, J.; Arasanz, R.; Marrero, B.; Cabeza, L.F.; Gregorio, E. 3D characterization of a Boston Ivy double-skin green building facade using a LiDAR system. Build. Environ. 2021, 206, 108320.

- Abdulwahab, S.; Rashwan, H.A.; Garcia, M.A.; Masoumian, A.; Puig, D. Monocular depth map estimation based on a multi-scale deep architecture and curvilinear saliency feature boosting. Neural Comput. Appl. 2022, 34, 16423–16440.

- Jin, S.; Ou, Y. Feature-Based Monocular Dynamic 3D Object Reconstruction; Social Robotics. In Proceedings of the 10th International Conference, ICSR 2018, Qingdao, China, 28–30 November 2018; Proceedings 10; Springer: Berlin/Heidelberg, Germany, 2018; pp. 380–389.

- Ni, Z.; Burks, T.F.; Lee, W.S. 3D Reconstruction of Plant/Tree Canopy Using Monocular and Binocular Vision. J. Imaging 2016, 2, 28.

- Deng, L.; Sun, T.; Yang, L.; Cao, R. Binocular video-based 3D reconstruction and length quantification of cracks in concrete structures. Autom. Constr. 2023, 148, 104743.

- Yin, H.; Lin, Z.; Yeoh, J.K. Semantic localization on BIM-generated maps using a 3D LiDAR sensor. Autom. Constr. 2023, 146, 104641.

- Hane, C.; Zach, C.; Cohen, A.; Pollefeys, M. Dense Semantic 3D Reconstruction. IEEE Trans. Pattern Anal. Mach. Intell. 2016, 39, 1730–1743.

- Stathopoulou, E.-K.; Remondino, F. Semantic photogrammetry—Boosting image-based 3D reconstruction with semantic labeling. ISPRS—Int. Arch. Photogramm. Remote. Sens. Spat. Inf. Sci. 2019, 42, 685–690.

- Tong, C. Three-dimensional reconstruction of the dribble track of soccer robot based on heterogeneous binocular vision. J. Ambient. Intell. Humaniz. Comput. 2020, 11, 6361–6372.

- Li, J.; Gao, W.; Wu, Y.; Liu, Y.; Shen, Y. High-quality indoor scene 3D reconstruction with RGB-D cameras: A brief review. Comput. Vis. Media 2022, 8, 369–393.

- Sung, C.; Kim, P.Y. 3D terrain reconstruction of construction sites using a stereo camera. Autom. Constr. 2016, 64, 65–77.

- Kim, D.; Liu, M.; Lee, S.; Kamat, V.R. Remote proximity monitoring between mobile construction resources using camera-mounted UAVs. Autom. Constr. 2019, 99, 168–182.

- Fang, Q.; Li, H.; Luo, X.; Ding, L.; Rose, T.M.; An, W.; Yu, Y. A deep learning-based method for detecting non-certified work on construction sites. Adv. Eng. Inform. 2018, 35, 56–68.

- Luo, X.; Li, H.; Cao, D.; Dai, F.; Seo, J.; Lee, S. Recognizing Diverse Construction Activities in Site Images via Relevance Networks of Construction-Related Objects Detected by Convolutional Neural Networks. J. Comput. Civ. Eng. 2018, 32, 04018012.

- Roberts, D.; Golparvar-Fard, M. End-to-end vision-based detection, tracking and activity analysis of earthmoving equipment filmed at ground level. Autom. Constr. 2019, 105, 102811.

- Kim, H.; Kim, H.; Hong, Y.W.; Byun, H. Detecting Construction Equipment Using a Region-Based Fully Convolutional Network and Transfer Learning. J. Comput. Civ. Eng. 2018, 32, 04017082.

- Wang, C.; Cui, X.; Zhao, S.; Guo, K.; Wang, Y.; Song, Y. The application of deep learning in stereo matching and disparity estimation: A bibliometric review. Expert Syst. Appl. 2023, 238, 122006.

- Zhang, Y.; Gu, J.; Rao, T.; Lai, H.; Zhang, B.; Zhang, J.; Yin, Y. A Shape Reconstruction and Measurement Method for Spherical Hedges Using Binocular Vision. Front. Plant Sci. 2022, 13, 849821.

- Gai, Q. Optimization of Stereo Matching in 3D Reconstruction Based on Binocular Vision. J. Phys. Conf. Ser. 2018, 960, 012029.

- Hu, Y. Research on a three-dimensional reconstruction method based on the feature matching algorithm of a scale-invariant feature transform. Math. Comput. Model. 2011, 54, 919–923.

- Xiang, R.; Jiang, H.; Ying, Y. Recognition of clustered tomatoes based on binocular stereo vision. Comput. Electron. Agric. 2014, 106, 75–90.

- Xu, Y.; Liu, K.; Ni, J.; Li, Q. 3D reconstruction method based on second-order semiglobal stereo matching and fast point positioning Delaunay triangulation. PLoS ONE 2022, 17, e0260466.

- Cai, H.; Andoh, A.R.; Su, X.; Li, S. A boundary condition based algorithm for locating construction site objects using RFID and GPS. Adv. Eng. Inform. 2014, 28, 455–468.

- Chen, P.-Y.; Lin, H.-Y.; Pai, N.-S.; Huang, J.-B. Construction of Edge Computing Platform Using 3D LiDAR and Camera Heterogeneous Sensing Fusion for Front Obstacle Recognition and Distance Measurement System. Processes 2022, 10, 1876.

- Yang, Z.; Yuan, Y.; Zhang, M.; Zhao, X.; Zhang, Y.; Tian, B. Safety Distance Identification for Crane Drivers Based on Mask R-CNN. Sensors 2019, 19, 2789.

- Maini, R.; Aggarwal, H. A comprehensive review of image enhancement techniques. arXiv 2010, arXiv:1003.4053.

- Shukla, K.N. A Review on Image Enhancement Techniques. Int. J. Eng. Appl. Comput. Sci. 2017, 2, 232–235.

- Liang, H.; Liu, M.; Hui, M.; Zhao, Y.; Dong, L.; Kong, L. 3D reconstruction of typical entities based on multi-perspective images. In Optical Metrology and Inspection for Industrial Applications IX; SPIE: Bellingham, WA, USA, 2022; Volume 12319, pp. 338–345.

- Yang, Q.; Yang, J.H. A Kruppa Equation-Based Virtual Reconstruction Method for 3D Images Under Visual Communication Scenes. IEEE Access 2023, 11, 143721–143730.

- Barzi, F.K.; Nezamabadi-Pour, H. Automatic objects’ depth estimation based on integral imaging. Multimed. Tools Appl. 2022, 81, 43531–43549.

- Weng, Z.; He, D.; Zheng, Y.; Zheng, Z.; Zhang, Y.; Gong, C. Research on 3D reconstruction method of cattle face based on image. J. Intell. Fuzzy Syst. 2023, 44, 10551–10563.

- Yuan, J.; Zhu, S.; Tang, K.; Sun, Q. ORB-TEDM: An RGB-D SLAM Approach Fusing ORB Triangulation Estimates and Depth Measurements. IEEE Trans. Instrum. Meas. 2022, 71, 1–15.

- Katsigiannis, S.; Seyedzadeh, S.; Agapiou, A.; Ramzan, N. Deep learning for crack detection on masonry façades using limited data and transfer learning. J. Build. Eng. 2023, 76, 107105.

- Feng, W.; Liang, Z.; Mei, J.; Yang, S.; Liang, B.; Zhong, X.; Xu, J. Petroleum Pipeline Interface Recognition and Pose Detection Based on Binocular Stereo Vision. Processes 2022, 10, 1722.

- Xiong, X.; Wang, K.; Chen, J.; Li, T.; Lu, B.; Ren, F. A Calibration System of Intelligent Driving Vehicle Mounted Scene Projection Camera Based on Zhang Zhengyou Calibration Method. In Proceedings of the 2022 34th Chinese Control and Decision Conference (CCDC), Hefei, China, 15–17 August 2022; pp. 747–750.

- Tang, Y.; Xi, C.; Gong, Z.; Li, L. Scoliosis Detection Based on Feature Extraction from Region-of-Interest. Trait. Signal 2022, 39, 815–822.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).