1. Introduction

Ovarian cancer is the eighth leading cause of female cancer death worldwide [1]. The incidence of ovarian cancer increases with age and peaks in the 50s [2]. In addition, malignant germ cell tumors are common in young patients with ovarian cancer [3].

CT is the major modality for diagnosing ovarian tumors, detecting metastases, staging ovarian cancer, following up after surgery, and assessing the efficacy of chemotherapy. On the other hand, CT radiation exposure may be associated with elevated risks of thyroid cancer and leukemia in adults of all ages and of non-Hodgkin lymphoma in younger patients [4]. Because patients with ovarian cancer tend to be relatively young, reducing CT radiation exposure is essential. CT radiation exposure is mainly controlled by adjusting the tube current and voltage [5]. Lowering the radiation dose increases image noise, so techniques that reduce image noise and artifacts while maintaining image quality are needed. Until the 2000s, low-dose CT images were reconstructed by filtered back projection (FBP). However, iterative reconstruction (IR) has been the mainstream since the first IR technique was clinically introduced in 2009 [5]. IR technology has evolved into hybrid IR, followed by model-based IR (MBIR). IR has been reported to reduce the radiation dose by 23–76% without compromising image quality compared to FBP [5].

In recent years, sparse-sampling CT, a technique that resembles compressed sensing in MRI, has attracted attention as a possible new way to reduce exposure. This approach combines sparse-sampling CT with artificial intelligence (AI), especially deep learning, to reconstruct CT images, and it may reduce CT radiation exposure more than two-fold over the current technology [5]. A few studies have shown that lower-dose CT could be used with the application of sparse-sampling CT and deep learning [6,7].

Research on noise reduction of CT images using deep learning started around 2017 [6,7,8,9,10,11,12,13,14,15]. In 2017, image-patch-based noise reduction was performed on low-dose CT images using a deep learning model [7,11]. On the other hand, Jin et al. showed that entire CT images could be directly denoised using U-net [9]. To improve perceptual image quality, the generative adversarial network (GAN) was introduced for CT noise reduction [12,13]. Following these advances, Nakamura et al. evaluated deep learning-based noise reduction on a real CT scanner [16]. However, most of these studies focused on quantitative measures such as peak signal-to-noise ratio (PSNR) and structural similarity (SSIM). To the best of our knowledge, there are few studies in which radiologists visually evaluated abnormal lesions such as metastases on CT images processed with deep learning [16]. Furthermore, quantitative measures such as PSNR and SSIM do not always agree with human-perceived quality [17]. Therefore, we suggest that PSNR and SSIM alone cannot assure clinical usefulness and accuracy of lesion detection.

The present study aimed to evaluate the usefulness of sparse-sampling CT with deep learning-based reconstruction for radiologists to detect the metastasis of malignant ovarian tumors. We assessed sparse-sampling CT denoised with deep learning both quantitatively, using PSNR and SSIM, and qualitatively, using radiologists’ visual scores and the detectability of metastasis.

2. Materials and Methods

This study used anonymized data from a public database. The regulations of our country did not require approval from an institutional review board for the use of a public database.

2.1. Dataset

Our study tested abdominal CT images obtained from The Cancer Imaging Archive (TCIA) [18,19,20]. We used one public database of the abdominal CT images available from TCIA: The Cancer Genome Atlas Ovarian Cancer (TCGA-OV) dataset. The dataset is constructed by a research community of The Cancer Genome Atlas, which focuses on the connection between cancer phenotypes and genotypes by providing clinical images. In TCGA-OV, clinical, genetic, and pathological data reside in Genomic Data Commons Data Portal while radiological data are stored on TCIA.

TCGA-OV provides 143 cases of abdominal contrast-enhanced CT images. Two cases were excluded from the current study because the pelvis was outside the CT scan range. The other 141 cases were included in the current study. The 141 cases were randomly divided into 71 training cases, 20 validation cases, and 50 test cases. For training, validation, and test cases, the number of CT images was 6916, 1909, and 4667, respectively.
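A case-level random split of this kind can be sketched as follows. This is a minimal illustration rather than the script used in the study: the seed, the integer case identifiers, and the helper name `split_cases` are assumptions introduced here, and only the counts (141 cases divided into 71, 20, and 50) come from the text.

```python
import random


def split_cases(case_ids, n_train=71, n_val=20, n_test=50, seed=0):
    """Randomly split case IDs at the case level (the seed is hypothetical)."""
    if len(case_ids) != n_train + n_val + n_test:
        raise ValueError("split sizes must sum to the number of cases")
    shuffled = list(case_ids)
    random.Random(seed).shuffle(shuffled)
    train = shuffled[:n_train]
    val = shuffled[n_train:n_train + n_val]
    test = shuffled[n_train + n_val:]
    return train, val, test


# Placeholder identifiers standing in for the 141 included TCGA-OV cases.
cases = list(range(141))
train_cases, val_cases, test_cases = split_cases(cases)
print(len(train_cases), len(val_cases), len(test_cases))
```

Splitting by case rather than by image keeps all slices of one patient in the same subset, so no patient contributes images to both training and test data.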

2.2. Simulation of Sparse-Sampling CT

As in a previous study [9], sparse-sampling CT images were simulated for the 141 sets of abdominal CT images of TCGA-OV. The original CT images of the TCGA-OV were converted into sinograms with 729 pixels by 1000 views using ASTRA-Toolbox (version 1.8.3, https://www.astra-toolbox.com/), an open-source MATLAB and Python toolbox of high-performance graphics processing unit (GPU) primitives for two- and three-dimensional tomography [21,22]. To simulate sparse-sampling CT images, we uniformly (at regular view intervals) subsampled the sinograms by a factor of 10, which corresponded to 100 views. While a 20-fold subsampling rate was used in the previous study [9], our preliminary analysis revealed that the abdominal CT images simulated with a 20-fold subsampling rate were too noisy. As a result, we utilized a 10-fold subsampling rate in the current study. The 10-fold subsampled sinograms were converted into the sparse-sampling CT images using FBP of the ASTRA-Toolbox.
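The uniform view subsampling step can be illustrated with a short NumPy sketch. It deliberately omits the forward projection and FBP steps performed with ASTRA-Toolbox and shows only how every tenth view is kept. The parallel-beam geometry, the 180-degree angular range, the random placeholder sinogram, and the helper name `subsample_views` are assumptions made for illustration; the sinogram size and subsampling factor are taken from the text.

```python
import numpy as np

N_VIEWS, N_DETECTORS, FACTOR = 1000, 729, 10  # values from the text


def subsample_views(sinogram, angles, factor):
    """Keep every `factor`-th projection view (uniform sparse sampling)."""
    return sinogram[::factor], angles[::factor]


# Assumed geometry: parallel beam, views spread evenly over 180 degrees.
angles = np.linspace(0.0, np.pi, N_VIEWS, endpoint=False)
# Random placeholder standing in for a real forward-projected sinogram.
full_sinogram = np.random.default_rng(0).random((N_VIEWS, N_DETECTORS))

sparse_sinogram, sparse_angles = subsample_views(full_sinogram, angles, FACTOR)
print(sparse_sinogram.shape)  # (100, 729)
```

The retained angles stay evenly spaced, which matches the "regular view intervals" described above; reconstructing from these 100 views with FBP is what produces the streak artifacts that the denoising model is trained to remove.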

2.3. Deep Learning Model

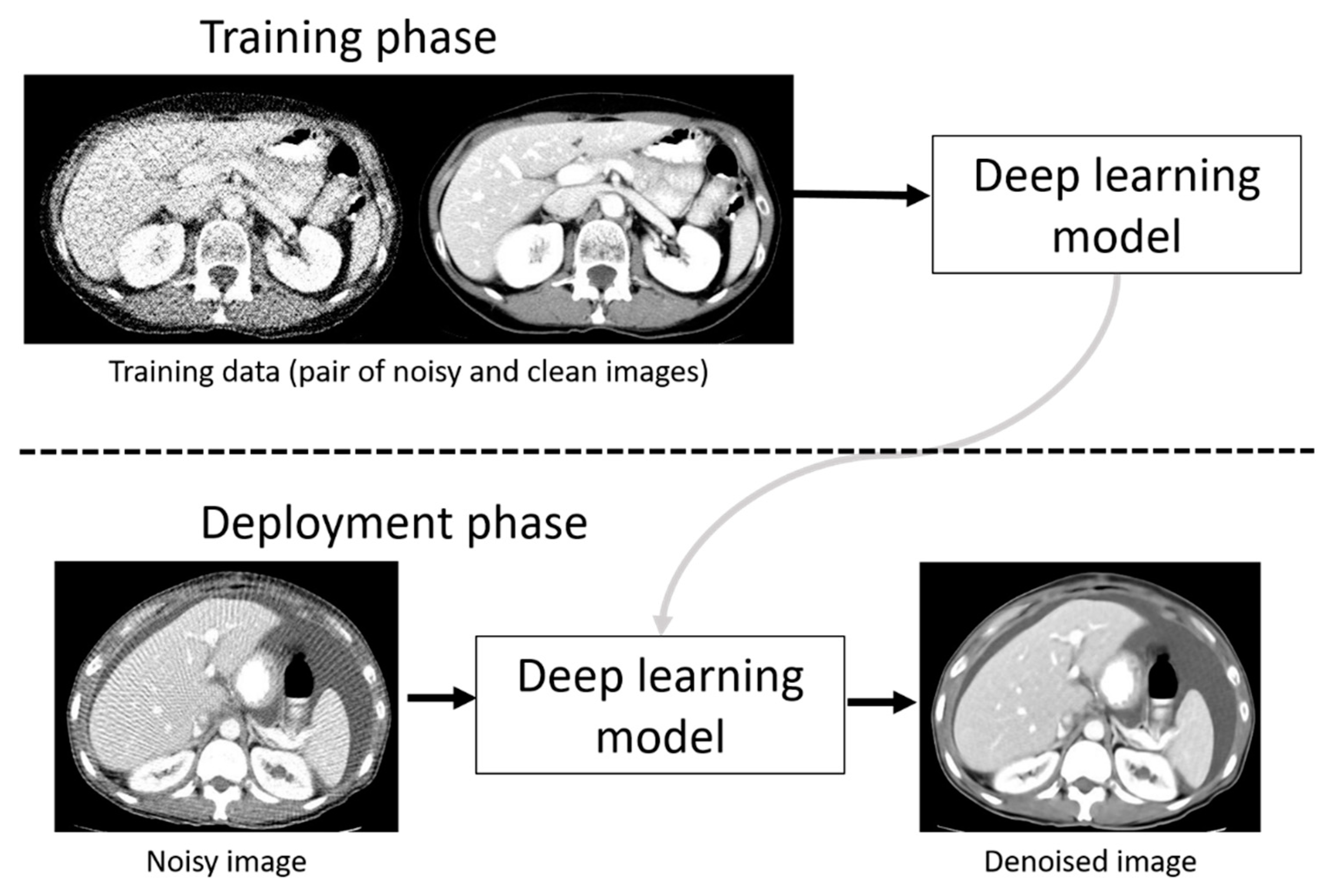

To denoise the sparse-sampling CT images, a deep learning model was employed in the current study. The outline of the training phase and deployment (denoising) phase using a deep learning model is represented in Figure 1. In the training phase, pairs of original and noisy CT images were used for constructing a deep learning model. In the deployment phase, we used the deep learning model for denoising noisy CT images. We used a workstation with GPU (GeForce RTX 2080 Ti with 11 GB memory, NVIDIA Corporation, Santa Clara, California, USA) for training and denoising.

Two types of deep learning models were evaluated: Residual Encoder-Decoder Convolutional Neural Network (RED-CNN) [7] and deeper U-net with skip connection [6]. RED-CNN combines autoencoder, deconvolution network, and shortcut connections into its network structure, and it performed well in denoising low-dose CT images. RED-CNN used image patches extracted from the CT image (size 55 × 55 pixels) for training [7]. Nakai et al. developed deeper U-net for denoising sparse-sampling chest CT images and showed that deeper U-net was superior to conventional U-net with skip connection [6]. Contrary to RED-CNN, deeper U-net made it possible to use entire CT images (size 512 × 512 pixels) as training data. In the current study, the usefulness of deeper U-net was evaluated and compared to RED-CNN.
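To make the difference in training input concrete, the sketch below cuts 55 × 55 patches from a 512 × 512 image and reports how much of the image a single patch covers. The regular grid, the stride of 55 pixels, and the helper name `extract_patches` are assumptions for illustration; the text does not state how patches were sampled for RED-CNN.

```python
import numpy as np


def extract_patches(image, patch=55, stride=55):
    """Cut square patches from a 2D image on a regular grid."""
    rows = range(0, image.shape[0] - patch + 1, stride)
    cols = range(0, image.shape[1] - patch + 1, stride)
    return np.stack([image[r:r + patch, c:c + patch] for r in rows for c in cols])


image = np.zeros((512, 512), dtype=np.float32)  # placeholder CT slice
patches = extract_patches(image)
coverage = patches.shape[1] * patches.shape[2] / image.size
print(patches.shape, f"{coverage:.2%}")
```

Under these assumptions each patch covers only about 1% of the slice, so a patch-based model sees little of the surrounding anatomy at once, whereas a whole-image model receives the full 512 × 512 context.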

We implemented deeper U-net using Keras (version 2.2.2, https://keras.io/) with a TensorFlow (version 1.10.1, https://www.tensorflow.org/) backend. The major differences in network structure between our deeper U-net and Nakai’s deeper U-net were as follows: (i) the number of maxpooling and upsampling operations was 9; (ii) the number of feature maps in the first convolution layer of our U-net was 104. After each maxpooling layer, the number of feature maps in the convolution layer was doubled; however, once the number of feature maps reached 832, it was not increased further, even after a maxpooling layer. The changes in the network structure of our deeper U-net, including (i) and (ii), are shown in more detail in Appendix A. To train deeper U-net, pairs of original CT images and sparse-sampling CT images were prepared. The mean squared error (MSE) between the original and denoised CT images was used as the loss function of deeper U-net. Adam was used as the optimizer, with a learning rate of 0.0001. The number of training epochs was 100. Training deeper U-net required 4000 s per epoch.
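The feature-map rule and the training budget described above can be worked through numerically. The sketch below is not the network definition (that is given in Appendix A); it only tabulates the encoder schedule under two assumptions that the text does not state explicitly: each maxpooling halves the image side (2 × 2 pooling), and all 9 poolings are applied in sequence to a 512 × 512 input. The function name `encoder_schedule` is hypothetical.

```python
def encoder_schedule(input_size=512, first_maps=104, max_maps=832, n_pool=9):
    """Return (spatial size, feature maps) at each encoder level."""
    levels = [(input_size, first_maps)]
    size, maps = input_size, first_maps
    for _ in range(n_pool):
        size //= 2                      # 2x2 max pooling halves each side (assumed)
        maps = min(maps * 2, max_maps)  # double until the cap of 832 is reached
        levels.append((size, maps))
    return levels


for size, maps in encoder_schedule():
    print(f"{size:>3} x {size:<3} -> {maps} feature maps")

total_training_hours = 100 * 4000 / 3600  # 100 epochs at 4000 s per epoch
print(f"about {total_training_hours:.0f} h of training")
```

Under these assumptions the cap of 832 feature maps is reached after the third pooling, the deepest level operates on a 1 × 1 map, and the full 100-epoch run takes roughly 111 hours on the stated GPU.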

RED-CNN was trained using its PyTorch implementation (https://github.com/SSinyu/RED_CNN). RED-CNN was trained on an image patch size of 55 × 55 pixels. Network-related parameters of RED-CNN were retained as described previously [7].

2.4. Quantitative Image Analysis

To evaluate the denoising performance of the deep learning models, we used two quantitative measures, PSNR and SSIM, on the 4667 CT images from the 50 test cases [23]. These parameters are frequently used as standard objective distortion measures and for quantitative assessment of reconstructed images [10]. PSNR is defined as

$$\mathrm{PSNR} = 10 \log_{10}\left(\frac{\mathrm{MAX}_I^2}{\mathrm{MSE}}\right)$$

where MSE is calculated between the denoised and original CT images, and $\mathrm{MAX}_I$ is the maximum value of the original CT image. SSIM is a metric intended to reflect human visual perception better than PSNR does. It is defined as

$$\mathrm{SSIM}(x, y) = \frac{(2 u_x u_y + c_1)(2 s_{xy} + c_2)}{(u_x^2 + u_y^2 + c_1)(s_x^2 + s_y^2 + c_2)}$$

where $x$ and $y$ are the denoised and original CT images, respectively; $u_x$ and $u_y$ are the means of $x$ and $y$, respectively; $s_x^2$ and $s_y^2$ are the variances of $x$ and $y$, respectively; $s_{xy}$ is the covariance of $x$ and $y$; and $c_1$ and $c_2$ are determined by the dynamic range of the pixel values to stabilize the division with a weak denominator. Scikit-image (version 0.13.0, https://scikit-image.org/) was used to calculate these two quantitative measures.
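For readers who want to see the two measures spelled out, the following NumPy sketch computes PSNR and a simplified SSIM. It is not the scikit-image implementation used in the study: the SSIM here is computed once from whole-image statistics rather than averaged over local windows, and the constants `k1 = 0.01` and `k2 = 0.03` are the commonly used defaults, assumed here because the text does not give them. The function names and the random test images are hypothetical.

```python
import numpy as np


def psnr(original, denoised):
    """PSNR in dB, with MAX_I taken as the maximum of the original image."""
    mse = np.mean((original - denoised) ** 2)
    return 10.0 * np.log10(original.max() ** 2 / mse)


def global_ssim(x, y, data_range, k1=0.01, k2=0.03):
    """SSIM from whole-image statistics (simplified; no sliding window)."""
    c1, c2 = (k1 * data_range) ** 2, (k2 * data_range) ** 2
    ux, uy = x.mean(), y.mean()
    sx2, sy2 = x.var(), y.var()
    sxy = np.mean((x - ux) * (y - uy))
    return ((2 * ux * uy + c1) * (2 * sxy + c2)) / (
        (ux ** 2 + uy ** 2 + c1) * (sx2 + sy2 + c2)
    )


rng = np.random.default_rng(0)
original = rng.random((64, 64))  # placeholder image with values in [0, 1)
noisy = original + rng.normal(0.0, 0.05, original.shape)

print(f"PSNR: {psnr(original, noisy):.1f} dB")
print(f"SSIM: {global_ssim(noisy, original, data_range=1.0):.3f}")
```

Note that `psnr` is undefined for identical images (the MSE is zero), while `global_ssim` returns 1 in that case. Values from this global variant will generally differ from windowed SSIM values such as those reported in the Results.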

2.5. Qualitative Image Analysis

On the denoised sparse-sampling CT of the 50 test cases, the normal and abnormal lesions were visually evaluated. Four radiologists participated in the qualitative evaluation: two performed the visual assessments as readers, and the other two defined and extracted the lesions to be assessed. The two groups were independent of each other.

As readers, two board-certified radiologists (with 17 and 10 years of experience, respectively) independently evaluated the denoised CT images while referring to the original images on 3D Slicer (version 4.10.2, https://www.slicer.org/) [24]. For all visual evaluations described in the following section, we used a five-point scale as follows: (1) Unacceptable, (2) Poor, (3) Moderate, (4) Good, and (5) Excellent. The definition of each score is shown in Table 1, Table 2 and Table 3, and the detailed procedure of the qualitative evaluation is described in Appendix B.

Image quality was evaluated for the entire CT image and for the normal local lesions. For the entire CT image, (A) overall image quality and (B) noise and artifacts were evaluated. The overall image quality represented a comprehensive evaluation, including noise, artifacts, and visibility of anatomical structures.

As an evaluation of the normal local lesions, the visibility of the iliac artery (the common iliac artery, internal iliac artery, and external iliac artery) was evaluated. A score was given on whether or not the diameter could be reliably measured at each of the three points of the common iliac artery, internal iliac artery, and external iliac artery.

Peritoneal dissemination, liver metastasis, and lymph node metastasis were visually evaluated as abnormal lesions by the two radiologists. The abnormal lesions were determined by the consensus of two other independent board-certified radiologists (6 and 14 years of experience, respectively) on the original CT image based on the following criteria. Peritoneal dissemination was defined, as previously established, as either (1) an isolated mass or (2) subtle soft tissue infiltration and reticulonodular lesions [25]. Lymph node metastasis was defined as short axis ≥ 10 mm. With reference to RECIST v1.1, we classified peritoneal dissemination as non-measurable or measurable (long axis ≥ 10 mm) and defined liver metastasis as a lesion with long axis ≥ 10 mm [26]. The measurable lesions of peritoneal dissemination were further subdivided into long axis ≤ 20 mm and > 20 mm because the FIGO 2014 staging differs depending on the size [27].

2.6. Statistical Analysis

For the quantitative assessment of the denoised images, the mean scores of PSNR and SSIM of deeper U-net and RED-CNN were calculated. All the qualitative image quality scores were compared between deeper U-net and RED-CNN using the Wilcoxon signed-rank test. For each abnormal lesion, a 5-point score ≥ 3 was regarded as true positive (TP), and a 5-point score < 3 as false negative (FN). The detectability of abnormal lesions was calculated as sensitivity, that is, TP / (TP + FN). The detectability of abnormal lesions was compared between deeper U-net and RED-CNN using the McNemar test. Statistical analyses were performed using JMP® (version 14.2, SAS Institute Inc., Cary, NC, USA). All tests were two sided with a significance level of 0.05.
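The scoring rule and the paired comparison can be sketched in plain Python. The lesion scores below are hypothetical, and the exact (binomial) form of the McNemar test is an assumption chosen for simplicity; the variant computed by JMP in the study may differ. The function names `sensitivity` and `mcnemar_exact` are introduced here for illustration.

```python
from math import comb


def sensitivity(scores, threshold=3):
    """Fraction of lesions scored >= threshold (TP) among all lesions (TP + FN)."""
    tp = sum(score >= threshold for score in scores)
    return tp / len(scores)


def mcnemar_exact(b, c):
    """Two-sided exact McNemar p-value from the two discordant counts."""
    n = b + c
    if n == 0:
        return 1.0
    tail = sum(comb(n, k) for k in range(min(b, c) + 1)) / 2 ** n
    return min(1.0, 2.0 * tail)


# Hypothetical scores for the same six lesions under two models.
scores_a = [5, 4, 4, 3, 5, 2]
scores_b = [3, 2, 2, 1, 4, 2]
detected_a = [s >= 3 for s in scores_a]
detected_b = [s >= 3 for s in scores_b]
b = sum(da and not db for da, db in zip(detected_a, detected_b))  # only A detects
c = sum(db and not da for da, db in zip(detected_a, detected_b))  # only B detects

print(sensitivity(scores_a), sensitivity(scores_b), mcnemar_exact(b, c))
```

The McNemar test uses only the discordant lesions, those detected with one model but not the other, which is why it suits a design in which both models are scored on the same set of lesions.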

3. Results

A summary of patient demographics of the 141 cases is provided in Table 4. The location of ovarian cancer and clinical stage were available from TCIA in 140 cases. Age was obtained from DICOM data of CT images. For the 50 test cases, 124 abnormal lesions were determined, including 6 liver metastases, 25 lymph node metastases, and 93 peritoneal disseminations. For the peritoneal disseminations, the numbers of non-measurable lesions, measurable lesions with long axis ≤ 20 mm, and measurable lesions with long axis > 20 mm were 53, 28, and 12, respectively.

For normal local lesions and abnormal lesions, representative images of the original CT and the denoised CT obtained using deeper U-net and RED-CNN are shown in Figure 2. Additionally, representative images of the original CT, the sparse-sampling CT before denoising, and the denoised CT obtained using deeper U-net and RED-CNN are shown in Figure 3.

3.1. Quantitative Image Analysis

We evaluated PSNR and SSIM on the 4667 CT images from the 50 test cases. The PSNR and SSIM were 29.2 ± 1.49 and 0.75 ± 0.04 for the sparse-sampling images before denoising, 48.5 ± 2.69 and 0.99 ± 0.01 for deeper U-net, and 37.3 ± 1.97 and 0.93 ± 0.02 for RED-CNN.
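Because PSNR is logarithmic, a difference in PSNR corresponds to a ratio of MSE values. The sketch below converts the reported mean PSNR values into approximate MSE reduction factors. It assumes that PSNR is expressed in dB and that the maximum intensity is the same before and after denoising for a given image; because it is applied to mean values, the result is only a rough indication and not a figure reported in the study. The function name is hypothetical.

```python
def mse_ratio_from_psnr_gain(gain_db):
    """MSE reduction factor implied by a PSNR gain in dB (same MAX_I assumed)."""
    return 10 ** (gain_db / 10)


baseline = 29.2  # mean PSNR of the sparse-sampling images before denoising
for name, psnr_db in [("deeper U-net", 48.5), ("RED-CNN", 37.3)]:
    gain = psnr_db - baseline
    ratio = mse_ratio_from_psnr_gain(gain)
    print(f"{name}: +{gain:.1f} dB, MSE reduced about {ratio:.0f}-fold")
```

Read this way, the gap between the two models is larger than the raw PSNR values may suggest, since every additional 10 dB corresponds to a further ten-fold reduction in MSE.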

3.2. Qualitative Image Analysis

The results of the visual evaluation are shown in Figure 4 and Figure 5 and in Table 5 and Table 6. For all items of the visual evaluation, deeper U-net scored better than RED-CNN for both readers, as shown in Table 7.

Streak artifacts tended to be stronger on images at the upper abdomen level, especially where the lung and abdominal organs were visualized on the same image.

The detectability of abnormal lesions with deeper U-net was significantly better than that with RED-CNN: 95.2% (118/124) vs. 62.9% (78/124) (p < 0.0001) for reader 1 and 97.6% (121/124) vs. 36.3% (45/124) (p < 0.0001) for reader 2. The numbers of FN with deeper U-net were six and three for readers 1 and 2, respectively. All these abnormal lesions were non-measurable peritoneal disseminations, which were identified as slight, subtle soft-tissue infiltration and reticulonodular lesions on the original CT image. Representative images of an FN case are shown in Figure 6.

4. Discussion

In the current study, we compared the quantitative and qualitative image quality of sparse-sampling CT denoised with deeper U-net and RED-CNN. RED-CNN was compared with our deeper U-net because of its similar network structure [7]. In the quantitative analysis, the mean PSNR and SSIM with deeper U-net were better than those with RED-CNN. For all of the visual evaluation items, the scores of CT image quality with deeper U-net were significantly better than those with RED-CNN. In addition, the detectability of ovarian cancer metastasis was more than 95% with deeper U-net.

A few studies on deep learning-based reconstruction have shown that it improved image quality and reduced noise and artifacts better than hybrid IR and MBIR [8,16,28]. Nakamura et al. reported that deep learning reconstruction could reduce noise and artifacts more than hybrid IR could and that it may improve the detection of low-contrast lesions when evaluating hypovascular hepatic metastases [16]. While their study did not evaluate low-dose CT, the deep learning model is also considered an effective method with the potential to use lower-dose CT techniques such as sparse sampling with clinically acceptable results [5]. Our results showed that denoising with deeper U-net could be used to detect ovarian cancer metastasis.

To the best of our knowledge, this was the first study that evaluated the detectability of cancer metastasis, including peritoneal dissemination, liver metastasis, and lymph node metastasis, on deep learning-based reconstructed CT images. The usefulness of sparse-sampling CT with deep learning has been previously reported [6,7,9,10], but image evaluation was limited to quantitative measures in most of these studies. While Nakai et al. reported on quantitative and qualitative assessments of the efficacy of deep learning on chest CT images [6], our study evaluated the usefulness of sparse-sampling CT denoised with deep learning techniques from the clinical viewpoint. Our results show that deeper U-net has an excellent ability to improve image quality and the detectability of metastasis, and it could prove effective in clinical practice.

The performance difference between deeper U-net and RED-CNN was significant when assessing sparse-sampling CT images. Strong streak artifacts around bony structures affect the image quality of sparse-sampling CT [29]. Therefore, to improve the image quality of sparse-sampling CT, an ideal deep learning model should reduce streak artifacts associated with anatomical structures. Because RED-CNN used image patches (size 55 × 55 pixels) for its training, the algorithm had difficulty discerning between streak artifacts and anatomical structures. As a result, the reduction of streak artifacts related to anatomical structures may be limited in RED-CNN. In contrast, since deeper U-net used the entire CT image (size 512 × 512 pixels) for training, it could be optimized to reduce streak artifacts related to anatomical structures. This difference between the two deep learning models may explain the performance differences shown in the current study.

Since score 5 was defined as image quality and visualization equivalent to the original CT (Table 1, Table 2 and Table 3), the scores indicate that the denoised CT images of deeper U-net did not reach the same image quality as the original CT images. However, the visual scores and detectability of deeper U-net were sufficiently high.

Although patients with peritoneal dissemination are diagnosed as advanced stage, complete debulking surgery can be expected to improve the prognosis in epithelial malignant ovarian tumor [30]. In addition, there are some histological types with a favorable prognosis due to successful chemotherapy, such as yolk sac tumor [31]. Thus, the reduction of CT radiation exposure is essential for patients with ovarian cancer. With our proposed method, theoretically, the CT radiation exposure can be reduced to one-tenth of that of the original CT. The reduction of radiation exposure may reduce the incidence of radiation-induced cancer. Furthermore, while we evaluated only the detection of metastasis of malignant ovarian tumors in the current study, we speculate that the proposed method may be applied to other diseases.

While our results show that deeper U-net proved useful in detecting cancer metastasis, there were several drawbacks in the model. First, fine anatomical structures were obscured due to excessive denoising. This effect might be minimized by blending FBP images with deep learning-based reconstructed images, as is done in hybrid IR and MBIR, and by adjusting the radiation exposure (rate of sparse sampling) and the blending rate. Second, the strong streak artifacts around the prosthesis and the upper abdomen compromised diagnostic ability near these locations. Furthermore, streak artifacts tended to be stronger on images at the upper abdomen level, especially where the lung and abdominal organs were visualized on the same image. This effect may have resulted from the relatively small number of training images that included both lung and abdominal organs compared to images that included only abdominal organs. Since ovarian cancer primarily metastasizes to the peritoneal liver surface and the liver, improving the image quality in these areas is considered a future research area. Increasing the number of training images with cross-sections displaying both the lung and abdominal organs may help improve image quality and reduce streak artifacts in deep learning models, including deeper U-net.
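The blending idea mentioned as a possible remedy for excessive denoising can be illustrated with a simple weighted average. This is only one conceivable form of blending and was not implemented or evaluated in the study: the linear weighting, the function name `blend`, and the placeholder pixel values are all assumptions.

```python
import numpy as np


def blend(fbp_image, denoised_image, weight):
    """Weighted average: weight=0 gives pure FBP, weight=1 the pure denoised image."""
    if not 0.0 <= weight <= 1.0:
        raise ValueError("weight must be in [0, 1]")
    return (1.0 - weight) * fbp_image + weight * denoised_image


# Placeholder arrays standing in for an FBP slice and its denoised version.
fbp = np.full((4, 4), 100.0)
denoised = np.full((4, 4), 60.0)
print(blend(fbp, denoised, 0.75)[0, 0])
```

A lower weight would retain more of the fine structure (and noise) of the FBP image, while a higher weight favors the smoother denoised image; choosing the weight would require a reader study of its own.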

Our study had several limitations. First, we used images from only one public database. The application of our deep learning model should be further evaluated in other databases. Second, sparse-sampling images cannot be obtained from real CT scanners at the current time. Our simulated subsampled images may differ from the images on real scanners. In the future, we need to evaluate the performance of our deeper U-net using real CT acquisitions. Third, images obtained with a GAN-based deep learning model tend to be more “natural” than those obtained with a conventional deep learning model. However, the noise reduction of GAN is weaker than that of a conventional deep learning model [17]. There was a concern that the radiologist’s ability to detect metastasis might decline if the noise reduction was insufficient. Therefore, GAN was not used in the current study. Finally, because of our study design, we did not evaluate false positives, true negatives, and specificity in the current study. Therefore, it is necessary to conduct radiologists’ observer studies in which false positives and true negatives are evaluated.