A Cooperative Human-Robot Interface for Constrained Manipulation in Robot-Assisted Endonasal Surgery

Abstract

1. Introduction

Related Work

2. Materials and Methods

2.1. Endonasal Surgery Workspace Requirements

2.2. Robotic Surgical System

2.3. Robotic Environment Description

2.4. Cooperative Human-Robot Interface

- Workspace constraints

- Multiple levels of assistance

- Intuitive and ergonomic operation

- Safe and stable operation

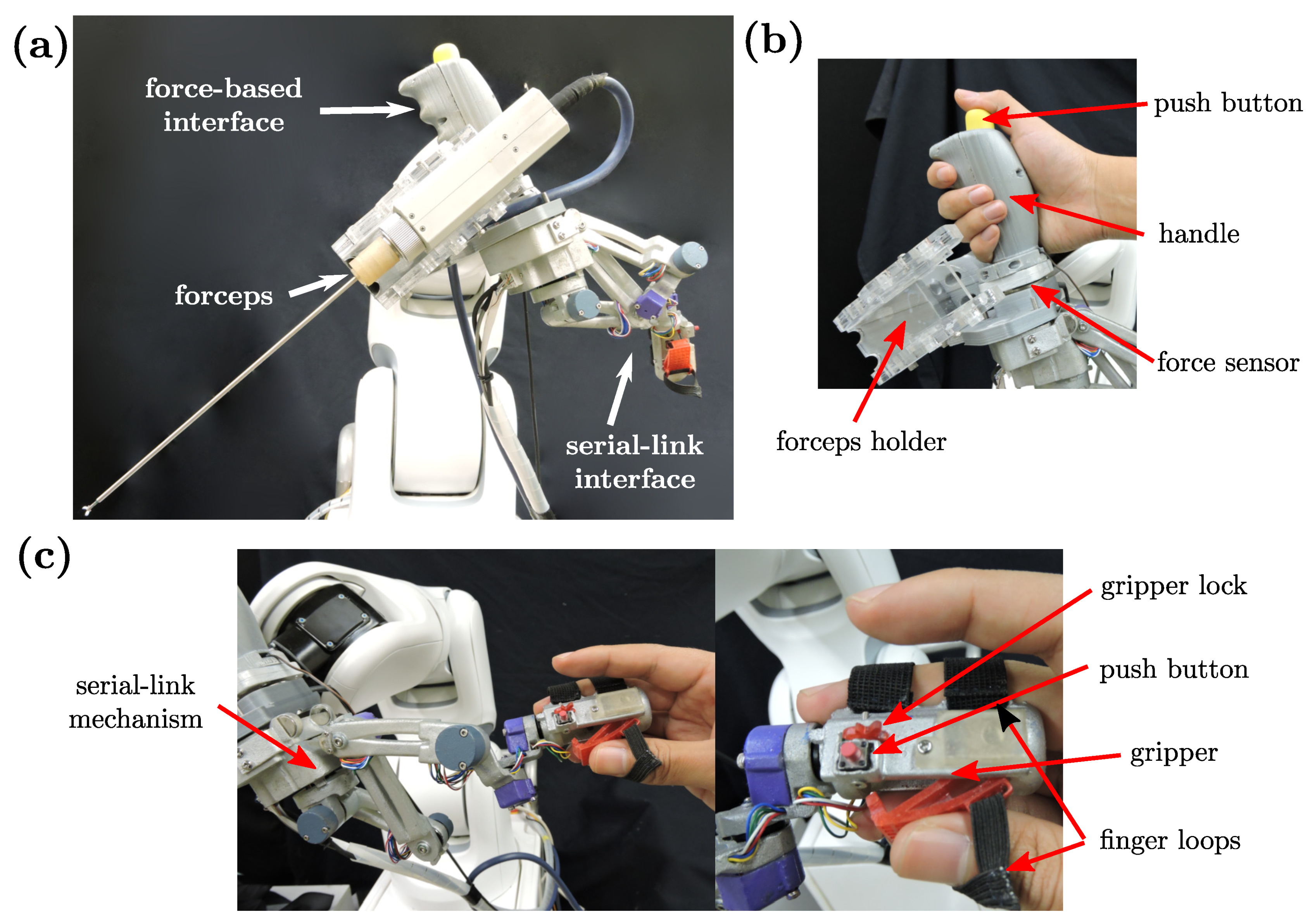

Mechanical Interface Design

2.5. Robot Motion Control

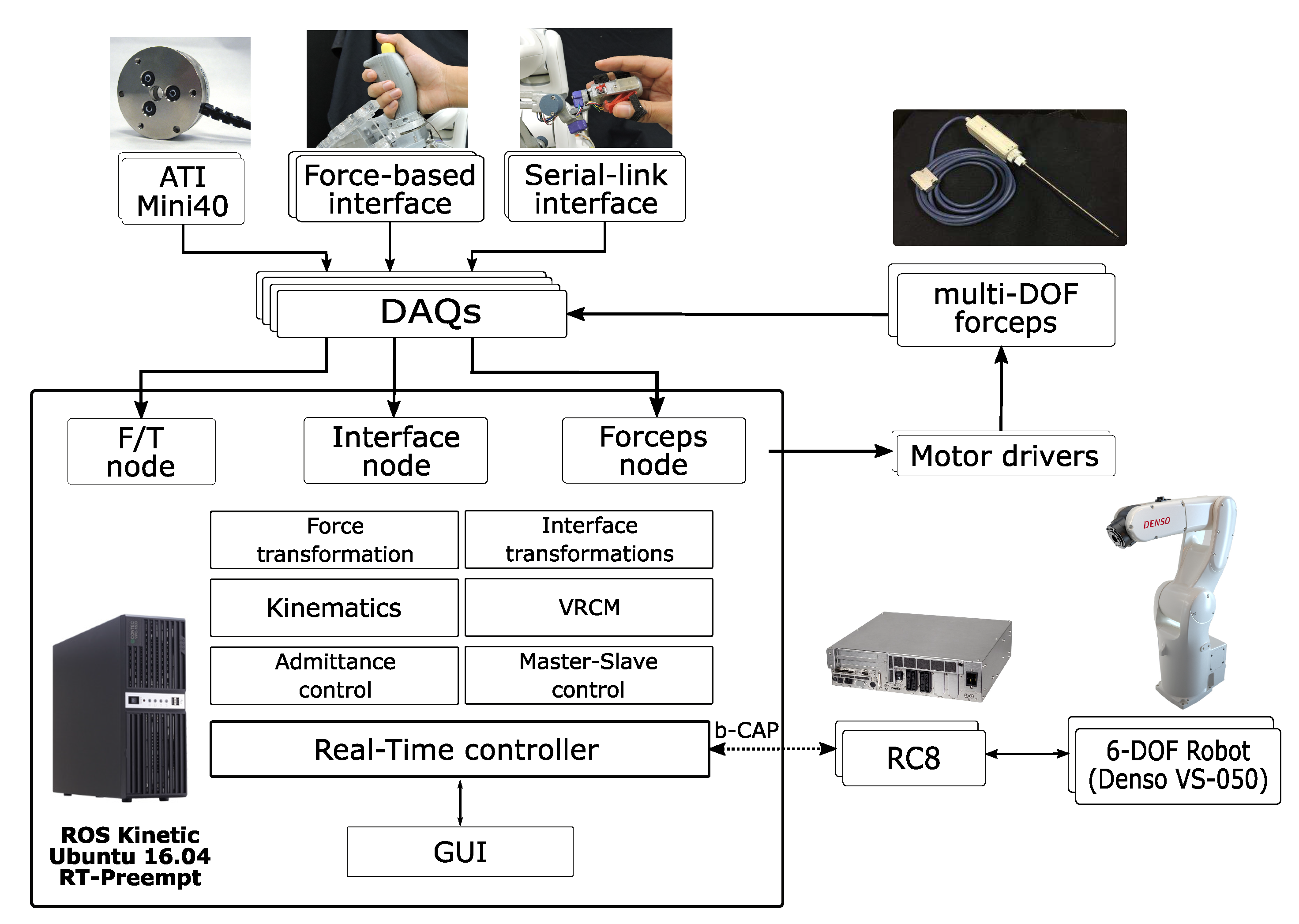

2.5.1. Software Architecture

2.5.2. Positioning

2.5.3. Insertion and Extraction

Variable Admittance Parameters

Virtual Remote Center of Motion

2.5.4. Manipulation

Workspace Virtual Walls

Virtual Remote Center of Motion (VRCM)

Online Trajectory Generation

3. Experiments and Discussion

3.1. Experiment 1: Reachability

3.2. Experiment 2: Pick-and-Place and Experiment 3: Block-in-Hole

- Task completion time (s): starting from the first contact with the tube/block until the release of the last tube/block.

- Motion smoothness: we used the root mean squared jerk (RMSJ) [33] as a metric, defined by:
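The defining equation does not appear in this text. A commonly used form of the metric, assumed here for illustration, is RMSJ = sqrt((1 / (t2 - t1)) ∫ |j(t)|² dt), where j(t) is the third time derivative of the tool-tip position and [t1, t2] is the duration of the movement. The sketch below estimates this quantity from uniformly sampled positions. The function name `rms_jerk`, the finite-difference scheme, and the assumption of a fixed sampling period `dt` are illustrative choices, not details taken from the paper.

```python
import math


def rms_jerk(positions, dt):
    """Estimate the root mean squared jerk of a sampled trajectory.

    positions: sequence of equal-length coordinate tuples, sampled every dt seconds.
    dt: sampling period in seconds (assumed constant).
    """
    if len(positions) < 4:
        raise ValueError("need at least 4 samples to estimate jerk")
    total = 0.0
    count = 0
    for k in range(len(positions) - 3):
        p0, p1, p2, p3 = positions[k:k + 4]
        squared_norm = 0.0
        for a, b, c, d in zip(p0, p1, p2, p3):
            # Third-order finite difference approximates the third derivative.
            jerk = (d - 3.0 * c + 3.0 * b - a) / dt ** 3
            squared_norm += jerk * jerk
        total += squared_norm
        count += 1
    # The mean of the squared jerk magnitude stands in for the
    # time-normalized integral; the square root gives the RMS value.
    return math.sqrt(total / count)


# Example: x(t) = t^3 has a constant jerk of 6, so the estimate is close to 6.
samples = [((k * 0.01) ** 3, 0.0, 0.0) for k in range(100)]
cubic_rmsj = rms_jerk(samples, 0.01)
```

Lower values indicate smoother motion. Because triple differentiation amplifies measurement noise, recorded trajectories would typically be low-pass filtered before applying an estimate like this one.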

3.3. Experiment 4: Needle Stitching Task

- Task completion time (s): starting from removing the needle from its initial position until the complete needle extraction on the left side.

- Interaction force (N): recorded with a force sensor placed behind the tissue.

4. Conclusions

Author Contributions

Funding

Acknowledgments

Conflicts of Interest

References

- Cutler, A.R.; Barkhoudarian, G.; Griffiths, C.F.; Kelly, D.F. Transsphenoidal endoscopic skull base surgery: State of the art and future perspective. Innov. Neurosurg. 2013, 1, 15–35.

- Kenan, K.; İhsan, A.; Dilek, O.; Burak, C.; Gurkan, K.; Savas, C. The learning curve in endoscopic pituitary surgery and our experience. Neurosurg. Rev. 2006, 29, 298–305.

- Cimon, K.; Pautler, S. Robot-Assisted Surgery Compared with Open Surgery and Laparoscopic Surgery: Clinical Effectiveness and Economic Analyses. CADTH Technol. Rep. 2011.

- Schneider, J.S.; Burgner, J.; Webster, R.J.; Russell, P.T. Robotic surgery for the sinuses and skull base: What are the possibilities and what are the obstacles? Curr. Opin. Otolaryngol. Head Neck Surg. 2013, 21, 11–16.

- Schleer, P.; Drobinsky, S.; de la Fuente, M.; Radermacher, K. Toward versatile cooperative surgical robotics: A review and future challenges. Int. J. Comput. Assist. Radiol. Surg. 2019, 14, 1673–1686.

- Guthart, G.S.; Salisbury, J.K. The Intuitive telesurgery system: Overview and application. In Proceedings of the 2000 IEEE International Conference on Robotics and Automation, San Francisco, CA, USA, 22–28 April 2000; pp. 618–621.

- Massie, T.H.; Salisbury, J.K. The PHANTOM Haptic Interface: A Device for Probing Virtual Objects. In Proceedings of the ASME Winter Annual Meeting, Symposium on Haptic Interfaces for Virtual Environment and Teleoperator Systems, Chicago, IL, USA, November 1994; pp. 295–300.

- Tobergte, A.; Helmer, P.; Hagn, U.; Rouiller, P.; Thielmann, S.; Grange, S.; Albu-Schäffer, A.; Conti, F.; Hirzinger, G. The sigma.7 haptic interface for MiroSurge: A new bi-manual surgical console. In Proceedings of the 2011 IEEE/RSJ International Conference on Intelligent Robots and Systems, San Francisco, CA, USA, 25–30 September 2011; pp. 3023–3030.

- Lee, J.Y.; O’Malley, B.W., Jr.; Newman, J.G.; Weinstein, G.S.; Lega, B.; Diaz, J.; Grady, M.S. Transoral robotic surgery of the skull base: A cadaver and feasibility study. ORL 2010, 72, 181–187.

- Hanna, E.Y.; Holsinger, C.; DeMonte, F.; Kupferman, M. Robotic Endoscopic Surgery of the Skull Base. Arch. Otolaryngol. Head Neck Surg. 2007, 133, 1209.

- Burgner, J.; Rucker, D.C.; Gilbert, H.B.; Swaney, P.J.; Russell, P.T.; Weaver, K.D.; Webster, R.J. A Telerobotic System for Transnasal Surgery. IEEE/ASME Trans. Mechatron. 2014, 19, 996–1006.

- Piccigallo, M.; Focacci, F.; Tonet, O.; Megali, G.; Quaglia, C.; Dario, P. Hand-held robotic instrument for dextrous laparoscopic interventions. Int. J. Med. Robot. Comput. Assist. Surg. 2008, 4, 331–338.

- Zahraee, A.H.; Paik, J.K.; Szewczyk, J.; Morel, G. Toward the Development of a Hand-Held Surgical Robot for Laparoscopy. IEEE/ASME Trans. Mechatron. 2010, 15, 853–861.

- MacLachlan, R.A.; Becker, B.C.; Tabarés, J.C.; Podnar, G.W.; Lobes, L.A., Jr.; Riviere, C.N. Micron: An Actively Stabilized Handheld Tool for Microsurgery. IEEE Trans. Robot. 2012, 28, 195–212.

- Song, C.; Park, D.Y.; Gehlbach, P.L.; Park, S.J.; Kang, J.U. Fiber-optic OCT sensor guided “SMART” micro-forceps for microsurgery. Biomed. Opt. Express 2013, 4, 1045.

- Payne, C.J.; Yang, G.Z. Hand-Held Medical Robots. Ann. Biomed. Eng. 2014, 42, 1594–1605.

- Jakopec, M.; Rodriguez y Baena, F.; Harris, S.J.; Gomes, P.; Cobb, J.; Davies, B.L. The Hands-on Orthopaedic Robot “Acrobot”: Early Clinical Trials of Total Knee Replacement Surgery. IEEE Trans. Robot. Autom. 2003, 19, 902–911.

- Hagag, B.; Abovitz, R.; Kang, H.; Schmitz, B.; Conditt, M. RIO: Robotic-Arm Interactive Orthopedic System MAKOplasty: User Interactive Haptic Orthopedic Robotics. In Surgical Robotics; Springer: Boston, MA, USA, 2010; pp. 219–246.

- Taylor, R.; Jensen, P.; Whitcomb, L.; Barnes, A.; Kumar, R.; Stoianovici, D.; Gupta, P.; Wang, Z.; Dejuan, E.; Kavoussi, L. A Steady-Hand Robotic System for Microsurgical Augmentation. Int. J. Robot. Res. 1999, 18, 1201–1210.

- Matinfar, M.; Baird, C.; Batouli, A.; Clatterbuck, R.; Kazanzides, P. Robot-assisted skull base surgery. In Proceedings of the 2007 IEEE/RSJ International Conference on Intelligent Robots and Systems, San Diego, CA, USA, 29 October–2 November 2007; pp. 865–870.

- Xia, T.; Baird, C.; Jallo, G.; Hayes, K.; Nakajima, N.; Hata, N.; Kazanzides, P. An integrated system for planning, navigation and robotic assistance for skull base surgery. Int. J. Med. Robot. Comput. Assist. Surg. 2008, 4, 321–330.

- He, Y.; Hu, Y.; Zhang, P.; Zhao, B.; Qi, X.; Zhang, J. Human-Robot Cooperative Control Based on Virtual Fixture in Robot-Assisted Endoscopic Sinus Surgery. Appl. Sci. 2019, 9, 1659.

- García, Á.M.; Rivas, I.; Turiel, J.P.; Muñoz, V.; Marinero, J.C.F.; de la Fuente, E.; Sabater, J.M. Integration of a Surgical Robotic Co-worker in an Endoscopic Neurosurgical Assistance Platform. In Robot 2019: Fourth Iberian Robotics Conference; Springer: Cham, Switzerland, 2020; pp. 453–464.

- Travaglini, T.A.; Swaney, P.J.; Weaver, K.D.; Webster, R.J., III. Initial Experiments with the Leap Motion as a User Interface in Robotic Endonasal Surgery. In Robotics and Mechatronics. Mechanisms and Machine Science; Springer: Cham, Switzerland, 2016; Volume 37, pp. 171–179.

- Nathan, C.A.; Chakradeo, V.; Malhotra, K.; D’Agostino, H.; Patwardhan, R. The Voice-Controlled Robotic Assist Scope Holder AESOP for the Endoscopic Approach to the Sella. Skull Base 2006, 16, 123–131.

- Marinho, M.M.; Nakazawa, A.; Nakanishi, J.; Ueyama, T.; Hasegawa, Y.; Arata, J.; Mitsuishi, M.; Harada, K. Conceptual design of a versatile robot for minimally invasive transnasal microsurgery. In Proceedings of the 2016 International Symposium on Micro-NanoMechatronics and Human Science, Nagoya, Japan, 28–30 November 2016; pp. 65–66.

- Arata, J.; Fujisawa, Y.; Nakadate, R.; Kiguchi, K.; Harada, K.; Mitsuishi, M.; Hashizume, M. Compliant four degree-of-freedom manipulator with locally deformable elastic elements for minimally invasive surgery. In Proceedings of the 2019 IEEE International Conference on Robotics and Automation, Montreal, QC, Canada, 20–24 May 2019; pp. 2663–2669.

- Keemink, A.Q.; van der Kooij, H.; Stienen, A.H. Admittance control for physical human–robot interaction. Int. J. Robot. Res. 2018, 37, 1421–1444.

- Bettini, A.; Marayong, P.; Lang, S.; Okamura, A.M.; Hager, G.D. Vision-Assisted Control for Manipulation Using Virtual Fixtures. IEEE Trans. Robot. 2004, 20, 953–966.

- Marinho, M.M.; Bernardes, M.C.; Bo, A.P.L. Using General-Purpose Serial-Link Manipulators for Laparoscopic Surgery with Moving Remote Center of Motion. J. Med. Robot. Res. 2016, 1, 1650007.

- Kröger, T.; Wahl, F.M. Online Trajectory Generation: Basic Concepts for Instantaneous Reactions to Unforeseen Events. IEEE Trans. Robot. 2010, 26, 94–111.

- Beeson, P.; Ames, B. TRAC-IK: An open-source library for improved solving of generic inverse kinematics. In Proceedings of the 2015 IEEE-RAS 15th International Conference on Humanoid Robots, Seoul, Korea, 3–5 November 2015; pp. 928–935.

- Young, R.P.; Marteniuk, R.G. Acquisition of a multi-articular kicking task: Jerk analysis demonstrates movements do not become smoother with learning. Hum. Mov. Sci. 1997, 16, 677–701.

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Colan, J.; Nakanishi, J.; Aoyama, T.; Hasegawa, Y. A Cooperative Human-Robot Interface for Constrained Manipulation in Robot-Assisted Endonasal Surgery. Appl. Sci. 2020, 10, 4809. https://doi.org/10.3390/app10144809
