Autonomous Vehicles: Vehicle Parameter Estimation Using Variational Bayes and Kinematics

Abstract

1. Introduction

2. State of the Art

2.1. Bayesian State Estimation Approaches

2.2. Advances in Gaussian-Process Models

3. SGP Motion Model Implementation

- If the derived kinematic model agrees with real measurements, the machine learning model can represent the corresponding physical behavior.

- However, if the kinematic model is not grounded in data acquired from real-world measurements, the machine learning model might pick up inconsistencies in the sample data that prevent it from making appropriate decisions.

3.1. SGP Model Training

3.2. Vehicle Parameter Estimation

3.3. Comparison of the Presented Approach with Blackbox and Whitebox Models

4. Results

4.1. Ground Truth Data Generation

4.2. Motion Model and Vehicle Parameter Estimation

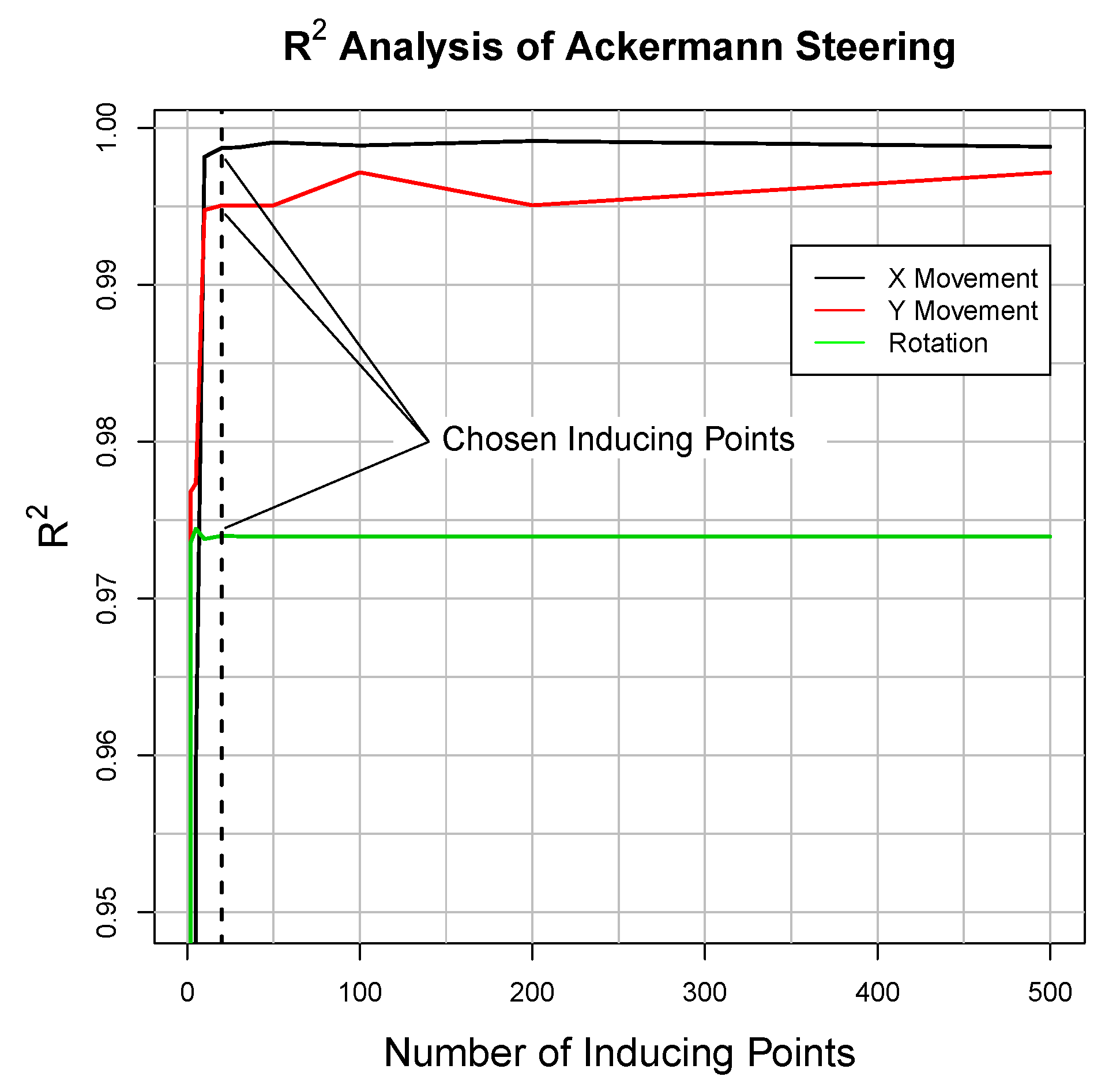

- Motion Model: The sGP motion model is fitted to the data. This requires optimizing the model parameters and verifying that the model represents the simulated movement.

- Kinematic Parameter Estimation: The kinematic parameters are estimated from the output of the motion model combined with simplified vehicle kinematics.
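The second step can be illustrated with a minimal sketch for a differential-drive mobile robot. It assumes the textbook kinematics v = r(ω_R + ω_L)/2 and ω = r(ω_R − ω_L)/B, which are not stated in this section, and it substitutes synthetic, noise-free velocities for the output of the sGP motion model. The function names, the symbol `r` for the wheel radius, and the reading of `B` as the wheel separation are assumptions made for illustration; the values 3.3 cm and 16 cm are borrowed from the Mobile Robot ground-truth entries in the tables.

```python
# Sketch only: least-squares estimate of wheel radius r and wheel separation B
# for a differential-drive robot. The kinematic equations are the standard
# textbook ones and are an assumption, not the paper's exact formulation.
import math


def estimate_radius(v, omega_l, omega_r):
    """Least-squares wheel radius from v = r * (omega_r + omega_l) / 2."""
    s = [(wr + wl) / 2.0 for wl, wr in zip(omega_l, omega_r)]
    return sum(vi * si for vi, si in zip(v, s)) / sum(si * si for si in s)


def estimate_separation(yaw_rate, omega_l, omega_r, r):
    """Least-squares wheel separation from yaw_rate = r * (omega_r - omega_l) / B."""
    d = [r * (wr - wl) for wl, wr in zip(omega_l, omega_r)]
    # Fit yaw_rate = (1 / B) * d, then invert the fitted slope.
    inv_b = sum(w * di for w, di in zip(yaw_rate, d)) / sum(di * di for di in d)
    return 1.0 / inv_b


# Hypothetical ground truth in metres (3.3 cm radius, 16 cm separation).
R_TRUE, B_TRUE = 0.033, 0.16

# Synthetic wheel speeds in rad/s; in practice these would be measured.
omega_l = [2.0 + math.sin(0.1 * k) for k in range(50)]
omega_r = [2.5 + math.cos(0.07 * k) for k in range(50)]

# Stand-ins for the motion model output: linear velocity and yaw rate.
v = [R_TRUE * (wr + wl) / 2.0 for wl, wr in zip(omega_l, omega_r)]
yaw_rate = [R_TRUE * (wr - wl) / B_TRUE for wl, wr in zip(omega_l, omega_r)]

r_hat = estimate_radius(v, omega_l, omega_r)
b_hat = estimate_separation(yaw_rate, omega_l, omega_r, r_hat)
print(f"r = {r_hat * 100:.3f} cm, B = {b_hat * 100:.3f} cm")
```

With noisy velocities, the same two fits would return estimates that deviate from the ground truth, which is the kind of comparison reported in the M and GT columns of the tables.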

4.3. Comparison of Whitebox and Blackbox Models

5. Conclusion and Future Work

Supplementary Materials

Author Contributions

Funding

Conflicts of Interest

Abbreviations

| Abbreviation | Meaning |
|---|---|
| CAD | Computer-aided design |
| EKF | Extended Kalman Filter |
| EKFDR | Extended Kalman Filter Dead Reckoning |
| GNSS | Global navigation satellite system |
| GP | Gaussian process |
| ICC | Instantaneous center of curvature |
| ICR | Instantaneous center of rotation |
| IMU | Inertial measurement unit |
| KF | Kalman Filter |
| LIDAR | Light detection and ranging |
| MCMC | Markov chain Monte Carlo |
| MM | Map matching |
| PF | Particle Filter |
| RMS | Root mean square |
| RTK | Real time kinematic |
| sGP | Sparse Gaussian process |
| UAV | Unmanned aerial vehicle |
| VB | Variational Bayes |


| | B | T | | | | | | |
|---|---|---|---|---|---|---|---|---|
| | M | GT | M | GT | M | GT | M | GT |
| Mobile Robot | 3.329 cm | 3.300 cm | 15.96 cm | 16 cm | - | - | - | - |
| Ackermann Steering | 30.79 cm | 31.26 cm | 1.561 m | 1.586 m | 30.797 cm | 31.265 cm | 2.757 m | 2.86 m |

| | B | T | | | | | | |
|---|---|---|---|---|---|---|---|---|
| | M | GT | M | GT | M | GT | M | GT |
| AM 1 | 26.890 cm | 26.265 cm | 1.629 m | 1.586 m | 35.428 cm | 36.270 cm | 2.522 m | 2.860 m |
| AM 2 | 29.105 cm | 30.000 cm | 1.534 m | 1.586 m | 23.142 cm | 25.000 cm | 2.437 m | 2.860 m |
| AM 3 | 29.872 cm | 30.000 cm | 1.581 m | 1.586 m | 26.890 cm | 25.000 cm | 2.443 m | 2.860 m |

| | B | T | | | | | | |
|---|---|---|---|---|---|---|---|---|
| | M | GT | M | GT | M | GT | M | GT |
| Mobile Robot | 3.683 cm | 3.300 cm | 17.866 cm | 16 cm | - | - | - | - |
| Ackermann Steering | 28.429 cm | 31.26 cm | 1.436 m | 1.586 m | 28.429 cm | 31.265 cm | 2.582 m | 2.86 m |

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Wöber, W.; Novotny, G.; Mehnen, L.; Olaverri-Monreal, C. Autonomous Vehicles: Vehicle Parameter Estimation Using Variational Bayes and Kinematics. Appl. Sci. 2020, 10, 6317. https://doi.org/10.3390/app10186317
