Exploiting Generative Adversarial Networks as an Oversampling Method for Fault Diagnosis of an Industrial Robotic Manipulator

Abstract

1. Introduction

2. Methodology

2.1. Feature Extraction

2.2. Generative Adversarial Network

A Vapnik Loss Inspired GAN

2.3. Random Forests for Fault Classification

2.4. Data Generation for Fault Classification

3. Experiment

3.1. Experimental Test-Rig

3.2. Collected Vibration Signals

4. Results and Discussion

4.1. On Different Scenarios for Dealing with the Unbalanced Data Set

4.2. On the Performance in Each Class

4.3. On the Training Set

4.3.1. Learning Curves

4.3.2. Shuffling Data

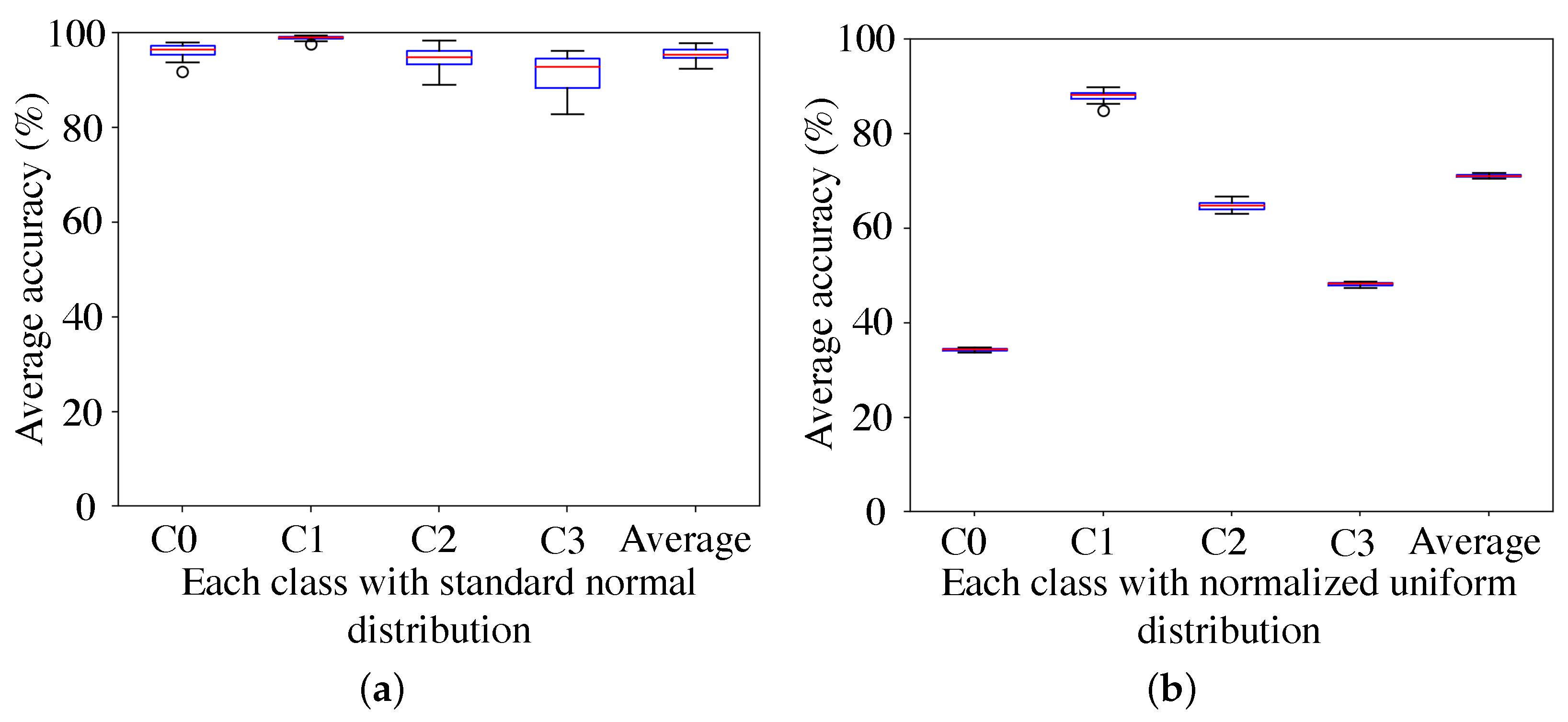

4.4. On the Distribution Used for Sampling Random Inputs

4.5. On the Initial Conditions

5. Conclusions

Author Contributions

Funding

Acknowledgments

Conflicts of Interest


| Fault Id | Part | Fault Type |
|---|---|---|
| A | None | Healthy |
| B | Sun gear 1 | Pitting |
| C | Sun gear 1 | Broken tooth |
| D | Planetary gear 1 | Cracking |

| Fault Id | Training Set | Test Set |
|---|---|---|
| A | 14,000 | 6000 |
| B | 140 | 6000 |
| C | 140 | 6000 |
| D | 140 | 6000 |
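
The training-set sizes in the table above imply a strong class imbalance. The following minimal sketch works out the arithmetic: the ratio between the healthy class and each fault class, and how many synthetic samples an oversampler would have to add per fault class. It assumes the oversampling target is full balance with class A, which the source does not state; the function names are hypothetical and used for illustration only.

```python
# Training-set sizes per fault id, copied from the table above.
train_counts = {"A": 14000, "B": 140, "C": 140, "D": 140}


def imbalance_ratio(counts):
    """Return the ratio between the largest and the smallest class size."""
    return max(counts.values()) / min(counts.values())


def samples_to_balance(counts):
    """Return how many samples each class lacks relative to the largest class.

    Assumption: the oversampling target is full balance with the majority
    class; the source does not state the target size.
    """
    target = max(counts.values())
    return {label: target - n for label, n in counts.items()}


ratio = imbalance_ratio(train_counts)
deficit = samples_to_balance(train_counts)

print(f"imbalance ratio: {ratio:.0f}:1")
for label, missing in deficit.items():
    print(f"class {label}: {missing} synthetic samples needed")
```

Under this assumption, each fault class has one training sample for every 100 healthy samples, so any oversampler (a GAN or SMOTE) would supply the large majority of the fault-class training data.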

| Pair | p-Value | Winner |
|---|---|---|
| RF-i vs. RF-b2 | 8.857 × 10 | RF-b2 |
| RF-i vs. RF-GAN | 8.857 × 10 | RF-GAN |
| RF-i vs. RF-GAN1 | 8.857 × 10 | RF-GAN1 |
| RF-i vs. RF-GAN2 | 8.857 × 10 | RF-GAN2 |
| RF-i vs. SMOTE | 8.857 × 10 | SMOTE |
| RF-b2 vs. RF-GAN | 8.857 × 10 | RF-GAN |
| RF-b2 vs. RF-GAN1 | 8.857 × 10 | RF-GAN1 |
| RF-b2 vs. RF-GAN2 | 8.857 × 10 | RF-GAN2 |
| RF-b2 vs. SMOTE | 1.204 × 10 | SMOTE |
| RF-GAN vs. RF-GAN1 | 0.079 | – |
| RF-GAN vs. RF-GAN2 | 8.857 × 10 | RF-GAN |
| RF-GAN vs. SMOTE | 8.857 × 10 | RF-GAN |
| RF-GAN1 vs. RF-GAN2 | 8.845 × 10 | RF-GAN1 |
| RF-GAN1 vs. SMOTE | 8.844 × 10 | RF-GAN1 |

| RF-GAN/RF-GAN1 |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Citation

Pu, Z.; Cabrera, D.; Sánchez, R.-V.; Cerrada, M.; Li, C.; Valente de Oliveira, J. Exploiting Generative Adversarial Networks as an Oversampling Method for Fault Diagnosis of an Industrial Robotic Manipulator. Appl. Sci. 2020, 10, 7712. https://doi.org/10.3390/app10217712
