1. Introduction

The finite element method (FEM) is one of the most popular numerical simulation methods and is commonly used to address science and engineering problems. The core idea of the FEM is to discretize a continuum into a set of finite-size elements in order to solve continuum mechanics problems. Generally, a two-dimensional model is discretized into a triangular or quadrilateral mesh, and a three-dimensional model is discretized into a tetrahedral or hexahedral mesh. The mesh is the basis of discretization in FEM numerical analysis; thus, mesh quality plays a key role in the computational accuracy and efficiency of the final results [1,2,3]. To obtain high-quality meshes, numerous mesh generation methods [3,4,5,6] have been proposed. However, the initially generated meshes generally have poor quality and cannot be used directly for numerical computation. Therefore, it is necessary to further optimize the mesh after initial generation to improve its quality.

There are two main approaches used to optimize meshes [7,8]. One is to improve the mesh quality by refining the mesh or by removing or inserting mesh nodes [9], which changes the topology of the mesh [10,11]; the other is to change the locations of the mesh nodes, which is called mesh smoothing [12,13,14,15]. Mesh smoothing is more widely used because it does not change the connectivity of the mesh; one of the popular approaches is Laplacian mesh smoothing [16,17,18].

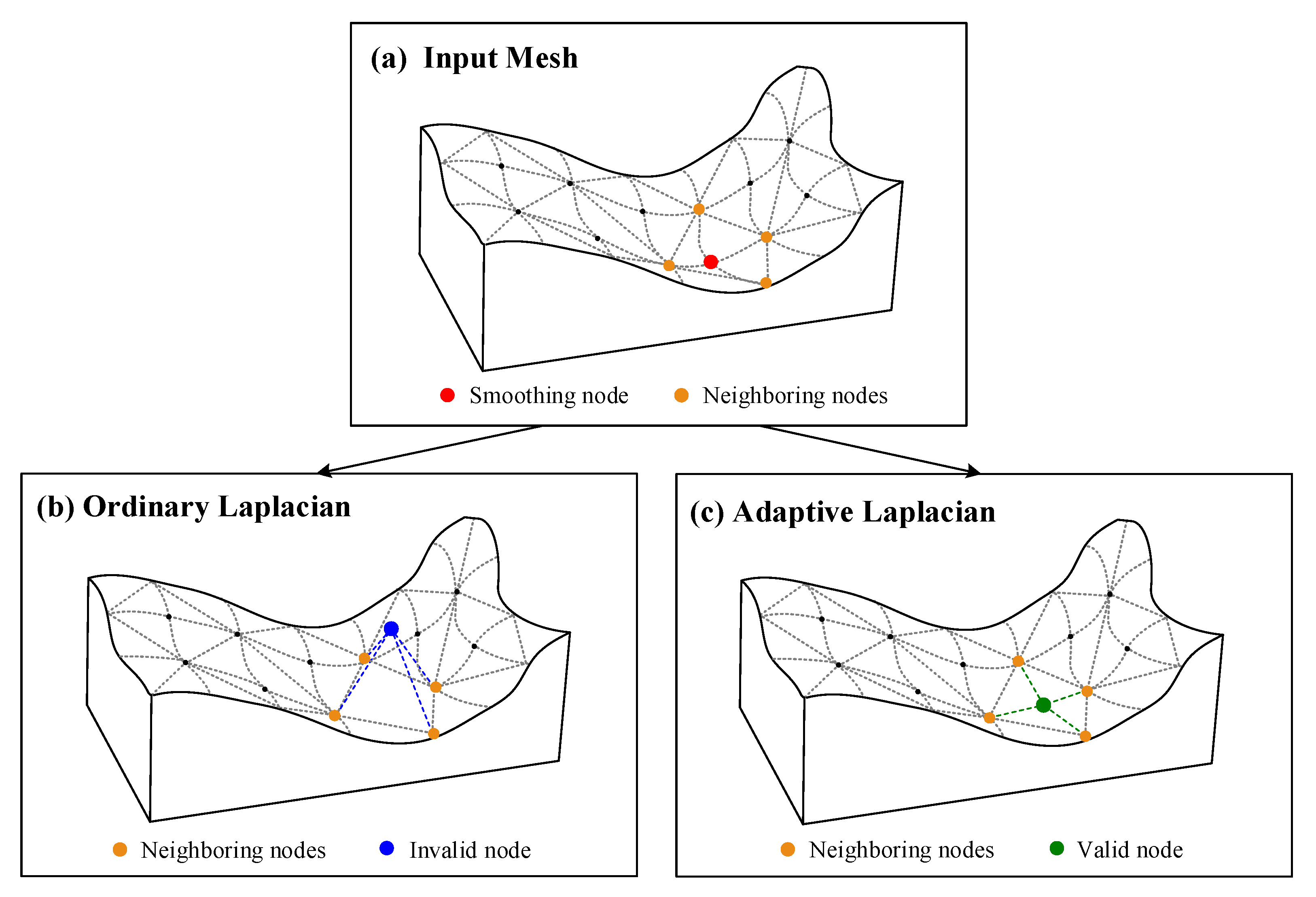

The process of Laplacian mesh smoothing is straightforward: in each iteration, every mesh node is moved to the geometric center of its neighboring nodes, so the mesh topology is not changed. However, in large-scale and complicated application scenarios, the computational models consist of a large number of nodes and elements, which means that a large number of tetrahedrons or hexahedrons are involved in the iterative computations. As a result, Laplacian mesh smoothing becomes computationally expensive. Parallel computing is an effective strategy for improving the efficiency of the Laplacian mesh smoothing algorithm, and the powerful parallelism of a modern graphics processing unit (GPU) can be exploited for this purpose.
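The update rule described above can be sketched in a few lines. This is a minimal serial illustration, not the implementation used in this paper; it assumes the mesh is stored as a list of node coordinates plus a per-node adjacency list, and the names `laplacian_smooth`, `neighbors`, and `fixed` (a set of nodes that must not move, such as boundary nodes) are hypothetical.

```python
def laplacian_smooth(coords, neighbors, fixed, iterations=1):
    """Ordinary Laplacian smoothing (illustrative sketch).

    coords    : list of (x, y, z) tuples, one per node
    neighbors : neighbors[i] lists the indices of the nodes adjacent to node i
    fixed     : set of node indices that are never moved (assumed: boundary nodes)
    """
    for _ in range(iterations):
        # Write into a copy so that every node reads the positions of the
        # previous iteration (a Jacobi-style update).
        new_coords = list(coords)
        for i, nbrs in enumerate(neighbors):
            if i in fixed or not nbrs:
                continue
            n = len(nbrs)
            # Geometric center of the neighboring nodes.
            new_coords[i] = tuple(
                sum(coords[j][k] for j in nbrs) / n for k in range(3)
            )
        coords = new_coords
    return coords


# One free node surrounded by the four vertices of a regular tetrahedron.
coords = [(1, 1, 1), (1, -1, -1), (-1, 1, -1), (-1, -1, 1), (0.9, 0.1, 0.2)]
neighbors = [[], [], [], [], [0, 1, 2, 3]]
smoothed = laplacian_smooth(coords, neighbors, fixed={0, 1, 2, 3})
```

In this Jacobi-style form, each node update reads only the coordinates of the previous iteration, so the updates within one iteration are independent of each other, which is what makes a one-thread-per-node mapping on a GPU plausible.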

Currently, parallel computing on GPUs is widely used in numerical computing, artificial intelligence, and other applications [19,20,21,22]. In Laplacian mesh smoothing, when the input changes from a simple triangular mesh to a complex tetrahedral or hexahedral mesh, far more elements must be processed; the serial smoothing process becomes extremely long, and the cost of running experiments becomes high. Therefore, it is necessary to accelerate the Laplacian mesh smoothing algorithm on the GPU.

Several feasible GPU-accelerated algorithms have been proposed to optimize mesh quality. For example, Mei et al. [23] proposed an ordinary Laplacian mesh smoothing algorithm accelerated on the GPU; Dahal and Newman [24] proposed three efficient parallel algorithms to improve finite element meshes based on Laplacian smoothing. In addition, other parallel optimization strategies have been proposed for various types of meshes. For example, Jiao et al. [25] presented a parallel approach for optimizing surface meshes by redistributing vertices on a feature-aware higher-order reconstruction of a triangulated surface. Antepara et al. [26] described a parallel adaptive mesh refinement strategy for two-phase flows using tetrahedral meshes. Shang Mengmeng [27] proposed a multithreaded parallel version of a sequential quality improvement algorithm for tetrahedral meshes, which combined mesh smoothing operations and local reconnection operations.

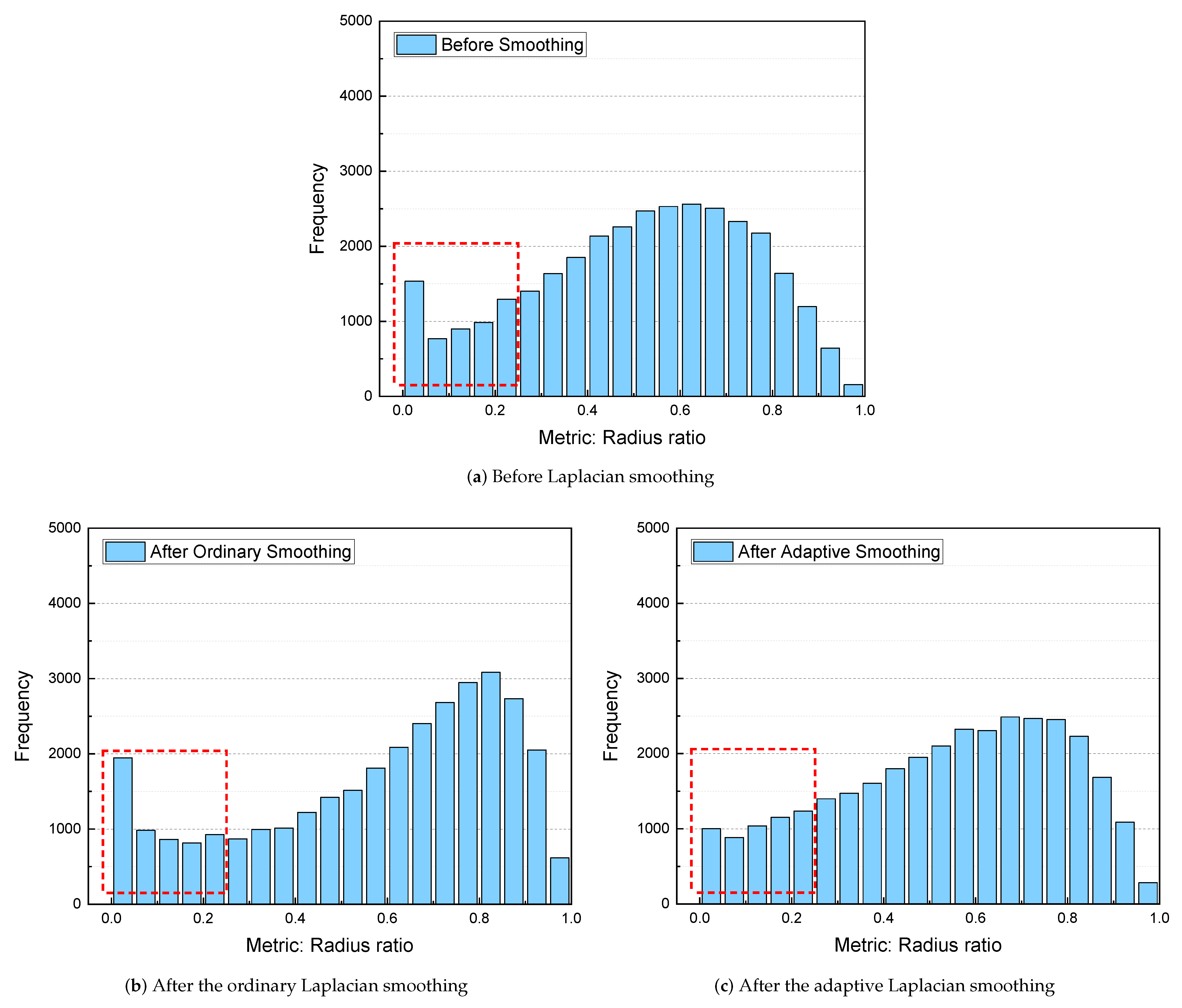

In our previous research, we developed parallel Laplacian mesh smoothing algorithms for triangular meshes accelerated on the GPU [23,28]. Moreover, a parallel ordinary Laplacian mesh smoothing algorithm for tetrahedral meshes accelerated on the GPU [29] has been presented. However, the previously proposed ordinary Laplacian mesh smoothing algorithm has a shortcoming: invalid nodes may be created in concave regions of the mesh. In the FEM, invalid nodes lead to distorted elements, which strongly reduce the computational accuracy [30,31,32,33]. For example, Freitag [18] found that approximately 30 percent of the Laplacian smoothing steps result in an invalid mesh when using the ordinary algorithm, compared with approximately 3 percent when using an improved swapping approach. Moreover, Vollmer [33] found that the ordinary Laplacian algorithm shrinks meshes. Huang Lili et al. [4] reported that severely distorted elements remain after Laplacian smoothing. Therefore, it is necessary to propose improved Laplacian mesh smoothing algorithms.
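The concave-region problem can be reproduced on a small example. The sketch below is a two-dimensional analogue chosen for brevity (this paper deals with tetrahedral meshes), and the L-shaped ring of neighbors and all function names are illustrative assumptions. A node inside the L-shaped region is moved to the geometric center of its six neighbors, which lies outside the region, so some of the surrounding triangles acquire negative signed area, that is, they become inverted.

```python
def signed_area(a, b, c):
    """Signed area of triangle (a, b, c); positive if counterclockwise."""
    return 0.5 * ((b[0] - a[0]) * (c[1] - a[1]) - (b[1] - a[1]) * (c[0] - a[0]))


def fan_areas(center, ring):
    """Signed areas of the triangles joining `center` to consecutive ring nodes."""
    m = len(ring)
    return [signed_area(center, ring[i], ring[(i + 1) % m]) for i in range(m)]


def centroid(points):
    """Geometric center of a list of 2D points."""
    n = len(points)
    return (sum(p[0] for p in points) / n, sum(p[1] for p in points) / n)


# Hypothetical L-shaped (concave) ring of neighbors, listed counterclockwise.
ring = [(0, 0), (4, 0), (4, 1), (1, 1), (1, 4), (0, 4)]

before = fan_areas((0.5, 0.5), ring)   # node inside the L: all triangles valid
moved = centroid(ring)                 # ordinary Laplacian update
after = fan_areas(moved, ring)         # some triangles now have negative area

inverted = [i for i, a in enumerate(after) if a < 0]
print("valid before:", all(a > 0 for a in before))
print("inverted triangles after smoothing:", inverted)
```

The same mechanism applies in three dimensions, where an inverted tetrahedron has negative signed volume.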

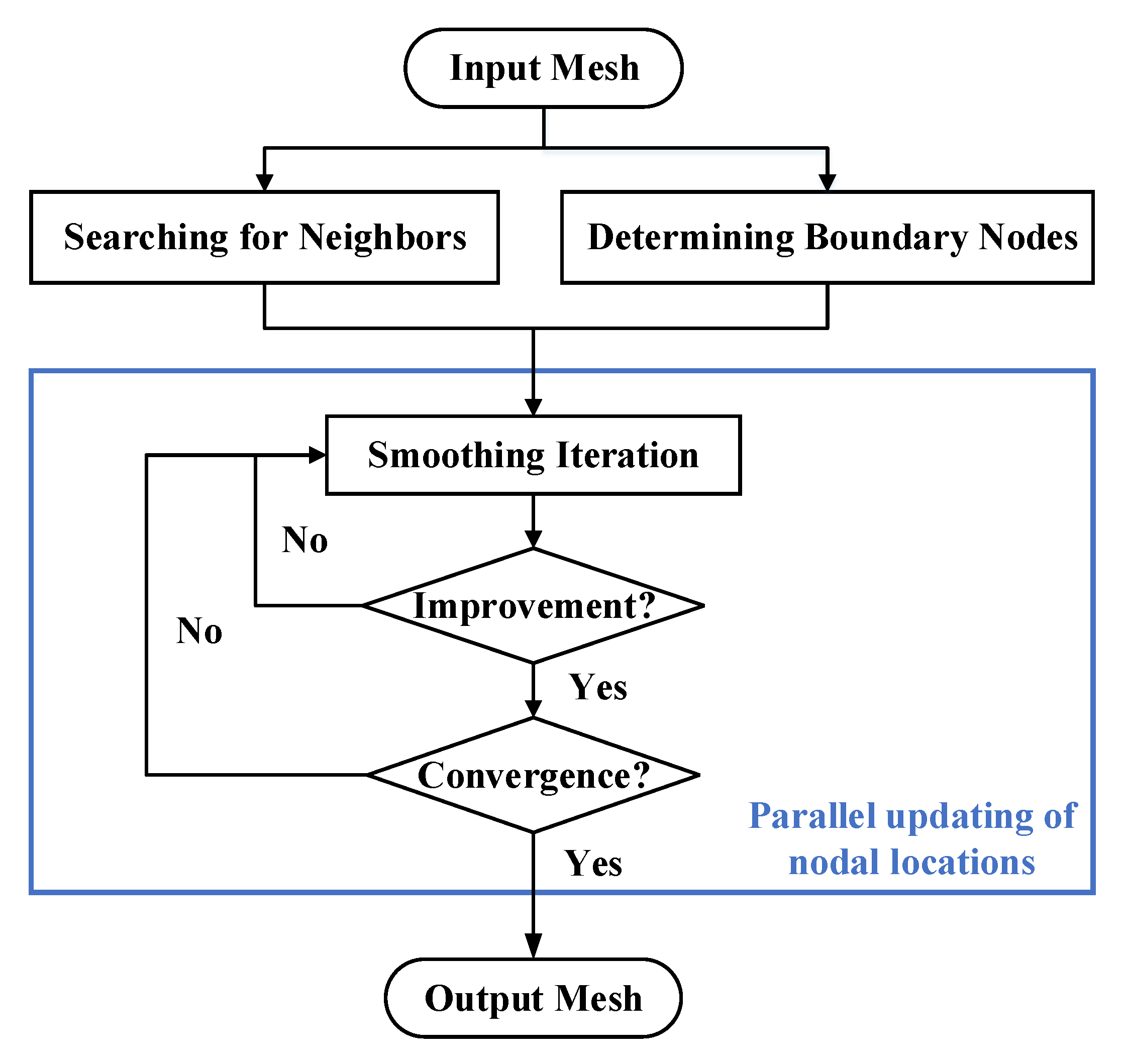

To address the above problem, in this paper we designed a parallel adaptive Laplacian smoothing algorithm that improves the quality of large-scale tetrahedral meshes by exploiting the parallelism of the GPU. In the proposed algorithm, we added a tetrahedral mesh quality check to the Laplacian smoothing process: the new smoothed location of a node is retained only if it improves the mesh quality. Furthermore, we changed the method of searching for neighboring nodes, compared the impact of different data layouts and iteration forms on the running performance, and compared the efficiency on the GPU when using a single block and multiple blocks. The results are compared with those of the serial version and of ordinary Laplacian smoothing.
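The accept-or-reject idea can be sketched as follows. This is an illustrative serial version under stated assumptions, not the algorithm of this paper: the quality measure (a normalized volume-to-edge-length ratio that equals 1 for a regular tetrahedron and is non-positive for a degenerate or inverted one) and the acceptance criterion (the worst quality among the tetrahedrons incident to the node must increase) are choices made here for illustration, and all function names are hypothetical.

```python
import math


def signed_volume(a, b, c, d):
    """Signed volume of tetrahedron (a, b, c, d): det[b-a, c-a, d-a] / 6."""
    u = [b[k] - a[k] for k in range(3)]
    v = [c[k] - a[k] for k in range(3)]
    w = [d[k] - a[k] for k in range(3)]
    det = (u[0] * (v[1] * w[2] - v[2] * w[1])
           - u[1] * (v[0] * w[2] - v[2] * w[0])
           + u[2] * (v[0] * w[1] - v[1] * w[0]))
    return det / 6.0


def tet_quality(a, b, c, d):
    """Assumed metric: 6*sqrt(2)*V / l_rms^3, with l_rms the RMS edge length."""
    pts = (a, b, c, d)
    sq = [sum((pts[i][k] - pts[j][k]) ** 2 for k in range(3))
          for i in range(4) for j in range(i + 1, 4)]
    l_rms = math.sqrt(sum(sq) / 6.0)
    if l_rms == 0.0:
        return 0.0
    return 6.0 * math.sqrt(2.0) * signed_volume(a, b, c, d) / l_rms ** 3


def adaptive_smooth_node(i, coords, neighbors, tets, tets_of_node):
    """Return the Laplacian position of node i only if it improves the
    worst quality of the tetrahedrons incident to i; otherwise keep it."""
    nbrs = neighbors[i]
    n = len(nbrs)
    candidate = tuple(sum(coords[j][k] for j in nbrs) / n for k in range(3))

    def worst_quality(pos):
        return min(
            tet_quality(*[pos if v == i else coords[v] for v in tets[t]])
            for t in tets_of_node[i]
        )

    if worst_quality(candidate) > worst_quality(coords[i]):
        return candidate
    return coords[i]


# Node 4 sits off-center inside a regular tetrahedron split into four tetrahedrons.
coords = [(1, 1, 1), (1, -1, -1), (-1, 1, -1), (-1, -1, 1), (0.6, 0.1, 0.0)]
tets = [(4, 2, 1, 3), (0, 4, 1, 3), (0, 2, 4, 3), (0, 2, 1, 4)]
neighbors = {4: [0, 1, 2, 3]}
tets_of_node = {4: [0, 1, 2, 3]}
new_pos = adaptive_smooth_node(4, coords, neighbors, tets, tets_of_node)
```

In this example the candidate position is the center of the outer tetrahedron, which raises the worst incident quality, so the move is accepted. If the candidate lowered that value or inverted an element, the node would stay where it is.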

The rest of the paper is organized as follows: Section 2 provides a background introduction to Laplacian mesh smoothing and GPU computing. Section 3 introduces the proposed parallel adaptive Laplacian mesh smoothing in detail. Section 4 describes the experimental test and results. Section 5 discusses the advantages and shortcomings of the proposed parallel algorithm and outlines future work. Finally, Section 6 concludes this work.