1. Introduction

Nowadays, reducing the risk of musculoskeletal diseases (MSDs) for workers in manufacturing industries is of paramount importance to reduce absenteeism from work due to illnesses related to bad working conditions, and to improve process efficiency in assembly lines. One of the main goals of Industry 4.0 is to find solutions that put workers in human-suitable working conditions, improving the efficiency and productivity of the factory [1]. However, we are still far from this goal, as shown in the 2019 European Risk Observatory Report [2]: reported work-related MSDs are decreasing but remain too high (58% in 2015 against 60% in 2010), and if we consider the aging of the workforce, these figures can only get worse. According to the European Commission’s Ageing Report 2015 [3], the employment rate of people over 55 will reach 59.8% in 2023 and 66.7% by 2060, because many of those born during the “baby boom” are getting older and life expectancy and retirement age are increasing, while the birth rate is decreasing. Solving this problem is of extreme importance. Work demand usually does not change with age, while the same cannot be said for work capacity: with aging, physiological changes in perception, information processing and motor control reduce work capacity. The physical work capacity of a 65-year-old worker is about half that of a 25-year-old worker [4]. On the other hand, age-related changes in physiological function can be dampened by various factors including physical activity [5], so work capability is a highly individual variable.

In this context, industries will increasingly need to take human variability into account and to predict workers’ behaviors, going beyond the concept of “the worker” as a homogeneous group and monitoring specific work-related risks more accurately, in order to implement more effective health and safety management systems and increase factory efficiency and safety.

To reduce ergonomic risks and promote workers’ well-being, considering the characteristics and performance of every single person, we need cost-effective, robust tools able to provide direct monitoring of working postures and continuous ergonomic risk assessment throughout the work activities. Moreover, we need to improve workers’ awareness of ergonomic risks and define the best strategies to prevent them. Several studies in ergonomics suggest that providing workers with ergonomic feedback can positively influence their motion and decrease hazardous risk score values [6,7,8]. However, this goal still seems far from being achieved due to the lack of low-cost, continuous monitoring systems that can easily be applied on the shop floor.

Currently, ergonomic risk assessment is mainly based on postural observation methods [9,10], such as the National Institute for Occupational Safety and Health (NIOSH) lifting equation [11], Rapid Upper Limb Assessment (RULA) [12], Rapid Entire Body Assessment (REBA) [13], Strain Index [14], and Occupational Repetitive Action (OCRA) [15]. They require the intervention of an experienced ergonomist who observes workers’ actions, directly or by means of video recordings. The data required to compute the risk index are generally obtained through subjective observation or simple estimation of projected joint angles (e.g., elbow, shoulder, knee, trunk, and neck) by analyzing videos or pictures. This kind of ergonomic assessment is costly and time-consuming [9], is strongly affected by intra- and inter-observer variability [16], and may lead to low accuracy of the evaluations [17].

Several tools are available to automate the postural analysis process by calculating various risk indices, making ergonomic assessment more efficient. They are currently embedded in the most widely used computer-aided design (CAD) packages (e.g., CATIA-DELMIA by Dassault Systèmes, Pro/ENGINEER Manikin by PTC, or Tecnomatix/Jack by Siemens) and allow detailed human modeling based on digital human manikins, according to an analytical ergonomic perspective [18]. However, to perform realistic and reliable simulations, they require accurate information on the kinematics of the worker’s body (posture) [19].

Motion capture systems can be used to collect such data accurately and quantitatively. However, the most reliable commercially available systems, i.e., sensor-based motion capture systems (e.g., Xsens [20], Vicon Blue Trident [21]) and marker-based optical systems (e.g., Vicon Nexus [22], OptiTrack [23]), have important drawbacks, so their use in real work environments is still scarce [9]. Indeed, they are expensive in terms of cost and setup time and have limited application in a factory environment due to several constraints, ranging from lighting conditions to electromagnetic interference [24]. Therefore, their use is currently limited to laboratory experimental setups [25], while they are not easy to manage on the factory shop floor. In addition, these systems can be intrusive, as they frequently require wearable devices (i.e., sensors or markers) to be positioned on the workers’ bodies according to proper specifications [25] and following specific calibration procedures. These activities require the involvement of specialized professionals and are time-consuming, so it is not easy to carry them out on a daily basis in real working conditions. Moreover, marker-based optical systems suffer greatly from occlusion problems and need the installation of multiple cameras, which is rarely feasible in a working environment where space is always limited, so the cameras cannot be optimally placed.

In the last few years, to overcome these issues, several systems based on computer vision and machine learning techniques have been proposed.

The introduction on the market of low-cost body-tracking technologies based on RGB-D cameras, such as the Microsoft Kinect®, has aroused great interest in many application fields such as gaming and virtual reality [26], healthcare [27,28], natural user interfaces [29], education [30] and ergonomics [31,32,33]. Being an integrated device, it does not require calibration. Several studies evaluated its accuracy [34,35,36] and tested it in working environments and industrial contexts [37,38]. Their results suggest that the Kinect may successfully be used for assessing the risk of operational activities where very high precision is not required, despite errors that depend on the performed posture [39]. Since the acquisition is performed from a single point of view, the system suffers from occlusion problems, which can induce large errors, especially in complex motions with self-occlusion or if the sensor is not placed in front of the subject, as recommended in [36]. Using multiple Kinects can only partially solve these problems, as the quality of the depth images degrades with the number of Kinects running concurrently, due to IR emitter interference [40]. Moreover, RGB-D cameras are not as widely and cheaply available as RGB cameras, and their installation and calibration in the workspace is not a trivial task, because of ferromagnetic interference that can cause significant noise in the output [41].

In an industrial working environment, motion capture based on standard RGB sensors (such as those embedded in smartphones or webcams) can represent a more viable solution. Several systems have been introduced in the last few years to enable real-time human pose estimation from video streams provided by RGB cameras. Among them, OpenPose, developed by researchers at Carnegie Mellon University [42,43], represents the first real-time multi-person system to jointly detect the human body, hands, face, and feet (137 keypoints per person: 70 face keypoints, 25 body/foot keypoints and 2 × 21 hand keypoints) on a single image. It is open-source software, based on a Convolutional Neural Network (CNN) found in the OpenCV library [44], initially written in C++ and Caffe, and freely available for non-commercial use. Such a system does not seem to be significantly affected by occlusion problems, as it ensures body tracking even when several body joints and segments are temporarily occluded, so that only a portion of the body is framed in the video [18]. Several studies validated its accuracy by comparing one person’s skeleton tracking results with those obtained from a Vicon system; all found a negligible relative limb positioning error index [45,46]. Many studies have exploited OpenPose for research purposes, including ergonomics. In particular, several studies carried out both in the laboratory and in real-life manufacturing environments suggest that OpenPose is a helpful tool to support the ergonomic assessment of worker posture based on RULA, REBA, and OCRA [18,46,47,48,49].

However, the deep learning algorithms used to enable people tracking from RGB images usually have high computational requirements, so good CPU and GPU performance is essential [50].

Recently, a newer open-source machine learning pose-estimation algorithm inspired by OpenPose, namely Tf-pose-estimation [51], has been released. It is implemented in TensorFlow and introduces several variants with changes to the CNN structure, so that it enables real-time processing of multi-person skeletons also on the CPU or on low-power embedded devices. It provides several models, including a body model variant that is characterized by 18 keypoints and runs on mobile devices.

Given its low computational requirements, it has been used to implement mobile apps for mobility analysis (e.g., Lindera [52]) and to implement edge computing solutions for human behavior estimation [53], or for human posture recognition (e.g., yoga pose [54]). However, as far as we know, the suitability of this tool to support ergonomic risk assessment in the industrial context has not been assessed yet.

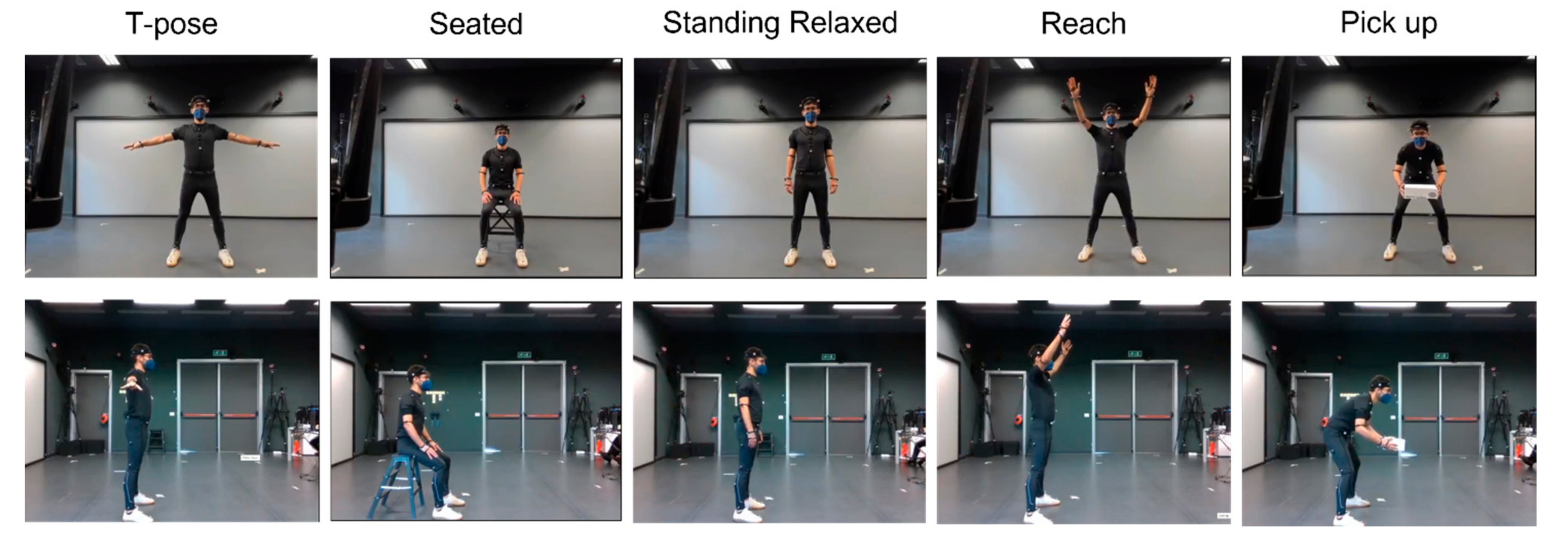

In this context, this paper introduces a new low-cost, low-computational-load markerless motion capture system, based on frames acquired from RGB cameras and on their processing through the multi-person keypoint detection algorithm Tf-pose-estimation. To assess the accuracy of this tool, the data it provides are compared with those collected from a Vicon Nexus system and with those measured through manual video analysis by a panel of three expert ergonomists. Moreover, to preliminarily validate the proposed system for ergonomic assessment, the RULA scores obtained with its data have been compared to (1) those measured by the expert ergonomists and (2) those obtained with data provided by the Vicon Nexus system.
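As a rough illustration of the kind of processing such a system involves, a joint angle can be derived from three detected 2D keypoints as the angle between the two adjacent body segments. The sketch below is not the authors' implementation: the function name, the pixel coordinates, and the flexion convention (180° minus the included angle) are assumptions made for illustration only.

```python
import math


def joint_angle_deg(a, b, c):
    """Return the angle at vertex b (in degrees) formed by 2D points a-b-c."""
    v1 = (a[0] - b[0], a[1] - b[1])
    v2 = (c[0] - b[0], c[1] - b[1])
    norm1 = math.hypot(v1[0], v1[1])
    norm2 = math.hypot(v2[0], v2[1])
    if norm1 == 0.0 or norm2 == 0.0:
        raise ValueError("keypoints must not coincide with the joint")
    cos_theta = (v1[0] * v2[0] + v1[1] * v2[1]) / (norm1 * norm2)
    # Clamp to [-1, 1] to guard against floating-point round-off.
    cos_theta = max(-1.0, min(1.0, cos_theta))
    return math.degrees(math.acos(cos_theta))


# Hypothetical pixel coordinates of three keypoints in one frame.
shoulder = (100.0, 100.0)
elbow = (100.0, 200.0)
wrist = (200.0, 200.0)

included_angle = joint_angle_deg(shoulder, elbow, wrist)
# Assumed convention: a fully extended arm corresponds to 0 degrees of flexion.
elbow_flexion = 180.0 - included_angle
print(round(included_angle, 1), round(elbow_flexion, 1))
```

Because the keypoints are image coordinates, the result is a projected angle in the camera plane, which is why the viewing direction matters for accuracy.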

3. Results

Table 3 shows the RMSE comparison between the angles extracted from the RGB-MAS and the ones extracted by the Vicon system for a pure system-to-system analysis.
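For reference, the RMSE between two series of angles is the square root of the mean squared difference between paired measurements. The following minimal sketch uses made-up angle values, not data from this study, and the function name is hypothetical.

```python
import math


def rmse(predicted, reference):
    """Root mean square error between two equally long sequences of angles."""
    if len(predicted) != len(reference) or len(predicted) == 0:
        raise ValueError("sequences must be non-empty and of equal length")
    squared_errors = [(p - r) ** 2 for p, r in zip(predicted, reference)]
    return math.sqrt(sum(squared_errors) / len(squared_errors))


# Illustrative values only (degrees): angles from a camera-based system
# and from a reference system for the same four frames.
camera_angles = [32.0, 28.0, 47.0, 43.0]
reference_angles = [30.0, 30.0, 45.0, 45.0]
print(rmse(camera_angles, reference_angles))  # prints 2.0
```

An RMSE of zero would mean the two systems agree on every frame; the value is expressed in the same unit as the angles.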

As can be observed, the angle predictions provided by the proposed system are generally less accurate for shoulder abduction and for shoulder and elbow flexion/extension. The accuracy is particularly low for the reach posture, probably because of perspective distortions.

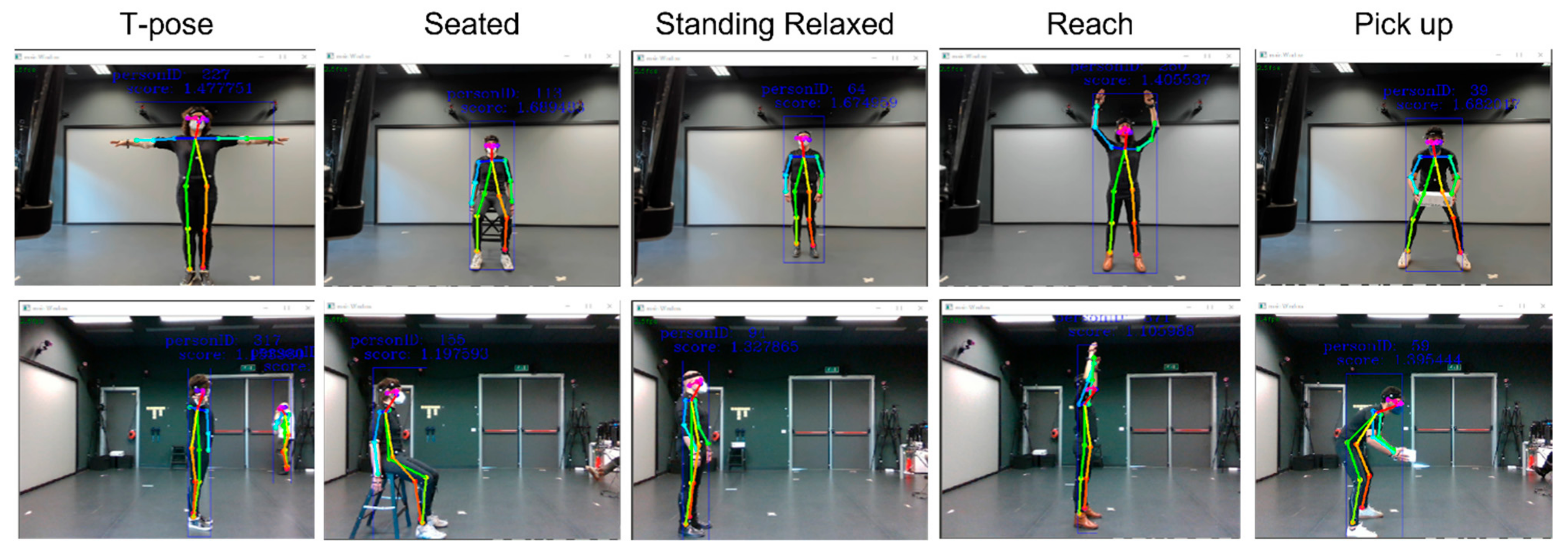

The pickup posture, however, could not be tracked with the Vicon system, because the box occluded some of the markers the system requires.

Table 4 allows easy comparison of the RMSE between the angles predicted by the RGB-MAS and by the Vicon system, respectively, and those manually measured. These results suggest that the proposed system can be considered reasonably suitable to support ergonomists in their analysis.

Figure 6 highlights the similarities and discrepancies between the angle predictions provided by the RGB-MAS and the Vicon system with respect to the manual analysis. It can be observed that the predictions provided by the RGB-MAS show wider variability than those of the reference system. As for the neck flexion/extension angle, the RGB-MAS slightly overestimates the results compared to the Vicon system. The overestimation is more pronounced for shoulder abduction and flexion/extension, especially when abduction and flexion occur simultaneously. In Figure 7, the keypoint locations and the skeleton are shown superimposed over the posture pictures. In particular, from the picture of the Pick Up posture, we can see that the small occlusion that caused problems for the Vicon system had no consequences for the RGB-MAS.

Moreover, high variability is also found for all the angles of the left-hand side of the body. Nevertheless, the RGB-MAS accurately predicts the trunk flexion/extension and the right-hand side angles. This left-right inconsistency is probably due to the lack of a left camera, so that the left-hand side angles are predicted with less confidence than their right-hand side counterparts.

Table 5 shows the median RULA values obtained using each angle extraction method considered (i.e., manual measurement, RGB-MAS prediction, Vicon tracking).

Table 6 shows the RMSE between the RULA scores determined through manual angle measurement and those calculated from the angles predicted by the RGB-MAS and by the Vicon system, respectively. The maximum RMSE between RGB-MAS and manual analysis is 1.35, while the maximum Vicon vs. manual RMSE is 1.78. Since the closer the RMSE is to zero, the better the agreement, it can be observed that the RGB-MAS generally provides results closer to those of the widely used manual analysis than the Vicon system does. However, this should not be interpreted as a better performance of RGB-MAS than Vicon: what can be concluded, instead, is that the values provided by Vicon are, in some cases, very different from both those of the RGB-MAS and those estimated manually. This is because the Vicon results are not affected by the estimation errors due to perspective distortions that occur with the other two methods. Ultimately, the RGB-MAS can provide estimates that are very similar to those obtained from manual extraction, although its accuracy is poor compared to that of the Vicon.

As can be seen from Table 5, the risk indices calculated from the angles provided by the RGB-MAS, those evaluated from manually measured angles, and those provided by Vicon belong to the same ranges. The only exception is the Reach posture, where the Vicon underestimated the scores by a whole point. Despite the overestimations in angle prediction, RULA scores are assigned according to the risk range in which each angle falls, which filters out noise and slight measurement inaccuracies. This leads to RULA scores that are slightly different in numbers but can be considered almost the same in terms of risk ranges.
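The filtering effect of range-based scoring can be sketched with the RULA upper-arm base score, which depends only on the band in which the shoulder flexion/extension angle falls. The snippet below is a simplified illustration, not the procedure used in this study: it omits the RULA adjustments (e.g., raised shoulder, abducted or supported arm), and the treatment of values exactly on a band boundary is an assumption.

```python
def rula_upper_arm_base_score(flexion_deg):
    """Base RULA upper-arm score from shoulder flexion (+) or extension (-).

    Simplified sketch: adjustment factors are ignored and boundary values
    are assigned to the lower band by assumption.
    """
    if flexion_deg < -20.0:
        return 2  # extension beyond 20 degrees
    if flexion_deg <= 20.0:
        return 1  # between 20 degrees of extension and 20 degrees of flexion
    if flexion_deg <= 45.0:
        return 2  # flexion between 20 and 45 degrees
    if flexion_deg <= 90.0:
        return 3  # flexion between 45 and 90 degrees
    return 4      # flexion above 90 degrees


# Two estimates of the same posture that differ by 10 degrees
# still fall in the same band and receive the same score.
print(rula_upper_arm_base_score(50.0), rula_upper_arm_base_score(60.0))  # prints 3 3
# Near a band boundary, a small error can change the score.
print(rula_upper_arm_base_score(44.0), rula_upper_arm_base_score(46.0))  # prints 2 3
```

The second example shows the flip side: an angle error matters mostly when the true angle lies close to a band boundary.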

4. Discussion

This paper aims to introduce a novel tool to help ergonomists in ergonomic risk assessment by automatically extracting angles from video acquisitions more quickly than the traditional approach. Its overall systematic reliability and numerical accuracy are assessed by comparing the tool’s performance in ergonomic evaluation with that obtained by the standard procedures that represent the gold standard in this context.

Results suggest that it generally provides good consistency in predicting the angles from the front camera and slightly lower accuracy with the lateral one, with broader variability than the Vicon. However, in most cases, the average and median values are relatively close to the reference ones. This apparent limitation should be analyzed in the light of the setup needed to obtain these data; in fact, by using only two cameras (instead of the nine the Vicon needs), we obtained angles reliable enough to compute a RULA score.

Although it has greater accuracy than the proposed tool, the Vicon requires installing a large number of cameras (at least six), precisely positioned in space, to completely cover the work area and ensure the absence of occlusions. In addition, such a system requires calibration and forces workers to wear markers in precise positions. However, when performing a manual ergonomic risk assessment in a real working environment, given the constraints typically present, an ergonomist can usually collect videos from one or two cameras at most: the proposed RGB-MAS copes with this aspect by providing predicted angles even from the blind side of the subject (as a human could do, but more quickly), or when the subject is partially occluded.

As proof of this, it is worth noting that the pickup posture, initially considered for its tendency to introduce occlusion, was then discarded from the comparison precisely because the occlusion led to a lack of data from the Vicon system, while no problems seemed to arise with the RGB-MAS.

In addition, the RMSE values obtained by comparing the RGB-MAS RULA scores with the manual ones showed tighter variability than those resulting from the comparison between the RULA scores estimated through the Vicon and the manual analysis. This suggests that the RGB-MAS can fruitfully support ergonomists in estimating the RULA score in a first exploratory evaluation. The proposed system can extract angles with a numerical accuracy comparable to that of one of the reference systems, at least in a controlled environment such as a laboratory. The next step will be to test its methodological reliability and instrumental feasibility in a real working environment, where a Vicon-like system cannot be introduced due to its limitations (e.g., installation complexity, calibration requirements, occlusion sensitivity).

Study Limitations

This study provides the results of a first assessment of the proposed system, with the aim of measuring its accuracy and preliminarily determining its utility for ergonomic assessment. Further studies should be carried out to fully understand its practical suitability for ergonomic assessment in real working environments. The experiment was conducted only in the laboratory and not in a real working environment, which limits the study results: it did not allow the researchers to evaluate the instrument’s sensitivity to changes in lighting or unexpected illumination conditions (e.g., glares or reflections). Further studies are needed to fully evaluate the implementation constraints of the proposed system in a real working environment.

In addition, the study is limited to evaluating the RULA risk index related to static postures only. Further studies will be needed to evaluate the possibility of using the proposed system for the acquisition of data necessary for other risk indexes (e.g., REBA, OCRA), also considering dynamic postures.

Another limitation is that the experiment did not fully evaluate the proposed system in conditions of severe occlusion (e.g., as could happen when the workbench partially covers the subject). Although the results showed that the proposed system, unlike Vicon, does not suffer from minor occlusion (i.e., due to the presence of a box during a picking operation), further studies are needed to accurately assess its sensitivity to different levels of occlusion.

Another limitation is the small number of subjects involved in the study. A small group of subjects was involved, with limited anthropometric variation, assuming that the tf-pose-estimation model was already trained on a large dataset. Further studies will need to confirm whether anthropometric variations affect the results (e.g., whether and how the BMI factor may affect the estimated angle accuracy).

5. Conclusions

This work proposes a tool, namely the RGB motion analysis system (RGB-MAS), to make ergonomic risk assessment more efficient and affordable. Our aim was to help ergonomists save time while maintaining highly reliable results. An analysis of how ergonomists carry out a RULA assessment shows that the lengthy part of their job is manually extracting body angles from video captures. In this context, the paper proposed a system able to speed up angle extraction and RULA calculation.

The validation in the laboratory shows the promising performance of the system, suggesting its possible suitability also in real working conditions (e.g., picking activities in the warehouse or manual tasks in the assembly lines), to enable the implementation of more effective health and safety management systems in the future, so as to improve the awareness of MSDs and to increase the efficiency and safety of the factory.

Overall, experimental results suggested that the RGB-MAS can be useful to support ergonomists in estimating the RULA score, providing results comparable to those estimated by ergonomic experts. The proposed system allows ergonomists and companies to reduce the cost of ergonomic analysis by decreasing the time needed for risk assessment. This competitive advantage makes it appealing not only to large enterprises, but also to small and medium-sized enterprises wishing to improve the working conditions of their workers. The main advantages of the proposed tool are its ease of use, the wide range of scenarios where it can be installed, its full compatibility with every commercially available RGB camera, no need for calibration, low CPU and GPU performance requirements (i.e., it can process video recordings in a matter of seconds on a common laptop), and low cost.

However, according to the experimental results, the increase in efficiency that the system allows comes at the expense of small errors in angle estimation and ergonomic evaluation: since the proposed system is not based on any calibration procedure and is still affected by perspective distortion problems, it does not reach the accuracy of the Vicon. Nonetheless, while the Vicon system is to be considered the reference as far as accuracy is concerned, using it in a real working environment is practically impossible, since it suffers greatly from occlusion problems (even the presence of an object such as a small box can cause the loss of body tracking), and requires:

A large number of highly expensive cameras, placed in the space in a way that is impracticable in a real work environment.

A preliminary calibration procedure.

The use of wearable markers, which may compromise the quality of the measurement as they are invasive.

Future studies should aim to improve the current functionalities of the proposed system. Currently, the system is incapable of automatically computing RULA scores: a spreadsheet is filled in with the derived angles to obtain them. However, it should not be difficult to implement such functionality. In particular, future studies should focus on implementing a direct stream of the angles extracted by the RGB-MAS to structured ergonomic risk assessment software (e.g., Siemens Jack) to animate a virtual manikin and automatically obtain RULA scores.

Moreover, the proposed system cannot predict hand- and wrist-related angles: further research might cope with this issue and try to fill the gap. For example, possible solutions can be those proposed in [56,57].

For a broader application of the proposed RGB-MAS, further efforts should be made to improve the angle prediction accuracy.

Moreover, the main current issue is that it is not always possible to correctly predict shoulder abduction and flexion angles with non-calibrated cameras, e.g., when the arms simultaneously show flexion in the lateral plane and abduction in the frontal plane. This comes from the fact that, at the moment, there is no spatial correlation between the two cameras: the reference system is not the same for both, so it is not possible to determine 3D angles. Thus, another topic for future work may be the development of a dedicated algorithm to determine the spatial position of the cameras relative to each other. In addition, such an algorithm should provide a (real-time) correction to effectively manage the inevitable perspective distortion introduced by the lenses, to improve the system accuracy. However, all of this would require the introduction of a calibration procedure that would slow down the implementation of the system in real workplaces.
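The ambiguity of projected shoulder angles can be illustrated with simple geometry. The sketch below is not part of the proposed system: it assumes an idealized orthographic projection (no lens perspective), body axes with x lateral, y forward and z up, and an arbitrary rotation order (abduction first, then flexion). All function names are hypothetical.

```python
import math


def arm_direction(abduction_deg, flexion_deg):
    """Unit vector of the upper arm in body axes (x lateral, y forward, z up).

    Assumed convention: the arm starts hanging down, is abducted about the
    forward axis, then flexed about the lateral axis.
    """
    a = math.radians(abduction_deg)
    f = math.radians(flexion_deg)
    return (math.sin(a), math.cos(a) * math.sin(f), -math.cos(a) * math.cos(f))


def apparent_abduction_deg(direction):
    """Angle from the downward vertical as seen by a frontal camera (x-z plane)."""
    x, _, z = direction
    return math.degrees(math.atan2(x, -z))


def apparent_flexion_deg(direction):
    """Angle from the downward vertical as seen by a lateral camera (y-z plane)."""
    _, y, z = direction
    return math.degrees(math.atan2(y, -z))


# Pure 30-degree abduction versus the same abduction combined with 60 degrees of flexion.
pure = apparent_abduction_deg(arm_direction(30.0, 0.0))
combined = apparent_abduction_deg(arm_direction(30.0, 60.0))
print(round(pure, 1), round(combined, 1))  # prints 30.0 49.1
```

Under these assumptions, the frontal view reads the combined posture as roughly 49° of abduction instead of 30°, because flexion shortens the projected vertical component of the arm. Resolving this requires relating the two views in a common 3D reference frame.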