Automatic Point-Cloud Registration for Quality Control in Building Works

Abstract

1. Introduction

2. Materials and Methods

2.1. Materials

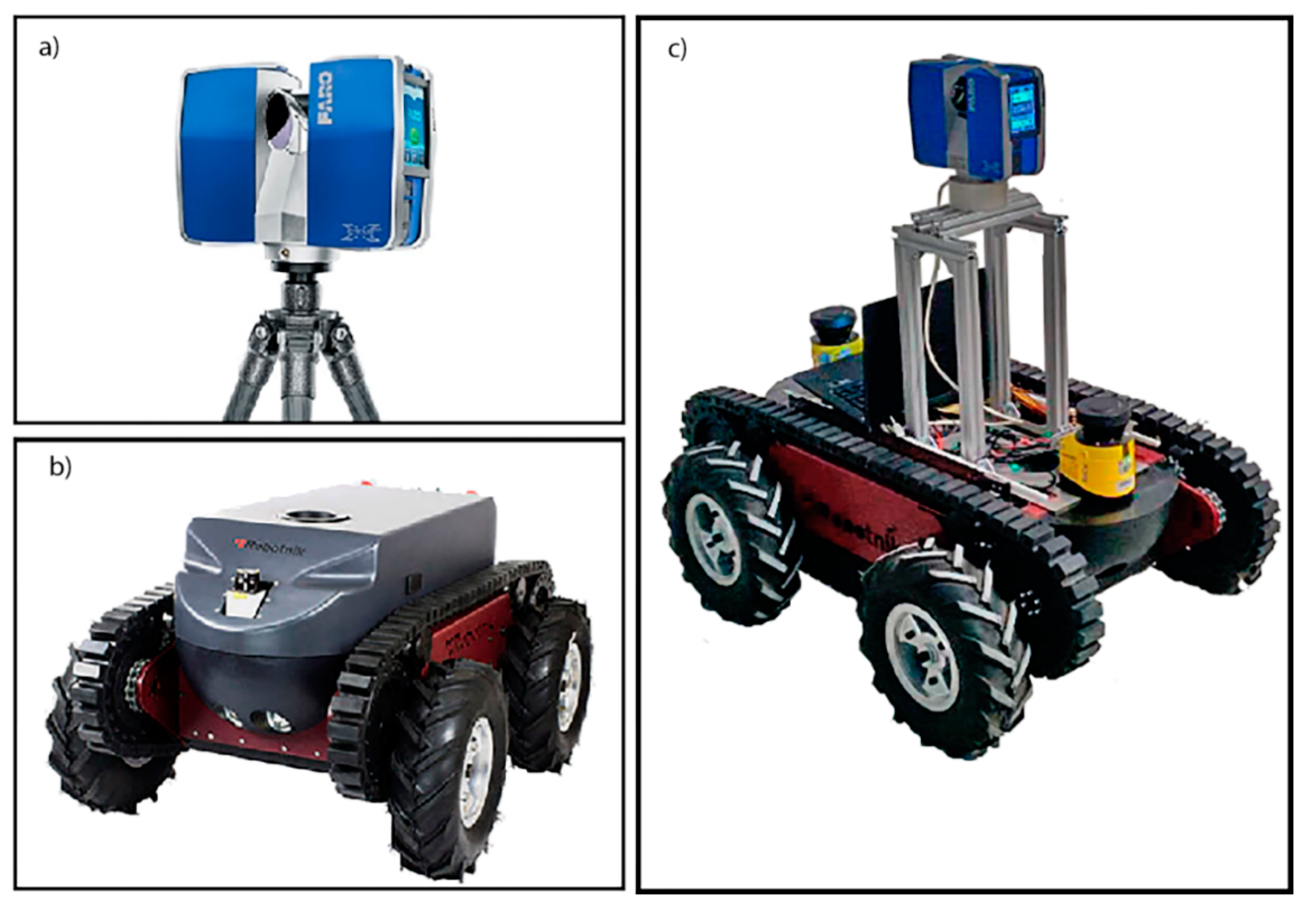

Unmanned Ground Vehicle (UGV) and Terrestrial Laser Scanner (TLS)

2.2. Method

2.2.1. Path Planning

2.2.2. Pre-Processing

2.2.3. Alignment

3. Results and Discussion

3.1. Case Study

3.2. Data Acquisition

3.3. Automatic Registration

3.4. Quality Control

4. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

1. Azhar, S.; Khalfan, M.; Maqsood, T. Building information modelling (BIM): Now and beyond. Constr. Econ. Build. 2012, 12, 15–28.

2. Real Decreto 1515/2018, de 28 de diciembre, por el que se crea la Comisión interministerial para la incorporación de la metodología BIM en la contratación pública. Available online: https://www.boe.es/eli/es/rd/2018/12/28/1515 (accessed on 28 December 2020).

3. Jung, W.; Lee, G. The status of BIM adoption on six continents. Int. J. Civ. Environ. Struct. Constr. Archit. Eng. 2015, 9, 444–448.

4. Kreider, R.G.; Messner, J.I. The Uses of BIM: Classifying and Selecting BIM Uses; State College Pennsylvania: State College, PA, USA, 2013; p. 22.

5. Remondino, F.; Guarnieri, A.; Vettore, A. 3D modeling of close-range objects: Photogrammetry or laser scanning? In Videometrics VIII; International Society for Optics and Photonics: San José, CA, USA, 2005; Volume 5665, p. 56650M.

6. Alidoost, F.; Arefi, H. An image-based technique for 3D building reconstruction using multi-view UAV images. Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. 2015, 40, 43.

7. Guarnieri, A.; Vettore, A.; Remondino, F.; Church, O.P. Photogrammetry and Ground-Based Laser Scanning: Assessment of Metric Accuracy of the 3D Model of Pozzoveggiani Church. 2004. Available online: https://www.fig.net/resources/proceedings/fig_proceedings/athens/papers/ts26/TS26_4_Guarnieri_et_al.pdf (accessed on 5 February 2021).

8. Bernat, M.; Janowski, A.; Rzepa, S.; Sobieraj, A.; Szulwic, J. Studies on the use of terrestrial laser scanning in the maintenance of buildings belonging to the cultural heritage. In Proceedings of the 14th Geoconference on Informatics, Geoinformatics and Remote Sensing, SGEM, Albena, Bulgaria, 17–26 June 2014; Volume 3, pp. 307–318.

9. Pritchard, D.; Sperner, J.; Hoepner, S.; Tenschert, R. Terrestrial laser scanning for heritage conservation: The Cologne Cathedral documentation project. ISPRS Ann. Photogramm. Remote Sens. Spat. Inf. Sci. 2017, 4, 213–220.

10. Sánchez-Aparicio, L.J.; Del Pozo, S.; Ramos, L.F.; Arce, A.; Fernandes, F.M. Heritage site preservation with combined radiometric and geometric analysis of TLS data. Autom. Constr. 2018, 85, 24–39.

11. Sánchez-Aparicio, L.J.; Bautista-De Castro, Á.; Conde, B.; Carrasco, P.; Ramos, L.F. Non-destructive means and methods for structural diagnosis of masonry arch bridges. Autom. Constr. 2019, 104, 360–382.

12. Del Pozo, S.; Herrero-Pascual, J.; Felipe-García, B.; Hernández-López, D.; Rodríguez-Gonzálvez, P.; González-Aguilera, D. Multispectral radiometric analysis of façades to detect pathologies from active and passive remote sensing. Remote Sens. 2016, 8, 80.

13. Cabo, C.; Del Pozo, S.; Rodríguez-Gonzálvez, P.; Ordóñez, C.; González-Aguilera, D. Comparing terrestrial laser scanning (TLS) and wearable laser scanning (WLS) for individual tree modeling at plot level. Remote Sens. 2018, 10, 540.

14. Akca, D. Full Automatic Registration of Laser Scanner Point Clouds; ETH Zurich: Zurich, Switzerland, 2003.

15. Theiler, P.W.; Schindler, K. Automatic registration of terrestrial laser scanner point clouds using natural planar surfaces. ISPRS Ann. Photogramm. Remote Sens. Spat. Inf. Sci. 2012, 3, 173–178.

16. Chen, S.; Nan, L.; Xia, R.; Zhao, J.; Wonka, P. PLADE: A Plane-Based Descriptor for Point Cloud Registration with Small Overlap. IEEE Trans. Geosci. Remote Sens. 2019, 58, 2530–2540.

17. Gai, M.; Cho, Y.K.; Xu, Q. Target-free automatic point clouds registration using 2D images. In Proceedings of the ASCE International Workshop on Computing in Civil Engineering, Los Angeles, CA, USA, 23–25 June 2013; pp. 865–872.

18. Kim, P.; Chen, J.; Cho, Y.K. Automated point cloud registration using visual and planar features for construction environments. J. Comput. Civ. Eng. 2018, 32, 04017076.

19. Ge, X.; Hu, H.; Wu, B. Image-Guided Registration of Unordered Terrestrial Laser Scanning Point Clouds for Urban Scenes. IEEE Trans. Geosci. Remote Sens. 2019, 57, 9264–9276.

20. Weinmann, M.; Jutzi, B. Fully automatic image-based registration of unorganised TLS data. Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. 2011, 38, W12.

21. Di Filippo, A.; Sánchez-Aparicio, L.; Barba, S.; Martín-Jiménez, J.; Mora, R.; González Aguilera, D. Use of a Wearable Mobile Laser System in Seamless Indoor 3D Mapping of a Complex Historical Site. Remote Sens. 2018, 10, 1897.

22. Sánchez-Aparicio, L.J.; Conde, B.; Maté-González, M.A.; Mora, R.; Sánchez-Aparicio, M.; García-Álvarez, J.; González-Aguilera, D. A Comparative Study between WMMS and TLS for the Stability Analysis of the San Pedro Church Barrel Vault by Means of the Finite Element Method. Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. 2019, 4215, 1047–1054.

23. Otero, R.; Lagüela, S.; Garrido, I.; Arias, P. Mobile indoor mapping technologies: A review. Autom. Constr. 2020, 120, 103399.

24. Geo-SLAM Zeb-Revo. Available online: http://geoslam.com/hardware-products/zeb-revo/ (accessed on 5 February 2021).

25. Faro Focus 3D. Features, Benefits & Technical Specifications. Available online: https://knowledge.faro.com/Hardware/3D_Scanners/Focus/Technical_Specification_Sheet_for_the_Laser_Scanner_Focus_3D (accessed on 5 February 2021).

26. EHE-08. Instrucción de Hormigón Estructural Secretaría General Técnica; Ministerio de Fomento: Madrid, Spain, 2008.

27. Kim, P.; Chen, J.; Cho, Y.K. SLAM-driven robotic mapping and registration of 3D point clouds. Autom. Constr. 2018, 89, 38–48.

28. Tombari, F.; Salti, S.; Di Stefano, L. Performance evaluation of 3D keypoint detectors. Int. J. Comput. Vis. 2013, 102, 198–220.

29. Chen, Z.; Czarnuch, S.; Smith, A.; Shehata, M. Performance evaluation of 3D keypoints and descriptors. In International Symposium on Visual Computing; Springer: Cham, Switzerland, 2016; pp. 410–420.

30. Hänsch, R.; Weber, T.; Hellwich, O. Comparison of 3D interest point detectors and descriptors for point cloud fusion. ISPRS Ann. Photogramm. Remote Sens. Spat. Inf. Sci. 2014, 2, 57.

31. Lowe, G. SIFT-the scale invariant feature transform. Int. J. 2004, 2, 91–110.

32. Rusu, R.B.; Cousins, S. 3D is here: Point cloud library (PCL). In Proceedings of the 2011 IEEE International Conference on Robotics and Automation, Shanghai, China, 9–13 May 2011; pp. 1–4.

33. Böhm, J.; Becker, S. Automatic marker-free registration of terrestrial laser scans using reflectance. In Proceedings of the 8th Conference on Optical 3D Measurement Techniques, Zurich, Switzerland, 9–12 July 2007; pp. 9–12.

34. Moussa, W.; Abdel-Wahab, M.; Fritsch, D. An automatic procedure for combining digital images and laser scanner data. Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. 2012, 39, B5.

35. Sipiran, I.; Bustos, B. Harris 3D: A robust extension of the Harris operator for interest point detection on 3D meshes. Vis. Comput. 2011, 27, 963.

36. Pratikakis, I.; Spagnuolo, M.; Theoharis, T.; Veltkamp, R. A robust 3D interest points detector based on Harris operator. In Eurographics Workshop on 3D Object Retrieval; 2010; Volume 5. Available online: https://users.dcc.uchile.cl/~isipiran/papers/SB10b.pdf (accessed on 4 February 2021).

37. Tombari, F.; Salti, S.; Di Stefano, L. A combined texture-shape descriptor for enhanced 3D feature matching. In Proceedings of the 2011 18th IEEE International Conference on Image Processing, Brussels, Belgium, 11–14 September 2011; pp. 809–812.

38. Aldoma, A.; Marton, Z.C.; Tombari, F.; Wohlkinger, W.; Potthast, C.; Zeisl, B.; Rusu, R.B.; Gedikli, S.; Vincze, M. Tutorial: Point cloud library: Three-dimensional object recognition and 6 DOF pose estimation. IEEE Robot. Autom. Mag. 2012, 19, 80–91.

39. Salih, Y.; Malik, A.S.; Walter, N.; Sidibé, D.; Saad, N.; Meriaudeau, F. Noise robustness analysis of point cloud descriptors. In International Conference on Advanced Concepts for Intelligent Vision Systems; Springer: Cham, Switzerland, 2013; pp. 68–79.

40. Rusu, R.B.; Blodow, N.; Marton, Z.C.; Beetz, M. Aligning point cloud views using persistent feature histograms. In Proceedings of the 2008 IEEE/RSJ International Conference on Intelligent Robots and Systems, Nice, France, 22–26 September 2008; pp. 3384–3391.

41. Filipe, S.; Alexandre, L.A. A comparative evaluation of 3D keypoint detectors in a RGB-D object dataset. In Proceedings of the 2014 International Conference on Computer Vision Theory and Applications (VISAPP), Lisbon, Portugal, 5–8 January 2014; Volume 1, pp. 476–483.

42. Orts-Escolano, S.; Morell, V.; García-Rodríguez, J.; Cazorla, M. Point cloud data filtering and downsampling using growing neural gas. In Proceedings of the 2013 International Joint Conference on Neural Networks (IJCNN), Dallas, TX, USA, 4–9 August 2013; pp. 1–8.

43. Díaz-Vilariño, L.; Frías, E.; Balado, J.; González-Jorge, H. Scan planning and route optimization for control of execution of as-designed BIM. Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. 2018, XLII-4, 143–148.

44. Heikkilä, R.; Kivimäki, T.; Mikkonen, M.; Lasky, T.A. Stop & Go Scanning for Highways - 3D Calibration Method for a Mobile Laser Scanning System. In Proceedings of the 27th International Symposium on Automation and Robotics in Construction, Bratislava, Slovakia, 25–27 June 2010; pp. 40–48.

45. Vo, A.V.; Truong-Hong, L.; Laefer, D.F.; Bertolotto, M. Octree-based region growing for point cloud segmentation. ISPRS J. Photogramm. Remote Sens. 2015, 104, 88–100.

46. Gesto-Diaz, M.; Tombari, F.; Gonzalez-Aguilera, D.; Lopez-Fernandez, L.; Rodriguez-Gonzalvez, P. Feature matching evaluation for multimodal correspondence. ISPRS J. Photogramm. Remote Sens. 2017, 129, 179–188.

47. Holz, D.; Ichim, A.E.; Tombari, F.; Rusu, R.B.; Behnke, S. Registration with the point cloud library: A modular framework for aligning in 3-D. IEEE Robot. Autom. Mag. 2015, 22, 110–124.

48. Golomb, S.W.; Baumert, L.D. Backtrack programming. J. ACM 1965, 12, 516–524.

49. Dorigo, D. Ant Colonies for the Traveling Salesman Problem; Université Libre de Bruxelles: Brussels, Belgium, 1997; Volume 1, pp. 53–66.

50. Han, X.F.; Jin, J.S.; Wang, M.J.; Jiang, W.; Gao, L.; Xiao, L. A review of algorithms for filtering the 3D point cloud. Signal Process. Image Commun. 2017, 57, 103–112.

51. Balta, H.; Velagic, J.; Bosschaerts, W.; De Cubber, G.; Siciliano, B. Fast statistical outlier removal-based method for large 3D point clouds of outdoor environments. IFAC Pap. OnLine 2018, 51, 348–353.

52. Miknis, M.; Davies, R.; Plassmann, P.; Ware, A. Near real-time point cloud processing using the PCL. In Proceedings of the 2015 International Conference on Systems, Signals and Image Processing (IWSSIP), London, UK, 10–12 September 2015; pp. 153–156.

53. Miknis, M.; Davies, R.; Plassmann, P.; Ware, A. Efficient point cloud pre-processing using the point cloud library. Int. J. Image Process. 2016, 10, 63–72.

54. Ximin, Z.; Xiaoging, Y.; Wanggen, W.; Junxing, M.; Qingmin, L.; Libing, L. The Simplification of 3D Color Point Cloud Based on Voxel. In Proceedings of the IET International Conference on Smart and Sustainable City 2013 (ICSSC 2013), Shanghai, China, 19–20 August 2013.

55. Yu, T.H.; Woodford, O.J.; Cipolla, R. A performance evaluation of volumetric 3D interest point detectors. Int. J. Comput. Vis. 2013, 102, 180–197.

56. Liu, J.; Jakas, A.; Al-Obaidi, A.; Liu, Y. A comparative study of different corner detection methods. In Proceedings of the 2009 IEEE International Symposium on Computational Intelligence in Robotics and Automation (CIRA), Daejeon, Korea, 15–18 December 2009; pp. 509–514.

57. Alexandre, L.A. 3D descriptors for object and category recognition: A comparative evaluation. In Proceedings of the Workshop on Color-Depth Camera Fusion in Robotics at the IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Vilamoura, Portugal, 7 October 2012; Volume 20, p. 7.

58. Besl, P.J.; McKay, N.D. Method for registration of 3-D shapes. In Sensor Fusion IV: Control Paradigms and Data Structures; International Society for Optics and Photonics: Bellingham, WA, USA, 1992; Volume 1611, pp. 586–606.

59. Sharp, G.C.; Lee, S.W.; Wehe, D.K. ICP registration using invariant features. IEEE Trans. Pattern Anal. Mach. Intell. 2002, 24, 90–102.

60. Li, P.; Wang, R.; Wang, Y.; Tao, W. Evaluation of the ICP Algorithm in 3D Point Cloud Registration. IEEE Access 2020, 8, 68030–68048.

61. Barnes, P.; Davies, N. BIM in Principle and in Practice; ICE Publishing: London, UK, 2015.

62. Rodríguez-Gonzálvez, P.; Garcia-Gago, J.; Gomez-Lahoz, J.; González-Aguilera, D. Confronting passive and active sensors with non-gaussian statistics. Sensors 2014, 14, 13759–13777.

| | Target Based [14] | Plane Based [15,16] | Image Based [17] | Image Based + Network [18,19,20] | Proposed Method |
|---|---|---|---|---|---|
| Target Need | X | | | | |
| Image Need | X | X | | | |
| Only Pairwise Registration | X | X | | | |
| Minimum Overlap Required | X | X | X | X | X |
| Noise Sensitive | X | X | X | X | X |

| Parameter | Value |
|---|---|
| Mean laser range (m) | 8 |
| Security distance (m) | 0.8 |
| Scanning overlap (%) | 60 |
| Scanning resolution | 4 mm at 20 m |

| | Bias: Median (m) | Dispersion: NMAD (m) | Dispersion: BWMV (m) |
|---|---|---|---|
| Study Case 1 | 0.007 | ±0.007 | ±0.00005 |
| Study Case 2 | 0.008 | ±0.008 | ±0.00007 |
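
The bias and dispersion columns above rely on robust estimators (median, NMAD, BWMV). The formulas are not given in this text, so the following is only a minimal Python sketch using the commonly cited definitions: NMAD as 1.4826 times the median absolute deviation, and the biweight midvariance with a tuning constant of 9. The constant, the function names, and the sample residuals are assumptions for illustration, not values taken from the study.

```python
from statistics import median


def nmad(values):
    """Normalized median absolute deviation: 1.4826 * median(|x - median(x)|)."""
    m = median(values)
    return 1.4826 * median([abs(x - m) for x in values])


def bwmv(values, c=9.0):
    """Biweight midvariance (standard textbook form; c = 9 is an assumed default)."""
    m = median(values)
    mad = median([abs(x - m) for x in values])
    if mad == 0:
        # Degenerate case: at least half of the samples equal the median.
        return 0.0
    num = 0.0
    den = 0.0
    for x in values:
        u = (x - m) / (c * mad)
        if abs(u) < 1:  # samples with |u| >= 1 get zero weight
            num += (x - m) ** 2 * (1 - u * u) ** 4
            den += (1 - u * u) * (1 - 5 * u * u)
    return len(values) * num / den ** 2


# Hypothetical residuals (arbitrary units) with one gross outlier.
residuals = [1.0, 2.0, 3.0, 4.0, 100.0]
print("median:", median(residuals))
print("NMAD:  ", nmad(residuals))
print("BWMV:  ", bwmv(residuals))
```

In this toy sample the outlier at 100 barely moves any of the three estimates, which is why such statistics are often preferred over mean and standard deviation for point-cloud discrepancies that do not follow a Gaussian distribution.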

| Tolerances in Construction Elements | Value |
|---|---|
| Cross Section (D < 30) | +10 / −8 mm |
| Cross Section (30 < D < 100) | +12 / −10 mm |
| Cross Section (100 < D) | +24 / −20 mm |
| Vertical deviation outer edges columns | ±6 mm |
| Wall Thickness < 25 cm | +12 / −10 mm |
| Wall Thickness > 25 cm | +16 / −10 mm |

| Study Case | Element | BIM Width (mm) | As-Built Width (mm) |
|---|---|---|---|
| Study Case 1 | Pillar 1 | 500 | 500 |
| | Pillar 2 | 450 | 450 |
| | Pillar 3 | 350 | 351 |
| | Pillar 5 | 450 | 449 |
| | Pillar 6 | 450 | 450 |
| | Pillar 7 | 350 | 348 |
| | Pillar 8 | 600 | 600 |
| Study Case 2 | Pillar 1 | 600 | 597 |
| | Pillar 2 | 450 | 452 |
| | Pillar 3 | 450 | 451 |
| | Pillar 4 | 450 | 448 |
| | Pillar 5 | 450 | 448 |
| | Pillar 6 | 400 | 398 |
| | Pillar 7 | 500 | 498 |
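
As a rough illustration of how the as-built widths could be checked against the cross-section tolerances listed earlier, here is a minimal Python sketch. Several points are assumptions and not stated in this text: that D is expressed in centimetres, that the cross-section tolerance applies to pillar width, and how the boundary values D = 30 and D = 100 are assigned. The function names are hypothetical.

```python
def cross_section_tolerance_mm(d_cm):
    """Return (plus, minus) tolerance in mm for a cross-section dimension D.

    Assumption: D is in centimetres; boundary values (30, 100) are assigned
    to the upper band because the table does not say where they belong.
    """
    if d_cm < 30:
        return (10, 8)
    if d_cm < 100:
        return (12, 10)
    return (24, 20)


def within_tolerance(bim_mm, as_built_mm):
    """True if the as-built width deviates from the BIM width within tolerance."""
    plus, minus = cross_section_tolerance_mm(bim_mm / 10.0)
    deviation = as_built_mm - bim_mm
    return -minus <= deviation <= plus


# (study case, element, BIM width mm, as-built width mm), copied from the table.
widths = [
    ("Study Case 1", "Pillar 1", 500, 500), ("Study Case 1", "Pillar 2", 450, 450),
    ("Study Case 1", "Pillar 3", 350, 351), ("Study Case 1", "Pillar 5", 450, 449),
    ("Study Case 1", "Pillar 6", 450, 450), ("Study Case 1", "Pillar 7", 350, 348),
    ("Study Case 1", "Pillar 8", 600, 600), ("Study Case 2", "Pillar 1", 600, 597),
    ("Study Case 2", "Pillar 2", 450, 452), ("Study Case 2", "Pillar 3", 450, 451),
    ("Study Case 2", "Pillar 4", 450, 448), ("Study Case 2", "Pillar 5", 450, 448),
    ("Study Case 2", "Pillar 6", 400, 398), ("Study Case 2", "Pillar 7", 500, 498),
]

for case, element, bim, built in widths:
    status = "OK" if within_tolerance(bim, built) else "OUT OF TOLERANCE"
    print(f"{case} {element}: deviation {built - bim:+d} mm -> {status}")
```

Under these assumptions every pillar falls in the 30 < D < 100 band, so each deviation is compared against +12 / −10 mm.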

| Study Case | Element | Bias: Median (mm) | Dispersion: NMAD (mm) | Dispersion: BWMV (mm) |
|---|---|---|---|---|
| Study Case 1 | Pillar 1 | 503 | ±1.5 | ±0.5 |
| | Pillar 2 | 450 | ±4.4 | ±9.6 |
| | Pillar 3 | 352 | ±3.0 | ±9.0 |
| | Pillar 5 | 449 | ±3.0 | ±9.8 |
| | Pillar 6 | 451 | ±1.5 | ±7.6 |
| | Pillar 7 | 348 | ±1.5 | ±3.3 |
| | Pillar 8 | 600 | ±1.5 | ±2.3 |
| Study Case 2 | Pillar 1 | 597 | ±1.5 | ±2.9 |
| | Pillar 2 | 452 | ±1.5 | ±1.6 |
| | Pillar 3 | 451 | ±1.5 | ±1.8 |
| | Pillar 4 | 447 | ±1.5 | ±1.6 |
| | Pillar 5 | 448 | ±2.9 | ±4.4 |
| | Pillar 6 | 398 | ±2.9 | ±7.7 |
| | Pillar 7 | 497 | ±1.5 | ±3.0 |

| Id | BIM Model: Width (mm) | BIM Model: Length (mm) | As Built Model: Width–d1 (mm) | As Built Model: Length–d2 (mm) | Errors: Width (mm) | Errors: Length (mm) |
|---|---|---|---|---|---|---|
| Pillar 3 (Study Case 1) | 350.0 | 500.0 | 350.9 | 490.6 | 0.9 | 0.4 |
| Pillar 9 (Study Case 2) | 360.0 | 500.0 | 360.1 | 500.2 | 0.1 | 0.2 |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Mora, R.; Martín-Jiménez, J.A.; Lagüela, S.; González-Aguilera, D. Automatic Point-Cloud Registration for Quality Control in Building Works. Appl. Sci. 2021, 11, 1465. https://doi.org/10.3390/app11041465
