Design and Implementation of an Autonomous Electric Vehicle for Self-Driving Control under GNSS-Denied Environments

Abstract

1. Introduction

2. Related Studies

2.1. Control

2.2. Vehicle Localization

2.3. Research Contribution

3. Proposed Approach

4. Vehicle State Estimation, Localization, and Sensors

4.1. The Proposed Algorithms for Vehicle State Estimation, Localization, and Sensors

4.2. Visual-Inertial Odometry Algorithm

4.3. Wheel Odometry Algorithm

4.4. Sensor Fusion

5. Control System Module

5.1. Kinematic Bicycle Model

5.2. The NMPC Algorithm

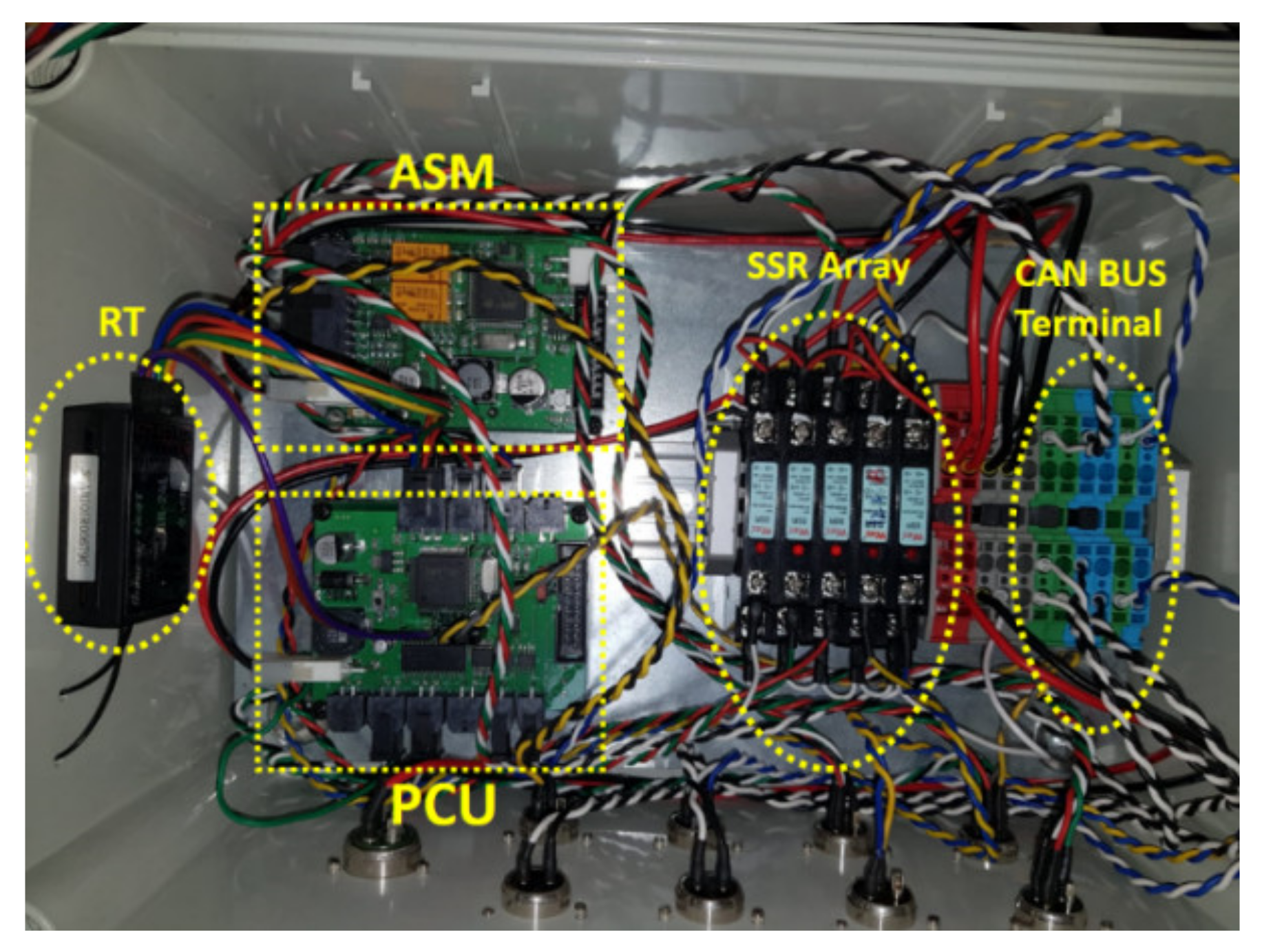

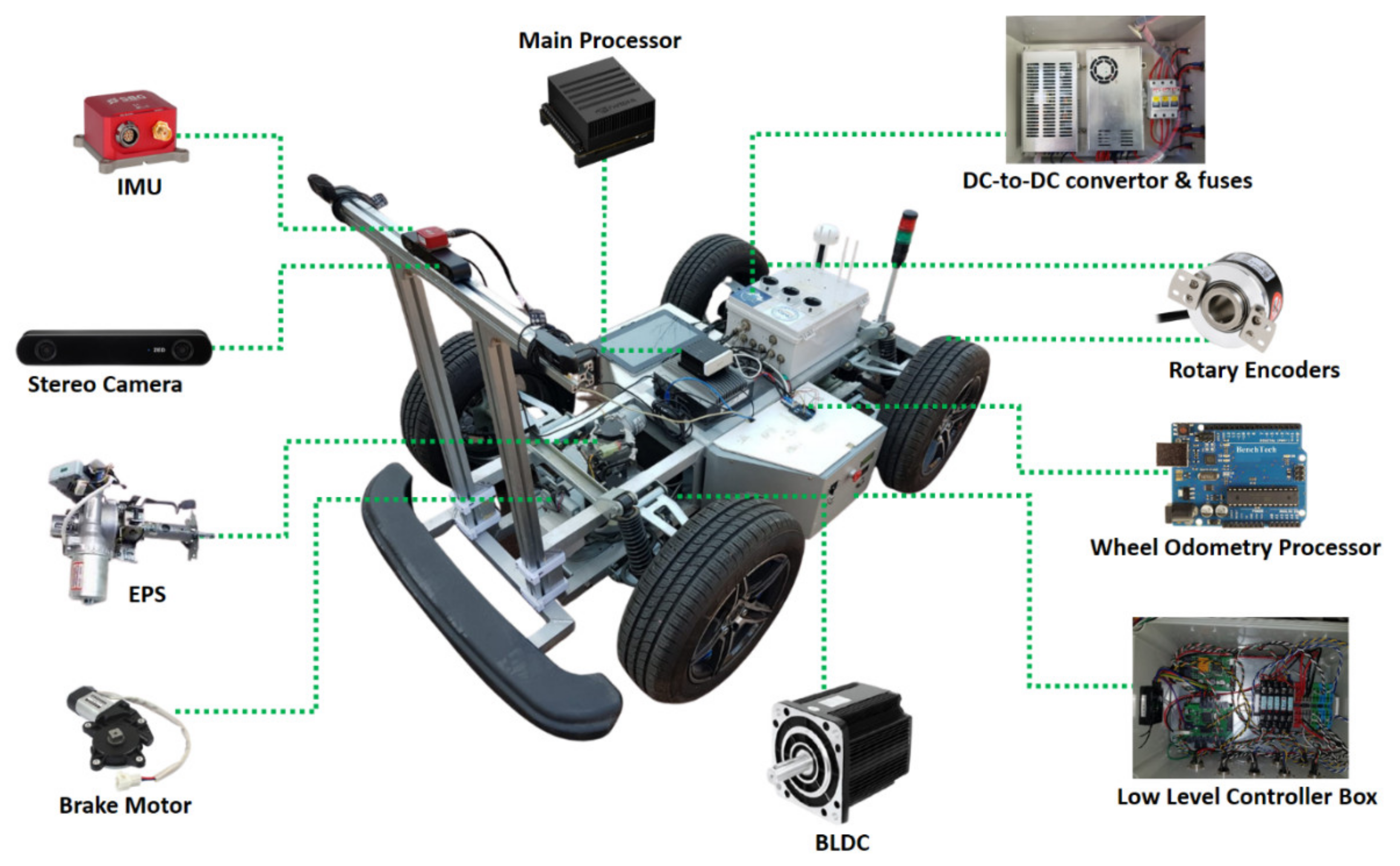

6. Hardware Architecture and Interfaces

7. Simulation and Real-Time Experimental Results from the Employed Localization Algorithm

8. Simulation and Evaluation of the Employed Control Algorithm

9. Real-Time Electric Vehicle Experimental Results

9.1. Trajectory Tracking

9.2. Path Following

10. Conclusions

Author Contributions

Funding

Conflicts of Interest

| Parameter | Quantity |
|---|---|
| Right wheel radius | 0.27 m |
| Left wheel radius | 0.27 m |
| Distance between wheels | 0.975 m |
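The wheel parameters above are the inputs a wheel odometry update needs. The following is a minimal sketch of a standard differential-wheel dead-reckoning step using those values. It is not necessarily the formulation used in Section 4.3. The encoder resolution `TICKS_PER_REV` and the function name `update_pose` are hypothetical and chosen only for illustration.

```python
import math

# Values taken from the wheel parameter table.
RIGHT_WHEEL_RADIUS = 0.27   # m
LEFT_WHEEL_RADIUS = 0.27    # m
WHEEL_SEPARATION = 0.975    # m, distance between wheels

# Hypothetical encoder resolution (not given in the source).
TICKS_PER_REV = 1024


def update_pose(x, y, theta, ticks_left, ticks_right):
    """Advance a planar pose (x, y, theta) by one encoder reading.

    ticks_left / ticks_right are the signed tick increments measured
    since the previous update.
    """
    # Arc length travelled by each wheel.
    d_left = 2.0 * math.pi * LEFT_WHEEL_RADIUS * ticks_left / TICKS_PER_REV
    d_right = 2.0 * math.pi * RIGHT_WHEEL_RADIUS * ticks_right / TICKS_PER_REV

    # Displacement of the axle midpoint and change in heading.
    d_center = 0.5 * (d_left + d_right)
    d_theta = (d_right - d_left) / WHEEL_SEPARATION

    # Midpoint integration: evaluate the heading halfway through the step.
    x += d_center * math.cos(theta + 0.5 * d_theta)
    y += d_center * math.sin(theta + 0.5 * d_theta)

    # Wrap the heading to (-pi, pi].
    theta = math.atan2(math.sin(theta + d_theta), math.cos(theta + d_theta))
    return x, y, theta
```

With equal tick increments on both wheels the pose advances in a straight line by one wheel arc length. Unequal increments rotate the heading in proportion to their difference divided by the wheel separation. Pure wheel odometry drifts over distance, which is why the outline pairs it with visual-inertial odometry and sensor fusion.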

| Hardware Unit | Specifications |
|---|---|
| GPU | 512-core Volta GPU with tensor cores |
| CPU | 8-core ARM v8.2 64-bit CPU, 8 MB L2 + 4 MB L3 |
| Memory | 32 GB 256-bit LPDDR4x, 137 GB/s |

| Accelerometer Parameters | Quantity | Gyroscope Parameters | Quantity |
|---|---|---|---|
| Scale factor stability (%) | 0.1 | Scale factor stability (%) | 0.05 |
| Nonlinearity (% of FS) | 0.2 | Nonlinearity (% of FS) | 0.05 |
| One-year bias stability (mg) | 5 | One-year bias stability (°/s) | 0.2 |
| Velocity random walk (µg/) | 100 (x, y); 150 (z) | Angular random walk (°/) | 0.16 |
| In-run bias instability (µg) | 20 | In-run bias instability (°/hr) | 8 |
| Vibration rectification error (mg/) | 7 | Orthogonality | 0.05 |
| Bandwidth (Hz) | 250 | Bandwidth (Hz) | 133 |
| Sampling rate (kHz) | 3 | Sampling rate (kHz) | 0.05 |

| Parameter | Specifications/Quantity |
|---|---|
| Output resolution | Side by side: 2× (2208 × 1242) @ 15 fps; 2× (1920 × 1080) @ 30 fps; 2× (1280 × 720) @ fps; 2× (640 × 480) @ 100 fps |
| Output format | YUV 4:2:2 |
| Field of view | Max. 110° (D) |
| Baseline | 120 mm |
| Interface | USB 3.0 |
| Sensor type | 1/2.7″ |
| Active array size | 4 M pixels per sensor |
| Focal length | 2.8 mm (0.11″), f/2.0 |
| Shutter | Electronic synchronized rolling shutter |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Barzegar, A.; Doukhi, O.; Lee, D.-J. Design and Implementation of an Autonomous Electric Vehicle for Self-Driving Control under GNSS-Denied Environments. Appl. Sci. 2021, 11, 3688. https://doi.org/10.3390/app11083688
