Abstract

Educational Virtual Modeling (EVM) is a novel VR-based application for sketching and modeling in an immersive environment, designed to introduce freshman engineering students to modeling concepts and reinforce their understanding of the spatial connection between an object and its 2D projections. It was built on the Unity 3D game engine and Microsoft’s Mixed Reality Toolkit (MRTK). EVM was designed to support the creation of the typical parts used in exercises in basic engineering graphics courses, with a special emphasis on a fast learning curve and a simple way to provide exercises and tutorials to students. To analyze the feasibility of using EVM for this purpose, a user study was conducted with 23 freshman and sophomore engineering students who used both EVM and Trimble SketchUp to model six parts using an axonometric view as the input. The students had no previous experience with either system. Each participant went through a brief training session and was allowed to use each tool freely for 20 min. At the end of the modeling exercises with each system, the participants rated its usability by answering the System Usability Scale (SUS) questionnaire. Additionally, they filled out a questionnaire to assess the system functionality. The results demonstrated a very high SUS score for EVM (M = 92.93, SD = 6.15), whereas Trimble SketchUp obtained a mean score of only 76.30 (SD = 6.69). The completion time for the modeling tasks with EVM showed its suitability for regular class use, even though exercises usually take longer to complete in EVM than in Trimble SketchUp. There were no statistically significant differences regarding the functionality assessment. At the end of the experimental session, participants were asked to express their opinion about the systems and provide suggestions for the improvement of EVM. All participants showed a preference for EVM as a potential tool to perform exercises in the engineering graphics course.

1. Introduction and Related Work

The application of Virtual Reality (VR) technology to 3D modeling was one of the first uses to which VR pioneers put the primitive hardware that was available in the 1990s. 3DM [1] was one of the first systems with the capability of creating 3D geometry. The system was implemented with a VPL EyePhone head-mounted display (HMD) with a 442 × 238 point LCD screen, a field of view of 108° × 76°, and a price of around $9000 [2]. The resolution was so low that the text labels on the buttons were very difficult to read. The 3DM system was able to create 3D geometry by the direct construction of triangular facets, the extrusion of polylines, and the creation of a set of basic 3D primitives. Due to the severe limitation on the resolution of the screens in the available commercial HMDs, other researchers opted for desktop VR solutions such as HoloSketch [3]. This early system used a regular high-resolution monitor (960 × 680, 20 in screen) coupled with stereo shutter glasses to provide stereo vision at a 56.45 Hz refresh rate. It supported a set of primitive 3D objects, free-form tubes, 3D isolated line segments, and polyline wires, among others. The system was a wireframe modeler without any surface or solid representations.

The hardware capabilities, both in terms of processing and visualization, were the limiting factors in the systems developed during these years. Fortunately, the development of low-cost HMDs that began with the DK1 and DK2 Oculus Rift development kits [4] in 2013 and 2014 fueled the development of novel systems that leveraged the affordability of the new generation of HMDs.

The creation of precise geometry in a VR environment has followed two main routes [5]: create a VR front-end to interact with a commercial CAD system or provide 3D modeling functionality using a geometric kernel such as Open Cascade [6] or ACIS [7]. An example of the first approach (linking a VR front-end with a CAD system) is cRea-VR [8], where a middleware architecture was developed to interact with Dassault Systèmes’ CATIA V5 in VR [9]. Another prototype system [10] interfaced a CAD system (Autodesk Fusion 360 [11]) with a game engine (Autodesk Stingray [12]) with limited modeling capabilities that supported the creation of solid prisms and spheres, snapping them to a grid, and performing cuts of similar shapes.

Instead of controlling a host CAD system to create 3D geometry, an alternative research line focuses on supporting 3D sketching for VR. For example, Multiplanes [13] provides a VR-based 3D sketching environment that supports 3D freehand drawing and automatically beautifies strokes to compensate for the difficulty of drawing in 3D. Similarly, Smart3DGuides [14] automatically generates visual guidance by analyzing the user’s gaze, controller position and orientation, and previous strokes in the VR environment, to increase the overall quality of the drawn shapes. To help users create sketches with higher precision, VRSketchIn [15] combines a 6DoF-tracked pen and a 6DoF-tracked tablet as the input devices, combining unconstrained 3D mid-air sketching with constrained 2D-surface-based sketching.

Other researchers have used commercial VR applications to study the reaction of users when they have to perform sketching and modeling tasks in the virtual environment. The commercial success of some HMDs has created a market for this type of system. The most widely recognized applications include:

- Gravity Sketch [16];

- Tilt Brush [17];

- Master Piece [18];

- Kodon [19];

- ShapeLab [20];

- Adobe Medium [21];

- flyingshapes [22].

The results are contradictory. For example, a user study [23] with Gravity Sketch and Kodon concluded that these VR applications do not provide significant advances compared to conventional 2D sketching tools. This study also reports that the use of these VR applications produced noticeably more physical fatigue than traditional 2D sketching. Another study [24] compared Gravity Sketch versus paper sketching and offered a positive view by considering VR sketching as a unique form of visual representation that facilitates the rapid and flexible creation of 3D models, engaging users in visual thinking and visual communication activities in ways that cannot be reached with any other tool.

An experiment with Tilt Brush [25] compared paper sketching to VR sketching, with and without a physical surface to rest the stylus. The study concluded that the absence of a physical drawing surface is a major source of inaccuracies in VR sketching. A second experiment analyzed the use of visual guidance to help with the sketching process and improve precision. The authors reported that visual guidance increases positional accuracy, but produces strokes of lesser aesthetic quality.

Another study using Gravity Sketch [26] found that VR modeling is natural and fast and provides better spatial perception for users. However, VR modeling is less precise than desktop tools. The study also found that current VR tools do not provide suitable functionality for creating technical objects, as they are oriented toward the creation of free-form organic shapes.

Context and Objectives

Some of the main learning outcomes of a typical freshman engineering graphics course are [27,28] 2D and 3D visualization, mapping between 2D and 3D, planar graphical elements, sectional views, methodologies for object representation, projection theory, parallel projection methodologies, drawing conventions, dimensioning, and solid modeling. Unfortunately, many of these topics do not appeal to engineering students [29]. The introduction of new technologies such as augmented reality [30,31,32], gamification with mobile devices [33], and computer-aided sketching [34] in the area of engineering graphics has been reported to have a positive impact on student motivation. Considering that in the near future low-cost VR headsets will open a real possibility to add this technology to regular teaching/learning practices in this field, a review of commercially available software [16,17,18,19,20,21,22] was conducted to assess its suitability for the type of exercises and topics covered in first-year engineering graphics courses. This review concluded that the learning curve of these systems, their major focus on free-form surfaces, and the difficulty of delivering exercises and tutorials in an integrated manner made them unsuitable for meeting the learning outcomes described previously, leading to the development of the Educational Virtual Modeling (EVM) system described in this paper.

EVM was developed to explore the feasibility of using a simple modeling application to promote students’ motivation and support the learning of key topics in the engineering graphics discipline such as modeling and mapping between 2D and 3D.

The option of designing a VR front-end to interact with a commercial CAD system was discarded due to its complexity. Building a VR modeling application on a game engine platform was considered the best alternative. This approach would allow exploring the best way to provide exercises and tutorials to students inside the virtual space and the creation of the classic parts used as exercises in basic engineering graphics courses. These parts are mainly composed of prismatic and cylindrical elements.

In the next sections, the system components will be described in detail to facilitate the understanding of EVM’s functionality. Next, the results of a user study with 23 participants are presented to analyze if the design objectives were achieved. Trimble SketchUp [35], a desktop modeling system known for its ease of use, was used as a reference. The paper ends with the discussion of the results and the conclusions, limitations of the study, and future work planned.

2. System Description

EVM was developed in C# on the Unity platform Version 2019.2.16f1 [36] and Microsoft’s Mixed Reality Toolkit (MRTK) [37], a set of cross-platform tools for the development of mixed reality experiences.

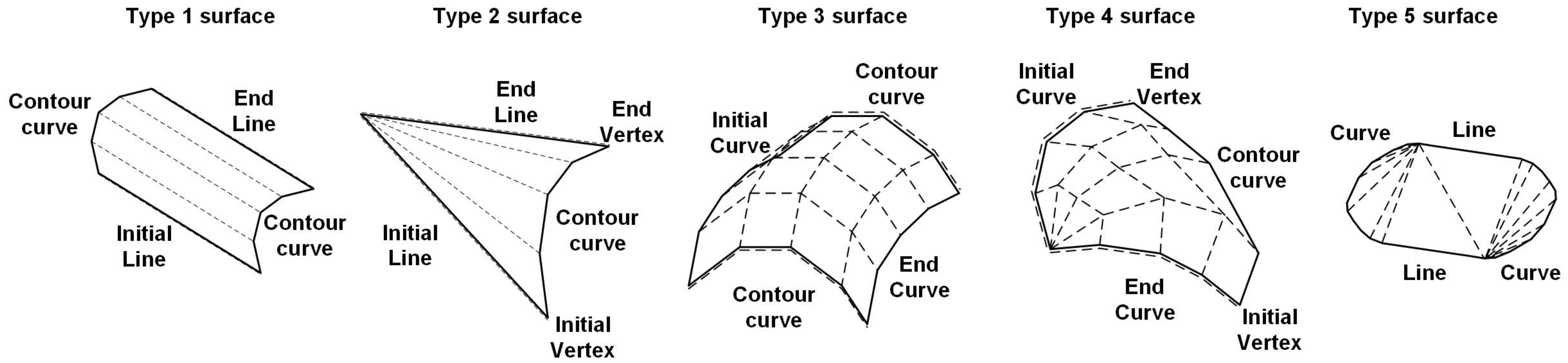

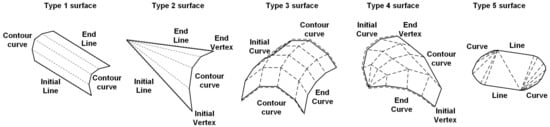

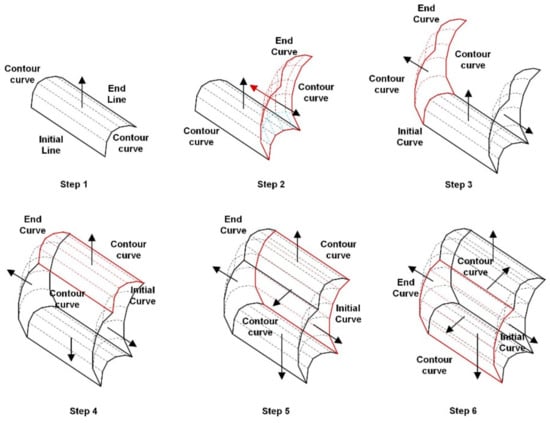

EVM works on a custom database in which all entities can be reduced in their simplest form to a line. For example, a curve is given as a set of consecutive lines, whereas a surface is defined by a contour formed by lines and curves and a set of mesh objects responsible for filling and shading its interior. Figure 1 shows all the surfaces that can be generated with EVM:

Figure 1.

Types of surfaces supported by EVM.

- Type 1: when the user drags a line (initial line) along a path (contour curve);

- Type 2: when the user drags the end of a line (initial vertex) along a path (contour curve) while the other end of the line remains fixed in space;

- Type 3: when the user drags a curve (initial curve) along a path (contour curve);

- Type 4: when the user drags the end of a curve (initial vertex) along a path (contour curve) while the other end of the curve remains fixed in space;

- Type 5: by triangulation when the user requests to form a surface from a closed contour. For this type of surface, an ear-trimming algorithm is used [38].
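The triangulation of a closed contour (Type 5) can be illustrated with a minimal ear-clipping sketch. This is not EVM's implementation: it assumes the coplanar contour has already been expressed as 2D coordinates in its own plane, that the polygon is simple (no self-intersections), and the function names `ear_clip`, `cross`, and `point_in_triangle` are hypothetical.

```python
from typing import List, Tuple

Point = Tuple[float, float]


def cross(o: Point, a: Point, b: Point) -> float:
    """Z component of (a - o) x (b - o); positive for a left turn."""
    return (a[0] - o[0]) * (b[1] - o[1]) - (a[1] - o[1]) * (b[0] - o[0])


def point_in_triangle(p: Point, a: Point, b: Point, c: Point) -> bool:
    """True if p lies inside or on the border of the CCW triangle abc."""
    return cross(a, b, p) >= 0 and cross(b, c, p) >= 0 and cross(c, a, p) >= 0


def ear_clip(polygon: List[Point]) -> List[Tuple[Point, Point, Point]]:
    """Split a simple polygon into len(polygon) - 2 triangles."""
    pts = list(polygon)
    # Shoelace formula: a negative signed area means clockwise input.
    signed = sum(pts[i - 1][0] * pts[i][1] - pts[i][0] * pts[i - 1][1]
                 for i in range(len(pts)))
    if signed < 0:
        pts.reverse()
    idx = list(range(len(pts)))
    triangles = []
    while len(idx) > 3:
        for k in range(len(idx)):
            i, j, l = idx[k - 1], idx[k], idx[(k + 1) % len(idx)]
            a, b, c = pts[i], pts[j], pts[l]
            if cross(a, b, c) <= 0:
                continue  # reflex or flat corner, cannot be an ear
            if any(point_in_triangle(pts[m], a, b, c)
                   for m in idx if m not in (i, j, l)):
                continue  # another contour vertex blocks this ear
            triangles.append((a, b, c))
            del idx[k]  # clip the ear and rescan the remaining contour
            break
        else:
            raise ValueError("no ear found: polygon is probably not simple")
    triangles.append((pts[idx[0]], pts[idx[1]], pts[idx[2]]))
    return triangles


# Hypothetical L-shaped contour with area 3.
l_shape = [(0, 0), (2, 0), (2, 1), (1, 1), (1, 2), (0, 2)]
l_triangles = ear_clip(l_shape)
```

A contour with n vertices always yields n - 2 triangles, so the L-shaped example above produces four.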

For each mesh, a normal vector must be defined to identify the exterior face of the polygon. All this information is necessary to manipulate the model and apply shading and lighting techniques, among other things. In the next section, we discuss the algorithm used to orient the normal vectors to the mesh.

2.1. Orientation of Normal Vectors to the Mesh

When the first surface is created in the virtual environment, all the normal vectors in its mesh are oriented in the same direction. The generation of subsequent surfaces triggers a method that reorients the direction of the normal vectors (if necessary) of all meshes of the current surfaces. The goal is to keep all normal vectors pointing toward the outside of a possible solid object.

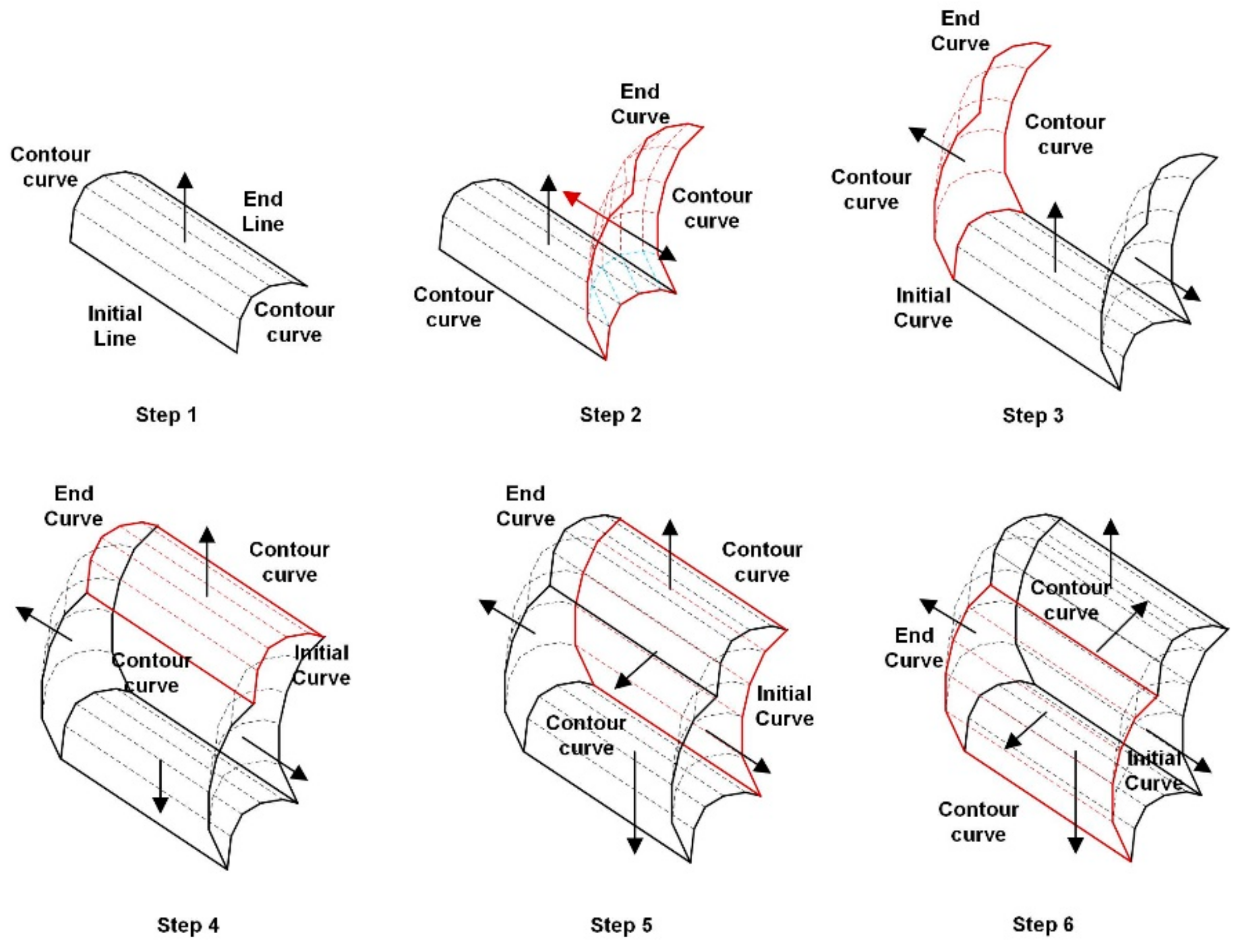

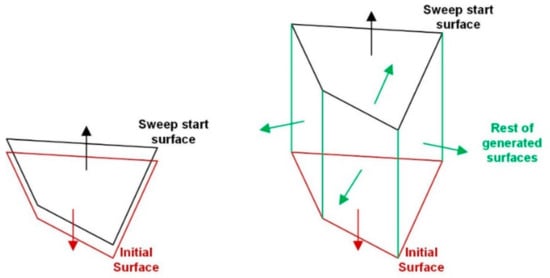

The algorithm implemented by this function checks each mesh on the surfaces in the scene. For each triangle defined by the vertices of the mesh, a line is cast from the center of gravity of the triangle in the direction of its normal vector, and the number of intersections between this line and the meshes of the other surfaces is counted. If the total number of intersection points is odd for any mesh of the surface, the normal vectors of all the meshes in that surface are reversed. An example of how the algorithm works is shown in the process illustrated in Figure 2.

Figure 2.

Structure of the surfaces represented in the scene.
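The parity test described in this section can be sketched as follows. This is an illustrative reading of the text, not the authors' code: each surface is assumed to be a list of triangles whose vertex order encodes the normal, the ray and triangle test is the standard Möller–Trumbore method (our choice), and all names are hypothetical. Rays that graze an edge or vertex are not handled specially.

```python
from typing import List, Tuple

Vec = Tuple[float, float, float]
Triangle = Tuple[Vec, Vec, Vec]


def sub(a: Vec, b: Vec) -> Vec:
    return (a[0] - b[0], a[1] - b[1], a[2] - b[2])


def dot(a: Vec, b: Vec) -> float:
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]


def cross3(a: Vec, b: Vec) -> Vec:
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])


def normal(tri: Triangle) -> Vec:
    a, b, c = tri
    return cross3(sub(b, a), sub(c, a))


def centroid(tri: Triangle) -> Vec:
    a, b, c = tri
    return tuple((a[i] + b[i] + c[i]) / 3.0 for i in range(3))


def ray_hits(origin: Vec, direction: Vec, tri: Triangle, eps: float = 1e-9) -> bool:
    """Moller-Trumbore test: does the ray hit tri strictly in front of origin?"""
    a, b, c = tri
    e1, e2 = sub(b, a), sub(c, a)
    p = cross3(direction, e2)
    det = dot(e1, p)
    if abs(det) < eps:
        return False  # ray is parallel to the triangle plane
    s = sub(origin, a)
    u = dot(s, p) / det
    q = cross3(s, e1)
    v = dot(direction, q) / det
    t = dot(e2, q) / det
    return u >= 0 and v >= 0 and u + v <= 1 and t > eps


def orient_outward(surfaces: List[List[Triangle]]) -> List[List[Triangle]]:
    """Reverse every surface whose normal ray crosses the others an odd number of times."""
    oriented = []
    for s_idx, surface in enumerate(surfaces):
        others = [t for o_idx, other in enumerate(surfaces)
                  if o_idx != s_idx for t in other]
        flip = any(
            sum(ray_hits(centroid(tri), normal(tri), t) for t in others) % 2 == 1
            for tri in surface)
        # Swapping two vertices reverses the normal of a triangle.
        oriented.append([(a, c, b) for a, b, c in surface] if flip else list(surface))
    return oriented


# Hypothetical tetrahedron: the first face is deliberately wound inward.
O, A, B, C = (0.0, 0.0, 0.0), (1.0, 0.0, 0.0), (0.0, 1.0, 0.0), (0.0, 0.0, 1.0)
faces = [[(O, A, B)], [(O, C, B)], [(O, A, C)], [(A, B, C)]]
fixed = orient_outward(faces)
```

In the example only the first face is reversed, because its normal ray crosses the slanted face once, while the rays of the other three faces leave the solid without crossing anything.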

The sweeping operation can also be performed on surfaces generated in a previous step. In this case, a new surface is produced directly, which is a copy of the original and with the normal vectors in opposite directions. The normal vectors on the first surface are assigned the direction that is opposite the motion of the controller, and those on the second surface are assigned the direction of motion of the controller. Likewise, a new surface is generated for each entity that defines the contour of the surface being swept. Once again, the normal vectors will point toward the outside of the enclosed volume, as shown in Figure 3.

Figure 3.

Solid generated by extrusion of a surface.

2.2. User Workspace

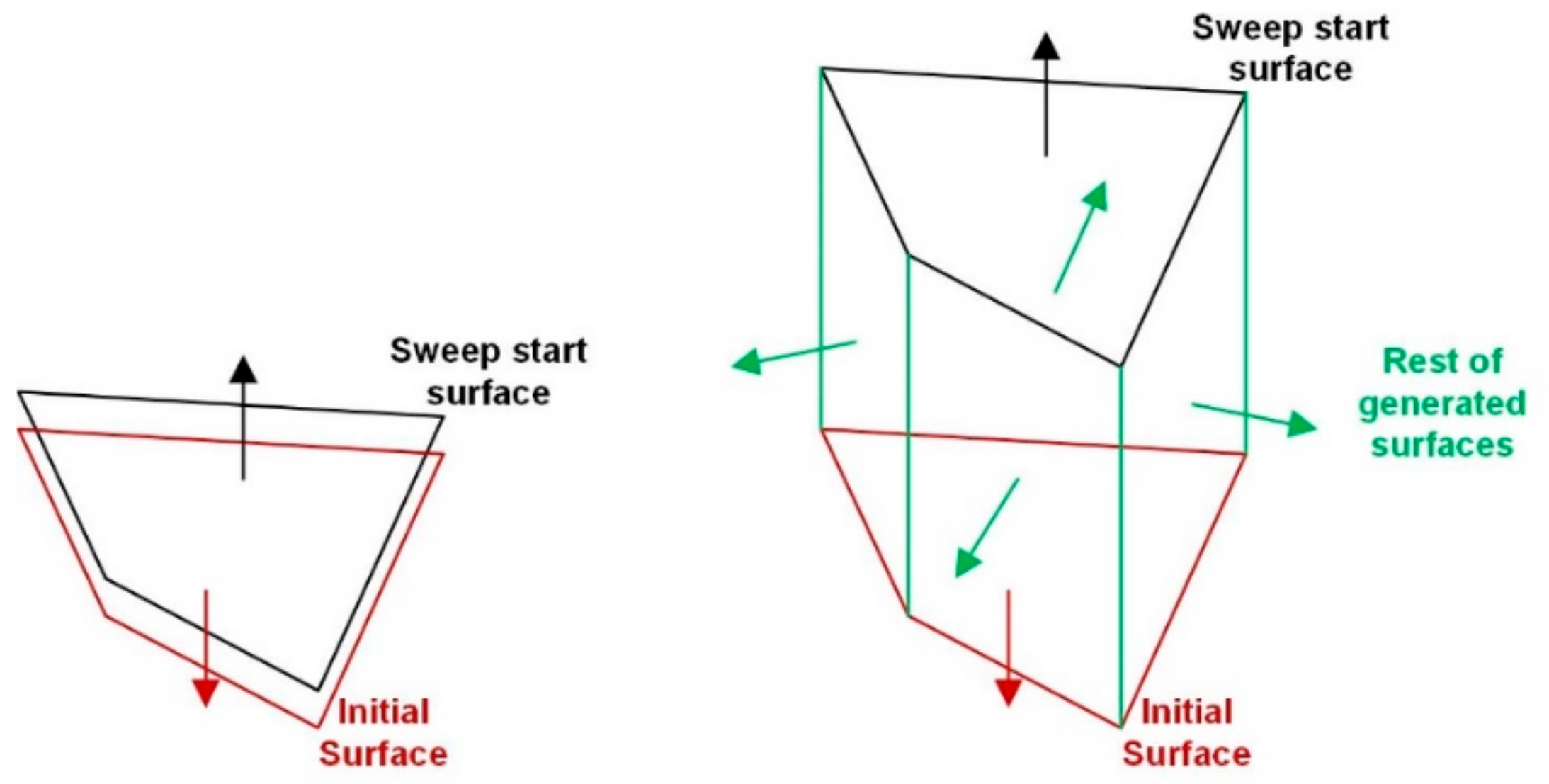

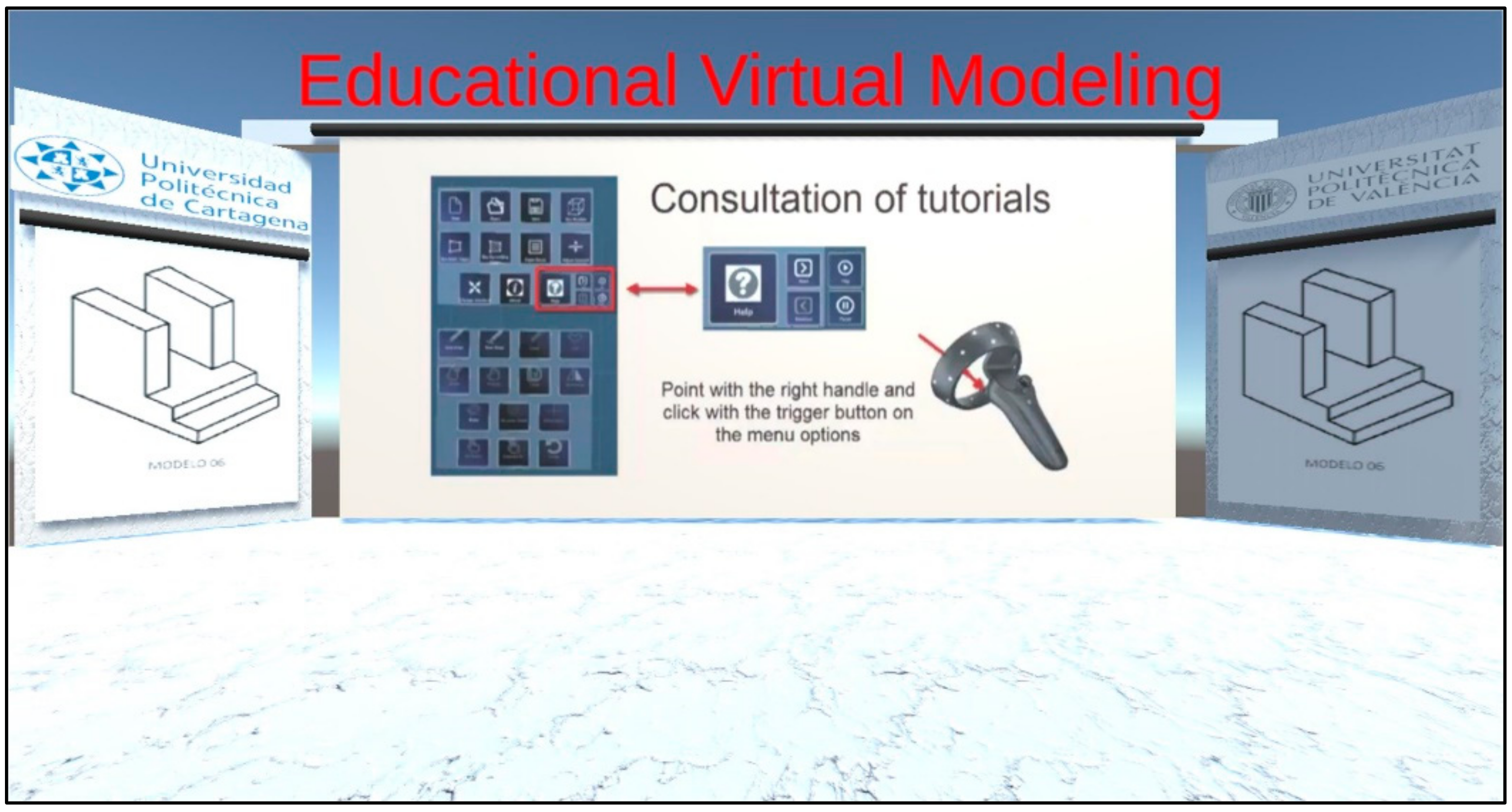

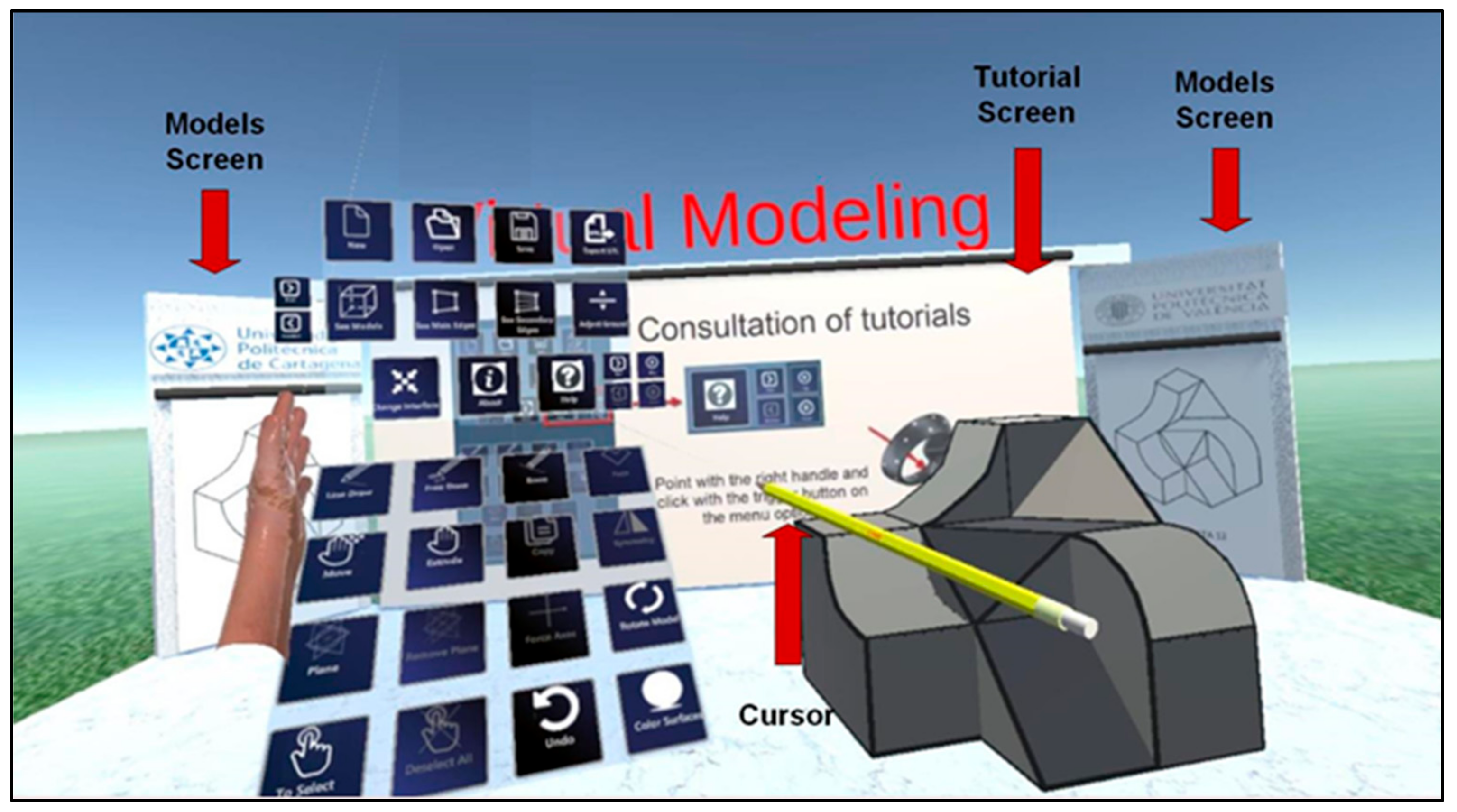

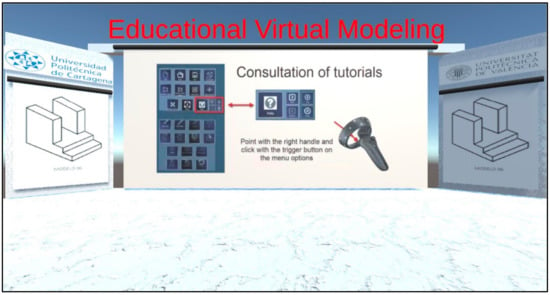

Considering that a main objective of EVM was to provide a simple mechanism for delivering exercises and tutorials to the students in the virtual space, a work area equipped with a main screen for visualizing multimedia tutorial videos and two lateral walls to display the exercises was implemented (model screens in Figure 4).

Figure 4.

User workspace in the virtual environment.

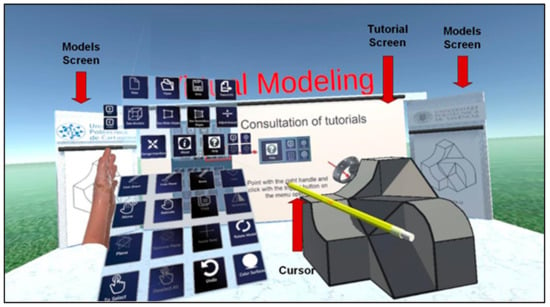

Regarding the user interface, we tested several menu configurations and finally decided to implement a classical panel menu, considering our testing results and recent publications [39,40]. The panel menu is attached to a virtual hand that is linked to the non-dominant hand controller. Depending on the mode of operation, the dominant hand controller can show a pencil (Figure 5 shows an example) for geometry creation and selection; a pencil rotated 180° (with the eraser pointing forward), which indicates the use of the erase command; or a virtual hand that is symmetrical to the one shown for the non-dominant hand controller, which indicates the use of a different command. A ray-casting technique is used for the selection.

Figure 5.

User workspace with the panel menu.

Given the educational nature of the application, it is important to highlight the integrated exercise and tutorial functionality. EVM can display exercises stored as image files. The images must be uploaded to a specific directory so that they can be browsed using the forward and backward icons from the panel menu. The lateral walls that show them can be hidden by using the icon described in Table 1. The display of tutorials is also one of the strengths of this application. Rather than consulting manuals or information in any other medium, the user can watch video tutorials on the central wall. For this purpose, a total of four tutorial videos with a duration of around 9 min were recorded. The user can watch these videos by clicking the play, pause, next, and previous icons that appear when selecting the help icon shown in Table 1.

Table 1.

Commands to manage tutorials and lateral walls.

2.3. Commands

The panel menu presents a set of icons organized into four groups: commands to create geometry, commands to edit geometry, modeling aids, and commands to manage files, visualization, and settings.

The commands available to create geometry in the virtual environment are shown in Table 2. During the execution of any command, surfaces, endpoints, and midpoints are detected automatically on any line or curve in the virtual space. These points can be selected as start or end points for the command.

Table 2.

Available commands to create geometry.

The extrude command allows users to sweep previously drawn lines or curves. The user can click and drag an existing entity or perform the action on the initial or final vertices, generating some of the surface types described at the beginning of Section 2. Additionally, as described in Figure 3, it is possible to sweep surfaces to create volumes. The path followed by the geometry during the sweeping operation follows the trajectory of the dominant hand controller. The commands face, copy, and symmetry are used on a set of pre-selected entities. For the face command, the selected entities must be coplanar and define a closed contour.
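As a rough illustration of the sweeping operation, the sketch below drags a line along a sampled controller trajectory and emits two triangles per step (the Type 1 surface of Section 2). It assumes the controller path is available as a list of sampled positions and that the line is translated rigidly; the function `sweep_line` and its parameters are hypothetical and do not reflect EVM's internal API.

```python
from typing import List, Tuple

Vec = Tuple[float, float, float]
Triangle = Tuple[Vec, Vec, Vec]


def add(a: Vec, b: Vec) -> Vec:
    return (a[0] + b[0], a[1] + b[1], a[2] + b[2])


def sub(a: Vec, b: Vec) -> Vec:
    return (a[0] - b[0], a[1] - b[1], a[2] - b[2])


def sweep_line(p0: Vec, p1: Vec, path: List[Vec]) -> List[Triangle]:
    """Translate the line p0-p1 along path and mesh the swept strip.

    path[0] is the controller position when the drag starts; every later
    sample adds one quad, split into two triangles.
    """
    triangles: List[Triangle] = []
    start = path[0]
    prev = (p0, p1)
    for position in path[1:]:
        offset = sub(position, start)
        cur = (add(p0, offset), add(p1, offset))
        triangles.append((prev[0], prev[1], cur[1]))
        triangles.append((prev[0], cur[1], cur[0]))
        prev = cur
    return triangles


# Hypothetical drag: a unit line along X pulled two steps up the Z axis.
strip = sweep_line((0, 0, 0), (1, 0, 0), [(0, 0, 0), (0, 0, 1), (0, 0, 2)])
```

Dragging only one end of the line (Type 2) would follow the same pattern with `p0` kept fixed, which degenerates each quad into a single triangle.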

The commands available to edit the existing geometry in the virtual environment are shown in Table 3. Moving the location of an entity implies that any geometry that the entity belongs to must be modified. In fact, if a vertex changes its location, any line, curve, or surface that connects to that point will readjust automatically to the new location of the vertex. If a line or curve is moved, then any surface that contains the line will readjust to the new location of the line or curve.

Table 3.

Available commands to edit geometry.
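One common way to obtain the automatic readjustment described above is to let lines store references to shared vertices instead of copies of their coordinates. The sketch below illustrates that idea only; the class `Model` and its methods are hypothetical, and the structure of EVM's actual database is not documented here.

```python
from typing import List, Tuple

Vec = Tuple[float, float, float]


class Model:
    """Toy entity store in which lines refer to vertices by index."""

    def __init__(self) -> None:
        self.vertices: List[Vec] = []
        self.lines: List[Tuple[int, int]] = []

    def add_vertex(self, position: Vec) -> int:
        self.vertices.append(position)
        return len(self.vertices) - 1

    def add_line(self, start: int, end: int) -> int:
        self.lines.append((start, end))
        return len(self.lines) - 1

    def move_vertex(self, index: int, position: Vec) -> None:
        # Only the shared vertex changes; lines pick it up on the next read.
        self.vertices[index] = position

    def line_points(self, line: int) -> Tuple[Vec, Vec]:
        start, end = self.lines[line]
        return self.vertices[start], self.vertices[end]


# Two lines meeting at a corner; moving the corner updates both.
model = Model()
a = model.add_vertex((0.0, 0.0, 0.0))
corner = model.add_vertex((1.0, 0.0, 0.0))
b = model.add_vertex((1.0, 1.0, 0.0))
first = model.add_line(a, corner)
second = model.add_line(corner, b)
model.move_vertex(corner, (2.0, 0.0, 0.0))
```

Surfaces could reference lines in the same way, so that a moved line is reflected in every surface whose contour contains it.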

To facilitate the creation of specific geometric shapes, we implemented a series of modeling aids in the proposed system, as shown in Table 4. The “plane” command is used to create work planes. Once a plane is defined, all commands for creating geometry will be executed with respect to this plane. The “delete plane” command deletes any previously created plane. The “force axes” command can only be activated when there is an existing plane in the scene. The command forces all geometry creation and editing operations to be performed along the main axes that define the plane. Selecting geometry involves clicking on it. Selecting an entity that has previously been selected will deselect it. The “deselect” command will clear any selections in the scene. Finally, the “undo” command reverts the last change made to the model, restoring its previous state.

Table 4.

Modeling aids.
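One plausible way to realize the “force axes” behavior is to project the controller displacement onto whichever main axis of the work plane it follows most closely. The sketch below is an assumption about how such a constraint could work, not a description of EVM's code; `snap_to_axis` and its parameters are hypothetical, and the two axes are assumed to be orthogonal unit vectors.

```python
from typing import Tuple

Vec = Tuple[float, float, float]


def dot(a: Vec, b: Vec) -> float:
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]


def scale(a: Vec, k: float) -> Vec:
    return (a[0] * k, a[1] * k, a[2] * k)


def snap_to_axis(displacement: Vec, axis_u: Vec, axis_v: Vec) -> Vec:
    """Keep only the component of displacement along the dominant plane axis."""
    along_u = dot(displacement, axis_u)
    along_v = dot(displacement, axis_v)
    if abs(along_u) >= abs(along_v):
        return scale(axis_u, along_u)
    return scale(axis_v, along_v)


# Hypothetical work plane spanned by the X and Y axes.
mostly_x = snap_to_axis((0.9, 0.2, 0.1), (1.0, 0.0, 0.0), (0.0, 1.0, 0.0))
mostly_y = snap_to_axis((0.1, -0.5, 0.3), (1.0, 0.0, 0.0), (0.0, 1.0, 0.0))
```

Any movement of the hand perpendicular to the plane is discarded, which keeps the new geometry on the work plane.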

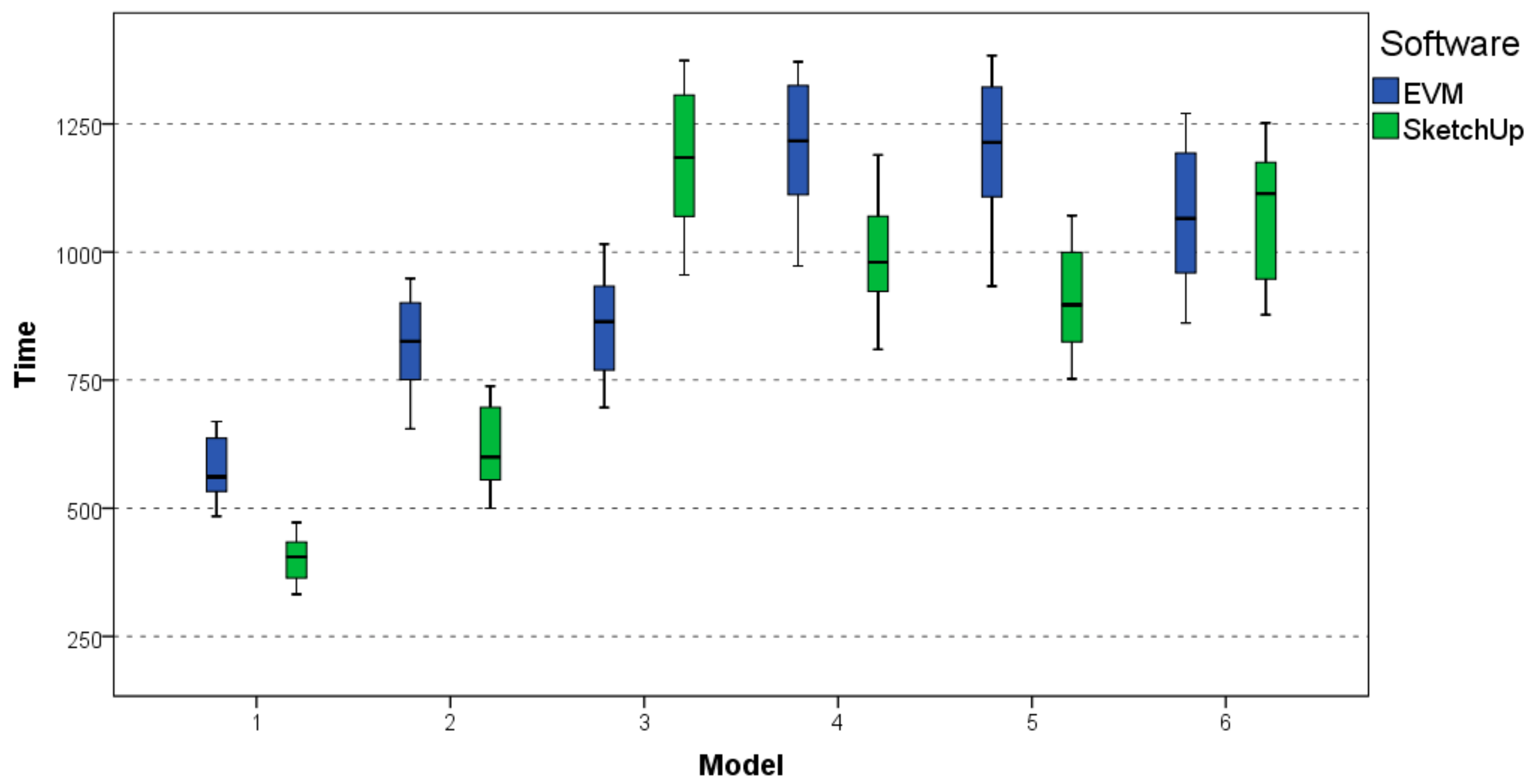

The commands available to manage files, visualization, and settings in the virtual environment are shown in Table 5. These commands enable the creation of new files, as well as opening and saving a 3D model. We also included the option to export the model to STL format, so that the model can be viewed in or printed from other applications. A successful export also helps confirm the validity of the geometric information created by our modeling tool and stored in our database.

Table 5.

Commands to manage files, visualization, and settings.
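For readers unfamiliar with STL, the sketch below writes a list of triangles in the ASCII variant of the format, in which each facet carries a unit normal and three vertices. It is a generic illustration rather than EVM's exporter; the function names and the default solid name `part` are hypothetical.

```python
import math
from typing import List, Tuple

Vec = Tuple[float, float, float]
Triangle = Tuple[Vec, Vec, Vec]


def unit_normal(tri: Triangle) -> Vec:
    """Right-hand-rule normal of the triangle, or zeros if it is degenerate."""
    a, b, c = tri
    u = (b[0] - a[0], b[1] - a[1], b[2] - a[2])
    v = (c[0] - a[0], c[1] - a[1], c[2] - a[2])
    n = (u[1] * v[2] - u[2] * v[1],
         u[2] * v[0] - u[0] * v[2],
         u[0] * v[1] - u[1] * v[0])
    length = math.sqrt(n[0] ** 2 + n[1] ** 2 + n[2] ** 2)
    if length == 0:
        return (0.0, 0.0, 0.0)
    return (n[0] / length, n[1] / length, n[2] / length)


def to_ascii_stl(triangles: List[Triangle], name: str = "part") -> str:
    """Serialize triangles as an ASCII STL document."""
    lines = [f"solid {name}"]
    for tri in triangles:
        n = unit_normal(tri)
        lines.append(f"  facet normal {n[0]:e} {n[1]:e} {n[2]:e}")
        lines.append("    outer loop")
        for vertex in tri:
            lines.append(f"      vertex {vertex[0]:e} {vertex[1]:e} {vertex[2]:e}")
        lines.append("    endloop")
        lines.append("  endfacet")
    lines.append(f"endsolid {name}")
    return "\n".join(lines) + "\n"


# A single triangle in the XY plane, facing +Z.
stl_text = to_ascii_stl([((0, 0, 0), (1, 0, 0), (0, 1, 0))])
```

Because STL consumers rely on the facet normals and vertex order to tell inside from outside, a consistent outward orientation of the meshes matters for a usable export.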

The commands “show main edges” and “show secondary edges” allow users to show/hide the edges that define the contour or the mesh of a surface, respectively. A 3D model with secondary edges visible, a model with some colored surfaces (using the color surfaces command), and a model visualized after being exported to STL are shown in Figure 6.

Figure 6.

Model with secondary edges visible (left), surface coloring (middle), and exporting a model to STL (right).

3. User Study

To determine if EVM provides a simple modeling mechanism to support the creation of typical parts used in basic engineering graphics courses, a user study was conducted with 23 participants. We used Trimble SketchUp as a reference to compare the performance of EVM. SketchUp is a desktop CAD system used in many introductory engineering and architecture graphics courses. It is well known for its simplicity and ease of use. SketchUp follows a modeling paradigm similar to that of EVM: 3D models are created by defining 2D contours that can be swept to generate volumes. These volumes can then be modified by manipulating their vertices, edges, and faces.

3.1. Participants

Twenty-three first- and second-year industrial engineering students (five females and eighteen males) between 18 and 21 years of age and with no previous experience with either of the systems tested were selected to participate in our study.

3.2. Materials and Instruments

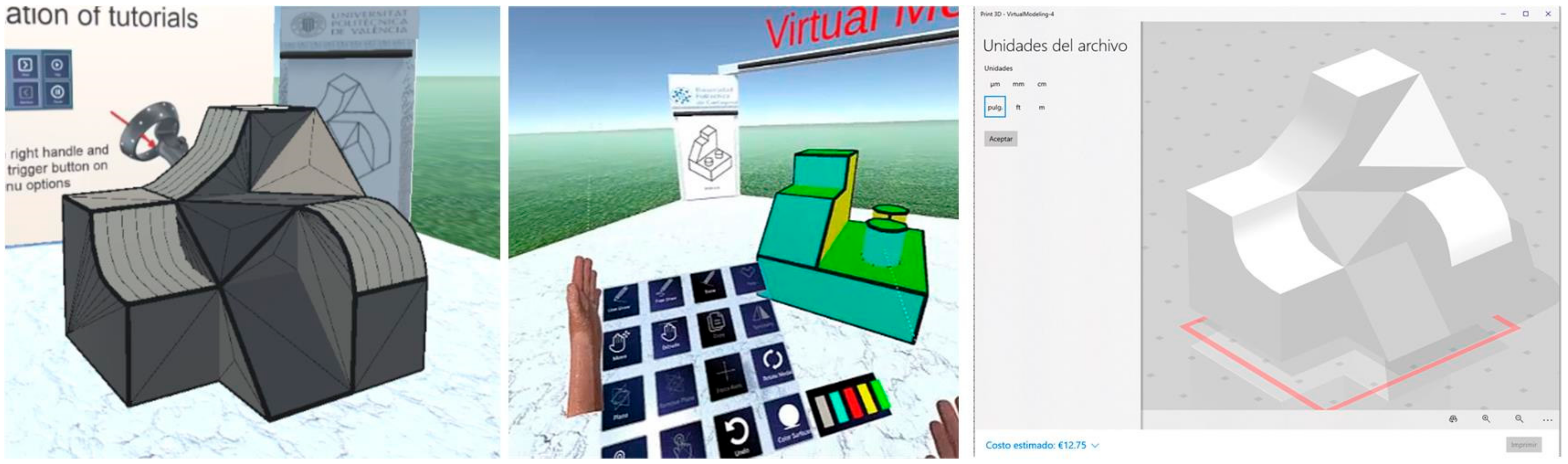

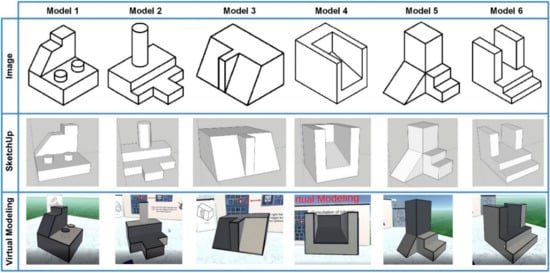

Both EVM and Trimble SketchUp ran on an HP computer with an Intel Core i7 2.2 GHz processor, 8 GB of RAM, and a 64-bit Windows operating system. For the virtual reality application, we used a Lenovo Explorer HMD with a 1440 × 1440 resolution per eye and a 90 Hz refresh rate, together with two motion controllers. The study was set up in a room with an available space of 4 m². The participants had to perform six modeling tasks, using the axonometric drawings shown in Figure 7 as the input. The sequence of modeling tasks was generated randomly for each participant. The parts were not dimensioned, as the subjects were asked to model 3D objects with proportions matching those observed in the axonometric perspectives.

Figure 7.

Set of models used in our experiment.

The System Usability Scale (SUS) [41] questionnaire (presented in Table 6) was used for the evaluation of perceived usability. Although published in 1996, it is still widely used in many user studies [42], especially in the areas of 3D modeling and VR [15,43,44,45]. The instrument uses 5-point Likert scales (1 indicates that the user strongly disagrees with the statement and 5 that he/she strongly agrees).

Table 6.

System Usability Scale (SUS) questionnaire [41].
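For reference, the standard SUS scoring rule converts the ten responses into a single score between 0 and 100: odd-numbered items contribute the response minus 1, even-numbered items contribute 5 minus the response, and the sum is multiplied by 2.5. The helper below is a small sketch of that rule; the function name `sus_score` is ours.

```python
from typing import Sequence


def sus_score(responses: Sequence[int]) -> float:
    """Return the 0-100 SUS score for ten responses on a 1-5 scale."""
    if len(responses) != 10:
        raise ValueError("SUS needs exactly ten responses")
    if any(r not in (1, 2, 3, 4, 5) for r in responses):
        raise ValueError("responses must be integers from 1 to 5")
    total = 0
    for item, response in enumerate(responses, start=1):
        if item % 2 == 1:
            total += response - 1   # positively worded items
        else:
            total += 5 - response   # negatively worded items
    return total * 2.5


# Hypothetical answer sheets, not data from the study.
neutral = sus_score([3] * 10)
best = sus_score([5, 1, 5, 1, 5, 1, 5, 1, 5, 1])
```

A participant who answers every item with the neutral value 3 therefore obtains 50 points, and the most favorable possible answer sheet obtains 100.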

For the assessment of the ease of use, we applied the same questionnaire (shown in Table 7) that Stadler et al. [44] used to evaluate ImPro, a new application for immersive prototyping for industrial designers with a similar structure to EVM. Like the SUS questionnaire, it employs a 5-point Likert scale.

Table 7.

Ease of use questionnaire [44].

3.3. Experimental Procedure

Separate appointments were made for each participant in the study. At the beginning of the experiment, each participant went through a brief training session with each tool, in which he/she watched a tutorial and was allowed to use the tool freely for a period of 20 min. Subsequently, participants were asked to model each of the proposed parts (the time required to complete each modeling task was recorded). The order was randomly chosen for each participant. To minimize bias, approximately half of the participants started the experiment using SketchUp and the rest using EVM. At the end of the modeling tasks, the participants filled out the SUS and ease of use questionnaires.

4. Results

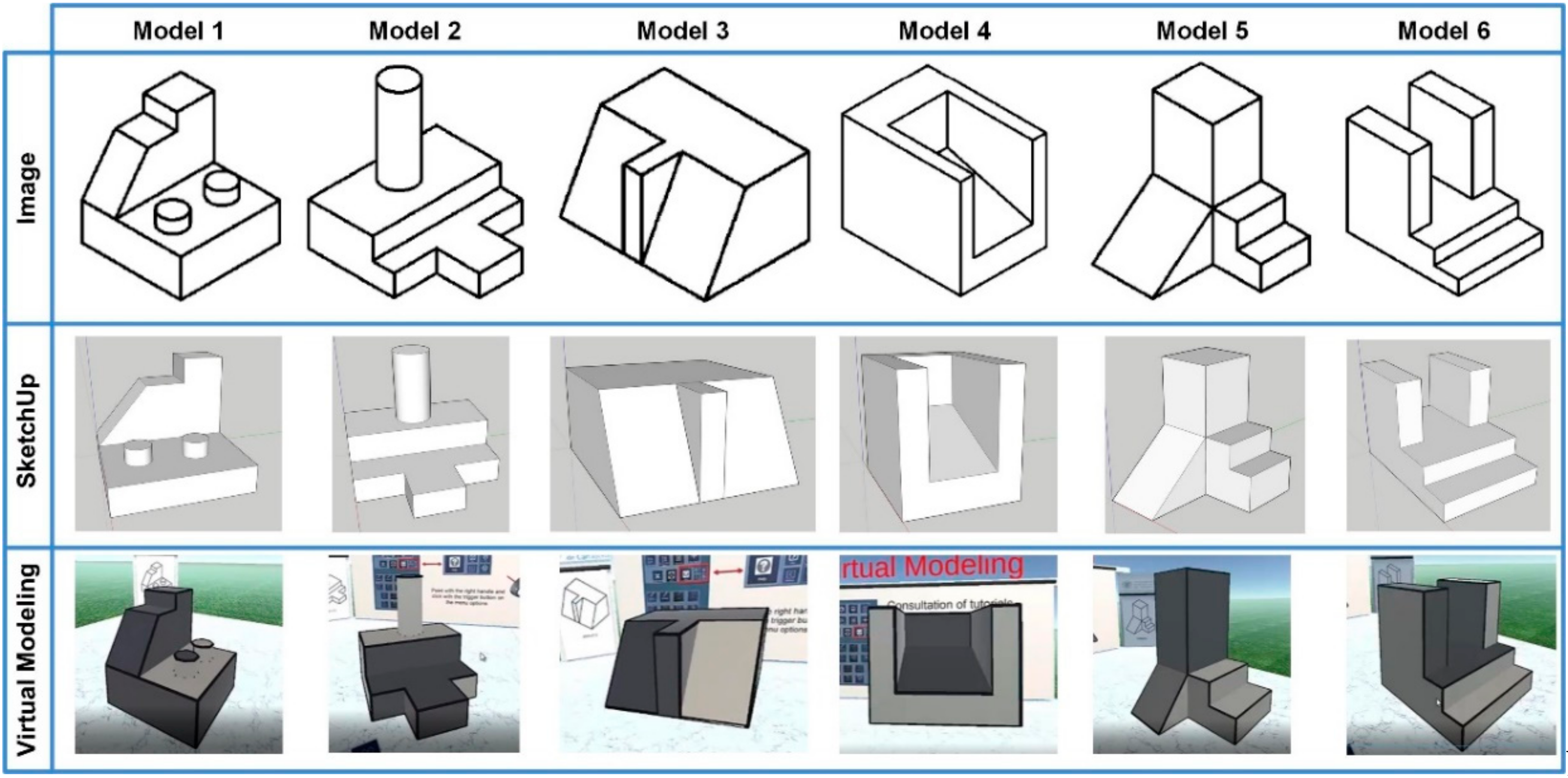

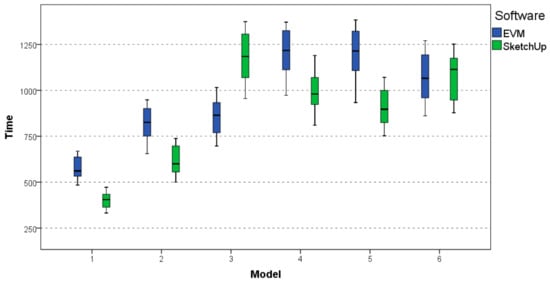

The mean modeling times for each of the parts in our study are reported in Table 8. Except for Models 3 and 6, the time required to create a model using EVM was greater than the time required to perform the same task using SketchUp. For Model 6, both tools performed similarly. The boxplots corresponding to each modeling task are shown in Figure 8, and the execution times of each participant are presented in Figure 9.

Figure 8.

Boxplot for each modeling task using EVM and Trimble SketchUp.

Figure 9.

Times employed by the participants to create the six models using SketchUp and EVM.

Table 8.

Mean execution time (in minutes) per model and total, std. deviation, and t-test results.

After confirming the normality of the data with a Shapiro–Wilk test and applying a repeated measures t-test with Bonferroni correction to compare the mean execution time for each model, we observed statistically significant differences between EVM and Trimble SketchUp except for Model 6, as detailed in Table 8.
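The statistic behind a repeated measures (paired) t-test and the Bonferroni adjustment can be sketched with the standard library alone. The sketch computes only the t value and its degrees of freedom; obtaining the p-value requires the t distribution from a statistics package. The function names and the sample numbers are hypothetical and are not the study data.

```python
import math
from statistics import mean, stdev
from typing import Sequence, Tuple


def paired_t(first: Sequence[float], second: Sequence[float]) -> Tuple[float, int]:
    """Return (t, degrees of freedom) for paired samples of equal length."""
    if len(first) != len(second) or len(first) < 2:
        raise ValueError("need two samples of equal length, at least 2 pairs")
    diffs = [x - y for x, y in zip(first, second)]
    n = len(diffs)
    # t = mean difference divided by its standard error.
    t = mean(diffs) / (stdev(diffs) / math.sqrt(n))
    return t, n - 1


def bonferroni_alpha(alpha: float, comparisons: int) -> float:
    """Per-comparison significance level after Bonferroni correction."""
    return alpha / comparisons


# Hypothetical completion times (minutes) for three participants.
t_value, dof = paired_t([3, 5, 7], [2, 3, 4])
threshold = bonferroni_alpha(0.05, 6)
```

With 23 participants the test has 22 degrees of freedom, and comparing six models at an overall level of 0.05 would give a per-model threshold of about 0.0083.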

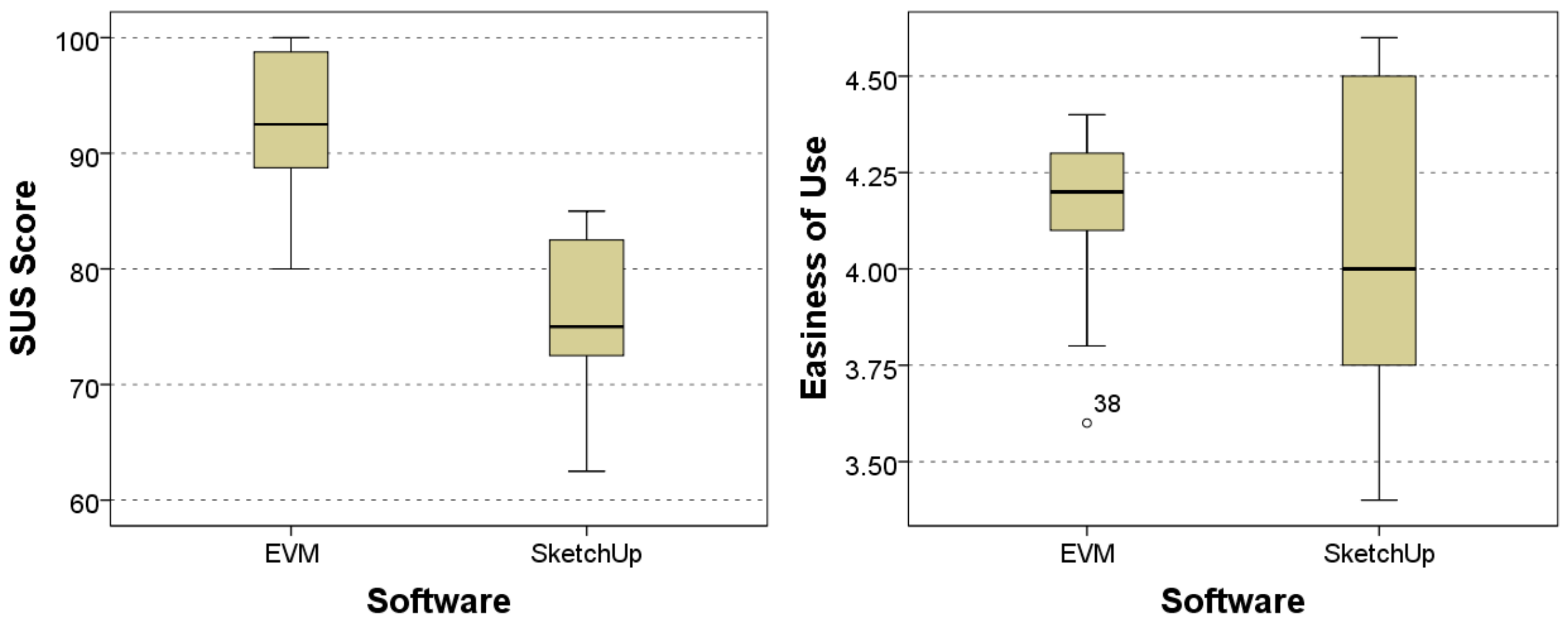

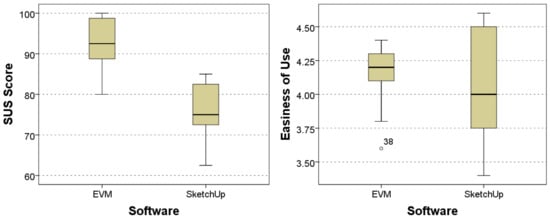

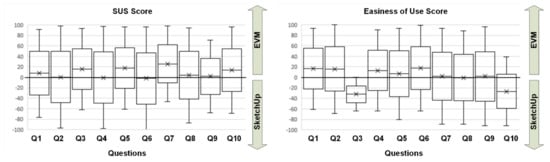

Regarding the SUS score results, the mean value of the SUS score using EVM was 92.3 points, with a standard deviation of 6.15, and 76.30 points for SketchUp, with a standard deviation of 6.69. A repeated measures t-test showed a statistically significant difference between the SUS scores, t(22) = 8.89, p < 0.001, which confirmed the observation of the boxplot in Figure 10.

Figure 10.

Boxplots for SUS and ease of use scores.

Considering the ease of use score, the mean value using EVM was 4.17 points, with a standard deviation of 0.19 and a median of 4.2. SketchUp obtained a mean value of 4.06, with a standard deviation of 0.43 and a median of 4. Because of the non-normality of the data, we applied a related-samples Wilcoxon signed rank test, which revealed that there were no statistically significant differences between the ease of use scores (Z = −1.32, p = 0.18). The corresponding boxplot is presented in Figure 10.

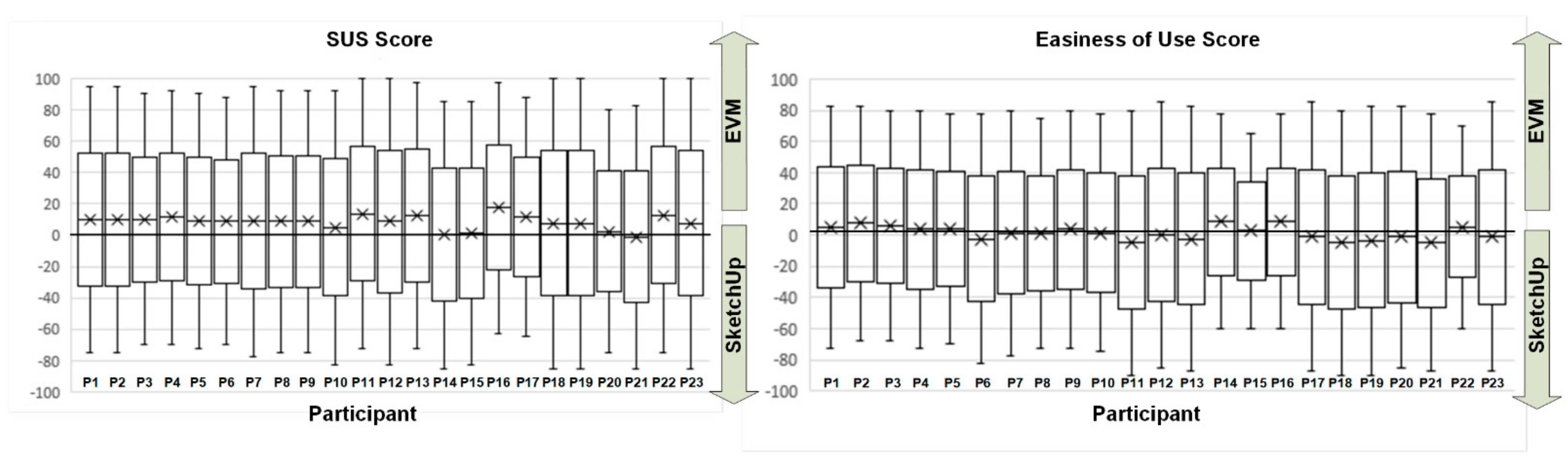

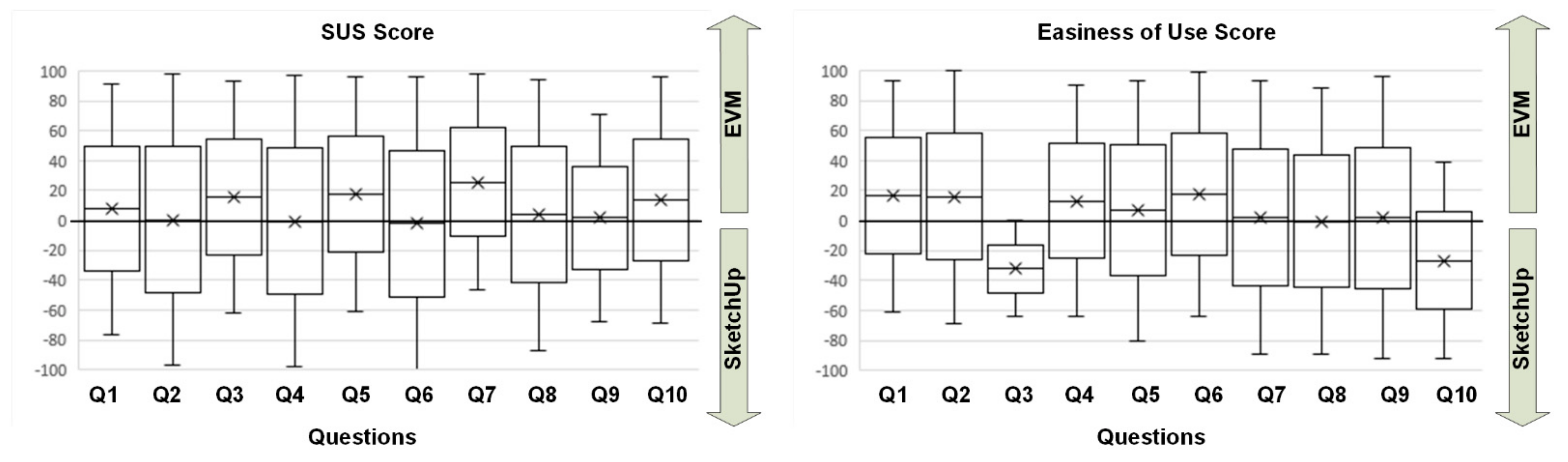

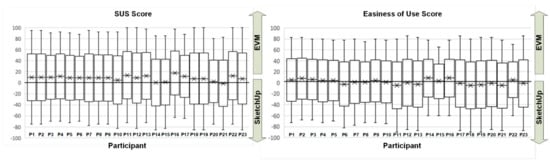

The relative SUS and ease of use scores for each participant (the ease of use score was normalized to a 100-point scale) are presented in Figure 11. The relative score was calculated by subtracting the SketchUp score from the EVM score. Positive values indicate better scores for EVM, and negative values indicate better scores for SketchUp. The rectangles represent the score distributions between the first and third quartiles. The horizontal line inside the box represents the average score. The relative scores per question are shown in Figure 12.

Figure 11.

Relative SUS and ease of use scores per participant.

Figure 12.

Relative SUS and ease of use scores per question.

Figure 11 shows that all participants rated EVM higher than SketchUp in the SUS questionnaire except for one participant. In the ease of use questionnaire, 9 participants scored SketchUp higher, 1 participant scored both applications equally, and 13 considered EVM to be easier to use.

Analyzing the questionnaires per question, EVM always obtained more positive scores for the SUS than SketchUp except on Questions Q4 and Q6 (by a small margin). In terms of ease of use, EVM received higher scores except on Questions Q3 and Q10, which were related to the scaling operations and undo/redo operations, which makes sense as EVM does not provide a scale or redo command.

5. Discussion

The observations of the participants during the experiment and their comments during the informal interview at the end of the experiment provided interesting information to interpret the results. The initial reaction of participants when they tried a VR HMD for the first time was astonishment and enthusiasm. All of them seemed much more excited to work in VR than with the desktop program. The 20 min they were allowed to practice freely were short when they did this with EVM, and they wanted to continue modeling and experimenting. However, with SketchUp, the 20 min were very long, and they wanted to move on to the next experimental phase sooner. This is a relevant fact to consider EVM as a potential learning tool in an academic setting, as learner motivation is an important part of any learning experience. If learners are not motivated, no matter how effective a particular tool is, they will not use it. This observation confirms the results of previous works [46,47,48] and reflects the positive impact of VR in increasing student motivation.

Regarding the execution time of the modeling tasks, the observation of the behavior of the participants may explain why SketchUp outperformed EVM in four of the six modeling tasks. We highlight two key aspects:

- When modeling in the VR-based environment, participants tended to create models that were much larger in size than those created in SketchUp, which forced users to move around the virtual environment and perform actions that required the movement of their arms. Working with SketchUp, however, only requires subtle movements of the hands and wrists, which can be performed much more quickly. This observation is consistent with other studies [23] that reported physical fatigue in the VR condition due to the physical activity required to sketch and model in that context;

- When modeling in the VR system, participants tended to spend a significant amount of time looking at the model and the environment. This behavior is common in users who have never experienced an immersive virtual reality environment before. It may also be related to observations in similar experiments [24] that found simpler mental image manipulation to be one of the positive characteristics of VR sketching. The participant does not need to create a 3D mental representation before sketching, as a virtual 3D representation supports cognitive processes.

It is worth highlighting the large difference in the perceived usability measured by the SUS score. Considering the extensive analysis performed in [49], the high score (92.3) obtained by EVM is very relevant and can be rated as excellent. The SketchUp score was more modest (76.30) and can be rated as good. These results can be partially explained by considering the typical “wow effect” [50] that first-time VR users experience.

Regarding the ease of use, the similar scores of EVM and SketchUp and the fact that no statistically significant differences were found in the questionnaire’s overall score may be explained by the fact that the combination of ray-casting and panel menu used in EVM is conceptually very close to the typical WIMP interface [51], which is well known to participants. Mouse cursor operation and selection of menu options by clicking the mouse on an icon are replaced by moving the controller in space and using ray-casting to select the desired icon.

6. Conclusions, Limitations, and Future Work

After the analysis of the results of the user study, we can conclude that EVM can be a feasible tool for an introductory engineering graphics course. The positive impact on motivation, the high score on the SUS questionnaire, an ease of use equivalent to that of SketchUp (well known for its simplicity), and task completion times close to those of SketchUp (95.4 min for EVM vs. 86.27 min for SketchUp, as the total modeling time) support this statement. It is important to mention that EVM was not designed to provide a faster way to model. The goal was to create a tool that is viable to deploy in a laboratory and provides reasonable task completion times. After the experiment was completed, conversations with participants revealed that the experiential learning facilitated by VR had a greater impact on students than a desktop application. Taking this into account, we consider the small difference in total execution time to be of minor importance.

A significant contribution of the developed system is the immersive help system. One of the most praised features of EVM was the possibility of viewing multimedia tutorials on how to use the application within the virtual environment itself, as well as using the side virtual screens to view the modeling tasks that had to be performed. In addition, the fast learning curve and ease of use shed light on the feasibility of using a virtual reality environment to perform basic sketching and modeling tasks with minimal training. These features, coupled with the fact that HMDs are becoming less expensive and more powerful, justify investing the additional effort to improve the developed system.

As future work, we plan to improve the capabilities of EVM by first porting the application to a standalone HMD such as Oculus Quest 2 and then completing the functionality of the application with elements that the user study revealed as missing, such as the possibility of scaling parts and a complete implementation of undo/redo commands. Another idea being considered to enrich the current system and facilitate the learning of spatial relations is the representation of the lateral projections of the objects under construction, which would make it easier to analyze the connection between 3D objects and their orthogonal projections, often a difficult concept to work with in traditional teaching environments. Finally, we would like to conduct user studies with larger sample sizes and longer interventions to overcome the limitations of the study presented in this paper, which had a sample size of 23 subjects and a duration of the modeling tasks of approximately 90 min.

Author Contributions

Conceptualization, J.C.-P. and M.C.; methodology, M.C.; software, J.C.-P.; validation, J.C.-P.; writing—original draft preparation, J.C.-P. and M.C. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Informed consent was obtained from all subjects involved in the study.

Data Availability Statement

The data presented in this study are available on request from the corresponding author.

Conflicts of Interest

The authors declare no conflict of interest.

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).