Complementary Segmentation of Primary Video Objects with Reversible Flows

Abstract

1. Introduction

- We formulate primary object segmentation as a novel objective function based on the relationship between foreground and background, and incorporate its optimization into end-to-end CNNs. By training specific CNNs, the two dual tasks of foreground and background segmentation can be addressed simultaneously, and primary video objects can be segmented from complementary cues.

- We construct neighborhood reversible flow between superpixels, which propagates foreground and background cues along the most reliable inter-frame correspondences and leads to more temporally consistent results.

- With the proposed method, we achieve state-of-the-art performance in comparison with 22 existing image-based and video-based models.

2. Related Work

2.1. Salient Object Segmentation in Images

2.2. Primary/Semantic Object Segmentation in Videos

3. Initialization with Complementary CNNs

3.1. Problem Formulation

3.2. Deep Optimization with Complementary CNNs

4. Efficient Temporal Propagation with Neighborhood Reversible Flow

- (1) How to find the most reliable correspondences between various (nearby or far-away) frames?

- (2) How to infer consistent primary objects from the spatiotemporal correspondences and the initialization results?
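One way to read the first question is as a mutual nearest-neighbour test between superpixels of two frames. The sketch below is an illustration under stated assumptions, not the authors' implementation: it assumes each superpixel is described by a plain feature vector, that a pair is "reversible" at level k when each superpixel lies within the other's k nearest neighbours, and that the flow weight decays as exp(-2k/k0) up to a hypothetical cut-off `k0`. The function names and the L1 distance are likewise illustrative choices.

```python
import math


def ranks(dist_rows):
    """For each row, return the 1-based rank of every column by ascending distance."""
    out = []
    for row in dist_rows:
        order = sorted(range(len(row)), key=lambda j: row[j])
        rank = [0] * len(row)
        for r, j in enumerate(order, start=1):
            rank[j] = r
        out.append(rank)
    return out


def reversible_flow(feats_u, feats_v, k0=2):
    """Row-normalised flow weights from superpixels of frame u to those of frame v.

    Assumption: a pair (i, j) is k-reversible when i is among the k nearest
    neighbours of j and j is among the k nearest neighbours of i.
    """
    # Pairwise L1 distances between superpixel features of the two frames.
    d = [[sum(abs(a - b) for a, b in zip(fu, fv)) for fv in feats_v] for fu in feats_u]
    d_t = [list(col) for col in zip(*d)]
    rank_uv = ranks(d)    # rank of v_j as seen from u_i
    rank_vu = ranks(d_t)  # rank of u_i as seen from v_j
    flow = []
    for i in range(len(feats_u)):
        row = []
        for j in range(len(feats_v)):
            # Smallest k for which the pair is mutually within k nearest neighbours.
            k = max(rank_uv[i][j], rank_vu[j][i])
            row.append(math.exp(-2.0 * k / k0) if k <= k0 else 0.0)
        total = sum(row)
        flow.append([w / total for w in row] if total > 0 else row)
    return flow


# Toy example with one-dimensional features for three superpixels per frame.
flow = reversible_flow([[0.0], [1.0], [5.0]], [[0.1], [0.9], [5.2]], k0=2)
for row in flow:
    print([round(w, 3) for w in row])
```

Only pairs that agree in both directions receive a non-zero weight, which is what makes such correspondences more reliable than one-directional nearest-neighbour matches.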

4.1. Neighborhood Reversible Flow

4.2. Temporal Propagation of Spatial Features

5. Experiments

5.1. Experimental Settings

- (1) SegTrack V2 [59] is a classic video object segmentation dataset that is frequently used in previous work. It consists of 14 densely annotated video clips with 1066 frames in total. Most primary objects in this dataset are defined as the ones with irregular motion patterns.

- (2) Youtube-Objects [1] contains a large number of Internet videos, and we adopt its subset [69] that contains 127 videos with 20,977 frames. In these videos, 2153 keyframes are sparsely sampled and manually annotated with pixel-wise masks according to the video tags. In other words, primary objects in Youtube-Objects are defined from the perspective of semantic attributes.

- (3) VOS [15] contains 200 videos with 116,093 frames. On 7467 uniformly sampled keyframes, all objects are pre-segmented by 4 subjects, and the fixations of another 23 subjects are collected in eye-tracking tests. With these annotations, primary objects are automatically selected as the ones whose average fixation densities over the whole video fall above a predefined threshold. If no primary object can be selected with the predefined threshold, the objects that receive the highest average fixation density are treated as the primary ones. Unlike SegTrack V2 and Youtube-Objects, primary objects in VOS are defined from the perspective of human visual attention.
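The selection rule described for VOS can be sketched as follows. This is a minimal illustration, assuming the per-object average fixation densities are already available as a dictionary; the function name, the object labels and the threshold value are hypothetical and not taken from the dataset.

```python
def select_primary_objects(avg_fixation_density, threshold):
    """Pick primary objects from per-object average fixation densities.

    avg_fixation_density: dict mapping an object id to its average fixation
    density over the whole video (hypothetical input format).
    """
    if not avg_fixation_density:
        return []
    # Rule 1: every object whose density falls above the threshold is primary.
    selected = [obj for obj, d in avg_fixation_density.items() if d > threshold]
    if selected:
        return sorted(selected)
    # Rule 2 (fallback): no object passes, so take the most fixated one(s).
    best = max(avg_fixation_density.values())
    return sorted(obj for obj, d in avg_fixation_density.items() if d == best)


print(select_primary_objects({"car": 0.8, "tree": 0.1, "dog": 0.6}, threshold=0.5))
print(select_primary_objects({"car": 0.2, "tree": 0.1}, threshold=0.5))
```

The fallback guarantees that every video ends up with at least one primary object, even when no object is fixated strongly enough to pass the threshold.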

5.2. Comparisons with State-of-the-Art Models

5.3. Detailed Performance Analysis

5.3.1. Performance of Complementary CNNs

5.3.2. Effectiveness of Neighborhood Reversible Flow

5.3.3. Running Time

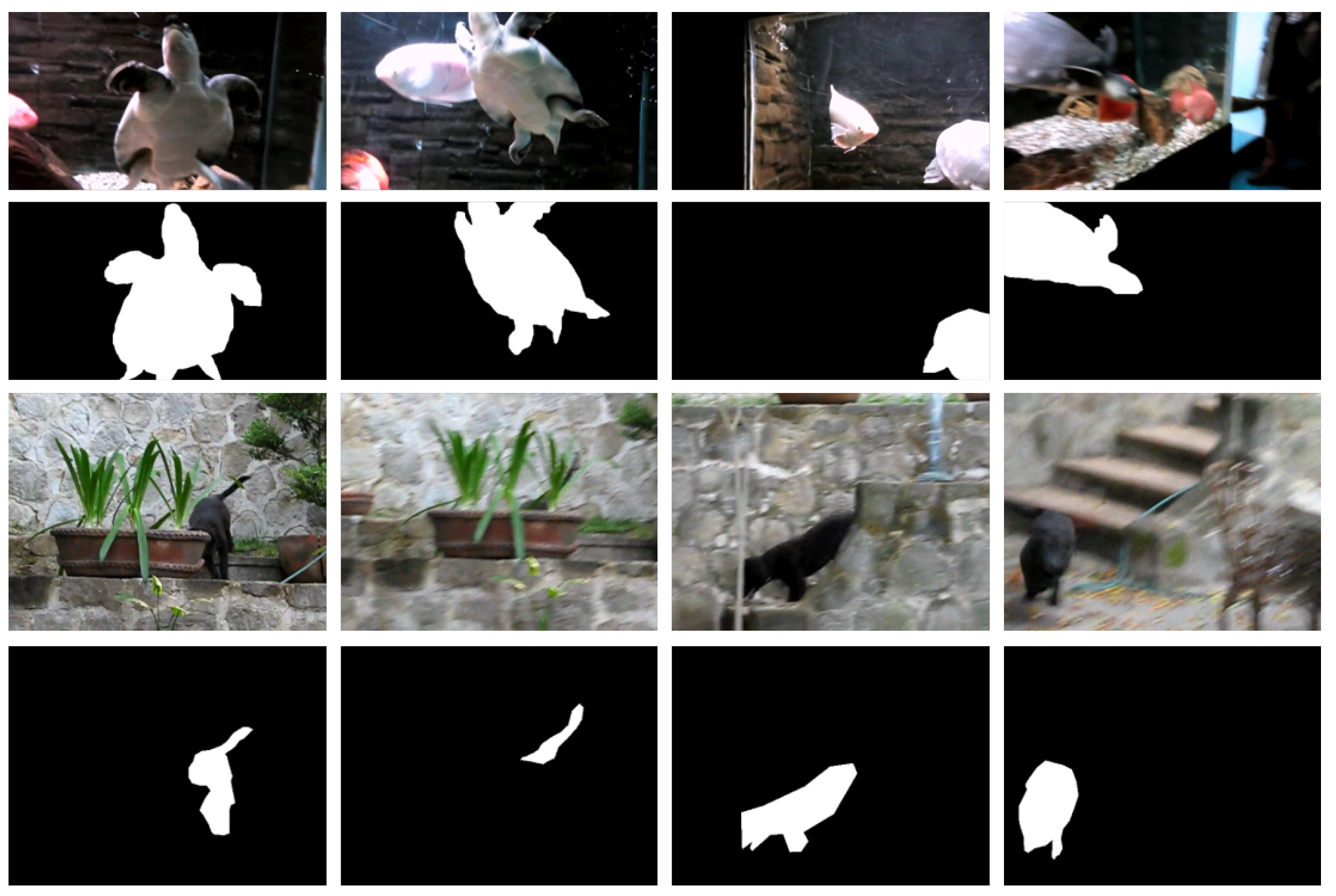

5.3.4. Failure Cases

6. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

1. Prest, A.; Leistner, C.; Civera, J.; Schmid, C.; Ferrari, V. Learning object class detectors from weakly annotated video. In Proceedings of the 2012 IEEE Conference on Computer Vision and Pattern Recognition, Providence, RI, USA, 16–21 June 2012; pp. 3282–3289.

2. Jiang, H.; Wang, J.; Yuan, Z.; Wu, Y.; Zheng, N.; Li, S. Salient object detection: A discriminative regional feature integration approach. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Portland, OR, USA, 23–28 June 2013; pp. 2083–2090.

3. Zhang, D.; Meng, D.; Han, J. Co-saliency detection via a self-paced multiple-instance learning framework. IEEE Trans. Pattern Anal. Mach. Intell. 2016, 39, 865–878.

4. Lee, G.; Tai, Y.W.; Kim, J. Deep saliency with encoded low level distance map and high level features. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 660–668.

5. Li, G.; Yu, Y. Visual saliency based on multiscale deep features. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Boston, MA, USA, 7–12 June 2015; pp. 5455–5463.

6. Fan, D.P.; Li, T.; Lin, Z.; Ji, G.P.; Zhang, D.; Cheng, M.M.; Fu, H.; Shen, J. Re-thinking co-salient object detection. IEEE Trans. Pattern Anal. Mach. Intell. 2021, 44, 4339–4354.

7. Zhao, R.; Ouyang, W.; Li, H.; Wang, X. Saliency detection by multi-context deep learning. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Boston, MA, USA, 7–12 June 2015; pp. 1265–1274.

8. Zhuge, M.; Fan, D.P.; Liu, N.; Zhang, D.; Xu, D.; Shao, L. Salient object detection via integrity learning. IEEE Trans. Pattern Anal. Mach. Intell. 2022.

9. Wang, G.; Chen, C.; Fan, D.; Hao, A.; Qin, H. Weakly Supervised Visual-Auditory Saliency Detection with Multigranularity Perception. arXiv 2021, arXiv:2112.13697.

10. Fan, D.P.; Zhang, J.; Xu, G.; Cheng, M.M.; Shao, L. Salient objects in clutter. IEEE Trans. Pattern Anal. Mach. Intell. 2022.

11. Zhao, J.X.; Liu, J.J.; Fan, D.P.; Cao, Y.; Yang, J.; Cheng, M.M. EGNet: Edge guidance network for salient object detection. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Seoul, Korea, 27–28 October 2019; pp. 8779–8788.

12. Fan, D.P.; Cheng, M.M.; Liu, J.J.; Gao, S.H.; Hou, Q.; Borji, A. Salient objects in clutter: Bringing salient object detection to the foreground. In Proceedings of the European Conference on Computer Vision (ECCV), Munich, Germany, 8–14 September 2018; pp. 186–202.

13. Wang, L.; Lu, H.; Ruan, X.; Yang, M.H. Deep networks for saliency detection via local estimation and global search. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Boston, MA, USA, 7–12 June 2015; pp. 3183–3192.

14. Perazzi, F.; Pont-Tuset, J.; McWilliams, B.; Van Gool, L.; Gross, M.; Sorkine-Hornung, A. A Benchmark Dataset and Evaluation Methodology for Video Object Segmentation. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016.

15. Li, J.; Xia, C.; Chen, X. A benchmark dataset and saliency-guided stacked autoencoders for video-based salient object detection. IEEE Trans. Image Process. 2018, 27, 349–364.

16. Maerki, N.; Perazzi, F.; Wang, O.; Sorkine-Hornung, A. Bilateral space video segmentation. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016.

17. Ramakanth, S.A.; Babu, R.V. SeamSeg: Video object segmentation using patch seams. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Columbus, OH, USA, 23–28 June 2014; pp. 376–383.

18. Seguin, G.; Bojanowski, P.; Lajugie, R.; Laptev, I. Instance-level video segmentation from object tracks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016.

19. Tsai, Y.H.; Zhong, G.; Yang, M.H. Semantic co-segmentation in videos. In Proceedings of the 14th European Conference on Computer Vision, Munich, Germany, 8–14 September 2016.

20. Zhang, Y.; Chen, X.; Li, J.; Wang, C.; Xia, C. Semantic object segmentation via detection in weakly labeled video. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Boston, MA, USA, 7–12 June 2015; pp. 3641–3649.

21. Zhang, D.; Javed, O.; Shah, M. Video Object Segmentation through Spatially Accurate and Temporally Dense Extraction of Primary Object Regions. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Portland, OR, USA, 23–28 June 2013; pp. 628–635.

22. Jang, W.D.; Lee, C.; Kim, C.S. Primary object segmentation in videos via alternate convex optimization of foreground and background distributions. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 696–704.

23. Papazoglou, A.; Ferrari, V. Fast object segmentation in unconstrained video. In Proceedings of the IEEE International Conference on Computer Vision, Portland, OR, USA, 23–28 June 2013; pp. 1777–1784.

24. Xiao, F.; Jae Lee, Y. Track and segment: An iterative unsupervised approach for video object proposals. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 933–942.

25. Ji, G.P.; Fu, K.; Wu, Z.; Fan, D.P.; Shen, J.; Shao, L. Full-duplex strategy for video object segmentation. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Montreal, BC, Canada, 10–17 October 2021; pp. 4922–4933.

26. Fan, D.P.; Wang, W.; Cheng, M.M.; Shen, J. Shifting More Attention to Video Salient Object Detection. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Long Beach, CA, USA, 15–20 June 2019.

27. Chiu, W.C.; Fritz, M. Multi-class video co-segmentation with a generative multi-video model. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Portland, OR, USA, 23–28 June 2013; pp. 321–328.

28. Fu, H.; Xu, D.; Zhang, B.; Lin, S.; Ward, R.K. Object-based multiple foreground video co-segmentation via multi-state selection graph. IEEE Trans. Image Process. 2015, 24, 3415–3424.

29. Zhang, D.; Javed, O.; Shah, M. Video object co-segmentation by regulated maximum weight cliques. In Proceedings of the 13th European Conference on Computer Vision, Zurich, Switzerland, 6–12 September 2014; pp. 551–566.

30. Yu, C.P.; Le, H.; Zelinsky, G.; Samaras, D. Efficient video segmentation using parametric graph partitioning. In Proceedings of the IEEE International Conference on Computer Vision, Santiago, Chile, 7–13 December 2015; pp. 3155–3163.

31. Wang, W.; Shen, J.; Yang, R.; Porikli, F. Saliency-aware video object segmentation. IEEE Trans. Pattern Anal. Mach. Intell. 2018, 40, 20–33.

32. Cheng, M.M.; Mitra, N.J.; Huang, X.; Torr, P.H.; Hu, S.M. Global contrast based salient region detection. IEEE Trans. Pattern Anal. Mach. Intell. 2015, 37, 569–582.

33. Jiang, P.; Ling, H.; Yu, J.; Peng, J. Salient region detection by ufo: Uniqueness, focusness and objectness. In Proceedings of the IEEE International Conference on Computer Vision, Portland, OR, USA, 23–28 June 2013; pp. 1976–1983.

34. Zhu, W.; Liang, S.; Wei, Y.; Sun, J. Saliency Optimization from Robust Background Detection. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Columbus, OH, USA, 23–28 June 2014.

35. Zhang, J.; Sclaroff, S. Saliency detection: A boolean map approach. In Proceedings of the IEEE International Conference on Computer Vision, Portland, OR, USA, 23–28 June 2013; pp. 153–160.

36. Ge, W.; Guo, Z.; Dong, Y.; Chen, Y. Dynamic background estimation and complementary learning for pixel-wise foreground/background segmentation. Pattern Recognit. 2016, 59, 112–125.

37. Koh, Y.J.; Kim, C.S. Primary Object Segmentation in Videos Based on Region Augmentation and Reduction. In Proceedings of the 2017 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 21–26 July 2017; Volume 1, p. 7.

38. Tsai, Y.H.; Yang, M.H.; Black, M.J. Video segmentation via object flow. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 3899–3908.

39. Li, J.; Zheng, A.; Chen, X.; Zhou, B. Primary video object segmentation via complementary cnns and neighborhood reversible flow. In Proceedings of the IEEE International Conference on Computer Vision, Venice, Italy, 22–29 October 2017; pp. 1417–1425.

40. Liu, H.; Jiang, S.; Huang, Q.; Xu, C.; Gao, W. Region-based visual attention analysis with its application in image browsing on small displays. In Proceedings of the 15th ACM International Conference on Multimedia, Augsburg, Germany, 24–29 September 2007; pp. 305–308.

41. Perazzi, F.; Krähenbühl, P.; Pritch, Y.; Hornung, A. Saliency filters: Contrast based filtering for salient region detection. In Proceedings of the 2012 IEEE Conference on Computer Vision and Pattern Recognition, Providence, RI, USA, 16–21 June 2012; pp. 733–740.

42. Goferman, S.; Zelnik-Manor, L.; Tal, A. Context-aware saliency detection. IEEE Trans. Pattern Anal. Mach. Intell. 2012, 34, 1915–1926.

43. Wei, Y.; Wen, F.; Zhu, W.; Sun, J. Geodesic saliency using background priors. In Proceedings of the 12th European Conference on Computer Vision, Florence, Italy, 7–13 October 2012; pp. 29–42.

44. Schauerte, B.; Stiefelhagen, R. Quaternion-based spectral saliency detection for eye fixation prediction. In Proceedings of the 12th European Conference on Computer Vision, Florence, Italy, 7–13 October 2012; pp. 116–129.

45. Liu, T.; Yuan, Z.; Sun, J.; Wang, J.; Zheng, N.; Tang, X.; Shum, H.Y. Learning to detect a salient object. IEEE Trans. Pattern Anal. Mach. Intell. 2011, 33, 353–367.

46. Yang, C.; Zhang, L.; Lu, H.; Ruan, X.; Yang, M.H. Saliency detection via graph-based manifold ranking. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Portland, OR, USA, 23–28 June 2013; pp. 3166–3173.

47. Qin, Y.; Lu, H.; Xu, Y.; Wang, H. Saliency detection via cellular automata. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Boston, MA, USA, 7–12 June 2015; pp. 110–119.

48. Zhang, J.; Sclaroff, S.; Lin, Z.; Shen, X.; Price, B.; Mech, R. Minimum barrier salient object detection at 80 fps. In Proceedings of the IEEE International Conference on Computer Vision, Santiago, Chile, 7–13 December 2015; pp. 1404–1412.

49. Li, X.; Zhao, L.; Wei, L.; Yang, M.H.; Wu, F.; Zhuang, Y.; Ling, H.; Wang, J. Deepsaliency: Multi-task deep neural network model for salient object detection. IEEE Trans. Image Process. 2016, 25, 3919–3930.

50. Long, J.; Shelhamer, E.; Darrell, T. Fully convolutional networks for semantic segmentation. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Boston, MA, USA, 7–12 June 2015; pp. 3431–3440.

51. Liu, N.; Han, J. Dhsnet: Deep hierarchical saliency network for salient object detection. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 678–686.

52. Kuen, J.; Wang, Z.; Wang, G. Recurrent attentional networks for saliency detection. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 3668–3677.

53. Li, G.; Yu, Y. Deep contrast learning for salient object detection. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 478–487.

54. Yu, H.; Li, J.; Tian, Y.; Huang, T. Automatic interesting object extraction from images using complementary saliency maps. In Proceedings of the 18th ACM International Conference on Multimedia, Firenze, Italy, 25–29 October 2010; pp. 891–894.

55. Tian, Y.; Li, J.; Yu, S.; Huang, T. Learning complementary saliency priors for foreground object segmentation in complex scenes. Int. J. Comput. Vis. 2015, 111, 153–170.

56. Liu, Z.; Zhang, X.; Luo, S.; Le Meur, O. Superpixel-based spatiotemporal saliency detection. IEEE Trans. Circuits Syst. Video Technol. 2014, 24, 1522–1540.

57. Wang, W.; Shen, J.; Porikli, F. Saliency-aware geodesic video object segmentation. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Boston, MA, USA, 7–12 June 2015; pp. 3395–3402.

58. Ochs, P.; Malik, J.; Brox, T. Segmentation of moving objects by long term video analysis. IEEE Trans. Pattern Anal. Mach. Intell. 2014, 36, 1187–1200.

59. Li, F.; Kim, T.; Humayun, A.; Tsai, D.; Rehg, J.M. Video segmentation by tracking many figure-ground segments. In Proceedings of the IEEE International Conference on Computer Vision, Portland, OR, USA, 23–28 June 2013; pp. 2192–2199.

60. Wang, W.; Shen, J.; Shao, L. Consistent video saliency using local gradient flow optimization and global refinement. IEEE Trans. Image Process. 2015, 24, 4185–4196.

61. Nilsson, D.; Sminchisescu, C. Semantic video segmentation by gated recurrent flow propagation. arXiv 2016, arXiv:1612.08871.

62. Gadde, R.; Jampani, V.; Gehler, P.V. Semantic video cnns through representation warping. CoRR 2017, 8, 9.

63. Zhu, X.; Xiong, Y.; Dai, J.; Yuan, L.; Wei, Y. Deep feature flow for video recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; Volume 1, p. 3.

64. He, K.; Zhang, X.; Ren, S.; Sun, J. Deep residual learning for image recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 770–778.

65. MSRA10K. Available online: http://mmcheng.net/gsal/ (accessed on 1 January 2016).

66. Yan, Q.; Xu, L.; Shi, J.; Jia, J. Hierarchical Saliency Detection. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Portland, OR, USA, 23–28 June 2013; pp. 1155–1162.

67. Achanta, R.; Shaji, A.; Smith, K.; Lucchi, A.; Fua, P.; Süsstrunk, S. SLIC Superpixels Compared to State-of-the-art Superpixel Methods. IEEE Trans. Pattern Anal. Mach. Intell. 2012, 34, 2274–2282.

68. Jegou, H.; Schmid, C.; Harzallah, H.; Verbeek, J. Accurate image search using the contextual dissimilarity measure. IEEE Trans. Pattern Anal. Mach. Intell. 2010, 32, 2–11.

69. Jain, S.D.; Grauman, K. Supervoxel-consistent foreground propagation in video. In Proceedings of the 13th European Conference on Computer Vision, Zurich, Switzerland, 6–12 September 2014; pp. 656–671.

70. Peng, H.; Li, B.; Ling, H.; Hu, W.; Xiong, W.; Maybank, S.J. Salient object detection via structured matrix decomposition. IEEE Trans. Pattern Anal. Mach. Intell. 2017, 39, 818–832.

71. Tong, N.; Lu, H.; Ruan, X.; Yang, M.H. Salient object detection via bootstrap learning. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Boston, MA, USA, 7–12 June 2015; pp. 1884–1892.

72. Tu, W.C.; He, S.; Yang, Q.; Chien, S.Y. Real-time salient object detection with a minimum spanning tree. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 2334–2342.

73. Wang, L.; Wang, L.; Lu, H.; Zhang, P.; Ruan, X. Saliency detection with recurrent fully convolutional networks. In Proceedings of the 14th European Conference on Computer Vision, Munich, Germany, 8–14 September 2016; pp. 825–841.

74. Faktor, A.; Irani, M. Video Segmentation by Non-Local Consensus Voting. In Proceedings of the British Machine Vision Conference, Nottingham, UK, 1–5 September 2014.

75. Liu, J.J.; Hou, Q.; Liu, Z.A.; Cheng, M.M. Poolnet+: Exploring the potential of pooling for salient object detection. IEEE Trans. Pattern Anal. Mach. Intell. 2022.

76. Liu, J.J.; Hou, Q.; Cheng, M.M. Dynamic Feature Integration for Simultaneous Detection of Salient Object, Edge and Skeleton. IEEE Trans. Image Process. 2020, 29, 8652–8667.

77. Liu, Y.; Zhang, X.Y.; Bian, J.W.; Zhang, L.; Cheng, M.M. SAMNet: Stereoscopically Attentive Multi-scale Network for Lightweight Salient Object Detection. IEEE Trans. Image Process. 2021, 30, 3804–3814.

78. Ma, M.; Xia, C.; Li, J. Pyramidal feature shrinking for salient object detection. In Proceedings of the AAAI Conference on Artificial Intelligence, Virtually, 2–9 February 2021; Volume 35, pp. 2311–2318.

| Type Name | Patch Size/Stride/Pad/Dilation/Group | Output Size |
|---|---|---|
| Conv1_pb1 | 3 × 3/1/1/1/32 | 10 × 10 × 256 |
| Conv2_pb1 | 1 × 1/1/0/1/1 | 10 × 10 × 2048 |
| Pool1 | avg pool, 2 × 2, stride 2 | 5 × 5 × 2048 |
| Conv3_pb1 | 3 × 3/1/1/1/32 | 5 × 5 × 256 |
| Conv4_pb1 | 1 × 1/1/0/1/1 | 5 × 5 × 2048 |
| Conv1_pb2 | 3 × 3/1/1/1/32 | 5 × 5 × 256 |
| Conv2_pb2 | 1 × 1/1/0/1/1 | 5 × 5 × 2048 |
| Pool2 | avg pool, 2 × 2, stride 2 | 3 × 3 × 2048 |
| Conv3_pb2 | 3 × 3/1/1/1/32 | 3 × 3 × 256 |
| Conv4_pb2 | 1 × 1/1/0/1/1 | 3 × 3 × 2048 |
| Interp1 | bilinear upsampling | 40 × 40 × 1024 |
| Conv1 | 1 × 1/1/0/1/1 | 40 × 40 × 512 |
| Interp2 | bilinear upsampling | 40 × 40 × 2048 |
| Conv2 | 1 × 1/1/0/1/1 | 40 × 40 × 512 |
| Interp3 | bilinear upsampling | 40 × 40 × 2048 |
| Conv3 | 1 × 1/1/0/1/1 | 40 × 40 × 512 |
| Interp4 | bilinear upsampling | 40 × 40 × 2048 |
| Conv4 | 1 × 1/1/0/1/1 | 40 × 40 × 512 |
| Conv5 | 3 × 3/1/1/1/32 | 40 × 40 × 256 |
| Conv6 | 1 × 1/1/0/1/1 | 40 × 40 × 512 |
| Conv7_xf | 1 × 1/1/0/1/1 | 40 × 40 × 512 |
| Conv8_yf | 3 × 3/1/y/y/32 | 40 × 40 × 256 |
| Conv9f | 1 × 1/1/0/1/1 | 40 × 40 × 256 |
| Conv10f | 3 × 3/1/1/1/8 | 40 × 40 × 64 |
| Deconv1f | 3 × 3/4/1/1/1 | 161 × 161 × 1 |

| Models | SegTrackV2 (14 Videos) | | | | Youtube-Objects (127 Videos) | | | | VOS (200 Videos) | | | |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| | mAP | mAR | Fβ | mIoU | mAP | mAR | Fβ | mIoU | mAP | mAR | Fβ | mIoU |
| DRFI [2] | 0.464 | 0.775 | 0.511 | 0.395 | 0.542 | 0.774 | 0.582 | 0.453 | 0.597 | 0.854 | 0.641 | 0.526 |
| RBD [34] | 0.380 | 0.709 | 0.426 | 0.305 | 0.519 | 0.707 | 0.553 | 0.403 | 0.652 | 0.779 | 0.677 | 0.532 |
| BL [71] | 0.202 | 0.934 | 0.246 | 0.190 | 0.218 | 0.910 | 0.264 | 0.205 | 0.483 | 0.913 | 0.541 | 0.450 |
| BSCA [47] | 0.233 | 0.874 | 0.280 | 0.223 | 0.397 | 0.807 | 0.450 | 0.332 | 0.544 | 0.853 | 0.594 | 0.475 |
| MB+ [48] | 0.330 | 0.883 | 0.385 | 0.298 | 0.480 | 0.813 | 0.530 | 0.399 | 0.640 | 0.825 | 0.675 | 0.532 |
| MST [72] | 0.450 | 0.678 | 0.488 | 0.308 | 0.538 | 0.698 | 0.568 | 0.396 | 0.658 | 0.739 | 0.675 | 0.497 |
| SMD [70] | 0.442 | 0.794 | 0.493 | 0.322 | 0.560 | 0.730 | 0.592 | 0.424 | 0.668 | 0.771 | 0.690 | 0.533 |
| MDF [5] | 0.573 | 0.634 | 0.586 | 0.407 | 0.647 | 0.776 | 0.672 | 0.534 | 0.601 | 0.842 | 0.644 | 0.542 |
| ELD [4] | 0.595 | 0.767 | 0.627 | 0.494 | 0.637 | 0.789 | 0.667 | 0.531 | 0.682 | 0.870 | 0.718 | 0.613 |
| DCL [53] | 0.757 | 0.690 | 0.740 | 0.568 | 0.727 | 0.764 | 0.735 | 0.587 | 0.773 | 0.727 | 0.762 | 0.578 |
| LEGS [13] | 0.420 | 0.778 | 0.470 | 0.351 | 0.549 | 0.776 | 0.589 | 0.450 | 0.606 | 0.816 | 0.644 | 0.523 |
| MCDL [7] | 0.587 | 0.575 | 0.584 | 0.424 | 0.647 | 0.613 | 0.638 | 0.471 | 0.711 | 0.718 | 0.713 | 0.581 |
| RFCN [73] | 0.759 | 0.719 | 0.749 | 0.591 | 0.742 | 0.750 | 0.744 | 0.592 | 0.749 | 0.796 | 0.760 | 0.625 |
| NLC [74] | 0.933 | 0.753 | 0.884 | 0.704 | 0.692 | 0.444 | 0.613 | 0.369 | 0.518 | 0.505 | 0.515 | 0.364 |
| ACO [22] | 0.827 | 0.619 | 0.767 | 0.551 | 0.683 | 0.481 | 0.623 | 0.391 | 0.706 | 0.563 | 0.667 | 0.478 |
| FST [23] | 0.792 | 0.671 | 0.761 | 0.552 | 0.687 | 0.528 | 0.643 | 0.380 | 0.697 | 0.794 | 0.718 | 0.574 |
| SAG [57] | 0.431 | 0.819 | 0.484 | 0.384 | 0.486 | 0.754 | 0.529 | 0.397 | 0.538 | 0.824 | 0.585 | 0.467 |
| GF [60] | 0.444 | 0.737 | 0.489 | 0.354 | 0.529 | 0.722 | 0.563 | 0.407 | 0.523 | 0.819 | 0.570 | 0.436 |
| PN+ [75] | 0.734 | 0.633 | 0.708 | 0.577 | 0.759 | 0.690 | 0.742 | 0.559 | 0.808 | 0.882 | 0.824 | 0.754 |
| DFI [76] | 0.711 | 0.663 | 0.699 | 0.579 | 0.729 | 0.799 | 0.744 | 0.617 | 0.792 | 0.906 | 0.816 | 0.746 |
| FSal [77] | 0.645 | 0.725 | 0.662 | 0.561 | 0.344 | 0.358 | 0.347 | 0.170 | 0.313 | 0.330 | 0.317 | 0.152 |
| PFS [78] | 0.604 | 0.581 | 0.598 | 0.410 | 0.736 | 0.704 | 0.728 | 0.549 | 0.692 | 0.639 | 0.679 | 0.471 |
| CSP | 0.789 | 0.778 | 0.787 | 0.669 | 0.778 | 0.820 | 0.787 | 0.675 | 0.805 | 0.910 | 0.827 | 0.747 |
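In each dataset group of the comparison table, the third value lies between mAP and mAR. It is consistent with an F-measure that combines the two, and the sketch below checks this on a few rows. The weighting β² = 0.3 is an assumption (a common choice in salient object detection that this section does not state), and the function name is illustrative. Small differences in the last digit are expected, since the tabulated values are rounded and may be averaged per video.

```python
def f_beta(precision, recall, beta_sq=0.3):
    """Weighted harmonic mean of precision and recall (beta_sq is beta squared)."""
    denom = beta_sq * precision + recall
    if denom == 0:
        return 0.0
    return (1 + beta_sq) * precision * recall / denom


# (mAP, mAR, tabulated third value) copied from the comparison table.
rows = {
    "DRFI, SegTrackV2": (0.464, 0.775, 0.511),
    "RFCN, SegTrackV2": (0.759, 0.719, 0.749),
    "CSP, VOS": (0.805, 0.910, 0.827),
}
for name, (p, r, reported) in rows.items():
    print(f"{name}: computed {f_beta(p, r):.3f}, reported {reported:.3f}")
```

Because β² is below 1, the measure weights precision more heavily than recall, which is why the third value stays closer to mAP in rows where the two differ widely.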

| Test Case | Backbone (VGG16/ResNet50) | √ marks (among CE, Comple., NRF, Multi-Test) | mAP | mAR | Fβ | mIoU | mT |
|---|---|---|---|---|---|---|---|
| V-Init. FG | VGG16 | √ | 0.750 | 0.879 | 0.776 | 0.684 | 0.117 |
| V-Init. BG | VGG16 | √ | 0.743 | 0.884 | 0.771 | 0.680 | 0.117 |
| V-Init. (FG + BG) | VGG16 | √ | 0.791 | 0.834 | 0.800 | 0.689 | 0.121 |
| V-Init. + NRF | VGG16 | √ √ | 0.789 | 0.870 | 0.806 | 0.710 | 0.109 |
| R-Init. FG | ResNet50 | √ | 0.763 | 0.901 | 0.791 | 0.710 | 0.128 |
| R-Init. BG | ResNet50 | √ | 0.764 | 0.899 | 0.791 | 0.711 | 0.128 |
| R-Init. (FG + BG) | ResNet50 | √ | 0.808 | 0.863 | 0.820 | 0.724 | 0.127 |
| R-Init. FGp | ResNet50 | √ √ | 0.768 | 0.925 | 0.800 | 0.726 | 0.124 |
| R-Init. BGp | ResNet50 | √ √ | 0.763 | 0.927 | 0.796 | 0.723 | 0.124 |
| R-Init. (FGp + BGp) | ResNet50 | √ √ | 0.814 | 0.883 | 0.829 | 0.739 | 0.122 |
| R-Init. + NRF | ResNet50 | √ √ √ | 0.803 | 0.901 | 0.824 | 0.739 | 0.108 |
| R-Init. + NRFp | ResNet50 | √ √ √ √ | 0.805 | 0.910 | 0.827 | 0.747 | 0.097 |

| Step | mAP | mAR | Fβ | mIoU | T |
|---|---|---|---|---|---|
| R-Init. FGp (Sup.) | 0.765 | 0.924 | 0.797 | 0.723 | 0.133 |
| R-Init. BKp (Sup.) | 0.759 | 0.926 | 0.792 | 0.719 | 0.133 |
| R-Init. FGp + BKp (Sup.) | 0.814 | 0.881 | 0.829 | 0.738 | 0.129 |

| Color Space | mAP | mAR | Fβ | mIoU |
|---|---|---|---|---|
| RGB | 0.785 | 0.862 | 0.801 | 0.703 |
| Lab | 0.786 | 0.860 | 0.802 | 0.702 |
| HSV | 0.787 | 0.866 | 0.804 | 0.707 |
| RGB + Lab + HSV | 0.789 | 0.870 | 0.806 | 0.710 |

| Key Step | Speed (s/frame) |
|---|---|
| Initialization (+) | 0.05 (0.36) |
| Superpixel & Feature (+) | 0.12 (0.12) |
| Build Flow & Propagation (+) | 0.02 (0.26) |
| Primary Object Seg. (+) | 0.01 (0.01) |
| Total (+) | ∼0.20 (0.75) |
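As a quick arithmetic check, the per-step timings in the table can be summed and converted to a frame rate. The sketch below copies the values from the table. The dictionary layout and variable names are illustrative, and the parenthesised figures are treated simply as a second configuration, marked (+) in the table, without assuming what that configuration is.

```python
# Seconds per frame for each key step: (default, parenthesised "(+)" value).
steps = {
    "Initialization": (0.05, 0.36),
    "Superpixel & Feature": (0.12, 0.12),
    "Build Flow & Propagation": (0.02, 0.26),
    "Primary Object Seg.": (0.01, 0.01),
}

total_default = sum(t[0] for t in steps.values())
total_plus = sum(t[1] for t in steps.values())

print(f"default: {total_default:.2f} s/frame, about {1 / total_default:.1f} frames/s")
print(f"(+):     {total_plus:.2f} s/frame, about {1 / total_plus:.1f} frames/s")
```

The sums match the totals reported in the last row, and in the default configuration superpixel and feature extraction accounts for more than half of the per-frame time.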


© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Wu, J.; Li, J.; Xu, L. Complementary Segmentation of Primary Video Objects with Reversible Flows. Appl. Sci. 2022, 12, 7781. https://doi.org/10.3390/app12157781
