1. Introduction

In recent years, rising demand for industrial products has forced manufacturers to optimize production lines in order to remain competitive. In addition, aging populations and changing work preferences in developed countries have encouraged the development of new automation technologies. As a consequence, smart robotic systems have emerged as a compelling solution for automating processes. Robotic systems have proved to be outstanding at repetitive tasks. In the future, with the rise of artificial intelligence, robot systems are expected to perform more complex tasks and adapt to changing environments.

Bin picking is an example of such a task. It is generally defined as the process of emptying a container of randomly distributed pieces and placing them in a specific position. In spite of its simple definition, bin picking poses a series of non-trivial challenges, such as the localization of pieces, the calculation of grasps, and collision-free path planning and control. These tasks require a high level of cognitive capability. Moreover, the difficulty of these challenges may vary depending on the type of pieces and the environment setup.

Bin-picking systems are usually composed of an optical sensor (3D or 2D), a robot, and an end-effector (magnet, vacuum, gripper, etc.). The choice of these components varies depending on the application. A typical workflow starts with the localization of the pieces, which analyzes the data acquired from the optical sensor and obtains valid object poses. This is followed by the grasping pose calculation and the trajectory generation towards the grasping poses. The robot executes a collision-free trajectory in order to pick the object and place it at the desired destination. This process is repeated until the bin is empty or it is impossible to pick the remaining pieces.

Bin picking has been addressed by researchers in both academia and industry with different goals in mind, as it combines the fields of robotics and artificial intelligence. Academia tends to focus on the picking efficacy of unknown objects, typically lightweight everyday objects, as in the Amazon Robotics Challenge [1]. In the industrial context, more emphasis is placed on cycle time, robustness, and reliability. Moreover, the model of the pieces is normally known beforehand. However, in contrast to lightweight everyday objects, industrial pieces are usually big and heavy and require compliance with traceability and safety standards. On the other hand, the constant demand for customized products requires higher flexibility in an industrial bin-picking application, to lower the effort spent on switching among different production lines or hardware. For instance, it is common to use specifically designed end-effectors for picking up different types of pieces in real applications. The benefits of automated industrial bin-picking systems are two-fold: first, they increase the speed of the production process, and second, human resources can be reallocated to other tasks that are less backbreaking.

Developing a bin-picking system that overcomes all these challenges at once is not an easy feat. Industries tend to rely on ad-hoc solutions for well-defined picking problems, which can hardly be adapted to other application scenarios. In this sense, a considerable amount of design work is necessary since not every robotic manipulator or gripper is suitable for every bin-picking problem. It takes a lot of effort to make even a small change in a pipeline of this type. In recent years, several commercial and open-source software packages have been developed to simulate [2] and implement solutions for the previously mentioned challenges. Commonly, off-the-shelf software is easier to use and more intuitive, but it is restricted to the supported robot components and comes with a steep price tag. Open-source software tends to be agnostic to the hardware and more flexible, which provides the possibility of developing reusable applications. The downside is that users may have a hard time implementing even simple setups effectively, which raises the barrier to entry [3].

In this work, we present a bin-picking framework named PickingDK, which provides an efficient way of building industrial bin-picking applications. The proposed framework is operating system (OS)-independent and deals with sensor data acquisition, localization, grasping computation, path planning, and robot control. PickingDK is intended for easy and flexible configuration of bin-picking applications as it provides different functionalities depending on the expertise level of the user. The main novelty of the PickingDK framework lies in the architecture itself, which makes the software extendable since the user also has the opportunity to develop certain functionalities as plugins. This work is structured as follows. Section 2 presents the related work in the field. In Section 3, the proposed framework is described in detail. In Section 4, a comparison between PickingDK and Robot Operating System (ROS)-based frameworks is presented to discuss the pros and cons of PickingDK. Section 5 presents use cases where multiple bin-picking applications are built with the PickingDK framework for different user-level profiles. Section 6 presents a discussion and future work. Finally, Section 7 draws conclusions.

2. Related Work

Academia and industry have been working on bin picking for decades. Various works focusing on specific modules of the bin-picking process have been presented in recent years. Different types of sensors are used for bin-picking tasks, such as 2D/3D cameras. Based on the obtained visual data, approaches for object localization are studied. Drost [4] presents a 3D object localization method from point cloud data obtained from a range scanner. Chang [5] uses a structured light sensor mounted on the robot to perform the localization of the pieces. Liu [6] employs neural networks that detect occlusion-free objects in 2D images and then propagate this result back to 3D. Xu [7] uses 2D and 3D information to perform precise localization: instance segmentation of an RGBD image provides a prior estimate, which is then fine-tuned via classical matching techniques. On the other hand, various types of grippers, such as magnet, vacuum, and parallel jaw, are exploited to pick objects with different shapes. Mathiesen [8] designed a gripper able to pick and reorient small pieces from clutter without vision, but it is specific to a certain component shape. In [9], a multi-tool which uses suction and multiple fingertips is presented; the system automatically chooses the appropriate tool for each object. Studies and surveys on grasping and manipulation strategies are presented in [10,11,12]. In the robotics field, works on path planning such as MoveIt [3], Orocos [13], the Open Motion Planning Library [14], and the Robotics Library [15], and on robot control [16], present several software tools that are widely used by the robotics community.

On the other hand, recent works such as [17,18,19,20,21,22] focus on addressing specific bin-picking applications with deep learning approaches, which estimate the grasping point directly from the visual data and depth information and achieve a success rate comparable to a more classic approach such as point cloud registration [23]. These studies are still applied only in an experimental academic context since their results depend heavily on the input dataset and require a considerable amount of training time. A review of deep learning techniques applied to grasping pose computation is presented in [11].

These methods are not applicable to the industrial context because industrial applications require high precision and control over the grasping point, i.e., each application needs a specific grasping configuration. Therefore, industry-focused studies such as [24,25,26,27,28] treat localization and grasping estimation as separate problems. However, these studies provide ad-hoc solutions for particular bin-picking cases with limited generalization ability. In [29,30], bin-picking datasets of industrial objects intended for learning-based approaches are presented.

Frameworks that orchestrate independently developed bin-picking modules become critical when building robust and flexible industrial bin-picking applications. Tavares [31] exploits an ROS-based architecture to adapt different bin-picking modules, where the crucial parts of the system are implemented as ROS nodes and the data flows between different modules are represented as ROS messages. However, the framework is tailored to only a few pick-and-place scenarios. Furthermore, ROS has relevant security issues, as reported in [32], which also prevent it from being a reliable solution for industrial applications. Advances in fixing the security issues were made with the release of ROS2 [33]. However, it is still not mature enough to be applied in industrial applications. The work in [34] presents a versatile interface-based framework for planning and designing. It focuses more on the simulation of various bin-picking applications and has not been verified in real bin-picking setups. This work is developed under the Microsoft .NET framework, which makes it OS-dependent.

A few bin-picking solutions are also developed by companies as commercial products, such as Pickit [35], AccuPick3d [36], Bin-Picking Studio [37], and Inpicker [38]. The major drawbacks are that they are normally not open-source and are restricted to specific sensors or robots.

3. Overview of PickingDK

To cope with the challenges in industrial bin-picking tasks mentioned in Section 1, we developed a functional framework that is independent of specific picking applications, called PickingDK. It modularizes the commonly needed functionalities into different types of services with predefined interfaces, supporting an agile integration of different algorithms. In practice, PickingDK employs a plugin/service-based architecture, where the functionalities are abstracted into different types of services. These services behave as interfaces to distribute reusable functionalities and deploy customizable bin-picking applications. This also provides a unified usage of virtual and real bin-picking services, which allows them to be freely combined. In this sense, quick proof of concept and adaptation to real scenarios can be easily achieved when building a bin-picking application. PickingDK provides a general workflow, named Picking Pipeline, which standardizes bin-picking processes in order to ease solution development.

3.1. Picking Pipeline: Standardized Bin-Picking Process

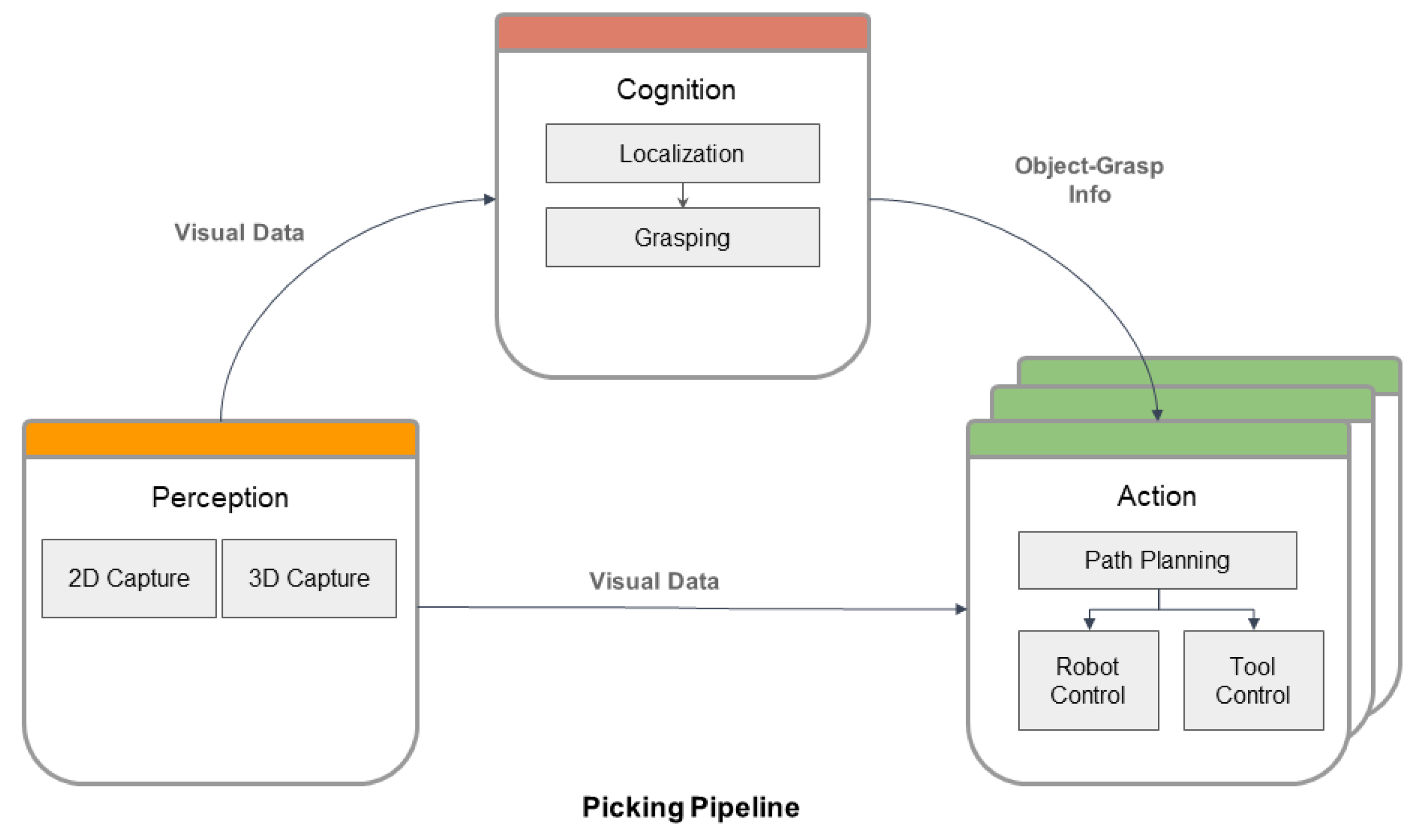

In PickingDK, a picking application is formed as a picking pipeline that represents a general process with three different steps: perception, cognition, and action. As shown in Figure 1, the perception step captures visual data from the picking scene. The visual data is then fed to a cognition step, where various algorithms are applied to analyze the input data; this step deals with piece localization and feasible grasping calculation. Finally, with the grasps computed in the cognition step, the action steps work on collision-free planning, trajectory execution, and tool control. Note that the action steps may also require visual data information in order to adapt to environmental changes such as piece reallocation.

In each step, the general execution logic is described in terms of the predefined service types. Taking the cognition step as an example, PickingDK first localizes the objects to be picked using a localization service. Then, a grasping service computes all of the possible collision-free grasps given the localized object poses. Finally, the obtained collision-free grasps and object positions are forwarded to the action steps. A picking process is usually composed of different robot-tool movements, i.e., moving from the home pose to the pre-pick pose, from the pre-pick pose to the pick pose, and from the pick pose to the post-pick pose. Each movement is encoded as an action step. Thus, every pipeline has multiple action steps.

In this manner, the behaviour of each step is configurable: suitable services are selected to address specific picking problems. Figure 1 also presents the service types predefined in the three steps of a picking pipeline.
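The perception, cognition, and action flow described above can be illustrated with a minimal Python sketch. It is not PickingDK's actual API: the names `Grasp` and `run_pipeline`, the callable signatures, and the choice of simply taking the first grasp are all assumptions made for illustration.

```python
from dataclasses import dataclass
from typing import Callable, List, Sequence

# Hypothetical types for illustration only; not PickingDK's real API.
Pose = Sequence[float]  # e.g. (x, y, z, roll, pitch, yaw)


@dataclass
class Grasp:
    object_pose: Pose
    grasp_pose: Pose


def run_pipeline(
    capture: Callable[[], object],
    localize: Callable[[object], List[Pose]],
    compute_grasps: Callable[[List[Pose]], List[Grasp]],
    action_steps: List[Callable[[Grasp], bool]],
) -> bool:
    """Run one picking cycle; return True if every action step succeeded."""
    data = capture()                 # perception: acquire visual data
    poses = localize(data)           # cognition: object localization
    grasps = compute_grasps(poses)   # cognition: grasp computation
    if not grasps:
        return False                 # nothing pickable in this cycle
    best = grasps[0]                 # assumption: grasps arrive already ranked
    # action: one callable per robot-tool movement, run in order;
    # all() stops at the first step that reports failure
    return all(step(best) for step in action_steps)
```

Because each stage is passed in as a callable, swapping a real capture or planning stage for a virtual one does not change the pipeline code, which mirrors the configurability the text describes.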

3.2. Plugin-Service Based Architecture

PickingDK exploits a plugin-service based architecture to support the above-mentioned flexibility in building a picking application. Services are encapsulated into plugins and compiled as dynamic libraries that can be loaded by a program.

PickingDK defines 7 types of services that are generally needed in picking applications:

Capture2DService: manages the camera connection, configuration, and image captures.

Capture3DService: manages the camera connection, configuration, and point-cloud captures.

LocalizationService: performs object localization based on visual data and obtains 6 DOF object poses.

GraspingService: computes grasps for the robot given object poses.

PathPlanningService: computes collision-free robot trajectories in order to reach the desired poses.

RobotControlService: controls the robot movement.

GripperControlService: controls the end effector.

Each type of service is generalized and abstracted with respect to its functionalities. Therefore, service interfaces are standardized and decoupled from their implementations. This makes PickingDK an extendable library, where developers can implement new services as plugins, which can be used in a picking pipeline.

Figure 2 illustrates the concept of picking pipeline customization.

In PickingDK, there is a selection of plugin-services that are applied to different picking applications.

Table 1 lists the available plugin-services in PickingDK, while in Section 5 we present several use cases which involve the listed plugin services.

3.3. PickingDK User Types

PickingDK intends to be usable for users with different levels of experience.

Figure 3 shows an overall architecture of PickingDK, where different levels of interactions are granted for each user type. PickingDK addresses three types of user.

End-users interact with PickingDK via the graphical user interface, which allows them to configure and execute a basic picking pipeline. This level is meant for system integrators who are not familiar with Python or C++.

Mid-level Python users are granted limited functionalities exposed via Python bindings, which allow them to access intermediate data and craft and test more complex picking pipelines. This level is meant for users with basic Python knowledge.

C++ developers have the maximum freedom to interact with PickingDK, which allows them to develop and use new plugin services. This level is meant for skilled users with knowledge in C++.

3.4. PickingDK User Interface

The outermost layer of abstraction in PickingDK is composed of the two user interfaces. The first one is an application intended to ease the process of defining a picking application in a wizard-like style, where the user is guided through a series of steps. The second interface is used to monitor and control the execution of the picking process.

In this way, even a person without any programming experience can test and implement a variety of picking scenarios. This PickingDK level can also be used as a fast prototyping tool since it is possible to define every combination of robot, gripper, and piece as long as the necessary plugins and data are available. Once the system is defined, all of the necessary files are automatically packed in a compressed archive. This archive contains all of the necessary algorithms, parameter files, models, and a Python file that describes the picking pipeline. Then, the monitor interface loads this archive and starts the bin-picking application.

Figure 4 shows a snapshot of the user interface. The left panel shows the three main configuration steps. The first one allows the user to define the scene, the second one is used to specify the grasping points of the object, and the last one is used to select the required plugins along with their parameters. The scene specification step additionally has four steps where the user can indicate the environment CAD model, the size of the container, the robot model, its home and drop pose, the end-effector, the camera, and the piece reference model. Additionally, the right panel shows interaction options with scene objects. The center of the graphical interface is composed of a 3D view of the current scene.

Figure 5 shows a picture of the monitor application, which acts as a digital twin of the real environment. The right panel displays several buttons to control the process. The top bar shows the state of the current picking cycle, while the central window shows the virtual representation of the environment. It has to be noted that both interfaces were designed with a minimalistic approach in order to ease the learning process. Experienced users can modify the configuration files to customize all the process parameters as well as the coordinate system settings. Videos of both graphical interfaces can be found in the Supplementary Materials.

4. Framework Comparison

In this section, a comparison is performed between PickingDK and other bin-picking frameworks based on their architectures and available features.

4.1. ROS Based Frameworks

The Robot Operating System (ROS) is a flexible framework for developing robot software, composed of a collection of tools, libraries, and conventions. ROS provides a thin, message-based, peer-to-peer architecture for general robotic applications. Bin-picking frameworks based on ROS benefit from the standardized input/output data structures (ROS messages) for bin-picking modules, as well as the ROS action paradigm for possible asynchronous execution in an industrial process.

However, industrial bin-picking applications display highly sequential behavior, where the output of the previous step serves as the input of the following step. Therefore, asynchronous behaviour does not provide substantial improvement and increases architecture complexity and resource consumption.

Working with ROS also implies some disadvantages. For instance, ROS is mainly used in Linux systems, and it is not fully supported in other operating systems. However, industrial manufacturers normally have their manufacturing execution system (MES) and the related infrastructures based on Windows.

Moreover, system security is of great importance in an industrial bin-picking application. This discourages the usage of ROS in this context as it suffers from significant security issues.

In PickingDK, data flows through the inputs and outputs of the standardized interfaces, which avoids the security issues of ROS. The fact that the proposed framework is OS-independent provides higher flexibility and adaptability compared to ROS-based frameworks.

In [31], an ROS-based pick-and-place framework is presented. Each functionality is represented by an ROS node, such as capture image, process image, robot control, and tool control. The process is orchestrated by a control node that performs the sequence of actions. This architecture is analogous to PickingDK, where functionalities are services and the picking pipeline acts as a coordinator. However, this ROS-based framework is only meant for ROS users since knowledge about ROS is required. Moreover, any modifications have to be made from scratch as no interfaces or APIs are provided. Taking that into account, its usability is very limited in the industrial context. In contrast, PickingDK overcomes this problem thanks to its user interaction levels and its ROS independence.

It is worth noting that PickingDK allows any kind of service implementation, including ROS-based services in the plugins, although the plugin-service based architecture is employed in the framework to avoid critical problems in ROS. The advantage is that PickingDK provides freedom of plugin implementation, while the plugin selection is done by the user who builds the bin-picking application.

4.2. Commercial Frameworks

Comparing different commercial bin-picking frameworks is not a straightforward task. Since they are commercial, licenses and equipment are required, and the software architecture is not disclosed to the user. Moreover, the evaluation of picking performance is out of the scope of this work since picking performance would strongly depend on the choice of evaluation case and would not generalize well.

Therefore, in this subsection, a feature comparison is made based on the information presented in their respective websites.

Table 2 shows a summary of this comparison.

All commercial frameworks provide support for multiple robot brands. The compatible robot catalogue is limited and established by the vendors. These catalogues contain the most popular industrial robot brands. However, they fail to provide support for custom robots. That is not the case for PickingDK, in which the user can connect to any robot or system, as long as the appropriate plugin is developed.

Commercial frameworks tend to provide sensor-software solutions. Thus, the supported sensors are the ones provided by the vendor itself. Pickit and Bin-Picking Studio can only work with structured-light sensors, while InPicker and Accupick3D can also work with other sensor types such as stereo vision. Apart from sensors, some of them also include a hardware controller with the embedded software. As a consequence, these frameworks are hardware-dependent. In contrast, PickingDK allows sensors to be used independently of the brand, which may be quite favourable when meeting system requirements. Besides, PickingDK is completely hardware-agnostic.

In terms of localization algorithms, the information provided by the commercial frameworks is limited. The user can modify some parameters in order to adapt better to a particular case, but has no knowledge of the underlying algorithm. This is usual since this software is oriented to users with little computer-vision knowledge. However, computer vision experts could develop custom algorithms that better fit particular requirements. PickingDK provides this flexibility thanks to its plugin-based architecture. In terms of the grasping point calculation, the grasping point must be specified over the CAD model of the piece; this method works fine for industrial applications since its computation is straightforward. However, it restricts its performance to known objects.

All five frameworks present the idea of a wizard-style graphical interface to define the bin-picking process, which favours simplicity. The bin-picking process is also displayed in a graphical interface showing representative data to the user. However, for complex scenarios or debugging purposes, it is advisable to have finer control of the process. For advanced users, PickingDK allows for high customisability since it offers complete control of the process data flow.

5. Cases of Study

In this section, experiments are presented to demonstrate the functionalities in PickingDK framework from different aspects.

5.1. Use Case 1-Prototype Case

This use case shows a picking prototype built on PickingDK, where a 7 Degree Of Freedom (DOF) LBR iiwa 14, a Schmalz vacuum ECBPi 12, and an Ensenso N35 are employed to pick up metal pieces with a thin plate shape.

Figure 6 shows the environment setup for this picking prototype.

To cope with the localization task, a custom localization service called EdgeMatchingLocalization was developed in PickingDK. The EdgeMatchingLocalization service combines both 2D and 3D information captured from the Ensenso. It first predicts oriented bounding boxes of the metal pieces given a grayscale image. Then, it back-projects the 2D points within each bounding box to 3D. Finally, it performs a principal component analysis (PCA) to obtain the 6 DOF object poses; see the localization results in Figure 7. The picking preference for each localized object is computed with respect to the pulling difficulty. Pulling difficulty is defined as the size of the occluding point cloud around the object along a pulling direction.
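The final PCA step can be illustrated with a short NumPy sketch. It is an assumption about how a pose could be derived from back-projected points, not the EdgeMatchingLocalization implementation: the function name `pca_pose` and the axis convention (x along the largest variance, z along the smallest) are hypothetical.

```python
import numpy as np


def pca_pose(points: np.ndarray) -> np.ndarray:
    """Return a 4x4 homogeneous pose estimated from an (N, 3) point array via PCA."""
    centroid = points.mean(axis=0)
    cov = np.cov((points - centroid).T)      # 3x3 covariance of the centred points
    _, eigvecs = np.linalg.eigh(cov)         # eigenvalues in ascending order
    x_axis = eigvecs[:, 2]                   # direction of largest variance
    y_axis = eigvecs[:, 1]                   # direction of second-largest variance
    z_axis = np.cross(x_axis, y_axis)        # completes a right-handed frame
    pose = np.eye(4)
    pose[:3, 0] = x_axis
    pose[:3, 1] = y_axis
    pose[:3, 2] = z_axis
    pose[:3, 3] = centroid                   # translation: centre of the points
    return pose
```

For a thin plate, the z axis obtained this way approximates the plate normal. PCA leaves the sign of each axis undetermined, so a real service would need an extra rule (for example, pointing the normal towards the camera) to resolve the ambiguity.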

On the other hand, the MoveItPlanner service, a plugin that communicates with MoveIt and ROS, is utilized to deal with the path planning task. It computes collision-free robot trajectories using Rapidly-exploring Random Trees (RRTs). For controlling the robot and gripper, we employ the ROSRobotControl and SchmalzVacuumControl services in PickingDK. The ROSRobotControl service contains an integrated ROS controller that provides configuration and control interfaces for the robot. In SchmalzVacuumControl, the Schmalz vacuum gripper is controlled by a Wemos D1 mini board connected via WiFi.

The prototype is validated 10 times with 13 randomly distributed pieces. The experimental results show that it clears all 13 pieces in every experiment. It achieves a cycle time of less than 8 s at 50% of the robot's maximum speed.

5.2. Use Case 2-Industrial Case

In this use case, another picking application is built with PickingDK. This use case presents a real industrial bin-picking scenario, which employs an industrial robot (KUKA KR20 R1810) equipped with a pneumatic magnet gripper and an Ensenso N30, which are managed by a Beckhoff Programmable Logic Controller (PLC). The pieces to be picked are hat-shaped iron pieces of around 13 kg.

Figure 8 shows the environment setup for this picking application.

To address the localization problem, a service called LCCPLocalization is exploited, where a classic segment-and-register process is utilized. In practice, the input point cloud is segmented using locally convex connected patches (LCCP) [39]. Then, iterative closest point (ICP) is performed on the segments to obtain the final 6 DOF object poses.

The performance of this localization service is evaluated on a manually annotated dataset. It includes 24 frames captured using Ensenso N30 in real scenes, where the number of detectable pieces varies from 27 to 61.

Table 3 shows the quantitative evaluation of the LCCPLocalization service, where the true positives (TP), false positives (FP), false negatives (FN), precision, recall, and processing time are measured. In industrial bin-picking applications, it is critical to have virtually zero FP to ensure efficacy in the picking process. Thus, in our experiment, the LCCPLocalization service is configured with a high fitness threshold in the ICP registration phase. This increases localization accuracy at the expense of recall. With this configuration, an overall 100% precision and 50.8% recall are achieved within 4.1 s per frame on average.

Figure 9 presents a bar chart on the TP against FN.

Figure 10 shows a qualitative result. The most promising localized object poses are marked in red, green, and blue, respectively, as well as the best grasping pose shown with a magnet gripper.
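For reference, the precision and recall figures reported above follow the standard definitions, precision = TP / (TP + FP) and recall = TP / (TP + FN). A minimal Python sketch is given below; the function name is hypothetical, and the counts in the usage note are made up for illustration and are not the values of Table 3.

```python
from typing import Tuple


def precision_recall(tp: int, fp: int, fn: int) -> Tuple[float, float]:
    """Standard detection metrics computed from raw counts."""
    # Guard against empty denominators (no detections or no ground-truth objects).
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    return precision, recall
```

With hypothetical counts of 30 true positives, 0 false positives, and 30 false negatives, `precision_recall(30, 0, 30)` gives a precision of 1.0 and a recall of 0.5. This illustrates the trade-off described in the text: a strict acceptance threshold can eliminate false positives while leaving many pieces undetected in a given frame.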

Similar to the previous use case, the MoveItPlanner service is used for computing the optimal robot trajectories. However, the robot and gripper control are achieved by the BeckhoffRobot and BeckhoffMagnet Control services. These services communicate directly with the PLC that governs the robot. This control scheme is widely used in industries as the PLC also controls safety elements such as optical barriers and the emergency button, and it provides a fast response to any incident.

5.3. Use Case 3-Design Evaluations

In this experiment, a reachability test is performed to compare performance in four different scenarios: the combinations of two positions for the robot base (ceiling and ground, see Figure 11) with two different end-effectors (straight and angled, see Figure 12).

We use a hybrid combination of virtual plugins for capture, localization, grasping, and control, and a functional plugin for path planning, in this case the MoveItPlanner service. A pseudo-uniform sampling of possible grasping poses inside the bin is performed. Then, trajectories that comprise a basic pipeline, i.e., go to the piece, pick it, and place it in another location, are generated for each pose.

Table 4 shows the success ratio of the path planning. The planning computation time is displayed in Figure 13; note that only successful plans are taken into account.

From the reachability results, it can be concluded that the ground position is more advisable as it presents higher success ratios. Comparing planning times for scenarios 2 and 4, the straight end-effector presents a slightly faster planning time, whereas its reachability is marginally lower. In this way, the designer’s choice is supported by objective data.
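The structure of such a reachability test can be sketched in Python under simplifying assumptions. The sketch samples only positions on a regular grid (the orientation part of a grasping pose is omitted), and the planner is represented by a hypothetical callable `plan` that returns whether a trajectory was found; neither detail is taken from the PickingDK implementation.

```python
import itertools
from typing import Callable, List, Tuple

Point = Tuple[float, float, float]


def grid_samples(size: Point, n: int) -> List[Point]:
    """Return n*n*n evenly spaced positions inside a bin with one corner at the origin."""
    # Cell-centred sampling: each axis is split into n cells and the centre is used.
    axes = [[s * (i + 0.5) / n for i in range(n)] for s in size]
    return list(itertools.product(*axes))


def success_ratio(samples: List[Point], plan: Callable[[Point], bool]) -> float:
    """Fraction of sampled positions for which the planner reports success."""
    return sum(plan(p) for p in samples) / len(samples)
```

Running `success_ratio` once per scenario, with the same samples and a planner configured for each robot-base and end-effector combination, yields ratios that can be compared directly, as in Table 4.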

5.4. Use Case 4-Synthetic Data Generation for Parameter Optimization

In this use case, parameter optimization with synthetic data using PickingDK is shown. The appropriate parameter configuration of an algorithm ensures its performance and efficiency. Traditionally, parameter fine-tuning is done manually based on the physical meaning of the parameters. Manual configuration has clear drawbacks:

It requires expertise in a specific algorithm, which limits the flexibility of applying an algorithm to different scenarios.

It lacks annotated data for qualitatively evaluating parameter settings.

Thus, in PickingDK, virtual capture services are introduced to cope with the data generation problem. The real bin-picking scene is simulated and rendered to obtain synthetic data and ground truth annotations.

Taking the VirtualCapture service as an example, synthetic data generation starts by throwing user-provided pieces with random initial poses into a container. Physics simulation is then performed until all the pieces reach a stationary state; this simulates a real picking scene with randomly posed pieces. Finally, a rendering process is employed to obtain multi-modal information from this virtual scene, such as color image, depth map, and point cloud, as well as the ground truth data, such as the segmentation mask and 6 DOF object poses.

Figure 14 shows an example of the captured synthetic point cloud.

With the synthetic data and its ground truth, a simple experiment is performed in order to optimize the sampling factor of the PPFLocalization service [4]. An appropriate setting for the sampling factor is searched for with respect to the detection rate using the synthetic data. A sampling factor n means that the algorithm samples 1 out of every n points in the input point cloud. Point pair features are computed within the neighborhood of these sampled points and compared with the trained model. A detection is considered valid when the pose error between the detected and ground truth poses is lower than a certain threshold.

Figure 15 shows the experiment result, where the parameter “sampling factor” is searched within the interval [10, 100] with the step length 10. A larger sampling factor reduces the overall processing time while providing less localization accuracy. The increase of detection rate from sampling factor 10 to 30 shows that sparser sampling, to some extent, provides higher robustness against the noise in the input point cloud.
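The parameter search can be sketched in Python as follows. This is a simplified illustration, not the PPFLocalization code: taking every n-th point by stride is an assumption about how "1 per n points" is realized, and `detection_rate` is a hypothetical callable standing in for running the localization service and scoring it against the ground truth.

```python
from typing import Callable, Dict, Iterable

import numpy as np


def subsample(points: np.ndarray, n: int) -> np.ndarray:
    """Keep 1 out of every n points of an (N, 3) cloud (sampling factor n)."""
    return points[::n]


def sweep(
    points: np.ndarray,
    detection_rate: Callable[[np.ndarray], float],
    factors: Iterable[int] = range(10, 101, 10),
) -> Dict[int, float]:
    """Evaluate the detection rate for each sampling factor in [10, 100], step 10."""
    return {n: detection_rate(subsample(points, n)) for n in factors}
```

The factor with the best trade-off between detection rate and processing time can then be read from the returned dictionary, for example with `max(result, key=result.get)` when only the detection rate matters.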

6. Discussion

The presented use-cases show the contributions made by the PickingDK framework in different aspects of a bin-picking solution process.

In use cases 1 and 2, picking pipelines are successfully executed in different scenarios using different plugins. Use case 1 presents a more research-oriented pipeline, while use case 2 represents a real industrial scenario. Moreover, these pipelines were defined from different user-level categories. These use cases illustrate the flexibility of the applications built with the PickingDK framework.

Use cases 3 and 4 focus on the a priori evaluation of the feasibility of a bin-picking project. Before an industrial bin-picking project comes into existence, it is necessary to first verify the production process to be automated. Thanks to the plugin-service architecture, virtual services are treated as one kind of service implementation that behaves the same as functional services in PickingDK. This enables hybridization between virtual and functional services to optimize the system before real commissioning. Hybridization makes it convenient to build prototypes for proof-of-concept purposes in any step of a bin-picking application. For instance, use case 3 focuses on the design step of the cell. Cell design plays a major role in the performance of the solution. The approach presented in use case 3 ensures a favorable configuration for the robot-tool system before the real cell design process. Moreover, the reachability study performed in use case 3 does not require any hardware, which saves costs. Use case 4 shows a prototype for quick evaluation and fine-tuning of a localization algorithm with synthetic data. This eases the algorithm design step of a bin-picking application and provides confidence for the subsequent economic investments. Hybridization can also serve as a testing step for optimizing service performance.
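The idea that a virtual service is just another implementation of a service type can be sketched with abstract base classes. The service type names `LocalizationService` and `GraspingService` and the plugin name `VirtualLocalization` come from Table 1; everything else (method names, the `TopDownGrasping` class, the `PickingPipeline` class, and the position-only pose) is a hypothetical simplification and not the PickingDK API.

```python
from abc import ABC, abstractmethod
from typing import Iterable, List, Tuple

Pose = Tuple[float, float, float]  # simplified: position only, in metres


class LocalizationService(ABC):
    @abstractmethod
    def localize(self) -> List[Pose]:
        """Return the poses of the pieces found in the scene."""


class GraspingService(ABC):
    @abstractmethod
    def grasps_for(self, poses: List[Pose]) -> List[Pose]:
        """Return one grasp pose per object pose."""


class VirtualLocalization(LocalizationService):
    """Virtual service: replays user-defined poses instead of processing sensor data."""

    def __init__(self, poses: Iterable[Pose]) -> None:
        self._poses = list(poses)

    def localize(self) -> List[Pose]:
        return list(self._poses)


class TopDownGrasping(GraspingService):
    """Hypothetical functional service: grasp from a fixed offset above each piece."""

    def __init__(self, approach_offset: float) -> None:
        self._offset = approach_offset

    def grasps_for(self, poses: List[Pose]) -> List[Pose]:
        return [(x, y, z + self._offset) for x, y, z in poses]


class PickingPipeline:
    """Depends only on the service types, so virtual and functional services can be mixed."""

    def __init__(self, localization: LocalizationService, grasping: GraspingService) -> None:
        self._localization = localization
        self._grasping = grasping

    def run_once(self) -> List[Pose]:
        return self._grasping.grasps_for(self._localization.localize())
```

Because the pipeline only sees the abstract types, swapping `VirtualLocalization` for a sensor-backed implementation would not require any change to the pipeline itself.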

Thanks to its plugin-based architecture, the PickingDK framework is highly extensible. Its plugins are continuously updated, and the following aspects are being considered:

Integration of Deep Neural Networks: As state-of-the-art methods suggest, deep learning approaches are showing promising results. Deep learning methods for object localization or grasp estimation may be implemented as plugins to be used inside the cognitive step.

Integration of GPU-accelerated algorithms: To improve performance, motion planning algorithms will be implemented with GPU acceleration.

Communication layer: In the industrial context, integrating this framework with the manufacturing execution systems of companies can be substantially beneficial. Therefore, in the future, we plan to add a communication layer with standard protocols such as OPC-UA [40].

7. Conclusions

In this work, we have presented a reproducible bin-picking framework called PickingDK. The main contributions of the PickingDK framework are as follows: its flexibility, thanks to its plugin-based architecture; its ability to cope with different hardware and integrate third-party libraries for specific functions; its fast prototyping and quick proof-of-concept, as it provides hybridization among virtual/functional plugins; and its usability, as it offers different levels of control for beginners and competent or expert users.

Author Contributions

Conceptualization, M.O., X.L. and J.R.S.; software, M.O., X.L. and A.T.; validation, M.O. and X.L.; formal analysis, M.O. and X.L.; investigation, M.O. and X.L.; visualization, A.T.; writing—original draft preparation, M.O., X.L. and A.T.; writing—review and editing, M.O., X.L. and J.R.S.; supervision, J.R.S.; Funding acquisition, J.R.S. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Data Availability Statement

Not applicable.

Acknowledgments

We would like to thank Ekide Group for its support.

Conflicts of Interest

The authors declare no conflict of interest.

References

1. Correll, N.; Bekris, K.E.; Berenson, D.; Brock, O.; Causo, A.; Hauser, K.; Okada, K.; Rodriguez, A.; Romano, J.M.; Wurman, P.R. Analysis and observations from the first amazon picking challenge. IEEE Trans. Autom. Sci. Eng. 2016, 15, 172–188.
2. Collins, J.; Chand, S.; Vanderkop, A.; Howard, D. A Review of Physics Simulators for Robotic Applications. IEEE Access 2021, 9, 51416–51431.
3. Coleman, D.; Sucan, I.; Chitta, S.; Correll, N. Reducing the Barrier to Entry of Complex Robotic Software: A MoveIt! Case Study. arXiv 2014, arXiv:1404.3785.
4. Drost, B.; Ulrich, M.; Navab, N.; Ilic, S. Model Globally, Match Locally: Efficient and Robust 3D Object Recognition. In Proceedings of the IEEE Computer Society Conference on Computer Vision and Pattern Recognition, San Francisco, CA, USA, 13–18 June 2010; pp. 998–1005.
5. Chang, W.; Wu, C.H. Eye-in-hand vision-based robotic bin-picking with active laser projection. Int. J. Adv. Manuf. Technol. 2016, 85, 2873–2885.
6. Liu, D.; Arai, S.; Xu, Y.; Tokuda, F.; Kosuge, K. 6D Pose Estimation of Occlusion-Free Objects for Robotic Bin-Picking Using PPF-MEAM With 2D Images (Occlusion-Free PPF-MEAM). IEEE Access 2021, 9, 50857–50871.
7. Xu, Y.; Arai, S.; Liu, D.; Lin, F.; Kosuge, K. FPCC: Fast point cloud clustering-based instance segmentation for industrial bin-picking. Neurocomputing 2022, 494, 255–268.
8. Mathiesen, S.; Iturrate, I.; Kramberger, A. Vision-less bin-picking for small parts feeding. IEEE Int. Conf. Autom. Sci. Eng. 2019, 2019, 1657–1663.
9. Olesen, A.S.; Gergaly, B.B.; Ryberg, E.A.; Thomsen, M.R.; Chrysostomou, D. A Collaborative Robot Cell for Random Bin-picking based on Deep Learning Policies and a Multi-gripper Switching Strategy. Procedia Manuf. 2020, 51, 3–10.
10. Spenrath, F.; Pott, A. Gripping Point Determination for Bin Picking Using Heuristic Search. Procedia CIRP 2017, 62, 606–611.
11. Alonso, M.; Izaguirre, A.; Graña, M. Current Research Trends in Robot Grasping and Bin Picking. Adv. Intell. Syst. Comput. 2019, 771, 367–376.
12. Li, R.; Qiao, H. A Survey of Methods and Strategies for High-Precision Robotic Grasping and Assembly Tasks—Some New Trends. IEEE/ASME Trans. Mechatron. 2019, 24, 2718–2732.
13. Bruyninckx, H. Open robot control software: The OROCOS project. In Proceedings of the 2001 ICRA, IEEE International Conference on Robotics and Automation (Cat. No.01CH37164), Seoul, Korea, 21–26 May 2001; Volume 3, pp. 2523–2528.
14. Sucan, I.; Moll, M.; Kavraki, E. The Open Motion Planning Library. Robot. Autom. Mag. IEEE 2012, 19, 72–82.
15. Rickert, M.; Gaschler, A. Robotics library: An object-oriented approach to robot applications. In Proceedings of the 2017 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Vancouver, BC, Canada, 24–28 September 2017; pp. 733–740.
16. Quigley, M.; Conley, K.; Gerkey, B.; Faust, J.; Foote, T.; Leibs, J.; Wheeler, R.; Ng, A. ROS: An open-source Robot Operating System. In Proceedings of the ICRA Workshop on Open Source Software, Kobe, Japan, 12–17 May 2009; Volume 3.
17. Zeng, A.; Song, S.; Yu, K.T.; Donlon, E.; Hogan, F.R.; Bauza, M.; Ma, D.; Taylor, O.; Liu, M.; Romo, E.; et al. Robotic Pick-and-Place of Novel Objects in Clutter with Multi-Affordance Grasping and Cross-Domain Image Matching. In Proceedings of the 2018 IEEE International Conference on Robotics and Automation (ICRA), Brisbane, Australia, 21–25 May 2018; pp. 3750–3757.
18. Zeng, A.; Song, S.; Welker, S.; Lee, J.; Rodriguez, A.; Funkhouser, T. Learning Synergies Between Pushing and Grasping with Self-Supervised Deep Reinforcement Learning. In Proceedings of the International Conference on Intelligent Robots and Systems (IROS), Madrid, Spain, 1–5 October 2018; pp. 4238–4245.
19. Pinto, L.; Gupta, A. Supersizing self-supervision: Learning to grasp from 50 K tries and 700 robot hours. In Proceedings of the 2016 IEEE International Conference on Robotics and Automation (ICRA), Stockholm, Sweden, 16–21 May 2016; pp. 3406–3413.
20. Levine, S.; Pastor, P.; Krizhevsky, A.; Quillen, D. Learning Hand-Eye Coordination for Robotic Grasping with Deep Learning and Large-Scale Data Collection. Int. J. Robot. Res. 2016, 37, 421–436.
21. Iriondo, A.; Lazkano, E.; Ansuategi, A. Affordance-based grasping point detection using graph convolutional networks for industrial bin-picking applications. Sensors 2021, 21, 816.
22. Zhuang, C.; Wang, Z.; Zhao, H.; Ding, H. Semantic part segmentation method based 3D object pose estimation with RGB-D images for bin-picking. Robot. Comput. Integr. Manuf. 2021, 68, 102086.
23. Mahler, J.; Liang, J.; Niyaz, S.; Laskey, M.; Doan, R.; Liu, X.; Ojea, J.A.; Goldberg, K. Dex-net 2.0: Deep learning to plan robust grasps with synthetic point clouds and analytic grasp metrics. arXiv 2017, arXiv:1703.09312.
24. Pretto, A.; Tonello, S.; Menegatti, E. Flexible 3D localization of planar objects for industrial bin-picking with monocamera vision system. In Proceedings of the 2013 IEEE International Conference on Automation Science and Engineering (CASE), Madison, WI, USA, 17–20 August 2013; pp. 168–175.
25. Buchholz, D.; Futterlieb, M.; Winkelbach, S.; Wahl, F.M. Efficient bin-picking and grasp planning based on depth data. In Proceedings of the 2013 IEEE International Conference on Robotics and Automation, Karlsruhe, Germany, 6–10 May 2013; pp. 3245–3250.
26. Blank, A.; Hiller, M.; Zhang, S.; Leser, A.; Metzner, M.; Lieret, M.; Thielecke, J.; Franke, J. 6DoF Pose-Estimation Pipeline for Texture-less Industrial Components in Bin Picking Applications. In Proceedings of the 2019 European Conference on Mobile Robots (ECMR), Prague, Czech Republic, 4–6 September 2019; pp. 1–7.
27. Kleeberger, K.; Schnitzler, J.; Khalid, M.U.; Bormann, R.; Kraus, W.; Huber, M. Precise Object Placement with Pose Distance Estimations for Different Objects and Grippers. In Proceedings of the IEEE/RSJ International Conference on Intelligent Robot and Systems (IROS), Prague, Czech Republic, 27 September–1 October 2021.
28. Martinez, C.; Boca, R.; Zhang, B.; Chen, H.; Nidamarthi, S. Automated bin picking system for randomly located industrial parts. In Proceedings of the 2015 IEEE International Conference on Technologies for Practical Robot Applications (TePRA), Woburn, MA, USA, 11–12 May 2015; pp. 1–6.
29. Kleeberger, K.; Landgraf, C.; Huber, M.F. Large-scale 6D Object Pose Estimation Dataset for Industrial Bin-Picking. In Proceedings of the 2019 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Macau, China, 3–8 November 2019; pp. 2573–2578.
30. Zhang, X.; Lv, W.; Zeng, L. A 6DoF Pose Estimation Dataset and Network for Multiple Parametric Shapes in Stacked Scenarios. Machines 2021, 9, 321.
31. Tavares, P.; Sousa, A. Flexible pick and place architecture using ros framework. In Proceedings of the 2015 10th Iberian Conference on Information Systems and Technologies (CISTI), Aveiro, Portugal, 17–20 June 2015; pp. 1–6.
32. Dieber, B.; Breiling, B.; Taurer, S.; Kacianka, S.; Rass, S.; Schartner, P. Security for the robot operating system. Robot. Auton. Syst. 2017, 98, 192–203.
33. DiLuoffo, V.; Michalson, W.R.; Sunar, B. Robot Operating System 2: The need for a holistic security approach to robotic architectures. Int. J. Adv. Robot. Syst. 2018, 15, 1729881418770011.
34. Schyja, A.; Kuhlenkötter, B. Virtual Bin Picking-a generic framework to overcome the Bin Picking complexity by the use of a virtual environment. In Proceedings of the 2014 4th International Conference On Simulation And Modeling Methodologies, Technologies And Applications (SIMULTECH), Vienna, Austria, 28–30 August 2014; pp. 133–140.
35. Pickit. Pickit3D. 2021. Available online: https://www.pickit3d.com/ (accessed on 12 May 2021).
36. Solomon. Solomon3D. 2021. Available online: https://www.solomon-3d.com/ (accessed on 12 May 2021).
37. Photoneo. Photoneo-Bin Picking Studio. 2021. Available online: https://www.photoneo.com/bin-picking-studio/ (accessed on 16 May 2021).
38. InPicker. InPicker. 2021. Available online: https://www.inpicker.com/ (accessed on 12 May 2021).
39. Christoph Stein, S.; Schoeler, M.; Papon, J.; Worgotter, F. Object partitioning using local convexity. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Columbus, OH, USA, 23–28 June 2014; pp. 304–311.
40. Graube, M.; Hensel, S.; Iatrou, C.; Urbas, L. Information models in OPC UA and their advantages and disadvantages. In Proceedings of the 2017 22nd IEEE International Conference on Emerging Technologies and Factory Automation (ETFA), Limassol, Cyprus, 12–15 September 2017; pp. 1–8.

Figure 1. The three main steps in a picking pipeline of PickingDK and the related service types.

Figure 2. Selection of plugins/services for a picking pipeline.

Figure 3. Users at different levels.

Figure 4. User interface of the pipeline definition application.

Figure 5. User interface for controlling and monitoring a picking pipeline.

Figure 6. The environment setup for Use Case 1. Left: the real scene. Right: the virtual scene, where obstacles are broadened for safety reasons.

Figure 7. An example of metal piece localization with EdgeMatchingLocalization.

Figure 8. The environment setup for Use Case 2. Left: the real scene. Right: the virtual scene in MoveIt.

Figure 9. True positives and false negatives in all the testing frames.

Figure 10. Qualitative results. Left panels correspond to a top view. Right panels correspond to a side view.

Figure 11. Pose of the robot for reachability study.

Figure 12. End effectors for the reachability study.

Figure 13. Box plot of the path planning computational times of the success rates. The purple line represents the median of the distribution, and the red dot the average.

Figure 14. Synthetic point cloud captured with VirtualEnsenso service.

Figure 15. Top: detection rate for different settings of the sampling factor. Bottom: processing time for different settings of the sampling factor.

Table 1. List of available services in PickingDK.

| Plugin Name | Service Type(s) | Description |
|---|---|---|
| Ensenso | Capture2DService | Manage Ensenso connection, settings, and image capture |
| | Capture3DService | Point cloud capture |
| PPFLocalization | LocalizationService | Object localization based on point pair features |
| LCCPLocalization | LocalizationService | Object localization based on Locally Convex Connected Patches segmentation and ICP registration |
| EdgeMatchingLocalization | LocalizationService | Object localization based on 2D edge matching and 3D back-projection |
| PassiveGripperGrasping | GraspingService | Grasping pose calculation for passive grippers (such as magnet and vacuum) |
| ActiveGripperGrasping | GraspingService | Grasping pose calculation for active grippers (such as parallel jaw) |
| CustomMagnet | ControlToolService | Magnet gripper controller |
| SchmalzVacuum | ControlToolService | Schmalz vacuum gripper controller |
| Robot | ControlRobotService | Control the movement of the robot |
| MoveItPlanner | PathPlanningService | A MoveIt-based path planner |
| VirtualCapture | Capture3DService | Simulate the real scene and capture synthetic point cloud with ground truth |
| VirtualLocalization | LocalizationService | Simulate the result of a localization service from user-defined information |
| VirtualGrasping | GraspingService | Simulate resulting grasps from user-defined information |
| VirtualRobot | ControlRobotService | Simulate the movements of a virtual robot |

Table 2. Framework comparison.

| Framework | Multiple Robot Brands | Multiple Sensor Types | Hardware Independence | Customization |
|---|---|---|---|---|
| Accupick3D [36] | ✓ | ✓ | ✗ | ✗ |
| Pickit [35] | ✓ | ✗ | ✗ | ✗ |
| Bin Picking Studio [37] | ✓ | ✗ | ✗ | ✗ |
| InPicker [38] | ✓ | ✓ | ✗ | ✗ |
| PickingDK | ✓ | ✓ | ✓ | ✓ |

Table 3. A quantitative evaluation of LCCPLocalization service on a dataset with 24 frames.

| Frame ID | Detectable Pieces | TP | FP | FN | Precision | Recall | Time (s) |
|---|---|---|---|---|---|---|---|
| 1 | 61 | 33 | 0 | 28 | 100% | 54.1% | 5.32 |
| 2 | 28 | 14 | 0 | 14 | 100% | 50.0% | 3.13 |
| 3 | 58 | 33 | 0 | 25 | 100% | 56.9% | 4.45 |
| 4 | 55 | 26 | 0 | 29 | 100% | 47.3% | 4.25 |
| 5 | 58 | 31 | 0 | 27 | 100% | 53.5% | 4.48 |
| 6 | 58 | 28 | 0 | 30 | 100% | 48.3% | 4.44 |
| 7 | 56 | 33 | 0 | 23 | 100% | 58.9% | 4.34 |
| 8 | 61 | 29 | 0 | 32 | 100% | 47.5% | 4.23 |
| 9 | 60 | 27 | 0 | 33 | 100% | 45.0% | 4.50 |
| 10 | 60 | 27 | 0 | 33 | 100% | 45.0% | 4.54 |
| 11 | 56 | 25 | 0 | 31 | 100% | 44.6% | 4.55 |
| 12 | 61 | 29 | 0 | 32 | 100% | 47.5% | 4.56 |
| 13 | 54 | 28 | 0 | 26 | 100% | 51.9% | 6.08 |
| 14 | 53 | 21 | 0 | 32 | 100% | 39.6% | 4.60 |
| 15 | 50 | 20 | 0 | 30 | 100% | 40.0% | 4.35 |
| 16 | 50 | 23 | 0 | 27 | 100% | 46.0% | 4.32 |
| 17 | 36 | 22 | 0 | 14 | 100% | 61.0% | 3.25 |
| 18 | 34 | 19 | 0 | 15 | 100% | 55.9% | 3.27 |
| 19 | 32 | 16 | 0 | 16 | 100% | 50.0% | 3.50 |
| 20 | 32 | 17 | 0 | 15 | 100% | 53.1% | 3.23 |
| 21 | 30 | 17 | 0 | 13 | 100% | 56.7% | 3.29 |
| 22 | 29 | 16 | 0 | 13 | 100% | 55.1% | 3.18 |
| 23 | 27 | 15 | 0 | 12 | 100% | 55.6% | 3.34 |
| 24 | 29 | 16 | 0 | 13 | 100% | 55.2% | 5.32 |
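The precision and recall columns of Table 3 follow from the TP, FP, and FN counts through the standard definitions, precision = TP / (TP + FP) and recall = TP / (TP + FN). The short sketch below uses hypothetical function names and recomputes the values for frame 1 from the counts in the table.

```python
def precision(tp: int, fp: int) -> float:
    """Fraction of reported detections that are correct."""
    return tp / (tp + fp) if (tp + fp) else 0.0


def recall(tp: int, fn: int) -> float:
    """Fraction of detectable pieces that were found."""
    return tp / (tp + fn) if (tp + fn) else 0.0


if __name__ == "__main__":
    # Frame 1 of Table 3: TP = 33, FP = 0, FN = 28 (61 detectable pieces).
    print(f"precision = {precision(33, 0):.1%}")
    print(f"recall    = {recall(33, 28):.1%}")
```

With FP equal to 0 in every frame, the precision is 100% throughout, so the recall is the column that differentiates the frames.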

Table 4. Summary of the success ratio of the four scenarios.

| ID | Description | Success Ratio |
|---|---|---|
| 1 | Ceiling pose + angled end-effector | 81.25% |
| 2 | Floor pose + angled end-effector | 97.91% |
| 3 | Ceiling pose + straight end-effector | 77.08% |
| 4 | Floor pose + straight end-effector | 95.83% |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).