Magnetic Skyrmion-Based Spiking Neural Network for Pattern Recognition

Abstract

1. Introduction

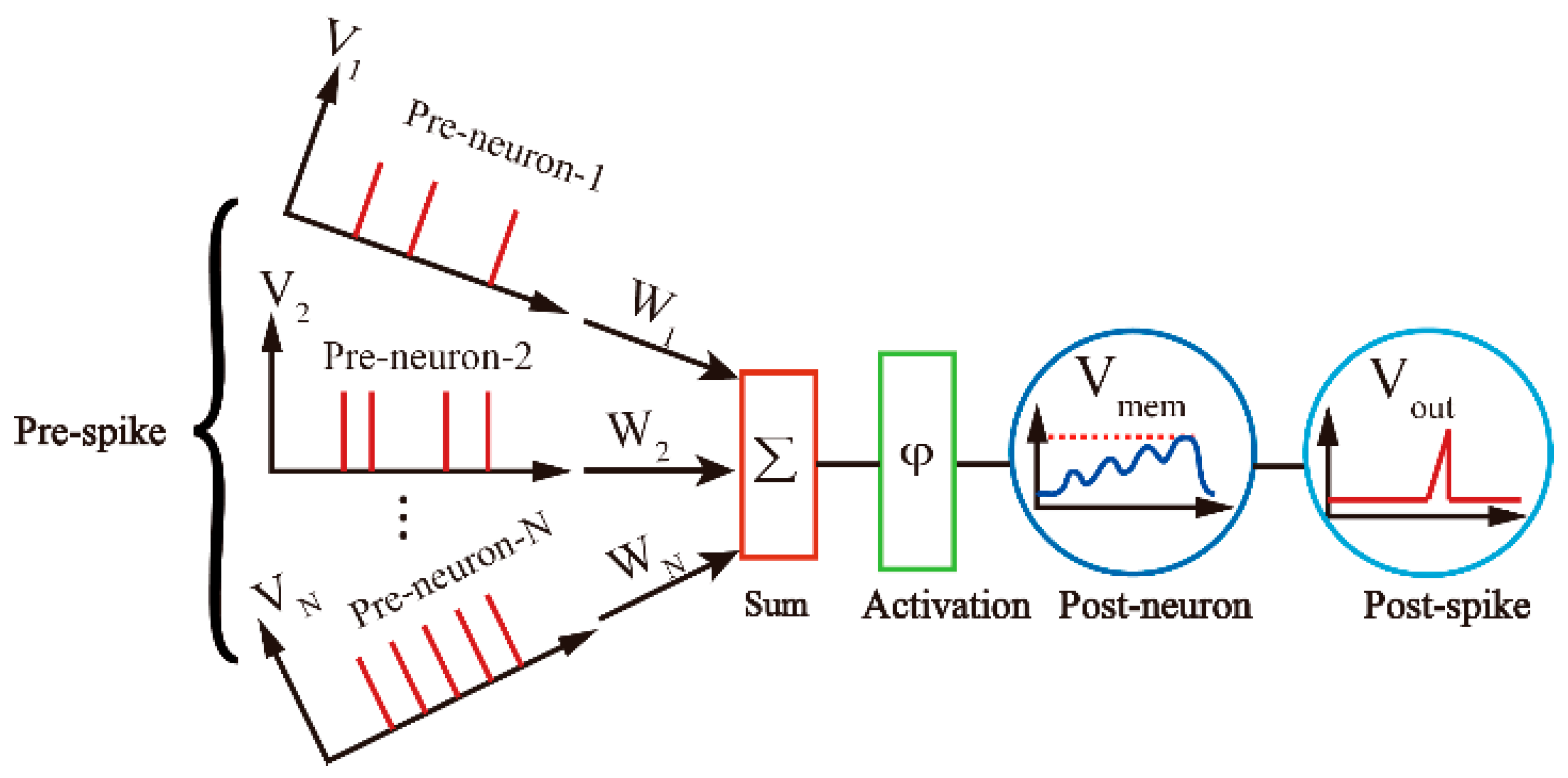

2. Overview

2.1. LIF Neuron Model

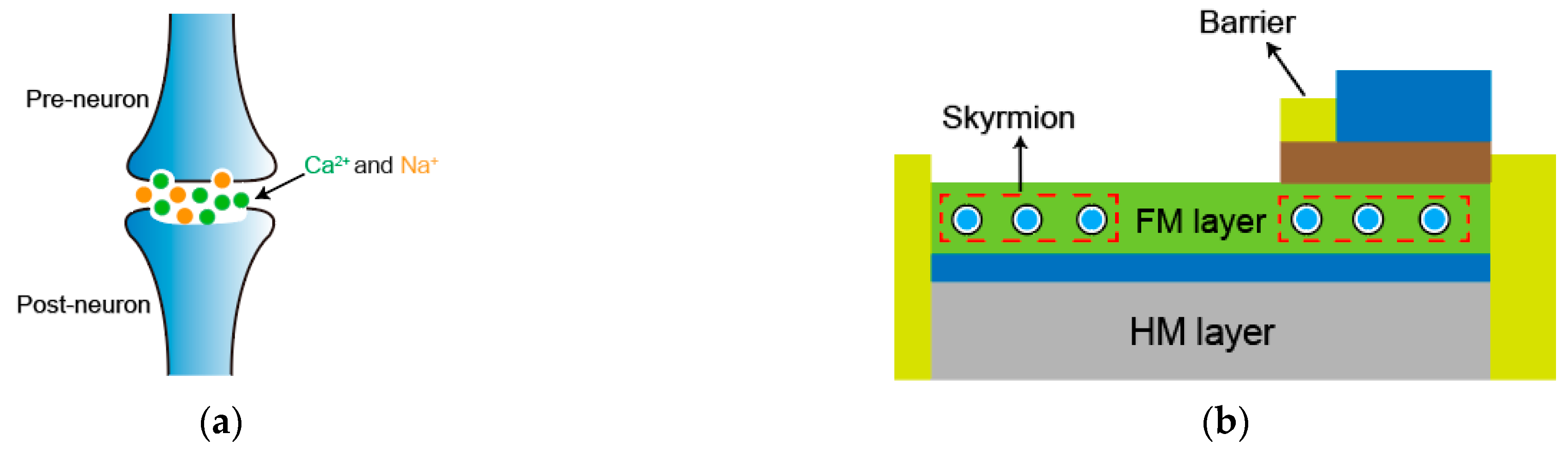
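The textbook leaky integrate-and-fire (LIF) dynamics named in this heading are τ_m dV/dt = −(V − V_rest) + R·I(t), with a fire-and-reset rule when V reaches the threshold V_th. The sketch below integrates that form with forward Euler. It is a generic illustration only, not the authors' skyrmion device model. Every parameter value, and the function name `simulate_lif`, is a hypothetical placeholder.

```python
from typing import List, Sequence, Tuple


def simulate_lif(
    current: Sequence[float],
    dt: float = 1e-4,      # time step (s), hypothetical
    tau_m: float = 20e-3,  # membrane time constant (s), hypothetical
    r_m: float = 1.0,      # membrane resistance, dimensionless placeholder
    v_rest: float = 0.0,   # resting potential, dimensionless placeholder
    v_th: float = 1.0,     # firing threshold, dimensionless placeholder
    v_reset: float = 0.0,  # post-spike reset value, dimensionless placeholder
) -> Tuple[List[float], List[int]]:
    """Forward-Euler integration of tau_m * dV/dt = -(V - v_rest) + r_m * I(t).

    Returns the membrane-potential trace and the step indices at which spikes fired.
    """
    v = v_rest
    trace: List[float] = []
    spikes: List[int] = []
    for k, i_k in enumerate(current):
        # Leak toward v_rest while integrating the input current.
        v += dt / tau_m * (-(v - v_rest) + r_m * i_k)
        if v >= v_th:
            # Fire: record the spike time and reset the membrane potential.
            spikes.append(k)
            v = v_reset
        trace.append(v)
    return trace, spikes


if __name__ == "__main__":
    steps = 2000  # 0.2 s at dt = 0.1 ms
    _, strong = simulate_lif([1.5] * steps)  # steady state r_m * I = 1.5 > v_th
    _, weak = simulate_lif([0.5] * steps)    # steady state r_m * I = 0.5 < v_th
    print(f"suprathreshold input: {len(strong)} spikes")
    print(f"subthreshold input:   {len(weak)} spikes")
```

With a constant input whose steady-state potential R·I exceeds V_th, the neuron fires at a fixed interval; with a subthreshold input, the potential saturates below V_th and no spike is ever emitted.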

2.2. Skyrmion-Based LIF Neuron

2.3. Skyrmion-Based Synapse

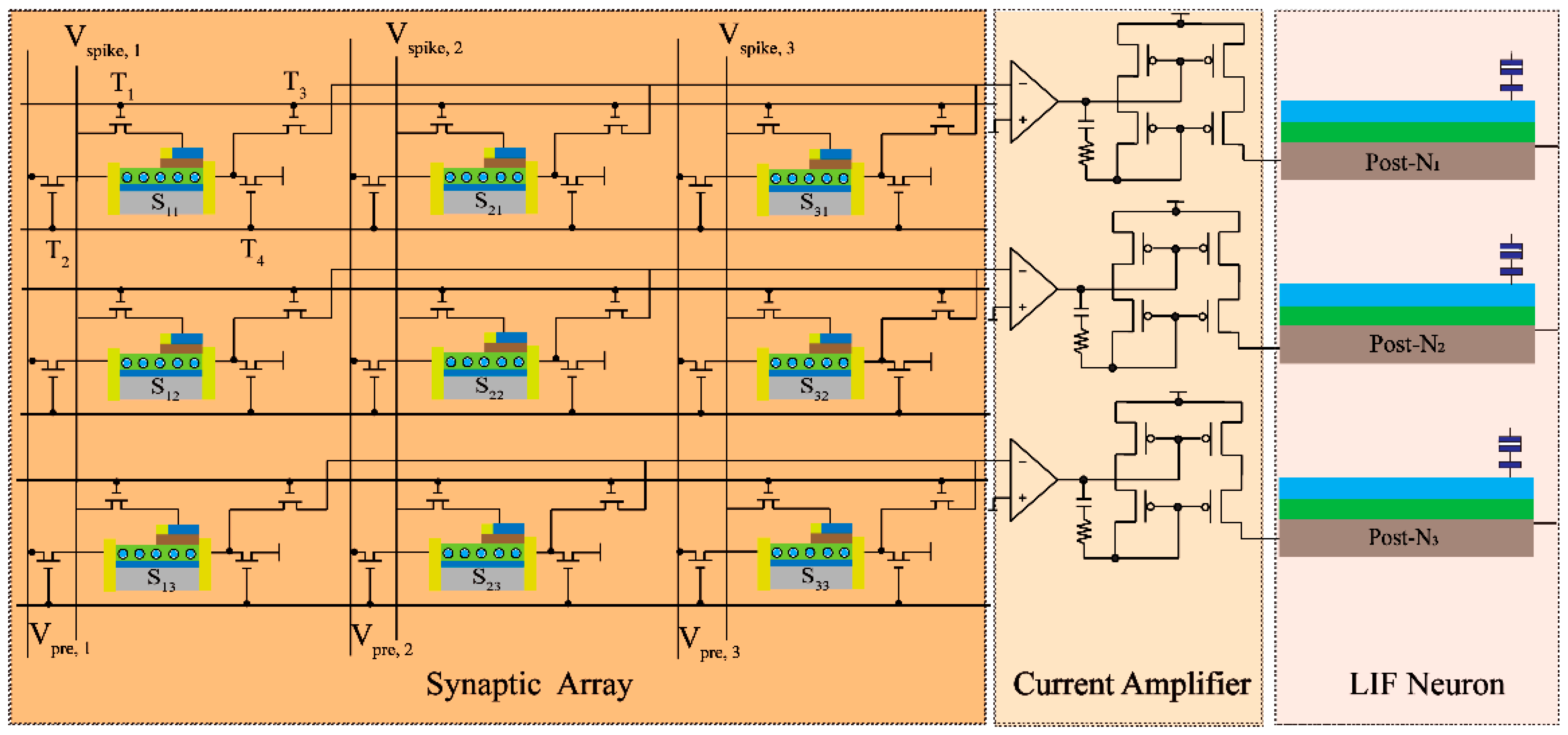

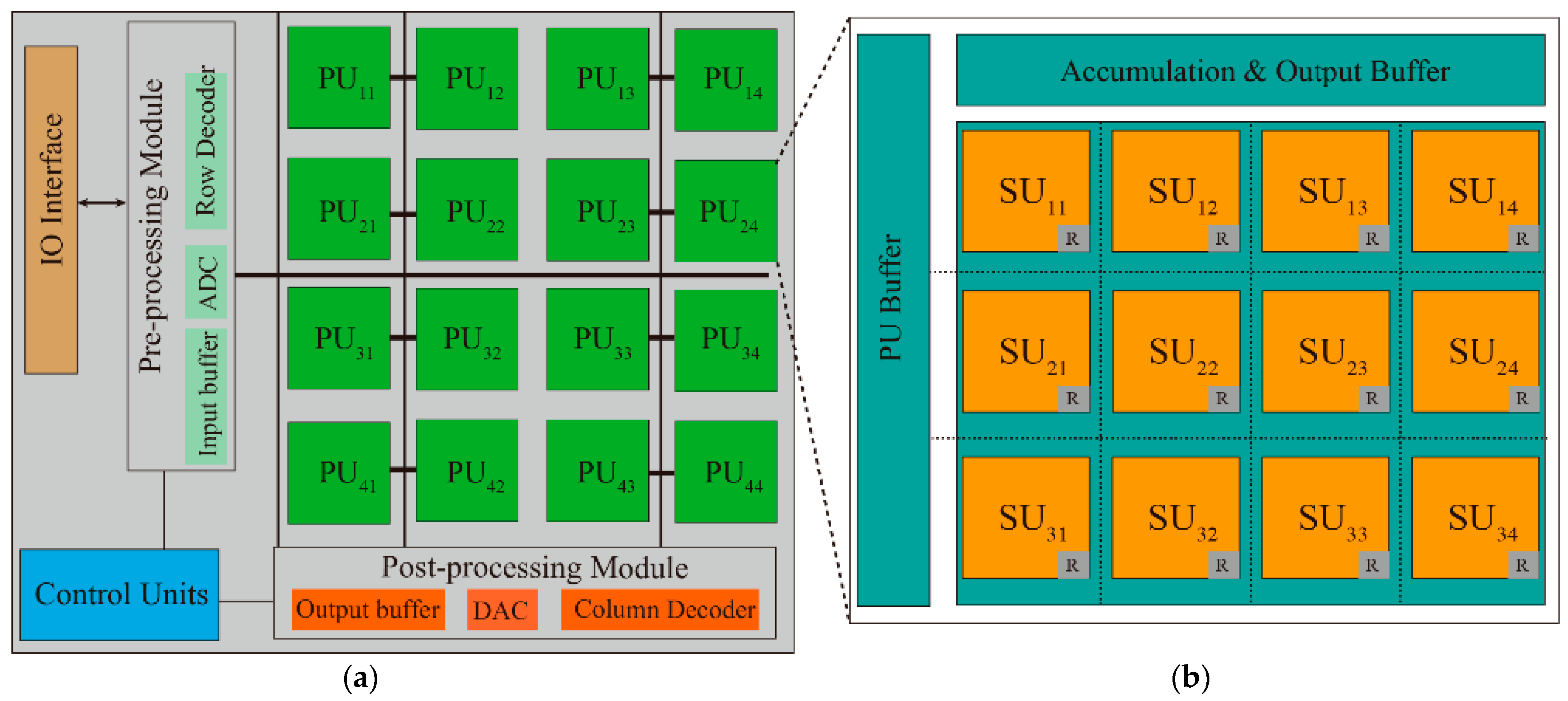

3. Proposed Architecture

4. Simulation and Discussion

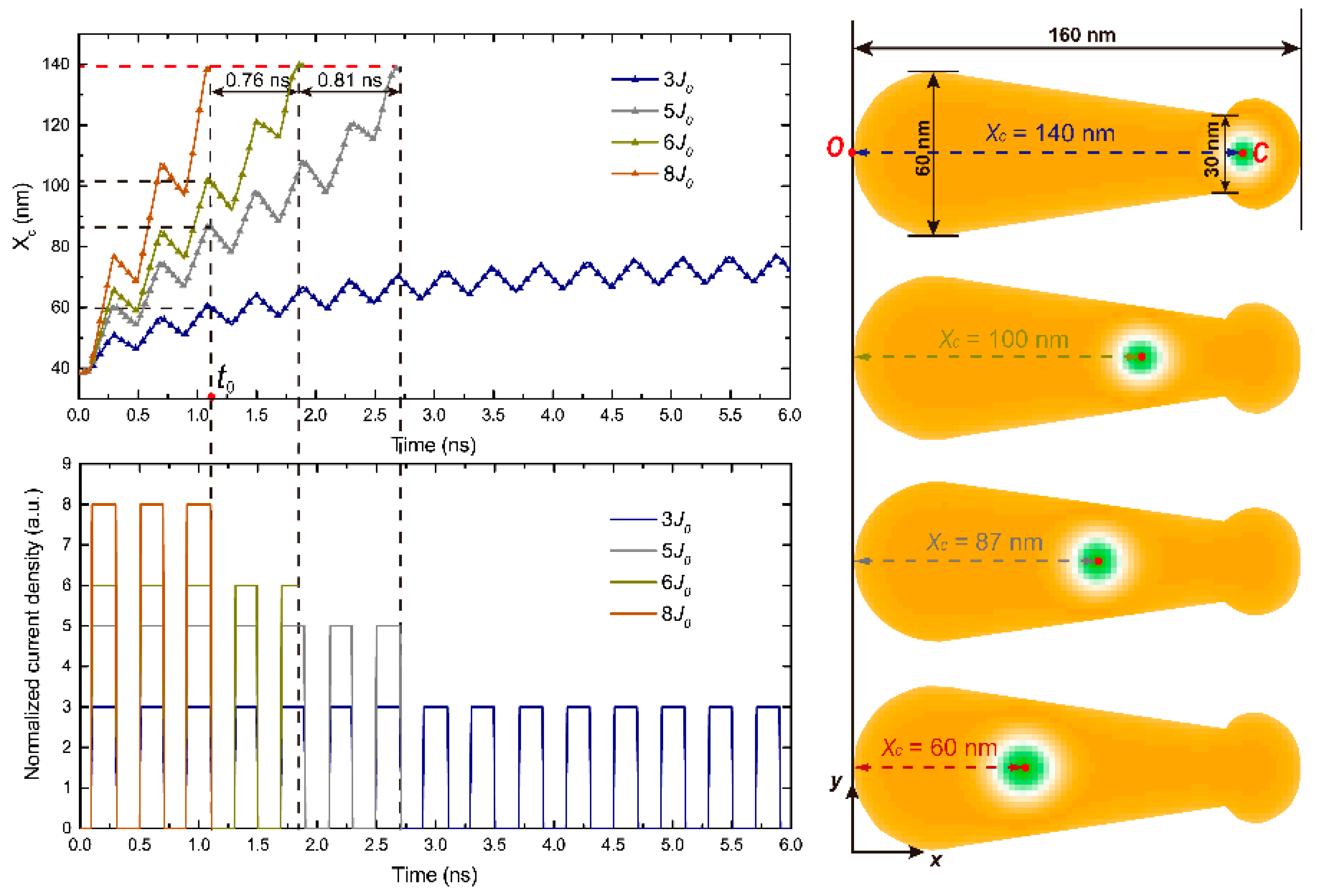

4.1. Micromagnetic Simulation

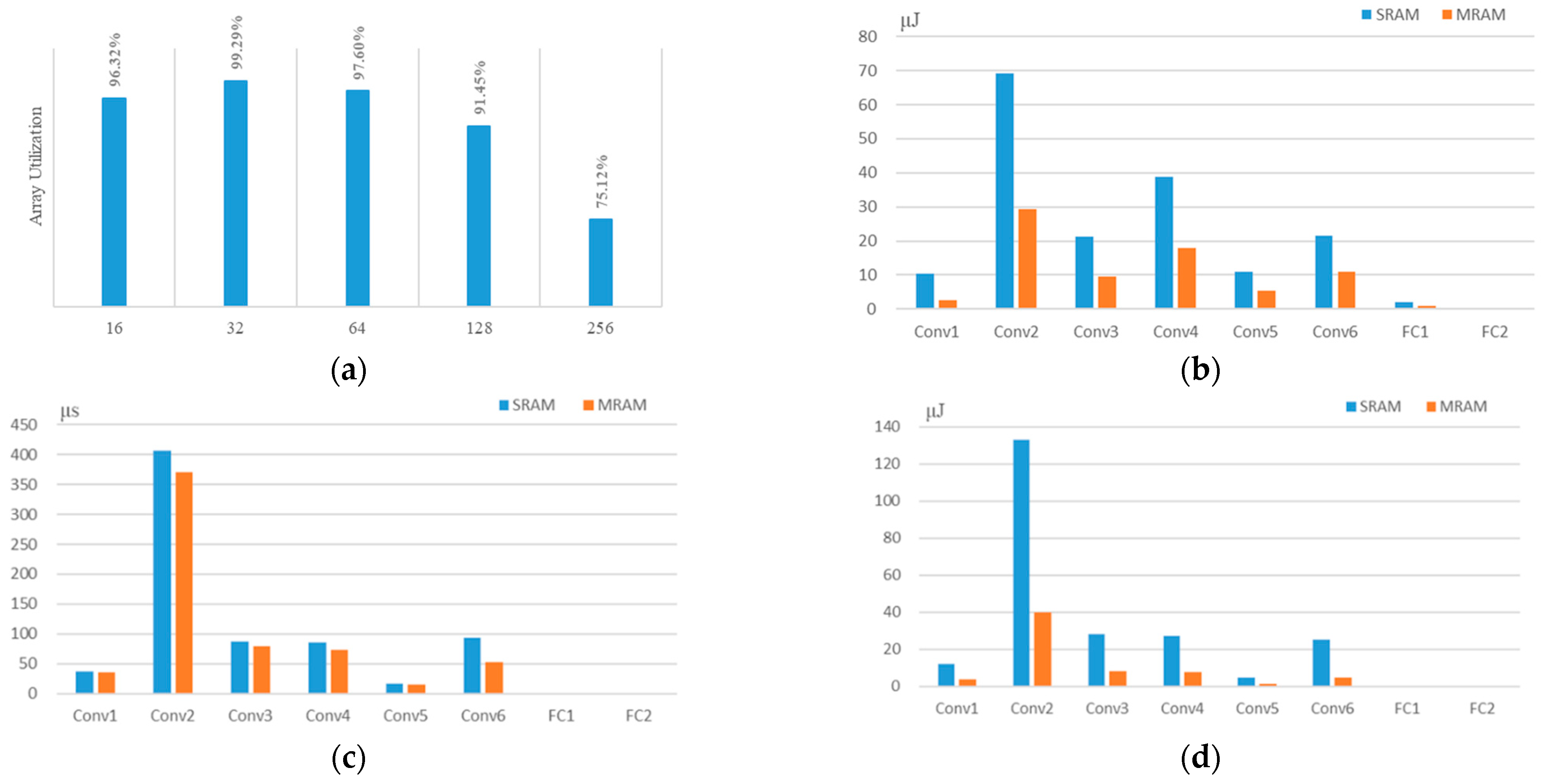

4.2. System Simulation

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest


| Parameter | Description | Value |
|---|---|---|
| M_S | Saturation magnetization | 580 kA/m |
| A | Exchange constant | 15 pJ/m |
| D | DMI constant | 3 mJ/m² |
| α | Gilbert damping factor | 0.3 |
| K_u0 | Magnetic anisotropy | 0.7 MJ/m³ |
| P | Spin polarization | 0.4 |
| l × w | Length and width | 300 nm × 80 nm |
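As a quick consistency check on these parameters, the sketch below computes three standard thin-film quantities. The first is the effective anisotropy K_eff = K_u0 − μ0·M_S²/2. The second is the critical DMI D_c = 4√(A·K_eff)/π, above which a spin spiral rather than the uniform state becomes the ground state. The third is the exchange length l_ex = √(2A/(μ0·M_S²)), which is commonly used to bound the micromagnetic cell size. These formulas and the simple shape-anisotropy correction are assumptions of this sketch. The table itself does not state them.

```python
import math

MU0 = 4e-7 * math.pi  # vacuum permeability (T*m/A)

# Values transcribed from the parameter table, converted to SI units.
MS = 580e3     # saturation magnetization M_S, A/m
A_EX = 15e-12  # exchange constant A, J/m
D = 3e-3       # DMI constant D, J/m^2
KU0 = 0.7e6    # uniaxial anisotropy K_u0, J/m^3


def effective_anisotropy(ku: float, ms: float) -> float:
    """K_eff = K_u - mu0*Ms^2/2 (thin-film shape-anisotropy correction, assumed)."""
    return ku - MU0 * ms ** 2 / 2


def critical_dmi(a: float, k_eff: float) -> float:
    """D_c = 4*sqrt(A*K_eff)/pi; for D < D_c, isolated skyrmions are metastable."""
    return 4 * math.sqrt(a * k_eff) / math.pi


def exchange_length(a: float, ms: float) -> float:
    """l_ex = sqrt(2A / (mu0*Ms^2)), a common upper bound for the mesh cell size."""
    return math.sqrt(2 * a / (MU0 * ms ** 2))


k_eff = effective_anisotropy(KU0, MS)
d_c = critical_dmi(A_EX, k_eff)
l_ex = exchange_length(A_EX, MS)

print(f"K_eff = {k_eff / 1e6:.3f} MJ/m^3")
print(f"D_c   = {d_c * 1e3:.2f} mJ/m^2  (D/D_c = {D / d_c:.2f})")
print(f"l_ex  = {l_ex * 1e9:.1f} nm")
```

With the tabulated values, K_eff ≈ 0.49 MJ/m³, D_c ≈ 3.45 mJ/m² and l_ex ≈ 8.4 nm. Therefore, D = 3 mJ/m² lies below D_c. In that regime, the uniform state is the ground state and isolated skyrmions are metastable rather than forming a spiral.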

| Device | Area (mm²) | Dynamic Energy (μJ) | Leakage Energy (μJ) | Latency (μs) | Energy Efficiency (TOPS/W) |
|---|---|---|---|---|---|
| Skyrmion | 29.58 | 76.60 | 65.74 | 625.45 | 4.33 |
| SRAM | 55.76 | 174.23 | 230.32 | 726.57 | 1.52 |
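The comparison table can be condensed into a few ratios. The sketch below computes the total energy (dynamic plus leakage) of each row and the relative area, energy, latency and efficiency of the second row against the first. It also checks one assumption that the table does not state: that the energy-efficiency column equals operations divided by total energy (1 TOPS/W = 10¹² operations per joule). The `Row` class and `implied_ops` method are hypothetical helpers for this illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Row:
    area_mm2: float
    dynamic_uj: float
    leakage_uj: float
    latency_us: float
    tops_per_w: float

    @property
    def total_uj(self) -> float:
        # Total energy = dynamic energy + leakage energy (uJ).
        return self.dynamic_uj + self.leakage_uj

    def implied_ops(self) -> float:
        # Hypothetical check: assumes TOPS/W = operations / total energy,
        # i.e. 1 TOPS/W = 1e12 ops per joule. Not stated in the source.
        return self.tops_per_w * 1e12 * self.total_uj * 1e-6


# Values transcribed from the first and second rows of the table.
first = Row(29.58, 76.60, 65.74, 625.45, 4.33)
second = Row(55.76, 174.23, 230.32, 726.57, 1.52)

print(f"total energy: {first.total_uj:.2f} uJ vs {second.total_uj:.2f} uJ")
print(f"area ratio (second/first):         {second.area_mm2 / first.area_mm2:.2f}")
print(f"total-energy ratio (second/first): {second.total_uj / first.total_uj:.2f}")
print(f"latency ratio (second/first):      {second.latency_us / first.latency_us:.2f}")
print(f"efficiency ratio (first/second):   {first.tops_per_w / second.tops_per_w:.2f}")
print(f"implied ops: {first.implied_ops():.3e} vs {second.implied_ops():.3e}")
```

Under that assumption, both rows imply the same workload to within 1%. The roughly 2.85× efficiency advantage of the first row is therefore almost entirely explained by its roughly 2.84× lower total energy.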

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Liu, S.; Wang, G.; Bai, T.; Mo, K.; Chen, J.; Mao, W.; Wang, W.; Yuan, Z.; Pan, B. Magnetic Skyrmion-Based Spiking Neural Network for Pattern Recognition. Appl. Sci. 2022, 12, 9698. https://doi.org/10.3390/app12199698