Sim–Real Mapping of an Image-Based Robot Arm Controller Using Deep Reinforcement Learning

Abstract

1. Introduction

- We proposed a mapping approach that lets a DRL policy trained in simulation perform a grasping operation with a real robot arm. After training the DRL policy in a virtual environment, we introduced a mapping CNN model that takes real-world images and maps them to the simulated environment in which the policy was learnt.

- We demonstrated the sim–real transfer without any real-world training data.
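The mapping idea in the contributions above can be sketched as follows. This is a minimal illustration under stated assumptions, not the authors' implementation: `real_to_sim_action`, `mapping_model`, `sim_key_frames`, and `policy` are hypothetical names, and the mapping CNN is assumed to act as a classifier that assigns each real camera image to one of a fixed set of simulated key frames. The toy stand-ins at the bottom only exist so the sketch runs end to end.

```python
import numpy as np

def real_to_sim_action(real_image, mapping_model, sim_key_frames, policy):
    """Map a real image to a simulated key frame, then query the policy.

    mapping_model: callable returning one score per key frame (hypothetical).
    sim_key_frames: array of shape (K, H, W) holding simulated images.
    policy: callable returning one Q-value per discrete action (hypothetical).
    """
    scores = mapping_model(real_image)          # shape (K,)
    label = int(np.argmax(scores))              # predicted key-frame label
    sim_image = sim_key_frames[label]           # image from the training domain
    q_values = policy(sim_image)                # shape (num_actions,)
    return label, int(np.argmax(q_values))      # greedy action


# Toy stand-ins (assumptions, not the trained models).
rng = np.random.default_rng(0)
sim_key_frames = rng.random((3, 8, 8))

def toy_mapping_model(image):
    # Score each key frame by negative mean squared distance to the input.
    return -((sim_key_frames - image) ** 2).mean(axis=(1, 2))

def toy_policy(sim_image):
    # Fake Q-values for 10 actions that increase with the action index.
    return np.arange(10) * sim_image.mean()

# A slightly perturbed copy of key frame 1 plays the role of a real image.
noisy_real = sim_key_frames[1] + 0.01 * rng.standard_normal((8, 8))
label, action = real_to_sim_action(noisy_real, toy_mapping_model,
                                   sim_key_frames, toy_policy)
```

The point of the design is that the policy only ever sees images from the domain it was trained on; the real-world image is used solely to select which simulated image to show it.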

2. Materials and Methods

2.1. Structure of 4-DoF Robot Arm

2.2. Real and Virtual Workspace

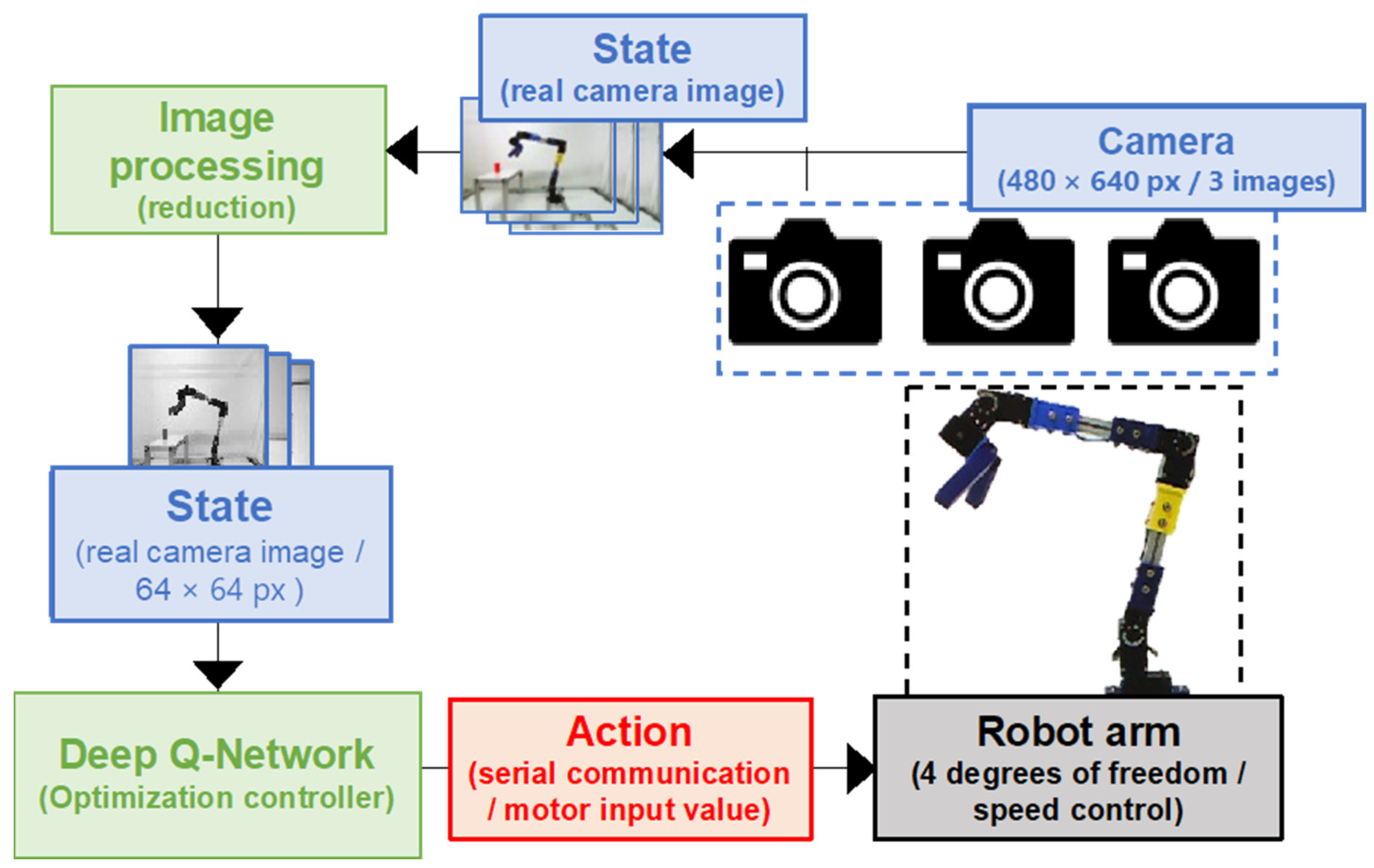

2.3. Deep Reinforcement Learning System

3. Sim–Real Model Performance

3.1. Image Processing for Environment Matching

3.2. Model Gripping Operation

3.3. Gripping by the Actual Robot

4. Real–Sim Mapping

4.1. Real–Sim Model

4.2. Gripping by the Actual Robot

5. Discussion

6. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest


| Name | Length [mm] | Weight [g] |
|---|---|---|
| Link1 | 150 | 212 |
| Link2 | 150 | 212 |
| Gripper | 115 | 22 |

| Name | Value |
|---|---|
| Stall torque | 6.9 [N·m] (at 11.1 [V], 4.2 [A]); 7.3 [N·m] (at 12.0 [V], 4.4 [A]); 8.9 [N·m] (at 14.8 [V], 5.5 [A]) |
| No-load rotation speed | 50 [rpm] (at 11.1 [V]); 53 [rpm] (at 12.0 [V]); 66 [rpm] (at 14.8 [V]) |
| Reduction ratio | 1/152.3 |
| Maximum operating angle | During positioning control: 0 to 360 [deg] (12-bit resolution) |
| Power supply voltage range | 10 to 14.8 [V] |
| Allowable axial load | 20 [N] |
| Weight | 165 [g] |

| | Motor 1 | Motor 2 | Motor 3 | Motor 4 | Motor 5 |
|---|---|---|---|---|---|
| Action 1 | S | S | S | S | S |
| Action 2 | R | S | S | S | S |
| Action 3 | L | S | S | S | S |
| Action 4 | S | R | S | S | S |
| Action 5 | S | L | S | S | S |
| Action 6 | S | S | R | S | S |
| Action 7 | S | S | L | S | S |
| Action 8 | S | S | S | R | S |
| Action 9 | S | S | S | L | S |
| Action 10 | S | S | S | S | C |
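The action table has a regular structure that can be generated rather than typed out: one all-`S` action, an `R` and an `L` action for each of Motors 1 to 4, and a final `C` action on Motor 5. The sketch below encodes it as a lookup table. The names `build_action_table` and `ACTIONS` are hypothetical, and the command letters are copied verbatim from the table; the source text here does not define them, so reading them as stop, the two rotation directions, and gripper close is only an assumption.

```python
# Discrete action set: one command letter per motor for each of 10 actions.
# Letters come straight from the table; their meaning is assumed, not given.
NUM_MOTORS = 5

def build_action_table():
    """Return a dict mapping action number (1..10) to a 5-tuple of commands."""
    table = {1: ("S",) * NUM_MOTORS}            # Action 1: every motor "S"
    action = 2
    for motor in range(4):                      # Motors 1..4: "R" then "L"
        for command in ("R", "L"):
            row = ["S"] * NUM_MOTORS
            row[motor] = command
            table[action] = tuple(row)
            action += 1
    row = ["S"] * NUM_MOTORS
    row[4] = "C"                                # Action 10: Motor 5 gets "C"
    table[action] = tuple(row)
    return table

ACTIONS = build_action_table()
```

Each action changes at most one motor, so a Q-network with ten outputs can index this table directly with its greedy action number.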

| Parameter | Value |
|---|---|
| Learning rate | 0.0001 |
| Maximum number of steps | 300 |
| Sampling cycle | 0.15 [s] |
| Discount rate | 0.95 |
| Replay memory size | 1 × 10^6 |
| Start epsilon | 1 |
| Final epsilon | 0.1 |
| Epsilon attenuation coefficient | 6 × 10^7 |
| Model update interval in steps | 10 |
| Target model update interval in steps | 1000 |
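As a rough illustration, the training parameters can be collected in a single configuration object. This is a sketch under assumptions: `TrainingConfig` and `updates_due` are hypothetical names, the replay memory size is taken to be one million entries, and the update intervals are interpreted as "every N global steps". The epsilon attenuation coefficient is left out because the table does not say how it enters the decay schedule.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class TrainingConfig:
    learning_rate: float = 1e-4
    max_steps_per_episode: int = 300
    sampling_cycle_s: float = 0.15
    discount_rate: float = 0.95
    replay_memory_size: int = 1_000_000        # assumed reading of the table
    start_epsilon: float = 1.0
    final_epsilon: float = 0.1
    model_update_interval: int = 10
    target_update_interval: int = 1000

def updates_due(step, cfg):
    """Return (update_model, update_target) flags for a global step count."""
    return (step % cfg.model_update_interval == 0,
            step % cfg.target_update_interval == 0)

cfg = TrainingConfig()
# Longest possible episode if every step takes exactly one sampling cycle.
max_episode_seconds = cfg.max_steps_per_episode * cfg.sampling_cycle_s
```

With these values an episode lasts at most about 45 s (300 steps at 0.15 s each), and the target model is refreshed once for every 100 updates of the online model.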

| State | Reward |
|---|---|
| Successful grip | 1000 |
| The distance between the robot hand and the cylinder is within 1 mm | |
| When not grasping | |

| Image Processing | Left [%] | Center [%] | Right [%] | Total [%] |
|---|---|---|---|---|
| Grayscale | 100 | 100 | 100 | 100 |
| Binarization | 100 | 100 | 100 | 100 |
| 10-gradation gray scale | 100 | 100 | 100 | 100 |
| 4-gradation gray scale | 100 | 100 | 100 | 100 |
| 10-gradation gray scale w/median filter | 100 | 100 | 100 | 100 |
| 4-gradation gray scale w/median filter | 100 | 100 | 100 | 100 |
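The image-processing variants named in the table can be sketched with NumPy alone. These are generic textbook versions, not the authors' code: the function names, the binarization threshold of 128, the evenly spaced quantization levels, and the 3×3 median window are all assumptions, since the text here does not specify them.

```python
import numpy as np

def to_grayscale(rgb):
    """Convert an (H, W, 3) uint8 RGB image to (H, W) uint8 grayscale."""
    weights = np.array([0.299, 0.587, 0.114])   # ITU-R BT.601 luma weights
    return np.rint(rgb.astype(np.float64) @ weights).astype(np.uint8)

def binarize(gray, threshold=128):
    """Two-level image: 0 below the (assumed) threshold, 255 otherwise."""
    return np.where(gray >= threshold, 255, 0).astype(np.uint8)

def quantize(gray, levels):
    """Reduce a grayscale image to `levels` evenly spaced gray values."""
    bins = (gray.astype(np.int64) * levels) // 256      # 0 .. levels-1
    return (bins * 255 // (levels - 1)).astype(np.uint8)

def median_filter3(gray):
    """3x3 median filter with edge replication (assumed window size)."""
    padded = np.pad(gray, 1, mode="edge")
    windows = np.lib.stride_tricks.sliding_window_view(padded, (3, 3))
    return np.median(windows, axis=(2, 3)).astype(np.uint8)
```

Under these assumptions, "4-gradation gray scale w/median filter" would correspond to `median_filter3(quantize(to_grayscale(image), 4))`, although the order in which the authors apply the two steps is not stated.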

| Image Processing | Left [%] | Center [%] | Right [%] | Total [%] |
|---|---|---|---|---|
| Grayscale | 0 | 0 | 0 | 0 |
| Binarization | 50 | 100 | 60 | 70 |
| 10-gradation gray scale | 40 | 40 | 70 | 50 |
| 4-gradation gray scale | 0 | 100 | 0 | 33 |
| 10-gradation gray scale w/median filter | 0 | 0 | 0 | 0 |
| 4-gradation gray scale w/median filter | 0 | 100 | 0 | 33 |

| Image Processing | Key Frames (Labels) |
|---|---|
| Grayscale | 65 |
| Binarization | 64 |
| 10-gradation gray scale | 62 |
| 4-gradation gray scale | 59 |
| 10-gradation gray scale w/median filter | 63 |
| 4-gradation gray scale w/median filter | 67 |

| Image Processing | Training Data | Validation Data | Total |
|---|---|---|---|
| Grayscale | 7020 | 1755 | 8775 |
| Binarization | 6912 | 1728 | 8640 |
| 10-gradation gray scale | 6696 | 1674 | 8370 |
| 4-gradation gray scale | 6404 | 1601 | 8005 |
| 10-gradation gray scale w/median filter | 6804 | 1701 | 8505 |
| 4-gradation gray scale w/median filter | 7128 | 1782 | 8910 |
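The training and validation counts in this table are consistent with a 4:1 split of each total; for example, 7020 + 1755 = 8775 and 7020 / 8775 = 0.8. A minimal sketch of such a split is shown below. The function name `split_dataset`, the shuffling, and the fixed seed are assumptions; the table only gives the resulting counts.

```python
import random

def split_dataset(samples, num=4, den=5, seed=0):
    """Shuffle and split samples into (training, validation) lists.

    The training share is num/den; integer arithmetic avoids
    floating-point rounding at the boundary.
    """
    shuffled = list(samples)
    random.Random(seed).shuffle(shuffled)
    n_train = len(shuffled) * num // den
    return shuffled[:n_train], shuffled[n_train:]

# Totals copied from the table, keyed by image-processing variant.
totals = {
    "Grayscale": 8775,
    "Binarization": 8640,
    "10-gradation gray scale": 8370,
    "4-gradation gray scale": 8005,
    "10-gradation gray scale w/median filter": 8505,
    "4-gradation gray scale w/median filter": 8910,
}

# (training, validation) sizes produced by the sketch for each variant.
sizes = {name: tuple(len(part) for part in split_dataset(range(total)))
         for name, total in totals.items()}
```

Running the sketch reproduces every training and validation count listed in the table.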

| Image Processing | Left [%] | Center [%] | Right [%] | Total [%] |
|---|---|---|---|---|
| Grayscale | 100 | 100 | 100 | 100 |
| Binarization | 100 | 100 | 100 | 100 |
| 10-gradation gray scale | 100 | 100 | 100 | 100 |
| 4-gradation gray scale | 100 | 100 | 100 | 100 |
| 10-gradation gray scale w/median filter | 100 | 100 | 100 | 100 |
| 4-gradation gray scale w/median filter | 100 | 100 | 100 | 100 |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Sasaki, M.; Muguro, J.; Kitano, F.; Njeri, W.; Matsushita, K. Sim–Real Mapping of an Image-Based Robot Arm Controller Using Deep Reinforcement Learning. Appl. Sci. 2022, 12, 10277. https://doi.org/10.3390/app122010277
