1. Introduction

Chemistry is an experiment-based discipline, and experiments are an important way to learn it. Hands-on experiments help students understand chemical phenomena quickly and gain knowledge beyond what they read in textbooks. Teaching chemical experiments in a traditional laboratory, however, has limitations: many phenomena are difficult to explain or observe, some experiments are dangerous, and some chemicals are expensive [1].

For these reasons, the virtual laboratory has become increasingly important. Virtual laboratories integrate laboratory work with computer simulation [2] and provide a safe learning environment for students. In the virtual environment, students can pause, continue, and repeat experiments many times. They waste no time or chemicals and can observe phenomena that are not easily observable. However, existing virtual laboratories often rely on a computer mouse [3], fingers [4], or handles [5] for interaction, so users lack the sense of operation and realism gained from using experimental equipment in a physical laboratory. This is a significant gap for experimental courses that are meant to exercise students’ hands-on skills. Virtual labs that combine software and hardware can therefore better meet the teaching objectives. Nevertheless, the experiment completion rate remains low because existing virtual labs lack timely system guidance on the user’s operation process [6]. In addition, learning can be tedious, and appropriate entertainment helps students maintain their interest in learning [7]. It is therefore necessary to design an intelligent experimental system that guides experimenters and provides appropriate game elements and incentives to improve students’ learning interest. To achieve these functions, the system needs to understand the experimenter’s operations and respond in a timely manner. Unimodal information is one-sided, and experiments that rely on it are less interactive and less accurate [8].

To solve these problems, we designed a multimodal perception gameplay virtual and real fusion intelligence laboratory (GVRFL). GVRFL fuses behavioral information from two channels, touch and voice, to obtain the user’s operational intent for natural multimodal human–computer interaction [9]. We propose a multimodal intention active understanding algorithm to improve the efficiency of human–computer interaction and the user experience, and we designed a set of new experimental equipment to sense the user’s wrong behavior during the experiment. We adopt virtual and real fusion to enhance the user’s sense of reality and operation in virtual experiments. At the same time, we propose a novel game-based intelligent experimental mode that combines virtual and real elements by adding gameplay to the virtual and real fusion experiment. In this way, students’ interest in and motivation for learning are stimulated during the experiment, and their learning efficiency is improved.

This article makes the following three contributions:

(1) We propose a multimodal intention active understanding algorithm suitable for chemical experiments and construct the core engine of virtual and real fusion experiments.

(2) We propose a novel intelligent experimental mode that fuses virtual and real elements and introduces gameplay into the experiment.

(3) We complete a prototype of a multimodal perception gameplay virtual–real fusion experimental platform and demonstrate the usability of the platform through experiments.

The structure of this article is as follows: Section 2 briefly discusses related work, Section 3 describes how the user’s intention is understood in the gameplay virtual and real fusion experimental mode, Section 4 presents the design strategy of the gameplay virtual and real fusion experimental mode, Section 5 describes the experiment and evaluations, and Section 6 presents the conclusion and prospects.

2. Related Work

With the development of technology, artificial intelligence has been applied more and more widely in education, and the virtual laboratory is a typical application. The concept of virtual experiments was first proposed by Professor William Wulf of the University of Virginia in 1989. Due to the lack of laboratories or inadequate laboratory equipment, few hands-on chemistry experiments are conducted in Turkish schools. Therefore, Tüysüz et al. [10] developed a 2D virtual environment for school chemistry education in which students perform experiments virtually, and the results showed that the virtual laboratory had a positive impact on students’ academic performance and learning attitudes. Tsovaltzi et al. [11] developed a web-based virtual experiment platform, “Vlab”, in which students use menus and dialog boxes to control chemical instruments and complete chemical experiments. Both of these platforms are 2D experimental environments, which lack authenticity. Students interact with the system through a keyboard and mouse and thus lack a sense of operation.

Ali et al. [5] developed a 3D interactive multimodal virtual chemistry laboratory (MMVCL). In MMVCL, the user completes the experiment with a virtual hand controlled by a Wiimote, and information about the experimental process is delivered through audio and text. The experimental results showed that MMVCL improved students’ learning skills and grades. Wu et al. [12] developed a virtual reality chemistry laboratory that uses Leap Motion to detect users’ gestures. Users wear a head-mounted display and use gestures to interact with virtual objects. The results showed that, at an appropriate learning intensity, the virtual reality chemistry laboratory could enhance users’ learning confidence. Lam et al. [13] developed an augmented reality chemical experiment platform that uses augmented reality (AR) markers to control chemical experiment instruments and realize movements such as shaking and dumping. All of the above platforms are 3D experimental environments that enhance the sense of immersion and reality of virtual experiments. However, they offer only a single mode of interaction because of the limits of their input methods, such as using only handles or only gestures.

Human–computer collaboration is essentially a process of communication and understanding between humans and machines. If the platform can understand the user’s intentions and communicate with the user during the virtual experiment, then the user’s human–computer interaction process is natural and comfortable. Researchers have long been attempting to provide a natural and harmonious way of interaction. Multimodal human–computer interaction (MMHCI) is an important way to address this problem. It captures the user’s interaction intention by integrating accurate and imprecise input from multiple channels and improves the naturalness and efficiency of human–computer interaction. Kaiser et al. [14] proposed a multimodal interactive system that fuses symbolic and statistical information from a set of 3D gestures, spoken language, and referential agents. The experimental results showed that multimodal input could effectively eliminate ambiguity and reduce uncertainty. Hui et al. [15] fused the user’s voice and pen input information, which enabled the platform to understand the user’s intention and improved robustness. Liang et al. [16] proposed an augmented approach to help a robot understand human motion behaviors based on human kinematics and human postural impedance adaptation. The results showed that the proposed approach to human–robot collaboration (HRC) is intuitive, stable, efficient, and compliant; thus, it may have various applications in human–robot collaboration scenarios. Gavril et al. [17] proposed a multimodal interface that makes use of hand tracking and speech interactions for ambient intelligent and ambient assisted living environments. The system has a spoken language interaction module and a hand tracking interaction module. The results showed that the multimodal interface improved the system’s ability to understand the user’s intention. Zhao et al. [18] published a prototype of a human–computer interaction system that integrates face, gesture, and voice to better understand the user’s intention. Jung et al. [19] proposed a combination of voice and tactile interaction in a driving environment. The experimental results showed that the interactive mode of voice and touch improved the efficiency of interaction and the user experience.

Multimodal interaction has also been applied to virtual experiments, where a system that understands the user’s intent and communicates with the user makes the interaction natural and comfortable. Isabel et al. [20] proposed a multimodal interaction method that adds vision, hearing, and kinesthesia to virtual experiments. The experimental results showed that this method improved students’ interest in learning. Ismail et al. [21] proposed a method combining gesture and voice input for multimodal interaction in AR, in which experimenters control virtual objects through a combination of gestures and voice. Wolski et al. [22] developed a virtual chemistry laboratory based on a hand movement system, in which users perform the experiment with actions and gestures. The experimental results showed that students’ learning performance was better in the virtual laboratory. Edwards et al. [23] developed a virtual reality multisensory classroom (VRMC), in which users complete the experiment in the VR multisensory laboratory (VRML) using haptic gloves and Leap Motion. The abovementioned experimental platforms use multichannel interaction, which can improve the efficiency and naturalness of human–computer interaction in virtual experiments.

Many scholars have also explored the field of smart chemistry labs. Song et al. [24] proposed a chemical experiment system, CheMO, which uses a stereo camera to detect the user’s gestures and the positions of the instruments, mixed object (MO) beakers and an MO eyedropper as instruments for the chemistry experiments, and a digital workbench. When using an MO instrument, the user obtains the sense of operation of a real experiment. The experimental results showed that MO enhanced the realism of virtual experiments and that users could learn effectively during the experiments. In 2019, Hartmann et al. [25] allowed users to communicate with people and objects in physical space from within a virtual reality system, so that users would not lose their sense of presence in the virtual world. The experiments showed that the system enhanced the user experience. Amador et al. [26] designed a physical tactile burette to embed the physical sense of the laboratory in a virtual experiment; students control the physical tactile burette to operate the virtual burette in virtual reality. Zeng et al. [27] proposed an intelligent dropper and built a multimodal intelligent interactive virtual experiment platform (MIIVEP) that integrates three modalities, speech, touch, and gesture, for interaction. The experimental results showed that this method improved students’ learning efficiency. Yuan et al. [28] designed an intelligent beaker equipped with multiple sensors for multimodal sensing. To obtain the real intention of the user more accurately, they proposed a multimodal fusion algorithm that fuses the user’s voice information and tactile information. The user completes a variety of experiments with the intelligent beaker under the guidance of teaching navigation. Xiao et al. [29] also designed an intelligent beaker and proposed another multimodal fusion algorithm to fuse the user’s voice and tactile information. The experimental results showed that users could feel the sense of operation of a traditional laboratory when using these two intelligent beakers. Wang et al. [30] proposed a multimodal fusion algorithm (MFA) that integrates multichannel data such as speech, vision, and sensor data to capture the user’s experimental intention and to navigate, guide, or warn the user during operation; the experimental results showed that applying the smart glove to teaching chemistry experiments based on virtual–real fusion achieved a good teaching effect. Xie et al. [31] proposed a virtual reality elementary school mathematics teaching system based on GIS data fusion, which applied technologies related to a virtual experiment teaching system, an intelligent assisted teaching system, and a virtual classroom teaching system to teaching. The experimental data and investigation results showed that the system could help students simulate the operation experience and understand the principles while improving their learning interest. Wang et al. [32] proposed a smart glove-based scene perception algorithm to capture users’ experimental behaviors more accurately. Students can conduct exploratory experiments on a virtual experimental platform while the system guides and monitors their behavior in a targeted manner. The experiments showed that the smart glove can also infer the experimental intention of the operator and provide timely feedback and guidance on the user’s experimental behavior. Pan et al. [33] proposed a new demand model for virtual experiments by drawing on human demand theory. They also designed and developed an integrated MR system called MagicChem to support realistic visual interaction, tangible interaction, gesture and touch interaction, voice interaction, temperature interaction, olfactory interaction, and avatar interaction. User studies showed that MagicChem satisfies the requirements model better than other MR experimental environments, which satisfy it only partially. The above experimental platforms use instruments and devices in real scenes to operate objects in virtual environments, which largely retains the user’s sense of operation and realism from traditional experiments.

The combination of virtual experiments and education is still in the trial stage, and many researchers have explored virtual experiments in different ways. Ullah et al. [34] added teaching guidance to the MMVCL experiment. The experimental results showed that teaching guidance could improve students’ performance in the virtual laboratory. Su et al. [35] designed and developed a virtual reality chemistry laboratory simulation game and proposed a sustainable innovative experiential learning model to verify the learning effect. The experimental results showed that the virtual chemistry lab had a significant effect on academic performance, and students using the sustainable innovative experiential learning model gained a better understanding of chemical concepts. Oberdörfer et al. [36] combined learning, games, and VR technology. The experiment showed that this approach improved the quality and effect of learning and enhanced the presentation of abstract knowledge. Rodrigues et al. [37] conducted an experimental study in which artificial intelligence techniques customize the educational game interface based on the player’s profile. The empirical results showed that adjusting the game components in real time gave a better player experience and, at the same time, improved the correctness rate of the players participating in the study. Marín et al. [38] proposed a multimodal digital teaching approach based on the VARK (visual, auditory, reading/writing, kinesthetic) model that matches different learning modes to different students’ styles. The experimental results showed that the VARK multimodal digital teaching approach was effective, efficient, and beneficial in HCI instruction, with significant improvements in users’ learning scores and satisfaction. Hong et al. [39] designed a gamification platform called TipOn that allows students to pose and gamify questions in different game modes in order to facilitate their practice of English grammar. The focus was on designing a reward system to gamify students’ learning content. The results suggest that teachers can use this gamification system in the flipped classroom to stimulate students’ cognitive curiosity, increase their content learning, and improve learning outcomes.

In summary, the multimodal interaction method can make it easier for computers to understand the user’s intentions and can effectively improve the efficiency of human–computer interaction and user experience. To improve the understanding of user intention in the virtual–real fusion experiment in this article, we propose a multimodal intention active understanding algorithm. The algorithm integrates the user’s tactile information and voice information during the experiment to improve the efficiency of human–computer interaction and reduce the user’s interaction burden.

Building on the above research results and experience, this paper proposes a game-based intelligent experiment based on the fusion of the virtual and the real. Using real experimental equipment together with a virtual experimental platform, students can complete chemical experiments that combine virtual and real elements. At the same time, to make virtual experiments less monotonous, game elements are added to them. Adding gameplay to the virtual and real fusion experimental mode stimulates students’ enthusiasm for learning and improves their inquiry ability and practice level.

3. Understanding User Intentions in the Experimental Mode of Gameplay

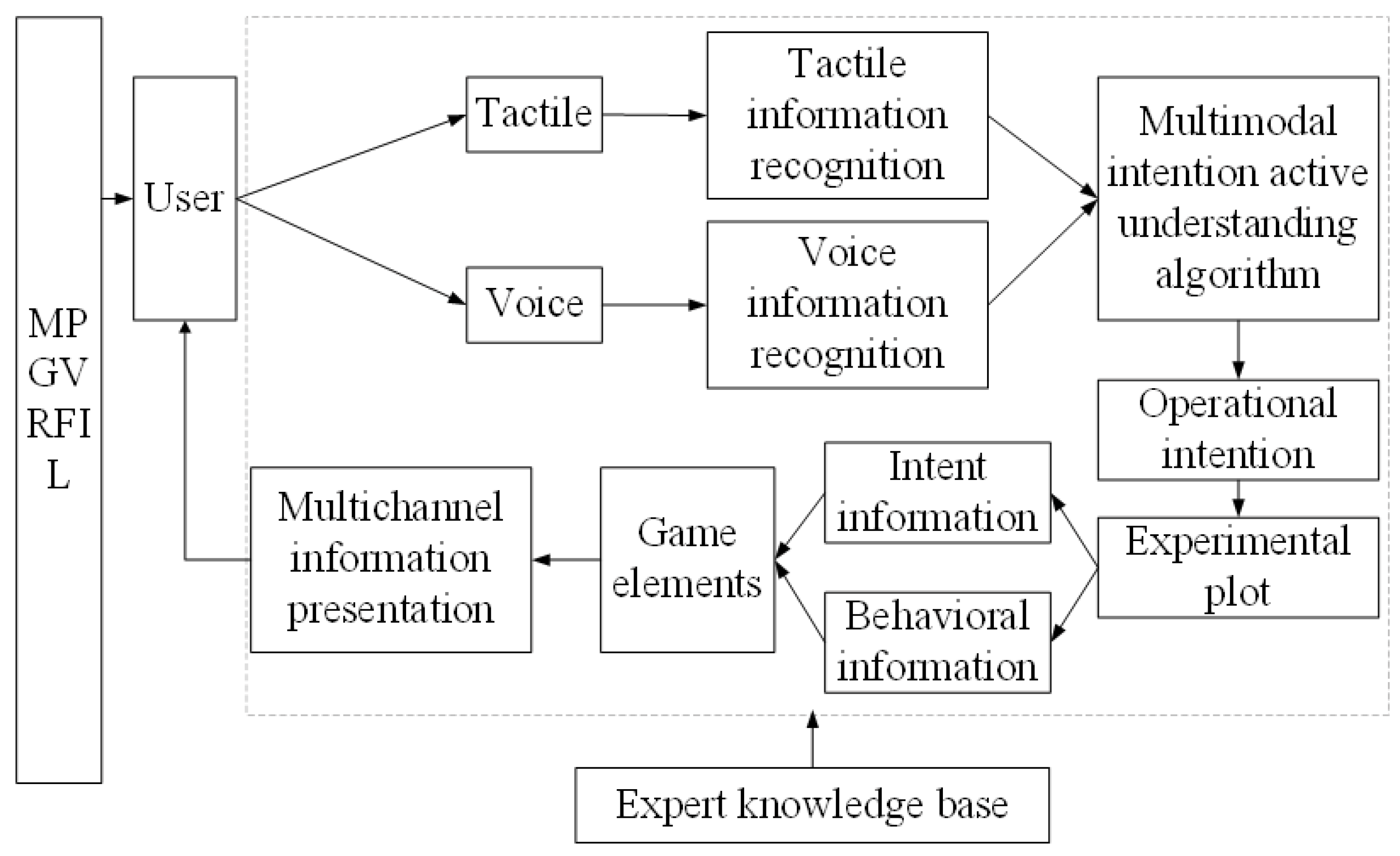

To simulate the chemistry experiment scene and operation in the traditional laboratory, we use the method of virtual and real fusion to perform the chemistry experiment. Real refers to the intelligent beaker, and virtual refers to the virtual experimental platform. At the same time, to improve the ability of the virtual and real fusion experimental platform to understand the user’s intention, we have added a multimodal intention active understanding algorithm. On this basis, we learn from existing game platforms and add game elements to the virtual and real fusion experimental platform. These methods make the experiment more exploratory and interesting, improve the sense of immersion and participation, and stimulate students’ learning enthusiasm. The overall framework of the multimodal perception gameplay virtual and real fusion experimental mode is shown in Figure 1.

The experimental platform obtains tactile information and voice information through the intelligent beaker and microphone and recognizes the obtained tactile information and voice information. The tactile recognition results and voice recognition results are input into multimodal intention active understanding algorithms to obtain the user intention. Finally, the user intention is input into the plot of the virtual and real fusion experimental scene. In the experimental plot, the platform analyzes the user’s intention information and operational information and combines game elements to present the experimental process to the user through a multichannel method.

3.1. Design and Interaction of Intelligent Devices

We use the method of virtual and real fusion for chemical experiments. Real refers to the new intelligent device that senses tactile information. The intelligent device (Figure 2) is a 3D-printed beaker model equipped with two touch sensors, a posture sensor, and a Bluetooth module. To make the intelligent device easy to use, we use Bluetooth to output tactile information: the intelligent beaker sends its sensor information to the computer through the Bluetooth module.

The interaction of intelligent devices occurs through touch sensors and posture sensors. The interactive mode of the intelligent beaker is as follows:

The No.1 touch sensor has the function of selecting chemical reagents. Users can choose different chemical substances through the touch sensor on the intelligent beaker.

The function of the No.2 touch sensor is to detect whether the way the user holds the intelligent beaker is standard. The No.2 touch sensor is set at the lowest end of the beaker, a position that cannot be touched when the intelligent beaker is held normally. If the user touches the No.2 touch sensor when holding the intelligent beaker, it will prompt the user to hold the beaker properly.

The posture sensor on the intelligent beaker is used to sense the beaker’s own information, including the angle, direction, and speed.

The posture sensor gives the beaker a rough dumping quantification function, which is determined by the dumping time, angle, and speed. As shown in Table 1, the angle is the angle of the current tipping direction of the intelligent beaker, and the speed v is obtained from the angular velocities of the coordinate axes of the intelligent beaker. The rough dumping quantification is divided into three levels: slow, normal, and fast. The per-level quantities in Table 1 are estimates of the amount of liquid poured in one moment t when the pouring angle falls within the corresponding range of the hierarchical classification in Table 1; in this paper, one moment represents 1 s. Since the values of pouring quantification are roughly estimated, we also present them in hierarchical levels in the animation. For example, the values in the interval (30, 50) are presented with the same effect in the virtual experimental scenario, where the same amount of liquid is shown. The pouring speed v is reflected through the pouring time. When the pouring speed is less than 30, we assume, based on experience, that the amount poured in 1 s is 0.8 times the amount at normal speed. Similarly, when the pouring speed is greater than 60, we assume that the amount poured in 1 s is 1.2 times the amount at normal speed. Finally, the total amount poured is the product of the per-second pouring volume and the pouring time, accumulated over the different levels.

Intelligent beakers have the common requirements of chemical experiment beakers and can be used to perform different chemical experiments.
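The rough dumping quantification described above can be sketched in a few lines. This is only an illustration under stated assumptions: the per-second volumes and the angle ranges other than (30, 50) are placeholders, because Table 1 is not reproduced here, and the names `ANGLE_LEVELS`, `volume_per_second`, `speed_factor`, and `poured_amount` are hypothetical. Only the speed limits 30 and 60 and the factors 0.8 and 1.2 come from the text.

```python
# Placeholder angle ranges and per-second volumes (assumed values, not Table 1).
# Each entry is (lower angle, upper angle, volume poured per second).
ANGLE_LEVELS = [
    (30.0, 50.0, 5.0),
    (50.0, 70.0, 10.0),
    (70.0, 90.0, 20.0),
]

SLOW_LIMIT = 30.0  # pouring speeds below this are treated as slow (from the text)
FAST_LIMIT = 60.0  # pouring speeds above this are treated as fast (from the text)


def volume_per_second(angle):
    """Return the level volume for an angle; every angle in a range maps to one value."""
    for low, high, volume in ANGLE_LEVELS:
        if low <= angle < high:  # half-open ranges are an assumption
            return volume
    return 0.0  # outside all ranges: nothing is poured in this sketch


def speed_factor(speed):
    """Scale the normal-speed amount: 0.8 when slow, 1.2 when fast, 1.0 otherwise."""
    if speed < SLOW_LIMIT:
        return 0.8
    if speed > FAST_LIMIT:
        return 1.2
    return 1.0


def poured_amount(samples):
    """Sum volume * factor * duration over (angle, speed, seconds) samples."""
    return sum(
        volume_per_second(angle) * speed_factor(speed) * seconds
        for angle, speed, seconds in samples
    )
```

For instance, `poured_amount([(40, 20, 2), (60, 45, 1)])` combines two seconds of slow pouring at one level with one second of normal pouring at the next level.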

3.2. Multimodal Information Perception

First, we define a set of intents in the chemical experiment. Since we use a combination of tactile and speech information to obtain user intent, the set of chemical experiment intents corresponding to tactile and speech information is the same. We set up multiple paths and obstacles in the experimental process so that the chemical experiment is no longer a single operational process. Since chemical experiments are relatively independent processes, different sets of intentions are set up for different experiments. We divide the whole experimental process into several experimental steps; the step set of the nth experiment contains all steps in that experimental process. Completing a step requires multiple operations. We set one or more paths in each step according to the rules of chemical experimental knowledge points; the path set of the nth experimental step contains all the paths in that step, i.e., the set of all the operation behaviors required to achieve the step. Finally, a set of intentions is defined according to the combination of multiple paths in each step; the intention set of the nth experiment contains all intentions for that experiment, and its size is the number of experimental intentions. Behavior and intent have a one-to-many relationship, and the user’s operational intent is predicted from behavior. We assign the intentions to each step according to the steps of the experiment and the paths. For example, for an experiment with three steps and five intentions, the intention set is partitioned into three groups, one per step, containing two, two, and one intentions, respectively. Assigning two intentions to a single step reflects the concept of multipath and obstacles in the experimental process. A concrete example is shown in Figure 3.
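The step-wise assignment of intentions can be pictured as a small lookup structure. The sketch below assumes three steps with two, two, and one intentions, matching the example; the labels `step_1` and `intent_1` and the function names are hypothetical placeholders, not the identifiers used in the platform.

```python
from typing import Dict, List

# Hypothetical labels: five intentions grouped by the step they belong to.
INTENTIONS_BY_STEP: Dict[str, List[str]] = {
    "step_1": ["intent_1", "intent_2"],
    "step_2": ["intent_3", "intent_4"],
    "step_3": ["intent_5"],
}


def candidate_intentions(step: str) -> List[str]:
    """Intentions the system needs to consider while the user is in `step`."""
    return list(INTENTIONS_BY_STEP.get(step, []))


def all_intentions() -> List[str]:
    """The full intention set of the experiment, in step order."""
    return [intent for group in INTENTIONS_BY_STEP.values() for intent in group]


def is_multipath(step: str) -> bool:
    """A step with more than one intention offers alternative paths."""
    return len(INTENTIONS_BY_STEP.get(step, [])) > 1
```

Restricting recognition to `candidate_intentions(step)` is one plausible reason for tracking the current step: it narrows the set of intentions that tactile or voice input has to be matched against.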

After entering the experimental scene, the user uses the intelligent beaker to perform experimental operations. The intelligent beaker perceives the user’s tactile information under the current experimental step. The tactile information, the current experimental step, and the tactile intention set of the current experiment are input into the tactile information conversion function, which recognizes the tactile information and outputs the tactile recognition result.

In the process of the virtual and real fusion experiment, users can express intention not only through tactile information but also through voice information. First, we build a voice command database based on the different experimental intention sets. The speech database has a one-to-one correspondence with the intention set of each chemical experiment, which is indexed by the type of experiment and the number of intentions of that experiment. We interviewed 20 students and asked how they would use language to express the intentions in the intention set; in the end, each intention had five expressions. Using the laboratory preparation of carbon dioxide as an example, the set of intents is {react limestone with dilute hydrochloric acid, react limestone with dilute sulfuric acid, react limestone with concentrated sulfuric acid, react calcium carbonate with dilute hydrochloric acid, react calcium carbonate with dilute sulfuric acid, react calcium carbonate with concentrated sulfuric acid}. The voice command database corresponding to the intent “react limestone with dilute hydrochloric acid” is {mix limestone with dilute hydrochloric acid, add dilute hydrochloric acid to limestone, react limestone with dilute hydrochloric acid, pour dilute hydrochloric acid into a conical flask containing limestone, put limestone with dilute hydrochloric acid into a reaction reagent flask}. Second, we found that the commands issued by users are all short voice commands. Therefore, we use word vectors to calculate the similarity between the speech information and the command set in the speech intention database. We use word2vec to train the word vector model and then use the model to convert the user’s voice information Aud and the voice commands in the voice database of the current step into word vectors. The maximum cosine similarity between the two is the result of speech information recognition, where Aud is the voice information and the comparison is made against the speech intention set of the current experiment at the current experimental step.
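The maximum-cosine-similarity matching can be illustrated with a self-contained sketch. Several things here are assumptions: the toy four-dimensional vectors in `TOY_VECTORS` stand in for a trained word2vec model, a sentence is represented by the mean of its known word vectors (the text does not say how word vectors are combined), and the names `sentence_vector`, `cosine`, and `recognize_speech` are hypothetical.

```python
import math
from typing import Dict, List, Optional, Tuple

# Toy word vectors standing in for a trained word2vec model (assumed values).
TOY_VECTORS: Dict[str, List[float]] = {
    "react": [1.0, 0.0, 0.0, 0.0],
    "mix": [0.9, 0.1, 0.0, 0.0],
    "limestone": [0.0, 1.0, 0.0, 0.0],
    "hydrochloric": [0.0, 0.0, 1.0, 0.0],
    "sulfuric": [0.0, 0.0, 0.0, 1.0],
    "acid": [0.0, 0.0, 0.5, 0.5],
}
DIM = 4

# Voice commands per intention for the current step (shortened from the example).
VOICE_COMMANDS: Dict[str, List[str]] = {
    "react limestone with dilute hydrochloric acid": [
        "mix limestone with dilute hydrochloric acid",
        "react limestone with dilute hydrochloric acid",
    ],
    "react limestone with dilute sulfuric acid": [
        "react limestone with dilute sulfuric acid",
    ],
}


def sentence_vector(text: str) -> List[float]:
    """Mean of the vectors of known words; unknown words are skipped (assumption)."""
    known = [TOY_VECTORS[w] for w in text.lower().split() if w in TOY_VECTORS]
    if not known:
        return [0.0] * DIM
    return [sum(vec[i] for vec in known) / len(known) for i in range(DIM)]


def cosine(a: List[float], b: List[float]) -> float:
    """Cosine similarity, defined as 0.0 when either vector is all zeros."""
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(x * x for x in b))
    if norm_a == 0.0 or norm_b == 0.0:
        return 0.0
    return sum(x * y for x, y in zip(a, b)) / (norm_a * norm_b)


def recognize_speech(
    aud: str, commands: Dict[str, List[str]]
) -> Tuple[Optional[str], float]:
    """Return the intention whose command is most similar to `aud`, with the score."""
    aud_vec = sentence_vector(aud)
    best_intent: Optional[str] = None
    best_sim = 0.0
    for intent, phrases in commands.items():
        for phrase in phrases:
            sim = cosine(aud_vec, sentence_vector(phrase))
            if sim > best_sim:
                best_intent, best_sim = intent, sim
    return best_intent, best_sim
```

Calling `recognize_speech("mix limestone with hydrochloric acid", VOICE_COMMANDS)` selects the hydrochloric acid intention, because one of its stored commands shares all the known words of the utterance. In a real system the toy dictionary would be replaced by vectors from the trained model.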

3.3. Active Understanding of Multimodal Intentions

The basic purpose of the proposed algorithm is to let the experimental system understand the user’s operation intention based on multimodal fusion. After obtaining the user’s tactile results and voice results, the multimodal fusion algorithm fuses the multimodal information to obtain the user’s intentions. To improve the accuracy of intention understanding, an active understanding process is added after multimodal fusion. The specific algorithm framework is shown in Figure 4.

As the figure shows, the user inputs voice information and tactile information. We use a multimodal information fusion model to fuse the recognition results of speech and touch. After obtaining the fusion results, we preprocess them and analyze those with lower confidence. The tactile information and voice information behind such a fusion result are input into a single-modal evaluation function, which determines which channel’s input is missing or incomplete. According to the result of the single-modal evaluation function, the system asks the user to enhance or supplement the information of that channel. A fusion result with higher confidence is taken as the user’s current intention. In this way, the multimodal intention active understanding algorithm combines the evaluation of the fusion result with the single-modal information evaluation and asks the user to enhance the input of a specific channel, which improves its ability to understand intentions.

We choose to fuse the multimodal information in the experimental process at the decision-making level and input the multimodal information into the multimodal information fusion function.

In terms of multimodal information fusion, we consider three situations: (1) only tactile information, (2) only voice information, and (3) tactile information and voice information at the same time. When tactile information and voice information exist at the same time, the average weighting method is used for fusion.
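The three fusion situations can be sketched as a small decision-level function. This is a sketch under assumptions, not the platform's implementation: each recognition result is modelled as a mapping from intention to confidence, "average weighting" is read as equal weights of 0.5, an intention missing from one channel is scored 0.0 there, and the names `Scores` and `fuse` are hypothetical.

```python
from typing import Dict, Optional, Tuple

# Assumed shape of a recognition result: intention label -> confidence in [0, 1].
Scores = Dict[str, float]


def fuse(tactile: Optional[Scores], speech: Optional[Scores]) -> Optional[Tuple[str, float]]:
    """Decision-level fusion covering the three situations described in the text."""
    if not tactile and not speech:
        return None  # nothing to fuse
    if not speech:
        fused = dict(tactile)  # (1) only tactile information
    elif not tactile:
        fused = dict(speech)  # (2) only voice information
    else:
        # (3) both channels: equal-weight average per intention
        intents = set(tactile) | set(speech)
        fused = {
            intent: 0.5 * tactile.get(intent, 0.0) + 0.5 * speech.get(intent, 0.0)
            for intent in intents
        }
    best = max(fused, key=fused.get)
    return best, fused[best]
```

For example, fusing tactile scores `{"pour": 0.8, "select": 0.2}` with speech scores `{"pour": 0.6, "select": 0.4}` picks `"pour"` with the averaged confidence of the two channels.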

After the fusion result is obtained, the system preprocesses the fusion result.

If the fusion result reaches the confidence threshold, the system inputs the obtained user intention into the experimental scene. If the fusion result is below the threshold, the system inputs the tactile and auditory recognition results into the single-channel evaluation function, in which the indices 1 and 2 represent touch and sound, respectively, and the tactile and speech channels each have their own threshold.

According to the results of the single-channel evaluation function, we analyze which channel has low information quality. According to the evaluation results, the system reminds the user to enhance the information of that channel and combines the context to obtain new user intentions. Finally, the real intention of the user is input into the current experimental scene.

The multimodal intention active understanding algorithm (Algorithm 1) is as follows:

| Algorithm 1 Multimodal intention active understanding algorithm (MIAUA) |
| --- |
| Input: tactile recognition result; speech recognition result |
| Output: user intention |
| 1: while the recognition results are not empty do |
| 2: input the recognition results into the multimodal information fusion function; |
| 3: while the fusion result is not empty do |
| 4: if the fusion result reaches the threshold then |
| 5: Get user intention; |
| 6: end if |
| 7: if the fusion result is less than the threshold then |
| 8: Get user intention; |
| 9: When the fusion result is less than the threshold, the tactile and speech recognition results are input to the single-channel evaluation function; |
| 10: if the evaluation indicates the tactile channel then |
| 11: Remind users to enhance tactile channel information; |
| 12: end if |
| 13: if the evaluation indicates the auditory channel then |
| 14: Remind users to enhance auditory channel information; |
| 15: end if |
| 16: if then |
| 17: Remind users that they cannot obtain accurate intentions and continue to predict user intentions; |
| 18: end if |
| 19: end if |
| 20: end while |
| 21: end while |
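One pass of the decision logic in Algorithm 1 can be sketched as follows. The threshold values, the function name `active_understanding`, and the returned message strings are hypothetical. In particular, the condition for the final reminder is not recoverable from the listing, so this sketch assumes it applies when neither channel falls below its own threshold.

```python
from typing import List, Tuple, Union

Decision = Tuple[str, Union[str, List[str]]]


def active_understanding(
    fused_intent: str,
    fused_conf: float,
    tactile_conf: float,
    speech_conf: float,
    fusion_threshold: float = 0.6,   # assumed value
    tactile_threshold: float = 0.5,  # assumed value
    speech_threshold: float = 0.5,   # assumed value
) -> Decision:
    """Accept a confident fusion result, otherwise say which channel to enhance."""
    if fused_conf >= fusion_threshold:
        # High-confidence fusion result: treat it as the user's current intention.
        return ("accept", fused_intent)

    # Low-confidence fusion result: evaluate each channel against its threshold.
    prompts: List[str] = []
    if tactile_conf < tactile_threshold:
        prompts.append("enhance tactile channel information")
    if speech_conf < speech_threshold:
        prompts.append("enhance auditory channel information")
    if not prompts:
        # Assumed fallback when no single channel can be blamed.
        prompts.append("intention unclear; continue predicting")
    return ("ask", prompts)
```

A caller would loop over this function: on `"ask"`, it prompts the user, collects the supplemented input, re-runs recognition and fusion, and calls the function again until the result is accepted.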

3.4. Algorithm Analysis

The intention capture result of the fusion layer does not necessarily reflect the real intention of the user, so its credibility needs to be evaluated. Under a specific algorithm framework, one of the key factors affecting credibility is the quality of multichannel information at the perceptual level. For example, if the user’s voice information is unclear, incomplete, or cannot be captured by voice recognition, the credibility of the intention perception based on the voice channel is low. In this case, the system can actively ask the user to input voice information again or change to a voice that can express the same intention. Therefore, this article evaluates the results on two levels: one to evaluate the result of fusion intention, and the other to evaluate the credibility of each channel.

The MFPA algorithm in the literature and the MMNI algorithm in the literature are both multimodal fusion algorithms for virtual and real fusion experiments. Like them, the MIAUA algorithm in this paper fuses voice and tactile information at the decision-making level. The difference is that MFPA and MMNI directly confirm or deny whether the fusion result is the real intention of the user, whereas MIAUA first evaluates the fusion result. A fusion result that passes the evaluation is taken as the real intention. For a fusion result with low credibility, MIAUA evaluates the information of each channel, and for a channel with low credibility the system actively asks the user to supplement and strengthen the information on that channel. Through this active understanding of intention, MIAUA is designed to recognize user intentions more accurately than MFPA and MMNI. As a result, the user’s interaction burden during the experiment should be lower, and the human–computer interaction more natural and coordinated.

4. Gameplay Design of Virtual and Real Fusion Experimental Mode

People experience a series of psychological changes when they study or play games. “Flow” is one of the most representative positive psychological experiences. Flow is a state in which participants focus on the goal and task at hand, concentrate so intensely that they forget about the passage of time, and filter out all external influences [40]. In terms of guiding teaching activities, Liao et al. [41] created an evaluation model to analyze students’ motivation and behavior by applying flow theory to distance learning and concluded that when students enter a state of flow while learning, the learning effect is greatly improved. Building on this mechanism, we incorporate game elements into the virtual experiment. In the game state, it is easier for students to enter the state of flow, which improves their learning interest and motivation. Therefore, we draw on the characteristics of existing game platforms to introduce gameplay into the interactive mode of the virtual and real fusion experiment. The introduction of gameplay is intended to stimulate students’ interest and motivation in the experimental process and to improve their learning efficiency.

Adding game elements to virtual experiments turns learning into a means of clearing game levels and can ease students’ negative emotions about learning. Since each chemical experiment has its own operating points and knowledge points, we design game rules for the virtual and real fusion experiment. We take the learning path as the experimental plot and guide the user through the chemical experiment along a main storyline. At the same time, real-time feedback is given in the form of points, levels, and rewards during the experiment so that users can quickly and intuitively understand how their experiment is going. We design the experimental mode as a level-clearing game, set the number of experimental levels according to the complexity of the chemical experiment, and set the plot of each level according to the experiment steps. We add a multipath strategy, an obstacle setting strategy, and an incentive strategy to this game-based virtual and real fusion experimental mode. The level rules are built on the multipath strategy and the obstacle setting strategy. Each level is composed of individual experimental steps, so that the entire experimental process stays coherent; at the same time, obstacles set during the experiment increase its difficulty and enhance its interest and playability. In psychology, reward is a universal state of mind that causes pleasant feelings and leads those who experience it to expect to be recognized and appreciated by others. Therefore, an incentive strategy is added during the experiment: when the learner completes an expected goal or passes a checkpoint, he or she is rewarded according to his or her performance in the experiment.

4.1. Multipath Strategy

Chemistry is a natural science based on experiments. Chemistry experiments are an important part of chemistry teaching and can cultivate students’ scientific inquiry ability and practical ability. Each chemical experiment has its own experimental steps, and so do exploratory experiments. We propose a multipath strategy for the game-based virtual and real fusion experiment and add multiple learning paths to the experimental plot. Under the multipath strategy, several paths are designed around the knowledge points of the chemical experiment at a given point in the plot, and the user chooses among them during the game, which makes the virtual and real fusion experiment more exploratory. For example, the method of producing carbon dioxide in a traditional laboratory is the reaction of limestone with dilute hydrochloric acid. In exploratory experiments, limestone can be replaced with marble or sodium carbonate, dilute hydrochloric acid can be replaced with dilute sulfuric acid, and the amount of limestone or the concentration of dilute hydrochloric acid can be changed.
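As a rough illustration of how such paths might be represented, the following Python sketch enumerates the reagent combinations named in the carbon dioxide example. The data layout, the function name `enumerate_paths`, and the `standard` flag are assumptions made for this sketch and are not taken from the system described here.

```python
from itertools import product

# Reagent choices named in the carbon dioxide example.
CARBONATES = ["limestone", "marble", "sodium carbonate"]
ACIDS = ["dilute hydrochloric acid", "dilute sulfuric acid"]

# The traditional laboratory method, used here as the non-exploratory path.
STANDARD_PATH = ("limestone", "dilute hydrochloric acid")


def enumerate_paths():
    """List every carbonate/acid combination the user could choose."""
    return [
        {"carbonate": c, "acid": a, "standard": (c, a) == STANDARD_PATH}
        for c, a in product(CARBONATES, ACIDS)
    ]


if __name__ == "__main__":
    for path in enumerate_paths():
        label = "standard" if path["standard"] else "exploratory"
        print(f'{path["carbonate"]} + {path["acid"]}: {label}')
```

Further choices mentioned in the text, such as the amount of limestone or the acid concentration, could be added as extra dimensions of the same product.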

4.2. Obstacle Setting Strategy

We use three methods to set obstacles in the experiment. Method 1: When the user wants to obtain nonexploratory experimental results, he or she can observe the desired experimental phenomenon only by selecting the correct path among the multiple paths. Method 2: We set up game levels in complex chemistry experiments, and users can enter the next level only after passing the previous one. For example, in the experiment of preparing carbon dioxide from limestone and dilute hydrochloric acid, the carbon dioxide produced is not pure (it contains water and hydrogen chloride gas). We set up two levels according to the degree of difficulty: the first level is to produce carbon dioxide, and the second level is to remove the impurities to obtain pure carbon dioxide. Method 3: During the experiment, we add a question-and-answer session related to knowledge points. The user can enter the next step only if the answer is correct. If the user answers incorrectly, the system presents several answer options, and the user chooses among them until he or she selects the correct one. In addition, during the observation of experimental phenomena, part of the phenomenon is obscured by a mosaic. The user can observe it only after passing the question-and-answer session on the relevant knowledge points.
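Methods 2 and 3 amount to simple gating logic, which the following Python sketch illustrates. The class names `QuizGate` and `LevelProgress`, their fields, and the sample question are hypothetical and serve only to show the idea.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class QuizGate:
    """Method 3: a question that hides the phenomenon until answered correctly."""
    question: str
    options: List[str]
    correct: str
    attempts: int = 0
    passed: bool = False

    def answer(self, choice: str) -> bool:
        """Record one attempt; the gate opens once the correct option is chosen."""
        if self.passed:
            return True
        self.attempts += 1
        self.passed = choice == self.correct
        return self.passed

    @property
    def mosaic_on(self) -> bool:
        # The phenomenon stays obscured until the question is passed.
        return not self.passed


@dataclass
class LevelProgress:
    """Method 2: a level can be entered only after the previous one is cleared."""
    level_names: List[str]
    cleared: int = 0  # number of levels cleared so far

    def can_enter(self, index: int) -> bool:
        return 0 <= index <= self.cleared and index < len(self.level_names)

    def clear(self, index: int) -> None:
        if not self.can_enter(index):
            raise ValueError("previous level not cleared yet")
        self.cleared = max(self.cleared, index + 1)


if __name__ == "__main__":
    gate = QuizGate(
        question="Which impurities does the prepared carbon dioxide contain?",
        options=["water and hydrogen chloride", "oxygen", "nitrogen"],
        correct="water and hydrogen chloride",
    )
    gate.answer("oxygen")
    gate.answer("water and hydrogen chloride")
    print(gate.attempts, gate.mosaic_on)

    progress = LevelProgress(["produce carbon dioxide", "purify carbon dioxide"])
    progress.clear(0)
    print(progress.can_enter(1))
```

The attempt counter kept by the gate is the kind of value a scoring rule could later consume.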

4.3. Incentive Strategy

The incentive strategy provides real-time feedback in the form of scores and rankings, so that users can quickly and intuitively understand their situation. The score consists of three parts: (1) The question-and-answer session about knowledge points during the experiment. The score is set according to the number of attempts the user needs to answer a question. (2) Operational behavior during the experiment. The score is set according to the user’s operational behavior. Users perform the experiments with intelligent beakers. Each intelligent beaker is equipped with a sensor that senses the state of the beaker and detects when it is being poured. In the course of the experiment, two kinds of incorrect operations can be detected: pouring from an empty beaker and pouring from a beaker too quickly. (3) The chosen path. Different path combinations correspond to different experimental results and experimental phenomena and are scored according to the path scores set in the game rules.
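The three-part score can be sketched as follows. All point values, penalty sizes, event names, and function names are assumptions chosen for illustration; the source does not specify the actual scoring rules.

```python
from typing import Iterable

# Hypothetical event names for the two incorrect operations the beaker can detect.
INCORRECT_OPERATIONS = {"empty_pour", "fast_pour"}


def quiz_score(attempts: int, full_marks: int = 10, penalty: int = 3) -> int:
    """Part 1: fewer attempts earn more points, never below zero."""
    return max(0, full_marks - penalty * (attempts - 1))


def operation_score(events: Iterable[str], full_marks: int = 10, penalty: int = 5) -> int:
    """Part 2: deduct points for each detected incorrect operation."""
    errors = sum(1 for event in events if event in INCORRECT_OPERATIONS)
    return max(0, full_marks - penalty * errors)


def total_score(attempts: int, events: Iterable[str], path_score: int) -> int:
    """Sum the quiz part, the operation part, and the path score (part 3)."""
    return quiz_score(attempts) + operation_score(events) + path_score


if __name__ == "__main__":
    # Two quiz attempts, one overly fast pour, and a path worth 8 points.
    print(total_score(2, ["pour", "fast_pour"], path_score=8))
```

A ranking could then be produced by sorting users on their total score.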