A Topology Based Automatic Registration Method for Infrared and Polarized Coupled Imaging

Abstract

1. Introduction

2. Methodology

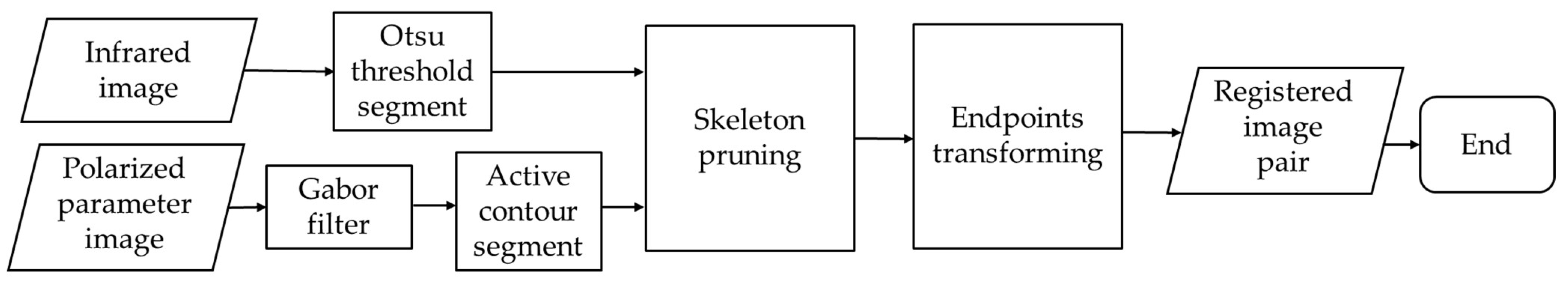

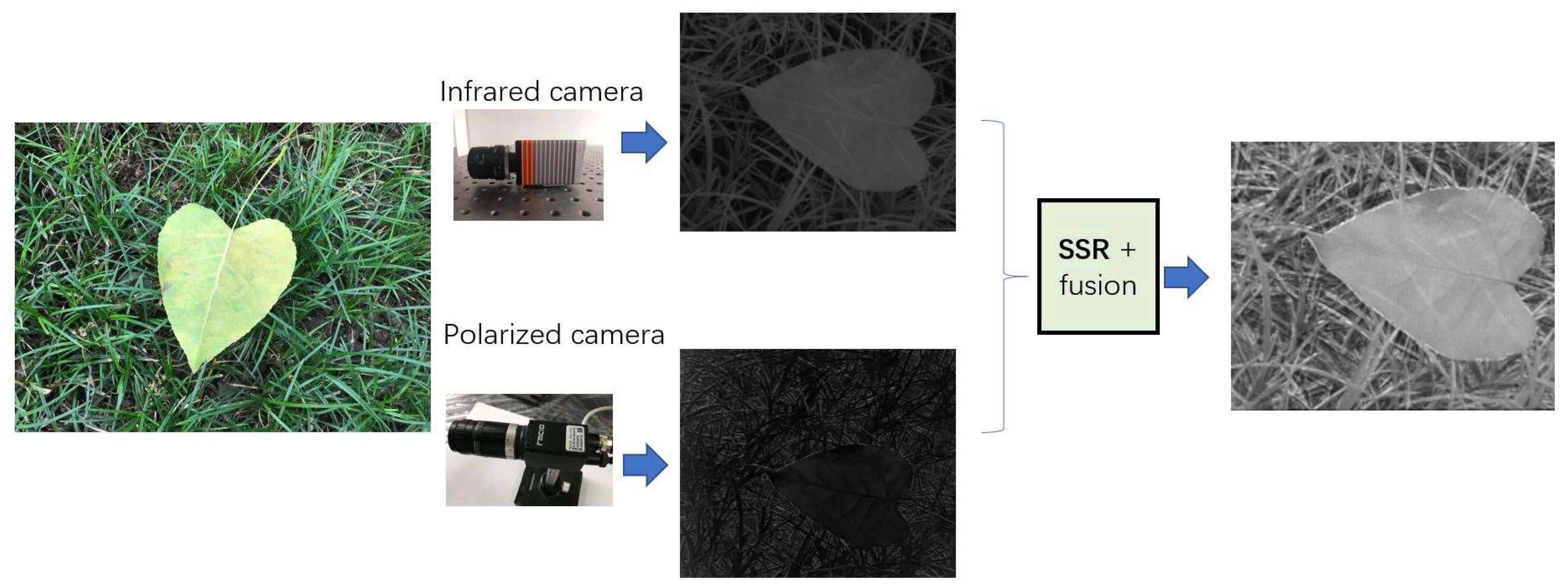

2.1. Overview of the Proposed Algorithm

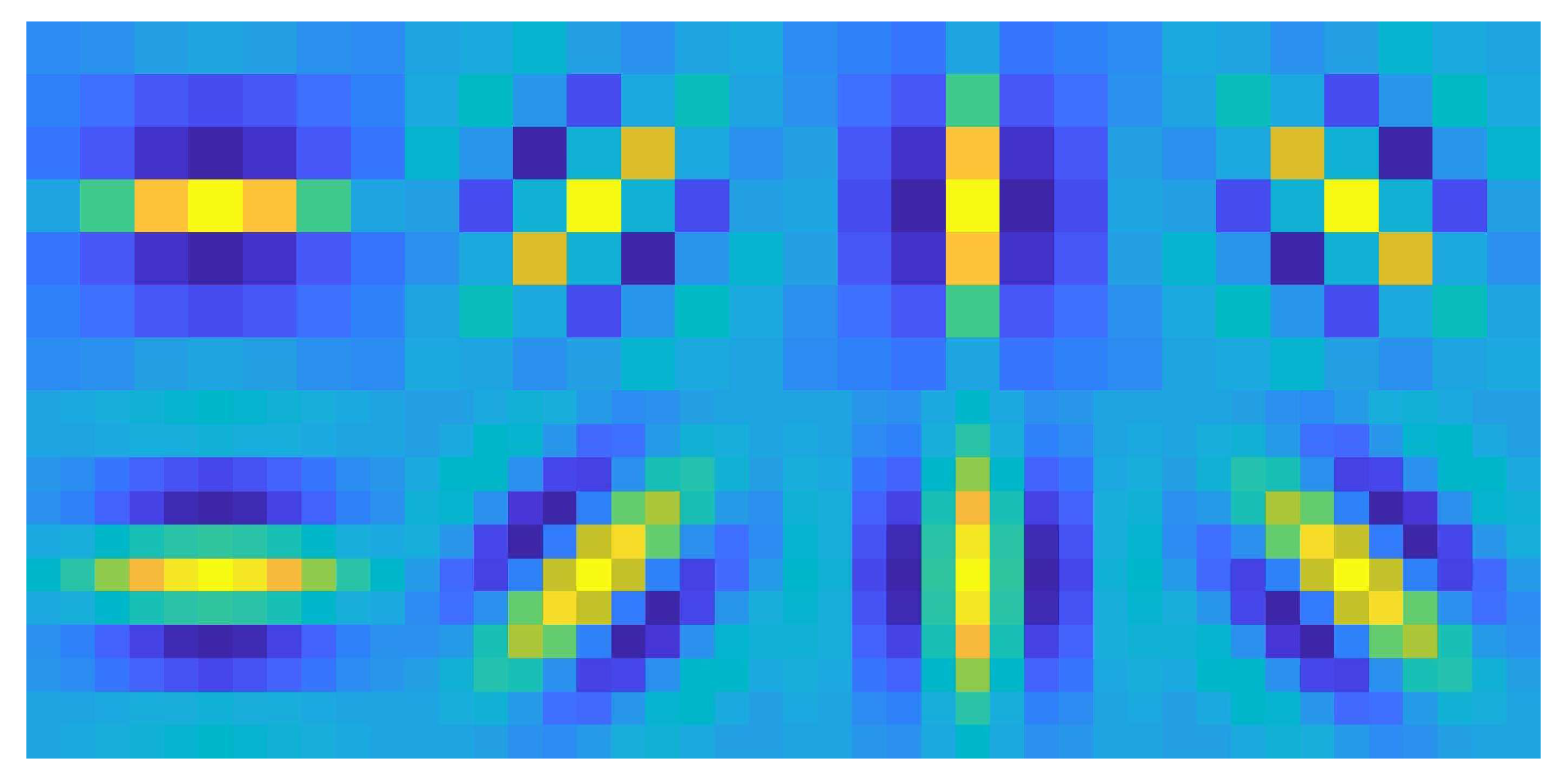

2.2. Target Segmentation from Pending Images

2.3. Region Skeletonization for Control Points

2.4. Transformation

3. Results and Discussion

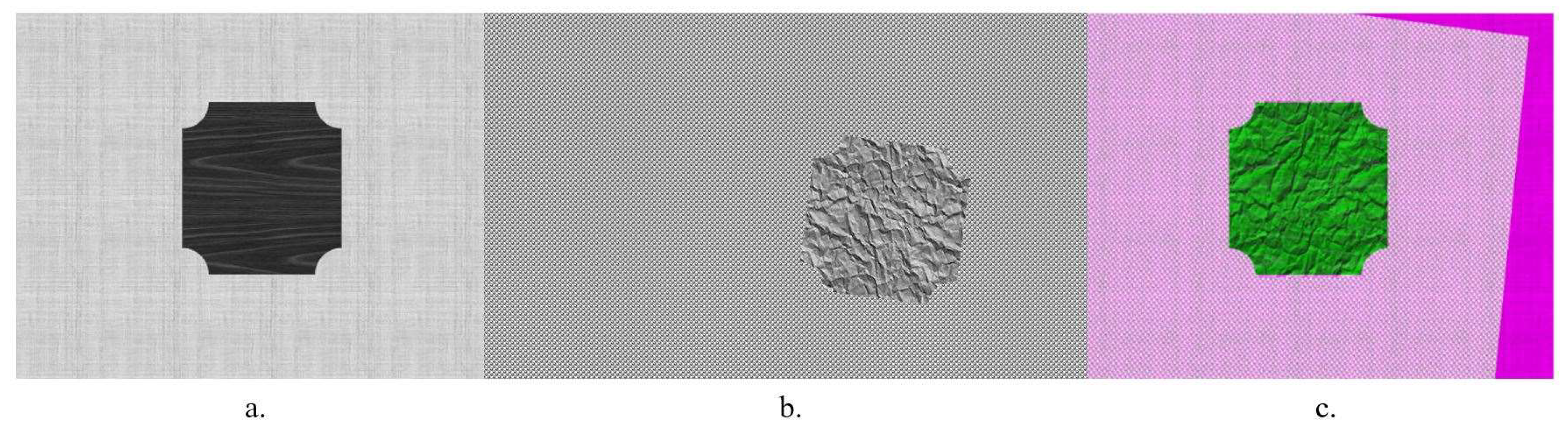

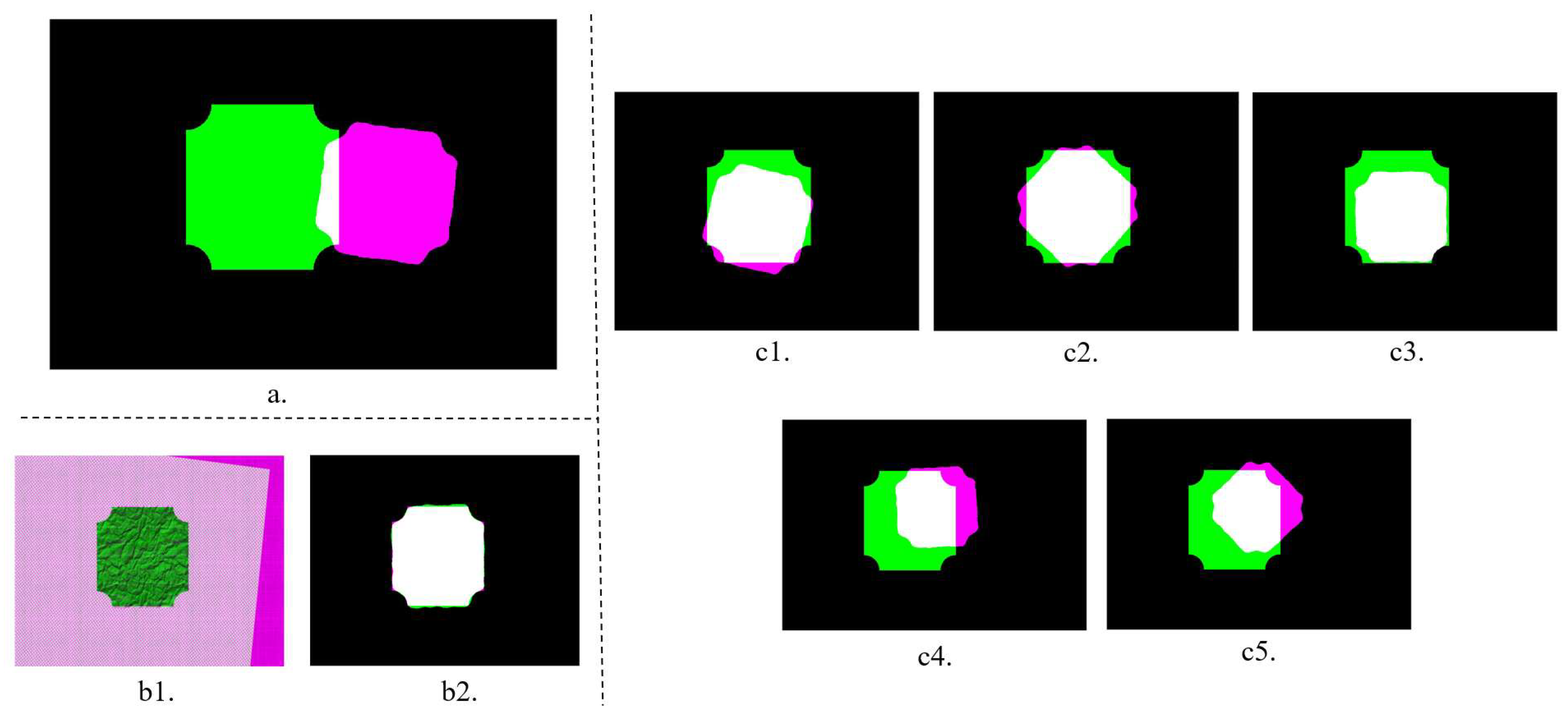

3.1. Synthetic Images Experiment

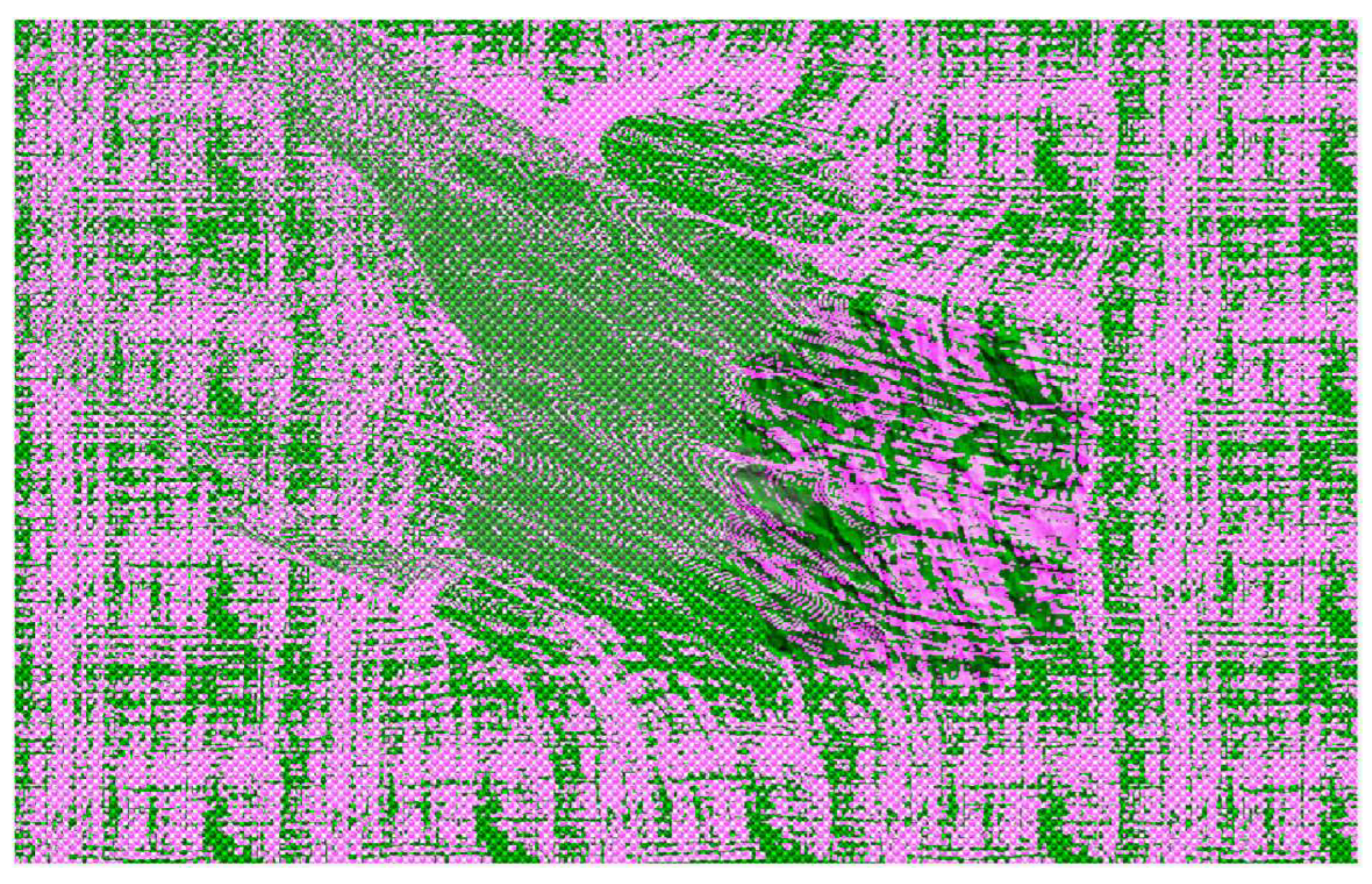

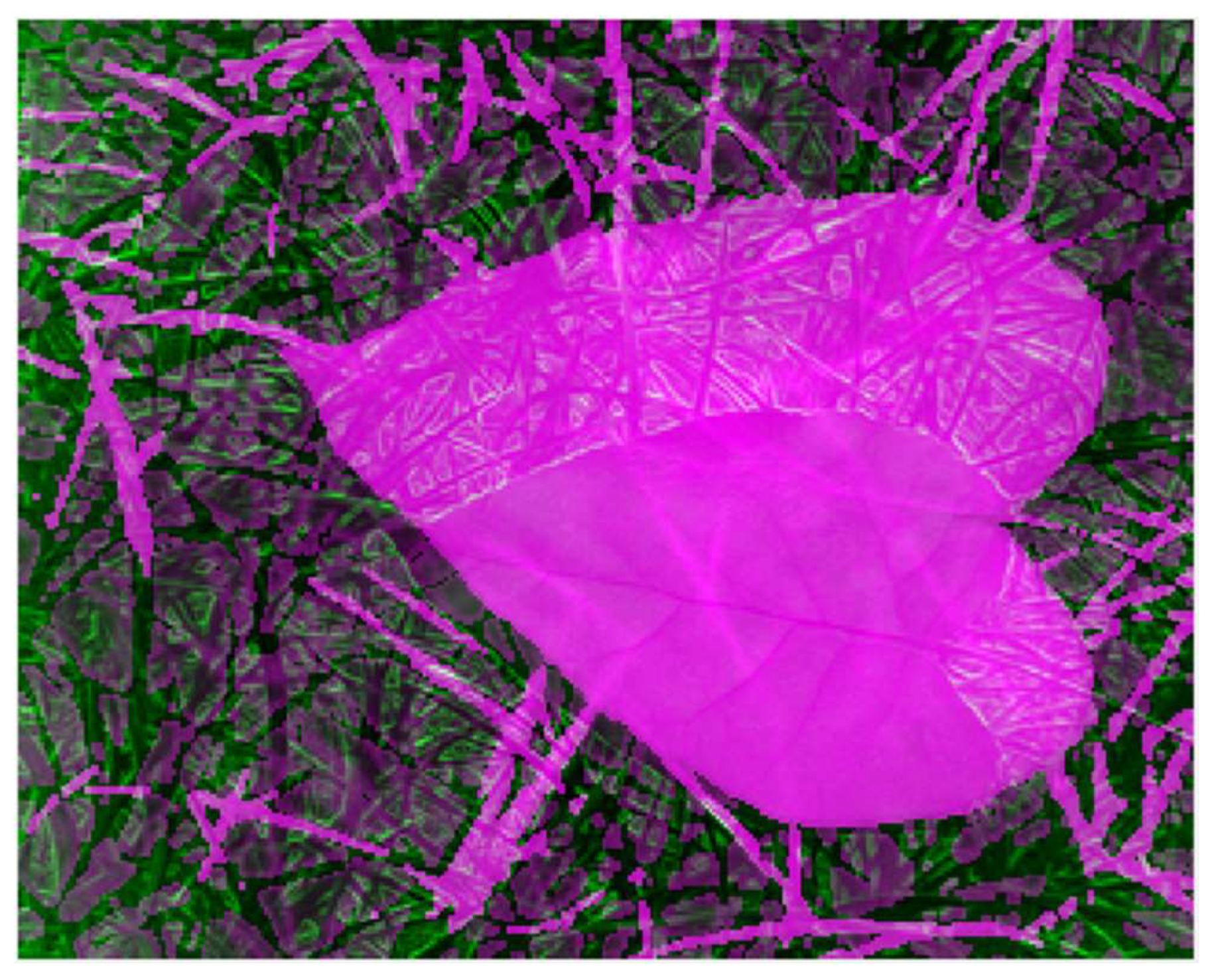

3.2. Real World Image Experiment

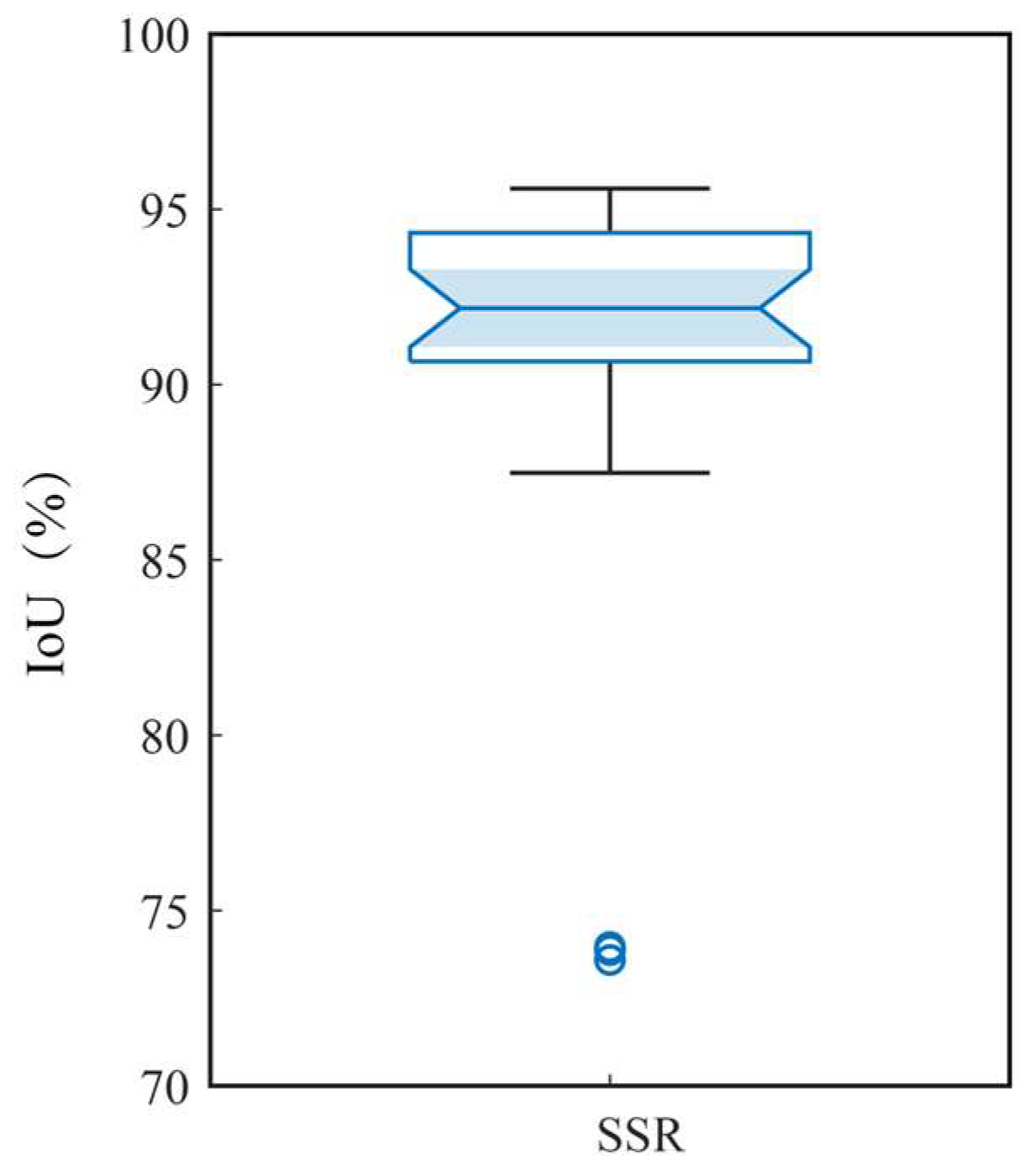

3.3. Objective Evaluation

4. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest


| Feature | Original Image Pair: Detected Points | Original Image Pair: Matched Pairs | Segmented Image Pair: Detected Points | Segmented Image Pair: Matched Pairs |
|---|---|---|---|---|
| SURF | 152-692 | 0 | 88-273 | 2 |
| MSER | 1-1107 | 0 | 0-0 | 0 |
| BRISK | 13-1 | 13 | 64-21 | 0 |
| FAST | 1-1 | 1 | 7-8 | 4 |
| Harris | 8-0 | 0 | 8-79 | 0 |
| MinEigen | 8-87540 | 0 | 8-91 | 0 |

| Feature | Original Image Pair: Detected Points | Original Image Pair: Matched Pairs | Segmented Image Pair: Detected Points | Segmented Image Pair: Matched Pairs |
|---|---|---|---|---|
| SURF | 0-55 | 0 | 80-366 | 13 |
| MSER | 14-122 | 0 | 2-6 | 0 |
| BRISK | 0-0 | 0 | 32-552 | 0 |
| FAST | 0-0 | 0 | 9-259 | 0 |
| Harris | 17-12 | 0 | 2-30 | 0 |
| MinEigen | 45-40 | 0 | 2-49 | 1 |

| Algorithm | Synthetic Image: Time | Synthetic Image: IoU | Real World Image: Time | Real World Image: IoU |
|---|---|---|---|---|
| SSR | 1.31 s | 96.67% | 1.76 s | 91.02% |
| Manual | 9 min+ | 93.49% | 15 min+ | 94.49% |
| Mean Squares | 5.60 s | 73.35% | / | / |
| Mattes MI | 4.20 s | 82.52% | / | / |
| Phase correlation | 0.85 s | 69.84% | / | / |
| Powell | 10.36 s | 69.29% | 6.99 s | 53.94% |
| PSO | 10.72 s | 68.63% | 6.47 s | 56.08% |
| BSO | / | / | 4.95 s | 53.99% |
| GA | / | / | 9.19 s | 55.80% |
| MIND | 154.41 s | / | 16.17 s | / |
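The IoU column above can be read as an overlap score between the target region in the reference image and the same region after registration. The following is a minimal sketch of that metric, assuming IoU denotes the usual intersection over union of two binary target masks; the function name `iou`, the nested-list mask representation, and the toy 4x4 masks are illustrative assumptions, not the authors' evaluation code.

```python
from typing import Sequence

Mask = Sequence[Sequence[int]]


def iou(mask_a: Mask, mask_b: Mask) -> float:
    """Intersection over union of two same-sized binary masks."""
    same_rows = len(mask_a) == len(mask_b)
    if not same_rows or any(len(ra) != len(rb) for ra, rb in zip(mask_a, mask_b)):
        raise ValueError("masks must have the same shape")

    intersection = 0
    union = 0
    for row_a, row_b in zip(mask_a, mask_b):
        for a, b in zip(row_a, row_b):
            in_a, in_b = bool(a), bool(b)
            intersection += in_a and in_b  # pixel belongs to both targets
            union += in_a or in_b          # pixel belongs to at least one target

    # Assumption of this sketch: two empty masks count as a perfect overlap.
    return 1.0 if union == 0 else intersection / union


# Hypothetical masks: the registered target is offset by one column.
reference = [
    [0, 0, 0, 0],
    [0, 1, 1, 0],
    [0, 1, 1, 0],
    [0, 0, 0, 0],
]
registered = [
    [0, 0, 0, 0],
    [0, 0, 1, 1],
    [0, 0, 1, 1],
    [0, 0, 0, 0],
]

score = iou(reference, registered)
print(f"IoU = {score:.2%}")
```

In this toy case the two 2x2 targets share two pixels out of six covered in total, so a one-pixel misalignment already costs two thirds of the score; on small targets the metric is correspondingly sensitive to residual registration error.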


© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Zhong, A.; Fu, Q.; Huang, D.; Zong, K.; Jiang, H. A Topology Based Automatic Registration Method for Infrared and Polarized Coupled Imaging. Appl. Sci. 2022, 12, 12596. https://doi.org/10.3390/app122412596
