Implementation of the XR Rehabilitation Simulation System for the Utilization of Rehabilitation with Robotic Prosthetic Leg

Abstract


1. Introduction

2. Background Theory

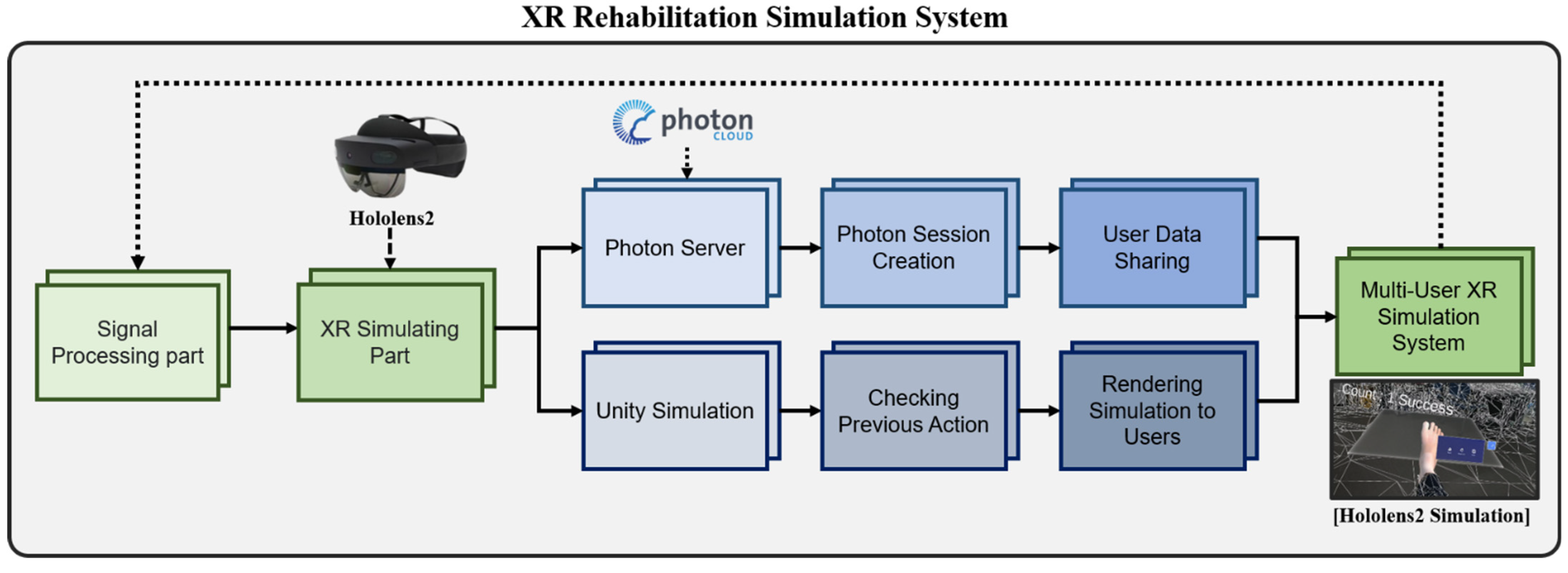

2.1. XR Rehabilitation Simulation System

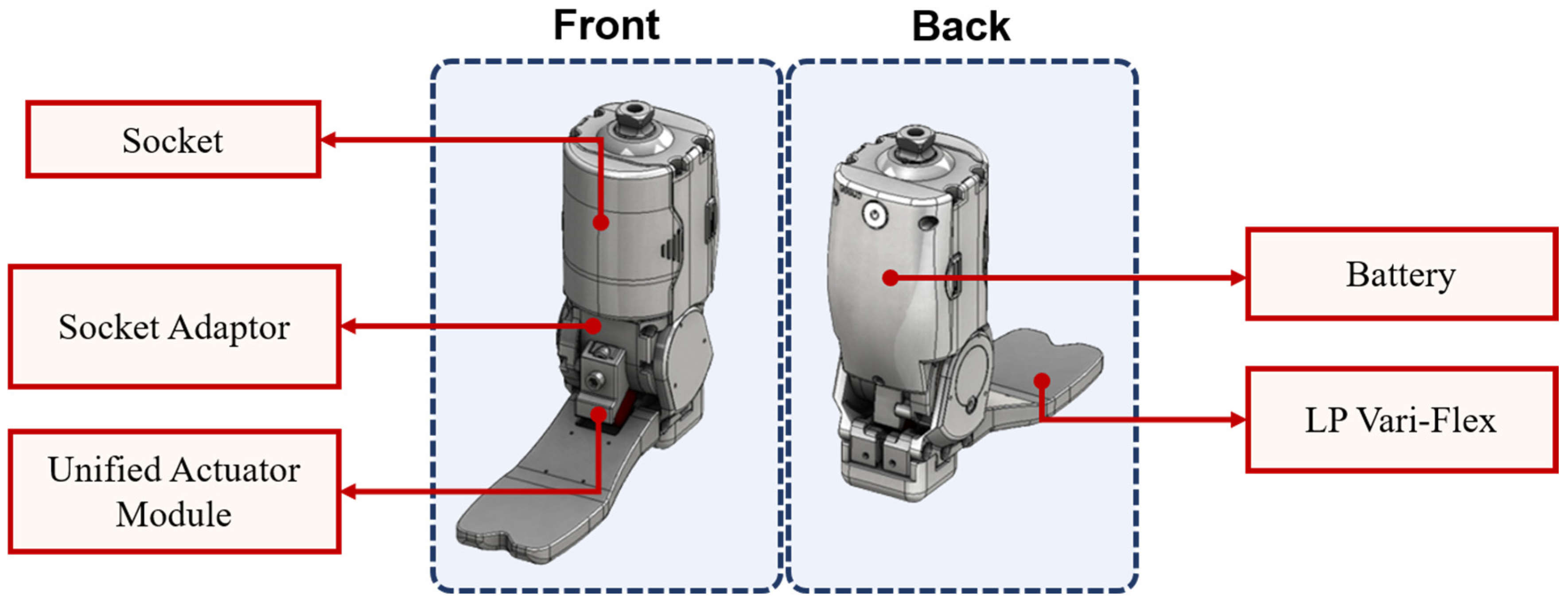

2.2. Robotic Ankle Prosthetic Leg

2.3. Related Work

3. Algorithm of System

3.1. XR Rehabilitation Simulation System

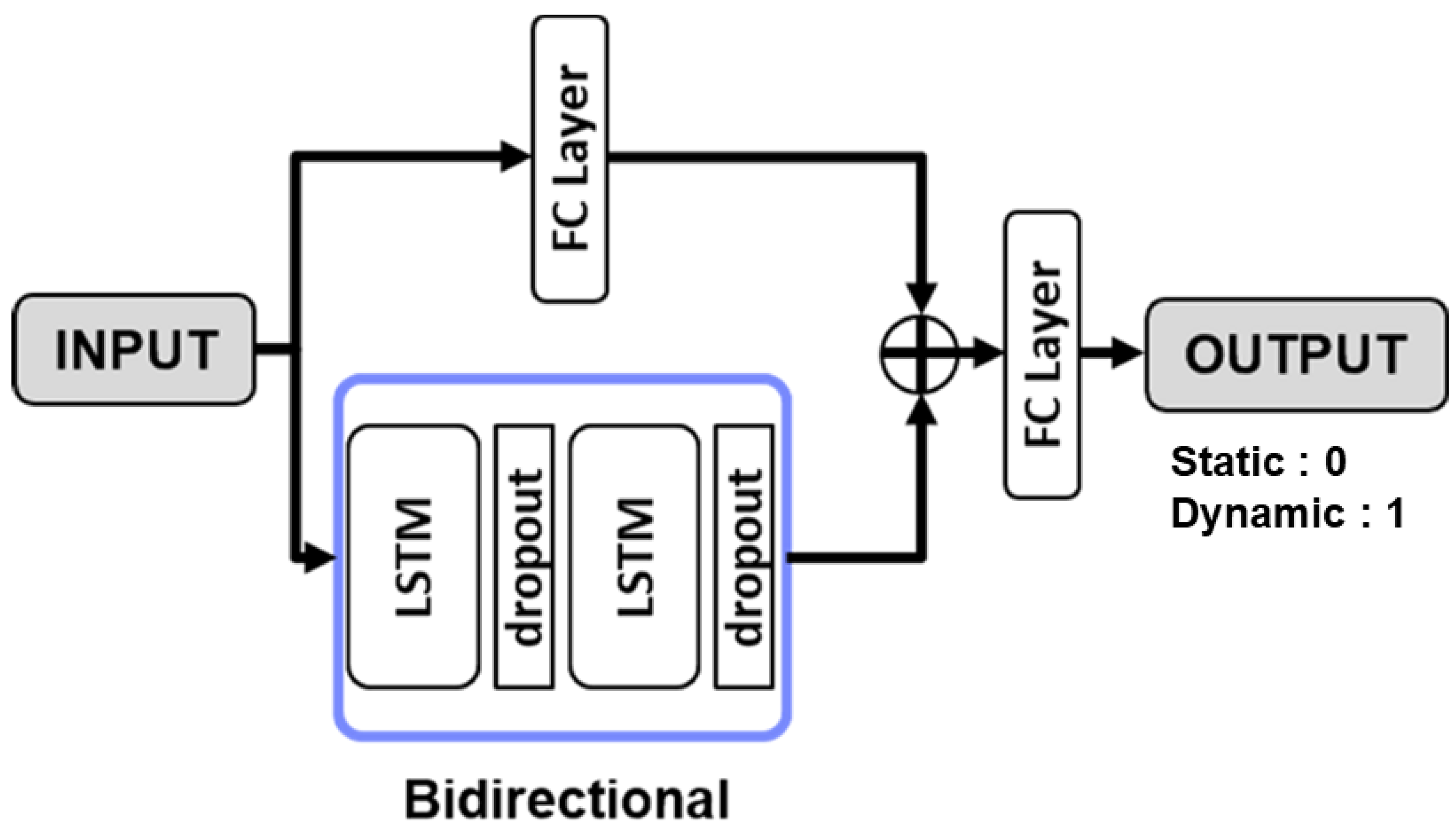

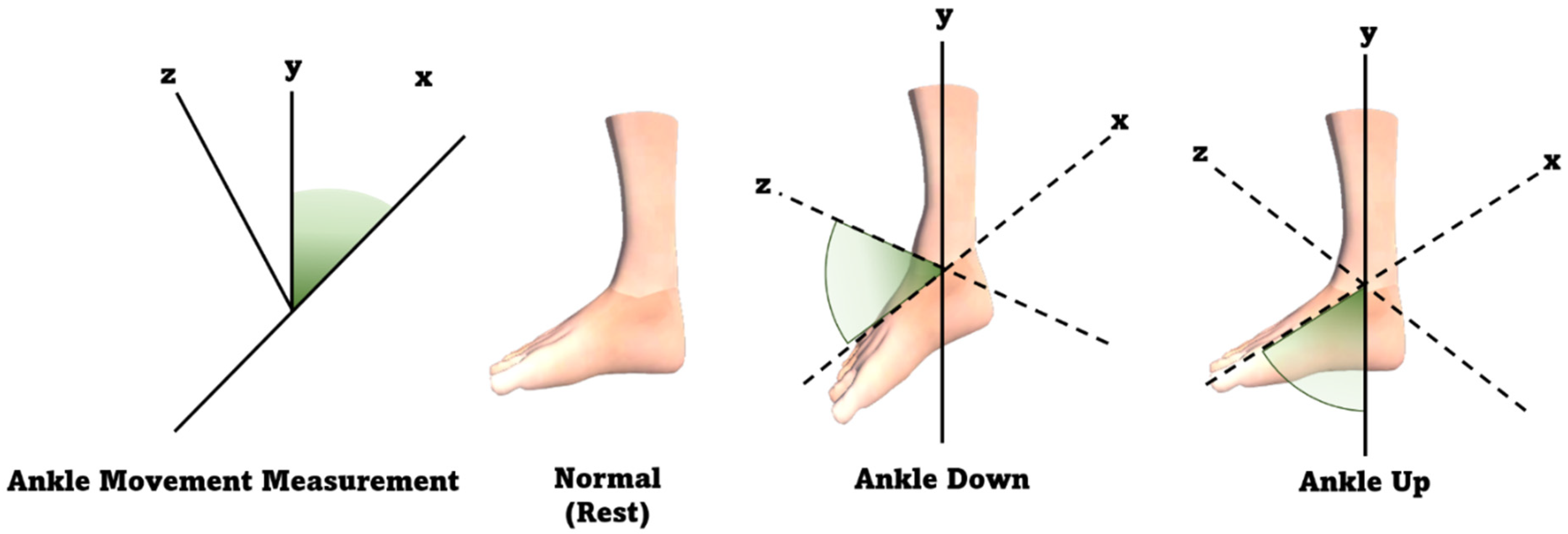

3.2. Movement Recognition

3.3. Rehabilitation Content

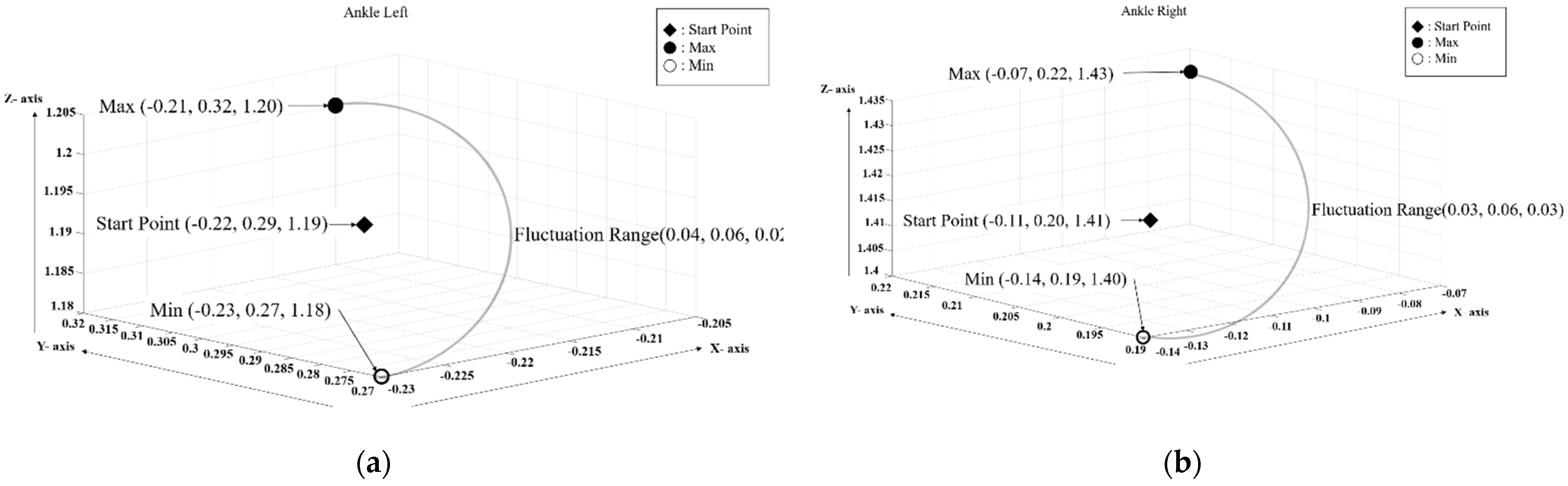

3.3.1. Joint Vertex Movement

3.3.2. 3D Model 6DoF Acquisition

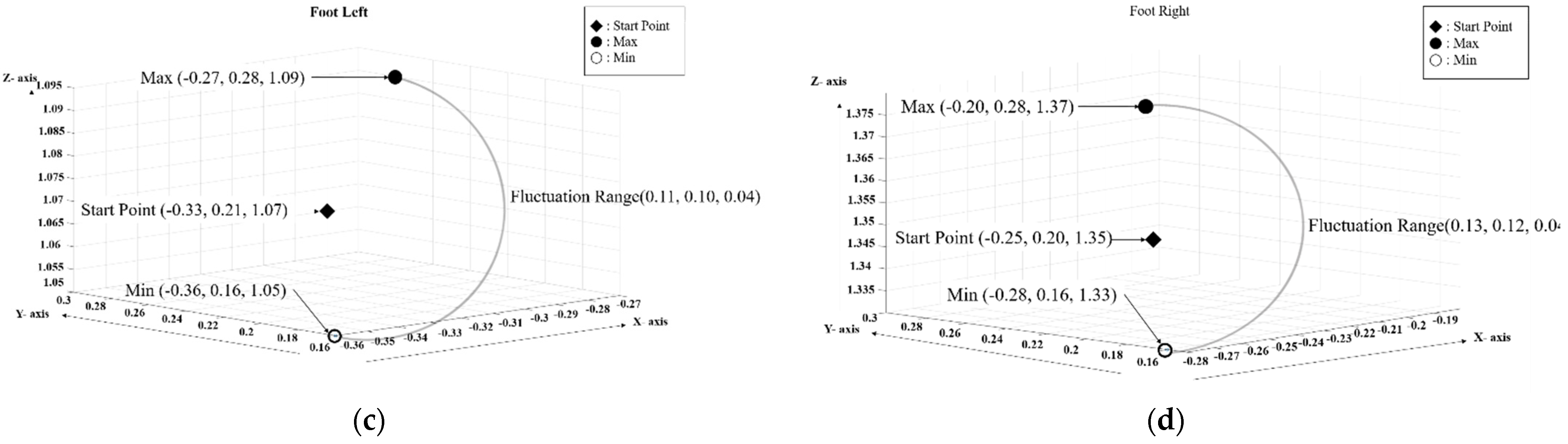

3.4. Rehabilitation Method

4. Results

4.1. Experimental Environment

4.2. Evaluation Method

4.2.1. EMG Signal Motion Recognition

4.2.2. t-test Analysis

4.3. Results of EMG Signal Processing and t-test

4.3.1. EMG Signal Processing Results

4.3.2. t-test Results

5. Discussion

6. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

- Howard, M.C. A meta-analysis and systematic literature review of virtual reality rehabilitation programs. Comput. Hum. Behav. 2017, 70, 317–327.

- Yoshioka, K. Development and psychological effects of a VR device rehabilitation program: Art program with feedback system reflecting achievement levels in rehabilitation exercises. Adv. Intell. Syst. Comput. 2018, 538–546.

- Lee, Y.; Kumar, Y.S.; Lee, D.; Kim, J.; Kim, J.; Yoo, J.; Kwon, S. An extended method for saccadic eye movement measurements using a head-mounted display. Healthcare 2020, 8, 104.

- Lee, C.; Kim, J.; Cho, S.; Kim, J.; Yoo, J.; Kwon, S. Development of real-time hand gesture recognition for tabletop holographic display interaction using azure kinect. Sensors 2020, 20, 4566.

- Lee, S.; Yoo, J.; Park, M.; Kim, J.; Kwon, S. Robust extrinsic calibration of multiple RGB-D cameras with body tracking and feature matching. Sensors 2021, 21, 1013.

- Choi, Y.-H.; Ku, J.; Lim, H.; Kim, Y.H.; Paik, N.-J. Mobile game-based virtual reality rehabilitation program for upper limb dysfunction after ischemic stroke. Restor. Neurol. Neurosci. 2016, 34, 455–463.

- Leng-Feng, L.; Narayanan, M.S.; Kannan, S.; Mendel, F.; Krovi, V.N. Case studies of musculoskeletal-simulation-based rehabilitation program evaluation. IEEE Trans. Robot. 2009, 25, 634–638.

- Smith, P.A.; Dombrowski, M.; Buyssens, R.; Barclay, P. Usability testing games for prosthetic training. In Proceedings of the 2018 IEEE 6th International Conference on Serious Games and Applications for Health (SeGAH), Vienna, Austria, 16–18 May 2018; pp. 1–7.

- Maqbool, H.F.; Husman, M.A.B.; Awad, M.I.; Abouhossein, A.; Iqbal, N.; Dehghani-Sanij, N.N. A real-time gait event detection for lower limb prosthesis control and evaluation. IEEE Trans. Neural Syst. Rehabil. Eng. 2017, 25, 1500–1509.

- Gunal, M.M. Simulation and the fourth industrial revolution. In Simulation for Industry 4.0; Springer International Publishing: Cham, Switzerland, 2019; pp. 1–17.

- Choi, Y.-H.; Paik, N.-J. Mobile game-based virtual reality program for upper extremity stroke rehabilitation. J. Vis. Exp. 2018, 133, 56241.

- D’angelo, M.; Narayanan, S.; Reynolds, D.B.; Kotowski, S.; Page, S. Application of virtual reality to the rehabilitation field to aid amputee rehabilitation: Findings from a systematic review. Disabil. Rehabil.: Assist. Technol. 2010, 5, 136–142.

- Vieira, Á.; Melo, C.; Machado, J.; Gabriel, J. Virtual reality exercise on a home-based phase III cardiac rehabilitation program, effect on executive function, quality of life and depression, anxiety and stress: A randomized controlled trial. Disabil. Rehabil. Assist. Technol. 2017, 13, 112–123.

- Andrews, C.; Southworth, M.K.; Silva, J.N.A.; Silva, J.R. Extended reality in medical practice. Curr. Treat. Options Cardiovasc. Med. 2019, 21, 18.

- Wüest, S.; van de Langenberg, R.; de Bruin, E.D. Design considerations for a theory-driven exergame-based rehabilitation program to improve walking of persons with stroke. Eur. Rev. Aging Phys. Act. 2013, 11, 119–129.

- Nowak, A.; Woźniak, M.; Pieprzowski, M.; Romanowski, A. Advancements in medical practice using mixed reality technology. Adv. Intell. Syst. Comput. 2019, 431–439.

- Li, Q.; Chen, S.; Xu, C.; Chu, X.; Li, Z. Design, Control and implementation of a powered prosthetic leg. In Proceedings of the 2018 11th International Workshop on Human Friendly Robotics (HFR), Shenzhen, China, 13–14 November 2018; pp. 85–90.

- Kumar, S.; Mohammadi, A.; Quintero, D.; Rezazadeh, S.; Gans, N.; Gregg, R.D. Extremum seeking control for model-free auto-tuning of powered prosthetic legs. IEEE Trans. Control Syst. Technol. 2020, 28, 2120–2135.

- Yang, U.-J.; Kim, J.-Y. Mechanical design of powered prosthetic leg and walking pattern generation based on motion capture data. Adv. Robot. 2015, 29, 1061–1079.

- Azimi, V.; Nguyen, T.T.; Sharifi, M.; Fakoorian, S.A.; Simon, D. Robust ground reaction force estimation and control of lower-limb prostheses: Theory and simulation. IEEE Trans. Syst. Man Cybern. Syst. 2018, 50, 3024–3035.

- Abbas, R.L.; Cooreman, D.; Al Sultan, H.; El Nayal, M.; Saab, I.M.; El Khatib, A. The effect of adding virtual reality training on traditional exercise program on balance and gait in unilateral, traumatic lower limb amputee. Games Health J. 2021, 10, 50–56.

- Hashim, N.A.; Abd Razak, N.A.; Gholizadeh, H.; Abu Osman, N.A. Video game–based rehabilitation approach for individuals who have undergone upper limb amputation: Case-control study. JMIR Serious Games 2021, 9, e17017.

- Davoodi, R.; Loeb, G.E. MSMS software for VR simulations of neural prostheses and patient training and rehabilitation. Stud. Health Technol. Inform. 2011, 163, 156–162.

- Phelan, I.; Arden, M.; Matsangidou, M.; Carrion-Plaza, A.; Lindley, S. Designing a Virtual Reality Myoelectric Prosthesis Training System for Amputees. In Extended Abstracts of the 2021 CHI Conference on Human Factors in Computing Systems (CHI EA ’21); Association for Computing Machinery: New York, NY, USA, 2021; Article 49; pp. 1–7.

- Knight, A.; Carey, S.; Dubey, R. An Interim Analysis of the Use of Virtual Reality to Enhance Upper Limb Prosthetic Training and Rehabilitation. In Proceedings of the 9th ACM International Conference on PErvasive Technologies Related to Assistive Environments (PETRA ’16); Association for Computing Machinery: New York, NY, USA, 2016; Article 65; pp. 1–4.

- Bates, T.J.; Fergason, J.R.; Pierrie, S.N. Technological advances in prosthesis design and rehabilitation following upper extremity limb loss. Curr. Rev. Musculoskelet. Med. 2020, 13, 485–493.

| Joint | Max. (m) | Min. (m) | Fluctuation Range (m) |
|---|---|---|---|
| Ankle Left | X: −0.21, Y: 0.32, Z: 1.20 | X: −0.23, Y: 0.27, Z: 1.18 | X: 0.04, Y: 0.06, Z: 0.02 |
| Ankle Right | X: −0.07, Y: 0.22, Z: 1.43 | X: −0.14, Y: 0.19, Z: 1.40 | X: 0.03, Y: 0.06, Z: 0.03 |
| Foot Left | X: −0.27, Y: 0.28, Z: 1.09 | X: −0.36, Y: 0.16, Z: 1.05 | X: 0.11, Y: 0.10, Z: 0.04 |
| Foot Right | X: 0.20, Y: 0.28, Z: 1.37 | X: −0.28, Y: 0.16, Z: 1.33 | X: 0.13, Y: 0.12, Z: 0.04 |
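The maximum, minimum, and fluctuation-range columns summarize how far each joint moved along each axis. A minimal Python sketch of that kind of summary, assuming the fluctuation range is the per-axis difference between the maximum and minimum position over one recording; the function name `fluctuation_range` and the sample frames are hypothetical and are not the measured data.

```python
# Sketch: per-axis fluctuation range of a tracked joint.
# Assumptions (not from the paper): range = max - min of each coordinate over
# one recording; positions are in metres; the sample frames are hypothetical.
from typing import Dict, Sequence, Tuple

Vec3 = Tuple[float, float, float]


def fluctuation_range(samples: Sequence[Vec3]) -> Dict[str, Dict[str, float]]:
    """Return per-axis max, min and range (max - min) for (x, y, z) positions."""
    if not samples:
        raise ValueError("need at least one sample")
    stats: Dict[str, Dict[str, float]] = {}
    for i, axis in enumerate("XYZ"):
        values = [frame[i] for frame in samples]
        top, bottom = max(values), min(values)
        stats[axis] = {"max": top, "min": bottom, "range": top - bottom}
    return stats


if __name__ == "__main__":
    # Hypothetical ankle positions from three frames (metres).
    frames = [(-0.10, 0.25, 1.30), (-0.12, 0.24, 1.31), (-0.11, 0.27, 1.29)]
    for axis, s in fluctuation_range(frames).items():
        print(f"{axis}: max={s['max']:.2f} min={s['min']:.2f} range={s['range']:.2f}")
```

Working per axis keeps the X, Y, and Z statistics separate, which matches the way each table cell lists the three coordinates independently.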

| Category | eXtended Reality |
|---|---|
| Device | HoloLens 2, Raspberry Pi 4 Model B |
| Measuring Device | Azure Kinect, Intan RHD 2216 |
| Language | C# |
| Software | Unity (2020.3.8) |
| Number of Axes | 6 Degrees of Freedom |
| OS | Windows Holographic |

| Parameter | Value |
|---|---|
| Epochs | 100 |
| Batch Size | 200 |
| Optimizer | Adam |
| Initial Learning Rate | 0.005 |
| Scheduler | Polynomial Decay |
| Dropout | 0.5 |
| CPU | AMD Ryzen 7 5800X |
| RAM | 32 GB |
| GPU | NVIDIA GeForce RTX 3090 (24 GB) |
| Framework | TensorFlow 2.6.0 |
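The table lists a polynomial-decay scheduler starting from a learning rate of 0.005 but does not give its end rate, power, or decay length. The sketch below reproduces the decay formula used by TensorFlow's `PolynomialDecay` schedule (with `cycle=False`) in plain Python. The end learning rate (1e-4) and power (1.0) are TensorFlow's defaults and are assumptions here, as is decaying over all 100 epochs; the training-set size `n_train` is hypothetical.

```python
# Sketch of a polynomial-decay learning-rate schedule for the settings above.
# Assumptions (not reported in the paper): END_LR and POWER use TensorFlow's
# defaults, decay spans the whole run, and the sample count is hypothetical.
import math

EPOCHS = 100
BATCH_SIZE = 200
INITIAL_LR = 0.005
END_LR = 1e-4   # assumption: TF default end_learning_rate
POWER = 1.0     # assumption: TF default power (linear decay)


def polynomial_decay(step: int, decay_steps: int,
                     initial_lr: float = INITIAL_LR,
                     end_lr: float = END_LR,
                     power: float = POWER) -> float:
    """Learning rate at an optimizer step; held at end_lr once decay_steps is reached."""
    step = min(step, decay_steps)
    frac = 1.0 - step / decay_steps
    return (initial_lr - end_lr) * frac ** power + end_lr


def total_decay_steps(num_samples: int, epochs: int = EPOCHS,
                      batch_size: int = BATCH_SIZE) -> int:
    """One optimizer step per mini-batch, counting the last partial batch."""
    return epochs * math.ceil(num_samples / batch_size)


if __name__ == "__main__":
    n_train = 10_000  # hypothetical number of EMG training windows
    steps = total_decay_steps(n_train)
    for s in (0, steps // 2, steps, steps + 100):
        print(s, round(polynomial_decay(s, steps), 6))
```

In TensorFlow 2.6 the built-in equivalent would be `tf.keras.optimizers.schedules.PolynomialDecay(0.005, decay_steps)` passed as the `learning_rate` argument of `tf.keras.optimizers.Adam`; its default `end_learning_rate=0.0001` and `power=1.0` are the values assumed in the sketch.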

| Number | Items |
|---|---|
| 1.1 | Its appearance was designed with amputation patients in mind. |
| 1.2 | The interface was designed for patients with lower-limb amputations. |
| 2.1 | The physical controls were convenient to reach. |
| 2.2 | The operation method was simple. |
| 2.3 | There was a help function for users. |
| 2.4 | It was easy to switch to another menu when needed. |
| 2.5 | It adapted to the user’s different usage environments (home, hospital, etc.). |
| 2.6 | Frequently used functions were easy to access. |
| 3.1 | Rehabilitation time was shorter than with conventional methods. |
| 3.2 | The rehabilitation training process was more effective than the existing methods. |
| 3.3 | Compared with the existing methods, the virtual prosthesis helped to perform rehabilitation training accurately. |
| 3.4 | Rehabilitation training was more efficient than with the existing methods. |
| 3.5 | It was easy to adapt to the HoloLens 2 device. |
| 3.6 | It was easy to adapt to the virtual prosthesis rehabilitation training. |
| 3.7 | It was more convenient than the existing rehabilitation methods. |
| 3.8 | It provided more motivation than the existing rehabilitation methods. |
| 3.9 | The virtual prosthesis was friendlier and more comfortable than the robotic prosthesis. |
| 3.10 | I was satisfied with this program overall. |
| 3.11 | The rehabilitation content of this program was more helpful than the existing methods. |
| 3.12 | This program can be reused. |
| 3.13 | I want to recommend this program to other patients with amputations. |

| Metric | Value |
|---|---|
| Accuracy | 92.43% |
| Loss | 0.405% |
| Inference Time | 0.98 ms |

| Serial | Items | Public Group (N = 8) M ± SD | Amputation Group (N = 7) M ± SD | z | p-Value |
|---|---|---|---|---|---|
| 1 | 1.1 | 6.375 ± 1.847 | 7.000 ± 1.291 | −0.652 | 0.514 |
| | 1.2 | 6.000 ± 2.138 | 6.571 ± 2.300 | −0.409 | 0.682 |
| 2 | 2.1 | 6.375 ± 2.386 | 6.143 ± 1.952 | −0.409 | 0.682 |
| | 2.2 | 6.875 ± 1.959 | 7.000 ± 2.000 | −0.237 | 0.812 |
| | 2.3 | 6.000 ± 2.563 | 6.714 ± 0.951 | −0.299 | 0.765 |
| | 2.4 | 6.375 ± 2.133 | 5.714 ± 1.380 | −1.063 | 0.288 |
| | 2.5 | 7.625 ± 2.200 | 5.143 ± 1.070 | −2.245 | *0.025 |
| | 2.6 | 7.000 ± 2.070 | 4.857 ± 1.215 | −2.102 | *0.036 |
| 3 | 3.1 | 7.000 ± 2.138 | 5.857 ± 1.573 | −1.425 | 0.154 |
| | 3.2 | 7.000 ± 2.138 | 5.857 ± 1.773 | −1.251 | 0.211 |
| | 3.3 | 7.125 ± 2.800 | 6.581 ± 1.512 | −1.001 | 0.317 |
| | 3.4 | 7.000 ± 2.138 | 5.857 ± 1.864 | −1.254 | 0.210 |
| | 3.5 | 4.625 ± 1.408 | 6.571 ± 1.272 | −2.294 | *0.022 |
| | 3.6 | 6.750 ± 2.435 | 5.000 ± 1.633 | −1.756 | 0.079 |
| | 3.7 | 7.375 ± 2.387 | 5.428 ± 1.397 | −2.051 | *0.040 |
| | 3.8 | 8.000 ± 2.777 | 6.857 ± 1.345 | −1.704 | 0.088 |
| | 3.9 | 7.125 ± 2.700 | 7.714 ± 2.360 | −0.480 | 0.631 |
| | 3.10 | 7.125 ± 2.232 | 7.286 ± 1.254 | −0.303 | 0.762 |
| | 3.11 | 7.375 ± 1.922 | 7.000 ± 1.155 | −1.027 | 0.305 |
| | 3.12 | 7.250 ± 2.605 | 7.429 ± 1.512 | −0.236 | 0.813 |
| | 3.13 | 7.000 ± 2.828 | 8.000 ± 1.732 | −0.648 | 0.517 |
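The table reports a z statistic with each p-value. Under a standard normal approximation the two-sided p-value is p = 2(1 − Φ(|z|)) = erfc(|z|/√2), and the z values for items 1.1, 2.5, 2.6, 3.5, and 3.7 give the listed p-values to three decimals with this formula. The sketch assumes that normal approximation (for example a rank-based test such as Mann–Whitney U, which the z column and small groups suggest, although the table does not name the test); the helper names `two_sided_p` and `flag` are illustrative, and α = 0.05 is assumed for the asterisk marking.

```python
# Sketch: two-sided p-values from z statistics under a normal approximation.
# Assumption: the reported z comes from a normal approximation; the test
# itself is not named in the table. ALPHA = 0.05 is assumed for the '*' mark.
import math

ALPHA = 0.05


def two_sided_p(z: float) -> float:
    """p = 2 * (1 - Phi(|z|)) = erfc(|z| / sqrt(2)) for a standard normal Phi."""
    return math.erfc(abs(z) / math.sqrt(2.0))


def flag(p: float, alpha: float = ALPHA) -> str:
    """Format p to three decimals, prefixing '*' when p < alpha."""
    return f"*{p:.3f}" if p < alpha else f"{p:.3f}"


if __name__ == "__main__":
    # z values copied from items 1.1, 2.5, 2.6, 3.5 and 3.7 of the table.
    rows = [("1.1", -0.652), ("2.5", -2.245), ("2.6", -2.102),
            ("3.5", -2.294), ("3.7", -2.051)]
    for item, z in rows:
        print(item, flag(two_sided_p(z)))
```

Using `math.erfc` avoids the loss of precision that computing 1 − Φ(|z|) directly can cause for large |z|.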

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Shim, W.; Kim, H.; Lim, G.; Lee, S.; Kim, H.; Hwang, J.; Lee, E.; Cho, J.; Jeong, H.; Pak, C.; et al. Implementation of the XR Rehabilitation Simulation System for the Utilization of Rehabilitation with Robotic Prosthetic Leg. Appl. Sci. 2022, 12, 12659. https://doi.org/10.3390/app122412659


Chicago/Turabian Style: Shim, Woosung, Hoijun Kim, Gyubeom Lim, Seunghyun Lee, Hyojin Kim, Joomin Hwang, Eunju Lee, Jeongmok Cho, Hyunghwa Jeong, Changsik Pak, et al. 2022. "Implementation of the XR Rehabilitation Simulation System for the Utilization of Rehabilitation with Robotic Prosthetic Leg." Applied Sciences 12, no. 24: 12659. https://doi.org/10.3390/app122412659

APA Style: Shim, W., Kim, H., Lim, G., Lee, S., Kim, H., Hwang, J., Lee, E., Cho, J., Jeong, H., Pak, C., Suh, H., Hong, J., & Kwon, S. (2022). Implementation of the XR Rehabilitation Simulation System for the Utilization of Rehabilitation with Robotic Prosthetic Leg. Applied Sciences, 12(24), 12659. https://doi.org/10.3390/app122412659