Users’ Information Disclosure Behaviors during Interactions with Chatbots: The Effect of Information Disclosure Nudges

Abstract

1. Introduction

2. Literature Review and Theoretical Foundation

2.1. Chatbots as Recommendation Agents and Users’ Privacy Concerns

2.2. Antecedents to Information Disclosure

2.2.1. Question Sensitivity

2.2.2. Question Relevance

2.2.3. Information Disclosure

2.2.4. Privacy Calculus

2.2.5. Online Privacy Paradox

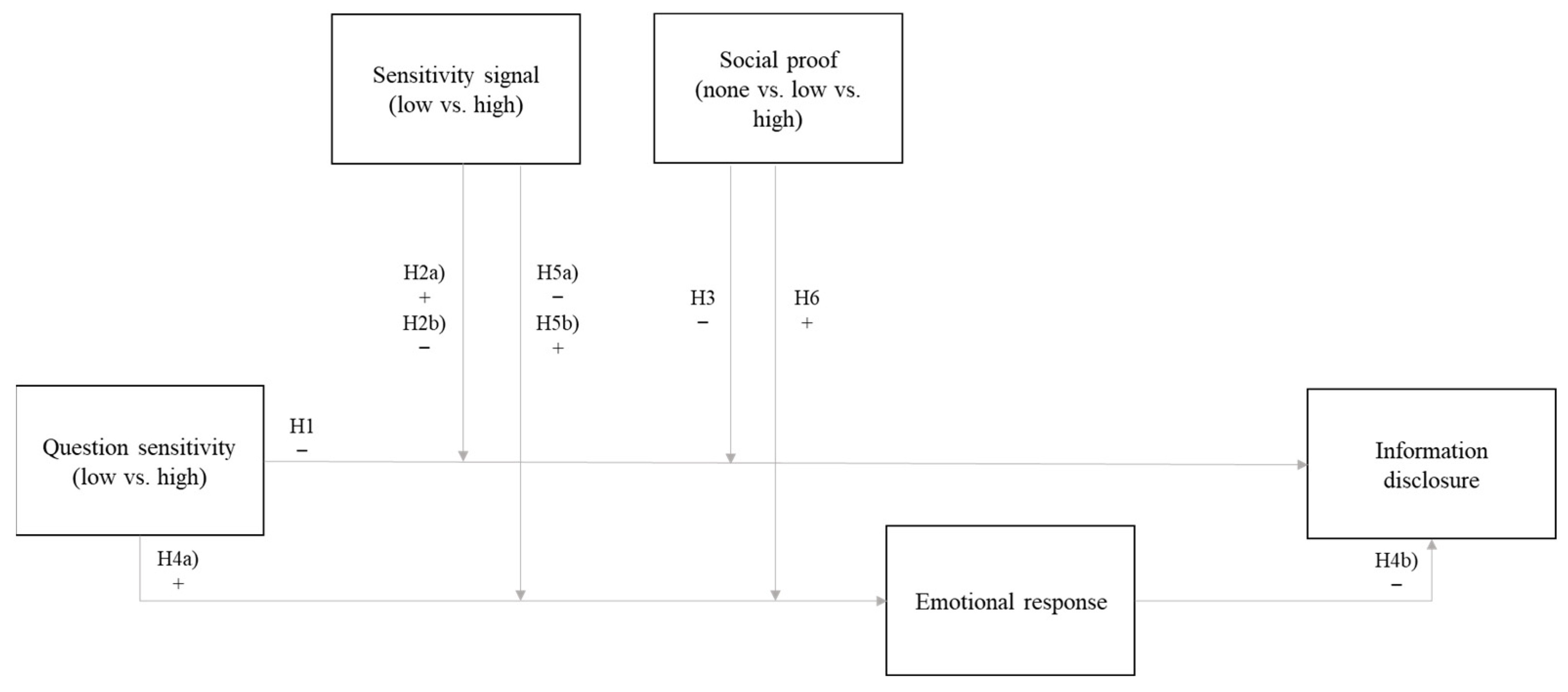

2.3. Effects of Information Disclosure Nudges (Sensitivity Signal and Social Proof) on Information Disclosure

2.3.1. Elaboration Likelihood Model

2.3.2. Nudge Theory and Sensitivity Signal

2.3.3. Cialdini’s Persuasion Tactics

2.4. Mediating Effect of Emotional Response

3. Methods

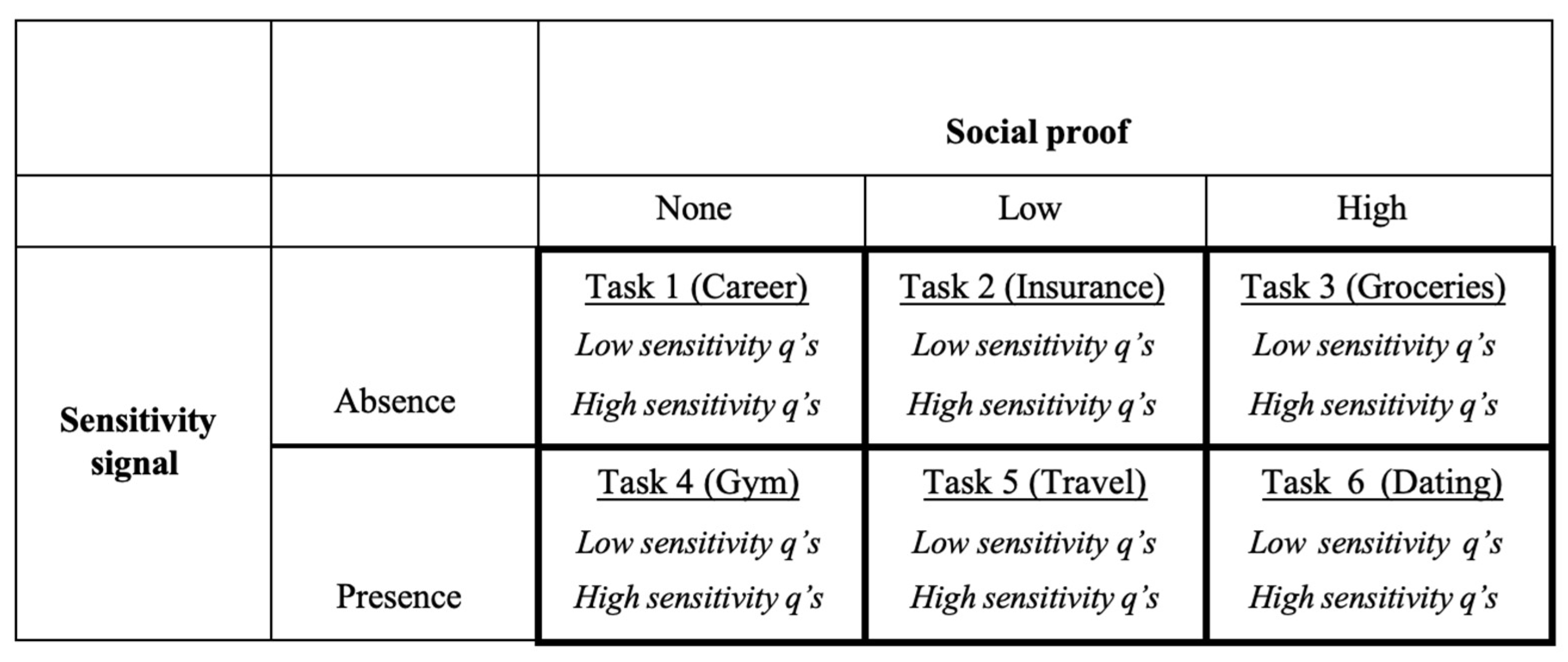

3.1. Experimental Design

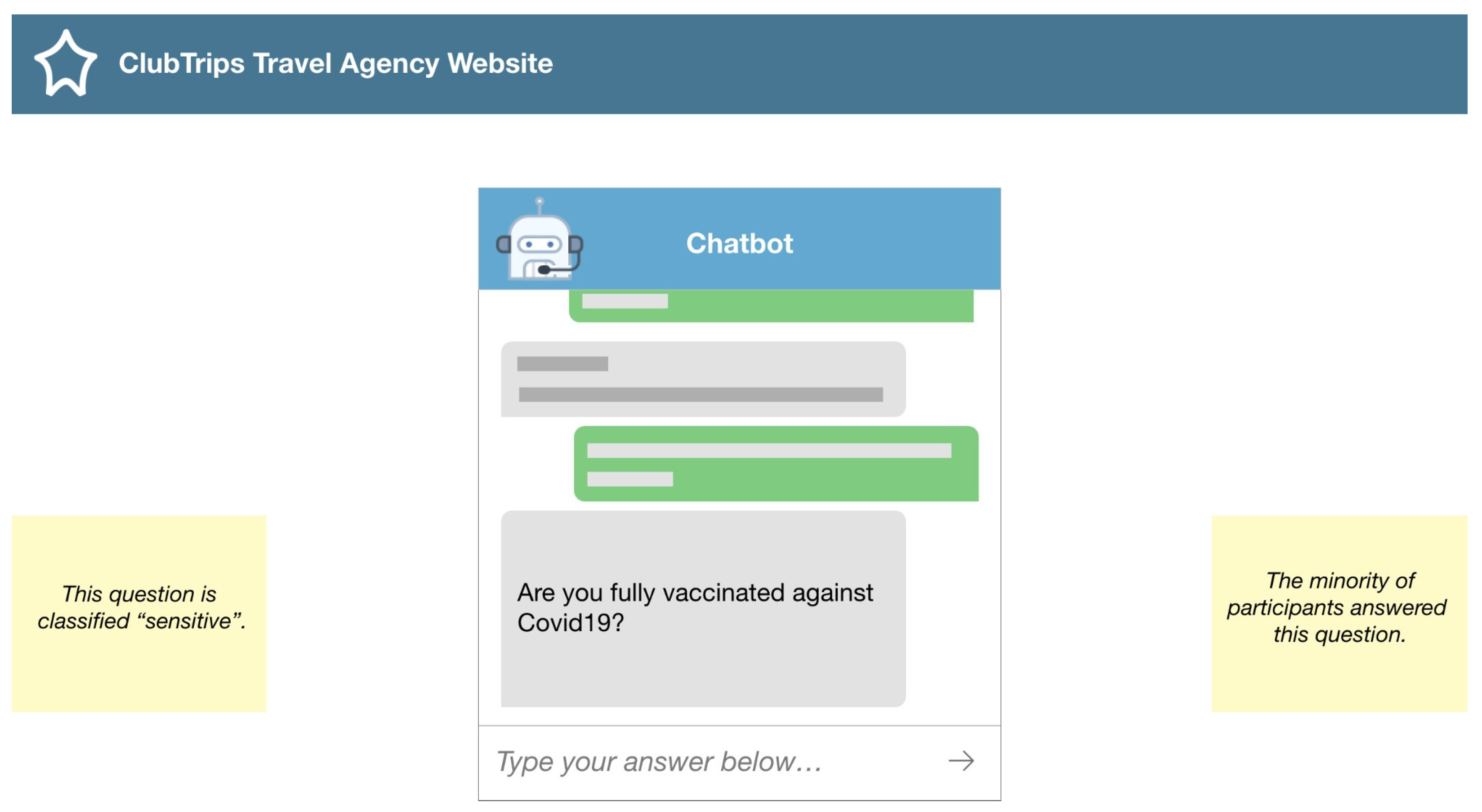

3.2. Stimuli Development

3.2.1. Chatbot Interface

3.2.2. Question Sensitivity (Pre-Test)

3.2.3. Sensitivity Signal

3.2.4. Social Proof

3.3. Lab Experiment

3.3.1. Participants

3.3.2. Procedure

3.3.3. Measures

4. Results

4.1. Results

4.1.1. Manipulation Check

4.1.2. Descriptive Statistics

4.2. Hypotheses Testing

4.2.1. Effect of Question Sensitivity on Information Disclosure (H1)

4.2.2. Effect of Information Disclosure Nudges on Information Disclosure (H2 and H3)

4.2.3. Effect of Emotional Response (H4 to H6)

5. Discussion

5.1. Main Findings

5.2. Theoretical Contributions

5.3. Managerial Implications

5.4. Limitations and Research Avenues

6. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

Appendix A

| Question | Context | Sensitivity Level |
|---|---|---|
| How many years of work experience do you have? | Career | Low |
| What country do you currently live in? | Career | Low |
| What is your biggest strength? | Career | Low |
| What is your highest completed education level? | Career | Low |
| What languages do you speak fluently? | Career | Low |
| What high school did you go to? | Career | Low |
| What country were you born in? | Career | Low |
| Are you a hard worker or the less the better? | Career | Low |
| Do you feel like you earn enough money? | Career | High |
| Have you ever been in trouble with the law? | Career | High |
| Have you ever lied to your superior to get a day off work? | Career | High |
| Do you prioritize your professional or your personal life? | Career | High |
| Have you ever lied in a job interview or on your CV? | Career | High |
| Have you ever lied on your CV? | Career | High |
| What’s the biggest mistake you’ve made at work? | Career | High |
| Have you ever drank at work? | Career | High |
| Do you tend to be an optimist or pessimist and why? | Dating | Low |
| Do you want to have children/do you have children? | Dating | Low |
| Is intelligence or looks more important for you? | Dating | Low |
| What is your eye colour? | Dating | Low |
| What is your favorite movie? | Dating | Low |
| What is your favorite music genre? | Dating | Low |
| What is your gender? | Dating | Low |
| What is your relationship status? | Dating | Low |
| Are you religious? If so, what religion do you practice? | Dating | High |
| Do you fall in love easily? | Dating | High |
| During sex, do you take precautions against unwanted pregnancies? | Dating | High |
| During sex, do you take precautions against STDs? | Dating | High |
| Have you ever been on a date with the sole purpose of having sex with the person? | Dating | High |
| Have you ever cheated on your significant other? | Dating | High |
| How many serious relationships have you been in throughout | Dating | High |
| What is your sexual orientation? | Dating | High |
| Do you prefer sweet or savoury food? | Groceries | Low |
| Do you enjoy trying new foods? | Groceries | Low |
| Do you enjoy eating different cuisines of the world? | Groceries | Low |
| Do you always buy brand-name products? | Groceries | Low |
| Do you usually use coupons and discount while groceries shopping? | Groceries | Low |
| Do you always shop at the same grocery store? | Groceries | Low |
| How often do you shop for your groceries online? | Groceries | Low |
| Do you prefer vegetables or fruits? | Groceries | Low |
| Overall, how healthy is your diet? | Groceries | High |
| Do you track your calories? | Groceries | High |
| Do you take any supplements? | Groceries | High |
| Counting yourself, how many people live in your household? | Groceries | High |
| Do you have any allergies? | Groceries | High |
| Would you say your diet is healthier than most people’s diet? | Groceries | High |
| What is your address? | Groceries | High |
| How much do you spend on groceries per week? | Groceries | High |
| Do you play sports? | Gym | Low |
| How many cups of coffee/tea do you drink per day? | Gym | Low |
| How many glasses of water do you drink per day? | Gym | Low |
| How many hours do you practice physical activity per week? | Gym | Low |
| How many meals do you eat per day? | Gym | Low |
| What is your height (cm/feet and inches)? | Gym | Low |
| How much time per week are you willing to dedicate to personal training? | Gym | Low |
| What sports do you play? | Gym | Low |
| How many cigarettes do you smoke per week? | Gym | High |
| How many glasses of alcohol do you drink per week? | Gym | High |
| How much do you weight (kg/lbs)? | Gym | High |
| What is one thing you would like to change about yourself (physically or mentally)? | Gym | High |
| Do you experience binge eating episodes (uncontrollable eating of large amounts of food) | Gym | High |
| How often do you think you feel too much stress? | Gym | High |
| Do you have a stressful lifestyle? | Gym | High |
| Have you ever been told by a physician that you have a metabolic disease (e.g., heart disease, high blood pressure)? | Gym | High |
| Do you always read the terms and conditions before checking the box? | Insurance | Low |
| Do you have a car? | Insurance | Low |
| Do you have any pets? | Insurance | Low |
| Do you have renters/homeowners insurance? | Insurance | Low |
| How old are you? | Insurance | Low |
| What is your current occupation? | Insurance | Low |
| What is your phone model? | Insurance | Low |
| Do you smoke? | Insurance | Low |
| Do you have more than 5000 USD in savings at this time? | Insurance | High |
| Do you pay off your credit card in full every month? | Insurance | High |
| How many credit cards do you have? | Insurance | High |
| How much do you pay on rent/mortgage per month? | Insurance | High |
| What is your current income per year? | Insurance | High |
| What is your email address? | Insurance | High |
| What is your phone number? | Insurance | High |
| Do you have an investment portfolio? | Insurance | High |
| Would you also try typical dishes—that you would normally never eat—while traveling? | Travel | Low |
| Is room service important to you? | Travel | Low |
| What type of accommodation do you prefer when travelling? | Travel | Low |
| Do you like to talk to the local people when you travel? | Travel | Low |
| What modes of transportation do you prefer to use when you travel? | Travel | Low |
| Have you ever traveled abroad? | Travel | Low |
| Which country would you most like to visit? | Travel | Low |
| What is your dream destination for a vacation? | Travel | Low |
| Are you fully vaccinated against Covid19? | Travel | High |
| Which countries, regions, or cities irritate you the most and why? | Travel | High |
| What would you never do on your travels and why? | Travel | High |
| How much money do you typically spend per day while travelling? | Travel | High |
| Would you feel insecure if you were to travel alone? | Travel | High |
| Are there regions that you would never want to visit and why? | Travel | High |
| Is there a legal reason why you could not travel to a specific country? | Travel | High |


| Variable | Item | Scale | Source |
|---|---|---|---|
| Question sensitivity | Rank the sensitivity of each question the chatbot asks you | 7-point Likert scale from “Extremely general” to “Extremely sensitive” | Developed by the researchers |
| Question relevance | Rank the relevance of each question to the context | 7-point Likert scale from “Extremely irrelevant” to “Extremely relevant” | Developed by the researchers |

| Context | Question Sensitivity Comparison | N (Low) | Mean (Low) | Std. (Low) | N (High) | Mean (High) | Std. (High) | p-Value |
|---|---|---|---|---|---|---|---|---|
| Career | Low vs. High | 8 | 2.82 | 0.44 | 8 | 4.76 | 0.46 | <0.0001 |
| Dating | Low vs. High | 8 | 2.84 | 0.62 | 8 | 4.94 | 0.67 | <0.0001 |
| Grocery | Low vs. High | 8 | 2.78 | 0.21 | 8 | 4.20 | 0.31 | <0.0001 |
| Gym | Low vs. High | 8 | 2.88 | 0.25 | 8 | 4.51 | 0.33 | <0.0001 |
| Insurance | Low vs. High | 8 | 3.36 | 0.41 | 8 | 4.74 | 0.26 | <0.0001 |
| Travel | Low vs. High | 8 | 2.91 | 0.13 | 8 | 4.58 | 0.57 | <0.0001 |
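The pre-test comparison above can be checked from the summary statistics alone. The sketch below assumes a Welch (unequal-variance) two-sample t-test and treats N = 8 in each column as the number of questions rated at each sensitivity level; the appendix does not state which test produced the reported p-values, so this is an illustration rather than the authors' procedure. The helper name `welch_t` is hypothetical.

```python
import math


def welch_t(mean_lo, sd_lo, n_lo, mean_hi, sd_hi, n_hi):
    """Welch's t statistic (high minus low) and Welch-Satterthwaite degrees of freedom."""
    v_lo = sd_lo ** 2 / n_lo  # squared standard error of the low-sensitivity mean
    v_hi = sd_hi ** 2 / n_hi  # squared standard error of the high-sensitivity mean
    t = (mean_hi - mean_lo) / math.sqrt(v_lo + v_hi)
    df = (v_lo + v_hi) ** 2 / (v_lo ** 2 / (n_lo - 1) + v_hi ** 2 / (n_hi - 1))
    return t, df


# (mean_low, sd_low, mean_high, sd_high) transcribed from the pre-test table; N = 8 each
PRETEST = {
    "Career": (2.82, 0.44, 4.76, 0.46),
    "Dating": (2.84, 0.62, 4.94, 0.67),
    "Grocery": (2.78, 0.21, 4.20, 0.31),
    "Gym": (2.88, 0.25, 4.51, 0.33),
    "Insurance": (3.36, 0.41, 4.74, 0.26),
    "Travel": (2.91, 0.13, 4.58, 0.57),
}

results = {}
for context, (m_lo, s_lo, m_hi, s_hi) in PRETEST.items():
    t, df = welch_t(m_lo, s_lo, 8, m_hi, s_hi, 8)
    results[context] = (t, df)
    print(f"{context:<10} t = {t:5.2f}, df = {df:4.1f}")
```

All six t statistics are large (above 6), which is consistent with the very small p-values reported; an exact p-value would additionally require the t distribution's tail probability.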

| Source | Degrees of Freedom (DF) | Sum of Squares (SS) | Mean Square (MS) | F-Stat | p-Value |
|---|---|---|---|---|---|
| Between Groups | 5 | 1.8546 | 0.3709 | 2.5645 | 0.041 |
| Within Groups | 42 | 6.0746 | 0.1446 | | |
| Total | 47 | 7.9291 | | | |

| Source | Degrees of Freedom (DF) | Sum of Squares (SS) | Mean Square (MS) | F-Stat | p-Value |
|---|---|---|---|---|---|
| Between Groups | 5 | 2.6537 | 0.5307 | 2.5544 | 0.042 |
| Within Groups | 42 | 8.7265 | 0.2078 | | |
| Total | 47 | 11.3802 | | | |
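The two ANOVA tables above follow the usual one-way layout: each mean square is SS/DF and the F statistic is MS(between)/MS(within). The degrees of freedom (5 between, 42 within, 47 total) are consistent with six contexts of eight questions each. The minimal sketch below recomputes the mean squares and F values from the reported SS and DF; the function name `one_way_anova_f` is our own.

```python
def one_way_anova_f(ss_between, df_between, ss_within, df_within):
    """Mean squares and F statistic from a one-way ANOVA table's SS and DF."""
    ms_between = ss_between / df_between
    ms_within = ss_within / df_within
    return ms_between, ms_within, ms_between / ms_within


# (SS_between, DF_between, SS_within, DF_within) transcribed from the two ANOVA tables
TABLES = {
    "First ANOVA table": (1.8546, 5, 6.0746, 42),
    "Second ANOVA table": (2.6537, 5, 8.7265, 42),
}

anova_results = {}
for label, (ssb, dfb, ssw, dfw) in TABLES.items():
    msb, msw, f = one_way_anova_f(ssb, dfb, ssw, dfw)
    anova_results[label] = (msb, msw, f)
    print(f"{label}: MS_between = {msb:.4f}, MS_within = {msw:.4f}, F({dfb}, {dfw}) = {f:.4f}")
```

If SciPy is available, the corresponding p-value is the upper tail of the F(5, 42) distribution, e.g. `scipy.stats.f.sf(f, 5, 42)`.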

| Variable | Response | Response Frequency | Response Count |
|---|---|---|---|
| Gender | Man | 47% | 9 |
| | Woman | 53% | 10 |
| | Non-binary/agender/other | 0% | 0 |
| Birth country | Canada | 53% | 10 |
| | France | 5% | 1 |
| | Japan | 5% | 1 |
| | Iran | 5% | 1 |
| | Mexico | 5% | 1 |
| | Morocco | 11% | 2 |
| | Turkey | 5% | 1 |
| | South Korea | 5% | 1 |
| | United States | 5% | 1 |
| Occupation | Full-time worker | 11% | 2 |
| | Student | 84% | 16 |
| | Both | 5% | 1 |
| Age | Mean | 26.16 | |
| | Median | 26 | |
| | Mode | 23 and 30 | |
| | Std. deviation | 3.06 | |
| | Minimum | 22 | |
| | Maximum | 31 | |
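The percentages in the demographics table follow from the response counts, which sum to 19 participants for every categorical variable. The short sketch below, whose names are illustrative, derives the rounded percentages from the transcribed counts.

```python
# Respondent counts transcribed from the demographics table
COUNTS = {
    "Gender": {"Man": 9, "Woman": 10, "Non-binary/agender/other": 0},
    "Birth country": {
        "Canada": 10, "France": 1, "Japan": 1, "Iran": 1, "Mexico": 1,
        "Morocco": 2, "Turkey": 1, "South Korea": 1, "United States": 1,
    },
    "Occupation": {"Full-time worker": 2, "Student": 16, "Both": 1},
}


def percentages(counts):
    """Return (percentage per category rounded to whole numbers, total count)."""
    total = sum(counts.values())
    return {category: round(100 * n / total) for category, n in counts.items()}, total


for variable, counts in COUNTS.items():
    pct, total = percentages(counts)
    print(f"{variable} (N = {total}): {pct}")
```

Rounding each share to a whole percentage is why the birth-country percentages add up to 99% rather than 100%.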

| Construct | Definition | Measure | Source |
|---|---|---|---|
| Visual attention | Duration (in seconds) of fixations on each area of interest (i.e., sensitivity signal and social proof) | Seconds | Eye tracker, Tobii Pro Lab (Danderyd, Stockholm, Sweden) |
| Emotional response | Level of arousal | Phasic EDA | Biopac Inc. (Goleta, CA, USA) |
| Information disclosure | Response rate | Answer vs. no answer to the question | Developed by the researchers |
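Visual attention is defined above as the duration of fixations on each area of interest (AOI). A minimal sketch of that aggregation follows, assuming a simplified, hypothetical record format of `(aoi, duration_in_seconds)` pairs; it is not the export schema of Tobii Pro Lab, which computes such metrics itself, and the AOI labels are placeholders.

```python
from collections import defaultdict

# Hypothetical fixation records for one participant and one chatbot question:
# (area_of_interest, fixation_duration_in_seconds). Real eye-tracker exports differ.
fixations = [
    ("sensitivity_signal", 0.21),
    ("social_proof", 0.35),
    ("question_text", 0.40),
    ("sensitivity_signal", 0.18),
    ("social_proof", 0.12),
]


def total_fixation_duration(records, areas_of_interest):
    """Sum fixation durations (s) per area of interest, ignoring all other regions."""
    totals = defaultdict(float)
    for aoi, duration in records:
        if aoi in areas_of_interest:
            totals[aoi] += duration
    # Report every requested AOI, including any that were never fixated (0.0 s)
    return {aoi: totals[aoi] for aoi in areas_of_interest}


visual_attention = total_fixation_duration(fixations, ["sensitivity_signal", "social_proof"])
print(visual_attention)
```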

| Sensitivity Signal | Question Sensitivity | No Social Proof | Low Social Proof | High Social Proof |
|---|---|---|---|---|
| No sensitivity signal | Low-sensitivity questions | 96.7 ± 17.9 | 95.6 ± 20.6 | 100.0 ± 0.0 |
| | High-sensitivity questions | 94.0 ± 22.6 | 84.4 ± 36.3 | 93.6 ± 24.7 |
| With sensitivity signal | Low-sensitivity questions | 100.0 ± 0.0 | 98.8 ± 11.0 | 99.2 ± 8.7 |
| | High-sensitivity questions | 95.9 ± 20.0 | 93.5 ± 24.7 | 84.2 ± 36.4 |
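Information disclosure is measured as answer vs. no answer to each question. The mean ± SD pairs in the table above are broadly consistent with per-question indicators coded 100 (answered) or 0 (not answered): for such 0/100 data the population SD equals 100·√(p(1 − p)), so, for example, 84.4 pairs with roughly 36.3. The sketch below illustrates this coding on a hypothetical cell; the data and the function name are invented for illustration and do not reproduce any cell of the table.

```python
import math
from statistics import mean, pstdev


def response_rate_summary(answered):
    """Mean and population SD of per-question disclosure indicators coded 0 or 100."""
    scores = [100.0 if a else 0.0 for a in answered]
    return mean(scores), pstdev(scores)


# Hypothetical cell: 30 question presentations, 29 answered and 1 left unanswered
answered = [True] * 29 + [False]
m, sd = response_rate_summary(answered)

# For 0/100 indicators the population SD reduces to 100 * sqrt(p * (1 - p))
p = m / 100
assert math.isclose(sd, 100 * math.sqrt(p * (1 - p)))
print(f"{m:.1f} ± {sd:.1f}")
```

Using `statistics.stdev` (sample SD) instead of `pstdev` would give slightly larger values for small cells.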

| Sensitivity Signal | Question Sensitivity | No Social Proof | Low Social Proof | High Social Proof |
|---|---|---|---|---|
| No sensitivity signal | Low-sensitivity questions | 9.932 ± 4.936 | 9.352 ± 4.642 | 10.499 ± 5.288 |
| | High-sensitivity questions | 10.372 ± 5.334 | 9.118 ± 4.849 | 10.101 ± 4.403 |
| With sensitivity signal | Low-sensitivity questions | 10.633 ± 6.003 | 8.970 ± 4.935 | 8.871 ± 5.096 |
| | High-sensitivity questions | 10.269 ± 5.177 | 8.972 ± 5.043 | 10.232 ± 5.147 |

| Nudge Comparison | Question Sensitivity | Estimate | Std. Error | DF | t-Value | One-Tailed p-Value | Hypothesis |
|---|---|---|---|---|---|---|---|
| Sensitivity signal: Present vs. Absent | Low | 1.47 | 0.80 | 1774 | 1.84 | 0.0334 | H2a |
| | High | −1.03 | 0.85 | 1774 | −1.21 | 0.1142 | H2b |
| Social proof: Low vs. High | Low | −1.29 | 1.15 | 1772 | −1.13 | 0.1301 | H3 |
| | High | −1.32 | 1.5 | 1770 | −1.14 | 0.1269 | H3 |
| Social proof: Low vs. None | Low | −0.75 | 0.73 | 1772 | −1.03 | 0.1519 | - |
| | High | −0.38 | 0.82 | 1772 | −0.47 | 0.6418 | - |
| Social proof: High vs. None | Low | 1.14 | 1.17 | 1772 | 0.98 | 0.1637 | - |
| | High | −1.67 | 1.23 | 1772 | −1.36 | 0.3248 | - |

| Nudge Comparison | Question Sensitivity | Estimate | Std. Error | DF | t-Value | One-Tailed p-Value | Hypothesis |
|---|---|---|---|---|---|---|---|
| Sensitivity signal: Present vs. Absent | Low | −0.13 | 0.15 | 1728 | −0.86 | 0.1948 | H5a |
| | High | −0.03 | 0.21 | 1728 | −0.13 | 0.0511 | H5b |
| Social proof: Low vs. High | Low | −0.12 | 0.26 | 1726 | 0.44 | 0.1717 | H6 |
| | High | −0.11 | 0.26 | 1724 | 0.43 | 0.1651 | H6 |
| Social proof: Low vs. None | Low | −0.59 | 0.18 | 1726 | −3.28 | 0.0006 | - |
| | High | −0.20 | 0.25 | 1726 | −0.77 | 0.2196 | - |
| Social proof: High vs. None | Low | 0.03 | 0.18 | 1726 | 0.18 | 0.4281 | - |
| | High | −0.08 | 0.25 | 1726 | −0.31 | 0.1231 | - |
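In the contrast tables above, each t-value is the estimate divided by its standard error. Because the degrees of freedom are large (about 1,700 or more), the t distribution is practically normal, so the sketch below uses a normal approximation for the one-tailed p-value, taken in the direction of the observed estimate, i.e., 1 − Φ(|t|). This is an illustrative assumption, not the authors' computation; the reported values come from their model output and may differ from this arithmetic because of rounding. The helper name `contrast_test` is hypothetical.

```python
from statistics import NormalDist


def contrast_test(estimate, std_err):
    """t = estimate / SE and a one-tailed p-value via the normal approximation to t."""
    t = estimate / std_err
    p_one_tailed = 1.0 - NormalDist().cdf(abs(t))
    return t, p_one_tailed


# Two rows transcribed from the contrast tables (estimate, standard error)
EXAMPLES = {
    "First table, sensitivity signal present vs. absent, low sensitivity (H2a)": (1.47, 0.80),
    "Second table, social proof low vs. none, low sensitivity": (-0.59, 0.18),
}

for label, (est, se) in EXAMPLES.items():
    t, p = contrast_test(est, se)
    print(f"{label}: t = {t:.2f}, one-tailed p = {p:.4f}")
```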

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Carmichael, L.; Poirier, S.-M.; Coursaris, C.K.; Léger, P.-M.; Sénécal, S. Users’ Information Disclosure Behaviors during Interactions with Chatbots: The Effect of Information Disclosure Nudges. Appl. Sci. 2022, 12, 12660. https://doi.org/10.3390/app122412660
