Abstract

The learning-based model predictive control (LB-MPC) is an effective and critical method to solve the path tracking problem in mobile platforms under uncertain disturbances. It is well known that the machine learning (ML) methods use the historical and real-time measurement data to build data-driven prediction models. The model predictive control (MPC) provides an integrated solution for control systems with interactive variables, complex dynamics, and various constraints. The LB-MPC combines the advantages of ML and MPC. In this work, the LB-MPC technique is summarized, and the application of path tracking control in mobile platforms is discussed by considering three aspects, namely, learning and optimizing the prediction model, the controller design, and the controller output under uncertain disturbances. Furthermore, some research challenges faced by LB-MPC for path tracking control in mobile platforms are discussed.

1. Introduction

Path tracking control is a core technique in autonomous driving. It steers mobile platforms, such as vehicles and robots, along a given reference path while minimizing the lateral and heading errors [1,2,3]. With the development of control theory [4,5], various advanced control algorithms [6,7,8,9] have been adopted for path tracking control, including feedback linearization control [10], sliding mode control [11], optimal control [12], and intelligent control [13,14]. Feedback linearization control is simple to design and has good response characteristics. Similarly, sliding mode control offers fast response and strong robustness. Within optimal control, the model predictive control (MPC) method can explicitly handle system constraints and extends to multi-input multi-output systems [15]. When a reference state is introduced, the changing trend of the reference path can be incorporated into MPC. Robust [16] and stochastic [17] model predictive control are the main methods for dealing with uncertain systems [18]. Intelligent control achieves better control through self-learning, self-adaptation, and self-organization, and its performance depends on the control framework of the adopted control method [19].

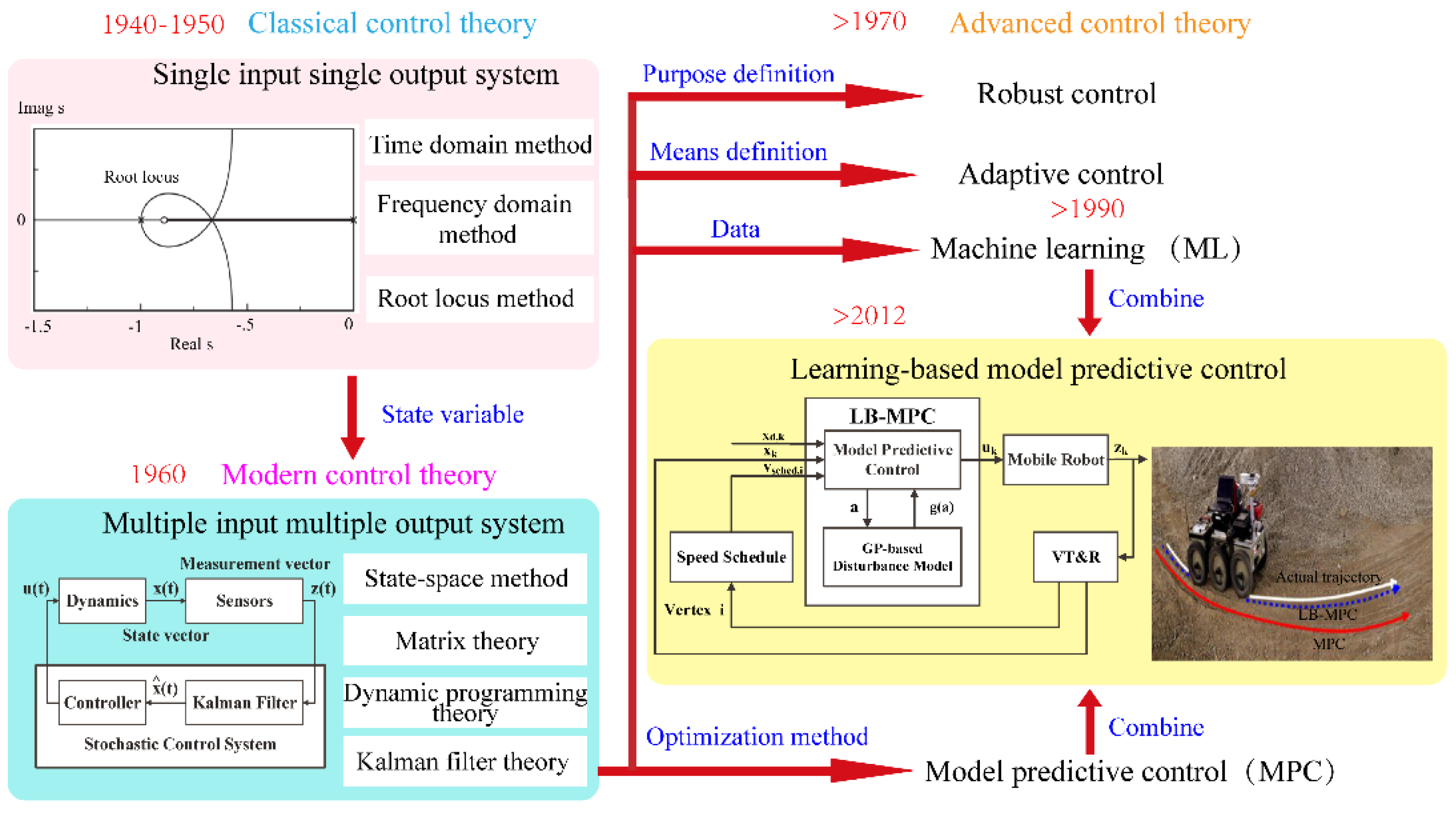

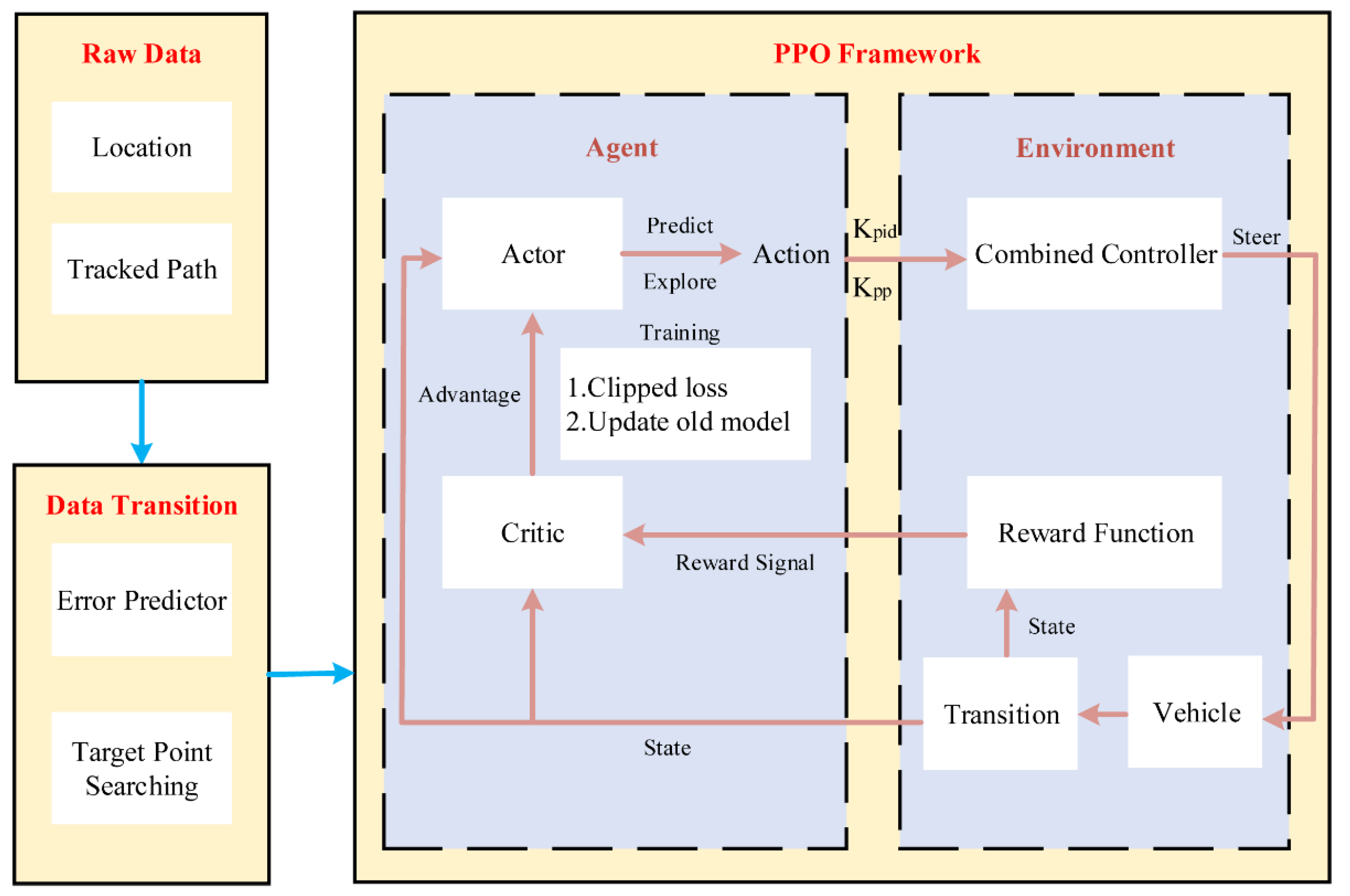

Under uncertain environmental disturbances, the aforementioned methods are not fully effective in meeting the operational requirements of path tracking control. Especially in high-speed driving, complex curvature variations pose a major challenge to the path tracking performance of mobile platforms. The nonlinear motion of the mobile platforms and the complex variability of road conditions require that the control system achieve the established goals intelligently while remaining capable of real-time control. The combination of machine learning (ML) and MPC has better performance in path tracking control. In [20,21], learning-based model predictive control (LB-MPC) was applied to path tracking control in mobile platforms: during flight, a quadrotor used a dual extended Kalman filter to learn its model uncertainties, while MPC solved the optimal control problem. The LB-MPC technique rigorously combines statistics and learning with control engineering while providing guarantees about safety, robustness, and convergence. It handles system constraints, optimizes performance with respect to a cost function, uses statistical identification tools to learn model uncertainties, and provably converges. Afterwards, the LB-MPC method was studied further [22,23,24], and different schemes were designed [25,26]. Various ML techniques have been explored and applied to MPC, such as regression learning [27], reinforcement learning [28], and deep learning [29,30]. In [31], LB-MPC is reviewed from the perspective of security control, and the current applications of LB-MPC in the control field are discussed. The LB-MPC method has been demonstrated to yield competitive high-performance control systems and has the potential to reduce modeling and engineering effort in controller design. The development process of LB-MPC is shown in Figure 1.

Figure 1.

The development process of LB-MPC [4,5,6,7,8,9,32].

The paper is organized as follows. Section 2 summarizes the LB-MPC technique that combines the advantages of ML and MPC. Section 3 introduces the application of path tracking control in mobile platforms by considering three aspects. Section 4 discusses some research challenges faced by LB-MPC. Finally, Section 5 concludes this paper regarding the learning-based model predictive control technique and its application in the field of mobile platforms for path tracking control.

2. Overview of Learning-Based Model Predictive Control

2.1. Model Predictive Control

Currently, MPC is a well-known and standard technique for implementing constrained and multivariable control in process industries [33,34]. It provides an integrated solution for path tracking control in mobile platforms [35,36,37]. Unlike other optimal control methods, MPC employs a receding horizon technique that recomputes the optimal control strategy at each control interval, which prevents control errors from accumulating. In mobile platforms, MPC first establishes a prediction model. Then, the possible future states of the mobile platform are predicted by combining its current state with the feasible control inputs. Finally, the state closest to the reference state is obtained by optimizing the cost function, and the corresponding control input is applied. MPC aims to achieve optimality over a long period of time on the basis of short-term optimization. This process involves three major steps, namely, model-based prediction, rolling optimization, and feedback correction. The combination of prediction and optimization is the main difference between MPC and other control methods.
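The three steps named above can be illustrated with a minimal sketch. It is not taken from any of the cited works: the unicycle-style error model relative to a straight reference path, the candidate curvature set, the horizon length, and the cost weights are all assumptions chosen for illustration, and the exhaustive enumeration of input sequences stands in for a real optimizer.

```python
import itertools
import math


def step(state, curvature, v=1.0, dt=0.1):
    """Hypothetical prediction model: lateral error y and heading error psi
    of a unicycle relative to the straight reference path y = 0."""
    y, psi = state
    return (y + v * math.sin(psi) * dt, psi + v * curvature * dt)


def mpc_control(state, candidates, horizon=4):
    """Prediction + rolling optimization: roll the model out for every
    candidate input sequence and return the first input of the cheapest one."""
    best_cost, best_u0 = float("inf"), 0.0
    for seq in itertools.product(candidates, repeat=horizon):
        s, cost = state, 0.0
        for u in seq:
            s = step(s, u)
            # Quadratic penalty on lateral error, heading error, and input.
            cost += s[0] ** 2 + 0.5 * s[1] ** 2 + 0.01 * u ** 2
        if cost < best_cost:
            best_cost, best_u0 = cost, seq[0]
    return best_u0


def simulate(n_steps=150):
    """Feedback correction: only the first input is applied, then the state
    is re-read and the optimization is repeated (receding horizon)."""
    state = (1.0, 0.0)  # 1 m lateral error, zero heading error
    candidates = (-1.0, -0.5, 0.0, 0.5, 1.0)  # feasible curvatures (assumed)
    history = [state]
    for _ in range(n_steps):
        u = mpc_control(state, candidates)
        state = step(state, u)  # the plant equals the model in this sketch
        history.append(state)
    return history


history = simulate()
```

In this sketch the plant and the prediction model coincide, so the feedback step only closes the loop; with a mismatched plant, the same loop is where measurement-based correction would enter.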

However, the MPC needs to consider the perception and response to the time-varying characteristics of a system that are caused by the uncertain disturbances. The dynamic characteristics of a system increase the uncertainty, thus affecting the performance of MPC [38,39]. In practice, model descriptions can be subject to considerable uncertainty, originating, e.g., from insufficient data, restrictive model classes, or the presence of external disturbances. Therefore, it is necessary to actively learn the uncertainties in a system and incorporate regular adaption for preserving the performance of MPC in uncertain systems.

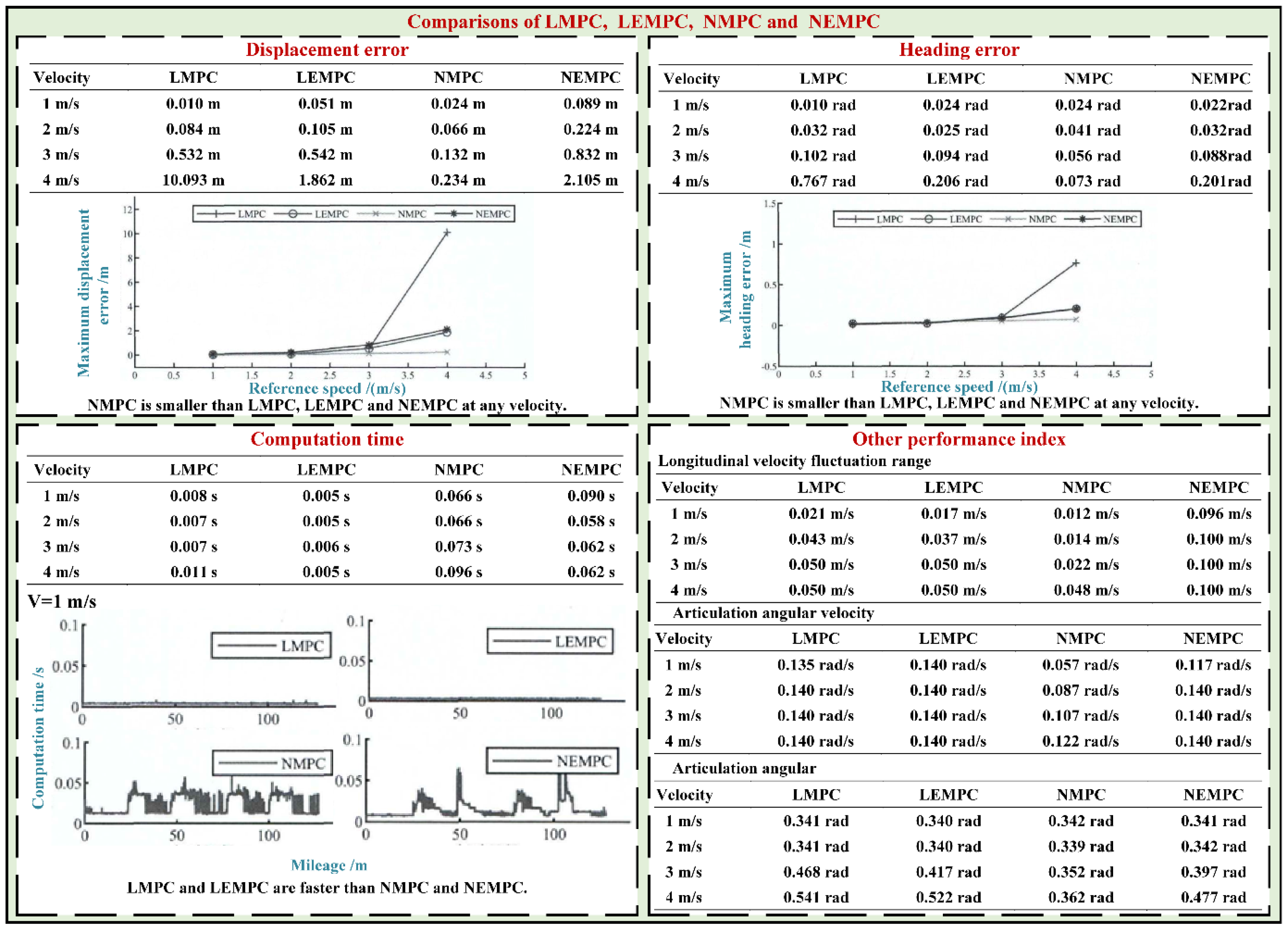

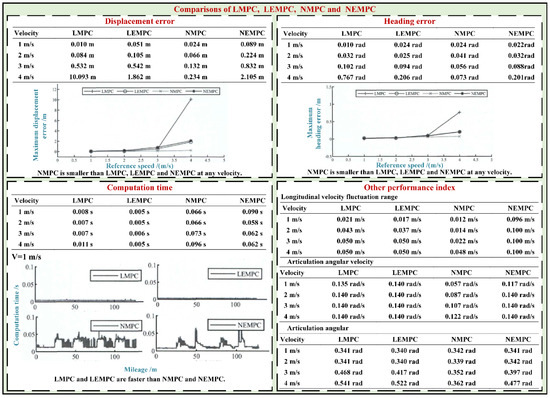

The MPC for path tracking control in mobile platforms can be divided into linear model predictive control (LMPC), linear error model predictive control (LEMPC), nonlinear model predictive control (NMPC), and nonlinear error model predictive control (NEMPC). Figure 2 compares articulated vehicle path tracking controllers based on LMPC, LEMPC, NMPC, and NEMPC when tracking a path with complex curvature variation [40]. The simulation results show that the NMPC controller outperforms the LMPC, LEMPC, and NEMPC controllers. The articulated vehicle path tracking controller based on NMPC performs well in terms of stability and accuracy. However, the NMPC controller needs further optimization with respect to real-time performance. Therefore, NMPC controllers are usually used for path tracking of robots [41]. Additionally, the overall efficiency of the algorithm can be improved through the choice of prediction model, cost function, and minimization algorithm.

Figure 2.

Comparisons of LMPC, LEMPC, NMPC, and NEMPC [40].

MPC can deal with general nonlinear dynamics, hard state and input constraints, and general cost functions. Because of its high computational complexity, solving the online optimization problem efficiently has become a research trend in MPC. Especially in the cases of complex physical models, numerous optimization variables, or high sampling frequency, the online optimization becomes more difficult. Moreover, an MPC controller is usually configured in advance and is difficult to adjust in accordance with the situation. The availability of increasing computational power and sensing and communication capabilities, as well as advances in the field of machine learning, has given rise to a renewed interest in automating controller design and adaptation based on data collected during operation, e.g., for improved performance, facilitated deployment, and a reduced need for manual controller tuning.

2.2. Machine Learning

Recent successes in the field of ML, as well as the availability of increased sensing and computational capabilities in modern control systems, have led to a growing interest in learning and data-driven control techniques. The ML techniques have been widely used to solve complex engineering problems, such as object classification, object detection, and numerical prediction [42,43]. The ML techniques build statistical models on the basis of the training data. The resulting models are then used to predict and analyze new data on the basis of the data-driven controller [44].

Among ML techniques, neural networks have shown great success in regression problems. Additionally, neural network-based modeling outperforms other data-driven modeling methods due to its ability to implicitly detect complex nonlinearities and the availability of multiple training algorithms. In addition, ensemble learning, a machine learning paradigm that trains multiple models for the same problem, has been gaining increasing attention in recent years. By taking advantage of multiple learning algorithms or various training datasets, ensemble learning provides better predictive performance than a single machine learning model. ML techniques offer sophisticated tools for highly automated inference of prediction models from data.

The data-driven methods are effective for solving uncertain problems. During the prediction process, an uncertain model is built on the basis of the uncertain data caused by unexpected disturbances and estimation error. Then, the data-driven methods automatically use the rich information embedded in the uncertain data to make intelligent and data-driven decisions [45]. The data-driven methods are also used in the optimal design of the controller. They play an important role in learning and optimizing for the control precision and real-time response.

Iterative learning control (ILC) improves the control input of the current iteration by using the data obtained during the previous iteration [46]. As the number of iterations increases, the tracking error decreases. Therefore, ILC is widely used in ML prediction. However, ILC is a model-based control method that requires high model accuracy. For this reason, learning-based prediction models are built by combining data-driven methods with ILC [47]. The controller design then depends only on the input and output data of the system and is not affected by the accuracy of the model. This combination improves the prediction performance of the controller.
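The iteration-to-iteration improvement described for ILC can be sketched with a simple P-type update law, in which the input of the next trial is the previous input plus a gain times the previous tracking error. The first-order plant, its coefficients, the learning gain, and the sinusoidal reference below are assumptions made for illustration only; they are not taken from [46,47].

```python
import math


def run_trial(u, a=0.3, b=1.0):
    """Simulate one trial of the assumed plant y[t+1] = a*y[t] + b*u[t], y[0] = 0."""
    y = [0.0]
    for u_t in u:
        y.append(a * y[-1] + b * u_t)
    return y


def ilc(reference, n_iterations=20, gain=0.8):
    """P-type ILC: u_{k+1}[t] = u_k[t] + gain * e_k[t+1]."""
    n = len(reference) - 1
    u = [0.0] * n
    max_errors = []
    for _ in range(n_iterations):
        y = run_trial(u)
        e = [reference[t] - y[t] for t in range(n + 1)]
        # Record the largest tracking error of this trial.
        max_errors.append(max(abs(v) for v in e[1:]))
        # The input at time t influences the output at t+1, hence the shift.
        u = [u[t] + gain * e[t + 1] for t in range(n)]
    return u, max_errors


reference = [math.sin(0.2 * t) for t in range(31)]
u_learned, max_errors = ilc(reference)
```

With these assumed values the error recursion is a contraction from one trial to the next, so the maximum tracking error shrinks with every iteration. Note that the update uses only recorded inputs and outputs, not the plant coefficients.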

In learning-based prediction methods, the uncertain disturbances in the mobile platforms are usually modeled as a Gaussian process (GP). The GP uses an inherent description function to estimate uncertainty [48]. The GP is a function of the system state, input, and other related variables, and it is updated on the basis of the data collected in previous experiments. Notably, the GP is also used to represent the dynamics of traditional systems [49]. On this basis, GP models can be used to model the complex motion modes of moving objects and compute the probability distribution over the different motion modes. Afterwards, the path can be divided into different GP components to realize accurate and efficient position prediction [50]. This method does not require a large number of parameters in arithmetic operations, and the position information in various motion modes can be obtained from the probabilistic and statistical distribution characteristics of the data itself. The learning-based prediction methods adapt to various complex road conditions and improve the path tracking control performance in the mobile platforms.
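A minimal sketch of GP regression for a disturbance that depends on a single state variable is given below. The squared-exponential kernel, its hyperparameters, the noise level, and the synthetic disturbance data are assumptions for illustration; they are not the settings used in [48,49,50]. The sketch returns both a predicted mean and a predictive variance, which is what allows the GP to report how uncertain it is away from the collected data.

```python
import numpy as np


def rbf_kernel(a, b, length_scale=1.0, signal_var=1.0):
    """Squared-exponential kernel between two 1-D arrays of inputs."""
    d = a[:, None] - b[None, :]
    return signal_var * np.exp(-0.5 * (d / length_scale) ** 2)


def gp_posterior(x_train, y_train, x_test, noise_var=1e-4):
    """Posterior mean and variance of a zero-mean GP at the test inputs."""
    K = rbf_kernel(x_train, x_train) + noise_var * np.eye(len(x_train))
    K_s = rbf_kernel(x_train, x_test)
    L = np.linalg.cholesky(K)
    # alpha = K^{-1} y, computed through the Cholesky factor.
    alpha = np.linalg.solve(L.T, np.linalg.solve(L, y_train))
    mean = K_s.T @ alpha
    v = np.linalg.solve(L, K_s)
    var = np.diag(rbf_kernel(x_test, x_test)) - np.sum(v ** 2, axis=0)
    return mean, var


# Synthetic (hypothetical) disturbance samples as a function of one state.
x_train = np.linspace(-3.0, 3.0, 13)
y_train = 0.5 * np.sin(x_train)

# One query inside the sampled region, one far outside it.
x_test = np.array([0.25, 6.0])
mean, var = gp_posterior(x_train, y_train, x_test)
```

The query inside the sampled region gets a small predictive variance, while the query far from any data reverts toward the prior variance.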

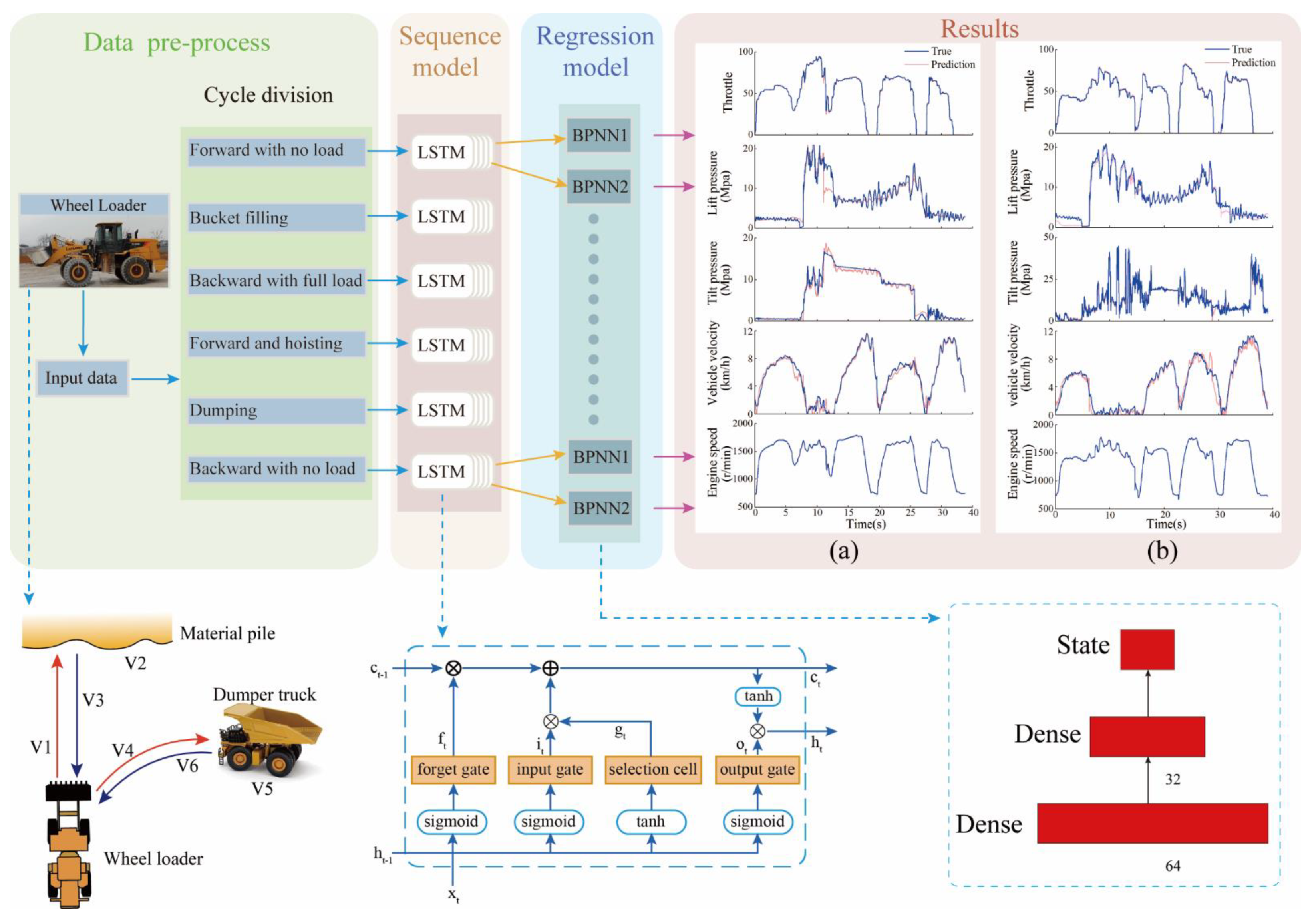

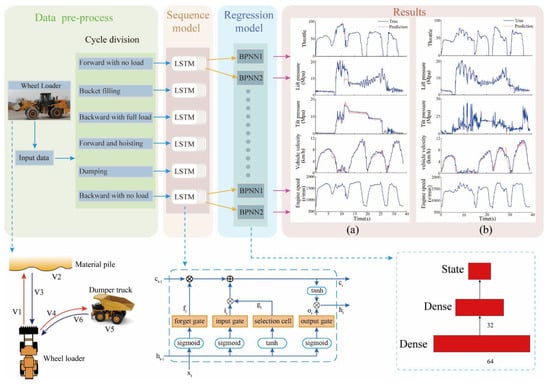

Because learning-based prediction methods adapt better to uncertain disturbances, a deep learning-based approach was proposed in previous work to accurately predict the throttle and state on the basis of the driving data of experienced operators [51]. The driving data come from field tests of an experimental wheel loader equipped with sensors and GPS. Considering the time series characteristics of the process, long short-term memory (LSTM) networks are used to extract features. The driving data are manually divided into six stages, and six LSTM networks are used for feature extraction, one per stage. The predictions of throttle and state share the same LSTM weights in order to reduce the computational complexity. Two backward-propagation neural networks (BPNNs) following the LSTM perform the regression and produce the prediction results; two networks are used because the throttle is controlled by the driver, whereas the state of the wheel loader is randomly influenced by the environment. The prediction results at different stages are output by neural networks with different parameters to improve the prediction accuracy. Wheel loaders work on different material piles during operation. Thus, in the previous work, two different material piles were used to study the adaptability of the prediction model. Figure 3 presents the flowchart of the learning-based prediction method.

Figure 3.

The flowchart of learning-based prediction method to accurately predict throttle and status for wheel loaders [51].

2.3. Learning-Based Model Predictive Control

The MPC method provides an integrated solution for control systems with interactive variables, complex dynamics, and constraints, but it relies on the accuracy of the physical model [52]. To improve the control performance, more complex models are usually built and nonlinear optimization techniques are used. The ML methods make predictions in a data-driven way [53,54]. They build statistical models on the basis of training data without model identification and use historical data to derive the control strategy [55,56,57]. The ILC is applied to improve the predictive performance of the controller [58]. Theoretically, the prediction ability of ML improves continuously as valid data accumulate. However, the prediction performance of ML depends significantly on the amount of training data. Combining MPC and ML yields LB-MPC. Applying a learning algorithm to MPC can improve the performance of the system while guaranteeing safety, robustness, and convergence in the presence of state and control input constraints.

Most research has focused on an automatic data-based adaptation of the prediction model or uncertainty description. The feedback techniques have the ability to overcome and reduce the impact of uncertainty [59]. The LB-MPC embeds the ML method in the MPC framework to eradicate the influence of uncertain disturbances, thus improving the performance of path tracking in mobile platforms [26,60]. The LB-MPC decouples the robustness and performance requirements by employing an additional learned model and introducing it into the MPC framework along with the nominal model. The nominal model helps to ensure the closed-loop system’s safety and stability, and the learned model aims to improve the tracking behaviors. The LB-MPC effectively evaluates both the current and historical effects of uncertainties, leading to superior estimating performance compared with conventional methods.
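One way to make this decoupling explicit is the following sketch of an LB-MPC optimization problem. The notation is an assumption introduced here for illustration: $\bar{x}$ is the nominal state, $\tilde{x}$ the state of the learned model, $\mathcal{O}$ the learned correction term, $\mathcal{R}_k$ a set bounding the effect of the model error after $k$ steps, and $\ell$, $\ell_f$ the stage and terminal costs. The exact formulations in the cited works may differ.

```latex
\begin{aligned}
\min_{u_0,\dots,u_{N-1}} \quad & \ell_f(\tilde{x}_N) + \sum_{k=0}^{N-1} \ell(\tilde{x}_k, u_k) \\
\text{s.t.} \quad & \tilde{x}_{k+1} = A\tilde{x}_k + B u_k + \mathcal{O}(\tilde{x}_k, u_k)
  && \text{(learned model, enters the cost)} \\
& \bar{x}_{k+1} = A\bar{x}_k + B u_k
  && \text{(nominal model, enters the constraints)} \\
& \bar{x}_k \in \mathcal{X} \ominus \mathcal{R}_k, \quad u_k \in \mathcal{U} \\
& \tilde{x}_0 = \bar{x}_0 = x(t)
\end{aligned}
```

Because only the nominal trajectory appears in the tightened constraints, a poor learned correction can degrade tracking performance but not constraint satisfaction, provided the true model error stays within the assumed bound.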

The combination of MPC and ML in nonlinear control systems has been a focus of industrial control research and development [61]. First, the LB-MPC method is used to train the model on the basis of the input data and ML, such as the GP regression method [62,63,64,65]. Second, the MPC control strategy is generated, and the calibration of MPC control parameters is performed by directly learning from the data on the basis of ML. In order for the real-time response of the controller to be realized, the sample database is trained offline by using the deep neural network [66,67,68,69]. Afterwards, non-direct measurement and the state variables for MPC are designed on the basis of ML, such as reinforcement learning [70]. Finally, the controller output is optimized on the basis of the security control framework.

In this work, we review the application of LB-MPC for path tracking control in mobile platforms, including learning and optimizing the prediction model, the controller, and the controller output in the presence of uncertain disturbances.

3. LB-MPC in Path Tracking of Mobile Platforms

3.1. Learning and Optimizing Prediction Model

The prediction model forms the basis of path tracking control, and the accuracy of the model determines the control performance. A simplified physical model can simulate the real mobile platform and the uncertain environment only to a certain extent, so its prediction results are not very accurate [71]. To improve the control performance, the usual approach is to build complex physical models or use nonlinear optimization solvers. However, for mobile platforms operating under uncertain environmental disturbances, a complex physical model does not perform well in some situations. In addition, the physical model does not truly reflect the interaction between the platform and the environment in real time. With data-driven ML methods, the prediction model is instead adjusted on the basis of the latest data [72]. For instance, neural networks adjust their parameters on the basis of newly acquired data [73]. Reinforcement learning realizes real-time interaction between the system and the environment. GP regression assesses the uncertainty in the residual error model to adapt to the complex and dynamic working environment. The performance comparisons of the physical model, deep learning model, and reinforcement learning model are presented in Table 1.

Table 1.

The comparisons between the physical model, deep learning model, and reinforcement learning model under different evaluation parameters (ratings: none, low, medium, and high).

3.1.1. Theoretical Basis for Modeling

The prediction models of different governing equations in MPC have different effects on path tracking control. The Gaussian model considers the Gaussian distribution as the model parameter [74]. It has a better control performance when dealing with periodically time-varying disturbances. The robust model predictive control (RMPC) and stochastic model predictive control (SMPC) are commonly used in uncertain systems. RMPC is especially suitable for control systems in which stability and reliability are the primary objectives, because the dynamic characteristics of the process are known and the range of uncertain factors is predictable. Moreover, RMPC does not require an accurate process model [76,77]. On the other hand, SMPC is generally intended to guarantee stability and performance of the closed-loop system in probabilistic terms by explicitly incorporating the probabilistic description of model uncertainty into an optimal control problem [78]. The disturbance rejection model predictive control (DRMPC) is based on MPC and compensates for disturbances in real time. It tries to bring the disturbed system as close as possible to the calibrated system and to find the nominal optimal solution by using compensation methods [79]. This is the main difference between DRMPC and both RMPC and SMPC, which are designed on the basis of the upper bound of the disturbance. As a result, RMPC and SMPC are highly conservative and sacrifice some performance. The control equations of the aforementioned methods are shown in Table 2.

Table 2.

The governing equations for different models.
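As a generic illustration of how the robust and stochastic formulations discussed in this subsection differ, consider a linear system with an additive disturbance. This is a textbook-style sketch rather than a reproduction of Table 2; the symbols $w_k$, $\mathcal{W}$, $\mathcal{X}$, and $\varepsilon$ are notation assumed here.

```latex
\begin{aligned}
& x_{k+1} = A x_k + B u_k + w_k \\[4pt]
\text{RMPC:} \quad & x_k \in \mathcal{X} \quad \text{for all } w_0,\dots,w_{k-1} \in \mathcal{W}
  && \text{(worst-case constraint, bounded disturbance set } \mathcal{W}\text{)} \\
\text{SMPC:} \quad & \Pr\left[\, x_k \in \mathcal{X} \,\right] \ge 1 - \varepsilon
  && \text{(chance constraint, } w_k \text{ with known distribution)}
\end{aligned}
```

The robust constraint must hold for every admissible disturbance sequence, whereas the chance constraint tolerates violations with probability at most $\varepsilon$.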

3.1.2. Data-Driven Prediction Models

The data-driven prediction models include two parts, i.e., the nominal system model and the dynamic model composed of additional uncertainties. The nominal system model is learned on the basis of the data, and it ensures the security and stability of the closed-loop system. However, due to prior uncertainty, the experimental data do not cover the entire state space. Consequently, the prediction accuracy of the nominal model alone is not satisfactory. The uncertainty in the system arises from unmodeled nonlinearities or external disturbances and is contained in a finite set of dependent states [25]. Therefore, the uncertainty set is obtained from the data by using GP regression [80,81] or reinforcement learning [82,83], and a dynamic model with additional uncertainties is formed. Finally, the data-driven prediction model is obtained by combining this dynamic model with the nominal system model.
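The two-part structure (nominal model plus a learned term for the remaining uncertainty) can be sketched as follows. For brevity, a one-parameter least-squares fit is swapped in for the GP regression or reinforcement learning named in the text; the scalar dynamics, the drag-like unmodeled term, and the feature function are hypothetical.

```python
def nominal_model(x, u):
    """Assumed nominal dynamics."""
    return 0.9 * x + 0.1 * u


def true_system(x, u):
    """Assumed 'real' platform: nominal dynamics plus an unmodeled nonlinearity."""
    return 0.9 * x + 0.1 * u - 0.05 * x * abs(x)


def feature(x):
    """Hypothetical feature used to describe the residual."""
    return x * abs(x)


def fit_residual(samples):
    """Least-squares fit of theta in: residual ~= theta * feature(x)."""
    num = den = 0.0
    for x, u in samples:
        residual = true_system(x, u) - nominal_model(x, u)
        num += feature(x) * residual
        den += feature(x) ** 2
    return num / den


def learned_model(x, u, theta):
    """Data-driven prediction model: nominal part plus learned residual."""
    return nominal_model(x, u) + theta * feature(x)


samples = [(0.5 * i, 1.0) for i in range(-6, 7)]  # recorded (state, input) pairs
theta = fit_residual(samples)
```

Since the assumed disturbance lies exactly in the span of the chosen feature, the fit recovers it and the combined model matches the true system; with real data, the residual would only be approximated, and a GP would additionally provide an uncertainty estimate.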

The data-driven prediction models perform path tracking in mobile platforms efficiently and improve the path tracking performance in uncertain environments. An accurate vehicle model that covers the entire performance envelope of a vehicle is highly nonlinear, complex, and unrecognized. In order for uncertainties to be dealt with and for safe driving to be achieved [84], GP regression is used to obtain the residual model, which is also applied to the remote-control racing cars [48]. The trained reinforcement learning model is integrated with the controller to efficiently deal with the tracking error [85]. The inaccuracy in the prior model leads to a significant decline in the performance of MPC. The reinforcement learning based on the online model assists in learning the unknown parameters and updating the prediction model, thereby reducing the path error [86].

In the field of robotics, high-precision path tracking forms the basis of robot operations. A GP trained offline is used to estimate the mismatch between the actual model and the estimated model. An extended Kalman filter then estimates the mismatch in the residual model online to achieve offset-free tracking of the robot arm [87]. Prediction models based on ML methods and MPC have also been reported as an effective solution for path tracking in cooperative autonomous unmanned aerial vehicles flying in different formations [88,89,90].

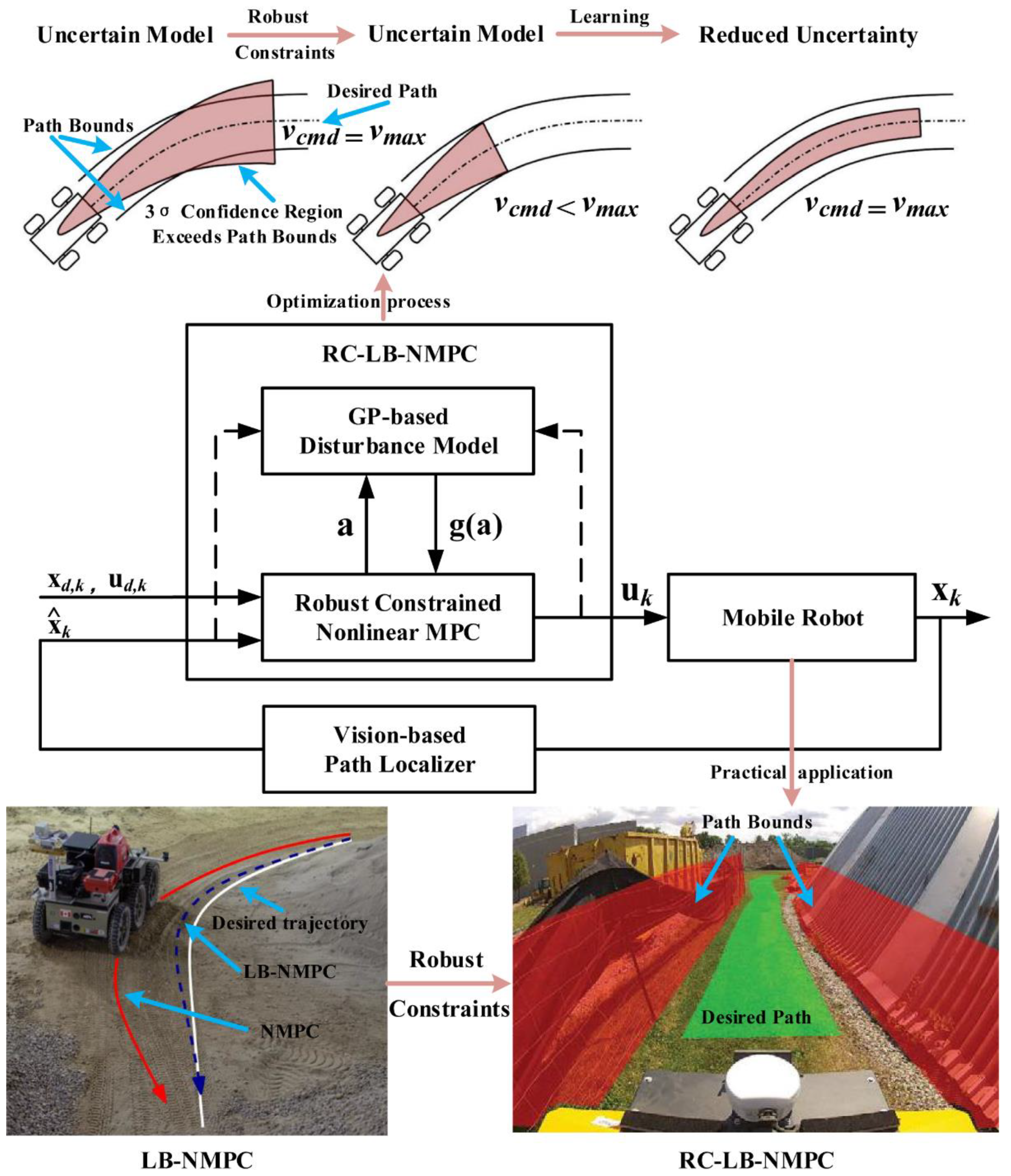

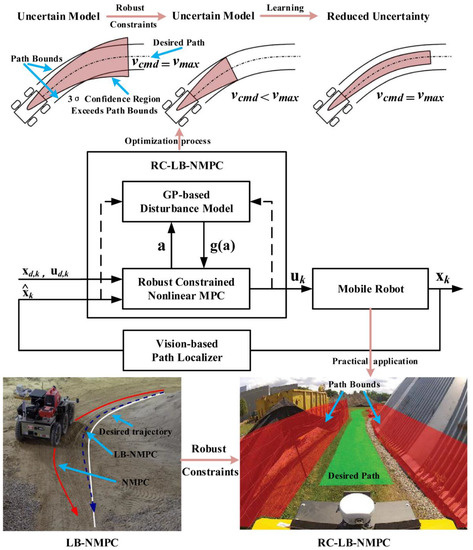

In unknown environments, such as the unstructured or off-road terrains, the robot–terrain interaction model usually does not exist. Even if such a model exists, finding the suitable model parameters is very difficult. The prior model is unable to deal with the influence of complex and dynamic terrains. The learning-based nonlinear model predictive control (LB-NMPC) algorithm includes a simple prior model and a learning disturbance model of environmental disturbance [32,75]. The disturbance is modeled as a GP function based on the system state, input, and other related variables to reduce the path tracking error of repeated traversal along the reference path. On the basis of the existing LB-NMPC algorithm, researchers have proposed a robust min–max learning-based nonlinear model predictive control [91] and a robust constraint learning-based nonlinear model predictive control (RC-LB-NMPC) method [92] to track the path of off-road terrain. The learning is used to generate low-uncertainty and non-parametric models on site. According to these models, the linear velocity and angular velocity are predicted in real time. The control framework and experimental terrain of RC-LB-NMPC are presented in Figure 4. The neural networks and model-free reinforcement learning have been used to generate walking gait for motion tasks. The neural networks are used to learn complex state transition dynamics [93]. The information learned regarding the terrain height is helpful for the robot MPC controller to track paths in uneven terrains.

Figure 4.

The control framework and experimental terrain of RC-LB-NMPC method [32,75,92].

3.1.3. Prediction Model Pre-Training Based on Transfer Learning

In ML, a large amount of data is often required to learn complex features. The process of acquiring the data for training the mobile platform prediction model is a laborious task. It is noteworthy that as soon as the feature space or feature distribution of the test data changes, the performance of model degrades. As a result, new data must be collected to retrain the model for enhancing the performance.

The transfer learning methods exploit the knowledge accumulated from data in auxiliary domains to facilitate predictive modeling of different data patterns in the current domain [94]. These methods include sample transfer, feature transfer, model transfer, and relation transfer. In natural language processing and computer vision, pre-trained models created for a specific task are used to solve problems in a different domain [95]. However, there are not many pre-trained neural networks available for path tracking control in mobile platforms. Therefore, the dataset for model pre-training is obtained from simulation models, such as dynamics models. The data obtained from the real mobile platform are then used to fine-tune the prediction model. As a result, the requirement for real data is reduced. The degree of similarity between the simulation data and the real data significantly influences the results of pre-training.
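The pre-train-then-fine-tune workflow can be illustrated with a deliberately small sketch. A one-parameter linear model trained by gradient descent stands in for a neural network, and the "simulation" and "real" datasets are synthetic with slightly different slopes; all values are assumptions chosen so that the effect is easy to see.

```python
def gradient_descent(w, xs, ys, steps, lr=0.1):
    """Minimize the mean squared error of the model y = w * x."""
    n = len(xs)
    for _ in range(steps):
        grad = sum(2.0 * x * (w * x - y) for x, y in zip(xs, ys)) / n
        w -= lr * grad
    return w


# Plentiful simulated data (assumed simulator slope: 2.0).
sim_x = [i / 50 for i in range(1, 51)]
sim_y = [2.0 * x for x in sim_x]

# Scarce real data (assumed true slope: 2.3).
real_x = [0.2, 0.4, 0.6, 0.8, 1.0]
real_y = [2.3 * x for x in real_x]

# Pre-train on simulation, then fine-tune briefly on real data.
w_pretrained = gradient_descent(0.0, sim_x, sim_y, steps=500)
w_finetuned = gradient_descent(w_pretrained, real_x, real_y, steps=10)

# Baseline: the same small training budget on real data, without pre-training.
w_scratch = gradient_descent(0.0, real_x, real_y, steps=10)
```

With the same small budget on real data, the fine-tuned parameter ends up closer to the real slope than the one trained from scratch. The benefit shrinks as the simulator slope moves away from the real one, which mirrors the remark above about the similarity between simulation and real data.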

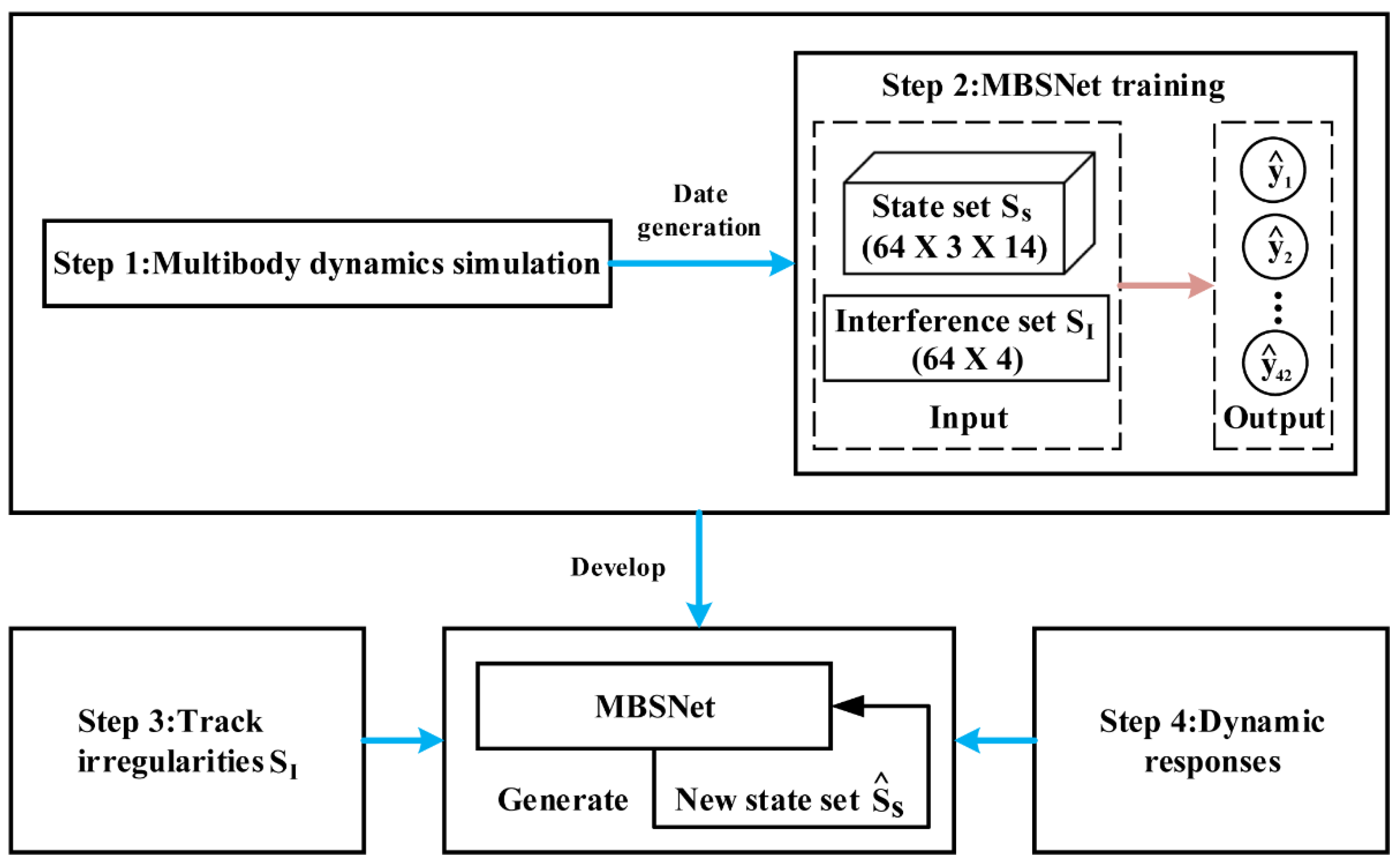

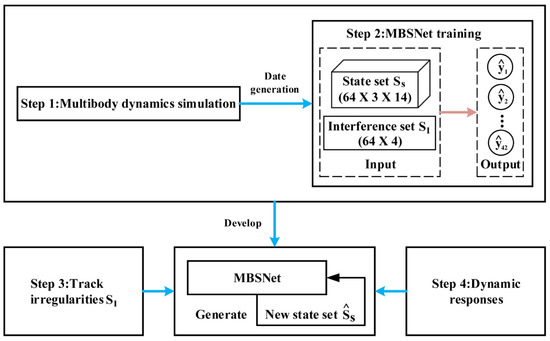

In multibody dynamics simulation (MBS) analysis, there are three challenges, namely, high modeling difficulty, high computational complexity, and restricted solver. The MBS based on deep learning network (MBSNet) is applied to vehicle tracking systems [96]. This model is robust in the presence of different track irregularities. The MBSNet accurately and quickly predicts the low-frequency components of dynamic response. The technical flowchart of MBSNet is presented in Figure 5.

Figure 5.

The technical flowchart of multi-body dynamics simulation based on deep learning network [96].

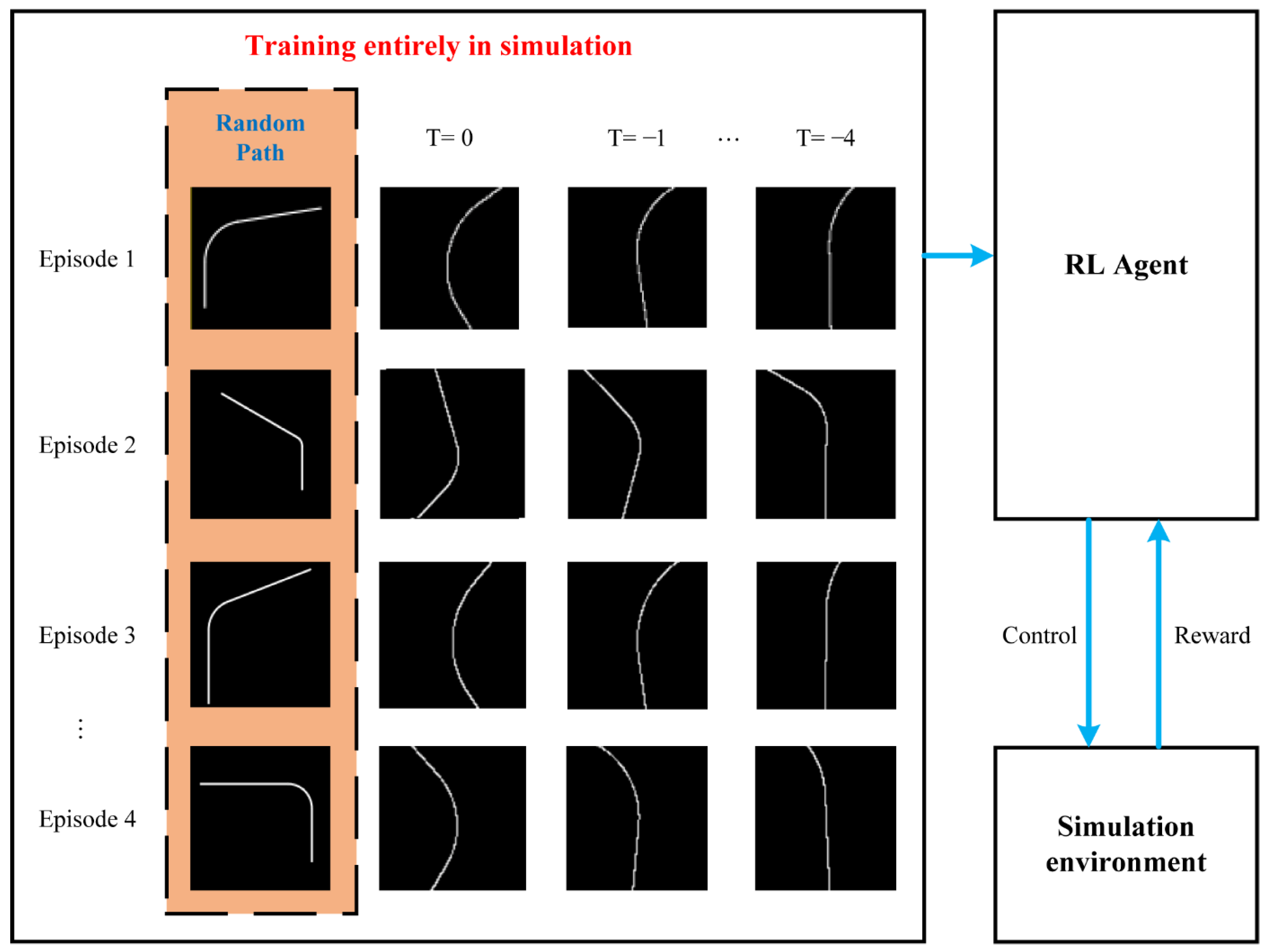

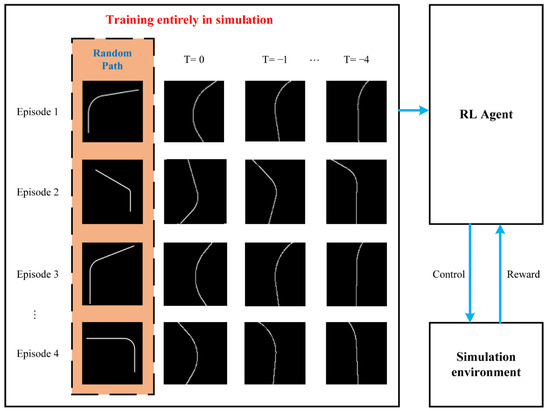

End-to-end training methods take the raw sensor data as the input and directly output control commands. These methods decrease the volume of training data because they are trained only on real devices or in simulation, without separate experiments. A convolutional neural network (CNN) is used as an image embedder for estimating the current and future position of the vehicle relative to the path [97]. As presented in Figure 6, a region randomization method is proposed to generate different types of paths with random curvature and length, as well as random initial lateral and heading errors for the vehicle, during simulation. This method is designed to cover the range of possible training scenarios. In addition, it prevents the network from overfitting, which makes it more general than other similar methods. The mobile platforms follow the path smoothly and slow down where the curvature is larger.

Figure 6.

The path randomization for training [97].
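A minimal sketch of this kind of path randomization is given below. It chains constant-curvature segments with random curvature and length and draws a random initial lateral and heading error. The function names, parameter ranges, and the piecewise-constant-curvature construction are assumptions for illustration and are not the procedure of [97].

```python
import math
import random


def random_path(rng, n_segments=5, ds=0.5, max_curvature=0.2,
                length_range=(5.0, 20.0)):
    """Return a list of (x, y, heading, curvature) samples along a random path."""
    x, y, heading = 0.0, 0.0, 0.0
    points = [(x, y, heading, 0.0)]
    for _ in range(n_segments):
        kappa = rng.uniform(-max_curvature, max_curvature)  # random curvature
        length = rng.uniform(*length_range)                 # random length
        for _ in range(int(length / ds)):
            heading += kappa * ds
            x += math.cos(heading) * ds
            y += math.sin(heading) * ds
            points.append((x, y, heading, kappa))
    return points


def random_initial_pose(rng, path, max_lateral=1.0, max_heading=0.3):
    """Offset the start pose by a random lateral and heading error."""
    x0, y0, h0, _ = path[0]
    lateral = rng.uniform(-max_lateral, max_lateral)
    heading_error = rng.uniform(-max_heading, max_heading)
    # The lateral offset is applied perpendicular to the path heading.
    return (x0 - lateral * math.sin(h0),
            y0 + lateral * math.cos(h0),
            h0 + heading_error)


rng = random.Random(0)  # fixed seed so that episodes are reproducible
path = random_path(rng)
start_pose = random_initial_pose(rng, path)
```

Calling the two functions once per training episode yields a different path and start pose each time, which is the mechanism that discourages the network from overfitting to a single track.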

In cases of uncertain disturbances, the data-driven prediction models have a better environmental adaptability as compared to the traditional physical models. The transfer learning is used to pre-train the prediction model in the simulation environment, which solves the problem of high demand for real training data. The transfer learning also improves the reliability of the data-driven prediction models, which is the basis of LB-MPC.

3.2. Learning and Optimizing for Controller

It is important to control the mobile platforms to follow a complex curvature path. The prediction model is the core element of the model predictive controller, and other elements, such as cost functions, constraints, and real-time response, also have a significant impact on the final closed-loop control performance.

3.2.1. Learning and Optimizing for Control Precision

The model predictive controller optimizes the cost function under various constraints. The optimization design of the controller precision is based on the parameterization of the cost function and constraints. The cost function and the constraint conditions can be parameterized as functions of the system state variables, the applied input, and random variables. Table 3 shows a parameterized version of an MPC problem, including a parameterization of the cost function and constraints [31]. This is convenient for subsequent learning and optimization of the controller parameters under uncertain disturbances.

Table 3.

The parameterized version of an MPC problem [31].
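For orientation, a generic parameterized MPC problem can be written as follows. This is a sketch in notation assumed here and not a reproduction of Table 3 in [31]: $\theta_\ell$ collects the cost parameters, $\theta_f$ the model parameters, and $\theta_g$ the constraint parameters, and these are the quantities that learning can adjust.

```latex
\begin{aligned}
\min_{u_0,\dots,u_{N-1}} \quad & \ell_f(x_N;\, \theta_\ell) + \sum_{k=0}^{N-1} \ell(x_k, u_k;\, \theta_\ell) \\
\text{s.t.} \quad & x_{k+1} = f(x_k, u_k;\, \theta_f), \\
& g(x_k, u_k;\, \theta_g) \le 0, \qquad k = 0,\dots,N-1, \\
& x_N \in \mathcal{X}_f(\theta_g), \\
& x_0 = x(t)
\end{aligned}
```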

It is difficult to track the paths effectively by using the model-based controller under uncertain environments. In addition, it is unrealistic to adjust the controller parameters manually for all the conditions. In order for the aforementioned problems to be solved, the data-driven ML methods described in Section 3.1 are usually used to estimate the environmental disturbance, and the data-driven model is used to design the controller. However, the lack of data can easily lead to incorrect approximation of uncertain disturbances. Therefore, the adaptive predictive control strategy is the key to achieving high-performance path tracking control. The recorded data are converted to the corresponding MPC parameters. On one hand, the closed-loop performance is improved by adjusting the parameterized cost function and constraint conditions on the basis of the performance-driven controller learning. On the other hand, the path tracking controller adjusts its parameters online by adapting to uncertain environmental disturbances. The input and state trajectories of the dynamic system are parameterized by the basis function to reduce the computational complexity of MPC [98]. When the input constraints are considered, the uncertainty is estimated and compensated in the design of the controller, and the recorded data are converted to the corresponding parameterization of the MPC problem.

The performance-driven controller learning focuses on finding the parameterization of the cost function and constraint conditions in MPC. The closed-loop performance is optimized by solving the optimal control problem. One solution is to adjust the controller on the basis of Bayesian optimization, and the other solution is to learn the terminal components to counteract the finite-horizon nature of the controller.

The Bayesian optimization method models the unknown function as a GP, evaluates the function, and guides it to the optimal position. Then, the controller parameters are estimated on the basis of experiments to optimize the controller performance. The information samples in Bayesian optimization are usually far away from the original control law, which leads to unstable evaluation and system failure of the controller in the early optimization process [99]. The security problems are solved by using the improved security optimization controllers and exploring new controller parameters whose performance lies above a safe performance threshold [100]. By combining the linear optimal control with Bayesian optimization, a parameterized cost function is introduced and optimized to compensate the deviation between real dynamics and linear predictive dynamics, resulting in an improved controller with fewer evaluations [101]. The controller is designed using the current linear dynamic model and the parameterized cost function on the basis of the Bayesian optimization. This method evaluates the controller performance gap with the actual physical device in the closed-loop operation, and iteratively updates the dynamic model on the basis of this information to improve the controller performance [102]. The inverse optimization control algorithm is used to learn the appropriate cost function parameters of MPC from the human demonstrated data [103]. The motion generated by the path tracking controller matches the specific characteristics of human-generated motion and avoids massive parameter adjustments. In the consideration of the online estimation and adjustment of control parameters, a framework composed of real-time parameter estimators and feedback control strategies can improve the path tracking performance in mobile platforms [104].
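The basic loop of Bayesian optimization for controller tuning can be sketched as follows: a GP surrogate is fitted to the closed-loop costs observed so far, and the next controller parameter to try is the one minimizing a lower confidence bound. The scalar plant, the single feedback gain being tuned, the kernel settings, and the acquisition rule are assumptions for illustration; the safety-aware variants of [99,100] are not implemented here.

```python
import numpy as np


def closed_loop_cost(k, a=1.2, b=1.0, steps=30):
    """Cost of one 'experiment' with feedback u = -k*x on x+ = a*x + b*u."""
    x, cost = 1.0, 0.0
    for _ in range(steps):
        u = -k * x
        cost += x * x + 0.1 * u * u
        x = a * x + b * u
    return cost


def gp_predict(x_obs, y_obs, x_query, length_scale=0.3, noise=1e-6):
    """GP surrogate (unit-variance RBF kernel) fitted to normalized costs."""
    def kern(p, q):
        return np.exp(-0.5 * ((p[:, None] - q[None, :]) / length_scale) ** 2)

    y_mean, y_std = y_obs.mean(), y_obs.std() + 1e-12
    K = kern(x_obs, x_obs) + noise * np.eye(len(x_obs))
    K_s = kern(x_obs, x_query)
    mean = K_s.T @ np.linalg.solve(K, (y_obs - y_mean) / y_std)
    var = 1.0 - np.sum(K_s * np.linalg.solve(K, K_s), axis=0)
    return mean * y_std + y_mean, np.sqrt(np.maximum(var, 0.0)) * y_std


def tune_gain(n_iter=12, beta=2.0):
    """Pick each new gain by minimizing the lower confidence bound."""
    grid = np.linspace(0.3, 2.0, 100)  # candidate gains (all stabilizing here)
    x_obs = np.array([0.3, 2.0])       # two initial experiments
    y_obs = np.array([closed_loop_cost(k) for k in x_obs])
    for _ in range(n_iter):
        mean, std = gp_predict(x_obs, y_obs, grid)
        k_next = grid[np.argmin(mean - beta * std)]
        x_obs = np.append(x_obs, k_next)
        y_obs = np.append(y_obs, closed_loop_cost(k_next))
    best = np.argmin(y_obs)
    return x_obs[best], y_obs[best]


best_k, best_cost = tune_gain()
```

The candidate range is restricted to stabilizing gains in this sketch, which sidesteps the early-exploration safety issue noted above. Handling that issue properly is what the safe optimization schemes are designed for.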

The terminal set is an important design parameter in MPC. MPC uses terminal cost functions and terminal constraints to reduce the adverse effects of the limited prediction horizon. Data collected through ML methods are used to improve these terminal components. A larger terminal set enlarges the region in which the MPC problem is feasible and can be solved quickly. The convex hull of the states along a trajectory that terminates at the origin is proven to be control invariant [105]. On this basis, a method for constructing a terminal set from a given trajectory solves the optimization problem required to compute parameterized terminal controllers offline [106]. The resulting terminal controller is the solution of a state-dependent optimization problem. For constrained uncertain systems, a robust learning model predictive controller collects data from iterative tasks and estimates the current value function while satisfying the system constraints [107]. The terminal security set and the terminal cost function for iterative learning are designed to improve the closed-loop tracking performance of the controller [108]. This method estimates the unknown system parameters and generates a high-performance state trajectory at the same time. Iterative learning has been studied further, and a task decomposition method for iterative learning model predictive control has been proposed [109]. This method converges to a locally optimal minimum-time trajectory faster than simple methods.
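
For reference, the role of the terminal components can be seen in a generic finite-horizon MPC problem. The notation below (stage cost $\ell$, terminal cost $V_f$, terminal set $\mathcal{X}_f$, horizon $N$, model $f$) is standard textbook notation assumed here and is not taken from the cited works.

```latex
\begin{aligned}
\min_{u_0,\dots,u_{N-1}} \quad & \sum_{i=0}^{N-1} \ell(x_i, u_i) + V_f(x_N) \\
\text{s.t.} \quad & x_{i+1} = f(x_i, u_i), \qquad x_0 = x(k), \\
& x_i \in \mathcal{X}, \quad u_i \in \mathcal{U}, \qquad i = 0,\dots,N-1, \\
& x_N \in \mathcal{X}_f .
\end{aligned}
```

In the learning-based variants discussed above, $V_f$ and $\mathcal{X}_f$ are the quantities estimated from data, for example from the states and costs recorded in previous iterations of the task.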

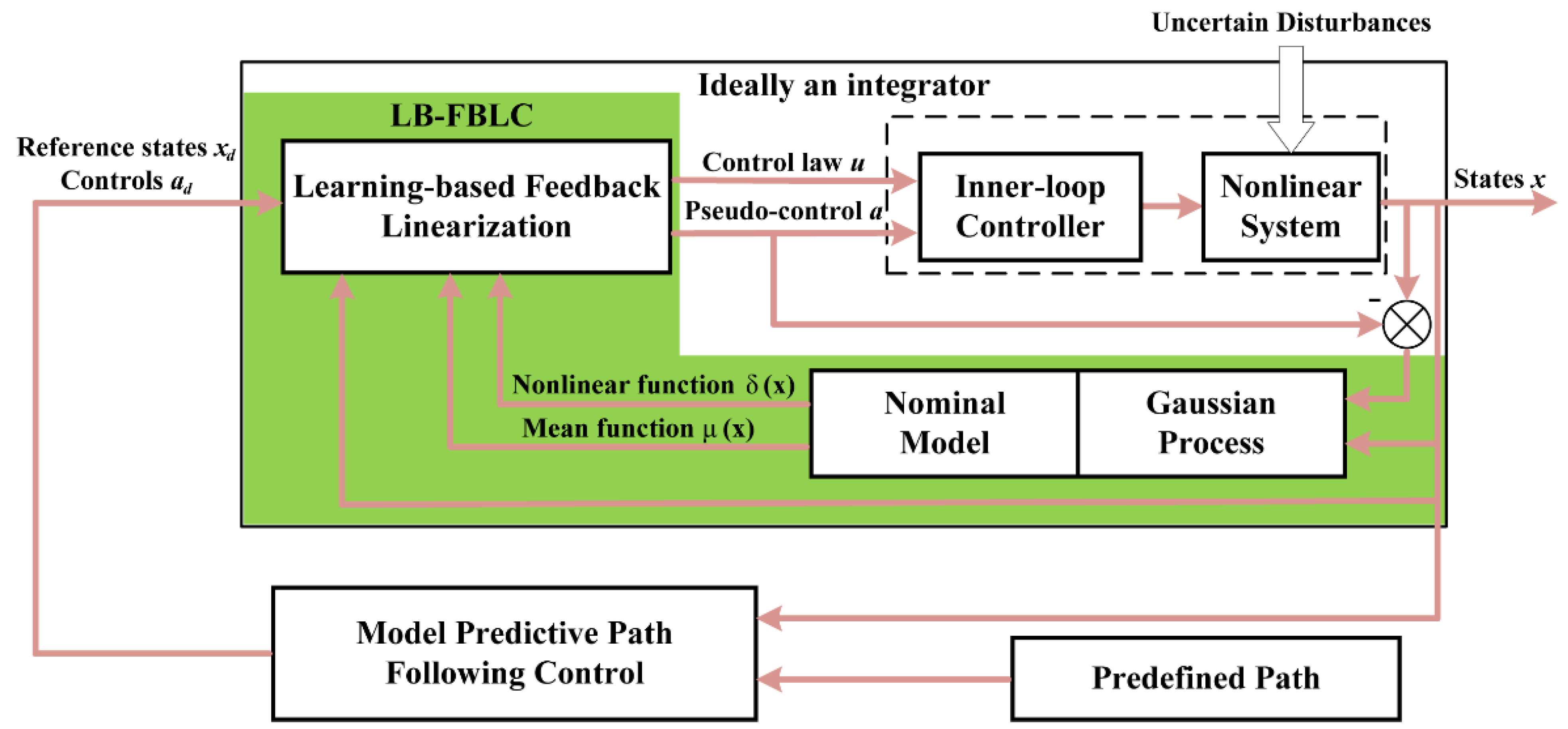

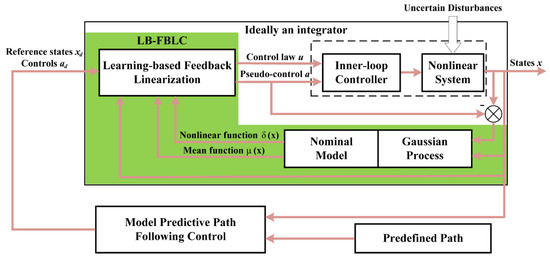

It is necessary for the controller to ensure that the mobile platforms accurately track the predefined path under uncertain disturbances. To overcome the influence of uncertain environmental disturbances on the parameterized objective functions and constraint definitions, the components of the controller are learned and adjusted to adapt to the environment, and an improved predictive control strategy is established. The combination of a high-level model predictive path following controller (MPFC) and a low-level learning-based feedback linearization controller (LB-FBLC) is used for nonlinear systems under uncertain disturbances [110], as shown in Figure 7. The LB-FBLC uses a GP to learn the uncertain environmental disturbances online and accurately tracks the reference state with probabilistic stability guarantees. The MPFC uses the linearized system model and the virtual linear path dynamics model to optimize the evolution of the path reference targets and provides reference states and controls for the LB-FBLC. Deep neural networks with a large number of hidden layers significantly improve the learning of the NMPC control law as compared to shallow networks [111]. The integrated design of model learning and model-based control obtains the advanced prediction model for the MPC cost function, the disturbance state space model satisfying robust constraints, and the robust MPC law [112]. As a result, the data-driven controller effectively deals with the constraints and tracks the desired reference output.

Figure 7.

The architecture of the proposed path-following strategy for nonlinear systems under uncertain disturbances [110].

3.2.2. Learning and Optimization for Controller Real-Time Response

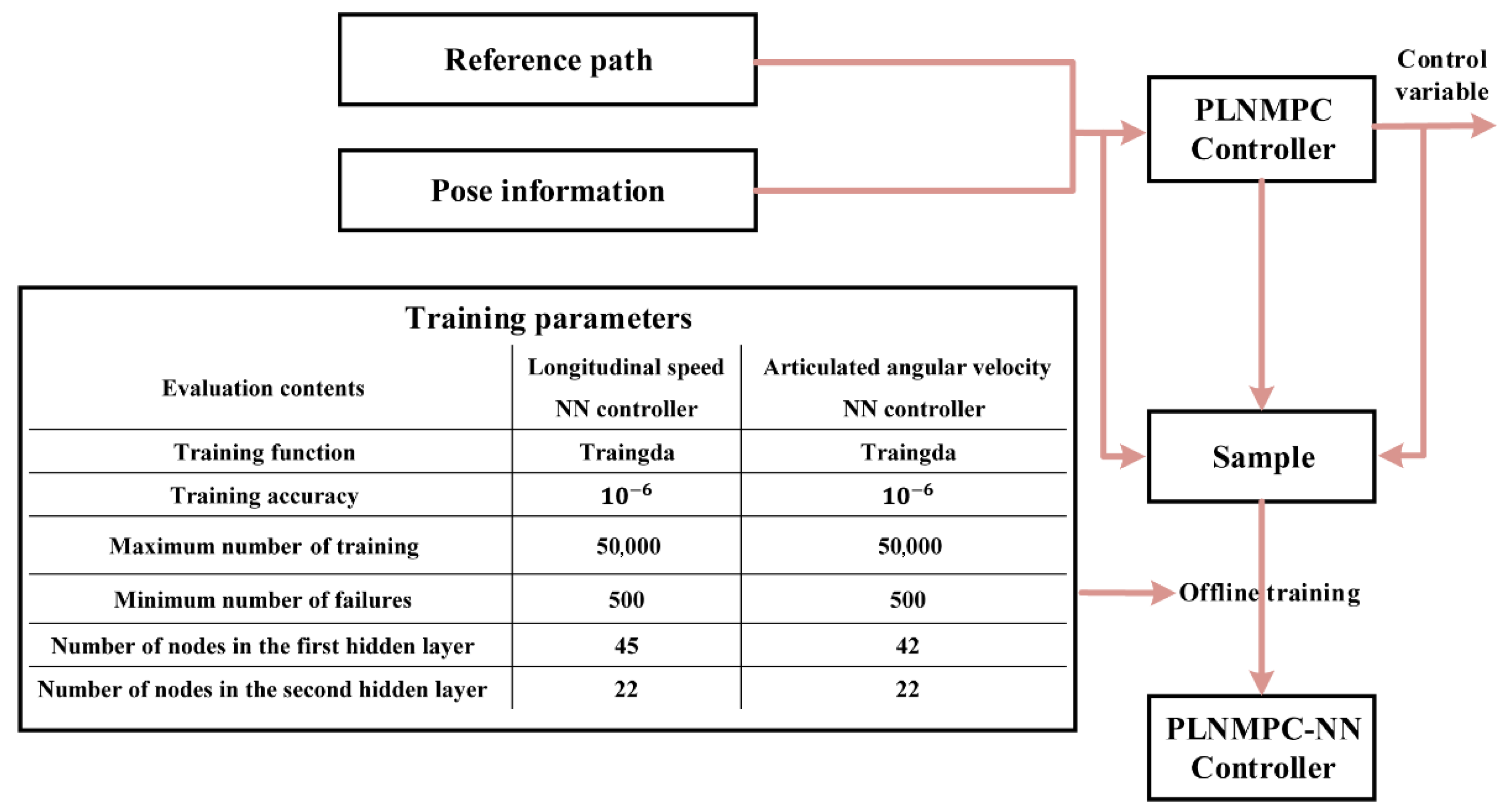

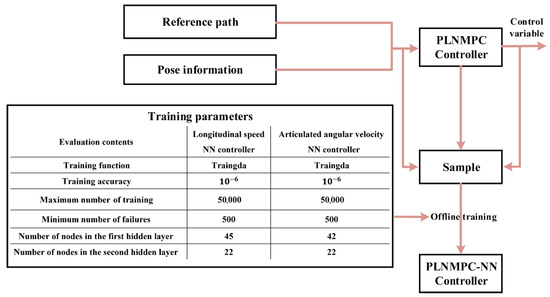

In addition to ensuring the control precision of path tracking control, the controller should also reduce the computational complexity. Generating a neural network controller from MPC training samples solves the problem of the poor real-time response of MPC controllers while ensuring control precision [113]. However, the training samples used in this method lose the feedforward information. This problem can be solved by reducing the number of control steps of the controller and using a point-to-line NMPC neural network (PLNMPC-NN) in articulated vehicle path tracking control [114]. The PLNMPC method uses the position and state errors between the predicted states over the horizon and the reference path as penalty terms and generates the training samples in a non-global coordinate system. The training process and training parameters of PLNMPC-NN are presented in Figure 8.

Figure 8.

The PLNMPC-NN training process and its training parameters [114].
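
The general idea of replacing online optimization with a network fitted offline to MPC samples can be sketched as follows. This is not the PLNMPC-NN of [114]: the `expert_mpc` function is a hypothetical stand-in for an MPC solver (a saturated linear steering law), and the network is a single hidden layer with fixed random weights whose output layer is fitted by least squares, a simplification of full gradient-based training.

```python
import numpy as np

rng = np.random.default_rng(0)

def expert_mpc(e):
    """Hypothetical stand-in for an MPC solver: saturated linear steering law."""
    return np.clip(-1.5 * e, -1.0, 1.0)

# Offline: sample lateral errors and label them with the expert's commands.
e_train = rng.uniform(-2.0, 2.0, size=400)
u_train = expert_mpc(e_train)

# Hidden layer with fixed random weights; only the output layer is fitted.
W = rng.normal(0.0, 2.0, size=40)
b = rng.uniform(-2.0, 2.0, size=40)

def features(e):
    """tanh hidden activations plus a constant bias column."""
    e = np.atleast_1d(np.asarray(e, dtype=float))
    hidden = np.tanh(np.outer(e, W) + b)
    return np.hstack([hidden, np.ones((e.size, 1))])

w_out, *_ = np.linalg.lstsq(features(e_train), u_train, rcond=1e-8)

def nn_controller(e):
    """Online: a single forward pass instead of solving an optimization."""
    return features(e) @ w_out

# Compare the fitted controller with the expert on a dense grid of errors.
e_test = np.linspace(-2.0, 2.0, 201)
mean_abs_err = float(np.mean(np.abs(nn_controller(e_test) - expert_mpc(e_test))))
```

The online cost of `nn_controller` is fixed and small, which is the source of the real-time benefit; the price is an approximation error that has to be checked against the expert, as `mean_abs_err` does here.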

The online update of recurrent neural network (RNN) models captures the nonlinear dynamics when the model is uncertain. In [115], the RNN method is applied to a chemical process with time-varying disturbances under LMPC and LEMPC. In related research, a real-time control Lyapunov-barrier function-based model predictive control (CLBF-MPC) system is developed [116]. The CLBF-MPC system accounts for time-varying disturbances to ensure closed-loop stability and operational safety, which demonstrates its effectiveness in handling the online updating of ML models in real-time control.

Reinforcement learning (RL) learns the optimal control command on the basis of a predefined reward function. Because the real environment is simulated in advance, RL improves the real-time response of the control process in the real environment. The RL network is trained offline on a large volume of training data for the RL agent, including the initial positions, headings, and velocities of the vehicle [97]. The evaluation on a real vehicle shows that the trained agent steadily controls the vehicle and adaptively reduces the speed to adapt to the sample path. Such a control process has better real-time performance.

It is noteworthy that the learning and optimization of controller parameters significantly influence the control precision. The offline training of samples is a very effective way to improve the real-time response of the controller. The controller design based on learning methods improves the performance of the path tracking controller itself.

3.3. Learning and Optimizing for Controller Output under Uncertain Disturbances

When uncertain disturbances act on the mobile platforms, the position predicted by the controller may differ from the actual tracking position. Most path tracking control methods are unable to guarantee the security constraints under physical limitations, especially during learning iterations. To achieve more accurate path tracking in mobile platforms, it is necessary to use soft constraints on the output rather than only hard constraints on the input and its rate of change.
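
A common way to write soft output constraints is with slack variables. The formulation below is a generic sketch in assumed notation (output $y_i$, slack $\epsilon_i$, penalty weight $\rho$, input increment $\Delta u_i = u_i - u_{i-1}$); keeping the input and rate bounds hard alongside the softened output bounds is also an assumption of this sketch.

```latex
\begin{aligned}
\min_{u,\,\epsilon} \quad & \sum_{i=0}^{N-1} \ell(x_i, u_i) + \rho \sum_{i=1}^{N} \epsilon_i^2 \\
\text{s.t.} \quad & y_{\min} - \epsilon_i \le y_i \le y_{\max} + \epsilon_i, \qquad \epsilon_i \ge 0, \\
& u_{\min} \le u_i \le u_{\max}, \qquad \Delta u_{\min} \le \Delta u_i \le \Delta u_{\max} .
\end{aligned}
```

With $\rho$ large, the output bounds are violated only when no input within the hard limits can satisfy them, so the optimization problem remains feasible under disturbances.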

3.3.1. Learning and Optimizing Controller Output Based on Reinforcement Learning

When the mobile platform drives at high speed in a known environment or drives on unknown complex terrain, the MPC controller is unable to slow down the mobile platform sufficiently during steering. It is therefore necessary to optimize the output of the MPC controller. The reinforcement learning algorithm evaluates the feedback signal of the environment to improve the action plan and adapt to the environment in order to achieve the intended goals. The adaptive MPC path tracking controller based on reinforcement learning is designed to correct the predicted output of the model by interacting with the real environment. The reinforcement learning algorithm adaptively adjusts the MPC controller online to realize path tracking in mobile platforms with high robustness on complex terrains.

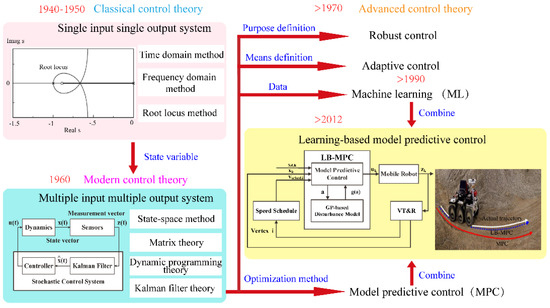

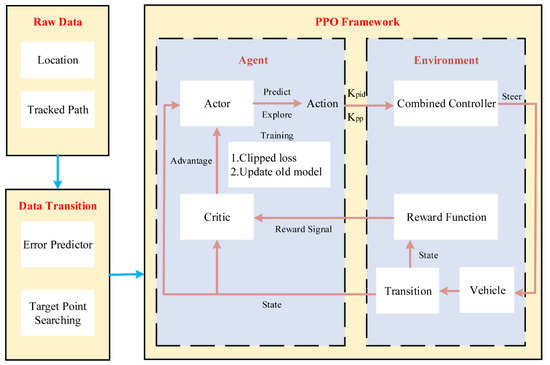

Compared with the traditional MPC controller, the adaptive MPC path tracking controller based on reinforcement learning further reduces the system overshoot and oscillations and improves the dynamic performance and steady-state error of the system. The hierarchical reinforcement learning method improves the generalization ability of reinforcement learning for optimal path tracking in wheeled mobile robots [117]. The behavior–reward scoring mechanism of reinforcement learning is used to learn the behavior rules so that unmanned surface ships are able to estimate the best path tracking control behavior [118]. The proximal policy optimization (PPO) method is used as a deep reinforcement learning algorithm and combined with the traditional pure pursuit method to construct the vehicle controller architecture [119]. Blending these controllers makes the overall system more robust, adaptive, and effective in terms of path tracking. The PPO method is also used to achieve a trade-off between smooth control and path errors by designing a reward function that considers both the smoothness and the tracking error [120]. Figure 9 presents the flowchart of the PPO control framework. The trained model can be nested in the combined controller to improve the accuracy of path tracking control on the basis of the interactions with the environment.

Figure 9.

The control framework of PPO [120].
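
A reward that trades off tracking error against control smoothness can be sketched as below. The quadratic form, the function name `tracking_reward`, and the weights `w_lat`, `w_head`, and `w_smooth` are illustrative assumptions; the exact reward used in [120] is not reproduced here.

```python
def tracking_reward(lateral_error, heading_error, steer, prev_steer,
                    w_lat=1.0, w_head=0.5, w_smooth=0.2):
    """Negative weighted cost: larger (closer to zero) is better."""
    # Penalize deviation from the reference path.
    tracking_cost = w_lat * lateral_error**2 + w_head * heading_error**2
    # Penalize abrupt changes of the steering command between steps.
    smoothness_cost = w_smooth * (steer - prev_steer)**2
    return -(tracking_cost + smoothness_cost)

# Same tracking error, but the second command changes the steering abruptly.
smooth = tracking_reward(0.2, 0.0, steer=0.10, prev_steer=0.10)
jerky = tracking_reward(0.2, 0.0, steer=0.50, prev_steer=0.10)
```

Raising `w_smooth` relative to `w_lat` pushes the learned policy toward gentler steering at the expense of a larger path error, which is the trade-off the reward design has to settle.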

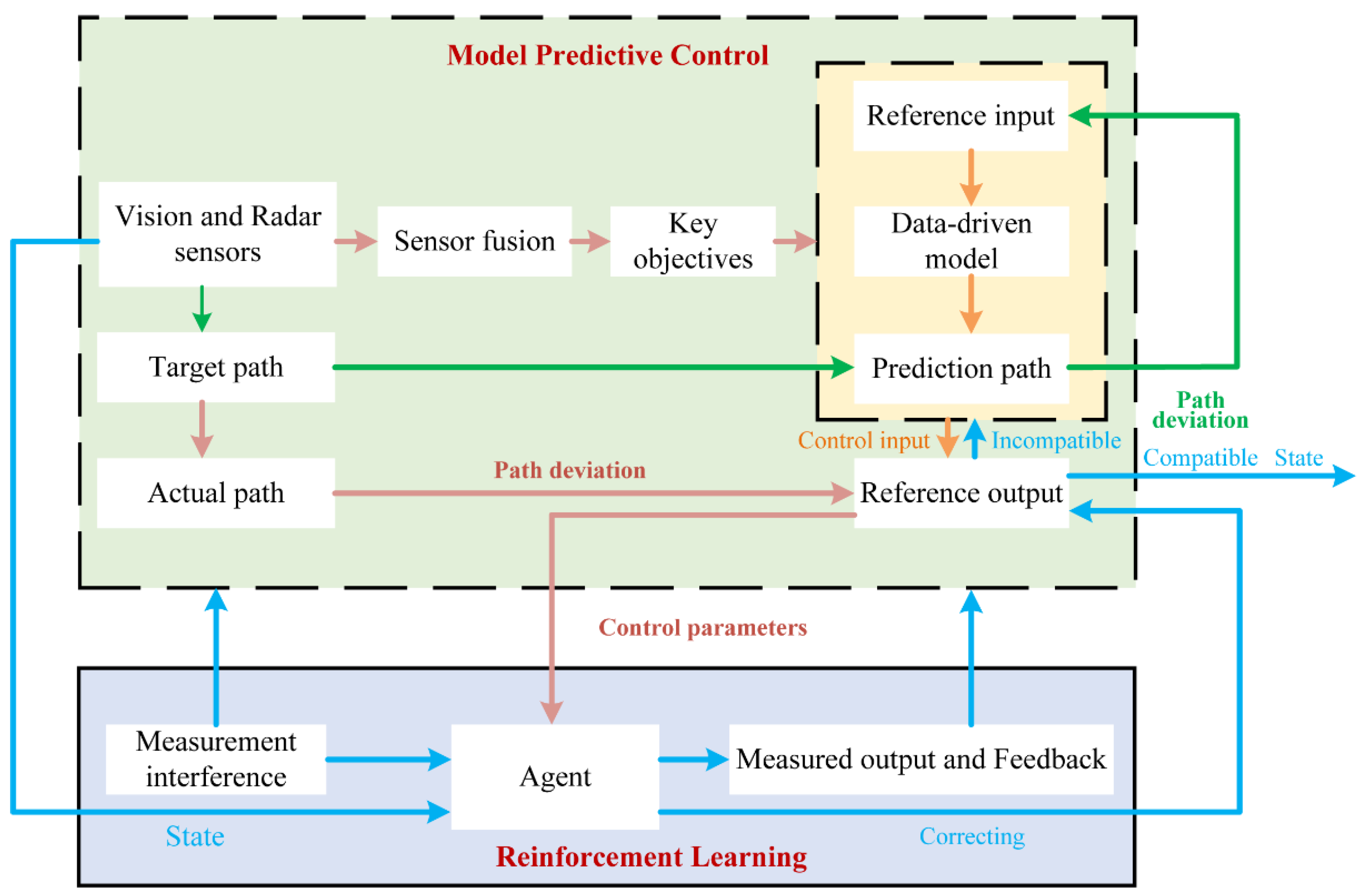

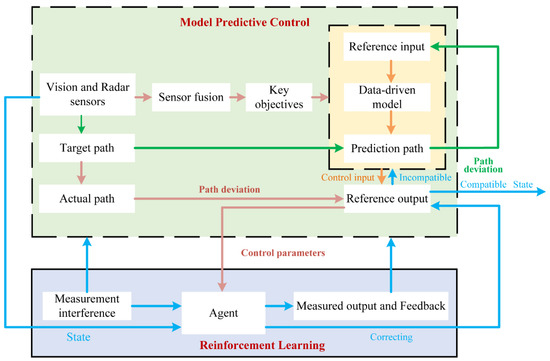

On the basis of the aforementioned works, we propose a reinforcement learning-based model predictive control (RLB-MPC) path tracking control framework, in which the output of the controller is optimized on the basis of the reinforcement learning model. First, the predicted path is obtained from the data-driven prediction model in the path-tracking controller, and the target path is obtained by using visual and radar sensors. Second, the path deviation between the predicted path and the target path is taken as the optimization objective. Finally, the path-tracking controller interacts with the real environment on the basis of the reinforcement learning model and corrects the path deviation between the actual path and the optimized predicted path. State information that satisfies the internal reward and punishment function of the reinforcement learning model is passed to the reference output and output directly. Other state information is returned to the MPC internal optimization until it meets the requirements for direct output. The RLB-MPC control framework is shown in Figure 10.

Figure 10.

The control framework of RLB-MPC.

3.3.2. Learning and Optimizing Controller Output Based on Security Framework

General learning-based control techniques, especially reinforcement learning control techniques, achieve high performance on complex and high-dimensional control tasks without prior knowledge of the system [121]. However, most learning-based control techniques are unable to guarantee the security constraints under physical limitations, especially during the learning iterations. To solve this problem, the security framework was proposed in control theory [122].

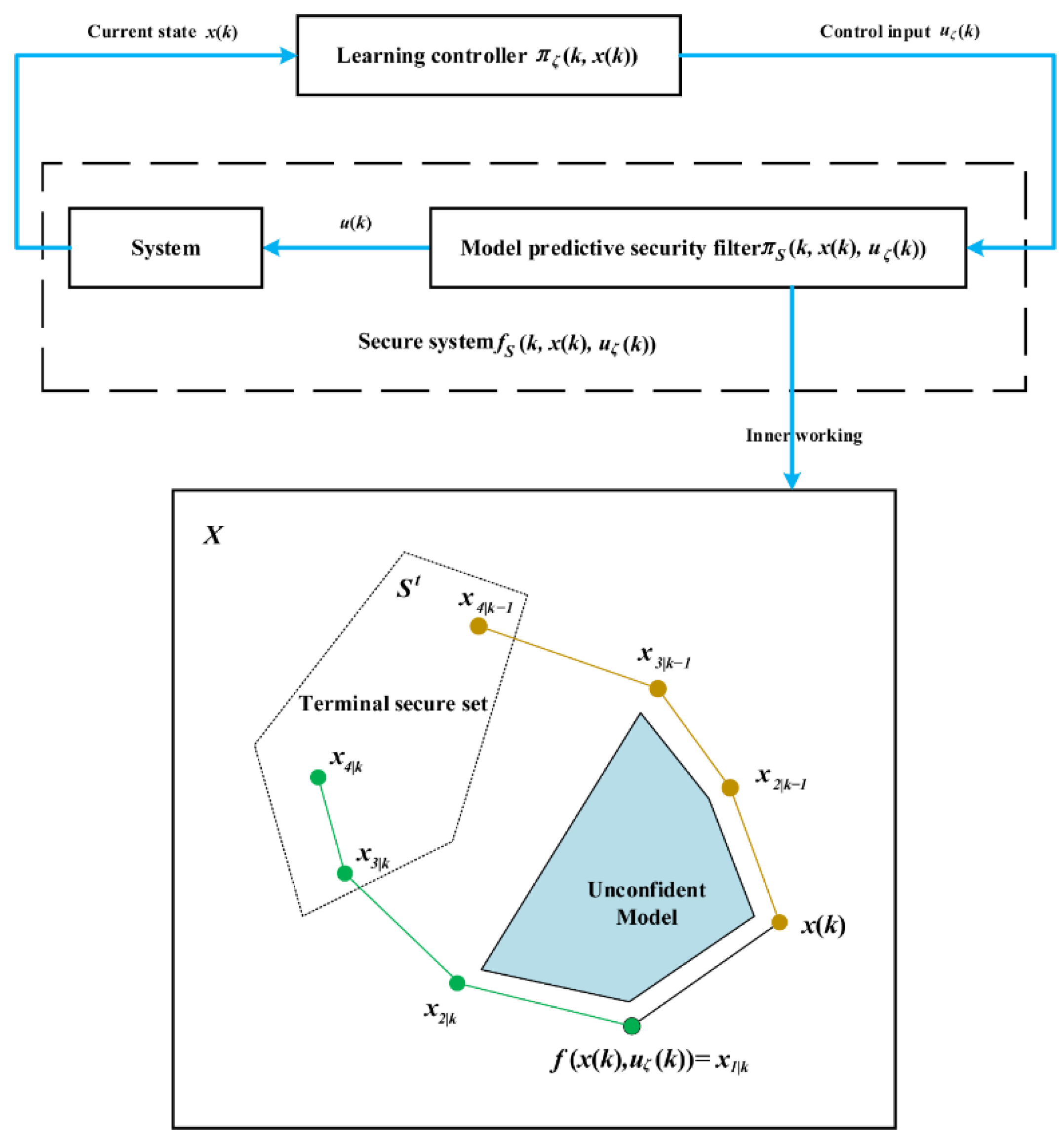

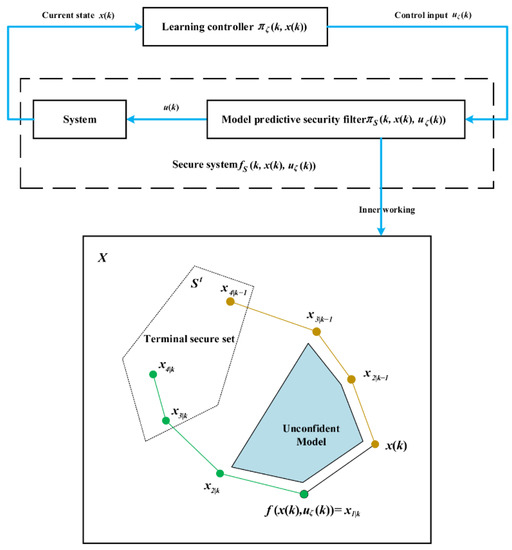

The centralized linear system security framework ensures the security by matching the learning-based input with the initial input of the MPC law online. The MPC law drives the system to a known secure terminal set. The model predictive security filter is applied to control the state and input space [123], as shown in Figure 11. The filter transforms the constrained dynamic system into an unconstrained security system, and any reinforcement learning algorithm can be applied in the security system without any constraint. The security system is established by constantly updating the security strategy. The security strategy is based on MPC formulation using a data-driven prediction model and considering state- and input-dependent uncertainties. The filter ensures the vehicle security during aggressive maneuvers, which was demonstrated by the applications for assisted manual driving and deep imitation learning using a miniature remote-controlled vehicle [124].

Figure 11.

The model predictive security filter. On the basis of the current state, a learning-based algorithm provides a control input, which is processed by the security filter and then applied to the real system. The detailed working of the model predictive security filter is presented at the bottom of the illustration, which shows the state of the system at time k with a secure backup plan for a shorter horizon obtained from the solution at time k-1 (depicted in brown), as well as areas with poor model quality (depicted in green). An arbitrary learning input can pass through the model predictive security filter if a feasible solution towards the terminal secure set is obtained (depicted in green). If this new backup solution cannot be found and the planning problem is infeasible, the system can be driven to the secure set along the previously computed trajectory (depicted in brown) [123].
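
The accept-or-fall-back logic of such a filter can be illustrated with a deliberately simplified sketch. It is not the optimization-based filter of [123]: the plant is an assumed one-dimensional double integrator with a position limit `P_MAX`, the backup plan is full braking, and the secure terminal set is standstill before the limit. The filter only checks whether a braking plan still exists after applying the learning-based input for one step.

```python
DT, U_MAX, P_MAX = 0.1, 2.0, 10.0   # step [s], max |acceleration|, position limit

def step(p, v, u):
    """Assumed double-integrator model: position p, velocity v, acceleration u."""
    return p + v * DT + 0.5 * u * DT**2, v + u * DT

def backup_input(v):
    """Full braking, without braking past standstill."""
    return max(-U_MAX, -v / DT) if v > 0.0 else 0.0

def brakes_in_time(p, v):
    """True if full braking from (p, v) reaches standstill without passing P_MAX."""
    while v > 1e-9:
        if p > P_MAX:
            return False
        p, v = step(p, v, backup_input(v))
    return p <= P_MAX

def security_filter(p, v, u_learn):
    """Pass the learning-based input through if a secure backup plan remains."""
    u_learn = min(max(u_learn, -U_MAX), U_MAX)   # hard input bound
    p_next, v_next = step(p, v, u_learn)
    if p_next <= P_MAX and brakes_in_time(p_next, v_next):
        return u_learn
    return backup_input(v)   # otherwise follow the backup (braking) plan

u_far = security_filter(0.0, 1.0, 1.0)    # far from the limit: input accepted
u_near = security_filter(8.9, 2.0, 2.0)   # accelerating here would overshoot
```

In this sketch `u_far` is the unmodified learning input, whereas `u_near` is replaced by full braking because no stopping plan would exist after one more step of acceleration.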

Compared with the centralized linear system security framework, the distributed model predictive security certification scheme ensures state and input constraint satisfaction when any learning-based control algorithm is applied to an uncertain distributed linear system with dynamic couplings [125]. In addition, two different security sets for distributed systems have been proposed on the basis of recent research findings on structural invariant sets [126]. The two sets differ in their dynamic allocation of local sets and provide different trade-offs between the required communication and the achieved set size. The synthesis of a security set and control law offers improved scalability by relying on approximations based on convex optimization problems [127]. For nonlinear and potentially larger-scale systems with security certification, a security framework is proposed to enhance learning-based, potentially insecure control strategies. Furthermore, a probabilistic model predictive security certification that can be combined with any reinforcement learning algorithm is proposed, relying on a Bayesian scheme. It provides security assurance in terms of state and input constraints [128].

The control of complex systems faces a trade-off between high performance and security assurance, which in particular limits the application of learning-based methods in security-critical systems. Ensuring the security constraints under physical limitations while improving the performance of mobile platforms during the learning process is important for the security control system. The LB-MPC can be used in the common situation where the model used by the model predictive security filter is uncertain and needs to be learned from data. The general security framework based on Hamilton–Jacobi reachability methods uses approximate knowledge of the system dynamics to satisfy the constraints and minimize the disturbances during the learning process [129]. A Bayesian mechanism is further introduced to refine the security analysis as new data are obtained from the system. Reachability analysis has also been combined with ML [130]: the reachability analysis maintains the security, and the ML improves the system performance. When both control inputs and disturbances are bounded, a secure mandatory control action is required only when the system approaches the boundary of the insecure set. At other times, the system can freely use any other controller. Statistical identification tools are used to identify better prediction models that can deal with state and input constraints and optimize the system performance on the basis of the cost function [131]. The statistical model of the system is established by using the regularity assumption of a GP on the dynamics [132]. As more data are collected from the system, the statistical model is updated, which improves the control performance while ensuring the security of the learning process.
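
The switching rule described above, in which the secure action is enforced only near the boundary of the insecure set, can be written compactly. The symbols are assumptions made for illustration: $V(x)$ denotes a security value function obtained from the reachability analysis (positive inside the secure set), $\varepsilon > 0$ a small margin, $u_{\mathrm{learn}}$ the learning-based controller, and $u_{\mathrm{safe}}$ the secure controller.

```latex
u(x) =
\begin{cases}
u_{\mathrm{learn}}(x), & V(x) > \varepsilon, \\
u_{\mathrm{safe}}(x),  & V(x) \le \varepsilon .
\end{cases}
```

The learning-based controller is therefore unrestricted in the interior of the secure set, and the margin $\varepsilon$ determines how early the secure controller takes over.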

The security framework handles the security constraints of learning-based control under physical limitations and optimizes the controller by using the model predictive security filters. The combination of model predictive security filters and different types of learning-based control methods not only meets the high-performance requirements of complex systems but also ensures the security of the control process.

4. Future Research Challenges

Regarding the interpretability of the prediction model, a prediction model built using ML methods is a black-box model and is applicable only to specific small-scale environments. Once the learning fails, it is difficult to ensure the system security on the basis of ML alone. Most traditional physical models are simplified, linearized, and theoretical, yet the complex modeling process is time-consuming and the resulting model has calculation errors. The model built by using LB-MPC is a gray-box model. By combining the advantages and characteristics of ML and MPC, the LB-MPC method achieves interpretable optimal performance. However, it adapts poorly to paths that were not covered by learning tests.

Regarding the accuracy of the prediction model, the traditional prediction model simplified by expressions is not comprehensive because it does not consider the influence of uncertain disturbances. The data-driven prediction model is constructed on the basis of the data generated by the actual operation of mobile platforms. It has good adaptability and higher accuracy under special working conditions. However, the data-driven prediction model requires a large amount of labeled data, and the reinforcement learning model needs to interact with the environment. To deal with unexpected states, both prediction models require a large amount of training time. Reducing the volume of data required by the data-driven prediction model and increasing its adaptive capacity are future challenges.

Regarding controller design, only small random uncertainties can be handled in the path tracking controller design of mobile platforms, and not all behavioral responses under uncertain disturbances can be considered. It is necessary to continuously learn from the uncertain disturbances during operation in order to improve the performance of the controller.

For the real-time capability of LB-MPC, the data may not capture the mobile platform operation characteristics when it is controlled by MPC. The mobile platform model also requires continuous updating to capture the changes of some physical characteristics over time due to changes in external and internal factors, such as weather, complex curvature variation conditions, mechanical friction, and fatigue failure. However, the automatic model update mechanism is an important challenge for the LB-MPC systems proposed in previous studies. The way to solve the challenge in the future is to develop a self-adaptive ML-based prediction model that exploits online mobile platform operation data to update the prediction model continuously as the mobile platforms are controlled by MPC in real time.
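
One classical building block for such continuous model updating is recursive least squares (RLS) with a forgetting factor, shown here as a minimal sketch. RLS is a swapped-in illustrative technique rather than a method proposed in the reviewed works, and the scalar linear model, the class name `RecursiveLeastSquares`, and all parameter values are assumptions.

```python
import numpy as np

class RecursiveLeastSquares:
    """RLS with exponential forgetting for a model y = phi @ theta."""

    def __init__(self, n_params, forgetting=0.98, p0=1e3):
        self.theta = np.zeros(n_params)   # current parameter estimate
        self.P = p0 * np.eye(n_params)    # covariance-like matrix
        self.lam = forgetting             # < 1 discounts old data

    def update(self, phi, y):
        phi = np.asarray(phi, dtype=float)
        P_phi = self.P @ phi
        gain = P_phi / (self.lam + phi @ P_phi)
        self.theta = self.theta + gain * (y - phi @ self.theta)
        self.P = (self.P - np.outer(gain, P_phi)) / self.lam
        return self.theta

# Toy plant x_next = a*x + b*u with parameters unknown to the estimator.
rng = np.random.default_rng(1)
a_true, b_true = 0.9, 0.4
rls = RecursiveLeastSquares(n_params=2)
x = 0.0
for _ in range(200):
    u = rng.uniform(-1.0, 1.0)            # exciting input
    x_next = a_true * x + b_true * u
    rls.update([x, u], x_next)            # one update per new measurement
    x = x_next
```

After the loop, `rls.theta` is close to the true pair `(a_true, b_true)`. The forgetting factor lets the estimate follow slow parameter drift, at the cost of higher sensitivity to measurement noise.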

Regarding the optimization of the controller, the input and output constraints limit the path tracking performance of mobile platforms. The current security control framework combines model predictive security filters with different types of learning-based control methods. It addresses the trade-off between high performance and security assurance faced in the control of complex systems. To further improve the performance of path tracking controllers under uncertain disturbances, seeking more reasonable optimization methods and better ways of handling input and output constraints has great research potential.

5. Conclusions

The present work reviews the LB-MPC technique and its application to path tracking control in mobile platforms. The LB-MPC and its two components, namely, MPC and ML, are summarized. According to the relevant literature and research results, the applications of LB-MPC in path tracking are classified, and the characteristics and advantages of each application are explained. Under uncertain environmental disturbances, the data-driven prediction models obtained by LB-MPC can better adapt to complex situations. In controller design, the parameterized version of the LB-MPC problem and offline training samples are introduced to ensure control precision and real-time response. Moreover, combined with the security control framework, the controller output can be optimized in path tracking control. This work also highlights the current research challenges of prediction model interpretability and accuracy, controller design, and output optimization in LB-MPC. It provides a reference for the research and application of LB-MPC in path tracking control in mobile platforms.

Author Contributions

Conceptualization, K.Z., J.W. and X.X.; data curation, X.L.; funding acquisition, J.W.; methodology, K.Z., J.W. and J.H.; software, C.S. and W.K.; supervision, X.L.; project administration, X.X. and X.L.; writing (original draft preparation), K.Z., J.W. and X.X.; writing (review and editing), K.Z., J.W. and X.L. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by the National Natural Science Foundation of China, grant number 51875239.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

The data used to support the findings of this study are available from the corresponding author upon request.

Acknowledgments

The authors would like to thank all anonymous reviewers and editors for their helpful suggestions for the improvement of this paper.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Amer, N.H.; Zamzuri, H.; Hudha, K.; Kadir, Z.A. Modelling and Control Strategies in Path Tracking Control for Autonomous Ground Vehicles: A Review of State of the Art and Challenges. J. Intell. Robot. Syst. 2016, 86, 225–254.

- Faulwasser, T.; Matschek, J.; Zometa, P.; Findeisen, R. Predictive path-following control: Concept and implementation for an industrial robot. In Proceedings of the International Conference on Control Applications (CCA), Hyderabad, India, 28–30 August 2013; pp. 128–133.

- Kayacan, E.; Chowdhary, G. Tracking Error Learning Control for Precise Mobile Robot Path Tracking in Outdoor Environment. J. Intell. Robot. Syst. 2019, 59, 975–986.

- De Paor, A. The root locus method: Famous curves, control designs and non-control applications. Int. J. Electr. Eng. Educ. 2000, 37, 344–356.

- Li, Q.; Li, R.; Ji, K.; Dai, W. Kalman Filter and Its Application. In Proceedings of the 8th International Conference on Intelligent Networks and Intelligent Systems (ICINIS), Tianjin, China, 1–3 November 2015; pp. 74–77.

- Tao, G. Multivariable adaptive control: A survey. Automatica 2014, 50, 2737–2764.

- Sariyildiz, E.; Oboe, R.; Ohnishi, K. Disturbance Observer-Based Robust Control and Its Applications: 35th Anniversary Overview. IEEE Trans. Ind. Electron. 2020, 67, 2042–2053.

- Williams, G.; Drews, P.; Goldfain, B.; Rehg, J.; Theodorou, E.A. Information-Theoretic Model Predictive Control: Theory and Applications to Autonomous Driving. IEEE Trans. Robot. 2018, 34, 1603–1622.

- Li, P. Research on radar signal recognition based on automatic machine learning. Neural Comput. Appl. 2019, 32, 1959–1969.

- Alshaer, B.; Darabseh, T.; Momani, A. Modelling and control of an autonomous articulated mining vehicle navigating a predefined path. Int. J. Heavy Veh. Syst. 2014, 21, 152.

- Mat-Noh, M.; Mohd-Mokhtar, R.; Arshad, M.R.; Zain, Z.M.; Khan, Q. Review of sliding mode control application in autonomous underwater vehicles. Indian J. Geo-Mar. Sci. 2019, 48, 973–984.

- Zhang, Y.; Li, S.; Liao, L. Near-optimal control of nonlinear dynamical systems: A brief survey. Annu. Rev. Control 2019, 47, 71–80.

- Khan, S.G.; Herrmann, G.; Lewis, F.L.; Pipe, T.; Melhuish, C. Reinforcement learning and optimal adaptive control: An overview and implementation examples. Annu. Rev. Control 2012, 36, 42–59.

- Jiang, Y.; Yang, C.; Ma, H. A Review of Fuzzy Logic and Neural Network Based Intelligent Control Design for Discrete-Time Systems. Discret. Dyn. Nat. Soc. 2016, 2016, 1–11.

- Garriga, J.L.; Soroush, M. Model Predictive Control Tuning Methods: A Review. Ind. Eng. Chem. Res. 2010, 49, 3505–3515.

- Saltık, M.B.; Özkan, L.; Ludlage, J.H.; Weiland, S.; Hof, P.M.V.D. An outlook on robust model predictive control algorithms: Reflections on performance and computational aspects. J. Process Control 2018, 61, 77–102.

- Mesbah, A. Stochastic Model Predictive Control: An Overview and Perspectives for Future Research. IEEE Control Syst. Mag. 2016, 36, 30–44.

- Yuan, S.; Zhao, P.; Zhang, Q.; Hu, X. Research on Model Predictive Control-based Trajectory Tracking for Unmanned Vehicles. In Proceedings of the 4th International Conference on Control and Robotics Engineering (ICCRE), SE University, Nanjing, China, 20–23 April 2019; pp. 79–86.

- Jamshidi, M. Tools for intelligent control: Fuzzy controllers, neural networks and genetic algorithms. Philos. Trans. R. Soc. a-Math. Phys. Eng. Sci. 2003, 361, 1781–1808.

- Aswani, A.; Bouffard, P.; Tomlin, C. Extensions of learning-based model predictive control for real-time application to a quadrotor helicopter. In Proceedings of the 2012 American Control Conference (ACC), Montreal, Canada, 27–29 June 2012; pp. 4661–4666.

- Bouffard, P.; Aswani, A.; Tomlin, C. Learning-based model predictive control on a quadrotor: Onboard implementation and experimental results. In Proceedings of the 2012 IEEE International Conference on Robotics and Automation, St. Paul, MN, USA, 14–19 May 2012; pp. 279–284.

- Wu, Z.; Christofides, P.D. Economic Machine-Learning-Based Predictive Control of Nonlinear Systems. Mathematics 2019, 7, 494.

- Manzano, J.M.; Limon, D.; de la Peña, D.M.; Calliess, J.-P. Robust learning-based MPC for nonlinear constrained systems. Automatica 2020, 117, 108948.

- Hertneck, M.; Kohler, J.; Trimpe, S.; Allgower, F. Learning an Approximate Model Predictive Controller with Guarantees. IEEE Control Syst. Lett. 2018, 2, 543–548.

- Xie, J.; Zhao, X.; Dong, H. Learning-based nonlinear model predictive control with accurate uncertainty compensation. Nonlinear Dyn. 2021, 104, 3827–3843.

- Wabersich, K.P.; Zeilinger, M.N. Nonlinear learning-based model predictive control supporting state and input dependent model uncertainty estimates. Int. J. Robust Nonlinear Control 2021, 31, 8897–8915.

- Chen, D.; Hu, F.; Nian, G.; Yang, T. Deep Residual Learning for Nonlinear Regression. Entropy 2020, 22, 193.

- Lee, J.; Ryu, S.; Kim, T.; Kim, W.; Kim, H.J. Learning-based path tracking control of a flapping-wing micro air vehicle. In Proceedings of the 2018 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Madrid, Spain, 1–5 October 2018; pp. 7096–7102.

- Adhau, S.; Patil, S.; Ingole, D.; Sonawane, D. Embedded Implementation of Deep Learning-based Linear Model Predictive Control. In Proceedings of the 2019 Sixth Indian Control Conference (ICC), Hyderabad, India, 18–20 December 2019; pp. 200–205.

- Ning, C.; You, F. Optimization under uncertainty in the era of big data and deep learning: When machine learning meets mathematical programming. Comput. Chem. Eng. 2019, 125, 434–448.

- Hewing, L.; Wabersich, K.P.; Menner, M.; Zeilinger, M.N. Learning-Based Model Predictive Control: Toward Safe Learning in Control. Annu. Rev. Control. Robot. Auton. Syst. 2020, 3, 269–296.

- Ostafew, C.J.; Schoellig, A.P.; Barfoot, T.D.; Collier, J. Learning-based Nonlinear Model Predictive Control to Improve Vision-based Mobile Robot Path Tracking. J. Field Robot. 2016, 33, 133–152.

- Schwenzer, M.; Ay, M.; Bergs, T.; Abel, D. Review on model predictive control: An engineering perspective. Int. J. Adv. Manuf. Technol. 2021, 117, 1327–1349.

- Biegler, L.T. A perspective on nonlinear model predictive control. Korean J. Chem. Eng. 2021, 38, 1317–1332.

- Yakub, F.; Mori, Y. Comparative study of autonomous path-following vehicle control via model predictive control and linear quadratic control. Proc. Inst. Mech. Eng. Part D J. Automob. Eng. 2015, 229, 1695–1714.

- Zhiwei, G.; Jianfeng, D.; Feng, D. Simulation research on trajectory tracking controller based on MPC algorithm. In Proceedings of the 2017 2nd International Conference on Robotics and Automation Engineering (ICRAE), Shanghai, China, 29–31 December 2017; pp. 212–216.

- Hu, Z.; Zhu, D.; Cui, C.; Sun, B. Trajectory Tracking and Re-planning with Model Predictive Control of Autonomous Underwater Vehicles. J. Navig. 2018, 72, 321–341.

- Patwardhan, R.S.; Shah, S.L. Issues in performance diagnostics of model-based controllers. J. Process Control 2002, 12, 413–427.

- MacGregor, J.; Cinar, A. Monitoring, fault diagnosis, fault-tolerant control and optimization: Data driven methods. Comput. Chem. Eng. 2012, 47, 111–120.

- Bai, G.; Meng, Y.; Liu, L.; Luo, W.; Gu, Q.; Liu, L. Review and Comparison of Path Tracking Based on Model Predictive Control. Electronics 2019, 8, 1077.

- Nascimento, T.P.; Dórea, C.E.T.; Gonçalves, L.M.G. Nonlinear model predictive control for trajectory tracking of nonho-lonomic mobile robots. Int. J. Adv. Robot. Syst. 2018, 15, 1–14. [Google Scholar] [CrossRef]

- Kim, D.-H.; Kim, T.J.Y.; Wang, X.; Kim, M.; Quan, Y.-J.; Oh, J.W.; Min, S.-H.; Kim, H.; Bhandari, B.; Yang, I.; et al. Smart Machining Process Using Machine Learning: A Review and Perspective on Machining Industry. Int. J. Precis. Eng. Manuf. Technol. 2018, 5, 555–568.

- Choi, H.; Park, S. A Survey of Machine Learning-Based System Performance Optimization Techniques. Appl. Sci. 2021, 11, 3235.

- Gambella, C.; Ghaddar, B.; Naoum-Sawaya, J. Optimization problems for machine learning: A survey. Eur. J. Oper. Res. 2021, 290, 807–828.

- Radac, M.-B.; Precup, R.-E.; Petriu, E.M.; Preitl, S.; Dragos, C.-A. Data-Driven Reference Trajectory Tracking Algorithm and Experimental Validation. IEEE Trans. Ind. Inform. 2013, 9, 2327–2336.

- Xie, S.; Ren, J. Iterative Learning-based Model Predictive Control for Precise Trajectory Tracking of Piezo Nanopositioning Stage. In Proceedings of the 2018 Annual American Control Conference (ACC), Milwaukee, WI, USA, 27–29 June 2018.

- Lv, Y.; Chi, R. Data-driven Adaptive Iterative Learning Predictive Control. In Proceedings of the 6th IEEE Data Driven Control and Learning Systems Conference (DDCLS), Chongqing, China, 26–27 May 2017; pp. 374–377.

- Hewing, L.; Kabzan, J.; Zeilinger, M.N. Cautious Model Predictive Control Using Gaussian Process Regression. IEEE Trans. Control Syst. Technol. 2020, 28, 2736–2743.

- Pan, Y.; Boutselis, G.I.; Theodorou, E.A. Efficient Reinforcement Learning via Probabilistic Trajectory Optimization. IEEE Trans. Neural Netw. Learn. Syst. 2018, 29, 5459–5474.

- Qiao, S.J.; Jin, K.; Han, N.; Tang, C.J.; Gesangduoji, G.L. Trajectory prediction algorithm based on Gaussian mixture model. Ruan Jian Xue Bao/J. Softw. 2015, 26, 1048–1063. (In Chinese)

- Huang, J.; Cheng, X.; Shen, Y.; Kong, D.; Wang, J. Deep Learning-Based Prediction of Throttle Value and State for Wheel Loaders. Energies 2021, 14, 7202.

- Darby, M.L.; Nikolaou, M. MPC: Current practice and challenges. Control Eng. Pract. 2012, 20, 328–342.

- Rosolia, U.; Zhang, X.; Borrelli, F. Data-Driven Predictive Control for Autonomous Systems. Annu. Rev. Control. Robot. Auton. Syst. 2018, 1, 259–286.

- Berberich, J.; Köhler, J.; Müller, M.A.; Allgöwer, F. Data-Driven Model Predictive Control with Stability and Robustness Guarantees. IEEE Trans. Autom. Control 2021, 66, 1702–1717.

- Yang, L.; Lu, J.; Xu, Y.; Li, D.; Xi, Y. Constrained robust model predictive control embedded with a new data-driven technique. IET Control Theory Appl. 2020, 14, 2395–2405.

- Jianwang, H.; Ramirez-Mendoza, R.A.; Xiaojun, T. Robust analysis for data-driven model predictive control. Syst. Sci. Control Eng. 2021, 9, 393–404.

- Yang, L.; Li, D.; Lu, J.; Xi, Y.; Li, B. Robust MPC for Constrained Uncertain Systems with Data-Driven Improvement. In Proceedings of the 37th Chinese Control Conference (CCC), Wuhan, China, 25–27 July 2018.

- Wang, J.; Liu, X. A new kind of nonlinear model predictive iterative learning control. In Proceedings of the 24th Chinese Control and Decision Conference (CCDC), Taiyuan, China, 23–25 May 2012; pp. 1422–1426.

- Alhajeri, M.S.; Wu, Z.; Rincon, D.; Albalawi, F.; Christofides, P.D. Machine-learning-based state estimation and predictive control of nonlinear processes. Chem. Eng. Res. Des. 2021, 167, 268–280.

- Bonzanini, A.; Paulson, J.; Makrygiorgos, G.; Mesbah, A. Fast approximate learning-based multistage nonlinear model predictive control using Gaussian processes and deep neural networks. Comput. Chem. Eng. 2021, 145, 107174.

- Jiang, Z.-P.; Bian, T.; Gao, W. Learning-Based Control: A Tutorial and Some Recent Results. Found. Trends Syst. Control 2020, 8, 176–284.

- Janssen, N.H.J.; Kools, L.; Antunes, D.J. Embedded Learning-based Model Predictive Control for Mobile Robots using Gaussian Process Regression. In Proceedings of the 2020 American Control Conference (ACC), Denver, CO, USA, 1–3 July 2020.

- Gángó, D.; Péni, T.; Tóth, R. Learning Based Approximate Model Predictive Control for Nonlinear Systems. IFAC-PapersOnLine 2019, 52, 152–157.

- Maiworm, M.; Limon, D.; Findeisen, R. Online learning-based model predictive control with Gaussian process models and stability guarantees. Int. J. Robust Nonlinear Control 2021, 31, 8785–8812.

- Maiworm, M.; Limon, D.; Manzano, J.M.; Findeisen, R. Stability of Gaussian Process Learning Based Output Feedback Model Predictive Control. IFAC-PapersOnLine 2018, 51, 455–461.

- Karg, B.; Lucia, S. Approximate moving horizon estimation and robust nonlinear model predictive control via deep learning. Comput. Chem. Eng. 2021, 148, 107266.

- Karg, B.; Lucia, S. Deep learning-based embedded mixed-integer model predictive control. In Proceedings of the 2018 European Control Conference (ECC), Limassol, Cyprus, 12–15 June 2018; pp. 2075–2080.

- Perez, E.A.M.; Iba, H. Deep Learning-Based Inverse Modeling for Predictive Control. IEEE Control Syst. Lett. 2021, 6, 956–961.

- Karg, B.; Alamo, T.; Lucia, S. Probabilistic performance validation of deep learning-based robust NMPC controllers. Int. J. Robust Nonlinear Control 2021, 31, 8855–8876.

- Recht, B. A Tour of Reinforcement Learning: The View from Continuous Control. Annu. Rev. Control. Robot. Auton. Syst. 2019, 2, 253–279.

- Dadhich, S.; Bodin, U.; Andersson, U. Key challenges in automation of earth-moving machines. Autom. Constr. 2016, 68, 212–222.

- Wu, Z.; Tran, A.; Rincon, D.; Christofides, P.D. Machine-learning-based predictive control of nonlinear processes. Part II: Computational implementation. AIChE J. 2019, 65, 16734.

- Moumouh, H.; Langlois, N.; Haddad, M. Robustness of Model Predictive Control Using a Novel Tuning Approach Based on Artificial Neural Network. In Proceedings of the 2020 28th Mediterranean Conference on Control and Automation (MED), Saint-Raphaël, France, 16–18 September 2020; pp. 127–132.

- Matschek, J.; Gonschorek, T.; Hanses, M.; Elkmann, N.; Ortmeier, F.; Findeisen, R. Learning References with Gaussian Processes in Model Predictive Control applied to Robot Assisted Surgery. In Proceedings of the 2020 European Control Conference (ECC), Saint Petersburg, Russia, 12–15 May 2020; pp. 362–367.

- Ostafew, C.J.; Schoellig, A.P.; Barfoot, T.D. Learning-based nonlinear model predictive control to improve vision-based mobile robot path-tracking in challenging outdoor environments. In Proceedings of the IEEE International Conference on Robotics and Automation (ICRA), Hong Kong, China, 31 May–7 June 2014; pp. 4029–4036.

- Langson, W.; Chryssochoos, I.; Raković, S.V.; Mayne, D.Q. Robust model predictive control using tubes. Automatica 2004, 40, 125–133.

- Mayne, D.Q.; Kerrigan, E.C.; van Wyk, E.J.; Falugi, P. Tube-based robust nonlinear model predictive control. Int. J. Robust Nonlinear Control 2011, 21, 1341–1353.

- Mesbah, A. Stochastic model predictive control with active uncertainty learning: A Survey on dual control. Annu. Rev. Control 2018, 45, 107–117.

- Sun, Z.; Xia, Y.; Dai, L.; Liu, K.; Ma, D. Disturbance Rejection MPC for Tracking of Wheeled Mobile Robot. IEEE/ASME Trans. Mechatron. 2017, 22, 2576–2587.

- Rasmussen, C.E. Gaussian Processes in machine learning. Lect. Notes Comput. Sci. 2004, 3176, 63–71.

- Soloperto, R.; Müller, M.A.; Trimpe, S.; Allgöwer, F. Learning-Based Robust Model Predictive Control with State-Dependent Uncertainty. In Proceedings of the 6th International Federation of Automatic Control (IFAC) Conference on Nonlinear Model Predictive Control (NMPC), Madison, WI, USA, 19–22 August 2018; pp. 442–447.

- Doerr, A.; Daniel, C.; Nguyen-Tuong, D.; Marco, A.; Schaal, S.; Toussaint, M.; Trimpe, S. Optimizing long-term predictions for model-based policy search. In Proceedings of the Conference on Robot Learning, PMLR, Mountain View, CA, USA, 13–15 November 2017; Volume 78, pp. 227–238.

- Kamthe, S.; Deisenroth, M.P. Data-Efficient Reinforcement Learning with Probabilistic Model Predictive Control. In Proceedings of the 21st International Conference on Artificial Intelligence and Statistics (AISTATS), Lanzarote, Spain, 9–11 April 2018.

- Kabzan, J.; Hewing, L.; Liniger, A.; Zeilinger, M.N. Learning-Based Model Predictive Control for Autonomous Racing. IEEE Robot. Autom. Lett. 2019, 4, 3363–3370.

- Shan, Y.; Zheng, B.; Chen, L.; Chen, L.; Chen, D. A Reinforcement Learning-Based Adaptive Path Tracking Approach for Autonomous Driving. IEEE Trans. Veh. Technol. 2020, 69, 10581–10595.

- Kim, T.; Kim, H.J. Path tracking control and identification of tire parameters using on-line model-based reinforcement learning. In Proceedings of the 2016 16th International Conference on Control, Automation and Systems (ICCAS), Gyeongju, Korea, 13–16 October 2016; pp. 215–219.

- Carron, A.; Arcari, E.; Wermelinger, M.; Hewing, L.; Hutter, M.; Zeilinger, M.N. Data-Driven Model Predictive Control for Trajectory Tracking with a Robotic Arm. IEEE Robot. Autom. Lett. 2019, 4, 3758–3765.

- Hafez, A.T.; Givigi, S.N.; Ghamry, K.A.; Yousefi, S. Multiple cooperative UAVs target tracking using Learning Based Model Predictive Control. In Proceedings of the 2015 International Conference on Unmanned Aircraft Systems (ICUAS), Denver, CO, USA, 9–12 June 2015; pp. 1017–1024.

- Hafez, A.T.; Givigi, S.N.; Yousefi, S.; Noureldin, A. Cooperative Unmanned Aerial Vehicles formation via decentralized LBMPC. In Proceedings of the 2015 23rd Mediterranean Conference on Control and Automation (MED), Torremolinos, Spain, 16–19 June 2015; pp. 377–383.

- Hafez, A.T.; Givigi, S.N.; Yousefi, S. Unmanned Aerial Vehicles Formation Using Learning Based Model Predictive Control. Asian J. Control 2018, 20, 1014–1026.

- Ostafew, C.J.; Schoellig, A.P.; Barfoot, T.D. Conservative to confident: Treating uncertainty robustly within Learning-Based Control. In Proceedings of the 2015 IEEE International Conference on Robotics and Automation (ICRA), Seattle, WA, USA, 26–30 May 2015; pp. 421–427.

- Ostafew, C.J.; Schoellig, A.; Barfoot, T.D. Robust Constrained Learning-based NMPC enabling reliable mobile robot path tracking. Int. J. Robot. Res. 2016, 35, 1547–1563.

- Sonker, R.; Dutta, A. Adding Terrain Height to Improve Model Learning for Path Tracking on Uneven Terrain by a Four Wheel Robot. IEEE Robot. Autom. Lett. 2020, 6, 239–246.

- Lu, J.; Behbood, V.; Hao, P.; Zuo, H.; Xue, S.; Zhang, G. Transfer learning using computational intelligence: A survey. Knowl. Based Syst. 2015, 80, 14–23.

- Zhuang, F.; Qi, Z.; Duan, K.; Xi, D.; Zhu, Y.; Zhu, H.; Xiong, H.; He, Q. A Comprehensive Survey on Transfer Learning. Proc. IEEE 2020, 109, 43–76.

- Ye, Y.; Huang, P.; Sun, Y.; Shi, D. MBSNet: A deep learning model for multibody dynamics simulation and its application to a vehicle-track system. Mech. Syst. Signal Process. 2021, 157, 107716.

- Kamran, D.; Zhu, J.; Lauer, M. Learning Path Tracking for Real Car-like Mobile Robots from Simulation. In Proceedings of the European Conference on Mobile Robots (ECMR), Prague, Czech Republic, 4–6 September 2019; pp. 1–6.

- Sferrazza, C.; Muehlebach, M.; D’Andrea, R. Learning-based parametrized model predictive control for trajectory tracking. Optim. Control Appl. Methods 2020, 41, 2225–2249.

- Shahriari, B.; Swersky, K.; Wang, Z.; Adams, R.P.; De Freitas, N. Taking the Human Out of the Loop: A Review of Bayesian Optimization. Proc. IEEE 2016, 104, 148–175.

- Berkenkamp, F.; Schoellig, A.P.; Krause, A. Safe controller optimization for quadrotors with Gaussian processes. In Proceedings of the IEEE International Conference on Robotics and Automation (ICRA), Stockholm, Sweden, 16–21 May 2016; pp. 491–496.

- Marco, A.; Hennig, P.; Bohg, J.; Schaal, S.; Trimpe, S. Automatic LQR tuning based on Gaussian process global optimization. In Proceedings of the International Conference on Robotics and Automation (ICRA), Stockholm, Sweden, 16–21 May 2016; pp. 270–277.

- Bansal, S.; Calandra, R.; Xiao, T.; Levine, S.; Tomlin, C.J. Goal-Driven Dynamics Learning via Bayesian Optimization. In Proceedings of the 56th Annual IEEE Conference on Decision and Control (CDC), Melbourne, Australia, 12–15 December 2017.

- Rokonuzzaman, M.; Mohajer, N.; Nahavandi, S.; Mohamed, S. Learning-based Model Predictive Control for Path Tracking Control of Autonomous Vehicle. In Proceedings of the 2020 IEEE International Conference on Systems, Man, and Cybernetics (SMC), Toronto, ON, Canada, 11–14 October 2020; pp. 2913–2918.

- Kayacan, E.; Park, S.; Ratti, C.; Rus, D. Learning-based Nonlinear Model Predictive Control of Reconfigurable Autonomous Robotic Boats: Roboats. In Proceedings of the 2019 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Macau, China, 3–8 November 2019; pp. 8230–8237.

- Blanchini, F.; Pellegrino, F.A. Relatively Optimal Control: The Static Solution. IFAC Proc. Vol. 2005, 38, 676–681.

- Brunner, F.D.; Lazar, M.; Allgöwer, F. Stabilizing model predictive control: On the enlargement of the terminal set. Int. J. Robust Nonlinear Control 2015, 25, 2646–2670.

- Rosolia, U.; Zhang, X.; Borrelli, F. Robust learning model predictive control for iterative tasks: Learning from experience. In Proceedings of the 56th Annual IEEE Conference on Decision and Control (CDC), Melbourne, Australia, 12–15 December 2017; pp. 1157–1162.

- Rosolia, U.; Borrelli, F. Learning Model Predictive Control for Iterative Tasks. A Data-Driven Control Framework. IEEE Trans. Autom. Control 2018, 63, 1883–1896.

- Vallon, C.; Borrelli, F. Task Decomposition for Iterative Learning Model Predictive Control. In Proceedings of the American Control Conference (ACC), Denver, CO, USA, 1–3 July 2020.

- Yang, R.; Zheng, L.; Pan, J.; Cheng, H. Learning-Based Predictive Path Following Control for Nonlinear Systems Under Uncertain Disturbances. IEEE Robot. Autom. Lett. 2021, 6, 2854–2861.

- Lucia, S.; Karg, B. A deep learning-based approach to robust nonlinear model predictive control. In Proceedings of the 6th International Federation of Automatic Control (IFAC) Conference on Nonlinear Model Predictive Control (NMPC), Madison, WI, USA, 19–22 August 2018; pp. 511–516.

- Terzi, E.; Fagiano, L.; Farina, M.; Scattolini, R. Learning-based predictive control for linear systems: A unitary approach. Automatica 2019, 108, 108473.

- Gomez-Ortega, J.; Camacho, E.F. Neural network MBPC for mobile robot path tracking. Robot. Comput.-Integr. Manuf. 1994, 11, 271–278.

- Guoxing, B.; Li, L.; Yu, M.; Siyan, L.; Li, L.; Weidong, L. Real-time Path Tracking of Mobile Robot Based on Nonlinear Model Predictive Control. China Acad. J. Electron. Publ. House 2020, 51, 47–60.