Handwriting-Based Text Line Segmentation from Malayalam Documents

Abstract

:Featured Application

Abstract

1. Introduction

2. Related Works

3. Proposed Method

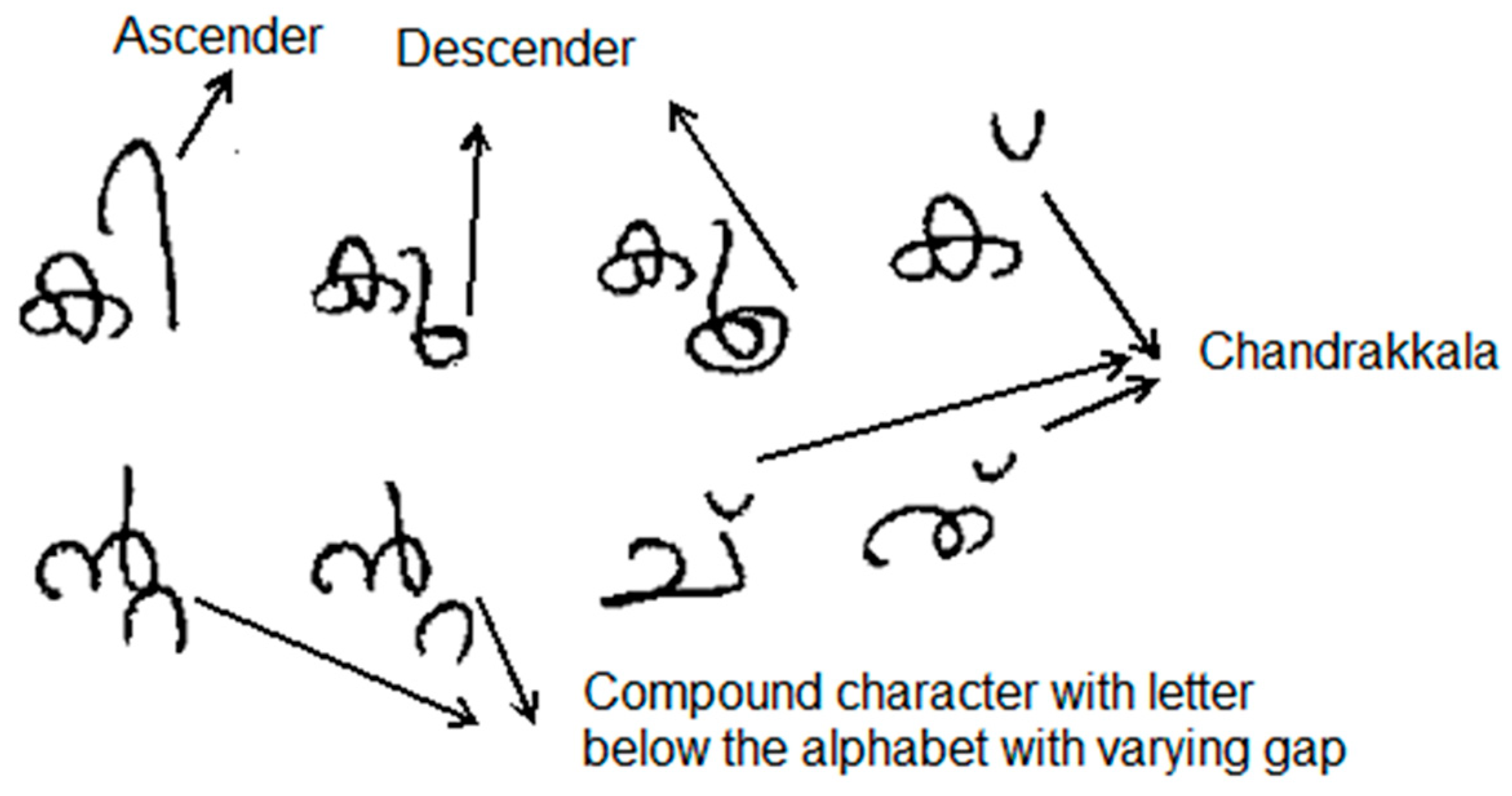

3.1. Preprocessing

3.2. Detection of Overlapping Lines

- Find the average value of the height of the characters () in the preprocessed handwritten image, . This is obtained by finding the average height of the connected components in .

- Identify the region containing the lines in each vertical stripe. Then, find the median value of the number of rows in each region, which indicates the line height, . The obtained value is the median value of the line heights, , in a vertical stripe.

- The threshold value, , for identifying the overlapping lines is calculated based on the values obtained from step 1 and step 2. Threshold is computed as follows.If ( ),Otherwise,

- Compare the height of each line with the threshold value, .

- If the height of the line segment is above or equal to , it is detected as an overlapped line.

- If a line is detected as an overlapped line segment, then the number of lines in the overlapped line is calculated as follows.If (),If ( and ),If ( and ),If ( and ),

3.3. Separation of Overlapping Lines

3.4. Detection of Incorrectly Segmented Short Lines

- Determine the threshold value using the equationwhere is the average character width in a document image.

- A second threshold value is determined as follows.If (),If (),If (),

- Compute the number of non-zero vertical projection values, VPN, in the given text line segmented from the handwritten document. This is determined to check the sparsity of character fragments present in the short line.

- A short line is detectedIf ( and )OrIf ().Depending on the handwriting, the values of , may vary from one document to another.

3.5. Joining of Incorrectly Segmented Line to the Correct Line

4. Results and Discussion

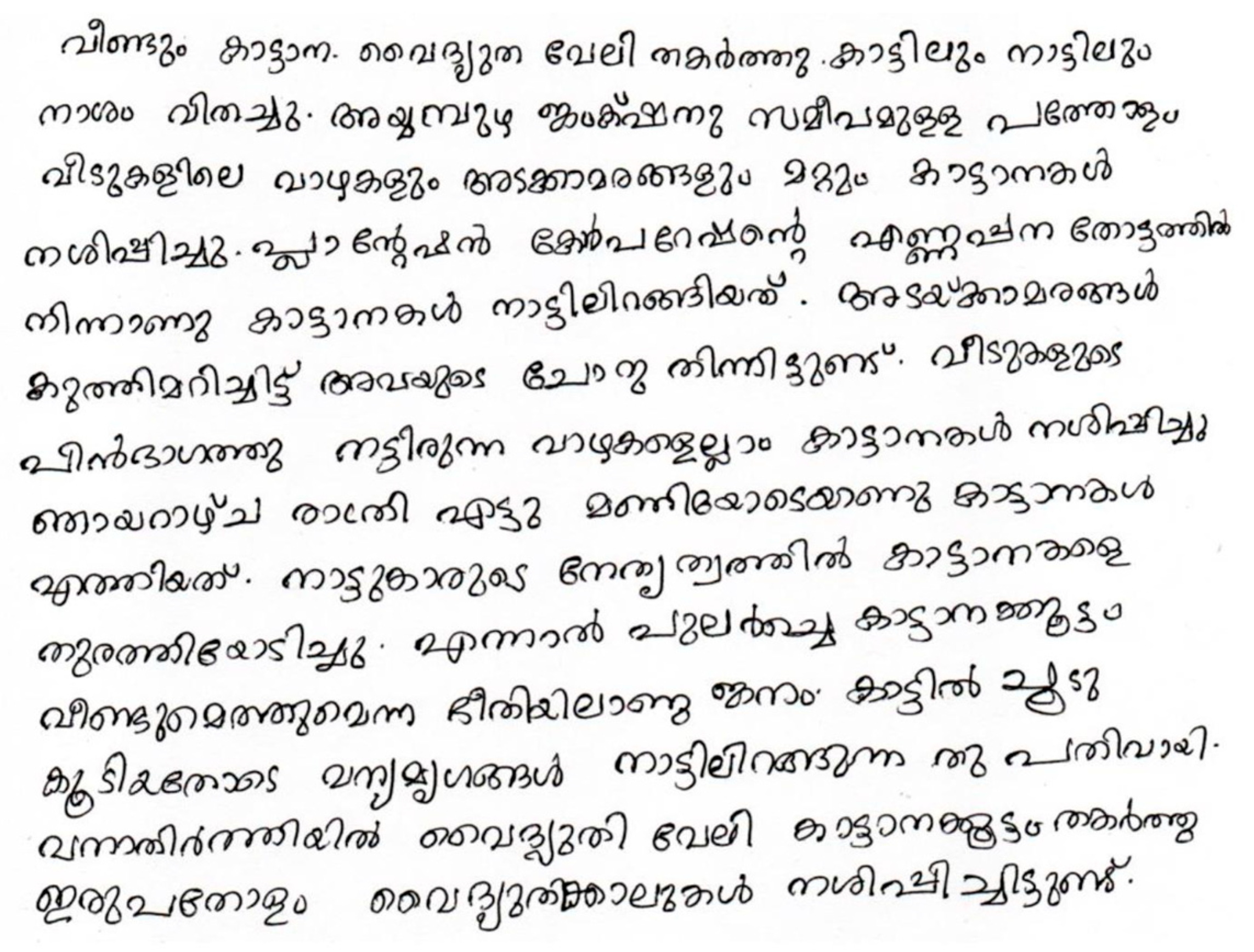

4.1. Database for Malayalam Handwritten Documents

4.2. Implementation Results

4.3. Analysis of Word Area and Text Line Density in Malayalam Handwritten Documents

4.4. Performance Evaluation

4.4.1. MatchScore

4.4.2. Detection Rate (DR), Recognition Accuracy (RA) and F-Measure (FM)

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

- Liritzis, I.; Iliopoulos, I.; Andronache, I.; Kokkaliari, M.; Xanthopoulou, V. Novel Archaeometrical and Historical Transdisciplinary Investigation of Early 19th Century Hellenic Manuscript Regarding Initiation to Secret “Philike Hetaireia”. Mediterr. Archaeol. Archaeom. 2023, 23, 135–164. [Google Scholar] [CrossRef]

- Andronache, I.; Liritzis, I.; Jelinek, H.F. Fractal Algorithms and RGB Image Processing in Scribal and Ink Identification on an 1819 Secret Initiation Manuscript to the “Philike Hetaereia”. Sci. Rep. 2023, 13, 1735. [Google Scholar] [CrossRef] [PubMed]

- Srihari, S.N.; Yang, X.; Ball, G.R. Offline Chinese Handwriting Recognition: An Assessment of Current Technology. Front. Comput. Sci. China 2007, 1, 137–155. [Google Scholar] [CrossRef]

- Memon, J.; Sami, M.; Khan, R.A.; Uddin, M. Handwritten Optical Character Recognition (OCR): A Comprehensive Systematic Literature Review (SLR). IEEE Access 2020, 8, 142642–142668. [Google Scholar] [CrossRef]

- Likforman-Sulem, L.; Zahour, A.; Taconet, B. Text Line Segmentation of Historical Documents: A Survey. Int. J. Doc. Anal. Recognit. 2007, 9, 123–138. [Google Scholar] [CrossRef]

- Khandelwal, A.; Choudhury, P.; Sarkar, R.; Basu, S.; Nasipuri, M.; Das, N. Text Line Segmentation for Unconstrained Handwritten Document Images Using Neighborhood Connected Component Analysis. In Pattern Recognition and Machine Intelligence; Chaudhury, S., Mitra, S., Murthy, C.A., Sastry, P.S., Pal, S.K., Eds.; Lecture Notes in Computer Science; Springer: Berlin/Heidelberg, Germany, 2009; Volume 5909, pp. 369–374. ISBN 978-3-642-11163-1. [Google Scholar]

- Louloudis, G.; Gatos, B.; Halatsis, C. Text Line Detection in Unconstrained Handwritten Documents Using a Block-Based Hough Transform Approach. In Proceedings of the Ninth International Conference on Document Analysis and Recognition (ICDAR 2007), Parana, Brazil, 23–26 September 2007; Volume 2, pp. 599–603. [Google Scholar]

- Lee, S.-W. Advances in Handwriting Recognition; Series in Machine Perception and Artificial Intelligence; World Scientific: Singapore, 1999; Volume 34, ISBN 978-981-02-3715-8. [Google Scholar]

- Souhar, A.; Boulid, Y.; Ameur, E.; Ouagague, M. Segmentation of Arabic Handwritten Documents into Text Lines Using Watershed Transform. Int. J. Interact. Multimed. Artif. Intell. 2017, 4, 96. [Google Scholar] [CrossRef]

- Barakat, B.; Droby, A.; Kassis, M.; El-Sana, J. Text Line Segmentation for Challenging Handwritten Document Images Using Fully Convolutional Network. arXiv 2021, arXiv:2101.08299. [Google Scholar] [CrossRef]

- Kundu, S.; Paul, S.; Kumar Bera, S.; Abraham, A.; Sarkar, R. Text-Line Extraction from Handwritten Document Images Using GAN. Expert Syst. Appl. 2020, 140, 112916. [Google Scholar] [CrossRef]

- Barakat, B.K.; Droby, A.; Alaasam, R.; Madi, B.; Rabaev, I.; Shammes, R.; El-Sana, J. Unsupervised Deep Learning for Text Line Segmentation. In Proceedings of the 2020 25th International Conference on Pattern Recognition (ICPR), Milan, Italy, 10–15 January 2021; pp. 2304–2311. [Google Scholar]

- Kurar Barakat, B.; Cohen, R.; Droby, A.; Rabaev, I.; El-Sana, J. Learning-Free Text Line Segmentation for Historical Handwritten Documents. Appl. Sci. 2020, 10, 8276. [Google Scholar] [CrossRef]

- Tripathy, N.; Pal, U. Handwriting Segmentation of Unconstrained Oriya Text. In Proceedings of the Ninth International Workshop on Frontiers in Handwriting Recognition, Tokyo, Japan, 26–29 October 2004; pp. 306–311. [Google Scholar]

- Pal, U.; Datta, S. Segmentation of Bangla Unconstrained Handwritten Text. In Proceedings of the Seventh International Conference on Document Analysis and Recognition, Edinburgh, UK, 3–6 August 2003; Volume 1, pp. 1128–1132. [Google Scholar]

- Mamatha, H.R.; Srikantamurthy, K. Morphological Operations and Projection Profiles Based Segmentation of Handwritten Kannada Document. Int. J. Appl. Inf. Syst. 2012, 4, 13–19. [Google Scholar] [CrossRef]

- Kannan, B.; Jomy, J.; Pramod, K.V. A System for Offline Recognition of Handwritten Characters in Malayalam Script. Int. J. Image Graph. Signal Process. 2013, 5, 53–59. [Google Scholar] [CrossRef]

- Rahiman, M.A.; Rajasree, M.S.; Masha, N.; Rema, M.; Meenakshi, R.; Kumar, G.M. Recognition of Handwritten Malayalam Characters Using Vertical & Horizontal Line Positional Analyzer Algorithm. In Proceedings of the 2011 3rd International Conference on Electronics Computer Technology, Kanyakumari, India, 8–10 April 2011; pp. 268–274. [Google Scholar]

- John, J.; Pramod, K.V.; Balakrishnan, K. Offline Handwritten Malayalam Character Recognition Based on Chain Code Histogram. In Proceedings of the 2011 International Conference on Emerging Trends in Electrical and Computer Technology, Nagercoil, India, 23–24 March 2011; pp. 736–741. [Google Scholar]

- Gayathri, P.; Ayyappan, S. Off-Line Handwritten Character Recognition Using Hidden Markov Model. In Proceedings of the 2014 International Conference on Advances in Computing, Communications and Informatics (ICACCI), New Delhi, India, 24–27 September 2014; pp. 518–523. [Google Scholar]

- Jino, P.J.; John, J.; Balakrishnan, K. Offline Handwritten Malayalam Character Recognition Using Stacked LSTM. In Proceedings of the 2017 International Conference on Intelligent Computing, Instrumentation and Control Technologies (ICICICT), Kannur, India, 6–7 July 2017; pp. 1587–1590. [Google Scholar]

- Raju, G. Recognition of Unconstrained Handwritten Malayalam Characters Using Zero-Crossing of Wavelet Coefficients. In Proceedings of the 2006 International Conference on Advanced Computing and Communications, Mangalore, India, 20–23 December 2006; pp. 217–221. [Google Scholar]

- John, R.; Raju, G.; Guru, D.S. 1D Wavelet Transform of Projection Profiles for Isolated Handwritten Malayalam Character Recognition. In Proceedings of the International Conference on Computational Intelligence and Multimedia Applications (ICCIMA 2007), Sivakasi, India, 13–15 December 2007; pp. 481–485. [Google Scholar]

- Manjusha, K.; Kumar, M.A.; Soman, K.P. On Developing Handwritten Character Image Database for Malayalam Language Script. Eng. Sci. Technol. Int. J. 2019, 22, 637–645. [Google Scholar] [CrossRef]

- Optical Character Recognition. Available online: https://ocr.smc.org.in/ (accessed on 12 July 2023).

- Malayalam Typing Utility. Available online: https://kuttipencil.in/ (accessed on 12 July 2023).

- OCR for Indian Languages. Available online: https://ocr.tdil-dc.gov.in/ (accessed on 16 September 2021).

- Shanjana, C.; James, A. Offline Recognition of Malayalam Handwritten Text. Procedia Technol. 2015, 19, 772–779. [Google Scholar] [CrossRef]

- Gonzales, R.C.; Wintz, P. Digital Image Processing; Wesley Longman Publishing Co., Inc.: Boston, MA, USA, 1987. [Google Scholar]

- Marana, A.N.; Da Fontoura Costa, L.; Lotufo, R.A.; Velastin, S.A. Estimating Crowd Density with Minkowski Fractal Dimension. In Proceedings of the 1999 IEEE International Conference on Acoustics, Speech, and Signal Processing, Phoenix, AZ, USA, 15–19 March 1999; Volume 6, pp. 3521–3524. [Google Scholar]

- Gatos, B.; Stamatopoulos, N.; Louloudis, G. ICDAR2009 Handwriting Segmentation Contest. Int. J. Doc. Anal. Recognit. 2011, 14, 25–33. [Google Scholar] [CrossRef]

- Papavassiliou, V.; Stafylakis, T.; Katsouros, V.; Carayannis, G. Handwritten Document Image Segmentation into Text Lines and Words. Pattern Recognit. 2010, 43, 369–377. [Google Scholar] [CrossRef]

- Surinta, O.; Holtkamp, M.; Karabaa, F.; Oosten, J.-P.V.; Schomaker, L.; Wiering, M. A Path Planning for Line Segmentation of Handwritten Documents. In Proceedings of the 2014 14th International Conference on Frontiers in Handwriting Recognition, Crete, Greece, 1–4 September 2014; pp. 175–180. [Google Scholar]

- Alaei, A.; Pal, U.; Nagabhushan, P. A New Scheme for Unconstrained Handwritten Text-Line Segmentation. Pattern Recognit. 2011, 44, 917–928. [Google Scholar] [CrossRef]

| No. of Writers | Resolution of the Image | No. of Malayalam Handwritten Document Images | Average Number of Lines per Page | Total No. of Text Lines in the Document Images | No. of Ground Truth Images Created for the Text Lines Extracted from the Documents |

|---|---|---|---|---|---|

| 200 | 2338 × 1654 | 402 | 18 | 7535 | 7535 |

| Type of Text Line | No. of Lines | No. of Correctly Segmented Lines | Accuracy (%) |

|---|---|---|---|

| Text lines | 7535 | 6443 | 85.507 |

| Overlapping lines | 629 | 441 | 70.11 |

| Short lines | 2607 | 2577 | 98.85 |

| No. of GT Lines | No. of Detected Lines | DR (%) | RA (%) | FM (%) |

|---|---|---|---|---|

| 7535 | 6482 | 85.5 | 99.39 | 91.92 |

| Sl. No. | Algorithm for Text Line Extraction | No. of Correctly Segmented Text Lines | Accuracy (%) |

|---|---|---|---|

| 1 | A* Path Planning | 3258 | 58.19 |

| 2 | Piecewise Painting | 1495 | 26.7 |

| 3 | Proposed Method | 4912 | 87.7 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content. |

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

P V, P.; Sankar, D. Handwriting-Based Text Line Segmentation from Malayalam Documents. Appl. Sci. 2023, 13, 9712. https://doi.org/10.3390/app13179712

P V P, Sankar D. Handwriting-Based Text Line Segmentation from Malayalam Documents. Applied Sciences. 2023; 13(17):9712. https://doi.org/10.3390/app13179712

Chicago/Turabian StyleP V, Pearlsy, and Deepa Sankar. 2023. "Handwriting-Based Text Line Segmentation from Malayalam Documents" Applied Sciences 13, no. 17: 9712. https://doi.org/10.3390/app13179712