A Scientific Document Retrieval and Reordering Method by Incorporating HFS and LSD

Abstract

1. Introduction

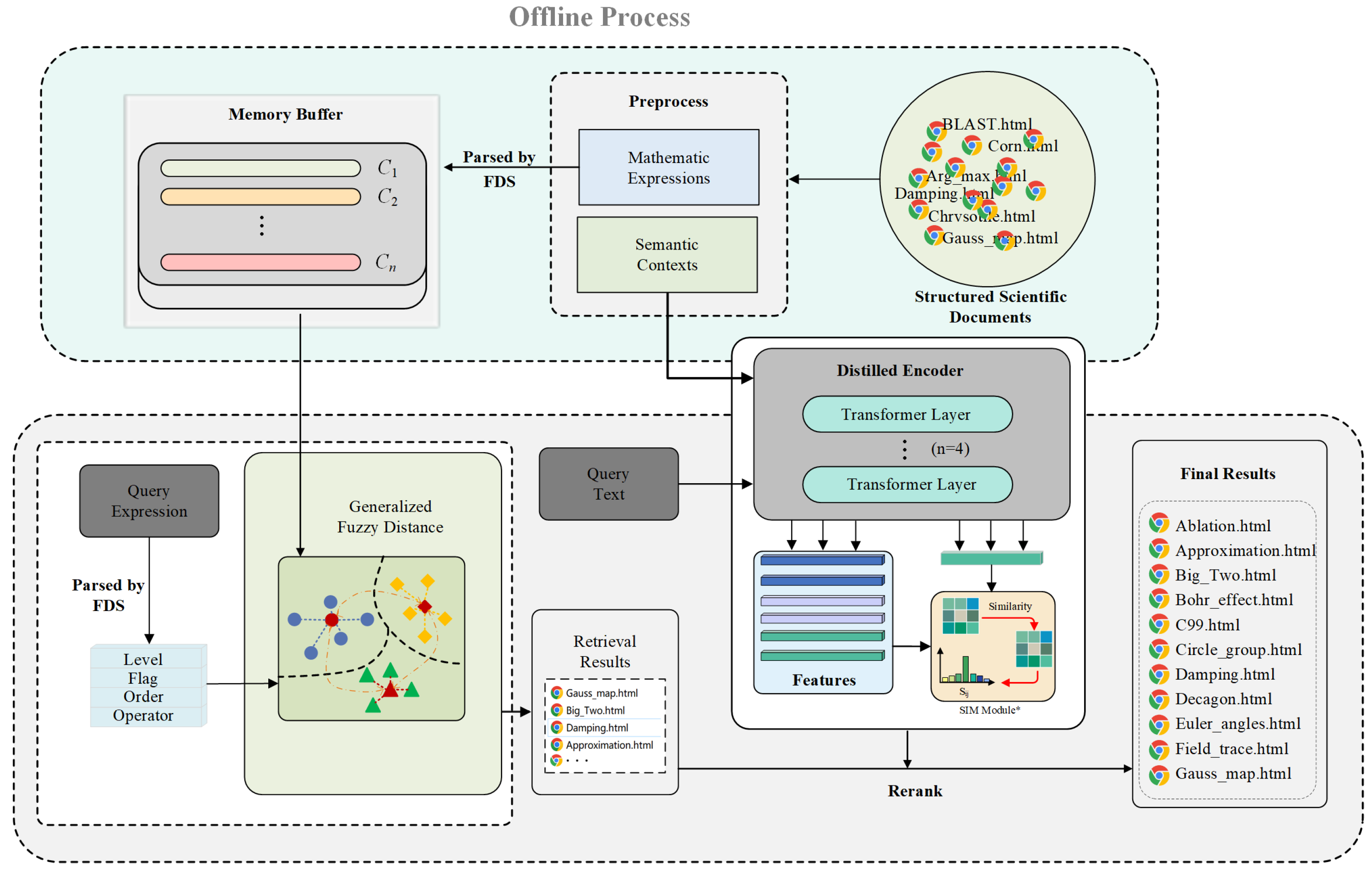

- An efficient data structure is designed that preserves the characteristics of each mathematical expression and optimizes how it is stored; this allows the hesitant fuzzy set, with its strength in multi-attribute decision support, to be used to compute the similarity of mathematical expressions.

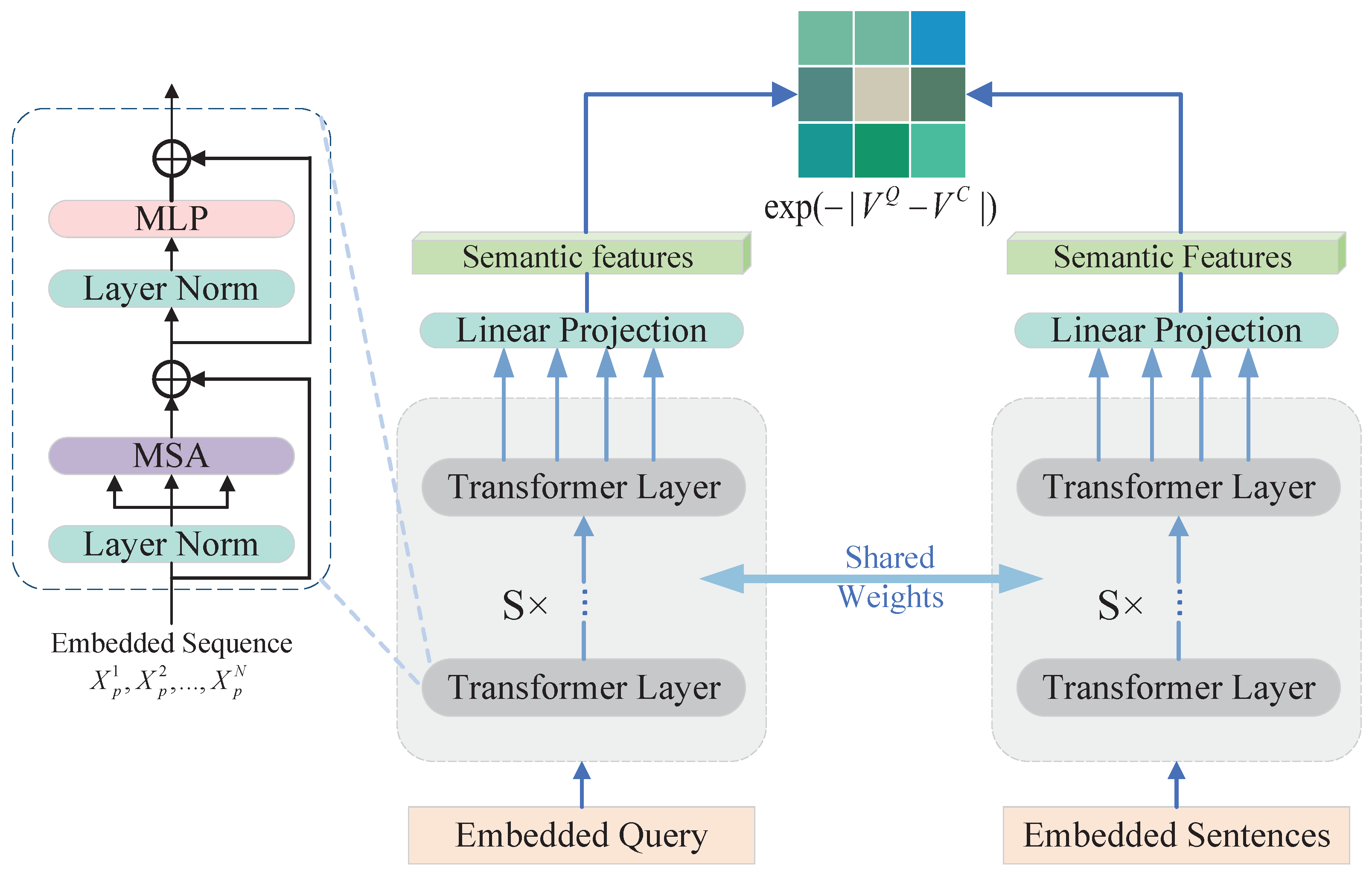

- Based on the teacher–student model structure, a knowledge distillation loss function is designed that combines the hidden units of the intermediate layers with the logits of the output layer, so that the student model obtains high-quality local semantic information.

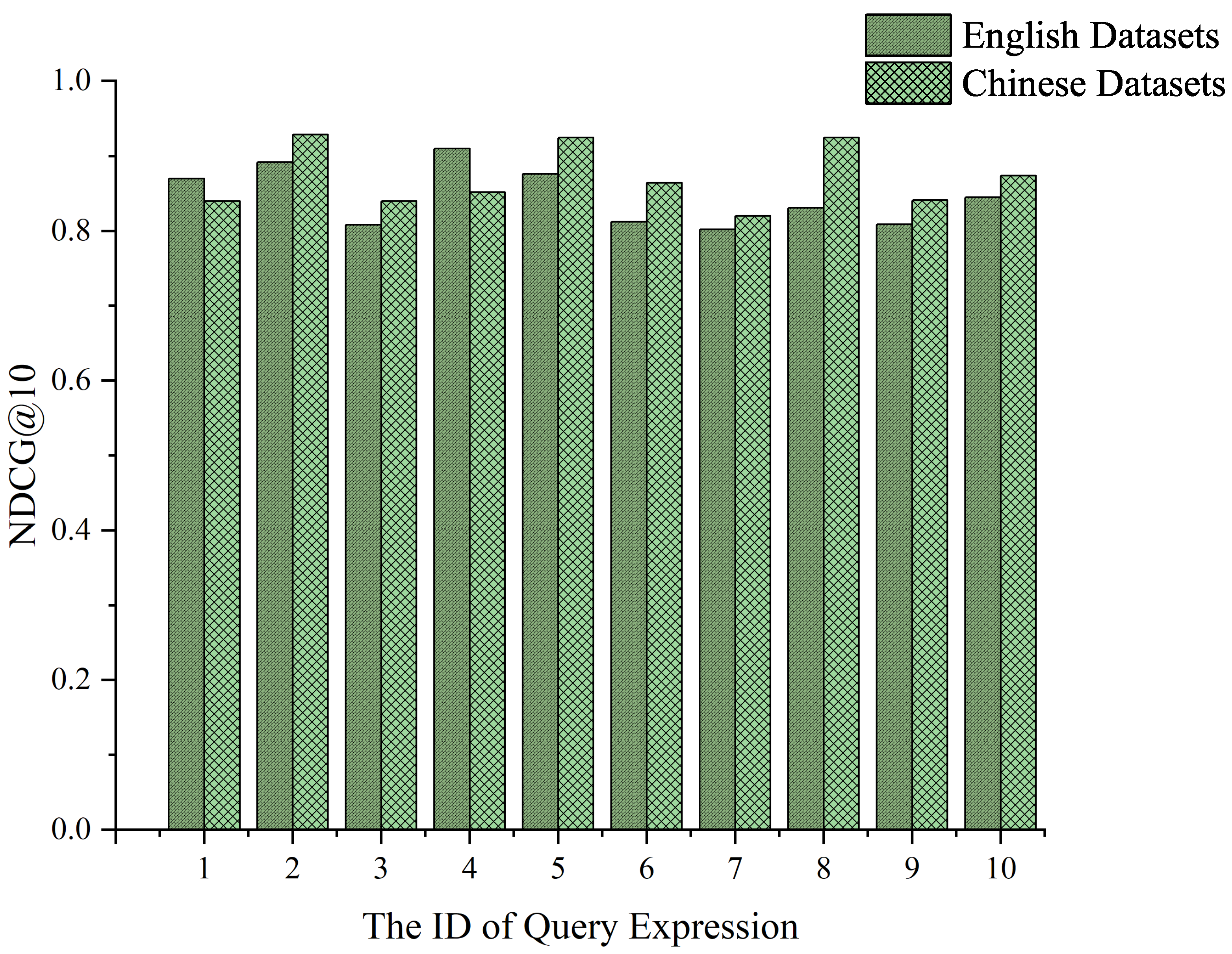

- Semantic information is used to rerank the results retrieved by mathematical expression matching, which improves both the efficiency and the accuracy of scientific document retrieval. In addition, the dataset is extended with Chinese scientific documents (CSDs).
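The reranking step in the third contribution can be illustrated with a simple score fusion. The paper's actual fusion rule is not stated in this section, so the sketch below assumes a linear mix of a formula-matching score (for example, an HFS-based match degree) and the cosine similarity between query and document embeddings (for example, produced by the distilled student model). The names `rerank` and `alpha` and the toy vectors are hypothetical.

```python
import math
from typing import Dict, List, Sequence, Tuple


def cosine(u: Sequence[float], v: Sequence[float]) -> float:
    """Cosine similarity of two dense vectors; returns 0.0 if either vector is all zeros."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    if norm_u == 0.0 or norm_v == 0.0:
        return 0.0
    return dot / (norm_u * norm_v)


def rerank(
    formula_scores: Dict[str, float],
    doc_embeddings: Dict[str, Sequence[float]],
    query_embedding: Sequence[float],
    alpha: float = 0.5,
) -> List[Tuple[str, float]]:
    """Reorder formula-retrieved documents by a weighted mix of formula and semantic scores.

    alpha is a hypothetical mixing weight: 1.0 keeps the formula ranking, 0.0 ranks by semantics only.
    """
    if not 0.0 <= alpha <= 1.0:
        raise ValueError("alpha must lie in [0, 1]")
    fused = []
    for doc_id, formula_score in formula_scores.items():
        semantic_score = cosine(query_embedding, doc_embeddings[doc_id])
        fused.append((doc_id, alpha * formula_score + (1.0 - alpha) * semantic_score))
    # Highest fused score first; ties are broken by document id so the output is deterministic.
    fused.sort(key=lambda item: (-item[1], item[0]))
    return fused


# Toy example: d1 has the best formula match, but d2 and d3 are semantically closer to the query.
scores = {"d1": 0.9, "d2": 0.8, "d3": 0.4}
embeddings = {"d1": [1.0, 0.0], "d2": [0.0, 1.0], "d3": [0.0, 1.0]}
print([doc for doc, _ in rerank(scores, embeddings, [0.0, 1.0])])  # → ['d2', 'd3', 'd1']
```

With `alpha=1.0` the formula-based order is kept unchanged, so the weight controls how strongly the semantic signal may override formula matching.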

2. Related Work

2.1. Scientific Document Retrieval

2.2. Hesitant Fuzzy Set

2.3. Knowledge Distillation

3. Method

3.1. Mathematical Expression Matching

3.1.1. HFS

3.1.2. Establish HFS of Expression

3.1.3. Expression Similarity Calculation

Algorithm 1. Match degree calculation of mathematical expression.

3.2. Content Semantic Matching Based on Distilled Model

3.2.1. Knowledge Distillation Framework

3.2.2. Intermediate-Layer Local Semantic Transfer

3.2.3. Output-Layer Knowledge Transfer
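The loss outlined in the introduction, which combines intermediate-layer hidden units with output-layer logits, can be sketched as follows. This is not the paper's exact formula, which this section does not give: it assumes a FitNets-style mean-squared error between student and teacher hidden vectors (already projected to the same dimension) and the temperature-scaled KL divergence of Hinton et al. on the logits, mixed by a hypothetical weight `beta`. The defaults `temperature=2.0` and `beta=0.5` are illustrative only, and a real implementation would operate on batched tensors.

```python
import math
from typing import List, Sequence


def softmax(logits: Sequence[float], temperature: float = 1.0) -> List[float]:
    """Temperature-scaled softmax."""
    scaled = [z / temperature for z in logits]
    m = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(z - m) for z in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def kl_divergence(p: Sequence[float], q: Sequence[float]) -> float:
    """KL(p || q); terms with p_i == 0 contribute nothing."""
    return sum(pi * math.log(pi / qi) for pi, qi in zip(p, q) if pi > 0.0)


def hidden_mse(student_hidden: Sequence[float], teacher_hidden: Sequence[float]) -> float:
    """Mean-squared error between hidden vectors of equal dimension."""
    if len(student_hidden) != len(teacher_hidden):
        raise ValueError("hidden vectors must have the same dimension (project the student first)")
    return sum((s - t) ** 2 for s, t in zip(student_hidden, teacher_hidden)) / len(student_hidden)


def distillation_loss(
    student_hidden: Sequence[float],
    teacher_hidden: Sequence[float],
    student_logits: Sequence[float],
    teacher_logits: Sequence[float],
    temperature: float = 2.0,
    beta: float = 0.5,
) -> float:
    """beta * hidden-state MSE + (1 - beta) * T^2 * KL(teacher soft labels || student soft labels)."""
    hidden_term = hidden_mse(student_hidden, teacher_hidden)
    p_teacher = softmax(teacher_logits, temperature)
    p_student = softmax(student_logits, temperature)
    # The T^2 factor keeps the soft-label gradients on a comparable scale (Hinton et al.).
    logit_term = (temperature ** 2) * kl_divergence(p_teacher, p_student)
    return beta * hidden_term + (1.0 - beta) * logit_term


# Hypothetical toy values standing in for one hidden vector and one logit vector per model.
loss = distillation_loss([0.2, 0.4], [0.25, 0.35], [2.0, 0.5, -1.0], [2.5, 0.2, -1.5])
print(loss > 0.0)  # → True
```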

3.2.4. Calculation of Contextual Semantic Similarity

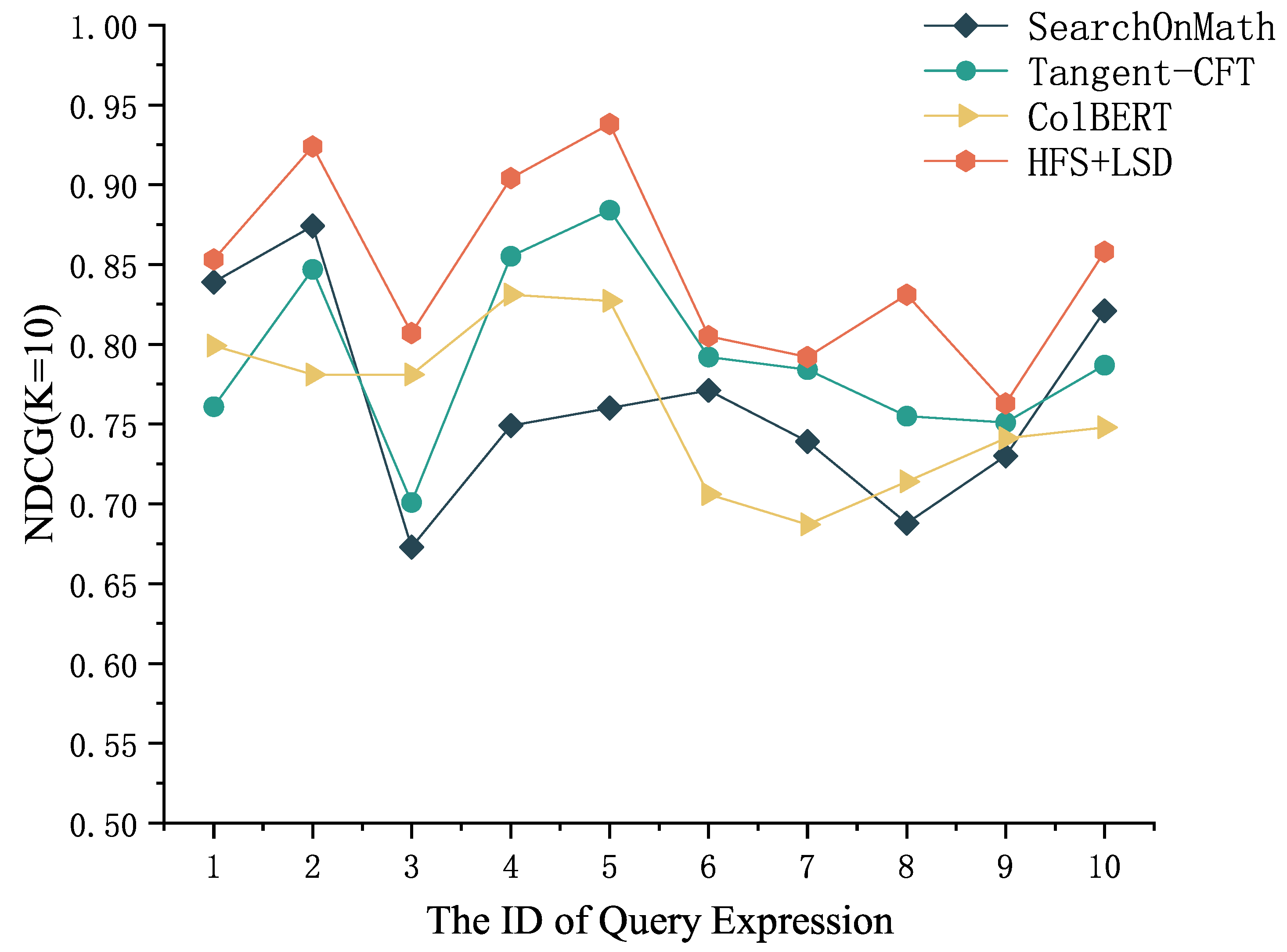

4. Experimental Process and Result Analysis

4.1. Experimental Data

4.2. System Experiment

4.2.1. Matching Results of Mathematical Expression Based on HFS

4.2.2. Retrieval Results of Scientific Documents by Incorporating HFS and LSD

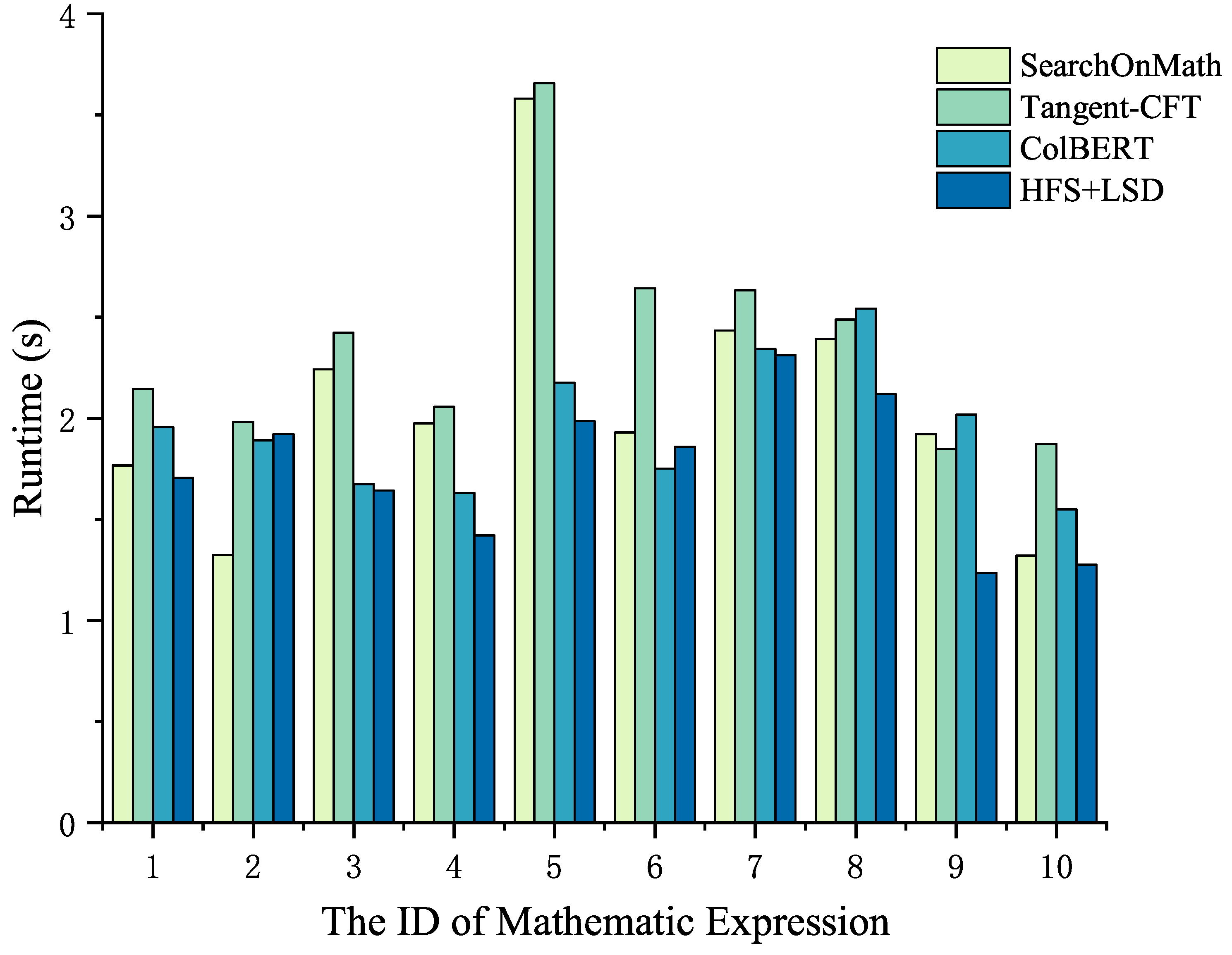

4.2.3. Comparative Experiment

5. Conclusions

- Investigating other knowledge distillation approaches and optimizing aspects such as the model architecture and the loss function, with the aim of improving semantic matching accuracy while speeding up model inference.

- Examining the attributes of scientific documents from multiple perspectives and effectively incorporating multi-attribute features into the retrieval process, to further improve the precision and practicality of the scientific document retrieval system.

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

- Mansouri, B.; Zanibbi, R.; Oard, D.W. Learning to rank for mathematical formula retrieval. In Proceedings of the 44th International ACM SIGIR Conference on Research and Development in Information Retrieval, Virtual, 11–15 July 2021; pp. 952–961.

- Nishizawa, G.; Liu, J.; Diaz, Y.; Dmello, A.; Zhong, W.; Zanibbi, R. MathSeer: A math-aware search interface with intuitive formula editing, reuse, and lookup. In Proceedings of the Advances in Information Retrieval: 42nd European Conference on IR Research, ECIR 2020, Lisbon, Portugal, 14–17 April 2020; pp. 470–475.

- Mallia, A.; Siedlaczek, M.; Suel, T. An experimental study of index compression and DAAT query processing methods. In Proceedings of the Advances in Information Retrieval: 41st European Conference on IR Research, ECIR 2019, Cologne, Germany, 14–18 April 2019; pp. 353–368.

- Ni, J.; Ábrego, G.H.; Constant, N.; Ma, J.; Hall, K.B.; Cer, D.; Yang, Y. Sentence-T5: Scalable sentence encoders from pre-trained text-to-text models. arXiv 2021, arXiv:2108.08877.

- Mehta, S.; Shah, D.; Kulkarni, R.; Caragea, C. Semantic Tokenizer for Enhanced Natural Language Processing. arXiv 2023, arXiv:2304.12404.

- Hinton, G.; Vinyals, O.; Dean, J. Distilling the knowledge in a neural network. arXiv 2015, arXiv:1503.02531.

- Torra, V. Hesitant fuzzy sets. Int. J. Intell. Syst. 2010, 25, 529–539.

- Pfahler, L.; Morik, K. Self-Supervised Pretraining of Graph Neural Network for the Retrieval of Related Mathematical Expressions in Scientific Articles. arXiv 2022, arXiv:2209.00446.

- Beltagy, I.; Lo, K.; Cohan, A. SciBERT: A pretrained language model for scientific text. arXiv 2019, arXiv:1903.10676.

- Lee, J.; Yoon, W.; Kim, S.; Kim, D.; Kim, S.; So, C.H.; Kang, J. BioBERT: A pre-trained biomedical language representation model for biomedical text mining. Bioinformatics 2020, 36, 1234–1240.

- Razdaibiedina, A.; Brechalov, A. MIReAD: Simple Method for Learning High-quality Representations from Scientific Documents. arXiv 2023, arXiv:2305.04177.

- Peng, S.; Yuan, K.; Gao, L.; Tang, Z. MathBERT: A pre-trained model for mathematical formula understanding. arXiv 2021, arXiv:2105.00377.

- Dadure, P.; Pakray, P.; Bandyopadhyay, S. Embedding and generalization of formula with context in the retrieval of mathematical information. J. King Saud Univ. Comput. Inf. Sci. 2022, 34, 6624–6634.

- Ali, J. Hesitant fuzzy partitioned Maclaurin symmetric mean aggregation operators in multi-criteria decision-making. Phys. Scr. 2022, 97, 075208.

- Ali, J. Probabilistic hesitant bipolar fuzzy Hamacher prioritized aggregation operators and their application in multi-criteria group decision-making. Comput. Appl. Math. 2023, 42, 260.

- Zadeh, L.A. Fuzzy sets. Inf. Control 1965, 8, 338–353.

- Mishra, A.R.; Chen, S.M.; Rani, P. Multiattribute decision making based on Fermatean hesitant fuzzy sets and modified VIKOR method. Inf. Sci. 2022, 607, 1532–1549.

- Mahapatra, G.; Maneckshaw, B.; Barker, K. Multi-objective reliability redundancy allocation using MOPSO under hesitant fuzziness. Expert Syst. Appl. 2022, 198, 116696.

- Pattanayak, R.M.; Behera, H.S.; Panigrahi, S. A novel high order hesitant fuzzy time series forecasting by using mean aggregated membership value with support vector machine. Inf. Sci. 2023, 626, 494–523.

- Li, X.; Tian, B.; Tian, X. Scientific Documents Retrieval Based on Graph Convolutional Network and Hesitant Fuzzy Set. IEEE Access 2023, 11, 27942–27954.

- Sanh, V.; Debut, L.; Chaumond, J.; Wolf, T. DistilBERT, a distilled version of BERT: Smaller, faster, cheaper and lighter. arXiv 2019, arXiv:1910.01108.

- Sun, S.; Cheng, Y.; Gan, Z.; Liu, J. Patient knowledge distillation for BERT model compression. arXiv 2019, arXiv:1908.09355.

- Wang, W.; Bao, H.; Huang, S.; Dong, L.; Wei, F. MiniLMv2: Multi-head self-attention relation distillation for compressing pretrained transformers. arXiv 2020, arXiv:2012.15828.

- Romero, A.; Ballas, N.; Kahou, S.E.; Chassang, A.; Gatta, C.; Bengio, Y. FitNets: Hints for thin deep nets. arXiv 2014, arXiv:1412.6550.

- Jiao, X.; Yin, Y.; Shang, L.; Jiang, X.; Chen, X.; Li, L.; Wang, F.; Liu, Q. TinyBERT: Distilling BERT for natural language understanding. arXiv 2019, arXiv:1909.10351.

- Chen, X.; He, B.; Hui, K.; Sun, L.; Sun, Y. Simplified TinyBERT: Knowledge distillation for document retrieval. In Proceedings of the Advances in Information Retrieval: 43rd European Conference on IR Research, ECIR 2021, Virtual, 28 March–1 April 2021; pp. 241–248.

- Tian, X. A mathematical indexing method based on the hierarchical features of operators in formulae. In Proceedings of the 2nd International Conference on Automatic Control and Information Engineering (ICACIE 2017), Hong Kong, China, 26–28 August 2017; pp. 49–52.

- Xu, Z.; Xia, M. Distance and similarity measures for hesitant fuzzy sets. Inf. Sci. 2011, 181, 2128–2138.

- Wang, H.; Tian, X.; Zhang, K.; Cui, X.; Shi, Q.; Li, X. A multi-membership evaluating method in ranking of mathematical retrieval results. Sci. Technol. Eng. 2019, 8.

- Reimers, N.; Gurevych, I. Sentence-BERT: Sentence embeddings using Siamese BERT-networks. arXiv 2019, arXiv:1908.10084.

- Nguyen, H.T.; Duong, P.H.; Cambria, E. Learning short-text semantic similarity with word embeddings and external knowledge sources. Knowl.-Based Syst. 2019, 182, 104842.

- Oliveira, R.M.; Gonzaga, F.B.; Barbosa, V.C.; Xexéo, G.B. A distributed system for SearchOnMath based on the Microsoft BizSpark program. arXiv 2017, arXiv:1711.04189.

- Mansouri, B.; Rohatgi, S.; Oard, D.W.; Wu, J.; Giles, C.L.; Zanibbi, R. Tangent-CFT: An embedding model for mathematical formulas. In Proceedings of the ACM SIGIR International Conference on Theory of Information Retrieval, Santa Clara, CA, USA, 2–5 October 2019; pp. 11–18.

- Khattab, O.; Zaharia, M. ColBERT: Efficient and effective passage search via contextualized late interaction over BERT. In Proceedings of the 43rd International ACM SIGIR Conference on Research and Development in Information Retrieval, Virtual, 25–30 July 2020; pp. 39–48.

| Evaluation Attribute | Membership Function | Description |
|---|---|---|
| Level | | represent the r and t symbols in the query expression and storage expression, respectively. |
| Flag | | represents the spatial relationship between and . If they are identical, it is assigned a value of 1; otherwise, it is set to 0. |
| Order | | is a balancing factor that ensures the value of falls within the range of 0–1. |
| Operator | | When represents an operator, the value of is set to 1; otherwise, it is assigned a value of 0. |
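The evaluation attributes in the table above can be organized as a hesitant fuzzy set (HFS) whose elements are Level, Flag, Order, and Operator, each holding several candidate membership values in [0, 1]. The sketch below is a hypothetical illustration, not the paper's Algorithm 1: the membership values are invented, the candidate is scored against an assumed ideal HFS whose memberships are all 1, and the similarity is taken as 1 minus the hesitant normalized Hamming distance of Xu and Xia (cited in the references), with shorter elements padded optimistically by their maximum value.

```python
from typing import Dict, List, Sequence

# Evaluation attributes from the table; each one maps to a hesitant fuzzy element (HFE).
ATTRIBUTES = ("Level", "Flag", "Order", "Operator")


def extend_hfe(values: Sequence[float], length: int, optimistic: bool = True) -> List[float]:
    """Pad an HFE to `length` with its max (optimistic) or min (pessimistic) value and
    sort it in decreasing order, following Xu and Xia (2011)."""
    if not values:
        raise ValueError("an HFE needs at least one membership value")
    if any(not 0.0 <= v <= 1.0 for v in values):
        raise ValueError("membership values must lie in [0, 1]")
    pad = max(values) if optimistic else min(values)
    padded = list(values) + [pad] * (length - len(values))
    return sorted(padded, reverse=True)


def hfs_similarity(
    a: Dict[str, Sequence[float]],
    b: Dict[str, Sequence[float]],
    optimistic: bool = True,
) -> float:
    """1 minus the hesitant normalized Hamming distance between two HFSs on the same attributes."""
    if set(a) != set(b):
        raise ValueError("both HFSs must be defined on the same attributes")
    total = 0.0
    for attr in a:
        length = max(len(a[attr]), len(b[attr]))
        ha = extend_hfe(a[attr], length, optimistic)
        hb = extend_hfe(b[attr], length, optimistic)
        total += sum(abs(x - y) for x, y in zip(ha, hb)) / length
    return 1.0 - total / len(a)


# Hypothetical membership values for one candidate expression (Flag and Operator are 0/1 per the table).
candidate = {"Level": [1.0, 0.5], "Flag": [1.0, 0.0], "Order": [0.75], "Operator": [1.0]}
ideal = {attr: [1.0] for attr in ATTRIBUTES}  # assumed perfect match
print(hfs_similarity(candidate, ideal))  # → 0.75
```

Padding with the maximum treats a missing membership value optimistically; passing `optimistic=False` pads with the minimum instead, which lowers the similarity of candidates with uneven evidence.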

| No. | Query Expression | Query Text |
|---|---|---|
| 1 | | The basic relationship between sines and cosines is called the Pythagorean theorem |
| 2 | | The mathematical representation of the Gaussian function |
| 3 | | Two solutions of any quadratic polynomial can be expressed as follows |
| 4 | | The relationship between the change in kinetic energy of an object and the work performed by the resultant external force |
| 5 | | f(x) can be made as close as desired to 0 by making x close enough but not equal to a |
| 6 | | Any two particles have a mutual attractive force in the direction of the line connecting their centers |
| 7 | | Derivatives of power functions |
| 8 | | The Taylor formula uses the information of a function at a certain point to describe its nearby values |
| 9 | | The arithmetic mean of two non-negative real numbers is greater than or equal to their geometric mean |
| 10 | | First explicit solution of the quadratic equation |

| Dataset | MAP_5 | MAP_10 | MAP_20 |
|---|---|---|---|
| English dataset | 0.912 | 0.833 | 0.784 |
| Chinese dataset | 0.907 | 0.827 | 0.776 |
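For reference, MAP at a cutoff k averages the per-query average precision (AP@k) over the top k results. Definitions of the AP@k denominator vary; the sketch below assumes min(k, number of relevant documents), which may differ from the evaluation protocol behind the table above. The toy data is hypothetical and does not reproduce the reported scores.

```python
from typing import List, Sequence, Set, Tuple


def average_precision_at_k(ranked: Sequence[str], relevant: Set[str], k: int) -> float:
    """AP@k with denominator min(k, |relevant|); one of several common conventions."""
    hits = 0
    precision_sum = 0.0
    for rank, doc in enumerate(ranked[:k], start=1):
        if doc in relevant:
            hits += 1
            precision_sum += hits / rank  # precision at this rank
    denominator = min(k, len(relevant))
    return precision_sum / denominator if denominator else 0.0


def map_at_k(runs: List[Tuple[Sequence[str], Set[str]]], k: int) -> float:
    """Mean of AP@k over queries; each run is (ranked document ids, relevant ids)."""
    if not runs:
        return 0.0
    return sum(average_precision_at_k(ranked, relevant, k) for ranked, relevant in runs) / len(runs)


# Hypothetical two-query example.
runs = [(["a", "b", "c", "d"], {"a", "c"}), (["x", "y"], {"y"})]
print(map_at_k(runs, 2))  # → 0.5
```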

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Feng, Z.; Tian, X. A Scientific Document Retrieval and Reordering Method by Incorporating HFS and LSD. Appl. Sci. 2023, 13, 11207. https://doi.org/10.3390/app132011207
