Coal Gangue Target Detection Based on Improved YOLOv5s

Abstract

1. Introduction

- (1) The improved network significantly increases detection precision over the original YOLOv5s network while maintaining a high detection speed for coal gangue detection.

- (2) The improved network pays more attention to global features and enlarges the receptive field.

- (3) The work provides an important reference for the coal gangue sorting industry.

2. Network Model Improvements

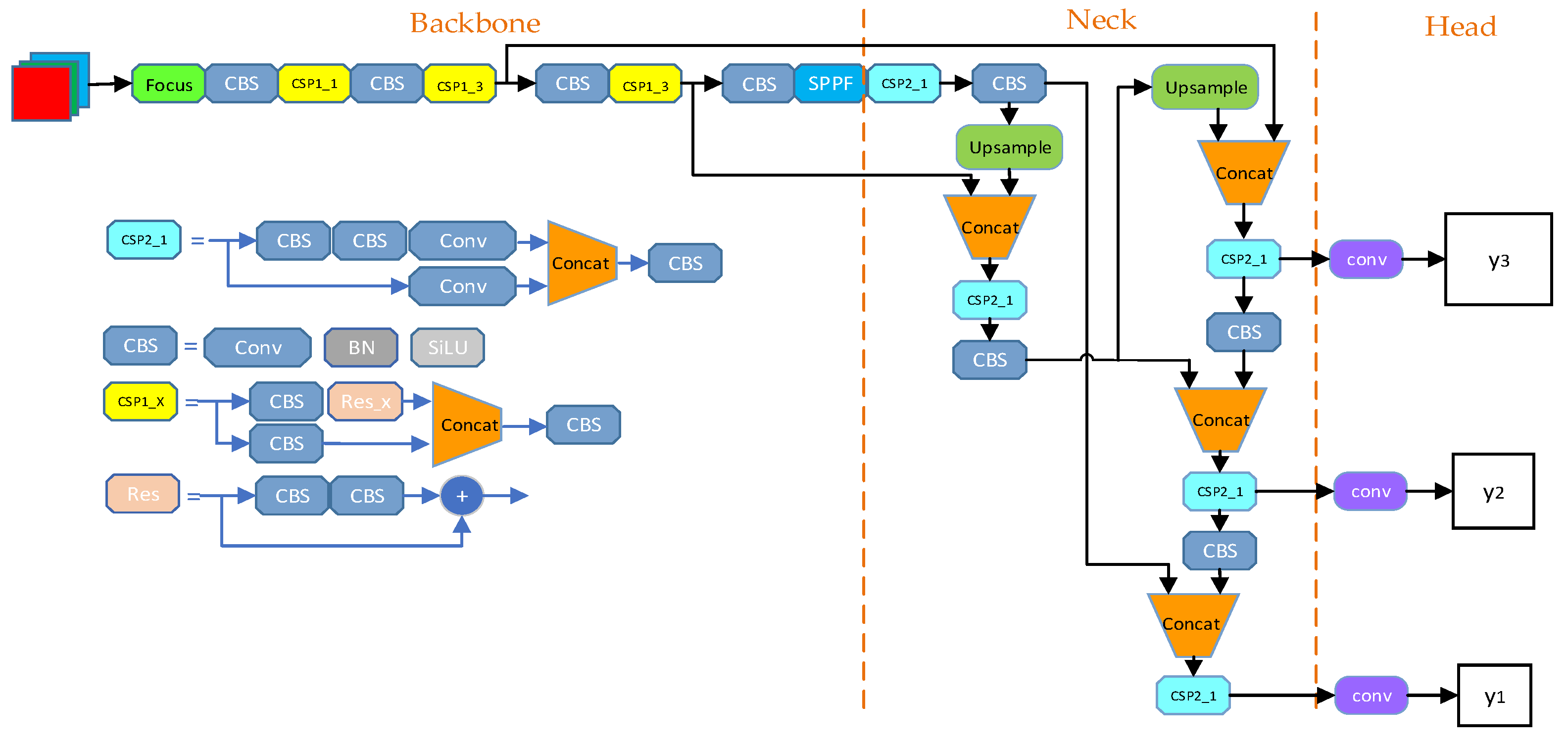

2.1. YOLOv5s Network Architecture

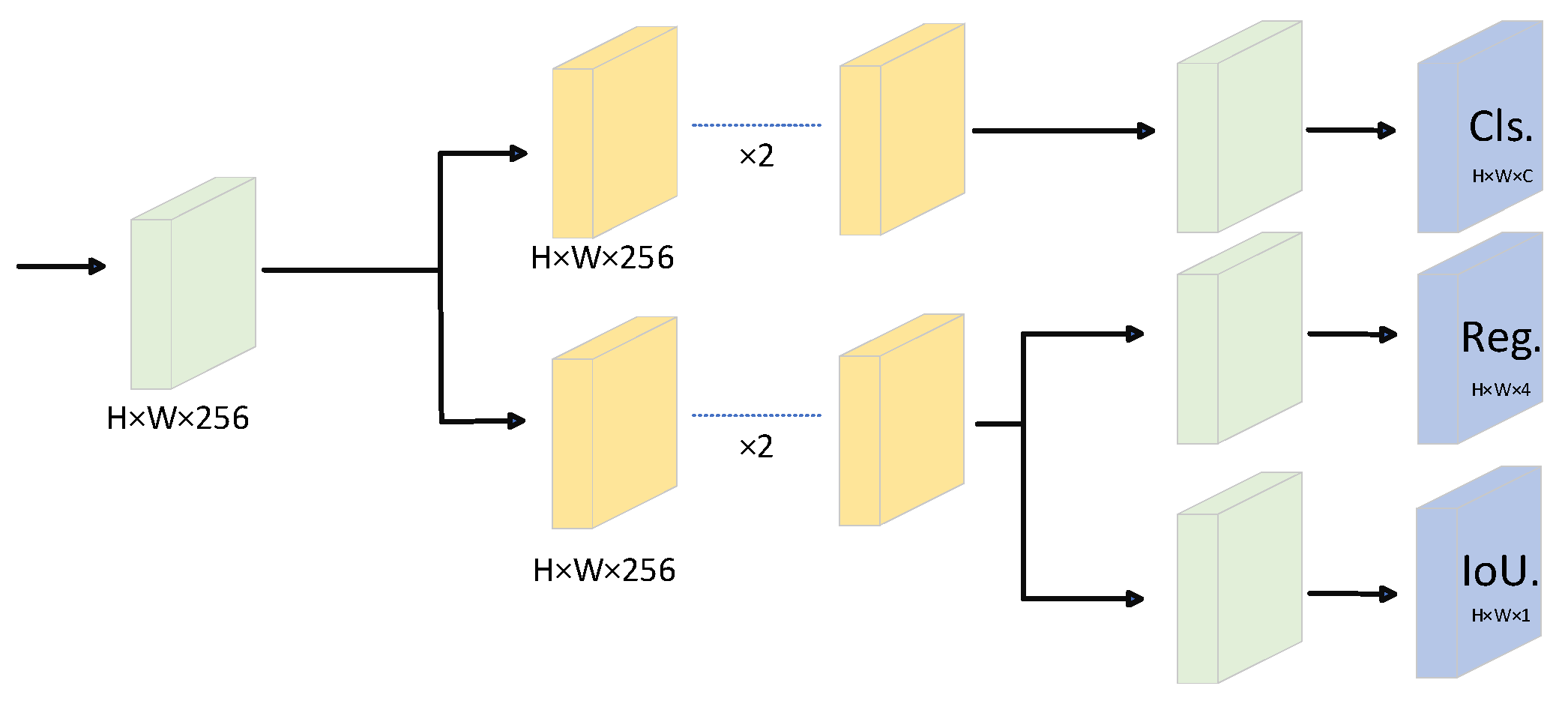

2.2. Decoupled Head

2.3. SimAM Attention Mechanism
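SimAM (Yang et al.) is parameter-free. It scores every neuron with a closed-form energy and rescales the feature by the sigmoid of the inverse energy. Let μ and σ² be the mean and variance of a channel and λ a small regularizer. The inverse energy of an activation t is then (t - μ)² / (4(σ² + λ)) + 0.5, and the output is t multiplied by the sigmoid of that value.

The plain-Python sketch below applies this rule to a feature map given as nested lists (channels × rows × columns). It has some deliberate limits:

- It illustrates the technique and is not the authors' implementation.
- The function names `simam` and `simam_channel` are hypothetical.
- It follows the published SimAM reference code in dividing by H·W - 1 when estimating σ².
- It uses λ = 1e-4, the default in that reference code.

```python
import math


def simam_channel(channel, e_lambda=1e-4):
    """Reweight one channel (a list of equal-length rows of floats) with SimAM."""
    values = [v for row in channel for v in row]
    n = len(values) - 1  # H*W - 1, as in the SimAM reference implementation
    if n <= 0:
        raise ValueError("SimAM needs at least two activations per channel")
    mu = sum(values) / len(values)
    # Squared deviation of each activation from the channel mean
    sq_dev = [[(v - mu) ** 2 for v in row] for row in channel]
    var = sum(d for row in sq_dev for d in row) / n
    out = []
    for row, dev_row in zip(channel, sq_dev):
        new_row = []
        for v, d in zip(row, dev_row):
            inv_energy = d / (4 * (var + e_lambda)) + 0.5  # 1 / e_t*
            weight = 1.0 / (1.0 + math.exp(-inv_energy))   # sigmoid
            new_row.append(v * weight)
        out.append(new_row)
    return out


def simam(feature_map, e_lambda=1e-4):
    """Apply SimAM independently to every channel of a C x H x W feature map."""
    return [simam_channel(channel, e_lambda) for channel in feature_map]


if __name__ == "__main__":
    # One 2x2 channel containing a single distinctive activation (5.0)
    fmap = [[[1.0, 1.0], [1.0, 5.0]]]
    for row in simam(fmap)[0]:
        print([round(v, 3) for v in row])
```

The weight grows with an activation's squared deviation from the channel mean, so the distinctive activation is scaled by a larger factor than its neighbours. No learnable parameters are involved, which is what makes SimAM parameter-free.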

2.4. Slim-Neck Structure

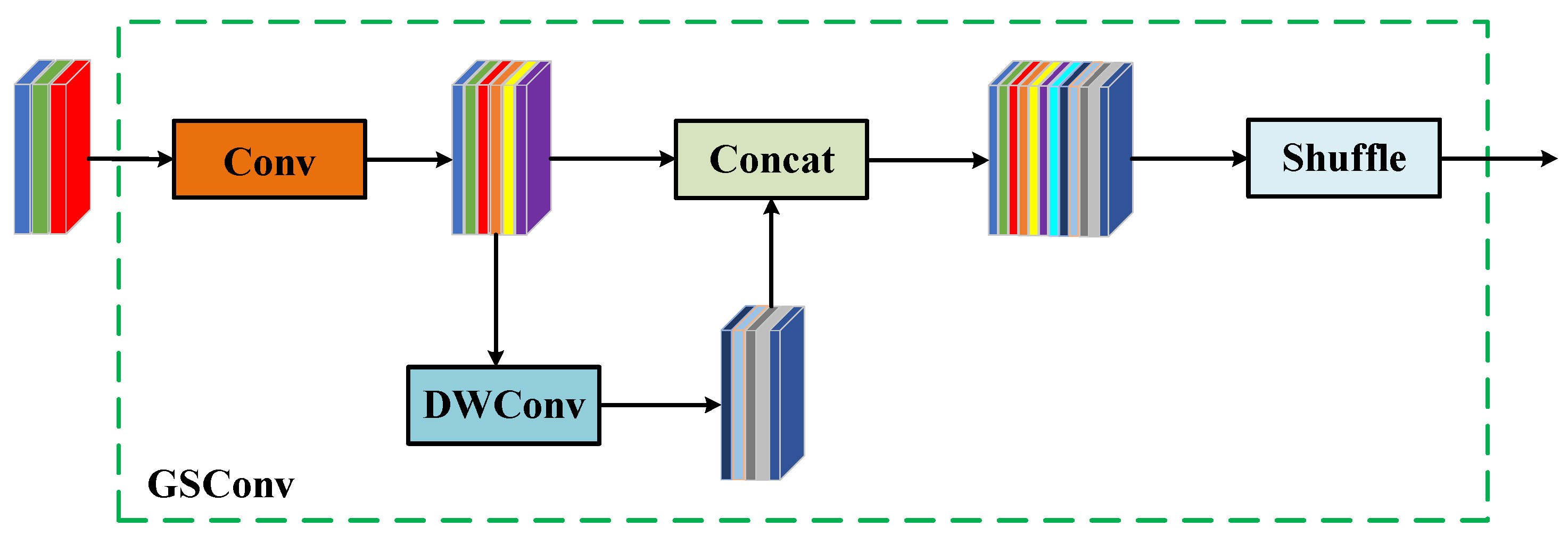

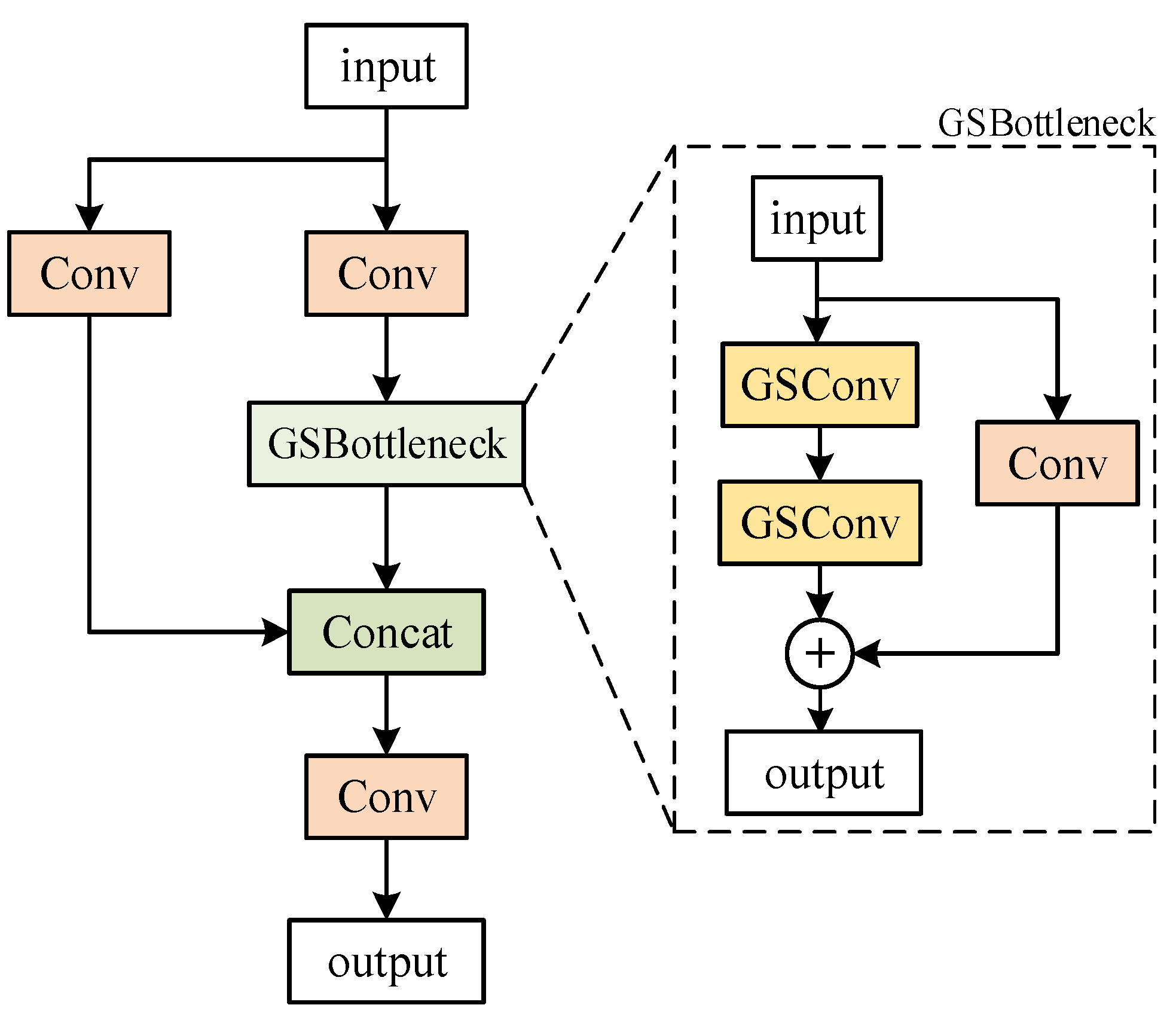

2.5. DGSV-YOLOv5s-SA Network Structure

3. Experimental Results and Analysis

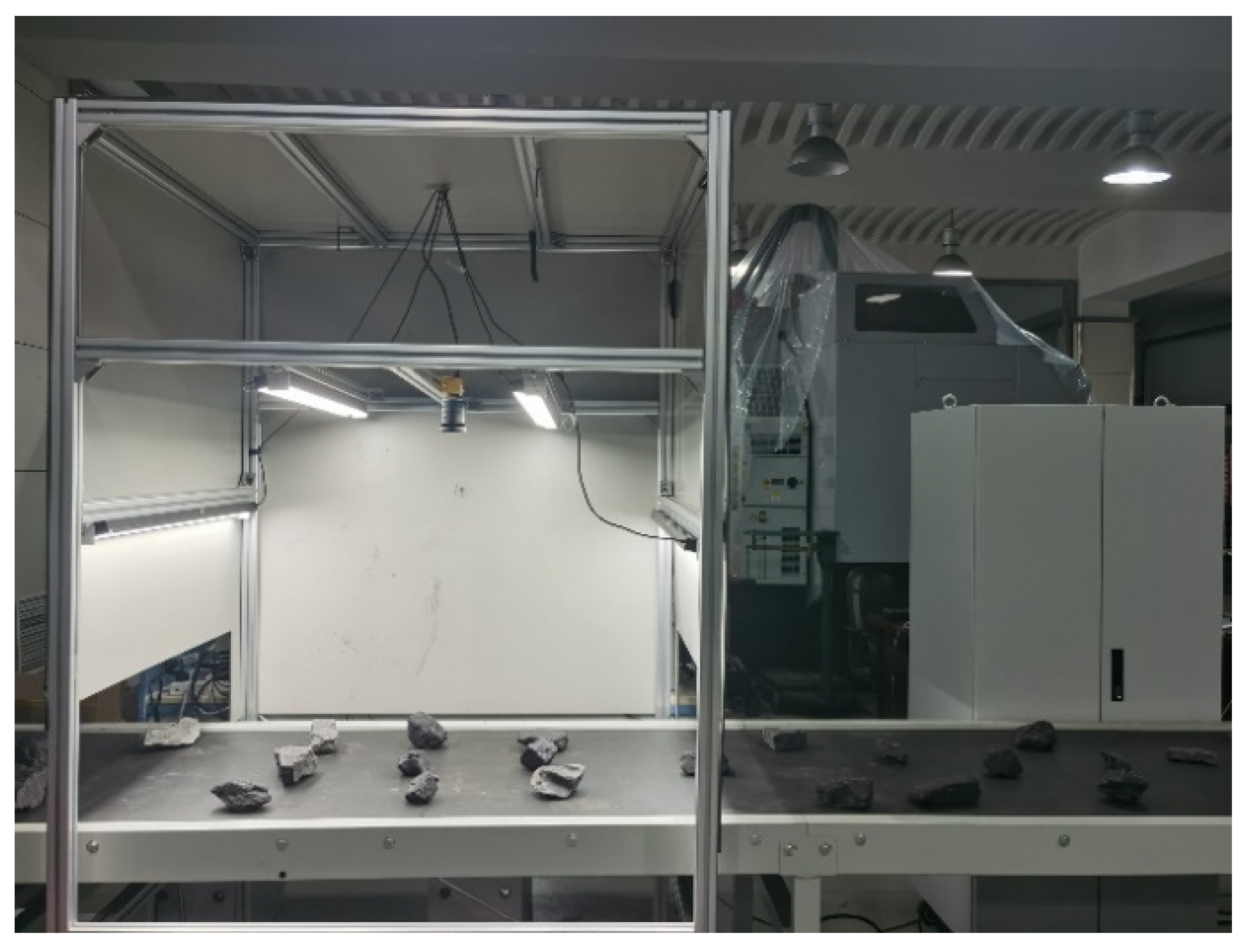

3.1. Experimental Platform

3.2. Evaluation Indicators

- Precision (P): the proportion of correct detections among all samples detected as positive.

- Recall (R): the proportion of correct detections among all actual positive samples.

- F1 score (F1): the harmonic mean of precision and recall, an important metric for evaluating binary classification problems; the higher the value, the better the detection.

- Mean average precision (mAP): the average precision averaged over all categories.

- True positives (TP): the number of positive samples that the model correctly detects.

- False positives (FP): the number of samples that were incorrectly detected as positive.

- False negatives (FN): the number of positive samples that were missed.

- Average precision (AP): the area under the precision-recall curve of one category.

- Number of categories.

- Total loss: the distance between the predicted information and the desired information (labels).

- Weighted confidence loss.

- Weighted bounding box loss.

- Weighted classification loss.
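For reference, the quantities above are usually combined as follows. These are the standard definitions, sketched here under the assumption that the paper follows them. The symbols N (number of categories), L_obj, L_box, L_cls and the weights λ are assumed labels here, not necessarily the paper's own notation. The mAP@0.5:0.95 reported in the tables further averages mAP over the IoU thresholds 0.50, 0.55, ..., 0.95.

$$
P = \frac{TP}{TP + FP}, \qquad R = \frac{TP}{TP + FN}, \qquad F_1 = \frac{2 \times P \times R}{P + R}
$$

$$
AP = \int_{0}^{1} P(R)\, \mathrm{d}R, \qquad mAP = \frac{1}{N} \sum_{i=1}^{N} AP_i
$$

$$
L = \lambda_{obj} L_{obj} + \lambda_{box} L_{box} + \lambda_{cls} L_{cls}
$$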

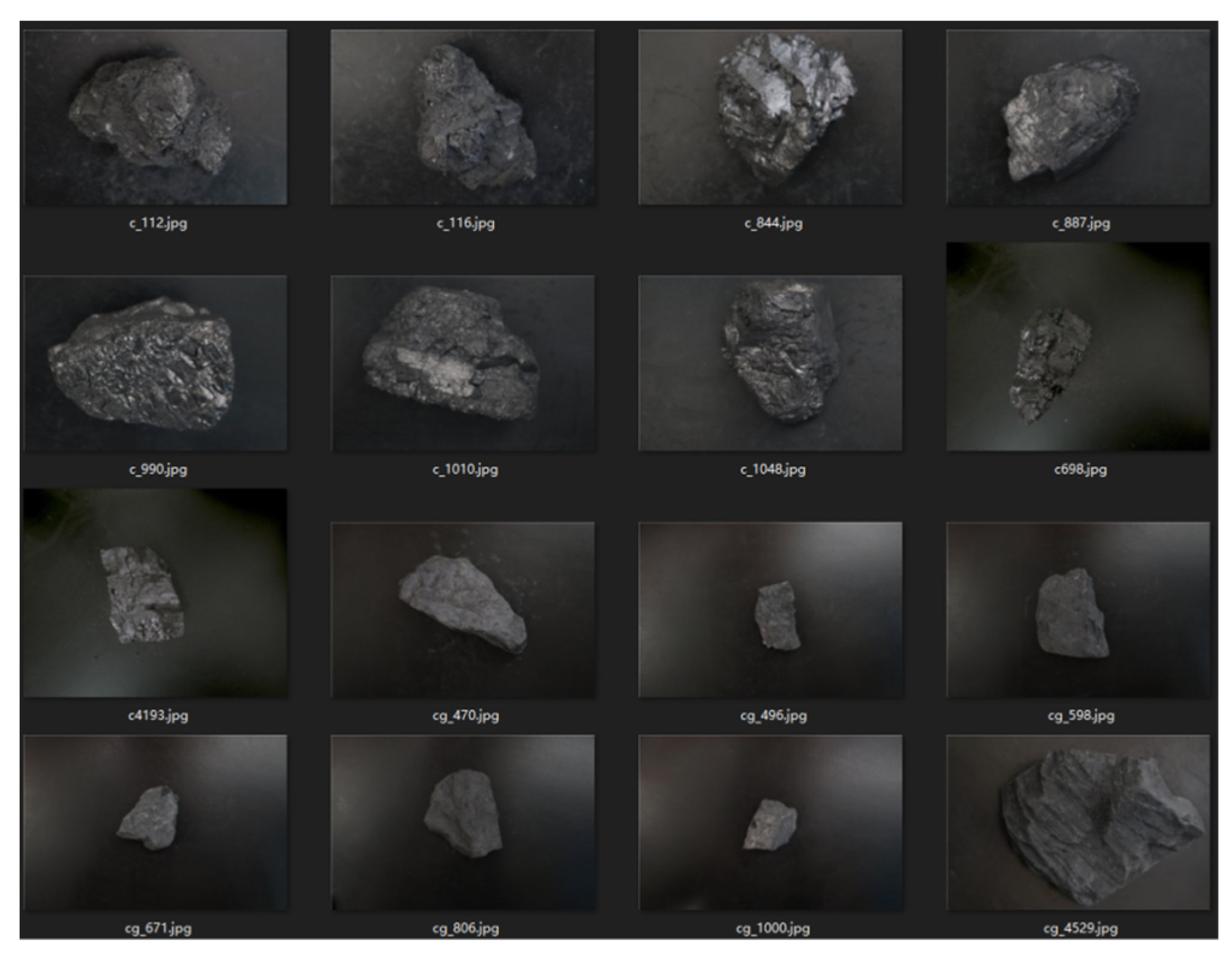
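The evaluation metrics defined in Section 3.2 can be computed from detection counts as in the minimal plain-Python sketch below. Its assumptions:

- The function names and `IOU_THRESHOLDS` are hypothetical.
- AP uses all-point interpolation of the precision-recall envelope. This is one common variant and may differ from the interpolation used in the authors' own evaluation.

```python
# IoU thresholds over which mAP@0.5:0.95 is averaged (0.50, 0.55, ..., 0.95)
IOU_THRESHOLDS = [round(0.5 + 0.05 * i, 2) for i in range(10)]


def precision(tp: int, fp: int) -> float:
    """P = TP / (TP + FP); returns 0.0 when nothing was detected."""
    return tp / (tp + fp) if tp + fp else 0.0


def recall(tp: int, fn: int) -> float:
    """R = TP / (TP + FN); returns 0.0 when there are no positive samples."""
    return tp / (tp + fn) if tp + fn else 0.0


def f1_score(p: float, r: float) -> float:
    """Harmonic mean of precision and recall."""
    return 2 * p * r / (p + r) if p + r else 0.0


def average_precision(recalls, precisions) -> float:
    """All-point interpolated AP for one class.

    `recalls` must be in ascending order; `precisions[i]` is the precision
    measured at `recalls[i]`.
    """
    mrec = [0.0] + list(recalls) + [1.0]
    mpre = [1.0] + list(precisions) + [0.0]
    # Replace each precision by the maximum precision at any higher recall
    for i in range(len(mpre) - 2, -1, -1):
        mpre[i] = max(mpre[i], mpre[i + 1])
    # Sum rectangle areas; segments where recall does not change add zero
    return sum((mrec[i + 1] - mrec[i]) * mpre[i + 1] for i in range(len(mrec) - 1))


def mean_average_precision(ap_per_class) -> float:
    """mAP = (1/N) * sum of per-class AP, where N is the number of categories."""
    ap_per_class = list(ap_per_class)
    return sum(ap_per_class) / len(ap_per_class)


if __name__ == "__main__":
    p, r = precision(tp=95, fp=5), recall(tp=95, fn=3)
    print(f"P={p:.3f} R={r:.3f} F1={f1_score(p, r):.3f}")
    print(f"AP={average_precision([0.5, 1.0], [1.0, 0.5]):.3f}")
```

Averaging `mean_average_precision` results over the ten entries of `IOU_THRESHOLDS` gives the mAP@0.5:0.95 figure used in the result tables.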

3.3. Dataset Establishment

3.4. Experimental Results and Analysis

4. Conclusions

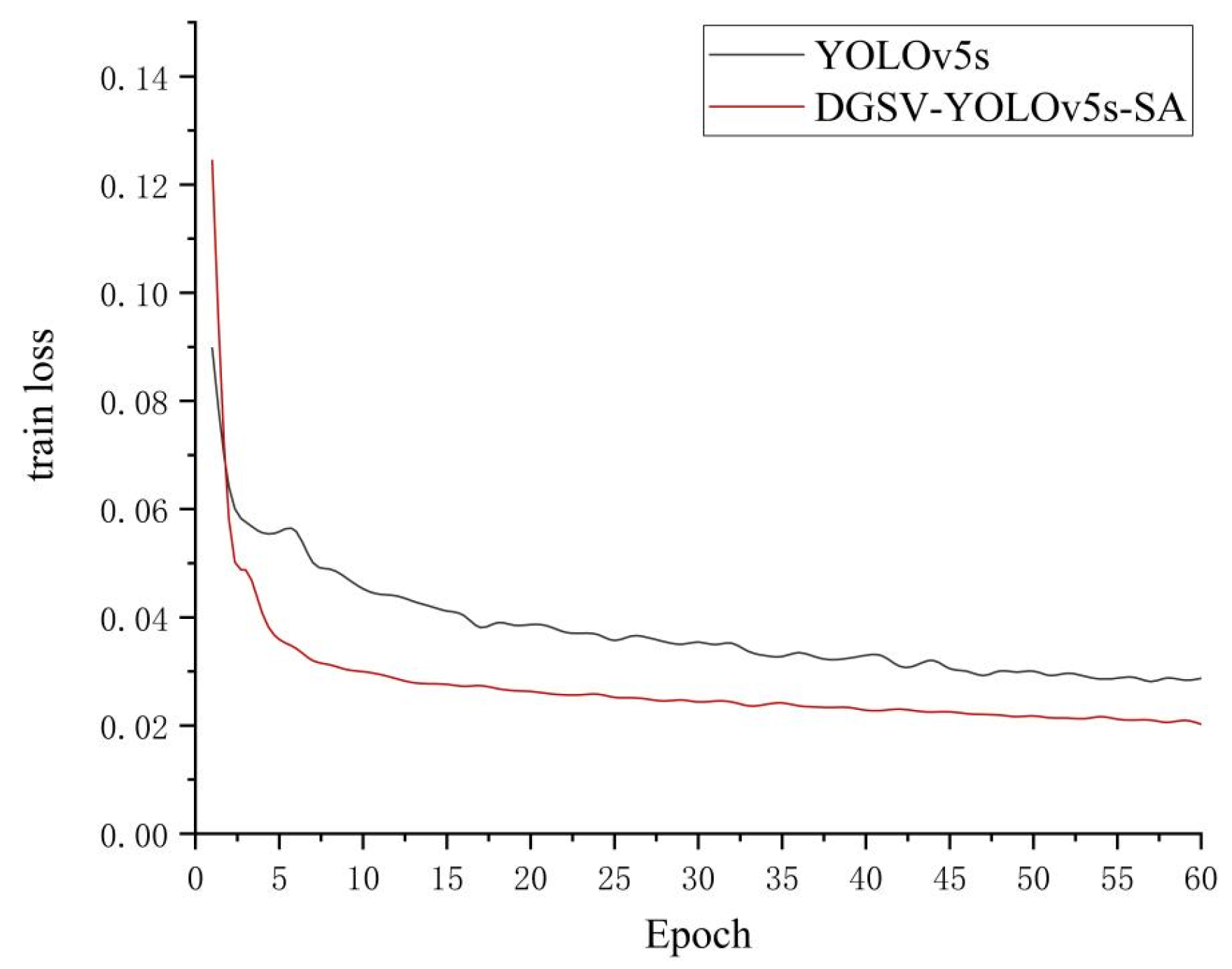

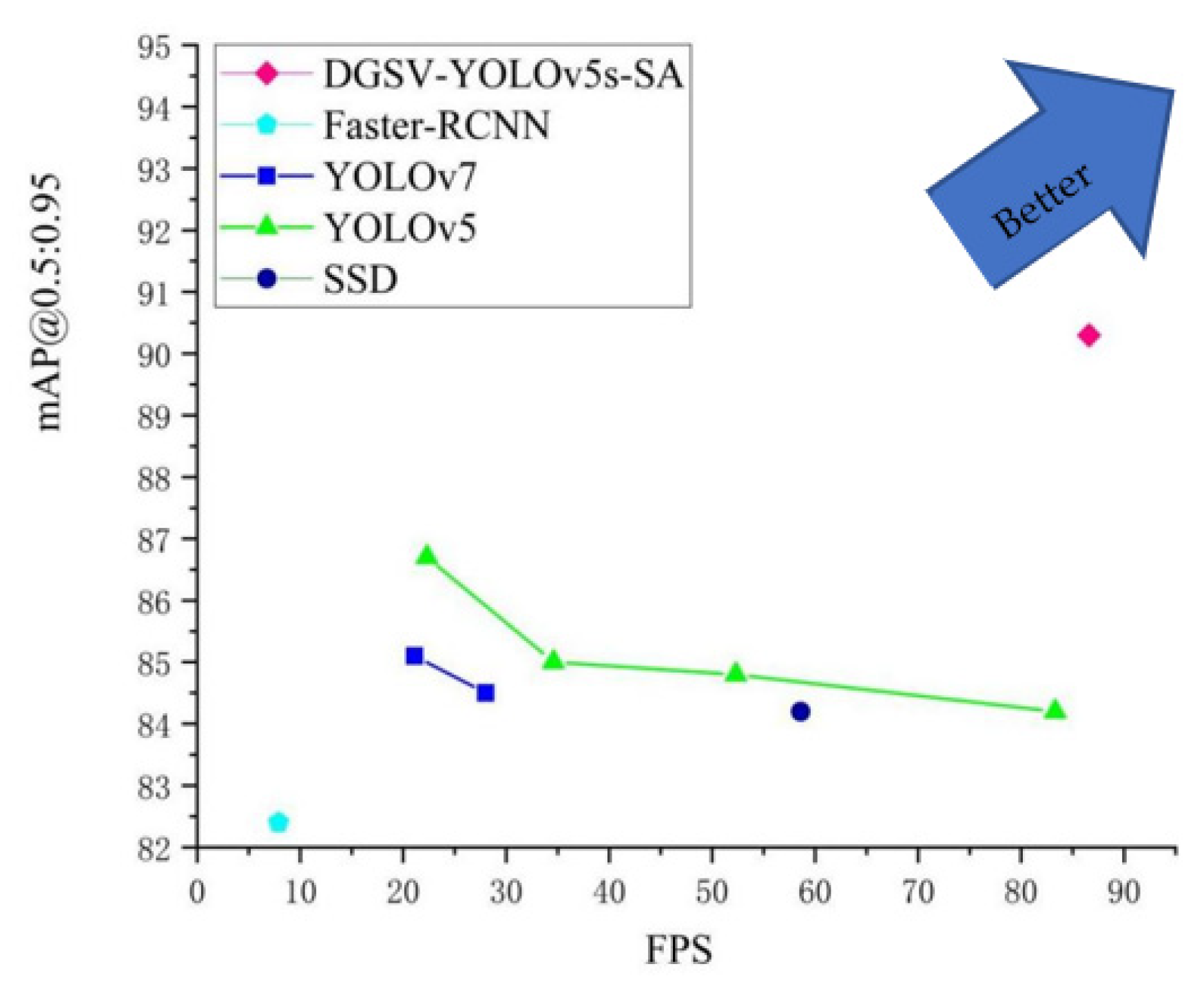

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

- Chang, Y.X.; Zhu, X.S.; Song, C.B.; Wei, Z.R. Hazard of gangue and its control. Chin. J. Geol. Hazard Control 2001, 02, 42–46.

- Guo, X.J. Research and application of coal gangue separation technology. Coal Eng. 2017, 49, 74–76.

- Lu, Y.C.; Yu, Z.S. Study on a coal gangue photoelectric sorting system and its anti-interference technology. Mining R&D 2020, 40, 144–147.

- Wang, S.; He, L.; Guo, Y.C.; Hu, K.; Li, D.Y.; Zhao, Y.Q.; Ma, X. Dual-energy X-ray transmission identification method of multi-thickness coal and gangue based on SVM distance transformation. Fuel 2024, 356, 129593.

- Zhao, L.J.; Han, L.G.; Zhang, H.N.; Liu, Z.F.; Gao, F.; Yang, S.J.; Wang, Y.D. Study on recognition of coal and gangue based on multimode feature and image fusion. PLoS ONE 2023, 18, e0281397.

- Yuan, L.H.; Fu, L.; Yang, Y.; Miao, J. Analysis of texture feature extracted by gray level co-occurrence matrix. J. Comput. Appl. 2009, 29, 1018–1021.

- Yu, G.F. Expanded order co-occurrence matrix to differentiate between coal and gangue based on interval grayscale compression. J. Image Graph. 2012, 17, 966–970.

- Guo, Y.C.; Wang, X.Q.; Wang, S.; Hu, K.; Wang, W.S. Identification Method of Coal and Coal Gangue Based on Dielectric Characteristics. IEEE Access 2021, 9, 9845–9854.

- Sun, Z.Y.; Lu, W.H.; Xuan, P.C.; Li, H.; Zhang, S.S.; Niu, S.C.; Jia, R.Q. Separation of gangue from coal based on supplementary texture by morphology. Int. J. Coal Prep. Util. 2019, 42, 221–237.

- Fu, C.C.; Lu, F.L.; Zhang, G.Y. Discrimination analysis of coal and gangue using multifractal properties of optical texture. Int. J. Coal Prep. Util. 2020, 42, 1925–1937.

- Tripathy, D.P.; Reddy, K.G.R. Novel Methods for Separation of Gangue from Limestone and Coal using Multispectral and Joint Color-Texture Features. J. Inst. Eng. (India) Ser. D 2017, 98, 109–117.

- Hou, W. Identification of Coal and Gangue by Feed-forward Neural Network Based on Data Analysis. Int. J. Coal Prep. Util. 2019, 39, 33–43.

- Dou, D.Y.; Wu, W.Z.; Yang, J.G.; Zhang, Y. Classification of coal and gangue under multiple surface conditions via machine vision and relief-SVM. Powder Technol. 2019, 356, 1024–1028.

- Li, Y. Research on Coal Gangue Detection Based on Deep Learning; Xi’an University of Science and Technology: Xi’an, China, 2020.

- Pu, Y.Y.; Apel, D.B.; Szmigiel, A.; Chen, J. Image Recognition of Coal and Coal Gangue Using a Convolutional Neural Network and Transfer Learning. Energies 2019, 12, 1735.

- Alfarzaeai, M.S.; Niu, Q.; Zhao, J.Q.; Eshaq, R.M.A.; Hu, E.Y. Coal/Gangue Recognition Using Convolutional Neural Networks and Thermal Images. IEEE Access 2020, 8, 76780–76789.

- Liu, Q.; Li, J.G.; Li, Y.S.; Gao, M.W. Recognition Methods for Coal and Coal Gangue Based on Deep Learning. IEEE Access 2021, 9, 77599–77610.

- Cao, X.G.; Liu, S.Y.; Wang, P.; Xu, G.; Wu, X.D. Research on coal gangue identification and positioning system based on coal-gangue sorting robot. Coal Sci. Technol. 2022, 50, 237–246.

- Gao, R.; Sun, Z.Y.; Li, W.; Pei, L.L.; Hu, Y.J.; Xiao, L.Y. Automatic Coal and Gangue Segmentation Using U-Net Based Fully Convolutional Networks. Energies 2020, 13, 829.

- Lai, W.H.; Zhou, M.R.; Hu, F.; Bian, K.; Song, H.P. Coal Gangue Detection Based on Multi-spectral Imaging and Improved YOLO v4. Acta Opt. Sin. 2020, 40, 72–80.

- Jocher, G. YOLOv5. 2020. Available online: https://github.com/ultralytics/yolov5 (accessed on 10 January 2022).

- Ge, Z.; Liu, S.T.; Wang, F.; Li, Z.M.; Sun, J. YOLOX: Exceeding YOLO Series in 2021. arXiv 2021, arXiv:2107.08430.

- Yang, L.X.; Zhang, R.Y.; Li, L.D.; Xie, X.H. SimAM: A Simple, Parameter-Free Attention Module for Convolutional Neural Networks. In Proceedings of the International Conference on Machine Learning, Virtual, 18–24 July 2021.

- Li, H.L.; Li, J.; Wei, H.B.; Liu, Z.; Zhan, Z.F.; Ren, Q.L. Slim-neck by GSConv: A better design paradigm of detector architectures for autonomous vehicles. arXiv 2022, arXiv:2206.02424.

- Liu, W.; Anguelov, D.; Erhan, D.; Szegedy, C.; Reed, S.; Fu, C.Y.; Berg, A.C. SSD: Single Shot MultiBox Detector. arXiv 2016, arXiv:1512.02325.

- Ren, S.Q.; He, K.M.; Girshick, R.; Sun, J. Faster R-CNN: Towards Real-Time Object Detection with Region Proposal Networks. IEEE Trans. Pattern Anal. Mach. Intell. 2017, 39, 1137–1149.

- Wang, C.Y.; Bochkovskiy, A.; Liao, H.Y.M. YOLOv7: Trainable bag-of-freebies sets new state-of-the-art for real-time object detectors. arXiv 2022, arXiv:2207.02696.

- Selvaraju, R.R.; Cogswell, M.; Das, A.; Vedantam, R.; Parikh, D.; Batra, D. Grad-CAM: Visual Explanations from Deep Networks via Gradient-Based Localization. Int. J. Comput. Vis. 2019, 128, 336–359.

| Item | Configuration |
|---|---|
| Operating system | Ubuntu 20.04 |
| Deep learning framework | PyTorch 1.12.1 |
| Processor | Intel(R) Xeon(R) Gold 6226R ×2 |
| RAM | 196 GB |
| GPU | NVIDIA Tesla T4 ×4 |
| Environment manager | Anaconda3 |
| Python version | 3.9 |

| Model | F1 (%) | R (%) | FPS | mAP@0.5:0.95 (%) |
|---|---|---|---|---|
| YOLOv5s | 98.8 | 98.8 | 83.3 | 84.2 |
| YOLOv5m | 98.4 | 98.5 | 52.3 | 84.8 |
| YOLOv5l | 98.5 | 98.7 | 34.6 | 85.0 |
| YOLOv5x | 99.2 | 99.3 | 22.3 | 86.7 |
| YOLOv7 | 98.8 | 99.1 | 28 | 84.5 |
| YOLOv7x | 99.1 | 99.3 | 21.1 | 85.1 |
| SSD | 99.2 | 99 | 58.6 | 84.2 |
| Faster R-CNN | 98 | 99.4 | 7.9 | 82.4 |
| DGSV-YOLOv5s-SA | 99.7 | 99.7 | 86.6 | 90.3 |

| Model | F1 (%) | R (%) | FPS | mAP@0.5:0.95 (%) |
|---|---|---|---|---|
| YOLOv5s | 98.8 | 98.8 | 83.3 | 84.2 |
| Decoupled Head-YOLOv5s | 98.7 | 98.8 | 58.4 | 86.3 |
| YOLOv5s-SimAM | 98.6 | 98.8 | 91.7 | 85.8 |
| GS-VoV-YOLOv5s | 99.5 | 99.6 | 97.1 | 88.2 |
| DGSV-YOLOv5s-SA | 99.7 | 99.7 | 86.6 | 90.3 |
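The table above compares YOLOv5s with its single-module variants and with the full DGSV-YOLOv5s-SA model. The effect of each change can be read as a difference from the YOLOv5s row. The short sketch below does that arithmetic. Its mAP@0.5:0.95 and FPS values are copied from the table, and the helper name `deltas` is hypothetical.

```python
BASELINE = "YOLOv5s"

# (mAP@0.5:0.95 in %, FPS) copied from the table above
RESULTS = {
    "YOLOv5s": (84.2, 83.3),
    "Decoupled Head-YOLOv5s": (86.3, 58.4),
    "YOLOv5s-SimAM": (85.8, 91.7),
    "GS-VoV-YOLOv5s": (88.2, 97.1),
    "DGSV-YOLOv5s-SA": (90.3, 86.6),
}


def deltas(results, baseline=BASELINE):
    """Return {model: (mAP change in points, FPS change)} relative to the baseline."""
    base_map, base_fps = results[baseline]
    out = {}
    for name, (m, fps) in results.items():
        if name == baseline:
            continue
        # Round to one decimal to match the precision of the table
        out[name] = (round(m - base_map, 1), round(fps - base_fps, 1))
    return out


if __name__ == "__main__":
    for name, (d_map, d_fps) in deltas(RESULTS).items():
        print(f"{name}: {d_map:+.1f} mAP points, {d_fps:+.1f} FPS")
```

With these values, the full DGSV-YOLOv5s-SA model gains 6.1 points of mAP@0.5:0.95 and 3.3 FPS over YOLOv5s. The decoupled head on its own costs 24.9 FPS.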

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Wang, S.; Zhu, J.; Li, Z.; Sun, X.; Wang, G. Coal Gangue Target Detection Based on Improved YOLOv5s. Appl. Sci. 2023, 13, 11220. https://doi.org/10.3390/app132011220
