Multi-Object Deep-Field Digital Holographic Imaging Based on Inverse Cross-Correlation

Abstract

1. Introduction

2. Principle

2.1. Digital Holographic Reconstruction

2.2. Introduction of Image Cross-Correlation

2.3. Calculation Flow Chart

3. Numerical Calculation Results

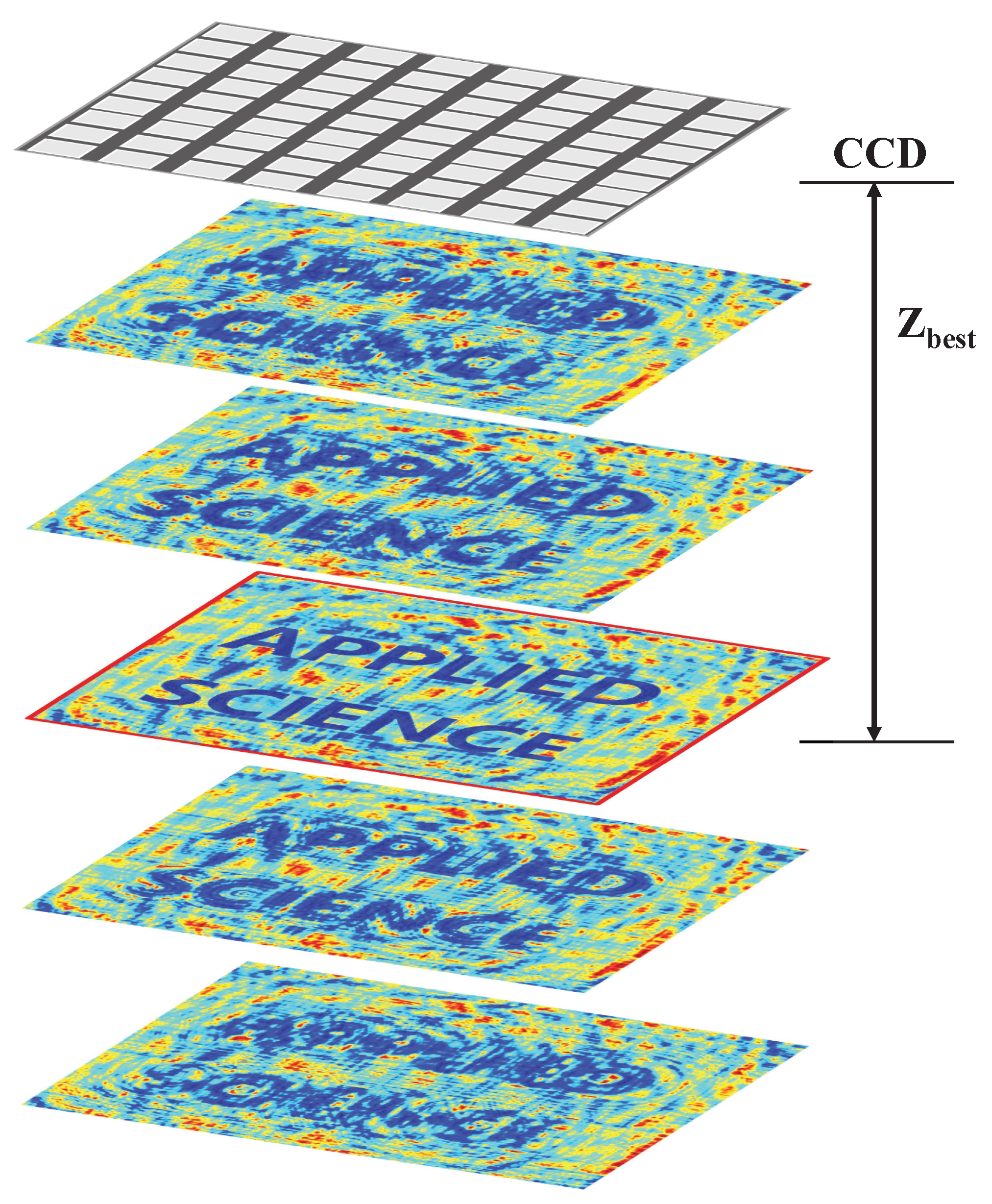

3.1. Viability Verification

3.2. Comparison with Single-Depth Estimation Algorithms

4. Experiment

4.1. Large Depth-of-Field Reconstruction in the Non-Overlapping Case

4.2. Large Depth-of-Field Reconstruction in the Overlapping Case

5. Discussion

5.1. Factors Affecting Depth of Field

5.2. Axial Resolution

6. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Zhao, J.; Gao, Z.; Wang, S.; Niu, Y.; Deng, L.; Sa, Y. Multi-Object Deep-Field Digital Holographic Imaging Based on Inverse Cross-Correlation. Appl. Sci. 2023, 13, 11430. https://doi.org/10.3390/app132011430