Early Predictability of Grasping Movements by Neurofunctional Representations: A Feasibility Study

Abstract

1. Introduction

1.1. Motion Classes Due to Activation Patterns

1.2. Source Network Upstreaming

1.3. Timing in Hand Pre-Shaping

1.4. Neurofunctional Synergies

1.5. Derived Study Aims

- To study the general suitability of currently used classifiers for discriminating grasping postures;

- To identify the most meaningful way to categorize grasping motions, striking a good balance between achievable discrimination accuracy and grasping postures that are adequate for prosthetics;

- To study whether classification is, in principle, possible within a time range that ends at actual MGA completion, or even within a time range before movement initiation, to better address the critical timing problem in hand pre-shaping;

- To determine whether only the waking-state frequency bands are suitable, as found in other studies, or whether the complete EEG band is more suitable because it captures earlier and/or different neurofunctional representations than those used in previous BCI studies;

- To investigate whether the risk of overstraining an amputee while generating an individual classifier can be avoided by using a pre-trained source network.

2. Methods

2.1. Study Protocol

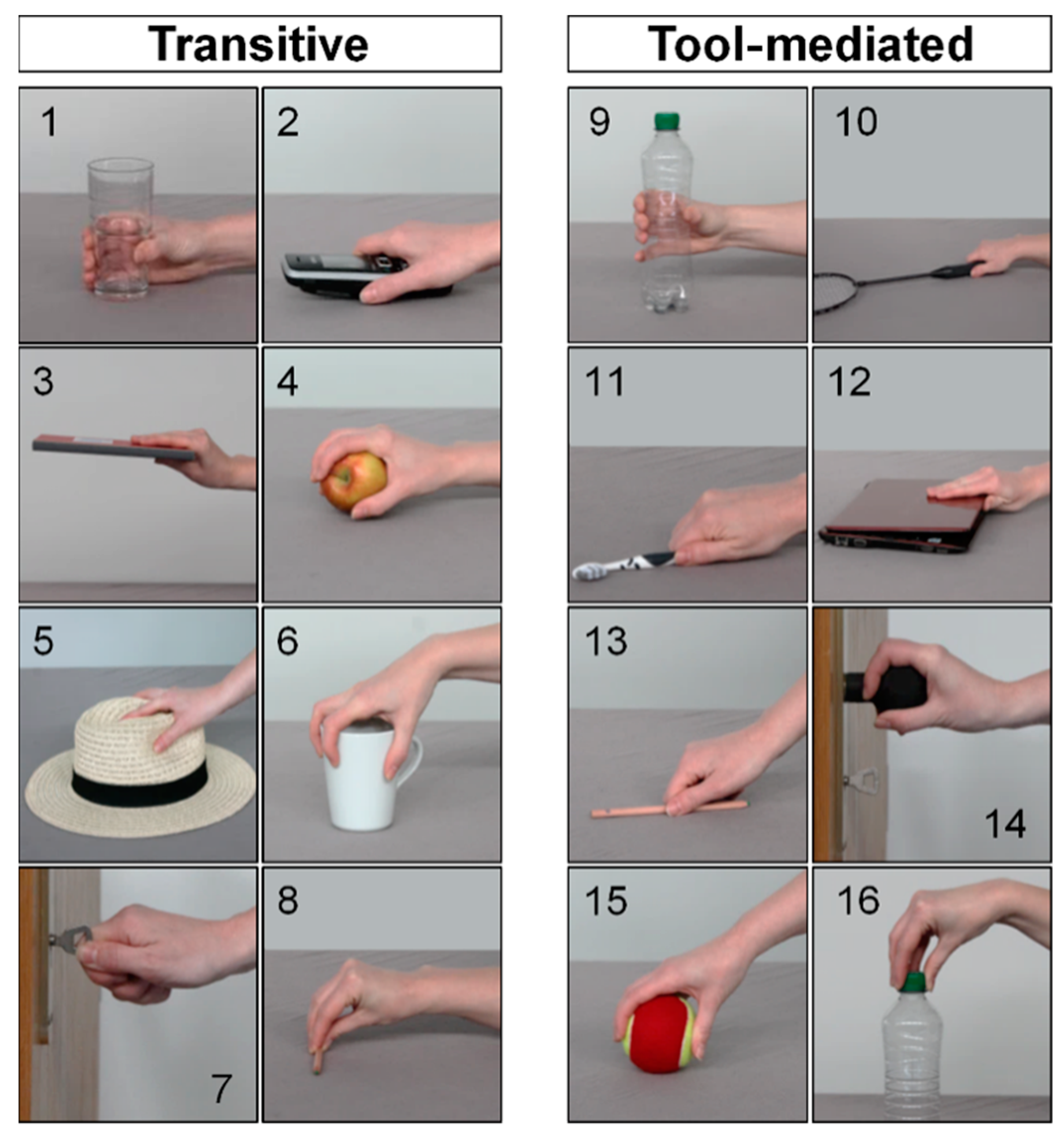

2.2. Grasping Posture Categorizations

2.3. Data Processing and Epoching

2.4. Feature Extraction and Classifiers

2.5. Validation and Hyper-Parameters

2.6. Learning Scenarios

3. Results

3.1. Classifiers

3.2. Grasping Posture Categorizations

3.3. Frequency Bands and Epoch Length

4. Discussion

4.1. Pre-Training

4.2. Classifiers

4.3. Categorization of Grasping Postures

4.4. Epoching

4.5. Frequency Bands

4.6. Limitations

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

Abbreviations

General Acronyms

| Acronym | Definition |
|---|---|
| ADLs | activities of daily living |
| BA | balanced accuracy |
| BCI | brain–computer interface |
| C5 | grasping categorization with 5 classes, based on the Cutkosky taxonomy |
| CNS | central nervous system |
| CV | cross-validation |
| DOF | degree of freedom |
| DV | digital video |
| EEG | electroencephalography |
| EL, ES | long and short epochs |
| F4 | grasping categorization with 4 classes, based on the Feix taxonomy |
| G6 | grasping categorization with 6 classes |
| MGA | maximum grip aperture |
| MRI | magnetic resonance imaging |
| S1–S13 | subjects 1–13 |
| sEMG | surface electromyography |
| T1, T2, T3 | time intervals 1–3, each lasting 500 ms |
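The abbreviation list defines T1, T2 and T3 as intervals lasting 500 ms each. As a minimal sketch, assuming the three intervals are consecutive and begin at a hypothetical reference sample `onset` (the anchoring event, the sampling rate `fs`, the array layout and the function name are all assumptions, not taken from the study), one trial could be cut into T1–T3 like this:

```python
import numpy as np


def split_into_intervals(eeg, onset, fs, n_intervals=3, interval_ms=500):
    """Cut consecutive, equally long intervals (e.g. T1-T3) from one trial.

    eeg   : array of shape (n_channels, n_samples) holding one trial
    onset : index of the first sample of T1 (hypothetical reference event)
    fs    : sampling rate in Hz (assumed; not stated in this section)
    Returns an array of shape (n_intervals, n_channels, samples_per_interval).
    """
    samples_per_interval = int(round(fs * interval_ms / 1000))
    stop = onset + n_intervals * samples_per_interval
    if onset < 0 or stop > eeg.shape[1]:
        raise ValueError("requested intervals exceed the recorded trial")
    segment = eeg[:, onset:stop]
    # Split the time axis into n_intervals blocks of equal length, then move
    # the interval index to the front so that result[0] is T1, result[1] is T2, ...
    blocks = segment.reshape(eeg.shape[0], n_intervals, samples_per_interval)
    return blocks.transpose(1, 0, 2)


if __name__ == "__main__":
    fs = 1000  # hypothetical sampling rate in Hz
    trial = np.random.default_rng(0).standard_normal((32, 3 * fs))
    t1, t2, t3 = split_into_intervals(trial, onset=500, fs=fs)
    print(t1.shape, t2.shape, t3.shape)
```

Keeping the intervals as a leading axis makes it easy to classify each interval separately or to concatenate neighbouring intervals into a longer epoch.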

Feature Extraction and Classifier Acronyms

| Acronym | Definition |
|---|---|
| CSP | common spatial pattern filter |
| FgMDM | MDM classifier extended by geodesic filtering |
| LDA | linear discriminant analysis classifier |
| LR | logistic regression classifier |
| MDM | (Riemannian) minimum distance to mean classifier |
| SVM | support vector machine classifier |
| TS | tangent space |
| TS_LR | LR classifier in tangent space |
| TS_SVM | SVM classifier in tangent space |
| xDAWN | spatial filter with covariance matrix estimation |
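The MDM entry refers to classifying a trial by the (Riemannian) minimum distance between its covariance matrix and per-class mean covariance matrices, and BA denotes balanced accuracy. The NumPy sketch below illustrates both ideas under stated assumptions; it is not the study's implementation. All function and class names are hypothetical, the class means are computed with a plain fixed-point iteration for the affine-invariant Riemannian mean, and no regularization, geodesic filtering (as in FgMDM) or tangent-space classifier is included. Tested implementations of these methods exist in libraries such as pyRiemann.

```python
import numpy as np


def _apply_to_eigenvalues(C, fn):
    """Apply a scalar function to a symmetric matrix via its eigendecomposition."""
    C = (C + C.T) / 2.0  # remove round-off asymmetry before eigh
    w, V = np.linalg.eigh(C)
    return (V * fn(w)) @ V.T


def covariances(X):
    """Sample covariance per trial; X has shape (n_trials, n_channels, n_samples)."""
    Xc = X - X.mean(axis=2, keepdims=True)
    return np.einsum("tcs,tds->tcd", Xc, Xc) / (X.shape[2] - 1)


def riemann_distance(A, B):
    """Affine-invariant distance ||log(A^-1/2 B A^-1/2)||_F between SPD matrices."""
    A_isqrt = _apply_to_eigenvalues(A, lambda w: 1.0 / np.sqrt(w))
    S = A_isqrt @ B @ A_isqrt
    w = np.linalg.eigvalsh((S + S.T) / 2.0)
    return float(np.sqrt(np.sum(np.log(w) ** 2)))


def riemann_mean(covs, n_iter=50, tol=1e-9):
    """Riemannian (Karcher) mean by fixed-point iteration in the tangent space."""
    M = np.mean(covs, axis=0)  # start from the arithmetic mean
    for _ in range(n_iter):
        M_sqrt = _apply_to_eigenvalues(M, np.sqrt)
        M_isqrt = _apply_to_eigenvalues(M, lambda w: 1.0 / np.sqrt(w))
        # Mean of all covariances mapped to the tangent space at the current M
        T = np.mean([_apply_to_eigenvalues(M_isqrt @ C @ M_isqrt, np.log) for C in covs], axis=0)
        M = M_sqrt @ _apply_to_eigenvalues(T, np.exp) @ M_sqrt
        if np.linalg.norm(T) < tol:
            break
    return M


class MinimumDistanceToMean:
    """Hypothetical MDM sketch: one Riemannian mean per class, nearest mean wins."""

    def fit(self, covs, y):
        y = np.asarray(y)
        self.classes_ = np.unique(y)
        self.means_ = [riemann_mean(covs[y == c]) for c in self.classes_]
        return self

    def predict(self, covs):
        dist = np.array([[riemann_distance(M, C) for M in self.means_] for C in covs])
        return self.classes_[dist.argmin(axis=1)]


def balanced_accuracy(y_true, y_pred):
    """Mean per-class recall, so that unequal class sizes do not inflate the score."""
    y_true, y_pred = np.asarray(y_true), np.asarray(y_pred)
    recalls = [np.mean(y_pred[y_true == c] == c) for c in np.unique(y_true)]
    return float(np.mean(recalls))


if __name__ == "__main__":
    rng = np.random.default_rng(0)

    def make_trials(n, boosted_channel):
        X = rng.standard_normal((n, 4, 200))
        X[:, boosted_channel, :] *= 3.0  # synthetic class-specific variance pattern
        return X

    X = np.concatenate([make_trials(20, 0), make_trials(20, 1)])
    y = np.array([0] * 20 + [1] * 20)
    covs = covariances(X)
    train, test = np.r_[0:15, 20:35], np.r_[15:20, 35:40]
    clf = MinimumDistanceToMean().fit(covs[train], y[train])
    print(balanced_accuracy(y[test], clf.predict(covs[test])))
```

The data in the usage example are synthetic; a real evaluation would repeat the fit and prediction across cross-validation folds and report the balanced accuracy per fold.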


| Variant | Class No. | Grip Pattern | ADLs |
|---|---|---|---|
| G6 | 1 | Power prismatic grip | 1, 9, 10 |
| | 2 | Power prismatic adducted thumb | 2, 5 |
| | 3 | Power lateral pinch | 3, 12 |
| | 4 | Power circular grip | 4, 6, 14, 15 |
| | 5 | Key pinch grip | 7, 16 |
| | 6 | Precision | 8, 11, 13 |
| C5 | 1 | Power prehensile lateral pinch | 3, 12 |
| | 2 | Power prehensile circular sphere | 4, 15 |
| | 3 | Power prehensile prismatic wrap | 1, 9 |
| | 4 | Precision circular sphere | 6, 14 |
| | 5 | Precision prismatic thumb 3 finger | 8 |
| F4 | 1 | Power palm | 1, 4, 9, 15 |
| | 2 | Intermediate side | 7, 10, 11, 13, 16 |
| | 3 | Precision pad 2–5 thumb abduction | 6, 14 |
| | 4 | Precision pad 2–5 thumb adduction | 3, 12 |
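The table assigns each ADL to at most one grip class per categorization variant; G6 covers all 16 ADLs, whereas C5 and F4 leave some ADLs unassigned. A small Python sketch can hold this mapping as data and relabel trials by their ADL number. The names are hypothetical, and dropping trials whose ADL has no class in the chosen variant is one plausible handling, not necessarily the study's.

```python
# ADL numbers per grip class, transcribed from the table above.
CATEGORIZATIONS = {
    "G6": {1: [1, 9, 10], 2: [2, 5], 3: [3, 12], 4: [4, 6, 14, 15], 5: [7, 16], 6: [8, 11, 13]},
    "C5": {1: [3, 12], 2: [4, 15], 3: [1, 9], 4: [6, 14], 5: [8]},
    "F4": {1: [1, 4, 9, 15], 2: [7, 10, 11, 13, 16], 3: [6, 14], 4: [3, 12]},
}


def adl_to_class(variant):
    """Invert the table: map each ADL number to its grip class in one variant."""
    lookup = {}
    for grip_class, adls in CATEGORIZATIONS[variant].items():
        for adl in adls:
            if adl in lookup:
                raise ValueError(f"ADL {adl} is assigned twice in {variant}")
            lookup[adl] = grip_class
    return lookup


def relabel_trials(trial_adls, variant):
    """Return (kept trial indices, class labels); trials with unassigned ADLs are dropped."""
    lookup = adl_to_class(variant)
    kept = [i for i, adl in enumerate(trial_adls) if adl in lookup]
    return kept, [lookup[trial_adls[i]] for i in kept]


if __name__ == "__main__":
    trials = [1, 2, 3, 8, 10, 16]  # hypothetical ADL number of each recorded trial
    for variant in CATEGORIZATIONS:
        print(variant, relabel_trials(trials, variant))
```

Because the same recorded trials are reused for every variant, the coarser or partial categorizations simply change the labels and the subset of trials entering the classifier.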

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Jakubowitz, E.; Feist, T.; Obermeier, A.; Gempfer, C.; Hurschler, C.; Windhagen, H.; Laves, M.-H. Early Predictability of Grasping Movements by Neurofunctional Representations: A Feasibility Study. Appl. Sci. 2023, 13, 5728. https://doi.org/10.3390/app13095728
