Adaptive Detection and Classification of Brain Tumour Images Based on Photoacoustic Imaging

Abstract

1. Introduction

2. Materials and Methods

2.1. An Overview of the Framework

2.2. Photoacoustic Simulation Image

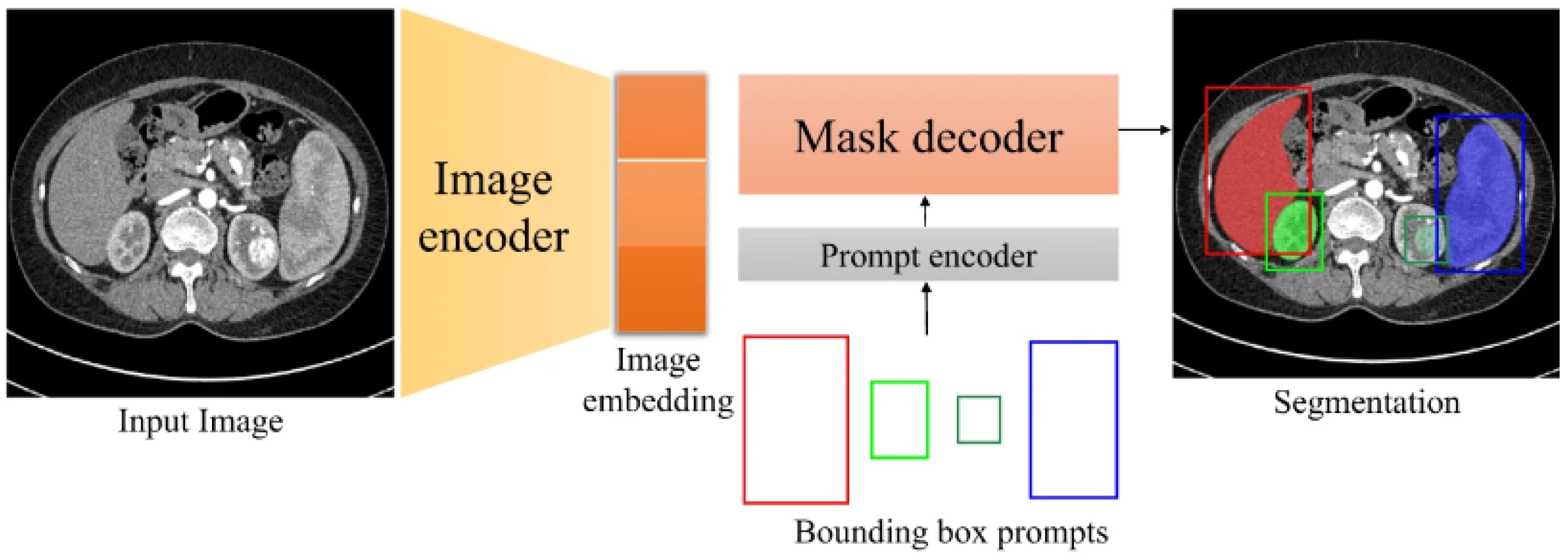

2.3. Deep Learning Algorithm for Brain Tumour Detection and Segmentation

2.4. Deep Learning Algorithms for Brain Tumour Classification

3. Results and Discussion

3.1. Experimental Data

3.2. Indicators for Model Evaluation

3.2.1. Accuracy, Precision, Recall, Confusion Matrix, Sensitivity, Dice Coefficient, and Specificity
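The paper's exact formulas are not reproduced in this section. The sketch below uses the standard definitions of these indicators for a binary task (tumour = positive class), all derived from the four confusion-matrix counts. The function names `confusion_counts` and `binary_metrics` are illustrative helpers, not taken from the paper. The same counts work for image-level labels (classification) and for flattened pixel masks (segmentation, where the Dice coefficient is normally reported).

```python
import numpy as np


def confusion_counts(y_true, y_pred):
    """Return (TP, FP, FN, TN) for binary labels or masks; 1/True = tumour (positive)."""
    y_true = np.asarray(y_true).astype(bool).ravel()
    y_pred = np.asarray(y_pred).astype(bool).ravel()
    tp = int(np.sum(y_true & y_pred))    # tumour predicted as tumour
    fp = int(np.sum(~y_true & y_pred))   # background predicted as tumour
    fn = int(np.sum(y_true & ~y_pred))   # tumour missed
    tn = int(np.sum(~y_true & ~y_pred))  # background correctly rejected
    return tp, fp, fn, tn


def binary_metrics(y_true, y_pred):
    """Standard evaluation indicators computed from the confusion matrix."""
    tp, fp, fn, tn = confusion_counts(y_true, y_pred)
    total = tp + fp + fn + tn
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0          # recall is the same as sensitivity
    specificity = tn / (tn + fp) if tn + fp else 0.0
    # Dice = 2TP / (2TP + FP + FN); assumed convention: 1.0 when both masks are empty.
    dice_den = 2 * tp + fp + fn
    dice = 2 * tp / dice_den if dice_den else 1.0
    return {
        "accuracy": (tp + tn) / total,
        "precision": precision,
        "recall": recall,
        "sensitivity": recall,
        "specificity": specificity,
        "dice": dice,
        # Rows = true class (0, 1), columns = predicted class (0, 1).
        "confusion_matrix": [[tn, fp], [fn, tp]],
    }
```

For example, with ground truth `[1, 1, 1, 1, 0, 0, 0, 0, 0, 0]` and prediction `[1, 1, 1, 0, 1, 0, 0, 0, 0, 0]` the counts are TP = 3, FP = 1, FN = 1, TN = 5, giving accuracy 0.80, precision = recall = Dice = 0.75 and specificity of about 0.83. For a binary task the Dice coefficient is numerically identical to the F1 score of the positive class.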

3.2.2. F1 Confidence Level
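The section title suggests an F1-confidence analysis of the kind reported by YOLO-family detectors (the reference list cites YOLOv8), in which F1 is recomputed while the detection confidence threshold is swept. That reading is an assumption. The sketch below further assumes that each prediction has already been matched to ground truth (the `is_true_positive` flag), which sidesteps IoU matching; `f1_confidence_curve` is a hypothetical helper, not part of any detector library.

```python
import numpy as np


def f1_confidence_curve(confidences, is_true_positive, n_ground_truth, thresholds):
    """F1 at each confidence threshold, plus the threshold that maximises it.

    confidences      -- detector score of each prediction
    is_true_positive -- True if that prediction matched a ground-truth tumour
    n_ground_truth   -- total number of ground-truth tumours
    thresholds       -- confidence values to sweep
    """
    conf = np.asarray(confidences, dtype=float)
    tp_flag = np.asarray(is_true_positive, dtype=bool)
    thresholds = np.asarray(thresholds, dtype=float)
    f1_scores = []
    for t in thresholds:
        keep = conf >= t                      # predictions surviving this threshold
        tp = int(np.sum(tp_flag & keep))
        fp = int(np.sum(~tp_flag & keep))
        fn = n_ground_truth - tp              # unmatched ground-truth objects
        precision = tp / (tp + fp) if tp + fp else 0.0
        recall = tp / (tp + fn) if tp + fn else 0.0
        f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
        f1_scores.append(f1)
    f1_scores = np.array(f1_scores)
    best = int(np.argmax(f1_scores))          # first threshold with the highest F1
    return f1_scores, float(thresholds[best]), float(f1_scores[best])
```

With scores `[0.9, 0.8, 0.7, 0.6, 0.3]`, match flags `[True, True, False, True, False]`, four ground-truth tumours and thresholds `[0.25, 0.5, 0.75]`, F1 is about 0.67, 0.75 and 0.67, so 0.5 is the best threshold. A low threshold admits false positives and a high one drops true positives; the peak of the curve balances the two.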

3.3. Detection and Segmentation Experiment Results and Analysis

3.4. CNN Classification Experiment Results and Analysis

4. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

- Focusing on brain tumours and brain metastasis. Nat. Rev. Cancer 2020, 20, 1.

- Li, R. Precise intraoperative brain tumor classification. Nat. Biotechnol. 2023, 41, 1521.

- Iyer, V.R.; Kuo, J.S. Emerging Frontiers of Therapeutic Strategies for Brain Tumors: A NeuroMolecular Medicine Special Issue Introduction. Neuromolecular Med. 2022, 24, 1–2.

- Solar, P.; Hendrych, M.; Barak, M.; Valekova, H.; Hermanova, M.; Jancalek, R. Blood-Brain Barrier Alterations and Edema Formation in Different Brain Mass Lesions. Front. Cell. Neurosci. 2022, 16, 922181.

- Kumaria, A. Tumor treating fields in pediatric brain tumors: Overcoming challenges. Childs Nerv. Syst. 2022, 38, 1847–1848.

- Bruschi, M.; Grill, J.; Guerrini-Rousseau, L. Pediatric brain tumors as a developmental disease. Curr. Opin. Oncol. 2021, 33, 608–614.

- Miller, K.D.; Ostrom, Q.T.; Kruchko, C.; Patil, N.; Tihan, T.; Cioffi, G.; Fuchs, H.E.; Waite, K.A.; Jemal, A.; Siegel, R.L.; et al. Brain and other central nervous system tumor statistics, 2021. CA Cancer J. Clin. 2021, 71, 381–406.

- Wang, Y.; Wang, Z.; Hua, C.; Xu, Y.; Li, Y.; Zhao, G. Primary malignant brain tumors following systemic malignancies: A population-based analysis. Neuroepidemiology 2023, 56, 452–459.

- Al-Kadi, O.S.; Almallahi, I.; Abu-Srhan, A.; Abushariah, A.M.M.; Mahafza, W. Unpaired MR-CT brain dataset for unsupervised image translation. Data Brief 2022, 42, 108109.

- Juan, W.Y. Generating Synthesized Computed Tomography (CT) from Magnetic Resonance Imaging Using Cycle-Consistent Generative Adversarial Network for Brain Tumor Radiation Therapy. Int. J. Radiat. Oncol. Biol. Phys. 2021, 111, E111–E112.

- Romano, M.; Bravin, A.; Mittone, A.; Eckhardt, A.; Barbone, G.E.; Sancey, L.; Dinkel, J.; Bartzsch, S.; Ricke, J.; Alunni-Fabbroni, M.; et al. A Multi-Scale and Multi-Technique Approach for the Characterization of the Effects of Spatially Fractionated X-ray Radiation Therapies in a Preclinical Model. Cancers 2021, 13, 4953.

- Khan, M.K.H.; Guo, W.; Liu, J.; Dong, F.; Li, Z.; Patterson, T.A.; Hong, H. Machine learning and deep learning for brain tumor MRI image segmentation. Exp. Biol. Med. 2023, 248, 1974–1992.

- Qiu, T.; Lan, Y.; Gao, W.; Zhou, M.; Liu, S.; Huang, W.; Zeng, S.; Pathak, J.L.; Yang, B.; Zhang, J. Photoacoustic imaging as a highly efficient and precise imaging strategy for the evaluation of brain diseases. Quant. Imaging Med. Surg. 2021, 11, 2169–2186.

- Zhang, J.; Chen, B.; Zhou, M.; Lan, H.; Gao, F. Photoacoustic Image Classification and Segmentation of Breast Cancer: A Feasibility Study. IEEE Access 2019, 7, 5457–5466.

- He, Q.; Duan, Y.; Miles, J.; Takahashi, N. A Context-Sensitive Active Contour for 2D Corpus Callosum Segmentation. Int. J. Biomed. Imaging 2007, 2007, 024826.

- Li, Y.; Chi, Z. MR brain image segmentation based on self-organizing map network. Int. J. Inf. Technol. 2005, 11, 45–53.

- Noori, M.; Bahri, A.; Mohammadi, K. Attention-guided version of 2D UNet for automatic brain tumor segmentation. In Proceedings of the 2019 9th International Conference on Computer and Knowledge Engineering (ICCKE), Mashhad, Iran, 24–25 October 2019; pp. 269–275.

- Thias, A.H.; Al Mubarok, A.F.; Handayani, A.; Danudirdjo, D.; Rajab, T.E. Brain tumor semi-automatic segmentation on MRI T1-weighted images using active contour models. In Proceedings of the 2019 International Conference on Mechatronics, Robotics and Systems Engineering (MoRSE), Bali, Indonesia, 4–6 December 2019; pp. 217–221.

- Sungheetha, A.; Sharma, R. GTIKF-Gabor-transform incorporated k-means and fuzzy c-means clustering for edge detection in CT and MRI. J. Soft Comput. Paradig. 2020, 2, 111–119.

- Lan, H.; Duan, T.; Zhong, H.; Zhou, M.; Gao, F. Photoacoustic classification of tumor model morphology based on support vector machine: A simulation and phantom study. IEEE J. Sel. Top. Quantum Electron. 2018, 25, 1–9.

- Al-Galal, S.A.Y.; Alshaikhli, I.F.T.; Abdulrazzaq, M.M.; Hassan, R. Brain Tumor MRI Medical Images Classification Model Based on CNN (BTMIC-CNN). J. Eng. Sci. Technol. 2022, 17, 4410–4432.

- Ayadi, W.; Elhamzi, W.; Charfi, I.; Atri, M. Deep CNN for brain tumor classification. Neural Process. Lett. 2021, 53, 671–700.

- Haq, A.U.; Li, J.P.; Kumar, R.; Ali, Z.; Khan, I.; Uddin, M.I.; Agbley, B.L.Y. MCNN: A multi-level CNN model for the classification of brain tumors in IoT-healthcare system. J. Ambient Intell. Humaniz. Comput. 2023, 14, 4695–4706.

- Kasukurthi, A.; Paleti, L.; Brahmaiah, M.; Sree, C.S. Enhanced CNN Model for Brain Tumor Classification. Int. J. Comput. Sci. Netw. Secur. 2022, 22, 143–148.

- Treeby, B.E.; Cox, B.T. k-Wave: MATLAB toolbox for the simulation and reconstruction of photoacoustic wave fields. J. Biomed. Opt. 2010, 15, 021314.

- Ma, J.; He, Y.; Li, F.; Han, L.; You, C.; Wang, B. Segment anything in medical images. Nat. Commun. 2024, 15, 654.

- Reis, D.; Kupec, J.; Hong, J.; Daoudi, A. Real-time flying object detection with YOLOv8. arXiv 2023, arXiv:2305.09972.

- Uygun, T.; Ozguven, M.M. Determination of tomato leafminer: Tuta absoluta (Meyrick) (Lepidoptera: Gelechiidae) damage on tomato using deep learning instance segmentation method. Eur. Food Res. Technol. 2024, 250, 1837–1852.

- Hussain, M. YOLO-v1 to YOLO-v8, the Rise of YOLO and Its Complementary Nature toward Digital Manufacturing and Industrial Defect Detection. Machines 2023, 11, 677.

- Latif, G. DeepTumor: Framework for Brain MR Image Classification, Segmentation and Tumor Detection. Diagnostics 2022, 12, 2888.

- Redmon, J.; Divvala, S.; Girshick, R.; Farhadi, A. You only look once: Unified, real-time object detection. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 779–788.

- Oshino, S.; Kato, A.; Wakayama, A.; Taniguchi, M.; Hirata, M.; Yoshimine, T. Magnetoencephalographic analysis of cortical oscillatory activity in patients with brain tumors: Synthetic aperture magnetometry (SAM) functional imaging of delta band activity. Neuroimage 2007, 34, 957–964.

- Tiwari, P.; Pant, B.; Elarabawy, M.M.; Abd-Elnaby, M.; Mohd, N.; Dhiman, G.; Sharma, S. CNN Based Multiclass Brain Tumor Detection Using Medical Imaging. Comput. Intell. Neurosci. 2022, 2022, 1830010.

- Mukkapati, N.; Anbarasi, M. Brain tumor classification based on enhanced CNN model. Rev. Intell. Artif. 2022, 36, 125.

| Class | Accuracy (%) | Dice Score (%) |
|---|---|---|
| Background | 96.52 | 88.07 |
| Brain tumour | 94.23 | 81.35 |

| Input image | Class | Precision (%) | Recall (%) | Binary Accuracy (%) |
|---|---|---|---|---|
| Original image | all | 94.12 | 94.07 | 94.01 |
| | positive | 96.03 | 92.31 | |
| | negative | 92.21 | 95.83 | |
| Segmented image | all | 97.02 | 97.04 | 97.02 |
| | positive | 96.08 | 98.03 | |
| | negative | 97.96 | 96.05 | |
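In the table above, the "all" rows appear to be unweighted (macro) averages of the positive and negative rows. The short check below uses only the numbers transcribed from the table and confirms this to two decimal places. Binary accuracy is not checked because it depends on the class counts, which are not given here. `RESULTS`, `macro_average` and `check_macro_rows` are illustrative names.

```python
import math

# (precision %, recall %) per class, transcribed from the table above.
RESULTS = {
    "Original image": {
        "positive": (96.03, 92.31),
        "negative": (92.21, 95.83),
        "all": (94.12, 94.07),
    },
    "Segmented image": {
        "positive": (96.08, 98.03),
        "negative": (97.96, 96.05),
        "all": (97.02, 97.04),
    },
}


def macro_average(pos, neg):
    """Unweighted mean of the positive- and negative-class metrics."""
    return tuple((p + n) / 2 for p, n in zip(pos, neg))


def check_macro_rows(results, tol=0.005):
    """Map each input type to True if its 'all' row equals the macro average (to 2 d.p.)."""
    out = {}
    for name, rows in results.items():
        avg = macro_average(rows["positive"], rows["negative"])
        out[name] = all(math.isclose(a, b, abs_tol=tol) for a, b in zip(avg, rows["all"]))
    return out


if __name__ == "__main__":
    for name, ok in check_macro_rows(RESULTS).items():
        print(f"{name}: 'all' row equals macro average -> {ok}")
```

For instance, (96.03 + 92.21) / 2 = 94.12 and (92.31 + 95.83) / 2 = 94.07, matching the original-image "all" row.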

| Method | Specificity (%) | Sensitivity (%) |
|---|---|---|
| CNN classification | 92.31 | 94.07 |
| CNN classification (proposed method) | 96.08 | 98.03 |

| Method | Data | Accuracy (%) |
|---|---|---|
| Deep convolutional neural network model | BraTS | 96.30 |
| Support vector machine (SVM) algorithm | Photoacoustic tumour images | 90.00 |
| GoogLeNet model | Breast cancer images | 91.18 |
| AlexNet model | Breast cancer images | 87.69 |
| K-means algorithm | Photoacoustic breast cancer images | 82.14 |
| Deep convolutional neural network model | Photoacoustic brain tumour images | 94.01 |
| Proposed method (this paper) | Photoacoustic brain tumour images | 97.02 |
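Only the last two rows of this comparison were evaluated on the same kind of data (photoacoustic brain tumour images). The other rows use the BraTS MRI dataset or breast cancer images, so their accuracies are not directly comparable. For the two comparable rows, the gain can be expressed both as an absolute accuracy difference and as a relative reduction in error rate:

$$
\Delta_{\text{acc}} = 97.02\% - 94.01\% = 3.01 \text{ percentage points}
$$

$$
\frac{(100 - 94.01) - (100 - 97.02)}{100 - 94.01} = \frac{5.99 - 2.98}{5.99} = \frac{3.01}{5.99} \approx 0.50
$$

Assuming both accuracies were measured on the same test split, the proposed pipeline therefore roughly halves the misclassification rate relative to the CNN applied to unsegmented images.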

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Chen, Y.; Jiang, Y.; He, R.; Yan, S.; Lei, Y.; Zhang, J.; Cao, H. Adaptive Detection and Classification of Brain Tumour Images Based on Photoacoustic Imaging. Appl. Sci. 2024, 14, 5270. https://doi.org/10.3390/app14125270


Chicago/Turabian Style: Chen, Yi, Yufei Jiang, Ruonan He, Shengxian Yan, Yuyang Lei, Jing Zhang, and Hui Cao. 2024. "Adaptive Detection and Classification of Brain Tumour Images Based on Photoacoustic Imaging" Applied Sciences 14, no. 12: 5270. https://doi.org/10.3390/app14125270