Three-Dimensional Dead-Reckoning Based on Lie Theory for Overcoming Approximation Errors

Abstract

1. Introduction

- Enhanced Accuracy in Pose Prediction: The proposed method improves the accuracy of pose prediction for vehicle motion. Lie theory-based methods allow dead-reckoning (DR) to be computed rigorously, without approximations, so that translations and rotations in three-dimensional space are modeled precisely. This results in more accurate vehicle pose determination.

- Elimination of Linear Space Assumptions: By using Lie groups, the method no longer needs to assume that vehicle rotation or attitude forms a linear space. Rotations and attitudes are therefore never forced into a linear space, which avoids the approximation errors that such a step introduces.

- Reduction of Cumulative Approximation Errors: Conventional DR methods suffer from approximation errors that accumulate through their integration operations. These errors are typically caused by inaccuracies in linear and angular velocity measurements, motion prediction time intervals, and computational limitations. By performing accurate computations in nonlinear spaces, the proposed method reduces these cumulative errors and improves estimation accuracy.

- Application in Pose Estimation Algorithms: The method can be used directly as the process model in pose estimation algorithms such as the Kalman filter (KF). It provides an accurate prediction of the current state during the prediction phase, which reduces estimation uncertainty and makes the estimation process more robust and reliable.

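- The results show that the benefit grows with the prediction time interval. At a 0.1 s interval the two methods give nearly the same mean distance error on data (1) to (3). At a 1 s interval the mean distance error of the proposed method is 223.9 m, 76.5 m, 56.9 m, and 146.6 m on data (1) to (4), against 396.2 m, 355.2 m, 107.9 m, and 433.2 m for conventional DR.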

- The proposed method’s ability to mitigate cumulative errors and operate effectively in nonlinear spaces highlights its potential for practical applications in autonomous driving and other related fields.

- The findings suggest that adopting this method can lead to more precise and dependable navigation systems, ultimately contributing to the advancement of autonomous vehicle technologies.

- This approach strengthens the theoretical foundation of vehicle pose estimation and provides a practical solution that can be implemented directly in real-world autonomous systems, with benefits in both performance and reliability.
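The contrast between an exact update on a Lie group and a linearized update can be illustrated with a minimal sketch. It assumes the pose lives on SE(3) and that the body-frame linear velocity `v` and angular velocity `w` are held constant over each interval `dt`; these assumptions, and all function names, are illustrative and not taken from the paper. `first_order_dr_step` is a simple linearized stand-in, not the conventional DR evaluated in the experiments.

```python
import math

def hat(w):
    """Skew-symmetric (cross-product) matrix of a 3-vector."""
    x, y, z = w
    return [[0.0, -z, y], [z, 0.0, -x], [-y, x, 0.0]]

def matmul(a, b):
    return [[sum(a[i][k] * b[k][j] for k in range(3)) for j in range(3)]
            for i in range(3)]

def matvec(a, v):
    return [sum(a[i][k] * v[k] for k in range(3)) for i in range(3)]

def poly(c1, c2, k):
    """Return I + c1*K + c2*K^2 for a 3x3 matrix K."""
    k2 = matmul(k, k)
    return [[float(i == j) + c1 * k[i][j] + c2 * k2[i][j] for j in range(3)]
            for i in range(3)]

def se3_exp(v, w, dt):
    """Exponential map of the body twist (v, w) held constant over dt."""
    phi = [wi * dt for wi in w]                 # rotation vector
    theta = math.sqrt(sum(c * c for c in phi))  # rotation angle
    if theta < 1e-8:                            # small-angle limits
        a, b, c = 1.0, 0.5, 1.0 / 6.0
    else:
        a = math.sin(theta) / theta
        b = (1.0 - math.cos(theta)) / theta ** 2
        c = (theta - math.sin(theta)) / theta ** 3
    k = hat(phi)
    d_rot = poly(a, b, k)                       # Rodrigues rotation formula
    d_pos = matvec(poly(b, c, k), [vi * dt for vi in v])
    return d_rot, d_pos

def lie_dr_step(rot, pos, v, w, dt):
    """Pose update X <- X * Exp(twist * dt) on SE(3)."""
    d_rot, d_pos = se3_exp(v, w, dt)
    moved = matvec(rot, d_pos)
    return matmul(rot, d_rot), [pos[i] + moved[i] for i in range(3)]

def first_order_dr_step(rot, pos, v, w, dt):
    """Linearized update: straight-line motion and R <- R * (I + [w]x * dt)."""
    moved = matvec(rot, [vi * dt for vi in v])
    new_rot = matmul(rot, poly(dt, 0.0, hat(w)))
    return new_rot, [pos[i] + moved[i] for i in range(3)]

def integrate(step, v, w, total_time, n_steps):
    """Apply `step` n_steps times, starting from the identity pose."""
    rot = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]
    pos = [0.0, 0.0, 0.0]
    for _ in range(n_steps):
        rot, pos = step(rot, pos, v, w, total_time / n_steps)
    return rot, pos

if __name__ == "__main__":
    # Quarter circle of radius 1 m: the true end position is (1, 1, 0).
    v, w, total = [1.0, 0.0, 0.0], [0.0, 0.0, 1.0], math.pi / 2
    for n in (2, 16):
        _, p_lie = integrate(lie_dr_step, v, w, total, n)
        _, p_lin = integrate(first_order_dr_step, v, w, total, n)
        print(n, p_lie, p_lin)
```

For a constant twist the exponential-map update reaches the same end pose regardless of how many steps the interval is split into, while the linearized update drifts further as the step grows. This is only a toy setting, but it mirrors the role that the prediction time interval plays in the error discussion above.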

2. Limitation of Previously Used Dead-Reckoning Approach

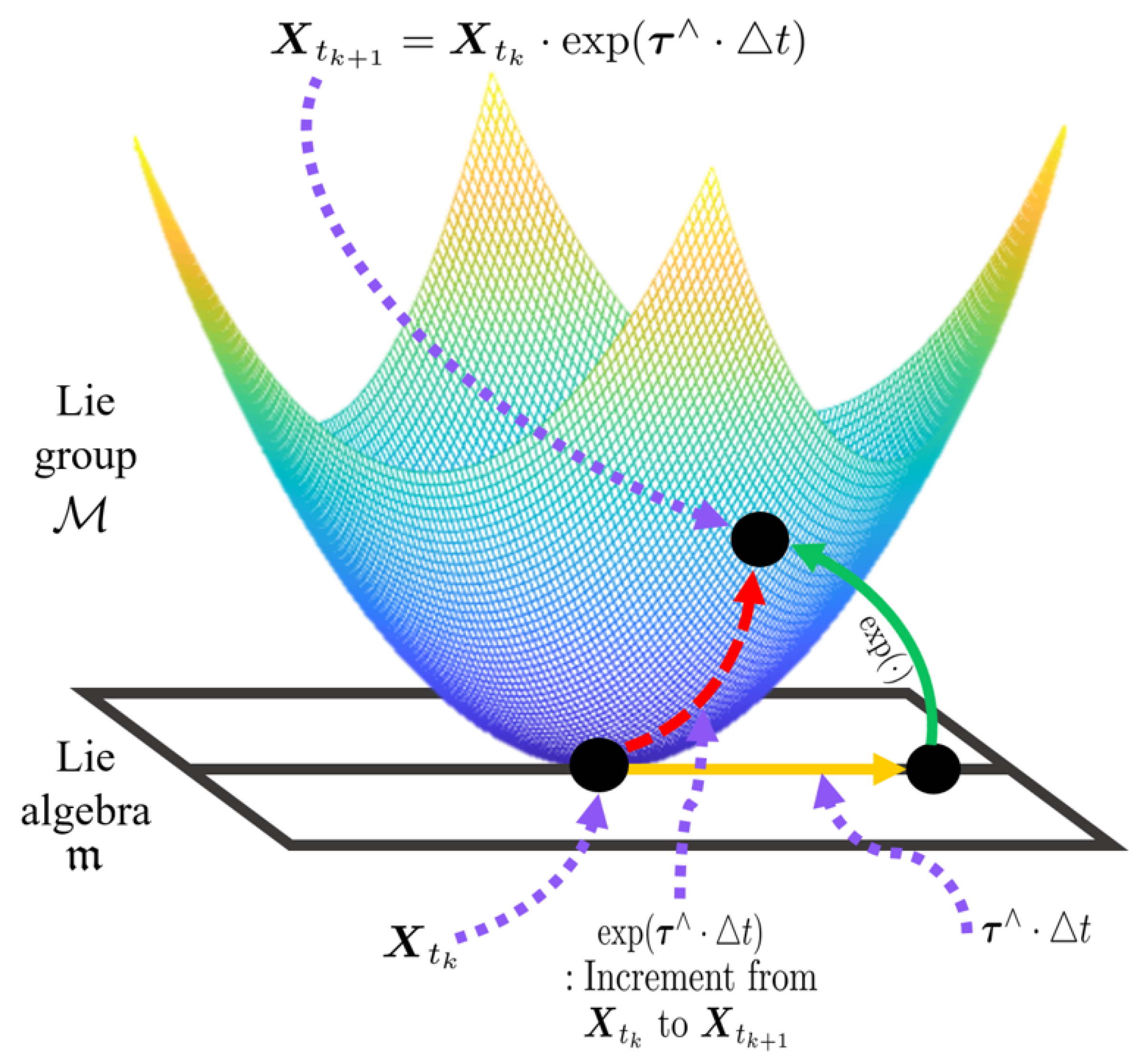

3. Lie Theory-Based Dead-Reckoning Approach

3.1. Lie Theory Tools for Dead-Reckoning

3.2. Lie Theory Application for Three-Dimensional Dead-Reckoning of Vehicles

4. Experiments and Results

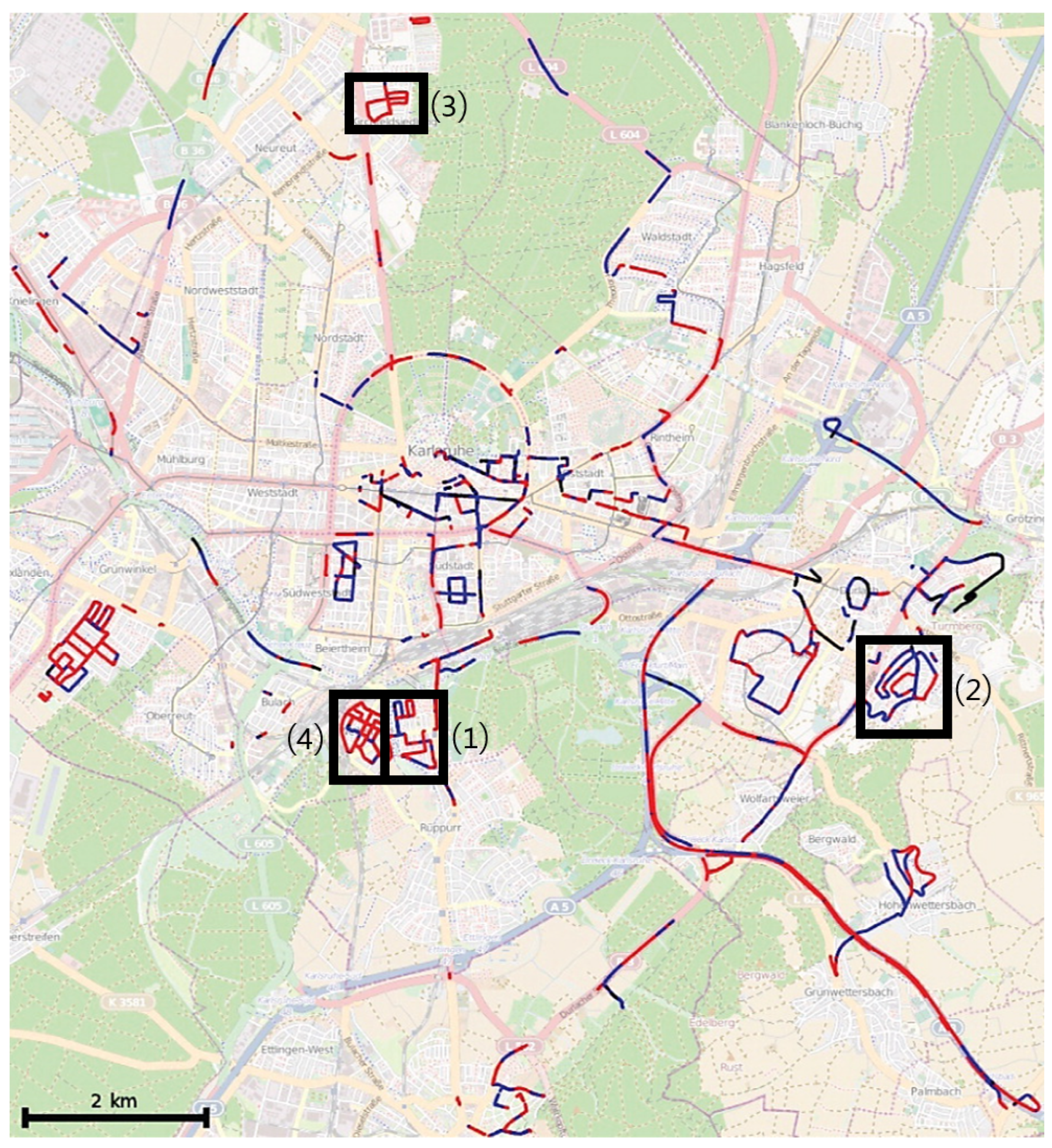

4.1. Experimental Environment

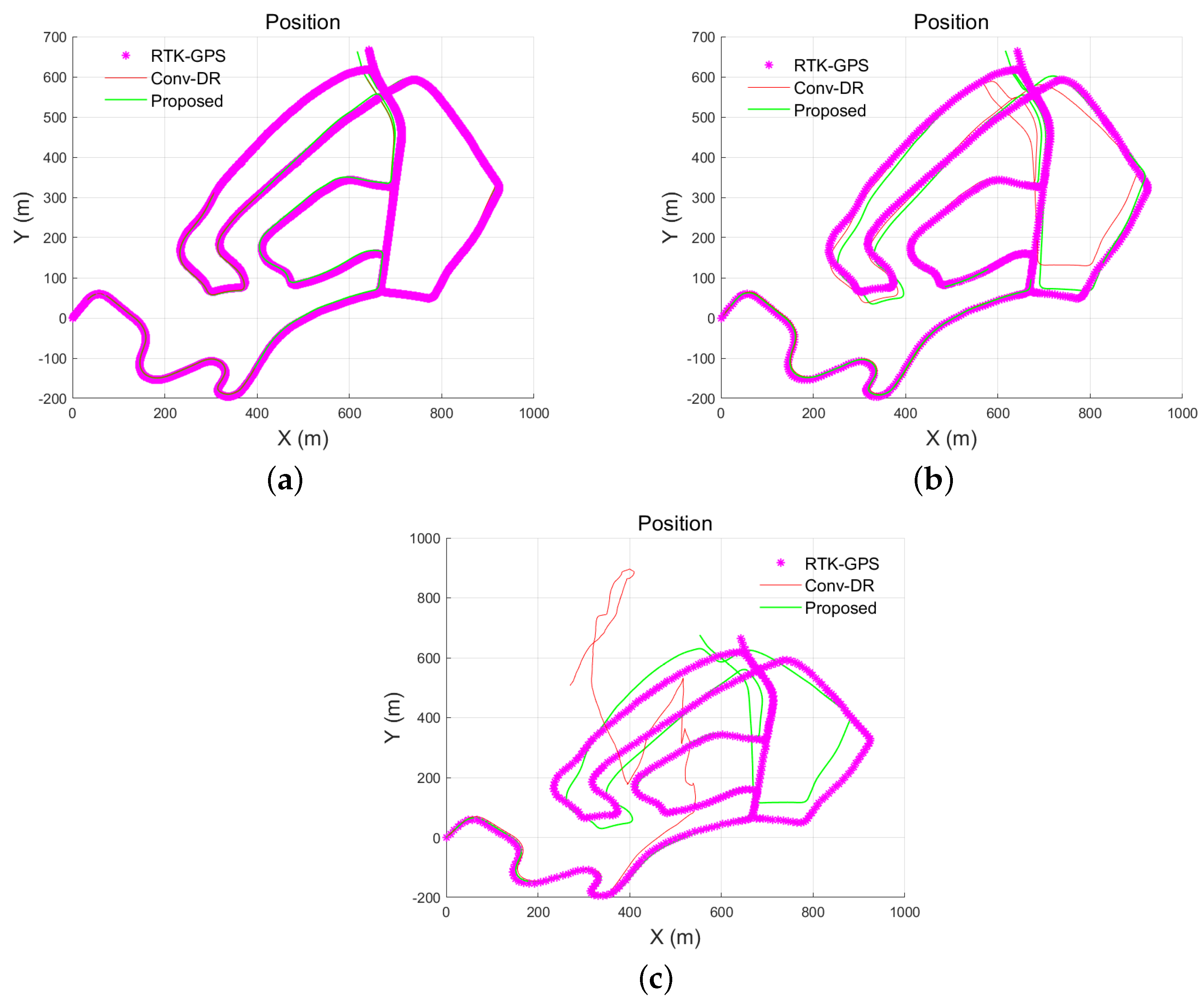

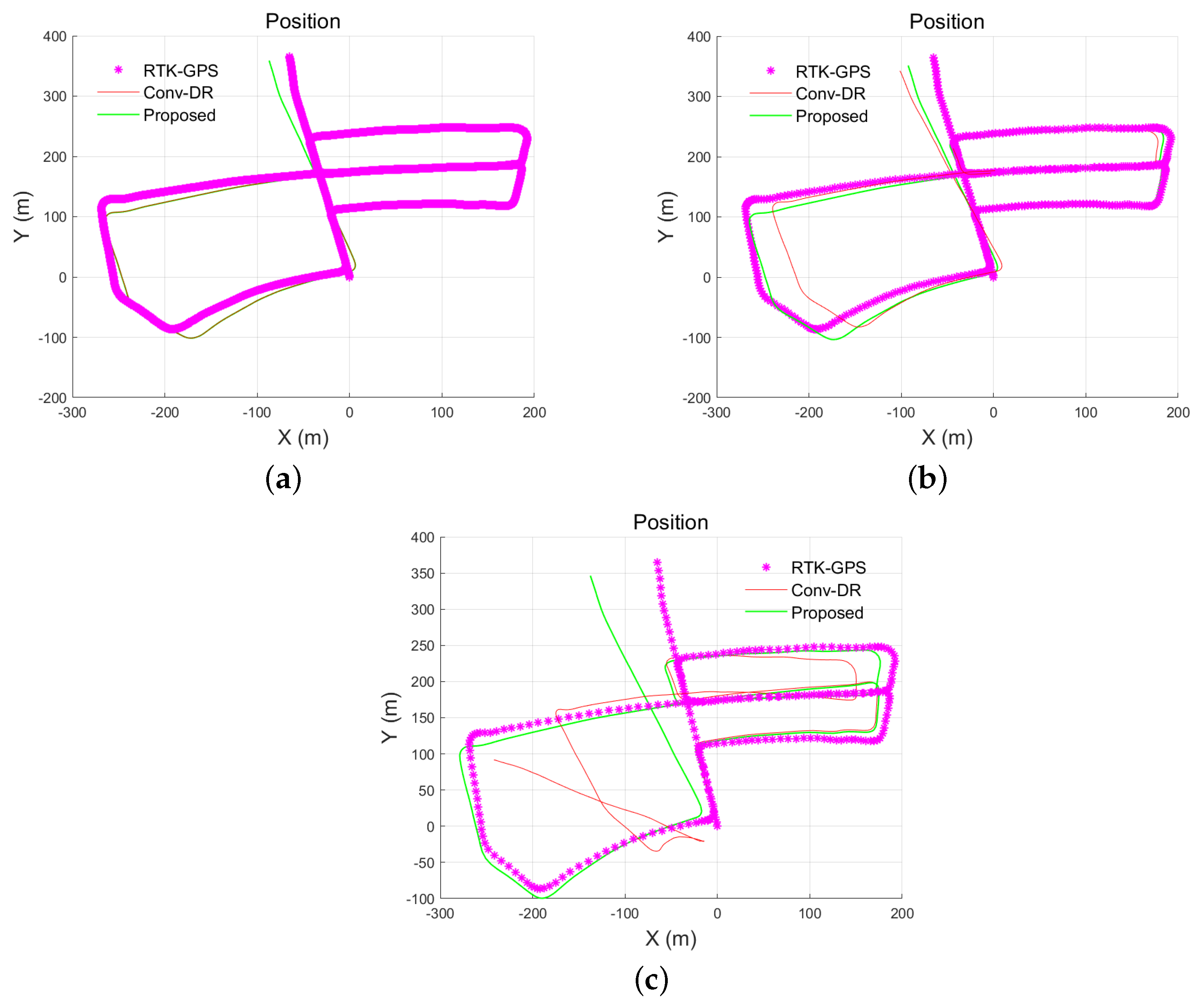

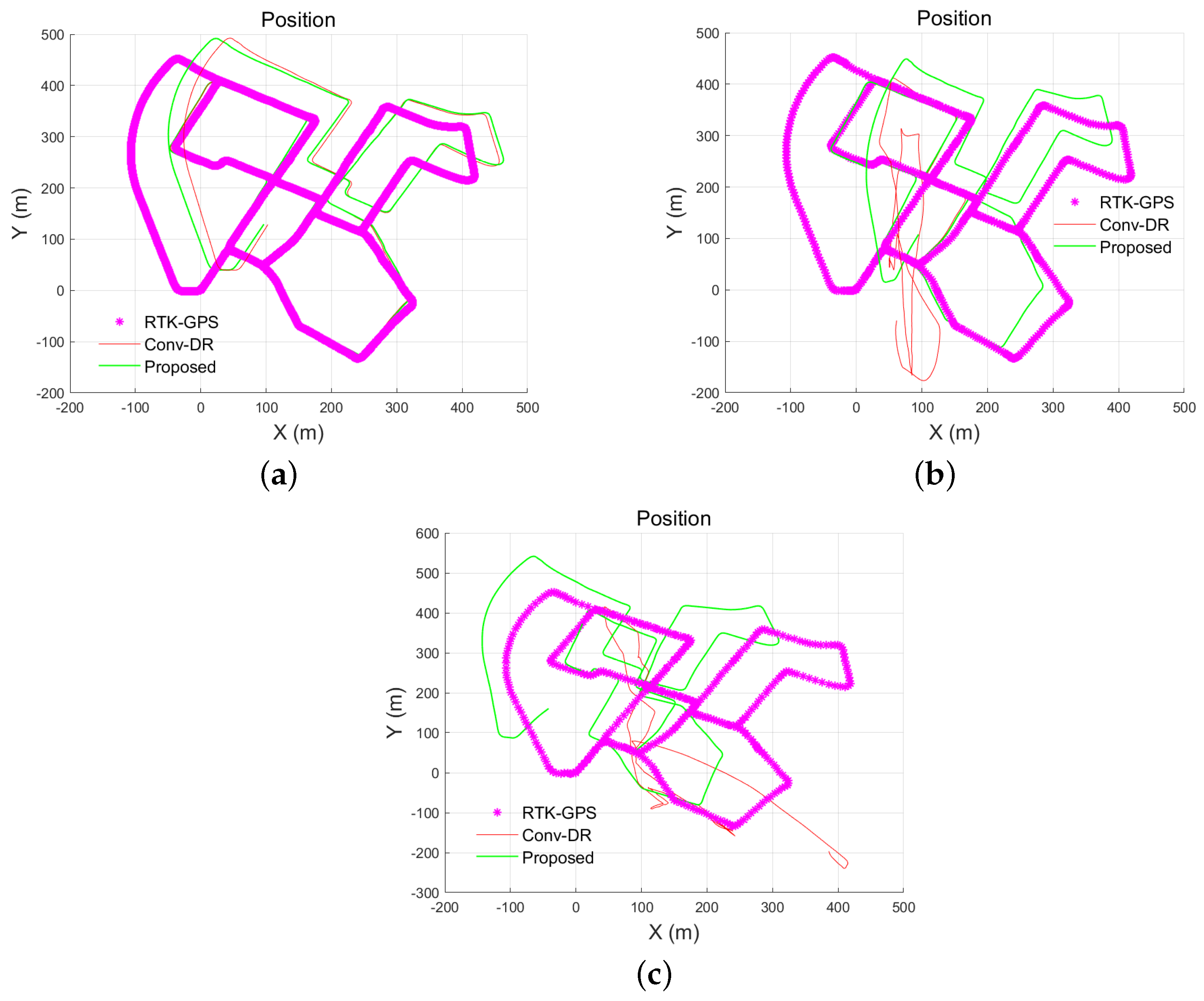

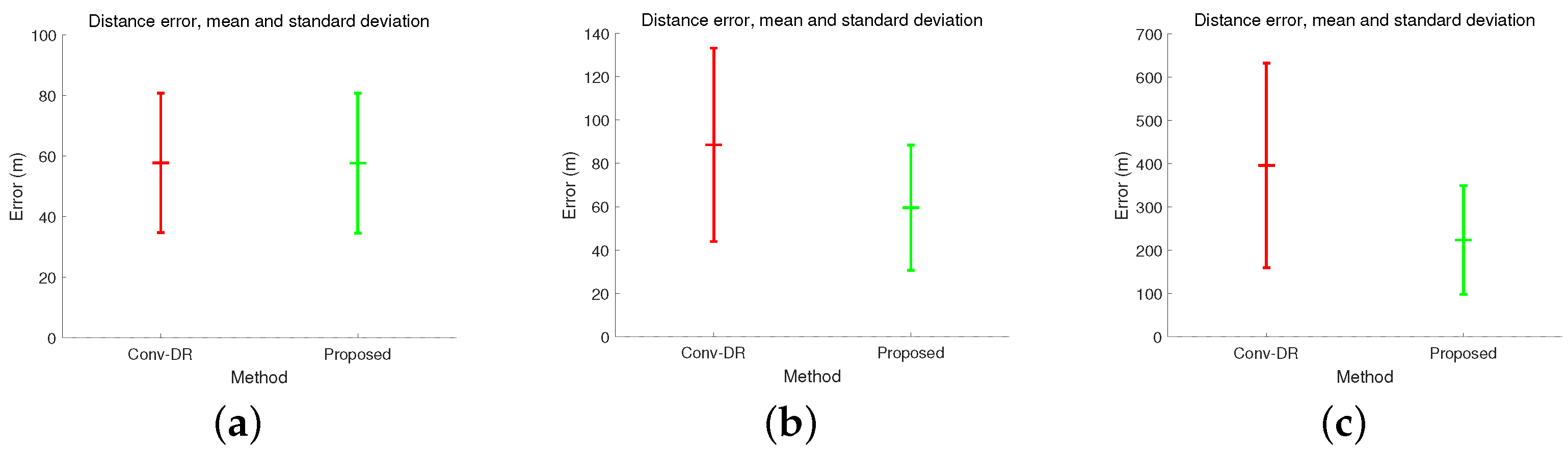

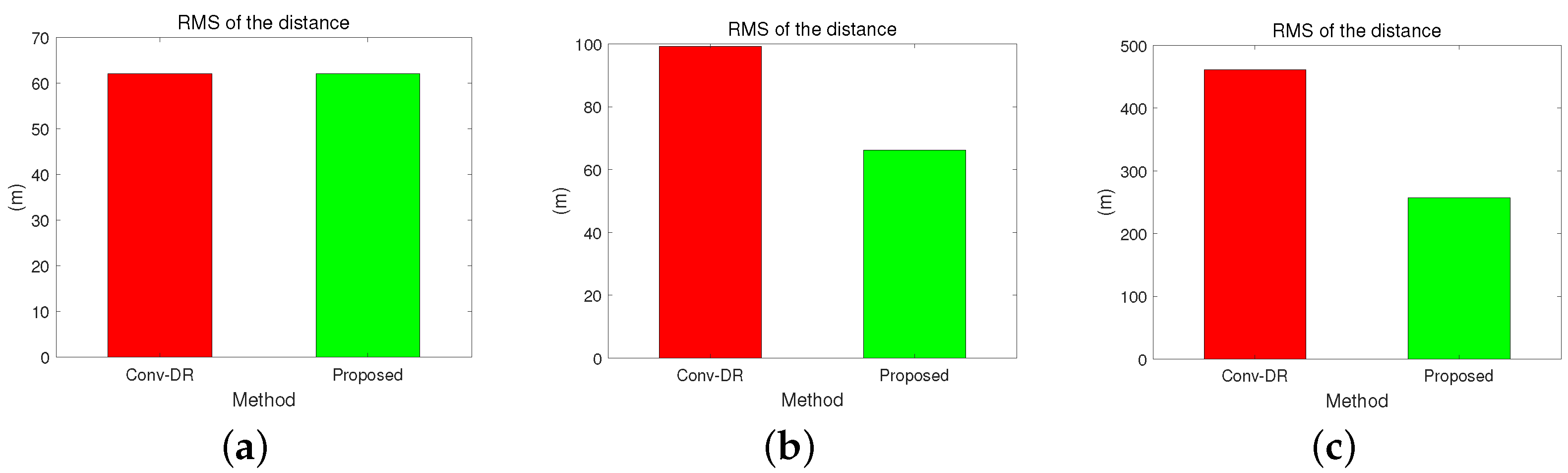

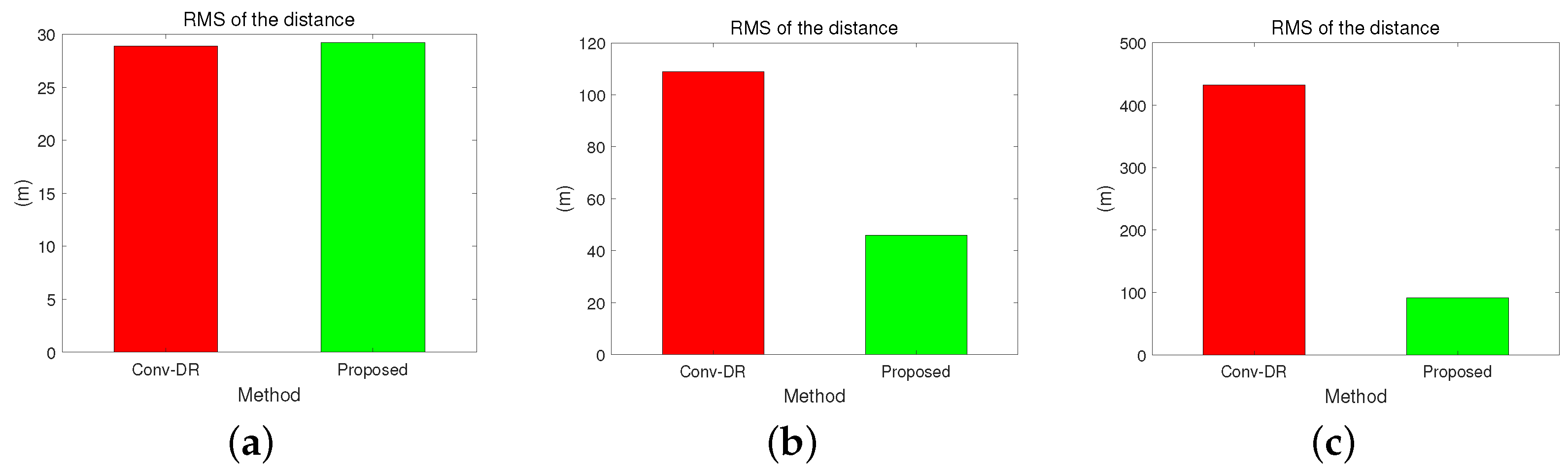

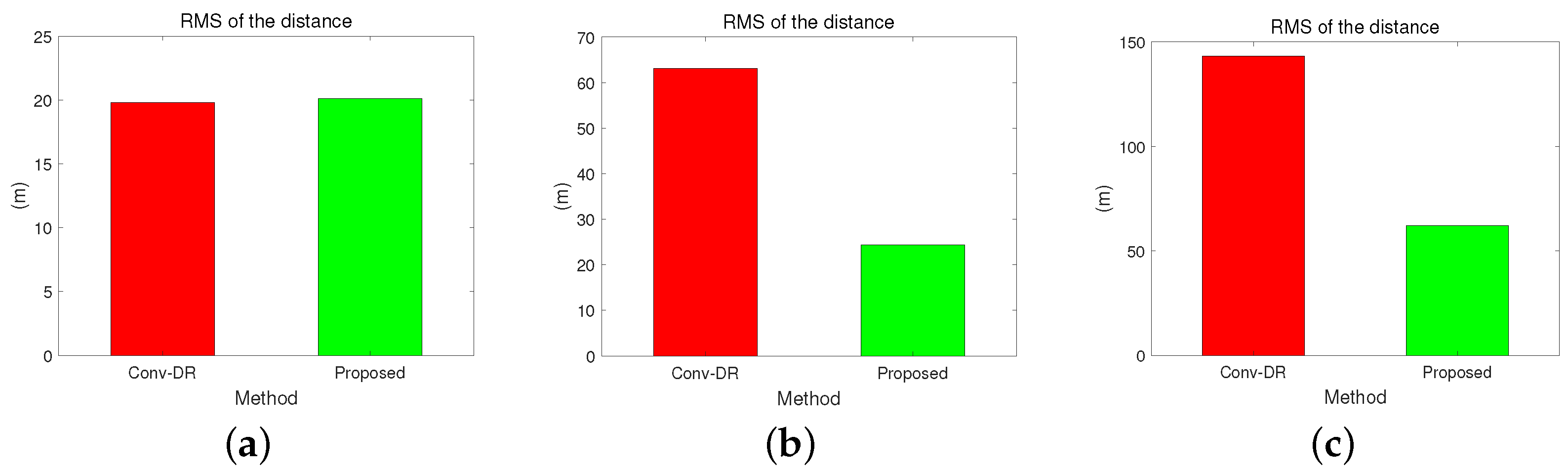

4.2. Position Results

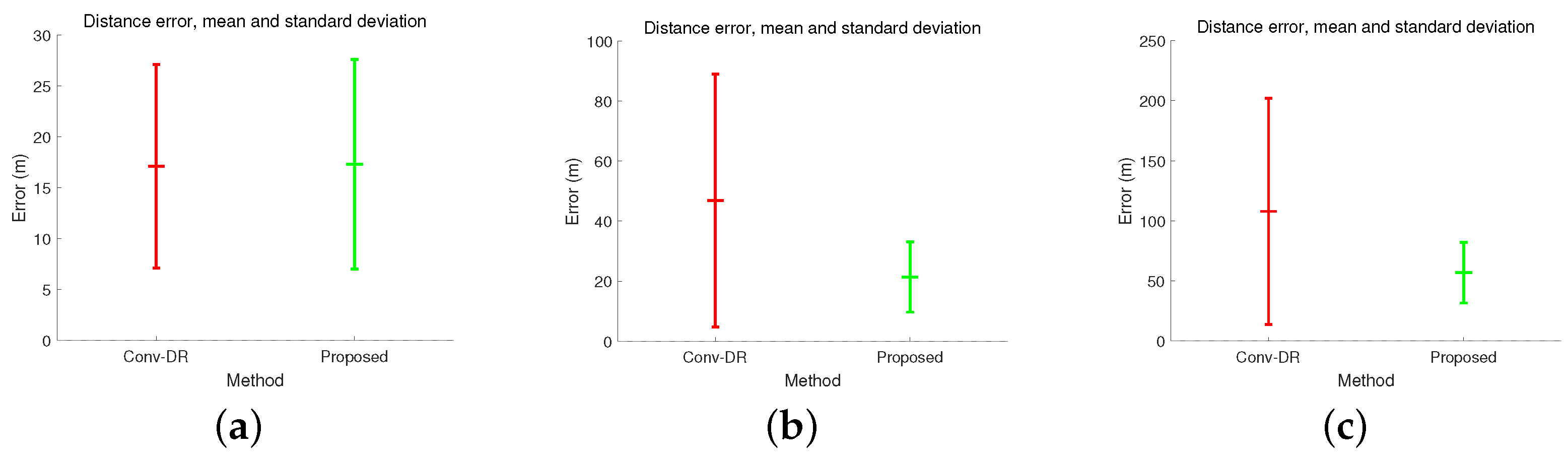

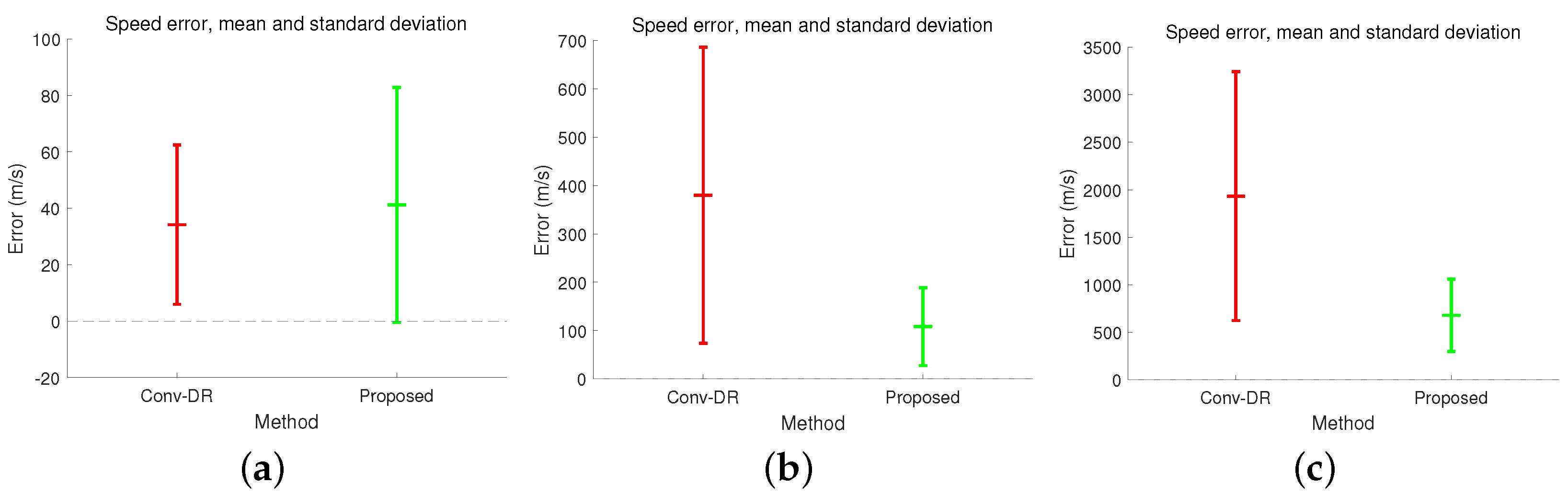

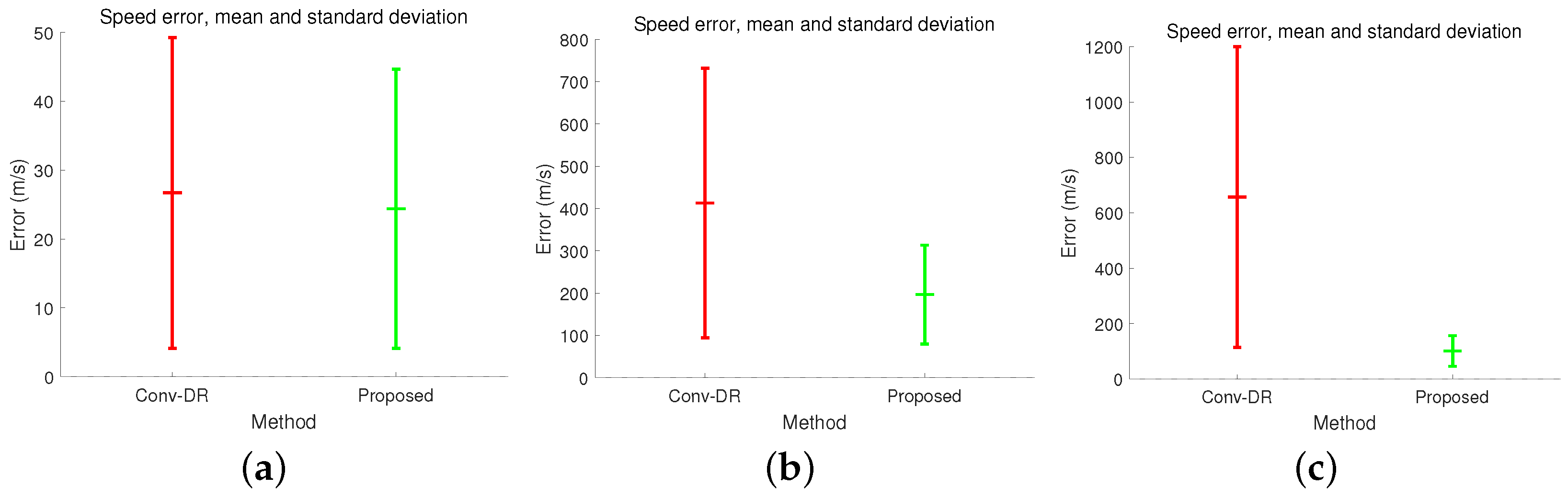

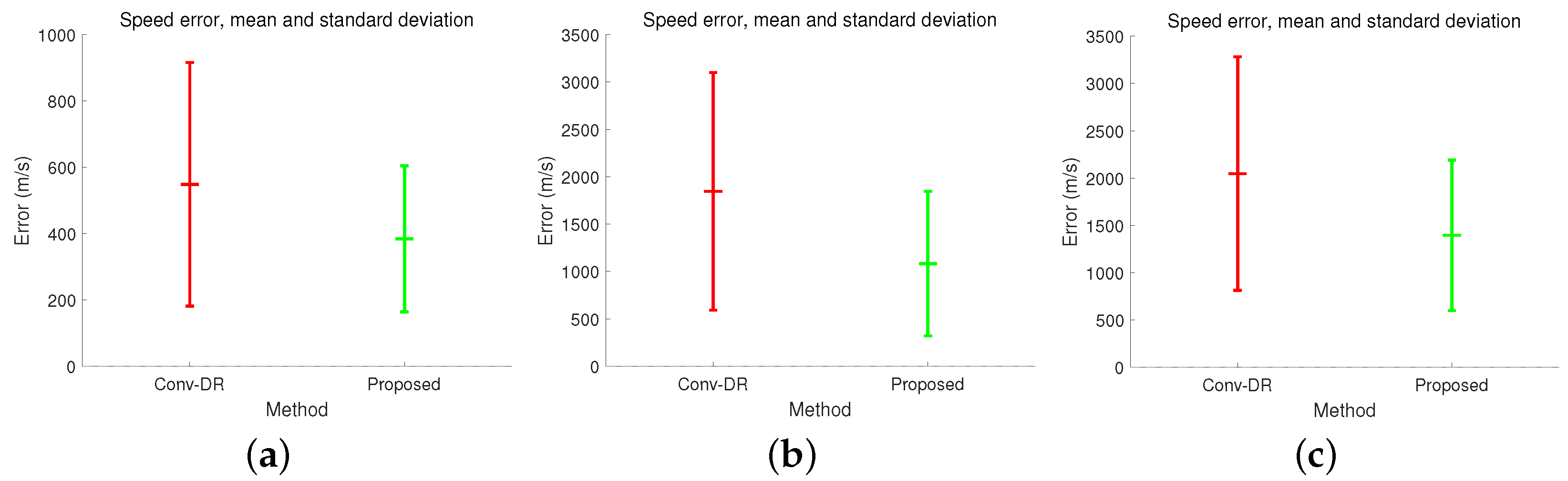

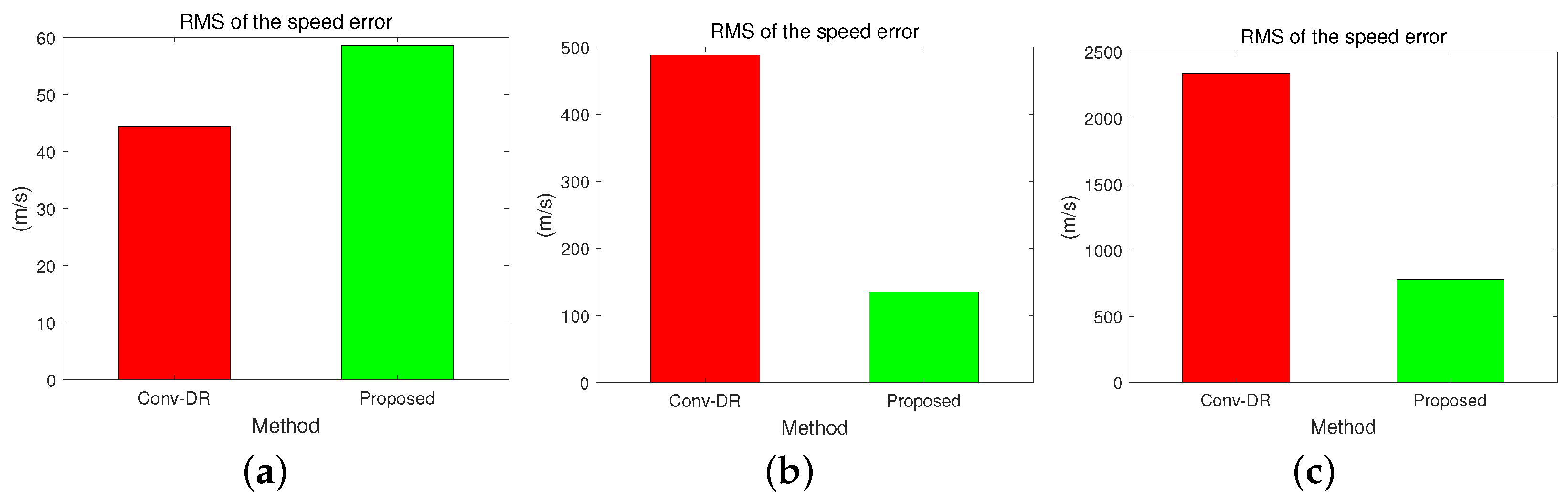

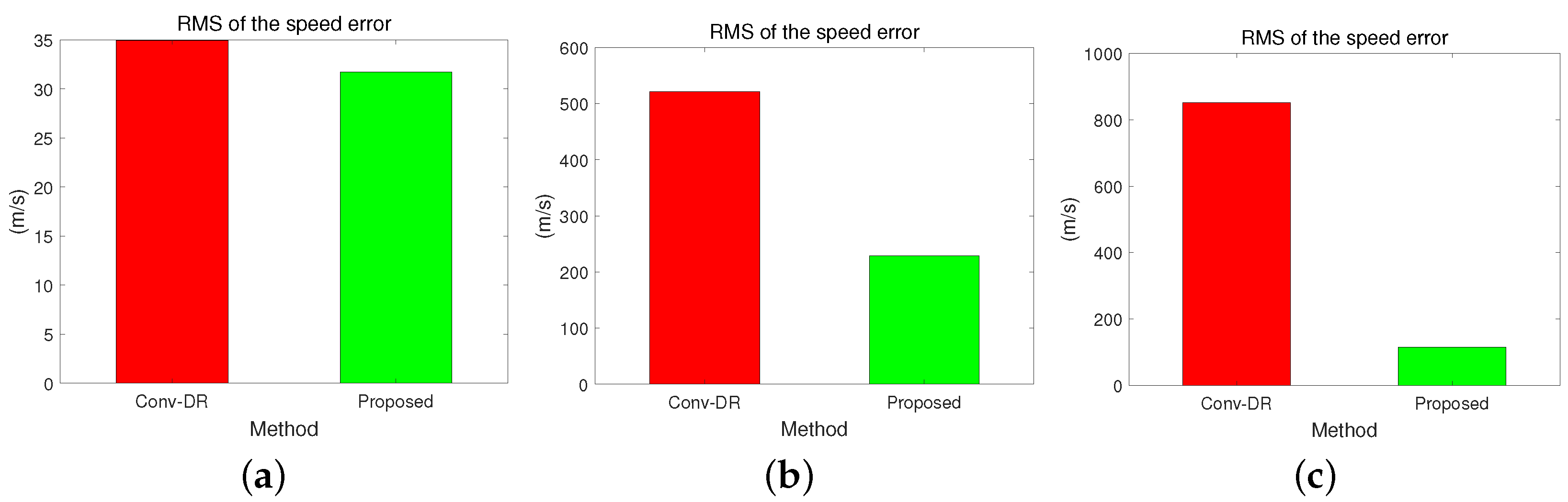

4.3. Speed Results

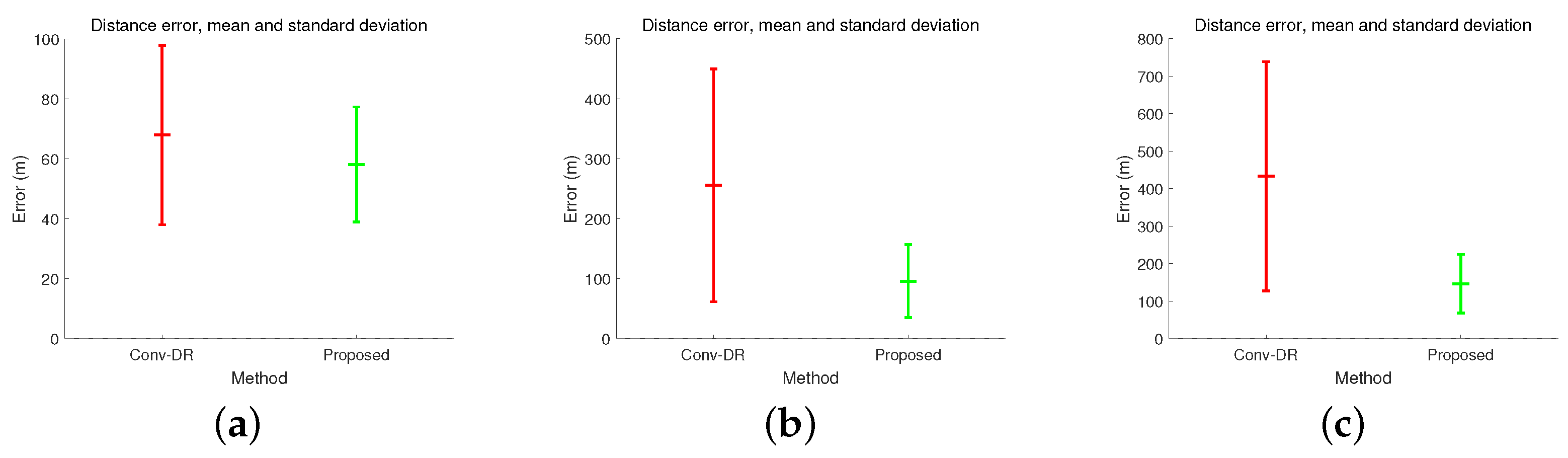

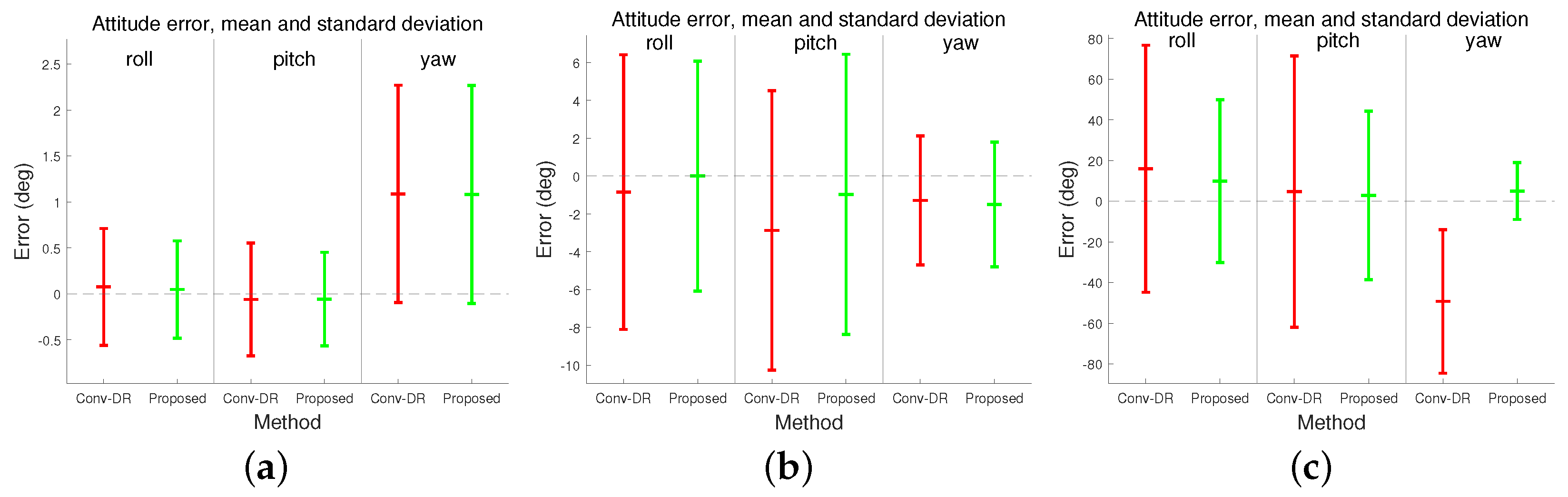

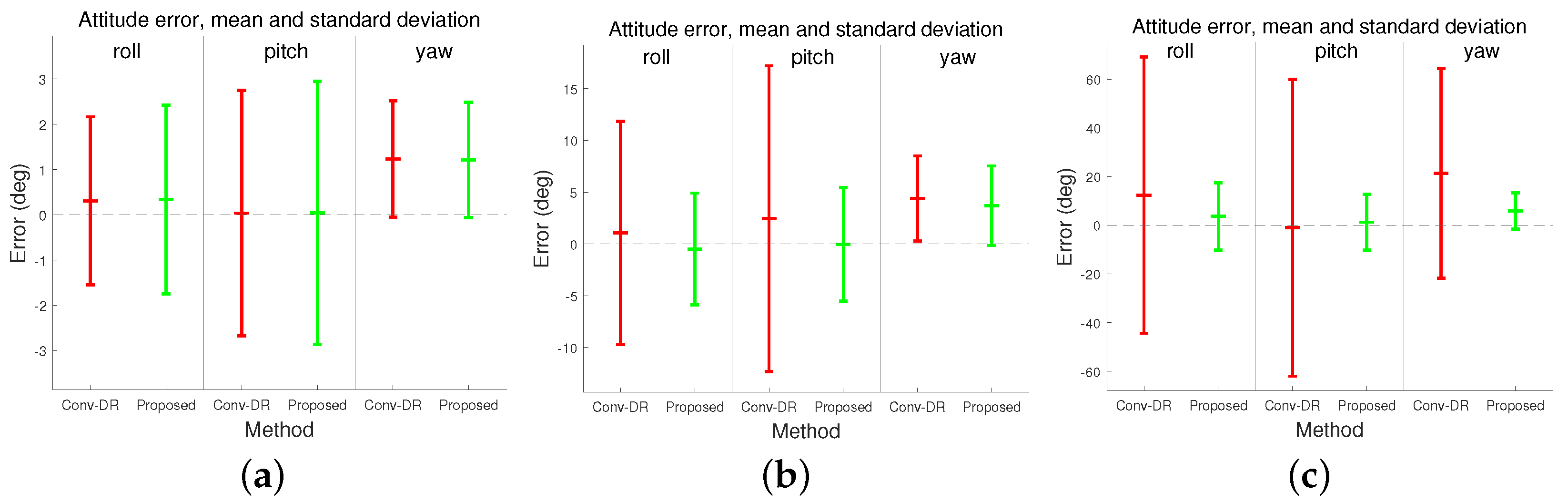

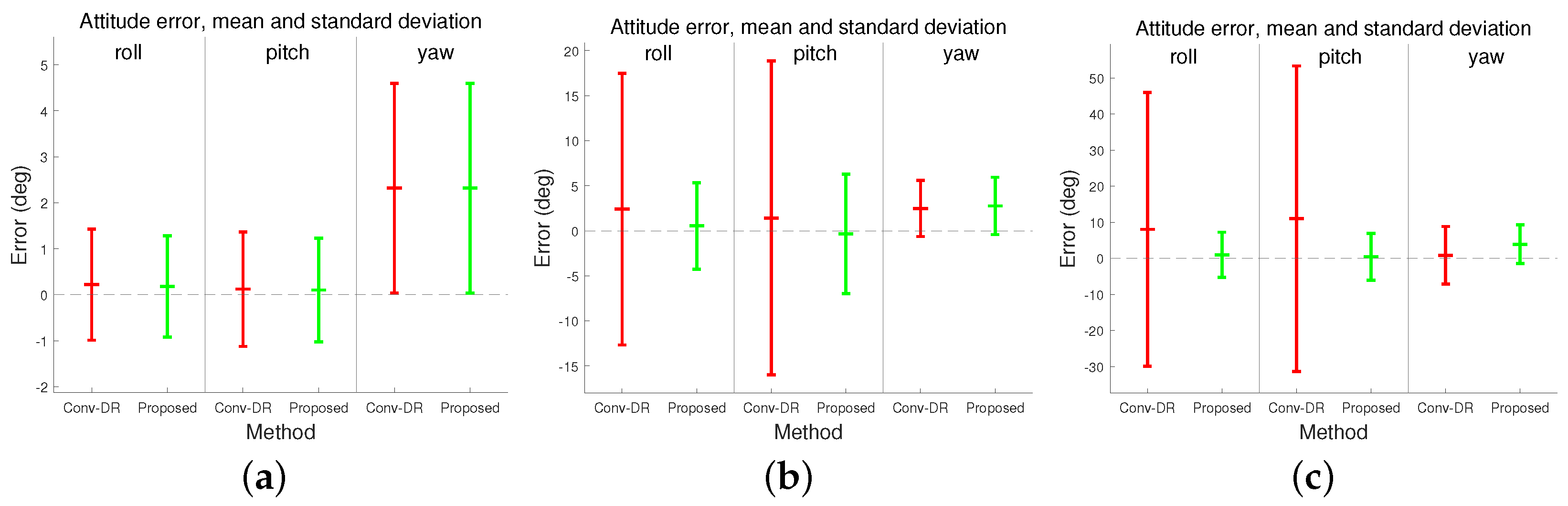

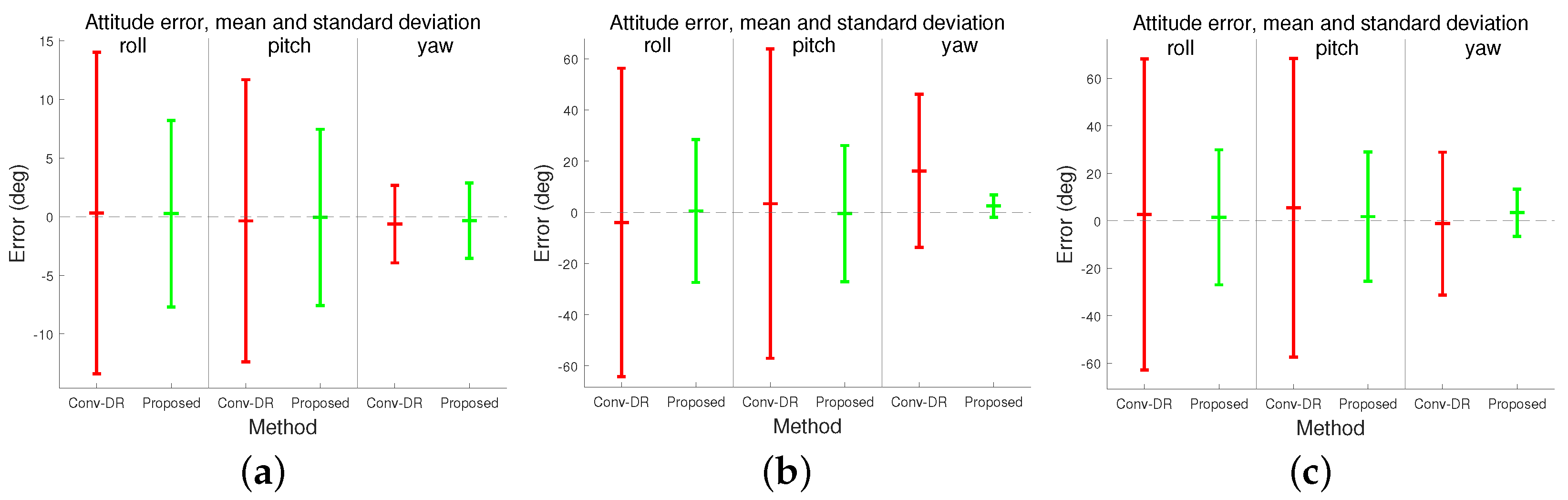

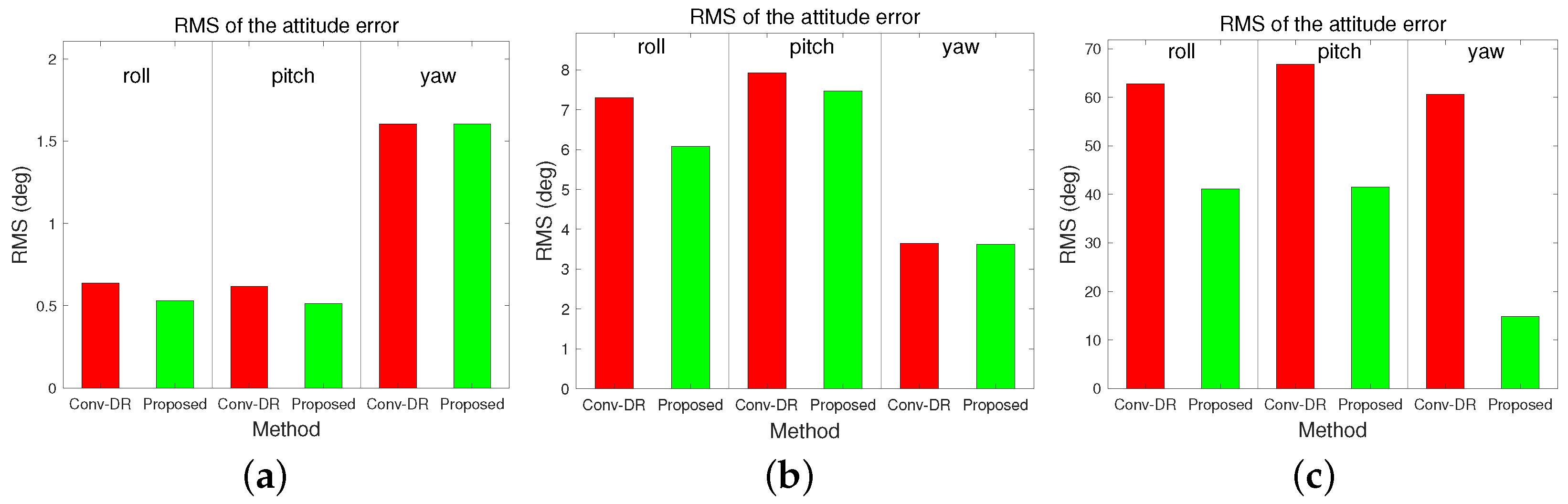

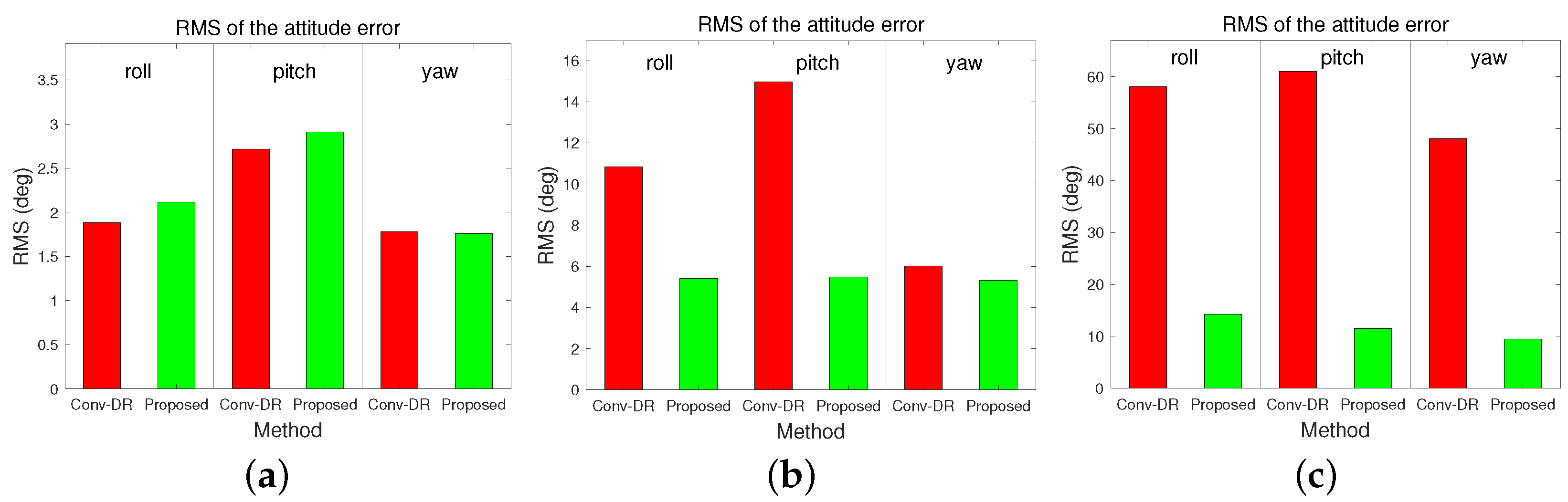

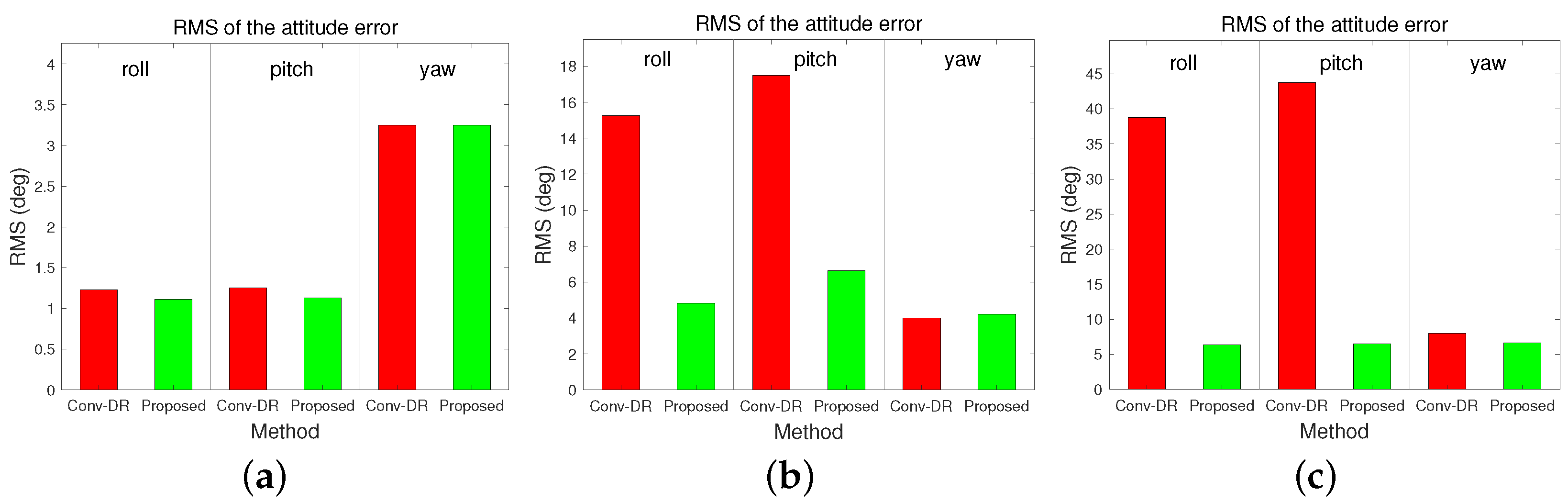

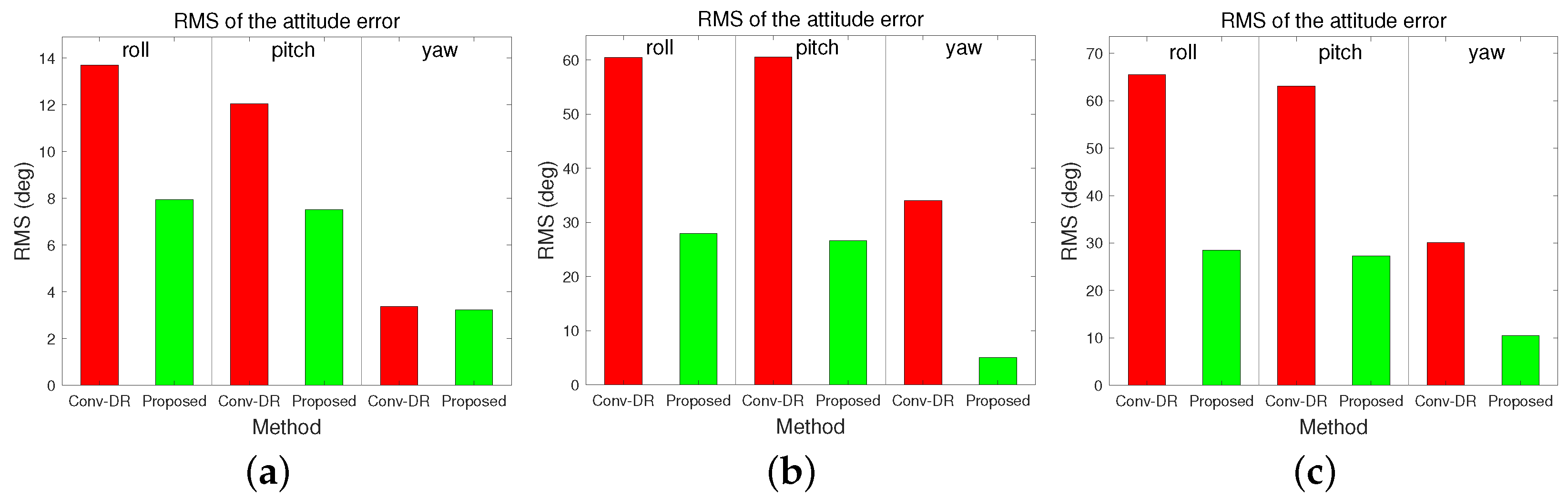

4.4. Attitude Results

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

| Statistic | Data (1) | Data (2) | Data (3) | Data (4) |
|---|---|---|---|---|
| Driving time (s) | 537.1492 | 483.2752 | 287.5341 | 470.5266 |
| Total distance (m) | 4207 | 5070 | 2207 | 3722 |
| Maximum speed (m/s) | 12.3288 | 14.8504 | 11.6550 | 12.8511 |
| Minimum speed (m/s) | 0.0218 | 2.4847 | 0.0065 | 0.0136 |
| Starting Lat. (°N) | 48°59′6.4 | 48°59′15.4 | 48°58′57.2 | 48°2′58.3 |
| Starting Lon. (°E) | 8°23′38.4 | 8°28′11.1 | 8°23′25.3 | 8°23′47.7 |

| Statistic | Time interval (s) | Method | Distance error (m), data (1) | Distance error (m), data (2) | Distance error (m), data (3) | Distance error (m), data (4) |
|---|---|---|---|---|---|---|
| Mean | 0.1 | Conv. DR | 57.7120 | 26.1833 | 17.1059 (B) | 67.9617 (W) |
| Mean | 0.1 | Proposed | 57.6346 | 26.1902 | 17.2910 | 58.1539 |
| Mean | 0.5 | Conv. DR | 88.5603 | 82.2978 | 46.8934 | 255.4870 (W) |
| Mean | 0.5 | Proposed | 59.5332 | 37.0723 | 21.3908 (B) | 95.7408 |
| Mean | 1 | Conv. DR | 396.2126 | 355.2294 | 107.9019 | 433.2224 (W) |
| Mean | 1 | Proposed | 223.9200 | 76.5151 | 56.8564 (B) | 146.5781 |
| Std | 0.1 | Conv. DR | 23.0549 | 12.2213 | 10.0197 (B) | 29.9314 (W) |
| Std | 0.1 | Proposed | 23.0563 | 12.9547 | 10.3032 | 19.1637 |
| Std | 0.5 | Conv. DR | 44.6968 | 71.2740 | 42.1647 | 194.0085 (W) |
| Std | 0.5 | Proposed | 28.8588 | 27.1759 | 11.7212 (B) | 60.7879 |
| Std | 1 | Conv. DR | 236.6208 | 245.9355 | 94.0146 | 305.5643 (W) |
| Std | 1 | Proposed | 125.8985 | 50.2558 | 25.2438 (B) | 78.2221 |
| RMS | 0.1 | Conv. DR | 62.1466 | 28.8951 | 19.8243 (B) | 74.2609 (W) |
| RMS | 0.1 | Proposed | 62.0753 | 29.2190 | 20.1280 | 61.2301 |
| RMS | 0.5 | Conv. DR | 99.2005 | 108.8710 | 63.0623 | 320.8004 (W) |
| RMS | 0.5 | Proposed | 66.1591 | 45.9662 | 24.3917 (B) | 113.4084 |
| RMS | 1 | Conv. DR | 461.4909 | 432.0558 | 143.1139 | 530.1427 (W) |
| RMS | 1 | Proposed | 256.8863 | 91.5435 | 62.2085 (B) | 166.1441 |
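The three statistics in the distance-error table are linked: when the standard deviation is the population form, RMS² = Mean² + Std². The sketch below computes all three from a list of per-sample errors and checks the identity against one tabulated row. Whether the paper used the population or the sample standard deviation is not stated here, so the population form is an assumption; the function names and the `sample` values are hypothetical.

```python
import math

def error_stats(errors):
    """Return (mean, population standard deviation, RMS) of the errors."""
    n = len(errors)
    mean = sum(errors) / n
    std = math.sqrt(sum((e - mean) ** 2 for e in errors) / n)
    rms = math.sqrt(sum(e * e for e in errors) / n)
    return mean, std, rms

def rms_from_mean_std(mean, std):
    """RMS implied by a mean and a population standard deviation."""
    return math.hypot(mean, std)

if __name__ == "__main__":
    # Hypothetical per-sample distance errors in metres (not data from the paper).
    sample = [1.0, 2.0, 4.0, 5.0]
    mean, std, rms = error_stats(sample)
    print(mean, std, rms, rms_from_mean_std(mean, std))
    # Conventional DR, data (1), 0.1 s interval: tabulated mean and Std.
    print(rms_from_mean_std(57.7120, 23.0549))
```

For the conventional DR row at 0.1 s on data (1), the implied value agrees with the tabulated RMS of 62.1466 m to the printed precision, which suggests the table follows this convention.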

| Statistic | Time interval (s) | Method | Speed error (m/s), data (1) | Speed error (m/s), data (2) | Speed error (m/s), data (3) | Speed error (m/s), data (4) |
|---|---|---|---|---|---|---|
| Mean | 0.1 | Conv. DR | 28.7996 | 34.1915 | 26.7012 | 548.2722 (W) |
| Mean | 0.1 | Proposed | 20.7235 (B) | 41.1633 | 24.3850 | 384.1330 |
| Mean | 0.5 | Conv. DR | 265.8342 | 379.7410 | 412.9848 | 1845.6265 (W) |
| Mean | 0.5 | Proposed | 85.4411 (B) | 108.0631 | 196.7227 | 1082.5576 |
| Mean | 1 | Conv. DR | 2160.5948 (W) | 1932.6978 | 656.9144 | 2047.2024 |
| Mean | 1 | Proposed | 1716.4039 | 679.1729 | 101.4829 (B) | 1394.4212 |
| Std | 0.1 | Conv. DR | 20.7475 | 28.2561 | 22.5549 | 367.4999 (W) |
| Std | 0.1 | Proposed | 14.5014 (B) | 41.6856 | 20.2872 | 219.8812 |
| Std | 0.5 | Conv. DR | 204.8562 | 306.3114 | 318.4042 | 1253.6116 (W) |
| Std | 0.5 | Proposed | 89.4406 | 80.5858 (B) | 116.8470 | 762.7432 |
| Std | 1 | Conv. DR | 1402.8605 (W) | 1310.4113 | 542.7032 | 1234.7650 |
| Std | 1 | Proposed | 1191.4488 | 381.7053 | 54.9646 (B) | 795.9201 |
| RMS | 0.1 | Conv. DR | 35.4947 | 44.3561 | 34.9525 | 660.0443 (W) |
| RMS | 0.1 | Proposed | 25.2934 (B) | 58.5842 | 31.7206 | 442.6126 |
| RMS | 0.5 | Conv. DR | 335.6097 | 487.8831 | 521.4764 | 2231.1162 (W) |
| RMS | 0.5 | Proposed | 123.6324 (B) | 134.8025 | 228.8079 | 1324.2764 |
| RMS | 1 | Conv. DR | 2576.0799 (W) | 2335.0586 | 852.0935 | 2390.7493 |
| RMS | 1 | Proposed | 2089.4001 | 779.0858 | 115.4118 (B) | 1605.5838 |
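One way to read the speed-error table is as a relative reduction per data set. The short sketch below does this for the RMS rows at the 1 s interval; the input values are copied from the table, while the function name and the percentage metric are illustrative choices rather than something the paper reports.

```python
def reduction_percent(conventional, proposed):
    """Percentage by which the proposed error is below the conventional one."""
    return 100.0 * (conventional - proposed) / conventional

# RMS speed error at the 1 s interval, data (1) to (4), copied from the table.
CONV_RMS_1S = [2576.0799, 2335.0586, 852.0935, 2390.7493]
PROP_RMS_1S = [2089.4001, 779.0858, 115.4118, 1605.5838]

reductions = [reduction_percent(c, p) for c, p in zip(CONV_RMS_1S, PROP_RMS_1S)]

if __name__ == "__main__":
    for idx, value in enumerate(reductions, start=1):
        print(f"data ({idx}): {value:.1f} % lower RMS speed error")
```

A negative value would indicate a row where the proposed method has the larger error, as happens for data (2) at the 0.1 s interval.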

| Statistic | Time interval (s) | Method | Di. | Attitude error (deg), data (1) | Attitude error (deg), data (2) | Attitude error (deg), data (3) | Attitude error (deg), data (4) |
|---|---|---|---|---|---|---|---|
| Mean | 0.1 | Conv. DR | | 0.0763 | 0.3077 | 0.2194 | 0.3015 |
| Mean | 0.1 | Conv. DR | | −0.0602 | 0.0373 (B) | 0.1218 | −0.3586 |
| Mean | 0.1 | Conv. DR | | 1.0869 | 1.2329 | 2.3185 (W) | −0.6320 |
| Mean | 0.1 | Proposed | | 0.0481 | 0.3408 | 0.1801 | 0.2553 |
| Mean | 0.1 | Proposed | | −0.0574 | 0.0405 | 0.1014 | −0.0608 |
| Mean | 0.1 | Proposed | | 1.0800 | 1.2151 | 2.3165 | −0.3437 |
| Mean | 0.5 | Conv. DR | | −0.8458 | 1.0514 | 2.3939 | −3.9552 |
| Mean | 0.5 | Conv. DR | | −2.8671 | 2.4405 | 1.4226 | 3.3844 |
| Mean | 0.5 | Conv. DR | | −1.2849 | 4.4015 | 2.4816 | 16.2129 (W) |
| Mean | 0.5 | Proposed | | 0.0034 (B) | −0.4830 | 0.5417 | 0.5554 |
| Mean | 0.5 | Proposed | | −0.9671 | −0.0283 | −0.3320 | −0.0539 |
| Mean | 0.5 | Proposed | | −1.5038 | 3.6899 | 2.7614 | 2.4823 |
| Mean | 1 | Conv. DR | | 15.9446 | 12.3953 | 8.0376 | 2.7170 |
| Mean | 1 | Conv. DR | | 4.6852 | −1.0113 | 10.9729 | 5.5419 |
| Mean | 1 | Conv. DR | | −49.2481 (W) | 21.3984 | 0.8525 | −1.1596 |
| Mean | 1 | Proposed | | 9.8586 | 3.6791 | 0.9710 | 1.4649 |
| Mean | 1 | Proposed | | 2.8610 | 1.2904 | 0.3972 (B) | 1.7685 |
| Mean | 1 | Proposed | | 4.9715 | 5.9009 | 3.8896 | 3.4412 |
| Std | 0.1 | Conv. DR | | 0.6347 | 1.8583 | 1.2082 | 13.7041 (W) |
| Std | 0.1 | Conv. DR | | 0.6135 | 2.7149 | 1.2460 | 12.0352 |
| Std | 0.1 | Conv. DR | | 1.1821 | 1.2830 | 2.2789 | 3.3131 |
| Std | 0.1 | Proposed | | 0.5288 | 2.0863 | 1.0988 | 7.9447 |
| Std | 0.1 | Proposed | | 0.5090 (B) | 2.9105 | 1.1268 | 7.5067 |
| Std | 0.1 | Proposed | | 1.1851 | 1.2749 | 2.2821 | 3.2049 |
| Std | 0.5 | Conv. DR | | 7.2562 | 10.7870 | 15.0643 | 60.2891 |
| Std | 0.5 | Conv. DR | | 7.3873 | 14.7664 | 17.4287 | 60.4238 (W) |
| Std | 0.5 | Conv. DR | | 3.4161 | 4.1048 | 3.1230 (B) | 29.9377 |
| Std | 0.5 | Proposed | | 6.0787 | 5.3967 | 4.7974 | 27.9586 |
| Std | 0.5 | Proposed | | 7.4019 | 5.4767 | 6.6260 | 26.6421 |
| Std | 0.5 | Proposed | | 3.2928 | 3.8430 | 3.1803 | 4.3988 |
| Std | 1 | Conv. DR | | 60.7190 | 56.7711 | 37.9345 | 65.4590 |
| Std | 1 | Conv. DR | | 66.6400 (W) | 60.9945 | 42.3434 | 62.8656 |
| Std | 1 | Conv. DR | | 35.2968 | 43.0970 | 7.9762 | 30.0545 |
| Std | 1 | Proposed | | 39.9334 | 13.7924 | 6.2721 | 28.4368 |
| Std | 1 | Proposed | | 41.3916 | 11.4413 | 6.4881 | 27.2554 |
| Std | 1 | Proposed | | 13.9436 | 7.4663 | 5.3514 (B) | 9.9476 |
| RMS | 0.1 | Conv. DR | | 0.6393 | 1.8836 | 1.2279 | 13.7074 (W) |
| RMS | 0.1 | Conv. DR | | 0.6164 | 2.7152 | 1.2519 | 12.0405 |
| RMS | 0.1 | Conv. DR | | 1.6058 | 1.7794 | 3.2510 | 3.3728 |
| RMS | 0.1 | Proposed | | 0.5310 | 2.1140 | 1.1134 | 7.9488 |
| RMS | 0.1 | Proposed | | 0.5122 (B) | 2.9108 | 1.1314 | 7.5069 |
| RMS | 0.1 | Proposed | | 1.6034 | 1.7612 | 3.2518 | 3.2233 |
| RMS | 0.5 | Conv. DR | | 7.2562 | 10.7870 | 15.0643 | 60.2891 |
| RMS | 0.5 | Conv. DR | | 7.3873 | 14.7664 | 17.4287 | 60.4238 (W) |
| RMS | 0.5 | Conv. DR | | 3.4161 | 4.1048 | 3.1230 (B) | 29.9377 |
| RMS | 0.5 | Proposed | | 6.0787 | 5.3967 | 4.7974 | 27.9586 |
| RMS | 0.5 | Proposed | | 7.4019 | 5.4767 | 6.6260 | 26.6421 |
| RMS | 0.5 | Proposed | | 3.2928 | 3.8430 | 3.1803 | 4.3988 |
| RMS | 1 | Conv. DR | | 62.7776 | 58.1086 | 38.7767 | 65.5153 |
| RMS | 1 | Conv. DR | | 66.8045 (W) | 61.0029 | 43.7424 | 63.1094 |
| RMS | 1 | Conv. DR | | 60.5907 | 48.1170 | 8.0216 | 30.0769 |
| RMS | 1 | Proposed | | 41.1323 | 14.2747 | 6.3468 (B) | 28.4746 |
| RMS | 1 | Proposed | | 41.4904 | 11.5139 | 6.5002 | 27.3128 |
| RMS | 1 | Proposed | | 14.8033 | 9.5166 | 6.6156 | 10.5260 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Jeong, D.B.; Lee, B.; Ko, N.Y. Three-Dimensional Dead-Reckoning Based on Lie Theory for Overcoming Approximation Errors. Appl. Sci. 2024, 14, 5343. https://doi.org/10.3390/app14125343
