The Effectiveness of UWB-Based Indoor Positioning Systems for the Navigation of Visually Impaired Individuals

Abstract

1. Introduction

2. Related Work

3. Methodology

3.1. UWB-BLE Beacons

3.2. Mobile App and Apple U1 API

- Entering constant values for a given test that are saved in the database, e.g., the distance measured with a tape measure or the voltage supplied to the beacon during the test;

- The ability to select one beacon from a drop-down list, or several beacons, taking into account their location relative to the phone;

- Each beacon is displayed with its unique UUID and its current, continuously updated distance;

- Saving to the database, either with the “Start Recording” switch, which records all incoming measurements from the previously selected beacon until the switch is turned off, or with the “Send Data to Server” button, which sends a single measurement from a selected moment in time and saves it in the database;

- The “Export to CSV” button allows the user to download data from the database in CSV format;

- The “Select Export Date” button extends “Export to CSV” by letting the user choose, in a calendar view, the start date from which data should be exported;

- All beacons can be disconnected with the “Disconnect All” button, or a single beacon with the “Disconnect” button located next to each found beacon; disconnecting stops the distance updates.
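The recording and export functions listed above can be illustrated with a minimal Python sketch. It is not the app's code (the app targets iOS); the `Recorder` and `Measurement` classes, their field names, and the in-memory list standing in for the database are hypothetical. The sketch shows the two saving modes (continuous recording while the switch is on versus a single on-demand send), per-beacon disconnection, and a CSV export filtered by a start date.

```python
import csv
import io
from dataclasses import dataclass, field
from datetime import datetime


@dataclass
class Measurement:
    beacon_uuid: str
    timestamp: datetime
    distance_m: float        # UWB distance reported for the beacon
    tape_distance_m: float   # constant entered for the test (tape measure)
    beacon_voltage_v: float  # constant entered for the test (beacon supply)


@dataclass
class Recorder:
    """Hypothetical in-memory stand-in for the app's database logic."""
    tape_distance_m: float
    beacon_voltage_v: float
    recording: bool = False                          # state of "Start Recording"
    disconnected: set = field(default_factory=set)   # beacons that were disconnected
    rows: list = field(default_factory=list)         # stored Measurement objects

    def _store(self, uuid: str, ts: datetime, distance_m: float) -> None:
        self.rows.append(Measurement(uuid, ts, distance_m,
                                     self.tape_distance_m, self.beacon_voltage_v))

    def on_distance_update(self, uuid: str, ts: datetime, distance_m: float) -> None:
        # Continuous mode: every update is saved while the switch is on.
        # Updates from disconnected beacons are ignored, mimicking the app
        # stopping distance updates after "Disconnect" / "Disconnect All".
        if self.recording and uuid not in self.disconnected:
            self._store(uuid, ts, distance_m)

    def send_single(self, uuid: str, ts: datetime, distance_m: float) -> None:
        # "Send Data to Server": save exactly one measurement on demand.
        self._store(uuid, ts, distance_m)

    def disconnect(self, uuid: str) -> None:
        self.disconnected.add(uuid)

    def export_csv(self, start: datetime) -> str:
        # "Export to CSV", limited to rows at or after the "Select Export Date".
        buf = io.StringIO()
        writer = csv.writer(buf)
        writer.writerow(["uuid", "timestamp", "distance_m",
                         "tape_distance_m", "voltage_v"])
        for m in self.rows:
            if m.timestamp >= start:
                writer.writerow([m.beacon_uuid, m.timestamp.isoformat(),
                                 m.distance_m, m.tape_distance_m, m.beacon_voltage_v])
        return buf.getvalue()


if __name__ == "__main__":
    rec = Recorder(tape_distance_m=1.0, beacon_voltage_v=3.0)
    rec.on_distance_update("beacon-A", datetime(2024, 5, 1, 10), 1.08)  # ignored: not recording
    rec.recording = True
    rec.on_distance_update("beacon-A", datetime(2024, 5, 2, 10), 1.10)  # saved
    rec.recording = False
    rec.send_single("beacon-A", datetime(2024, 5, 3, 10), 1.09)         # saved
    print(rec.export_csv(datetime(2024, 5, 2)))
```

Storing the test constants on every row keeps each exported CSV line self-describing, which is one plausible reason to enter them once per test rather than per measurement.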

3.3. Measurement Methods

3.4. Subject of Research and System Analysis

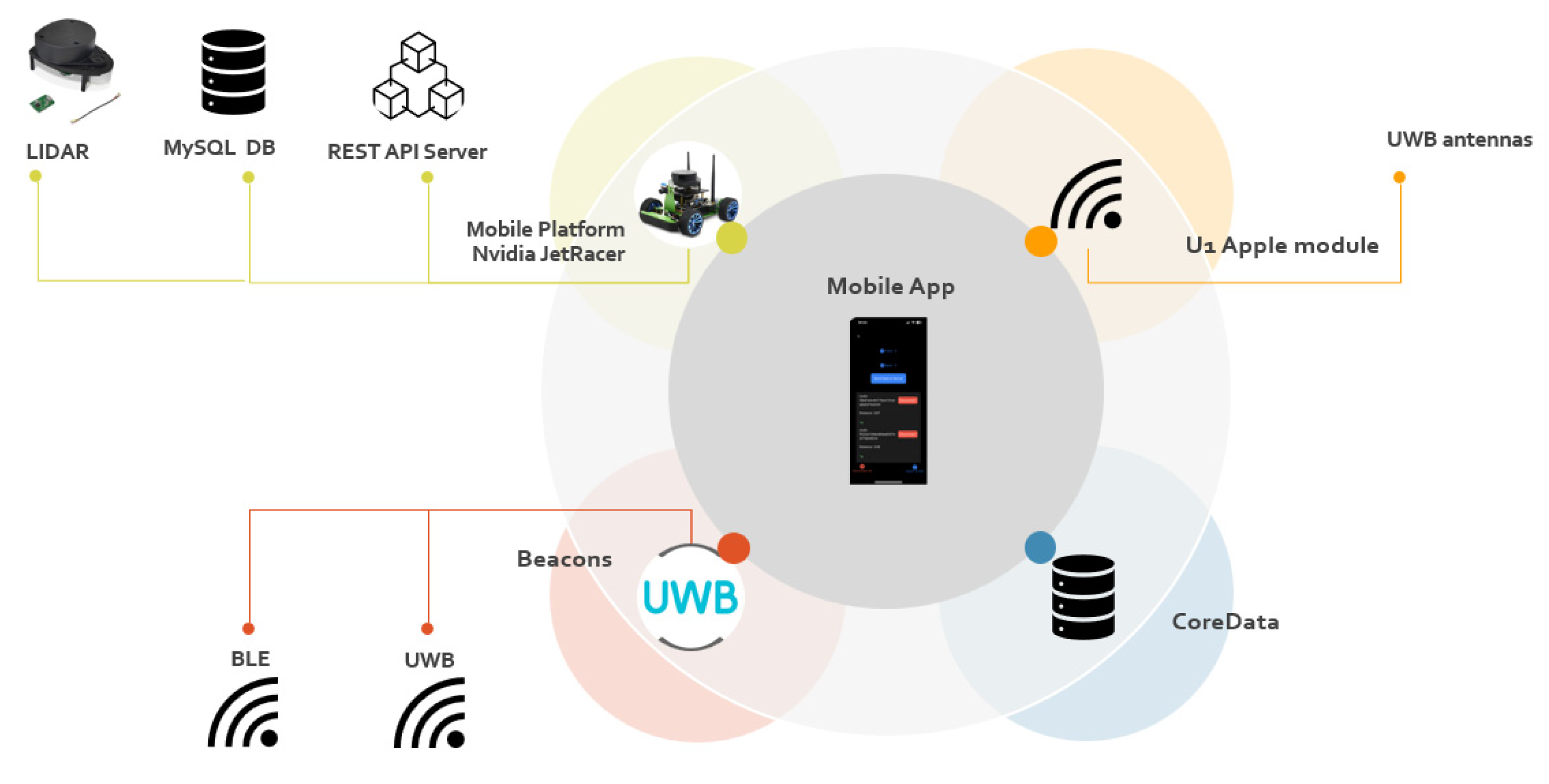

- A set of UWB beacons based on two-way ranging (TWR) technology;

- A phone running an iOS application designed for this study (an iPhone 13 with the U1 chip was used in this research) that measures distances to the UWB beacons. The application also allows the user to enter additional information about the environment, such as the distance measured with a measuring tape, and to turn system options, e.g., CameraAssist, on or off;

- The JetRacer mobile platform equipped with LIDAR, used as the reference system in two main cases: static and dynamic;

- A REST API server enabling communication between the mobile application, the JetRacer platform, and the MySQL database;

- Two databases: CoreData (collecting data when the mobile platform was not involved in the measurements) and MySQL (collecting data during dynamic tests with the JetRacer platform); both databases stored the distance measurements together with environmental information.

The diagram of the measurement system used in this research is shown in Figure 1.

In addition, the following elements were used to conduct the research:

- A GTEM (Gigahertz Transverse Electromagnetic) cell for simulating LoS/NLoS (line-of-sight/non-line-of-sight) conditions. A GTEM cell is a shielded chamber that simulates free-space conditions for electromagnetic wave propagation and is used to test the electromagnetic interference and immunity of electronic devices, which makes it a standard tool for electromagnetic compatibility testing in a controlled environment;

- Various obstacles such as concrete walls, glass walls, and wooden doors;

- A test environment consisting of rooms in a residential building, designed to replicate everyday conditions as closely as possible; a snapshot of the test room obtained with a SLAM (simultaneous localization and mapping) algorithm is presented in Figure 2.
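In the dynamic tests, the UWB readings and the LIDAR reference arrive as two independent time series, so comparing them requires pairing samples in time. The sketch below is one possible approach, not the study's server code: it assumes both streams have already been converted to a distance to the beacon (for the LIDAR this would mean combining the platform pose with the known beacon position) and uses nearest-timestamp matching with a hypothetical 50 ms tolerance. The function name and data layout are illustrative.

```python
from bisect import bisect_left
from typing import List, Tuple

Sample = Tuple[float, float]  # (timestamp in seconds, distance in metres)


def align_to_reference(uwb: List[Sample], lidar: List[Sample],
                       max_gap_s: float = 0.05) -> List[Tuple[float, float, float, float]]:
    """Pair each UWB sample with the LIDAR sample closest in time.

    Both inputs must be sorted by timestamp. Pairs further apart than
    max_gap_s are dropped. Returns (t, uwb_m, reference_m, error_m) tuples.
    """
    ref_times = [t for t, _ in lidar]
    pairs = []
    for t, d in uwb:
        i = bisect_left(ref_times, t)
        # The nearest reference sample is either just before t or at/after t.
        candidates = [j for j in (i - 1, i) if 0 <= j < len(lidar)]
        if not candidates:
            continue
        j = min(candidates, key=lambda k: abs(ref_times[k] - t))
        if abs(ref_times[j] - t) <= max_gap_s:
            ref = lidar[j][1]
            pairs.append((t, d, ref, d - ref))
    return pairs


if __name__ == "__main__":
    uwb = [(0.00, 1.05), (0.10, 1.12), (0.50, 1.30)]
    lidar = [(0.01, 1.00), (0.11, 1.10), (0.30, 1.20)]
    for t, d, ref, err in align_to_reference(uwb, lidar):
        print(f"t={t:.2f}s uwb={d:.2f}m ref={ref:.2f}m error={err:+.2f}m")
```

Dropping pairs with a large time gap, rather than interpolating, avoids attributing platform motion to UWB error; interpolation would be a reasonable alternative when the reference rate is high.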

3.5. Experiment Plan

3.5.1. Influence of Phone Position

3.5.2. Impact of CameraAssist

3.5.3. Impact of LoS/NLoS Conditions

- Accuracy of the device;

- Durability/reliability of the device;

- Adaptability to a dynamic environment.
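Two of the criteria above, accuracy and reliability, can be made measurable in several ways. The sketch below shows one hypothetical choice, not the study's definitions: accuracy as bias, standard deviation, RMSE, and maximum absolute error against the tape-measured distance, and a simple reliability proxy as the share of expected updates that arrived and fell within a plausible range. The function name, the `None` convention for missed updates, and the 0 to 50 m plausibility window are assumptions.

```python
import math
import statistics
from typing import Dict, Optional, Sequence, Tuple


def ranging_metrics(measured: Sequence[Optional[float]], true_distance_m: float,
                    plausible_range_m: Tuple[float, float] = (0.0, 50.0)) -> Dict[str, float]:
    """Summarise one static test.

    measured: expected UWB updates in metres; None marks an update that never
    arrived (hypothetical convention).
    """
    lo, hi = plausible_range_m
    valid = [d for d in measured if d is not None and lo <= d <= hi]
    if not valid:
        raise ValueError("no valid measurements")
    errors = [d - true_distance_m for d in valid]
    return {
        "bias_m": statistics.fmean(errors),                 # mean signed error
        "std_m": statistics.stdev(errors) if len(errors) > 1 else 0.0,
        "rmse_m": math.sqrt(statistics.fmean(e * e for e in errors)),
        "max_abs_error_m": max(abs(e) for e in errors),
        "valid_ratio": len(valid) / len(measured),          # reliability proxy
    }


if __name__ == "__main__":
    print(ranging_metrics([0.55, 0.60, None, 0.65], true_distance_m=0.5))
```

Reporting bias and standard deviation separately distinguishes a systematic offset, which could be calibrated out, from random scatter, which could not.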

4. Results

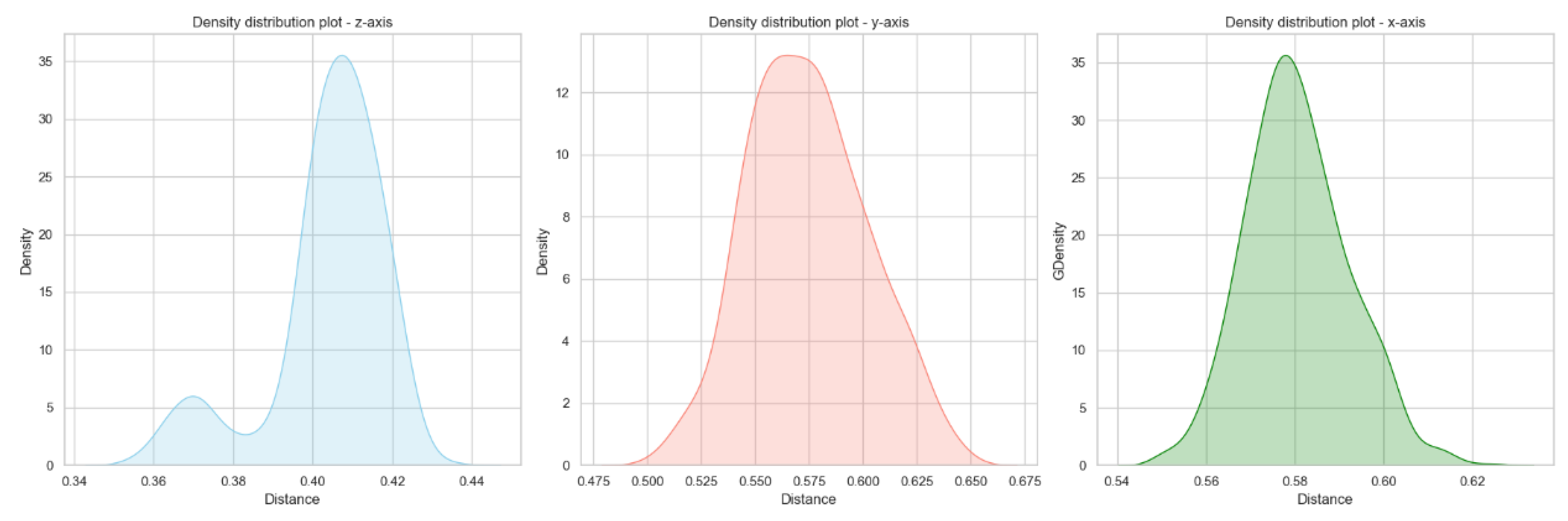

4.1. Phone Multi-Axis Position

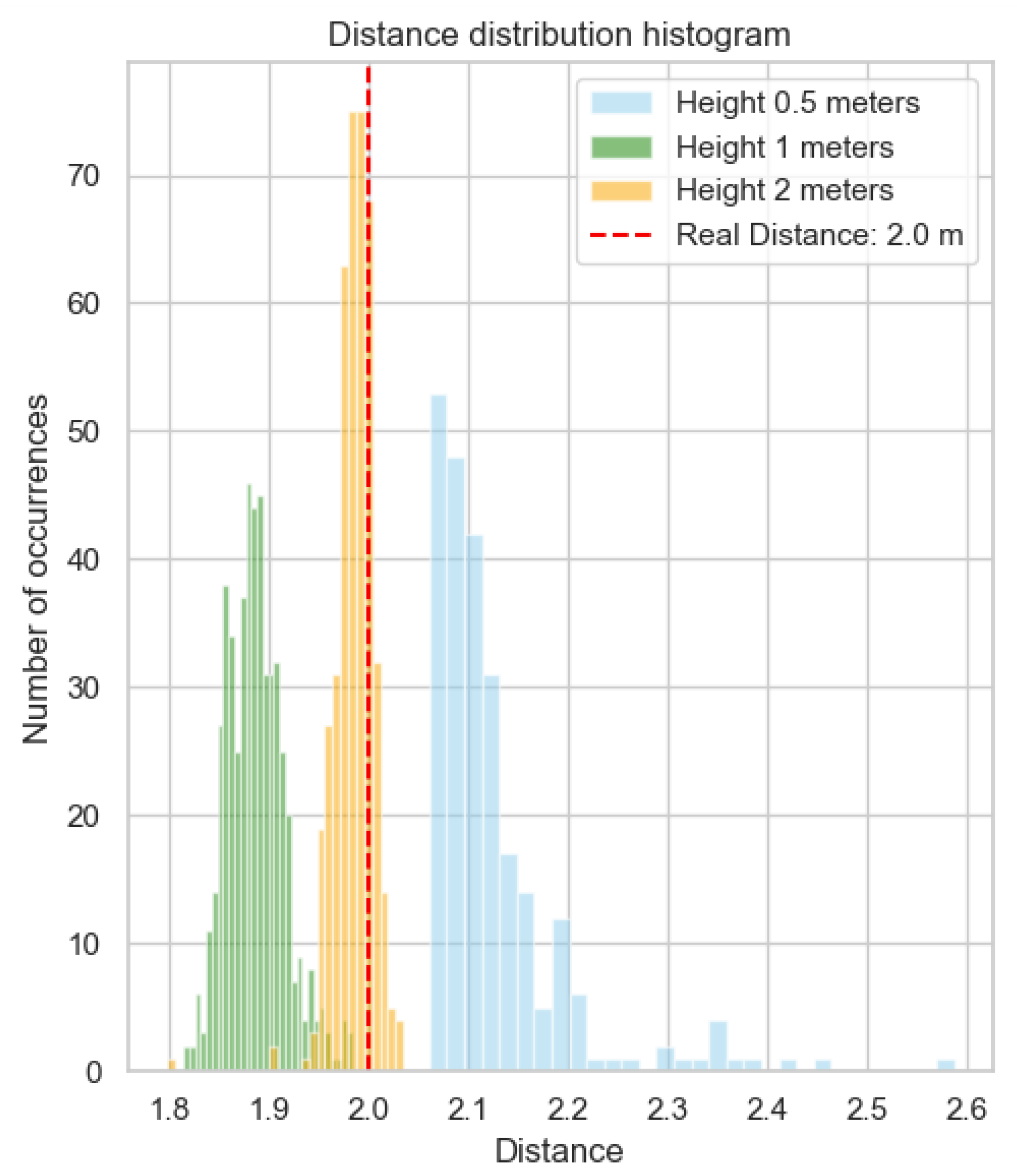

4.2. Different Phone Heights

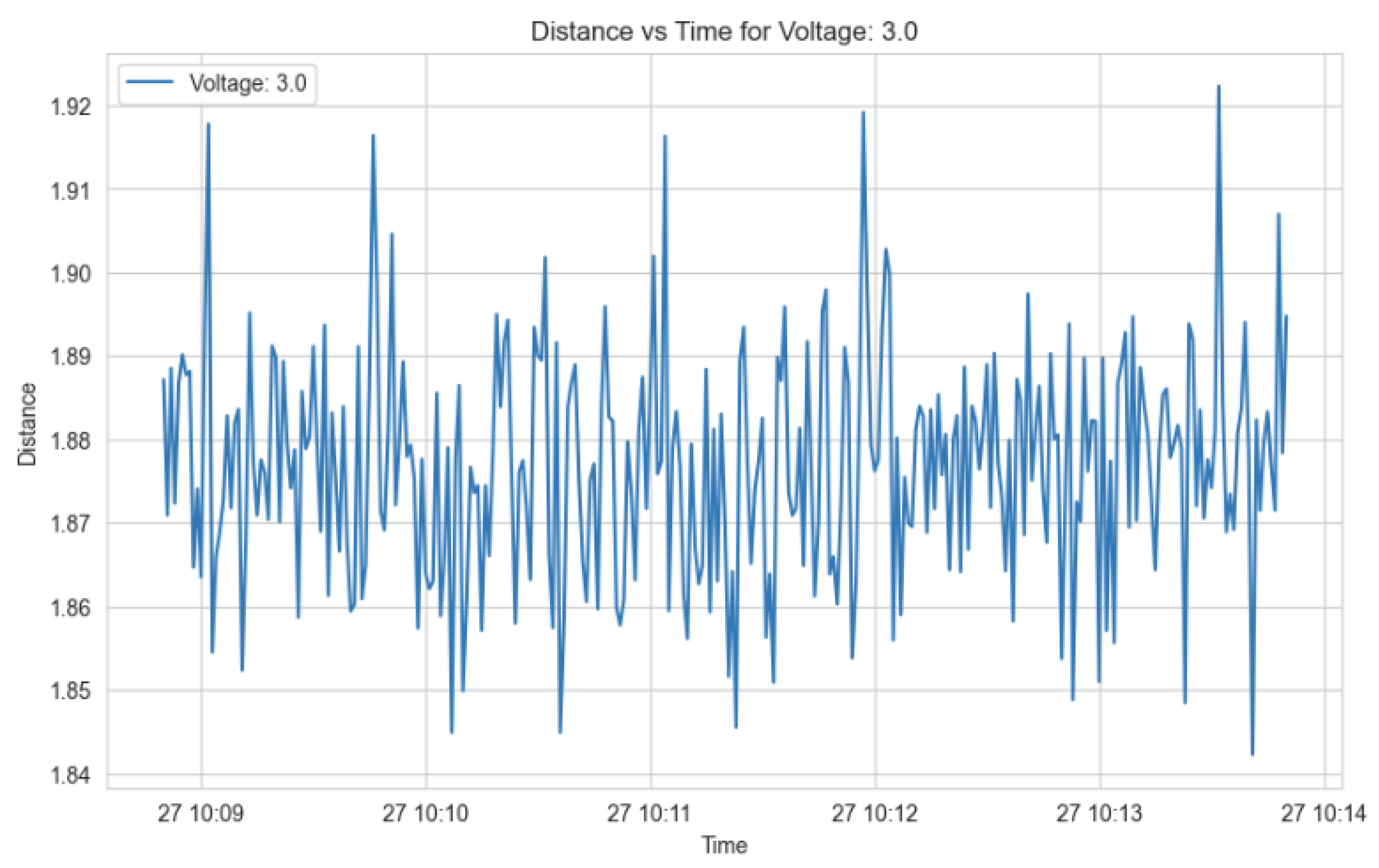

4.3. Various Distances

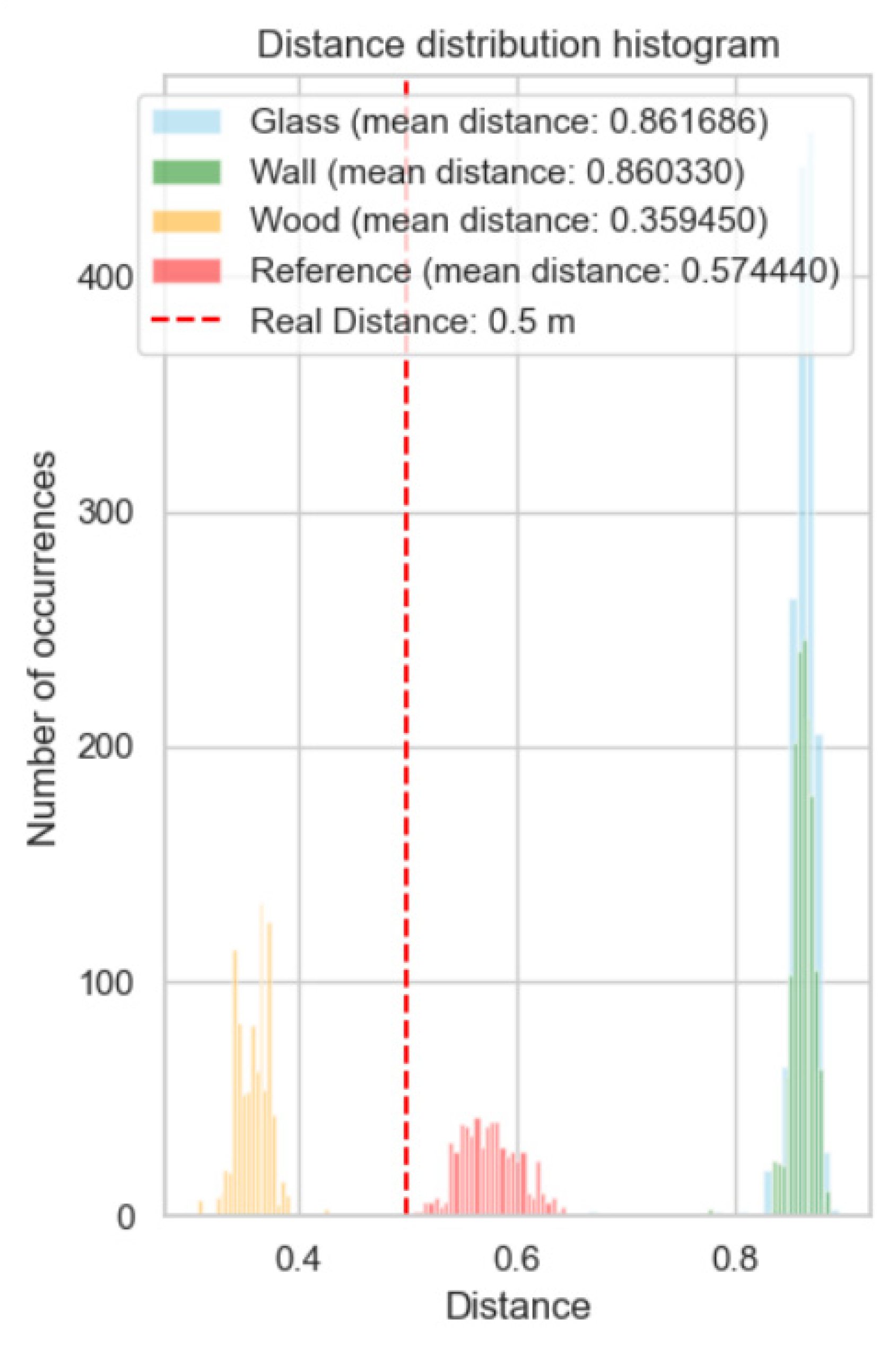

4.4. CameraAssist Option

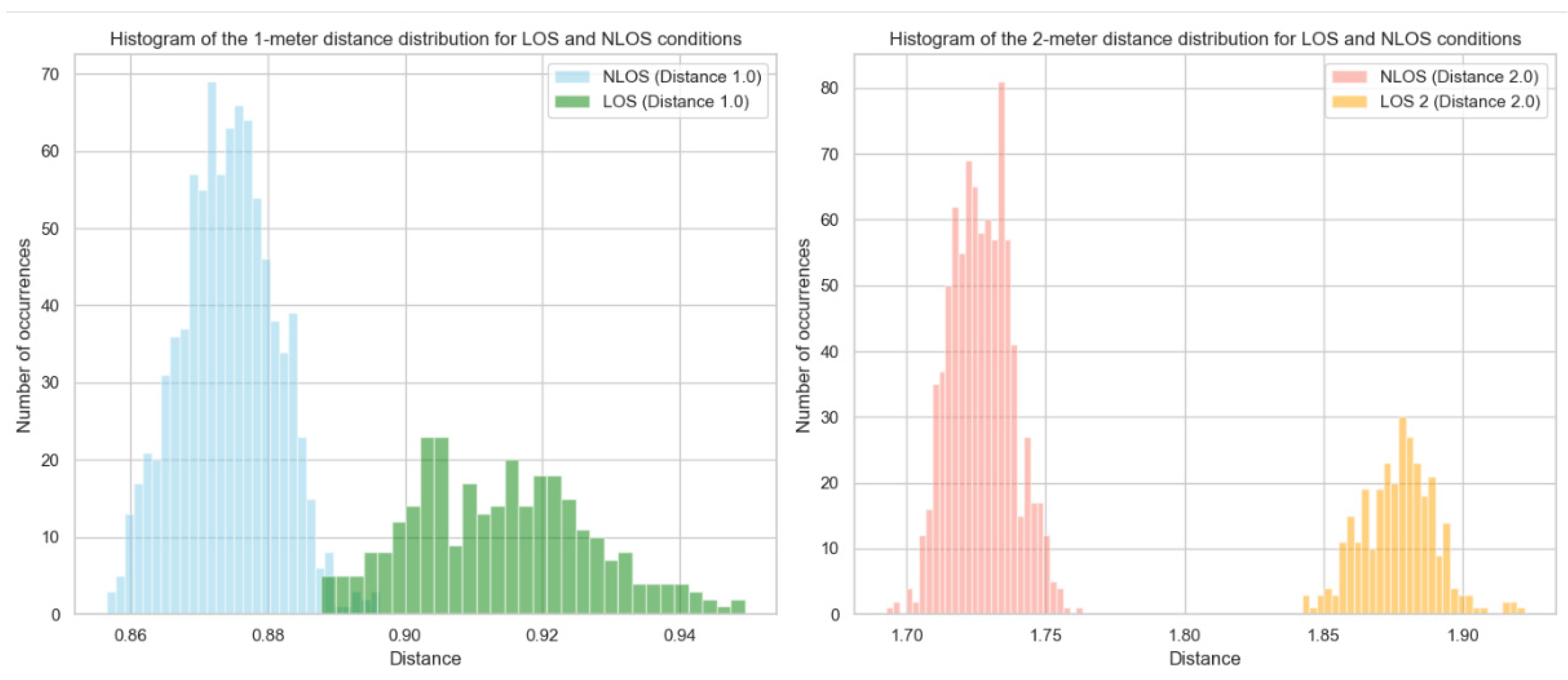

4.5. LoS/NLoS Conditions

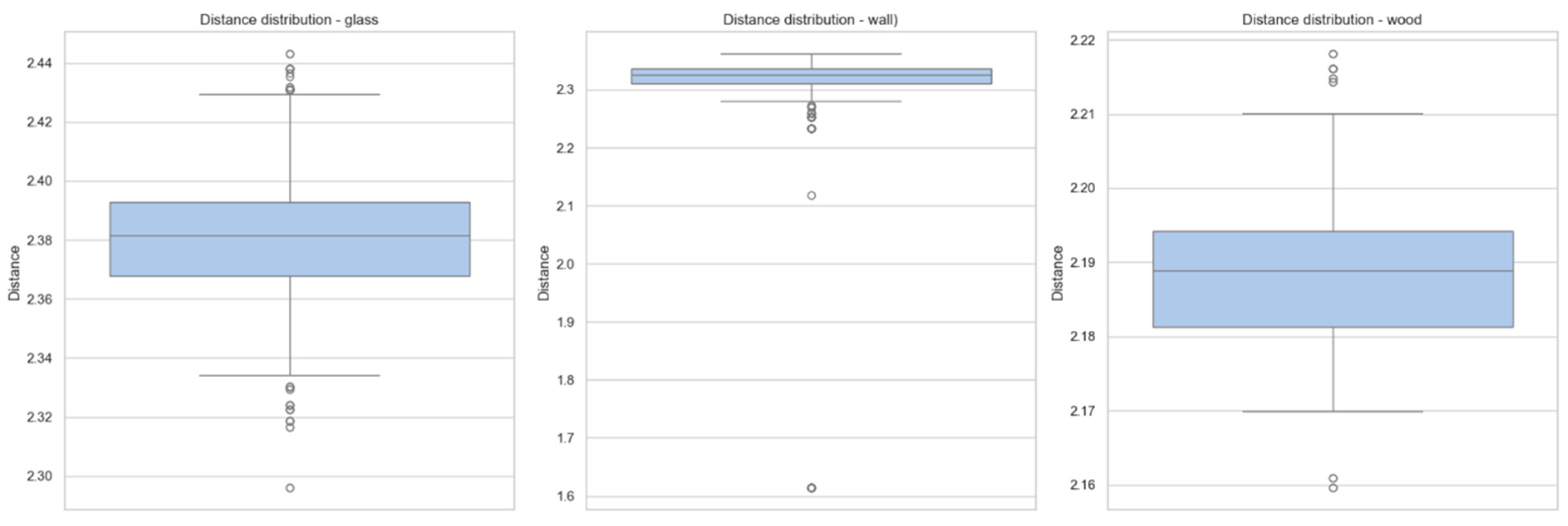

4.6. Impact of Obstacles

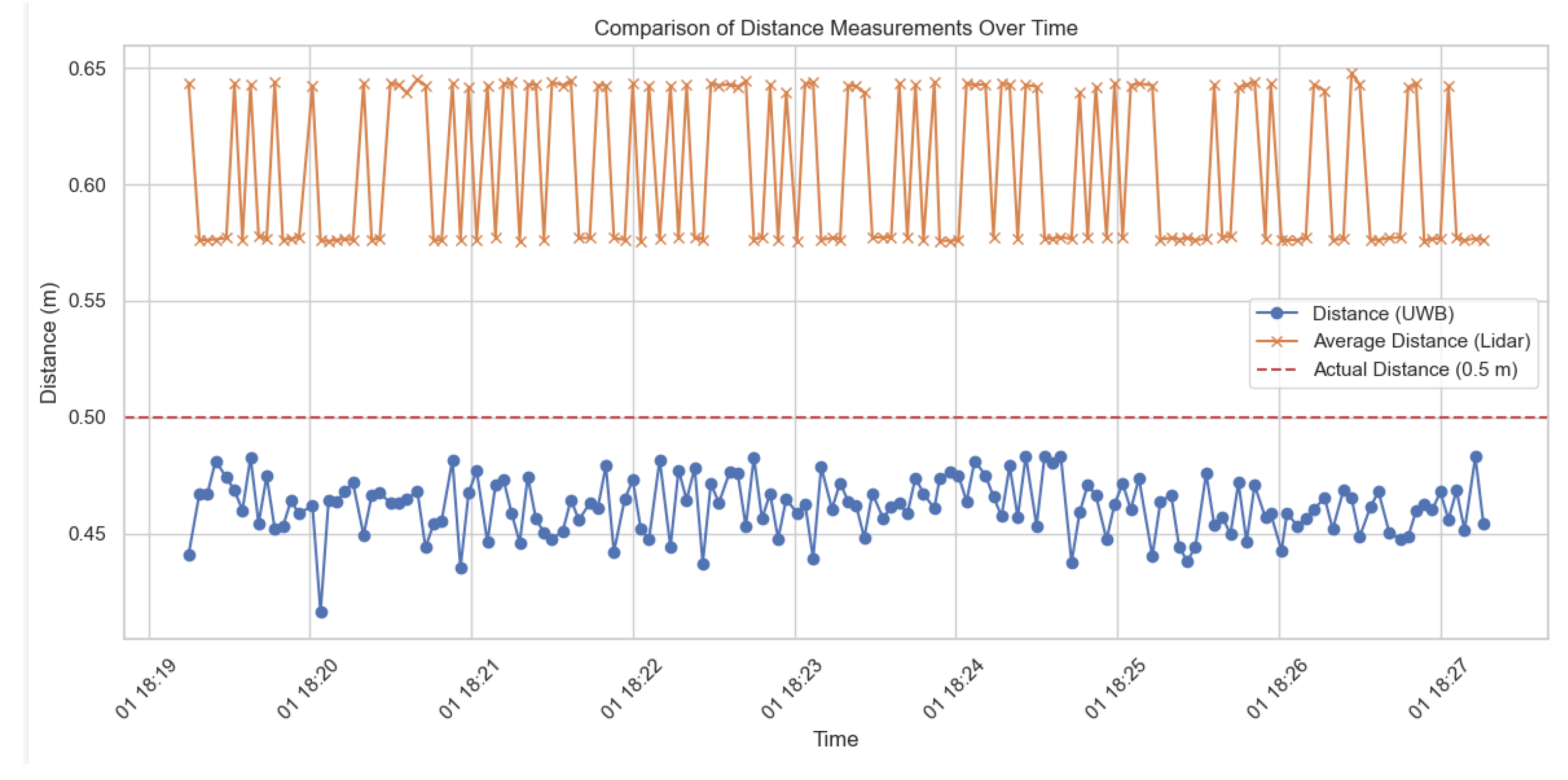

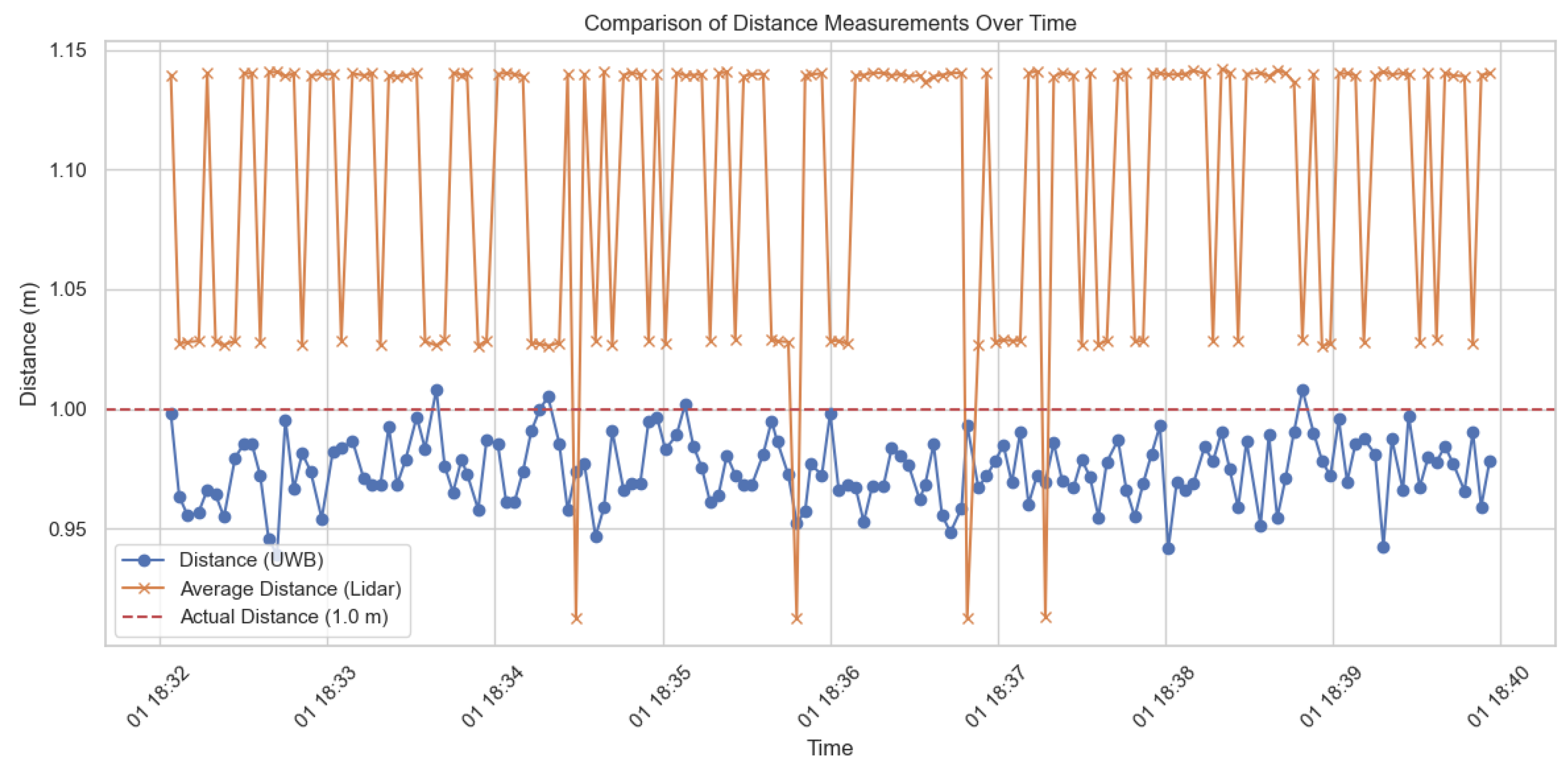

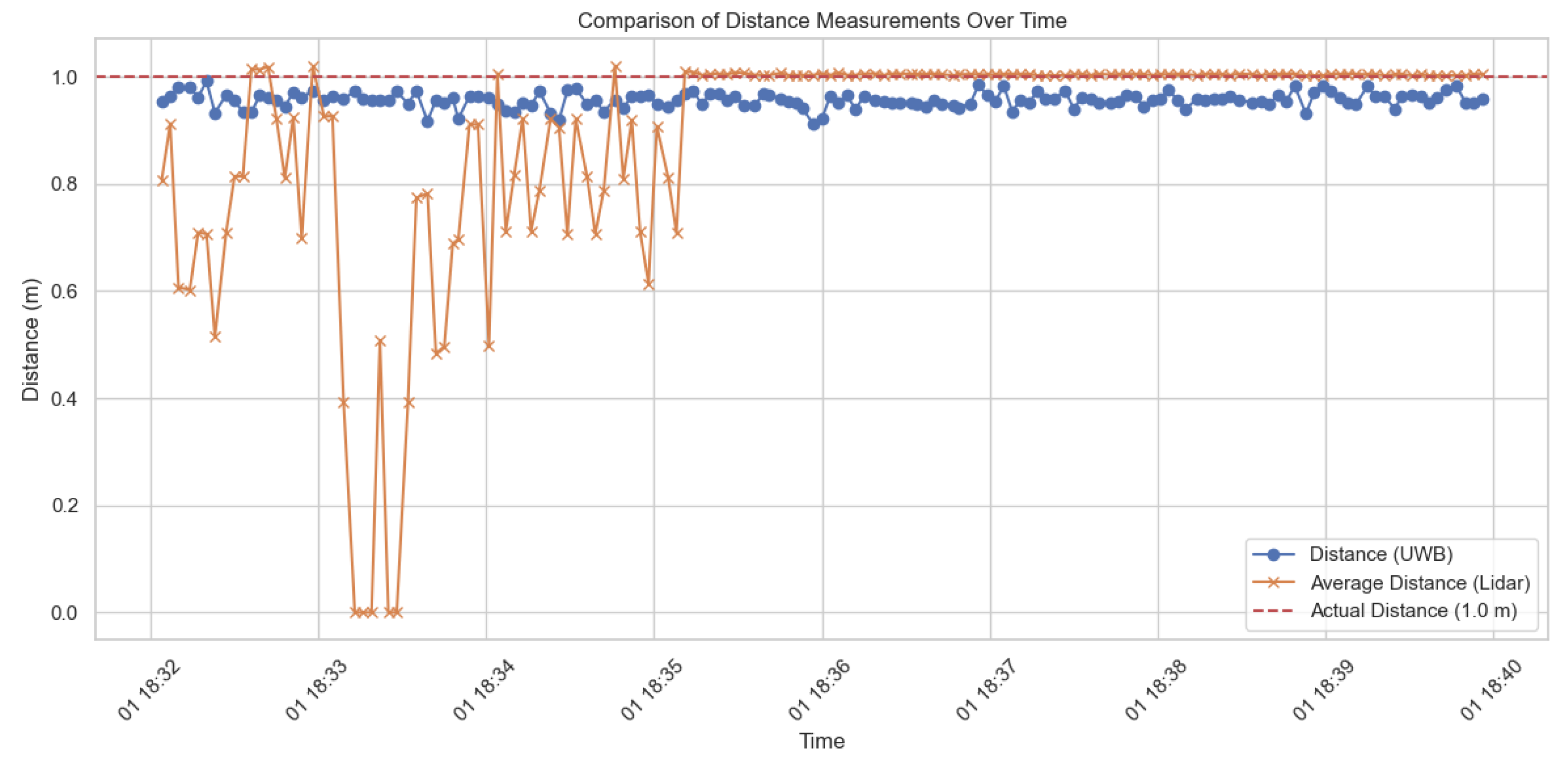

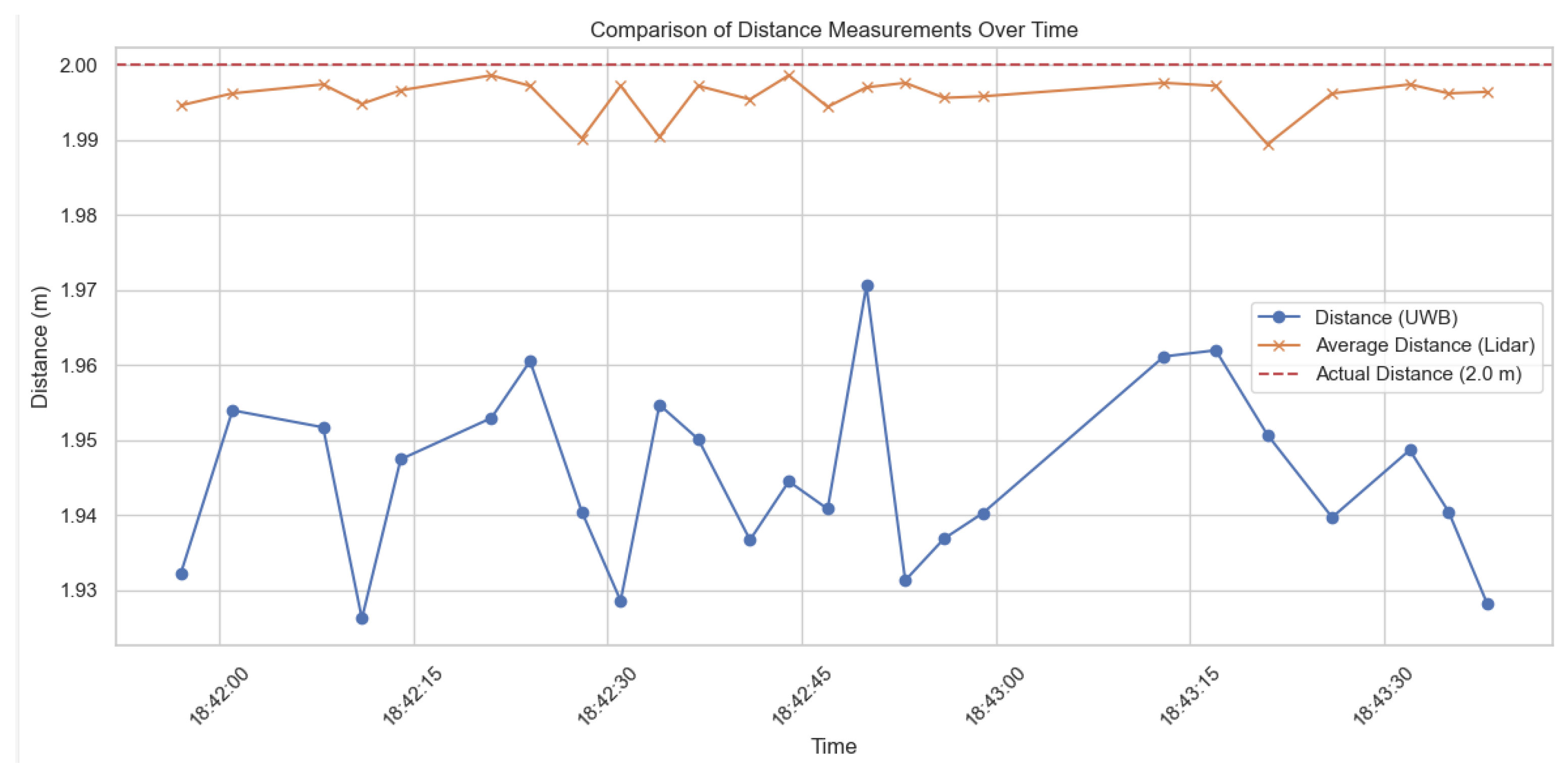

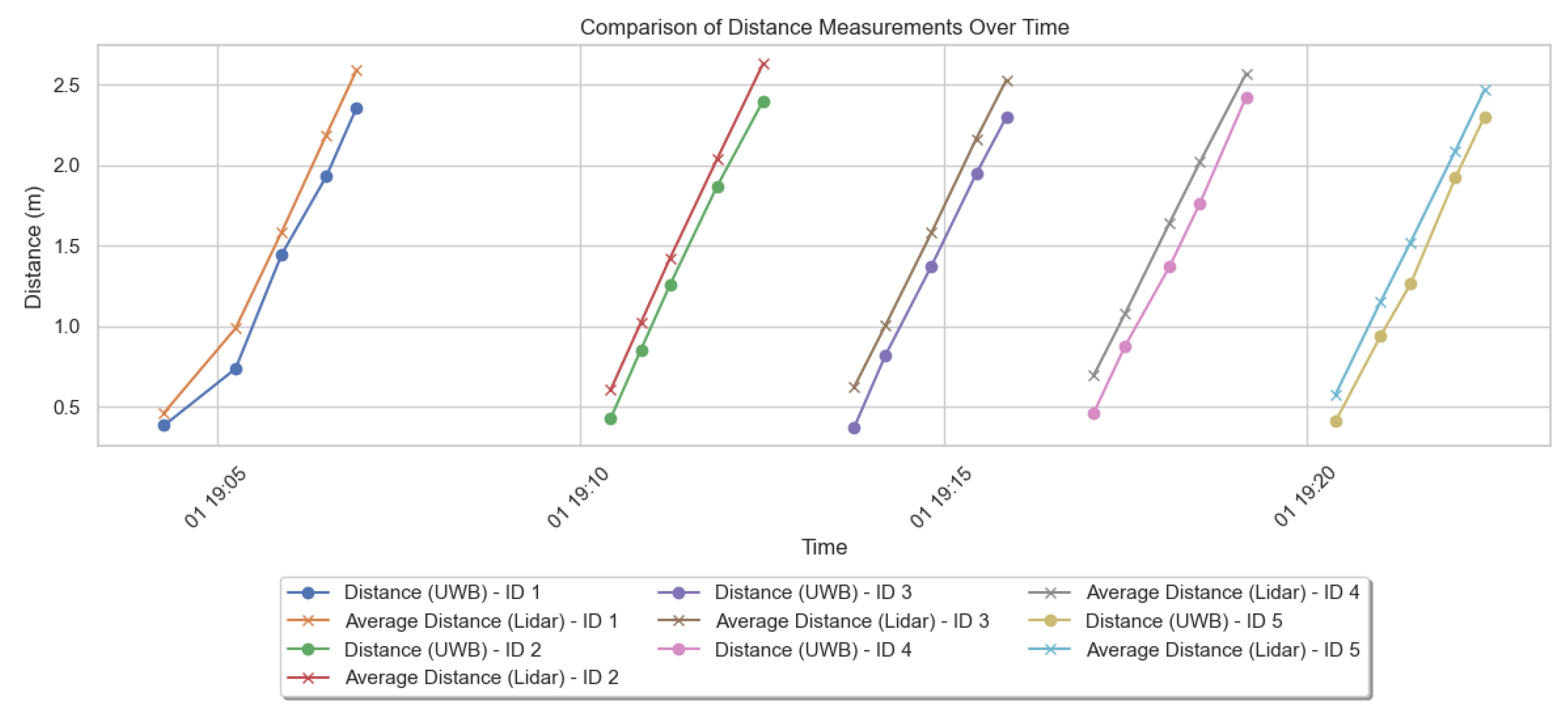

4.7. LIDAR and UWB Comparison

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest


| Category | Techniques |
|---|---|
| Time-based | ToA, TDoA, TW-ToA, PoA |
| Signal-based | RSSI, CSI |
| Angulation | AoA, AoD |
| Proximity Detection | RFID, Cell-ID |
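The classification above lists two-way time of arrival (TW-ToA), the principle behind the TWR beacons used in this study. The sketch below illustrates, for the general technique only, how a time of flight and hence a distance is obtained from single-sided (SS-TWR) and asymmetric double-sided (DS-TWR) exchanges, and why clock drift matters. On iOS the app receives distances from the Nearby Interaction framework rather than raw timestamps, so this is not how the app computes distance; the reply times and the 10 ppm responder clock error are assumed values.

```python
SPEED_OF_LIGHT_M_S = 299_792_458.0


def ss_twr_distance(t_round_s: float, t_reply_s: float) -> float:
    """Single-sided TWR: ToF = (T_round - T_reply) / 2."""
    return (t_round_s - t_reply_s) / 2.0 * SPEED_OF_LIGHT_M_S


def ds_twr_distance(t_round1: float, t_reply1: float,
                    t_round2: float, t_reply2: float) -> float:
    """Asymmetric double-sided TWR:
    ToF = (Tround1*Tround2 - Treply1*Treply2) / (Tround1 + Tround2 + Treply1 + Treply2)
    """
    tof = (t_round1 * t_round2 - t_reply1 * t_reply2) / (
        t_round1 + t_round2 + t_reply1 + t_reply2)
    return tof * SPEED_OF_LIGHT_M_S


def simulate(distance_m: float, reply_b_s: float, reply_a_s: float,
             responder_ppm: float) -> tuple:
    """Simulate one exchange: initiator A has an ideal clock, responder B
    runs fast by responder_ppm. Returns (SS-TWR estimate, DS-TWR estimate)."""
    tau = distance_m / SPEED_OF_LIGHT_M_S
    k_b = 1.0 + responder_ppm * 1e-6        # B's clock rate relative to true time
    t_round1 = 2 * tau + reply_b_s          # A: poll sent -> response received
    t_reply1 = reply_b_s * k_b              # B: poll received -> response sent
    t_round2 = (2 * tau + reply_a_s) * k_b  # B: response sent -> final received
    t_reply2 = reply_a_s                    # A: response received -> final sent
    return (ss_twr_distance(t_round1, t_reply1),
            ds_twr_distance(t_round1, t_reply1, t_round2, t_reply2))


if __name__ == "__main__":
    ss, ds = simulate(2.0, reply_b_s=200e-6, reply_a_s=300e-6, responder_ppm=10.0)
    print(f"SS-TWR: {ss:.3f} m, DS-TWR: {ds:.3f} m")  # true distance is 2 m
```

With these assumed values, SS-TWR underestimates the 2 m distance by about 0.30 m because the 10 ppm drift over a 200 µs reply is of the same order as the time of flight itself, while DS-TWR stays within 0.1 mm of the true distance, since the drift largely cancels between the two round trips.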

| | Distance 0.5 [m] | Distance 1 [m] | Distance 1.5 [m] | Distance 2 [m] | Distance 2.5 [m] |
|---|---|---|---|---|---|
| count | 937 | 602 | 1024 | 1039 | 993 |
| mean [m] | 0.58 | 1.10 | 1.42 | 1.88 | 2.39 |
| std [m] | 0.01 | 0.01 | 0.02 | 0.01 | 0.02 |
| min [m] | 0.55 | 1.05 | 0.99 | 1.84 | 2.32 |
| max [m] | 0.62 | 1.13 | 1.46 | 1.92 | 2.44 |
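The systematic error at each distance can be read off the table above by subtracting the tape-measured distance from the mean UWB reading. The short calculation below does this for the reported means only; no new measurements are involved, and the function name is illustrative.

```python
TRUE_DISTANCES_M = [0.5, 1.0, 1.5, 2.0, 2.5]
MEAN_MEASURED_M = [0.58, 1.10, 1.42, 1.88, 2.39]  # "mean" row of the table


def bias_per_distance(true_d, mean_measured):
    """Return (true distance, bias in m, bias as % of true distance)."""
    rows = []
    for t, m in zip(true_d, mean_measured):
        bias = m - t
        rows.append((t, round(bias, 2), round(100.0 * bias / t, 1)))
    return rows


if __name__ == "__main__":
    for t, b, pct in bias_per_distance(TRUE_DISTANCES_M, MEAN_MEASURED_M):
        print(f"{t:.1f} m: bias {b:+.2f} m ({pct:+.1f} %)")
```

With these values, the mean reading is 0.08 to 0.10 m too long at 0.5 m and 1 m and 0.08 to 0.12 m too short from 1.5 m upward, while the standard deviations in the table stay at 0.01 to 0.02 m; in this test the bias, rather than the spread, dominates the error.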

| | Distance with CameraAssist (0.5 [m]) | Distance without CameraAssist (0.5 [m]) | Distance with CameraAssist (2 [m]) | Distance without CameraAssist (2 [m]) |
|---|---|---|---|---|
| mean [m] | 0.32 | 0.58 | 1.80 | 1.88 |
| std [m] | 0.01 | 0.01 | 0.01 | 0.01 |
| min [m] | 0.29 | 0.55 | 1.79 | 1.84 |
| max [m] | 0.36 | 0.62 | 1.81 | 1.92 |
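The same subtraction, applied to the CameraAssist table above, shows how enabling the option shifts the mean reading at each distance. The dictionary below simply restates the table's means; the function name is illustrative.

```python
# true distance [m] -> (mean with CameraAssist, mean without CameraAssist) [m]
CAMERA_ASSIST_MEANS_M = {0.5: (0.32, 0.58), 2.0: (1.80, 1.88)}


def camera_assist_effect(means):
    """Signed errors with and without CameraAssist, and the shift it introduces."""
    result = {}
    for true_d, (with_ca, without_ca) in means.items():
        result[true_d] = {
            "error_with_m": round(with_ca - true_d, 2),
            "error_without_m": round(without_ca - true_d, 2),
            "shift_m": round(with_ca - without_ca, 2),
        }
    return result


if __name__ == "__main__":
    for d, r in camera_assist_effect(CAMERA_ASSIST_MEANS_M).items():
        print(d, r)
```

Based on these means alone, enabling CameraAssist lowers the reading by 0.26 m at 0.5 m and by 0.08 m at 2 m, turning a +0.08 m error into -0.18 m and a -0.12 m error into -0.20 m; in this particular test, the option did not reduce the mean error.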

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Rosiak, M.; Kawulok, M.; Maćkowski, M. The Effectiveness of UWB-Based Indoor Positioning Systems for the Navigation of Visually Impaired Individuals. Appl. Sci. 2024, 14, 5646. https://doi.org/10.3390/app14135646

Rosiak M, Kawulok M, Maćkowski M. The Effectiveness of UWB-Based Indoor Positioning Systems for the Navigation of Visually Impaired Individuals. Applied Sciences. 2024; 14(13):5646. https://doi.org/10.3390/app14135646

Chicago/Turabian Style: Rosiak, Maria, Mateusz Kawulok, and Michał Maćkowski. 2024. "The Effectiveness of UWB-Based Indoor Positioning Systems for the Navigation of Visually Impaired Individuals" Applied Sciences 14, no. 13: 5646. https://doi.org/10.3390/app14135646

APA Style: Rosiak, M., Kawulok, M., & Maćkowski, M. (2024). The Effectiveness of UWB-Based Indoor Positioning Systems for the Navigation of Visually Impaired Individuals. Applied Sciences, 14(13), 5646. https://doi.org/10.3390/app14135646