Intelligent Detection of Underwater Defects in Concrete Dams Based on YOLOv8s-UEC

Abstract

1. Introduction

2. Methodology

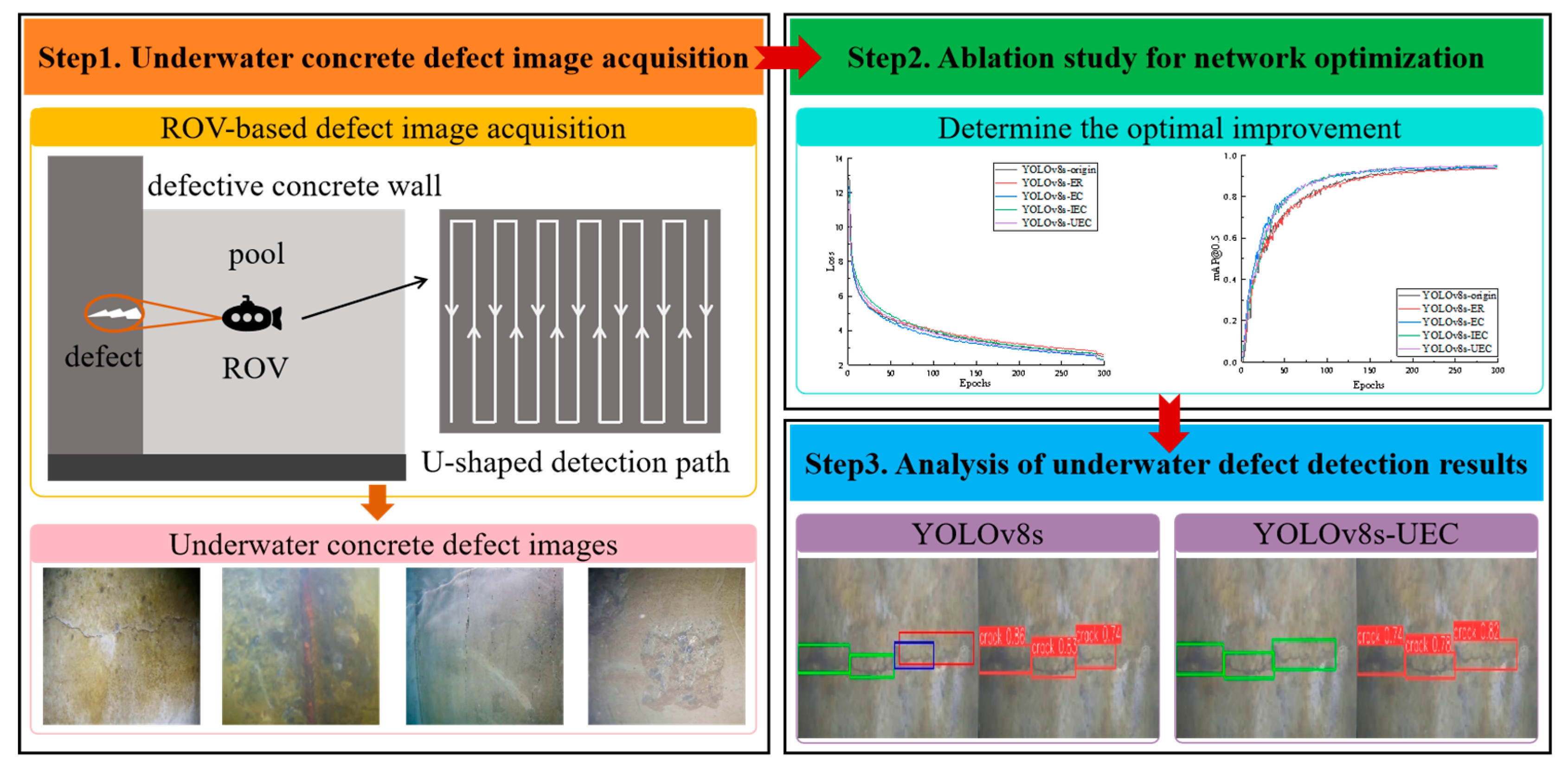

2.1. Procedure for Underwater Defect Detection

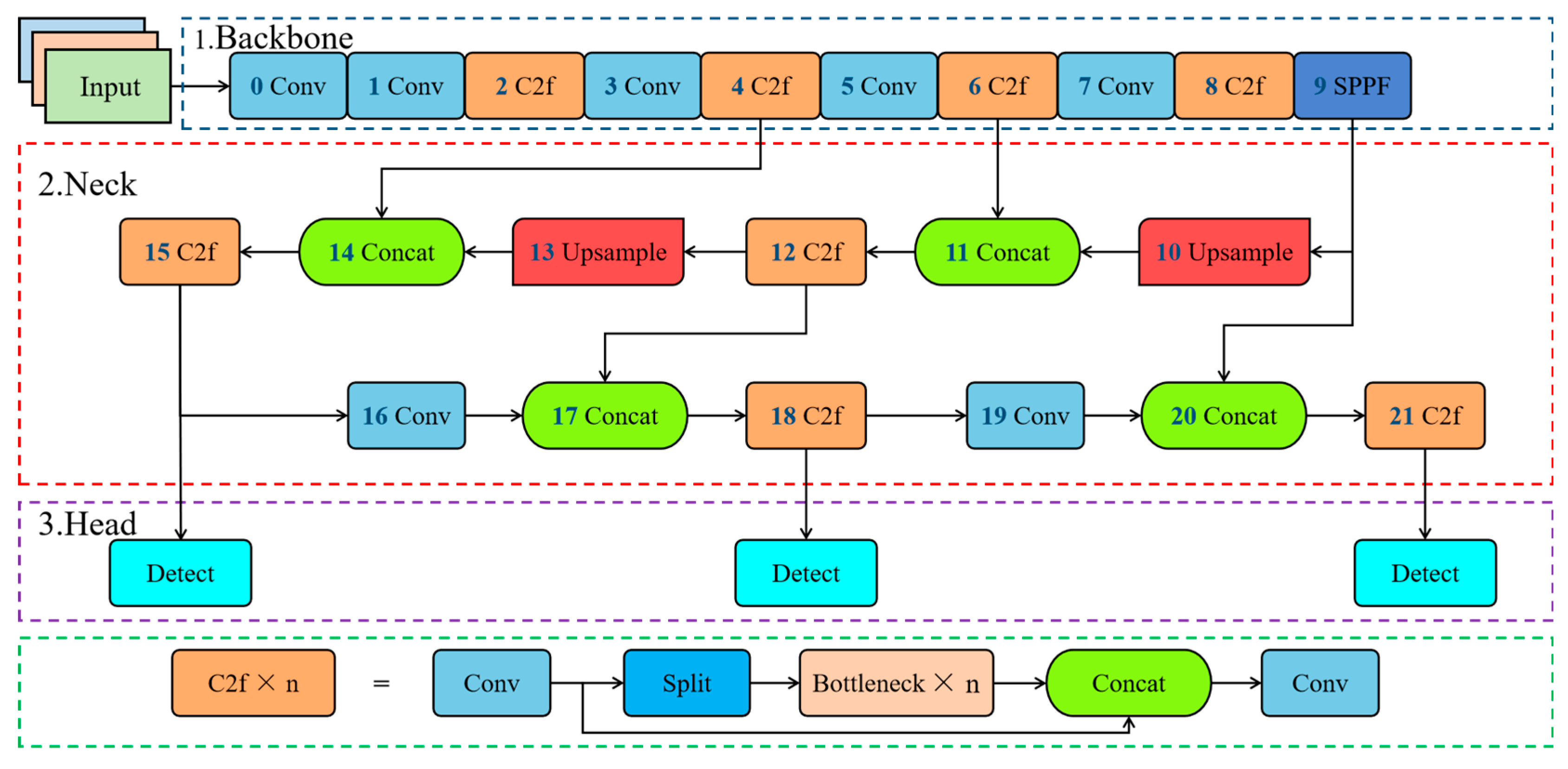

2.2. Original YOLOv8s Network

2.3. Network Improvement Component

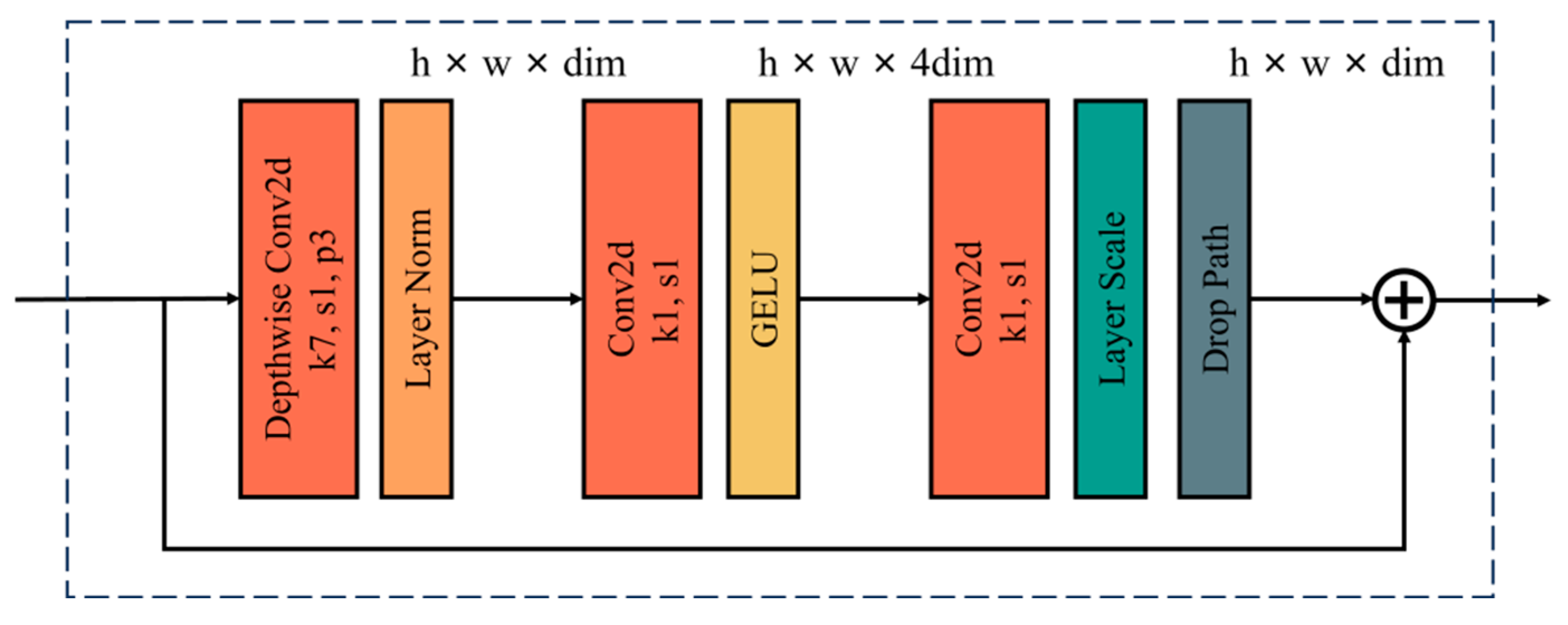

2.3.1. ConvNeXt Block Module

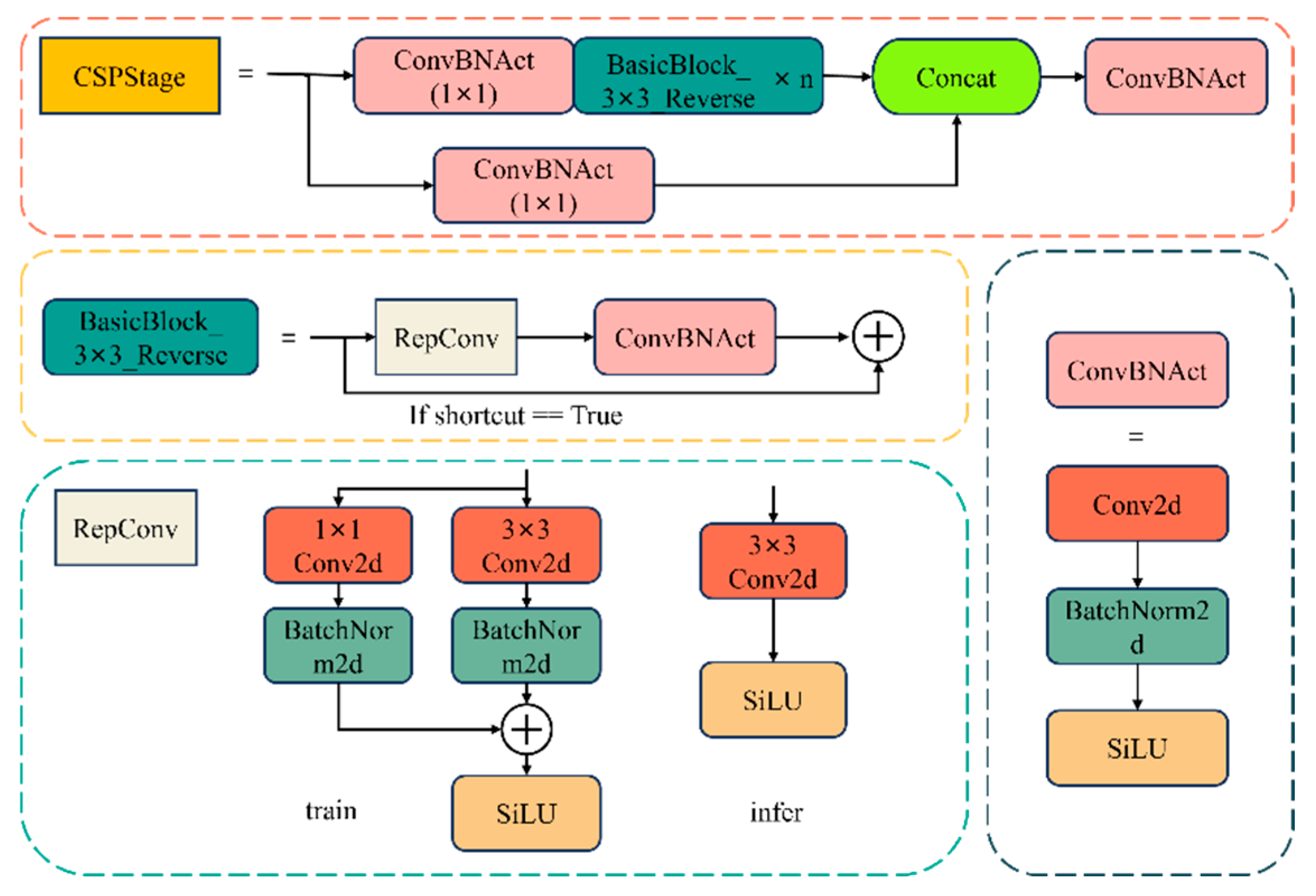

2.3.2. Efficient-RepGFPN Structure

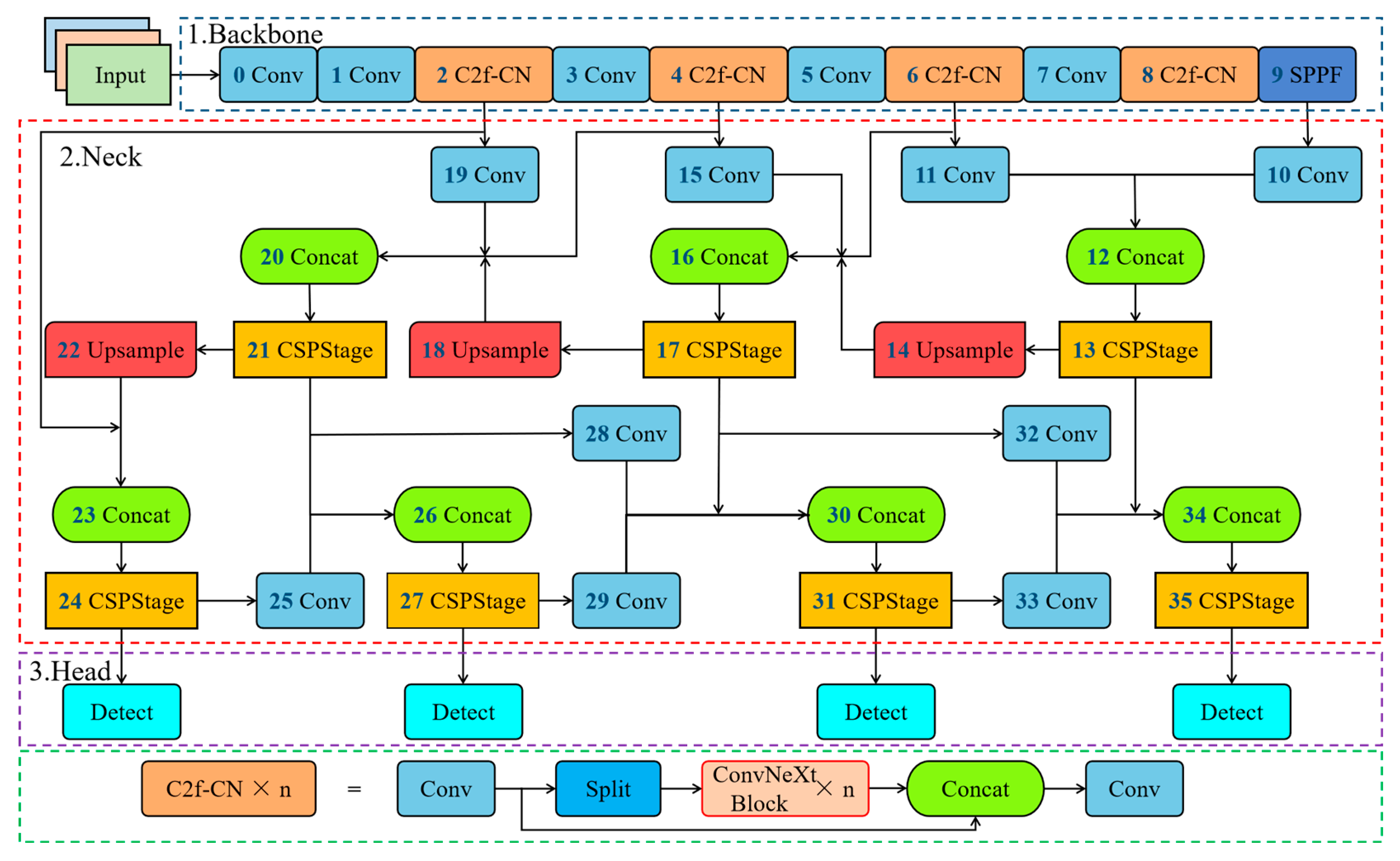

2.4. Proposed YOLOv8s-UEC Network

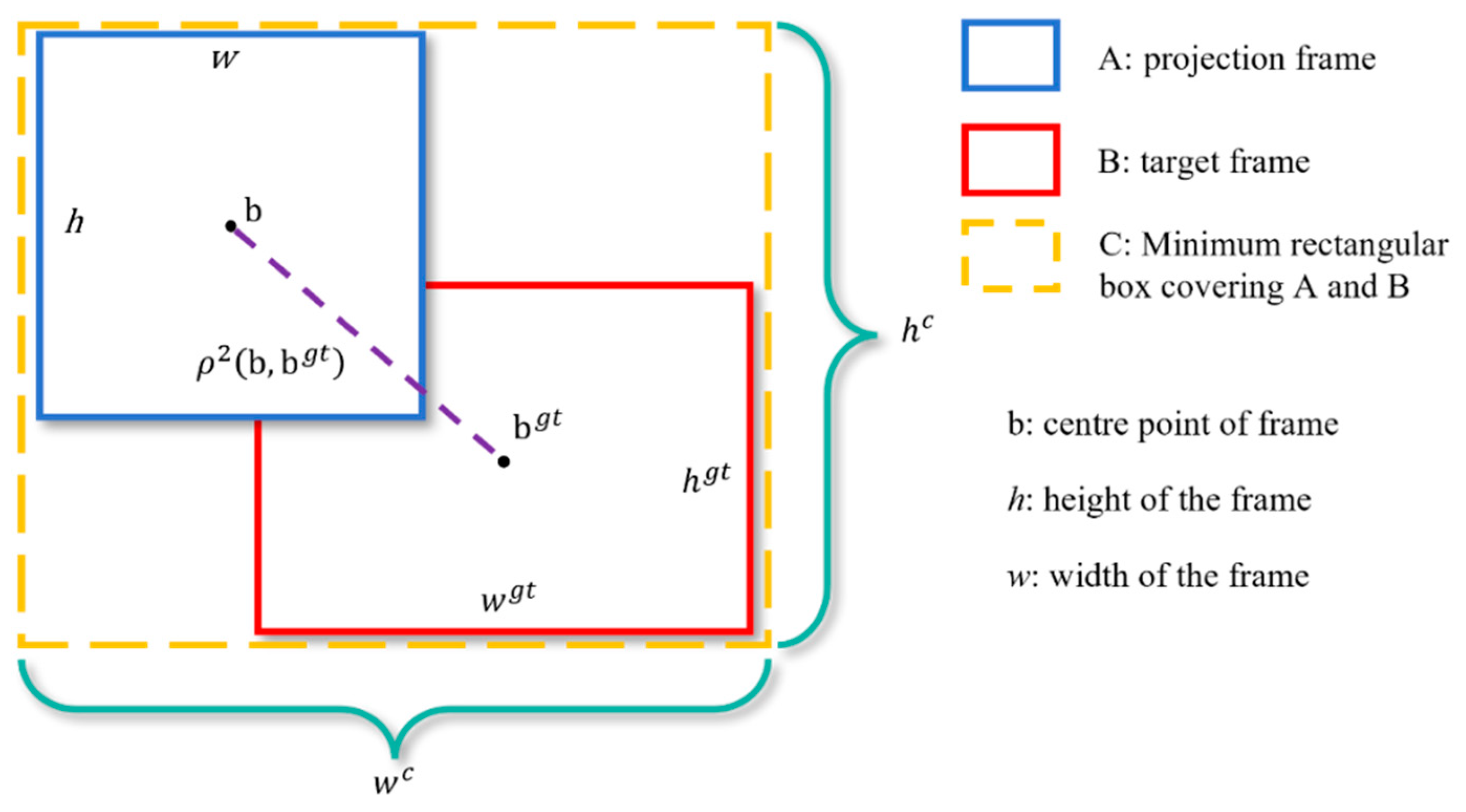

2.5. Adopted Loss Function

2.6. Evaluation Indicators

3. Experiments and Results

3.1. Data Collection and Preprocessing

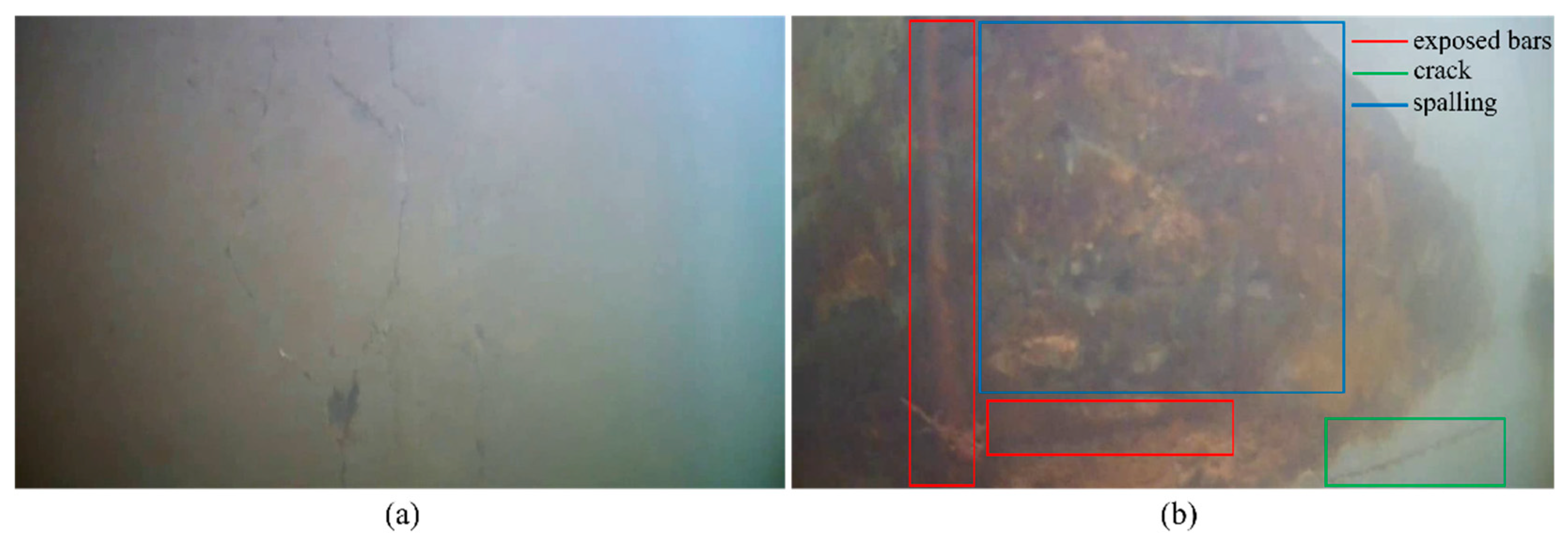

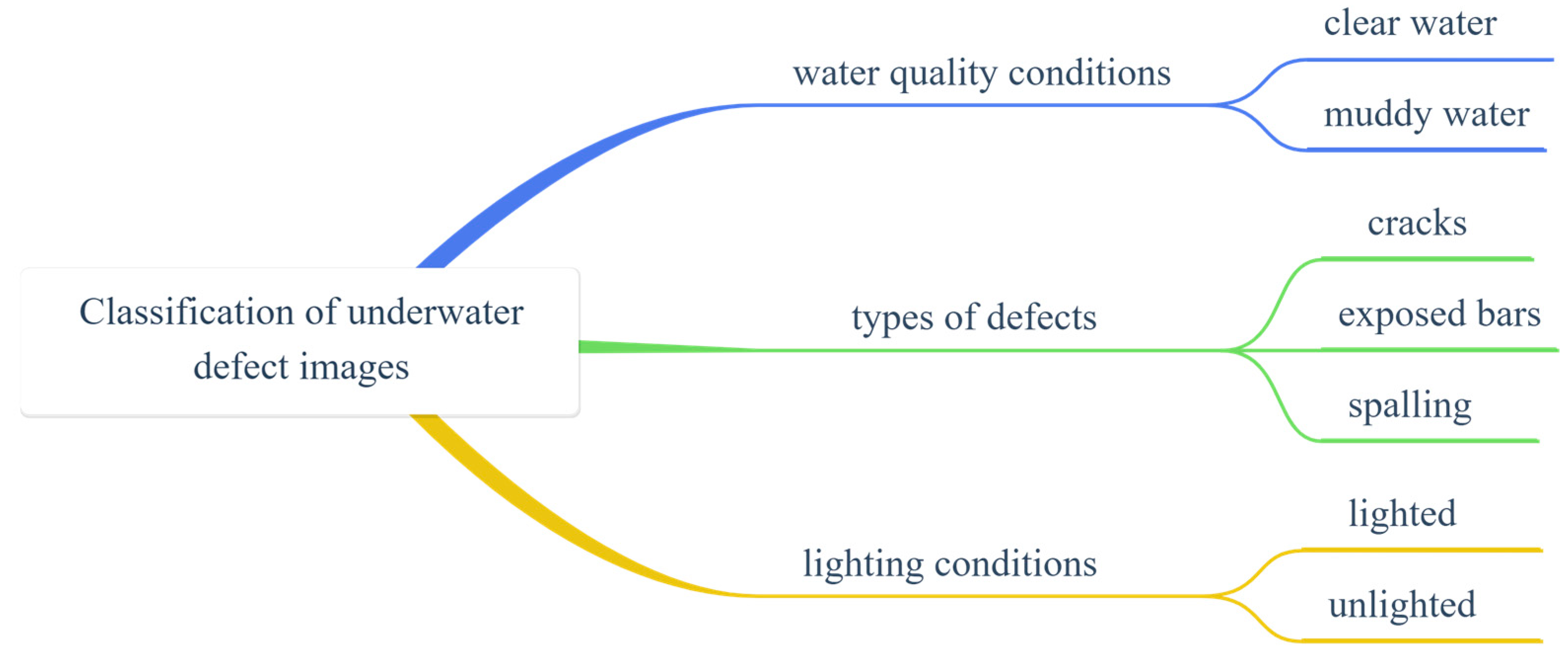

3.1.1. Classification of Underwater Defective Samples

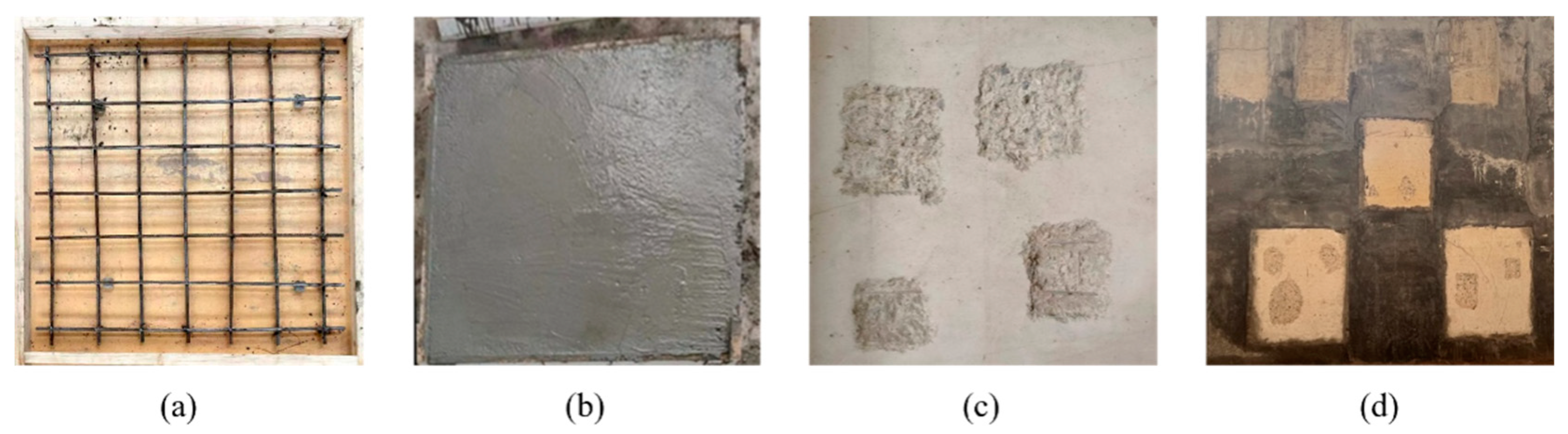

3.1.2. Construction of a Defective Concrete Wall

3.1.3. Image Acquisition of Underwater Defects

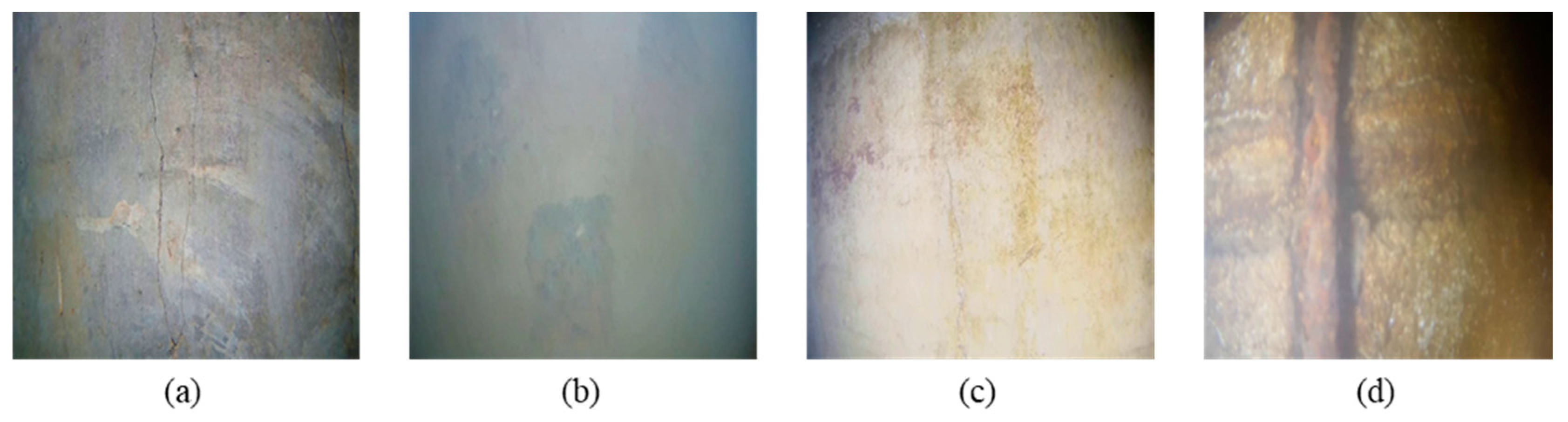

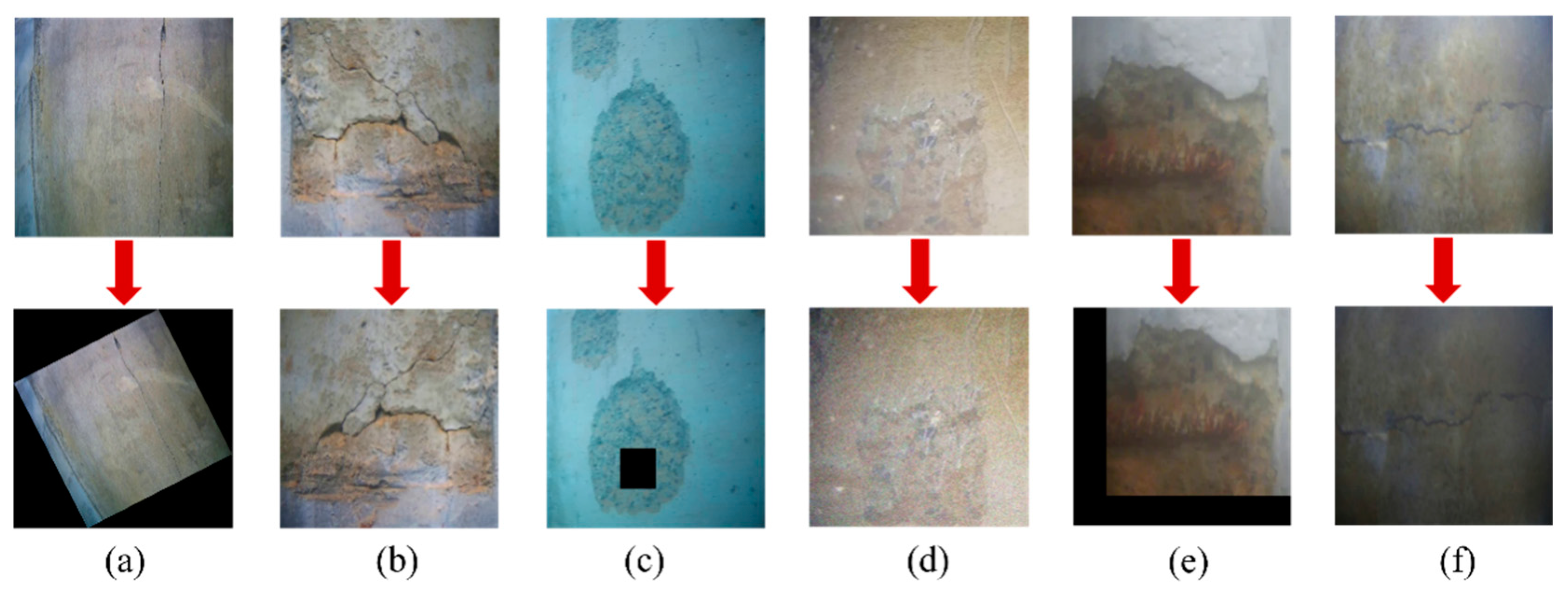

3.1.4. Data Processing

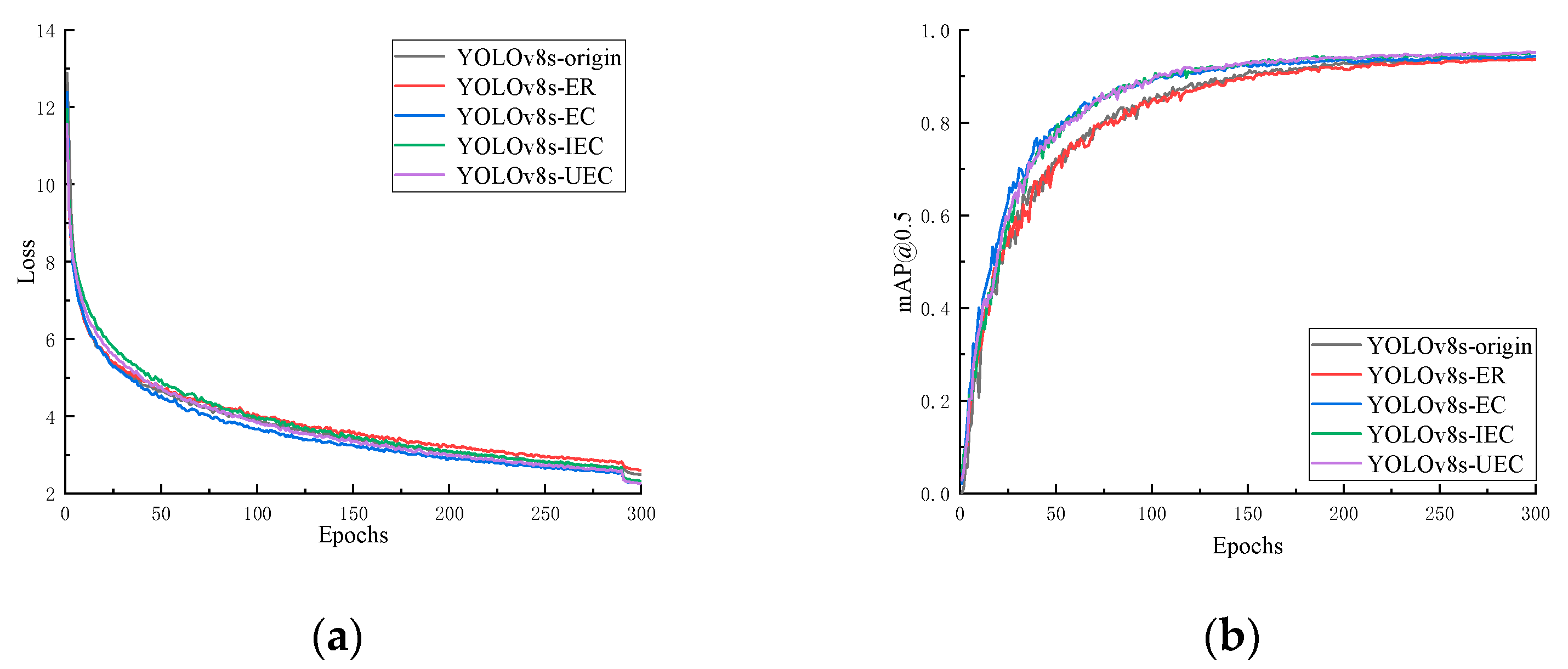

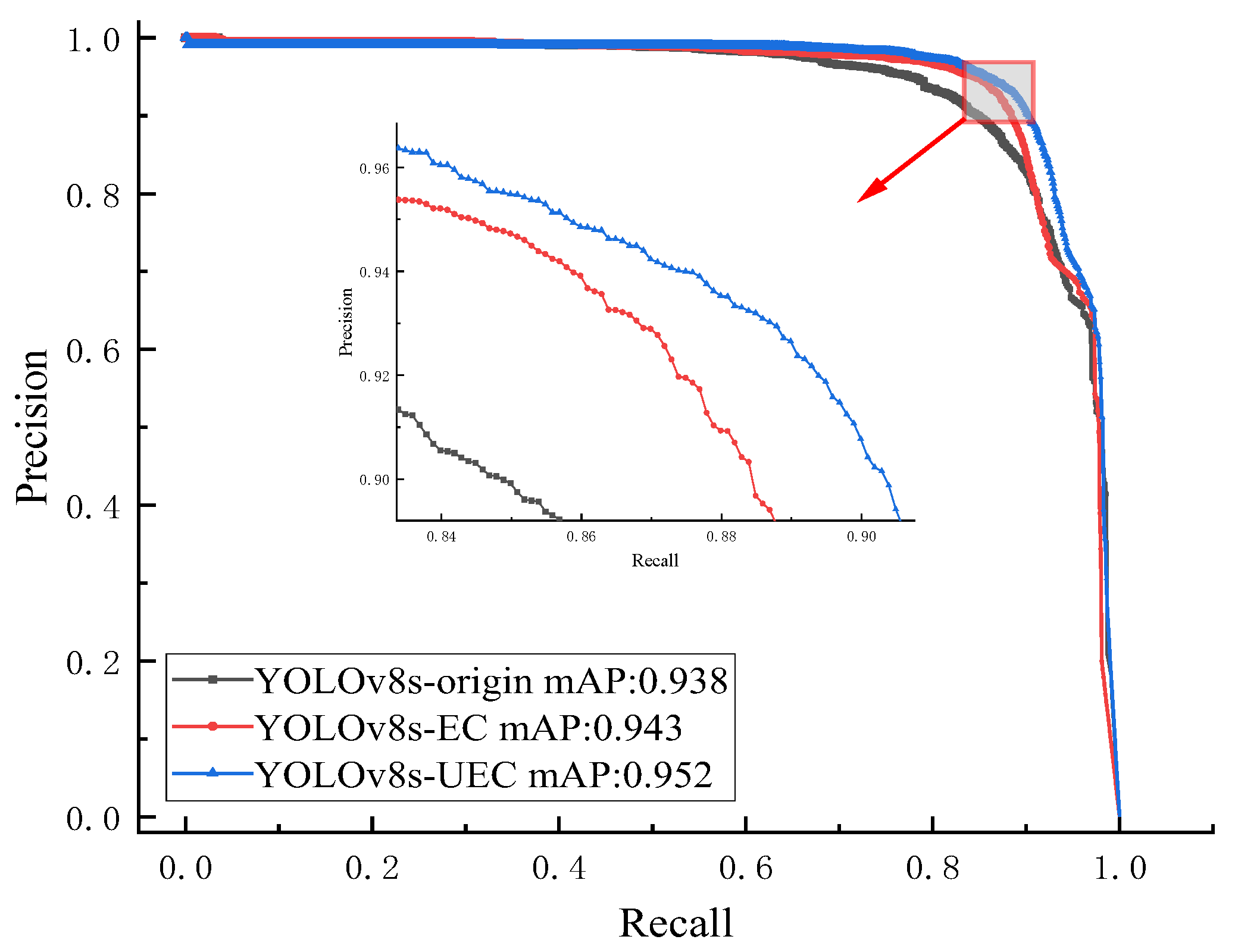

3.2. Network Ablation Study

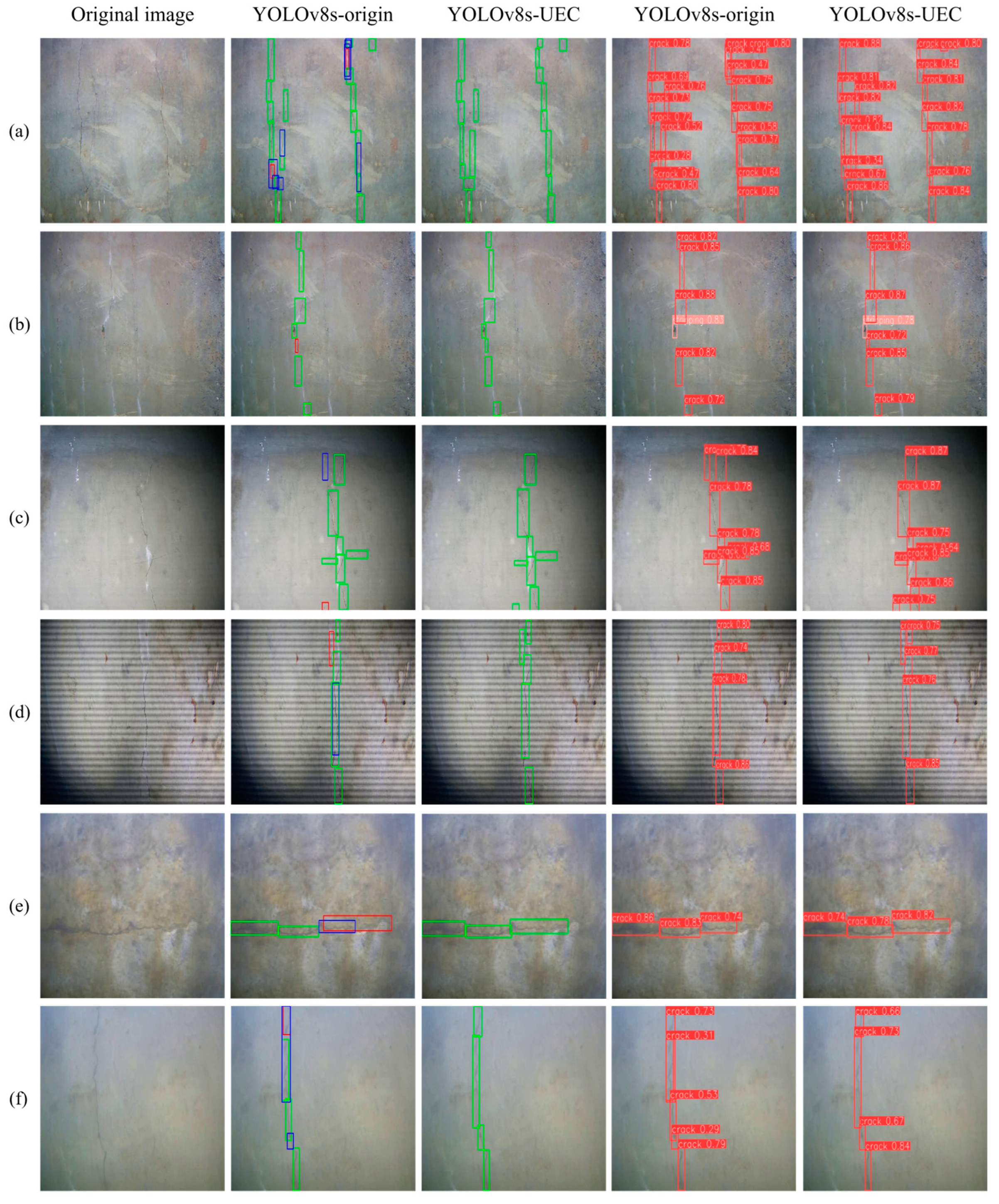

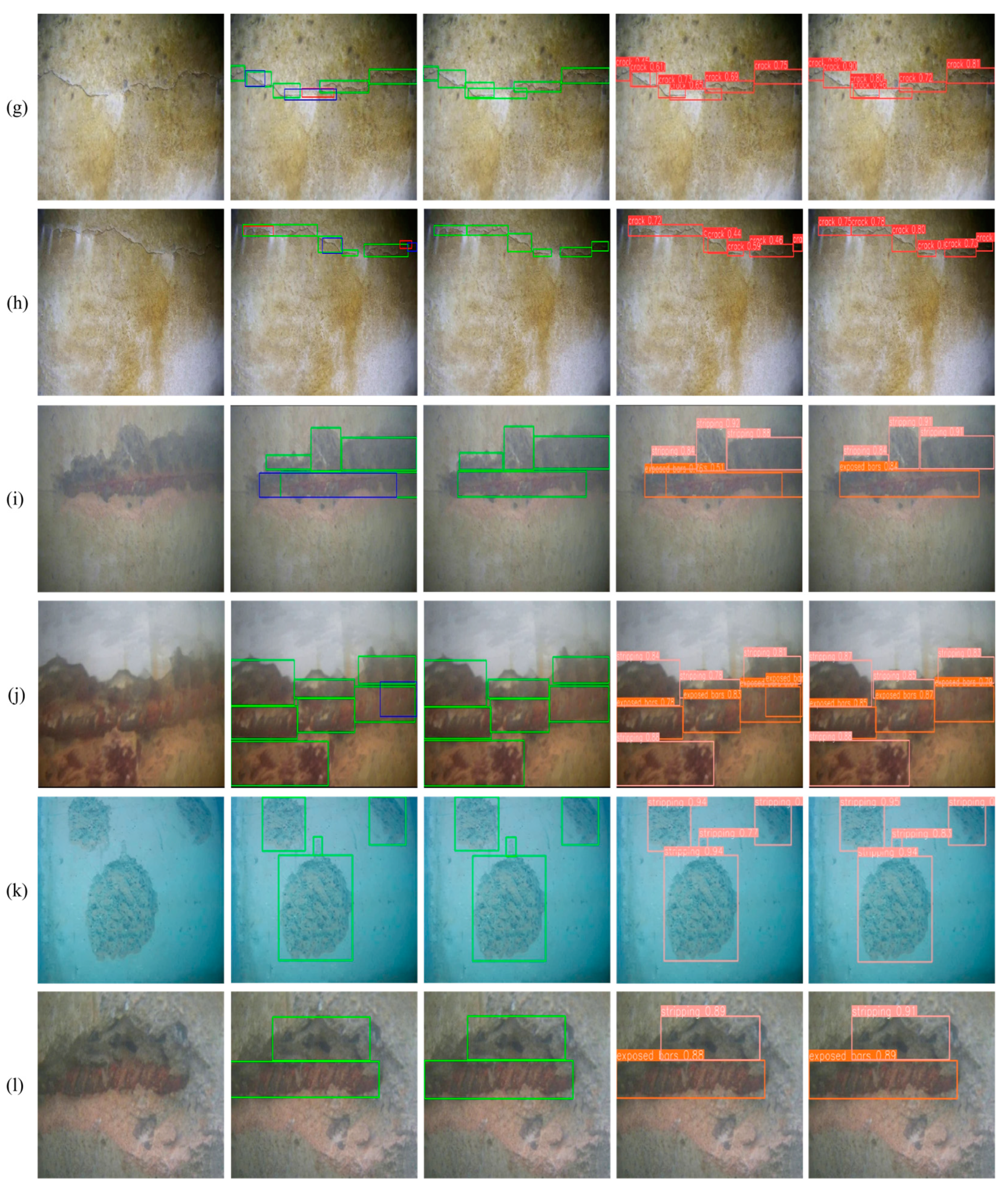

3.3. Underwater Defect Detection Based on YOLOv8s-UEC

4. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest


| Network Name | Network Structure | Adopted Module | Loss Function | Head Number |
|---|---|---|---|---|
| YOLOv8s | Origin | C2f | CIoU | 3 |
| YOLOv8s-ER | E-RepGFPN | C2f | CIoU | 3 |
| YOLOv8s-EC | E-RepGFPN | C2f | CIoU | 3 |
| YOLOv8s-IEC | E-RepGFPN + P2 | C2f-CN | CIoU | 4 |
| YOLOv8s-UEC | E-RepGFPN + P2 | C2f-CN | Focal-EIoU | 4 |
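The configuration table names Focal-EIoU as the loss used by YOLOv8s-UEC in place of CIoU. As a rough illustration only, the sketch below evaluates the commonly published form of that loss, L = IoU^γ · (1 − IoU + center term + width term + height term), for one predicted box and one ground-truth box. The function name `focal_eiou_loss`, the `(x1, y1, x2, y2)` box layout, and γ = 0.5 are assumptions made for this example; they are not stated in the text here and may differ from the authors' implementation.

```python
def focal_eiou_loss(box, gt, gamma=0.5, eps=1e-9):
    """Focal-EIoU for one pair of axis-aligned boxes given as (x1, y1, x2, y2).

    gamma=0.5 is an assumed focusing exponent, not a value taken from the paper.
    """
    x1, y1, x2, y2 = box
    gx1, gy1, gx2, gy2 = gt
    w, h = x2 - x1, y2 - y1
    gw, gh = gx2 - gx1, gy2 - gy1

    # Intersection over union of the two boxes.
    inter_w = max(0.0, min(x2, gx2) - max(x1, gx1))
    inter_h = max(0.0, min(y2, gy2) - max(y1, gy1))
    inter = inter_w * inter_h
    union = w * h + gw * gh - inter
    iou = inter / (union + eps)

    # Width and height of the smallest box enclosing both boxes.
    cw = max(x2, gx2) - min(x1, gx1)
    ch = max(y2, gy2) - min(y1, gy1)

    # Squared distance between box centers, normalized by the enclosing diagonal.
    dx = (x1 + x2) / 2 - (gx1 + gx2) / 2
    dy = (y1 + y2) / 2 - (gy1 + gy2) / 2
    center_term = (dx * dx + dy * dy) / (cw * cw + ch * ch + eps)

    # Separate width and height penalties (the "efficient" part of EIoU).
    width_term = (w - gw) ** 2 / (cw * cw + eps)
    height_term = (h - gh) ** 2 / (ch * ch + eps)

    eiou = 1.0 - iou + center_term + width_term + height_term
    # Focal weighting: boxes with higher IoU contribute more to the loss.
    return (iou ** gamma) * eiou


if __name__ == "__main__":
    print(focal_eiou_loss((0, 0, 2, 2), (1, 1, 3, 3)))
```

One property worth noting in this form: when the boxes do not overlap at all, IoU is 0 and the focal weight drives the loss to 0, so implementations often combine it with other terms or safeguards.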

| Network | Precision | Recall | F1 | mAP0.5 | mAP0.5:0.95 | FPS |
|---|---|---|---|---|---|---|
| YOLOv8s | 91.6% | 90.0% | 0.9079 | 93.8% | 74.1% | 121 |
| YOLOv8s-ER | 92.1% | 90.6% | 0.9134 | 94.1% | 76.0% | 114 |
| YOLOv8s-EC | 93.9% | 91.4% | 0.9263 | 94.3% | 79.1% | 115 |
| YOLOv8s-IEC | 94.5% | 90.7% | 0.9256 | 95.1% | 79.5% | 82 |
| YOLOv8s-UEC | 93.9% | 91.6% | 0.9274 | 95.2% | 79.9% | 107 |
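The F1 column in the ablation table is consistent with the harmonic mean of the Precision and Recall columns. The short check below recomputes it for two rows; the helper name `f1_score` and the dictionary layout are illustrative choices, and the input values are copied from the table.

```python
def f1_score(precision, recall):
    """Harmonic mean of precision and recall (both as fractions in [0, 1])."""
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)


# (precision, recall) pairs taken from the ablation table above.
rows = {
    "YOLOv8s": (0.916, 0.900),
    "YOLOv8s-UEC": (0.939, 0.916),
}

for name, (p, r) in rows.items():
    print(f"{name}: F1 = {f1_score(p, r):.4f}")
# YOLOv8s: F1 = 0.9079
# YOLOv8s-UEC: F1 = 0.9274
```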

| Network Name | Crack | Spalling | Exposed Bars |
|---|---|---|---|
| YOLOv8s | 86.3% | 97.9% | 97.1% |
| YOLOv8s-ER | 87.5% | 97.6% | 97.2% |
| YOLOv8s-EC | 87.9% | 97.8% | 97.3% |
| YOLOv8s-IEC | 89.9% | 98.0% | 97.5% |
| YOLOv8s-UEC | 89.3% | 98.4% | 97.9% |
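The per-class table does not label its metric. Assuming the three columns report average precision at an IoU threshold of 0.5, their unweighted mean should match the mAP0.5 column of the ablation table to within rounding. The sketch below checks that assumption; the names `per_class_ap`, `reported_map50`, and `mean_ap` are introduced here for illustration.

```python
# Per-class values (crack, spalling, exposed bars) in percent, from the table above.
per_class_ap = {
    "YOLOv8s": (86.3, 97.9, 97.1),
    "YOLOv8s-ER": (87.5, 97.6, 97.2),
    "YOLOv8s-EC": (87.9, 97.8, 97.3),
    "YOLOv8s-IEC": (89.9, 98.0, 97.5),
    "YOLOv8s-UEC": (89.3, 98.4, 97.9),
}

# mAP0.5 values in percent, from the ablation results table.
reported_map50 = {
    "YOLOv8s": 93.8,
    "YOLOv8s-ER": 94.1,
    "YOLOv8s-EC": 94.3,
    "YOLOv8s-IEC": 95.1,
    "YOLOv8s-UEC": 95.2,
}


def mean_ap(values):
    """Unweighted mean of per-class AP values."""
    return sum(values) / len(values)


for name, values in per_class_ap.items():
    print(f"{name}: mean = {mean_ap(values):.1f}, reported mAP0.5 = {reported_map50[name]}")
```

Under this reading, most of the mAP0.5 gain from YOLOv8s to YOLOv8s-UEC comes from the crack class (86.3% to 89.3%), while spalling and exposed bars each change by less than one point.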

| Network | Precision | Recall | F1 | mAP0.5 | FPS |
|---|---|---|---|---|---|
| Faster R-CNN | 93.7% | 78.9% | 0.8567 | 90.3% | 39 |
| YOLOX-s | 91.3% | 90.6% | 0.9095 | 93.6% | 84 |
| YOLOv5s | 93.4% | 91.0% | 0.9218 | 94.2% | 101 |
| YOLOv8s | 91.6% | 90.0% | 0.9079 | 93.8% | 121 |
| YOLOv8s-UEC | 93.9% | 91.6% | 0.9274 | 95.2% | 107 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Liang, C.; Zhao, Y.; Kang, F. Intelligent Detection of Underwater Defects in Concrete Dams Based on YOLOv8s-UEC. Appl. Sci. 2024, 14, 8731. https://doi.org/10.3390/app14198731
