Leveraging IoT Devices for Atrial Fibrillation Detection: A Comprehensive Study of AI Techniques

Abstract

1. Introduction and Related Work

2. Materials and Methods

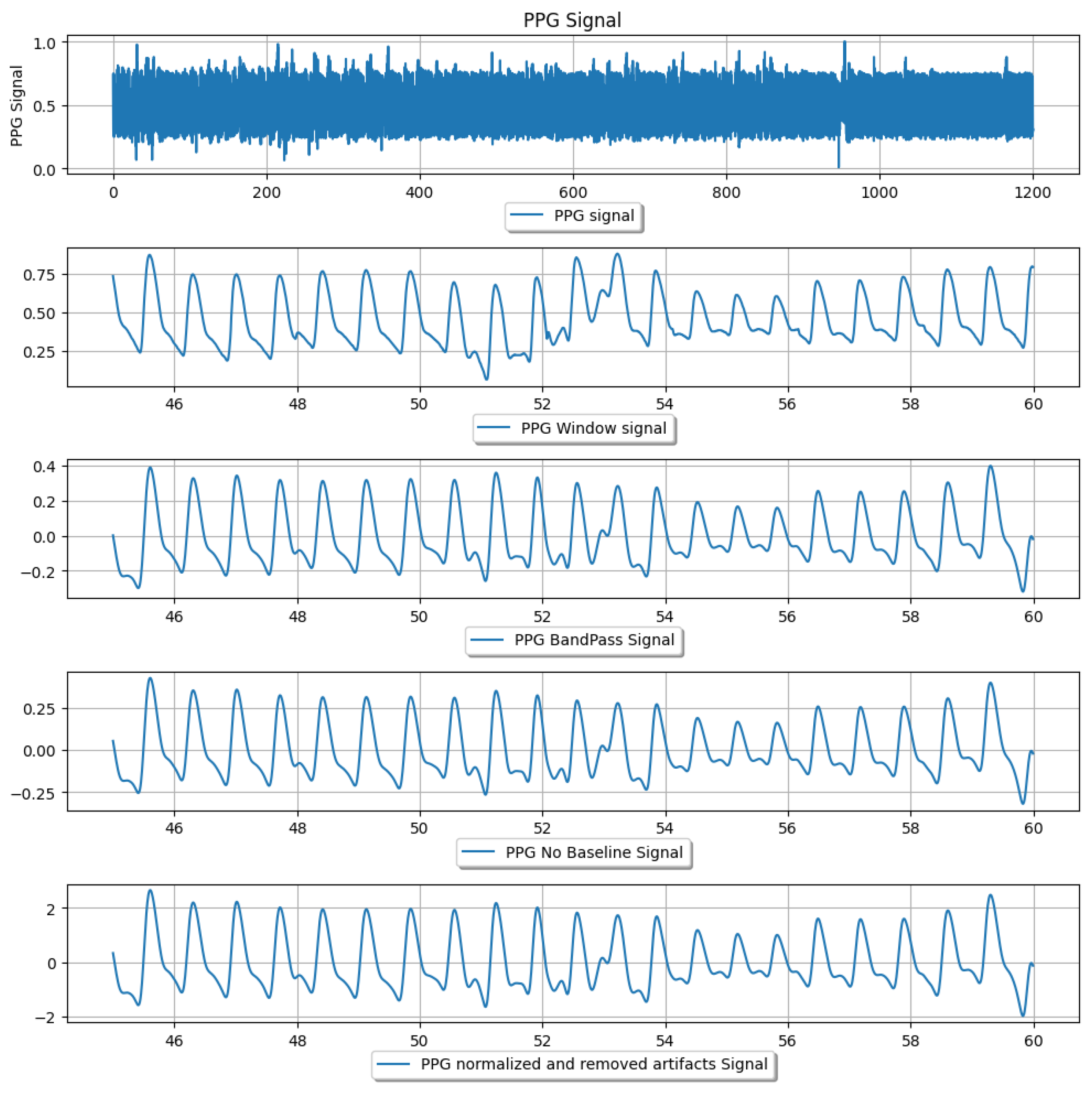

2.1. Dataset and Pre-Processing

2.2. Features Extraction

2.2.1. Signal-Based Features

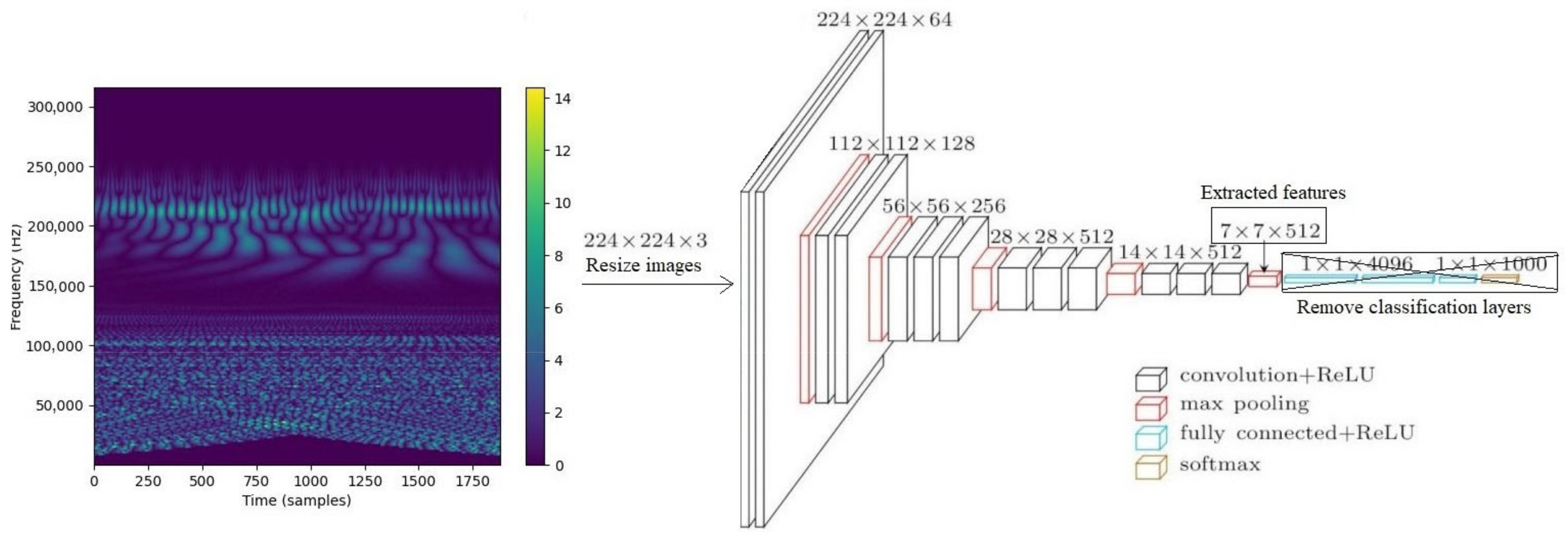

2.2.2. Wavelet Spectrogram-Based Features

2.2.3. CNN-Based Features from Wavelet Spectrogram Images

2.3. Classification

2.3.1. ML Models Applied to Extracted Features

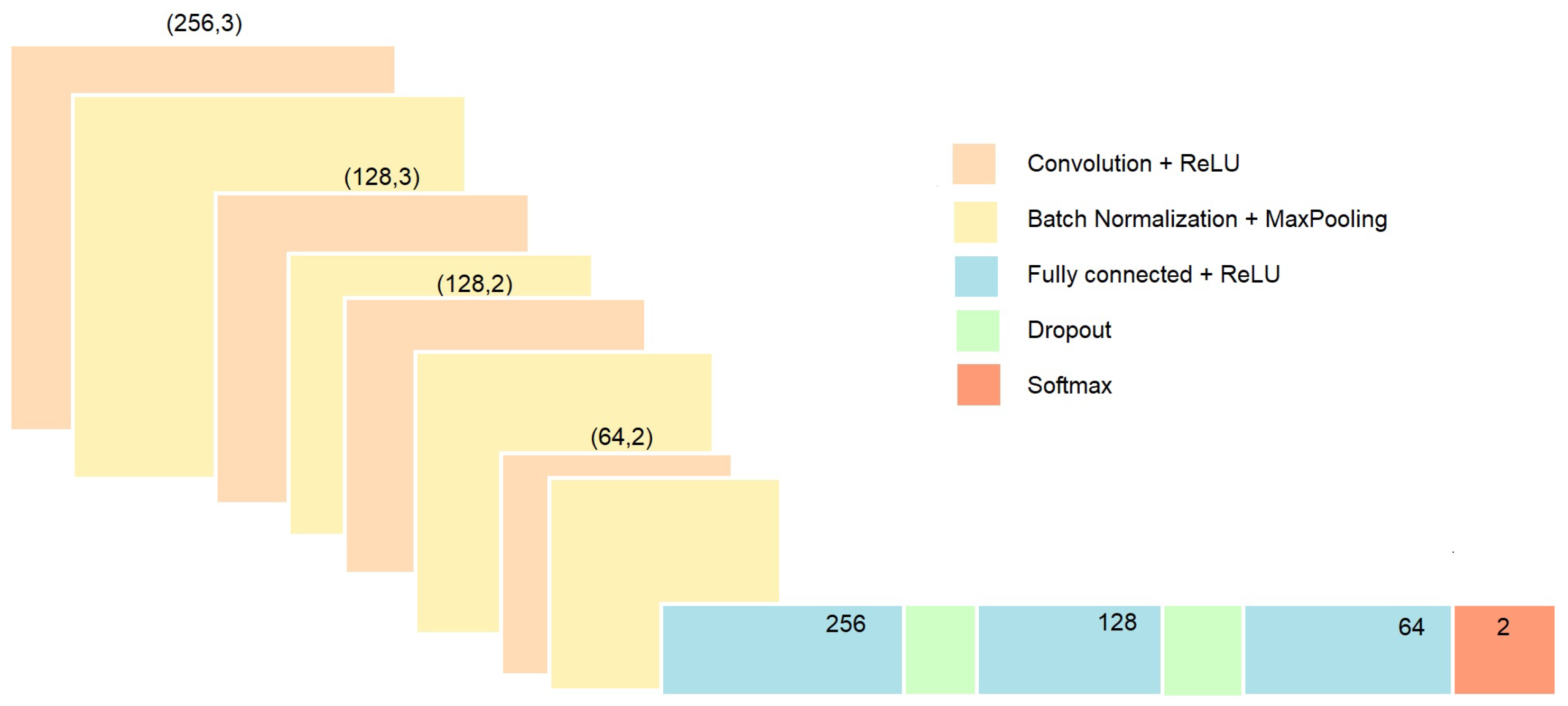

2.3.2. Deep Learning

2.4. Model Evaluation

3. Results

3.1. Option 1: ML Applied to Statistical Features

3.2. Option 2: ML Applied to Wavelet Spectrogram

3.3. Option 3: ML Applied to CNN-Based Wavelet Spectrogram Features

3.4. Option 4: Deep Learning on Signal Time Series Using a Custom CNN

3.5. Option 5: Transfer Learning on Spectrogram Images Using DenseNet121

3.6. Comparative Analysis of the Results

4. Conclusions and Discussion

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest


| Parameter | Description |
|---|---|
| Average Heart Rate | Indicates the overall heart rate level. |
| Heart Rate Standard Deviation | Captures heart rate variability over time. |
| Heart Rate Median | Represents the middle heart rate value, robust to outliers. |
| NN Interval Ratio | Measures irregularity in heart rate patterns, calculating the intervals greater than a threshold. |
| Root Mean Squared Successive Difference (RMSSD) | Reflects short-term heart rate variability, computing the RMSSD between consecutive NN intervals. |
| Low-Frequency to High-Frequency Power Ratio | Relates to autonomic nervous system activity based on the signal frequency spectrum. |
| Inflection Point Ratio (TPR) | Provides insights into waveform morphology. |
| Crest Time | Calculates time between the first peak and trough in the PPG signal. |
| Combined Peak-Rise and Fall Height | Captures pulse waveform shape. |
| Waveform Width | Measures the temporal extent of the pulse waveform. |
| Cross-correlation Coefficient | Evaluates similarity between consecutive pulse segments, providing information about consistency. |
| Adaptive Organization Index (AOI) | Quantifies adaptive organization in the PPG signal by measuring the changes in successive pulse segment differences. |
| Variance of Slope of Phase Difference | Characterizes variation in phase difference slope. |
| Spectral Entropy | Measures frequency spectrum complexity capturing the power distribution across different frequency bands. |
| Statistical Measures | |
| Mean Values | Reflects average energy distribution across different frequencies. |
| Variance Values | Captures energy distribution variability across frequencies. |
| Skewness Values | Measures asymmetry, revealing skewed frequency components. |
| Kurtosis Values | Characterizes energy distribution peakedness or flatness, showing sharp or broad frequency components. |
| Energy Distribution | |
| Energy Values | Represents total energy contribution of various frequency components, giving insight into spectral content. |
| Wavelet Coefficient Analysis | |
| Coefficient Mean | Captures average magnitude of spectral components. |
| Coefficient Variance | Indicates variability or spread of wavelet coefficients across frequencies. |
| Coefficient Skewness | Reflects asymmetry, showing presence of skewed frequency components. |
| Coefficient Kurtosis | Characterizes peakedness or flatness, revealing sharp or broad frequency components. |
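
Several of the features listed above have compact definitions. The following Python sketch illustrates four of them under stated assumptions: RMSSD over consecutive NN intervals; an NN interval ratio read here as the fraction of successive differences above a threshold (this pNN50-style reading and the 50 ms default are assumptions, not taken from the study); spectral entropy as the Shannon entropy of a normalised power spectrum; and the four distribution moments, which could be applied to any list of spectrogram or wavelet-coefficient values. All function names are hypothetical, and the code is not the authors' implementation.

```python
import math


def rmssd(nn_ms):
    """Root mean square of successive differences between NN intervals (ms)."""
    diffs = [b - a for a, b in zip(nn_ms, nn_ms[1:])]
    return math.sqrt(sum(d * d for d in diffs) / len(diffs))


def nn_ratio(nn_ms, threshold_ms=50.0):
    """Fraction of successive NN differences above threshold_ms.

    Assumption: a pNN50-style definition; the study may define it differently.
    """
    diffs = [abs(b - a) for a, b in zip(nn_ms, nn_ms[1:])]
    return sum(d > threshold_ms for d in diffs) / len(diffs)


def spectral_entropy(power):
    """Shannon entropy (bits) of a power spectrum normalised to sum to 1."""
    total = sum(power)
    probs = [p / total for p in power if p > 0]
    return -sum(p * math.log2(p) for p in probs)


def moments(values):
    """Mean, variance, skewness and excess kurtosis (population form).

    Assumes the values are not all equal, so the standard deviation is non-zero.
    """
    n = len(values)
    mean = sum(values) / n
    var = sum((v - mean) ** 2 for v in values) / n
    std = math.sqrt(var)
    skew = sum(((v - mean) / std) ** 3 for v in values) / n
    kurt = sum(((v - mean) / std) ** 4 for v in values) / n - 3.0
    return mean, var, skew, kurt


if __name__ == "__main__":
    nn = [800, 810, 790, 850, 800]  # illustrative NN intervals in ms
    print(rmssd(nn), nn_ratio(nn))
    print(spectral_entropy([1, 1, 1, 1]))
    print(moments([1, 2, 3, 4, 5]))
```

A flat spectrum maximises the entropy, while a single dominant frequency drives it towards zero, which is why the measure is useful for separating regular from irregular rhythms.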

| Option | Best Model | Accuracy Score | F1-Score | AUC |
|---|---|---|---|---|
| Option 1 | SVM | 0.9438 | 0.9437 | 0.9872 |
| Option 1 | Stack Model | 0.9438 | 0.9437 | 0.9872 |
| Option 2 | MLP | 0.9621 | 0.9620 | 0.9908 |
| Option 2 | Stack Model | 0.9707 | 0.9706 | 0.9974 |
| Option 3 | SVM | 0.9707 | 0.9707 | 0.9872 |
| Option 3 | Stack Model | 0.9768 | 0.9768 | 0.9967 |
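
The three metrics reported in the table above can be computed from labels and classifier scores. The sketch below is a minimal illustration, assuming binary labels (1 for AF, 0 for non-AF) and a binary F1 for the AF class; the averaging mode used in the study is not stated here, so this choice is an assumption. AUC is computed through the pairwise-ranking (Mann-Whitney) formulation. Function names are hypothetical.

```python
def accuracy(y_true, y_pred):
    """Fraction of predictions that match the true labels."""
    return sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)


def f1_binary(y_true, y_pred, positive=1):
    """F1-score for the positive class: harmonic mean of precision and recall."""
    tp = sum(t == positive and p == positive for t, p in zip(y_true, y_pred))
    fp = sum(t != positive and p == positive for t, p in zip(y_true, y_pred))
    fn = sum(t == positive and p != positive for t, p in zip(y_true, y_pred))
    if tp == 0:
        return 0.0
    precision = tp / (tp + fp)
    recall = tp / (tp + fn)
    return 2 * precision * recall / (precision + recall)


def auc(y_true, scores, positive=1):
    """Area under the ROC curve via pairwise ranking.

    Equals the probability that a random positive sample scores higher
    than a random negative one (ties count as one half).
    """
    pos = [s for t, s in zip(y_true, scores) if t == positive]
    neg = [s for t, s in zip(y_true, scores) if t != positive]
    wins = 0.0
    for sp in pos:
        for sn in neg:
            if sp > sn:
                wins += 1.0
            elif sp == sn:
                wins += 0.5
    return wins / (len(pos) * len(neg))


if __name__ == "__main__":
    y_true = [1, 1, 0, 0, 1, 0]          # illustrative labels
    y_pred = [1, 0, 0, 0, 1, 1]          # illustrative hard predictions
    scores = [0.9, 0.4, 0.3, 0.2, 0.8, 0.6]  # illustrative AF probabilities
    print(accuracy(y_true, y_pred), f1_binary(y_true, y_pred), auc(y_true, scores))
```

The pairwise AUC is quadratic in the number of samples, which is acceptable for a small illustration; a sort-based computation would be preferable for a full test set.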

| Option | Accuracy Score |
|---|---|
| Option 4 | 0.9462 |
| Option 5 | 0.9406 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Pedrosa-Rodriguez, A.; Camara, C.; Peris-Lopez, P. Leveraging IoT Devices for Atrial Fibrillation Detection: A Comprehensive Study of AI Techniques. Appl. Sci. 2024, 14, 8945. https://doi.org/10.3390/app14198945
