FRAMUX-EV: A Framework for Evaluating User Experience in Agile Software Development

Abstract

1. Introduction

2. Theoretical Background

2.1. User Experience (UX)

2.2. Agile Software Development

2.3. Related Work

3. Need for a UX Evaluation Framework

- Prioritization of design over evaluation: Design practices (user-centered design) are prioritized over evaluation practices (evaluation-centered evaluation). Thus, the team develops software primarily by considering what users want (functionalities) rather than how they want it (needs and goals) [26].

- Lack of early UX evaluation: In agile software development, it is believed that UX can (or should) be evaluated only in the final stages. Therefore, in the early stages, decisions are made without considering users’ needs [27].

4. Methodology

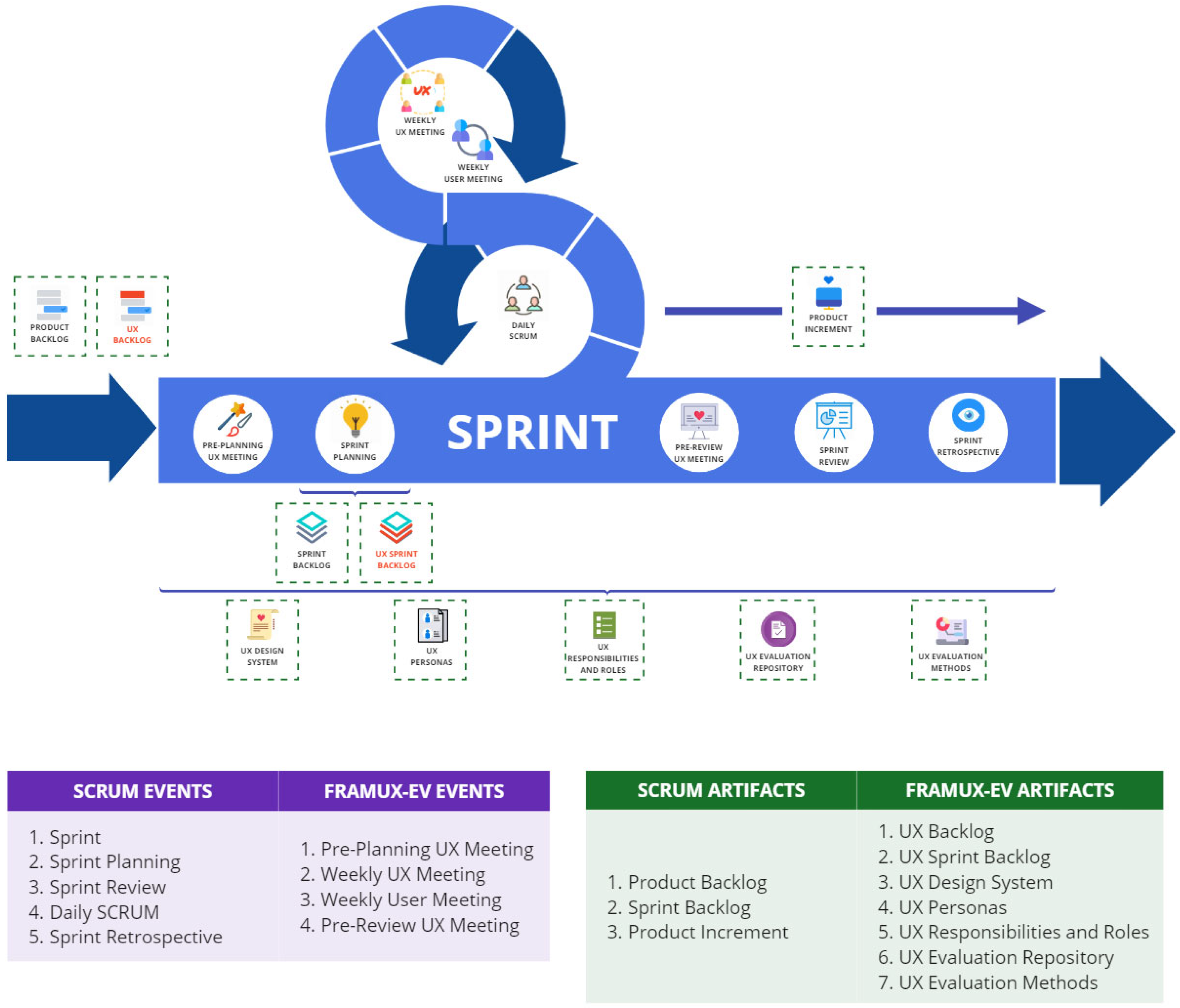

5. FRAMUX-EV: First Version

5.1. Inputs

- Systematic literature review: The systematic literature review provided frameworks or similar (e.g., methodologies, processes, or approaches), highlighting 23 UX evaluation methods, 56 problems/challenges, and 91 recommendations/practices, which were taken as a reference to propose new UX components [29].

- Interviews: The interviews with practitioners provided insight into the industry’s perspective, highlighting 9 UX evaluation methods, 25 problems/challenges, and 40 recommendations/practices experienced by UX roles and developers when developing software in agile projects [30].

5.2. UX Evaluation Methods

5.3. UX Artifacts

5.4. UX Events

6. Validating the Framework

6.1. Quantitative Results

6.1.1. Quantitative Results: UX Evaluation Methods

6.1.2. Quantitative Results: UX Artifacts

6.1.3. Quantitative Results: UX Events

6.2. Qualitative Results

6.2.1. Qualitative Results: UX Evaluation Methods

6.2.2. Qualitative Results: UX Artifacts

6.2.3. Qualitative Results: UX Events

7. Discussions

7.1. How to Use FRAMUX-EV

- Before the sprint planning, the team conducts a pre-planning UX meeting. This event aims to verify whether the prioritized UX work in the UX backlog is ready to be undertaken by the development team during the sprint.

- During the sprint planning, the Scrum team selects items from the product backlog to be developed during the sprint. Simultaneously, the UX tasks from the UX backlog are prioritized and integrated into the UX sprint backlog to ensure that the UX requirements are covered.

- The team holds a daily meeting to track the progress of sprint tasks. During this event, both developers and UX roles ensure that any blockers or impediments in the UX work are promptly resolved. If UX team members have questions about any aspect of their tasks, they can refer to the UX roles and responsibilities artifact.

- Once per week, a weekly UX meeting is held, where the UX team and developers discuss the progress of UX design, review updates to the UX components, and resolve issues related to the feasibility of UX designs. In this meeting, potential changes to the UX design system may be reviewed, and the team discusses the evolution of the UX work.

- Once per week, a weekly user meeting is held to evaluate UX work with users using some of the methods suggested in the UX evaluation methods artifact, discuss upcoming tasks, and obtain direct feedback on ongoing designs. The outcomes of these meetings are documented in the UX evaluation repository for subsequent analysis and refinement.

- Just before the sprint review, a pre-review UX meeting is conducted, where the UX and development teams validate whether the UX designs and functions implemented during the sprint meet the required standards and objectives.

- During the sprint review, the Scrum team presents the product increment, including both development and UX implementations. The UX team can demonstrate how the UX personas, UX design system, and UX evaluation repository guided the final design presented to the stakeholders.

- After the sprint review, the team holds a sprint retrospective to discuss what went well and what could be improved, focusing on both the development and the UX integration aspects. In this event, improvements to UX artifacts, UX events, or UX evaluation methods may be proposed.
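The order of events described above can be sketched as a small data structure. The following Python sketch is only illustrative: the `Event` class, the `sprint_schedule` function, and the assumption that a sprint is measured in whole weeks are hypothetical and not part of FRAMUX-EV. Daily meetings are collapsed into one entry per week for brevity.

```python
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class Event:
    """One event in a sprint (hypothetical helper type)."""
    name: str
    kind: str  # "scrum" for standard Scrum events, "ux" for FRAMUX-EV UX events


def sprint_schedule(weeks: int) -> List[Event]:
    """Return the events of one sprint in the order described in Section 7.1.

    Assumption: the sprint length is a whole number of weeks.
    """
    if weeks < 1:
        raise ValueError("a sprint needs at least one week")

    schedule = [
        Event("Pre-planning UX meeting", "ux"),
        Event("Sprint planning", "scrum"),
    ]
    for _ in range(weeks):
        # Daily meetings run throughout the week; one entry stands for all of them.
        schedule.append(Event("Daily meetings", "scrum"))
        schedule.append(Event("Weekly UX meeting", "ux"))
        schedule.append(Event("Weekly user meeting", "ux"))
    schedule += [
        Event("Pre-review UX meeting", "ux"),
        Event("Sprint review", "scrum"),
        Event("Sprint retrospective", "scrum"),
    ]
    return schedule


if __name__ == "__main__":
    for event in sprint_schedule(weeks=2):
        print(f"[{event.kind}] {event.name}")
```

Listing the events this way makes it easy to see how many UX events a team would add to a sprint of a given length before deciding whether the cadence is feasible.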

7.2. Comparison between FRAMUX-EV and Existing Proposals

7.3. Challenges in Applying FRAMUX-EV

7.4. Contributions

7.5. Limitations

7.6. Opportunities to Improve FRAMUX-EV

- Iteration 2: In this phase, the changes identified during this first iteration will be implemented to develop the second version of FRAMUX-EV. This version will need to be validated with UX practitioners by conducting two experiments: (1) an experiment focused on evaluating the specification and detailed content of UX artifacts and (2) an experiment focused on evaluating the specification and feasibility of UX events. Both experiments aim to validate that the proposal meets the industry standards and project needs. Based on these expert evaluations, the necessary refinements will be identified, which will lay the groundwork for the next iteration of the framework.

- Iteration 3: The findings from the second iteration will be applied to further refine the framework, leading to the development of the third version of FRAMUX-EV. This version will be tested in real-world projects with two case studies to validate its effectiveness and applicability. In each case study, feedback will be collected from UX and development roles to assess the effectiveness of integrating FRAMUX-EV in agile projects. The results of these validations will be used to obtain the final necessary adjustments, preparing the framework for its final iteration.

- Iteration 4: Finally, based on the feedback and improvements from the previous iteration, the necessary adjustments will be made to FRAMUX-EV to present its final version. This version will include all refinements identified during experiments in real-world projects and will represent a mature framework for evaluating and integrating UX into agile software development.

- Following these iterations, FRAMUX-EV will continuously evolve, ensuring the integration of UX evaluation and agile software development processes and providing more robust and effective results.

8. Conclusions and Future Work

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

- Beck, K.; Beedle, M.; Bennekum, A.V.; Cockburn, A.; Cunningham, W.; Fowler, M.; Grenning, J.; Highsmith, J.; Hunt, A.; Jeffries, R.; et al. Agile Manifesto. 2001. Available online: https://agilemanifesto.org/ (accessed on 1 September 2024).

- Felker, C.; Slamova, R.; Davis, J. Integrating UX with scrum in an undergraduate software development project. In Proceedings of the 43rd ACM Technical Symposium on Computer Science Education, Raleigh, NC, USA, 29 February–3 March 2012; pp. 301–306.

- de Oliveira Sousa, A.; Valentim, N.M.C. Prototyping Usability and User Experience: A Simple Technique to Agile Teams. In Proceedings of the XVIII Brazilian Symposium on Software Quality, SBQS’19, Fortaleza, Brazil, 28 October–1 November 2019; Association for Computing Machinery: New York, NY, USA, 2019; pp. 222–227.

- Kuusinen, K.; Väänänen-Vainio-Mattila, K. How to Make Agile UX Work More Efficient: Management and Sales Perspectives. In Proceedings of the 7th Nordic Conference on Human-Computer Interaction: Making Sense Through Design, NordiCHI ’12, Copenhagen, Denmark, 14–17 October 2012; Association for Computing Machinery: New York, NY, USA, 2012; pp. 139–148.

- Digital.ai. 16th State of Agile Report. 2022. Available online: https://info.digital.ai/rs/981-LQX-968/images/AR-SA-2022-16th-Annual-State-Of-Agile-Report.pdf (accessed on 1 September 2024).

- Lárusdóttir, M.K.; Cajander, Å.; Gulliksen, J. The Big Picture of UX is Missing in Scrum Projects. In Proceedings of the International Workshop on the Interplay between User Experience (UX) Evaluation and System Development (I-UxSED), Copenhagen, Denmark, 14 October 2012; pp. 49–54.

- Kikitamara, S.; Noviyanti, A.A. A Conceptual Model of User Experience in Scrum Practice. In Proceedings of the 2018 10th International Conference on Information Technology and Electrical Engineering (ICITEE), Xiamen, China, 7–8 December 2018; pp. 581–586.

- ISO 9241-210; 2010 Ergonomics of Human-System Interaction—Part 210: Human-Centred Design for Interactive Systems. ISO: Geneva, Switzerland, 2019. Available online: https://www.iso.org/standard/77520.html (accessed on 1 September 2024).

- Schulze, K.; Krömker, H. A framework to measure User eXperience of interactive online products. In Proceedings of the ACM International Conference on Internet Computing and Information Services, Washington, DC, USA, 17–18 September 2011.

- Roto, V.; Väänänen-Vainio-Mattila, K.; Law, E.; Vermeeren, A. User experience evaluation methods in product development (UXEM’09). In Human-Computer Interaction–INTERACT 2009: 12th IFIP TC 13 International Conference, Uppsala, Sweden, 24–28 August 2009; Proceedings, Part II 12; Springer: Berlin/Heidelberg, Germany, 2009; pp. 981–982.

- Experience Research Society. User Experience. Available online: https://experienceresearchsociety.org/ux/ (accessed on 9 December 2023).

- Krause, R. Accounting for User Research in Agile. 2021. Available online: https://www.nngroup.com/articles/user-research-agile/ (accessed on 9 December 2023).

- Persson, J.S.; Bruun, A.; Lárusdóttir, M.K.; Nielsen, P.A. Agile software development and UX design: A case study of integration by mutual adjustment. Inf. Softw. Technol. 2022, 152, 107059.

- Agile Alliance. What is Agile? | Agile 101 | Agile Alliance. Available online: https://www.agilealliance.org/agile101/ (accessed on 1 June 2022).

- Sommerville, I. Software Engineering, 9th ed.; Pearson Education India: Chennai, India, 2011.

- Schwaber, K.; Sutherland, J. The 2020 Scrum Guide. 2020. Available online: https://scrumguides.org/scrum-guide.html (accessed on 9 December 2023).

- Maguire, M. Using human factors standards to support user experience and agile design. In International Conference on Universal Access in Human-Computer Interaction; Springer: Berlin/Heidelberg, Germany, 2013; pp. 185–194.

- Pillay, N.; Wing, J. Agile UX: Integrating good UX development practices in Agile. In Proceedings of the 2019 Conference on Information Communications Technology and Society (ICTAS), Durban, South Africa, 6–8 March 2019; pp. 1–6.

- Weber, B.; Müller, A.; Miclau, C. Methodical Framework and Case Study for Empowering Customer-Centricity in an E-Commerce Agency–The Experience Logic as Key Component of User Experience Practices Within Agile IT Project Teams. Lect. Notes Comput. Sci. Incl. Subser. Lect. Notes Artif. Intell. Lect. Notes Bioinform. 2021, 12783, 156–177.

- Argumanis, D.; Moquillaza, A.; Paz, F. A Framework Based on UCD and Scrum for the Software Development Process. Lect. Notes Comput. Sci. Incl. Subser. Lect. Notes Artif. Intell. Lect. Notes Bioinform. 2021, 12779, 15–33.

- Gardner, T.N.; Aktunc, O. Integrating Usability into the Agile Software Development Life Cycle Using User Experience Practices. In ASEE Gulf Southwest Annual Conference, ASEE Conferences. 2022. Available online: https://peer.asee.org/39189 (accessed on 1 June 2022).

- Atlassian. What is Agile? | Atlassian. Available online: https://www.atlassian.com/agile (accessed on 1 June 2022).

- Vermeeren, A.P.O.S.; Law, E.L.C.; Roto, V.; Obrist, M.; Hoonhout, J.; Väänänen-Vainio-Mattila, K. User experience evaluation methods: Current state and development needs. In Proceedings of the Nordic 2010 Extending Boundaries—Proceedings of the 6th Nordic Conference on Human-Computer Interaction, Reykjavik, Iceland, 16–20 October 2010; pp. 521–530.

- Blomkvist, S. Towards a Model for Bridging Agile Development and User-Centered Design; Springer: Berlin/Heidelberg, Germany, 2005; pp. 219–244.

- Salah, D.; Paige, R.F.; Cairns, P. A systematic literature review for Agile development processes and user centred design integration. In Proceedings of the 18th International Conference on Evaluation and Assessment in Software Engineering, New York, NY, USA, 13–14 May 2014; pp. 1–10.

- Isomursu, M.; Sirotkin, A.; Voltti, P.; Halonen, M. User experience design goes agile in lean transformation—A case study. In Proceedings of the 2012 Agile Conference, Dallas, TX, USA, 13–17 August 2012; pp. 1–10.

- Sy, D.; Miller, L. Optimizing Agile user-centred design. In Proceedings of the Conference on Human Factors in Computing Systems, Florence, Italy, 5–10 April 2008; pp. 3897–3900.

- Chamberlain, S.; Sharp, H.; Maiden, N. Towards a framework for integrating agile development and user-centred design. Lect. Notes Comput. Sci. Incl. Subser. Lect. Notes Artif. Intell. Lect. Notes Bioinform. 2006, 4044, 143–153.

- Rojas, L.F.; Quiñones, D. How to Evaluate the User Experience in Agile Software Development: A Systematic Literature Review. Submitt. J. Under Rev. 2024.

- Rojas, L.F.; Quiñones, D.; Cubillos, C. Exploring practitioners’ perspective on user experience and agile software development. In Proceedings of the International Conference on Industry Science and Computer Sciences Innovation, Porto, Portugal, 29–31 October 2024.

- Jordan, P. Designing Pleasurable Products: An Introduction to the New Human Factors, 1st ed.; CRC Press: London, UK, 2000.

- Nielsen, J.; Molich, R. Heuristic evaluation of user interfaces. In Proceedings of the SIGCHI Conference on Human Factors in Computing Systems, Washington, DC, USA, 1–5 April 1990; pp. 249–256.

- Grace, E. Guerrilla Usability Testing: How to Introduce It in Your Next UX Project—Usability Geek. Available online: https://usabilitygeek.com/guerrilla-usability-testing-how-to/ (accessed on 1 July 2022).

- Medlock, M.C.; Wixon, D.; Terrano, M.; Romero, R.; Fulton, B. Using the RITE method to improve products: A definition and a case study. Usability Prof. Assoc. 2002, 51, 1562338474–1963813932.

- Nielsen, J. Putting A/B Testing in Its Place. August 2005. Available online: https://www.nngroup.com/articles/putting-ab-testing-in-its-place/ (accessed on 1 July 2022).

- Nielsen, J. Summary of Usability Inspection Methods. 1994. Available online: https://www.nngroup.com/articles/summary-of-usability-inspection-methods/ (accessed on 1 July 2022).

- Brooke, J. SUS-A quick and dirty usability scale. Usability Eval. Ind. 1996, 189, 4–7.

| Authors | Type of Study | Stages | UX Evaluation Methods | UX Artifacts or UX Events |
|---|---|---|---|---|
| Felker et al. [2] | Integration of elements to Scrum | 1. Scrum events | 1. Paper prototypes 2. Formative UX evaluation 3. RITE method 4. Brief-regular tests | Not mentioned |
| Maguire [17] | Integration of elements to Human-Centered Design | 1. Context of use 2. User and organizational requirements 3. Design & prototype 4. Evaluation of designs | 1. Heuristic evaluation 2. User walkthroughs 3. Labs tests/field trials | Not mentioned |
| Pillay and Wing [18] | Integration of elements to Scrum | 1. Sprint 0 2. Scrum events 3. A UX cycle embedded in the sprint | Not mentioned | UX event: 1. Lean UX cycle |
| Weber et al. [19] | Integration of elements to Scrum | 1. Phase 1 2. Phase 2 3. Phase 3 4. Phase 4 | 1. 5-s test 2. (Un)moderated usability test 3. Cognitive walkthrough 4. Card sorting 5. Eye tracking | UX artifacts: 1. Documented ‘lessons learned’ 2. User story map |
| Argumanis et al. [20] | Integration of elements to Scrum | 1. Initiation Phase 2. Planning Phase 3. Implementation phase | 1. Design Evaluation 2. User Test (Thinking aloud) 3. Pair Design | Not mentioned |
| Gardner and Aktunc [21] | Sequential and/or iterative activities for agile in general | 1. Understand 2. Ideate 3. Decide 4. Prototype 5. Test | 1. User testing | Not mentioned |

| UX Evaluation Method | Definition |
|---|---|
| Usability/user testing | Participants are asked to verbalize the thoughts that they have when experiencing a product or product concept. Where the item under evaluation is a finished product or an interactive prototype, participants may be set some specific tasks to do or may be given the chance for some free exploration of the product [31]. |
| Heuristic evaluation | An inspection method for finding potential usability/UX problems in the design of a user interface. A small group of usability experts judges a user interface to check whether it complies with the principles of usability design regarding certain heuristics [32]. |
| Evaluation with mockups or prototypes | It consists of evaluating a mock-up or prototype by discussing it with a user and asking them questions about it. |
| Guerrilla testing | “Guerrilla usability testing is a way to evaluate how effective an interface is by testing out its visual design, functionality and general message on its intended audience and capturing their responses. What makes guerrilla usability testing unique is that participants are not recruited in advance. Instead, members of the public are approached by those conducting the study during live intercepts in cafés, libraries, and malls, or in any other natural environment” [33]. |
| RITE method | “A RITE test is very similar to a ‘traditional’ usability test. The usability engineer and team must define a target population for testing, schedule participants to come into the lab, decide on how the users behaviors will be measured, construct a test script and have participants engage in a verbal protocol (e.g., think aloud). RITE differs from a ‘traditional’ usability test by emphasizing extremely rapid changes and verification of the effectiveness of these changes” [34]. |
| A/B testing | “Unleash two different versions of a design on the world and see which performs the best” [35]. |
| Pluralistic walkthrough | “Uses group meetings where users, developers, and human factors people step through a scenario, discussing each dialogue element” [36]. |
| System usability scale (SUS) | An instrument used to measure usability perception. It is composed of 10 Likert-scale questions and produces a score from 0 to 100 where individual item scores are not significant on their own [37]. |
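For illustration, the standard SUS scoring procedure from Brooke [37] can be sketched in Python. The function name `sus_score` is hypothetical. The sketch assumes responses on a 1 to 5 scale, given in questionnaire order: odd-numbered items contribute the response minus 1, even-numbered items contribute 5 minus the response, and the sum is multiplied by 2.5 to obtain a score from 0 to 100.

```python
from typing import Sequence


def sus_score(responses: Sequence[int]) -> float:
    """Compute a SUS score (0 to 100) from ten responses on a 1-5 scale.

    responses[0] is the answer to item 1, responses[9] to item 10.
    """
    if len(responses) != 10:
        raise ValueError("SUS requires exactly 10 responses")
    if any(r not in (1, 2, 3, 4, 5) for r in responses):
        raise ValueError("each response must be an integer from 1 to 5")

    total = 0
    for item_number, response in enumerate(responses, start=1):
        if item_number % 2 == 1:
            # Odd-numbered (positively worded) items: response minus 1.
            total += response - 1
        else:
            # Even-numbered (negatively worded) items: 5 minus response.
            total += 5 - response
    # Scale the 0-40 sum to the 0-100 range.
    return total * 2.5


if __name__ == "__main__":
    print(sus_score([4, 2, 5, 1, 4, 2, 4, 2, 5, 1]))
```

Because only the overall score is meaningful, the function returns a single number rather than per-item contributions.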

| UX Artifact | Description | How to Use It |
|---|---|---|
| UX evaluation methods | UX guidelines that include a list of all recommended methods for evaluating UX in agile projects. It includes a definition of each method, a detailed explanation of their application, steps to follow, expected results, and recommendations on when to use them. | 1. Choose UX evaluation methods to use in your project. 2. Select the most appropriate UX evaluation method for each experiment. 3. Follow the step-by-step process defined for each UX evaluation method. 4. Use the results to inform UX decisions. |
| UX design system | UX guidelines that contain principles, reusable components, interactions, color palettes, and typography defined by the UX team to incorporate designs. | 1. Create a comprehensive guide of reusable components and design standards. 2. Share with the development team to ensure the feasibility of components. 3. Update regularly to incorporate new patterns or design components. 4. Use as a reference during design and development to ensure alignment and consistency. |
| UX personas | UX guidelines that include the personas created by the UX team. It includes a template for creating personas, recommendations, and best practices, and a database with contact information of representative users. | 1. Collect user data through UX research. 2. Create personas that capture relevant information of representative users. 3. Use personas during design discussions to ensure the user’s perspective is considered. 4. Update personas when project goals evolve or new user segments are identified. |
| UX roles and responsibilities | UX guidelines that contain all the UX work that should be done during the development of a project. It includes a detailed description of the activities to be performed, the estimated time required, the UX role that has the responsibility to perform them, and recommendations on the activities. | 1. Select the UX task to be carried out during the project. 2. Assign UX tasks to different UX roles. 3. Ensure that each UX role understands the specific responsibilities for each task. 4. Consult the artifact for additional information on the assigned UX tasks. |
| UX evaluation repository | UX document that includes the results and conclusions of UX evaluations. It includes details about the type of evaluation, its objective, questions/activities to be performed, number of participants, results obtained (anonymous), and conclusions. | 1. Document the results and findings after each UX evaluation. 2. Store detailed reports of each UX evaluation, including key metrics and outcomes. 3. Review the repository to guide future design decisions. 4. Use as a reference for UX improvements and trend analysis over time. |
| UX backlog | UX artifact that contains a prioritized list of UX work required to complete an agile project (i.e., research, design, and evaluation), including UX acceptance criteria, definition of ready, and definition of done. If the project does not have a UX team, UX acceptance criteria, definition of ready, and definition of done should be included for each user story. | 1. List all UX tasks required for the project (research, design, evaluation). 2. Assign priorities based on user needs and project objectives. 3. Review and update the UX backlog at each sprint to consider new priorities and tasks. 4. Ensure UX tasks are aligned with the development backlog to maintain focus on UX. |
| UX sprint backlog | UX artifact that includes the UX sprint goal, the UX backlog items selected for the sprint, and the plan for delivering them. If the project does not have a UX team, the UX work should be included in the sprint backlog. | 1. Select priority UX tasks from the UX backlog for the sprint. 2. Assign deadlines and responsible members to each of the selected tasks. 3. Update the status of UX tasks during daily meetings to track progress. 4. Review the completion of UX tasks during the sprint retrospective and adjust future planning accordingly. |
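As a rough illustration of how the UX backlog and the UX sprint backlog relate, the Python sketch below models a backlog item and the selection of items for a sprint. Everything in it is an assumption made for illustration: the `UXBacklogItem` fields, the `plan_ux_sprint` function, the convention that a lower number means a higher priority, and the `max_items` limit are hypothetical and are not prescribed by FRAMUX-EV.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class UXBacklogItem:
    """One entry in the UX backlog (hypothetical structure)."""
    title: str
    kind: str  # "research", "design", or "evaluation"
    priority: int  # assumed convention: lower number = higher priority
    acceptance_criteria: List[str] = field(default_factory=list)
    ready: bool = False  # item meets the team's definition of ready
    done: bool = False   # item meets the team's definition of done


def plan_ux_sprint(backlog: List[UXBacklogItem], max_items: int) -> List[UXBacklogItem]:
    """Pick the highest-priority items that are ready and not yet done."""
    candidates = [item for item in backlog if item.ready and not item.done]
    return sorted(candidates, key=lambda item: item.priority)[:max_items]


if __name__ == "__main__":
    backlog = [
        UXBacklogItem("Interview five users", "research", priority=2, ready=True),
        UXBacklogItem("Checkout flow mockups", "design", priority=1, ready=True),
        UXBacklogItem("SUS survey on onboarding", "evaluation", priority=3),
    ]
    for item in plan_ux_sprint(backlog, max_items=2):
        print(item.priority, item.title)
```

Filtering on `ready` mirrors the purpose of the pre-planning UX meeting, which checks that prioritized UX work can actually be undertaken in the sprint.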

| UX Event | Description | How to Conduct It |
|---|---|---|
| Pre-planning UX meeting | Meetings between the development team and the UX team to validate and/or refine designs prior to sprint planning. | 1. Prepare detailed designs. 2. Present UX work for validation and feedback. 3. Address any feasibility concerns or technical blockers with the developers. 4. Confirm that UX acceptance criteria, definition of ready, and definition of done for each user story are clearly defined and agreed upon. |
| Pre-review UX meeting | Meetings between the development team and the UX team to validate and/or refine designs prior to sprint review. | 1. Review all UX work and ensure it meets UX acceptance criteria. 2. Identify any remaining issues. 3. Collaborate with developers to make final adjustments to the designs based on the review. 4. Confirm all designs developed are ready for the sprint review. |
| Weekly UX meeting | Weekly meetings between the development team and the UX team to discuss UX issues. For example, the feasibility of designs, changes to the UX component guide, design inquiries, or developer solutions for minor bugs. | 1. Document ongoing design issues or questions. 2. Analyze the feasibility of upcoming UX tasks with developers. 3. Discuss updates to the UX component guide. 4. Collaborate with the team to resolve minor design or technical problems. |
| Weekly user meeting | Weekly meetings between users and the UX team (development team, product owner, stakeholders, and managers are optional) to (1) ask questions about designs, user stories, priorities, etc. and (2) evaluate the proposed designs. | 1. Select the UX evaluation methods to use. 2. Schedule a meeting with users. 3. Evaluate the UX work. 4. Ask questions about future UX work. |

| ID | Educational Level | Years of Experience | Roles Undertaken |
|---|---|---|---|
| P1 | Master | 12 | UX Writer |
| P2 | Master | 15 | Consulting and Design Director, UX Researcher, UX Consultant, UX Lead |
| P3 | College education | 2 | UX Researcher, Conversational UX |
| P4 | College education | 12 | UX Writer |
| P5 | College education | 1 | UX Researcher, UX Designer |
| P6 | Master | 12 | Project Leader, Scrum Master |
| P7 | Master | 17 | Project Leader, Lead UX |
| P8 | College education | 1 | UX Researcher, UX Designer |
| P9 | Doctorate | 10 | UX Researcher, Project Leader, Academic Professor |
| P10 | College education | 3 | Full-Stack Developer |
| P11 | Master | 17 | UX Researcher, UX Writer, Back-End Developer, Full-Stack Developer, Product Owner, Scrum Master, UI Designer, Product Manager |
| P12 | College education | 5 | Project Engineer, Manager, Developer |
| P13 | College education | 8 | UX/UI Designer |
| P14 | Master | 16 | Development Team Leader, Scrum Master |
| P15 | Post Doctorate | 15 | Full-Stack Developer, UX Designer, Scrum Master, Academic Professor |
| P16 | Doctorate | 20 | Full-Stack Developer, Front-End Developer, Creative Technologist, Software Engineer 2 |
| P17 | Master | 16 | Scrum Master, Agile Coach |
| P18 | College education | 5 | UX Researcher |
| P19 | College education | 16 | Lead UX, UX Designer |
| P20 | College education | 2 | Project Manager, Product Owner, Scrum Master, QA Producer |
| P21 | College education | 2 | UX Researcher, UX/UI Designer |
| P22 | Master | 14 | Agile Delivery Lead, Developer, Scrum Master, Agile Team Facilitator |
| P23 | College education | 1 | Product Owner |
| P24 | Post Doctorate | 22 | Product Owner, Academic Professor |
| P25 | Master | 10 | UX Researcher |
| P26 | College education | 1 | UX Designer, IT Auditor |
| P27 | College education | 1 | Front-End Developer |
| P28 | College education | 15 | Senior Software Engineer, Lead Front-End Engineer, Full-Stack Developer, UX Designer, Product Manager |
| P29 | College education | 1 | Android Front-End Developer |
| P30 | Master | 4 | iOS Developer |
| P31 | College education | 8 | Product Owner |
| P32 | Master | 5 | UX Researcher |
| P33 | College education | 8 | Senior Product Designer, UX/UI Designer, Product Designer |
| P34 | College education | 16 | Developer, Product Owner, UI Designer |

| UX Evaluation Method | D1 Usefulness (Mean) | D1 Usefulness (SD) | D2 Ease of Integration (Mean) | D2 Ease of Integration (SD) |
|---|---|---|---|---|
| Usability/user test | 4.79 | 0.41 | 3.65 | 0.98 |
| Heuristic evaluation | 3.82 | 0.83 | 3.53 | 0.99 |
| Evaluation with mockups or prototypes | 4.26 | 0.96 | 4.12 | 0.73 |
| Guerrilla testing | 3.97 | 1.03 | 3.35 | 0.92 |
| RITE method | 3.50 | 0.79 | 2.85 | 0.99 |
| A/B testing | 3.97 | 1.09 | 3.38 | 1.10 |
| Pluralistic walkthrough | 3.35 | 1.04 | 2.91 | 1.00 |
| System usability scale | 3.35 | 0.88 | 3.38 | 0.65 |
| Mean | 3.88 | | 3.40 | |
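The summary row of the table above can be reproduced from the per-method means. The short Python sketch below is an illustration only; the variable names are hypothetical, and the values are copied from the table. It also picks out the method rated most useful and the one rated hardest to integrate.

```python
from statistics import mean

# (D1 usefulness mean, D2 ease-of-integration mean) per method, copied from the table.
ratings = {
    "Usability/user test": (4.79, 3.65),
    "Heuristic evaluation": (3.82, 3.53),
    "Evaluation with mockups or prototypes": (4.26, 4.12),
    "Guerrilla testing": (3.97, 3.35),
    "RITE method": (3.50, 2.85),
    "A/B testing": (3.97, 3.38),
    "Pluralistic walkthrough": (3.35, 2.91),
    "System usability scale": (3.35, 3.38),
}

# Unweighted mean of the per-method means, rounded to two decimals as in the table.
usefulness_mean = round(mean(u for u, _ in ratings.values()), 2)
ease_mean = round(mean(e for _, e in ratings.values()), 2)

# Method with the highest usefulness and the lowest ease of integration.
most_useful = max(ratings, key=lambda method: ratings[method][0])
hardest_to_integrate = min(ratings, key=lambda method: ratings[method][1])

if __name__ == "__main__":
    print(f"D1 mean: {usefulness_mean:.2f}, D2 mean: {ease_mean:.2f}")
    print(f"Most useful: {most_useful}")
    print(f"Hardest to integrate: {hardest_to_integrate}")
```

The computed values match the reported summary row (3.88 for usefulness and 3.40 for ease of integration).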

| UX Artifact | D1 Usefulness (Mean) | D1 Usefulness (SD) | D2 Ease of Integration (Mean) | D2 Ease of Integration (SD) |
|---|---|---|---|---|
| UX evaluation methods | 4.15 | 0.74 | 3.85 | 0.86 |
| UX design system | 4.12 | 1.07 | 3.74 | 0.79 |
| UX persona | 3.82 | 0.97 | 3.50 | 0.93 |
| UX responsibilities and roles | 3.29 | 1.14 | 3.91 | 0.79 |
| UX evaluation repository | 4.18 | 0.80 | 3.59 | 0.78 |
| UX backlog | 4.26 | 0.90 | 3.26 | 0.90 |
| UX sprint backlog | 4.12 | 1.01 | 3.59 | 0.86 |
| Mean | 3.99 | | 3.63 | |

| UX Event | D1 Usefulness (Mean) | D1 Usefulness (SD) | D2 Ease of Integration (Mean) | D2 Ease of Integration (SD) |
|---|---|---|---|---|
| Pre-planning UX meeting | 4.09 | 1.00 | 3.94 | 0.95 |
| Pre-review UX meeting | 3.85 | 1.10 | 3.97 | 0.87 |
| Weekly UX meeting | 4.41 | 0.74 | 3.85 | 1.08 |
| Weekly user meeting | 3.85 | 1.02 | 2.35 | 1.07 |
| Mean | 4.05 | | 3.53 | |

| Participant | Additional Comments |
|---|---|
| P2 | “It would be necessary to clarify the methodology you are thinking about for agile software development and what kind of teams you are going to have. The methods will eventually depend on that”. |
| P3 | “(Evaluation methods) must be used at the right time, always proportional to the problem to be addressed”. |
| P4 | “RITE is complex because you are improving from one opinion/experience, without considering more user observations to make a total improvement”. |
| P11 | “Each method is related to a stage and what you want to evaluate, not all of them work with everything”. |
| P12 | “A method based on guiding and supporting proposals is more effective because it helps to mitigate disagreements among the users”. |
| P15 | “I find this list quite interesting and complete. They are essential methods with which it is possible to conduct a complete evaluation of the usability and therefore of the user experience”. |
| P17 | “Evaluating mockups does not let you know what users do, but what they could do. They are useful for providing input to the development team”. |
| P19 | “Complicated question, as it depends on the type of system/flow/design you need to evaluate the method to use, but the user testing, evaluation of mockups or prototypes, or user interviews are effective and easy to estimate in time as a task”. |
| P23 | “I believe that the methods that work best are those that make the user interact directly with the implemented design”. |
| P24 | “(The evaluation methods mentioned) provides an excellent roadmap”. |
| P25 | “I believe that an appropriate combination of some of these can provide valuable information for the evaluation”. |
| P32 | “I consider that the most complex thing to do is the heuristic evaluations because of the time involved and that they do not go with any agile methodology”. |
| P34 | “Of all that exists, we use very little, which is bad for the final product”. |

| UX Artifact | Additional Comments | Future Actions |
|---|---|---|
| UX evaluation methods | - “I recommend performing evaluations that involve UX roles and developers together to somehow relate and involve them so they can understand the results together and better understand the design constraints and possibilities”. (P15) | The expert’s comment will not be considered. A method has already been proposed that focuses on evaluation by involving both UX roles and developers (pluralistic walkthrough). We also include methods that can be used independently by either UX roles or developers. |
| UX design system | - “There was a lack of consideration of UI content inputs”. (P4) - “The design system is a necessary tool in every project, but it will depend on its size if it should be taken as a project within an agile project”. (P19) | The comments of the experts will be considered. The UI components will be specified in greater detail in the final version. Additionally, we will include a consideration for each artifact to indicate that, depending on the team’s capacity, these could be specified in more or less detail. |
| UX personas | - “I believe that the artifact related to UX personas is unnecessary, as user personas are developed based on interviews with actual users. Predefined archetypes may not accurately reflect the specific users we are working with”. (P5) | The expert’s comment will not be considered. At no point did we indicate that archetypes or personas would be predefined. We agree that these should be created using UX research methods. |
| UX roles and responsibilities | - “The roles and responsibilities artifact may not be very useful”. (P14) | The expert’s comment will not be considered since the comment was made only once. |
| UX evaluation repository | - “Evaluation repositories are useless, but because people do not have time in the work process to review and contemplate them”. (P2) | The expert’s comment will not be considered since the comment was made only once. |
| UX backlog | - “We initially maintained a separate UX backlog but encountered two issues: (1) the UX work wasn’t adequately visualized, and (2) the development team often diverged and handled the UX tasks independently. Therefore, I recommend against keeping separate backlogs. Now, all work is consolidated into a single backlog”. (P3) - “Both the UX backlog and the UX sprint backlog could be included in the general backlog and sprint backlog of the project, they just need to be well specified to avoid confusion”. (P23) | The recommendations and comments of the experts will not be considered. We have already included a consideration indicating that if the project does not have a UX team, UX acceptance criteria, definition of ready, and definition of done for each user story should be included. |
| UX sprint backlog | - “Both the UX backlog and the UX sprint backlog could be included in the general backlog and sprint backlog of the project, they just need to be well specified to avoid confusion”. (P23) | The expert’s comment will not be considered since the comment was made only once. We have already included a consideration indicating that if the project does not have a UX team, UX acceptance criteria, definition of ready, and definition of done for each user story should be included. |

| UX Event | Additional Comments | Future Actions |

|---|---|---|

| Weekly user meeting | - “The weekly user meeting seems unnecessary to me”. (P4) - “The meeting with users would not be weekly”. (P20) - “Testing with users takes time, so I do not consider that doing it weekly would bring many benefits or differences because if you consider the time in which users test, analyze the information and make changes could take more than a week. So, I think that testing once a month or after a certain number of changes in the interface would be more enriching”. (P26) - “Given the time we have for the sprint we don’t get to have weekly meetings with users”. (P32) | The recommendations and comments of the experts will be considered. We will change the name of the event to “User meeting”. In addition, we will define the ideal frequency of the event as once every one or two sprints. Finally, we will include a consideration that, instead of using weeks, the event could be held after a certain volume of work has been completed. |

| Pre-review UX meeting | - “I don’t see the sense of a meeting with UX before the sprint review”. (P17) - “The UX meeting before the review can be useful if there was no communication during the development to explain how it works before showing it in the review. But if there is constant communication, it may be unnecessary”. (P20) - “While it is always useful to hold meetings, holding them just before the review may not be the best time in my personal opinion”. (P30) | Some recommendations and comments from the experts will be considered. We will keep the event since only two comments suggest that it could be unnecessary. In addition, we will specify that the event should ideally be held one or two days before the sprint review. |

| Pre-planning UX meeting | - “If the backlog is defined from the beginning and is kept in a static way, this event is very unnecessary”. (P20) | The expert’s comment will not be considered. In agile environments, the backlog is an artifact that must always be refined and updated, never remaining static. |

| Weekly UX meeting | - “Perhaps a biweekly UX meeting would be appropriate to keep pace with the development of the sprint and reduce costs”. (P16) | The expert’s comment will be considered. We will change the name of the event to “UX meeting”. In addition, we will define the ideal frequency of the event as once every one or two weeks. |

| Scenario | 1 | 2 | 3 |

|---|---|---|---|

| Description of roles | No dedicated UX roles. Developers handle basic UX evaluation alongside development tasks. | One UX role is part of the development team (three developers + one UX). The UX role collaborates closely with the developers on UX work. | Three UX roles are part of the development team (six developers + three UX). The UX team works in close collaboration with developers, sharing UX responsibilities across multiple areas. |

| Which artifacts to use and how to use them | 1. UX evaluation methods: Developers use it to learn about UX evaluation methods that do not require the involvement of a dedicated UX role. 2. UX backlog: Developers manage UX tasks within the same backlog as development work. 3. UX sprint backlog: Developers plan UX tasks within the same sprint backlog as development work. | 1. UX evaluation methods: The UX role uses it to identify appropriate UX evaluation methods based on available time and resources. 2. UX design system: The UX role uses it to define a basic design system with reusable components and interactions. 3. UX backlog: The UX role is responsible for updating the UX-related tasks, which are integrated into the general backlog. 4. UX sprint backlog: The UX role is responsible for tracking the UX tasks for the sprint, which are integrated into the general sprint backlog. | 1. UX evaluation methods: The UX team uses it to identify appropriate UX evaluation methods based on available time and resources. 2. UX roles and responsibilities: The UX team uses it to clearly define the responsibilities of each member to ensure task completion and alignment with project objectives. 3. UX persona: The UX team uses it to create the personas based on research. Contact information for representative users is maintained and regularly updated. 4. UX design system: The UX team uses it to define a detailed system with reusable components, interaction patterns, and style guides. 5. UX evaluation repository: The UX team uses it to store and reference a wide range of results and conclusions from UX evaluations. 6. UX backlog: The UX team is responsible for detailing, prioritizing, and updating UX tasks, which are maintained in a dedicated UX backlog. 7. UX sprint backlog: The UX team is responsible for tracking and managing the UX tasks and goals for the sprint, which are included in a dedicated UX sprint backlog. |

| Which events to conduct and how to conduct them | 1. Weekly user meeting: Developers meet some users to conduct basic UX evaluation. | 1. Weekly user meeting: The UX role conducts user meetings to collect feedback. 2. Weekly UX meeting: The UX role meets with developers to discuss and resolve design and usability issues. | 1. Pre-planning UX meeting: The UX team meets with developers to ensure that all design components are ready before the sprint planning. 2. Pre-review UX meeting: The UX team meets with developers to ensure that everything developed meets UX standards before the sprint review. 3. Weekly user meeting: The UX team conducts multiple user meetings to collect detailed feedback from representative users. 4. Weekly UX meeting: The UX team meets and collaborates with developers to discuss and resolve design and usability issues. |

| Which UX evaluation methods to use | Some UX evaluation methods can be applied by developers, since designing the experiments and interpreting the results do not require advanced knowledge of UX design and/or evaluation. For instance, developers can conduct an “evaluation with mockups or prototypes” or apply a questionnaire like the “system usability scale (SUS)”. | Most of the proposed methods can be used when a role with UX knowledge is available. In addition to the UX evaluation methods mentioned in the previous scenario, the presence of a UX role allows the use of “heuristic evaluation”, “usability/user testing”, and “A/B testing”. Furthermore, since both a UX role and developers are involved, the “pluralistic walkthrough” can be considered. | Any of the proposed UX evaluation methods can be applied regularly by the dedicated UX team, ensuring thorough feedback and evaluation of designs. Given the increased number of UX roles, “guerrilla testing” and the “RITE method” become viable options. |
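
The three scenarios above can be read as a lookup from team composition to framework components. The following is a minimal sketch of that reading, not part of FRAMUX-EV itself. The names `SCENARIOS`, `suggest_scenario`, and `components_for` are hypothetical. The table only describes teams with zero, one, or three UX roles, so treating any team with two or more UX roles as scenario 3 is an assumption made here for illustration. The methods listed for scenario 1 are the two examples the table gives ("for instance"), not an exhaustive list.

```python
# Illustrative encoding of the three usage scenarios described in the table.
# Component names are copied from the table; the data layout is an assumption.

BASIC_METHODS = (
    "evaluation with mockups or prototypes",
    "system usability scale (SUS)",
)
UX_ROLE_METHODS = BASIC_METHODS + (
    "heuristic evaluation",
    "usability/user testing",
    "A/B testing",
    "pluralistic walkthrough",
)
UX_TEAM_METHODS = UX_ROLE_METHODS + ("guerrilla testing", "RITE method")

SCENARIOS = {
    1: {  # no dedicated UX roles
        "artifacts": ("UX evaluation methods", "UX backlog", "UX sprint backlog"),
        "events": ("Weekly user meeting",),
        "methods": BASIC_METHODS,
    },
    2: {  # one UX role in the development team
        "artifacts": (
            "UX evaluation methods",
            "UX design system",
            "UX backlog",
            "UX sprint backlog",
        ),
        "events": ("Weekly user meeting", "Weekly UX meeting"),
        "methods": UX_ROLE_METHODS,
    },
    3: {  # dedicated UX team
        "artifacts": (
            "UX evaluation methods",
            "UX roles and responsibilities",
            "UX persona",
            "UX design system",
            "UX evaluation repository",
            "UX backlog",
            "UX sprint backlog",
        ),
        "events": (
            "Pre-planning UX meeting",
            "Pre-review UX meeting",
            "Weekly user meeting",
            "Weekly UX meeting",
        ),
        "methods": UX_TEAM_METHODS,
    },
}


def suggest_scenario(ux_roles: int) -> int:
    """Pick the closest scenario for a given number of UX roles.

    ASSUMPTION: the table covers 0, 1, and 3 UX roles only; mapping every
    team with two or more UX roles to scenario 3 is our illustrative choice.
    """
    if ux_roles < 0:
        raise ValueError("ux_roles must be non-negative")
    if ux_roles == 0:
        return 1
    if ux_roles == 1:
        return 2
    return 3


def components_for(ux_roles: int) -> dict:
    """Return the artifacts, events, and methods of the suggested scenario."""
    return SCENARIOS[suggest_scenario(ux_roles)]


if __name__ == "__main__":
    plan = components_for(ux_roles=1)
    for kind, names in plan.items():
        print(f"{kind}: {', '.join(names)}")
```

A team would likely treat the result as a starting point and then add or drop components according to its own capacity, as the scenarios themselves are only examples.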

| Aspect | Felker et al. [2] | Maguire [17] | Pillay and Wing [18] | Weber et al. [19] | Argumanis et al. [20] | Gardner and Aktunc [21] | FRAMUX-EV |

|---|---|---|---|---|---|---|---|

| Components | 0 UX events, 0 UX artifacts, and 4 UX evaluation methods | 0 UX events, 0 UX artifacts, and 3 UX evaluation methods | 1 UX event, 0 UX artifacts, and 0 UX evaluation methods | 0 UX events, 2 UX artifacts, and 5 UX evaluation methods | 0 UX events, 0 UX artifacts, and 3 UX evaluation methods | 0 UX events, 0 UX artifacts, and 1 UX evaluation method | 4 UX events, 7 UX artifacts, and 8 UX evaluation methods |

| Representation mode | Not included | Not included | Diagram (sprint 0 and sprint 1) | Tables to explain each phase | BPMN diagrams | Figure (questions and activities per stage) | Diagram (artifacts and events) and tables to explain each component |

| Validation performed | Not mentioned | Not mentioned | Not mentioned | Interviews with 5 experts, case study (user testing) | Software development project (four 1-week sprints) | Questionnaires and interviews (not many details mentioned) | Survey of 34 practitioners |

| Strengths | (1) Integrates Scrum events with UX evaluation methods (2) Uses iterative methods like RITE for rapid and frequent testing | (1) Focus on usability and user-centered design (2) Use of UX methods such as user testing and heuristic evaluations | (1) Introduces the Lean UX cycle within the sprint (2) Facilitates rapid and continuous iteration of design elements and UX evaluation | (1) Includes useful elements (user story mapping and lessons learned) for work planning (2) Includes several UX methods (such as user testing) | (1) Includes evaluation, design, and implementation phases within the agile cycle (2) Validation through a real case study (software development project) | (1) Proposes an iterative approach, facilitating recurrent UX evaluation (2) Flexible approach that can be adapted to different agile environments | (1) Provides a clear structure with seven UX artifacts and four UX events (2) Facilitates continuous UX evaluation throughout each sprint |

| Weaknesses | (1) It does not include specific UX artifacts, limiting the formalization of UX evaluation (2) It does not present a structure for integrating UX evaluation throughout the development cycle | (1) It is not adapted to agile frameworks (it is presented in a general way), which limits its applicability (2) It does not include specific UX artifacts or events to manage work continuously in an agile manner | (1) It does not clearly define UX artifacts (2) It lacks detail on how and when to conduct UX evaluations within agile events | (1) It does not provide a clear structure on how to manage UX work throughout iterations (2) It does not include specific UX events, making it difficult to integrate UX into agile software development | (1) It does not include specific UX events or artifacts to integrate within agile development (2) UX evaluation is performed in specific phases, which may delay user feedback | (1) It is not specifically tailored for Scrum or other popular agile frameworks (2) It does not include specific UX artifacts or events, limiting clarity and structure for integrating UX into the agile process | (1) It lacks validation in large-scale real-world projects (2) The complexity of implementing some UX artifacts and events may pose challenges for teams without defined UX roles |

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Rojas, L.F.; Quiñones, D.; Cubillos, C. FRAMUX-EV: A Framework for Evaluating User Experience in Agile Software Development. Appl. Sci. 2024, 14, 8991. https://doi.org/10.3390/app14198991