Thermal, Multispectral, and RGB Vision Systems Analysis for Victim Detection in SAR Robotics

Abstract

1. Introduction

2. Related Works

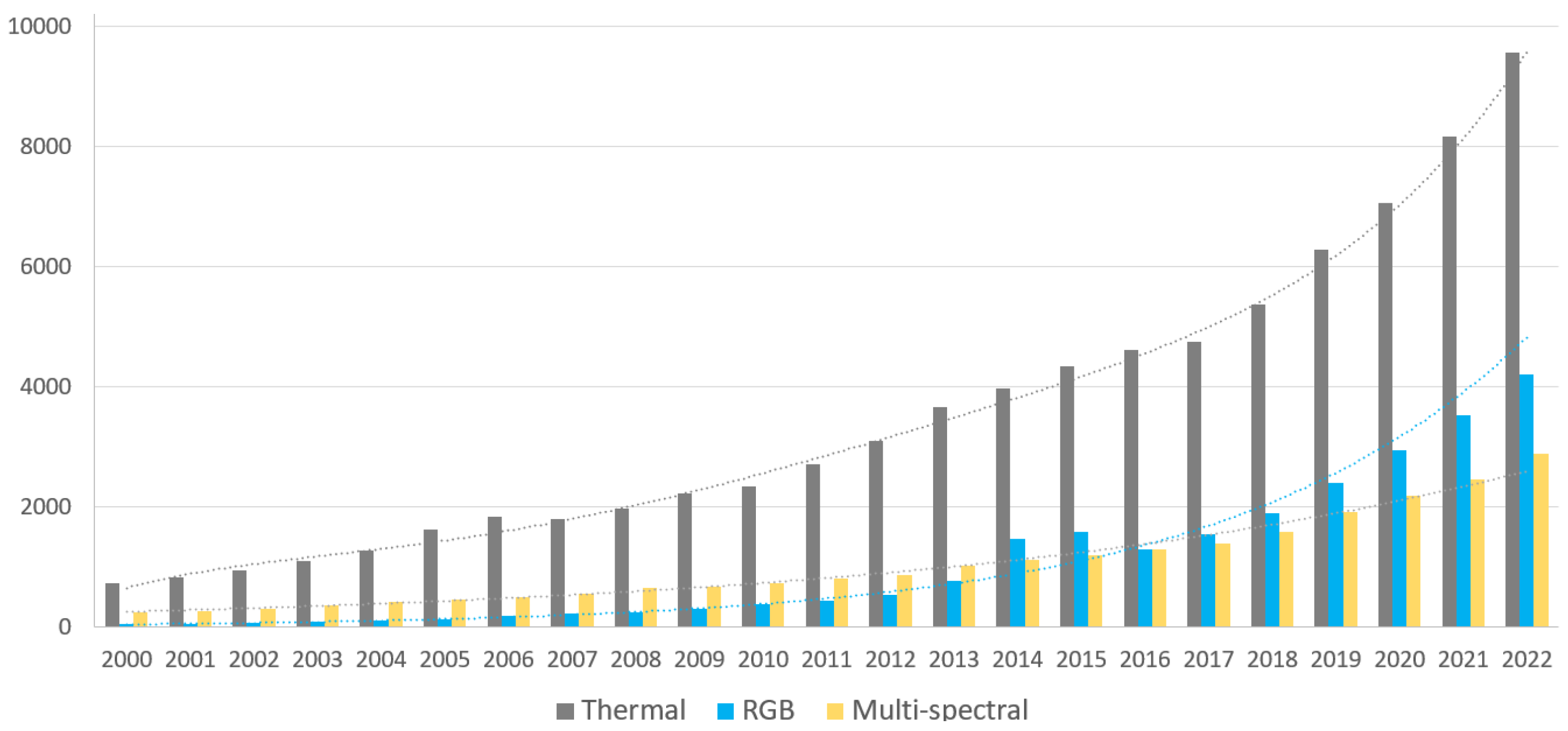

2.1. Context and Historical Evolution

2.2. Vision Sensors in Search and Rescue Robotics

3. Materials and Methods

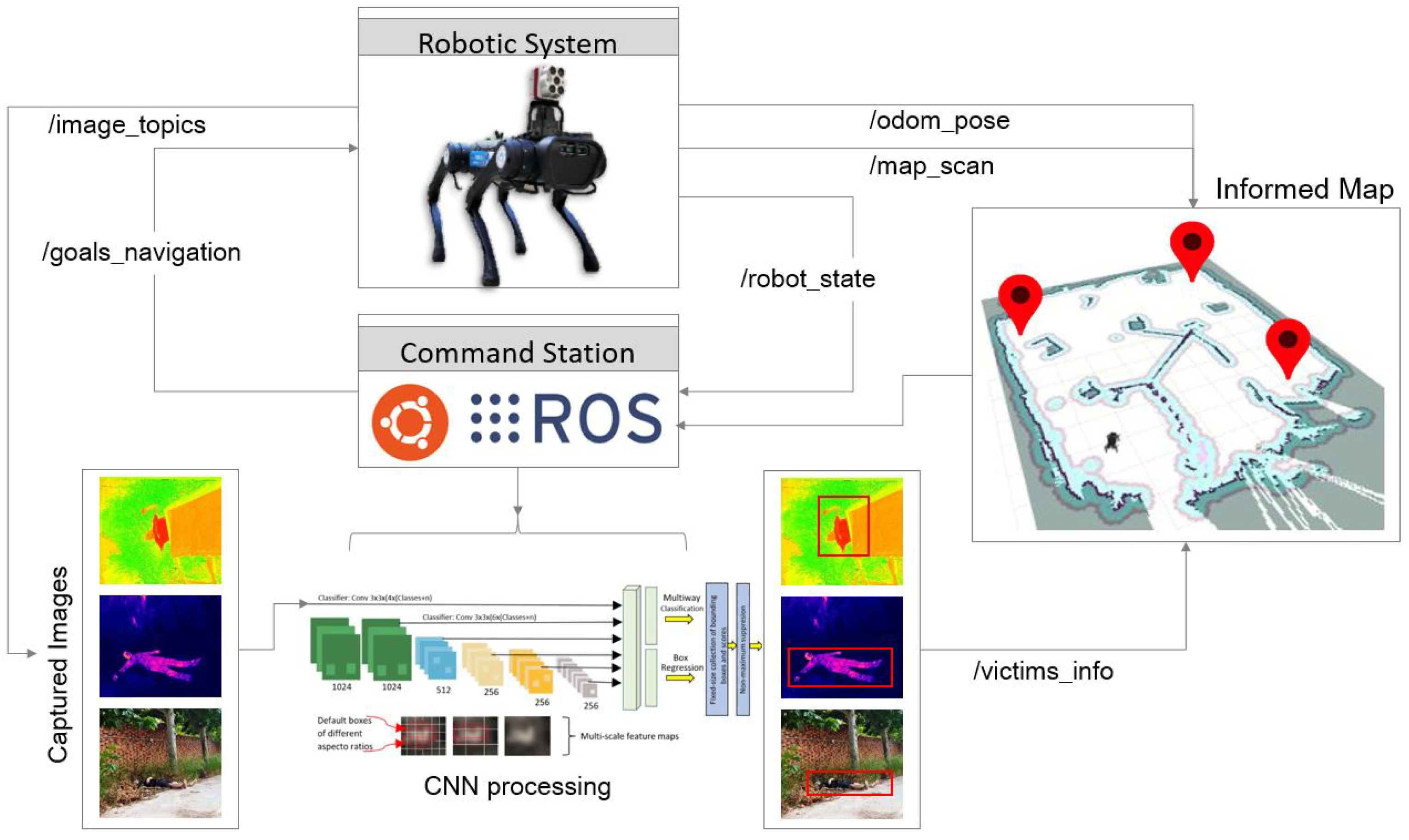

3.1. Robotic System and Processing

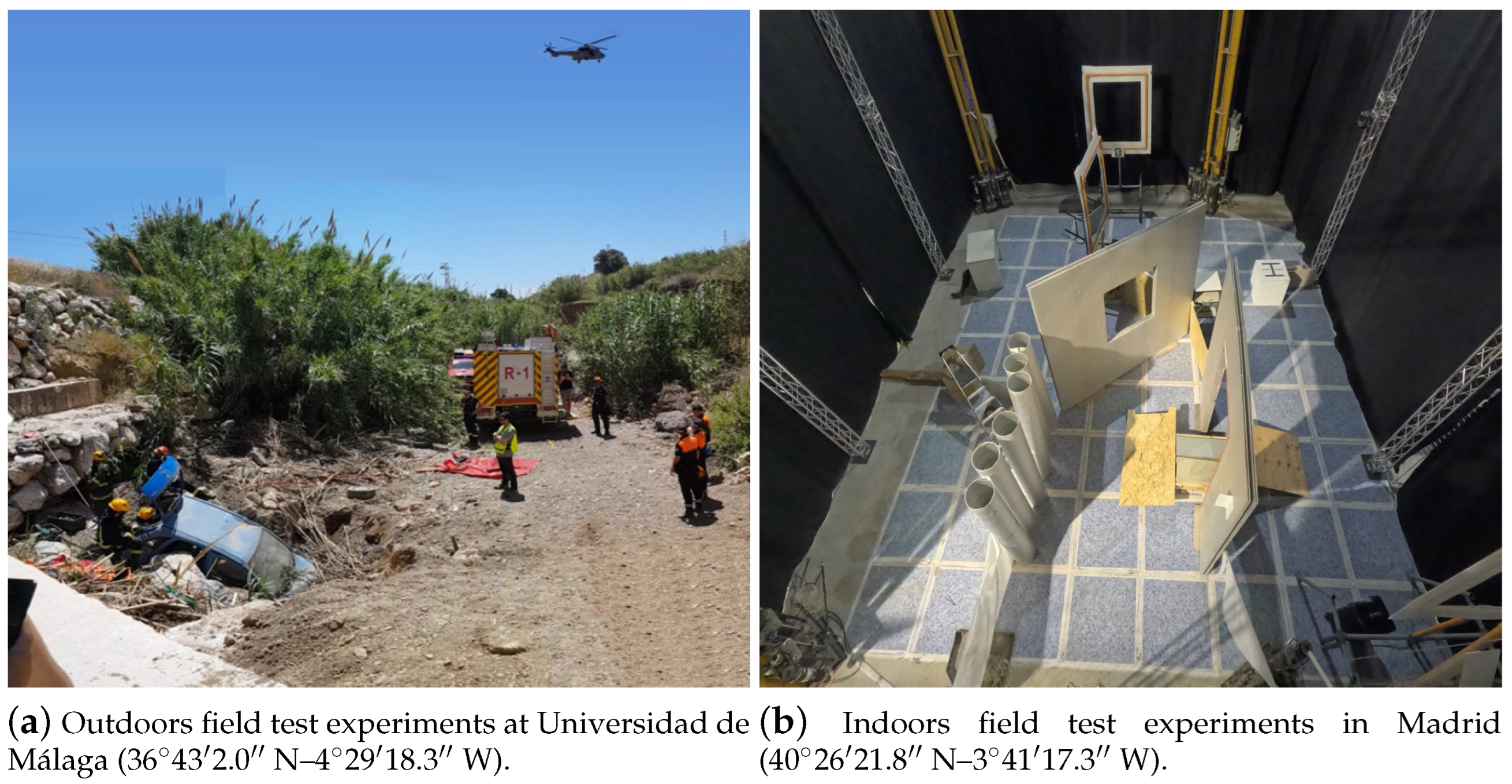

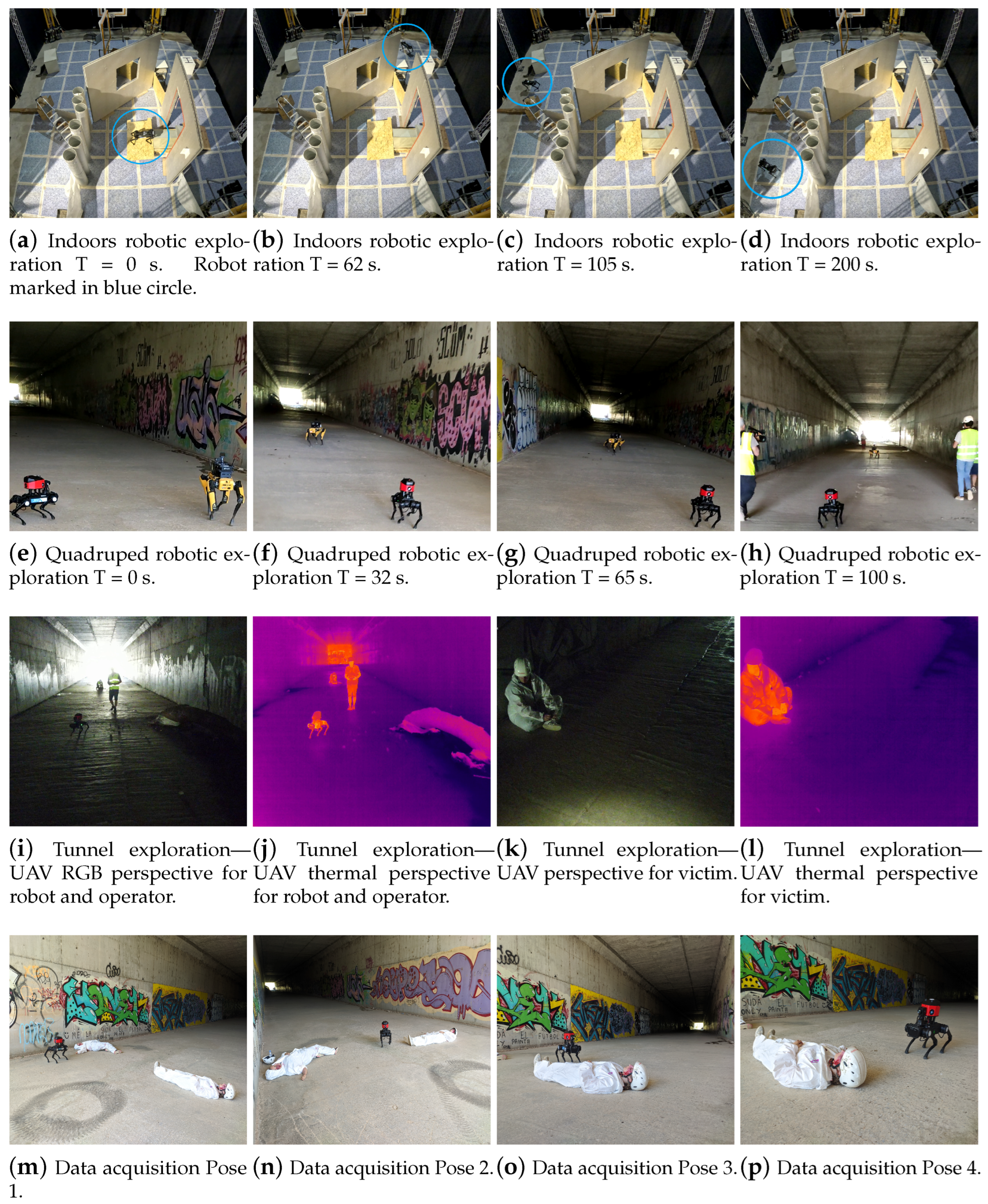

3.2. Field Tests

3.3. Algorithms and Evaluation Metrics

3.3.1. Implemented Algorithm

3.3.2. CNN-Based Algorithm

Algorithm 1. Victim detection and robotic exploration system.

- Victim, victim leg, victim torso, victim arm, victim head;

- Rescuer, rescuer leg.
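The labels above fall into two groups, victim parts and rescuer parts. As a minimal sketch of how a detector's output could be split along those lines, assuming (hypothetically) that detections arrive as `(label, confidence)` pairs: the names `VICTIM_CLASSES`, `RESCUER_CLASSES`, `split_detections` and the 0.5 threshold are illustrative and are not taken from the paper.

```python
# Labels as listed in the text; the two sets mirror the two bullets.
VICTIM_CLASSES = {"victim", "victim leg", "victim torso", "victim arm", "victim head"}
RESCUER_CLASSES = {"rescuer", "rescuer leg"}


def split_detections(detections, min_conf=0.5):
    """Split (label, confidence) pairs into victim hits and rescuer hits.

    min_conf is an assumed confidence threshold, not a value from the paper.
    Labels outside the two sets are ignored.
    """
    victims, rescuers = [], []
    for label, conf in detections:
        if conf < min_conf:
            continue  # drop low-confidence detections
        if label in VICTIM_CLASSES:
            victims.append((label, conf))
        elif label in RESCUER_CLASSES:
            rescuers.append((label, conf))
    return victims, rescuers
```

Keeping rescuer labels separate from victim labels lets a mission log count only victim hits while still recording that a rescuer was in view.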

Algorithm 2. CNN-based algorithm.

3.3.3. Proposed Metrics for Method Analysis

4. Results and Discussion

4.1. Mission Execution in Indoor–Outdoor Environments

4.2. System Performance Evaluation

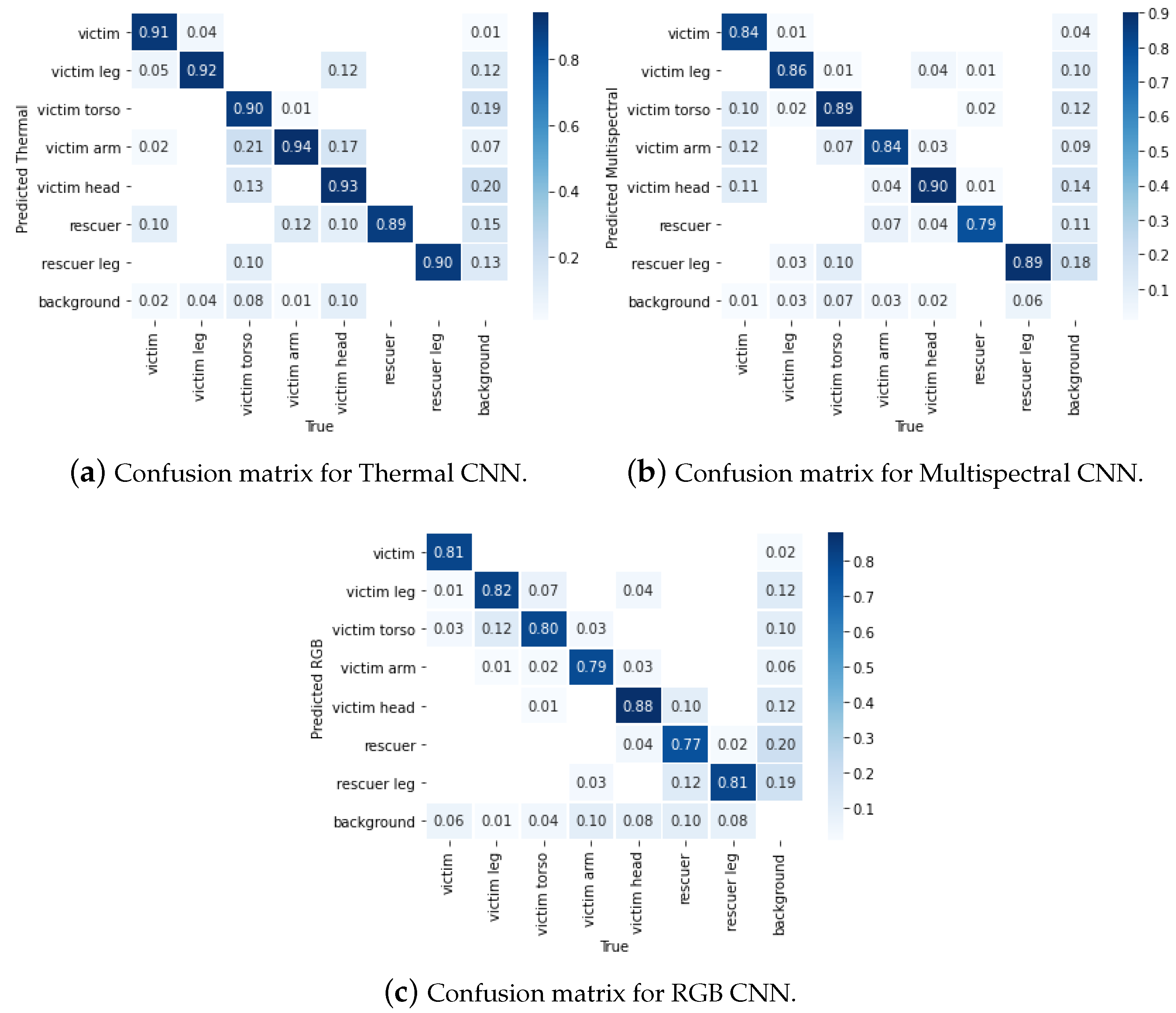

4.2.1. Evaluation of Victim Identification in SAR Missions Performed

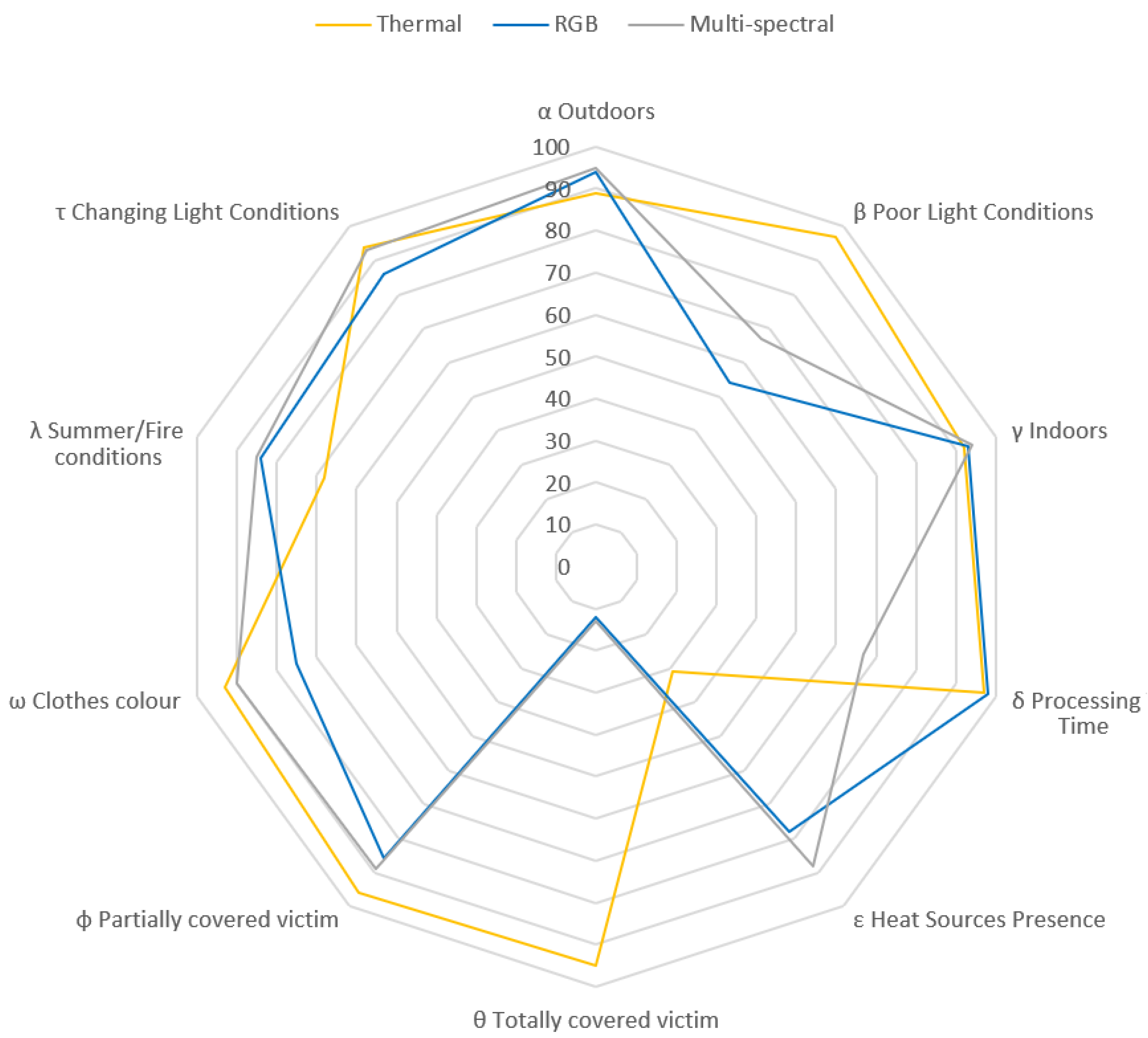

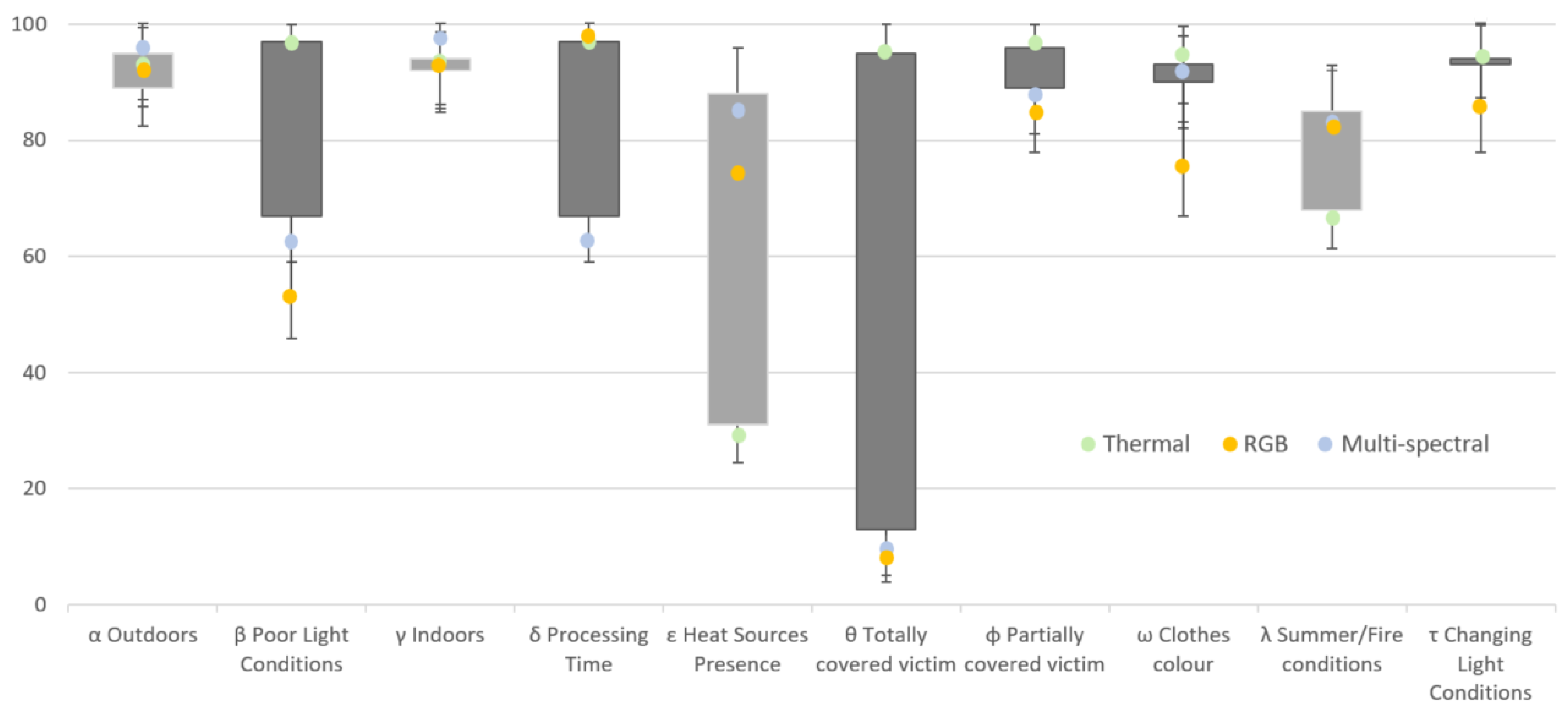

4.2.2. Individual Evaluation of Systems Using the Proposed Metrics

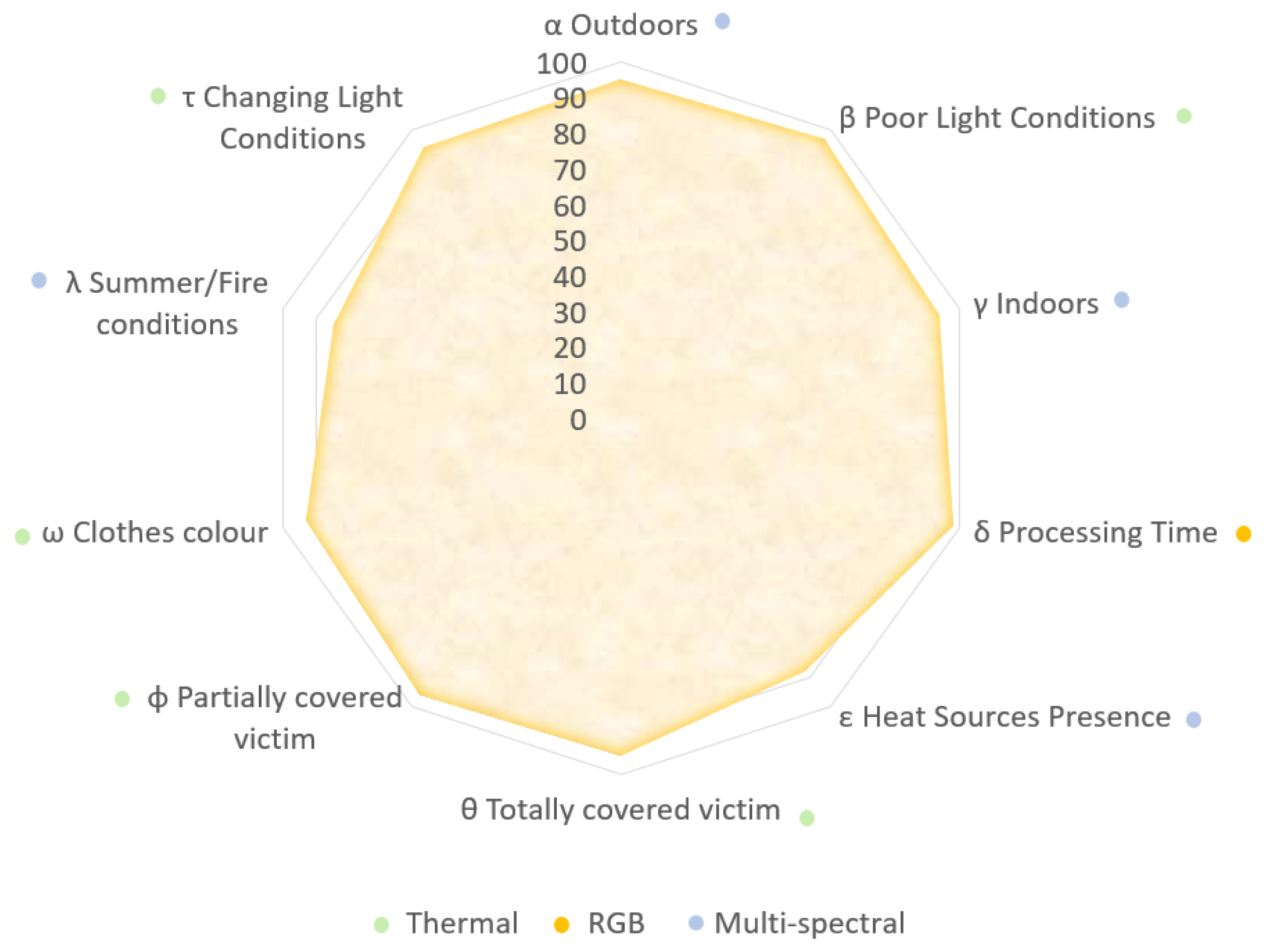

4.2.3. Combined Evaluation of Systems Using the Proposed Metrics

4.2.4. Discussion

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| RGB | Red Green Blue |
| CNN | Convolutional Neural Network |
| ROS | Robot Operating System |
| SAR | Search and Rescue |
| IR | Infrared |
| NIR | Near-infrared |
| NIST | National Institute of Standards and Technology |
| UAV | Unmanned Aerial Vehicle |
| UGV | Unmanned Ground Vehicle |
| TASAR | Team of Advanced Search And Rescue Robots |
| FPS | Frames Per Second |
| VDIN | Victim Detection Index |

References

1. Adamkiewicz, M.; Chen, T.; Caccavale, A.; Gardner, R.; Culbertson, P.; Bohg, J.; Schwager, M. Vision-Only Robot Navigation in a Neural Radiance World. IEEE Robot. Autom. Lett. 2022, 7, 4606–4613.

2. Wilson, A.N.; Gupta, K.A.; Koduru, B.H.; Kumar, A.; Jha, A.; Cenkeramaddi, L.R. Recent Advances in Thermal Imaging and its Applications Using Machine Learning: A Review. IEEE Sens. J. 2023, 23, 3395–3407.

3. Zhang, H.; Lee, S. Robot Bionic Vision Technologies: A Review. Appl. Sci. 2022, 12, 7970.

4. Rizk, M.; Bayad, I. Human Detection in Thermal Images Using YOLOv8 for Search and Rescue Missions. In Proceedings of the 2023 Seventh International Conference on Advances in Biomedical Engineering (ICABME), Beirut, Lebanon, 12–13 October 2023; pp. 210–215.

5. Lai, Y.L.; Lai, Y.K.; Yang, K.H.; Huang, J.C.; Zheng, C.Y.; Cheng, Y.C.; Wu, X.Y.; Liang, S.Q.; Chen, S.C.; Chiang, Y.W. An unmanned aerial vehicle for search and rescue applications. J. Phys. Conf. Ser. 2023, 2631, 012007.

6. Deng, L.; Mao, Z.; Li, X.; Hu, Z.; Duan, F.; Yan, Y. UAV-based multispectral remote sensing for precision agriculture: A comparison between different cameras. ISPRS J. Photogramm. Remote Sens. 2018, 146, 124–136.

7. Blekanov, I.; Molin, A.; Zhang, D.; Mitrofanov, E.; Mitrofanova, O.; Li, Y. Monitoring of grain crops nitrogen status from UAV multispectral images coupled with deep learning approaches. Comput. Electron. Agric. 2023, 212, 108047.

8. AlAli, Z.T.; Alabady, S.A. A survey of disaster management and SAR operations using sensors and supporting techniques. Int. J. Disaster Risk Reduct. 2022, 82, 103295.

9. Karasawa, T.; Watanabe, K.; Ha, Q.; Tejero-De-Pablos, A.; Ushiku, Y.; Harada, T. Multispectral object detection for autonomous vehicles. In Proceedings of the Thematic Workshops of ACM Multimedia 2017, Mountain View, CA, USA, 23–27 October 2017; pp. 35–43.

10. Sharma, K.; Doriya, R.; Pandey, S.K.; Kumar, A.; Sinha, G.R.; Dadheech, P. Real-Time Survivor Detection System in SaR Missions Using Robots. Drones 2022, 6, 219.

11. Haq, H. Three Survivors Pulled Alive from Earthquake Rubble in Turkey, More Than 248 Hours after Quake. 2023. Available online: https://edition.cnn.com/2023/02/16/europe/turkey-syria-earthquake-rescue-efforts-intl/index.html (accessed on 14 January 2024).

12. Pal, N.; Sadhu, P.K. Post Disaster Illumination for Underground Mines. TELKOMNIKA Indones. J. Electr. Eng. 2015, 13, 425–430.

13. Safapour, E.; Kermanshachi, S. Investigation of the Challenges and Their Best Practices for Post-Disaster Reconstruction Safety: Educational Approach for Construction Hazards. In Proceedings of the Transportation Research Board 99th Annual Conference, Washington, DC, USA, 12–16 January 2020.

14. Jacoff, A.; Messina, E.; Weiss, B.; Tadokoro, S.; Nakagawa, Y. Test arenas and performance metrics for urban search and rescue robots. In Proceedings of the 2003 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS 2003) (Cat. No.03CH37453), Las Vegas, NV, USA, 27–31 October 2003; Volume 4, pp. 3396–3403.

15. Kleiner, A.; Brenner, M.; Bräuer, T.; Dornhege, C.; Göbelbecker, M.; Luber, M.; Prediger, J.; Stückler, J.; Nebel, B. Successful search and rescue in simulated disaster areas. In RoboCup 2005: Robot Soccer World Cup IX 9; Springer: Berlin/Heidelberg, Germany, 2006; pp. 323–334.

16. Katsamenis, I.; Protopapadakis, E.; Voulodimos, A.; Dres, D.; Drakoulis, D. Man Overboard Event Detection from RGB and Thermal Imagery: Possibilities and Limitations. In Proceedings of the 13th ACM International Conference on PErvasive Technologies Related to Assistive Environments, PETRA ’20, New York, NY, USA, 30 June–3 July 2020.

17. De Oliveira, D.C.; Wehrmeister, M.A. Using Deep Learning and Low-Cost RGB and Thermal Cameras to Detect Pedestrians in Aerial Images Captured by Multirotor UAV. Sensors 2018, 18, 2244.

18. Corradino, C.; Bilotta, G.; Cappello, A.; Fortuna, L.; Del Negro, C. Combining Radar and Optical Satellite Imagery with Machine Learning to Map Lava Flows at Mount Etna and Fogo Island. Energies 2021, 14, 197.

19. Cruz Ulloa, C.; Garcia, M.; del Cerro, J.; Barrientos, A. Deep Learning for Victims Detection from Virtual and Real Search and Rescue Environments. In ROBOT2022: Fifth Iberian Robotics Conference; Tardioli, D., Matellán, V., Heredia, G., Silva, M.F., Marques, L., Eds.; Springer: Cham, Switzerland, 2023; pp. 3–13.

20. Cruz Ulloa, C.; Prieto Sánchez, G.; Barrientos, A.; Del Cerro, J. Autonomous Thermal Vision Robotic System for Victims Recognition in Search and Rescue Missions. Sensors 2021, 21, 7346.

21. Ulloa, C.C.; Llerena, G.T.; Barrientos, A.; del Cerro, J. Autonomous 3D Thermal Mapping of Disaster Environments for Victims Detection. In Robot Operating System (ROS): The Complete Reference; Koubaa, A., Ed.; Springer International Publishing: Cham, Switzerland, 2023; Volume 7, pp. 83–117.

22. Ulloa, C.C.; Garrido, L.; del Cerro, J.; Barrientos, A. Autonomous victim detection system based on deep learning and multispectral imagery. Mach. Learn. Sci. Technol. 2023, 4, 015018.

23. Sambolek, S.; Ivasic-Kos, M. Automatic person detection in search and rescue operations using deep CNN detectors. IEEE Access 2021, 9, 37905–37922.

24. Lee, H.W.; Lee, K.O.; Bae, J.H.; Kim, S.Y.; Park, Y.Y. Using Hybrid Algorithms of Human Detection Technique for Detecting Indoor Disaster Victims. Computation 2022, 10, 197.

25. Lygouras, E.; Santavas, N.; Taitzoglou, A.; Tarchanidis, K.; Mitropoulos, A.; Gasteratos, A. Unsupervised human detection with an embedded vision system on a fully autonomous UAV for search and rescue operations. Sensors 2019, 19, 3542.

26. Domozi, Z.; Stojcsics, D.; Benhamida, A.; Kozlovszky, M.; Molnar, A. Real time object detection for aerial search and rescue missions for missing persons. In Proceedings of the SOSE 2020 - IEEE 15th International Conference of System of Systems Engineering, Budapest, Hungary, 2–4 June 2020; pp. 519–524.

27. Quan, A.; Herrmann, C.; Soliman, H. Project vulture: A prototype for using drones in search and rescue operations. In Proceedings of the 15th Annual International Conference on Distributed Computing in Sensor Systems, DCOSS 2019, Santorini Island, Greece, 29–31 May 2019; pp. 619–624.

28. Perdana, M.I.; Risnumawan, A.; Sulistijono, I.A. Automatic Aerial Victim Detection on Low-Cost Thermal Camera Using Convolutional Neural Network. In Proceedings of the 2020 International Symposium on Community-Centric Systems, CcS 2020, Tokyo, Japan, 23–26 September 2020.

29. Arrazi, M.H.; Priandana, K. Development of landslide victim detection system using thermal imaging and histogram of oriented gradients on E-PUCK2 Robot. In Proceedings of the 2020 International Conference on Computer Science and Its Application in Agriculture, ICOSICA 2020, Bogor, Indonesia, 16–17 September 2020; pp. 2–7.

30. Gupta, M. A Fusion of Visible and Infrared Images for Victim Detection. In High Performance Vision Intelligence: Recent Advances; Nanda, A., Chaurasia, N., Eds.; Springer: Singapore, 2020; pp. 171–183.

31. Seits, F.; Kurmi, I.; Bimber, O. Evaluation of Color Anomaly Detection in Multispectral Images for Synthetic Aperture Sensing. Eng 2022, 3, 541–553.

32. Dawdi, T.M.; Abdalla, N.; Elkalyoubi, Y.M.; Soudan, B. Locating victims in hot environments using combined thermal and optical imaging. Comput. Electr. Eng. 2020, 85, 106697.

33. Dong, J.; Ota, K.; Dong, M. UAV-Based Real-Time Survivor Detection System in Post-Disaster Search and Rescue Operations. IEEE J. Miniaturization Air Space Syst. 2021, 2, 209–219.

34. Zou, X.; Peng, T.; Zhou, Y. UAV-Based Human Detection with Visible-Thermal Fused YOLOv5 Network. IEEE Trans. Ind. Inform. 2023, 1–10.

35. Wang, X.; Zhao, L.; Wu, W.; Jin, X. Dynamic Neural Network Accelerator for Multispectral detection Based on FPGA. In Proceedings of the International Conference on Advanced Communication Technology, ICACT, Pyeongchang, Republic of Korea, 19–22 February 2023; pp. 345–350.

36. McGee, J.; Mathew, S.J.; Gonzalez, F. Unmanned Aerial Vehicle and Artificial Intelligence for Thermal Target Detection in Search and Rescue Applications. In Proceedings of the 2020 International Conference on Unmanned Aircraft Systems, ICUAS 2020, Athens, Greece, 1–4 September 2020; pp. 883–891.

37. Goian, A.; Ashour, R.; Ahmad, U.; Taha, T.; Almoosa, N.; Seneviratne, L. Victim localization in USAR scenario exploiting multi-layer mapping structure. Remote Sens. 2019, 11, 2704.

38. Petříček, T.; Šalanský, V.; Zimmermann, K.; Svoboda, T. Simultaneous exploration and segmentation for search and rescue. J. Field Robot. 2019, 36, 696–709.

39. Gallego, A.J.; Pertusa, A.; Gil, P.; Fisher, R.B. Detection of bodies in maritime rescue operations using unmanned aerial vehicles with multispectral cameras. J. Field Robot. 2019, 36, 782–796.

40. Qi, F.; Zhu, M.; Li, Z.; Lei, T.; Xia, J.; Zhang, L.; Yan, Y.; Wang, J.; Lu, G. Automatic Air-to-Ground Recognition of Outdoor Injured Human Targets Based on UAV Bimodal Information: The Explore Study. Appl. Sci. 2022, 12, 3457.

41. NIST, National Institute of Standards and Technology: Gaithersburg, MD, USA, 2022. Available online: https://www.nist.gov/ (accessed on 14 January 2024).

| Work | Sensor Type | Camera Model | Spectral Resolution | Capture System | Environment | Processing Techniques | Application |
|---|---|---|---|---|---|---|---|
| [23] | RGB | DJI Phantom 4A Camera | 3 (RGB Bands) | UAV | Outdoors | Transfer learning of pre-trained CNN | Search and rescue: People detection |
| [24] | RGB | CCTV RGB Images | 3 (RGB Bands) | - | Indoors | Hybrid human detection combining YOLO and RetinaNet | Search and rescue: Indoor disaster victims |
| [25] | RGB | GoPro | 3 (RGB Bands) | UAV | Maritime | Vision-based neural network controller for autonomous landing on human targets | Search and rescue: Help to human victim |
| [26] | RGB | Skywalker 1680 FPV camera | 3 (RGB Bands) | UAV | Wilderness | Single-Shot MultiBox Detector (SSD) network to detect humans | Search and rescue: Human detection |
| [27] | RGB | DJI Phantom 4 Pro V2.0 camera | 3 (RGB Bands) | UAV | Outdoors | Image processing and YOLOv3 detection | Search and rescue: Body detection |
| [20] | THERMAL | Optris Pi640 | 1 (IR) | UGV (quadruped robot) | Outdoors | Thermal image processing and deep learning techniques for body detection | Search and rescue: Victim detection |
| [28] | THERMAL | FLIR Lepton 3 | 1 (IR) | UAV | Outdoors | Single-Shot MultiBox Detector (SSD) and MobileNet for feature extraction | Search and rescue: Human detection |
| [29] | THERMAL | FLIR Camera 2.0 | 1 (IR) | Educational robot | Indoors | SVM classification with linear kernel and HOG features | Body detection |
| [30] | RGB-THERMAL | FLIR E60 | 4 (red, green, blue, IR) | User carrying camera | Indoors–Outdoors | Skin detection and classification through feature extraction and SVM algorithm | Search and rescue: Victim detection |
| [31] | RGB-THERMAL | Dataset from () captured with FLIR Vue Pro and RGB camera | 4 (red, green, blue, IR) | UAV | Outdoors | Airborne Optical Sectioning | Search and rescue: Body detection |
| [32] | RGB-THERMAL | FLIR Lepton 3 | 4 (red, green, blue, IR) | UAV | Outdoors | Image blending, matching, and processing of thermal images | Search and rescue: Victims localization |
| [33] | RGB-THERMAL | Zenmuse XT2 (FLIR Tau 2 and RGB camera) | 4 (red, green, blue, IR) | UAV | Outdoors | Deep learning techniques for UAV thermal image pedestrian detection | Search and rescue: Human detection |
| [34] | RGB-THERMAL | KAIST Multispectral Pedestrian Dataset captured with PointGrey Flea3 and FLIR-A35 | 4 (red, green, blue, IR) | UAV | Outdoors | Visible–thermal fusion strategy processing and detection with YOLOv5 | Search and rescue: Body detection |
| [35] | RGB-THERMAL | LLIV Dataset, captured with HIKVISION DS-2TD8166BJZFY-75H2F/V2 | 4 (red, green, blue, IR) | Security cameras | Outdoors | Dynamic neural network, replacing backbone of YOLOv5, adopting Differential Modality-Aware Fusion Module (DMAF) | Search and rescue: Body detection |
| [36] | RGB-THERMAL | FLIR Tau 2 640 | 4 (red, green, blue, IR) | UAV | Outdoors | Thermal image processing and deep learning for detection (Darknet-53 NN) | Search and rescue: Body detection |
| [37] | RGB-THERMAL | ROS Simulated Camera | 4 (red, green, blue, IR) | UAV | Outdoors | Single-Shot MultiBox Detector (SSD) for RGB, blob detector for thermal, and wireless localization of victim phone | Search and rescue: Victim localization |
| [38] | RGB-THERMAL | Point Grey Ladybug 3 and Micro-Epsilon thermoIMAGER TIM 160 thermal camera | 4 (red, green, blue, IR) | UGV | Outdoors | Human/background segmentation of the 3D voxel map and simultaneous control of thermal camera using multimodal CNN models | Search and rescue: Victim localization |
| [22] | MULTISPECTRAL | MicaSense Altum | 7 (blue, green, red, red edge, near-IR, LWIR thermal infrared) | UGV (quadruped robot) | Outdoors | Convolutional Neural Network applied to multispectral imagery | Search and rescue: Victim detection |
| [39] | MULTISPECTRAL | MicaSense RedEye | 6 (blue, green, red, red edge, near-IR) | UAV | Maritime | Convolutional Neural Network applied to multispectral imagery | Search and rescue: Body detection |
| [40] | MULTISPECTRAL | Foxtech MS600 | 6 (blue, green, red, red edge, near-IR) | UAV | Outdoors | Multispectral and bio-radar bimodal human recognition using respiration rate and image processing with decision trees | Search and rescue: Recognition of human target |
| [9] | MULTISPECTRAL | Logicool HD webcam; Nippon Avionics InfReC R500; Nippon Avionics InfReC H8000; Xenics Xeva-1.7-320 | 7 (blue, green, red, MIR, near-IR, FIR) | Cart for data acquisition | Outdoors | Multispectral ensemble using multispectral images for object detection | Autonomous vehicles: Body detection |
| Authors | RGB, Thermal, Multispectral | RealSense D435i, Optris Pi640, MicaSense Altum | 7 (blue, green, red, red edge, near-IR, LWIR thermal infrared) | Quadruped robot | Indoors–Outdoors | Real-time processing with Convolutional Neural Networks | Search and rescue: Victim detection |

| Item | Component | Description |
|---|---|---|
| 1 | ARTU-R | (A1 Rescue Task UPM Robot) Quadrupedal robot with instrumentation and embedded systems (Nvidia Jetson Xavier). |
| 2 | RealSense D435i | RGB-D camera. B = [450–495 nm], G = [495–570 nm], R = [620–750 nm] |
| 3 | Optris Pi640i | Thermal camera. [8 µm–14 µm] |
| 4 | MicaSense Altum | Multispectral camera. B = [440–510 nm], G = [520–590 nm], R = [630–685 nm], Red Edge = [690–730 nm], NIR = [750 nm–2.5 µm] |
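The band limits in the component table can be compared directly once they share a unit. The following is a minimal sketch, assuming the thermal range and the NIR upper limit are given in micrometres and converting everything to nanometres; `SENSOR_BANDS_NM` and `bands_covering` are hypothetical names introduced here for illustration.

```python
# Band limits in nanometres, transcribed from the component table.
# Assumption: the thermal range (8-14) and the NIR upper limit (2.5)
# are micrometres, so they are multiplied by 1000 here.
SENSOR_BANDS_NM = {
    "RealSense D435i": {"B": (450, 495), "G": (495, 570), "R": (620, 750)},
    "Optris Pi640i": {"LWIR": (8_000, 14_000)},
    "MicaSense Altum": {
        "B": (440, 510),
        "G": (520, 590),
        "R": (630, 685),
        "Red Edge": (690, 730),
        "NIR": (750, 2_500),
    },
}


def bands_covering(wavelength_nm):
    """Return (sensor, band) pairs whose listed range contains wavelength_nm."""
    return [
        (sensor, band)
        for sensor, bands in SENSOR_BANDS_NM.items()
        for band, (low, high) in bands.items()
        if low <= wavelength_nm <= high
    ]
```

For example, `bands_covering(700)` returns the RealSense red band and the Altum red-edge band, while `bands_covering(10_000)` returns only the Optris thermal band, which makes the limited overlap between the three sensors easy to see.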

| Proposed Individual Evaluation Metrics |
|---|
| Outdoors |
| Poor Light Conditions |
| Indoors |
| Processing Time |
| Heat Sources Presence |
| Totally covered victim |
| Partially covered victim |
| Clothes colour |
| Summer/Fire conditions |
| Changing Light Conditions |

| Parameter | | Thermal Range | RGB Range | Multispectral Range |
|---|---|---|---|---|
| Metrics Analysis | | 89.3 | 97.1 | 95.4 |
| | | 97.2 | 54.6 | 67.4 |
| | | 92.1 | 93.4 | 94.8 |
| | | 97.1 | 98.2 | 67.5 |
| | | 31.7 | 78.1 | 88.4 |
| | | 95.1 | 12.7 | 13.8 |
| | | 96.7 | 89.2 | 89.4 |
| | | 93.1 | 75.5 | 90.7 |
| | | 68.1 | 84.7 | 85.2 |
| | | 94.2 | 86.5 | 93.4 |
| General Score | | 755 | 662 | 714 |
| SAR Score | | 966 | 744 | 839 |
| Indoors Experiments | Victims detection success rate % | 92.4 | 84.7 | 86.5 |
| Outdoors Experiments | Victims detection success rate % | 91.1 | 79.4 | 81.2 |
| Time Evaluation | Inference time | | | |
| | FPS | 26 | 28 | 8 |
| | Individual area covered % | 85.2 | 76.0 | 78.1 |
| | Total Covered Area of Analysis % | 93.5 | | |
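The ten unlabeled "Metrics Analysis" rows can be read more easily when paired with metric names. The sketch below assumes, without confirmation from the extracted text, that the rows follow the same order as the list of proposed individual evaluation metrics; `METRICS`, `SCORES`, `best_sensor_per_metric` and `unweighted_totals` are illustrative names. The unweighted column sums it computes (854.6, 770.0, 786.0) do not match the General Score row (755, 662, 714), so the paper's aggregation is evidently not a plain sum and is not reproduced here.

```python
SENSORS = ("Thermal", "RGB", "Multispectral")

# Assumption: row order matches the order of the metric list in the paper.
METRICS = [
    "Outdoors", "Poor Light Conditions", "Indoors", "Processing Time",
    "Heat Sources Presence", "Totally covered victim",
    "Partially covered victim", "Clothes colour",
    "Summer/Fire conditions", "Changing Light Conditions",
]

# Values transcribed from the "Metrics Analysis" rows (Thermal, RGB, Multispectral).
SCORES = [
    (89.3, 97.1, 95.4), (97.2, 54.6, 67.4), (92.1, 93.4, 94.8),
    (97.1, 98.2, 67.5), (31.7, 78.1, 88.4), (95.1, 12.7, 13.8),
    (96.7, 89.2, 89.4), (93.1, 75.5, 90.7), (68.1, 84.7, 85.2),
    (94.2, 86.5, 93.4),
]


def best_sensor_per_metric():
    """Map each metric name to the sensor with the highest value in its row."""
    return {
        metric: SENSORS[max(range(len(SENSORS)), key=lambda i: row[i])]
        for metric, row in zip(METRICS, SCORES)
    }


def unweighted_totals():
    """Plain column sums; NOT the paper's General Score or SAR Score."""
    return {
        sensor: round(sum(row[i] for row in SCORES), 1)
        for i, sensor in enumerate(SENSORS)
    }
```

Under the assumed row order, the thermal camera leads for poor light and covered victims, while it scores lowest when other heat sources are present, which is consistent with the metric names.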

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Cruz Ulloa, C.; Orbea, D.; del Cerro, J.; Barrientos, A. Thermal, Multispectral, and RGB Vision Systems Analysis for Victim Detection in SAR Robotics. Appl. Sci. 2024, 14, 766. https://doi.org/10.3390/app14020766
