Development of Architectural Object Automatic Classification Technology for Point Cloud-Based Remodeling of Aging Buildings

Abstract

1. Introduction

2. Background and Related Research

3. Analysis of Aging Building Remodeling and Object Recognition Technology

3.1. Guidelines for Aging Building Remodeling and Analysis of Related Research

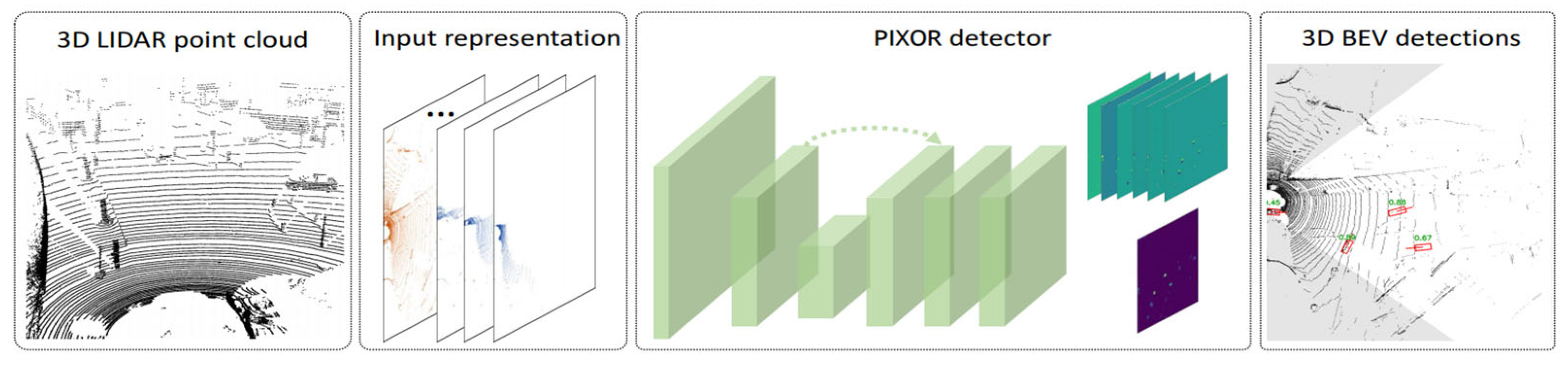

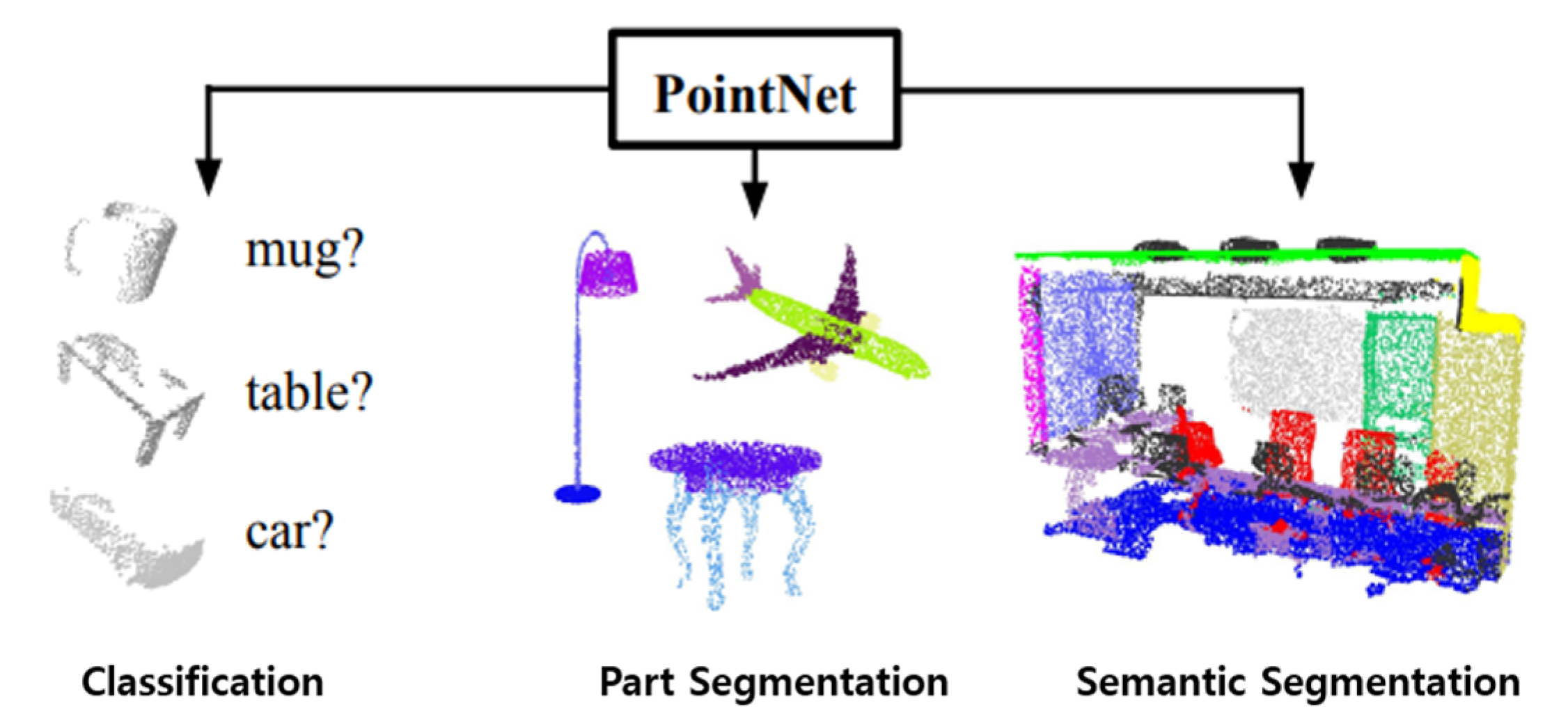

3.2. Analysis of Deep Learning-Based Point Cloud Object Recognition Technology

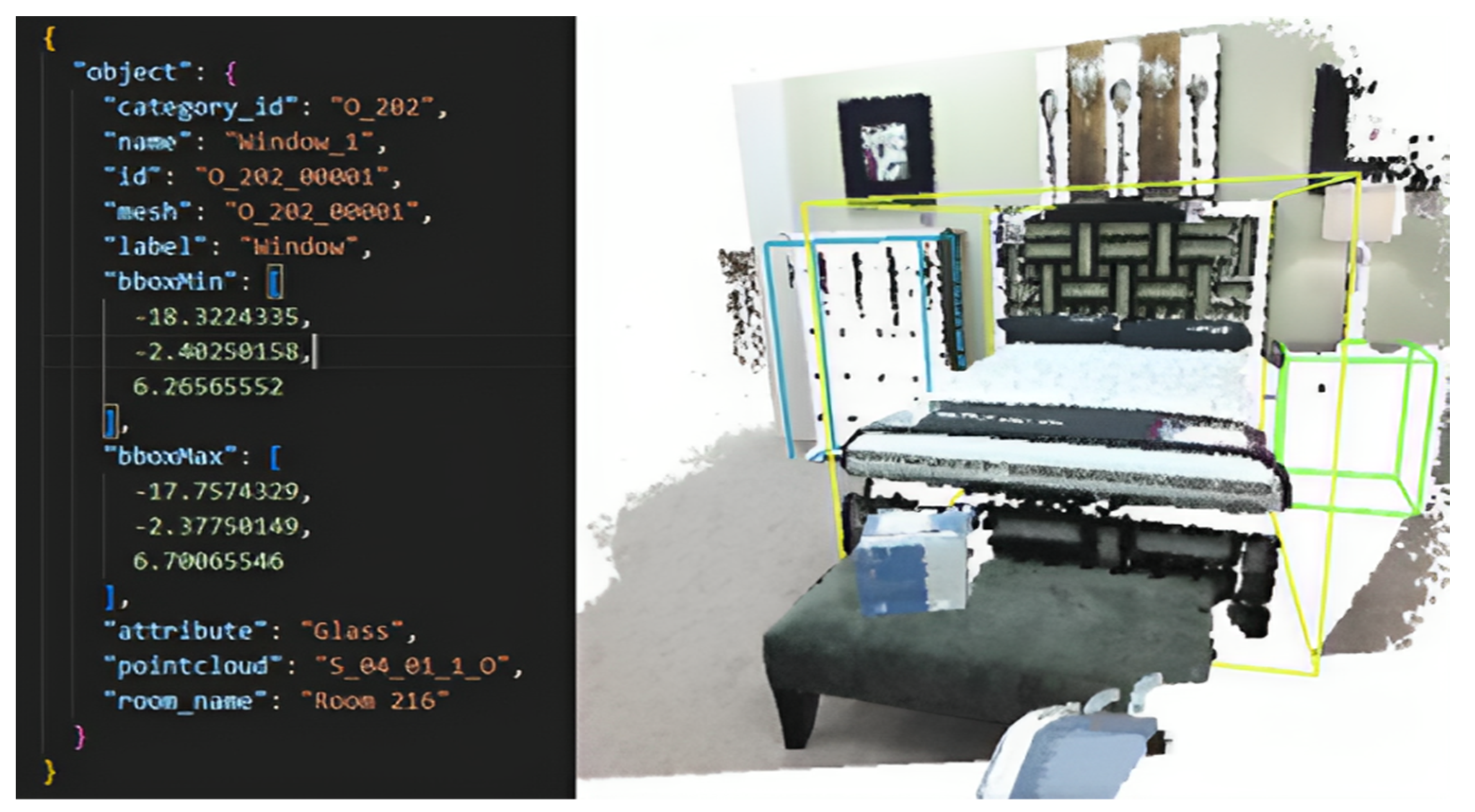

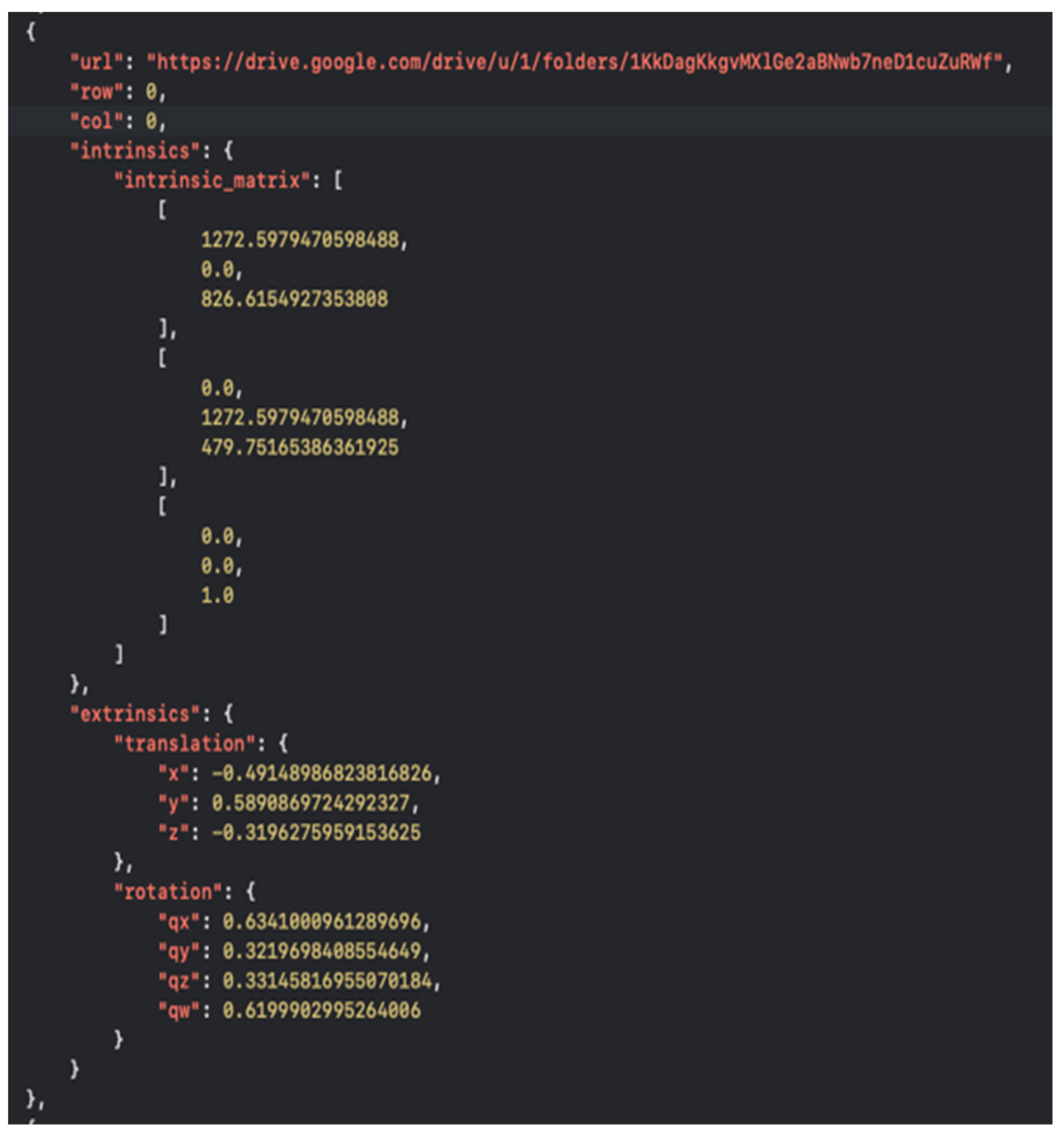

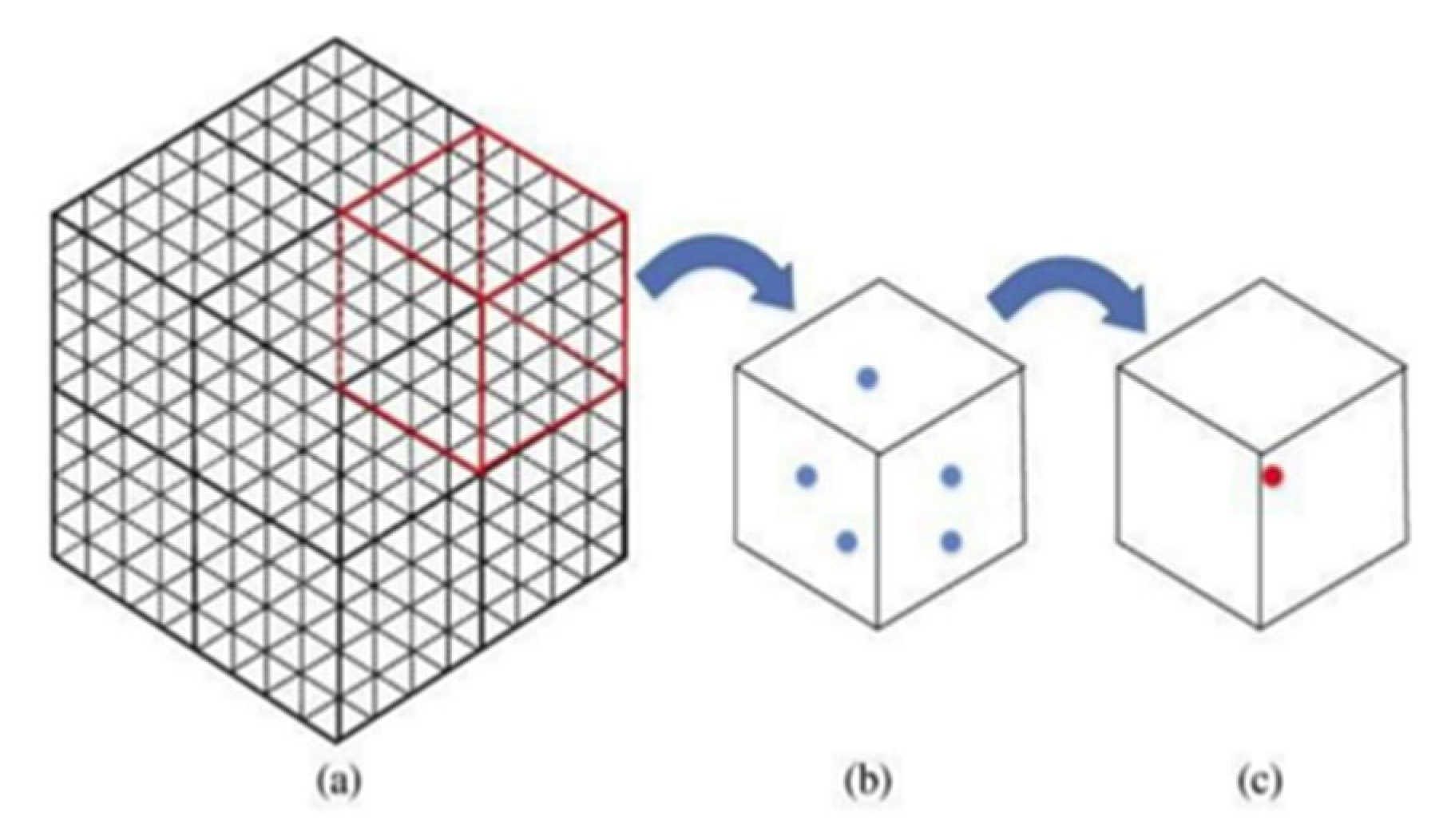

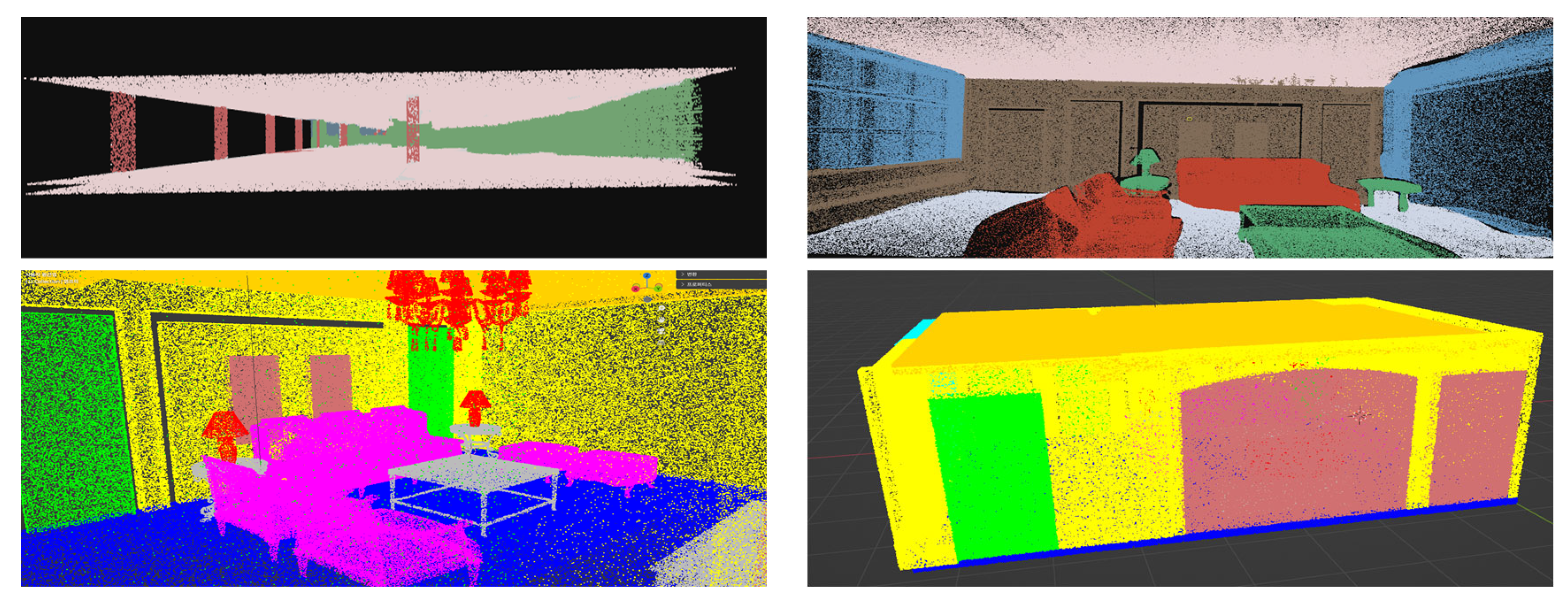

4. Construction of a Point Cloud-Based Dataset for Automatic Classification of Architectural Objects

4.1. Building Object Segmentation Dataset Construction for Architectural Object Classification

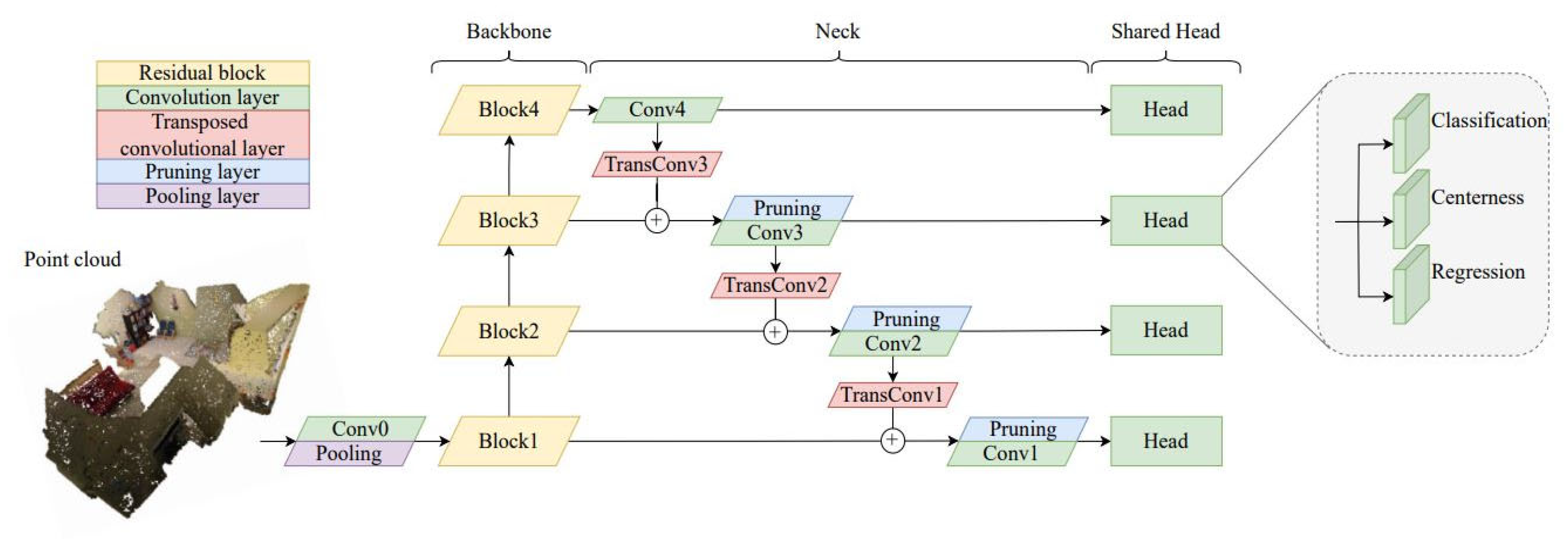

4.2. Establishment of an Object Classification Network

5. Automatic Classification Model for Architectural Objects in the Renovation of Aging Buildings

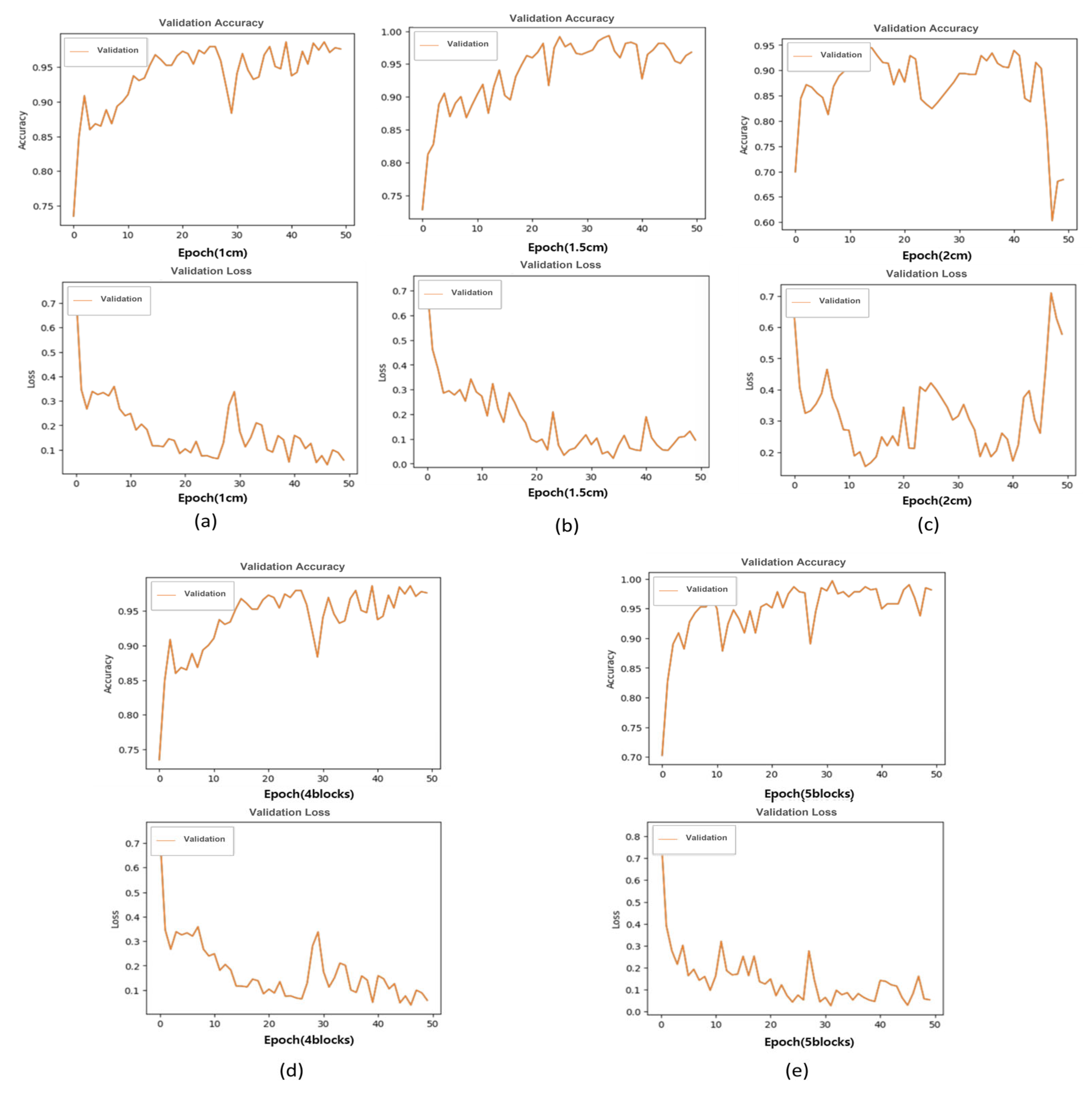

5.1. Segmentation Dataset Training

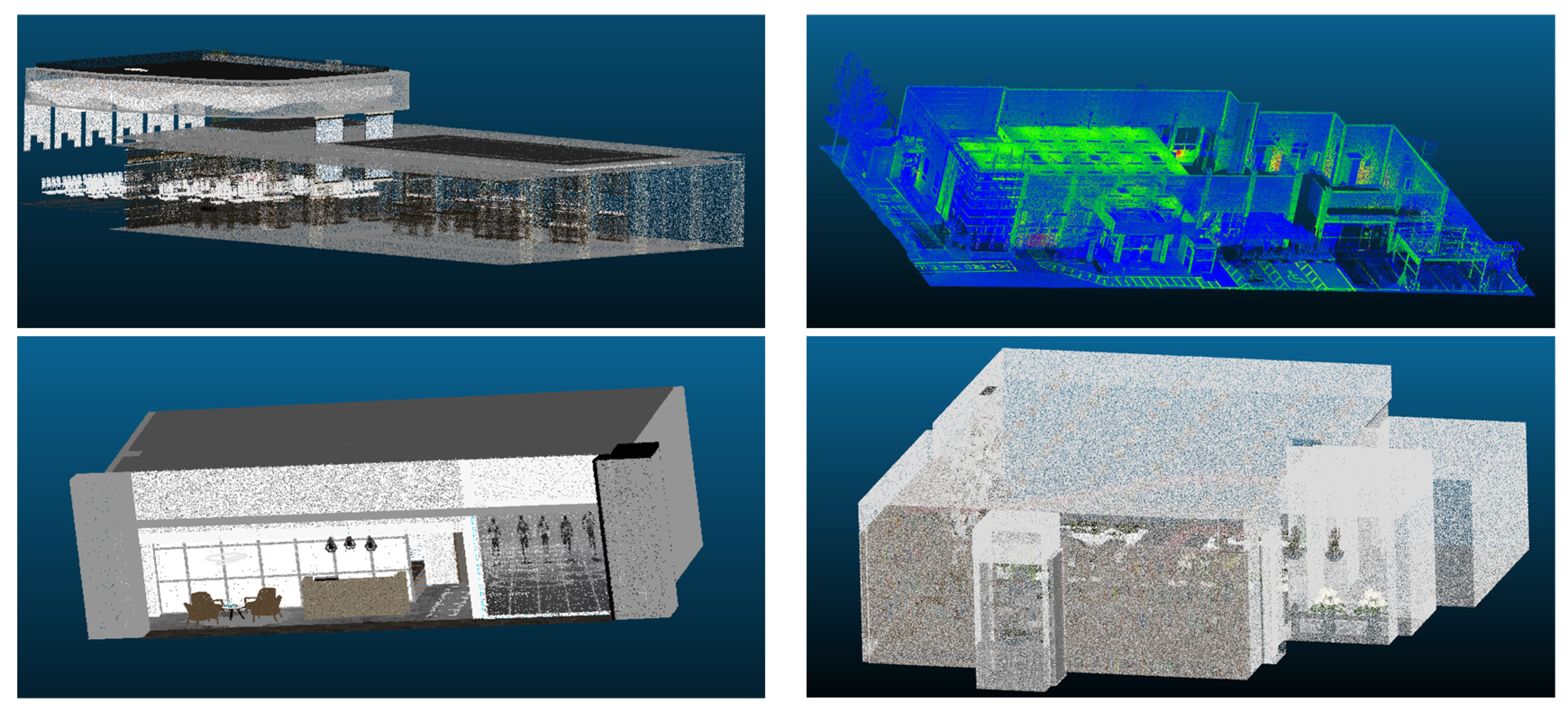

5.2. Automatic Classification of Architectural Objects Based on Point Cloud Data

6. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Kim, T.; Gu, H.; Hong, S.; Choo, S. Development of Architectural Object Automatic Classification Technology for Point Cloud-Based Remodeling of Aging Buildings. Appl. Sci. 2024, 14, 862. https://doi.org/10.3390/app14020862


Chicago/Turabian Style: Kim, Taehoon, Hyeongmo Gu, Soonmin Hong, and Seungyeon Choo. 2024. "Development of Architectural Object Automatic Classification Technology for Point Cloud-Based Remodeling of Aging Buildings." Applied Sciences 14, no. 2: 862. https://doi.org/10.3390/app14020862

APA Style: Kim, T., Gu, H., Hong, S., & Choo, S. (2024). Development of Architectural Object Automatic Classification Technology for Point Cloud-Based Remodeling of Aging Buildings. Applied Sciences, 14(2), 862. https://doi.org/10.3390/app14020862