An Immersive Virtual Reality Simulator for Echocardiography Examination

Abstract

1. Introduction

2. Materials and Methods

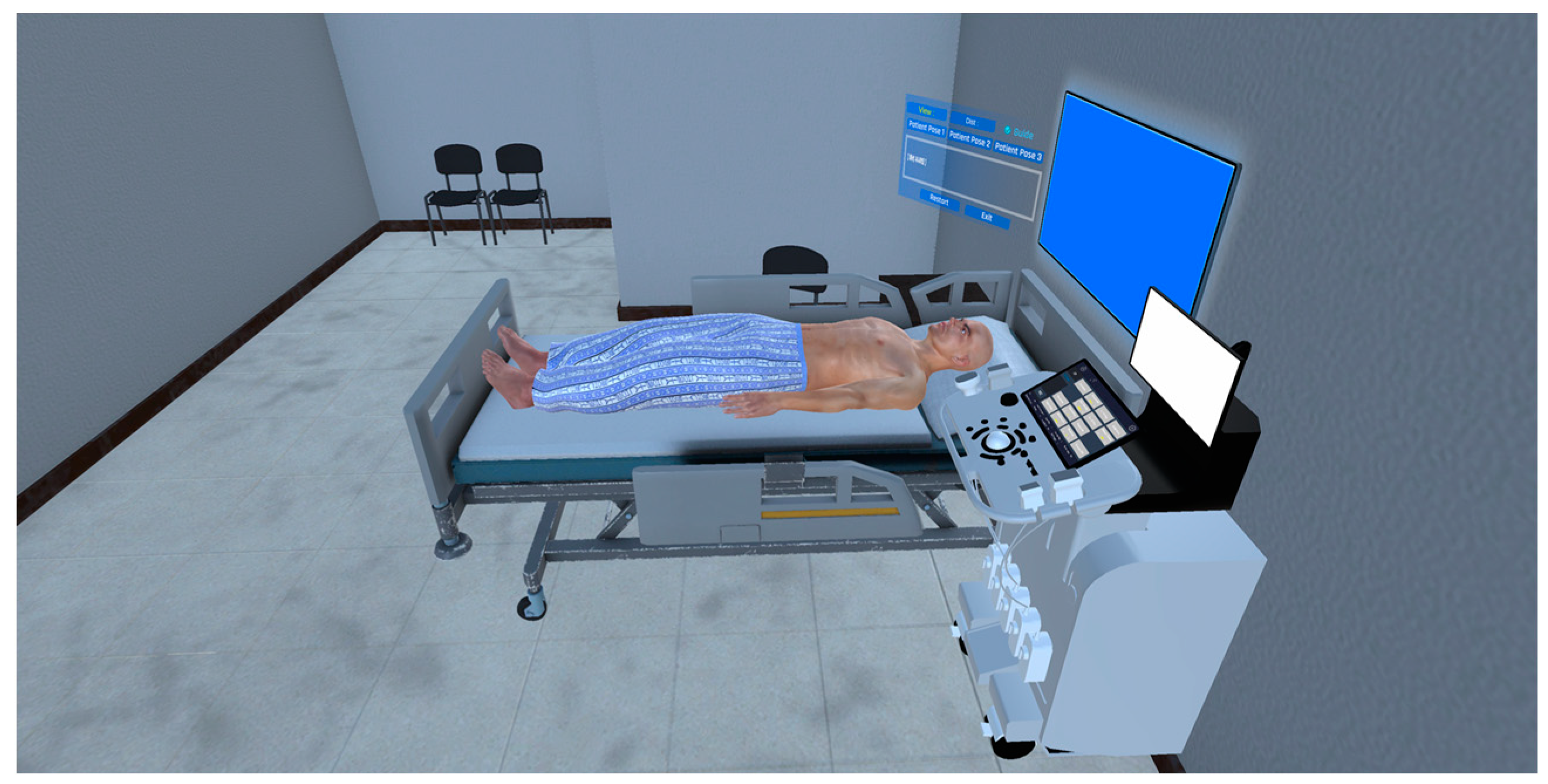

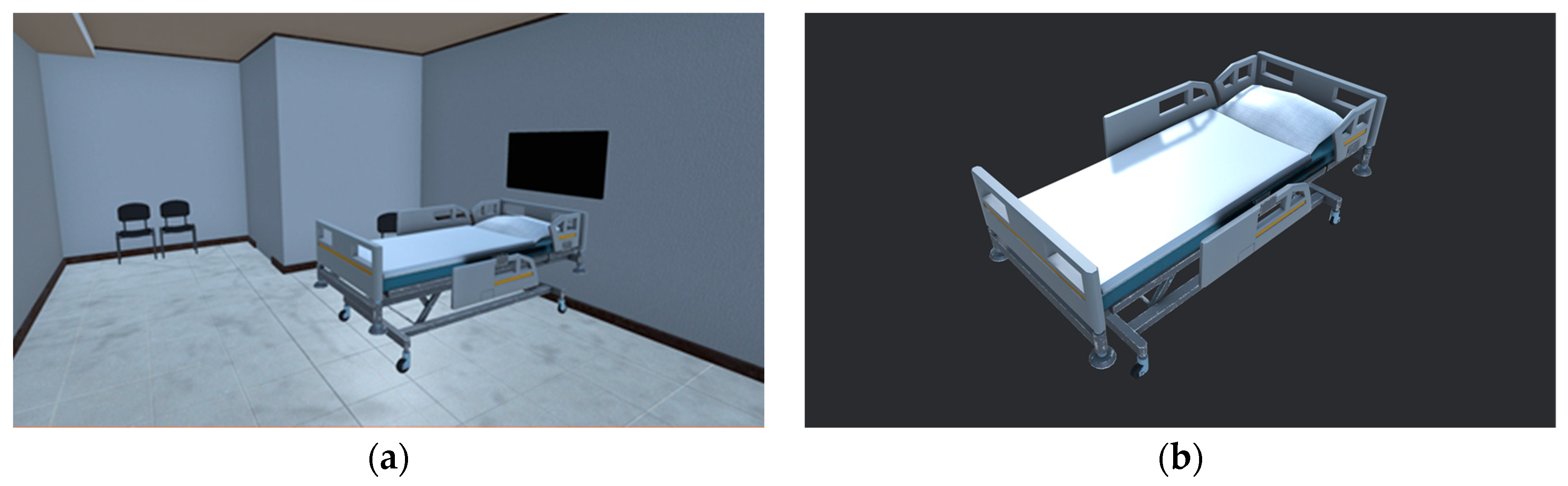

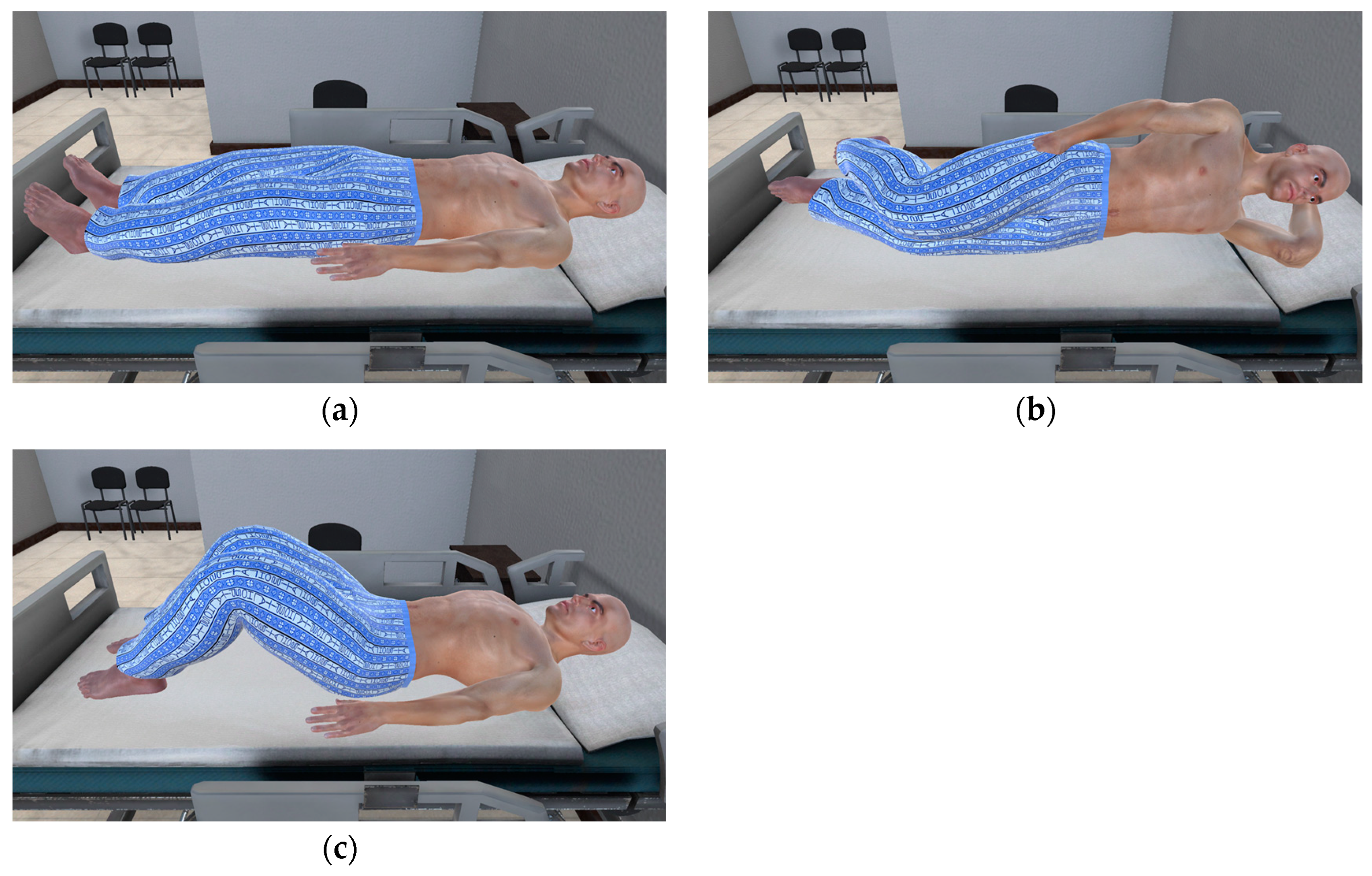

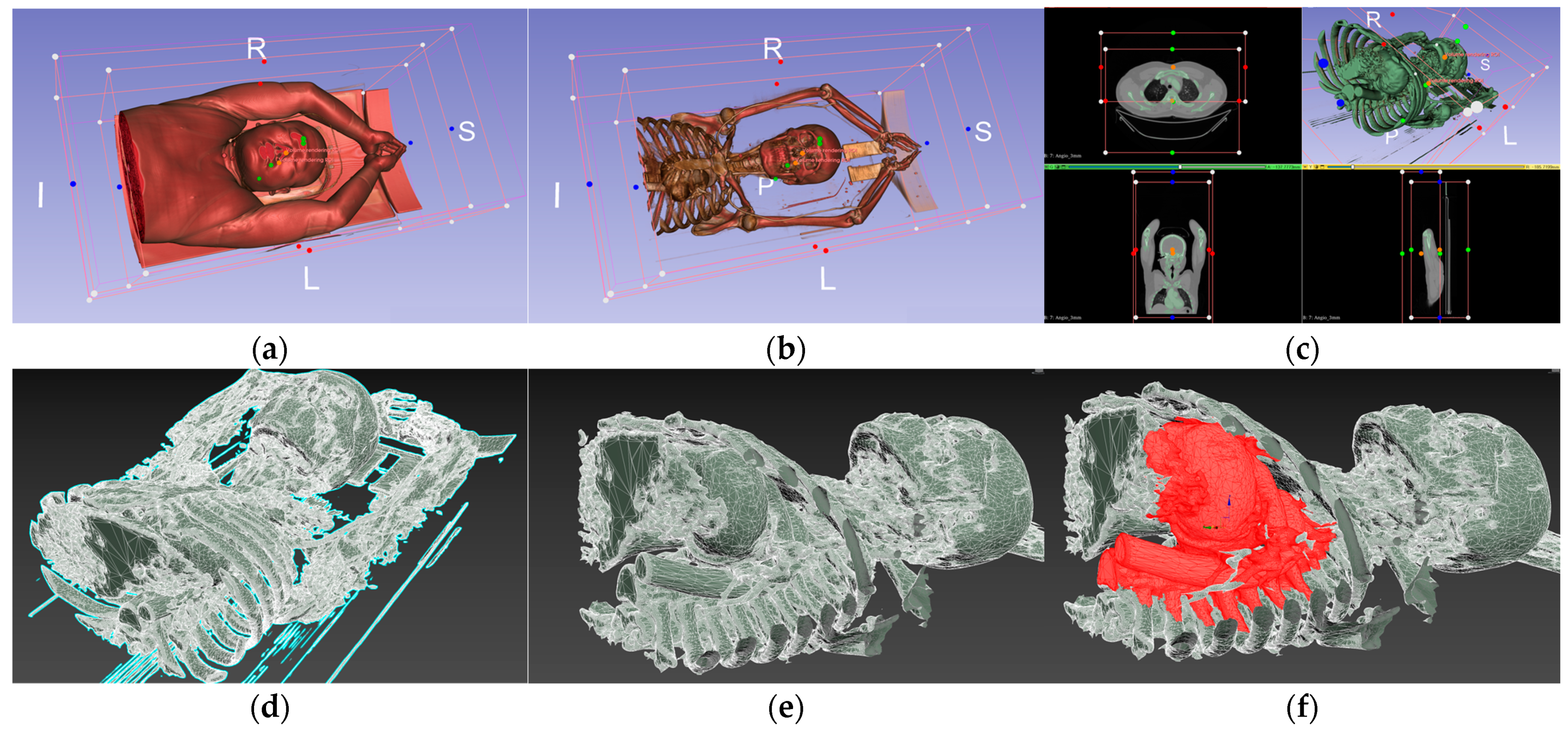

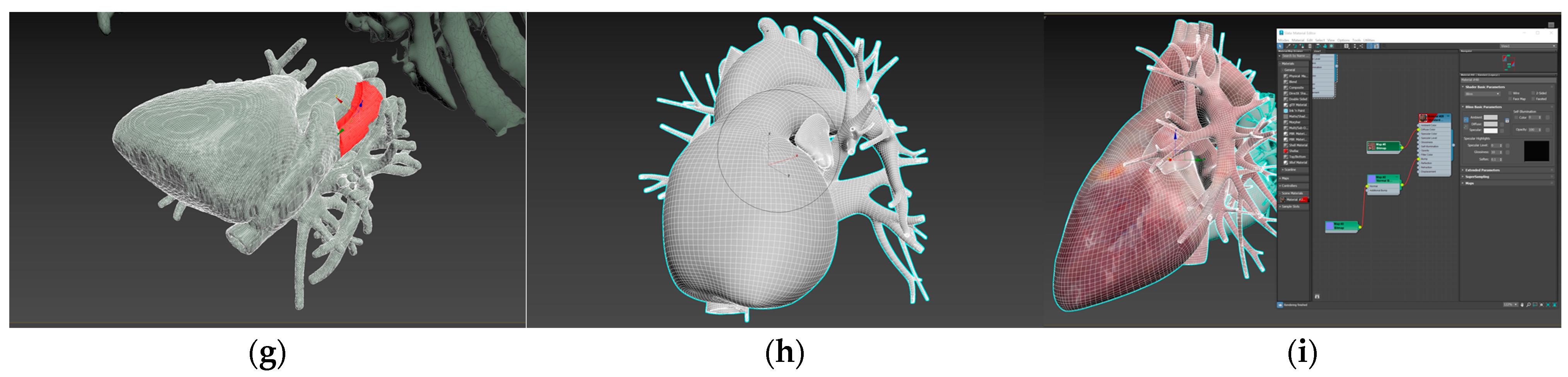

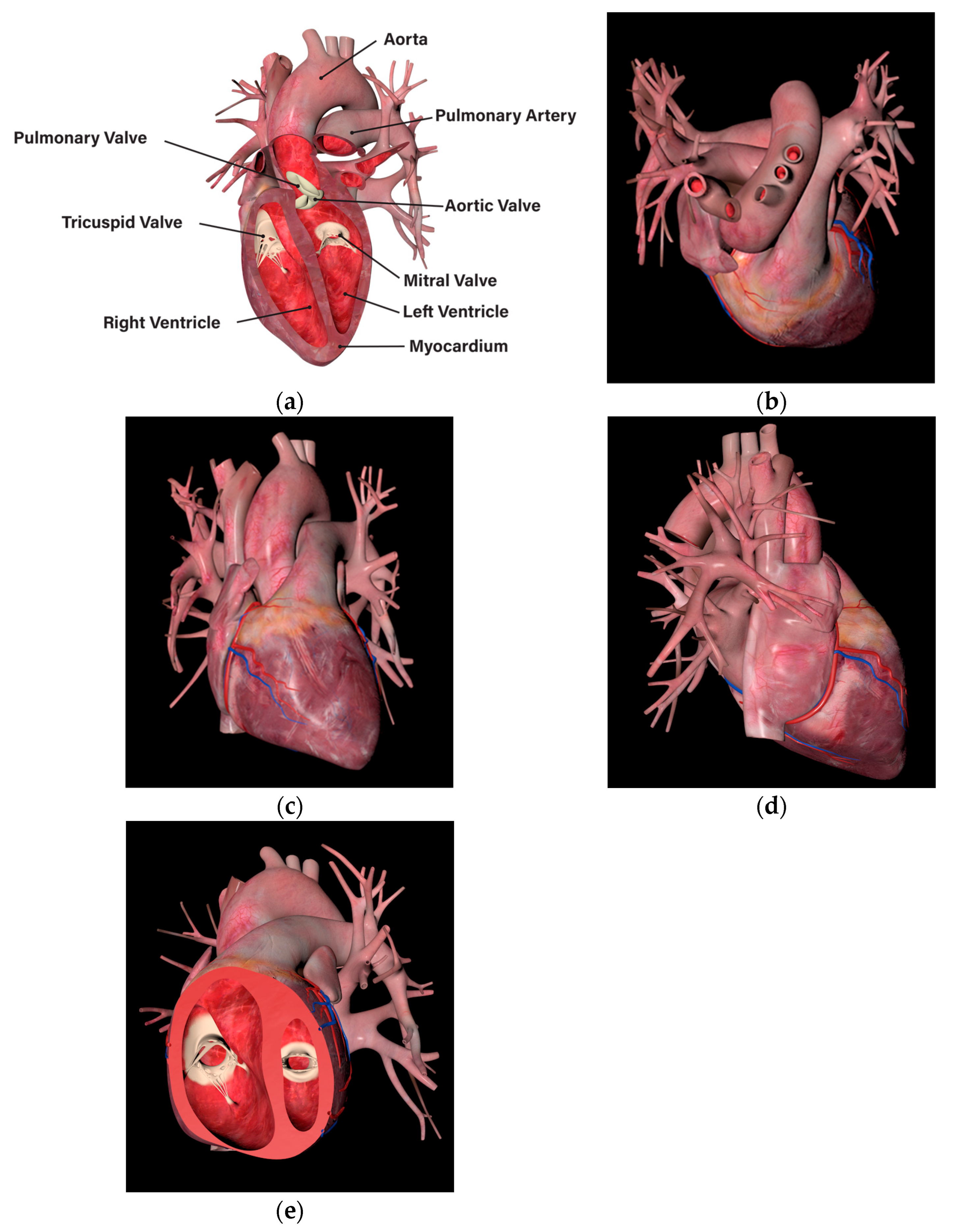

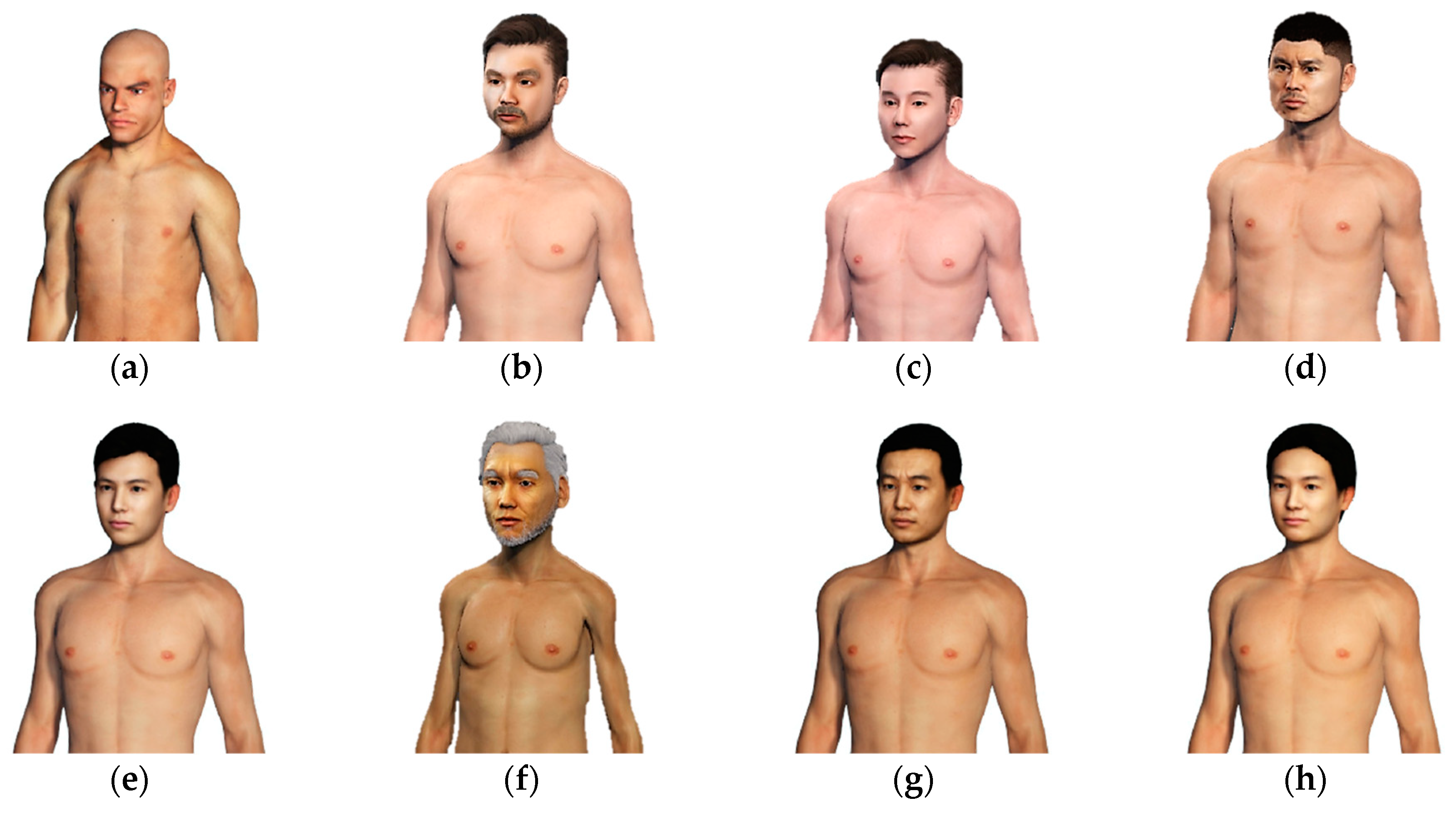

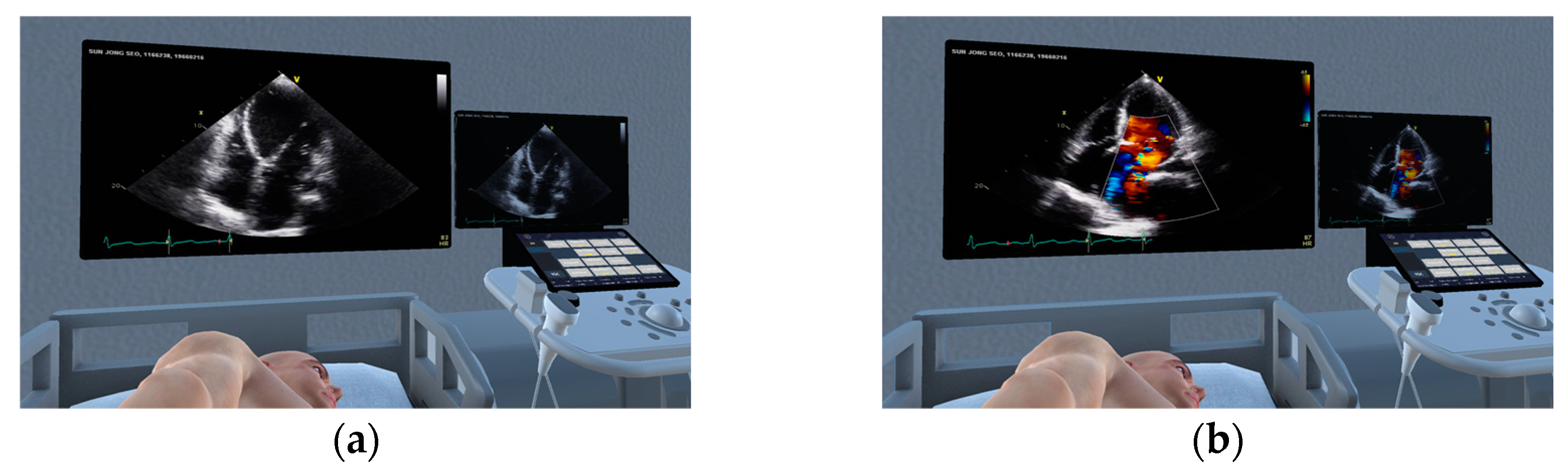

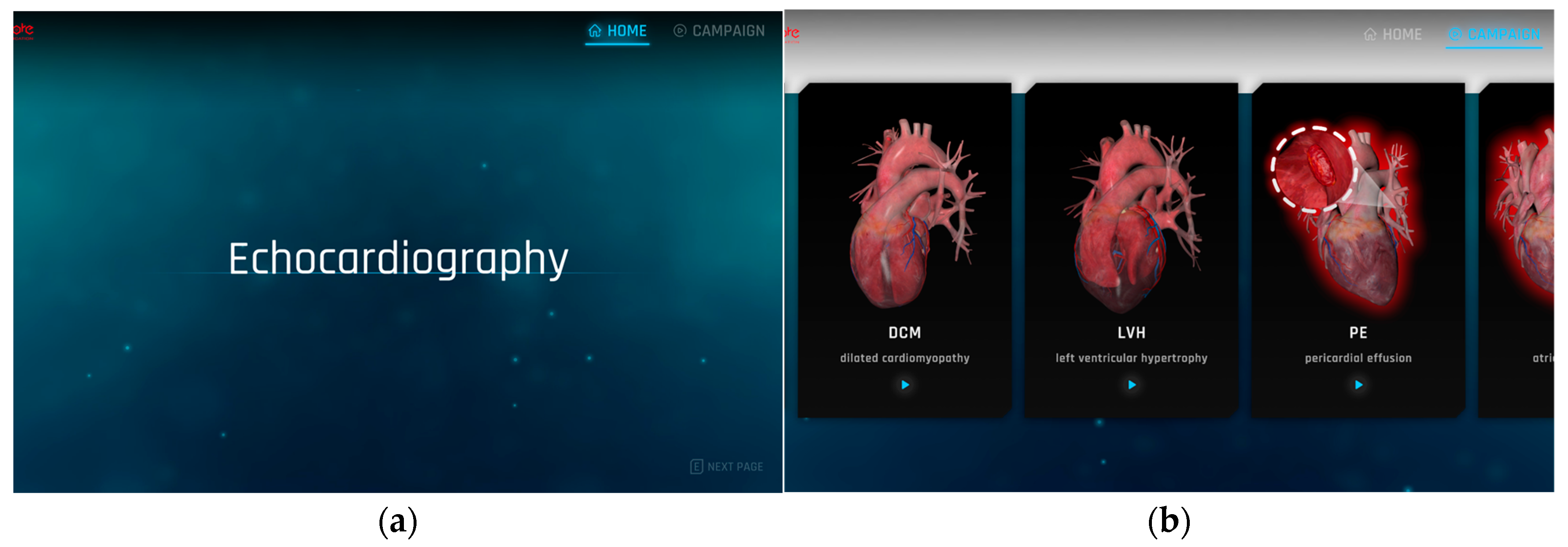

2.1. Production of Virtual Space for Echocardiography Examination Practice

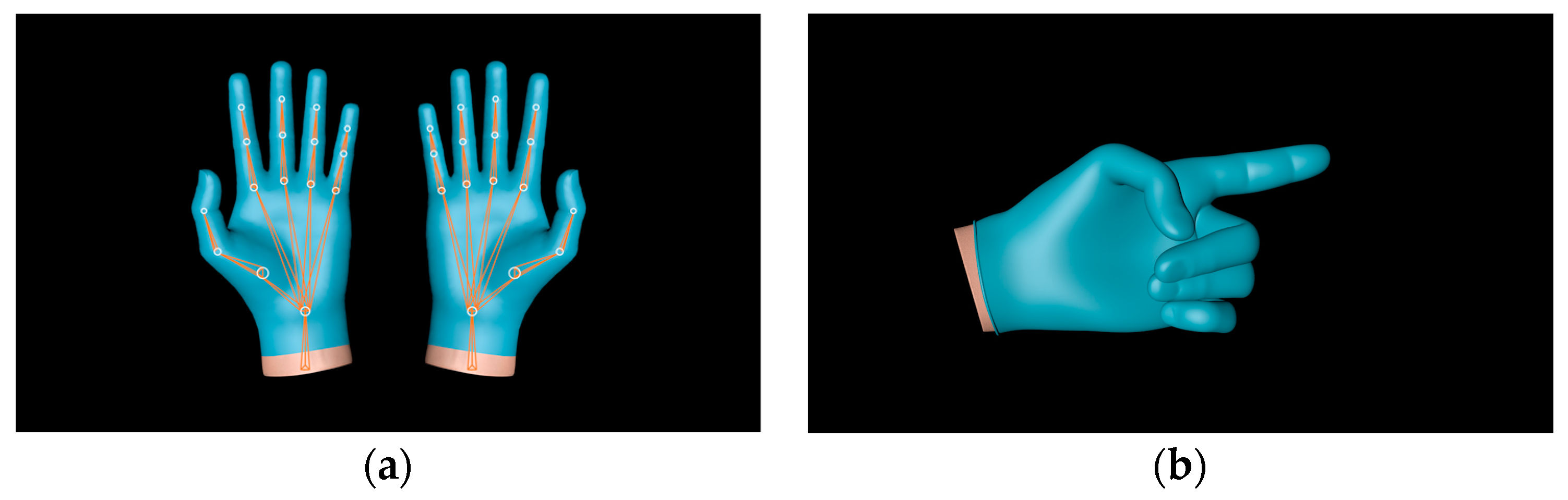

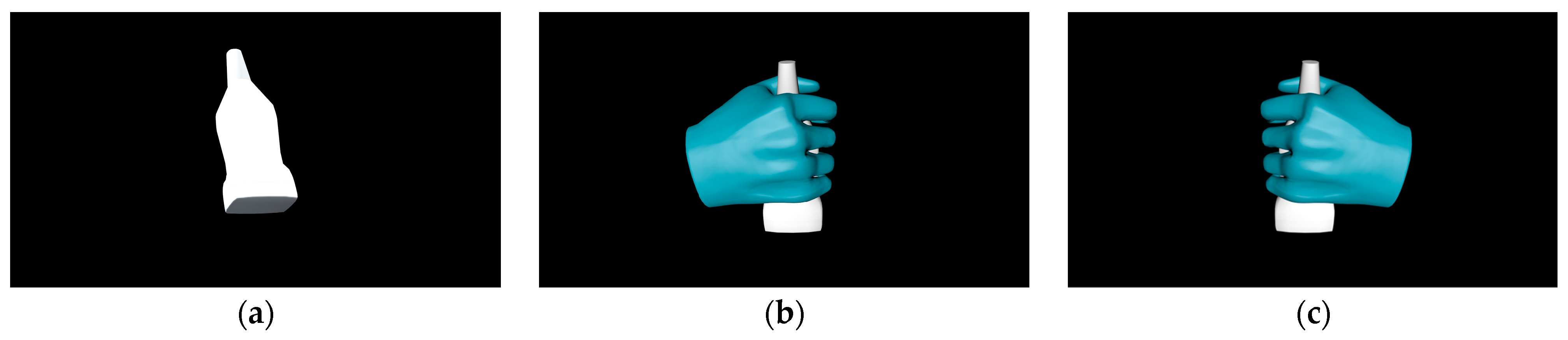

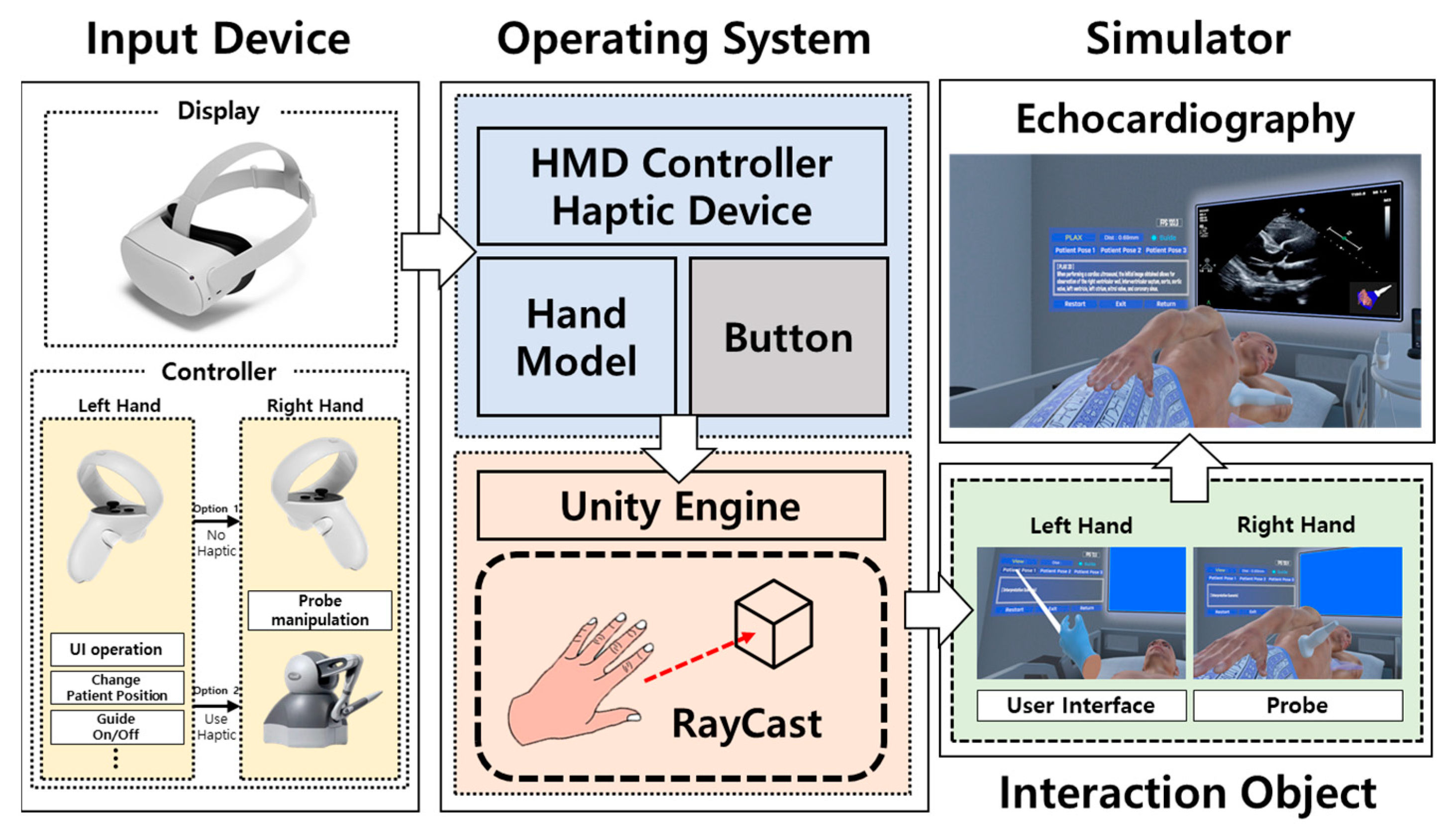

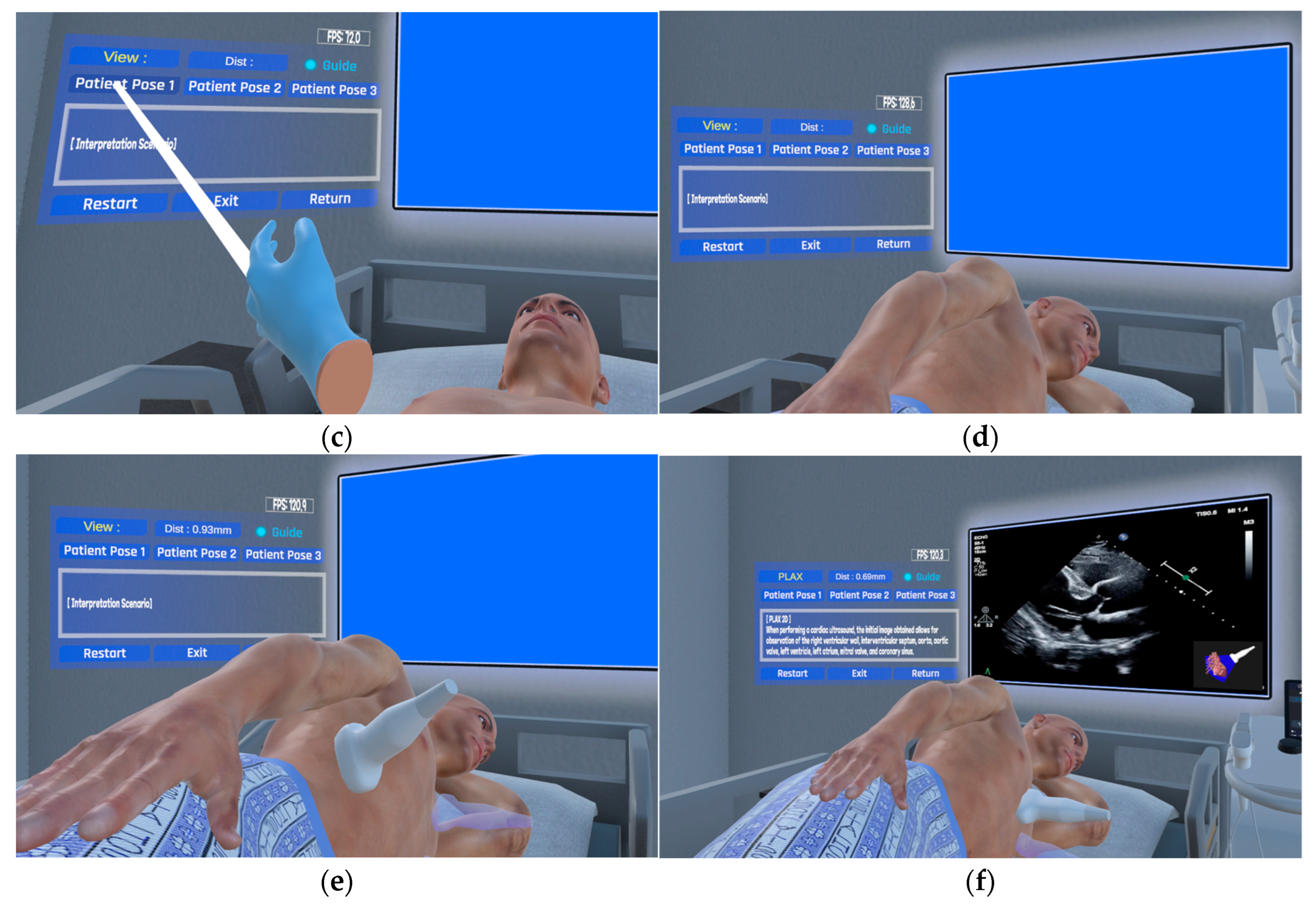

2.2. Approaches to Echocardiography Examination Interaction

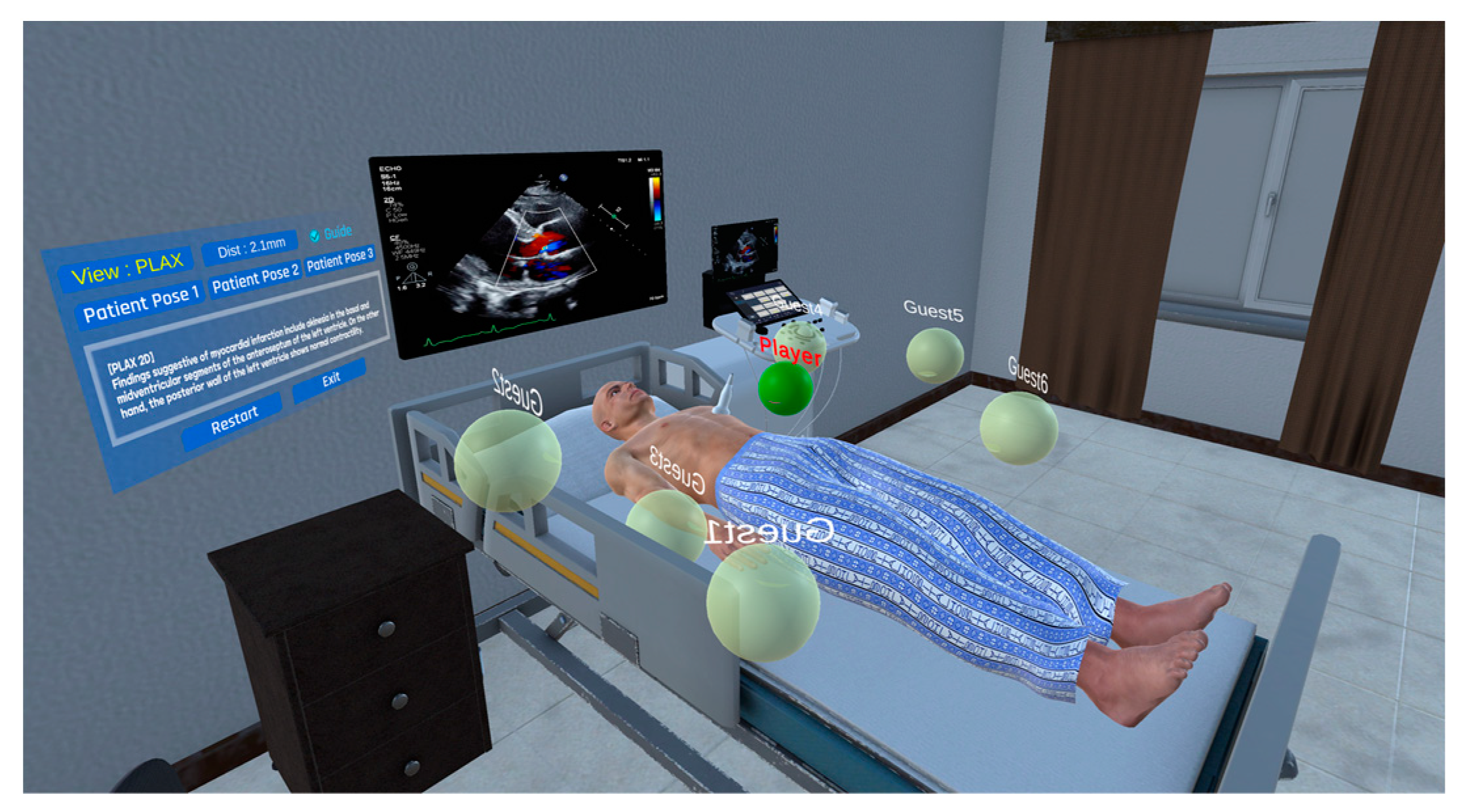

2.3. Multi-User Connection Module

2.4. Implementation Method of the Echocardiography Examination Simulator

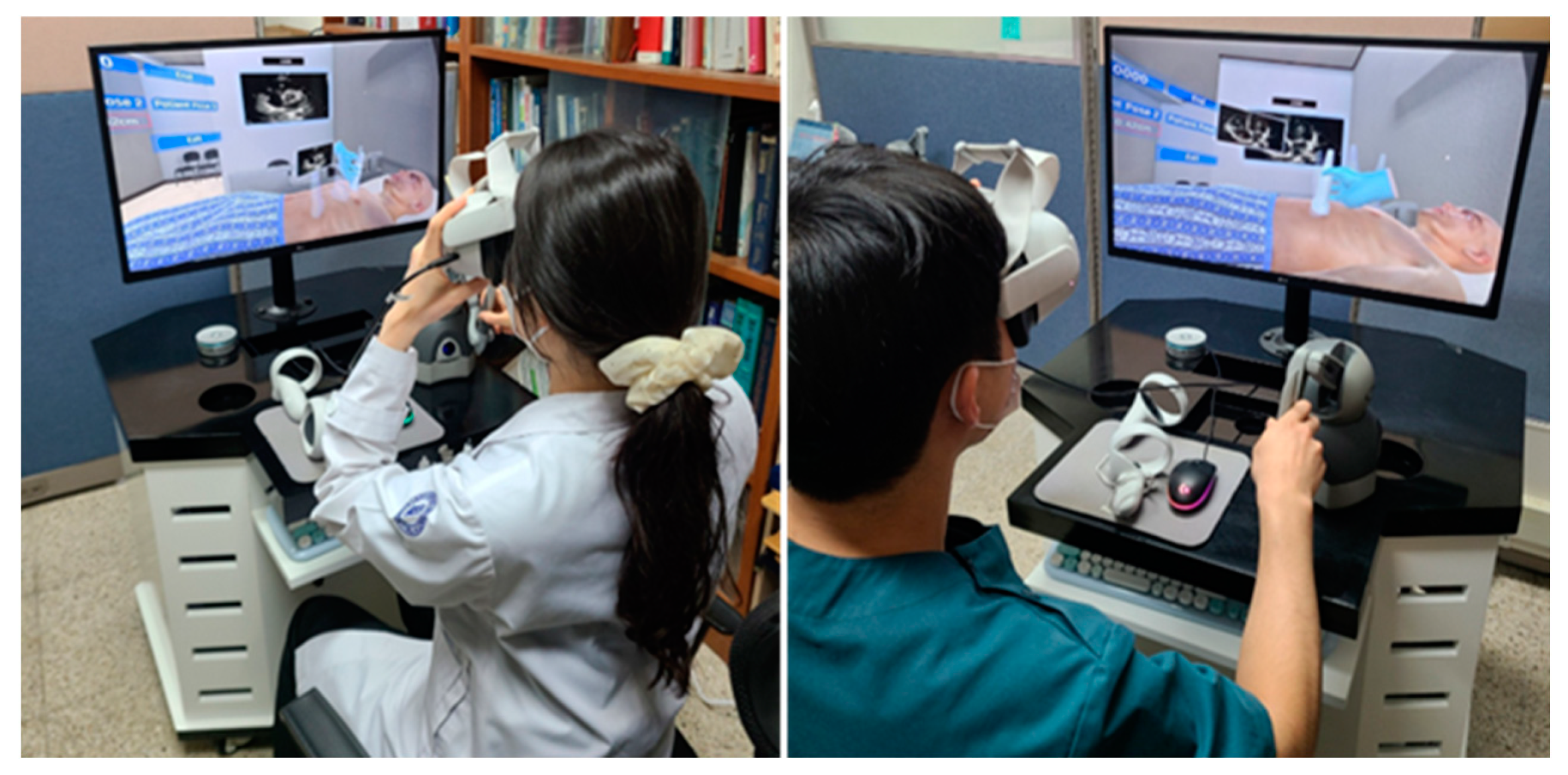

2.5. Performance Evaluation of the Echocardiography Examination Simulator

2.6. Validation of the Echocardiography Examination Simulator

3. Results

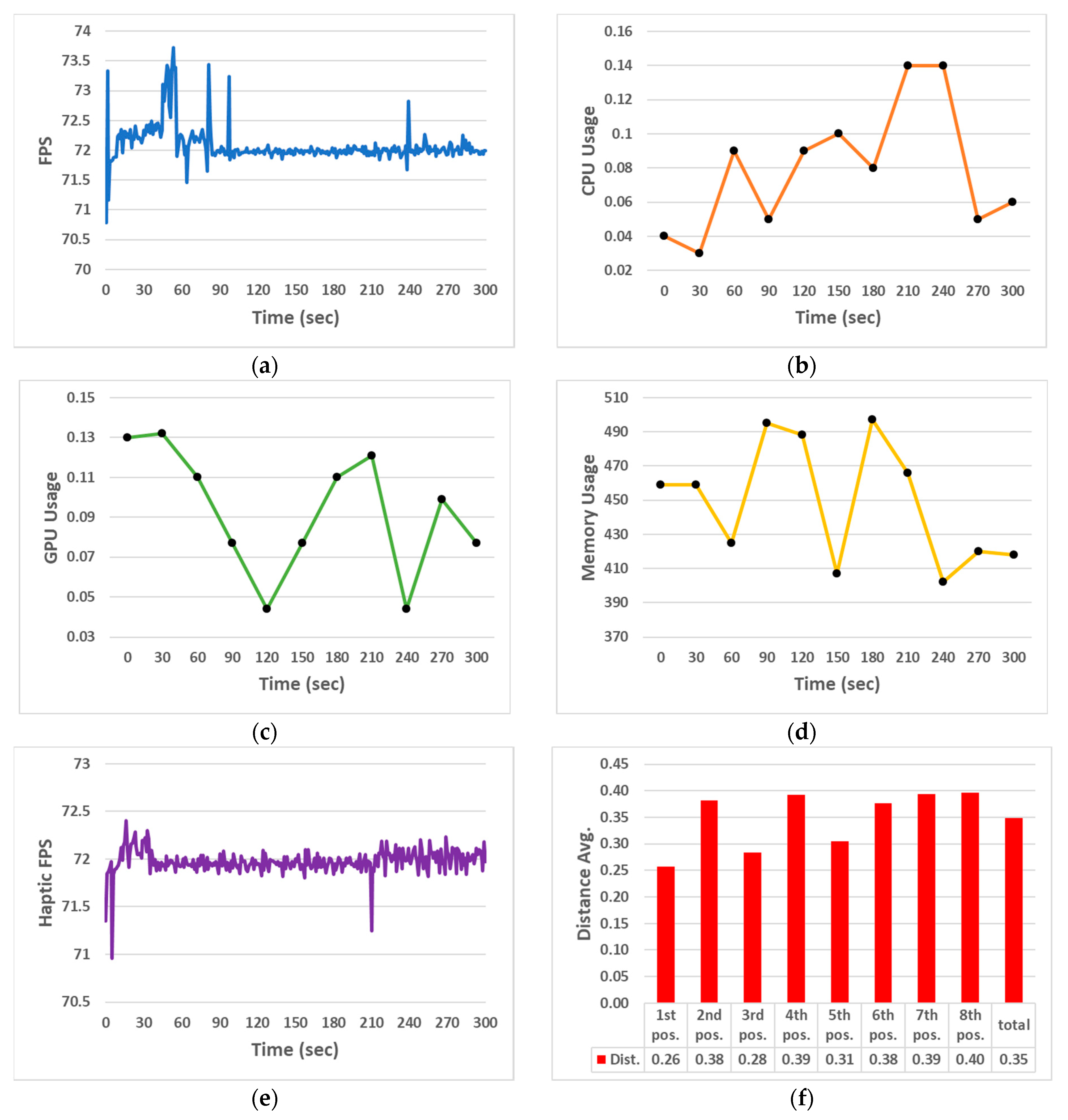

3.1. Performance Evaluation Results of the Echocardiography Examination Simulator

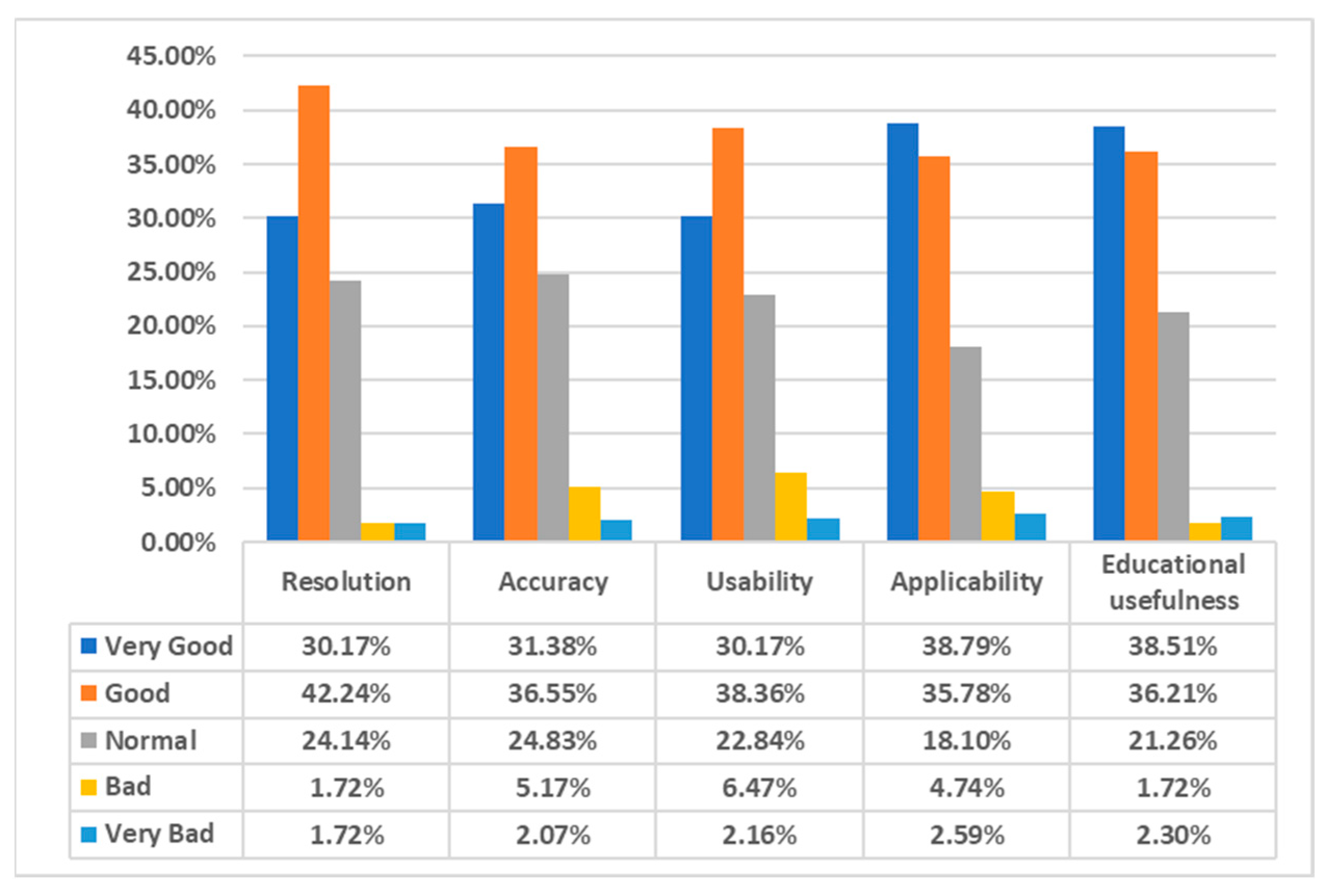

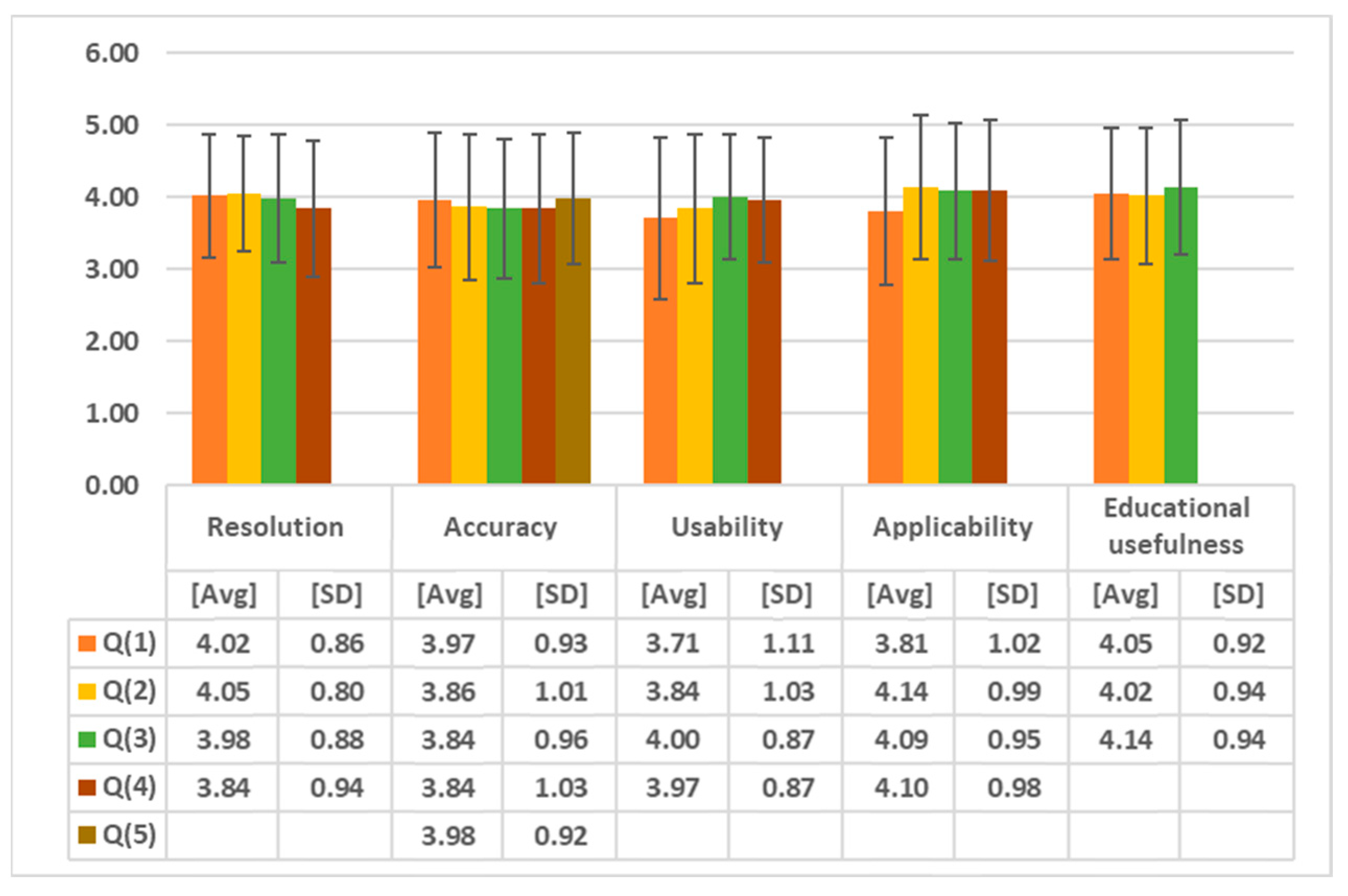

3.2. Validation Results of the Echocardiography Examination Simulator

3.3. Kappa Statistic Results for the Validation Findings

4. Discussion

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest


| Environment Model | Interaction Model | Blood Sampling Model |
|---|---|---|
| Ward | 3D hand model | 3D patient model |
| Medical bed | Probe | 3D cardiac model |
| Echocardiography equipment | | |

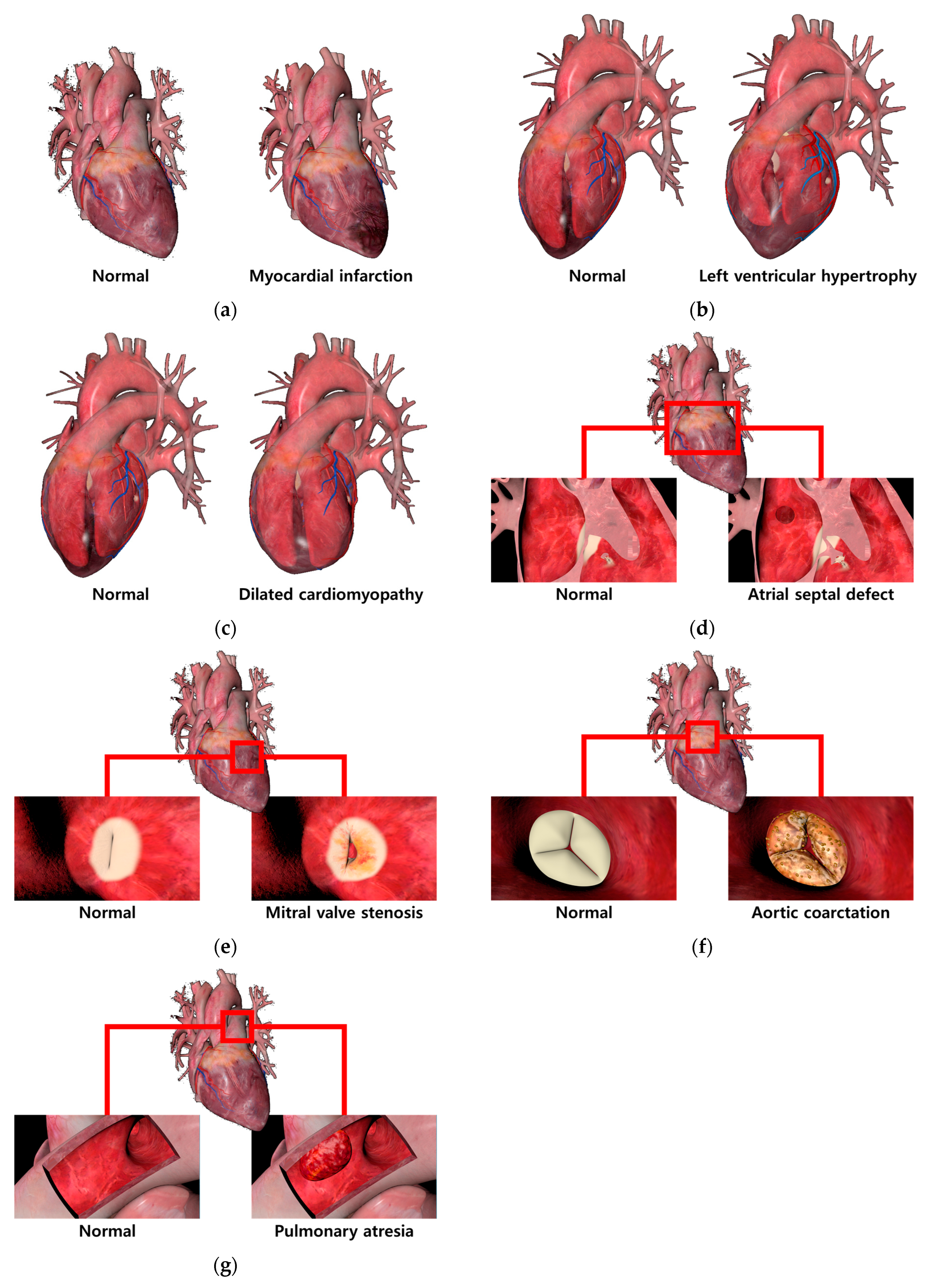

| Abnormality | Generation Method Based on Abnormal Cardiac Feature |
|---|---|
| Myocardial infarction | Feature: The presence of necrotic areas due to a lack of blood supply to the cardiac muscle. Method: To represent the necrotic areas, the diffuse map and normal map of the normal cardiac model were modified and applied. |
| Left ventricular hypertrophy | Feature: An increase in the thickness of the left ventricular wall, resulting in a reduced ventricle size. Method: The size of the left ventricle was physically reduced using sculpting, a method of manipulating the shape of the 3D model and adding details. |
| Dilated cardiomyopathy | Feature: A decrease in the thickness of the left ventricular wall, resulting in an enlarged ventricle size. Method: The size of the left ventricle was physically enlarged using sculpting, a method of manipulating the shape of the 3D model and adding details. |
| Atrial septal defect | Feature: The creation of a perforation in the septum between the left atrium and right atrium. Method: The perforation was represented using the difference function of the Boolean technique, and the created perforation was smoothly rendered using smoothing. |
| Mitral valve stenosis | Feature: Abnormal closure of the mitral valve due to bacterial infection in the mitral valve. Method: A modified diffuse map and a normal map were used to represent bacterial infection in the mitral valve. Abnormal closure movements of the mitral valve were depicted using keyframe interpolation. |
| Aortic coarctation | Feature: Abnormal closure of the aortic valve due to bacterial infection in the aortic valve. Method: A modified diffuse map and a normal map were used to represent bacterial infection in the aortic valve. Abnormal closure movements of the aortic valve were depicted using keyframe interpolation. |
| Pulmonary atresia | Feature: The presence of a blood clot in the artery connecting the cardiac muscle to the lungs, resulting in the blockage of the pulmonary artery. Method: A blood clot was additionally modeled and placed in the pulmonary artery. |
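The valve rows above rely on keyframe interpolation to animate abnormal closure. The following is a minimal sketch of that general idea, assuming simple linear interpolation between keyframes; the source does not state which interpolation curve or authoring tool setting was used. The function name `interpolate_keyframes` and the leaflet angle values are hypothetical and chosen only for illustration.

```python
from bisect import bisect_right


def interpolate_keyframes(keyframes, t):
    """Linearly interpolate a scalar value between (time, value) keyframes.

    `keyframes` must be sorted by time. Times outside the keyframe range
    are clamped to the first or last keyframe value.
    """
    times = [time for time, _ in keyframes]
    if t <= times[0]:
        return keyframes[0][1]
    if t >= times[-1]:
        return keyframes[-1][1]
    # Index of the first keyframe whose time is strictly greater than t.
    i = bisect_right(times, t)
    (t0, v0), (t1, v1) = keyframes[i - 1], keyframes[i]
    alpha = (t - t0) / (t1 - t0)
    return v0 + alpha * (v1 - v0)


# Hypothetical leaflet opening angles (degrees) over one cycle (seconds).
# These numbers are illustrative assumptions, not values from the paper.
normal_valve = [(0.0, 0.0), (0.2, 60.0), (0.6, 60.0), (0.8, 0.0)]
restricted_valve = [(0.0, 0.0), (0.2, 25.0), (0.6, 25.0), (0.8, 0.0)]

if __name__ == "__main__":
    for t in (0.0, 0.1, 0.4, 0.7):
        print(t, interpolate_keyframes(normal_valve, t),
              interpolate_keyframes(restricted_valve, t))
```

Changing only the keyframe values, as in `restricted_valve`, is enough to make the same animation rig depict a different motion pattern, which is one plausible reason to use keyframes for the abnormal cases.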

| View | View Area Based on Probe Position and Direction |
|---|---|
| PLAX | Probe: Place the probe in the left parasternal region of the chest, with the orientation marker facing the right shoulder of the patient’s 3D model. View area: The aortic valve, left atrium and ventricle, and aortic arch are observed. |
| PSAX MV | Probe: Place the probe in the left parasternal region of the chest, with the orientation marker facing the left shoulder of the patient’s 3D model. View area: The lower left part of the cardiac muscle, specifically the mitral valve and surrounding structures, is visualized. |
| PSAX AV | Probe: Same position and orientation as PSAX MV, with the probe contact surface tilted downward. View area: Anatomical structures related to the aortic valve, specifically the open state of the aortic valve, are observed. |
| PSAX PM | Probe: Same position and orientation as PSAX MV, with the probe contact surface tilted upward. View area: The papillary muscles, which are involved in the contraction of the ventricles, are observed. |
| PSAX Apical | Probe: Move down approximately one to two intercostal spaces from the PSAX MV position, with the orientation marker facing below the right shoulder of the patient’s 3D model. View area: The structures near the top of the ventricles and around the chordae tendineae (chordae tendons) are observed. |
| A4C | Probe: Positioned in the fifth to sixth intercostal space in the lower left chest area, with the orientation marker facing the upper arm of the patient’s 3D model. View area: The right and left atria, as well as the upper and lower ventricles, are simultaneously visualized. |
| A3C | Probe: Same contact position as A4C, with the orientation marker facing the right shoulder of the patient’s 3D model. View area: The left atrium, left upper ventricle, and left lower ventricle are observed. |
| A2C | Probe: Same contact position as A4C, with the orientation marker facing the head of the patient’s 3D model. View area: The left atrium and left upper ventricle are predominantly observed. |

| Hardware | Specification |
|---|---|
| CPU | Intel i9-12900K, 16 cores, 24 threads, 5.20 GHz |
| GPU | NVIDIA GeForce RTX 3080 12 GB |
| Memory | Samsung DDR4-3200 (16 GB), total 32 GB |
| Storage | Samsung 870 EVO (2 TB), total 4 TB |
| OS | Windows 10 Education |
| Display | GBLED GX2802, UHD 4K, 27 inch, 240 Hz, 16.7 M colors |

| Item | Evaluation |
|---|---|
| FPS | Method: With two PCs connected simultaneously, the VR simulation was run for 5 min, recording FPS every second and then calculating the average. |
| CPU usage | Method: With two PCs connected simultaneously, the VR simulation was run for 5 min, recording CPU usage every 30 s and then calculating the average. |
| GPU usage | Method: With two PCs connected simultaneously, the VR simulation was run for 5 min, recording GPU usage every 30 s and then calculating the average. |
| Memory usage | Method: With two PCs connected simultaneously, the VR simulation was run for 5 min, recording memory usage every 30 s and then calculating the average. |
| Haptic frame | Method: With two PCs connected simultaneously, haptic feedback was used for 5 min, recording haptic frames per second (FPS) every second and then calculating the average. |
| Contact point accuracy | Method: The average distance error was calculated between the eight probe positions designated by a specialist on the 3D patient model and the virtual probe contact positions at which the echocardiography examination image is displayed (based on 10 trials each). |
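The evaluation methods above reduce to two computations: averaging periodically sampled metrics and averaging the Euclidean distance between designated and measured probe contact positions. The sketch below illustrates both under stated assumptions: the function names are hypothetical, positions are assumed to be 3D coordinates in millimetres, and the sample values are made up for illustration rather than taken from the study.

```python
import math
from statistics import mean


def expected_sample_count(duration_s, interval_s):
    """Number of samples collected when recording once per interval."""
    return duration_s // interval_s


def average_metric(samples):
    """Arithmetic mean of a sampled metric (FPS, CPU %, GPU %, memory)."""
    return mean(samples)


def mean_contact_error(targets, contacts):
    """Mean Euclidean distance between paired target and contact points.

    `targets` are the specialist-designated probe positions and `contacts`
    are the virtual probe contact positions, both as (x, y, z) tuples.
    """
    if len(targets) != len(contacts):
        raise ValueError("targets and contacts must be paired one-to-one")
    return mean(math.dist(t, c) for t, c in zip(targets, contacts))


if __name__ == "__main__":
    # A 5 min run sampled every second and every 30 s, as in the table.
    print(expected_sample_count(300, 1), expected_sample_count(300, 30))

    # Hypothetical positions in millimetres, for illustration only.
    targets = [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0)]
    contacts = [(0.0, 0.0, 0.3), (1.0, 0.4, 0.0)]
    print(mean_contact_error(targets, contacts))
```

With the sampling intervals in the table, a 5 min run yields 300 samples for the per-second metrics and 10 samples for the metrics recorded every 30 s, so the latter averages rest on far fewer observations.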

| Item | No. | Question |
|---|---|---|
| Resolution | Q (1) | Is the medical imaging displayed on the simulator clear? |
| | Q (2) | Compared to the images in the book, is the medical image displayed on the simulator clear? |
| | Q (3) | Is the medical image clear even during actual motion? |
| | Q (4) | Is the previous medical image well maintained when the phase changes? |
| Accuracy | Q (1) | Has this simulator implemented the human 3D model well? |
| | Q (2) | Do the viewpoint and the position of the hand match? |
| | Q (3) | Does the movement of the equipment match the performer’s movements? |
| | Q (4) | Does the movement of the equipment reflect subtle movements? |
| | Q (5) | Does it move to the next step only after the previous step has been clearly carried out? |
| Usability | Q (1) | Do you think this simulator has tactile realism? |
| | Q (2) | Do you think this simulator has visual realism? |
| | Q (3) | Do you think the operation of this simulator is user-friendly? |
| | Q (4) | Did the operation of this simulator work according to the performer’s will? |
| Applicability | Q (1) | Do you think this simulator is professional? |
| | Q (2) | Can this simulator be utilized for actual practice education? |
| | Q (3) | Can this simulator be used for patient explanation? |
| | Q (4) | Would you be willing to use a simulator produced based on this simulator? |
| Educational usefulness | Q (1) | Compared to the book, does this simulator help in understanding the actual skill? |
| | Q (2) | Can the stages of this simulator be applied to the actual echocardiography process? |
| | Q (3) | After learning with this simulator, can you explain the skill to other medical personnel? |

| Item | Average | Item | Average |
|---|---|---|---|
| FPS | 72.08 fps | Memory usage | 447.83 MB |
| CPU usage | 6.89% | Haptic frame | 71.98 fps |
| GPU usage | 8.5% | Contact point accuracy | 0.39 mm |

| Item | Kappa | Asymptotic Standard Error | Z | Lower 95% Asymptotic CI Bound | Upper 95% Asymptotic CI Bound |
|---|---|---|---|---|---|
| Resolution | 0.508 | 0.036 | 14.152 | 0.438 | 0.578 |
| Accuracy | 0.468 | 0.026 | 17.967 | 0.417 | 0.519 |
| Usability | 0.458 | 0.033 | 13.820 | 0.393 | 0.523 |
| Applicability | 0.547 | 0.034 | 15.968 | 0.480 | 0.615 |
| Educational usefulness | 0.540 | 0.051 | 10.666 | 0.441 | 0.639 |
| Total | 0.419 | 0.006 | 68.767 | 0.407 | 0.431 |
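The confidence bounds in the table are consistent with the usual normal-approximation interval, kappa ± 1.96 × asymptotic standard error. The sketch below recomputes the bounds from the reported kappa and standard error values and measures how far they deviate from the reported bounds. It assumes the bounds were obtained this way, which the table does not state explicitly; the function names are hypothetical, and small deviations are expected because the inputs are rounded to three decimals.

```python
Z_95 = 1.96  # two-sided 95% critical value of the standard normal

# (kappa, asymptotic standard error, reported lower, reported upper),
# copied from the table above.
reported = {
    "Resolution": (0.508, 0.036, 0.438, 0.578),
    "Accuracy": (0.468, 0.026, 0.417, 0.519),
    "Usability": (0.458, 0.033, 0.393, 0.523),
    "Applicability": (0.547, 0.034, 0.480, 0.615),
    "Educational usefulness": (0.540, 0.051, 0.441, 0.639),
    "Total": (0.419, 0.006, 0.407, 0.431),
}


def wald_interval(kappa, ase, z=Z_95):
    """Normal-approximation confidence interval for a kappa estimate."""
    return kappa - z * ase, kappa + z * ase


def max_bound_deviation(rows):
    """Largest absolute gap between recomputed and reported CI bounds."""
    worst = 0.0
    for kappa, ase, lower, upper in rows.values():
        calc_lower, calc_upper = wald_interval(kappa, ase)
        worst = max(worst, abs(calc_lower - lower), abs(calc_upper - upper))
    return worst


if __name__ == "__main__":
    for item, (kappa, ase, lower, upper) in reported.items():
        calc_lower, calc_upper = wald_interval(kappa, ase)
        print(f"{item}: [{calc_lower:.3f}, {calc_upper:.3f}] "
              f"vs reported [{lower:.3f}, {upper:.3f}]")
    print("max deviation:", max_bound_deviation(reported))
```

Every recomputed bound lands within 0.002 of the reported value, which supports reading the table's interval columns as kappa ± 1.96 standard errors.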


© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Kim, J.-S.; Kim, K.-W.; Kim, S.-R.; Woo, T.-G.; Chung, J.-W.; Yang, S.-W.; Moon, S.-Y. An Immersive Virtual Reality Simulator for Echocardiography Examination. Appl. Sci. 2024, 14, 1272. https://doi.org/10.3390/app14031272
