Co-Operatively Increasing Smoothing and Mapping Based on Switching Function

Abstract

1. Introduction

- A novel co-operative incremental smoothing and mapping (CI-SAM) algorithm is proposed.

- The algorithm achieves accurate swarm positioning without auxiliary algorithms such as target detection, reduces divergence of the swarm's position estimates, and avoids single-UAV positioning failures. It can be applied to formation flight of small-UAV swarms to provide accurate collaborative localization.

- The method is readily extensible and can be applied to mainstream VIO systems: adding a UWB ranging module to an existing VIO system is sufficient to obtain a collaborative localization system with improved performance.
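
One of the contributions listed above is avoiding single-UAV positioning failures; in Algorithm 1 (steps 14 and 15) a drone whose VIO output is NaN is recovered by multidimensional scaling (MDS), Eq. (27). That equation is not reproduced in this section, so the following is only a minimal Python/NumPy sketch assuming classical (Torgerson) MDS on a complete UWB distance matrix, followed by an orthogonal Procrustes fit to drones whose positions are still valid. The Procrustes step and the names `classical_mds` and `align_to_anchors` are illustrative assumptions, not the paper's implementation.

```python
import numpy as np


def classical_mds(D: np.ndarray, dim: int = 2) -> np.ndarray:
    """Relative coordinates from a complete pairwise distance matrix D (classical MDS).

    The result is correct only up to translation, rotation and reflection.
    """
    n = D.shape[0]
    J = np.eye(n) - np.ones((n, n)) / n          # centring matrix
    B = -0.5 * J @ (D ** 2) @ J                  # Gram matrix of the centred points
    w, V = np.linalg.eigh(B)                     # symmetric eigendecomposition
    top = np.argsort(w)[::-1][:dim]              # indices of the `dim` largest eigenvalues
    scale = np.sqrt(np.clip(w[top], 0.0, None))  # clip small negative values caused by noise
    return V[:, top] * scale


def align_to_anchors(X_rel: np.ndarray, anchors_world: np.ndarray, anchor_idx) -> np.ndarray:
    """Hypothetical recovery step: map the MDS configuration into the world frame with an
    orthogonal Procrustes fit to drones whose world positions are still known."""
    A = X_rel[anchor_idx]
    mu_a, mu_b = A.mean(axis=0), anchors_world.mean(axis=0)
    H = (A - mu_a).T @ (anchors_world - mu_b)    # cross-covariance of the centred point sets
    U, _, Vt = np.linalg.svd(H)
    R = Vt.T @ U.T                               # best orthogonal map (reflection allowed)
    return (X_rel - mu_a) @ R.T + mu_b


# Example: drone 3 has lost its VIO solution, but all UWB ranges are available.
truth = np.array([[0.0, 0.0], [4.0, 0.0], [0.0, 3.0], [2.5, 2.0]])
D = np.linalg.norm(truth[:, None, :] - truth[None, :, :], axis=-1)
X_rel = classical_mds(D, dim=2)
recovered = align_to_anchors(X_rel, truth[:3], [0, 1, 2])
print(recovered[3])   # close to [2.5, 2.0]
```

Reflections are allowed in the alignment because MDS recovers the geometry only up to rotation, translation and reflection. In 2-D at least three non-collinear anchors are needed; in 3-D, four non-coplanar ones.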

2. Related Work

2.1. Relative Positioning in GPS/BeiDou-Denied Environments

2.2. Topological Factor and Switching Function

3. Models and Methods

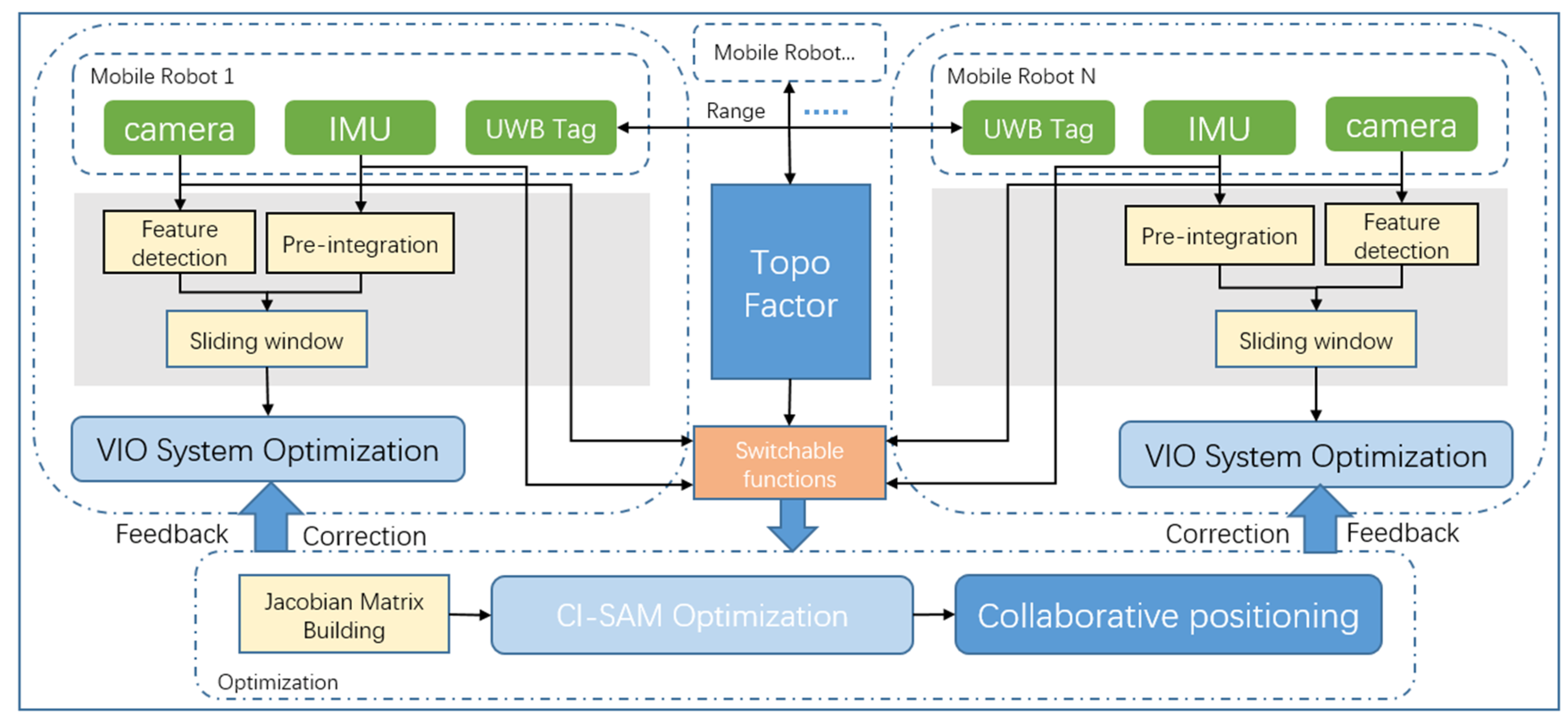

3.1. System Model

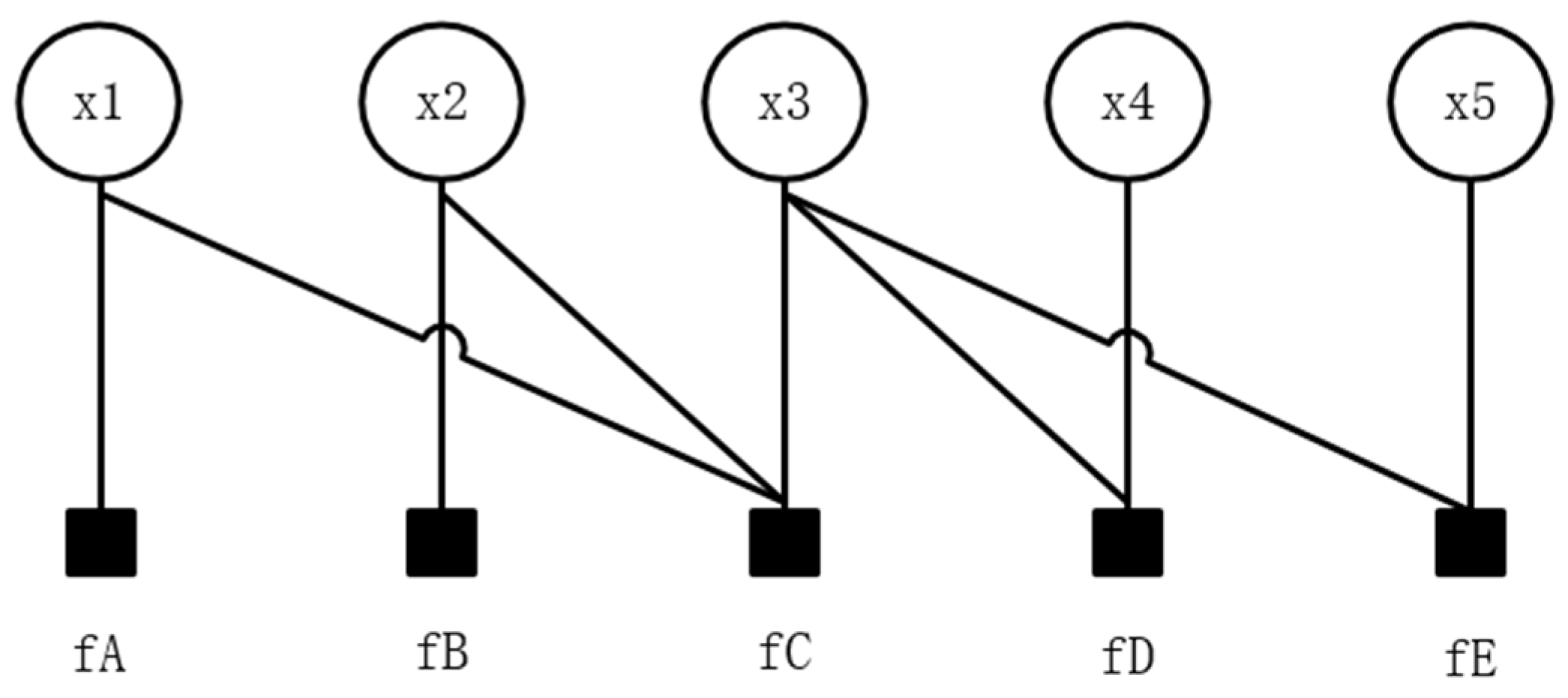

3.2. Factor Graph

3.3. Switching Functions

3.4. Collaborative Positioning

| Algorithm 1 Cooperative Increasing SAM Algorithm |
|---|
| Input: IMU_camera_data, UWB_data, GPS_priori |
| Output: optimized_nodes_values |
| 1: graph = create_graph() |
| 2: for each time_step do in parallel |
| 3: initialize_nodes_values(graph, GPS_priori) |
| 4: for each drone do |
| 5: VIO_node = create_node(drone, time_step) |
| 6: graph.add_node(VIO_node) |
| 7: Use Equation (19) to calculate the VIO system switching function |
| 8: graph.add_node(switching_node) |
| 9: end for |
| 10: get topology matrix D from UWB_data |
| 11: graph.add_node(UWB_node) |
| 12: Use Equation (21) to calculate the topological system switching function |
| 13: graph.add_node(switching_node) |
| 14: if single_VIO_position is NaN then |
| 15: recover position by MDS (Equation (27)) |
| 16: end if |
| 17: while (times < max_iterations && not converged) do |
| 18: optimize_function(graph) |
| 19: end while |
| 20: return optimized_nodes_values |
| 21: end for in parallel |
| 22: return |
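
The listing leaves the optimizer abstract. As a rough illustration of steps 3 to 19 for a single time step, the Python sketch below builds switchable VIO position priors and switchable UWB range factors for 2-D positions and minimizes them jointly. Several pieces are assumptions rather than the paper's method: the switch function psi(s) = s with a quadratic switch prior follows Sünderhauf and Protzel's switchable constraints [24] instead of Eqs. (19) and (21), which are not reproduced here; a batch Levenberg-Marquardt solve with a numerical Jacobian stands in for the incremental (iSAM-style) update; the parallel loop over time steps and the MDS branch are omitted; the switch-prior standard deviation is assumed; and all function names are hypothetical.

```python
import numpy as np

# Noise levels from the parameter table (variances -> standard deviations).
SIGMA_VIO = 1.0              # VIO system covariance 1 m^2
SIGMA_UWB = np.sqrt(0.2)     # UWB measurement covariance 0.2 m^2
SIGMA_SWITCH = 1.0           # switch-prior std: an assumption, not from the source


def residuals(theta, z_vio, ranges, n):
    """Whitened residuals of one time step; theta = [2-D positions, VIO switches, UWB switches]."""
    x = theta[:2 * n].reshape(n, 2)
    s_vio, s_uwb = theta[2 * n:3 * n], theta[3 * n:]
    r = []
    for i in range(n):
        # Switchable VIO prior with the linear switch function psi(s) = s.
        r.extend(s_vio[i] * (x[i] - z_vio[i]) / SIGMA_VIO)
        r.append((1.0 - s_vio[i]) / SIGMA_SWITCH)  # prior pulling the switch towards 1
    for k, (i, j, d) in enumerate(ranges):
        # Switchable UWB range factor between drones i and j.
        r.append(s_uwb[k] * (np.linalg.norm(x[i] - x[j]) - d) / SIGMA_UWB)
        r.append((1.0 - s_uwb[k]) / SIGMA_SWITCH)
    return np.array(r)


def numeric_jacobian(f, theta, eps=1e-6):
    """Central-difference Jacobian of f at theta."""
    J = np.zeros((f(theta).size, theta.size))
    for c in range(theta.size):
        d = np.zeros_like(theta)
        d[c] = eps
        J[:, c] = (f(theta + d) - f(theta - d)) / (2.0 * eps)
    return J


def optimize_time_step(z_vio, ranges, x_init, max_iterations=50, tol=1e-10):
    """Levenberg-Marquardt over positions and switches (switches clamped to [0, 1])."""
    n = len(z_vio)
    theta = np.concatenate([x_init.ravel(), np.ones(n), np.ones(len(ranges))])

    def f(t):
        return residuals(t, z_vio, ranges, n)

    cost, lam = 0.5 * np.sum(f(theta) ** 2), 1e-3
    for _ in range(max_iterations):              # while times < max_iterations and not converged
        r, J = f(theta), numeric_jacobian(f, theta)
        step = np.linalg.solve(J.T @ J + lam * np.eye(theta.size), -J.T @ r)
        cand = theta + step
        cand[2 * n:] = np.clip(cand[2 * n:], 0.0, 1.0)
        new_cost = 0.5 * np.sum(f(cand) ** 2)
        if new_cost < cost:                       # accept the step and relax damping
            done = cost - new_cost < tol
            theta, cost, lam = cand, new_cost, lam * 0.5
            if done:
                break
        else:                                     # reject the step and increase damping
            lam *= 10.0
    return theta[:2 * n].reshape(n, 2), theta[2 * n:3 * n], theta[3 * n:]


# Example: four drones; drone 3's VIO estimate has drifted by 5 m.
truth = np.array([[0.0, 0.0], [4.0, 0.0], [0.0, 3.0], [3.0, 3.0]])
z_vio = truth + np.array([[0.1, 0.0], [0.0, -0.1], [-0.1, 0.1], [5.0, 0.0]])
ranges = [(i, j, float(np.linalg.norm(truth[i] - truth[j])))
          for i in range(4) for j in range(i + 1, 4)]
x_init = truth + 0.2                              # hypothetical coarse prior standing in for GPS_priori
x, s_vio, s_uwb = optimize_time_step(z_vio, ranges, x_init)
print(np.round(s_vio, 2))   # the switch on drone 3's VIO prior should end up near 0
print(np.round(x, 2))
```

Because psi(s) multiplies the residual, an inconsistent constraint can be switched off at the price of the switch prior (1 - s)^2. With the unit switch-prior standard deviation assumed here, the stationary value of a switch whose whitened residual has magnitude e is 1/(1 + e^2), so the roughly 5 m VIO drift drives its switch to about 0.04 while the UWB ranges keep drone 3 near its true position.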

4. Simulations

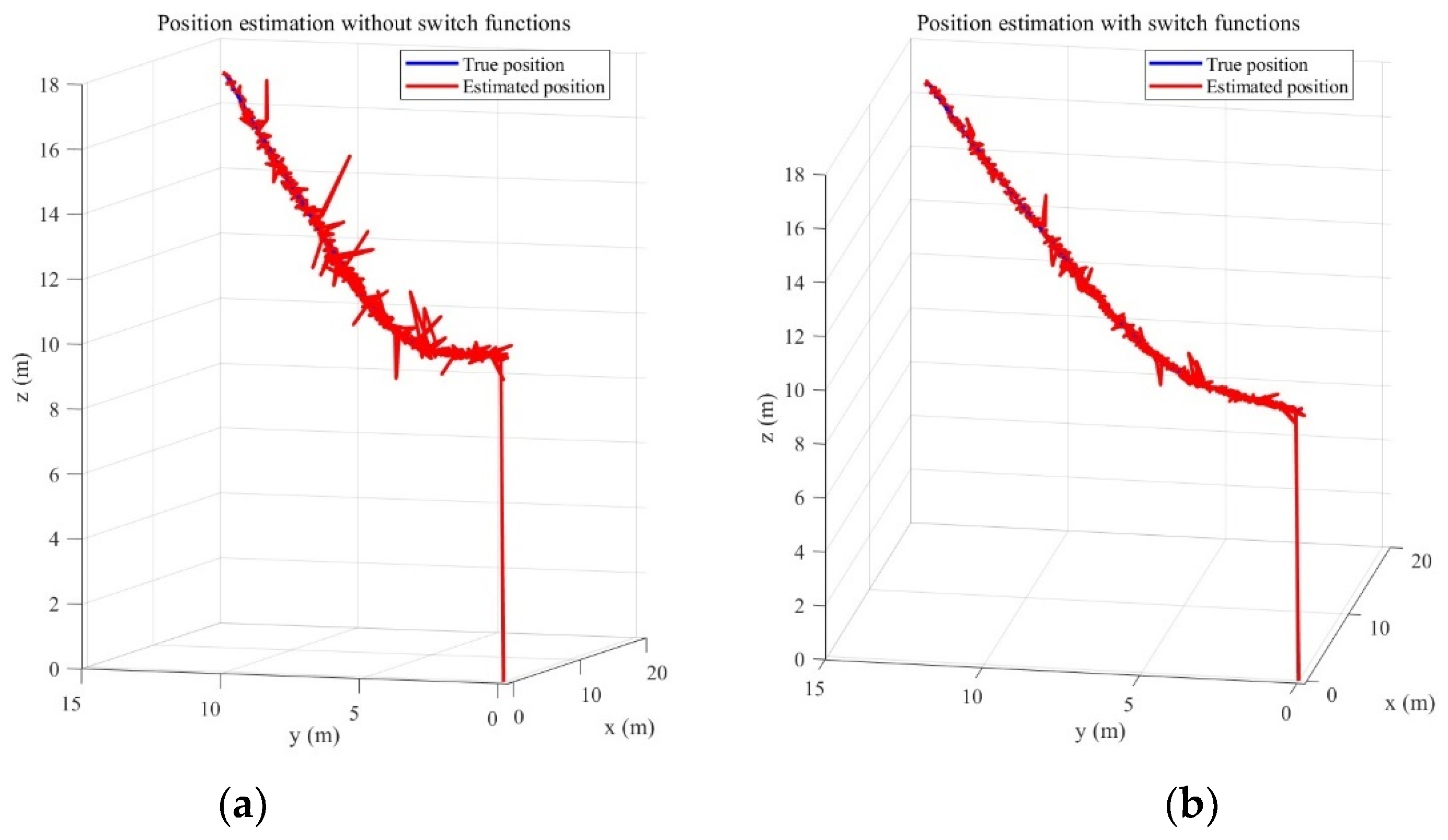

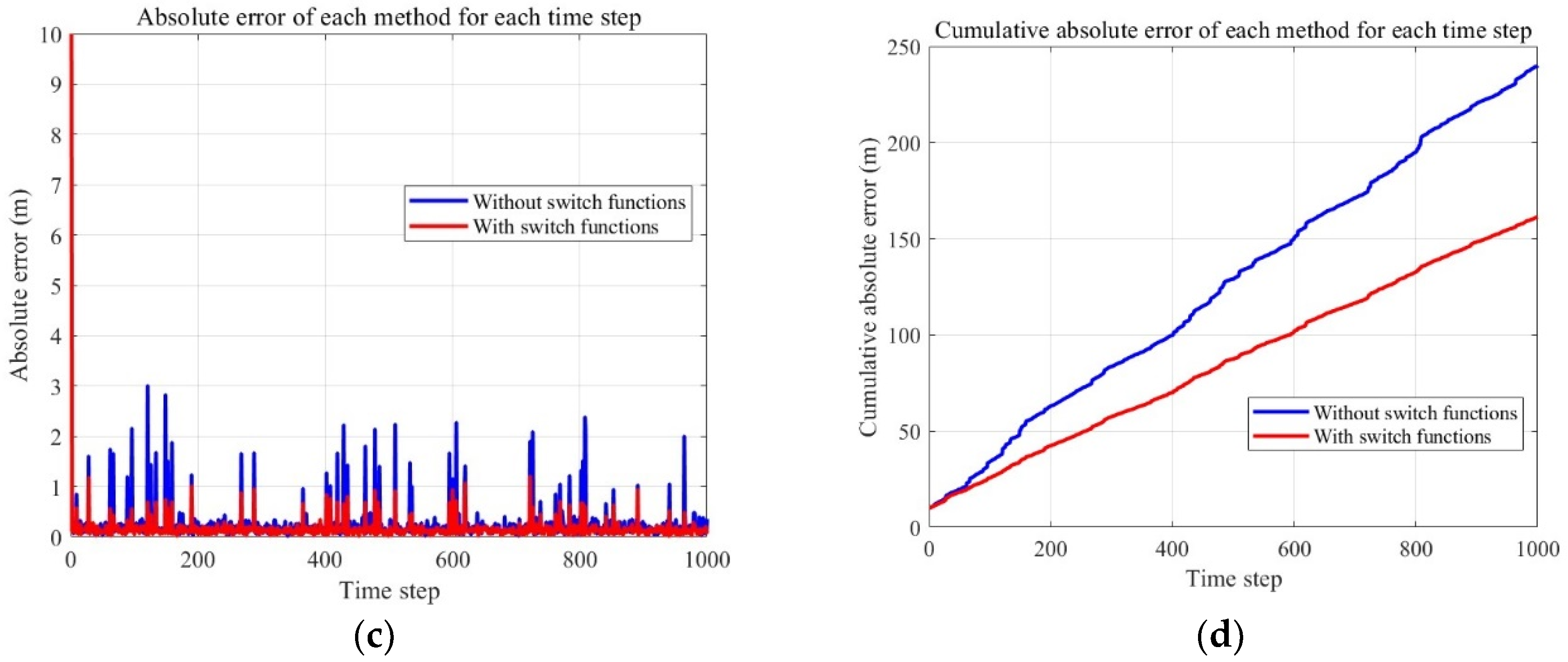

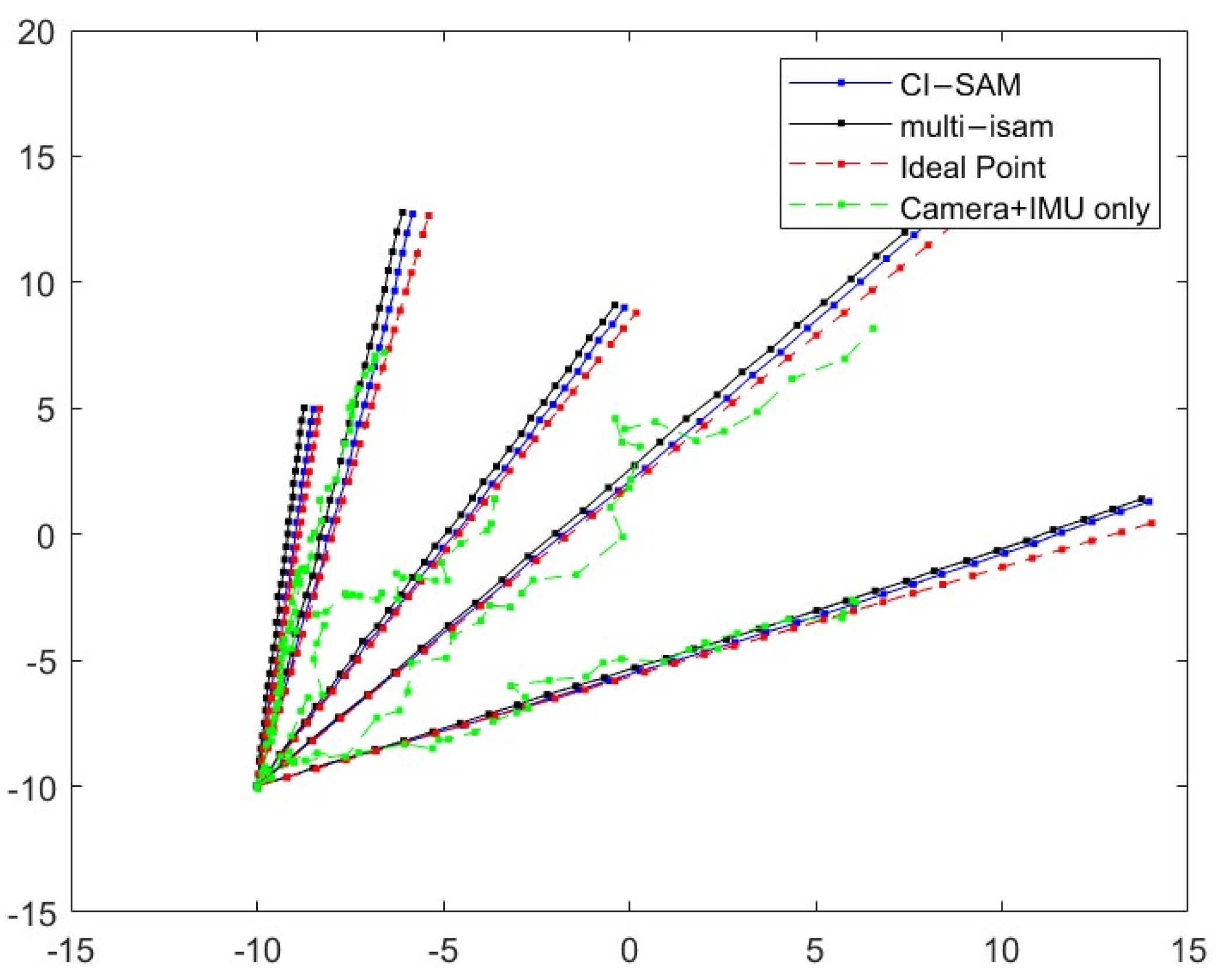

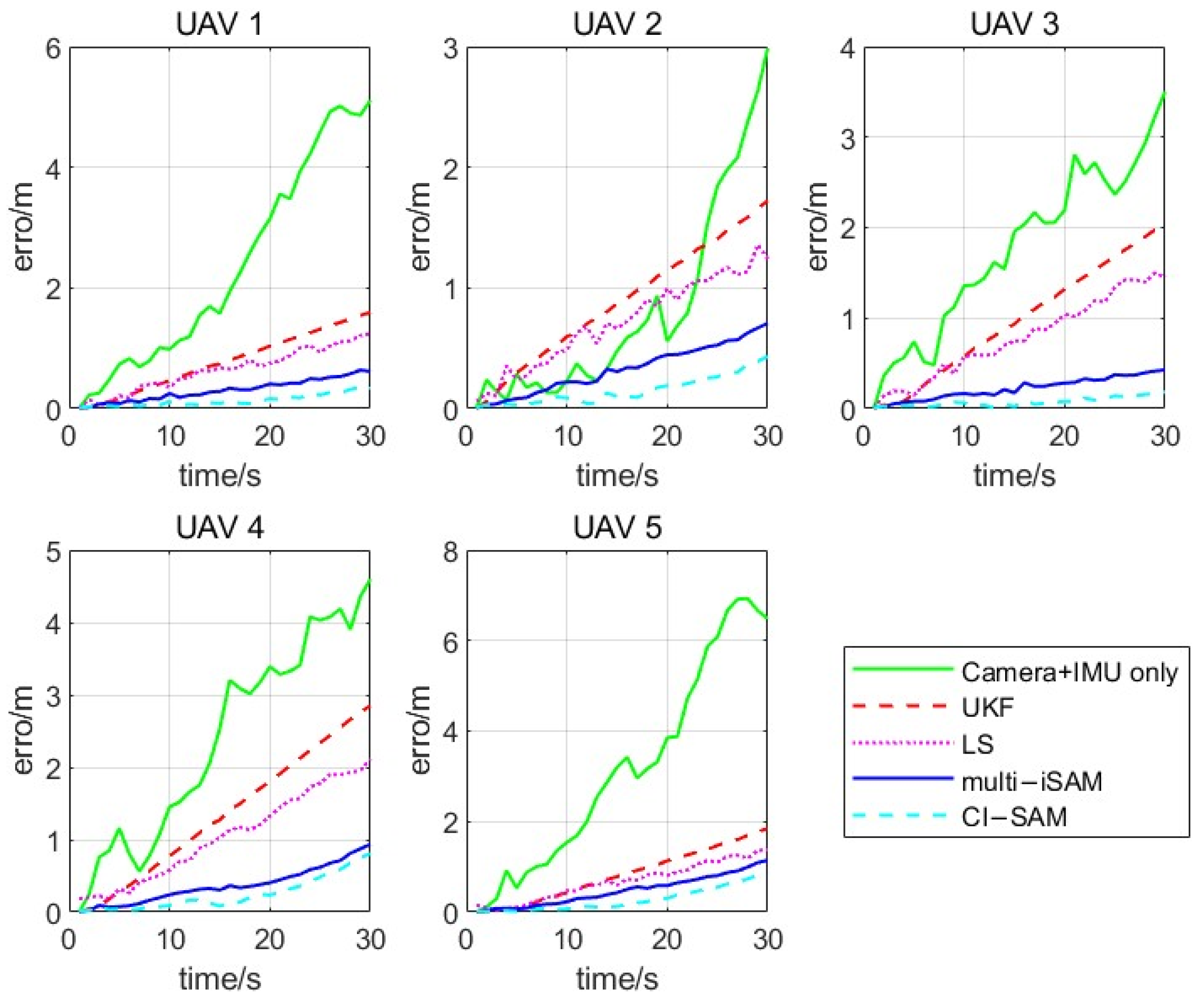

4.1. Experiment 1

4.2. Experiment 2

4.3. Experiment 3

4.4. Experiment 4

5. Discussion

6. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

1. Awasthi, S.; Fernandez-Cortizas, M.; Reining, C.; Arias-Perez, P.; Luna, M.A.; Perez-Saura, D.; Roidl, M.; Gramse, N.; Klokowski, P.; Campoy, P. Micro UAV Swarm for industrial applications in indoor environment–A Systematic Literature Review. Logist. Res. 2023, 16, 1–43.
2. Dai, J.; Wang, M.; Wu, B.; Shen, J.; Wang, X. A Survey of Latest Wi-Fi Assisted Indoor Positioning on Different Principles. Sensors 2023, 23, 7961.
3. Marut, A.; Wojciechowski, P.; Wojtowicz, K.; Falkowski, K. Visual-based landing system of a multirotor UAV in GNSS denied environment. In Proceedings of the 2023 IEEE 10th International Workshop on Metrology for AeroSpace (MetroAeroSpace), Milan, Italy, 19–21 June 2023; pp. 308–313.
4. Rostum, H.M.; Vásárhelyi, J. A Review of Using Visual Odometery Methods in Autonomous UAV Navigation in GPS-Denied Environment. Acta Univ. Sapientiae Electr. Mech. Eng. 2023, 15, 14–32.
5. Sharifi-Tehrani, O.; Ghasemi, M.H. A Review on GNSS-Threat Detection and Mitigation Techniques. Cloud Comput. Data Sci. 2023, 4, 161–185.
6. Chen, S.; Yin, D.; Niu, Y. A survey of robot swarms’ relative localization method. Sensors 2022, 22, 4424.
7. Campos, C.; Elvira, R.; Rodríguez, J.J.G.; Montiel, J.M.; Tardós, J.D. ORB-SLAM3: An accurate open-source library for visual, visual–inertial, and multimap slam. IEEE Trans. Robot. 2021, 37, 1874–1890.
8. Von Stumberg, L.; Cremers, D. DM-VIO: Delayed marginalization visual-inertial odometry. IEEE Robot. Autom. Lett. 2022, 7, 1408–1415.
9. Qin, T.; Li, P.; Shen, S. VINS-mono: A robust and versatile monocular visual-inertial state estimator. IEEE Trans. Robot. 2018, 34, 1004–1020.
10. Deng, Z.; Qi, H.; Wu, C.; Hu, E.; Wang, R. A cluster positioning architecture and relative positioning algorithm based on pigeon flock bionics. Satell. Navig. 2023, 4, 1.
11. Liu, Y.; Wang, Y.; Wang, J.; Shen, Y. Distributed 3D relative localization of UAVs. IEEE Trans. Veh. Technol. 2020, 69, 11756–11770.
12. García-Fernández, Á.F.; Svensson, L.; Särkkä, S. Cooperative localization using posterior linearization belief propagation. IEEE Trans. Veh. Technol. 2017, 67, 832–836.
13. Wymeersch, H.; Lien, J.; Win, M.Z. Cooperative localization in wireless networks. Proc. IEEE 2009, 97, 427–450.
14. Liu, Y.; Deng, Z.; Hu, E. Multi-sensor fusion positioning method based on batch inverse covariance intersection and IMM. Appl. Sci. 2021, 11, 4908.
15. Saska, M.; Baca, T.; Thomas, J.; Chudoba, J.; Preucil, L.; Krajnik, T.; Faigl, J.; Loianno, G.; Kumar, V. System for deployment of groups of unmanned micro aerial vehicles in GPS-denied environments using onboard visual relative localization. Auton. Robot. 2017, 41, 919–944.
16. Andersson, L.A.; Nygards, J. C-SAM: Multi-robot SLAM using square root information smoothing. In Proceedings of the 2008 IEEE International Conference on Robotics and Automation, Pasadena, CA, USA, 19–23 May 2008; pp. 2798–2805.
17. Kaess, M.; Ranganathan, A.; Dellaert, F. iSAM: Incremental smoothing and mapping. IEEE Trans. Robot. 2008, 24, 1365–1378.
18. Kaess, M.; Johannsson, H.; Roberts, R.; Ila, V.; Leonard, J.J.; Dellaert, F. iSAM2: Incremental smoothing and mapping using the Bayes tree. Int. J. Robot. Res. 2012, 31, 216–235.
19. Kim, B.; Kaess, M.; Fletcher, L.; Leonard, J.; Bachrach, A.; Roy, N.; Teller, S. Multiple relative pose graphs for robust cooperative mapping. In Proceedings of the 2010 IEEE International Conference on Robotics and Automation, Anchorage, AK, USA, 3–7 May 2010; pp. 3185–3192.
20. Gulati, D.; Zhang, F.; Clarke, D.; Knoll, A. Vehicle infrastructure cooperative localization using factor graphs. In Proceedings of the 2016 IEEE Intelligent Vehicles Symposium (IV), Gothenburg, Sweden, 19–22 June 2016; pp. 1085–1090.
21. Gulati, D.; Zhang, F.; Malovetz, D.; Clarke, D.; Knoll, A. Robust cooperative localization in a dynamic environment using factor graphs and probability data association filter. In Proceedings of the 2017 20th International Conference on Information Fusion (Fusion), Xi’an, China, 10–13 July 2017; pp. 1–6.
22. Gulati, D.; Zhang, F.; Clarke, D.; Knoll, A. Graph-based cooperative localization using symmetric measurement equations. Sensors 2017, 17, 1422.
23. Cheng, C.; Wang, C.; Gao, L.; Zhang, F. Vessel and Underwater Vehicles Cooperative Localization using Topology Factor Graphs. In Proceedings of the OCEANS 2018 MTS/IEEE, Charleston, SC, USA, 22–25 October 2018; pp. 1–4.
24. Sünderhauf, N.; Protzel, P. Switchable constraints for robust pose graph SLAM. In Proceedings of the 2012 IEEE/RSJ International Conference on Intelligent Robots and Systems, Vilamoura-Algarve, Portugal, 7–12 October 2012; pp. 1879–1884.
25. Sünderhauf, N.; Obst, M.; Wanielik, G.; Protzel, P. Multipath mitigation in GNSS-based localization using robust optimization. In Proceedings of the 2012 IEEE Intelligent Vehicles Symposium, Madrid, Spain, 3–7 June 2012; pp. 784–789.
26. Gulati, D.; Aravantinos, V.; Somani, N.; Knoll, A. Robust vehicle infrastructure cooperative localization in presence of clutter. In Proceedings of the 2018 21st International Conference on Information Fusion (FUSION), Cambridge, UK, 10–13 July 2018; pp. 2225–2232.
27. Grisetti, G.; Kümmerle, R.; Stachniss, C.; Burgard, W. A tutorial on graph-based SLAM. IEEE Intell. Transp. Syst. Mag. 2010, 2, 31–43.
28. Dellaert, F.; Kaess, M. Factor graphs for robot perception. Found. Trends Robot. 2017, 6, 1–139.
29. Trawny, N.; Roumeliotis, S.I.; Giannakis, G.B. Cooperative multi-robot localization under communication constraints. In Proceedings of the 2009 IEEE International Conference on Robotics and Automation, Kobe, Japan, 12–17 May 2009; pp. 4394–4400.
30. Sünderhauf, N.; Protzel, P. Towards a robust back-end for pose graph slam. In Proceedings of the 2012 IEEE International Conference on Robotics and Automation, Saint Paul, MN, USA, 14–18 May 2012; pp. 1254–1261.

| Parameter | Value | Description |
|---|---|---|
| $w_x$ | | Switching function |
| | 0.2 m² | UWB measurement covariance |
| | 1 m² | VIO system covariance |
| | 0.1 m² | Camera covariance |
| | 0.2 m² / 10° | Landmark-bearing covariance |
| | 0.3 m² / 5.73° | IMU covariance |
| | 0.2 m² | UWB measurement prior value |
| | 1 m² / 5.73° | VIO system prior value |
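
The covariance entries above are variances; a least-squares back end typically whitens each residual by the corresponding standard deviation. The short Python sketch below converts them and checks the angular values. Reading the "x m² / y°" entries as a position variance paired with an angular standard deviation is an assumption; that 5.73° equals 0.1 rad is plain arithmetic.

```python
import math

# Position variances from the parameter table, in m^2.
VARIANCES_M2 = {
    "UWB measurement": 0.2,
    "VIO system": 1.0,
    "camera": 0.1,
    "landmark-bearing": 0.2,
    "IMU": 0.3,
}


def std_dev(variance: float) -> float:
    """1-sigma value (m) corresponding to a variance (m^2)."""
    return math.sqrt(variance)


for name, var in VARIANCES_M2.items():
    print(f"{name}: {var} m^2 -> sigma = {std_dev(var):.3f} m")

# Angular entries: 5.73 deg is 0.1 rad to three significant figures.
print(f"5.73 deg = {math.radians(5.73):.4f} rad")   # 5.73 deg = 0.1000 rad
print(f"10 deg = {math.radians(10.0):.4f} rad")     # 10 deg = 0.1745 rad
```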

| Method | Mean Average Error (m) | Positioning Success Rate (%) |
|---|---|---|
| IMU–camera only | 0.87 | 80.0 |
| UKF | 0.55 | 93.3 |
| LS | 0.52 | 86.7 |
| Multi-iSAM [19] | 0.44 | 93.3 |
| CI-SAM (this paper) | 0.41 | 100.0 |

| Method | Mean Average Error (m) | Positioning Success Rate (%) |
|---|---|---|
| IMU–camera only | 2.13 | 26.7 |
| UKF | 0.83 | 46.7 |
| LS | 0.77 | 33.3 |
| Multi-iSAM [19] | 0.65 | 66.7 |
| CI-SAM (this paper) | 0.42 | 100.0 |

| Method | Mean Average Error (m) | Positioning Success Rate (%) |
|---|---|---|
| IMU–camera only | 0.88 | 80.0 |
| UKF | 1.38 | 66.7 |
| LS | 1.45 | 46.7 |
| Multi-iSAM [19] | 0.96 | 66.7 |
| CI-SAM (this paper) | 0.43 | 100.0 |

| Method | Mean Average Error (m) | Positioning Success Rate (%) |
|---|---|---|
| IMU–camera only | 3.35 | 20.0 |
| UKF | 2.75 | 46.7 |
| LS | 2.15 | 40.0 |
| Multi-iSAM [19] | 1.44 | 53.3 |
| CI-SAM (this paper) | 0.83 | 100.0 |
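
The four results tables report absolute errors, which makes the relative gain hard to compare across them. The Python sketch below uses only the numbers in those tables to compute the percentage reduction in mean error of CI-SAM against the strongest baseline in each table. The tables are taken in document order; matching them to Experiments 1 to 4 is an assumption, since they carry no captions here, and the helper name is hypothetical.

```python
# Mean error (m) per method, copied from the four results tables in document order.
TABLES = [
    {"IMU-camera only": 0.87, "UKF": 0.55, "LS": 0.52, "Multi-iSAM": 0.44, "CI-SAM": 0.41},
    {"IMU-camera only": 2.13, "UKF": 0.83, "LS": 0.77, "Multi-iSAM": 0.65, "CI-SAM": 0.42},
    {"IMU-camera only": 0.88, "UKF": 1.38, "LS": 1.45, "Multi-iSAM": 0.96, "CI-SAM": 0.43},
    {"IMU-camera only": 3.35, "UKF": 2.75, "LS": 2.15, "Multi-iSAM": 1.44, "CI-SAM": 0.83},
]


def reduction_vs_best_baseline(table):
    """Return (best baseline, % reduction of CI-SAM's error relative to it)."""
    baselines = {name: err for name, err in table.items() if name != "CI-SAM"}
    best = min(baselines, key=baselines.get)
    return best, 100.0 * (baselines[best] - table["CI-SAM"]) / baselines[best]


for number, table in enumerate(TABLES, start=1):
    best, pct = reduction_vs_best_baseline(table)
    print(f"Table {number}: best baseline {best}, error reduced by {pct:.1f}%")
```

On these figures the reduction ranges from about 6.8% (first table, against Multi-iSAM) to about 51.1% (third table, where IMU-camera only is the strongest baseline).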

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Wang, R.; Deng, Z. Co-Operatively Increasing Smoothing and Mapping Based on Switching Function. Appl. Sci. 2024, 14, 1543. https://doi.org/10.3390/app14041543
