AI-Driven Innovations in Software Engineering: A Review of Current Practices and Future Directions

Abstract

1. Introduction

- To examine the application of AI across various phases of the software development lifecycle, identifying where AI-driven tools offer the greatest potential for impact.

- To evaluate the benefits of AI integration, including reductions in manual errors, development cycle acceleration, and improved collaboration among cross-functional teams.

- To identify challenges and risks associated with AI-driven software engineering, such as the reliability of AI-generated code, ethical implications, and the possibility of skill degradation among developers.

- To propose actionable recommendations for seamlessly integrating AI into existing workflows, ensuring responsible use and maintaining a balance between automation and human insight.

2. Literature Review

- Relevance: Studies that focus on the integration of AI in software engineering processes.

- Recency: Priority was given to studies published within the past five years to ensure up-to-date analysis.

- Impact: Research with a significant number of citations or from high-impact journals.

- Diversity: A mix of case studies, theoretical analyses, and empirical evaluations to provide a holistic perspective.

- Automated Code Generation: Tools such as GitHub Copilot and AlphaCode have shown notable gains in developer productivity and code-generation accuracy. However, concerns remain regarding the reliability and ethical implications of AI-generated code.

- Defect Prediction: Machine learning algorithms trained on historical data enable the early identification of potential bugs, reducing testing time and enhancing software reliability.

- Ethical and Legal Challenges: Bias in AI models and intellectual property concerns require robust frameworks and continuous oversight to ensure responsible adoption.

- Enhancing the capabilities of AI-driven tools to address current limitations in software modeling tasks.

- Developing robust ethical guidelines and legal frameworks to govern the use of AI in SE.

- Creating secure AI systems that mitigate vulnerabilities and ensure data privacy.

- Improving reproducibility and replicability in ML applications within SE to advance research and practical implementations.

- Exploring the impact of AI integration on developer roles, team dynamics, and job requirements.
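The defect-prediction approach noted in the literature summary above, where a model learns from historical data to flag likely bugs early, can be illustrated with a minimal sketch. It is not the method of any specific tool or study. It assumes three hypothetical per-module metrics (`lines_changed`, `past_defects`, `authors`) mined from version-control history and fits a plain logistic regression by batch gradient descent using only the Python standard library.

```python
import math
from dataclasses import dataclass


@dataclass
class Module:
    # Hypothetical per-module metrics mined from version-control history.
    lines_changed: int
    past_defects: int
    authors: int
    defective: int = 0  # label: 1 if a defect was later reported, else 0


def features(m: Module) -> list[float]:
    # Leading 1.0 is the bias term; churn is log-scaled to tame large values.
    return [1.0, math.log1p(m.lines_changed), float(m.past_defects), float(m.authors)]


def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))


def train(history: list[Module], epochs: int = 10000, lr: float = 0.03) -> list[float]:
    """Fit logistic-regression weights by batch gradient descent on mean log-loss."""
    w = [0.0] * 4
    n = len(history)
    for _ in range(epochs):
        grad = [0.0] * 4
        for m in history:
            x = features(m)
            err = sigmoid(sum(wi * xi for wi, xi in zip(w, x))) - m.defective
            for j, xj in enumerate(x):
                grad[j] += err * xj / n
        w = [wi - lr * gj for wi, gj in zip(w, grad)]
    return w


def defect_risk(w: list[float], m: Module) -> float:
    """Predicted probability that module m is defect-prone."""
    return sigmoid(sum(wi * xi for wi, xi in zip(w, features(m))))


# Toy, made-up history used only to exercise the code.
history = [
    Module(520, 4, 6, 1), Module(810, 3, 5, 1), Module(340, 5, 4, 1),
    Module(25, 0, 1, 0), Module(60, 0, 2, 0), Module(12, 1, 1, 0),
]
weights = train(history)
```

In use, new or changed modules would be ranked by `defect_risk` so that testing effort goes to the riskiest ones first. A real deployment would more likely rely on richer features and an established ML library than on a hand-written optimizer.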

3. AI Applications in Software Engineering

- Relevance to Industry Applications: Tools and datasets that are widely used in real-world software engineering contexts, such as GitHub Copilot and IBM’s defect prediction tools, were prioritized.

- Recency: Preference was given to tools and datasets published or actively used within the past five years to ensure that the study reflects the current state of the field.

- Accessibility: Open-source datasets and tools with publicly available documentation were selected to facilitate reproducibility.

- Coverage of Development Phases: Examples were chosen to cover diverse phases of the software development lifecycle, including coding, testing, and maintenance.

- Impact and Adoption: The selection emphasized tools and datasets with demonstrated effectiveness, as reported in industry and academic studies.

3.1. Requirements Analysis

3.1.1. Natural Language Processing (NLP) for Requirements Gathering

3.1.2. AI-Driven User Stories

3.2. Design and Architecture

3.2.1. Automated Design Pattern Recognition

3.2.2. Intelligent System Modeling

3.3. Coding and Implementation

3.3.1. AI-Assisted Code Generation

3.3.2. Smart Code Completion and Suggestions

3.4. Testing and Quality Assurance

3.4.1. Automated Test Case Generation

3.4.2. AI in Defect Prediction and Management

3.5. Deployment and Maintenance

3.5.1. Predictive Maintenance Using AI

3.5.2. Automated Monitoring and Alert Systems

4. Case Studies

- Industry Impact: Organizations known for pioneering or significantly influencing AI-driven methodologies in software development (e.g., McKinsey, GitHub, IBM, Microsoft, Snyk, and Google).

- Variety of AI Applications: Each case study addresses different facets of AI integration—such as code generation, defect prediction, and real-time code review—to present a holistic view of AI’s potential.

- Empirical Evidence: Only initiatives with measurable outcomes (e.g., productivity gains or reduction in bugs) were chosen to ensure that concrete data support our analysis.

4.1. McKinsey’s Integration of Generative AI in Software Development

4.2. GitHub Copilot’s Impact on Developer Productivity and Code Quality

4.3. IBM’s AI-Powered Defect Prediction Tool Enhances Software Quality

4.4. Microsoft IntelliCode’s Enhancement of Developer Productivity and Code Quality

4.5. Snyk Code’s AI-Driven Enhancements to Code Quality and Developer Efficiency

4.6. Google’s DeepMind AlphaCode Transforms Code Generation and Problem Solving

4.7. Underexplored Areas and Novelty

- Requirements Engineering: Existing research largely focuses on code-level AI applications; few studies address how AI can automate or improve the capture and analysis of complex, evolving software requirements.

- Domain-Specific AI Tools: Many current solutions target general-purpose software. There is a gap in specialized domains—such as safety-critical, embedded, or high-assurance systems—where compliance and reliability are paramount.

- Long-Term Human–AI Collaboration: Current insights offer snapshots of productivity gains, but long-term impacts on team skill development, morale, and reliance on AI-based suggestions are understudied.
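Requirements engineering is flagged above as underexplored. As a point of comparison for AI-based approaches, the sketch below shows a deliberately simple, non-ML baseline that flags vague wording in requirement statements. The term list `VAGUE_TERMS` is a hypothetical example chosen for illustration, not a validated lexicon; in a learned system an NLP model would take the place of the regular-expression match.

```python
import re

# Hypothetical, illustrative list of vague terms; a real project would
# curate its own list or replace this lookup with a trained NLP classifier.
VAGUE_TERMS = [
    "user-friendly", "fast", "efficient", "flexible", "robust",
    "easy", "adequate", "as appropriate", "if possible", "etc",
]

# Word boundaries (\b) stop "fast" from matching inside "breakfast".
_VAGUE_RE = re.compile(
    r"\b(" + "|".join(re.escape(t) for t in VAGUE_TERMS) + r")\b",
    re.IGNORECASE,
)


def flag_vague_terms(requirement: str) -> list[str]:
    """Return the vague terms found in one requirement, in order of appearance."""
    return [m.group(1).lower() for m in _VAGUE_RE.finditer(requirement)]


requirements = [
    "The dashboard shall be user-friendly and fast.",
    "The API shall respond within 200 ms for 95% of requests.",
]
for req in requirements:
    print(flag_vague_terms(req) or "no vague terms", "|", req)
```

A baseline like this is easy to audit, which makes it a useful yardstick when judging whether an AI-based requirements tool actually adds value.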

5. Impact of Artificial Intelligence on Software Engineering Practices: A Quantitative Analysis

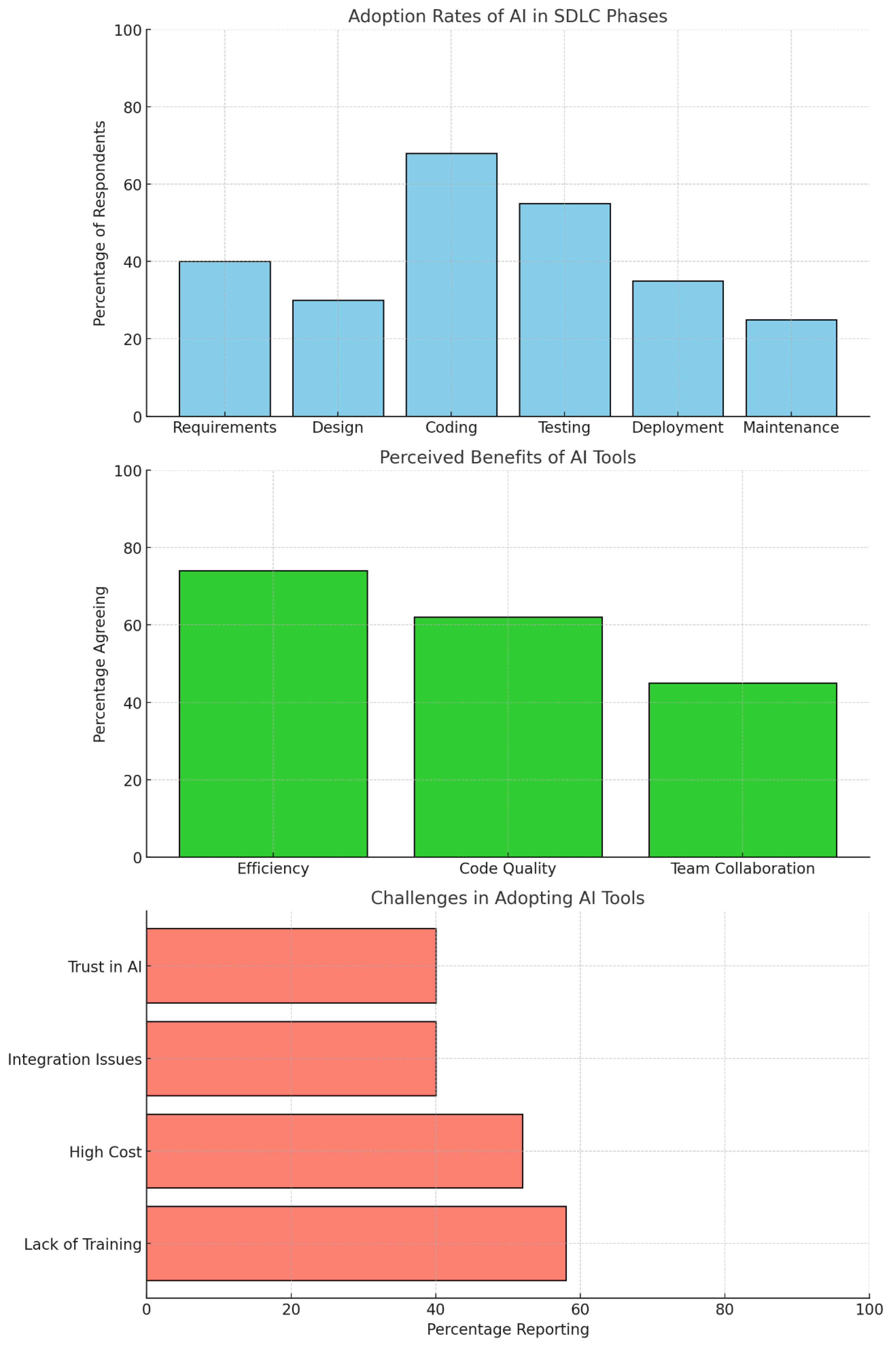

- Adoption Rates: Assessed the frequency of AI tool usage and the specific stages of the SDLC where they are implemented.

- Perceived Benefits: Evaluated improvements in efficiency, productivity, and code quality attributed to AI tools.

- Challenges: Identified barriers to implementation, trust in AI-generated suggestions, and necessary skill shifts.

- Future Outlook: Gauged the willingness of professionals to integrate more AI technologies in the future.

- Usage of AI Tools: Overall, 68% of participants reported using AI tools in at least one phase of the SDLC, most commonly for coding and debugging.

- Popular AI Tools: The most frequently cited tools were GitHub Copilot (48%), IntelliCode (30%), and AI-based test automation platforms (22%).

- Efficiency: 74% of respondents agreed that AI reduced the time required for routine coding tasks.

- Code Quality: 62% reported fewer post-release defects in projects that used AI assistance.

- Team Collaboration: 45% reported improved resource allocation due to automated task suggestions.

- Trust in AI: Only 40% expressed complete trust in AI-generated solutions, citing concerns over errors and lack of transparency.

- Skill Gaps: 58% believed that insufficient AI training among team members hindered full adoption.

- Implementation Costs: 52% cited high initial costs as a significant barrier.

- Training Programs: Teams with formal AI training achieved 30% higher efficiency gains.

- Project Size: Medium-to-large projects benefited the most, with a 20% increase in developer productivity.

- Tool Suitability: The customization and alignment of tools with project needs were pivotal, as cited by 68% of respondents.

- Fairness-Aware Models: Implementing fairness-aware algorithms can help mitigate biases in AI-generated recommendations.

- Human-in-the-Loop Systems: Combining AI with human oversight ensures that critical thinking and contextual expertise are preserved.

- Continuous Model Updates: Regular updates and the fine-tuning of AI models based on user feedback and new datasets can enhance performance and reliability.
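As one concrete example of a fairness-aware technique, the sketch below implements reweighing (Kamiran and Calders), a pre-processing method that gives each training example the weight P(g) * P(y) / P(g, y), so that a protected attribute g and the label y become statistically independent in the weighted data. The grouping used here, a hypothetical contributor group attached to historical suggestion-acceptance outcomes, is an assumption for illustration; the article does not prescribe a specific fairness algorithm.

```python
from collections import Counter


def reweighing_weights(groups: list[str], labels: list[int]) -> list[float]:
    """Kamiran-Calders reweighing: w(g, y) = P(g) * P(y) / P(g, y).

    After weighting, each (group, label) cell carries the mass it would
    have if group membership and label were independent.
    """
    n = len(labels)
    group_counts = Counter(groups)
    label_counts = Counter(labels)
    joint_counts = Counter(zip(groups, labels))
    return [
        (group_counts[g] / n) * (label_counts[y] / n) / (joint_counts[(g, y)] / n)
        for g, y in zip(groups, labels)
    ]


def weighted_positive_rate(groups, labels, weights, group):
    """Share of weighted examples in `group` that carry the positive label."""
    total = sum(w for g, w in zip(groups, weights) if g == group)
    positive = sum(w for g, y, w in zip(groups, labels, weights) if g == group and y == 1)
    return positive / total


# Hypothetical history: group "A" had suggestions accepted (1) far more often than "B".
groups = ["A", "A", "A", "A", "B", "B", "B", "B"]
labels = [1, 1, 1, 0, 1, 0, 0, 0]
weights = reweighing_weights(groups, labels)
```

Unweighted, the positive rates are 0.75 for "A" and 0.25 for "B"; after reweighing, both groups have the same weighted positive rate. The weights can then be passed to any learner that accepts per-sample weights; for example, many scikit-learn estimators accept a `sample_weight` argument in `fit`.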

6. Challenges and Considerations

6.1. Technical Challenges

6.1.1. Data Quality and Availability

6.1.2. Integration with Existing Systems

6.2. Ethical and Legal Issues

6.2.1. Bias in AI Algorithms

6.2.2. Intellectual Property Concerns

6.3. Workforce Implications

6.3.1. Skill Gaps

6.3.2. Resistance to Change

7. Future Directions

- Applicability to Small Startups: Startups with limited resources can use AI tools to automate routine tasks, such as code generation and defect detection, reducing overhead costs. For instance, a small development team could employ GitHub Copilot to streamline coding tasks while focusing their efforts on innovation. Additionally, AI tools offer startups an opportunity to adopt agile practices more effectively, despite their constrained budgets.

- Applicability to Big Companies: In contrast, larger organizations can benefit from AI’s ability to optimize workflows across distributed teams. For example, large-scale enterprises could integrate AI tools into their DevOps pipelines to support continuous integration and delivery. However, big companies must also invest in employee training programs to maximize AI’s potential and address ethical challenges, such as bias in AI algorithms.

- Future Directions for AI in Software Engineering: As the field advances, AI has the potential to foster decentralized software teams that operate autonomously, driven by adaptive AI tools. Additionally, the development of universal benchmarks and ethical standards for AI-driven tools could facilitate cross-industry collaboration, ensuring sustainable and fair AI adoption.
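Integrating AI into a DevOps pipeline does not have to mean fully automating the decision. The sketch below combines a pipeline gate with human oversight. It assumes that an earlier, hypothetical pipeline stage has already produced a risk score in [0, 1] for each changed file; the thresholds `REVIEW_THRESHOLD` and `BLOCK_THRESHOLD` are illustrative values, not recommendations from the study.

```python
# Illustrative thresholds (assumptions); each team would tune its own.
REVIEW_THRESHOLD = 0.5
BLOCK_THRESHOLD = 0.9


def triage(risk_by_file: dict[str, float]) -> dict[str, list[str]]:
    """Route changed files to auto-pass, human review, or a blocking bucket."""
    buckets: dict[str, list[str]] = {"pass": [], "review": [], "block": []}
    for path, risk in sorted(risk_by_file.items()):
        if risk >= BLOCK_THRESHOLD:
            buckets["block"].append(path)
        elif risk >= REVIEW_THRESHOLD:
            buckets["review"].append(path)  # a human reviewer makes the call
        else:
            buckets["pass"].append(path)
    return buckets


def gate_exit_code(buckets: dict[str, list[str]]) -> int:
    """Non-zero fails the CI job; 'review' items do not block on their own."""
    return 1 if buckets["block"] else 0


# Hypothetical scores for one pull request.
scores = {"billing/tax.py": 0.93, "api/routes.py": 0.62, "docs/readme_gen.py": 0.08}
result = triage(scores)
print(result, "exit code:", gate_exit_code(result))
```

Keeping a middle "review" band means the model only narrows where people look. The final call on borderline changes stays with a reviewer, which matches the human-in-the-loop principle discussed in Section 5.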

8. Conclusions

- Comprehensive AI Integration Across the Development Lifecycle: Unlike previous research that isolates specific AI applications (e.g., code generation or defect prediction), this study advocates for a cohesive, end-to-end approach. By uniting AI-driven components across planning, development, testing, and maintenance phases, this work highlights AI’s role in creating more adaptive, efficient, and robust software systems.

- Novel Insights into Adaptive and Data-Driven Development: This study demonstrates AI’s capability to learn continuously from evolving codebases and defect datasets, reducing model drift and enhancing long-term reliability. Such adaptivity positions AI not as a static tool but as a collaborative partner capable of evolving with development workflows.

- Balancing Human–AI Collaboration: The findings underscore the indispensable role of human expertise for contextual understanding, strategic oversight, and ethical considerations. This balance between human judgment and AI automation emerges as a critical factor in maximizing the effectiveness and fairness of software engineering practices.

- Identification of Emerging Research Opportunities: By showcasing AI’s current applications in areas such as security and advanced code generation, this study identifies underexplored domains like requirements engineering, safety-critical systems, and human–AI co-evolution. These areas present significant opportunities for impactful future research.

- Scientific Novelty and Future Directions: The study provides an original classification framework for AI methodologies in software engineering, offering a structured lens to analyze and advance the field. Future work should prioritize quantitative studies, ethical frameworks, and interdisciplinary collaborations to deepen this paradigm shift.
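The conclusions above attribute reduced model drift to continuous learning from evolving codebases. One common way to decide when such a model needs retraining is to monitor input drift with the Population Stability Index (PSI), defined as PSI = sum over bins i of (a_i - e_i) * ln(a_i / e_i), where e_i and a_i are the shares of a reference sample and a recent sample that fall in bin i. This is offered as an assumption, not as the study's method. Values above roughly 0.25 are often treated as significant drift, but that cut-off is an industry rule of thumb, not a standard.

```python
import math


def population_stability_index(reference: list[float], recent: list[float],
                               bins: int = 10, eps: float = 1e-6) -> float:
    """PSI between a reference sample and a recent sample of one feature.

    Bins are equal-width over the reference range; recent values outside
    that range are clamped into the first or last bin. `eps` avoids log(0)
    for empty bins.
    """
    lo, hi = min(reference), max(reference)
    width = (hi - lo) / bins or 1.0  # guard against a constant reference sample

    def shares(values: list[float]) -> list[float]:
        counts = [0] * bins
        for v in values:
            idx = min(max(int((v - lo) / width), 0), bins - 1)
            counts[idx] += 1
        return [max(c / len(values), eps) for c in counts]

    e, a = shares(reference), shares(recent)
    return sum((ai - ei) * math.log(ai / ei) for ei, ai in zip(e, a))


# Hypothetical feature: per-commit churn, normalized to [0, 1).
reference = [i / 100 for i in range(100)]
shifted = [0.5 + i / 200 for i in range(100)]  # recent commits skew larger
print(round(population_stability_index(reference, reference), 3))
print(round(population_stability_index(reference, shifted), 3))
```

A scheduled job could compute PSI for each model input and trigger retraining or review when it crosses the chosen threshold.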

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

Appendix A. Survey Questions

Appendix A.1. Adoption of AI in Software Engineering

1. Which phases of the software development lifecycle (SDLC) do you currently use AI tools for?
   - Requirements Analysis
   - Design
   - Coding
   - Testing
   - Deployment
   - Maintenance
   - None

2. How frequently do you use AI tools in your daily work?
   - Always
   - Frequently
   - Occasionally
   - Rarely
   - Never

3. What are the primary AI tools you use? (Open-ended)

Appendix A.2. Perceived Benefits of AI Tools

4. To what extent do you agree with the following statement: “AI tools have significantly improved my productivity in software engineering”? (Likert Scale: Strongly Disagree to Strongly Agree)

5. How much time has AI saved you on routine coding tasks compared to manual efforts?
   - No time saved
   - 10–25%
   - 26–50%
   - 51–75%
   - Over 75%

6. Have AI tools reduced errors in your software development process? (Yes/No/Not Sure)

7. To what extent do you agree that AI tools improve code quality by following best practices? (Likert Scale: Strongly Disagree to Strongly Agree)

8. Have AI tools enhanced collaboration within your team? (Yes/No)

Appendix A.3. Challenges in Using AI Tools

9. What challenges have you faced in adopting AI tools? (Select all that apply)
   - Lack of training
   - High cost
   - Integration issues
   - Trust in AI
   - Resistance to change
   - Other

10. Do you feel sufficiently trained to use AI tools effectively in your work? (Likert Scale: Strongly Disagree to Strongly Agree)

11. How much do you trust the accuracy and reliability of AI-generated suggestions? (Likert Scale: Strongly Disagree to Strongly Agree)

12. Have you experienced any ethical or legal concerns when using AI tools? (Yes/No)

Appendix A.4. Outcomes and Future Integration

13. How have AI tools impacted project completion timelines in your organization?
    - Significantly shortened
    - Somewhat shortened
    - No impact
    - Lengthened

14. Do you believe that AI tools will become a core component of future software development workflows? (Yes/No/Maybe)

15. What improvements or features would you like to see in AI tools to enhance their effectiveness? (Open-ended)

Appendix A.5. Demographics and Context

16. What is your primary role in software engineering?
    - Developer
    - Tester
    - Manager
    - Architect
    - Other

17. How many years of experience do you have in software engineering?
    - Less than 2 years
    - 2–5 years
    - 6–10 years
    - Over 10 years

18. What is the size of your organization?
    - Small (<50 employees)
    - Medium (50–500 employees)
    - Large (>500 employees)

19. What industry sector do you work in?
    - Technology
    - Finance
    - Healthcare
    - Education
    - Other

20. Have you received formal training on AI tools for software engineering? (Yes/No)
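Several findings reported in Section 5, such as the share of respondents citing high costs, appear to correspond to multi-select items like question 9. For such items, each percentage is computed over respondents, not over selections, so the figures for a single question can sum to more than 100%. The sketch below shows that calculation on made-up responses; it is not the authors' analysis script.

```python
from collections import Counter


def option_shares(responses: list[set[str]]) -> dict[str, float]:
    """Percentage of respondents (not of selections) who chose each option."""
    counts = Counter(option for selected in responses for option in selected)
    n = len(responses)
    return {option: round(100 * c / n, 1) for option, c in counts.items()}


# Made-up answers to a "select all that apply" question; one respondent chose nothing.
responses = [
    {"Lack of training", "High cost"},
    {"Lack of training", "Trust in AI"},
    {"High cost"},
    set(),
]
shares = option_shares(responses)
print(shares)  # the values sum to more than 100% because options overlap
```

Respondents who select nothing still count in the denominator. This is why an empty set is kept in the input rather than dropped.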

References

1. Gurcan, F.; Dalveren, G.G.M.; Cagiltay, N.E.; Soylu, A. Detecting latent topics and trends in software engineering research since 1980 using probabilistic topic modeling. IEEE Access 2022, 10, 74638–74654.

2. Inkollu, K.; Gorle, S.K.; Kondabattula, S.R.; Shankar, P.B.; Reddy, M.B. A Review on Software Engineering: Perspective of Emerging Technologies & Challenges. In Proceedings of the Eighth International Conference on Research in Intelligent Computing in Engineering, Hyderabad, India, 1–2 December 2023; pp. 23–27.

3. Kuhrmann, M.; Tell, P.; Hebig, R.; Klünder, J.; Münch, J.; Linssen, O.; Pfahl, D.; Felderer, M.; Prause, C.R.; MacDonell, S.G.; et al. What makes agile software development agile? IEEE Trans. Softw. Eng. 2021, 48, 3523–3539.

4. Forsgren, N. DevOps delivers. Commun. ACM 2018, 61, 32–33.

5. Gall, M.; Pigni, F. Taking DevOps mainstream: A critical review and conceptual framework. Eur. J. Inf. Syst. 2022, 31, 548–567.

6. Sauvola, J.; Tarkoma, S.; Klemettinen, M.; Riekki, J.; Doermann, D. Future of software development with generative AI. Autom. Softw. Eng. 2024, 31, 26.

7. Barenkamp, M.; Rebstadt, J.; Thomas, O. Applications of AI in classical software engineering. AI Perspect. 2020, 2, 1.

8. Bader, J.; Kim, S.S.; Luan, F.S.; Chandra, S.; Meijer, E. AI in software engineering at Facebook. IEEE Softw. 2021, 38, 52–61.

9. Chen, M.; Tworek, J.; Jun, H.; Yuan, Q.; Pinto, H.P.D.O.; Kaplan, J.; Edwards, H.; Burda, Y.; Joseph, N.; Brockman, G.; et al. Evaluating large language models trained on code. arXiv 2021, arXiv:2107.03374.

10. Li, Y.; Wang, S.; Nguyen, T.N. DEAR: A novel deep learning-based approach for automated program repair. In Proceedings of the 44th International Conference on Software Engineering, Pittsburgh, PA, USA, 21–29 May 2022; pp. 511–523.

11. de Moor, A.; van Deursen, A.; Izadi, M. A transformer-based approach for smart invocation of automatic code completion. In Proceedings of the 1st ACM International Conference on AI-Powered Software, Porto de Galinhas, Brazil, 15–16 July 2024; pp. 28–37.

12. Li, Y.; Choi, D.; Chung, J.; Kushman, N.; Schrittwieser, J.; Leblond, R.; Eccles, T.; Keeling, J.; Gimeno, F.; Dal Lago, A.; et al. Competition-level code generation with AlphaCode. Science 2022, 378, 1092–1097.

13. Giray, G.; Bennin, K.E.; Köksal, Ö.; Babur, Ö.; Tekinerdogan, B. On the use of deep learning in software defect prediction. J. Syst. Softw. 2023, 195, 111537.

14. Li, L.; Ding, S.X.; Peng, X. Distributed data-driven optimal fault detection for large-scale systems. J. Process Control 2020, 96, 94–103.

15. Sawant, P.D. Test Case Prioritization for Regression Testing Using Machine Learning. In Proceedings of the 2024 IEEE International Conference on Artificial Intelligence Testing (AITest), Shanghai, China, 15–18 July 2024; pp. 152–153.

16. Haji Mohammadkhani, A. Explainable AI for Software Engineering: A Systematic Review and an Empirical Study. Master’s Thesis, University of Calgary, Calgary, AB, Canada, 2023. Available online: https://prism.ucalgary.ca/handle/1880/115792 (accessed on 25 November 2024).

17. Tamanampudi, V.M. Deep Learning Models for Continuous Feedback Loops in DevOps: Enhancing Release Cycles with AI-Powered Insights and Analytics. J. Artif. Intell. Res. Appl. 2022, 2, 425–463.

18. Kokol, P. The Use of AI in Software Engineering: A Synthetic Knowledge Synthesis of the Recent Research Literature. Information 2024, 15, 354.

19. Amugongo, L.M.; Kriebitz, A.; Boch, A.; Lütge, C. Operationalising AI ethics through the agile software development lifecycle: A case study of AI-enabled mobile health applications. AI Ethics 2023, 1–18.

20. Kalliamvakou, E. Research: Quantifying GitHub Copilot’s Impact on Developer Productivity and Happiness. 2022. Available online: https://github.blog/news-insights/research/research-quantifying-github-copilots-impact-on-developer-productivity-and-happiness/ (accessed on 11 November 2024).

21. Odeh, A.; Odeh, N.; Mohammed, A.S. A Comparative Review of AI Techniques for Automated Code Generation in Software Development: Advancements, Challenges, and Future Directions. TEM J. 2024, 13, 726–739.

22. France, S.L. Navigating software development in the ChatGPT and GitHub Copilot era. Bus. Horizons 2024, 67, 649–661.

23. Bull, C.; Kharrufa, A. Generative AI Assistants in Software Development Education: A vision for integrating Generative AI into educational practice, not instinctively defending against it. IEEE Softw. 2024, 41, 52–59.

24. Royce, W.W. Managing the development of large software systems. Proc. IEEE WESCON 1970, 26, 328–388.

25. Beck, K.; Beedle, M.; Van Bennekum, A.; Cockburn, A.; Cunningham, W.; Fowler, M.; Grenning, J.; Highsmith, J.; Hunt, A.; Jeffries, R.; et al. Manifesto for Agile Software Development. 2001. Available online: http://agilemanifesto.org/ (accessed on 25 November 2024).

26. Humble, J.; Farley, D. Continuous Delivery: Reliable Software Releases Through Build, Test, and Deployment Automation; Pearson Education: London, UK, 2010.

27. Smith, J.; Brown, T.; Wilson, R. Unlocking Developer Productivity: A Deep Dive into GitHub Copilot’s AI-Powered Code Completion. Int. J. Eng. Res. Technol. 2023, 13, 82–87.

28. Qian, C.; Liu, W.; Liu, H.; Chen, N.; Dang, Y.; Li, J.; Yang, C.; Chen, W.; Su, Y.; Cong, X.; et al. ChatDev: Communicative Agents for Software Development. In Proceedings of the 62nd Annual Meeting of the Association for Computational Linguistics (Volume 1: Long Papers), Bangkok, Thailand, 11–16 August 2024; pp. 15174–15186.

29. Ernst, N.A.; Bavota, G. AI-driven development is here: Should you worry? IEEE Softw. 2022, 39, 106–110.

30. Cámara, J.; Troya, J.; Burgueño, L.; Vallecillo, A. On the assessment of generative AI in modeling tasks: An experience report with ChatGPT and UML. Softw. Syst. Model. 2023, 22, 781–793.

31. Borges, O.; Lima, M.; Couto, J.; Gadelha, B.; Conte, T.; Prikladnicki, R. ML@SE: What do we know about how Machine Learning impact Software Engineering practice? In Proceedings of the 2022 17th Iberian Conference on Information Systems and Technologies (CISTI), Madrid, Spain, 22–25 June 2022; pp. 1–7.

32. Mezouar, H.; Afia, A.E. A systematic literature review of machine learning applications in software engineering. In Proceedings of the International Conference on Big Data and Internet of Things, Chengdu, China, 2–4 December 2022; pp. 317–331.

33. Wang, S.; Huang, L.; Gao, A.; Ge, J.; Zhang, T.; Feng, H.; Satyarth, I.; Li, M.; Zhang, H.; Ng, V. Machine/deep learning for software engineering: A systematic literature review. IEEE Trans. Softw. Eng. 2022, 49, 1188–1231.

34. Aniche, M.; Maziero, E.; Durelli, R.; Durelli, V.H. The effectiveness of supervised machine learning algorithms in predicting software refactoring. IEEE Trans. Softw. Eng. 2020, 48, 1432–1450.

35. Durelli, V.H.; Durelli, R.S.; Borges, S.S.; Endo, A.T.; Eler, M.M.; Dias, D.R.; Guimarães, M.P. Machine learning applied to software testing: A systematic mapping study. IEEE Trans. Reliab. 2019, 68, 1189–1212.

36. Bird, C.; Ford, D.; Zimmermann, T.; Forsgren, N.; Kalliamvakou, E.; Lowdermilk, T.; Gazit, I. Taking flight with copilot. Commun. ACM 2023, 66, 56–62.

37. Lu, Q.; Zhu, L.; Whittle, J.; Michael, J.B. Software engineering for responsible AI. Computer 2023, 56, 13–16.

38. Gonzalez, L.A.; Neyem, A.; Contreras-McKay, I.; Molina, D. Improving learning experiences in software engineering capstone courses using artificial intelligence virtual assistants. Comput. Appl. Eng. Educ. 2022, 30, 1370–1389.

39. Sofian, H.; Yunus, N.A.M.; Ahmad, R. Systematic mapping: Artificial intelligence techniques in software engineering. IEEE Access 2022, 10, 51021–51040.

40. Meriçli, Ç.; Turhan, B. Special section on realizing artificial intelligence synergies in software engineering. Softw. Qual. J. 2017, 25, 231–233.

41. Shehab, M.; Abualigah, L.; Jarrah, M.I.; Alomari, O.A.; Daoud, M.S. Artificial intelligence in software engineering and inverse. Int. J. Comput. Integr. Manuf. 2020, 33, 1129–1144.

42. Mashkoor, A.; Menzies, T.; Egyed, A.; Ramler, R. Artificial intelligence and software engineering: Are we ready? Computer 2022, 55, 24–28.

43. Marar, H.W. Advancements in software engineering using AI. Comput. Softw. Media Appl. 2024, 6, 3906.

44. Necula, S.C.; Dumitriu, F.; Greavu-Șerban, V. A Systematic Literature Review on Using Natural Language Processing in Software Requirements Engineering. Electronics 2024, 13, 2055.

45. Devlin, J.; Chang, M.W.; Lee, K.; Toutanova, K. BERT: Pre-Training of Deep Bidirectional Transformers for Language Understanding. In Proceedings of the 2019 Conference of the North American Chapter of the Association for Computational Linguistics: Human Language Technologies, Volume 1 (Long and Short Papers), Minneapolis, MN, USA, 2–7 June 2019; pp. 4171–4186.

46. Brown, T.; Mann, B.; Ryder, N.; Subbiah, M.; Kaplan, J.D.; Dhariwal, P.; Neelakantan, A.; Shyam, P.; Sastry, G.; Askell, A.; et al. Language Models are Few-Shot Learners. In Proceedings of the Advances in Neural Information Processing Systems; Larochelle, H., Ranzato, M., Hadsell, R., Balcan, M., Lin, H., Eds.; Curran Associates, Inc.: Red Hook, NY, USA, 2020; Volume 33, pp. 1877–1901.

47. Raharjana, I.K.; Siahaan, D.; Fatichah, C. User stories and natural language processing: A systematic literature review. IEEE Access 2021, 9, 53811–53826.

48. Dwivedi, A.K.; Tirkey, A.; Ray, R.B.; Rath, S.K. Software design pattern recognition using machine learning techniques. In Proceedings of the 2016 IEEE Region 10 Conference (Tencon), Singapore, 22–25 November 2016; pp. 222–227.

49. André, P.; Tebib, M.E.A. Assistance in Model Driven Development: Toward an Automated Transformation Design Process. Complex Syst. Informatics Model. Q. 2024, 38, 54–99.

50. Barke, S.; James, M.B.; Polikarpova, N. Grounded copilot: How programmers interact with code-generating models. Proc. ACM Program. Lang. 2023, 7, 85–111.

51. Alenezi, M.; Akour, M. Empowering student entrepreneurship skills: A software engineering course for innovation and real-world impact. J. Infrastruct. Policy Dev. 2024, 8, 9088.

52. Magabaleh, A.A.; Ghraibeh, L.L.; Audeh, A.Y.; Albahri, A.; Deveci, M.; Antucheviciene, J. Systematic Review of Software Engineering Uses of Multi-Criteria Decision-Making Methods: Trends, Bibliographic Analysis, Challenges, Recommendations, and Future Directions. Appl. Soft Comput. 2024, 163, 111859.

53. Zarour, M.; Akour, M.; Alenezi, M. Enhancing DevOps Engineering Education Through System-Based Learning Approach. Open Educ. Stud. 2024, 6, 20240012.

54. Bu, L.; Liang, Y.; Xie, Z.; Qian, H.; Hu, Y.Q.; Yu, Y.; Chen, X.; Li, X. Machine learning steered symbolic execution framework for complex software code. Form. Asp. Comput. 2021, 33, 301–323.

55. Wang, S.; Liu, T.; Nam, J.; Tan, L. Deep semantic feature learning for software defect prediction. IEEE Trans. Softw. Eng. 2018, 46, 1267–1293.

56. Bowes, D.; Hall, T.; Petrić, J. Software defect prediction: Do different classifiers find the same defects? Softw. Qual. J. 2018, 26, 525–552.

57. Almodovar, C.; Sabrina, F.; Karimi, S.; Azad, S. LogFiT: Log anomaly detection using fine-tuned language models. IEEE Trans. Netw. Serv. Manag. 2024, 21, 1715–1723.

58. Deniz, B.K.; Gnanasambandam, C.; Harrysson, M.; Hussin, A.; Srivastava, S. Unleashing Developer Productivity with Generative AI. McKinsey Digital. 2023. Available online: https://www.mckinsey.com/capabilities/mckinsey-digital/our-insights/unleashing-developer-productivity-with-generative-ai (accessed on 10 November 2024).

59. Svyatkovskiy, A.; Zhao, Y.; Fu, S.; Sundaresan, N. Pythia: AI-assisted code completion system. In Proceedings of the 25th ACM SIGKDD International Conference on Knowledge Discovery & Data Mining, Anchorage, AK, USA, 4–8 August 2019; pp. 2727–2735.

60. Snyk. DeepCode AI | AI Code Review | AI Security for SAST | Snyk AI. Available online: https://snyk.io/platform/deepcode-ai/ (accessed on 11 November 2024).

61. Amershi, S.; Begel, A.; Bird, C.; DeLine, R.; Gall, H.; Kamar, E.; Nagappan, N.; Nushi, B.; Zimmermann, T. Software engineering for machine learning: A case study. In Proceedings of the 2019 IEEE/ACM 41st International Conference on Software Engineering: Software Engineering in Practice (ICSE-SEIP), Montreal, QC, Canada, 25–31 May 2019; pp. 291–300.

62. Davoudian, A.; Liu, M. Big data systems: A software engineering perspective. ACM Comput. Surv. (CSUR) 2020, 53, 1–39.

63. Novichkov, P.S.; Chandonia, J.M.; Arkin, A.P. CORAL: A framework for rigorous self-validated data modeling and integrative, reproducible data analysis. GigaScience 2022, 11, giac089.

64. Russo, D. Navigating the complexity of generative AI adoption in software engineering. ACM Trans. Softw. Eng. Methodol. 2024, 33, 1–50.

65. Belgaum, M.R.; Alansari, Z.; Musa, S.; Alam, M.M.; Mazliham, M. Role of artificial intelligence in cloud computing, IoT and SDN: Reliability and scalability issues. Int. J. Electr. Comput. Eng. 2021, 11, 4458.

66. Said, M.A.; Ezzati, A.; Mihi, S.; Belouaddane, L. Microservices adoption: An industrial inquiry into factors influencing decisions and implementation strategies. Int. J. Comput. Digit. Syst. 2024, 15, 1417–1432.

67. Mehrabi, N.; Morstatter, F.; Saxena, N.; Lerman, K.; Galstyan, A. A survey on bias and fairness in machine learning. ACM Comput. Surv. (CSUR) 2021, 54, 1–35.

68. Holstein, K.; Wortman Vaughan, J.; Daumé III, H.; Dudik, M.; Wallach, H. Improving fairness in machine learning systems: What do industry practitioners need? In Proceedings of the 2019 CHI Conference on Human Factors in Computing Systems, Glasgow, UK, 4–9 May 2019; pp. 1–16.

69. Orphanou, K.; Otterbacher, J.; Kleanthous, S.; Batsuren, K.; Giunchiglia, F.; Bogina, V.; Tal, A.S.; Hartman, A.; Kuflik, T. Mitigating bias in algorithmic systems: A fish-eye view. ACM Comput. Surv. 2022, 55, 1–37.

70. Degli Esposti, M.; Lagioia, F.; Sartor, G. The use of copyrighted works by AI systems: Art works in the data Mill. Eur. J. Risk Regul. 2020, 11, 51–69.

71. Chatterjee, S.; NS, S. Artificial intelligence and human rights: A comprehensive study from Indian legal and policy perspective. Int. J. Law Manag. 2022, 64, 110–134.

72. Li, P.; Huang, J.; Zhang, S.; Qi, C. SecureEI: Proactive intellectual property protection of AI models for edge intelligence. Comput. Netw. 2024, 255, 110825.

73. Karthikeyan, C.; Singh, S. Skill Development Challenges in the Era of Artificial Intelligence (AI). In Integrating Technology in Problem-Solving Educational Practices; IGI Global: Hershey, PA, USA, 2025; pp. 189–218.

74. Wang, D.; Weisz, J.D.; Muller, M.; Ram, P.; Geyer, W.; Dugan, C.; Tausczik, Y.; Samulowitz, H.; Gray, A. Human-AI collaboration in data science: Exploring data scientists’ perceptions of automated AI. Proc. ACM Hum.-Comput. Interact. 2019, 3, 1–24.

75. Kelley, S. Employee perceptions of the effective adoption of AI principles. J. Bus. Ethics 2022, 178, 871–893.

76. La Torre, D.; Colapinto, C.; Durosini, I.; Triberti, S. Team formation for human-artificial intelligence collaboration in the workplace: A goal programming model to foster organizational change. IEEE Trans. Eng. Manag. 2021, 70, 1966–1976.

| Era | Methodologies | Key Features | Introduction/Adoption Period | References |
|---|---|---|---|---|
| Traditional | Waterfall model | Linear, sequential approach to software development | 1970s | Royce, 1970 [24] |
| Iterative | Agile, Scrum | Flexible, iterative approach emphasizing collaboration | 1990s | Beck et al., 2001 [25] |
| Modern | DevOps, continuous integration | Automated testing, continuous deployment practices | 2010s | Humble and Farley, 2010 [26] |
| AI-Driven | AI-powered tools, machine learning | Automated coding, adaptive software maintenance | 2020s | Smith et al., 2023 (GitHub Copilot) [27]; Qian et al., 2024 [28] |

| Metric | Mean Score (out of 5) | Std. Deviation |
|---|---|---|
| Reduction in Coding Time | 4.2 | 0.7 |
| Improvement in Code Quality | 4.0 | 0.8 |
| Increase in Team Collaboration | 3.7 | 0.9 |
| Challenges in Skill Development | 3.9 | 1.1 |
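The table above reports a mean and a standard deviation on a five-point scale. The sketch below shows how such figures can be derived from Likert answers. It assumes a 1 to 5 coding with a "Neutral" midpoint (Appendix A names only the scale endpoints) and the sample standard deviation (n - 1 denominator), since the study does not state which variant it used. The responses are made up.

```python
import statistics

# Assumed coding of the five-point scale; only the endpoints appear in Appendix A.
LIKERT = {
    "Strongly Disagree": 1,
    "Disagree": 2,
    "Neutral": 3,
    "Agree": 4,
    "Strongly Agree": 5,
}


def likert_summary(responses: list[str]) -> tuple[float, float]:
    """Mean and sample standard deviation, rounded to one decimal as in the table."""
    scores = [LIKERT[r] for r in responses]
    return round(statistics.mean(scores), 1), round(statistics.stdev(scores), 1)


# Made-up answers to one item, e.g. "Reduction in Coding Time".
answers = ["Agree", "Strongly Agree", "Agree", "Neutral"]
print(likert_summary(answers))
```

Switching to `statistics.pstdev` would give the population standard deviation instead. For small samples the two can differ noticeably, which is why the choice is worth reporting.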

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Alenezi, M.; Akour, M. AI-Driven Innovations in Software Engineering: A Review of Current Practices and Future Directions. Appl. Sci. 2025, 15, 1344. https://doi.org/10.3390/app15031344
