Abstract

Laminated panels are widely used in industry, and their quality inspection has traditionally relied on manual labor, which is time-consuming and error-prone. Automated detection can significantly improve efficiency and reduce human error. Existing object detectors mainly improve performance by updating model structures. Despite initial success, most of these methods become increasingly complex and time-consuming for industrial applications. They also neglect the object distributions in industrial datasets, especially for industrial laminated panels. These issues lead to missed and false detections in this scene. We therefore propose a prior-enhanced resolution-extended object detector framework (PERE) for industrial scenarios that addresses these issues while improving detection accuracy and efficiency. PERE explores the spatial relationships between objects and seeks latent information during forward propagation. It introduces the multi-class perceptual prior-enhanced network (MRPE) and the resolution-extended network (REN) to replace original modules in one-stage object detectors. MRPE extracts prior knowledge from the spatial distribution of objects in industrial scenes, mitigating false detections caused by feature similarities. REN incorporates super-resolution information during upsampling to minimize the risk of missing tiny targets. In addition, we built a new dataset, SPI, for studying this topic. Comprehensive experiments show that PERE significantly improves the efficiency and performance of object detection in industrial scenes.

1. Introduction

The laminated panel is an industrial assembly element. It offers low cost, high safety, and short construction cycles, so it plays a pivotal role in industrial construction. However, laminated panels can hardly be assembled directly, because their components differ in shape, orientation, and other details. Meanwhile, specific information such as the locations and categories of different items is vital for subsequent processes such as splicing and filtering. Efficient localization and classification of the components within laminated panels, such as trusses and steel bars, are therefore crucial for practical deployment.

Currently, locating the components of laminated panels relies heavily on manual methods, which are inefficient and labor-intensive for large-scale industrial production. Using vision algorithms to automate this screening brings many benefits. Among these algorithms, optical-based detectors have fewer parameters and are better suited to the task. Present optical-based object detectors fall into two categories: two-stage detectors and one-stage detectors. Two-stage detectors [1] first generate a large number of candidate boxes and then refine them through post-processing to obtain more accurate results, at the cost of longer processing time. One-stage detectors [2,3,4] instead process the input image directly and produce detection results quickly with the aid of predefined anchor boxes. Because they are fast and support end-to-end processing, one-stage detectors better suit current industrial needs.

One-stage object detectors are therefore widely used in industrial detection scenarios. Examples include lane detection and tracking at industrial sites [5] and the positioning and quality inspection of workshop accessories [6]. However, without adaptive tuning and optimization for these specific tasks, one-stage detectors can perform poorly. For instance, in tree detection, low contrast and uneven lighting between the background and tree regions, combined with large variation in tree scale, lead to poor classification and localization. A simple remedy is to correlate the context between categories by introducing local prior information into the feature generation process. Liu and Aayush improved pedestrian and cell detection, respectively, using pipelines that incorporate prior positional information [7,8]. These methods use global information from HOG features or cellular features during training. Although these approaches improve results, they still struggle to detect small objects with pixel dimensions less than in a high-resolution image of . As a result, detection accuracy in these cases often falls short. CCNet addresses this issue by introducing criss-cross attention, which gathers context in the horizontal and vertical directions for every pixel in the feature map [9]. Other methods apply improved channel-space dual-attention mechanisms, extra supervised branches, or joint training [10,11,12] to the original models. Inspired by these proven methods for specific industrial scenarios, we designed a new framework for laminated panels that reduces missed and false detections in industrial scenarios while improving detector performance. The framework fully exploits the latent spatial distribution within the images to enhance the original features.
The main difficulties of this task can be summarized as follows. Industrial laminated panel scenes have a uniform texture and color tone, and different objects resemble one another to some degree. This limited diversity in the dataset impedes the model’s ability to distinguish between image regions that share similar characteristics.

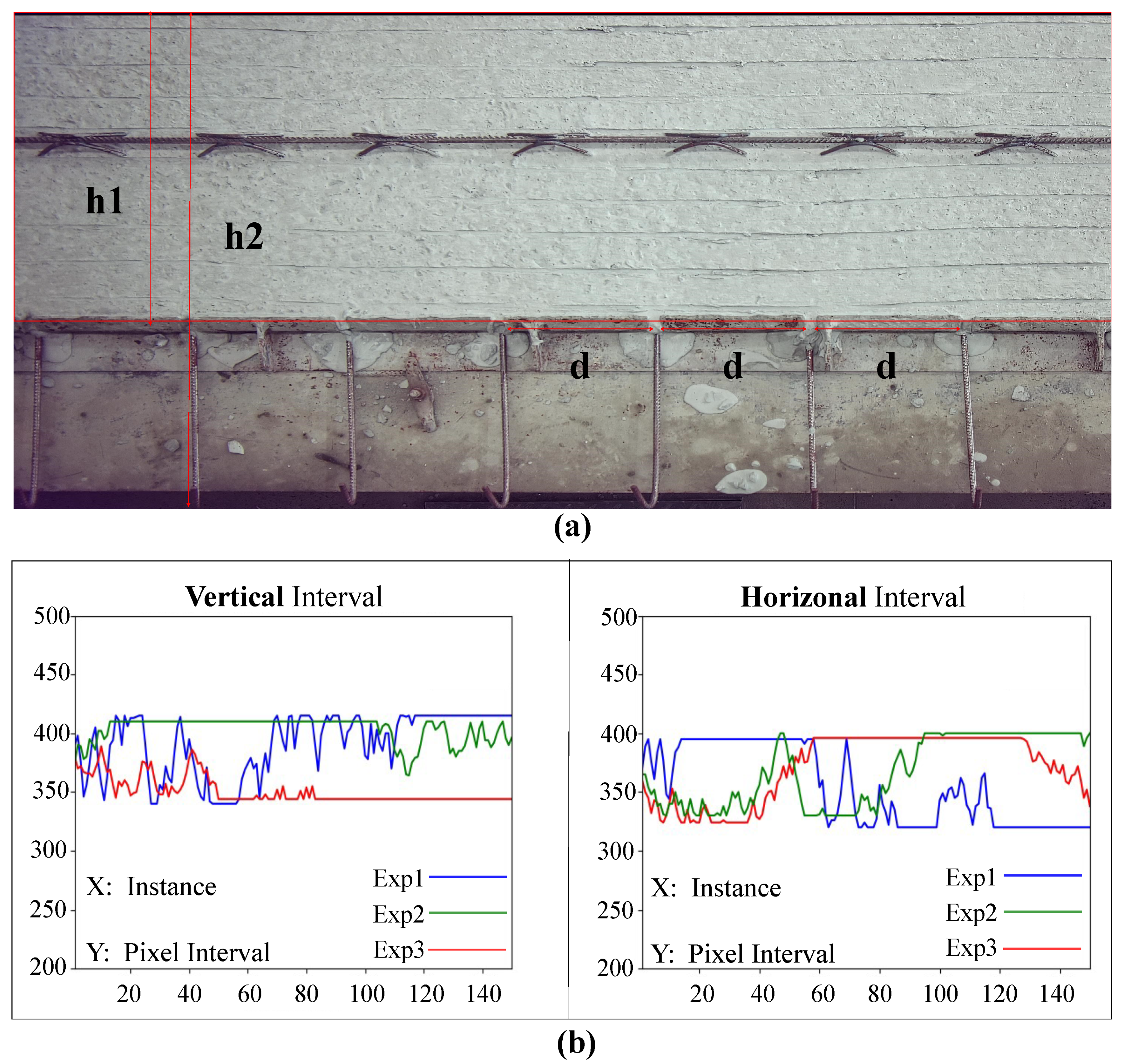

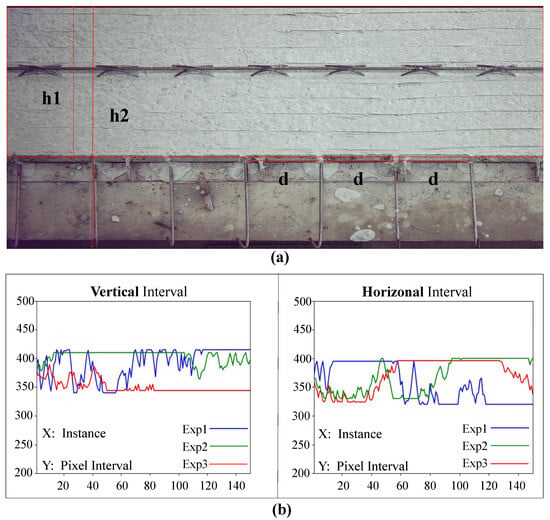

For deployment in industrial applications, introducing prior information into a one-stage detector is more practical and attractive. To achieve this goal, a CNN detector for industrial scenarios should learn to generate accurate positive proposals for different objects at the correct locations, adaptively, while avoiding duplicates. We therefore analyze the industrial scene, as shown in Figure 1a. Here, h1 is the length of the panel in the scene, h2 is the length of the entire image, and d is the spacing between the steel bars. The measurements show that h1 is at least half of h2 and that d is fixed. This analysis reveals prior information available in the laminated panel scene. The panel boundaries always occupy a large part of the original image, and the joints of the outer rebars are equally spaced. From these observations, we summarize the following priors. Adjacent outer bars are always aligned horizontally or vertically and have almost the same spacing. In addition, the area of a laminated panel is no more than 65 percent of the whole image. Figure 1b presents these results more intuitively. It shows 150 randomly selected edge rebars, with the distances between them calculated in both the horizontal and vertical directions.
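The priors summarized above are equal spacing of adjacent outer rebars, horizontal or vertical alignment, and bounded panel size. They can be checked on annotated boxes with a few lines of Python. The sketch below is a minimal illustration, not the authors' statistics script, and it makes several assumptions:

- Rebars are given as hypothetical pixel centers.
- The alignment tolerance `tol` is an arbitrary choice.
- The 0.65 area bound and the 0.5 height ratio come from the text.

```python
def adjacent_spacings(centers, axis):
    """Sort rebar centers along `axis` (0 = x, 1 = y) and return the gaps between neighbours."""
    coords = sorted(c[axis] for c in centers)
    return [b - a for a, b in zip(coords, coords[1:])]


def is_aligned(centers, axis, tol):
    """True if all centers share, within `tol` pixels, the coordinate orthogonal to `axis`."""
    other = [c[1 - axis] for c in centers]
    return max(other) - min(other) <= tol


def area_ratio(box, img_w, img_h):
    """Fraction of the image covered by a box given as (x1, y1, x2, y2)."""
    x1, y1, x2, y2 = box
    return (x2 - x1) * (y2 - y1) / (img_w * img_h)


# Hypothetical centers (x, y) of outer rebars along the top edge of a panel, in pixels.
top_edge = [(100, 50), (160, 51), (220, 49), (280, 50), (340, 50)]
gaps = adjacent_spacings(top_edge, axis=0)
spread = max(gaps) - min(gaps)           # 0 means perfectly equal spacing

# Hypothetical panel box in a 640 x 640 image.
panel = (100, 120, 540, 520)
height_ratio = (panel[3] - panel[1]) / 640            # h1 / h2
panel_ok = area_ratio(panel, 640, 640) <= 0.65 and height_ratio >= 0.5

print(gaps)                                  # [60, 60, 60, 60]
print(is_aligned(top_edge, axis=0, tol=3))   # True
print(panel_ok)                              # True
```

In practice, the spacing check would be run separately on horizontal and vertical edges, as in Figure 1b.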

Figure 1.

The laminated-panel bounding box is shown as the red rectangle. (a) Analysis of the spacing and length of objects within the scene, where h1 and h2 are the heights of the laminated panel and the entire image, respectively, and d is the spacing between steel bars. (b) Statistics of the spacing between steel bars.

We then propose a prior-enhanced resolution-extended network for industrial scenarios (PERE), whose modules are architectural modifications of a one-stage detector. Specifically, the multi-class perceptual prior-enhanced network (MRPE) integrates prior information from industrial scenes to reduce misdetections of similar objects. It introduces a scene graph and restricts the spatial extent of each category during training. MRPE applies category-specific restrictions and combines them with an improved attention module to enhance the features, improving detection efficiency. To address the poor representation of tiny objects, we also propose a resolution-extended network (REN). REN compensates for low-resolution objects by expanding feature maps at multiple scales. The features are first expanded in the horizontal and vertical directions. Their pixels are then shuffled into a much larger feature map. Finally, new weights are generated for further feature fusion. REN makes tiny objects more likely to be noticed by the network, improving localization accuracy. Aggregating the MRPE and REN modules with the original network yields more reliable confidence scores for each category.
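The expand, shuffle, and reweight sequence described above resembles sub-pixel (pixel-shuffle) upsampling. The NumPy sketch below illustrates that idea under explicit assumptions:

- The expansion is a 1×1 projection with hypothetical weights `expand_w`.
- The fusion weights come from a sigmoid gate that blends the shuffled map with a nearest-neighbour copy.
- The function name `ren_like_upsample` and the gating rule are our own illustrative choices, not the authors' REN described in Section 3.3.

```python
import numpy as np


def pixel_shuffle(x, r):
    """Rearrange (C*r*r, H, W) -> (C, H*r, W*r), same layout as torch.nn.PixelShuffle."""
    c_r2, h, w = x.shape
    c = c_r2 // (r * r)
    return (x.reshape(c, r, r, h, w)
             .transpose(0, 3, 1, 4, 2)      # -> (C, H, r, W, r)
             .reshape(c, h * r, w * r))


def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))


def ren_like_upsample(x, expand_w, r=2):
    """Hypothetical REN-style upsampling sketch (not the authors' implementation).

    x        : feature map of shape (C, H, W)
    expand_w : assumed 1x1 expansion weights of shape (C*r*r, C); learned in practice
    """
    # 1) Expand channels by r*r with a 1x1 projection over the channel axis.
    expanded = np.einsum("oc,chw->ohw", expand_w, x)
    # 2) Shuffle the extra channels into space: (C*r*r, H, W) -> (C, H*r, W*r).
    shuffled = pixel_shuffle(expanded, r)
    # 3) Generate per-pixel fusion weights and blend with a nearest-neighbour baseline.
    nearest = x.repeat(r, axis=1).repeat(r, axis=2)
    gate = sigmoid(shuffled.mean(axis=0, keepdims=True))   # (1, H*r, W*r), values in (0, 1)
    return gate * shuffled + (1.0 - gate) * nearest


rng = np.random.default_rng(0)
feat = rng.standard_normal((8, 20, 20))
weights = rng.standard_normal((8 * 4, 8)) * 0.1
out = ren_like_upsample(feat, weights, r=2)
print(out.shape)  # (8, 40, 40)
```

With `r=2`, this matches the upsampling factor used in the experiments.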

Specifically, the contributions of this paper are as follows:

- We propose the multi-class perceptual prior-enhanced network (MRPE) for industrial scenarios. It generates prior information from the object distribution to mitigate false detections caused by feature similarity between objects.

- We propose the resolution-extended network (REN) module. It provides additional information compensation for objects of varying appearance and scale, improving the detection quality of tiny objects.

- We build a new dataset named SPI that focuses on laminated panel scenes. It is representative of industrial scenarios, mainly because of the scale differences and occlusion between targets. Extensive experiments on industrial datasets demonstrate the superiority of the proposed MRPE and REN.

2. Related Work

2.1. Object Detection Using Prior Information

Incorporating prior information into object detection training brings many benefits to practical tasks. Probabilistic statistics are often used to formulate prior information [13], which enhances model performance by introducing compensations or constraints. A notable example is Faster R-CNN [14], a two-stage object detection framework whose region proposal network predicts proposals relative to predefined anchor boxes. It evolved from R-CNN [1], which generated proposals with selective search, to this learned proposal mechanism. This exemplifies how prior information can be generated and applied effectively. Liu et al. first used probability distributions of object locations in a motion object detection algorithm based on prior probabilities [15]. Similarly, Zhao et al. used infrared data to create approximate prior spectra for hyperspectral maritime vessel detection [16].

These methods focus primarily on the detector’s structure and overlook the spatial distribution patterns within the scenes. To address this, Zhang et al. proposed a separate spatial semantic fusion module. It leverages the attention mechanism to improve object detection and segmentation by fusing spatial and semantic information [17]. Yang introduced a data-driven approach to image perception that effectively uses spatial information for object recognition within scenes [18]. Efficiency is also crucial in applications that use prior information, so simplifying object detectors and reducing the number of parameters are essential. To this end, CBAM [19] integrates spatial and channel attention within a single attention module, while [20] incorporates the attention mechanism during training, particularly for scenarios with small datasets.

However, introducing prior information can cause the model to concentrate on the specific domain related to that information while neglecting information from the rest of the image. Dong and Qin highlighted this issue in their global attention-based multi-level feature fusion object detection algorithm [21]. To address it, multi-scale whole-scene surveillance object detection methods combine global attention with feature pyramids [22]. Although these methods have improved generic object detectors, they often overlook the diversity of scene objects, such as the different items found in specific industrial settings.

2.2. Object Detection in Industrial Scenarios

Object detection is increasingly used in industrial applications such as production and quality inspection. For instance, Nie and Xue highlighted the role of signal processing in 3D industrial object detection [23]. Tan et al.’s EfficientDet: Scalable and Efficient Object Detection [24] employed a bidirectional feature pyramid network (BiFPN) and a compound scaling method to address resource constraints.

In industrial applications, both operational efficiency and computational cost are critical considerations. Several one-stage detector models have therefore been developed to balance the two, including YOLOv5 [25], YOLOv6 [26], and PP-YOLO [27]. Liu et al. improved YOLOv5’s performance by replacing regular convolutional layers with SPD-Conv [28]. Li et al. released YOLOv6 v3.0, which comprehensively optimizes the model for better speed and accuracy [29].

Object detection tasks in industrial scenarios are diverse, so methods or modules that excel in one industrial scene often fail to produce good results in another. To overcome this, researchers analyze the specific difficulties of each task and draw inspiration from generic object detection models. For objects with large differences in resolution, most generic object detection methods use feature pyramids or multi-scale detection. For example, the RPN [30], first proposed in Faster R-CNN, handles objects with large scale differences by using anchors of multiple scales and aspect ratios. PANet [31] builds on the feature pyramid idea and proposes a network structure with decoupled modules and aggregated multiple branches. This structure approximates a multi-scale feature pyramid with smaller overhead.

Data augmentation methods can also help address these challenges. For instance, the review "Edge Computing Techniques and Applications" explores various enhancement filters, such as sharpening and edge enhancement filters, and their effects on image processing, particularly in edge detection and detail enhancement [32]. Additionally, methods that expand resolution can achieve similar results. Wang et al. used deeper convolutional networks for image super-resolution, enhancing image quality by learning mappings between high-resolution and low-resolution images [33]. However, these techniques are not yet widely adopted in industrial object detection scenarios.

2.3. Super-Resolution Framework

Integrating a super-resolution framework with object detectors offers a promising solution to practical challenges in the field. Recent advancements in object detection algorithms have incorporated super-resolution techniques, focusing on feature enhancement. For instance, "Image Super-Resolution Using Convolutional Neural Networks" explores the potential of neural networks in super-resolution [34], while “Image Super-Resolution Using Generative Proposal Networks” demonstrates the feasibility of applying super-resolution techniques in object detection [35]. These approaches generally treat super-resolution as an attentional mechanism to enhance object detection features.

However, the full potential of super-resolution has not yet been realized. Methods such as recurrent neural network super-resolution [36] and deeper convolutional super-resolution [37] aim to improve performance by adding convolutional layers or improving convolutional structures. Haar wavelet downsampling (HWD) [38] replaces conventional downsampling with a Haar wavelet transform that preserves more information, which eases the integration of super-resolution with object detection. These approaches improve feature resampling and reduce information loss during this phase.

However, these methods often involve a large number of parameters and heavy computation, which degrades runtime performance and makes them difficult to deploy in real industrial scenarios. To address this, CARAFE [39] predicts content-aware reassembly kernels for upsampling, and DySample [40] performs upsampling through dynamic point sampling, and both achieve some performance improvements. Even so, these methods still face challenges in industrial deployment, because their performance depends heavily on dataset size, which makes them less suitable for practical use.

3. Materials and Methods

In this paper, we propose a pipeline that combines a prior-enhanced network and a resolution-extended network. It addresses the loss of detection efficiency caused by large differences in object scale across classes in the datasets. The pipeline is built on YOLOv9 [41].

YOLOv5, released by the Ultralytics team, is an improved version of the YOLO object detection series. Its goal is to provide a fast, accurate, and easy-to-use detection tool. Compared with earlier versions, YOLOv5 has a lighter structure that raises inference speed while maintaining high accuracy. It uses a more efficient network design and runs on a range of hardware, including GPUs, CPUs, and mobile devices. It also detects small objects better than earlier versions. It supports real-time detection and suits fields such as industrial automation and security monitoring.

YOLOv9, a recent version of the series, builds on earlier models such as YOLOv5. It optimizes the network architecture, improves detection accuracy and speed, and performs particularly well on complex scenes, low-light environments, and small objects. Its new feature extraction techniques and enhanced adaptive capabilities make it more robust and faster in various industrial applications.

In Section 3.1, the PERE framework is applied to YOLOv9 by replacing some of its original modules. Section 3.2 then introduces the multi-class perceptual prior-enhanced network (MRPE) within this framework. Section 3.3 describes the resolution-extended network (REN), which improves the detection of tiny objects.

3.1. General Overview

Laminated panel detection can be framed as a multi-task learning problem in which classification and bounding-box regression are optimized jointly, as in many high-performing single-stage detectors. This helps the model identify and locate objects accurately. Specifically, take an input image of size H × W × C. After training, the single-stage detector outputs class scores of dimension N × K for the K categories, confidence scores of dimension N × 1, and box coordinates of dimension N × 4, where N denotes the total number of predicted boxes.
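For a rough sense of how large N is, the sketch below counts predictions for the 640 × 640 input used in this work. It rests on stated assumptions:

- The head predicts once per grid cell at output strides 8, 16, and 32 (anchor-free heads as in YOLOv8/YOLOv9). The text does not specify this.
- Each prediction has four box coordinates, one confidence score, and one score for each of the eight object categories mentioned in this section.

```python
def num_predictions(img_h, img_w, strides=(8, 16, 32)):
    """Count grid cells over all output scales.

    Assumption: one prediction per grid cell at each stride (anchor-free head);
    this is illustrative and not stated in the text.
    """
    return sum((img_h // s) * (img_w // s) for s in strides)


K = 8                        # eight object categories, as stated in this section
N = num_predictions(640, 640)
output_shapes = {"boxes": (N, 4), "confidence": (N, 1), "class_scores": (N, K)}
print(N)                     # 8400 = 80*80 + 40*40 + 20*20
print(output_shapes)
```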

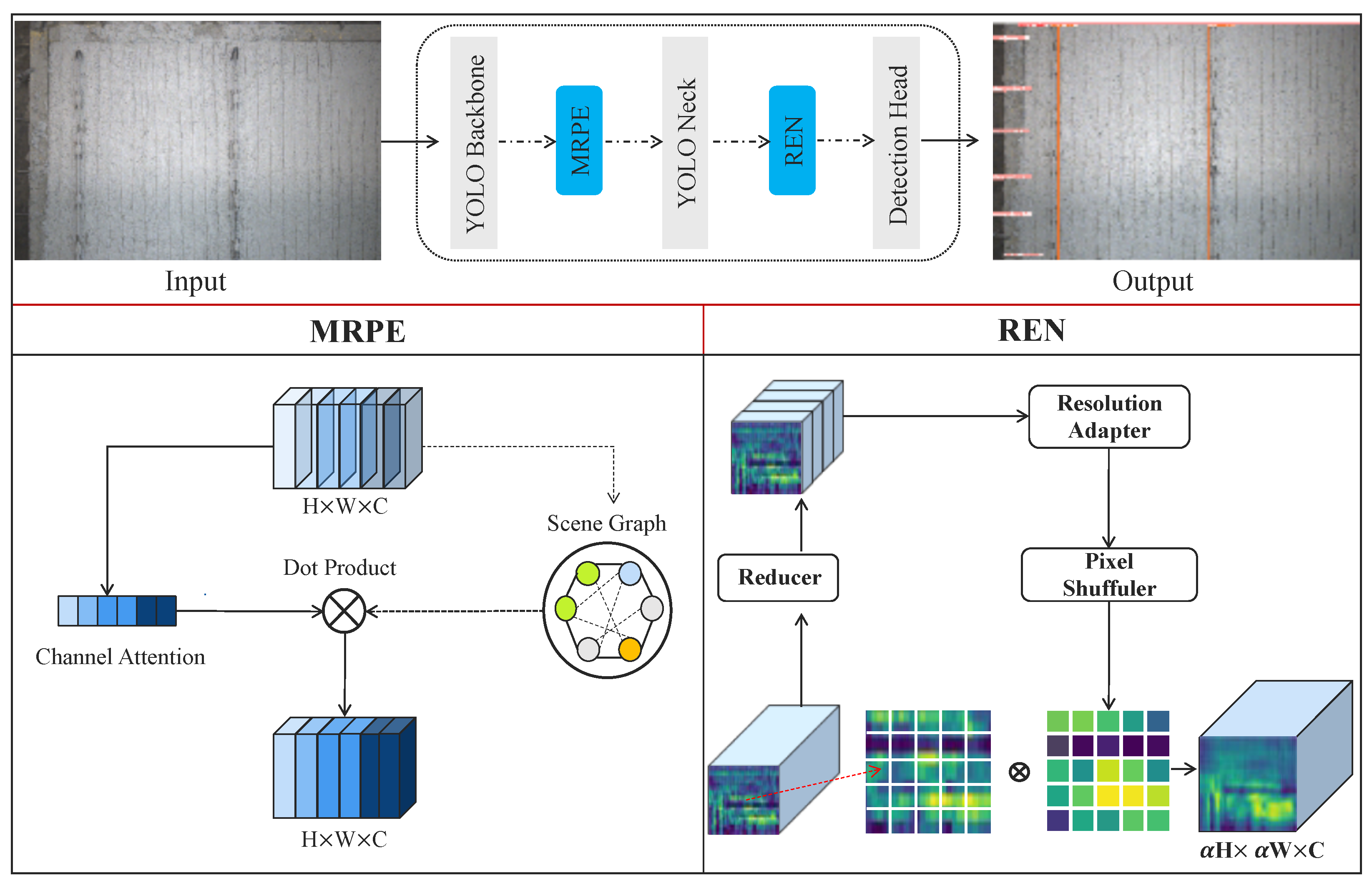

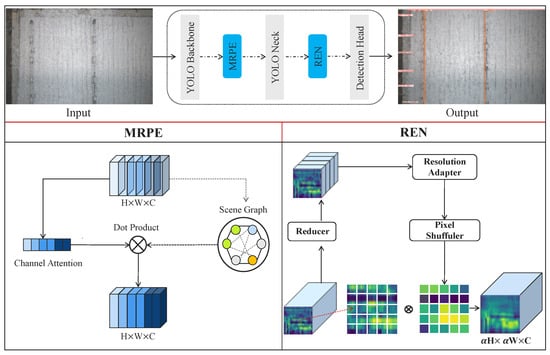

As shown in Figure 2, the detection pipeline consists of five parts:

- a backbone network with ADown and RepNCSPELAN4 modules for efficient feature extraction;
- a feature-processing module with MRPE;
- YOLONeck for further feature processing;
- a bottleneck with the resolution-extended network (REN), which applies high-resolution enhancement and concatenation to the extracted features;
- a detection head with six branches that output the final results.

Feature maps at all scales share the same detection head to reduce computational overhead. The shared detection head has two components: a regression branch and a classification branch. Like other popular single-stage detectors, YOLOv9 uses a loss with three components: bounding-box regression loss, confidence loss, and category classification loss. Denoting the total loss by $\mathcal{L}$, we express it as a weighted sum of these three components:

$$\mathcal{L} = \lambda_{\text{box}}\,\mathcal{L}_{\text{box}} + \lambda_{\text{conf}}\,\mathcal{L}_{\text{conf}} + \lambda_{\text{cls}}\,\mathcal{L}_{\text{cls}},$$

Here, $\mathcal{L}_{\text{box}}$ is the bounding-box regression loss, for which we use the IoU loss. $\mathcal{L}_{\text{conf}}$ is the confidence loss, computed with binary cross-entropy. $\mathcal{L}_{\text{cls}}$ is the category classification loss. Each term is computed from one output of the model:

- **Bounding box (BBox).** The bounding box gives the position of each target as a rectangle defined by four coordinates, which the model predicts by regression. $\mathcal{L}_{\text{box}}$ measures how accurately the predicted location and size match the ground truth.
- **Confidence score (Conf).** The confidence score reflects how certain the model is that the predicted box contains a target. It is usually derived from the probability that a target exists and lies between 0 and 1. A value of 1 means the model is very certain that a target is present, and 0 means it is almost certain that none is. $\mathcal{L}_{\text{conf}}$ trains the model to classify whether a given region contains an object.
- **Class score (Cls).** The class score is a vector giving the probability that each predicted box belongs to each category. Because eight categories of objects must be distinguished, $\mathcal{L}_{\text{cls}}$ uses multi-class cross-entropy, which ensures that each detected object is assigned the correct category.

The coefficients $\lambda_{\text{box}}$, $\lambda_{\text{conf}}$, and $\lambda_{\text{cls}}$ balance the contribution of each term to the total loss according to the needs of the task. In our case, they are set to 5, 1, and 1, respectively. The higher weight on the bounding-box regression loss emphasizes precise localization. This balance is essential for good detection performance, because it ensures that the model attends adequately to all three aspects: localization, detection confidence, and classification.
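The weighted loss can be made concrete with a small pure-Python sketch for a single prediction. It makes the following assumptions:

- The box term is a plain IoU loss, $1 - \text{IoU}$. YOLO implementations often use variants such as CIoU instead.
- The confidence term is binary cross-entropy on one score.
- The class term is multi-class cross-entropy on already-normalized probabilities.
- The weights are 5, 1, and 1, as stated in the text.

Function names are illustrative.

```python
import math


def iou(a, b):
    """IoU of two boxes given as (x1, y1, x2, y2)."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)

    def area(r):
        return (r[2] - r[0]) * (r[3] - r[1])

    union = area(a) + area(b) - inter
    return inter / union if union > 0 else 0.0


def bce(p, y, eps=1e-12):
    """Binary cross-entropy for one confidence score p in (0, 1) and target y in {0, 1}."""
    p = min(max(p, eps), 1 - eps)
    return -(y * math.log(p) + (1 - y) * math.log(1 - p))


def cross_entropy(probs, target):
    """Multi-class cross-entropy for one box; probs sums to 1, target is a class index."""
    return -math.log(probs[target])


def total_loss(pred_box, gt_box, conf, obj, cls_probs, cls_target,
               w_box=5.0, w_conf=1.0, w_cls=1.0):
    l_box = 1.0 - iou(pred_box, gt_box)   # plain IoU loss
    l_conf = bce(conf, obj)
    l_cls = cross_entropy(cls_probs, cls_target)
    return w_box * l_box + w_conf * l_conf + w_cls * l_cls


# Perfect box, confidence 0.5 on a positive, uniform scores over 8 classes.
loss = total_loss((10, 10, 50, 50), (10, 10, 50, 50), 0.5, 1, [1 / 8] * 8, 3)
print(round(loss, 4))  # 2.7726, i.e. ln 2 + ln 8 = ln 16
```

Because the box term is multiplied by 5, a drop in IoU from 1 to 0.8 adds 1.0 to the loss, roughly as much as halving a positive's confidence from about 1 to 0.5 (which adds about 0.69) plus some extra.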

Figure 2.

General overview of the prior-enhanced resolution-extended object detector for industrial scenarios. The structure follows the modular architecture of YOLOv9 and includes a main detection branch and an auxiliary detection branch. We designed the MRPE module to replace the original ADown module in the auxiliary branch. MRPE is placed between the YOLOBackbone and YOLONeck. We also replaced the original upsampling module with the new REN module, whose upsampling factor is set to 2 in our experiments. The input image is first processed by the YOLOBackbone to extract basic features. These features are then refined by the MRPE, YOLONeck, and REN modules to produce more accurate detection results for subsequent tasks.

In the original YOLOv9 architecture, the classification and regression branches share the same structure. YOLOv9 uses six detection branches that together produce the final detection results. We retain most of the modules within these six branches, keeping them consistent with the original YOLOv9 architecture. However, we aim to improve detection efficiency, especially in scenes containing both large and extremely small objects. To this end, we introduce the multi-class perceptual prior-enhanced network (MRPE). MRPE incorporates prior information to improve the detector’s ability to perceive and differentiate between categories. It also enhances the features extracted from images, improving overall detection accuracy. We replace some of the ADown modules in YOLOv9 with MRPE. Although functional, these ADown modules have been found to slow execution in industrial settings, which makes them less suitable for high-performance applications.

Furthermore, using high-resolution input images has been shown to improve detection tasks in specialized datasets, particularly for identifying small-scale objects. However, directly introducing higher-resolution input images into the detection process significantly increases the computational load, which can degrade the overall performance of the detection algorithm. To address this challenge, we propose a resolution-extended network (REN), which integrates a super-resolution approach into the feature processing pipeline of the object detection model. REN achieves this while adding minimal computational overhead. In our implementation, we replace all the upsampling modules within the YOLOv9 network with REN. The original YOLOv9 upsampling relied on a nearest-neighbor interpolation strategy, which failed to consider contextual relationships within the image. In contrast, our REN module preserves these contextual relationships, leading to better feature resolution and, consequently, more accurate detection results. Detailed explanations of the MRPE and REN modules are provided in Section 3.2 and Section 3.3, respectively.
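The NumPy sketch below makes the contrast concrete by showing why nearest-neighbour upsampling is context-free. The text attributes this strategy to the original YOLOv9 upsampling. Each r × r output block is a copy of one input pixel, so changing a neighbouring pixel leaves that block untouched. The sketch illustrates only this baseline, not REN.

```python
import numpy as np


def nearest_upsample(x, r=2):
    """Nearest-neighbour upsampling of an (H, W) map by an integer factor r."""
    return x.repeat(r, axis=0).repeat(r, axis=1)


feat = np.array([[1.0, 5.0],
                 [3.0, 7.0]])
up = nearest_upsample(feat, 2)
print(up)
# [[1. 1. 5. 5.]
#  [1. 1. 5. 5.]
#  [3. 3. 7. 7.]
#  [3. 3. 7. 7.]]

# Changing a neighbouring input pixel does not affect the top-left output block.
feat2 = feat.copy()
feat2[0, 1] = 100.0
up2 = nearest_upsample(feat2, 2)
print(np.array_equal(up[0:2, 0:2], up2[0:2, 0:2]))  # True
```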

3.2. Multi-Class Perceptual Prior Enhanced Networks

In inspection tasks, the classification subtask must distinguish the inspected categories from other background elements. In a laminated panel inspection project, for example, this means identifying categories such as reinforcement bars and outer reinforcement bars against backgrounds such as the panel boundary. The main challenges are dense occlusion and small object size. In addition, in traditional object detection, features from different locations and scales do not contribute equally to the final result. Much of the regular pattern information in a specific scene is also overlooked. Together, these factors prevent the network from fully utilizing important features.

These issues cause varying levels of false detections. The problem becomes more pronounced in single-stage object detectors, which omit the candidate box (region proposal) step and therefore produce less accurate results. A common strategy for these challenges is to use fine-tuned attention modules. Such modules aim to make better use of global information by adjusting the focus of the neurons’ receptive fields.

However, this solution is not always effective for laminated panel detection. The effectiveness of an attention mechanism must be validated through extensive experiments with different models and datasets. In practice, mainstream attention-based methods have not shown significant improvements on tasks such as laminated panel detection. In particular, conventional attention modules struggle with the large pixel-size gap between categories such as long rebar and line, and in some cases these categories even conflict with each other. As a result, even with attention, the detector often remains focused on local information. This limitation can cause false detections of objects with similar features, reducing the detector’s overall accuracy and performance. Because important contextual features are not fully utilized, the detector also has difficulty distinguishing between categories in complex scenarios.

Considering the above problems, we propose exploring more effective attention modules to guide the model towards better localization. Inspired by the concept of scene graph enhancement used in recurrent neural networks and the idea of utilizing prior information, we aim to improve feature representation by creating and aligning a scene graph with the training data. By integrating prior information and attention mechanisms, we enhance the model’s ability to capture and utilize key features.

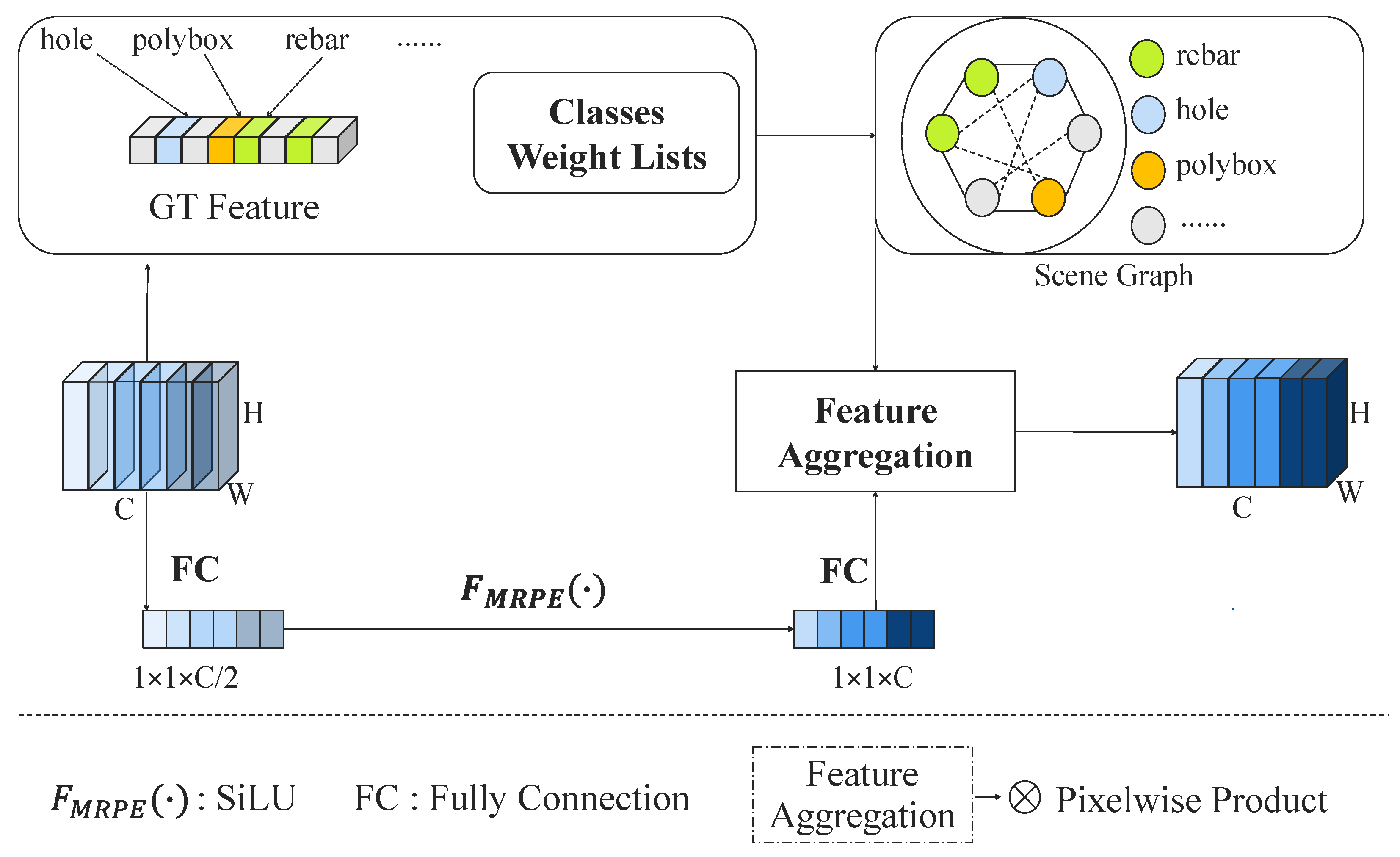

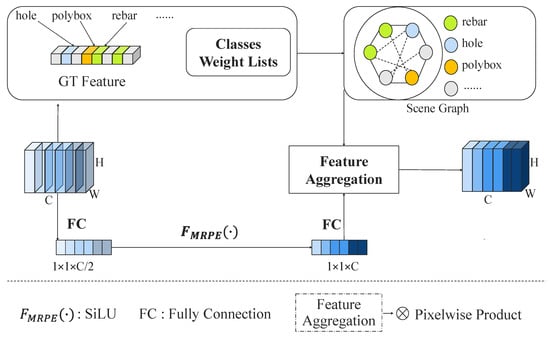

Our approach generates prior information specific to the laminated panel scenario to assist feature extraction, as illustrated in Figure 3. The architecture includes a main feature processing module and an auxiliary branch. In the main branch, a fully connected (FC) layer compresses the original feature map into a one-dimensional vector. An FC layer linearly transforms its input, so it can perform both feature fusion and dimensionality reduction. A pixelwise product, that is, element-wise multiplication between tensors, then refines the feature maps and focuses attention on crucial image regions. The scale of these operations depends on the input image size, which in this case is 640 × 640 pixels, and predictions are made at this resolution. An activation function is then applied to give the features a more flexible representation.
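One common way to combine FC compression, an activation, and a pixelwise product is squeeze-and-excitation (SE) style channel gating, sketched below in NumPy. This is a swapped-in, well-known technique used for illustration, not the exact MRPE main branch. The following details are assumptions:

- Global average pooling produces the one-dimensional vector.
- The FC weights `w1` and `w2` and the reduction ratio of 4 are hypothetical.
- The ordering of the activation may differ from Figure 3.

```python
import numpy as np


def channel_gate(x, w1, w2):
    """SE-style channel recalibration sketch for a feature map x of shape (C, H, W).

    w1: (C // reduction, C) and w2: (C, C // reduction) are hypothetical FC weights.
    """
    squeezed = x.mean(axis=(1, 2))                 # global average pool -> (C,)
    hidden = np.maximum(w1 @ squeezed, 0.0)        # FC + ReLU
    gate = 1.0 / (1.0 + np.exp(-(w2 @ hidden)))    # FC + sigmoid -> (C,), values in (0, 1)
    return x * gate[:, None, None], gate           # element-wise product per channel


rng = np.random.default_rng(0)
C, H, W, reduction = 16, 8, 8, 4
x = rng.standard_normal((C, H, W))
w1 = rng.standard_normal((C // reduction, C)) * 0.1
w2 = rng.standard_normal((C, C // reduction)) * 0.1
y, gate = channel_gate(x, w1, w2)
print(y.shape, gate.shape)  # (16, 8, 8) (16,)
```

Because every gate lies in (0, 1), the product can only attenuate channels. That is why such blocks are usually described as recalibrating, rather than amplifying, feature responses.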

Figure 3.

A general description of MRPE.

In the auxiliary branch, we generate prior information to reflect the existing scene graph. This involves two types of prior constraints applied during training to improve detection accuracy. The first type aligns prior information with ground truth (GT) features, representing different categories as nodes in the scene graph. The second type represents relationships between objects in 2D space as edges in the scene graph. The scene graph is then fed into the Feature Aggregation module for further processing. This process results in enhanced feature representations that improve overall detection performance.
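The node and edge construction described above can be sketched as follows. Nodes are ground-truth objects with their categories. Edges store the 2D offset between two objects and whether they are horizontally or vertically aligned, which matches the alignment prior from Section 1. The category names, the `align_tol` threshold, and the edge format are hypothetical illustrations. The sketch does not reproduce how MRPE encodes the graph for the Feature Aggregation module.

```python
from dataclasses import dataclass
from itertools import combinations


@dataclass
class Node:
    category: str   # hypothetical category label
    box: tuple      # (x1, y1, x2, y2) in pixels


def center(box):
    return ((box[0] + box[2]) / 2, (box[1] + box[3]) / 2)


def build_scene_graph(nodes, align_tol=5.0):
    """Hypothetical scene-graph construction from ground-truth boxes.

    Each edge is (i, j, dx, dy, relation), where relation is "horizontal" if the two
    centers share a row within align_tol pixels, "vertical" if they share a column,
    and "other" otherwise.
    """
    edges = []
    for i, j in combinations(range(len(nodes)), 2):
        (xi, yi), (xj, yj) = center(nodes[i].box), center(nodes[j].box)
        dx, dy = xj - xi, yj - yi
        if abs(dy) <= align_tol:
            relation = "horizontal"
        elif abs(dx) <= align_tol:
            relation = "vertical"
        else:
            relation = "other"
        edges.append((i, j, dx, dy, relation))
    return edges


nodes = [Node("outer_rebar", (90, 40, 110, 60)),
         Node("outer_rebar", (150, 41, 170, 61)),
         Node("outer_rebar", (90, 100, 110, 120)),
         Node("truss", (200, 200, 400, 230))]
edges = build_scene_graph(nodes)
for e in edges:
    print(e)
```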

Without pre-generated candidate boxes, one-stage object detectors may be “less confident” when identifying similar features, especially in scenes with a uniform color and similar texture. Moreover, when a single-stage detector learns to annotate the features of a region, it attends only to the local receptive field and ignores contextual relationships. This leads to a certain degree of false detection. We therefore design an attention module that focuses on channel information while introducing spatial information from the scene graph. The module lets the model attend fully to spatial feature relationships as well as to the differences and similarities between channels.

Specifically, we first reshape the feature map while retaining all of the image’s feature information. We then apply an activation transformation and a linear mapping to the feature map to incorporate spatial feature information. The result serves as weights for aggregation with the original features, so that the model learns coarse but information-rich features. Building on this, we reuse the same modules with tighter parameter constraints, giving the model more opportunity to refine the features. During training, the learned features become progressively more refined, and objects with large scale differences become easier to distinguish. This strategy keeps the feature updates in a favorable direction. As a result, objects with similar features but different classes are regressed well, because any bias introduced in one stage is eliminated by the stricter refinement module in the next layer. We name this category-sensitive object feature processing module, which applies prior information, the Multi-Class Perceptual Prior-Enhanced Network (MRPE).
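The reshape, activation, linear mapping, and reweighting steps, repeated with a stricter second pass, can be illustrated with a coordinate-attention-style sketch. This is a swapped-in technique (directional pooling along rows and columns) chosen because the laminated panel priors concern horizontal and vertical alignment. It is not the authors' MRPE. The linear weights `w_h` and `w_w` are hypothetical. Modeling the "tighter" stage as a larger `sharpness`, which pushes the sigmoid weights closer to 0 or 1, is likewise our own assumption.

```python
import numpy as np


def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))


def directional_reweight(x, w_h, w_w, sharpness=1.0):
    """Coordinate-attention-style reweighting of x with shape (C, H, W).

    Pools along W to get per-row descriptors (C, H) and along H to get per-column
    descriptors (C, W), maps each with a linear layer (hypothetical weights of shape
    (C, C)), and uses the sigmoid outputs as weights on the input features.
    A larger `sharpness` stands in for a stricter refinement stage (an assumption).
    """
    row_desc = x.mean(axis=2)                       # (C, H): horizontal structure
    col_desc = x.mean(axis=1)                       # (C, W): vertical structure
    a_h = sigmoid(sharpness * (w_h @ row_desc))     # (C, H), values in (0, 1)
    a_w = sigmoid(sharpness * (w_w @ col_desc))     # (C, W), values in (0, 1)
    return x * a_h[:, :, None] * a_w[:, None, :]


rng = np.random.default_rng(0)
C, H, W = 8, 16, 16
x = rng.standard_normal((C, H, W))
w_h = rng.standard_normal((C, C)) * 0.1
w_w = rng.standard_normal((C, C)) * 0.1

coarse = directional_reweight(x, w_h, w_w, sharpness=1.0)         # first, coarse pass
refined = directional_reweight(coarse, w_h, w_w, sharpness=4.0)   # reused, stricter pass
print(coarse.shape, refined.shape)  # (8, 16, 16) (8, 16, 16)
```

Reusing the same weights in both passes mirrors the text's reuse of the module. In a trained network, each stage would normally have its own learned parameters.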

The MRPE block adaptively recalibrates the channel feature responses, allowing the model to focus on the relationship between local and global features. Here, recalibration means reweighting the feature map responses at different resolutions so that the model prioritizes the important regions of the input image. By modeling the interdependencies between channels, the MRPE block enhances the CNN representation, and its multiple activation and normalization operations allow the model to learn the similar features found in the laminated panel detection scenario and improve generalization. We also invoke a branch that generates the scene graph to explore how the module’s prior information learning affects model accuracy. The terms used here can be summarized as follows. Recalibration adjusts intermediate responses, and through training the resulting bounding boxes, class probabilities, and confidence scores, to improve accuracy. Modeling refers to developing and training the model, selecting an appropriate architecture, and validating its performance. Normalization scales input and intermediate data to a consistent range, which improves training stability and convergence. The detection scenario defines the real-world conditions, such as lighting, object types, and environmental challenges, that influence model design. Finally, prior information learning allows the model to exploit previously acquired knowledge, which improves generalization and accuracy, especially when data are limited or conditions are complex. Together, these elements make the model more accurate, stable, and adaptable. We implement the MRPE by merging the attention branch and attaching the scene graph branch.

Put more simply, the MRPE block is designed to improve the model’s ability to focus on relevant features by adaptively recalibrating the channel feature responses; that is, it adjusts how the convolutional neural network (CNN) weighs and processes its different channels (features). This lets the model capture both local and global feature relationships, which is essential for tasks that require detailed, context-aware analysis, such as laminated panel detection.

By modeling the interdependencies between channels, the MRPE block enhances the CNN’s ability to represent more complex features. It achieves this by utilizing multiple activation and normalization operations, which help the model learn similar features more effectively, improving its generalization ability. This is crucial in real-world detection scenarios where variations across data are common, allowing the model to better adapt to new, unseen data.

Furthermore, the MRPE block includes a branch dedicated to generating a scene graph, which represents the relationships and context between objects in a scene. By exploiting prior information, that is, knowledge obtained from previous tasks or data, the scene graph branch improves the model’s decisions and accuracy. The final MRPE merges the attention mechanism, which directs the model toward relevant features, with the scene graph branch, so that the model learns not only individual features but also how these features relate to one another in a broader context. Specifically, we design three restriction rules as prior information, as shown in Figure 3. They were derived through statistical analysis from two observations: laminated panels are mass-produced from a uniform mold, and the spacing between the outer reinforcement bars and the intersection points of a laminated panel is constant. In addition, most categories always lie inside the bounding box, and neighboring outer reinforcement bars and lines are always separated by the same spacing. A structure tailored to this specific task is therefore required, and we introduce a simple and effective method for it. The prior information is digitized and normalized into the entries of a matrix. During the update phase, the prior information is treated as the edges of the scene graph, while the objects are treated as its nodes.
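To illustrate how a spacing prior might be digitized and normalized into a matrix whose entries serve as scene-graph edges, the sketch below builds a row-normalized adjacency matrix from object centers. It is a hypothetical encoding of only one of the rules above (equal spacing between neighboring outer bars); the function name, the exponential weighting, and the tolerance `tol` are our assumptions, not the paper’s formulation.

```python
import numpy as np

def spacing_prior_adjacency(centers, spacing, tol=0.5):
    """Hypothetical scene-graph adjacency built from the equal-spacing prior.

    centers: (N, 2) box centers of detected objects (the graph nodes).
    spacing: expected distance between neighboring outer bars, in pixels.
    An edge u -> v gets weight exp(-deviation) when the pair's distance is
    within `tol` (relative) of the expected spacing, otherwise 0.
    """
    centers = np.asarray(centers, dtype=float)
    dist = np.linalg.norm(centers[:, None, :] - centers[None, :, :], axis=-1)
    dev = np.abs(dist - spacing) / spacing          # relative deviation from the prior
    weights = np.where(dev < tol, np.exp(-dev), 0.0)
    np.fill_diagonal(weights, 0.0)                  # no self-edges
    # Normalize each row to sum to 1; rows without any edge stay all-zero
    row_sum = weights.sum(axis=1, keepdims=True)
    return np.divide(weights, row_sum, out=np.zeros_like(weights), where=row_sum > 0)

# Four bars 100 px apart on one line, plus one unrelated object far away
A = spacing_prior_adjacency([[0, 0], [100, 0], [200, 0], [300, 0], [900, 40]], spacing=100)
print(np.round(A, 2))
```

Objects that fit the regular spacing become strongly connected, while an object that violates the prior receives no edges, so it cannot borrow support from the panel’s regular structure during message passing.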

We first define a basic block, which consists of two parts:

where the first factor represents the feature map generated by the main branch of MRPE and H is the output of the auxiliary branch. The block output is then obtained through a simple dot product of the two feature maps, and the main-branch feature map can be formulated as:

where X represents the original features and FC denotes the fully connected layer. A fully connected layer is one in which every input is connected to every output; it computes a weighted sum of its inputs followed by an activation function and is typically used in the final stages of a neural network to make decisions or classifications based on the learned features. The remaining term is the excitation function designed for this module, which learns the nonlinear relations of the network through an improved version of the SiLU activation function [42]. The output of the main branch is generated through these steps. In parallel, a second process is carried out, formulated as:

where X is the initial feature map, the prior enhancement function is implemented with the scene graph, and A is the adjusted adjacency matrix in the GNN [43]. The inner function of the GNN module can be formulated as the following equations:

Equations (5)–(8) represent the matrix generation, weight generation, message calculation, and feature update steps of the GNN, respectively. Equation (5) defines the adjusted adjacency matrix A, which encodes the relationships between nodes in the scene graph. Its elements represent the similarity or dependency between pairs of nodes u and v and are used for information propagation in the subsequent GNN steps. Equation (6) computes the attention score between nodes u and v. The feature vectors of the two nodes are first combined through a weighted operation with a weight matrix W, and the combined result is passed through a nonlinear activation function such as SiLU to obtain a transformed feature representation. A softmax function then normalizes the attention scores over all of node u’s neighbors, indicating how much importance each neighboring node should receive during information propagation. Equation (7) calculates the message for node v by aggregating information from its neighbors: the attention scores from the previous step weight the feature vectors of the neighboring nodes u, and these weighted vectors are summed to form the message that node v receives. Finally, Equation (8) computes the updated feature vector of node v by combining its original feature with the aggregated message. The combined vector is passed through a linear transformation with a weight matrix and a bias term, followed by an activation function such as ReLU. The updated feature vector therefore captures both the local features of the node and the global context provided by its neighbors, much like a forward propagation step in a conventional neural network.

Together, these steps—matrix generation, attention-based weight calculation, message aggregation, and feature updating—enable the GNN to effectively propagate information across nodes and update their features based on both local and global context, which enhances the model’s performance in tasks such as detection and classification.
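A minimal NumPy sketch of one round of the four GNN steps, written from the prose description above. The assumptions are ours, not the paper’s: the attention score is a GAT-style scalar obtained by applying W to the concatenated pair of node features, then SiLU, then a projection vector `a`; self-loops are added so that every node has at least one neighbor; the softmax runs over the senders u of each receiving node v; and the adjacency weights scale the messages. The names `gnn_layer`, `a`, `W_upd`, and `b` are hypothetical.

```python
import numpy as np

def silu(x):
    return x / (1.0 + np.exp(-x))

def gnn_layer(H, A, W, a, W_upd, b):
    """One round of attention-based message passing.

    H: (N, d) node features; A: (N, N) prior adjacency, A[u, v] = weight of edge u -> v.
    W: (2d, k) and a: (k,) produce attention scores; W_upd: (2d, d_out), b: (d_out,) update.
    """
    n = H.shape[0]
    # Matrix generation: add self-loops so every node has at least one neighbor
    A_hat = A + np.eye(n)
    # Weight generation: score every pair from [h_u || h_v], then SiLU and projection
    pairs = np.concatenate([np.repeat(H[:, None, :], n, axis=1),   # pairs[u, v, :d] = h_u
                            np.repeat(H[None, :, :], n, axis=0)],  # pairs[u, v, d:] = h_v
                           axis=-1)
    scores = silu(pairs @ W) @ a                       # (N, N)
    scores = np.where(A_hat > 0, scores, -np.inf)      # keep only existing edges
    scores = scores - scores.max(axis=0, keepdims=True)
    alpha = np.exp(scores)
    alpha = alpha / alpha.sum(axis=0, keepdims=True)   # softmax over senders u of each v
    # Message calculation: m_v = sum_u alpha[u, v] * A_hat[u, v] * h_u
    M = (alpha * A_hat).T @ H
    # Feature update: linear map of [h_v || m_v] plus bias, then ReLU
    H_new = np.maximum(np.concatenate([H, M], axis=1) @ W_upd + b, 0.0)
    return H_new, alpha

rng = np.random.default_rng(0)
N, d, k, d_out = 5, 8, 16, 8
H = rng.standard_normal((N, d))
A = np.zeros((N, N))
A[0, 1] = A[1, 0] = 1.0   # nodes 0-1 linked
A[1, 2] = A[2, 1] = 1.0   # nodes 1-2 linked; nodes 3 and 4 are isolated
H_new, alpha = gnn_layer(H, A,
                         rng.standard_normal((2 * d, k)) * 0.1,
                         rng.standard_normal(k),
                         rng.standard_normal((2 * d, d_out)) * 0.1,
                         np.zeros(d_out))
print(H_new.shape)  # (5, 8)
```

Masking with `-np.inf` before the softmax guarantees that nodes without a prior edge receive exactly zero attention, so the scene-graph priors fully control which objects can exchange information.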

3.3. Resolution-Extended Network

The MRPE enhances feature updates by leveraging both channel attention and spatial information from scene graphs, which reduces false detections between different objects. It focuses the model’s attention on specific regions of the image, which helps to minimize incorrect detections. However, this approach has a drawback: objects that are small or have unusual shapes may not be well represented. Because images are usually resized to a fixed dimension to limit computational cost, some features become elongated or distorted. This distortion particularly affects objects with small scales or extreme aspect ratios. In laminated panel scenes, categories such as rebar and outer rebar are especially affected, because their features can become too compressed to be detected accurately.

To address this issue, we propose the resolution-extended network (REN), which integrates high-resolution feature information at various scales into the original feature map to produce a more accurate image representation. The REN takes as input the features fused by the MRPE and ELAN [44] modules and replaces the upsampling module in YOLOv9 to improve upsampling performance.

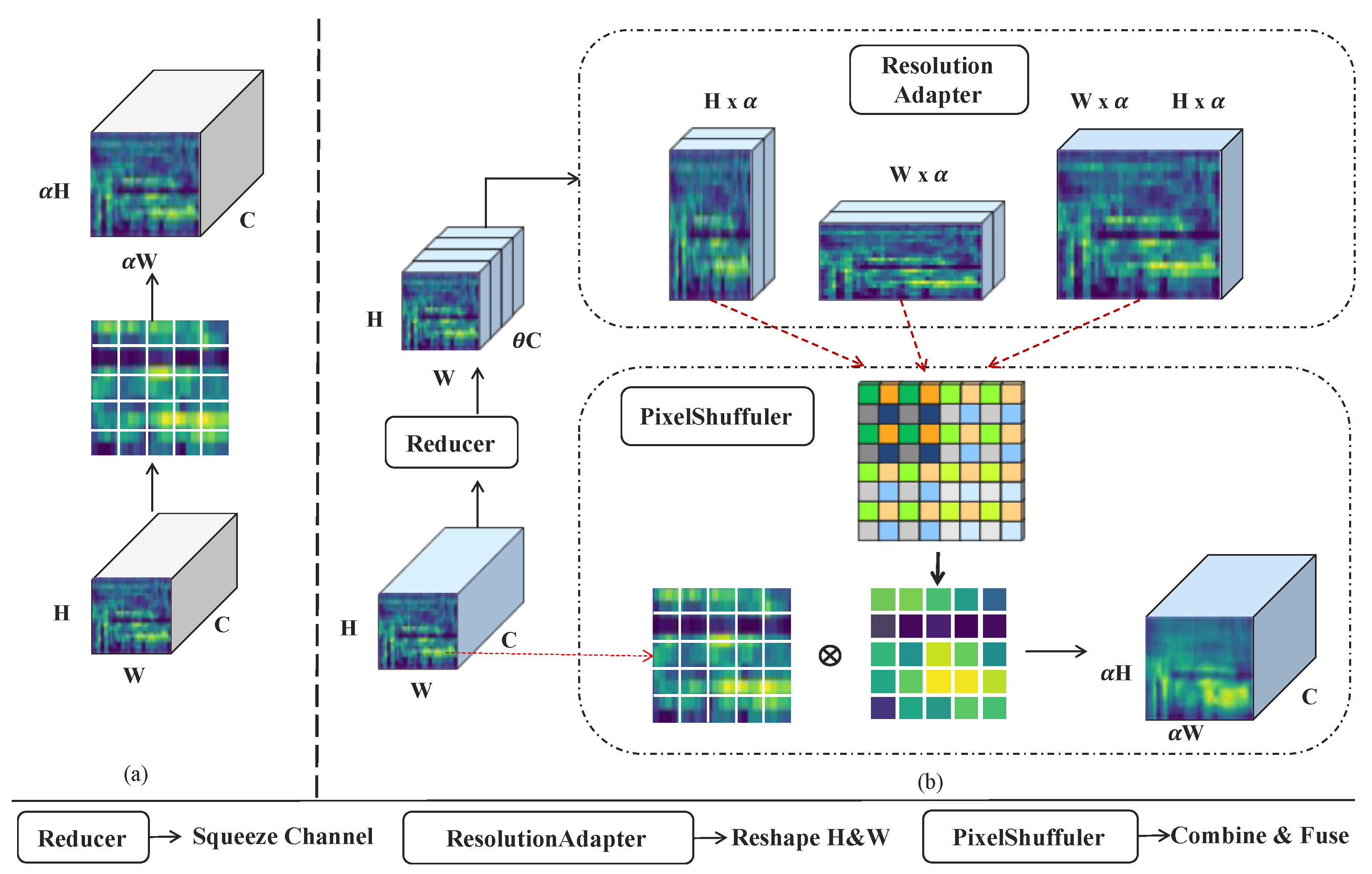

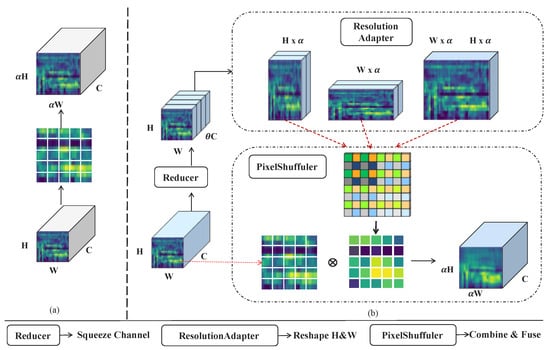

The MRPE module captures instance-specific features in targeted regions, while the ELAN module preserves and integrates feature processing across different stages, enabling seamless collaboration among modules. This approach ensures that architectures designed for various detection tasks contribute rich feature information from diverse perspectives. Although some information loss is unavoidable, it is mitigated by the high-resolution upsampling provided by the REN. Figure 4 illustrates the REN’s complete pipeline, showcasing its operation across three different resolution scales. In Figure 4, the reducer downsamples feature maps using a kernel (e.g., 2 × 2 or 3 × 3) with a stride of 2, reducing spatial dimensions (e.g., from 640 × 640 to 320 × 320 pixels) while retaining essential information. The resolution adapter adjusts image or feature map resolution using interpolation (e.g., bilinear or nearest-neighbor) to match the model’s input size, such as resizing from 2688 × 1792 to 640 × 640 pixels. The shuffler enhances feature learning by permuting channels to improve feature diversity and generalization, especially in deeper network layers, helping the model capture complex patterns.
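The reducer and shuffler described above can be sketched in a few lines of NumPy. This is a minimal illustration under stated assumptions: 2 × 2 average pooling with stride 2 stands in for the (presumably learned) strided reducer, the shuffler is a ShuffleNet-style channel shuffle, and `conv_out_size` applies the standard output-size formula to confirm the 640 to 320 reduction quoted in the text, assuming padding 1 for the 3 × 3 kernel. All function names are hypothetical.

```python
import numpy as np

def conv_out_size(n, k, s, p=0):
    """Standard output size of a strided convolution or pooling window."""
    return (n + 2 * p - k) // s + 1

def reduce_2x2(x):
    """Stand-in reducer: 2x2 average pooling with stride 2 on a (C, H, W) map."""
    c, h, w = x.shape
    x = x[:, : h - h % 2, : w - w % 2]            # drop an odd trailing row/column
    return x.reshape(c, h // 2, 2, w // 2, 2).mean(axis=(2, 4))

def channel_shuffle(x, groups):
    """ShuffleNet-style shuffle: interleave channels across `groups` groups."""
    c, h, w = x.shape
    if c % groups:
        raise ValueError("channels must be divisible by groups")
    return x.reshape(groups, c // groups, h, w).transpose(1, 0, 2, 3).reshape(c, h, w)

print(conv_out_size(640, 2, 2), conv_out_size(640, 3, 2, p=1))  # 320 320
print(channel_shuffle(np.arange(6.0).reshape(6, 1, 1), 2).ravel().tolist())  # [0.0, 3.0, 1.0, 4.0, 2.0, 5.0]
```

The shuffle is a pure permutation, so it adds no parameters and costs almost nothing, which is why it is a cheap way to mix information between channel groups.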

Figure 4.

(a) shows a common detection model that uses a straightforward two-step process for upsampling the tensor. In contrast, (b) presents the REN architecture, which includes two main steps: resolution adaptation and feature aggregation. First, the original features are encoded, stretched, and flattened for less calculation. These features are then expanded to twice their original length or width and combined with those from the traditional upsampling module for improved feature fusion.

To reduce computational complexity, the encoder first compresses the original feature map by a reduction factor, which is fixed at 0.5 in our experiments. Instead of directly reshuffling all pixels into a super-resolution tensor, which would disregard the alignment between the initial and input image sizes, we use a resolution adaptor. The adaptor generates three intermediate feature maps by expanding the feature map in the horizontal and vertical directions: one of spatial size H × 2W (horizontal expansion), one of size 2H × W (vertical expansion), and one of size 2H × 2W (simultaneous horizontal and vertical expansion). In the laminated panel detection scenario, the objects to be detected, such as rebar and external rebar, are oriented either horizontally or vertically, so stretching the features in both directions makes it easier for the model to learn the key features of these categories. The adaptor thus provides additional horizontal or vertical information to the detector before the low-resolution features are transformed into high-resolution ones. These intermediate features are then processed by a pixel muxer, which combines them at different resolution levels to form a final feature map of size 2H × 2W. This approach captures multi-scale information effectively while preserving the alignment between the initial and final image sizes. In the final step, the upsampled feature map is accumulated pixel-wise with the original upsampled map to produce the final output. This pixel-level accumulation improves the spatial accuracy of the feature map while maintaining computational efficiency. In Figure 4b, the encoder coefficient reduces the number of original channels, thereby lowering computational complexity, and the upsampling coefficient indicates the factor by which the final upsampled feature map is magnified.
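The encoder and resolution adaptor can be sketched as follows, assuming the encoder is a 1 × 1 convolution (a per-pixel matrix multiply) with reduction factor 0.5 and that the adaptor moves channel groups into the spatial dimensions. The `encode` and `stretch` helpers and the mode names `"v"`, `"h"`, and `"hv"` are hypothetical; the `"hv"` mode uses the same element ordering as the standard pixel-shuffle operation with an upscale factor of 2.

```python
import numpy as np

def encode(x, ratio=0.5, rng=None):
    """Channel reduction with a 1x1 convolution (per-pixel matrix multiply).
    Random weights stand in for learned ones."""
    if rng is None:
        rng = np.random.default_rng(0)
    c = x.shape[0]
    c_out = max(1, int(c * ratio))
    w = rng.standard_normal((c_out, c)) / np.sqrt(c)
    return np.einsum("oc,chw->ohw", w, x)

def stretch(x, mode):
    """Move channel groups into space:
    'v'  -> (C/2, 2H, W)   vertical expansion
    'h'  -> (C/2, H, 2W)   horizontal expansion
    'hv' -> (C/4, 2H, 2W)  both (pixel shuffle with r = 2)
    """
    c, h, w = x.shape
    if mode == "v":
        return x.reshape(c // 2, 2, h, w).transpose(0, 2, 1, 3).reshape(c // 2, 2 * h, w)
    if mode == "h":
        return x.reshape(c // 2, 2, h, w).transpose(0, 2, 3, 1).reshape(c // 2, h, 2 * w)
    if mode == "hv":
        return x.reshape(c // 4, 2, 2, h, w).transpose(0, 3, 1, 4, 2).reshape(c // 4, 2 * h, 2 * w)
    raise ValueError(f"unknown mode: {mode}")

rng = np.random.default_rng(0)
x = rng.standard_normal((64, 20, 20))
z = encode(x, ratio=0.5, rng=rng)           # (32, 20, 20)
for mode in ("v", "h", "hv"):
    print(mode, stretch(z, mode).shape)     # (16, 40, 20), (16, 20, 40), (8, 40, 40)
```

Each mode is a pure rearrangement, so no values are lost; only the axis along which extra resolution is created differs, which is what lets the adaptor favor horizontally or vertically oriented bars.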

The conventional upsampling module is implemented by multiplying the width and height by a simple multiplier, which can be formulated as:

Given the original feature X, the width and height of the image are multiplied by a fixed factor to achieve upsampling while the number of channels stays the same. However, much of the feature information is not fully exploited in this process. We therefore design a resolution-extended module, as shown in Figure 4b, whose output can be formulated as the pixel-by-pixel product of two super-resolution feature maps.

where the two factors are feature maps obtained in different ways. The first is produced by the same upsampling method used in most detectors, in which each output element is computed from its neighboring input elements according to interpolation weights, so that upsampling reduces to a simple weighted multiplication.

We design a complete generation process for the second feature map so that it fully captures the image information while maintaining high performance. Broadly, after the original feature X is passed through the encoder, three tensor transformations with different specifications are performed; two of the results are selected for pixel extension and scale renormalization, and these two tensors are then multiplied pixel by pixel to generate the feature used in subsequent processing. This can be formulated as Equation (10).

In Equation (12), the pixel shuffle operation from image super-resolution rearranges the pixels of the feature map in a regular pattern, moving values from the channel dimension into the spatial dimensions to enrich the spatial information of the image. A subsequent reshaping step recombines the shuffled result into the size required by the upsampling module, and the two operands are different tensor transformation results, generated as in Equation (13).

Finally, H, W, and C denote the height, width, and number of channels of the feature map, respectively, and the mode of the primary tensor transformation can be doubling the length, doubling the width, or doubling both. There are therefore three ways to generate the extended feature, and we ablate these three modes in the subsequent experiments. In addition, the encoder performs dimensionality reduction on the original feature, i.e., it reduces the number of channels of the feature entering the REN to lower the amount of computation. Once the extended feature is generated, it is multiplied pixel by pixel with the conventionally upsampled feature to obtain a new feature, which replaces the traditionally upsampled feature and is passed to the model for subsequent processing.
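A minimal sketch of the fusion described in this subsection, assuming nearest-neighbor interpolation for the conventional branch, a 1 × 1 projection to 4C channels followed by pixel shuffle (upscale factor 2) for the extended branch, and a pixel-wise product to combine them. `ren_like_upsample` and the projection weights are hypothetical; the actual REN also includes the encoder and the directional transformation modes, which are omitted here for brevity.

```python
import numpy as np

def nearest_upsample(x, r=2):
    """Conventional upsampling: repeat every pixel r times along H and W."""
    return x.repeat(r, axis=1).repeat(r, axis=2)

def pixel_shuffle(x, r=2):
    """(C*r*r, H, W) -> (C, r*H, r*W), same element ordering as PyTorch's PixelShuffle."""
    c, h, w = x.shape
    c_out = c // (r * r)
    return x.reshape(c_out, r, r, h, w).transpose(0, 3, 1, 4, 2).reshape(c_out, h * r, w * r)

def ren_like_upsample(x, w_expand):
    """Hypothetical REN-style 2x upsampling of a (C, H, W) map.
    w_expand: (4C, C) weights of a 1x1 projection (random here, learned in practice)."""
    conventional = nearest_upsample(x, 2)                  # (C, 2H, 2W)
    expanded = np.einsum("oc,chw->ohw", w_expand, x)       # (4C, H, W)
    extended = pixel_shuffle(expanded, 2)                  # (C, 2H, 2W)
    return conventional * extended                         # pixel-wise product

rng = np.random.default_rng(0)
x = rng.standard_normal((8, 10, 10))
w_expand = rng.standard_normal((32, 8)) * 0.1
y = ren_like_upsample(x, w_expand)
print(y.shape)  # (8, 20, 20)
```

Both branches produce maps of identical shape, so the product can directly replace the output of the original upsampling layer without changing the rest of the network.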

4. Results

This section contains the details of our experimental setup, including the source and screening of the dataset, the evaluation metrics of the dataset, the assessment setup, and the computational setup for the number of parameters.

4.1. Laminated Panel Datasets and Evaluation Indicators

In this study, we focus on detecting objects in laminated panels and created a dataset from images of such environments. The laminated panels in this study are made of metal. Using a matrix camera, we captured approximately 7500 images of industrial laminated panel scenes, from which we selected 2888 images to form our dataset for practical deployment. Each image contains at least one laminated panel, and in some cases multiple images capture the same panel from different angles; statistical analysis shows that the dataset contains 1300 distinct laminated panels in total. The images cover a variety of conditions, including different lighting levels, laminated panel textures, and object positions.

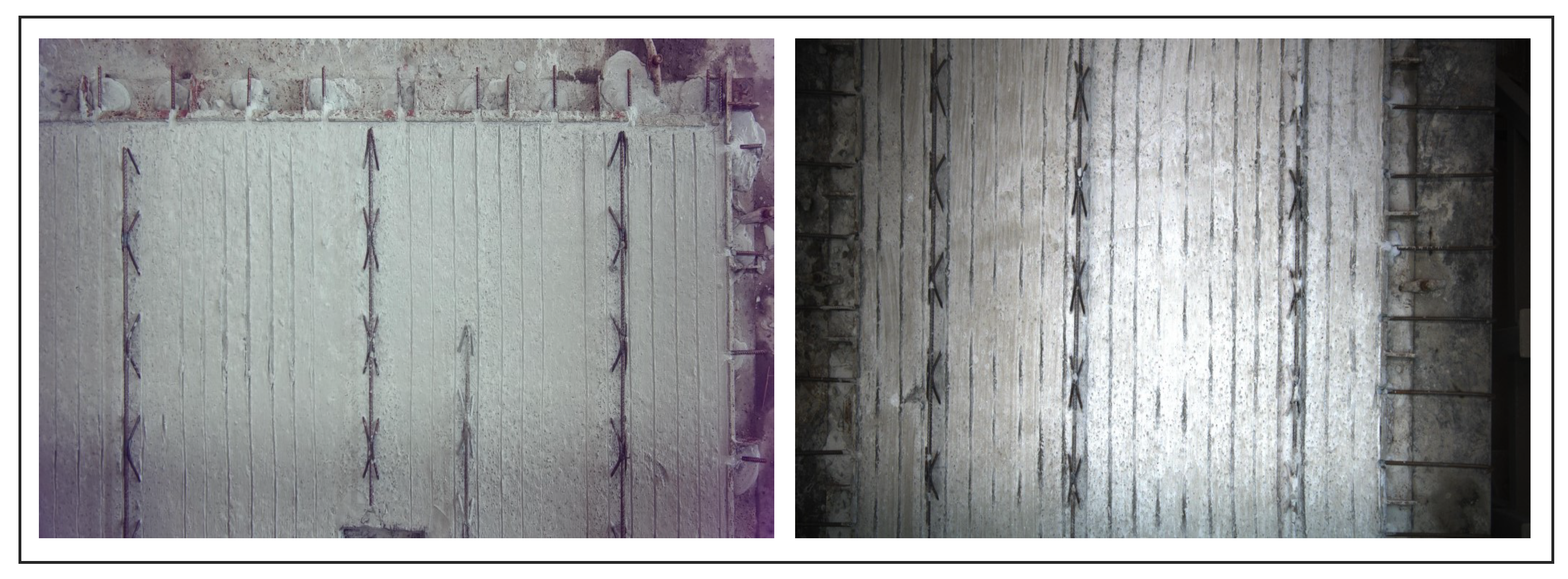

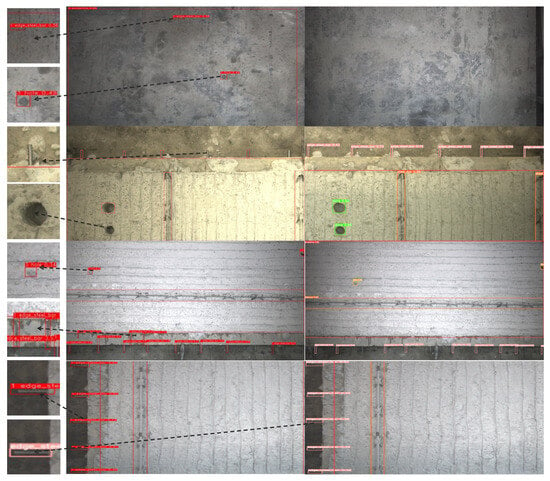

The dataset is divided into three parts: 2304 images for training, 292 for validation, and 292 for testing, which approximately follows an 8:1:1 split. The validation and test sets are distinct from the training data. We name the dataset Laminated Panel Images (SPI). To make the evaluation more challenging, we categorized the images by difficulty and placed as many difficult samples as possible in the test set, so that only a small portion of these harder samples was used during training. A representative sample of SPI is shown in Figure 5. The SPI dataset is accessible at https://huggingface.co/datasets/shiningfrog/laminated_panels (accessed on 10 April 2025).
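The split sizes can be checked with a short script. This sketch performs a plain seeded random split into 2304/292/292 images; it does not reproduce the difficulty-aware assignment of hard samples to the test set described above, and `split_dataset` is a hypothetical helper. Note that 2304/2888 ≈ 0.798, so the split is close to, but not exactly, 8:1:1.

```python
import random

def split_dataset(items, n_val, n_test, seed=0):
    """Shuffle deterministically and slice into train/val/test lists."""
    items = list(items)
    random.Random(seed).shuffle(items)
    test = items[:n_test]
    val = items[n_test:n_test + n_val]
    train = items[n_test + n_val:]
    return train, val, test

train, val, test = split_dataset(range(2888), n_val=292, n_test=292)
print(len(train), len(val), len(test))  # 2304 292 292
print(round(len(train) / 2888, 3))      # 0.798
```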

Figure 5.

Laminated panels dataset.

To speed up the model’s inference without sacrificing image quality, we resized the images to 4096 × 2180 pixels and converted them from BMP to JPG format.
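Section 4.2 states that the 4096 × 2180 images are resized to 640 × 640 before entering the network. Assuming YOLO-style letterboxing (aspect-preserving scaling plus padding), which the paper does not specify, the resulting dimensions can be computed as follows; `letterbox_size` is a hypothetical helper.

```python
def letterbox_size(w, h, target=640):
    """Scale the longer side to `target`, keep the aspect ratio, pad the rest."""
    scale = target / max(w, h)
    new_w, new_h = round(w * scale), round(h * scale)
    return scale, (new_w, new_h), (target - new_w, target - new_h)

print(letterbox_size(4096, 2180))  # (0.15625, (640, 341), (0, 299))
```

Under this assumption each input pixel is shrunk by a factor of 6.4, which illustrates why thin objects such as edge rebar lose most of their pixels before reaching the detector.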

To evaluate the performance of our model on the industrial laminated panel detection task, we use a set of well-defined metrics that capture both accuracy and efficiency. We initially labeled our dataset with nine distinct categories but chose to focus on three representative ones: center, edge rebar, and rebar. These categories were selected to simulate extreme scale gap scenarios that are common in real-world applications.

Center refers to the central portions of the rebar, which are typically much smaller in pixel size compared to the rebar itself. Edge rebar denotes the rebar at the image edges, often featuring small pixel sizes and curved hooks that indicate protrusions from the concrete slab. Rebars generally have extreme aspect ratios and can occupy significant portions of the image both horizontally and vertically.

Given the characteristics of these categories, we selected mean average precision at an intersection over union (IoU) threshold of 50% (mAP@50) as the evaluation metric. This metric measures the accuracy of the model at a single IoU threshold of 0.5, which matches the needs of the project and gives a clear view of the model’s performance across categories. Using AP@50 rather than broader metrics such as AP or mAP@50-95 provides more granular insight into category-specific performance and facilitates experimental comparisons.

In addition to precision, recall (R) is crucial for assessing the model’s ability to identify all relevant objects, representing the proportion of true positive detections to the total number of actual positive samples. This metric helps evaluate how well the model detects objects across all categories.

Giga floating-point operations (GFLOPs), i.e., the number of floating-point operations (in billions) required for one forward pass, is used to evaluate the computational cost of the model. This metric is critical for assessing the feasibility of deploying the model in real-world industrial settings, where computational resources may be limited.
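As a worked example of how GFLOPs are counted, the sketch below computes the operations of a single 3 × 3 convolution and shows that halving the input channels, as a channel-reducing encoder with factor 0.5 does, halves the cost of the following layer. The layer sizes are illustrative assumptions, and the convention of counting one multiply-accumulate as two operations varies between tools.

```python
def conv2d_flops(c_in, c_out, k, h_out, w_out, mac_as_two_ops=True):
    """Floating-point operations of one k x k convolution (bias ignored)."""
    macs = c_in * c_out * k * k * h_out * w_out  # multiply-accumulates
    return 2 * macs if mac_as_two_ops else macs

# Illustrative layer: 3x3 conv producing 256 channels on an 80x80 map
full = conv2d_flops(256, 256, 3, 80, 80)
reduced = conv2d_flops(128, 256, 3, 80, 80)  # input channels halved (factor 0.5)
print(full / 1e9, reduced / 1e9)  # in GFLOPs
```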

To summarize, the evaluation metrics for this experiment include recall (R), mean average precision at 50% IoU (mAP@50), and GFLOPs. Recall and mAP were calculated using their respective formulas, as detailed in Equations (14) and (15). These metrics collectively provide a comprehensive assessment of both the accuracy and efficiency of the model in detecting industrial laminated panels.

where TP (true positive) is the number of correctly detected positive samples, FP (false positive) is the number of negative samples incorrectly classified as positive, and FN (false negative) is the number of positive samples incorrectly predicted as negative. AP is the average precision.
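The recall and mAP@50 computations referenced as Equations (14) and (15) follow a standard procedure, sketched below: a prediction is a true positive if its IoU with the best-overlapping ground-truth box is at least 0.5 and that box has not already been matched, recall is TP / (TP + FN), AP is the area under the interpolated precision-recall curve, and mAP@50 averages AP over categories. The sketch follows PASCAL VOC all-point interpolation for one class and one image; the helper names are hypothetical, and the evaluation code used for the paper may interpolate differently.

```python
def iou(a, b):
    """IoU of two boxes given as (x1, y1, x2, y2)."""
    iw = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    ih = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = iw * ih
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0

def ap_and_recall(preds, gts, thr=0.5):
    """AP@thr and recall for one class. preds: [(score, box)], gts: [box]."""
    preds = sorted(preds, key=lambda p: p[0], reverse=True)
    matched, flags = set(), []
    for _, box in preds:
        # VOC-style: take the best-overlapping ground truth
        best, best_j = 0.0, -1
        for j, g in enumerate(gts):
            o = iou(box, g)
            if o > best:
                best, best_j = o, j
        is_tp = best >= thr and best_j not in matched
        if is_tp:
            matched.add(best_j)
        flags.append(1 if is_tp else 0)
    tp = fp = 0
    precisions, recalls = [], []
    for f in flags:
        tp += f
        fp += 1 - f
        precisions.append(tp / (tp + fp))
        recalls.append(tp / len(gts) if gts else 0.0)
    # All-point interpolation: precision envelope, non-increasing in recall
    for i in range(len(precisions) - 2, -1, -1):
        precisions[i] = max(precisions[i], precisions[i + 1])
    ap, prev_r = 0.0, 0.0
    for p, r in zip(precisions, recalls):
        ap += (r - prev_r) * p
        prev_r = r
    recall = tp / len(gts) if gts else 0.0   # R = TP / (TP + FN), since TP + FN = len(gts)
    return ap, recall

def mean_ap(per_class_ap):
    """mAP@50: mean of the per-class AP@50 values."""
    return sum(per_class_ap) / len(per_class_ap)

gts = [(0, 0, 10, 10), (20, 20, 30, 30)]
preds = [(0.9, (0, 0, 10, 10)), (0.8, (50, 50, 60, 60)), (0.7, (21, 21, 31, 31))]
ap, recall = ap_and_recall(preds, gts)
print(round(ap, 4), recall)  # 0.8333 1.0
```

In the example, the false positive ranked second lowers the precision at which the second ground truth is recovered, so AP drops below 1 even though recall is perfect.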

4.2. Implementation Details

We implemented the improved YOLOv9 network with the MRPE and REN in PyTorch. All tested models were trained for 300 epochs with the SGD optimizer; the learning rate was set to 0.01 with a warm-up phase and reduced by a factor of 10 at the 100th epoch. The input images, 4096 × 2180 pixels in size, were resized to 640 × 640 before being fed into the first module. We performed the experiments on two NVIDIA RTX 3090 Ti GPUs (NVIDIA Corporation, Santa Clara, CA, USA). The algorithm uses CUDA 11.2 for acceleration, and the batch size was set to 16 to make maximal use of GPU memory.
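The learning-rate schedule described above can be written as a small function. The warm-up length (3 epochs with a linear ramp) is our assumption because the paper does not state it, and we assume a single 10× decay at epoch 100 that then holds until epoch 300; `learning_rate` is a hypothetical helper.

```python
def learning_rate(epoch, base_lr=0.01, warmup_epochs=3, step_epoch=100, gamma=0.1):
    """Learning rate for a 0-indexed epoch.

    Linear warm-up to base_lr (warmup_epochs=3 is our assumption), constant
    base_lr until step_epoch, then a single 10x decay (gamma=0.1) that is
    kept for the rest of the 300-epoch run.
    """
    if epoch < warmup_epochs:
        return base_lr * (epoch + 1) / warmup_epochs
    if epoch < step_epoch:
        return base_lr
    return base_lr * gamma

for e in (0, 1, 2, 50, 99, 100, 299):
    print(e, learning_rate(e))
```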

4.3. Performance Evaluation of Different Methods

Notably, the MRPE is one of the lighter modules among our proposed methods and can easily be substituted into the YOLOv9 model. We validated the effectiveness of the MRPE across a wide range of object detection methods, as shown in Table 1. We also compared the MRPE with two mainstream attention mechanisms, SE and SGE, whose network structures we fine-tuned to better fit YOLOv9. As shown in Table 2, when substituted for the corresponding YOLOv9 modules, the MRPE improved performance by about 0.3% for the rebar category and about 0.2% for the edge rebar category. Our analysis indicates that the MRPE indirectly assigns different weights to different categories, thereby increasing the correlation between targets. This is particularly evident between rebar and edge rebar, two categories with similar features but different scales. Such correlations also benefit other categories, such as holes and junction boxes, which are not explicitly shown. The GFLOPs of these modules are compared in Table 3, where the MRPE shows a relatively noticeable increase in GFLOPs.

Table 1.

Effectiveness of different algorithms on our dataset.

Table 2.

Comparison of MRPE and similar methods.

Table 3.

Comparison of MRPE and similar methods in GFLOPs.

In addition, the REN significantly improves the accuracy for small-scale objects, outperforming the corresponding module in YOLOv9. To validate the REN across a wide range of object detection methods, we compared it with two dominant upsampling methods used in detection models, HWD and DySample, whose network structures we fine-tuned to better fit YOLOv9. As shown in Table 4, when substituted for the corresponding YOLOv9 modules, the REN achieved a certain degree of improvement for the rebar category. This is mainly because the adaptor in the REN expands low-resolution features in both the horizontal and vertical directions, matching the horizontal or vertical orientation of the rebar itself. However, the REN has a limited effect on edge rebar; our analysis suggests that these targets are too small for 2× upsampling alone to yield more accurate results. As with the MRPE, the GFLOPs comparison is shown in Table 5. Notably, compared with DySample and HWD, the REN achieves comparable accuracy while reducing the parameter count by nearly 2.1. This is mainly due to the encoder in the REN, which reduces the number of original channels and retains the most important ones for feature extraction, thereby reducing the computational cost of the model.

Table 4.

Comparison of REN and similar methods.

Table 5.

Comparison of REN and similar methods in GFLOPs.

mAP@50 is computed by first calculating, for each category, the average precision (AP) over all predictions ranked by confidence, where a prediction counts as correct when its IoU with a ground-truth box is at least 0.5. These per-category AP values are then averaged to obtain mAP@50, which characterizes the quality and stability of the overall detection results. Recall is the proportion of all true positive cases that the model successfully predicts; it measures the model’s ability to find every positive instance.

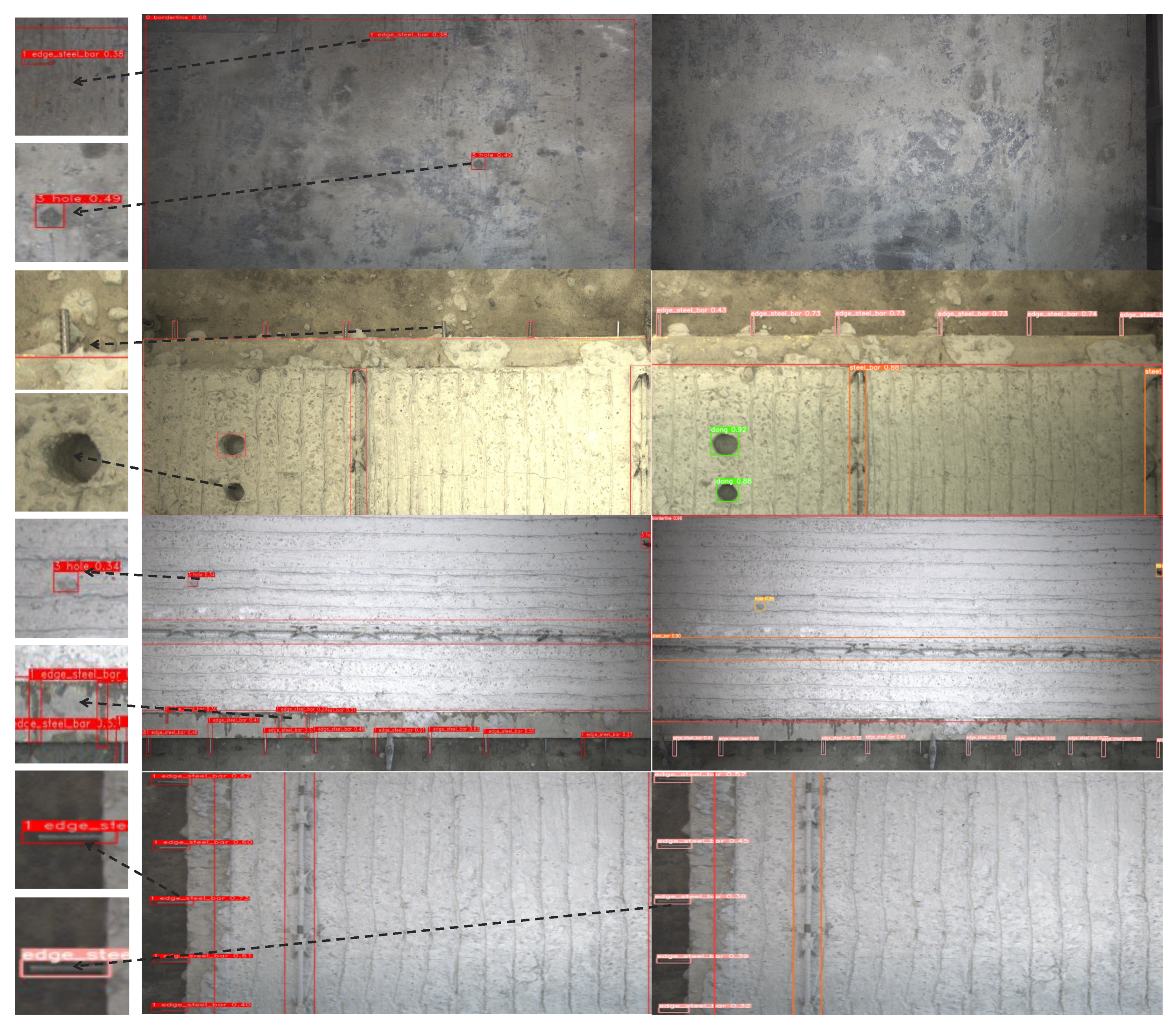

Figure 5 shows a qualitative comparison of YOLOv9 and PERE on the SPI dataset for images on which the two methods produce different results. PERE clearly performs better, especially on objects with similar tones and on small items. In particular, many of the edge rebar localizations on the left side of Figure 6, which shows the results of YOLOv5, are wrong, whereas the PERE results on the right side contain far fewer mistakes.

Figure 6.

A qualitative comparison of YOLOv5 (left) and PERE (right).

4.4. Ablation Experiment

We perform an ablation analysis of all components of the proposed model on a dataset captured by a matrix camera and manually segmented. We continue to use YOLOv9 as the base detector in all ablation experiments and evaluate the effect on the validation set.

To evaluate the impact of our proposed MRPE (Multi-Class Perceptual Prior-Enhanced Network) and REN (resolution-extended network) modules, we conducted an ablation study. The results, summarized in Table 1, highlight the contribution of each component to the performance of our detection model.

Initially, we trained a baseline detector using YOLOv9-c without the MRPE and REN modules. This baseline model applied one-to-one label assignment directly and did not include the additional multi-class perceptual prior enhancement or upsampling processing.

We then integrated the MRPE module into the HEAD layer of the network. This enhancement led to a notable improvement of 4% mAP@50 in the line category and a 0.4% mAP@50 increase in the rebar category.

Next, we replaced the upsampling module in the baseline detector with the REN module. This adjustment allowed us to create multi-resolution feature splicing by combining the original output branches with those from the REN. This configuration resulted in a 1% mAP@50 gain for the line category and a 0.6% mAP@50 gain for the rebar category compared to the baseline model.

When both the MRPE and REN were incorporated into the model pipeline, we observed significant overall improvements: the full model achieved mAP@50 gains of 4.7% for the line category, 0.8% for the outer rebar category, and 0.4% for the rebar category. Notably, adding only the REN module gave the best performance for the rebar category, which we attribute to redundancy between the REN and MRPE when detecting objects with extreme aspect ratios.

Interestingly, while adding the MRPE and REN individually did not significantly shift the accuracy for the outer rebar category, combining both modules led to a substantial 0.8% mAP@50 gain. This improvement is attributed to the enhanced sensitivity of the MRPE and REN to small-scale objects when used together, confirming the effectiveness of our proposed model enhancements.

In summary, the ablation study demonstrates that both the MRPE and REN contribute to improved detection performance, with their combined use providing the most substantial gains across the evaluated categories.

The architecture of the MRPE is as follows.

Table 6 investigates different MRPE architectures and reports the results, using the YOLOv9 detector alone as the baseline. MRPE s1 uses only a single layer of modules without prior information, MRPE s2 uses a single attention block with prior information, and MRPE s3 uses two layers of attention blocks with prior information, as designed. As the table shows, the MRPE with a cascade structure significantly improves over the baseline detector on the classes with smaller pixel sizes. This suggests that applying multi-class perception before enhancing feature alignment helps the model pay more attention to small-scale objects when fusing features, and thus improves the detection of small objects in scenarios with large gaps between classes. The best performance is obtained with two MRPE blocks, which suggests that two refinements of the features are sufficient and that more frequent feature aggregation makes the network redundant.

Table 6.

MRPE internal ablation.

The architecture of REN is as follows.

Table 7 investigates different REN architectures, with the YOLOv9 detector alone as the baseline. REN s1, REN s2, and REN s3 denote different ways of combining the high resolutions in the REN module. REN s1 represents and , REN s2 represents and , and REN s3 represents and . As Table 7 shows, REN s1 gives the model only a weak precision gain; REN s2 yields a 1% mAP@50 gain for the line category and some increase in recall; and REN s3 improves precision for several categories and significantly increases recall for the outer rebar category, which is the best result achieved in our experiments. The best results are therefore obtained by implementing the REN with high resolutions of and , which correspond to the horizontal orientation of most laminated panels in the scene.

Table 7.

REN internal ablation.

5. Discussion

We applied the proposed modules to the recently introduced YOLOv9 model and to methods commonly used in industry, such as YOLOv5. The results in Table 8 show that the methods incorporating our modules achieve a significant improvement in accuracy. The enhancement is largest for PERE, whose mAP@50 over all objects increases by 2.9 percentage points and whose R increases by 2.6 percent. Our method is especially beneficial for the edge rebar class, which occupies the smallest area in the image but is the most numerous: its mAP@50 increases by 2.3 percent and its R by 2.2 percent. This means that missed detections and false positives are substantially reduced in each image to be detected, which is more evident in Figure 5. The figure contains images with normal brightness, high brightness, and low brightness, as well as images with no targets, and false or missed detections of objects inside the laminated panels are greatly reduced. In addition, as shown in Table 5, our REN adds less computational complexity than similar methods, indicating that it improves performance at a lower computational cost. However, as shown in Table 3, our MRPE adds computational complexity compared with traditional attention networks. Overall, our method has certain limitations in terms of speed and computational complexity. Moreover, although it performs well in industrial laminated panel scenarios, it lacks generality in other scenarios.

Table 8. Effectiveness of different algorithms on our dataset.
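The link drawn above between precision (P), recall (R), false positives, and missed detections can be made concrete with a small sketch. It is an illustration under simplifying assumptions, not the evaluation code behind Table 8: predictions for one class in one image are greedily matched to ground truth at an IoU threshold of 0.5 (the threshold in mAP@50), unmatched predictions count as false positives, and unmatched ground-truth boxes count as missed detections. It also separates a percentage-point gain from a relative gain, which differ whenever the baseline is below 100%. The function names `iou`, `match_counts`, `precision_recall`, and `gain` are hypothetical, and full mAP evaluation additionally integrates precision over recall across score thresholds and averages over classes.

```python
# Hypothetical sketch: single-class greedy matching at an IoU threshold,
# then precision/recall and percentage-point vs relative gains.


def iou(a, b):
    """Intersection over union of two boxes given as (x1, y1, x2, y2)."""
    iw = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    ih = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = iw * ih
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def match_counts(preds, gts, thr=0.5):
    """Return (tp, fp, fn) for one image and one class.

    preds: list of (box, score); gts: list of boxes. Each prediction, taken in
    descending score order, claims the still-unmatched ground truth with the
    highest IoU >= thr; if none qualifies, it is a false positive.
    """
    matched = [False] * len(gts)
    tp = fp = 0
    for box, _score in sorted(preds, key=lambda p: p[1], reverse=True):
        best_j, best_iou = -1, thr
        for j, gt in enumerate(gts):
            if not matched[j]:
                overlap = iou(box, gt)
                if overlap >= best_iou:
                    best_j, best_iou = j, overlap
        if best_j >= 0:
            matched[best_j] = True
            tp += 1
        else:
            fp += 1  # spurious or duplicate detection
    fn = matched.count(False)  # ground truths never matched = missed detections
    return tp, fp, fn


def precision_recall(tp, fp, fn):
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    return precision, recall


def gain(before, after):
    """Return (gain in percentage points, relative gain in percent)."""
    points = (after - before) * 100.0
    relative = (after - before) / before * 100.0 if before else float("inf")
    return points, relative
```

With two hypothetical ground-truth boxes and two predictions, one correct and one spurious, `match_counts` returns TP = 1, FP = 1, FN = 1, so P = R = 0.5. Likewise, raising a metric from 0.80 to 0.85 is a gain of 5 percentage points but a relative gain of 6.25%, which is why the two units should not be mixed when reporting improvements.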

6. Conclusions

In this paper, we introduce a multi-class prior-enhanced resolution-extended (PERE) detection framework based on YOLOv9, designed specifically to improve object detection in industrial scenes. To address missed and false detections, we propose a novel learning strategy based on multi-class perceptual prior enhancement (MRPE). This approach allows the detector to integrate prior knowledge from industrial environments, enhancing feature representations and reducing uncertainty in the detection process. In addition, to improve the detection accuracy of small objects, which are common in industrial inspection tasks, we introduce a resolution-extended network (REN). REN supplements low-resolution features with additional contextual information, significantly improving the detection of tiny objects that might otherwise be overlooked. Extensive experiments validate the effectiveness of the proposed PERE framework, showing better accuracy and robustness than existing detection methods. PERE shows significant promise for real-world industrial applications, particularly laminated panel inspection. The methodology could also be extended to other industrial inspection tasks, such as those in assembly line environments, and its core principles, namely the integration of perceptual priors and resolution extension, may benefit a wider range of industrial contexts. In future work, we plan to explore the scalability of PERE in more complex industrial scenarios, such as multi-object detection in dynamic environments and deployment on different production lines. We will also investigate further optimization and adaptation of the proposed method to handle a broader range of industrial inspection challenges.

Author Contributions

Conceptualization, H.W. and X.X.; methodology, H.W. and Y.W.; software, H.W. and Y.W.; validation, H.W., Y.W. and J.L.; formal analysis, H.W. and Y.W.; investigation, H.W.; resources, H.W.; data curation, H.W. and J.L.; writing—original draft preparation, H.W. and Y.W.; writing—review and editing, H.W., Y.W. and J.L.; visualization, H.W.; supervision, J.L. and X.X.; project administration, H.W.; funding acquisition, X.X. and Y.W. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

Data are contained within the article.

Conflicts of Interest

The authors declare no conflicts of interest.

References

- Girshick, R. Fast R-CNN. arXiv 2015, arXiv:1504.08083.

- Mathew, M.P.; Mahesh, T.Y. Leaf-based disease detection in bell pepper plant using YOLO v5. Signal Image Video Process. 2022, 16, 841–847.

- Lin, T.Y.; Goyal, P.; Girshick, R.; He, K.; Dollár, P. Focal loss for dense object detection. In Proceedings of the IEEE International Conference on Computer Vision, Venice, Italy, 22–29 October 2017; pp. 2980–2988.

- Liu, W.; Anguelov, D.; Erhan, D.; Szegedy, C.; Reed, S.; Fu, C.Y.; Berg, A.C. SSD: Single shot multibox detector. In Proceedings of the Computer Vision–ECCV 2016: 14th European Conference, Amsterdam, The Netherlands, 11–14 October 2016; Proceedings, Part I 14. Springer: Berlin/Heidelberg, Germany, 2016; pp. 21–37.

- Kalfaoglu, M.E.; Ozturk, H.I.; Kilinc, O.; Temizel, A. TopoMaskV2: Enhanced Instance-Mask-Based Formulation for the Road Topology Problem. arXiv 2024, arXiv:2409.11325.

- Zuo, Y.; Wang, J.; Song, J. Application of YOLO object detection network in weld surface defect detection. In Proceedings of the 2021 IEEE 11th Annual International Conference on CYBER Technology in Automation, Control, and Intelligent Systems (CYBER), IEEE, Jiaxing, China, 27–31 July 2021; pp. 704–710.

- Liu, W.; Duan, C.; Yu, B.; Chai, L.Y.; Yuan, H.; Zhao, H. Multi-posture pedestrian detection based on posterior HOG features. Acta Electron. Sin. 2015, 43, 8.

- Tyagi, A.K.; Mohapatra, C.; Das, P.; Makharia, G.; Mehra, L.; AP, P.; Mausam. DeGPR: Deep Guided Posterior Regularization for Multi-Class Cell Detection and Counting. arXiv 2023, arXiv:2304.00741.

- Huang, Z.; Wang, X.; Wei, Y.; Huang, L.; Shi, H.; Liu, W.; Huang, T.S. CCNet: Criss-Cross Attention for Semantic Segmentation. arXiv 2020, arXiv:1811.11721.

- Azad, B.; Adibfar, P.; Fu, K. TransDAE: Dual Attention Mechanism in a Hierarchical Transformer for Efficient Medical Image Segmentation. arXiv 2024, arXiv:2409.02018.

- Han, W.; Kang, S.; Choo, K.; Hwang, S.J. CoBra: Complementary Branch Fusing Class and Semantic Knowledge for Robust Weakly Supervised Semantic Segmentation. arXiv 2024, arXiv:2403.08801.

- Qin, H.; Yan, J.; Li, X.; Hu, X. Joint Training of Cascaded CNN for Face Detection. In Proceedings of the 2016 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 27–30 June 2016; pp. 3456–3465.

- Zhang, W.; Li, N.; Wang, Q. Bayesian Inference for Object Detection with Prior Knowledge Fusion. Comput. Vis. Pattern Recognit. J. 2019, 34, 121–134.

- Ren, S.; He, K.; Girshick, R.; Sun, J. Faster R-CNN: Towards Real-Time Object Detection with Region Proposal Networks. arXiv 2016, arXiv:1506.01497.

- Liu, W. A moving target detection algorithm based on posterior probability. Chin. J. Sci. Instrum. 2014, 127, 161–164.

- Zhao, X.; Huang, J.; Gao, Y.; Wang, Q. Hyperspectral Target Detection Based on Prior Spectral Perception and Local Graph Fusion. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2024, 17, 13936–13948.

- Zhang, X.; Chen, Y.; Zhu, B.; Wang, J.; Tang, M. Semantic-spatial fusion network for human parsing. Neurocomputing 2020, 402, 375–383.

- Yang, H.; Fang, Y.M.; Liu, L.; Ju, H.; Kang, K. Improved YOLOv5 Based on Feature Fusion and Attention Mechanism and Its Application in Continuous Casting Slab Detection. IEEE Trans. Instrum. Meas. 2023, 72, 1–16.

- Woo, S.; Park, J.; Lee, J.Y.; Kweon, I.S. CBAM: Convolutional Block Attention Module. arXiv 2018, arXiv:1807.06521.

- Li, X.; Hu, X.; Yang, J. Spatial Group-wise Enhance: Improving Semantic Feature Learning in Convolutional Networks. arXiv 2019, arXiv:1905.09646.

- Dong, X.; Qin, Y.; Gao, Y.; Fu, R.; Liu, S.; Ye, Y. Attention-Based Multi-Level Feature Fusion for Object Detection in Remote Sensing Images. Remote Sens. 2022, 14, 3735.

- Hu, X.; Jing, L.; Sehar, U. Joint pyramid attention network for real-time semantic segmentation of urban scenes. Appl. Intell. Int. J. Artif. Intell. Neural Networks Complex Probl. Solving Technol. 2022, 52, 580–594.

- Nie, M.; Xue, Y.; Wang, C.; Ye, C.; Xu, H.; Zhu, X.; Huang, Q.; Mi, M.B.; Wang, X.; Zhang, L. PARTNER: Level up the Polar Representation for LiDAR 3D Object Detection. arXiv 2023, arXiv:2308.03982.

- Tan, M.; Pang, R.; Le, Q.V. EfficientDet: Scalable and Efficient Object Detection. arXiv 2020, arXiv:1911.09070.

- Couturier, R.; Noura, H.N.; Salman, O.; Sider, A. A deep learning object detection method for an efficient clusters initialization. arXiv 2021, arXiv:2104.13634.

- Li, C.; Li, L.; Jiang, H.; Weng, K.; Geng, Y.; Li, L.; Ke, Z.; Li, Q.; Cheng, M.; Nie, W.; et al. YOLOv6: A Single-Stage Object Detection Framework for Industrial Applications. arXiv 2022, arXiv:2209.02976.

- Wang, X.; Wang, G.; Dang, Q.; Liu, Y.; Hu, X.; Yu, D. PP-YOLOE-R: An Efficient Anchor-Free Rotated Object Detector. arXiv 2022, arXiv:2211.02386.

- Sunkara, R.; Luo, T. No More Strided Convolutions or Pooling: A New CNN Building Block for Low-Resolution Images and Small Objects. arXiv 2022, arXiv:2208.03641.

- Li, C.; Li, L.; Geng, Y.; Jiang, H.; Cheng, M.; Zhang, B.; Ke, Z.; Xu, X.; Chu, X. YOLOv6 v3.0: A Full-Scale Reloading. arXiv 2023, arXiv:2301.05586.

- Zhang, X.; Liang, S. Towards Robust Object Detection: Identifying and Removing Backdoors via Module Inconsistency Analysis. arXiv 2024, arXiv:2409.16057.

- Liu, S.; Qi, L.; Qin, H.; Shi, J.; Jia, J. Path Aggregation Network for Instance Segmentation. arXiv 2018, arXiv:1803.01534.

- Kaur, G.; Batth, R.S. Edge Computing: Classification, Applications, and Challenges. In Proceedings of the 2021 2nd International Conference on Intelligent Engineering and Management (ICIEM), London, UK, 28–30 April 2021; pp. 254–259.

- Wang, H.; Nie, Y.; Li, Y.; Liu, H.; Liu, M.; Cheng, W.; Wang, Y. Research, Applications and Prospects of Event-Based Pedestrian Detection: A Survey. arXiv 2024, arXiv:2407.04277.

- Dong, C.; Loy, C.C.; He, K.; Tang, X. Image Super-Resolution Using Deep Convolutional Networks. IEEE Trans. Pattern Anal. Mach. Intell. 2016, 38, 295–307.

- Ledig, C.; Theis, L.; Huszár, F.; Caballero, J.; Cunningham, A.; Acosta, A.; Aitken, A.; Tejani, A.; Totz, J.; Wang, Z. Photo-Realistic Single Image Super-Resolution Using a Generative Adversarial Network. IEEE Comput. Soc. 2016.

- Shi, W.; Caballero, J.; Huszár, F.; Totz, J.; Wang, Z. Real-Time Single Image and Video Super-Resolution Using an Efficient Sub-Pixel Convolutional Neural Network. In Proceedings of the 2016 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 27–30 June 2016.

- Kim, J.; Lee, J.K.; Lee, K.M. Accurate Image Super-Resolution Using Very Deep Convolutional Networks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016.

- Xu, G.; Liao, W.; Zhang, X.; Li, C.; He, X.; Wu, X. Haar wavelet downsampling: A simple but effective downsampling module for semantic segmentation. Pattern Recognit. 2023, 143, 109819.

- Wang, J.; Chen, K.; Xu, R.; Liu, Z.; Loy, C.C.; Lin, D. CARAFE: Content-Aware ReAssembly of FEatures. In Proceedings of the 2019 IEEE/CVF International Conference on Computer Vision (ICCV), Seoul, Republic of Korea, 27 October–2 November 2019; pp. 3007–3016.

- Liu, W.; Lu, H.; Fu, H.; Cao, Z. Learning to Upsample by Learning to Sample. arXiv 2023, arXiv:2308.15085.

- Wang, C.Y.; Yeh, I.H.; Liao, H.Y.M. YOLOv9: Learning What You Want to Learn Using Programmable Gradient Information. arXiv 2024, arXiv:2402.13616.

- Elfwing, S.; Uchibe, E.; Doya, K. Sigmoid-Weighted Linear Units for Neural Network Function Approximation in Reinforcement Learning. arXiv 2017, arXiv:1702.03118.

- Bacciu, D.; Errica, F.; Micheli, A.; Podda, M. A gentle introduction to deep learning for graphs. Neural Netw. 2020, 129, 203–221.

- Zhang, X.; Zeng, H.; Guo, S.; Zhang, L. Efficient Long-Range Attention Network for Image Super-resolution. arXiv 2022, arXiv:2203.06697.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).