TGA-GS: Thermal Geometrically Accurate Gaussian Splatting

Abstract

1. Introduction

- We propose TGA-GS, a method for 3D thermal model reconstruction in low-light conditions. In extensive experiments, TGA-GS outperforms existing related methods on multiple evaluation metrics for 3D reconstruction in low-light environments.

- Our method provides robust novel view synthesis: it generates high-resolution thermal and RGB images from low-resolution inputs, improving image quality and the amount of recoverable detail.

- We create a new dataset of RGB and thermal images captured in low-light environments. It provides samples for studying image features and reconstruction algorithms under low lighting, and supports further research on 3D reconstruction and novel view synthesis.

2. Related Work

2.1. Thermal Imaging

2.2. Three-Dimensional Reconstruction and Novel View Synthesis

3. Methods

3.1. Preliminaries

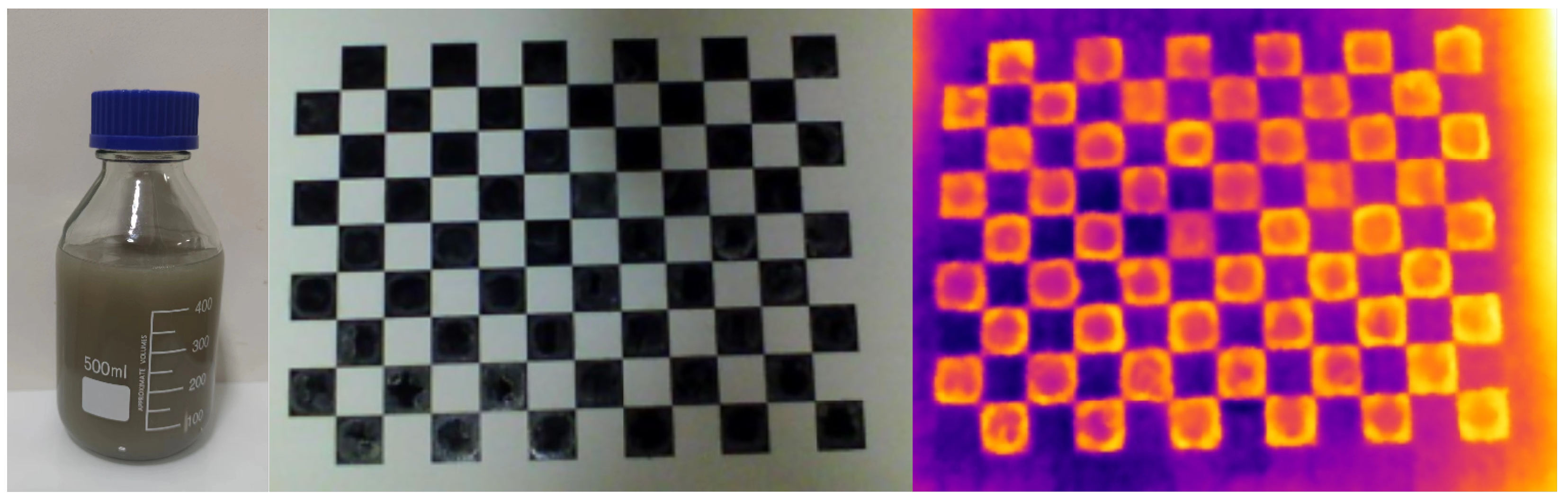

3.2. Multimodal Calibration

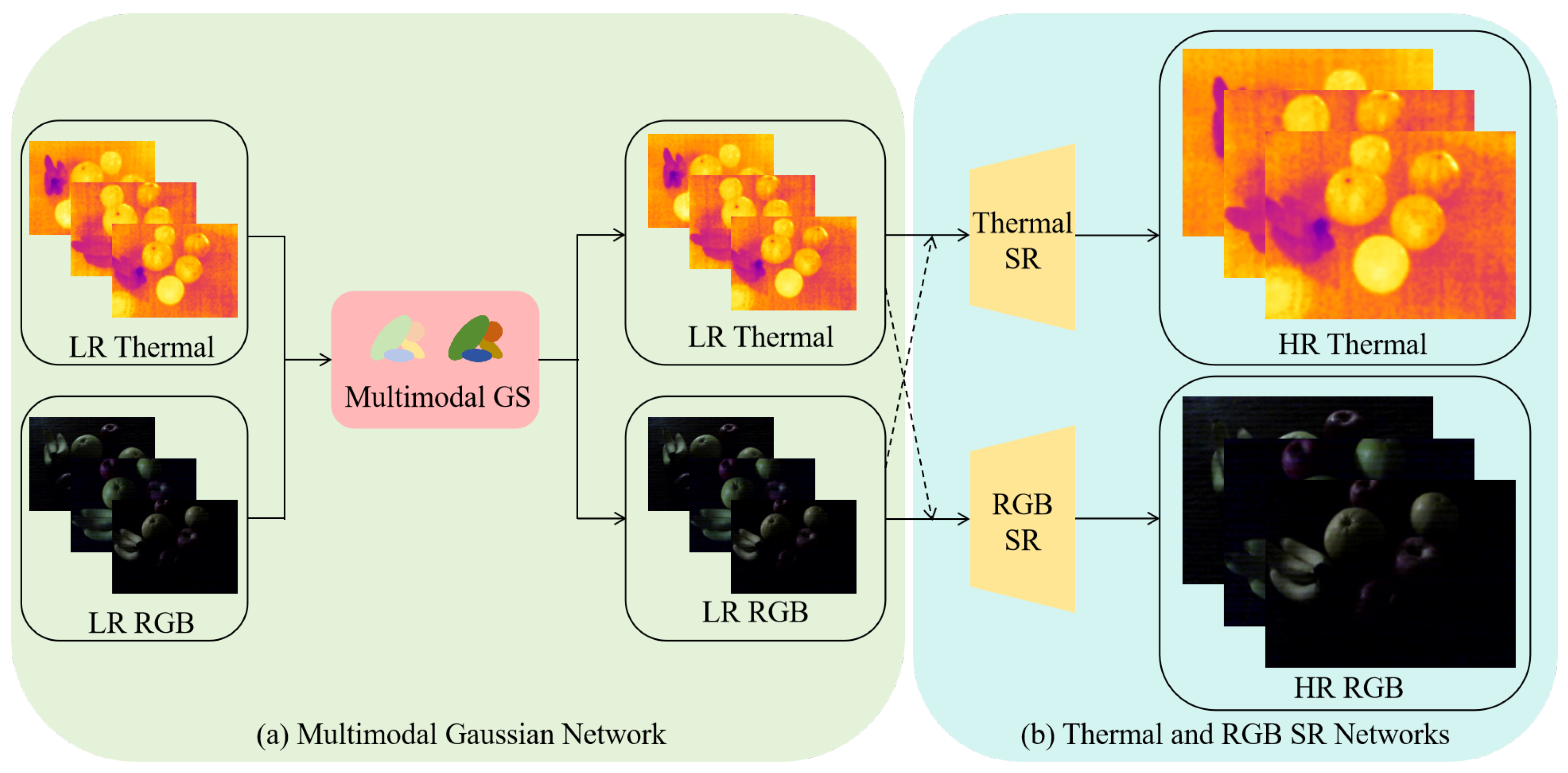

3.3. TGA-GS

3.4. Loss

4. Experiments

4.1. Datasets and Baselines

4.2. Evaluation Metrics

4.3. Implementation Details

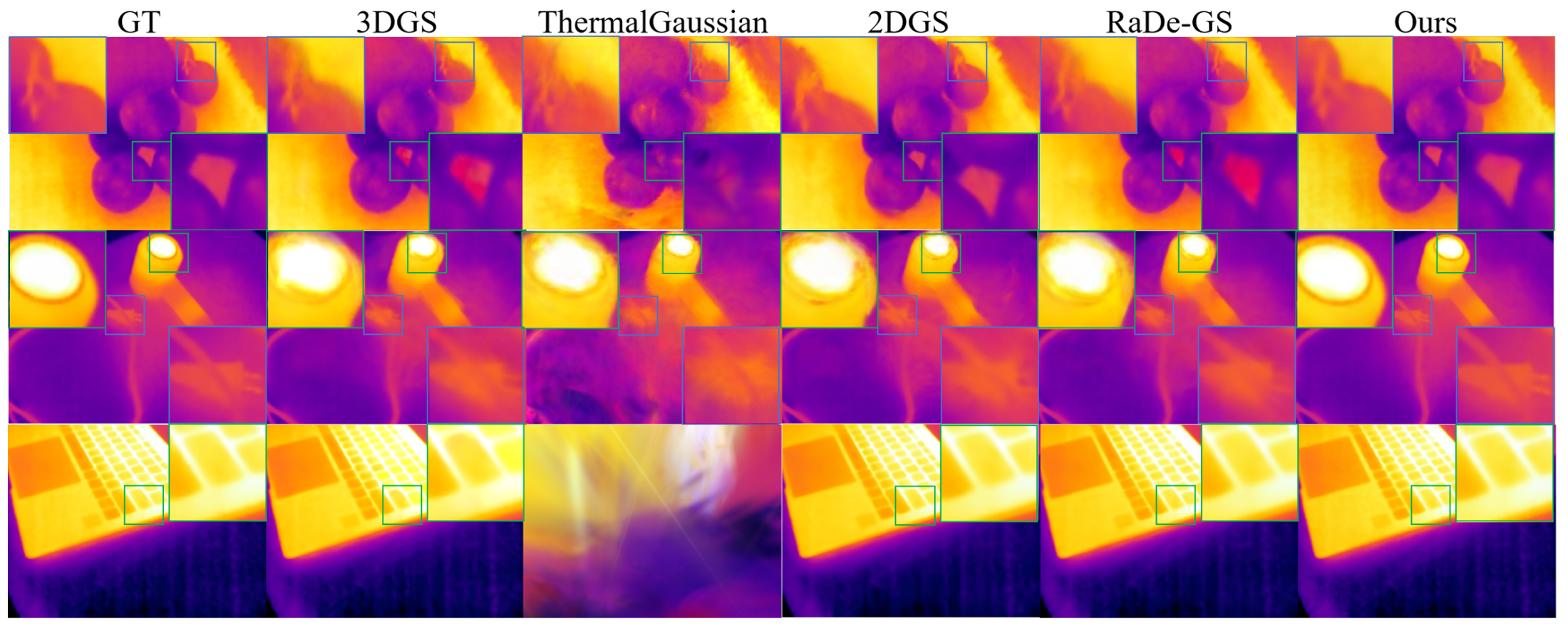

4.4. Thermal View Synthesis

4.5. RGB View Synthesis

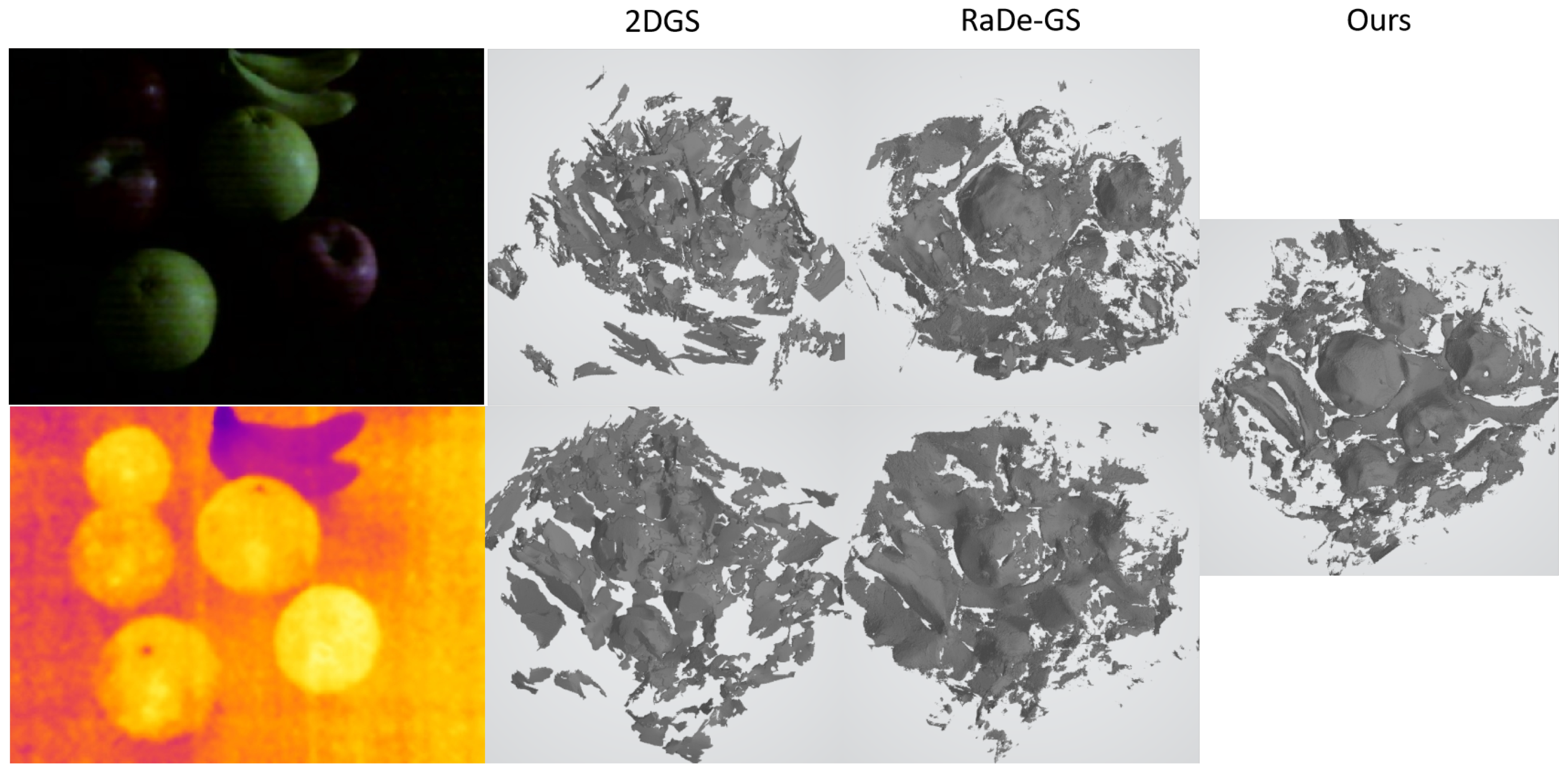

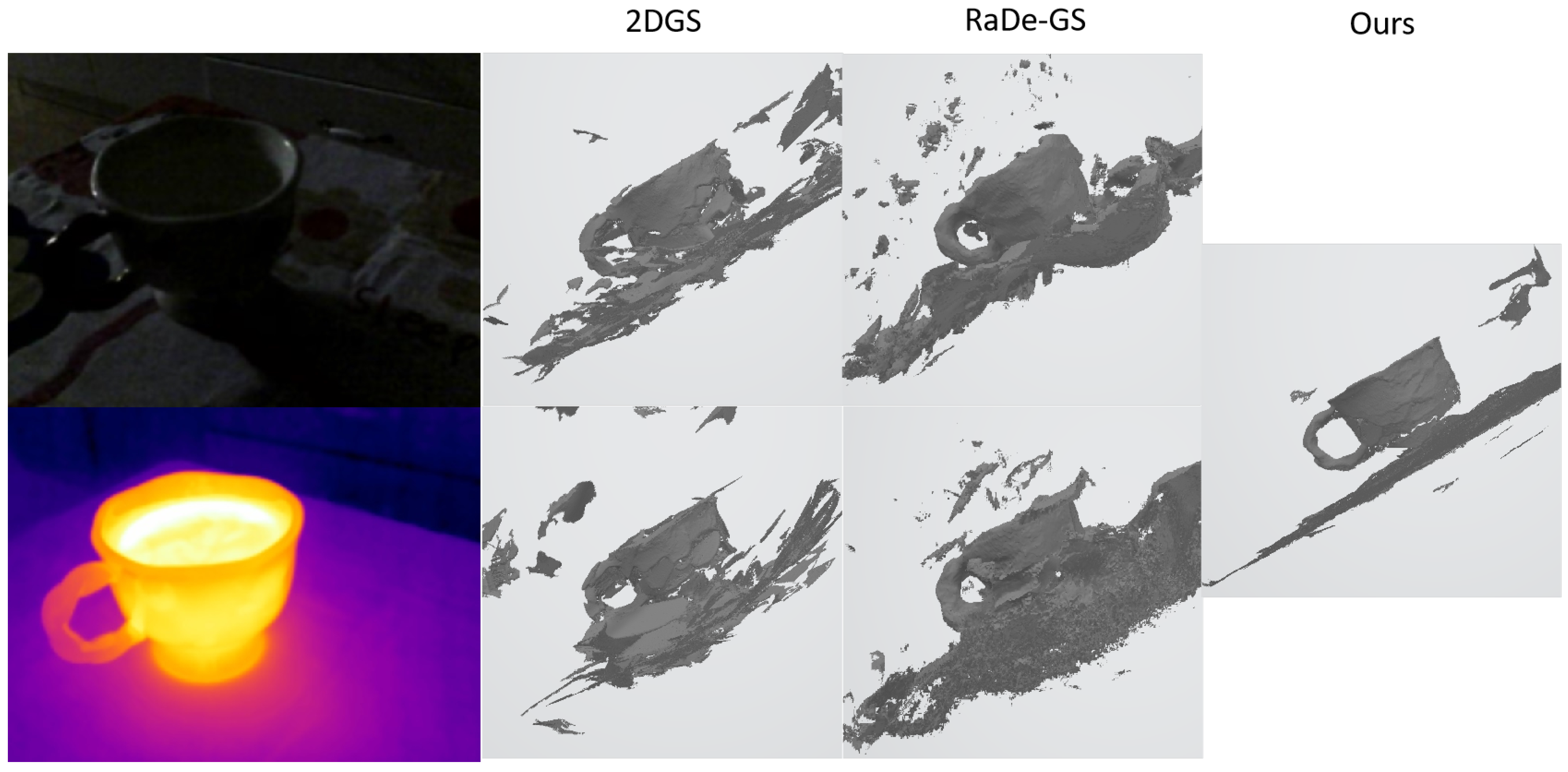

4.6. Three-Dimensional Object Reconstruction

4.7. Ablation Study

4.8. Practical Implications

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

1. Mildenhall, B.; Srinivasan, P.P.; Tancik, M.; Barron, J.T.; Ramamoorthi, R.; Ng, R. Nerf: Representing scenes as neural radiance fields for view synthesis. Commun. ACM 2021, 65, 99–106.

2. Kerbl, B.; Kopanas, G.; Leimkühler, T.; Drettakis, G. 3d gaussian splatting for real-time radiance field rendering. ACM Trans. Graph. 2023, 42, 139:1–139:14.

3. Nayar, S.; Narasimhan, S. Vision in bad weather. In Proceedings of the Seventh IEEE International Conference on Computer Vision, Kerkyra, Greece, 20–27 September 1999; Volume 2, pp. 820–827.

4. Flir. The Ultimate Infrared Handbook for R&D Professionals. 2013. Available online: www.flir.com/thg (accessed on 21 April 2025).

5. Tsai, P.F.; Liao, C.H.; Yuan, S.M. Using Deep Learning with Thermal Imaging for Human Detection in Heavy Smoke Scenarios. Sensors 2022, 22, 5351.

6. Gade, R.; Moeslund, T. Thermal cameras and applications: A survey. Mach. Vis. Appl. 2014, 25, 245–262.

7. He, Y.; Deng, B.; Wang, H.; Cheng, L.; Zhou, K.; Cai, S.; Ciampa, F. Infrared machine vision and infrared thermography with deep learning: A review. Infrared Phys. Technol. 2021, 116, 103754.

8. Torresan, H.; Turgeon, B.; Ibarra-Castanedo, C.; Hebert, P.; Maldague, X.P. Advanced surveillance systems: Combining video and thermal imagery for pedestrian detection. In Proceedings of the SPIE Thermosense XXVI, Orlando, FL, USA, 12 April 2004; Volume 5405, pp. 506–515.

9. Akula, A.; Ghosh, R.; Sardana, H. Thermal imaging and its application in defence systems. AIP Conf. Proc. 2011, 1391, 333–335.

10. Wong, W.K.; Tan, P.N.; Loo, C.K.; Lim, W.S. An effective surveillance system using thermal camera. In Proceedings of the International Conference on Signal Acquisition and Processing, Kuala Lumpur, Malaysia, 3–5 April 2009; pp. 13–17.

11. Yeom, S. Thermal image tracking for search and rescue missions with a drone. Drones 2024, 8, 53.

12. Rudol, P.; Doherty, P. Human body detection and geolocalization for UAV search and rescue missions using color and thermal imagery. In Proceedings of the IEEE Aerospace Conference, Big Sky, Montana, 1–8 March 2008; pp. 1–8.

13. Rodin, C.; de Lima, L.; de Alcantara Andrade, F.; Haddad, D.; Johansen, T.; Storvold, R. Object classification in thermal images using convolutional neural networks for search and rescue missions with unmanned aerial systems. In Proceedings of the International Joint Conference on Neural Networks (IJCNN), Rio de Janeiro, Brazil, 8–13 July 2018; pp. 1–8.

14. Iwasaki, K.; Fukushima, K.; Nagasaka, Y.; Ishiyama, N.; Sakai, M.; Nagasaka, A. Real-time monitoring and postprocessing of thermal infrared video images for sampling and mapping groundwater discharge. Water Resour. Res. 2023, 59, e2022WR033630.

15. Fuentes, S.; Tongson, E.; Gonzalez Viejo, C. Urban green infrastructure monitoring using remote sensing from integrated visible and thermal infrared cameras mounted on a moving vehicle. Sensors 2021, 21, 295.

16. Lega, M.; Napoli, R.M. Aerial infrared thermography in the surface waters contamination monitoring. Desalination Water Treat. 2010, 23, 141–151.

17. Pyykonen, P.; Peussa, P.; Kutila, M.; Fong, K.W. Multi-camera-based smoke detection and traffic pollution analysis system. In Proceedings of the IEEE International Conference on Intelligent Computer Communication and Processing (ICCP), Cluj-Napoca, Romania, 8–10 September 2016; pp. 233–238.

18. Fuentes, S.; Tongson, E.J.; De Bei, R.; Gonzalez Viejo, C.; Ristic, R.; Tyerman, S.; Wilkinson, K. Non-invasive tools to detect smoke contamination in grapevine canopies, berries and wine: A remote sensing and machine learning modeling approach. Sensors 2019, 19, 3335.

19. Kim, H.; Lamichhane, N.; Kim, C.; Shrestha, R. Innovations in Building Diagnostics and Condition Monitoring: A Comprehensive Review of Infrared Thermography Applications. Buildings 2023, 13, 2829.

20. De Luis-Ruiz, J.; Sedano-Cibrian, J.; Perez-Alvarez, R.; Pereda-Garcia, R.; Salas-Menocal, R. Generation of 3D Thermal Models for the Analysis of Energy Efficiency in Buildings. In Advances in Design Engineering III; Springer: Berlin/Heidelberg, Germany, 2023; pp. 741–754.

21. Martin, M.; Chong, A.; Biljecki, F.; Miller, C. Infrared thermography in the built environment: A multi-scale review. Renew. Sustain. Energy Rev. 2022, 165, 112540.

22. Masri, Y.; Rakha, T. A scoping review of non-destructive testing (NDT) techniques in building performance diagnostic inspections. Constr. Build. Mater. 2020, 265, 120542.

23. Malhotra, V.; Carino, N. CRC Handbook on Nondestructive Testing of Concrete; CRC Press: Boca Raton, FL, USA, 2004.

24. Jackson, C.; Sherlock, C.; Moore, P. Leak Testing. In Nondestructive Testing Handbook; American Society for Nondestructive Testing: Columbus, OH, USA, 1998.

25. Maset, E.; Fusiello, A.; Crosilla, F.; Toldo, R.; Zorzetto, D. Photogrammetric 3D building reconstruction from thermal images. ISPRS Ann. Photogramm. Remote Sens. Spat. Inf. Sci. 2017, IV-2-W3, 25–32.

26. Zhou, Z.; Majeed, Y.; Naranjo, G.D.; Gambacorta, E.M.T. Assessment for crop water stress with infrared thermal imagery in precision agriculture: A review and future prospects for deep learning applications. Comput. Electron. Agric. 2021, 182, 106019.

27. Jurado, J.M.; Lopez, A.; Padua, L.; Sousa, J.J. Remote sensing image fusion on 3D scenarios: A review of applications for agriculture and forestry. Int. J. Appl. Earth Obs. Geoinf. 2022, 112, 102856.

28. Nasi, R.; Honkavaara, E.; Blomqvist, M.; Lyytikainen-Saarenmaa, P.; Hakala, T.; Viljanen, N.; Kantola, T.; Holopainen, M. Remote sensing of bark beetle damage in urban forests at individual tree level using a novel hyperspectral camera from UAV and aircraft. Urban For. Urban Green. 2018, 30, 72–83.

29. Miyoshi, G.T.; Arruda, M.S.; Osco, L.P.; Marcato Junior, J.; Goncalves, D.N.; Imai, N.N.; Tommaselli, A.M.G.; Honkavaara, E.; Goncalves, W.N. A novel deep learning method to identify single tree species in UAV-based hyperspectral images. Remote Sens. 2020, 12, 1294.

30. Lee, S.; Moon, H.; Choi, Y.; Yoon, D.K. Analyzing thermal characteristics of urban streets using a thermal imaging camera: A case study on commercial streets in Seoul, Korea. Sustainability 2018, 10, 519.

31. Carrasco-Benavides, M.; Antunez-Quilobran, J.; Baffico-Hernandez, A.; Avila-Sanchez, C.; Ortega-Farias, S.; Espinoza, S.; Gajardo, J.; Mora, M.; Fuentes, S. Performance assessment of thermal infrared cameras of different resolutions to estimate tree water status from two cherry cultivars: An alternative to midday stem water potential and stomatal conductance. Sensors 2020, 20, 3596.

32. Hernandez-Clemente, R.; Hornero, A.; Mottus, M.; Penuelas, J.; Gonzalez-Dugo, V.; Jimenez, J.; Suarez, L.; Alonso, L.; Zarco-Tejada, P. Early diagnosis of vegetation health from high-resolution hyperspectral and thermal imagery: Lessons learned from empirical relationships and radiative transfer modelling. Curr. For. Rep. 2019, 5, 169–183.

33. Parihar, G.; Saha, S.; Giri, L.I. Application of infrared thermography for irrigation scheduling of horticulture plants. Smart Agric. Technol. 2021, 1, 100021.

34. Glowacz, A. Fault diagnosis of electric impact drills using thermal imaging. Measurement 2021, 171, 108815.

35. Lahiri, B.; Bagavathiappan, S.; Jayakumar, T.; Philip, J. Medical applications of infrared thermography: A review. Infrared Phys. Technol. 2012, 55, 221–235.

36. Goel, J.; Nizamoglu, M.; Tan, A.; Gerrish, H.; Cranmer, K.; ElMuttardi, N.; Barnes, D.; Dziewulski, P. A prospective study comparing the FLIR ONE with laser Doppler imaging in the assessment of burn depth by a tertiary burns unit in the United Kingdom. Scars Burn. Heal. 2020, 6, 2059513120974261.

37. Jaspers, M.E.; Carriere, M.; Meij-de Vries, A.; Klaessens, J.; Van Zuijlen, P. The FLIR ONE thermal imager for the assessment of burn wounds: Reliability and validity study. Burns 2017, 43, 1516–1523.

38. Choi, Y.; Kim, N.; Hwang, S.; Park, K.; Yoon, J.; An, K.; Kweon, I. KAIST Multi-Spectral Day/Night Data Set for Autonomous and Assisted Driving. IEEE Trans. Intell. Transp. Syst. 2018, 19, 934–948.

39. Lee, A.; Cho, Y.; Shin, Y.S.; Kim, A.; Myung, H. ViViD++: Vision for visibility dataset. IEEE Robot. Autom. Lett. 2022, 7, 6282–6289.

40. Farooq, M.A.; Shariff, W.; Khan, F.; Corcoran, P.; Rotariu, C. C3I Thermal Automotive Dataset. 2022. Available online: https://ieee-dataport.org/documents (accessed on 21 April 2025).

41. Hwang, S.; Park, J.; Kim, N.; Choi, Y.; Kweon, I. Multispectral Pedestrian Detection: Benchmark Dataset and Baseline. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Boston, MA, USA, 7–12 June 2015; pp. 1237–1244.

42. Berg, A.; Ahlberg, J.; Felsberg, M. A thermal infrared dataset for evaluation of short-term tracking methods. In Proceedings of the Swedish Symposium on Image Analysis, Copenhagen, Denmark, 12–14 June 2015.

43. Kopaczka, M.; Kolk, R.; Merhof, D. A fully annotated thermal face database and its application for thermal facial expression recognition. In Proceedings of the IEEE International Instrumentation and Measurement Technology Conference (I2MTC), Houston, TX, USA, 14–17 May 2018; pp. 1–6.

44. Cho, Y.; Bianchi-Berthouze, N.; Marquardt, N.; Julier, S. Deep Thermal Imaging: Proximate Material Type Recognition in the Wild through Deep Learning of Spatial Surface Temperature Patterns. In Proceedings of the CHI Conference on Human Factors in Computing Systems, Montreal, QC, Canada, 21–26 April 2018; pp. 1–12.

45. Vertens, J.; Zürn, J.; Burgard, W. HeatNet: Bridging the Day-Night Domain Gap in Semantic Segmentation with Thermal Images. arXiv 2020, arXiv:2003.04645.

46. Dai, W.; Zhang, Y.; Chen, S.; Sun, D.; Kong, D. A Multi-spectral Dataset for Evaluating Motion Estimation Systems. arXiv 2021, arXiv:2007.00622.

47. Yin, J.; Li, A.; Li, T.; Yu, W.; Zou, D. M2DGR: A Multi-sensor and Multi-scenario SLAM Dataset for Ground Robots. IEEE Robot. Autom. Lett. 2021, 7, 2266–2273.

48. Vidas, S.; Lakemond, R.; Denman, S.; Fookes, C.; Sridharan, S.; Wark, T. A mask-based approach for the geometric calibration of thermal-infrared cameras. IEEE Trans. Instrum. Meas. 2012, 61, 1625–1635.

49. Ko, K.; Shim, K.; Lee, K.; Kim, C. Large-scale benchmark for uncooled infrared image deblurring. IEEE Sens. J. 2023, 23, 30119–30128.

50. Kuang, X.; Sui, X.; Liu, Y.; Chen, Q.; Gu, G. Single infrared image enhancement using a deep convolutional neural network. Neurocomputing 2019, 332, 119–128.

51. Liu, Y.; Liu, S.; Wang, Z. A general framework for image fusion based on multi-scale transform and sparse representation. Inf. Fusion 2015, 24, 147–164.

52. Huang, N.; Liu, K.; Liu, Y.; Zhang, Q.; Han, J. Cross-modality person re-identification via multi-task learning. Pattern Recognit. 2022, 128, 108653.

53. Poggi, M.; Ramirez, P.Z.; Tosi, F.; Salti, S.; Mattoccia, S.; Stefano, L.D. Cross-spectral Neural Radiance Fields. In Proceedings of the International Conference on 3D Vision (3DV), Prague, Czech Republic, 12–15 September 2022; pp. 606–616.

54. Ye, T.; Wu, Q.; Deng, J.; Liu, G.; Liu, L.; Xia, S.; Pei, L. Thermal-nerf: Neural radiance fields from an infrared camera. In Proceedings of the 2024 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Abu Dhabi, United Arab Emirates, 14–18 October 2024; pp. 1046–1053.

55. Hassan, M.; Forest, F.; Fink, O.; Mielle, M. Thermonerf: Multimodal neural radiance fields for thermal novel view synthesis. arXiv 2024, arXiv:2403.12154.

56. Lin, Y.; Pan, X.; Fridovich-Keil, S.; Wetzstein, G. ThermalNeRF: Thermal Radiance Fields. In Proceedings of the 2024 IEEE International Conference on Computational Photography (ICCP), Lausanne, Switzerland, 22–24 July 2024; pp. 1–12.

57. Zhong, C.; Xu, C. TeX-NeRF: Neural Radiance Fields from Pseudo-TeX Vision. arXiv 2024, arXiv:2410.04873.

58. Xu, J.; Liao, M.; Kathirvel, R.; Patel, V. Leveraging Thermal Modality to Enhance Reconstruction in Low-Light Conditions. In Proceedings of the European Conference on Computer Vision, Milan, Italy, 29 September–4 October 2024; pp. 321–339.

59. Chopra, S.; Cladera, F.; Murali, V.; Kumar, V. AgriNeRF: Neural Radiance Fields for Agriculture in Challenging Lighting Conditions. arXiv 2024, arXiv:2409.15487.

60. Özer, M.; Weiherer, M.; Hundhausen, M.; Egger, B. Exploring Multi-modal Neural Scene Representations With Applications on Thermal Imaging. arXiv 2024, arXiv:2403.11865.

61. Chen, Q.; Shu, S.; Bai, X. Thermal3D-GS: Physics-induced 3D Gaussians for Thermal Infrared Novel-view Synthesis. In Proceedings of the European Conference on Computer Vision, Milan, Italy, 29 September–4 October 2024; pp. 253–269.

62. Lu, R.; Chen, H.; Zhu, Z.; Qin, Y.; Lu, M.; Zhang, L.; Yan, C.; Xue, A. ThermalGaussian: Thermal 3D Gaussian Splatting. arXiv 2024, arXiv:2409.07200.

63. Liu, Y.; Chen, X.; Yan, S.; Cui, Z.; Xiao, H.; Liu, Y.; Zhang, M. ThermalGS: Dynamic 3D Thermal Reconstruction with Gaussian Splatting. Remote Sens. 2025, 17, 335.

64. Yang, K.; Liu, Y.; Cui, Z.; Liu, Y.; Zhang, M.; Yan, S.; Wang, Q. NTR-Gaussian: Nighttime Dynamic Thermal Reconstruction with 4D Gaussian Splatting Based on Thermodynamics. arXiv 2025, arXiv:2503.03115.

65. Planck, M. On the Law of Distribution of Energy in the Normal Spectrum. Ann. Der Phys. 1901, 4, 1.

66. Stefan, J.; Ebel, J. Kommentierter Neusatz von Über die Beziehung Zwischen der Wärmestrahlung und der Temperatur. 2014. Available online: https://scholar.google.com.hk/scholar?hl=zh-CN&as_sdt=0%2C5&q=Kommentierter+Neusatz+von+Uber+die+Beziehung+Zwischen+der+Wärmestrahlung+und+der+Temperatur.&btnG= (accessed on 21 April 2025).

67. Rogalski, A. Infrared Detectors; CRC Press: Boca Raton, FL, USA, 2000.

68. Adan, A.; Quintana, B.; Aguilar, J.G.; Pérez, V.; Castilla, F.J. Towards the Use of 3D Thermal Models in Constructions. Sustainability 2020, 12, 8521.

69. Grechi, G.; Fiorucci, M.; Marmoni, G.M.; Martino, S. 3D Thermal Monitoring of Jointed Rock Masses Through Infrared Thermography and Photogrammetry. Remote Sens. 2021, 13, 957.

70. Schmidt, R. How Patent-Pending Technology Blends Thermal and Visible Light. Available online: https://www.fluke.com/en/learn/blog/thermal-imaging/how-patent-pending-technology-blends-thermal-and-visible-light (accessed on 13 March 2025).

71. Bao, F.; Jape, S.; Schramka, A.; Wang, J.; McGraw, T.E.; Jacob, Z. Why Are Thermal Images Blurry. arXiv 2023, arXiv:2307.15800.

72. Zhang, H.; Hu, Y.; Yan, M. Thermal Image Super-Resolution Based on Lightweight Dynamic Attention Network for Infrared Sensors. Sensors 2023, 23, 8717.

73. Chen, C.; Yeh, C.; Chang, B.; Pan, J. 3D Reconstruction from IR Thermal Images and Reprojective Evaluations. Math. Probl. Eng. 2015, 2015, e520534.

74. Ma, Y.; Wang, Y.; Mei, X.; Liu, C.; Dai, X.; Fan, F.; Huang, J. Visible/Infrared Combined 3D Reconstruction Scheme Based on Nonrigid Registration of Multi-Modality Images with Mixed Features. IEEE Access 2019, 7, 19199–19211.

75. Lang, S.; Jager, K. 3D Scene Reconstruction from IR Image Sequences for Image-Based Navigation Update and Target Detection of an Autonomous Airborne System. Infrared Technol. Appl. XXXIV 2008, 6940, 535–543.

76. Schonberger, J.; Frahm, J. Structure-from-Motion Revisited. In Proceedings of the Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 27–30 June 2016.

77. Robertson, D.; Cipolla, R. Practical Image Processing and Computer Vision. In Chapter Structure from Motion; John Wiley & Sons: New York, NY, USA, 2009.

78. Schonberger, J.; Zheng, E.; Pollefeys, M.; Frahm, J. Pixelwise view selection for unstructured multi-view stereo. In Proceedings of the European Conference on Computer Vision (ECCV), Amsterdam, The Netherlands, 11–14 October 2016.

79. Cheng, Z.; Esteves, C.; Jampani, V.; Kar, A.; Maji, S.; Makadia, A. Lu-Nerf: Scene and Pose Estimation by Synchronizing Local Unposed Nerfs. arXiv 2023, arXiv:2306.05410.

80. Furukawa, Y.; Hernandez, C. Multi-View Stereo: A Tutorial. Found. Trends Comput. Graph. Vis. 2015, 9, 1–148.

81. Wang, S.; Jiang, H.; Xiang, L. CT-MVSnet: Efficient Multi-View Stereo with Cross-Scale Transformer. In Proceedings of the International Conference on Multimedia Modeling, Amsterdam, The Netherlands, 29 January–2 February 2024; pp. 394–408.

82. Mildenhall, B.; Hedman, P.; Martin-Brualla, R.; Srinivasan, P.; Barron, J. NeRF in the dark: High dynamic range view synthesis from noisy raw images. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, New Orleans, LA, USA, 18–24 June 2022; pp. 16190–16199.

83. Cui, Z.T.; Gu, L.; Sun, X.; Ma, X.Z.; Qiao, Y.; Harada, T. Aleth-Nerf: Low-Light Condition View Synthesis with Concealing Fields. arXiv 2023, arXiv:2303.05807.

84. Martin-Brualla, R.; Radwan, N.; Sajjadi, M.; Barron, J.; Dosovitskiy, A.; Duckworth, D. Nerf in the wild: Neural radiance fields for unconstrained photo collections. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Nashville, TN, USA, 20–25 June 2021; pp. 7210–7219.

85. Wang, P.; Liu, L.; Liu, Y.; Theobalt, C.; Komura, T.; Wang, W. Neus: Learning neural implicit surfaces by volume rendering for multi-view reconstruction. arXiv 2021, arXiv:2106.10689.

86. Zeng, Y.J.; Lei, J.; Feng, T.M.; Qin, X.Y.; Li, B.; Wang, Y.Q.; Wang, D.X.; Song, J. Neural Radiance Fields-Based 3D Reconstruction of Power Transmission Lines Using Progressive Motion Sequence Images. Sensors 2023, 23, 9537.

87. Zhu, H.; Sun, Y.; Liu, C.; Xia, L.; Luo, J.; Qiao, N.; Nevatia, R.; Kuo, C. Multimodal Neural Radiance Field. In Proceedings of the IEEE International Conference on Robotics and Automation (ICRA), London, UK, 29 May–2 June 2023; pp. 9393–9399.

88. Wang, B.J.; Zhang, D.H.; Su, Y.X.; Zhang, H.J. Enhancing View Synthesis with Depth-Guided Neural Radiance Fields and Improved Depth Completion. Sensors 2024, 24, 1919.

89. Yu, Z.; Chen, A.; Huang, B.; Sattler, T.; Geiger, A. Mip-splatting: Alias-free 3d gaussian splatting. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 16–22 June 2024; pp. 19447–19456.

90. Zou, C.; Ma, Q.; Wang, J.; Lu, M.; Zhang, S.; He, Z. Gaussianenhancer: A General Rendering Enhancer for Gaussian Splatting. In Proceedings of the ICASSP 2025–2025 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Hyderabad, India, 6–11 April 2025; pp. 1–5.

91. Zou, C.; Ma, Q.; Wang, J.; Lu, M.; Zhang, S.; Qu, Z.; He, Z. Gaussianenhancer++: A General GS-Agnostic Rendering Enhancer. Symmetry 2025, 17, 442.

92. Lu, T.; Yu, M.; Xu, L.; Xiangli, Y.; Wang, L.; Lin, D.; Dai, B. Scaffold-gs: Structured 3d gaussians for view-adaptive rendering. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 16–22 June 2024; pp. 20654–20664.

93. Guédon, A.; Lepetit, V. Sugar: Surface-aligned gaussian splatting for efficient 3d mesh reconstruction and high-quality mesh rendering. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 16–22 June 2024; pp. 5354–5363.

94. Huang, B.; Yu, Z.; Chen, A.; Geiger, A.; Gao, S. 2d gaussian splatting for geometrically accurate radiance fields. In SIGGRAPH 2024 Conference Papers; Association for Computing Machinery: Denver, CO, USA, 2024.

95. Zhang, B.; Fang, C.; Shrestha, R.; Liang, Y.; Long, X.; Tan, P. Rade-gs: Rasterizing depth in gaussian splatting. arXiv 2024, arXiv:2406.01467.

96. Rangelov, D.; Waanders, S.; Waanders, K.; van Keulen, M.; Miltchev, R. Impact of camera settings on 3D Reconstruction quality: Insights from NeRF and Gaussian Splatting. Sensors 2024, 24, 7594.

97. Ye, S.; Dong, Z.; Hu, Y.; Wen, Y.; Liu, Y. Gaussian in the Dark: Real-Time View Synthesis From Inconsistent Dark Images Using Gaussian Splatting. Comput. Graph. Forum 2024, 43, e15213.

98. Lin, S.; Wang, H.; Zhang, X.; Wang, D.; Zu, D.; Song, J.; Liu, Z.; Huang, Y.; Huang, K.; Tao, N.; et al. Direct spray-coating of highly robust and transparent Ag nanowires for energy saving windows. Nano Energy 2019, 62, 111–116.

99. Hsu, P.; Liu, C.; Song, A.Y.; Zhang, Z.; Peng, Y.; Xie, J.; Liu, K.; Wu, C.; Catrysse, P.; Cai, L.; et al. A dual-mode textile for human body radiative heating and cooling. Sci. Adv. 2017, 3, e1700895.

100. Wang, Z.; Bovik, A.C.; Sheikh, H.R.; Simoncelli, E.P. Image quality assessment: From error visibility to structural similarity. IEEE Trans. Image Process. 2004, 13, 600–612.

101. Zhang, R.; Isola, P.; Efros, A.A.; Shechtman, E.; Wang, Q. The unreasonable effectiveness of deep features as a perceptual metric. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 586–595.

| Metrics | Methods | Fruits1 | Fruits2 | Cup | Laptop | Hairdryer | Avg. |
|---|---|---|---|---|---|---|---|
| PSNR (dB) ↑ | 3DGS [2] | 37.34 | 29.29 | 29.47 | 35.55 | 30.90 | 32.51 |
|  | ThermalGaussian [62] | 18.82 | 18.65 | 17.01 | 10.31 | 17.64 | 16.49 |
|  | 2DGS [94] | 28.52 | 22.79 | 27.77 | 36.12 | 28.48 | 28.74 |
|  | RaDe-GS [95] | 32.56 | 28.46 | 30.24 | 37.25 | 27.75 | 31.25 |
|  | Ours | 35.51 | 30.25 | 29.72 | 38.94 | 29.50 | 32.78 |
| SSIM ↑ | 3DGS | 0.967 | 0.935 | 0.948 | 0.975 | 0.951 | 0.955 |
|  | ThermalGaussian | 0.743 | 0.781 | 0.782 | 0.554 | 0.744 | 0.721 |
|  | 2DGS | 0.922 | 0.872 | 0.952 | 0.972 | 0.948 | 0.933 |
|  | RaDe-GS | 0.952 | 0.932 | 0.954 | 0.974 | 0.943 | 0.951 |
|  | Ours | 0.952 | 0.933 | 0.949 | 0.972 | 0.899 | 0.941 |
| LPIPS ↓ | 3DGS | 0.108 | 0.156 | 0.099 | 0.058 | 0.089 | 0.102 |
|  | ThermalGaussian | 0.500 | 0.361 | 0.284 | 0.628 | 0.299 | 0.414 |
|  | 2DGS | 0.233 | 0.229 | 0.104 | 0.076 | 0.100 | 0.148 |
|  | RaDe-GS | 0.162 | 0.168 | 0.095 | 0.065 | 0.108 | 0.120 |
|  | Ours | 0.145 | 0.095 | 0.087 | 0.021 | 0.111 | 0.092 |
| Mem. (MB) ↓ | 3DGS | 1467 | 1733 | 1743 | 1783 | 1563 | 1658 |
|  | ThermalGaussian | 945 | 1363 | 1179 | 1147 | 1075 | 1142 |
|  | 2DGS | 659 | 1069 | 711 | 711 | 729 | 776 |
|  | RaDe-GS | 939 | 1309 | 1027 | 1859 | 1049 | 1237 |
|  | Ours | 551 | 571 | 502 | 596 | 480 | 540 |
| FPS ↑ | 3DGS | 292 | 351 | 230 | 234 | 218 | 265 |
|  | ThermalGaussian | 390 | 432 | 312 | 347 | 314 | 359 |
|  | 2DGS | 260 | 251 | 212 | 173 | 223 | 224 |
|  | RaDe-GS | 201 | 298 | 209 | 140 | 184 | 206 |
|  | Ours | 387 | 441 | 301 | 315 | 318 | 352 |
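
The Avg. column in the table above appears to be the arithmetic mean over the five scenes. The following is a minimal sketch, under that assumption, that recomputes the average for two PSNR rows and derives the relative memory footprint of Ours versus 3DGS. The names `scene_average` and `relative_to` are hypothetical helpers introduced for illustration and are not part of the paper's code.

```python
from statistics import mean

# Per-scene values copied from the table above
# (scene order: Fruits1, Fruits2, Cup, Laptop, Hairdryer).
psnr_db = {
    "3DGS": [37.34, 29.29, 29.47, 35.55, 30.90],
    "Ours": [35.51, 30.25, 29.72, 38.94, 29.50],
}
memory_mb = {
    "3DGS": [1467, 1733, 1743, 1783, 1563],
    "Ours": [551, 571, 502, 596, 480],
}


def scene_average(values):
    """Arithmetic mean over scenes (assumed to be how Avg. is computed)."""
    return mean(values)


def relative_to(baseline, candidate):
    """Ratio of the candidate's scene average to the baseline's scene average."""
    return scene_average(candidate) / scene_average(baseline)


if __name__ == "__main__":
    for name, values in psnr_db.items():
        print(f"{name}: mean PSNR = {scene_average(values):.2f} dB")
    ratio = relative_to(memory_mb["3DGS"], memory_mb["Ours"])
    print(f"Ours uses {ratio:.1%} of the 3DGS memory on average")
```

The same helpers can be applied to any other row of the table to check its Avg. entry or to compare two methods on one metric.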

| Metrics | Methods | Fruits1 | Fruits2 | Cup | Laptop | Hairdryer | Avg. |
|---|---|---|---|---|---|---|---|
| PSNR (dB) ↑ | 3DGS [2] | 37.26 | 36.37 | 38.43 | 41.79 | 36.77 | 38.13 |
|  | ThermalGaussian [62] | 23.47 | 25.98 | 26.74 | 23.53 | 26.42 | 25.23 |
|  | 2DGS [94] | 37.55 | 36.21 | 38.11 | 39.85 | 37.18 | 37.78 |
|  | RaDe-GS [95] | 38.58 | 35.75 | 38.37 | 39.70 | 36.49 | 37.78 |
|  | Ours | 39.98 | 39.66 | 42.12 | 44.05 | 38.09 | 40.77 |
| SSIM ↑ | 3DGS | 0.941 | 0.925 | 0.953 | 0.970 | 0.941 | 0.946 |
|  | ThermalGaussian | 0.727 | 0.695 | 0.743 | 0.635 | 0.715 | 0.703 |
|  | 2DGS | 0.938 | 0.918 | 0.945 | 0.952 | 0.943 | 0.940 |
|  | RaDe-GS | 0.929 | 0.924 | 0.948 | 0.955 | 0.936 | 0.939 |
|  | Ours | 0.937 | 0.939 | 0.960 | 0.963 | 0.852 | 0.930 |
| LPIPS ↓ | 3DGS | 0.078 | 0.115 | 0.131 | 0.055 | 0.130 | 0.102 |
|  | ThermalGaussian | 0.249 | 0.264 | 0.319 | 0.240 | 0.338 | 0.282 |
|  | 2DGS | 0.080 | 0.122 | 0.147 | 0.076 | 0.134 | 0.112 |
|  | RaDe-GS | 0.087 | 0.126 | 0.153 | 0.077 | 0.149 | 0.118 |
|  | Ours | 0.075 | 0.075 | 0.110 | 0.028 | 0.124 | 0.082 |
| Mem. (MB) ↓ | 3DGS | 1433 | 1685 | 1447 | 1511 | 1403 | 1496 |
|  | ThermalGaussian | 945 | 1363 | 1179 | 1147 | 1075 | 1142 |
|  | 2DGS | 827 | 1089 | 951 | 1177 | 717 | 952 |
|  | RaDe-GS | 865 | 1135 | 887 | 941 | 817 | 929 |
|  | Ours | 551 | 571 | 502 | 596 | 480 | 540 |
| FPS ↑ | 3DGS | 307 | 428 | 342 | 333 | 334 | 349 |
|  | ThermalGaussian | 442 | 487 | 358 | 365 | 380 | 406 |
|  | 2DGS | 215 | 239 | 220 | 152 | 225 | 210 |
|  | RaDe-GS | 205 | 283 | 225 | 228 | 228 | 232 |
|  | Ours | 439 | 544 | 505 | 447 | 445 | 476 |
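
The PSNR rows in the tables above are reported in dB. As an illustration of how such a value is obtained, here is a minimal sketch assuming the standard definition, PSNR = 10·log10(peak² / MSE), with pixel intensities scaled to [0, 1]. The function name `psnr`, its flat-list input format, and the `peak` parameter are assumptions made for this example and do not come from the paper's implementation; SSIM and LPIPS require dedicated libraries and are not sketched here.

```python
import math


def psnr(reference, rendered, peak=1.0):
    """PSNR in dB between two equally sized flat sequences of pixel values."""
    if len(reference) != len(rendered):
        raise ValueError("images must have the same number of pixels")
    if not reference:
        raise ValueError("images must not be empty")
    # Mean squared error over all pixels.
    mse = sum((r - s) ** 2 for r, s in zip(reference, rendered)) / len(reference)
    if mse == 0:
        return math.inf  # identical images have unbounded PSNR
    return 10.0 * math.log10(peak ** 2 / mse)


if __name__ == "__main__":
    ground_truth = [0.0, 0.0, 0.5, 1.0]
    prediction = [0.1, 0.0, 0.5, 0.9]
    print(f"PSNR = {psnr(ground_truth, prediction):.2f} dB")
```

Because PSNR is logarithmic in the error, a gap of a few dB between two methods corresponds to a substantial difference in mean squared error.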

| Variant | PSNR ↑ | SSIM ↑ | LPIPS ↓ |
|---|---|---|---|
| w/o Thermal Gradient Alignment Loss | 32.22 | 0.937 | 0.144 |
| w/o SR module | 31.54 | 0.930 | 0.340 |
| Ours | 32.78 | 0.941 | 0.092 |

| Variant | PSNR ↑ | SSIM ↑ | LPIPS ↓ |
|---|---|---|---|
| w/o Thermal Gradient Alignment Loss | 40.71 | 0.924 | 0.100 |
| w/o SR module | 39.26 | 0.904 | 0.218 |
| Ours | 40.77 | 0.930 | 0.082 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Zou, C.; Ma, Q.; Wang, J.; Lu, R.; Lu, M.; Qu, Z. TGA-GS: Thermal Geometrically Accurate Gaussian Splatting. Appl. Sci. 2025, 15, 4666. https://doi.org/10.3390/app15094666
