A Review of Physical and Perceptual Feature Extraction Techniques for Speech, Music and Environmental Sounds

Abstract

1. Introduction

2. Machine Hearing

2.1. Architecture of Machine Hearing Systems

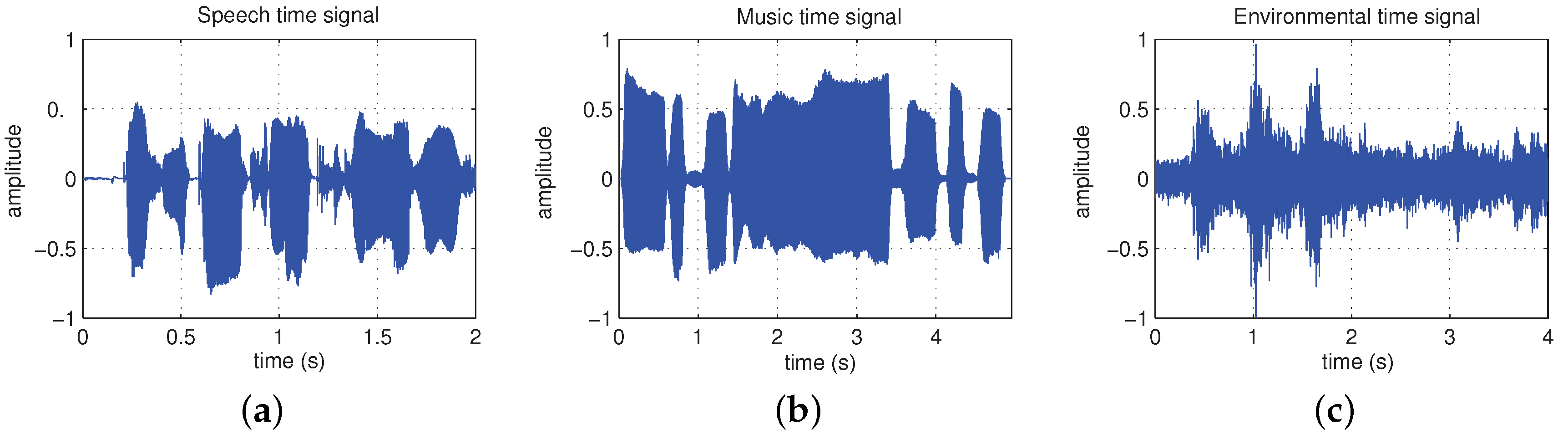

2.2. Key Differences among Speech, Music and Environmental Sounds

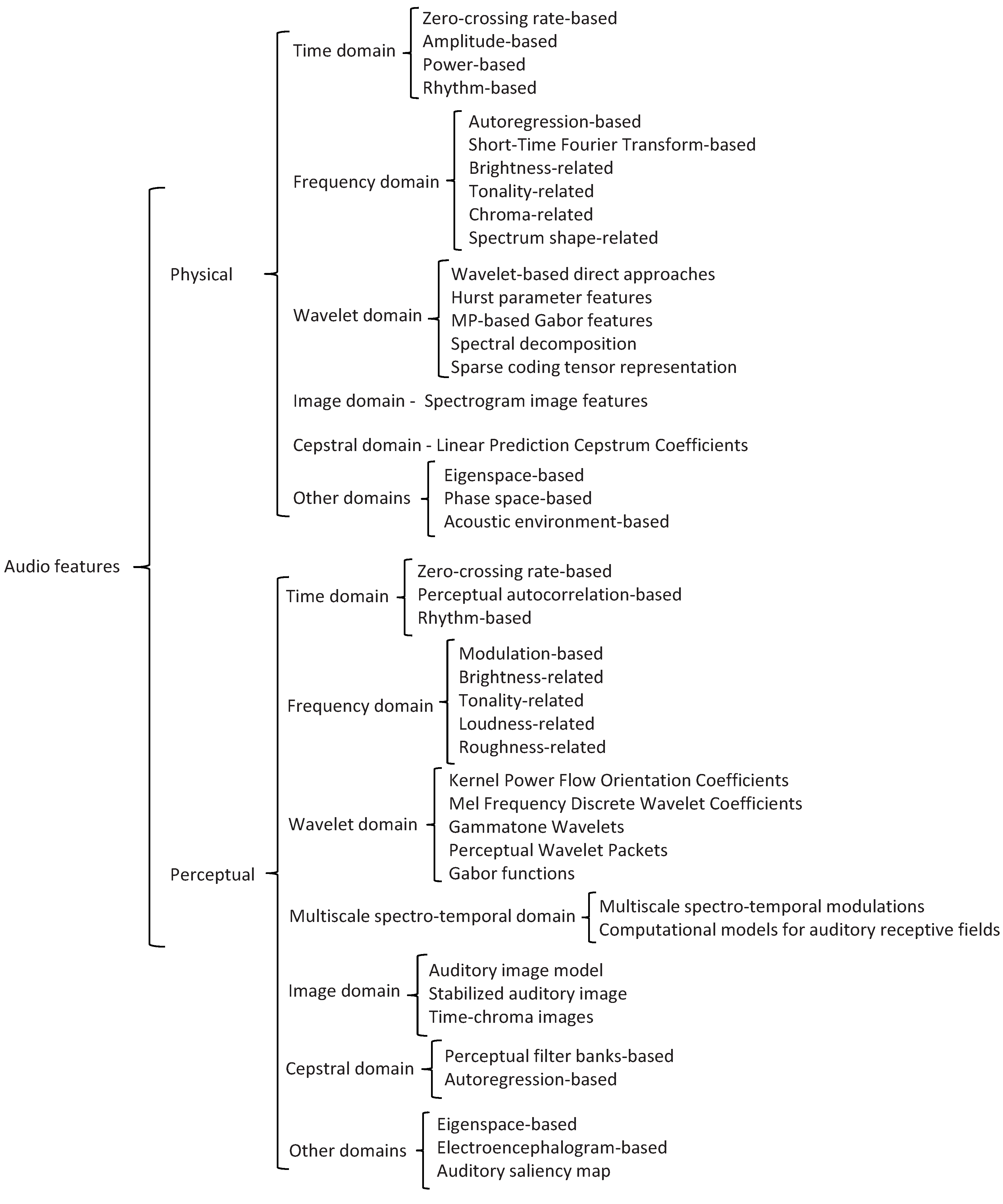

3. Audio Features Taxonomy and Review of Extraction Techniques

4. Physical Audio Features Extraction Techniques

4.1. Time Domain Physical Features

4.1.1. Zero-Crossing Rate-Based Physical Features

- Zero-crossing rate (ZCR): it is defined as the number of times the audio signal waveform crosses the zero amplitude level during a one-second interval, which provides a rough estimate of the dominant frequency component of the signal (Kedem [39]). Features based on this criterion have been applied to speech/music discrimination, music classification (Li et al. [40], Bergstra et al. [41], Morchen et al. [42], Tzanetakis and Cook [28], Wang et al. [9]), singing voice detection in music and environmental sound recognition (see the works by Mitrović et al. [17] and Peltonen et al. [18]), musical instrument classification (Benetos et al. [10]), voice activity detection in noisy conditions (Ghaemmaghami et al. [43]) and audio-based surveillance systems (as in Rabaoui et al. [24]).

- Linear prediction zero-crossing ratio (LP-ZCR): this feature is defined as the ratio between the ZCR of the original audio and the ZCR of the prediction error obtained from a linear prediction filter (see El-Maleh et al. [44]). It is intended for discriminating between signals that show different degrees of correlation (e.g., between voiced and unvoiced speech).
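The two zero-crossing features above can be sketched in a few lines of NumPy. This is an illustrative sketch, not the implementation used in the cited works: the function names are hypothetical, the ZCR is normalized to crossings per second as in the definition, and the LP-ZCR obtains the prediction error from an autocorrelation-method LPC fit of an assumed order of 10.

```python
import numpy as np


def zero_crossing_rate(x, fs):
    """Zero crossings per second; samples equal to 0 are treated as positive."""
    positive = np.asarray(x) >= 0
    crossings = np.count_nonzero(positive[1:] != positive[:-1])
    return crossings * fs / len(x)


def lpc_coefficients(x, order):
    """Autocorrelation-method LPC; returns a with A(z) = 1 + a1 z^-1 + ... + ap z^-p."""
    x = np.asarray(x, dtype=float)
    n = len(x)
    r = np.array([np.dot(x[: n - k], x[k:]) for k in range(order + 1)])
    # Toeplitz normal equations R @ alpha = r[1:], with x[n] ~ sum_k alpha_k x[n-k].
    R = np.array([[r[abs(i - j)] for j in range(order)] for i in range(order)])
    alpha = np.linalg.lstsq(R, r[1:], rcond=None)[0]  # lstsq tolerates a near-singular R
    return np.concatenate(([1.0], -alpha))


def lp_zcr(x, fs, order=10):
    """Ratio between the ZCR of x and the ZCR of its linear prediction error."""
    a = lpc_coefficients(x, order)
    residual = np.convolve(x, a)[: len(x)]  # e[n] = x[n] + sum_k a_k x[n-k]
    return zero_crossing_rate(x, fs) / zero_crossing_rate(residual, fs)
```

Strongly correlated signals (such as voiced speech) have few zero crossings but an almost white prediction error, so their LP-ZCR falls well below 1; for noise-like signals the prediction error resembles the input and the ratio stays close to 1.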

4.1.2. Amplitude-Based Features

- Amplitude descriptor (AD): it allows for distinguishing sounds with different signal envelopes and has been applied, for instance, to the discrimination of animal sounds (Mitrović et al. [46]). It is obtained by separating the signal into high- and low-amplitude segments by means of an adaptive threshold (a level-crossing computation) and collecting the energy, duration and variation of duration of these segments.

- MPEG-7 audio waveform (AW): this feature is computed from a downsampled waveform envelope, and it is defined as the maximum and minimum values of the signal within each non-overlapping analysis time window [45]. AW has been used as a feature in environmental sound recognition, like in the works of Muhammad and Alghathbar [47], or by Valero and Alías [48].

- Shimmer: it computes the cycle-to-cycle variations of the waveform amplitude. This feature has generally been applied to study pathological voices (Klingholz [49], Kreiman and Gerratt [50], Farrús et al. [51]). However, it has also been applied to discriminate between vocal and non-vocal regions in songs (as in Murthy and Koolagudi [52]), to characterize growl and screaming singing styles (Kato and Ito [53]), to prototype, classify and create musical sounds (Jensen [54]) and to improve speaker recognition and verification (Farrús et al. [51]), to name a few.
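A minimal sketch of the min/max waveform envelope and of shimmer follows. The AW function keeps only the per-window minimum and maximum and omits the rest of the MPEG-7 descriptor; the shimmer functions assume that the peak amplitude of each pitch cycle has already been measured by a pitch-period detector (not shown). The "local" and dB variants follow definitions commonly used in voice analysis, and all function names are hypothetical.

```python
import numpy as np


def waveform_min_max(x, window):
    """Minimum and maximum sample in each non-overlapping window (trailing samples dropped)."""
    x = np.asarray(x, dtype=float)
    n_frames = len(x) // window
    frames = x[: n_frames * window].reshape(n_frames, window)
    return frames.min(axis=1), frames.max(axis=1)


def local_shimmer(cycle_amplitudes):
    """Mean absolute difference of consecutive cycle amplitudes over the mean amplitude."""
    a = np.asarray(cycle_amplitudes, dtype=float)
    return np.mean(np.abs(np.diff(a))) / np.mean(a)


def shimmer_db(cycle_amplitudes):
    """Mean absolute amplitude ratio of consecutive cycles, expressed in decibels."""
    a = np.asarray(cycle_amplitudes, dtype=float)
    return np.mean(np.abs(20.0 * np.log10(a[1:] / a[:-1])))
```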

4.1.3. Power-Based Features

- Short-time energy: using a frame-based procedure, short-time energy (STE) can be defined as the average energy per signal frame (which is in fact the MPEG-7 audio power descriptor [45]). Nevertheless, other STE definitions in the literature compute power in the spectral domain (e.g., see Chu et al. [55]). STE can be used to detect the transition from unvoiced to voiced speech and vice versa (Zhang and Kuo [56]). This feature has also been used in applications like musical onset detection (Smith et al. [57]), speech recognition (Liang and Fan [58]), environmental sound recognition (Peltonen et al. [18], Muhammad and Alghathbar [47], Valero and Alías [48]) and audio-based surveillance systems (Rabaoui et al. [24]).

- MPEG-7 temporal centroid: it represents the time instant around which the signal energy is concentrated, and it is computed as the energy-weighted temporal mean of the signal envelope (measured in seconds) [45]. The temporal centroid has been used as an audio feature in the field of environmental sound recognition, like in the works by Muhammad and Alghathbar [47], and Valero and Alías [48].

- MPEG-7 log attack time: it characterizes the attack of a given sound (e.g., for musical sounds, instruments can generate either smooth or sudden transitions) and it is computed as the logarithm of the elapsed time from the beginning of a sound signal to its first local maximum [45]. Besides being applied to musical onset detection (Smith et al. [57]), log attack time (LAT) has been used for environmental sound recognition (see Muhammad and Alghathbar [47], and Valero and Alías [48]).
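The three power-based descriptors can be sketched on top of a simple framing helper. Assumptions: frames are rectangular and taken at a fixed hop, frame times are frame start times, and the attack is assumed to begin when the amplitude envelope first exceeds 2% of its maximum (a common heuristic, not necessarily the MPEG-7 threshold) and to end at the first local maximum, as described above. All names are hypothetical.

```python
import numpy as np


def frame_signal(x, frame_len, hop):
    """Split x into rectangular frames of shape (n_frames, frame_len)."""
    x = np.asarray(x, dtype=float)
    n_frames = 1 + (len(x) - frame_len) // hop
    idx = np.arange(frame_len)[None, :] + hop * np.arange(n_frames)[:, None]
    return x[idx]


def short_time_energy(x, frame_len, hop):
    """Average energy (mean squared amplitude) of each frame."""
    return np.mean(frame_signal(x, frame_len, hop) ** 2, axis=1)


def temporal_centroid(energy, hop, fs):
    """Energy-weighted mean time, in seconds, of a frame energy envelope."""
    energy = np.asarray(energy, dtype=float)
    times = np.arange(len(energy)) * hop / fs  # frame start times
    return np.sum(times * energy) / np.sum(energy)


def log_attack_time(energy, hop, fs, start_ratio=0.02):
    """log10 of the time from sound start to the first local maximum of the envelope."""
    env = np.sqrt(np.asarray(energy, dtype=float))  # amplitude-like envelope
    start = int(np.argmax(env >= start_ratio * env.max()))  # first frame above threshold
    peak = start
    while peak + 1 < len(env) and env[peak + 1] >= env[peak]:
        peak += 1  # climb until the envelope stops increasing
    attack = max((peak - start) * hop / fs, hop / fs)  # floor at one hop to avoid log10(0)
    return np.log10(attack)
```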

4.1.4. Rhythm-Based Physical Features

- Pulse metric: this is a measure that uses long-time band-passed autocorrelation to determine how rhythmic a sound is in a 5-second window (as defined by Scheirer and Slaney [61]). It is computed by finding the peaks of the output envelopes in six frequency bands and then comparing them across bands, giving a high value when all subbands present a regular pattern. This feature has been used for speech/music discrimination.

- Pulse clarity: it is a high-level musical dimension that conveys how easily listeners can perceive the underlying rhythmic or metrical pulsation in a given musical piece, or at a particular moment during that piece (as defined in the work by Lartillot et al. [62]). In that work, the authors describe several descriptors to compute pulse clarity based on approaches such as the analysis of the periodicity of the onset curve via autocorrelation, resonance functions, or entropy. Friberg et al. [63] employed this feature to discover correlations with qualitative measures that describe overall properties of the music used in psychology studies.

- Band periodicity: this is a measure of the strength of rhythmic or repetitive structures in audio signals (see Lu et al. [64]). Band periodicity is defined within a frequency band, and it is obtained by taking, for each signal frame, the maximum peak of the subband autocorrelation function and averaging this value over all frames.

- Beat spectrum/spectrogram: it is a two-dimensional parametrization based on time variations and lag time, thus providing an interpretable representation that reflects temporal changes of tempo (see the work by Foote [22,65]). The beat spectrum shows relevant peaks at rhythm periods that match the rhythmic properties of the signal. It can be used for discriminating between music pieces (or between parts of a single music signal) with different tempo patterns.

- Cyclic beat spectrum: or CBS for short, this is a representation of the tempo of a music signal that groups multiples of the fundamental period of the signal together in a single tempo class (Kurth et al. [66]). Thus, CBS gives a more compact representation of the fundamental beat period of a song. This feature has been employed in the field of audio retrieval.

- Beat tracker: this feature is derived following an algorithmic approach based on signal subband decomposition and the application of a comb filter analysis in each subband (see Scheirer [67]). The beat tracker mimics to a large extent the human ability to track rhythmic beats in music, and it yields not only the tempo but also the beat timing positions.

- Beat histogram: it provides a more general tempo perspective and summarizes the beat tempos present in a music signal (Tzanetakis and Cook [28]). In this case, the wavelet transform (see Section 4.3 for further details) is used to decompose the signal into octave bands, and the most salient periodicities in each subband are then accumulated to generate the so-called beat histogram. This feature has been used for music genre classification [28].
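Two of the rhythm descriptors lend themselves to a short NumPy sketch. The beat spectrum follows Foote's idea of summing a frame self-similarity matrix along its diagonals (here cosine similarity is assumed and the diagonals are averaged), and band periodicity takes the highest normalized autocorrelation value of each frame of an already band-passed subband signal and averages it over frames. The minimum lag, which skips the main lobe around lag 0, and all function names are assumptions.

```python
import numpy as np


def beat_spectrum(features, max_lag):
    """Mean cosine similarity between frames i and i + lag, for lag = 0..max_lag.

    features: array (n_frames, n_dims), e.g. per-frame magnitude spectra; max_lag < n_frames.
    """
    f = np.asarray(features, dtype=float)
    f = f / np.maximum(np.linalg.norm(f, axis=1, keepdims=True), 1e-12)
    similarity = f @ f.T  # self-similarity matrix S(i, j)
    # Averaging (instead of summing) compensates for shorter diagonals at large lags.
    return np.array([np.mean(np.diagonal(similarity, offset=lag)) for lag in range(max_lag + 1)])


def band_periodicity(subband, frame_len, hop, min_lag):
    """Mean over frames of the largest normalized autocorrelation value at lags >= min_lag."""
    subband = np.asarray(subband, dtype=float)
    peaks = []
    for start in range(0, len(subband) - frame_len + 1, hop):
        frame = subband[start:start + frame_len]
        frame = frame - frame.mean()
        r = np.correlate(frame, frame, mode="full")[frame_len - 1:]  # lags 0..frame_len-1
        if r[0] > 0:  # skip silent frames
            peaks.append(np.max(r[min_lag:]) / r[0])
    return float(np.mean(peaks))
```

For a feature sequence that repeats every four frames, the beat spectrum peaks at lags 4, 8, 12, and so on, which is the kind of rhythm-period peak described above.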

4.2. Frequency Domain Physical Features

- Autoregression-based

- STFT-based

- Brightness-related

- Tonality-related

- Chroma-related

- Spectrum shape-related

4.2.1. Autoregression-Based Frequency Features

- Linear prediction coefficients: or LPC for short, this feature represents an all-pole filter that captures the spectral envelope (SE) of a speech signal (the formants or spectral resonances that appear in the vocal tract), and it has been extensively used in speech coding and recognition applications. LPC have also been applied to audio segmentation and general-purpose audio retrieval, like in the works by Khan et al. [68,69].

- Line spectral frequencies: also referred to as Line Spectral Pairs (LSP) in the literature, Line Spectral Frequencies (LSF) are a robust representation of LPC parameters for quantization and interpolation purposes. They can be computed as the phases of the roots of the palindromic and antipalindromic polynomials that constitute the LPC polynomial representation, which in turn represent the vocal tract when the glottis is closed and open, respectively (see Itakura [70]). Due to their intrinsic robustness, they have been widely applied to a diverse set of classification problems like speaker segmentation (Sarkar and Sreenivas [71]), instrument recognition and speech/music discrimination (Fu [13]).

- Code excited linear prediction features: or CELP for short, this feature was introduced by Schroeder and Atal [72], and CELP has become one of the most important influences on today's speech coding standards. This feature comprises spectral features like LSP but also two codebook coefficients related to the signal's pitch and the prediction residual signal. CELP features have also been applied in the environmental sound recognition framework, like in the work by Tsau et al. [73].
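The LPC and LSF computations can be sketched as follows, assuming the autocorrelation method with the Levinson-Durbin recursion and the sign convention A(z) = 1 + a1 z^-1 + ... + ap z^-p. The LSFs are taken as the angles in (0, π) of the roots of P(z) = A(z) + z^-(p+1) A(1/z) and Q(z) = A(z) - z^-(p+1) A(1/z); generic polynomial root finding is used here for clarity, whereas speech codecs typically use faster dedicated searches. Function names are hypothetical.

```python
import numpy as np


def autocorrelation(x, max_lag):
    """Biased autocorrelation r[0..max_lag] of a 1-D signal."""
    x = np.asarray(x, dtype=float)
    n = len(x)
    return np.array([np.dot(x[: n - k], x[k:]) for k in range(max_lag + 1)])


def levinson_durbin(r, order):
    """Solve the LPC normal equations; returns (a, prediction_error_power)."""
    a = np.zeros(order + 1)
    a[0] = 1.0
    err = r[0]
    for i in range(1, order + 1):
        acc = r[i] + np.dot(a[1:i], r[i - 1:0:-1])
        k = -acc / err  # reflection coefficient
        a_prev = a.copy()
        a[1:i] = a_prev[1:i] + k * a_prev[i - 1:0:-1]
        a[i] = k
        err *= 1.0 - k * k
    return a, err


def lpc_to_lsf(a, tol=1e-4):
    """Line spectral frequencies (radians, ascending) from LPC coefficients a."""
    a_ext = np.append(np.asarray(a, dtype=float), 0.0)
    p_poly = a_ext + a_ext[::-1]  # palindromic: A(z) + z^-(p+1) A(1/z)
    q_poly = a_ext - a_ext[::-1]  # antipalindromic: A(z) - z^-(p+1) A(1/z)
    angles = np.angle(np.concatenate([np.roots(p_poly), np.roots(q_poly)]))
    # Keep one root of each conjugate pair and drop the trivial roots at z = 1 and z = -1.
    return np.sort(angles[(angles > tol) & (angles < np.pi - tol)])
```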

4.2.2. STFT-Based Frequency Features

- Subband energy ratio: it is usually defined as a measure of the normalized signal energy along a predefined set of frequency subbands. In a broad sense, it coarsely describes the signal energy distribution of the spectrum (Mitrović et al. [17]). There are different approaches regarding the number and characteristics of the analyzed subbands (e.g., Mel scale, ad-hoc subbands, etc.). It has been used for audio segmentation and music analysis applications (see Jiang et al. [60], or Srinivasan et al. [74]) and environmental sound recognition (Peltonen et al. [18]).

- Spectral flux: or SF for short, this feature is defined as the 2-norm of the frame-to-frame spectral amplitude difference vector (see Scheirer and Slaney [61]), and it describes sudden changes in the frequency energy distribution of sounds, which can be applied for detection of musical note onsets or, more generally speaking, detection of significant changes in the spectral distribution. It measures how quickly the power spectrum changes and it can be used to determine the timbre of an audio signal. This feature has been used for speech/music discrimination (like in Jiang et al. [60], or in Khan et al. [68,69]), musical instrument classification (Benetos et al. [10]), music genre classification (Li et al. [40], Lu et al. [12], Tzanetakis and Cook [28], Wang et al. [9]) and environmental sound recognition (see Peltonen et al. [18]).

- Spectral peaks: this feature was defined by Wang [8] as constellation maps that show the most relevant energy bin components in the time-frequency signal representation. Since only the most prominent peaks are retained, spectral peaks are highly robust to possible signal distortions (e.g., low signal-to-noise ratio (SNR), see Klingholz [49]; equalization; coders) and are therefore suitable for robust recognition applications. This feature has been used for automatic music retrieval (e.g., the well-known Shazam search engine by Wang [8]), but also for robust speech recognition (see Farahani et al. [75]).

- MPEG-7 spectrum envelope and normalized spectrum envelope: the audio spectrum envelope (ASE) is a log-frequency power spectrum that can be used to generate a reduced spectrogram of the original audio signal, as described by Kim et al. [76]. It is obtained by summing the energy of the original power spectrum within a series of frequency bands. Each decibel-scale spectral vector is normalized with the RMS energy envelope, thus yielding a normalized log-power version of the ASE called normalized audio spectrum envelope (NASE) (Kim et al. [76]). The ASE feature has been used in audio event classification [76], music genre classification (Lee et al. [77]) and environmental sound recognition (see Muhammad and Alghathbar [47], or Valero and Alías [48]).

- Stereo panning spectrum feature: or SPSF for short, this feature provides a time-frequency representation of the left/right stereo panning of a stereo audio signal (Tzanetakis et al. [78]). It is thus conceived to capture relevant information of music signals, and more specifically, information that reflects typical postproduction in professional recordings. The additional information obtained through SPSF can be used to improve the accuracy of music classification and retrieval systems (Tzanetakis et al. [79]).

- Group delay function: also known as GDF, it is defined as the negative derivative of the unwrapped phase of the signal Fourier transform (see Yegnanarayana and Murthy [80]) and reveals information about temporal localization of events (i.e., signal peaks). This feature has been used for determining the instants of significant excitation in speech signals (like in Smits and Yegnanarayana [81], or Rao et al. [82]) and in beat identification in music performances (Sethares et al. [83]).

- Modified group delay function: or MGDF for short, it is defined as a smoother version of the GDF, reducing its intrinsic spiky nature by introducing a cepstral smoothing process prior to GDF computation. It has been used in speaker identification (Hegde et al. [84]), but also in speech analysis, speech segmentation, speech recognition and language identification frameworks (Murthy and Yegnanarayana [85]).
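The STFT-based descriptors in this subsection can be sketched as follows. Assumptions: a Hann window with a 1024-sample frame and a 512-sample hop, spectral flux as the 2-norm of consecutive magnitude-spectrum differences, subband energy ratios over band edges given directly as FFT-bin indices (Mel or ad-hoc bands would only change the edges), and the group delay evaluated with the identity tau(ω) = Re{Y(ω)/X(ω)}, where Y is the DFT of n·x[n], which avoids explicit phase unwrapping. The cepstral smoothing of the MGDF is not included, and the names are hypothetical.

```python
import numpy as np


def stft_magnitude(x, frame_len=1024, hop=512):
    """Magnitude STFT with a Hann window, shape (n_frames, frame_len // 2 + 1)."""
    x = np.asarray(x, dtype=float)
    window = np.hanning(frame_len)
    n_frames = 1 + (len(x) - frame_len) // hop
    frames = np.stack([x[i * hop:i * hop + frame_len] * window for i in range(n_frames)])
    return np.abs(np.fft.rfft(frames, axis=1))


def spectral_flux(mag):
    """2-norm of the difference between consecutive magnitude spectra."""
    return np.linalg.norm(np.diff(mag, axis=0), axis=1)


def subband_energy_ratio(mag, band_edges):
    """Energy in each [lo, hi) band of FFT bins, relative to the energy of all listed bands."""
    power = mag ** 2
    bands = np.stack([power[:, lo:hi].sum(axis=1)
                      for lo, hi in zip(band_edges[:-1], band_edges[1:])], axis=1)
    return bands / np.maximum(bands.sum(axis=1, keepdims=True), 1e-12)


def group_delay(frame):
    """Group delay in samples per rfft bin: Re{Y/X} with Y = DFT(n * x[n])."""
    frame = np.asarray(frame, dtype=float)
    n = np.arange(len(frame))
    X = np.fft.rfft(frame)
    Y = np.fft.rfft(n * frame)
    return (X.real * Y.real + X.imag * Y.imag) / np.maximum(np.abs(X) ** 2, 1e-12)
```

As a sanity check, an impulse delayed by five samples has a constant group delay of 5 at every frequency, which illustrates how the GDF localizes events in time.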

4.2.3. Brightness-Related Physical Frequency Features

- Spectral centroid: or SC for short, this feature describes the center of gravity of the spectral energy. It can be defined as the first moment (frequency position of the mean value) of the signal frame magnitude spectrum, as in the works by Li et al. [40] or Tzanetakis and Cook [28], or obtained from the power spectrum of the entire signal, as in MPEG-7. SC indicates the frequency around which the spectral energy is concentrated. In the MPEG-7 standard definition [45], the audio spectrum centroid (ASC) is defined by computing SC over the power spectrum obtained from an octave-frequency scale analysis, and it roughly describes the sharpness of a sound. SC has been applied to musical onset detection (Smith et al. [57]), music classification (Bergstra et al. [41], Li et al. [40], Lu et al. [12], Morchen et al. [42], Wang et al. [9]), environmental sound recognition (like in Peltonen et al. [18], Muhammad and Alghathbar [47], Valero and Alías [48]) and, more recently, music mood classification (Ren et al. [86]).

- Spectral center: this feature is defined as the median frequency of the signal spectrum, i.e., the frequency below and above which the spectral energies are balanced. Therefore, it is a measure close to the spectral centroid. It has been shown to be useful for automatic rhythm tracking in musical signals (see Sethares et al. [83]).
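Both brightness descriptors reduce to simple statistics of a spectrum. The sketch below uses the power spectrum of a whole signal (the frame-wise magnitude-spectrum variant only changes the input) and takes the spectral center as the first frequency at which the cumulative energy reaches half of the total; the function names are hypothetical.

```python
import numpy as np


def power_spectrum(x, fs):
    """Bin frequencies (Hz) and one-sided power spectrum of the whole signal."""
    x = np.asarray(x, dtype=float)
    return np.fft.rfftfreq(len(x), d=1.0 / fs), np.abs(np.fft.rfft(x)) ** 2


def spectral_centroid(freqs, spectrum):
    """First moment (mean frequency) of the spectrum, in Hz."""
    return np.sum(freqs * spectrum) / np.sum(spectrum)


def spectral_center(freqs, spectrum):
    """Median frequency: first bin where the cumulative energy reaches half of the total."""
    cumulative = np.cumsum(spectrum)
    return freqs[np.searchsorted(cumulative, 0.5 * cumulative[-1])]
```

For a 1 kHz tone plus a weaker 3 kHz tone, the centroid lies between the two partials while the spectral center stays on the dominant 1 kHz component, which shows how the mean-based and median-based measures can differ.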

4.2.4. Tonality-Related Physical Frequency Features

- Fundamental frequency: also denoted as F0. The MPEG-7 standard defines the audio fundamental frequency feature as the first peak of the local normalized spectro-temporal autocorrelation function [45]. There are several methods in the literature to compute F0, e.g., autocorrelation-based, spectral-based and cepstral-based methods, and combinations of them (Hess [87]). This feature has been used in applications like musical onset detection (Smith et al. [57]), musical genre classification (Tzanetakis and Cook [28]), audio retrieval (Wold et al. [88]) and environmental sound recognition (Muhammad and Alghathbar [47], Valero and Alías [48]). In the literature, F0 is sometimes referred to as pitch, since it may represent a rough estimate of the perceived tonality of the signal (e.g., in the pitch histogram and pitch profile features).

- Pitch histogram: instead of using a very specific and local descriptor like fundamental frequency, the pitch histogram describes more compactly the pitch content of a signal. Pitch histogram has been used for musical genre classification by Tzanetakis and Cook [28], as it gives a general perspective of the aggregated notes (frequencies) present in a musical signal along a certain period.

- Pitch profile: this feature is a more precise representation of musical pitch, as it takes into account both the pitch mistuning effects produced by real instruments and the pitch representation of percussive sounds. Zhu and Kankanhalli [89] showed that the pitch profile feature outperforms conventional chroma-based features in musical key detection.

- Harmonicity: this feature is useful for distinguishing between tonal or harmonic sounds (e.g., birds, flute, etc.) and noise-like sounds (e.g., dog bark, snare drum, etc.). Most traditional harmonicity features either use an impulse train (like in Ishizuka et al. [90]) to search for the set of peaks at multiples of F0, or use autocorrelation-inspired functions to find the self-repetition of the signal in the time or frequency domain (as in Kristjansson et al. [91]). Spectral local harmonicity, proposed in the work by Khao [92], is a method that uses only the subregions of the spectrum that still retain a sufficient harmonic structure. In the MPEG-7 standard, two harmonicity measures are proposed. The harmonic ratio (HR) is a measure of the proportion of harmonic components in the power spectrum. The upper limit of harmonicity (ULH) is an estimate of the frequency beyond which the spectrum no longer has any harmonic structure. Harmonicity has also been used in the field of environmental sound recognition (Muhammad and Alghathbar [47], Valero and Alías [48]). Other harmonicity-based features for music genre and instrument family classification have been defined, like harmonic concentration, harmonic energy entropy or harmonic derivative (see Srinivasan and Kankanhalli [93]).

- Inharmonicity: this feature measures the extent to which the partials of a sound deviate from their ideal positions in a harmonic context (i.e., integer multiples of a fundamental frequency). Some approaches take into account only partial frequencies (like Agostini et al. [94,95]), while others also consider partial energies and bandwidths (see Cai et al. [96]).

- Harmonic-to-Noise Ratio: the harmonic-to-noise ratio (HNR) is computed as the ratio between the energy of the harmonic part and the energy of the rest of the signal, expressed in decibels (dB) (Boersma [97]). Although HNR has generally been applied to analyze pathological voices (like in Klingholz [49], or in Lee et al. [98]), it has also been applied in some music-related applications such as the characterization of growl and screaming singing styles, as in Kato and Ito [53].

- MPEG-7 spectral timbral descriptors: the MPEG-7 standard defines some features that are closely related to the harmonic structure of sounds and are appropriate for the discrimination of musical sounds: MPEG-7 harmonic spectral centroid (HSC) (the amplitude-weighted average of the harmonic frequencies, closely related to brightness and sharpness), MPEG-7 harmonic spectral deviation (HSD) (amplitude deviation of the harmonic peaks from their neighboring harmonic peaks, being minimum if all the harmonic partials have the same amplitude), MPEG-7 harmonic spectral spread (HSS) (the power-weighted root-mean-square deviation of the harmonic peaks from the HSC, related to harmonic bandwidths), and MPEG-7 harmonic spectral variation (HSV) (correlation of harmonic peak amplitudes in two adjacent frames, representing the harmonic variability over time). MPEG-7 spectral timbral descriptors have been employed for environmental sound recognition (Muhammad and Alghathbar [47], Valero and Alías [48]).

- Jitter: it computes the cycle-to-cycle variations of the fundamental frequency (Klingholz [49]), that is, the average absolute difference between consecutive periods of speech (Farrús et al. [51]). Besides typically being applied to analyze pathological voices (like in Klingholz [49], or in Kreiman and Gerratt [50]), it has also been applied to the prototyping, classification and creation of musical sounds (Jensen [54]), to improve speaker recognition (Farrús et al. [51]), to characterize growl and screaming singing styles (Kato and Ito [53]) and to discriminate between vocal and non-vocal regions in songs (Murthy and Koolagudi [52]), among others.
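As an example of the autocorrelation family of F0 estimators mentioned above, together with the jitter definition, consider the following sketch. It assumes a single voiced frame, an F0 search range of 50 to 500 Hz and integer-lag resolution (no interpolation); the jitter function assumes the cycle periods have already been extracted. It is a simplified illustration rather than the MPEG-7 or any cited estimator, and the names are hypothetical.

```python
import numpy as np


def f0_autocorrelation(frame, fs, fmin=50.0, fmax=500.0):
    """F0 (Hz) from the autocorrelation lag with the highest value in [fs/fmax, fs/fmin]."""
    frame = np.asarray(frame, dtype=float)
    frame = frame - frame.mean()
    n = len(frame)
    min_lag = int(fs / fmax)
    max_lag = min(int(fs / fmin), n - 1)
    r = np.array([np.dot(frame[: n - k], frame[k:]) for k in range(max_lag + 1)])
    best_lag = min_lag + int(np.argmax(r[min_lag:]))
    return fs / best_lag


def local_jitter(periods):
    """Mean absolute difference between consecutive periods over the mean period."""
    p = np.asarray(periods, dtype=float)
    return np.mean(np.abs(np.diff(p))) / np.mean(p)
```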

4.2.5. Chroma-Related Physical Frequency Features

- Chromagram: also known as chroma-based feature, the chromagram is a spectrum-based energy representation that takes into account the 12 pitch classes within an octave, as defined in music theory (Shepard [99]), and it can be computed from a logarithmic STFT (Bartsch and Wakefield [100]). It thus constitutes a very compact representation suited to musical and harmonic signals, following a perceptual approach.

- Chroma energy distribution normalized statistics: or CENS for short, this feature was conceived for music similarity matching and has been shown to be robust to tempo and timbre variations (Müller et al. [101]). Therefore, it can be used for identifying similarities between different interpretations of a given music piece.
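The chroma idea can be illustrated by folding the power spectrum of one frame onto the 12 pitch classes. This sketch assumes equal temperament with A4 = 440 Hz, maps each FFT bin to its nearest semitone and ignores bins outside an assumed 27.5 to 4200 Hz range; a chromagram is then the sequence of these vectors over STFT frames. It is not the exact procedure of Bartsch and Wakefield, and the names are hypothetical.

```python
import numpy as np

PITCH_CLASSES = ["C", "C#", "D", "D#", "E", "F", "F#", "G", "G#", "A", "A#", "B"]


def chroma_vector(frame, fs, fmin=27.5, fmax=4200.0):
    """12-bin pitch-class energy profile of one frame, normalized to sum to 1."""
    frame = np.asarray(frame, dtype=float)
    power = np.abs(np.fft.rfft(frame * np.hanning(len(frame)))) ** 2
    freqs = np.fft.rfftfreq(len(frame), d=1.0 / fs)
    keep = (freqs >= fmin) & (freqs <= fmax)
    midi = 69 + 12 * np.log2(freqs[keep] / 440.0)  # MIDI note number, A4 = 69
    pitch_class = np.mod(np.round(midi).astype(int), 12)  # 0 = C, ..., 9 = A
    chroma = np.zeros(12)
    np.add.at(chroma, pitch_class, power[keep])  # accumulate energy per pitch class
    return chroma / max(chroma.sum(), 1e-12)
```

Because octave information is discarded, a 440 Hz tone and an 880 Hz tone both concentrate their energy in the "A" bin.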

4.2.6. Spectrum Shape-Related Physical Frequency Features

- Bandwidth: usually defined as the second-order statistic of the signal spectrum, it helps to discriminate tonal sounds (with low bandwidths) from noise-like sounds (with high bandwidths) (see Peeters [34]). However, it is difficult to distinguish between complex tonal sounds (e.g., music, instruments, etc.) and complex noise-like sounds using only this feature. It can be defined over the power spectrum or its logarithmic version (see Liu et al. [59], or Srinivasan and Kankanhalli [93]), and it can be computed over the whole spectrum or within different subbands (like in Ramalingam and Krishnan [102]). MPEG-7 defines the audio spectrum spread (ASS) as the standard deviation of the signal spectrum, which constitutes the second moment (the ASC being the first one). Spectral bandwidth has been used for music classification (Bergstra et al. [41], Lu et al. [12], Morchen et al. [42], Tzanetakis and Cook [28]) and environmental sound recognition (Peltonen et al. [18], Muhammad and Alghathbar [47], Valero and Alías [48]).

- Spectral dispersion: this is a measure closely related to spectral bandwidth. The only difference is that it takes into account the spectral center (median) instead of the spectral centroid (mean) (see Sethares et al. [83]).

- Spectral rolloff point: defined as the 95th percentile of the power spectral distribution (see Scheirer and Slaney [61]), the spectral rolloff point can be regarded as a measure of the skewness of the spectral shape. It can be used, for example, for distinguishing between voiced and unvoiced speech sounds. It has been used in music genre classification (like in Li and Ogihara [103], Bergstra et al. [41], Li et al. [40], Lu et al. [12], Morchen et al. [42], Tzanetakis and Cook [28], Wang et al. [9]), speech/music discrimination (Scheirer and Slaney [61]), musical instrument classification (Benetos et al. [10]), environmental sound recognition (Peltonen et al. [18]), audio-based surveillance systems (Rabaoui et al. [24]) and music mood classification (Ren et al. [86]).

- Spectral flatness: this is a measure of uniformity in the frequency distribution of the power spectrum, and it can be computed as the ratio between the geometric and the arithmetic mean of a subband (see Ramalingam and Krishnan [102]) (equivalent to the MPEG-7 audio spectrum flatness (ASF) descriptor [45]). This feature allows distinguishing between noise-like sounds (high value of spectral flatness) and more tonal sounds (low value). This feature has been used in audio fingerprinting (see Lancini et al. [104]), musical onset detection (Smith et al. [57]), music classification (Allamanche et al. [105], Cheng et al. [106], Tzanetakis and Cook [28]) and environmental sound recognition (Muhammad and Alghathbar [47], Valero and Alías [48]).

- Spectral crest factor: in contrast to spectral flatness measure, spectral crest factor measures how peaked the power spectrum is, and it is also useful for differentiation of noise-like (lower spectral crest factor) and tonal sounds (higher spectral crest factor). It can be computed as the ratio between the maximum and the mean of the power spectrum within a subband, and has been used for audio fingerprinting (see Lancini et al. [104], Li and Ogihara [103]) and music classification (Allamanche et al. [105], Cheng et al. [106]).

- Subband spectral flux: or SSF for short, this feature is inversely related to spectral flatness, being more relevant in subbands with non-uniform frequency content. In fact, SSF measures the proportion of dominant partials in different subbands, and it can be computed by accumulating the differences between adjacent frequency bins in a subband. It has been used for improving the representation and recognition of environmental sounds (Cai et al. [96]) and music mood classification (Ren et al. [86]).

- Entropy: this is another measure that describes spectrum uniformity (or flatness), and it can be computed following different approaches (Shannon entropy, or its generalization named Rényi entropy) and also in different subbands (see Ramalingam and Krishnan [102]). It has been used for automatic speech recognition by computing the Shannon entropy in different equal-size subbands, like in Misra et al. [107].

- Octave-based Spectral Contrast: also referred to as OSC, it is defined as the difference between peaks (which generally correspond to harmonic content in music) and valleys (where non-harmonic or noise components are more dominant), measured in subbands by octave-scale filters and using a neighborhood criterion in its computation (Jiang et al. [108]). To represent the whole music piece, the mean and standard deviation of the spectral contrast and spectral peak of all frames are used as the spectral contrast features. OSC features have been used for music classification (Lee et al. [77], Lu et al. [12], Yang et al. [109]) and music mood classification, as in Ren et al. [86].

- Spectral skewness and kurtosis: spectral skewness, which is computed as the 3rd order moment of the spectral distribution, characterizes the asymmetry of this distribution around its mean value. Spectral kurtosis, in turn, describes the flatness of the spectral distribution around its mean, and it is computed as the 4th order moment (see Peeters et al. [34]). Both parameters have been applied to music genre classification (Baniya et al. [112]) and music mood classification (Ren et al. [86]).
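Most of the spectrum shape descriptors in this subsection are statistics of the power spectrum treated as a probability distribution over frequency. The sketch below computes several of them for one spectrum (passing a slice of the spectrum gives a subband version). The 95% rolloff, the small constant added to avoid log(0), the standardized form of skewness and kurtosis, and the normalization of the entropy by its maximum value are assumptions; the function name is hypothetical.

```python
import numpy as np


def spectral_shape(freqs, power, rolloff=0.95, eps=1e-12):
    """Shape descriptors of one power spectrum (freqs and power of equal length)."""
    freqs = np.asarray(freqs, dtype=float)
    p = np.asarray(power, dtype=float) + eps  # eps avoids log(0) in flatness and entropy
    w = p / p.sum()  # power spectrum as a distribution over frequency
    centroid = np.sum(freqs * w)
    spread = np.sqrt(np.sum((freqs - centroid) ** 2 * w))  # bandwidth
    return {
        "centroid": centroid,
        "bandwidth": spread,
        "skewness": np.sum((freqs - centroid) ** 3 * w) / spread ** 3,
        "kurtosis": np.sum((freqs - centroid) ** 4 * w) / spread ** 4,
        "rolloff": freqs[np.searchsorted(np.cumsum(w), rolloff)],
        "flatness": np.exp(np.mean(np.log(p))) / np.mean(p),  # geometric / arithmetic mean
        "crest": np.max(p) / np.mean(p),
        "entropy": -np.sum(w * np.log2(w)) / np.log2(len(w)),  # 1.0 for a flat spectrum
    }
```

A flat (noise-like) spectrum yields flatness, crest factor and normalized entropy all close to 1, whereas a single spectral line drives the flatness towards 0 and the crest factor up, matching the tonal versus noise-like interpretation given above.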

4.3. Wavelet-Based Physical Features

- Wavelet-based direct approaches: different types or families of wavelets have been used and defined in the literature in the field of audio processing. Daubechies wavelets have been used in blind source speech separation (see the work by Missaoui and Lachiri [116]), Daubechies together with Haar wavelets have been used in music classification (Popescu et al. [117]), and Coiflet wavelets have recently been applied to audio signal denoising (Vishwakarma et al. [118]). Other approaches, like the Daubechies wavelet coefficient histogram (DWCH) features, are defined as the first three statistical moments of the coefficient histograms that represent the subbands obtained from a Daubechies wavelet decomposition of the audio signal (see Li et al. [40,119]). They have been applied in the field of speech recognition (Kim et al. [120]), in music analysis applications such as genre classification, artist style identification and emotion detection (as in Li et al. [40,119,121], Mandel and Ellis [122], Yang et al. [109]) and in mood classification (Ren et al. [86]). Also, in the work by Tabinda and Ahire [123], different wavelet families (like Daubechies, symlet, coiflet, biorthogonal, stationary and dmey) are used in audio steganography (an application for hiding data in cover speech so that it is imperceptible in the original audio).

- Hurst parameter features: or pH for short, this is a time-frequency statistical representation of the vocal source composed of a vector of Hurst parameters (defined by Hurst [25]), computed by applying a wavelet-based multidimensional transformation to the short-time input speech in the work by Sant’Ana [124]. Thanks to its statistical definition, pH is robust to channel distortions, as it models the stochastic behavior of the input speech signal (see Zao et al. [125], or Palo et al. [126]). pH was originally applied as a means to improve speech-related tasks, such as text-independent speaker recognition [124], speech emotion classification [125,126] or speech enhancement [127]. However, it has recently also been applied to sound source localization in noisy environments, as in the work by Dranka and Coelho [128].

- MP-based Gabor features: Wolfe et al. [130] proposed the construction of multiresolution Gabor dictionaries appropriate for audio signal analysis, applied to music and speech signals observed in noise, obtaining a more efficient spectro-temporal representation compared to a full multiresolution decomposition. In this work, Gabor atoms are given by time-frequency shifts of distinct window functions. Ezzat et al. describe in [131] the use of a 2D Gabor filterbank and illustrate its response to different speech phenomena such as harmonicity, formants, vertical onsets/offsets, noise, and overlapping simultaneous speakers. Meyer and Kollmeier propose in [132] the use of spectro-temporal Gabor features to enhance automatic speech recognition performance in adverse conditions, and obtain better results when Hanning-shaped Gabor filters are used instead of the more classical Gaussian approaches. Chu et al. [14] proposed using matching pursuit (MP) and a dictionary of Gabor functions to represent the time dynamics of environmental sounds, which are typically noise-like with a broad flat spectrum, but may include strong temporal domain signatures. Coupled with Mel Frequency Cepstral Coefficients (see Section 5.6 for more details), the MP-based Gabor features improved environmental sound recognition. In [133], Wang et al. proposed a nonuniform scale-frequency map based on Gabor atoms selected via MP, onto which Principal Component Analysis and Linear Discriminant Analysis are subsequently applied to generate the audio feature. The proposed feature was employed for environmental sound classification in home automation.

- Spectral decomposition: in [134], Zhang et al. proposed an audio feature extraction scheme, applied to audio effect classification, based on spectral decomposition by matching pursuit in the frequency domain. Based on psychoacoustic studies, a set of spectral sinusoid-Gaussian basis vectors is constructed to extract pitch, timbre and residual inharmonic components from the spectrum, and the audio feature consists of the scales of the basis vectors after dimension reduction. Also, in [135], Umapathy et al. applied an Adaptive Time Frequency Transform (ATFT for short) algorithm to music genre classification; it works like a wavelet decomposition but uses Gaussian-based kernels with different frequencies, translations and scales. The scale parameter, which characterizes the signal envelope, captures information about rhythmic structures, and it has been used for music genre identification (see Fu [13]).

- Sparse coding tensor representation: this feature is an evolution of the Gabor atom MP-based audio feature extraction of Chu et al. [14]. The method proposed by Zhang and He [136] tries to preserve the distinctiveness of the atoms selected by the MP algorithm by using a frequency-time-scale tensor derived from the sparse coding of the audio signal. The three tensor dimensions represent the frequency, time center and scale of the transient time-frequency components. This feature was coupled with MFCC and applied to sound effects classification.
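Two of the techniques in this subsection are sketched below under explicit simplifications. First, an orthonormal Haar decomposition (chosen here instead of the Daubechies filters used for DWCH because it needs no filter tables) is followed by the mean, variance and skewness of each subband's coefficients, a simplified stand-in for the histogram moments of DWCH. Second, a plain matching pursuit over a small, hypothetical dictionary of Gaussian-windowed cosine (Gabor) atoms, parameterized by time center, frequency in cycles per sample and scale, greedily picks the atom most correlated with the current residual. Names and dictionary parameters are illustrative.

```python
import numpy as np


def haar_dwt(x, levels):
    """Orthonormal Haar DWT: returns [detail_1, ..., detail_levels, approximation]."""
    approx = np.asarray(x, dtype=float)
    subbands = []
    for _ in range(levels):
        if len(approx) % 2:
            approx = np.append(approx, 0.0)  # zero-pad odd lengths
        even, odd = approx[0::2], approx[1::2]
        subbands.append((even - odd) / np.sqrt(2.0))  # detail (high-pass) coefficients
        approx = (even + odd) / np.sqrt(2.0)  # approximation (low-pass) coefficients
    subbands.append(approx)
    return subbands


def subband_moments(subbands):
    """Mean, variance and skewness of the coefficients of each subband, concatenated."""
    feats = []
    for c in subbands:
        mu, sd = c.mean(), c.std()
        skew = np.mean((c - mu) ** 3) / sd ** 3 if sd > 0 else 0.0
        feats.extend([mu, sd ** 2, skew])
    return np.array(feats)


def gabor_dictionary(n, centers, freqs, scales):
    """Unit-norm Gaussian-windowed cosines; freqs are in cycles per sample."""
    t = np.arange(n)
    atoms, params = [], []
    for u in centers:
        for f in freqs:
            for s in scales:
                g = np.exp(-np.pi * ((t - u) / s) ** 2) * np.cos(2 * np.pi * f * t)
                atoms.append(g / np.linalg.norm(g))
                params.append((u, f, s))
    return np.array(atoms), params


def matching_pursuit(x, atoms, n_atoms):
    """Greedy MP: returns [(atom_index, coefficient), ...] and the final residual."""
    residual = np.asarray(x, dtype=float).copy()
    picks = []
    for _ in range(n_atoms):
        corr = atoms @ residual  # inner products with every (unit-norm) atom
        k = int(np.argmax(np.abs(corr)))
        picks.append((k, corr[k]))
        residual = residual - corr[k] * atoms[k]
    return picks, residual
```

The selected atom parameters (time center, frequency, scale) are the raw material from which MP-based features such as those of Chu et al. are built.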

4.4. Image Domain Physical Features

- Spectrogram image features: or SIF for short, comprise a set of techniques that apply methods from the image processing field to time-frequency representations (based on Fourier, cepstral, or other frequency mapping techniques) of the sound to be analyzed (Chu et al. [14], Dennis et al. [138]). Spectrogram image features such as the subband power distribution (SPD), a two-dimensional representation of the distribution of normalized spectral power over time against frequency, have been shown to be useful for sound event recognition (Dennis [4]). The advantage of the SPD over the spectrogram is that the sparse, high-power elements of the sound event are transformed to a localized region of the SPD, whereas in the spectrogram they may be scattered over time and frequency. Dennis [4] also introduced Local Spectrogram features (LS), which are able to detect an arbitrary combination of overlapping sounds, including two or more different sounds or the same sound overlapping itself. LS features are used to detect keypoints in the spectrogram and then characterize the sound using the Generalized Hough Transform (GHT), a kind of universal transform that can be used to find arbitrarily complex shapes in grey-level images and that can model the geometrical distribution of speech information over a wider temporal context (Dennis et al. [139]).
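As a concrete illustration of the SPD idea, the sketch below builds, for every frequency subband, a histogram of how often each normalized power level occurs over time, giving a frequency-by-power image. It assumes a log-power spectrogram normalized globally to [0, 1] and 50 power bins; the exact normalization and binning used by Dennis [4] may differ, and the function name is hypothetical.

```python
import numpy as np

def subband_power_distribution(spectrogram: np.ndarray, n_bins: int = 50) -> np.ndarray:
    """spectrogram: (n_freq, n_frames) non-negative power values.
    Returns an (n_freq, n_bins) image: per-subband distribution of normalized power."""
    log_s = np.log(spectrogram + 1e-12)
    # normalize the whole spectrogram to [0, 1] so all subbands share one power axis
    norm = (log_s - log_s.min()) / (log_s.max() - log_s.min() + 1e-12)
    edges = np.linspace(0.0, 1.0, n_bins + 1)
    spd = np.empty((spectrogram.shape[0], n_bins))
    for f, row in enumerate(norm):
        counts, _ = np.histogram(row, bins=edges)
        spd[f] = counts / row.size                # fraction of frames in each power bin
    return spd
```

Because each row counts frames rather than locating them, a short high-power event contributes to the same high-power region of its subbands wherever it occurs in time, which is the localization property described above.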

4.5. Cepstral Domain Physical Features

- Complex cepstrum: it is defined as the inverse Fourier transform of the logarithm (with unwrapped phase) of the Fourier transform of the signal (see Oppenheim and Schafer [140]), and has been used for pitch determination of speech signals (Noll [141]) as well as for the identification of musical instruments (see Brown [142]).

- Linear Prediction Cepstrum Coefficients: or LPCC for short. This feature is defined as the inverse Fourier transform of the logarithmic magnitude of the linear prediction spectral envelope (Atal [143]), and provides a more robust and compact representation that is especially useful for automatic speech recognition and speaker identification (Adami and Couto Barone [144]), but also for singer identification (Shen et al. [145]), music classification (Xu et al. [146], Kim and Whitman [147]) and environmental sound recognition (see Peltonen et al. [18], or Chu et al. [14]).
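The two cepstral definitions above can be sketched in NumPy as follows. The complex cepstrum uses the textbook recipe (log magnitude plus unwrapped phase), removing the integer linear-phase component before the inverse FFT, as is commonly done. The LPCC sketch assumes autocorrelation-method LPC (Levinson-Durbin) on a Hamming-windowed frame and evaluates the log LP envelope on an FFT grid, following the definition given above. Order 12, 13 coefficients, the FFT sizes and all function names are illustrative assumptions, not values from the cited works.

```python
import numpy as np

def complex_cepstrum(x: np.ndarray, n_fft: int) -> np.ndarray:
    """Inverse FFT of log|X| + j * unwrapped phase, with the linear-phase term removed."""
    X = np.fft.fft(x, n_fft)
    phase = np.unwrap(np.angle(X))
    half = n_fft // 2
    r = np.round(phase[half] / np.pi)                 # integer linear-phase (delay) component
    phase = phase - np.pi * r * np.arange(n_fft) / half
    return np.real(np.fft.ifft(np.log(np.abs(X)) + 1j * phase))

def lpc(frame: np.ndarray, order: int):
    """Autocorrelation-method LPC via Levinson-Durbin on a Hamming-windowed frame.
    Returns a[1..order] for A(z) = 1 - sum_k a_k z^-k and the prediction-error power."""
    x = frame * np.hamming(len(frame))
    r = np.correlate(x, x, mode="full")[len(x) - 1 : len(x) + order]   # lags 0..order
    a = np.zeros(order)
    err = r[0]
    for i in range(order):
        k = (r[i + 1] - np.dot(a[:i], r[i:0:-1])) / err   # reflection coefficient
        prev = a[:i].copy()
        a[i] = k
        a[:i] = prev - k * prev[::-1]
        err *= 1.0 - k * k
    return a, err

def lp_envelope_cepstrum(a: np.ndarray, err: float, n_ceps: int, n_fft: int = 512) -> np.ndarray:
    """Inverse FFT of the log magnitude of the LP envelope sqrt(err) / |A(e^jw)|."""
    A = np.fft.rfft(np.concatenate(([1.0], -a)), n_fft)
    log_env = 0.5 * np.log(err) - np.log(np.abs(A))
    return np.fft.irfft(log_env, n_fft)[:n_ceps]

def lpcc(frame: np.ndarray, order: int = 12, n_ceps: int = 13) -> np.ndarray:
    a, err = lpc(frame, order)
    return lp_envelope_cepstrum(a, err, n_ceps)
```

Taking the inverse FFT of the log magnitude yields the real cepstrum of the LP model; for a minimum-phase all-pole model, its coefficients for n >= 1 are half of those produced by the well-known LPC-to-cepstrum recursion, so implementations based on that recursion differ by this factor.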

4.6. Other Domains

- Eigenspace: audio features expressed in the eigenspace are usually obtained from sound segments several seconds long, which are postprocessed by dimensionality reduction algorithms to obtain a compact representation of the main signal information. This dimensionality reduction is normally performed by means of Principal Component Analysis (PCA) (or, alternatively, via Singular Value Decomposition or SVD), which is equivalent to projecting the original data onto a subspace defined by its eigenvectors (the eigenspace), or by means of Independent Component Analysis (ICA). Some of the most relevant eigendomain physical features found in the literature are: i) the MPEG-7 audio spectrum basis/projection feature, which combines two descriptors (audio spectrum basis, or ASB, and audio spectrum projection, or ASP) conceived for audio retrieval and classification [45,76]. The ASB feature is a compact representation of the signal spectrogram obtained through SVD, while the ASP is the projection of the spectrogram onto a given audio spectrum basis. ASB and ASP have been used for environmental sound recognition, as in Muhammad and Alghathbar [47]; and ii) the Distortion discriminant analysis (DDA) feature, a compact, time-invariant and noise-robust representation of an audio signal based on applying hierarchical PCA to a time-frequency representation derived from a modulated complex lapped transform (MCLT) (see Burges et al. [148], or Malvar [149]). As a result, this feature provides an audio representation that is robust against many signal distortions (time shifts, compression artifacts, and frequency and noise distortions).

- Phase space: this type of features emerged as a response to the linear approach that has usually been employed to model speech. Linear models do not take into account the nonlinear effects occurring during speech production, and thus constitute a simplification of reality; approaches based on nonlinear dynamics try to bridge this gap. A first example is the set of nonlinear features for speech recognition presented by Lindgren et al. [150], which are based on the so-called reconstructed phase space generated from time-lagged versions of the original time series. The idea is that reconstructed phase spaces have been proven (under suitable embedding conditions) to recover the full dynamics of the generating system, which implies that features extracted from them can potentially contain more and/or different information than a spectral representation. In the works by Kokkinos and Maragos [151] and by Pitsikalis and Maragos [152], a similar idea is employed to compute useful features, such as Lyapunov exponents, from short time series of speech sounds.

- Acoustic environment features: this type of features tries to capture information about the acoustic environment in which the sound is measured. For example, Hu et al. [153] propose the use of the Direct-to-Reverberant Ratio (DRR), i.e., the ratio between the energy of the direct path and that of the reverberant components of the room impulse response (RIR), to perform speaker diarization. In this approach, the RIR is not measured directly; instead, a Non-intrusive Room Acoustic parameter estimator (NIRA) is used (see Parada et al. [154]). This estimator is a data-driven approach that uses 106 features derived from the pitch period importance weighted signal-to-noise ratio, zero-crossing rate, Hilbert transformation, power spectrum of long-term deviation, MFCCs, line spectrum frequencies and modulation representation.
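Minimal NumPy sketches of the three "other domain" physical features above follow. The first loosely follows the ASB/ASP idea (SVD of a per-frame-normalized dB spectrogram) but omits MPEG-7 specifics such as the log-frequency band layout. The second builds a reconstructed phase space by time-delay embedding, leaving the embedding dimension and lag as free parameters. The third computes the DRR directly from a measured RIR with an assumed direct-path window of ±2.5 ms (values in the literature vary); note that, as stated above, Hu et al. [153] estimate room parameters non-intrusively rather than from a measured RIR. All function names are hypothetical.

```python
import numpy as np

def spectrum_basis_projection(power_spec: np.ndarray, k: int):
    """ASB/ASP-like decomposition. power_spec: (n_frames, n_bins) power spectrogram."""
    db = 10.0 * np.log10(power_spec + 1e-12)
    x = db / (np.linalg.norm(db, axis=1, keepdims=True) + 1e-12)  # per-frame L2 normalization
    _, _, vt = np.linalg.svd(x, full_matrices=False)               # x = U diag(s) Vt
    basis = vt[:k].T                                               # (n_bins, k) spectral basis
    return basis, x @ basis                                        # projection: (n_frames, k)

def delay_embedding(x: np.ndarray, dim: int, tau: int) -> np.ndarray:
    """Reconstructed phase space: row n is [x[n], x[n+tau], ..., x[n+(dim-1)*tau]]."""
    n_points = len(x) - (dim - 1) * tau
    if n_points <= 0:
        raise ValueError("signal too short for this (dim, tau)")
    return np.stack([x[i * tau : i * tau + n_points] for i in range(dim)], axis=1)

def direct_to_reverberant_ratio(rir: np.ndarray, fs: float, direct_ms: float = 2.5) -> float:
    """DRR in dB from a measured RIR; the direct path is +/- direct_ms around the main peak."""
    peak = int(np.argmax(np.abs(rir)))
    half = int(round(direct_ms * 1e-3 * fs))
    start, stop = max(peak - half, 0), peak + half + 1
    energy = np.asarray(rir, dtype=float) ** 2
    return float(10.0 * np.log10(energy[start:stop].sum() / energy[stop:].sum()))
```

Downstream phase-space features (for instance, statistics of the embedded point cloud or Lyapunov exponent estimates) would be computed from the array returned by `delay_embedding`.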

5. Perceptual Audio Features Extraction Techniques

5.1. Time Domain Perceptual Features

5.1.1. Zero-Crossing Rate-Based Perceptual Features

- Zero-crossing peak amplitudes (ZCPA): were designed for automatic speech recognition (ASR) in noisy environments by Kim et al. [155], showing better results than linear prediction coefficients. This feature is computed from the time-domain zero crossings of the signal after it has been decomposed into several psychoacoustically scaled subbands. The final representation of the feature is a histogram of the inverse zero-crossing interval lengths over all the subband signals, in which each contribution is weighted by the peak value of the corresponding zero-crossing interval. In [156], Wang and Zhao applied ZCPA to noise-robust speech recognition.

- Pitch synchronous zero crossing peak amplitudes (PS-ZCPA): were proposed by Ghulam et al. [157] to improve the robustness of ASR in noisy conditions. The method is inspired by the auditory nervous system, as it uses a mel-frequency spaced filterbank as a front-end stage. PS-ZCPA considers only the inverse zero-crossing lengths whose peaks exceed a threshold obtained as a fraction of the highest peak within a signal pitch period. PS-ZCPA are only computed in voiced speech segments, and are combined with the ZCPA features described above, obtained from unvoiced speech segments. In [158], the same authors presented a new version of the PS-ZCPA feature, using a pitch-synchronous peak-amplitude approach that ignores zero crossings.
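The core of ZCPA for a single subband can be sketched as follows: upward zero crossings delimit intervals whose inverse lengths give frequency estimates, and each estimate is added to a frequency histogram with a weight derived from the interval's peak amplitude. The log(1 + peak) compression, the histogram edges, and the omission of the auditory filterbank (whose per-band histograms would be summed) are simplifying assumptions; the function name is hypothetical.

```python
import numpy as np

def zcpa_histogram(band: np.ndarray, fs: float, edges_hz: np.ndarray) -> np.ndarray:
    """ZCPA-style frequency histogram for one subband signal."""
    # indices a where the signal goes from negative (band[a]) to non-negative (band[a+1])
    up = np.where((band[:-1] < 0) & (band[1:] >= 0))[0]
    hist = np.zeros(len(edges_hz) - 1)
    for a, b in zip(up[:-1], up[1:]):
        freq = fs / (b - a)                          # inverse of the zero-crossing interval
        peak = np.max(band[a + 1 : b + 1])           # peak amplitude within the interval
        idx = np.searchsorted(edges_hz, freq, side="right") - 1
        if 0 <= idx < len(hist):
            hist[idx] += np.log1p(peak)              # compressed peak weighting (assumed)
    return hist
```

For a 1 kHz tone sampled at 16 kHz, every interval spans 16 samples, so all of the weight lands in the histogram bin containing 1000 Hz.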

5.1.2. Perceptual Autocorrelation-Based Features

- Autocorrelation function features: or ACF for short. This feature, introduced by Ando in [159], has subsequently been applied by the same author to environmental sound analysis [29] and recently adapted to speech representation [160]. To compute ACF features, the autocorrelation function is first computed from the audio signal and then parameterized by means of a set of perceptually based parameters related to acoustic phenomena (signal loudness, perceived pitch, strength of perceived pitch and signal periodicity).

- Narrow-band autocorrelation function features: also known as NB-ACF, this feature was introduced by Valero and Alías [15], who reused the ACF concept in the context of a filter bank analysis. Specifically, the features are obtained from the autocorrelation function of audio signals computed after applying a Mel filter bank (based on the Mel scale, a perceptual scale of pitches judged by listeners to be equal in distance from one another). These features have been shown to provide good performance for indoor and outdoor environmental sound classification. In [161], the same authors improved this technique by replacing the Mel filter bank employed to obtain the narrow-band signals with a Gammatone filter bank with Equivalent Rectangular Bandwidth bands. In addition, the Autocorrelation Zero Crossing Rate (AZCR) was added, following previous works such as that by Ghaemmaghami et al. [43].
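A simplified sketch of ACF-based parameters in the spirit of Ando's factors follows: the energy at the origin Φ(0) (related to loudness), and the delay τ1 and amplitude φ1 of the main peak of the normalized ACF (related to perceived pitch and pitch strength). Restricting the peak search to an assumed pitch range of 50 to 500 Hz is a simplification, Ando's full parameter set (e.g., the effective duration) is not reproduced, and the function name is hypothetical. Applying the same function to each output of a Mel or Gammatone filter bank would give NB-ACF-style features.

```python
import numpy as np

def acf_factors(x: np.ndarray, fs: float, fmin: float = 50.0, fmax: float = 500.0):
    """Return (Phi(0), tau_1 in seconds, phi_1) from the normalized autocorrelation."""
    n = len(x)
    acf = np.correlate(x, x, mode="full")[n - 1 :] / n   # biased ACF for lags 0..n-1
    phi0 = acf[0]                                         # energy at lag 0 (loudness-related)
    nacf = acf / phi0                                     # normalized ACF, nacf[0] == 1
    lo, hi = int(fs / fmax), int(fs / fmin)               # assumed pitch-lag search range
    lag = lo + int(np.argmax(nacf[lo : hi + 1]))
    return phi0, lag / fs, nacf[lag]                      # tau_1 ~ pitch period, phi_1 ~ pitch strength
```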

5.1.3. Rhythm Pattern

5.2. Frequency Domain Perceptual Features

- Modulation-based

- Brightness-related

- Tonality-related

- Loudness-related

- Roughness-related

5.2.1. Modulation-Based Perceptual Frequency Features

- 4 Hz modulation energy: is defined with the aim of capturing the most relevant hearing sensation of fluctuation in amplitude- and frequency-modulated sounds (see Fastl [164]). Fastl proposes a model of fluctuation strength based on a psychoacoustical magnitude, namely the temporal masking pattern. This feature can be computed by filtering each subband of a spectral analysis of the signal with a 4 Hz band-pass filter along time, and it has been used for music/speech discrimination (see Scheirer and Slaney [61]).

- Computer model of amplitude-modulation sensitivity of single units in the inferior colliculus: Hewitt and Meddis [165] introduce a computer model of a neural circuit that replicates the amplitude-modulation sensitivity of cells in the central nucleus of the inferior colliculus (ICC), allowing signal periodicity to be encoded as a rate-based code.

- Joint acoustic and modulation frequency features: these are time-invariant representations that model the non-stationary behavior of an audio signal (Sukittanon and Atlas [166]). Modulation frequencies for each frequency band are extracted by demodulating the Bark-scaled spectrogram using the Wavelet transform (see Section 4.3). These features have been used for audio fingerprinting by Sukittanon and Atlas [166], and they are similar to the rhythm pattern feature (related to rhythm in music).

- Auditory filter bank temporal envelopes: or AFTE for short, this is another attempt to capture modulation information related to sound [167]. Modulation information is here obtained through bandpass filtering the output bands of a logarithmic-scale filterbank of 4th-order Gammatone bandpass filters. These features have been used for audio classification and musical genre classification by McKinney and Breebaart [167], and by Fu et al. [13].

- Modulation spectrogram: also referred to as MS, this feature displays and encodes the signal in terms of the distribution of slow modulations across time and frequency, as defined by Greenberg and Kingsbury [168]. In particular, it was defined to represent modulation frequencies in the speech signal between 0 and 8 Hz, with a peak sensitivity at 4 Hz, corresponding closely to the long-term modulation spectrum of speech. The MS is computed in critical-band-wide channels, incorporates a simple automatic gain control, and emphasizes spectro-temporal peaks. MS has been used for robust speech recognition (see Kingsbury et al. [169], or Baby et al. [170]), music classification (Lee et al. [77]), and content-based audio identification incorporating a Wavelet transform (Sukittanon et al. [171]). Recently, MS features have been decomposed through a tensor factorization model that represents each component as a modulation spectrum activated across different subbands at each time frame; this model has been applied to monaural speech separation by Barker and Virtanen [172] and to the classification of pathological infant cries (Chittora and Patil [173]).

- Long-term modulation analysis of short-term timbre features: Ren et al. [86] propose the use of a two-dimensional representation of acoustic frequency and modulation frequency to extract joint acoustic-frequency and modulation-frequency features, using an approach similar to that of Lee et al. [77]. Long-term joint frequency features, such as acoustic-modulation spectral contrast/valley (AMSC/AMSV), acoustic-modulation spectral flatness measure (AMSFM), and acoustic-modulation spectral crest measure (AMSCM), are then computed from the spectra of each joint frequency subband. By combining the proposed features with the modulation spectral analysis of MFCC and statistical descriptors of short-term timbre features, this new feature set outperforms previous approaches with statistical significance in automatic music mood classification.
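The modulation-based features above share one step: analyzing how subband energy envelopes fluctuate over time. The sketch below applies it to the 4 Hz modulation energy, operating on subband energy envelopes that are assumed to be precomputed (for instance, from a filterbank analysis at a 100 Hz frame rate). For brevity, selecting the 3 to 5 Hz region of each envelope's modulation spectrum stands in for the 4 Hz band-pass filter, and the result is normalized by the total modulation energy; both choices are assumptions rather than the exact procedure of Scheirer and Slaney [61], and the function name is hypothetical.

```python
import numpy as np

def modulation_energy_4hz(band_env: np.ndarray, frame_rate: float,
                          lo: float = 3.0, hi: float = 5.0) -> float:
    """band_env: (n_bands, n_frames) subband energy envelopes sampled at frame_rate Hz.
    Returns the fraction of envelope modulation energy between lo and hi Hz."""
    env = band_env - band_env.mean(axis=1, keepdims=True)      # remove the DC component
    spec = np.abs(np.fft.rfft(env, axis=1)) ** 2                # modulation power spectrum per band
    freqs = np.fft.rfftfreq(env.shape[1], d=1.0 / frame_rate)   # modulation frequencies in Hz
    in_band = (freqs >= lo) & (freqs <= hi)
    return float(spec[:, in_band].sum() / (spec.sum() + 1e-12))
```

Keeping the whole `spec` array (bands by modulation frequencies) instead of reducing it to one number gives a basic joint acoustic/modulation frequency representation, although the cited works use Wavelet-based demodulation and Bark-scaled bands rather than this FFT shortcut.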

5.2.2. Brightness-Related Perceptual Frequency Features

5.2.3. Tonality-Related Perceptual Frequency Features

5.2.4. Loudness-Related Perceptual Frequency Features

- Loudness: in the original work by Olson [178], the loudness of a complex sound (e.g., speech, music, noise) is measured as the sum of the loudness indices (obtained from equal-loudness contours) of the subbands into which the audio is previously divided. Breebaart and McKinney [179] compute loudness by first computing the power spectrum of the input frame and normalizing it by subtracting (in dB) an approximation of the absolute threshold of hearing; the result is then filtered by a bank of gammatone filters and summed across frequency to yield the power in each auditory filter, which corresponds to the internal excitation as a function of frequency. These excitations are then compressed, scaled and summed across filters to arrive at the loudness estimate.

- Specific loudness sensation: this is a measure of loudness (in sone units, a perceptual scale for loudness measurement; see Peeters et al. [34]) in a specific frequency range. It incorporates both Bark-scale frequency analysis and the spectral masking effect that emulates the human auditory system (Pampalk et al. [162]). This feature has been applied to audio retrieval (Morchen et al. [42]).

- Integral loudness: this feature closely approximates the human sensation of loudness by spectral integration of loudness over several frequency groups (Pfeiffer [180]). It has been used to discriminate between foreground and background sounds (see Lienhart et al. [181], Pfeiffer et al. [182]).
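The sone scale used by the specific loudness sensation can be illustrated with the phon-to-sone mapping sketched below. It assumes that Bark-band levels have already been converted to phon, omitting the equal-loudness and spectral masking steps of Pampalk et al. [162]. The piecewise mapping (2^((L - 40)/10) at or above 40 phon, (L/40)^2.642 below) is assumed here to match the form used in publicly available implementations of Pampalk's approach, and summing specific loudness across bands is only a rough stand-in for total-loudness models such as those cited above. Function names are hypothetical.

```python
import numpy as np

def phon_to_sone(phon) -> np.ndarray:
    """Loudness level (phon) to loudness (sone): doubles every 10 phon above 40 phon,
    power law below 40 phon (negative levels are clipped to 0)."""
    phon = np.asarray(phon, dtype=float)
    above = 2.0 ** ((phon - 40.0) / 10.0)
    below = (np.clip(phon, 0.0, None) / 40.0) ** 2.642
    return np.where(phon >= 40.0, above, below)

def total_loudness(band_phon: np.ndarray) -> np.ndarray:
    """band_phon: (n_frames, n_bands) -> specific loudness summed over bands, in sone."""
    return phon_to_sone(band_phon).sum(axis=-1)
```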

5.2.5. Roughness-Related Perceptual Frequency Features

5.3. Wavelet-Based Perceptual Features

- Kernel Power Flow Orientation Coefficients (KPFOC): in the works by Gerazov and Ivanovski [184,185], a bank of 2D kernels is used to estimate the orientation of the power flow at every point of the auditory spectrogram, calculated using a Gammatone filter bank (Valero and Alías [161]), yielding an ASR front-end with increased robustness to both noise and room reverberation with respect to previous approaches, especially for small-vocabulary tasks.

- Mel Frequency Discrete Wavelet Coefficients: or MFDWC for short, account for the perceptual response of the ear by applying the discrete WT to the Mel-scaled log filter bank energies obtained from the input signal (see Gowdy and Tufekci [186]). MFDWC, which were initially defined to improve speech recognition (Tavenei et al. [187]), have subsequently been applied to other machine hearing related applications such as speaker verification/identification (see Tufekci and Gurbuz [188], Nghia et al. [189]) and audio-based surveillance systems (Rabaoui et al. [24]).

- Gammatone wavelet features: Gammatone Wavelet (GTW) features are a subtype of Wavelet-domain audio features that account for perceptual modelling (see Valero and Alías [190], Venkitaraman et al. [191]). These features are obtained by replacing typical mother functions, such as Morlet (Burrus et al. [192]), Coiflet (Bradie [193]) or Daubechies [194], with Gammatone functions, which model the auditory system. As shown by Valero and Alías [190], GTW features yield higher classification accuracy than Daubechies Wavelet features, in both noiseless and noisy conditions, when classifying surveillance-related sounds.

- Perceptual wavelet packets: the Wavelet packet transform is a variant of the discrete WT in which the filtering process is iterated on both the low-frequency and the high-frequency components (see Jiang et al. [195]); it has been optimized perceptually by representing the input audio in the critical bands described by Greenwood [196] and Ren et al. [197]. The Wavelet packet transform has been used in different applications, such as audio coding (Dobson et al. [198]) or audio watermarking (Artameeyanant [199]). Perceptual Wavelet Packets (PWP) have been applied to bio-acoustic signal enhancement (Ren et al. [197]), speech recognition (Rajeswari et al. [200]), and more recently to the recognition of baby crying sound events (Ntalampiras [201]).

- Gabor functions: Kleinschmidt [202] also models the receptive field of cortical neurons as a two-dimensional complex Gabor function. More recently, Heckman et al. [203] compared the use of Gabor functions with features learned via Independent Component Analysis for the computation of local features in a two-layer hierarchical bio-inspired approach. Wu et al. [204] employ two-dimensional Gabor functions with different scales and directions to analyze localized patches of the power spectrogram, improving speech recognition performance in noisy environments compared with previous speech feature extraction methods. In a similar way, Schröder et al. [205] propose an optimization of spectro-temporal Gabor filterbank features for the audio event detection task. In [206,207], Lindeberg and Friberg describe a new way of deriving Gabor filters as a particular case (using non-causal Gaussian windows) of frequency-selective temporal receptive fields, representing the first layer of their scale-space theory for auditory signals.
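To make the MFDWC idea concrete, the sketch below applies a discrete wavelet transform, instead of the DCT, to the log Mel filter bank energies of one frame. An orthonormal Haar wavelet and three decomposition levels are chosen only for brevity (the cited works may use other wavelets and configurations), and the input length is assumed to be a multiple of 2^levels, e.g., 16 or 32 Mel bands. Function names are hypothetical.

```python
import numpy as np

def haar_dwt(x: np.ndarray, levels: int) -> np.ndarray:
    """Orthonormal Haar DWT. Output order: [approx_L, detail_L, ..., detail_1]."""
    approx = np.asarray(x, dtype=float)
    if len(approx) % (2 ** levels):
        raise ValueError("length must be divisible by 2**levels")
    details = []
    for _ in range(levels):
        even, odd = approx[0::2], approx[1::2]
        details.append((even - odd) / np.sqrt(2.0))   # high-pass (detail) coefficients
        approx = (even + odd) / np.sqrt(2.0)          # low-pass (approximation) coefficients
    return np.concatenate([approx] + details[::-1])

def mfdwc(log_mel_energies: np.ndarray, levels: int = 3) -> np.ndarray:
    """MFDWC-style coefficients: a DWT of one frame's log Mel filter bank energies."""
    return haar_dwt(log_mel_energies, levels)
```

Unlike DCT coefficients, each detail coefficient depends only on a local group of Mel bands, which is the localization property that motivates replacing the DCT with a wavelet transform.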

5.4. Multiscale Spectro-Temporal-Based Perceptual Features

- Multiscale spectro-temporal modulations: as defined by Mesgarani et al. [208], this representation consists of two basic stages. An early stage models the transformation of the acoustic signal into an internal neural representation referred to as an auditory spectrogram (using a bank of 128 constant-Q bandpass filters with center frequencies equally spaced on a logarithmic frequency axis). Subsequently, a central stage analyzes the spectrogram to estimate the content of its spectral and temporal modulations using a bank of modulation-selective filters (equivalent to a two-dimensional affine wavelet transform of the auditory spectrogram, with a spectro-temporal mother wavelet resembling a two-dimensional spectro-temporal Gabor function) that mimic those described in a model of the mammalian primary auditory cortex. In [208], Mesgarani et al. use multiscale spectro-temporal modulations to discriminate speech from nonspeech consisting of animal vocalizations, music, and environmental sounds. Moreover, these features have been applied to music genre classification (Panagakis et al. [209]) and voice activity detection (Ng et al. [210]).

- Computational models for auditory receptive fields: in [206,207], Lindeberg and Friberg describe a theoretical and methodological framework to define computational models for auditory receptive fields. The proposal is also based on a two-stage process: (i) a first layer of frequency-selective temporal receptive fields, where the input signal is represented as multi-scale spectrograms, which can be specifically configured to simulate the physical resonance system in the cochlea spectrogram; (ii) a second layer of spectro-temporal receptive fields, which consist of kernel-based 2D processing units that capture relevant auditory changes in both the time and frequency dimensions (after a logarithmic representation of both the amplitude and frequency axes, and ignoring phase information), ranging from separable to non-separable spectro-temporal patterns (the latter introducing a specific glissando parameter). The presented model is closely related to biological receptive fields (i.e., those that can be physiologically measured from neurons in the inferior colliculus and the primary auditory cortex, as reported by Qiu et al. in [211]). This work offers an interesting perspective by unifying in a single theory an axiomatic way to derive representations such as Gammatone (see Patterson and Holdsworth [212]) or Gabor filterbanks (Wolfe et al. [130]), depending on the causality of the filters used in the first audio analysis stage (see Section 5.6). A set of new auditory features that respect auditory invariances is also proposed; they are obtained from the output 2D spectrogram after the kernel-based processing, using operators such as spectro-temporal smoothing, onset and offset detection, spectral sharpening, the capture of frequency variations over time, and glissando estimation.

5.5. Image Domain Perceptual Features

- Spectrogram image features: as introduced in Section 4.4, spectrogram image features have also been derived from front-end parameterizations that make use of psychoacoustical models. In [213], Dennis et al. use GHT to construct a codebook from a Gaussian Mixture Model-Hidden Markov Model based ASR, in order to train an artificial neural network that learns a discriminative weighting for optimizing the classification accuracy in a frame-level phoneme recognition application. In this work, MFCC are used as the front-end parameterization. In [214], the same authors compute a robust sparse spike coding of the 40-dimension Mel-filtered spectrogram (detection of high-energy peaks that correlate with a codebook dictionary) to train a neural network for sound event classification. The results show higher reliability when the proposed parameterization is used instead of the conventional raw spectrogram.

- Auditory image model: or AIM for short, this feature extraction technique includes functional and physiological modules to simulate auditory spectral analysis, neural encoding and temporal integration, including new forms of periodicity-sensitive temporal integration that generate stabilized auditory images (Patterson et al. [215,216]). The encoding process is based on a three-stage system. Briefly, the spectral analysis stage converts the sound wave into the model's representation of basilar membrane motion (BMM). The neural encoding stage stabilizes the BMM in level and sharpens features such as vowel formants, to produce a simulation of the neural activity pattern (NAP) produced by the sound in the auditory nerve. The temporal integration stage stabilizes the repeating structure in the NAP and produces a simulation of our perception, referred to as the auditory image.

- Stabilized auditory image: based on the AIM features, the stabilized auditory image (SAI) is defined as a two-dimensional representation of the sound signal (see Walters [137]): the first dimension of an SAI frame is simply the spectral dimension added by a previous filterbank analysis, while the second comes from the strobed temporal integration process by which an SAI is generated. SAI has been applied to speech recognition and audio search [137] and, more recently, a low-resolution overlapped SIF has been introduced together with Deep Neural Networks (DNN) to perform robust sound event classification in noisy conditions (McLoughlin et al. [217]).

- Time-chroma images: this feature is a two-dimensional representation of audio signals that plots the chroma distribution of an audio signal over time, as described by Malekesmaeili and Ward [218]. It employs a modified definition of chroma, in which a chroma is defined as the set of all pitches that are n octaves apart. Coupled with a fingerprinting algorithm that extracts local fingerprints from the time-chroma image, the proposed feature improves accuracy in audio copy detection and song identification.

5.6. Cepstral Domain Perceptual Features

5.6.1. Perceptual Filter Banks-Based Cepstral Features

- Mel Frequency Cepstral Coefficients: also denoted as MFCC, have been widely employed in the speech recognition field but also in the field of audio content classification (see the work by Liang and Fan [58]), because their computation relies, in its first stage, on a perceptually based frequency scale (the Mel scale, inspired by the human auditory system). After obtaining the frame-based Fourier transform, the outputs of a Mel-scale filter bank are log-compressed and finally decorrelated by means of the Discrete Cosine Transform (DCT). Only the first DCT coefficients (usually from 8 to 13) are kept, since they capture the slowly varying part of the log spectrum, i.e., the spectral envelope of the signal (mainly related to timbre). MFCCs have also been used for music classification (see the works by Benetos et al. [10], Bergstra et al. [41], Tzanetakis and Cook [28], or Wang et al. [9]), singer identification (as in Shen et al. [145]), environmental sound classification (see Beritelli and Grasso [222], or Peltonen et al. [18]) and audio-based surveillance systems (Rabaoui et al. [24]); they have been embedded in hearing aids (see Zeng and Liu [223]) and even employed to detect breath sounds as an indicator of respiratory health and disease (Lei et al. [224]). Also, some particular extensions of MFCC have been introduced in the context of speech recognition and speaker verification with the aim of obtaining a more robust spectral representation in the presence of noise (e.g., in the works by Shannon and Paliwal [225], Yuo et al. [27], or Choi [226]).

- Greenwood Function cepstral coefficients: building on the seminal work by Greenwood [196], which stated that many mammals have a logarithmic cochlear-frequency response, Clemins et al. [32] introduced Greenwood Function Cepstral Coefficients (GFCC), extracting the equal loudness curve from species-specific audiogram measurements, as an audio feature for analyzing the environmental sounds produced by the vocalizations of those species. Later, these features were also applied to multichannel speech recognition by Trawicki et al. [227].

- Noise-robust audio features: or NRAF for short, these features incorporate a specific human auditory model based on a three-stage process: a first stage of filtering in the cochlea; the transduction of mechanical displacement into electrical activity (log compression in the hair cell stage); and a reduction stage using decorrelation that mimics the lateral inhibitory network in the cochlear nucleus (see Ravindran et al. [228]).

- Gammatone cepstral coefficients: also known as GTCC. Patterson et al. [229,230] proposed a filterbank based on the Gammatone function that predicts human masking data accurately, while Hohmann [231] proposed an efficient implementation of a Gammatone filterbank (using the 4th-order linear Gammatone filter) for the frequency analysis and resynthesis of audio signals. Valero and Alías derived the Gammatone cepstral coefficients by keeping the MFCC computation scheme but replacing the Mel filter bank with a Gammatone filter bank [232]. Gammatone filters were originally designed to model the human auditory spectral response, given their good approximation in terms of impulse response, magnitude response and filter bandwidth, as described by Patterson and Holdsworth [212]. Gammatone-like features have also been used in audio processing (see the work of Johannesma in [233]), in speech recognition applications (see Shao et al. [234], or Schlüter et al. [235]), in water sound event detection for tele-monitoring applications (Guyot et al. [236]), for road noise source classification (Socoró et al. [237]) and in computational auditory scene analysis (Shao et al. [238]). In [206,207], Lindeberg and Friberg describe an axiomatic way of obtaining Gammatone filters as a particular case of a multi-scale spectrogram when the analysis filters are constrained to be causal. They also define a new family of generalized Gammatone filters that allow for additional degrees of freedom in the trade-off between the temporal dynamics and the spectral selectivity of time-causal spectrograms. This approach is part of a unified theory for constructing computational models for auditory receptive fields (see a brief description in Section 5.4).

- GammaChirp filterbanks: Irino and Patterson proposed in [239] an extension of the Gammatone filter, called the Gammachirp filter, with the aim of obtaining a more accurate model of auditory sensitivity that provides an excellent fit to human masking data. Specifically, this approach is able to represent the natural asymmetry of the auditory filter and its dependence on signal strength. Abdallah and Hajaiej [240] defined GammaChirp Cepstral coefficients (GC-Cept) by replacing the typical Mel filterbank in MFCC with a Gammachirp filterbank of 32 filters applied to speech signals (within the speech frequency band up to 8 kHz). They showed better performance of the Gammachirp filterbank compared with MFCC in a text-independent speaker recognition system for noisy environments.
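MFCC and GTCC share the same pipeline and differ only in the filterbank, which the following sketch makes explicit: a windowed power spectrum is weighted by a filterbank, log-compressed and decorrelated with a DCT. The Mel formula 2595·log10(1 + f/700), the 26 triangular filters, the Hamming window, the 13 retained coefficients, and the closed-form gammatone magnitude response (order 4, b = 1.019, ERB-rate spacing up to an arbitrary 90% of the Nyquist frequency) are common textbook choices assumed here, not parameters taken from the cited works. All function names are hypothetical.

```python
import numpy as np

def hz_to_mel(f):
    return 2595.0 * np.log10(1.0 + np.asarray(f, dtype=float) / 700.0)

def mel_to_hz(m):
    return 700.0 * (10.0 ** (np.asarray(m, dtype=float) / 2595.0) - 1.0)

def mel_filterbank(n_filters: int, n_fft: int, fs: float) -> np.ndarray:
    """Triangular filters equally spaced on the Mel scale, shape (n_filters, n_fft//2 + 1)."""
    mel_pts = np.linspace(hz_to_mel(0.0), hz_to_mel(fs / 2.0), n_filters + 2)
    bins = np.floor((n_fft + 1) * mel_to_hz(mel_pts) / fs).astype(int)
    fb = np.zeros((n_filters, n_fft // 2 + 1))
    for m in range(1, n_filters + 1):
        left, center, right = bins[m - 1], bins[m], bins[m + 1]
        for k in range(left, center):
            fb[m - 1, k] = (k - left) / max(center - left, 1)     # rising slope
        for k in range(center, right):
            fb[m - 1, k] = (right - k) / max(right - center, 1)   # falling slope
    return fb

def erb(f):
    """Equivalent rectangular bandwidth in Hz (Glasberg and Moore)."""
    return 24.7 * (4.37 * np.asarray(f, dtype=float) / 1000.0 + 1.0)

def erb_rate(f):
    """Number of ERBs below frequency f."""
    return 21.4 * np.log10(4.37 * np.asarray(f, dtype=float) / 1000.0 + 1.0)

def erb_space(f_low, f_high, n):
    """n center frequencies equally spaced on the ERB-rate scale."""
    rates = np.linspace(erb_rate(f_low), erb_rate(f_high), n)
    return (10.0 ** (rates / 21.4) - 1.0) * 1000.0 / 4.37

def gammatone_filterbank(n_filters, n_fft, fs, f_low=50.0, order=4, b=1.019):
    """Power responses on the rfft grid from the closed-form gammatone magnitude
    |H(f)| = (1 + ((f - fc) / (b * ERB(fc)))**2) ** (-order / 2) (negative-frequency image ignored)."""
    freqs = np.fft.rfftfreq(n_fft, d=1.0 / fs)
    fcs = erb_space(f_low, 0.9 * fs / 2.0, n_filters)
    x = (freqs[None, :] - fcs[:, None]) / (b * erb(fcs)[:, None])
    return ((1.0 + x ** 2) ** (-order / 2.0)) ** 2

def dct_ii(x: np.ndarray, n_out: int) -> np.ndarray:
    """Orthonormal DCT-II of the last axis, keeping the first n_out coefficients."""
    n = x.shape[-1]
    basis = np.cos(np.pi * np.arange(n_out)[:, None] * (2 * np.arange(n) + 1) / (2 * n))
    scale = np.full(n_out, np.sqrt(2.0 / n))
    scale[0] = np.sqrt(1.0 / n)
    return (x @ basis.T) * scale

def cepstral_coefficients(frame, fb, n_fft=512, n_ceps=13):
    """Windowed power spectrum -> filterbank energies -> log -> DCT."""
    power = np.abs(np.fft.rfft(frame * np.hamming(len(frame)), n_fft)) ** 2
    return dct_ii(np.log(fb @ power + 1e-10), n_ceps)
```

In this sketch, passing `mel_filterbank(26, 512, fs)` to `cepstral_coefficients` gives MFCC-like coefficients, and passing `gammatone_filterbank(32, 512, fs)` instead gives GTCC-like ones; everything else in the pipeline is unchanged.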

5.6.2. Autoregression-Based Cepstral Features

- Perceptual Linear Prediction: or PLP for short, this feature provides a more accurate representation of the spectral contour by means of a linear prediction-based approach that also incorporates specific properties inspired by human hearing, such as the use of a Bark frequency scale and asymmetrical critical-band masking curves, as described by Hermansky [241]. These features were later revised and improved by Hönig et al. [242] for speech recognition purposes, and recently applied to the recognition of baby crying sound events by Ntalampiras [201].

- Relative Spectral-Perceptual Linear Prediction: also referred to as RASTA-PLP, this is a noise-robust version of the PLP feature introduced by Hermansky and Morgan [243]. The objective is to incorporate the human-like ability to disregard noise when listening in speech communication, by filtering each frequency channel with a bandpass filter that mitigates slow time variations due to communication channel disturbances (e.g., steady background noise, convolutional noise) and fast variations due to analysis artifacts. The RASTA-PLP process also uses static nonlinear compression and expansion blocks before and after the bandpass processing. There is a close relation between RASTA processing and delta cepstral coefficients (i.e., first derivatives of MFCC), which are broadly used in the contexts of speech recognition and statistical speech synthesis. These features have also been applied to audio-based surveillance systems by Rabaoui et al. [24].

- Generalized Perceptual Linear Prediction: also denoted as gPLP, is an adaptation of PLP, originally developed for human speech processing, to the vocal production mechanisms of other mammals, obtained by replacing the human frequency warping and equal loudness curve with those of the analyzed species (see the work by Clemins et al. [32,33]).
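The RASTA band-pass step described for RASTA-PLP can be sketched as an IIR filter applied along time to each log critical-band trajectory. The coefficients follow the filter commonly quoted for Hermansky and Morgan [243]: numerator 0.1·(2 + z^-1 - z^-3 - 2z^-4) and a pole at 0.98 (some implementations use 0.94). Dropping the z^4 advance of the original non-causal form adds a 4-frame delay, and the surrounding PLP stages (critical-band analysis, compression and expansion, all-pole modeling) are omitted. The function name is hypothetical.

```python
import numpy as np

def rasta_filter(log_bands: np.ndarray, pole: float = 0.98) -> np.ndarray:
    """Filter each log-band trajectory along time (axis 0) with
    y[n] = pole * y[n-1] + 0.1 * (2 x[n] + x[n-1] - x[n-3] - 2 x[n-4])."""
    x = np.asarray(log_bands, dtype=float)
    b = 0.1 * np.array([2.0, 1.0, 0.0, -1.0, -2.0])
    y = np.zeros_like(x)
    for n in range(x.shape[0]):
        # FIR part: weighted sum of the current frame and up to four past frames
        acc = sum(b[k] * x[n - k] for k in range(len(b)) if n - k >= 0)
        # recursive part: leaky integration of the previous output
        y[n] = acc + (pole * y[n - 1] if n > 0 else 0.0)
    return y
```

Because the numerator coefficients sum to zero, the filter has zero gain at DC, so a constant offset in the log domain, such as a fixed convolutional channel, decays away at the output.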

5.7. Other Domains

- Eigenspace-based features: in this category, we find Rate-scale-frequency (RSF) features, which describe the modulation components present in certain frequency bands of the auditory spectrum, and which are based on the same human auditory model as the noise-robust audio features (NRAF), as described in the work by Ravindran et al. [228] (see Section 5.6.1). RSF provide a compact and decorrelated representation (derived through a Principal Component Analysis stage) of the two-dimensional Wavelet transform applied to the audio spectrum.

- Electroencephalogram-based features: EEG-based features for short, these have been applied to human-centered favorite music estimation, as introduced by Sawata et al. [244]. In that work, the authors compute features from the EEG signals of a user who is listening to his/her favorite music, while simultaneously computing several features from the audio signal (root mean square, brightness, ZCR and tempo, among others). The two feature sets are then related by means of kernel canonical correlation analysis (KCCA), which yields the projection between the audio feature space and the EEG-based feature space that maximizes the correlation between them. Using this projection, new EEG-based audio features can be derived from the audio features alone. As a result, original audio features can be transformed into EEG-based audio features with no need for further EEG signal acquisition.

- Auditory saliency map: this is a bottom-up auditory attention model, derived by Kalinli et al. [245,246], that computes an auditory saliency map from the input sound; it has been applied to environmental sounds by De Coensel and Botteldooren [247] (perception of transportation noise). The saliency map holds non-negative values, and its maximum defines the most salient location in the 2D auditory spectrum. First, the auditory spectrum of the sound is estimated using an early auditory (EA) system model consisting of cochlear filtering, inner hair cell (IHC) and lateral inhibitory stages, which mimic the process from the basilar membrane to the cochlear nucleus in the auditory system (the cochlear filtering uses a set of constant-Q asymmetric band-pass filters uniformly distributed along a logarithmic frequency axis). Next, the auditory spectrum is analyzed by extracting a set of multi-scale features (2D spectro-temporal receptive filters) comprising intensity, frequency contrast, temporal contrast and orientation feature channels. Subsequently, center-surround differences (point-wise differences between a fine center scale and a coarser surround scale) are computed from these feature channels, resulting in feature maps. The 30 computed feature maps (six each for intensity, frequency contrast and temporal contrast, and twelve for orientation) are then processed by an iterative, nonlinear normalization algorithm, which simulates competition between neighboring salient locations using a large 2D difference-of-Gaussians filter. Applied to the possibly noisy feature maps, it yields sparse representations that retain only those locations which strongly stand out from their surroundings. Finally, all normalized maps are summed to provide the bottom-up input to the saliency map.
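
The PCA stage that makes RSF features compact and decorrelated can be sketched independently of the auditory model. In the hypothetical example below, each input row stands in for a flattened vector of 2D wavelet-transform responses of the auditory spectrum, which the sketch does not compute; the principal axes are found by power iteration with deflation only to keep the code dependency-free (a linear-algebra library would normally be used). The names `pca` and `project` and the toy data are illustrative and do not reproduce the implementation of Ravindran et al. [228].

```python
import math
import random
from typing import List, Sequence, Tuple


def _dot(u: Sequence[float], v: Sequence[float]) -> float:
    return sum(a * b for a, b in zip(u, v))


def _unit(v: Sequence[float]) -> List[float]:
    n = math.sqrt(_dot(v, v))
    return [x / n for x in v]


def pca(data: Sequence[Sequence[float]], n_components: int,
        iters: int = 200, seed: int = 0) -> Tuple[List[float], List[List[float]]]:
    """Mean vector and leading principal axes, via power iteration with deflation."""
    n, d = len(data), len(data[0])
    mean = [sum(row[k] for row in data) / n for k in range(d)]
    centered = [[row[k] - mean[k] for k in range(d)] for row in data]
    # Sample covariance matrix (d x d)
    cov = [[sum(r[a] * r[b] for r in centered) / (n - 1) for b in range(d)] for a in range(d)]
    rng = random.Random(seed)
    axes: List[List[float]] = []
    for _ in range(n_components):
        v = _unit([rng.gauss(0.0, 1.0) for _ in range(d)])
        for _ in range(iters):
            w = [_dot(row, v) for row in cov]
            if _dot(w, w) == 0.0:  # no variance left along this direction
                break
            v = _unit(w)
        lam = _dot(v, [_dot(row, v) for row in cov])  # Rayleigh quotient = eigenvalue
        axes.append(v)
        # Deflation: remove the component just found before searching for the next one
        cov = [[cov[a][b] - lam * v[a] * v[b] for b in range(d)] for a in range(d)]
    return mean, axes


def project(x: Sequence[float], mean: Sequence[float],
            axes: Sequence[Sequence[float]]) -> List[float]:
    """Compact, decorrelated feature vector: coordinates of x along the principal axes."""
    centered = [a - m for a, m in zip(x, mean)]
    return [_dot(centered, axis) for axis in axes]


# Usage: 3-D toy vectors standing in for flattened wavelet responses
toy = [[float(t), float(t), 0.1 if t % 2 else -0.1] for t in range(-10, 11)]
mean, axes = pca(toy, n_components=2)
features = [project(row, mean, axes) for row in toy]
```

The first two toy dimensions are perfectly correlated, so they collapse onto a single axis; keeping two components therefore retains essentially all of the variance with coordinates that are mutually uncorrelated across the data set.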

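A heavily simplified sketch of the center-surround and combination stages of the saliency model is given below for a single intensity-like feature channel. It assumes that the auditory spectrum is already available as a 2D list (frequency channels by time frames) and skips the EA model and the receptive filters. It also replaces Gaussian pyramid filtering with 2x2 block averaging and, importantly, replaces the iterative difference-of-Gaussians normalization described above with a one-shot weighting (rescale to [0, 1] and multiply by (1 - mean)^2) that favours maps with a few strong peaks. The scale pairs and all function names are hypothetical.

```python
from typing import List, Sequence, Tuple

Map = List[List[float]]  # rows = frequency channels, columns = time frames


def downsample(m: Map) -> Map:
    """Halve both axes by 2x2 block averaging (stand-in for Gaussian pyramid filtering)."""
    rows, cols = len(m) // 2, len(m[0]) // 2
    return [[(m[2 * i][2 * j] + m[2 * i + 1][2 * j] + m[2 * i][2 * j + 1] + m[2 * i + 1][2 * j + 1]) / 4.0
             for j in range(cols)] for i in range(rows)]


def upsample_to(m: Map, rows: int, cols: int) -> Map:
    """Nearest-neighbour upsampling of a coarse map to rows x cols."""
    rs, cs = len(m) / rows, len(m[0]) / cols
    return [[m[int(i * rs)][int(j * cs)] for j in range(cols)] for i in range(rows)]


def center_surround(pyr: List[Map], c: int, s: int) -> Map:
    """Point-wise |center - surround|, with the coarse surround scale s resampled to scale c."""
    center = pyr[c]
    surround = upsample_to(pyr[s], len(center), len(center[0]))
    return [[abs(a - b) for a, b in zip(ra, rb)] for ra, rb in zip(center, surround)]


def normalize(m: Map) -> Map:
    """Simplified one-shot normalization (NOT the model's iterative DoG scheme):
    rescale to [0, 1], then weight by (1 - mean)**2 so sparse, peaky maps dominate."""
    flat = [v for row in m for v in row]
    lo, hi = min(flat), max(flat)
    if hi == lo:
        return [[0.0] * len(row) for row in m]
    scaled = [[(v - lo) / (hi - lo) for v in row] for row in m]
    weight = (1.0 - sum(v for row in scaled for v in row) / len(flat)) ** 2
    return [[weight * v for v in row] for row in scaled]


def saliency(spec: Map, pairs: Sequence[Tuple[int, int]] = ((0, 2), (0, 3), (1, 3))) -> Map:
    """Sum of normalized center-surround maps for one feature channel, at input resolution."""
    pyr = [spec]
    for _ in range(max(s for _, s in pairs)):
        pyr.append(downsample(pyr[-1]))
    rows, cols = len(spec), len(spec[0])
    total = [[0.0] * cols for _ in range(rows)]
    for c, s in pairs:
        fmap = upsample_to(normalize(center_surround(pyr, c, s)), rows, cols)
        for i in range(rows):
            for j in range(cols):
                total[i][j] += fmap[i][j]
    return total


# Usage: a silent 16 x 16 "auditory spectrum" with one bright 2 x 2 event
spec = [[0.0] * 16 for _ in range(16)]
for i in (8, 9):
    for j in (8, 9):
        spec[i][j] = 1.0
sal = saliency(spec)
peak = max(((i, j) for i in range(16) for j in range(16)), key=lambda p: sal[p[0]][p[1]])
```

As in the full model, the resulting map is non-negative and its maximum marks the location that stands out most from its surroundings; in this toy case that is the isolated event.
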
6. Conclusions

Acknowledgments

Author Contributions

Conflicts of Interest

Abbreviations

| Abbreviation | Meaning |
| --- | --- |
| ACF | Autocorrelation Function features |
| AD | Amplitude Descriptor |
| AFTE | Auditory Filter bank Temporal Envelopes |
| AMSC/AMSV | Acoustic-Modulation Spectral Contrast/Valley |
| AMSCM | Acoustic-Modulation Spectral Crest Measure |
| AMSFM | Acoustic-Modulation Spectral Flatness Measure |
| ASB | Audio Spectrum Basis |
| ASC | Audio Spectrum Centroid |
| ASE | Audio Spectrum Envelope |
| ASF | Audio Spectrum Flatness |
| ASP | Audio Spectrum Projection |
| ASR | Automatic Speech Recognition |
| ASS | Audio Spectrum Spread |
| ATFT | Adaptive Time Frequency Transform |
| AW | Audio Waveform |
| AZCR | Autocorrelation Zero Crossing Rate |
| BMM | Basilar Membrane Motion |
| CASA | Computational Auditory Scene Analysis |
| CBS | Cyclic Beat Spectrum |
| CELP | Code Excited Linear Prediction |
| CENS | Chroma Energy distribution Normalized Statistics |
| dB | Decibels |
| DCT | Discrete Cosine Transform |
| DDA | Distortion Discriminant Analysis |
| DNN | Deep Neural Networks |
| DRR | Direct-to-Reverberant Ratio |
| DWCH | Daubechies Wavelet Coefficient Histogram |
| EA | Early Auditory model |
| ERB | Equivalent Rectangular Bandwidth |
| F0 | Fundamental frequency |
| GC-Cept | Gammachirp Cepstral Coefficients |
| GDF | Group Delay Functions |
| GFCC | Greenwood Function Cepstral Coefficients |
| GHT | Generalised Hough Transform |
| gPLP | Generalized Perceptual Linear Prediction |
| GTCC | Gammatone Cepstral Coefficients |
| GTW | Gammatone Wavelet features |
| HNR | Harmonic-to-Noise Ratio |
| HR | Harmonic Ratio |
| HSC | Harmonic Spectral Centroid |
| HSD | Harmonic Spectral Deviation |
| HSS | Harmonic Spectral Spread |
| HSV | Harmonic Spectral Variation |
| ICA | Independent Component Analysis |
| IHC | Inner Hair Cell |
| KCCA | Kernel Canonical Correlation Analysis |
| KPFOCs | Kernel Power Flow Orientation Coefficients |
| LAT | Log Attack Time |
| LPC | Linear Prediction Coefficient |
| LPCC | Linear Prediction Cepstrum Coefficients |
| LP-ZCR | Linear Prediction Zero Crossing Ratio |
| LS | Local Spectrogram features |
| LSF | Line Spectral Frequencies |
| LSP | Line Spectral Pairs |
| MCLT | Modulated Complex Lapped Transform |
| MFCC | Mel-Frequency Cepstrum Coefficient |
| MFDWC | Mel Frequency Discrete Wavelet Coefficients |
| MGDF | Modified Group Delay Functions |
| MP | Matching Pursuit |
| MPEG | Moving Picture Experts Group |
| MS | Modulation Spectrogram |
| NASE | Normalized Audio Spectrum Envelope |
| NB-ACF | Narrow-Band Autocorrelation Function features |
| NIRA | Non-Intrusive Room Acoustic parameter |
| NRAF | Noise-Robust Audio Features |
| OSC | Octave-based Spectral Contrast |
| PCA | Principal Component Analysis |
| pH | Hurst parameter features |
| PLP | Perceptual Linear Prediction |
| PS-ZCPA | Pitch Synchronous Zero Crossing Peak Amplitudes |
| PWP | Perceptual Wavelet Packets |
| RASTA-PLP | Relative Spectral-Perceptual Linear Prediction |
| RIR | Room Impulse Response |
| RMS | Root Mean Square |
| RSF | Rate-Scale-Frequency |
| SAI | Stabilised Auditory Image |
| SC | Spectral Centroid |
| SE | Spectral Envelope |
| SF | Spectral Flux |
| SIF | Spectrogram Image Features |
| SNR | Signal-to-Noise Ratio |
| SPD | Subband Power Distribution |
| SPSF | Stereo Panning Spectrum Feature |
| STE | Short-Time Energy |
| STFT | Short-Time Fourier Transform |
| ULH | Upper Limit of Harmonicity |
| WT | Wavelet Transform |
| ZCPA | Zero Crossing Peak Amplitudes |
| ZCR | Zero Crossing Rate |

References

- Lyon, R.F. Machine Hearing: An Emerging Field. IEEE Signal Process. Mag. 2010, 27, 131–139.

- Gerhard, D. Audio Signal Classification: History and Current Techniques; Technical Report TR-CS 2003-07; Department of Computer Science, University of Regina: Regina, SK, Canada, 2003.

- Temko, A. Acoustic Event Detection and Classification. Ph.D. Thesis, Universitat Politècnica de Catalunya, Barcelona, Spain, 23 January 2007.

- Dennis, J. Sound Event Recognition in Unstructured Environments Using Spectrogram Image Processing. Ph.D. Thesis, School of Computer Engineering, Nanyang Technological University, Singapore, 2014.

- Bach, J.H.; Anemüller, J.; Kollmeier, B. Robust speech detection in real acoustic backgrounds with perceptually motivated features. Speech Commun. 2011, 53, 690–706.

- Kinnunen, T.; Li, H. An overview of text-independent speaker recognition: From features to supervectors. Speech Commun. 2010, 52, 12–40.

- Pieraccini, R. The Voice in the Machine. Building Computers That Understand Speech; MIT Press: Cambridge, MA, USA, 2012.

- Wang, A.L.C. An industrial-strength audio search algorithm. In Proceedings of the 4th International Conference on Music Information Retrieval (ISMIR), Baltimore, MD, USA, 26–30 October 2003; pp. 7–13.

- Wang, F.; Wang, X.; Shao, B.; Li, T.; Ogihara, M. Tag Integrated Multi-Label Music Style Classification with Hypergraph. In Proceedings of the 10th International Society for Music Information Retrieval Conference (ISMIR), Kobe, Japan, 26–30 October 2009; pp. 363–368.

- Benetos, E.; Kotti, M.; Kotropoulos, C. Musical Instrument Classification using Non-Negative Matrix Factorization Algorithms and Subset Feature Selection. In Proceedings of the 2006 IEEE International Conference on Acoustics, Speech, and Signal Processing (ICASSP), Toulouse, France, 14–19 May 2006; Volume 5, pp. V:221–V:225.

- Liu, M.; Wan, C. Feature selection for automatic classification of musical instrument sounds. In Proceedings of the ACM/IEEE Joint Conference on Digital Libraries (JCDL), Roanoke, VA, USA, 24–28 June 2001; pp. 247–248.

- Lu, L.; Liu, D.; Zhang, H.J. Automatic Mood Detection and Tracking of Music Audio Signals. IEEE Trans. Audio Speech Lang. Process. 2006, 14, 5–18.

- Fu, Z.; Lu, G.; Ting, K.M.; Zhang, D. A Survey of Audio-Based Music Classification and Annotation. IEEE Trans. Multimedia 2011, 13, 303–319.

- Chu, S.; Narayanan, S.S.; Kuo, C.J. Environmental Sound Recognition With Time-Frequency Audio Features. IEEE Trans. Audio Speech Lang. Process. 2009, 17, 1142–1158.

- Valero, X.; Alías, F. Classification of audio scenes using Narrow-Band Autocorrelation features. In Proceedings of the 20th European Signal Processing Conference (EUSIPCO), Bucharest, Romania, 27–31 August 2012; pp. 2012–2019.

- Schafer, R.M. The Soundscape: Our Sonic Environment and the Tuning of the World; Inner Traditions/Bear & Co: Rochester, VT, USA, 1993.

- Mitrović, D.; Zeppelzauer, M.; Breiteneder, C. Features for content-based audio retrieval. Adv. Comput. 2010, 78, 71–150.

- Peltonen, V.; Tuomi, J.; Klapuri, A.; Huopaniemi, J.; Sorsa, T. Computational Auditory Scene Recognition. In Proceedings of the 2002 IEEE International Conference on Acoustics, Speech, and Signal Processing (ICASSP), Orlando, FL, USA, 13–17 May 2002; Volume 2, pp. II:1941–II:1944.

- Geiger, J.; Schuller, B.; Rigoll, G. Large-scale audio feature extraction and SVM for acoustic scene classification. In Proceedings of the 2013 IEEE Workshop on Applications of Signal Processing to Audio and Acoustics (WASPAA), New Paltz, NY, USA, 20–23 October 2013; pp. 1–4.

- Oppenheim, A.V.; Schafer, R.W. Discrete-Time Signal Processing; Prentice Hall: Upper Saddle River, NJ, USA, 1989.

- Gygi, B. Factors in the Identification of Environmental Sounds. Ph.D. Thesis, Indiana University, Bloomington, IN, USA, 12 July 2001.

- Foote, J.; Uchihashi, S. The Beat Spectrum: A New Approach To Rhythm Analysis. In Proceedings of the 2001 IEEE International Conference on Multimedia and Expo (ICME), Tokyo, Japan, 22–25 August 2001; pp. 881–884.

- Bellman, R. Dynamic Programming; Dover Publications: Mineola, NY, USA, 2003.

- Rabaoui, A.; Davy, M.; Rossignol, S.; Ellouze, N. Using One-Class SVMs and Wavelets for Audio Surveillance. IEEE Trans. Inf. Forensics Secur. 2008, 3, 763–775.

- Hurst, H.E. Long-term storage capacity of reservoirs. Trans. Amer. Soc. Civ. Eng. 1951, 116, 770–808.

- Eronen, A.J.; Peltonen, V.T.; Tuomi, J.T.; Klapuri, A.P.; Fagerlund, S.; Sorsa, T.; Lorho, G.; Huopaniemi, J. Audio-based context recognition. IEEE Trans. Audio Speech Lang. Process. 2006, 14, 321–329.

- Yuo, K.; Hwang, T.; Wang, H. Combination of autocorrelation-based features and projection measure technique for speaker identification. IEEE Trans. Speech Audio Process. 2005, 13, 565–574.

- Tzanetakis, G.; Cook, P.R. Musical genre classification of audio signals. IEEE Trans. Speech Audio Process. 2002, 10, 293–302.

- Ando, Y. A theory of primary sensations and spatial sensations measuring environmental noise. J. Sound Vib. 2001, 241, 3–18.

- Valero, X.; Alías, F. Hierarchical Classification of Environmental Noise Sources by Considering the Acoustic Signature of Vehicle Pass-bys. Arch. Acoustics 2012, 37, 423–434.

- Richard, G.; Sundaram, S.; Narayanan, S. An Overview on Perceptually Motivated Audio Indexing and Classification. Proc. IEEE 2013, 101, 1939–1954.

- Clemins, P.J.; Trawicki, M.B.; Adi, K.; Tao, J.; Johnson, M.T. Generalized Perceptual Features for Vocalization Analysis Across Multiple Species. In Proceedings of the IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Toulouse, France, 14–19 May 2006; Volume 1.

- Clemins, P.J.; Johnson, M.T. Generalized perceptual linear prediction features for animal vocalization analysis. J. Acoust. Soc. Am. 2006, 120, 527–534.

- Peeters, G. A Large Set of Audio Features for Sound Description (Similarity And Classification) in the CUIDADO Project; Technical Report; IRCAM: Paris, France, 2004.

- Sharan, R.; Moir, T. An overview of applications and advancements in automatic sound recognition. Neurocomputing 2016.

- Gubka, R.; Kuba, M. A comparison of audio features for elementary sound based audio classification. In Proceedings of the 2013 International Conference on Digital Technologies (DT), Zilina, Slovak Republic, 29–31 May 2013; pp. 14–17.

- Boonmatham, P.; Pongpinigpinyo, S.; Soonklang, T. A comparison of audio features of Thai Classical Music Instrument. In Proceedings of the 7th International Conference on Computing and Convergence Technology (ICCCT), Seoul, South Korea, 3–5 December 2012; pp. 213–218.

- Van Hengel, P.W.J.; Krijnders, J.D. A Comparison of Spectro-Temporal Representations of Audio Signals. IEEE/ACM Trans. Audio Speech Lang. Process. 2014, 22, 303–313.

- Kedem, B. Spectral Analysis and Discrimination by Zero-crossings. Proc. IEEE 1986, 74, 1477–1493.