Real-Time Whole-Body Imitation by Humanoid Robots and Task-Oriented Teleoperation Using an Analytical Mapping Method and Quantitative Evaluation

Abstract

1. Introduction

- Most imitation systems that achieve stable whole-body motions rely on numerical IK methods for posture mapping. However, numerical methods yield less accurate joint angles than analytical methods and incur higher computational costs.

- Most analytical joint-angle mapping methods used in imitation systems are simple and coarse: they cannot indicate the orientations of human body links, and they give insufficient consideration to the robot’s joint structure. This reduces the accuracy of the mapped joint angles and limits the imitation similarity.

- Many approaches cannot achieve stable doubly supported and singly supported imitation.

- Most researchers have not considered the imitation of head motions, hand motions or locomotion, which are essential for task execution using an imitation system. Some systems partially accomplish these functions but require additional postures or audio instructions or require ancillary handheld or wearable devices, which are inconvenient.

- Most similarity criteria are based on the positions of key points, i.e., the positions of end effectors or skeleton points, or are based on joint angles and consequently cannot directly reflect the posture similarity.

- A novel, comprehensive and unrestricted whole-body imitation system is proposed and developed, and an imitation learning algorithm is built on top of it. To the best of our knowledge, it is the most complete and least restricted whole-body imitation system developed to date: it imitates head motions, arm motions, lower-limb motions, hand motions and locomotion without requiring any ancillary handheld or wearable devices or any additional audio or gesture-based instructions. The system includes a double-support mode, a single-support mode and a walking mode. Balance is controlled in each mode, and the stability of the system enables the robot to execute some complicated tasks in real time.

- A novel analytical method called GA-LVVJ is proposed to map human motions to a robot based on the observed human data. Link vectors are constructed from the captured skeleton points, and virtual joints are set according to the link vectors and the robot joints. Then, a frame is built for each link of the human skeleton model to indicate its orientation and posture. A structural analysis of child and mother joints is employed to calculate the joint angles. This method yields high similarity in both single-support and double-support imitation motions.

- A real-time locomotion method is proposed for the walking mode. Both the rotations and displacements of the human body are calculated in real time. No ancillary equipment is required to issue instructions for recording the position, and no fixed point is needed to serve as a reference position.

- A filter strategy is proposed and employed to ensure that the robot transitions into the correct motion mode and that its motions are stabilized before the mode changes.

- Two quantitative vector-based similarity evaluation methods are proposed, namely, the WBF method and the LLF method, which focus on the whole-body posture and the local-link posture, respectively. They can provide novel metrics of imitation similarity that consider both whole-body and local-link features.
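
To make the idea of a vector-based similarity measure concrete, the following is a minimal sketch, not the WBF or LLF formula itself. It assumes that each posture is described by one direction vector per link and scores similarity as the mean cosine between corresponding human and robot link vectors. The function names and the dictionary layout are hypothetical and chosen only for illustration; the link names `LUA` and `LTh` follow the Abbreviations list.

```python
import math


def cosine(u, v):
    """Cosine of the angle between two 3D vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    if norm_u == 0.0 or norm_v == 0.0:
        raise ValueError("zero-length link vector")
    return dot / (norm_u * norm_v)


def posture_similarity(human_links, robot_links):
    """Mean cosine similarity over the links present in both postures.

    Assumption: both arguments map a link name to a 3D direction vector
    expressed in comparable (e.g. torso-fixed) frames. This is an
    illustrative metric, not the paper's WBF or LLF definition.
    """
    names = sorted(set(human_links) & set(robot_links))
    if not names:
        raise ValueError("no common links to compare")
    total = sum(cosine(human_links[n], robot_links[n]) for n in names)
    return total / len(names)


if __name__ == "__main__":
    human = {"LUA": (0.0, 0.0, -1.0), "LTh": (0.0, 0.0, -1.0)}
    robot = {"LUA": (0.0, 0.0, -2.0), "LTh": (1.0, 0.0, 0.0)}
    print(posture_similarity(human, robot))
```

Because the score depends only on link directions, it is insensitive to differences in limb length between the human and the robot, which is one reason a vector-based measure can reflect posture more directly than key-point positions.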

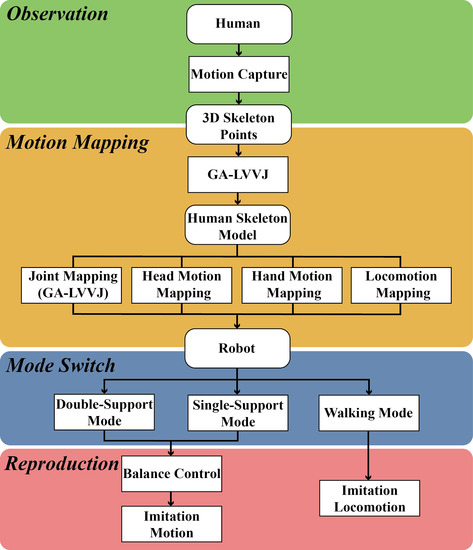

2. System Framework

3. Motion Mapping

3.1. Motion Loop and Locomotion Loop

3.2. Construction of Human Skeleton Model and Joint Mapping: GA-LVVJ

- Construction of human skeleton link vectors in accordance with the 3D skeleton points;

- Construction of human virtual joints in accordance with the human skeleton link vectors and robot joints;

- Establishment of local link frames for the human skeleton model with reference to the virtual joints;

- Calculation of the joint angles of the human skeleton model using the local link frames and link vectors;

- Application of the joint angles of the human skeleton model to the robot.
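
The steps above can be sketched for a single link as follows. This is a simplified illustration under stated assumptions, not the GA-LVVJ derivation: it assumes skeleton points are already expressed in a torso-fixed frame (x forward, y left, z up), models the shoulder as a pitch rotation about y followed by a roll rotation about z acting on a forward-pointing arm, and skips the virtual-joint and local-frame construction. The function names and the joint limits are hypothetical placeholders.

```python
import math


def link_vector(parent, child):
    """Unit vector pointing from the parent skeleton point to the child."""
    diff = [c - p for p, c in zip(parent, child)]
    norm = math.sqrt(sum(x * x for x in diff))
    if norm == 0.0:
        raise ValueError("skeleton points coincide")
    return [x / norm for x in diff]


def shoulder_angles(shoulder, elbow):
    """Return (pitch, roll) in radians for the upper-arm link.

    Assumed model: v = Ry(pitch) * Rz(roll) * [1, 0, 0], which gives
    v = (cos p cos r, sin r, -sin p cos r). Inverting it analytically:
    roll = asin(v_y), pitch = atan2(-v_z, v_x).
    """
    vx, vy, vz = link_vector(shoulder, elbow)
    roll = math.asin(max(-1.0, min(1.0, vy)))
    pitch = math.atan2(-vz, vx)
    return pitch, roll


def clamp(angle, lower, upper):
    """Restrict an angle to the robot's joint range before sending it."""
    return max(lower, min(upper, angle))


# Hypothetical joint limits in radians, for illustration only.
LIMITS = {"pitch": (-2.0, 2.0), "roll": (-0.3, 1.3)}

if __name__ == "__main__":
    # Arm hanging straight down from the shoulder.
    pitch, roll = shoulder_angles((0.0, 0.0, 0.0), (0.0, 0.0, -0.3))
    print(clamp(pitch, *LIMITS["pitch"]), clamp(roll, *LIMITS["roll"]))
```

A closed-form inversion of this kind is what distinguishes an analytical mapping from a numerical IK solver: each joint angle follows directly from the link vector, with no iteration.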

3.3. Head Motion Mapping

3.4. Hand Motion Mapping

3.5. Locomotion Mapping

3.5.1. Body Rotation

3.5.2. Displacement

3.6. Discussion of Extension to Other Humanoids

4. Mode Switch

4.1. Filter Strategy

4.2. Double-Support Mode to Single-Support Mode

4.3. Double-Support Mode to Walking Mode

4.4. Single-Support Mode to Double-Support Mode

5. Balance Control

6. Similarity Evaluation

6.1. Whole-Body-Focused (WBF) Method

6.2. Local-Link-Focused (LLF) Method

7. Experiment

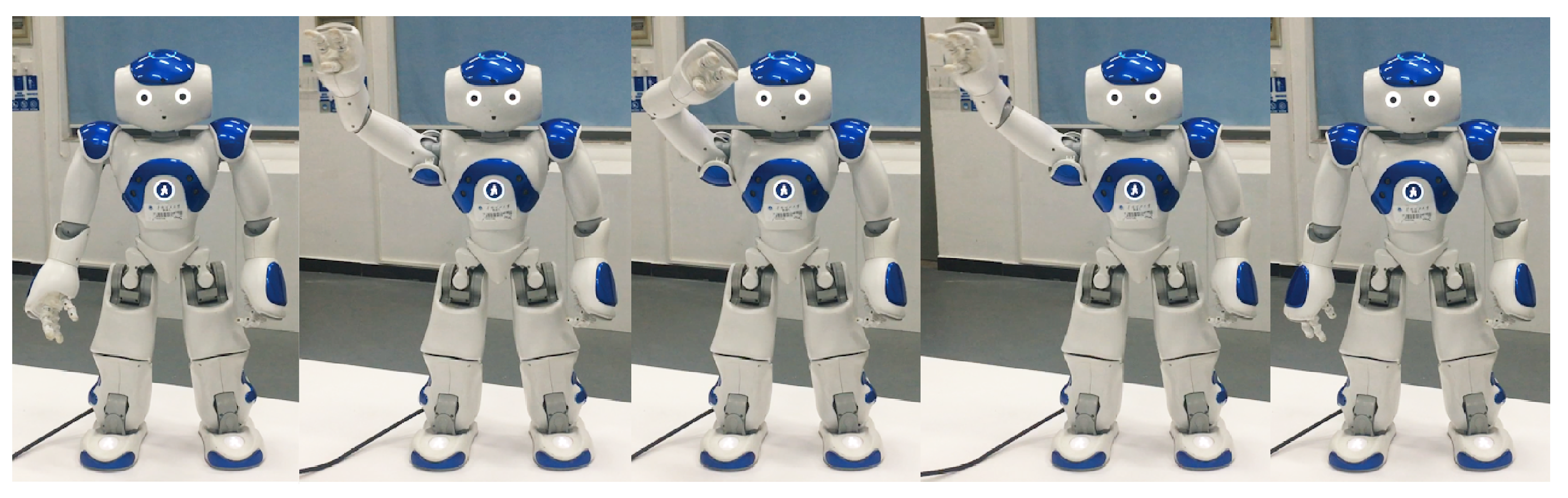

7.1. Real-Time Whole-Body Imitation of Singly and Doubly Supported Motions

7.1.1. Computational Cost

7.1.2. Error Analysis

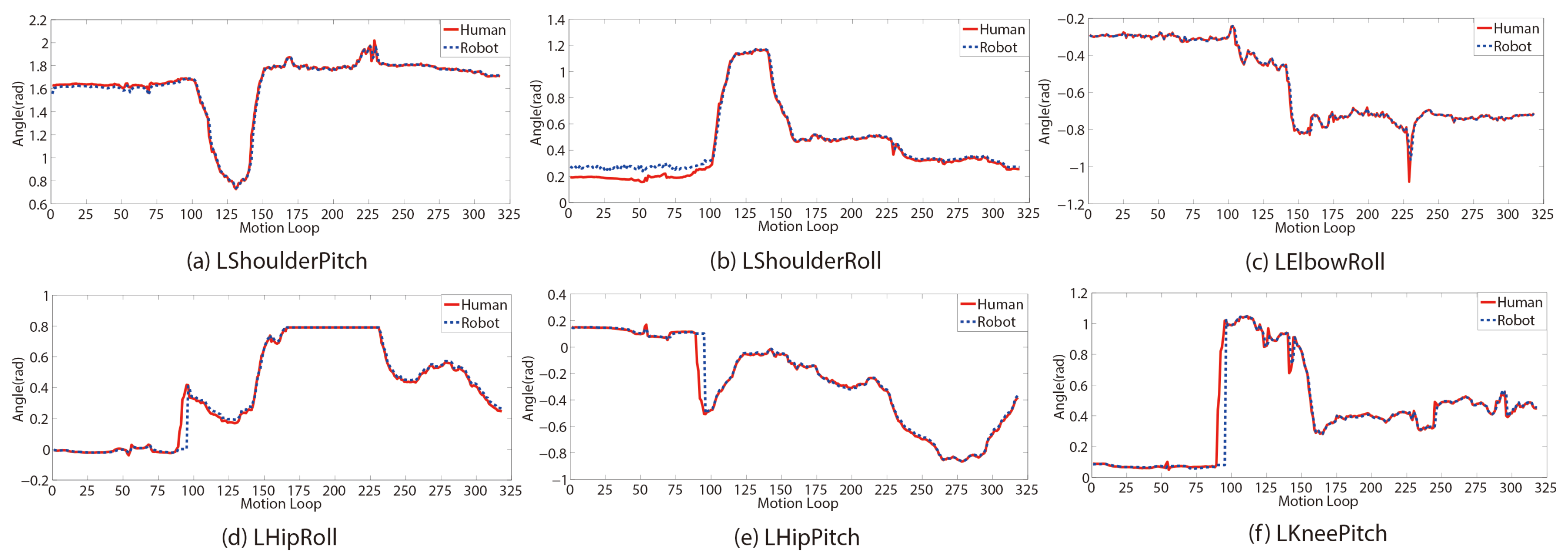

7.1.3. Consistency of Joint Angle Trajectories

7.1.4. Similarity Evaluation

7.1.5. Test on Different Human Body Shapes

7.2. Task-Oriented Teleoperation

8. An Imitation Learning Case

9. Conclusions

Author Contributions

Funding

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| GA-LVVJ | Geometrical Analysis Based on Link Vectors and Virtual Joints |
| WBF | Whole-Body-Focused |
| LLF | Local-Link-Focused |
| IK | Inverse kinematics |
| LUT | Left upper torso |
| LSP | LShoulderPitch |
| LSR | LShoulderRoll |
| LUA | Left upper arm |
| LEY | LElbowYaw |
| LER | LElbowRoll |
| LLT | Left lower torso |
| LHR | LHipRoll |
| LHP | LHipPitch |
| LTh | Left thigh |
| DOF | Degree of freedom |
| LKP | LKneePitch |
| LT | Lower torso |
| QP | Quadratic programming |
| GMM | Gaussian Mixture Model |
| GMR | Gaussian Mixture Regression |
| DTW | Dynamic Time Warping |

| Joint | Average Error (rad) | Maximum Error (rad) |
|---|---|---|
| LShoulderPitch | (S) | |
| LShoulderRoll | (D) | |
| LElbowRoll | (S) | |
| LHipRoll | (*) | |
| LHipPitch | (*) | |
| LKneePitch | (*) | |
| RShoulderPitch | (D) | |
| RShoulderRoll | (D) | |
| RElbowRoll | (D) | |
| RHipRoll | (S) | |
| RHipPitch | (S) | |
| RKneePitch | (S) | |

© 2018 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Zhang, Z.; Niu, Y.; Yan, Z.; Lin, S. Real-Time Whole-Body Imitation by Humanoid Robots and Task-Oriented Teleoperation Using an Analytical Mapping Method and Quantitative Evaluation. Appl. Sci. 2018, 8, 2005. https://doi.org/10.3390/app8102005