Abstract

Lidar imaging systems are one of the hottest topics in the optronics industry. The need to sense the surroundings of every autonomous vehicle has pushed forward a race dedicated to deciding the final solution to be implemented. However, the diversity of state-of-the-art approaches to the solution brings a large uncertainty on the decision of the dominant final solution. Furthermore, the performance data of each approach often arise from different manufacturers and developers, which usually have some interest in the dispute. Within this paper, we intend to overcome the situation by providing an introductory, neutral overview of the technology linked to lidar imaging systems for autonomous vehicles, and its current state of development. We start with the main single-point measurement principles utilized, which then are combined with different imaging strategies, also described in the paper. An overview of the features of the light sources and photodetectors specific to lidar imaging systems most frequently used in practice is also presented. Finally, a brief section on pending issues for lidar development in autonomous vehicles has been included, in order to present some of the problems which still need to be solved before implementation may be considered as final. The reader is provided with a detailed bibliography containing both relevant books and state-of-the-art papers for further progress in the subject.

Keywords:

lidar; ladar; time of flight; 3D imaging; point cloud; MEMS; scanners; photodetectors; lasers; autonomous vehicles; self-driving car

1. Introduction

In recent years, lidar (an acronym of light detection and ranging) has progressed from a useful measurement technique suitable for studies of atmospheric aerosols and aerial mapping towards a kind of new Holy Grail in optomechanical engineering and optoelectronics. World-class engineering teams are launching start-ups and receiving significant investments, and companies previously established in the field are being acquired by large industrial corporations, mainly from the automotive industry, or receiving heavy investments from the venture capital sector. What fuels all this activity is the lack of a lidar imaging system for automobiles that is adequate in all aspects, whether because of performance, lack of components, industrialization or cost issues. This has resulted in one of the strongest market-pull cases of recent years in the optics and photonics industries [1], a story which has even appeared in business journals such as Forbes [2], where market sizes at the billion-unit level are claimed for the future Level 5 (fully automated) self-driving car.

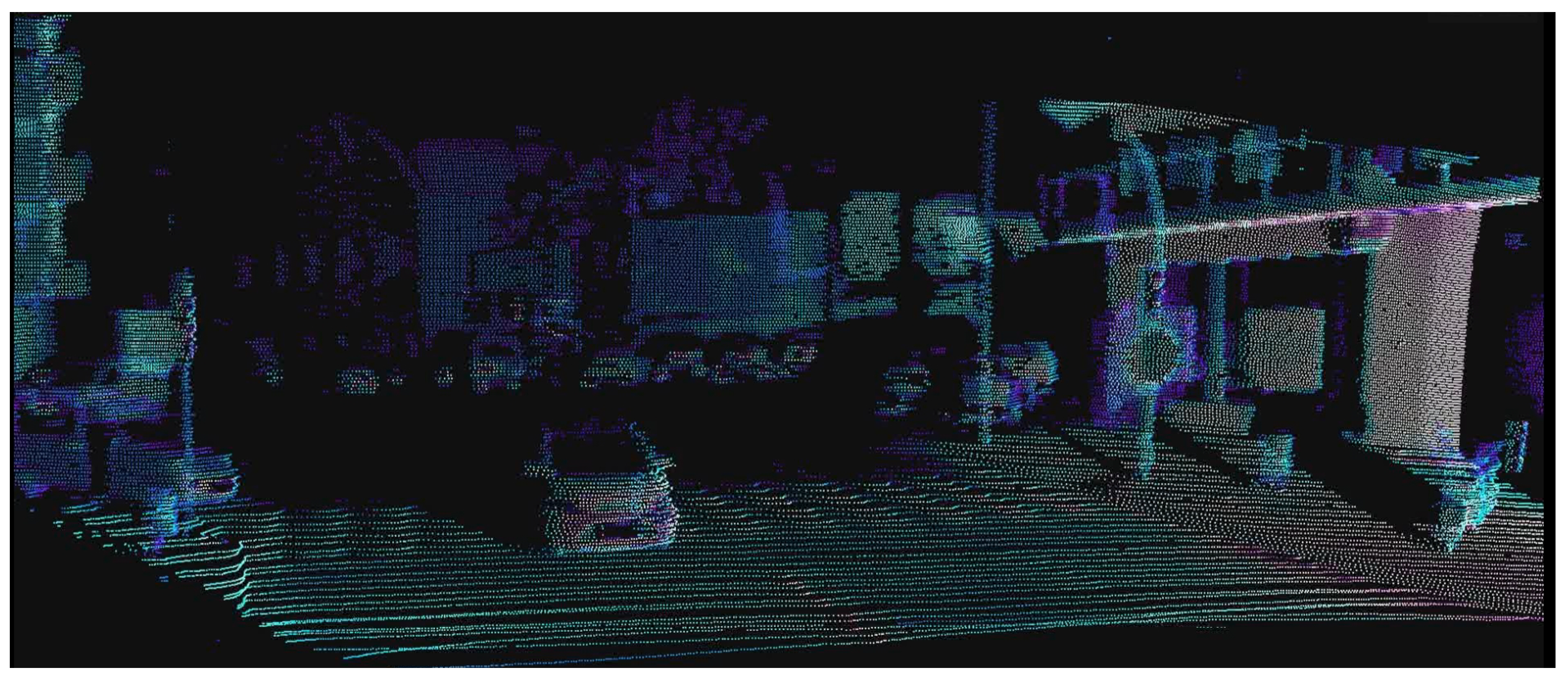

Lidar has been a well-known measurement technique since the last century, with established publications and a dense bibliography corpus. Lidar rests on a simple working principle based on counting the time between events in quantities carried by light, such as the backscattered energy from a pulsed beam. From these time measurements, the speed of light in air is used to compute distances or to perform mapping. Quite logically, this is referred to as the time-of-flight (TOF) principle. Remote sensing has been one of the paramount applications of the technology, either from ground-based stations (e.g., for aerosol monitoring) or as aerial or space-borne instrumentation, typically for Earth observation applications in different wavebands. A number of review papers [3], introductory texts [4] and comprehensive books [5,6] have been made available on the topic across the years. Lidar had already become so relevant that a full industry related to remote sensing and mapping was developed. The relevance of the lidar industry is further shown by the existence of standards, including a dedicated data format and file extension for lidar mapping (.LAS, from laser), which has become an official standard for 3D point cloud data exchange independent of the sensor and the software that generate the point clouds. Despite its interest and the proximity of the topic, it is not the goal of this paper to deal with remote sensing or mapping lidars, even if they provide images. They are already well-established, blooming research fields on their own with long track records, optimized experimental methods and dedicated data processing algorithms, published in specialized journals [7] and conferences [8].

However, a significant number of applications on the ground benefit from the capability of a sensor to capture the complete 3D information around a vehicle. Thus, still using time-counting of different events in light, first research-based and then commercial 3D cameras started to become available, and rapidly found applications in audiovisual segmentation [9], RGB+depth fusion [10], 3D screens [11], people and object detection [12] and, of course, in human-machine interfaces [13]. Some of them reached the general consumer market, the best-known example being the Microsoft Kinect [14]. These commercial units were in almost all cases amplitude-modulated cameras, where the phase of the emitted light was compared with that of the received light and distance was estimated from their phase difference. A niche was covered, as these solutions were optimal for indoor applications without a strong solar background. Although the spatial resolution of the images was limited and depth resolution was kept at the cm level, they were still useful for applications involving large-scale objects (like humans).

In the meantime, a revolution was incubating in the mobility industry in the shape of the self-driving car, which is expected to completely disrupt our mobility patterns. A fully autonomous car, with the driver traveling on the highway with neither steering wheel nor pedals and without the need to monitor the vehicle at all, has become a technically feasible dream. Its social advantages are expected to be huge, centered on the removal of human error from driving, with an expected 90% decrease in fatalities, and with relevant improvements related to the reduction of traffic jams and fuel emissions, while enabling access to mobility for the aging and disabled populations. New ownership models are expected to appear and several industries (from automotive repair to parking, not forgetting airlines and several others) are expected to be disrupted, with novel business models arising from a social change comparable only to the introduction of the mobile phone. Obviously, autonomous cars will come first due to the market size they represent, but other vehicles on the ground, in the air and at sea, from trains to vessels, will progressively become partially or completely unmanned. This change of paradigm, however, needs reliable sensor suites able to completely monitor the environment of the vehicle, with systems based on different working principles and with different failure modes to anticipate all possible situations. A combination of radar, video cameras, and lidar, together with deep learning procedures, is the most likely solution for a vast majority of cases [15]. Lidar, thus, will be at the core of this revolution.

However, the rush towards the autonomous car and robotic vehicles has pushed the requirements of lidar sensors in new directions, away from those of remote sensing. Lidar imaging systems for automotive applications require a combination of long range, high spatial resolution, real-time performance and tolerance to solar background in the daytime, which has pushed the technology to its limits. Different specifications with different working principles have appeared for different possible use cases, including short and long range, or narrow and wide fields of view. Rotating lidar imagers were the first to achieve the required performance, using a rotating wheel configuration at high speed and multiple stacked detectors [16]. However, large-scale automotive applications imposed additional requirements, like the capability to industrialize the sensor to achieve reliability and ease of manufacturing in order to get a final low-cost unit, or to have a small, nicely packaged sensor fitting in small volumes of the car. It was soon obvious that different lidar sensors were required to cover all the needs of the future self-driving car, e.g., to cover short and long-range 3D imaging with different needs regarding fields of view. Further, the use of such a sensor in other markets, such as robotics or defense applications, has raised a quest for the final solid-state lidar, in which different competing approaches and systems have been proposed: a new set-up is proposed frequently, and a new patent family even more frequently.

This paper intends to neutrally introduce the basic aspects of lidar imaging systems applied to autonomous vehicles, especially automobiles, which are the largest and fastest developing market. Due to the strong activity in the field, our goal has been to focus on the basic details of the techniques and components currently being used, rather than to propose a technical comparison of the different solutions involved. However, we will comment on the main advantages and disadvantages of each approach, and try to provide further bibliography on each aspect for interested readers. Furthermore, an effort has been made to avoid mentioning the technology used by each manufacturer, as such a list could hardly be complete, would be based on assumptions in some cases and could be subject to fast changes. For those interested, there are excellent reports which identify the technology used by each manufacturer at the moment when they were written [1].

The remainder of the paper is organized as follows. Section 2 introduces the basics of the measurement principles of lidar, covering, in its first subsection, the three most used techniques for lidar imaging systems, which involve pulsed beams, amplitude-modulated and frequency-modulated approaches. A second subsection within Section 2 covers the strategies used to move from the point-like lidar measurement just described to an image-like measurement covering a field of view (FOV). In Section 3, we cover the main families of light sources and photodetectors currently used in lidar imaging units. Section 4 briefly reviews a few of the most relevant pending issues currently under discussion in the community, which need to be solved before a given solution is deployed commercially. A final section outlines the main conclusions of this paper.

2. Basics of Lidar Imaging

The measurement principle used for imaging with lidar is time-of-flight (TOF), where depth is measured by counting time delays in events in light emitted from a source. Thus, lidar is an active, non-contact range-finding technique, in which an optical signal is projected onto an object we call the target, and the reflected or backscattered signal is detected and processed to determine the distance, allowing the creation of a 3D point cloud of a part of the environment of the unit. Hence, the range R or distance to the target is measured based on the round-trip delay of light waves that travel to the target. This may be achieved by modulating the intensity, phase, and/or frequency of the transmitted signal and measuring the time required for that modulation pattern to appear back at the receiver. In the most straightforward case, a short light pulse is emitted towards the target, and the arrival time of the pulse’s echo at the detector sets the distance. This pulsed lidar can provide centimeter-level resolution in single pulses over a wide window of ranges, as the nanosecond pulses used often have high instantaneous peak power. This makes it possible to reach long distances while maintaining average power below the eye-safety limit. A second approach is based on amplitude modulation of a continuous wave (AMCW), in which the phases of the emitted and the backscattered detected waves are compared to measure distance. A precision comparable to that of the pulsed technique can be achieved, but only at moderate ranges, due to the short ambiguity distance imposed by the 2π ambiguity in the phase of the modulation. The reflected signal arriving at the receiver from distant objects is also not as strong as in the pulsed case, as the emission is continuous, which forces the amplitude to remain below the eye-safe limit at all times. Further, the digitization of the back-reflected intensity level becomes difficult at long distances.

Finally, a third approach is defined by frequency-modulated continuous-wave (FMCW) techniques, enabled by direct modulation and demodulation of the signals in the frequency domain, allowing detection by a coherent superposition of the emitted and detected wave. FMCW presents two outstanding benefits over the other techniques: it achieves resolutions in range measurement well below those of the other approaches, which may be down to 150 μm with 1 μm precision at long distances, although its main benefit is that it obtains velocimetry measurements simultaneously with range data using the Doppler effect [17]. The three techniques mentioned are briefly discussed in the coming subsection.

2.1. Measurement Principles

2.1.1. Pulsed Approach

Pulsed TOF techniques are based on the simplest modulation principle of the illumination beam: distance is determined by multiplying the speed of light in a medium by the time a light pulse takes to travel the distance to the target. Since the speed of light is a given constant while we stay within the same optical medium, the distance to the object is directly proportional to the travel time. The measured time corresponds to twice the distance to the object, as light travels to the target and back, and, therefore, must be halved to give the actual range value to the target [13,18,19]:

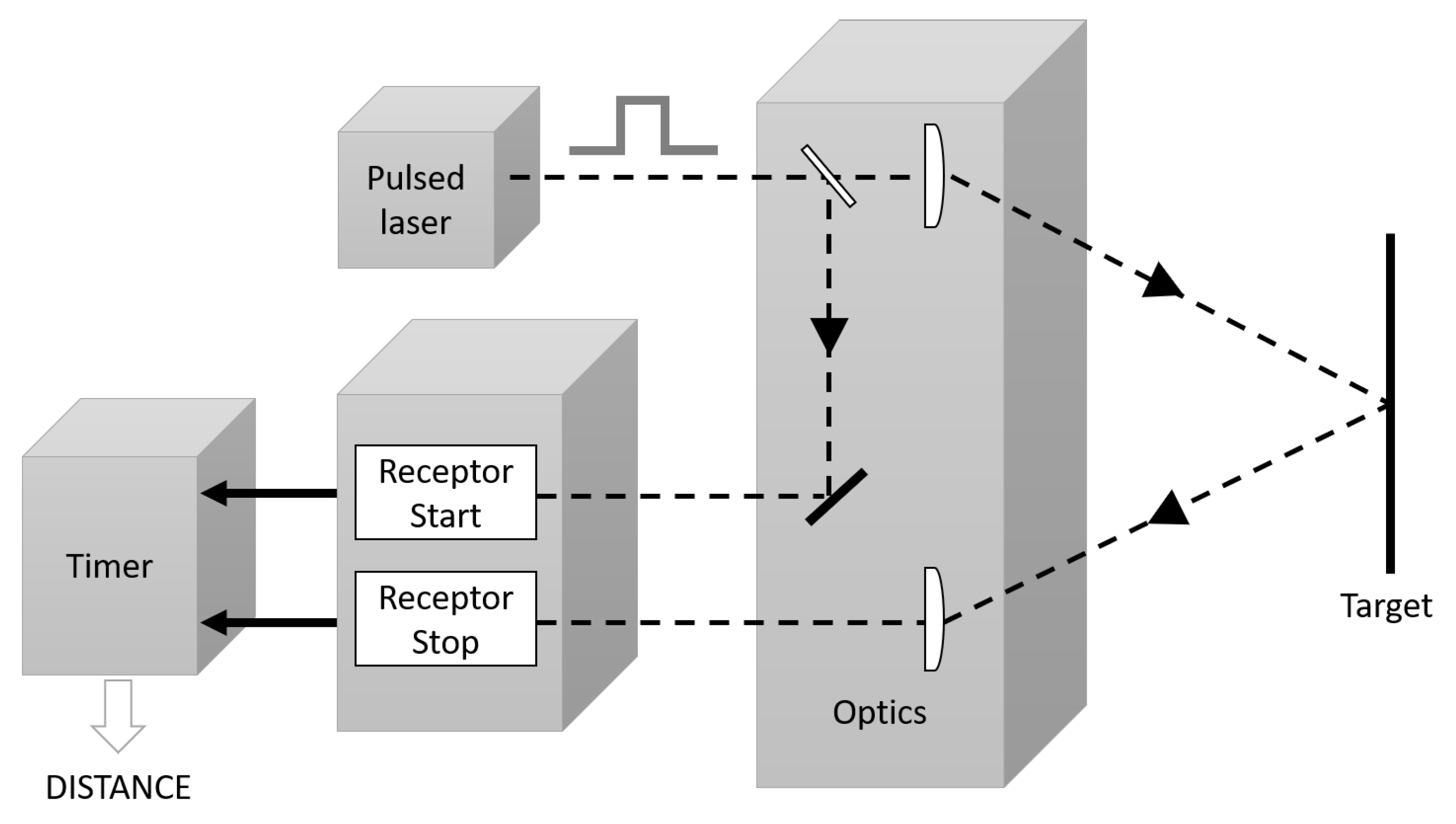

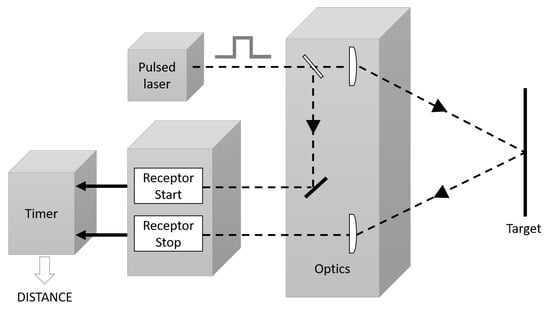

$$R = \frac{c}{2}\,t_{oF} \tag{1}$$

where R is the range to the target, c is the speed of light ($c = 3 \times 10^{8}$ m/s) in free space and $t_{oF}$ is the time it takes for the pulse of energy to travel from its emitter to the observed object and then back to the receiver. Figure 1 shows a simplified diagram of a typical implementation. Further technical details of the measurement of $t_{oF}$ can be found in references like [18,20].

Figure 1.

Pulsed time-of-flight (TOF) measurement principle.

The attainable resolution in range ($\Delta R$) is directly proportional to the resolution available in time counting ($\Delta t_{oF}$). As a consequence, the resolution in depth measurement depends on the resolution of the time-counting electronics. A typical resolution value of the time interval measurement can be assumed to be in the 0.1 ns range, resulting in a resolution in depth of 1.5 cm. Such values may be considered as the current reference, limited by jitter and noise in the time-counting electronics. Significant improvements in resolution may be obtained by using statistics [21], but this requires several pulses per data point, degrading sensor performance in key aspects like frame rate or spatial resolution.

Theoretically speaking, the maximum attainable range ($R_{max}$) is only limited by the maximum time interval ($t_{oF,max}$) which can be measured by the time counter. In practice, this time interval is large enough that the maximum range becomes limited by other factors. In particular, the laser energy losses during travel (especially with diffusing targets) combined with the high bandwidth of the detection circuit (which brings on larger noise and jitter) create a competition between the weak returning signal and the electronic noise, making the signal-to-noise ratio (SNR) the actual range-limiting factor in pulsed lidars [22,23]. Another aspect to be considered concerning maximum range is the ambiguity distance (the maximum range which may be measured unambiguously), which in the pulsed approach is limited by the presence of more than one simultaneous pulse in flight, and thus is related to the pulse repetition rate of the laser. As an example, using Equation (1), this ambiguity distance is about 150 m at laser repetition rates close to 1 MHz.
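The two numerical examples above follow directly from Equation (1). The short sketch below reproduces them; the function names are illustrative only, and the ambiguity distance is computed under the assumption that a single pulse is in flight at a time, so that the round trip must fit within one repetition period.

```python
# Speed of light in vacuum (m/s); the text uses the free-space value.
C = 299_792_458.0


def tof_range(round_trip_time_s: float) -> float:
    """Range from a round-trip time: half the path travelled by the pulse."""
    return C * round_trip_time_s / 2.0


def range_resolution(time_resolution_s: float) -> float:
    """Depth resolution implied by the resolution of the time counter."""
    return C * time_resolution_s / 2.0


def ambiguity_distance(pulse_repetition_rate_hz: float) -> float:
    """Largest range whose echo returns before the next pulse is emitted
    (assumption: only one pulse in flight at a time)."""
    return C / (2.0 * pulse_repetition_rate_hz)


if __name__ == "__main__":
    print(f"depth resolution at 0.1 ns: {range_resolution(0.1e-9) * 100:.2f} cm")
    print(f"ambiguity distance at 1 MHz: {ambiguity_distance(1e6):.1f} m")
```

With a 0.1 ns time resolution and a 1 MHz repetition rate, the sketch gives roughly 1.5 cm and 150 m, in line with the values quoted in the text.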

The pulsed principle directly measures the round-trip time between the emission of a light pulse and the return of the pulse echo resulting from its backscattering from a target object. Thus, pulses need to be as short as possible (usually a few nanoseconds) with fast rise and fall times and large optical power. Because the pulse irradiance is much higher than the background (ambient) irradiance, this type of method performs well outdoors (although it is also suitable for indoor applications, where the absence of solar background will reduce the requirements on emitted power), under adverse environmental conditions, and can work for long-distance measurements (from a few meters up to several kilometers). However, once a light pulse is emitted by a laser and reflected by an object, only a fraction of the optical energy may be received back at the detector. Assuming the target is an optical diffuser (which is the most usual situation), this energy is further divided among multiple scattering directions. Thus, pulsed methods need very sensitive detectors working at high frequencies to detect the faint pulses received. Since pulsed methods generally deal with direct energy measurements, they are a case of incoherent detection [23,24,25].

The advantages of the pulsed approach include a simple measurement principle based on direct measurement of time-of-flight, its long ambiguity distance, and the limited influence of background illumination due to the use of high-energy laser pulses. However, it is limited by the signal-to-noise ratio (SNR) of the measurement: intense light pulses are required while eye-safety limits need to be respected, and very sensitive detectors need to be used, which may be expensive depending on the detection range. Large amplification factors in detection, together with high frequency rates, add significant complexity to the electronics. The pulsed approach is, despite these limitations, the one most frequently selected at present among the different alternatives presented by manufacturers of lidar imaging systems for autonomous vehicles, due to its simplicity and its capability to perform properly outdoors.

2.1.2. Continuous Wave Amplitude Modulated (AMCW) Approach

The AMCW approach uses the intensity modulation of a continuous lightwave instead of the laser pulses mentioned before. This principle is known as CW modulation, phase-measurement, or amplitude-modulated continuous-wave (AMCW). It uses the phase shift induced in an intensity-modulated periodic signal in its round trip to the target in order to obtain the range value. The optical power is modulated with a constant frequency $f_M$, typically of some tens of MHz, so the emitted beam is a sinusoidal or square wave of frequency $f_M$. After reflection from the target, a detector collects the received light signal. The distance R is deduced from the phase shift $\Delta\Phi$ occurring between the reflected and the emitted signal [18,26,27]:

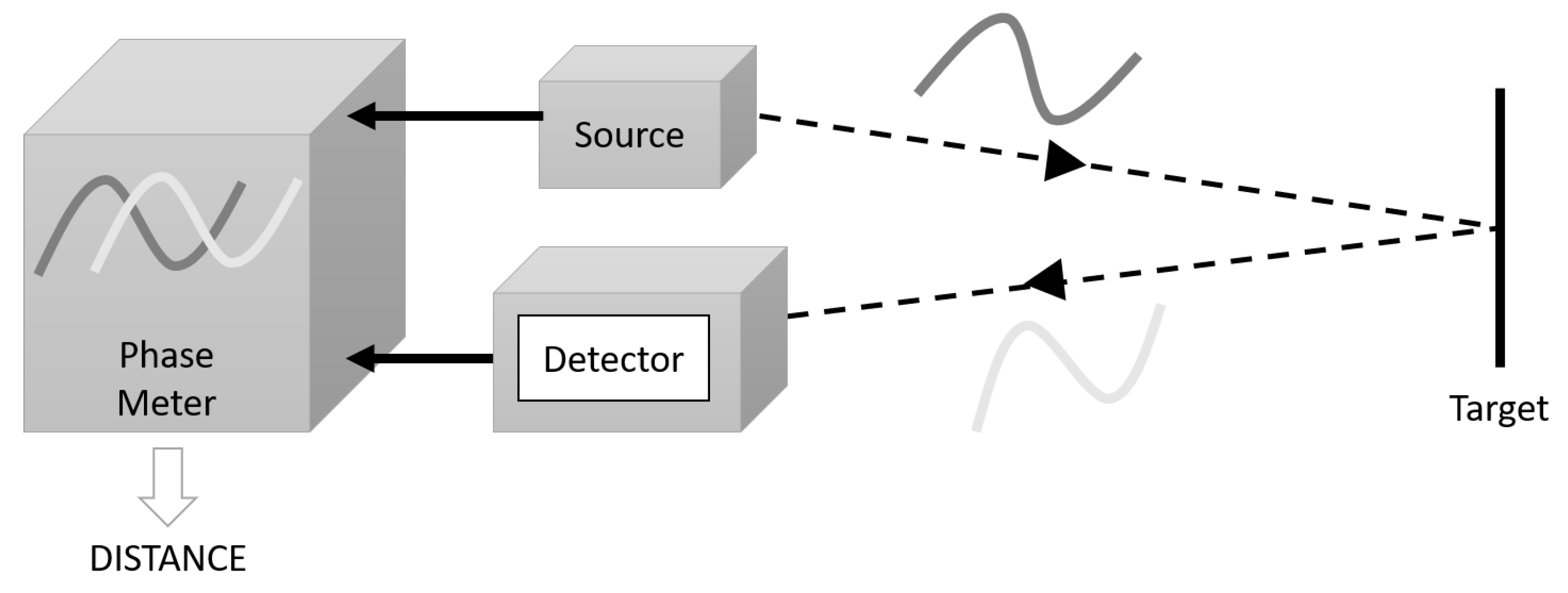

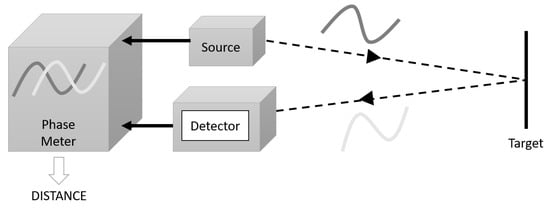

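$$\Delta\Phi = k_M\,d = \frac{2\pi f_M}{c}\,2R \quad\Rightarrow\quad R = \frac{c}{2}\,\frac{\Delta\Phi}{2\pi f_M} \tag{2}$$

where R and c are, again, the range to the target and the speed of light in free space; $\Delta\Phi$ is the measured phase shift; $k_M$ is the wavenumber associated with the modulation frequency; d is the total distance travelled; and $f_M$ is the modulation frequency of the amplitude of the signal. Figure 2 shows the schematics of a conventional AMCW sensor.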

Figure 2.

TOF phase-measurement principle used in amplitude modulation of a continuous wave (AMCW) sensors.

There are a number of techniques that may be used to demodulate the received signal and to extract the phase information from it. For the sake of brevity, they will only be cited so the reader is referred to the linked references. For example, phase measurement may be obtained via signal processing techniques using mixers and low-pass filters [28], or, more generally, by cross-correlation of the sampled signal backscattered at the target with the original modulated signal shifted by a number (typically four) of fixed phase offsets [13,19,29,30]. Another common approach is to sample the received modulated signal and mix it with the reference signal, to then sample the resultant signal at four different phases [31]. The different types of phase meters are usually implemented as electronic circuitry of variable complexity.
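As an illustration of the four-offset cross-correlation idea mentioned above, the following minimal sketch recovers the phase from four samples taken at offsets of 0, 90, 180 and 270 degrees. The sample model (a constant offset plus a cosine of the unknown phase plus the applied offset) and all function names are assumptions made for this example; real sensors differ in sign conventions and in how the samples are acquired.

```python
import math


def simulate_samples(phase, amplitude, offset):
    """Ideal, noiseless correlation samples under the assumed model
    c_k = offset + amplitude * cos(phase + k * pi / 2), k = 0..3."""
    return [offset + amplitude * math.cos(phase + k * math.pi / 2.0)
            for k in range(4)]


def four_phase_demodulation(c0, c1, c2, c3):
    """Recover phase, amplitude and offset from the four samples.

    Under the assumed model, c3 - c1 = 2*A*sin(phase) and
    c0 - c2 = 2*A*cos(phase), so the phase follows from atan2.
    """
    phase = math.atan2(c3 - c1, c0 - c2) % (2.0 * math.pi)
    amplitude = 0.5 * math.hypot(c3 - c1, c0 - c2)
    offset = 0.25 * (c0 + c1 + c2 + c3)
    return phase, amplitude, offset


if __name__ == "__main__":
    samples = simulate_samples(phase=1.2, amplitude=0.5, offset=2.0)
    print(four_phase_demodulation(*samples))
```

Using differences of opposite samples cancels the constant offset, which is one reason this kind of scheme is attractive when a background level is present.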

In the AMCW approach, the resolution is determined by the frequency $f_M$ of the actual ranging signal (which may be adjusted) and the resolution of the phase meter fixed by the electronics. By increasing $f_M$, the resolution is also improved if the resolution of the phase meter is fixed. However, larger frequencies bring on shorter unambiguous range measurements, as the phase value of the return signal starts to repeat itself at different range values after a 2π phase displacement. Thus, a significant trade-off appears between the maximum non-ambiguous range and the resolution of the measurement. Typical modulation frequencies are generally in the range of a few tens of MHz. Approaches using advanced modulated-intensity systems have been proposed, which deploy multi-frequency techniques to extend the ambiguity distance without reducing the modulation frequency [23].
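The trade-off between unambiguous range and resolution can be made concrete with a few lines of arithmetic. The sketch below assumes the usual phase-to-range relation for a round trip of length 2R, namely R = c·ΔΦ / (4π·f_M); the function names and the example phase resolution are hypothetical.

```python
import math

# Speed of light in vacuum (m/s).
C = 299_792_458.0


def amcw_range(phase_shift_rad: float, mod_freq_hz: float) -> float:
    """Range from the measured phase shift (assumes a round trip of 2R)."""
    return C * phase_shift_rad / (4.0 * math.pi * mod_freq_hz)


def amcw_unambiguous_range(mod_freq_hz: float) -> float:
    """Range at which the phase shift reaches 2*pi and starts to repeat."""
    return C / (2.0 * mod_freq_hz)


def amcw_range_resolution(phase_resolution_rad: float, mod_freq_hz: float) -> float:
    """Range step corresponding to the smallest resolvable phase step."""
    return C * phase_resolution_rad / (4.0 * math.pi * mod_freq_hz)


if __name__ == "__main__":
    # Hypothetical phase-meter resolution of 2*pi / 1000.
    phase_res = 2.0 * math.pi / 1000.0
    for f_mod in (10e6, 20e6, 40e6):
        print(f"{f_mod / 1e6:.0f} MHz: "
              f"unambiguous range {amcw_unambiguous_range(f_mod):.2f} m, "
              f"resolution {amcw_range_resolution(phase_res, f_mod) * 1000:.2f} mm")
```

Doubling the modulation frequency halves both numbers: the range step gets finer, but the distance at which the phase wraps shrinks by the same factor.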

Further, even though phase measurement may be coherent in some domains, the sensitivity of the technique remains limited because of the reduced sensitivity of direct detection in the optical domain. From the point of view of the SNR, which is also related to the depth accuracy, a relatively long integration time is required over several time periods to obtain an acceptable signal rate. In turn, this introduces motion blur in the presence of moving objects. Due to the need for these long integration times, fast shutter speeds or frame rates are difficult to obtain [24,27].

AMCW cameras have, however, been commercialized since the 1990s [32], and are often referred to as TOF cameras. They are usually implemented as parallel arrays of emitters and detectors, as discussed in Section 2.2.2, and are limited by the range-ambiguity trade-off and by the limited physical integration capability of the phase-meter electronics, which is implemented pixel by pixel or for a group of pixels in the camera. This limits the spatial resolution of the point clouds to a few thousand pixels. Furthermore, AMCW modulation is usually implemented on LEDs rather than lasers, which further limits the available power and thus the SNR of the signal and the attainable range, already limited by the ambiguity distance. Moreover, the amplitude of the signal must be measured reliably on its arrival and, in some techniques, digitized at a reasonable number of intensity levels. As a consequence, TOF cameras have little use outdoors, although they show excellent performance indoors, especially for large objects, and have been applied to a number of industries including audiovisual, interfacing and video-gaming [27]. They have also been used inside vehicles in different applications, like passenger or driver detection and vehicle interfacing [33].

2.1.3. Continuous Wave Frequency Modulated (FMCW) Approach

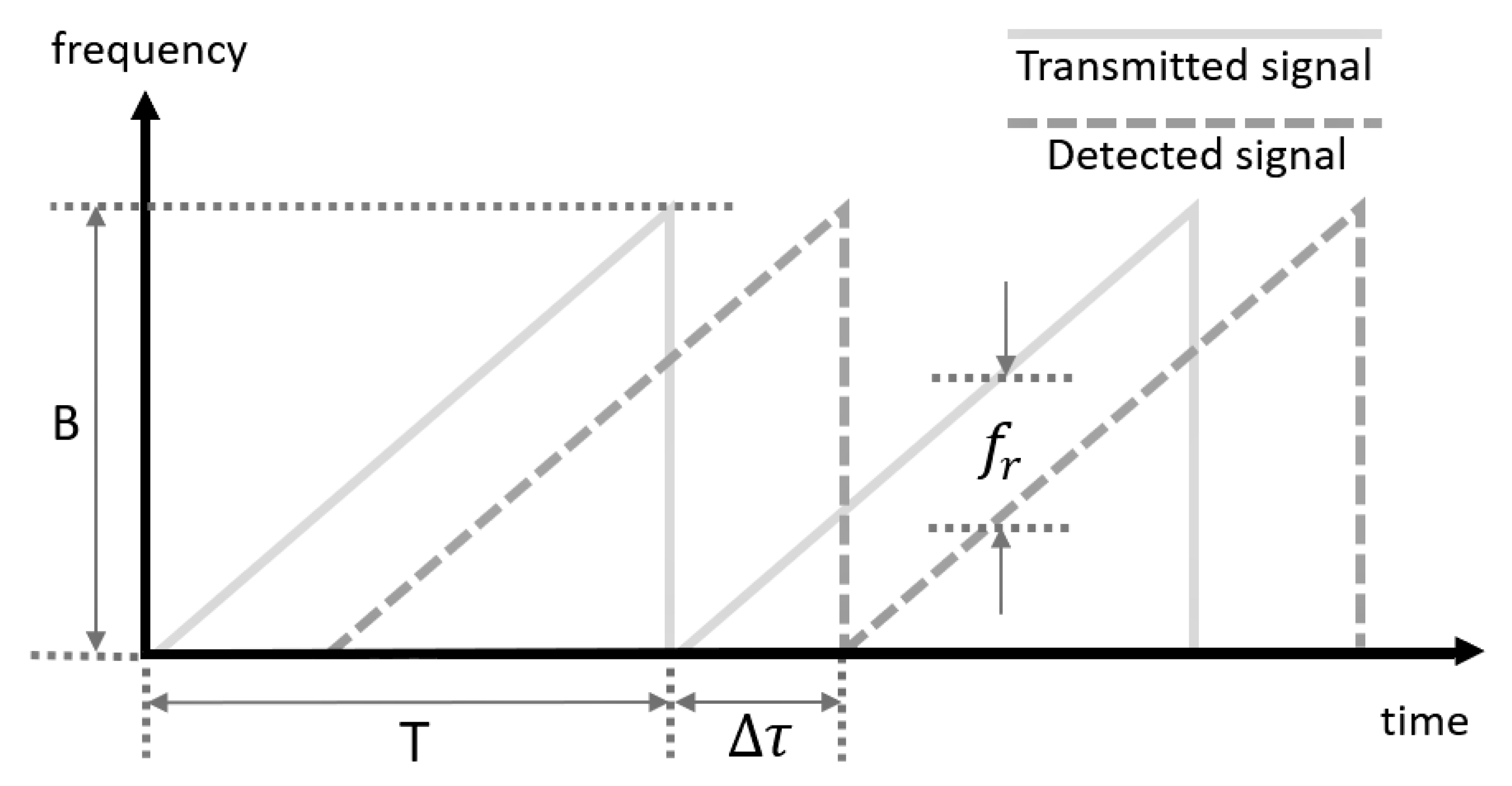

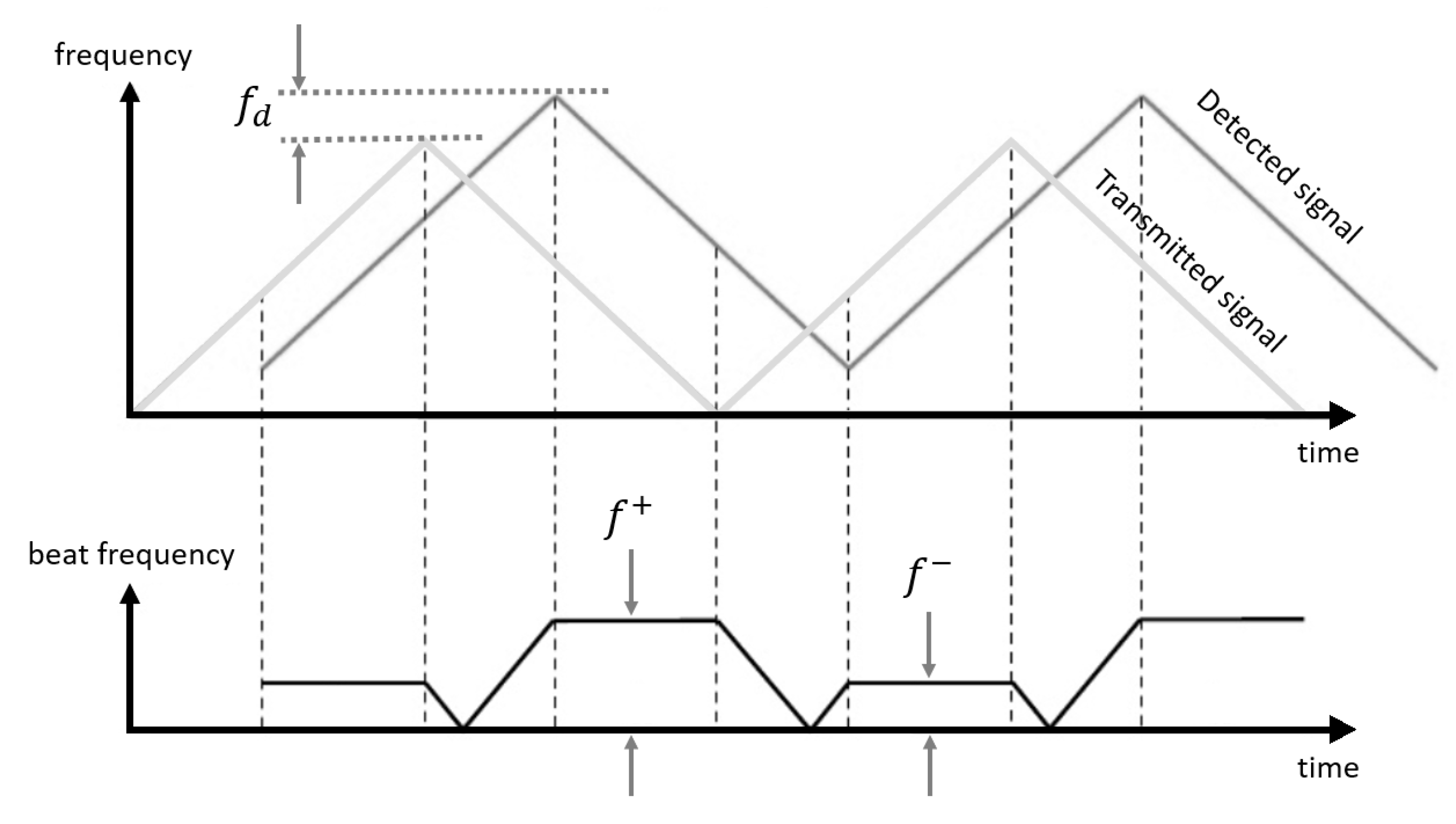

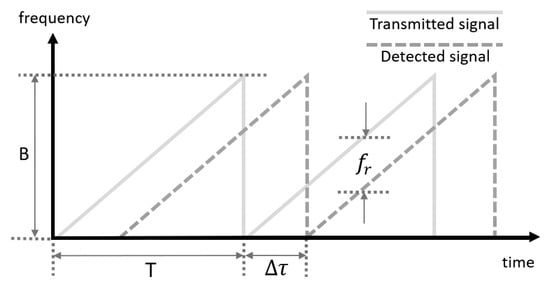

In the FMCW approach, the instantaneous optical frequency of the emitted light is periodically shifted, usually by varying the power applied to the source [34]. The reflected signal is mixed with the emitted source, creating a beat frequency that is a measure of the probed distance [35]. The source is normally a diode laser to enable coherent detection. The signal is sent to the target, and the reflected signal that arrives at the receiver, after a travel time $t_{oF}$, is mixed with a reference signal built from the emitter output. For a static target, the delay between the collected light and the reference causes a constant frequency difference $f_r$, or beat frequency, between the mixed beams. If the instantaneous frequency varies under a linear law, $f_r$ is directly proportional to $t_{oF}$ and hence proportional to the target range too [26,36,37], following:

$$f_r = \frac{B}{T}\,\Delta\tau = \frac{B}{T}\,\frac{2R}{c} \tag{3}$$

where B is the bandwidth of the frequency sweep, T denotes the period of the ramp, and $\Delta\tau$ equals the total travel time $t_{oF}$. Figure 3 depicts all these parameters.

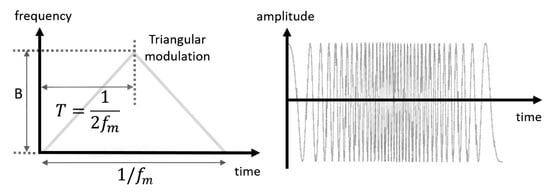

Figure 3.

Frequency modulation and detection in the frequency-modulated continuous-wave (FMCW) method: main parameters involved.

In practice, the frequency difference between the outgoing and incoming components is translated into a periodic phase difference between them, which causes an alternating constructive and destructive interference pattern at the frequency $f_r$, i.e., a beat signal at frequency $f_r$. By using an FFT to transform the beat signal from the time domain to the frequency domain, the peak at the beat frequency is easily translated into distance.
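The chain from beat signal to distance can be sketched in a few lines. The example below is a simplified illustration and not a description of any particular sensor: it synthesizes an ideal, noiseless beat signal for a static target under a linear ramp, locates the spectral peak, and converts it to range through the proportionality between beat frequency and round-trip delay. A plain discrete Fourier transform stands in for the FFT so that the block needs only the standard library, and all parameter values (bandwidth, ramp period, sample rate) are hypothetical.

```python
import cmath
import math

# Speed of light in vacuum (m/s).
C = 299_792_458.0


def beat_signal(target_range_m, bandwidth_hz, ramp_period_s,
                sample_rate_hz, n_samples):
    """Ideal beat signal of a static target for a linear frequency ramp."""
    beat_hz = (bandwidth_hz / ramp_period_s) * (2.0 * target_range_m / C)
    return [math.cos(2.0 * math.pi * beat_hz * n / sample_rate_hz)
            for n in range(n_samples)]


def peak_frequency(samples, sample_rate_hz):
    """Frequency of the strongest spectral bin (DC excluded).

    Uses a direct DFT, O(N^2); a real system would use an FFT.
    """
    n = len(samples)
    best_bin, best_mag = 1, 0.0
    for k in range(1, n // 2):
        acc = sum(x * cmath.exp(-2j * math.pi * k * i / n)
                  for i, x in enumerate(samples))
        if abs(acc) > best_mag:
            best_bin, best_mag = k, abs(acc)
    return best_bin * sample_rate_hz / n


def range_from_beat(beat_hz, bandwidth_hz, ramp_period_s):
    """Invert the linear relation between beat frequency and range."""
    return C * ramp_period_s * beat_hz / (2.0 * bandwidth_hz)


if __name__ == "__main__":
    B, T, FS, N = 1e9, 10e-6, 25.6e6, 256   # hypothetical sweep and sampling
    signal = beat_signal(15.0, B, T, FS, N)
    f_peak = peak_frequency(signal, FS)
    print(f"peak at {f_peak / 1e6:.2f} MHz -> {range_from_beat(f_peak, B, T):.2f} m")
```

Because the peak is read from discrete frequency bins, the recovered range is quantized; with these assumed values one bin corresponds to about 0.15 m, so the estimate for a 15 m target lands within half a bin of the true distance.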

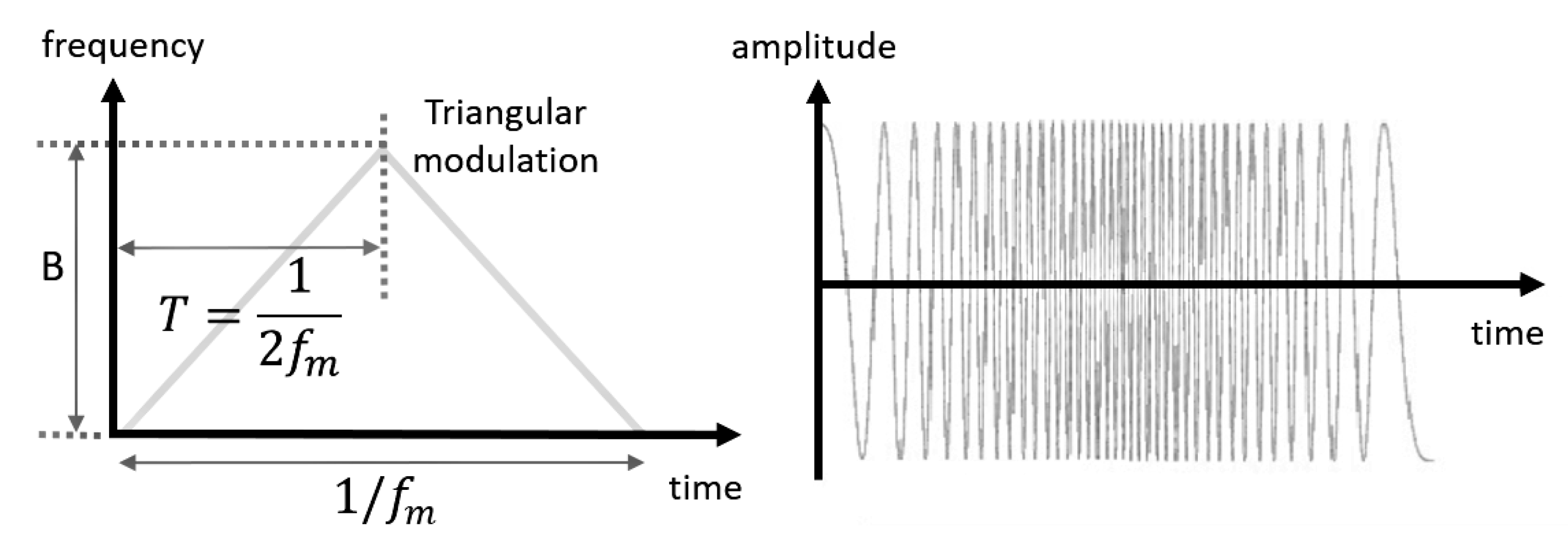

Usually, a triangular frequency modulation is used (Figure 4) rather than a ramp. The modulation frequency in this case is denoted as $f_m$. Hence, the rate of frequency change can be expressed as $2 f_m B$ [38], and the resulting beat frequency is given by:

$$f_r = \frac{4 R f_m B}{c} \tag{4}$$

Figure 4.

Triangular frequency modulation with time and linked amplitude signal change in the time domain.

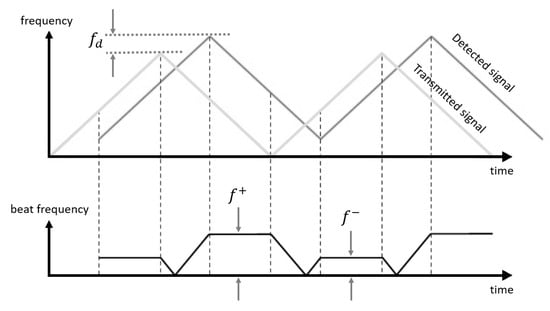

This type of detection has the very relevant advantage of measuring not only range but also, using the same signal, the velocity of the target and its sign. If the target moves, the beat frequency obtained will be related not only to R, but also to the velocity $v_r$ of the target relative to the sensor. The velocity contribution is taken into account by the Doppler frequency $f_d$, which shifts the beat frequency up or down (Figure 5). Thus, two beat frequency components appear, in which $f_d$ is superimposed on $f_r$, following [39]:

$$f^{+} = f_r + f_d, \qquad f^{-} = f_r - f_d \tag{5}$$

Figure 5.

Triangular modulation frequency signal and beat frequency for a moving target.

In this case, range can be obtained from:

$$R = \frac{c}{8 f_m B}\left(f^{+} + f^{-}\right) \tag{6}$$

while relative velocity and its direction can also be calculated using the Doppler effect:

$$v_r = \frac{\lambda}{2}\,f_d = \frac{\lambda}{4}\left(f^{+} - f^{-}\right) \tag{7}$$

with $f_m$ and B the modulation frequency and bandwidth of the triangular sweep and $\lambda$ the wavelength of the emitted light, showing the ability of FMCW to simultaneously measure range and relative velocity using the properties of the Fourier spectra [38,40].
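The separation of range and velocity can be illustrated numerically. The sketch below assumes the standard triangular-sweep relations: the two measured beat frequencies are the range-induced beat frequency plus and minus the Doppler shift, the range term equals 4·R·f_m·B/c, and the Doppler shift equals 2·v/λ. The symbol names, the sign convention for the velocity and the example parameters are assumptions made for illustration.

```python
# Speed of light in vacuum (m/s).
C = 299_792_458.0


def beat_frequencies(target_range_m, radial_velocity_ms,
                     mod_freq_hz, bandwidth_hz, wavelength_m):
    """Forward model: the two beat frequencies of a triangular sweep.

    Assumed convention: a positive velocity gives a positive Doppler shift.
    """
    f_range = 4.0 * target_range_m * mod_freq_hz * bandwidth_hz / C
    f_doppler = 2.0 * radial_velocity_ms / wavelength_m
    return f_range + f_doppler, f_range - f_doppler


def range_and_velocity(f_plus_hz, f_minus_hz,
                       mod_freq_hz, bandwidth_hz, wavelength_m):
    """Inverse model: the half-sum isolates range, the half-difference velocity."""
    f_range = 0.5 * (f_plus_hz + f_minus_hz)
    f_doppler = 0.5 * (f_plus_hz - f_minus_hz)
    target_range = C * f_range / (4.0 * mod_freq_hz * bandwidth_hz)
    radial_velocity = 0.5 * wavelength_m * f_doppler
    return target_range, radial_velocity


if __name__ == "__main__":
    # Hypothetical sweep: 10 kHz triangular modulation, 10 GHz bandwidth, 1550 nm.
    params = dict(mod_freq_hz=1e4, bandwidth_hz=1e10, wavelength_m=1550e-9)
    f_plus, f_minus = beat_frequencies(100.0, 20.0, **params)
    print(range_and_velocity(f_plus, f_minus, **params))
```

The sign of the recovered velocity encodes the direction of motion under the chosen convention, which is what allows the technique to report both speed and direction from a single sweep.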

FMCW takes advantage of the large frequency bandwidth available in the optical domain and exploits it to improve the performance of the range sensor. The resolution of the technique is now related to the total bandwidth of the signal. Since the ramp period can be chosen arbitrarily, the FMCW method can determine $t_{oF}$ values in the picosecond range, equivalent to millimeter or even submillimeter distances, by performing frequency measurements in the kilohertz regime, which is perfectly feasible. Resolutions of 150 μm have been reported, which is an improvement of two orders of magnitude relative to the other approaches. Unfortunately, a perfectly linear or triangular optical frequency sweep cannot in general be realized by a linear modulation of the control current and, in addition, the frequency-current curve is in general nonlinear, especially close to the moment of slope change. As a consequence, deviations from the linear ramp usually occur, which, in turn, bring on relevant variations in $f_r$. Moreover, the range resolution depends on the measurement accuracy of $f_r$ and also on the accuracy with which the modulation slope is controlled or known [17,26,36].

The FMCW method is fundamentally different from the two previous approaches because it uses a coherent (homodyne) detection scheme in the Fourier domain, rather than the incoherent intensity detection schemes described until now for time counting or phase measurement approaches [17]. FMCW has been shown to be useful in outdoor environments and to offer improved resolution and long-range values relative to the pulsed and AMCW approaches. Its main benefit in autonomous vehicle applications is its ability to sense the speed of the target and its direction together with range. However, its coherent detection scheme poses potential problems related to practical issues like coherence length (interference in principle requires the beam to stay within the coherence length over the full round trip to the target), availability of durable tunable lasers with good temperature stability, external environmental conditions, accuracy of the modulation electronics, or linearity of the intensity-voltage curve of the laser, which require advanced signal processing. Although it is not the principal approach in lidar imaging systems for autonomous vehicles, some teams are currently implementing lidar solutions based on FMCW in commercial systems due to its differential advantages, and it is clearly gaining presence compared to the other approaches we described.

2.1.4. Summary

The three main measurement principles share the goal of measuring TOF but have very different capabilities and applications (see Table 1). The pulsed approach is based on an incoherent measurement principle, as it relies on the detection of intensity, and can show resolutions at the cm level while remaining operative under strong solar background and with large ambiguity distances. Its main advantage is the simplicity of the setup based on direct detection of intensity, which is stable and robust and for which general-purpose components are available. Its main disadvantage is the limit in range due to low SNR at long ranges and the emission limit fixed by eye-safety levels. AMCW methods were commercialized several years ago and are exceptionally well-developed and efficient in indoor environments. They have stable electronics architectures working in parallel in every pixel and are based on well-known CMOS technology. Their resolution is equivalent to that of pulsed lidars, but they face the difficulty of determining the reliability of phase measurements from low-SNR signals. Further, this SNR limitation constrains their applications outdoors. Finally, the FMCW approach presents relevant advantages which appear to place it as the future natural choice for autonomous vehicles, as its coherent detection scheme improves the resolution of range measurements by between one and two orders of magnitude when compared to the other methods, and the use of FFT signal processing makes it possible to measure the speed of the target simultaneously. Despite these advantages, it is a coherent system that needs to be significantly stable in its working conditions to be reliable, and aspects like temperature drift or linearity of the electronics become important, which is significant for an application that demands robustness and needs units performing stably for several years.

Table 1.

Summary of working principles.

2.2. Imaging Strategies

Now that the three main measurement strategies used in lidar imaging systems have been presented, it is worth noting that all of them have been described as pointwise measurements. However, lidar images of interest are always 3D point clouds, which achieve accurate representations of fields of view as large as 360° around the object of interest. A number of strategies have been proposed in order to build lidar images out of the repetition of point measurements, but they can essentially be grouped into three different families: scanning components of different types, detector arrays, and mixed approaches. Scanning systems sweep a large number of angular positions of the field of view of interest using some beam steering component, while detector arrays exploit the capabilities of electronic integration of detectors to create an array of receiving elements, each one capturing illumination from a separate angular section of the scene to deliver a value for each individual detector. Some of the strategies have also been successfully combined with each other, depending on the measurement approach or requirements, and are briefly discussed in a section devoted to mixed approaches.

2.2.1. Scanners

Currently, in the automotive lidar market, most of the proposed commercial systems rely on scanners of different types [41,42,43,44]. In the most general approach, the scanner element is used to re-position the laser spot on the target by modifying the angular direction of the outgoing beam, in order to generate a point cloud of the scene. This poses questions related to the scanning method, its precision, its speed, its field of view, and its effect on the beam footprint on the target, which directly affects the spatial resolution of the images [45].
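To make the link between beam steering and the resulting point cloud concrete, the sketch below converts a list of (range, azimuth, elevation) measurements into Cartesian points. The axis convention (x forward, y to the left, z up, azimuth measured in the horizontal plane and elevation from it) and the function names are assumptions chosen for illustration; actual sensors define their own coordinate frames.

```python
import math


def to_cartesian(range_m, azimuth_rad, elevation_rad):
    """Convert one range measurement and its beam direction to (x, y, z)."""
    horizontal = range_m * math.cos(elevation_rad)
    return (horizontal * math.cos(azimuth_rad),
            horizontal * math.sin(azimuth_rad),
            range_m * math.sin(elevation_rad))


def build_point_cloud(measurements):
    """Map an iterable of (range, azimuth, elevation) tuples to 3D points."""
    return [to_cartesian(r, az, el) for r, az, el in measurements]


if __name__ == "__main__":
    # Hypothetical scan: three beam directions at 10 m range.
    scan = [(10.0, 0.0, 0.0),
            (10.0, math.radians(90.0), 0.0),
            (10.0, 0.0, math.radians(30.0))]
    for point in build_point_cloud(scan):
        print(tuple(round(coord, 3) for coord in point))
```

Any error in the reported scan angles maps directly into a lateral position error that grows with range, which is why the precision of the scanning method matters for the spatial resolution of the images.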

A large number of scanning strategies have been proposed for lidar and laser scanning, and are currently in use in commercial products. These include, e.g., galvanometric mirrors [46] or Risley prisms [47]. When it comes to their use in autonomous vehicles, three main categories may be found: mechanical scanners, which use rotating mirrors and galvanometric or piezoelectric positioning of mirrors and prisms to perform the scanning; micro-electromechanical system (MEMS) scanners, which use micromirrors driven by electromagnetic or piezoelectric actuators to scan the field of view, often supported by expanding optics; and optical phased arrays (OPAs), which point the beam based on a multibeam interference principle from an array of optical antennas. Direct comparison of scanning strategies for particular detector configurations has been proposed [42]. Although we will focus on these three large families, it is worth noting that other approaches have been proposed based on alternative working principles such as liquid crystal waveguides [48], electrowetting [49], groups of microlens arrays [50] and even holographic diffraction gratings [51].

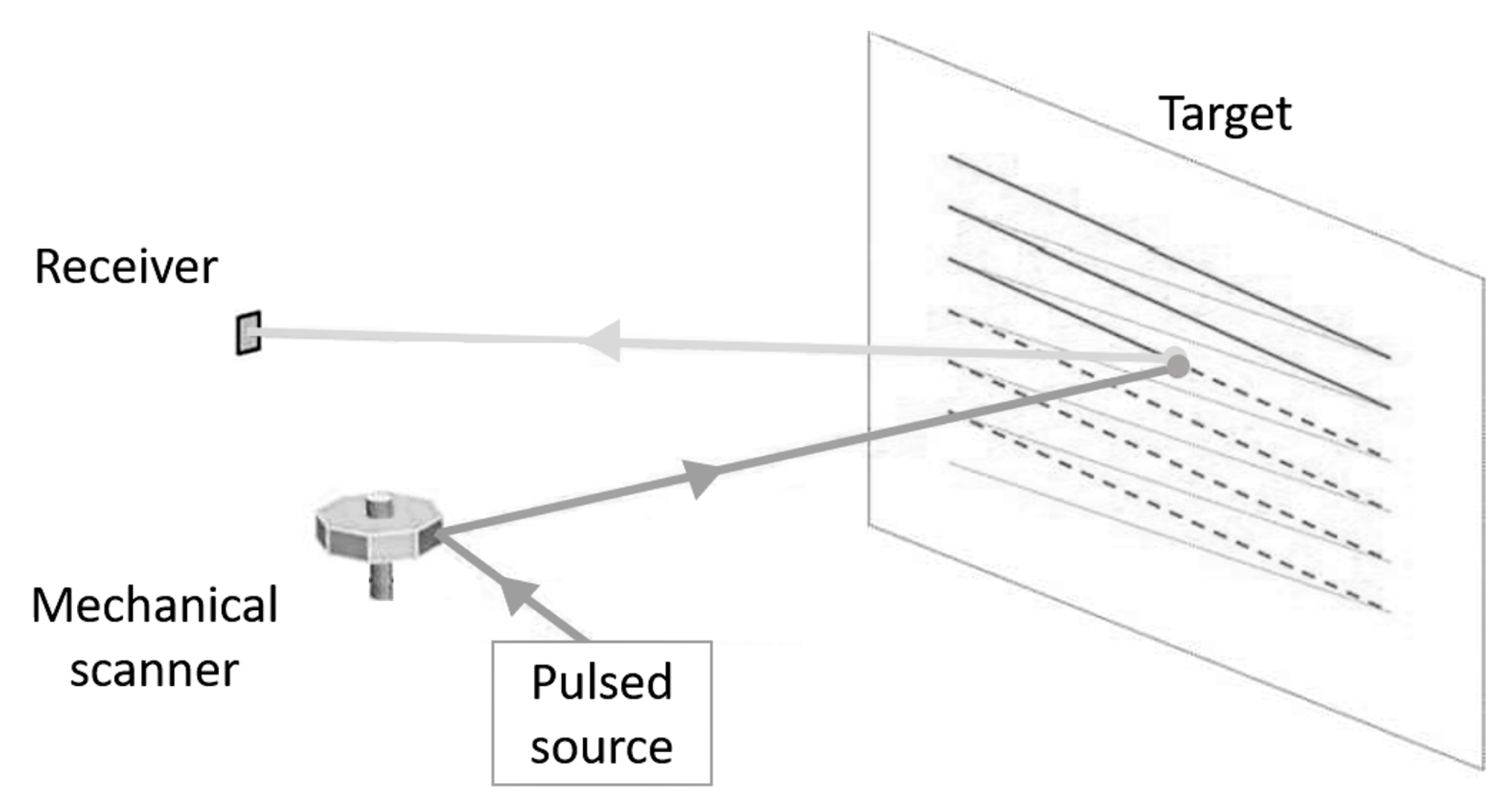

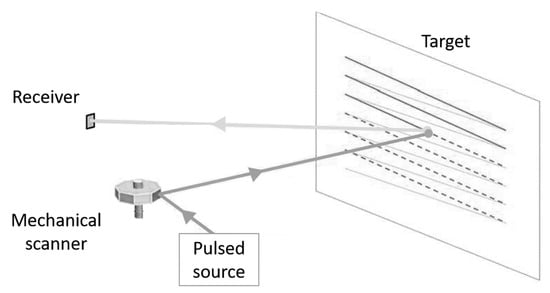

(a) Mechanical scanners

Lidar imaging systems based on mechanical scanners use high-grade optics and some kind of rotating or galvanometric assembly, usually with mirrors or prisms attached to mechanical actuators, to cover a wide field of view. In the case of lidar, units with sources and detectors jointly rotate around a single axis. This may be done by sequentially pointing the beam across the target in 2D, as depicted in Figure 6, or by rotating the optical configuration around a mechanical axis, in which case a number of detectors may be placed in parallel along the spinning axis. In this latter case 360 deg FOVs of the sensor may be achieved, covering the whole surroundings of the vehicle. The mirrors or prisms used may be either rotating or oscillating, or may be polygon mirrors. This is the most popular scanning solution for many commercial lidar sensors, as it provides straight and parallel scan lines with a uniform scanning speed over a vast FOV [52,53], or angularly equispaced concentric data lines. In rotating mirrors, the second dimension is usually obtained by adding more sources and/or detectors to measure different angular directions simultaneously. Further, the optical arrangement for rotating lidar units may be simple and extremely efficient in order to collect faint diffuse light and thus achieve very long ranges as there is some margin in the size of the collection optics, especially when compared to other approaches.

Figure 6.

Schematics of a typical light detection and ranging (lidar) imaging system based on mechanical scanning.

Lidars with mechanical scanners work almost always with pulsed sources and are usually large and bulky. In the case of rotating mirrors, they can achieve high spatial resolution in the direction of turn (usually horizontal) although they become limited in the orthogonal direction (usually vertical), where the density of the point cloud is limited by the number of available sources and detectors measuring in parallel. Further, they have rather high power consumption and, due to the large inertia of the rotating module, the frame rate is limited (frame rates go from below 1 Hz to about 100 Hz). They are, however, very efficient in long-range applications (polygon mirrors combined with coaxial optical systems easily reach distances well beyond 1 km). Despite the current prevalence of this type of scanner, the setup presents numerous disadvantages in a final consumer unit, in particular the question of reliability and maintenance of the mechanisms, the mass and inertia of the scanning unit which limits the scanning speed, the lack of flexibility of the scanning patterns, and the issue of being misalignment-prone under shock and vibration, beyond being power-hungry, hardly scalable, bulky and expensive. Although several improvements are being introduced in these systems [43], there is quite general agreement that mechanically scanning lidars need to move towards a solid-state version. However, they currently provide one of the performances closest to that desired for the final long-range lidar unit, and they are commercially available from different vendors using different principles. This makes them the sensor of choice for autonomous vehicle research and development, such as algorithm training [54], autonomous cars [55], or robotaxis [56]. Their success has also brought a number of improvements in geometry, size and spatial resolution to make them more competitive as a final lidar imaging system in automotive.

(b) Microelectromechanical scanners

Microelectromechanical systems (MEMS)-based lidar scanners enable programmable control of the laser beam position using tiny mirrors only a few mm in diameter, whose tilt angle varies when a stimulus is applied, so the angular direction of the incident beam is modified and the light beam is directed to a specific point in the scene. This may be done bidimensionally (in 2D) so a complete area of the target is scanned. Various actuation technologies have been developed, including electrostatic, magnetic, thermal and piezoelectric. Depending on the application and the required performance (regarding scanning angle, scanning speed, power dissipation or packaging compatibility), one or another technology is chosen. The most common stimulus in lidar applications based on MEMS scanners is voltage; the mirrors are steered by drive voltages generated from a digital representation of the scan pattern stored in a memory. The digital numbers are then mapped to analog voltages with a digital-to-analog converter. However, electromagnetic and piezoelectric actuation have also been successfully reported for lidar applications [52,57,58,59].
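The mapping from a stored scan pattern to converter codes can be sketched as follows. This is only an illustration under a strong simplifying assumption: the mirror tilt is taken to be linear in the drive voltage, which is not generally true for real actuators, and the function name, the 12-bit resolution and the angle range are all hypothetical.

```python
def angles_to_dac_codes(angles_deg, max_angle_deg, bits=12):
    """Map mirror angles to integer DAC codes, assuming a linear response.

    -max_angle_deg maps to code 0 and +max_angle_deg to the full-scale code.
    Angles outside the range are clipped.
    """
    full_scale = (1 << bits) - 1
    codes = []
    for angle in angles_deg:
        clipped = max(-max_angle_deg, min(max_angle_deg, angle))
        fraction = (clipped + max_angle_deg) / (2 * max_angle_deg)
        codes.append(round(fraction * full_scale))
    return codes

# Hypothetical scan pattern; the last entry exceeds the range and is clipped.
scan_pattern_deg = [-10.0, 5.0, 10.0, 20.0]
dac_codes = angles_to_dac_codes(scan_pattern_deg, max_angle_deg=10.0)
```

In a real unit the lookup table would typically absorb the actuator nonlinearity, so that the stored numbers already compensate for it.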

Thus, MEMS scanners substitute macroscopic mechanical-scanning hardware with an electromechanical equivalent reduced in size. A reduced FOV is obtained compared to the previously described rotary scanners because they have no rotating mechanical components. However, using multiple channels and fusing their data makes it possible to create FOVs and point cloud densities able to rival or improve on mechanical lidar scanners [60,61]. Further, MEMS scanners typically have resonance frequencies well above those of the vehicle, which benefits maintenance and robustness.

MEMS scanning mirrors are categorized into two classes according to their operating mechanical mode: resonant and non-resonant. On the one hand, non-resonant MEMS mirrors (also called quasi-static MEMS mirrors) provide a large degree of freedom in the trajectory design. Although a rather complex controller is required to keep the scan quality, desirable scanning trajectories with constant scan speed at large scan ranges can be generated by an appropriate controller design. Unfortunately, one key specification, the scanning angle, is quite limited in this family compared to resonant MEMS mirrors. Typically, additional optomechanics are required in order to enlarge the scan angle, adding optical aberrations like distortion to the scanning angle. On the other hand, resonant MEMS mirrors provide a large scan angle at a high frequency and a relatively simple control design. However, the scan trajectory is sinusoidal, i.e., the scan speed is not uniform. In addition, their design needs to strike a balance between scan angle, resonance frequency, and mirror size to reach the desired resolution, while still keeping the mirror optically flat to avoid additional image distortions which may affect the accuracy of the scan pattern [52,62,63]. Laser power handling at the surface of the mirror is also an issue that needs to be carefully taken into account to avoid mirror damage, especially in long-range units.
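The non-uniform scan speed of a resonant mirror follows directly from its sinusoidal trajectory. The sketch below assumes an ideal mirror with angle θ(t) = A·sin(2πft); the amplitude and frequency are hypothetical values chosen only for illustration.

```python
import math

def resonant_angle_deg(t_s, amplitude_deg, freq_hz):
    """Mirror angle of an ideal resonant scanner at time t_s."""
    return amplitude_deg * math.sin(2 * math.pi * freq_hz * t_s)

def resonant_speed_deg_per_s(t_s, amplitude_deg, freq_hz):
    """Angular speed, i.e., the time derivative of the sinusoidal angle."""
    return 2 * math.pi * freq_hz * amplitude_deg * math.cos(2 * math.pi * freq_hz * t_s)

AMPLITUDE_DEG = 15.0   # hypothetical mechanical half-angle
FREQ_HZ = 10_000.0     # hypothetical resonance frequency

speed_at_center = resonant_speed_deg_per_s(0.0, AMPLITUDE_DEG, FREQ_HZ)
speed_at_edge = resonant_speed_deg_per_s(1 / (4 * FREQ_HZ), AMPLITUDE_DEG, FREQ_HZ)
```

The speed is highest at the center of the sweep and vanishes at the turning points, so a laser fired at a constant rate samples the edges of the field of view more densely than the center unless the firing times are adjusted.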

A 2D laser spot projector can be implemented either with a single mirror with two oscillation axes or with two separate, orthogonal mirrors, each oscillating along one axis. Single-axis scanners are simpler to design and fabricate, and are also far more robust to vibration and shock; however, dual-axis scanners provide important optical and packaging advantages, essentially related to the simpler optical arrangement and to avoiding the accurate relative alignment that two single-axis mirrors require. One crucial difficulty with dual-axis scanners is the crosstalk between the two axes. With the increasing requirements of point cloud resolution, it becomes harder to keep the crosstalk at acceptable levels, which would be a reason to choose the bulkier and more complex two-mirror structure. The most common system architecture is raster scanning, where a low-frequency, linear vertical scan (quasi-static) is paired with an orthogonal high-frequency, resonant horizontal scan. For the raster scanner, the fast scanner will typically run at frequencies of some kHz (typically exceeding 10 kHz), and should provide a large scan angle [63,64].
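As a rough sketch of how the fast-axis frequency bounds the vertical sampling of a raster scan, the number of horizontal lines per frame can be estimated from the ratio between the fast-axis frequency and the frame rate. The function name and the numbers are hypothetical, and the estimate ignores any time lost in the vertical flyback.

```python
def lines_per_frame(fast_axis_hz, frame_rate_hz, bidirectional=True):
    """Estimate horizontal scan lines per frame for a raster scanner.

    Each period of the fast axis gives two sweeps (forth and back) when both
    directions are used for measuring, and one sweep otherwise.
    """
    sweeps_per_period = 2 if bidirectional else 1
    return int(sweeps_per_period * fast_axis_hz / frame_rate_hz)

# Hypothetical 10 kHz resonant axis and 25 Hz frame rate.
lines_both_ways = lines_per_frame(10_000, 25)
lines_one_way = lines_per_frame(10_000, 25, bidirectional=False)
```

Under these assumptions, raising the frame rate lowers the number of lines available per frame, which is one reason why high resonance frequencies are sought for the fast axis.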

A detailed overview of MEMS laser scanners is provided in [62], where the topics covered previously may be found expanded. Extended discussions on accuracy issues in MEMS mirrors may also be found in [63].

Due to their promising advantages (in particular being lightweight, compact and with low power consumption), MEMS-based scanners for lidar have received increasing interest for use in automotive applications. MEMS-based lidar imaging systems have in parallel shown the feasibility of the technology in different scenarios such as space applications and robotics. Currently, the automotive use of MEMS for lidar is under growing development by a number of companies [52,65,66,67,68], becoming one of the preferred solutions at present.

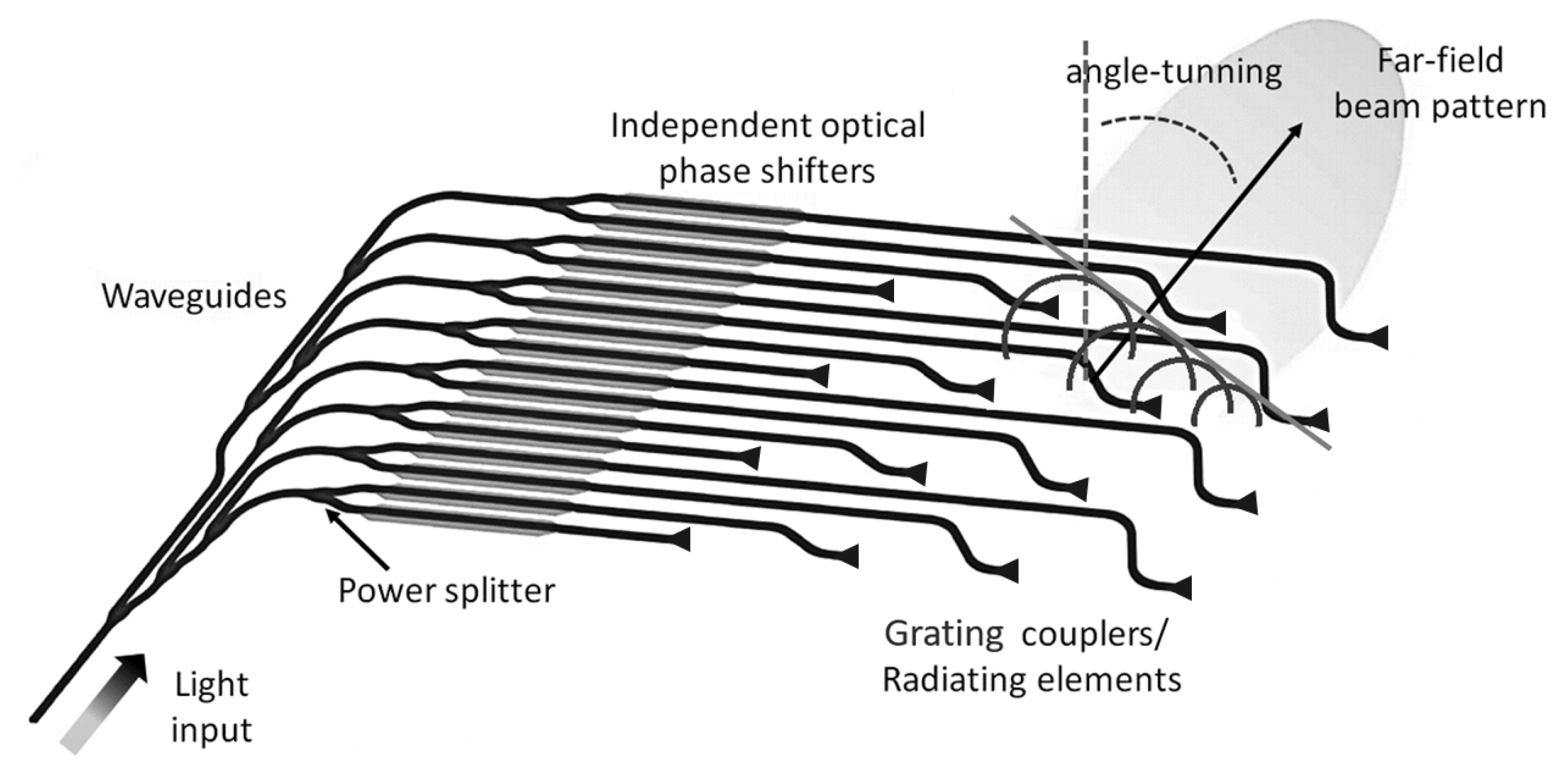

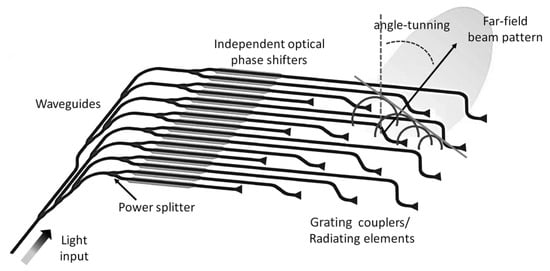

(c) Optical Phased Arrays

An optical phased array (OPA) is a novel type of solid-state device that enables us to steer the beam using a multiplicity of micro-structured waveguides. Its operating principle is equivalent to that of microwave phased arrays, where the beam direction is controlled by tuning the phase relationship between arrays of transmitter antennas. By aligning the phases of several coherent emitters, the emitted light interferes constructively in the far field at certain angles, which makes it possible to steer the beam (Figure 7). While phased arrays in radio were first explored more than a century ago, optical beam steering by phase modulation was first demonstrated in the late 1980s [69,70,71,72].

Figure 7.

Schematic diagram of the working principle of an optical phased array (OPA): emitted fields from each antenna interfere to steer a far-field pattern.

In an OPA device, an optical phase modulator controls the speed of light passing through the device. Regulating the speed of light enables control of the shape and orientation of the wavefront resulting from the combination of the emission from the synced waveguides. For instance, the top beam is not delayed, while the middle and bottom beams are delayed by increasing amounts at will. This phenomenon effectively allows the deflection of a light beam, steering it in different directions. OPAs can achieve very stable, rapid, and precise beam steering. Since there are no mechanical moving parts at all, they are robust and insensitive to external constraints such as acceleration, allowing extremely high scanning speeds (over 100 kHz) over large angles. Moreover, they are highly compact and can be integrated in a single chip. However, the insertion loss of the laser power in the OPA is still a drawback [4,73], as is their current ability to handle the large power densities required for long-range lidar imaging.
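The relation between the phase delays and the steering direction can be made concrete with the standard result for a uniform linear phased array: with emitter pitch d, wavelength λ and a constant phase step Δφ between adjacent emitters, the main lobe points at an angle θ with sin θ = λΔφ/(2πd). The sketch below applies this textbook formula; the pitch and wavelength are hypothetical values, and grating lobes and the element radiation pattern are ignored.

```python
import math

def opa_steering_angle_deg(phase_step_rad, pitch_m, wavelength_m):
    """Main-lobe direction of an ideal uniform linear phased array."""
    sin_theta = wavelength_m * phase_step_rad / (2 * math.pi * pitch_m)
    if abs(sin_theta) > 1:
        raise ValueError("phase step does not correspond to a propagating beam")
    return math.degrees(math.asin(sin_theta))

# Hypothetical array: 2 um emitter pitch operated at 1.55 um.
PITCH_M = 2e-6
WAVELENGTH_M = 1.55e-6

angle = opa_steering_angle_deg(math.pi / 2, PITCH_M, WAVELENGTH_M)
```

With these assumed values, a phase step of π/2 between neighbouring emitters steers the beam by roughly 11 deg, and reversing the sign of the phase step steers it to the opposite side.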

OPAs have gained interest in recent years as an alternative to traditional mechanical beam steering or MEMS-based techniques because they completely lack the inertia that limits the ability of moving-mirror systems to reach a large steering range at high speed. Another relevant advantage is that the steering elements may be integrated with an on-chip laser. As a developing technology with high potential, interest in OPAs for automotive lidar is growing in academia and industry, even though OPAs are still under test for long-range lidar. However, they are operative in some commercially available units targeting short and mid ranges [74]. Recently, sophisticated OPAs have been demonstrated with performance parameters that make them seem suitable for high-power lidar applications [71,75,76]. The combination of OPAs and FMCW detection has the theoretical potential to make a lidar system fully solid-state and on-chip, which makes it one of the best potential combinations for a lidar unit. An on-chip lidar fabricated using combined CMOS and photonics manufacturing, if industrialized, has huge potential regarding reliability and reduced cost. However, both technologies, and in particular OPAs, need further development from their current state.

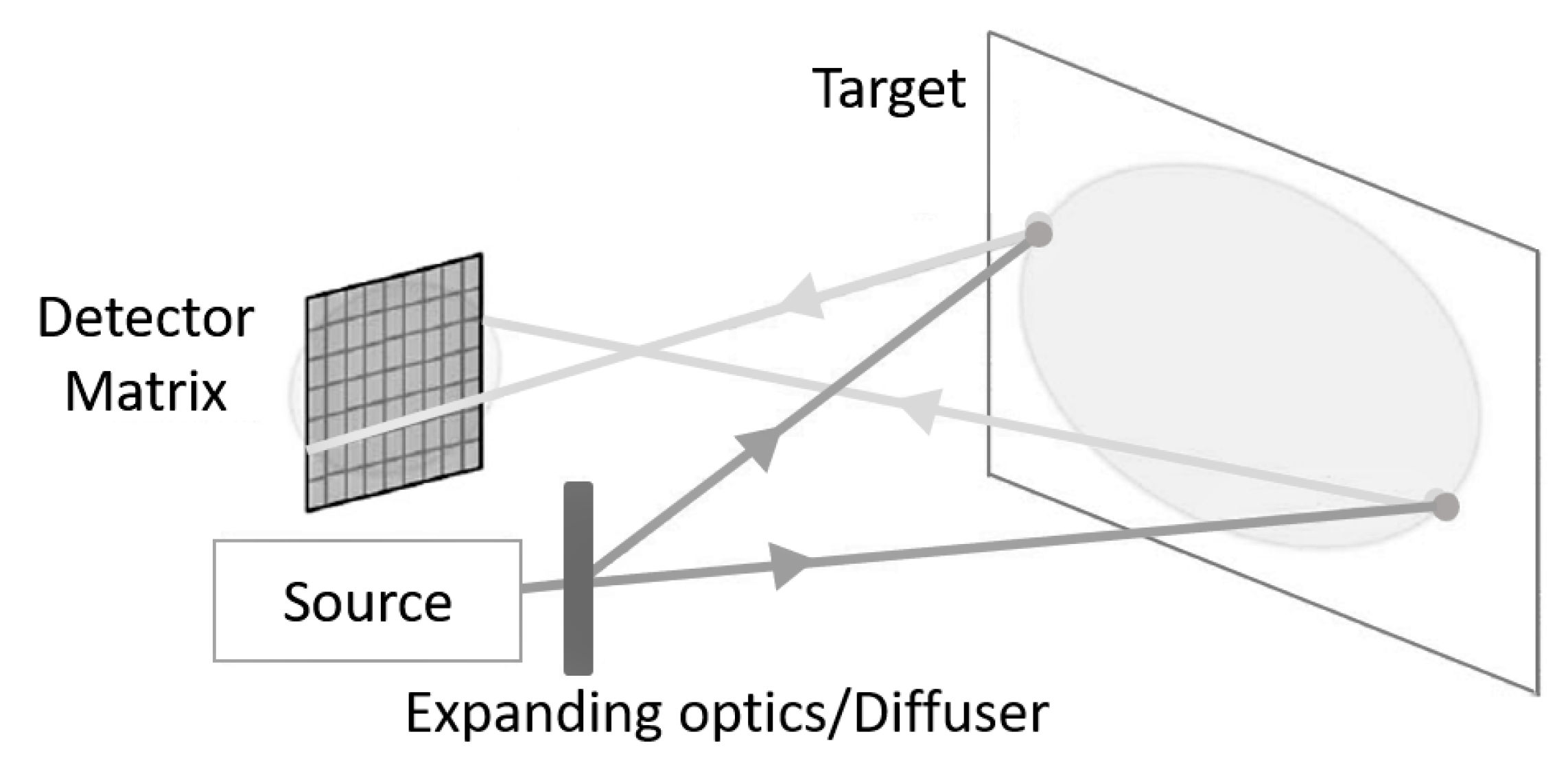

2.2.2. Detector Arrays

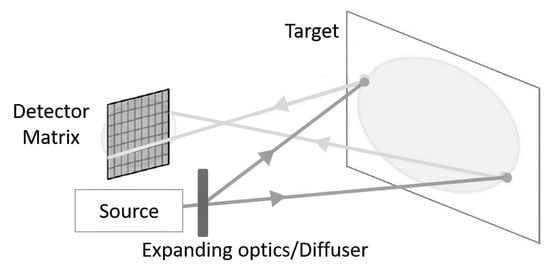

Due to the lack of popularity in the automotive market of scanning lidar approaches based on moving elements, alternative imaging methods have been proposed to overcome their limitations beyond MEMS scanners and OPAs. These scannerless techniques typically combine specialized illumination strategies with arrays of receivers. Transmitting optical elements illuminate a whole scene and a linear array (or matrix) of detectors receives the signals of separate angular subsections in parallel, so that range data of the target are obtained in a single shot (Figure 8), which makes real-time applications easy to manage. The illumination may be pulsed (flash imagers) or continuous (AMCW or FMCW lidars).

Figure 8.

Detector array-based lidar diagram.

With the exception of FMCW lidars, where coherent detection enables longer ranges, flash imagers or imagers based on AMCW principle (TOF cameras) are limited to medium to short ranges. In flash lidars the emitted light pulse is dispersed in all directions, significantly reducing the SNR, while in TOF cameras the phase ambiguity effect limits the measured ranges to a few meters. A brief description of the basic working principle is provided next.

(a) Flash imagers

One very successful architecture for lidar imaging systems in autonomous vehicles is flash lidar, which has progressed to a point where it is very close to commercial deployment in short and medium-range systems. In a flash lidar, imaging is obtained by flood-illuminating a target scene or a portion of a target scene using pulsed light. The backscattered light is collected by the receiver which is divided among multiple detectors. They respond to the schematics in Figure 8, considering a pulsed source and appropriate expanding optics to expand the beam to cover the scene of interest. Each detector captures the image distance, and sometimes the reflected intensity using the conventional time-of-flight principle. Hence, both the optical power imaged onto a 2-D array of detectors and the 3D point cloud are directly obtained with a single laser blast on the target [45,77,78].

In a flash-lidar system, the FOV of the array of detectors needs to closely match the illuminated region on the scene. This is usually achieved by using an appropriate divergent optical system which expands the laser beam to illuminate the full FOV. The beam divergence of the laser is usually arranged to optically match the receiver FOV, so that all the pixels in the array are illuminated at once. Each detector in the array is individually triggered by the arrival of a pulse return, and measures both its intensity and the range. Thus, the spatial resolution strongly depends on the resolution of the camera, that is, on the density with which the detectors have been packed, usually limited by CMOS technology patterning. The resolution in z usually depends on the pulse width and the accuracy of the time counting device. Typically, the spatial resolution obtained is not very high due to the size and cost of the focal plane array used. Values around some tens of kilopixels are usual [42], limited by cost and size of the detector (as they usually work at 1.55 μm, and are thus InGaAs based). Resolution in depth and angular resolution are comparable to scanning lidars, even better in some arrangements.
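The dependence of the depth resolution on the pulse width and on the time counting device can be sketched with the time-of-flight relation R = ct/2. The helper below converts a time interval into the corresponding range interval; the pulse width and timer bin are hypothetical values, and the sketch does not model timing techniques that can locate a single return more finely than the pulse width.

```python
SPEED_OF_LIGHT_M_S = 299_792_458.0

def range_span_m(time_s):
    """Range interval covered by a round-trip time interval (R = c * t / 2)."""
    return SPEED_OF_LIGHT_M_S * time_s / 2

# Hypothetical 5 ns pulse and 1 ns timer bin.
pulse_limited_m = range_span_m(5e-9)   # separation needed to resolve two returns
timer_limited_m = range_span_m(1e-9)   # range step of one timer count
```

With these assumed values, two returns closer than about 0.75 m overlap in time, while each timer count corresponds to about 0.15 m of range.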

Since the light intensity from the transmitter is dispersed over a relatively large angle to cover the full scene, and such a value is limited by eye-safety considerations, the measurement distance depends on the sensing configuration, including aspects like emitted power, sensor FOV, and detector type and sensitivity. It can vary from tens of meters to very long distances, although at present flash lidars are used in the 20 m to 150 m range in automotive. Range and spatial resolution of the measurement obviously depend on the FOV considered for the system, which limits the entrance pupil of the optics and the area over which the illuminator needs to spread enough power to be detected afterwards. The divergence of the illuminating beam and the backscattering at the target significantly reduce the amount of optical power available, so very high peak illumination power and very sensitive detectors are required in comparison to single-pixel scanners. Detectors are usually SPADs (single photon avalanche diodes), discussed in Section 3.2.2. This has kept present flash setups concentrated on medium- or short-range sensing in autonomous vehicles, where they take advantage of their lack of moving elements, and they have acceptable costs in mass production due to the simplicity of the setup [52].

Eye-safety considerations are significant in these flood illumination approaches [52]. However, the main disadvantage of flash imagers comes from the type of response of the detectors used, which are operated in Geiger mode and thus produce a flood of electrons at each detection. The presence of retro-reflectors in the real-world environment, designed to return most of the incident light towards its source with very little diffuse scattering so as to be visible at night when illuminated by car headlamps, is a significant problem for these cameras. On roads and highways, for instance, retro-reflectors are routinely used in traffic signs and license plates. In practice, retro-reflectors flood the SPAD detector with photons, saturating it and blinding the entire sensor for some frames, rendering it useless. Some schemes based on interference have been proposed to avoid such problems [79]. Issues related to mutual interference of adjacent lidars, where one lidar detects the illumination pattern of the other, are also expected to be a hard problem to solve in flash imagers. On the positive side, since flash lidars capture the entire scene in a single image, the data capture rate can be very fast, so the method is very resilient to vibration effects and movement artifacts, which otherwise could distort the image. Hence, flash lidars have proven to be useful in specific applications such as tactical military imaging scenarios where both the sensor platform and the target move during the image capture, a situation also common in vehicles. Other advantages include the elimination of scanning optics and moving elements and the potential for creating a miniaturized system [80,81]. As a result, systems based on flash lidars are already being commercialized in the automotive market [82].

(b) AMCW cameras

A second family of lidar imaging systems that use detector arrays are the TOF cameras based on the AMCW measuring principle. As described in Section 2.1.2, these devices modulate the intensity of the source and then measure the phase difference between the emitted and the detected signal at each pixel of the detector. This is done by sampling the returned signal at least four times. Detectors for these cameras are manufactured using standard CMOS technology, so they are small and low-cost, based on well-known technology and principles, and capable of short-distance measurements (from a few centimeters to several meters) before they run into ambiguity issues due to the periodicity of the modulation. Typical AMCW lidars with a range of 10 m may be modulated at around 15 MHz, thus requiring the signal at each pixel to be sampled at around 60 MHz. Most commercially available TOF cameras operate by modulating the light intensity in the near-infrared (NIR) and use arrays of detectors where each pixel or group of pixels incorporates its own phase meter electronics. In practice, this ties the maximum spatial resolution of the scenes to the available capabilities of lithographic patterning, with most cameras limited to some tens of thousands of points per image. It is a limit comparable to that of the flash array detectors just mentioned, also due to manufacturing limitations. However, TOF cameras usually operate in the NIR region, so their detectors are based on silicon, while flash imagers more often work at 1.55 μm for eye-safety considerations. The AMCW strategy is less suitable for outdoor purposes due to the effects of background light on SNR and the number of digitization levels needed to reliably measure the phase of the signal. However, they have been widely used indoors in numerous other fields such as robotics, computer vision, and home entertainment [83]. In automobiles, they have been proposed for occupant monitoring in the car.
A detailed overview of current commercial TOF cameras is provided in [13].
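The figures quoted above can be checked with a short sketch of the AMCW principle. It computes the unambiguous range c/(2f) for a given modulation frequency and recovers the distance from four samples of the returned signal taken a quarter of a period apart. The sample model (an offset plus a cosine, free of noise) and all function names are assumptions made for this illustration; sign and ordering conventions for the four samples vary between implementations.

```python
import math

SPEED_OF_LIGHT_M_S = 299_792_458.0

def unambiguous_range_m(mod_freq_hz):
    """Largest distance measurable before the phase wraps around."""
    return SPEED_OF_LIGHT_M_S / (2 * mod_freq_hz)

def four_bucket_samples(distance_m, mod_freq_hz, amplitude=1.0, offset=2.0):
    """Ideal samples s_k = offset + amplitude * cos(phase - k * pi / 2)."""
    phase = 4 * math.pi * mod_freq_hz * distance_m / SPEED_OF_LIGHT_M_S
    return [offset + amplitude * math.cos(phase - k * math.pi / 2) for k in range(4)]

def distance_from_samples(samples, mod_freq_hz):
    """Recover the (wrapped) distance from four quarter-period samples."""
    s0, s1, s2, s3 = samples
    phase = math.atan2(s1 - s3, s0 - s2) % (2 * math.pi)
    return SPEED_OF_LIGHT_M_S * phase / (4 * math.pi * mod_freq_hz)

MOD_FREQ_HZ = 15e6
max_range_m = unambiguous_range_m(MOD_FREQ_HZ)          # close to 10 m
sample_rate_hz = 4 * MOD_FREQ_HZ                        # 60 MHz for four samples
near_m = distance_from_samples(four_bucket_samples(4.0, MOD_FREQ_HZ), MOD_FREQ_HZ)
aliased_m = distance_from_samples(four_bucket_samples(14.0, MOD_FREQ_HZ), MOD_FREQ_HZ)
```

A target at 4 m is recovered correctly, whereas a target at 14 m lies beyond the unambiguous range and is reported at about 4 m, which illustrates the ambiguity caused by the periodicity of the modulation.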

2.2.3. Mixed Approaches

Some successful proposals of lidar imagers have mixed the two imaging modalities presented above, that is, they have combined some scanning approach with some multiple detector arrangement. The cylindrical geometry enabled by a single rotating axis combined with a vertical array of emitters and detectors has been very successful [84] in parallelizing the conventional point scanning approach both in emission and detection. These so-called spinning lidars increase data and frame rate while keeping a narrow field of view for each detector, which makes them very efficient energetically and, thus, able to reach long distances. A comparable approach has also been developed for flash lidars with an array of detectors and multiple beams mounted on a rotating axis [85]. These spinning approaches obviously demand line-shaped illumination of the scene. They may enable 360 deg vision of the FOV, unlike MEMS or flash approaches, which only retrieve the image within the predefined FOV of the unit.
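The gain in data rate obtained by parallelizing channels along the spinning axis can be sketched with a simple count of measurements per second. The function name and the configuration (64 channels, 0.2 deg azimuth step, 10 turns per second) are hypothetical values for illustration and do not describe any specific product.

```python
def spinning_point_rate(channels, azimuth_step_deg, rotation_hz):
    """Points per second of a spinning lidar firing all channels at each step."""
    firings_per_turn = round(360.0 / azimuth_step_deg)
    return channels * firings_per_turn * rotation_hz

# Hypothetical unit: 64 vertical channels, 0.2 deg step, 10 rotations per second.
points_per_second = spinning_point_rate(64, 0.2, 10)
single_channel_rate = spinning_point_rate(1, 0.2, 10)
```

Under these assumptions the point rate scales linearly with the number of channels, while the vertical density of the cloud remains fixed by the number of emitter and detector pairs.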

Another interesting and successful mixed approach has been to use 1D MEMS mirrors for scanning a projected line onto the target, which is then recovered by either cylindrical optics on a 2D detector array, or onto an array of 1D detectors [52]. This method significantly reduces the size and weight of the unit while enabling large point rates, and provides very small and efficient sensors without macroscopic moving elements.

2.2.4. Summary

Single-point measurement strategies used for lidar need imaging strategies to deliver the desired 3D maps of the surroundings of the vehicle. We divided them into scanners, detector arrays and mixed approaches. A summary of the key points described is provided in Table 2.

Table 2.

Summary of imaging strategies.

While mechanical scanners are now prevalent in the industry, the presence of moving elements poses a threat to the lifetime and reliability of the unit in the long term, due to sustained shock and vibration conditions. MEMS scanners appear to be the alternative as they are lightweight, compact units with resonance frequencies well above those typical in a vehicle. They can scan large angles using adapted optical systems and use little power. However, they present problems related to the linearity of the motion and heat dissipation. OPAs would be the ideal solution, providing static beam steering, but they still present problems in the management of large power values, which currently keeps them at the research level. However, OPAs are a feasible alternative for the future in long-range applications, and they are currently commercialized in short- and medium-range applications.

On the detector array side, flash lidars provide a good solution without any moving elements, especially in the close and medium ranges, where they achieve comparable performance figures to scanning systems. Large spatial resolution using InGaAs detectors is, however, expensive; and the use of detectors in Geiger mode poses issues related to the presence of retroreflective signs which can temporarily blind the sensor. The AMCW approach, as described, is based on detector arrays but is not reliable enough in outdoor environments. Intermediate solutions combining scanning using cylindrical geometries (rotation around an axis) with line illuminators and linear detector arrays along a spinning axis have also been proposed, both using the scanning and the flash approaches.

We do not want to finish this Section without mentioning an alternative way of classifying lidar sensors which by now should be obvious to the reader. We preferred an approach based on the components utilized for building the images, as it provides an easier connection with the coming Sections. However, an alternative classification of lidars based on how they illuminate the target would also have been possible, dividing them into those which illuminate the target point by point (scanners), as 1D lines (like the cylindrical approach just described in Section 2.2.3) or as a simultaneous 2D flood (flash imagers or TOF cameras). Such a classification also enables us to include all the different families of lidar imaging systems. An equivalent classification based on the detection strategy (single detector, 1D array, 2D array) would also have been possible.

3. Sources and Detectors for Lidar Imaging Systems in Autonomous Vehicles

Lidar systems illuminate a scene and use the backscattered signal that returns to a receiver for range-sensing and imaging. Thus, the basic system must include a source or transmitter, a sensitive photodetector or receiver, the data processing electronics, and a strategy to obtain the information from the whole target, essential for the creation of the 3D maps and/or proximity data images. We have just discussed the main imaging strategies, and the data processing electronics used are usually specialized regarding their firmware or code, but there is not really a choice of technology specific to lidar for these components in general. Data processing and device operation are usually managed by variable combinations of field programmable gate arrays (FPGAs), digital signal processors (DSPs), microcontrollers or even computers depending on the system architecture. Efforts oriented to dedicated chipsets for lidar are emerging and have reached the commercial level [86], although they still need dedicated sources and detectors.

Thus, we believe the inclusion of a dedicated section to briefly review the particularities of sources and detectors used in lidar imaging systems is required to complete this paper. Due to the strong requirements on frame rate, spatial resolution and SNR imposed to lidar imaging systems, light sources and photodetectors become key state-of-the-art components of the unit, subject to strong development efforts, and which have different trade-offs and performance limits which affect the overall performance of the lidar setup.

3.1. Sources

Lidars usually employ laser sources with wavelengths in the infrared region, typically from 0.80 to 1.55 μm, to take advantage of the atmospheric transmission window, and in particular of that of water at those wavelengths [87], while enabling the use of beams not visible to the human eye. The sources are mainly used in three regions: a waveband from 0.8 μm to 0.95 μm, dominated by diode lasers which may be combined with silicon-based photodetectors; lasers at 1.06 μm, still usable with Si detectors and usually based on fiber lasers; and lasers at 1.55 μm, available from the telecom industry, which need InGaAs detectors. However, other wavelengths are possible as the lidar detection principle is general and works in most cases regardless of the wavelength selected. Wavelength is in general chosen taking into account considerations related to cost and eye safety. A wavelength of 1.55 μm, for instance, enables much more power within the eye safety limit defined by Class 1 [88] but may become really expensive if detectors need to be above the conventional telecom size (200 μm).
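The pairing of wavebands and detector materials described above can be illustrated by comparing the photon energy E = hc/λ with the bandgap of silicon. The sketch below uses an approximate room-temperature silicon bandgap of 1.12 eV, which is an assumed textbook value rather than a figure from this paper, and it only checks whether absorption across the bandgap is possible, not how efficient the detector is.

```python
HC_EV_UM = 1.239841984      # h * c expressed in eV * um
SI_BANDGAP_EV = 1.12        # assumed approximate silicon bandgap at room temperature

def photon_energy_ev(wavelength_um):
    """Photon energy in eV for a wavelength given in micrometers."""
    return HC_EV_UM / wavelength_um

def silicon_can_absorb(wavelength_um):
    """True when the photon energy exceeds the assumed silicon bandgap."""
    return photon_energy_ev(wavelength_um) > SI_BANDGAP_EV

# The three wavebands mentioned in the text (0.905 um is a representative
# value inside the 0.8 to 0.95 um band, chosen here for illustration).
usable_with_si = {wl: silicon_can_absorb(wl) for wl in (0.905, 1.06, 1.55)}
```

The photon energy at 1.06 μm is only slightly above the assumed bandgap, consistent with that wavelength being described as still usable with Si detectors, while at 1.55 μm it falls well below it, which is why a narrower-bandgap material such as InGaAs is needed.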

Thus, sources are based either on lasers or on nonlinear optical systems driven by lasers, although other sources may be found (such as LEDs), usually for short-range applications. Different performance features must be taken into account in order to select the most suitable source according to the system purpose. This includes, as the most relevant for automotive, peak power, pulse repetition rate (PRR), pulse width, wavelength (including purity and thermal drift), emission (single-mode, beam quality, CW/pulsed), size, weight, power consumption, shock resistance and operating temperature. In one very typical feature of lidar imaging systems, such performance features involve trade-offs; for instance, a large peak power typically goes against a large PRR or spectral purity.
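The trade-off between peak power and PRR can be sketched by relating both to the average optical power. Assuming idealized rectangular pulses, the average power is the product of peak power, pulse width and PRR, so for a fixed average power budget the available peak power falls as the repetition rate rises. The function names and numerical values are hypothetical.

```python
def average_power_w(peak_power_w, pulse_width_s, prr_hz):
    """Average power of a train of idealized rectangular pulses."""
    return peak_power_w * pulse_width_s * prr_hz

def max_peak_power_w(avg_budget_w, pulse_width_s, prr_hz):
    """Peak power allowed by a fixed average power budget."""
    return avg_budget_w / (pulse_width_s * prr_hz)

# Hypothetical source: 75 W peak, 5 ns pulses, 100 kHz repetition rate.
avg_w = average_power_w(75.0, 5e-9, 100e3)
peak_at_double_prr_w = max_peak_power_w(avg_w, 5e-9, 200e3)
```

With these assumed values, doubling the PRR at constant average power halves the peak power available per pulse, which in turn reduces the energy returned from distant targets.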

Currently, the most popular sources for use in lidar technology are solid-state lasers (SSLs) and diode lasers (DLs), with few exceptions. SSLs employ insulating solids (crystals, ceramics or glasses) with added elements (dopants) that provide the energy levels needed for lasing. A process called optical pumping is used to provide the energy for exciting the energy levels and creating the population inversion required for lasing. Generally, SSLs use DLs as pumping sources. The use of SSLs for performing active sensing for range-finding started in 1960 with the SS ruby laser, thanks to the development of techniques to generate pulses with a duration of nanoseconds. Since then, almost every type of laser developed has been employed in demonstrations of rangefinders. SSLs can be divided into a wide range of categories, for example bulk or fiber, with the latter increasingly becoming prevalent in lidar for autonomous vehicles, not only because of its efficiency and capacity for generating high average powers with high beam quality and PRR, but also because it provides an easy way to mount and align the lidar using free-space optics.

Beyond fiber lasers, increasing attention has been devoted to microchip lasers. They are, perhaps, the ultimate in miniaturization of diode-pumped SSLs. Their robust and readily mass-produced structure is very attractive. Their single-frequency CW performance and their excellence at generating sub-nanosecond pulses make microchip lasers well suited for many applications, lidar being among them. They need free-space alignment, unlike fibers, but can also achieve large peak energies with excellent beam quality. A more detailed description of all these laser sources, and of some others less relevant to lidar, can be found in [45].

Finally, semiconductor DLs are far more popular in the industry due to their lower cost and their countless applications beyond lidar. They are considered to be a separate laser category, although they are also made from solid-state material. In a DL, the population inversion leading to lasing takes place in a thin layer at a semiconductor PN junction, called the depletion zone. Under electrical bias, the recombination of electrons and holes in the depletion zone produces photons that are confined inside the region. This provides lasing without the need for an optical pump, and it is the reason why DLs can directly convert electrical energy into laser light output, making them very efficient. Different configurations are available for improved performance, including Fabry–Perot cavities, vertical-cavity surface-emitting lasers, distributed feedback lasers, etc. [89].

Sources in Lidar Imaging Systems

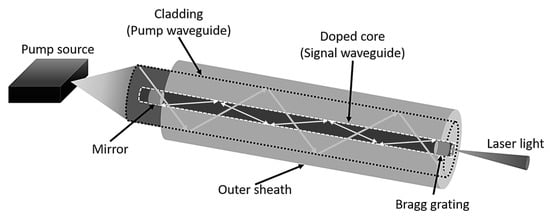

(a) Fiber lasers

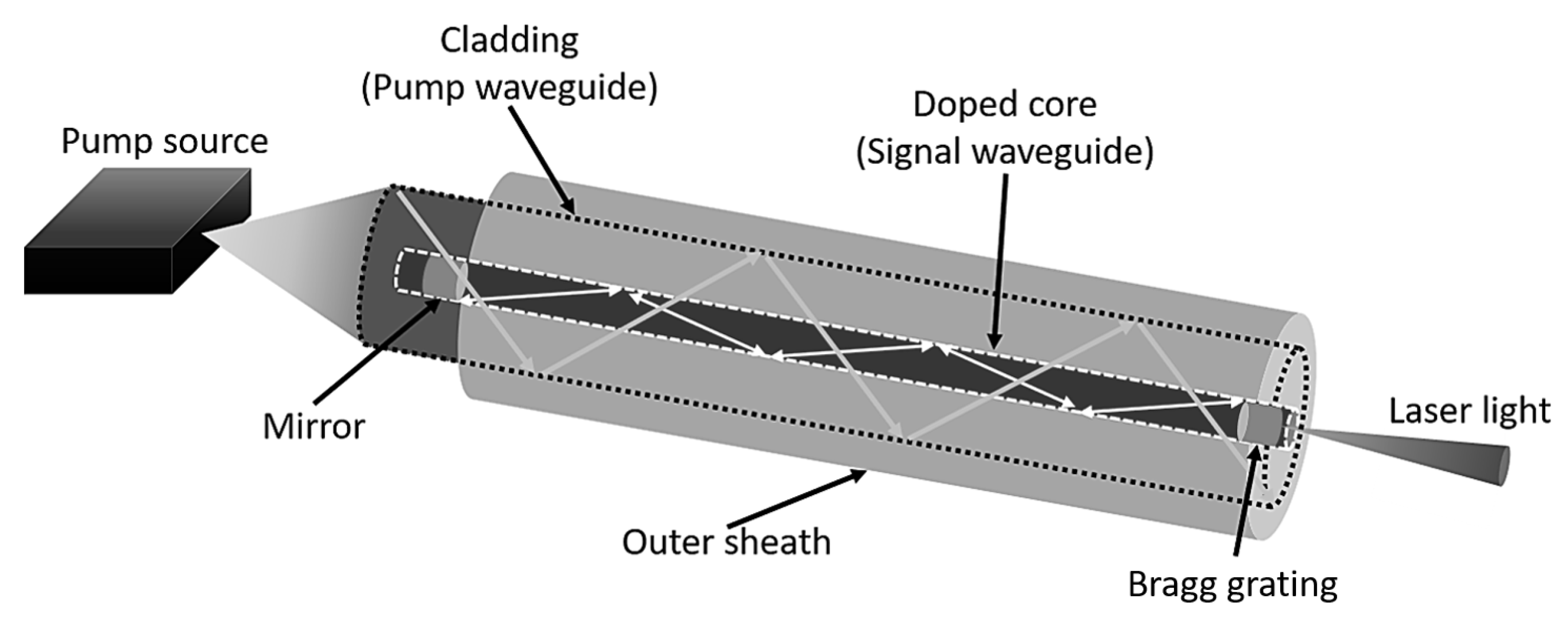

Fiber lasers use optical fibers as the active medium for lasing. The optical fiber is doped with rare earth elements (such as Er) and one or several fiber-coupled DLs are used for pumping. In order to turn the fiber into a laser cavity, some kind of reflector (mirror) is needed to form a linear resonator, or a fiber ring architecture must be built (Figure 9). In commercial products, it is common to use a Bragg grating at the end of the fiber, which reflects back part of the photons. Although, in broad terms, the gain medium of fiber lasers is similar to that of SS bulk lasers, the wave-guiding effect of the fiber and the small effective mode area usually lead to substantially different properties. For example, fiber lasers often operate with much higher laser gain and resonator losses than bulk SSLs [90,91,92,93].

Figure 9.

Schematic diagram of the fiber laser.

Fiber lasers can have very long active regions, so they can provide very high optical gain. Currently, there are high-power fiber lasers with outputs of hundreds of watts, sometimes even several kilowatts (up to 100 kW in continuous-wave operation) from a single fiber [94]. This potential arises from two main reasons: on one side, a high surface-to-volume ratio, which allows efficient cooling because heating is low and distributed; on the other side, the guiding effect of the fiber, which avoids thermo-optical problems even under conditions of significant heating [95]. The fiber's waveguiding properties also permit the production of a diffraction-limited spot, that is, the smallest spot allowed by the laws of physics, with very good beam quality. The resulting beam divergence is very small and is linked only to the numerical aperture of the fiber, usually staying within 0.1 deg [96,97]. The footprint of the beam on the target is proportional to this divergence, which is thus directly related to the spatial resolution of the lidar image.
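The link between divergence and footprint can be illustrated with a short geometric sketch. It assumes that the 0.1 deg figure is a full-angle divergence and that the beam expands as a simple cone from the emitter; the function name and the optional exit diameter are hypothetical and introduced only for illustration.

```python
import math

def beam_footprint_diameter(range_m, full_divergence_deg, exit_diameter_m=0.0):
    """Approximate beam diameter on a target at range_m.

    Purely geometric cone model (assumption): diameter grows linearly
    with range according to the full-angle divergence.
    """
    half_angle_rad = math.radians(full_divergence_deg) / 2.0
    return exit_diameter_m + 2.0 * range_m * math.tan(half_angle_rad)

# Footprint of a 0.1 deg beam at several illustrative ranges
for r in (10.0, 50.0, 100.0, 200.0):
    d = beam_footprint_diameter(r, 0.1)
    print(f"range {r:6.1f} m -> footprint {d * 100:.1f} cm")
```

Under these assumptions the footprint at 100 m is roughly 17 cm, which gives an idea of the smallest feature that a single beam can isolate at that distance.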

Furthermore, because the light is already coupled into a flexible fiber, it is easily sent to a movable focusing element, allowing convenient power delivery and high stability to movement. Moreover, fiber lasers also enable configurable external trigger modes to ease system synchronization and control, and have a compact size because the fiber can be bent and coiled to save space. Other advantages include their reliability, the availability of different wavelengths, their large PRR (>0.5 MHz is a typical value) and their small pulse width (<5 ns). Fiber lasers provide a good balance between peak power, pulse duration and pulse repetition rate, very well suited to lidar specifications. At present, their main drawback is their cost, which is much higher than that of other alternatives [45].

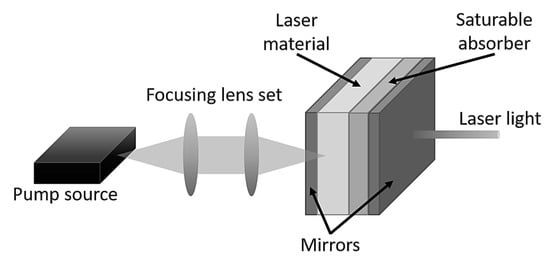

(b) Microchip lasers

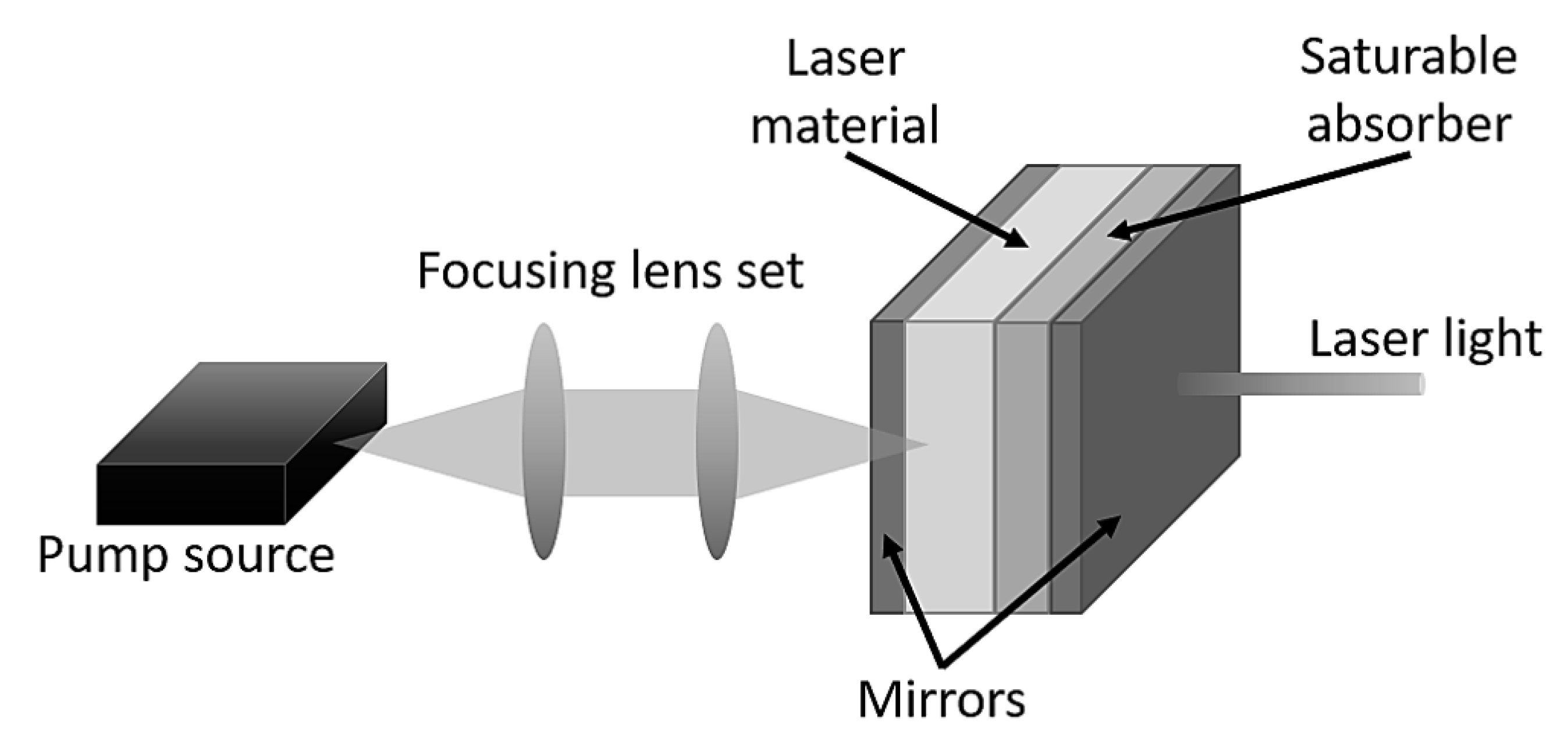

Microchip lasers are bulk SS lasers with a piece of doped crystal or glass working as the gain medium. Their design allows optical pump power to be transmitted into the material, as well as the spatial overlap of the generated laser power with the region of the material which receives the pump energy (Figure 10). The ability to store large amounts of energy in the laser medium is one of the key advantages of bulk SS lasers and is unique to them. A Q-switch (active or passive) induces high losses in the cavity until either a saturable absorber saturates (passive Q-switch) or the stored energy is actively released by means of a shutter (active Q-switch) [98,99].

Figure 10.

Schematic diagram of a microchip laser.

The active medium of microchip lasers is a millimeter-thick, longitudinally pumped crystal (such as Nd:YAG) directly bonded to solid media that have saturable absorption at the laser wavelength. This transient condition results in the generation of a pulse containing a large fraction of the energy initially stored in the laser material, with a width that is related to the round-trip time of light in the laser cavity. Due to the bulk energy storage combined with their short laser resonator, which leads to a very short round-trip time, microchip lasers are well suited to the generation of high-power short pulses, well beyond conventional lidar specs. Simple construction methods are enough to obtain pulses on the nanosecond scale (typically <5 ns). Q-switched microchip lasers may also allow the generation of unusually short pulses with a duration below 1 ns, although in lidar this poses problems for the bandwidth of the amplification electronics at the detector. Particularly with passive Q-switching, it is possible to achieve high PRR in the MHz region combined with short pulses of a few ns, very well suited to present lidar needs. For lower repetition rates (around 1 kHz), pulse energies of some microjoules and pulse durations of a few nanoseconds allow for large peak powers (>1 kW). Beam quality can be very good, even diffraction-limited [100,101,102,103].
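The relation between pulse energy, pulse duration and peak power quoted above can be checked with a rough estimate. The sketch below assumes a rectangular pulse, so that peak power is simply energy divided by duration (a real pulse shape would change the result by a factor of order one); the function names and the sample values of 5 µJ, 2 ns and 1 kHz are illustrative choices, not figures taken from the references.

```python
def peak_power_w(pulse_energy_j, pulse_width_s):
    """Peak power of a pulse, assuming a rectangular temporal shape."""
    return pulse_energy_j / pulse_width_s

def average_power_w(pulse_energy_j, prr_hz):
    """Average optical power for a train of identical pulses."""
    return pulse_energy_j * prr_hz

# Illustrative values: a few microjoules, a few nanoseconds, about 1 kHz
energy_j = 5e-6
width_s = 2e-9
prr_hz = 1e3

print(f"peak power    ~ {peak_power_w(energy_j, width_s) / 1e3:.1f} kW")
print(f"average power ~ {average_power_w(energy_j, prr_hz) * 1e3:.1f} mW")
```

With these sample values the peak power is in the kilowatt range while the average power stays at the milliwatt level, which is why short pulses at moderate repetition rates are attractive for ranging.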

Microchip lasers provide an acceptable balance between peak power and pulse duration in a compact design which is cost-effective when produced at scale. They have found their own distinctive role, which makes them very suitable for a large number of applications. Many of these applications benefit from a compact and robust structure and small electric power consumption. In other cases, their excellence at generating sub-nanosecond pulses and/or the possible high pulse repetition rates are of interest. For example, in laser range-sensing, it is possible to achieve a very high spatial resolution (down to 10 cm or less) due to the short pulse duration [104,105]. Their cost lies midway between that of DLs and fiber lasers, although closer to the level of fiber lasers.
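The connection between pulse duration and resolution can be sketched with the usual rule of thumb that two targets along the beam are separable when they are farther apart than half the spatial length of the pulse. Reading the "spatial resolution" above in this sense is an assumption made here for illustration, and the function names are hypothetical.

```python
C_M_PER_S = 299_792_458.0  # speed of light in vacuum

def range_resolution_m(pulse_width_s):
    """Two-target range resolution, c * tau / 2 (round-trip rule of thumb)."""
    return C_M_PER_S * pulse_width_s / 2.0

def pulse_width_for_resolution_s(resolution_m):
    """Pulse width needed to reach a given two-target range resolution."""
    return 2.0 * resolution_m / C_M_PER_S

for tau_ns in (5.0, 1.0, 0.5):
    res_cm = range_resolution_m(tau_ns * 1e-9) * 100
    print(f"pulse {tau_ns:.1f} ns -> resolution ~ {res_cm:.1f} cm")

tau_ns_needed = pulse_width_for_resolution_s(0.10) * 1e9
print(f"10 cm resolution needs a pulse of ~ {tau_ns_needed:.2f} ns")
```

Under this criterion, a resolution of 10 cm calls for pulses shorter than about 0.7 ns, which is consistent with the sub-nanosecond regime mentioned for microchip lasers.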

(c) Diode lasers

There are two major types of DLs: interband lasers, which use transitions from one electronic band to another; and quantum cascade lasers (QCLs), which operate on intraband transitions relying on an artificial gain medium made possible by quantum-well structures, built using band structure engineering. Interband DLs are by far the most widespread in use, but recently QCLs have emerged as an important source of mid- and long-wave infrared emission [4,45,106].

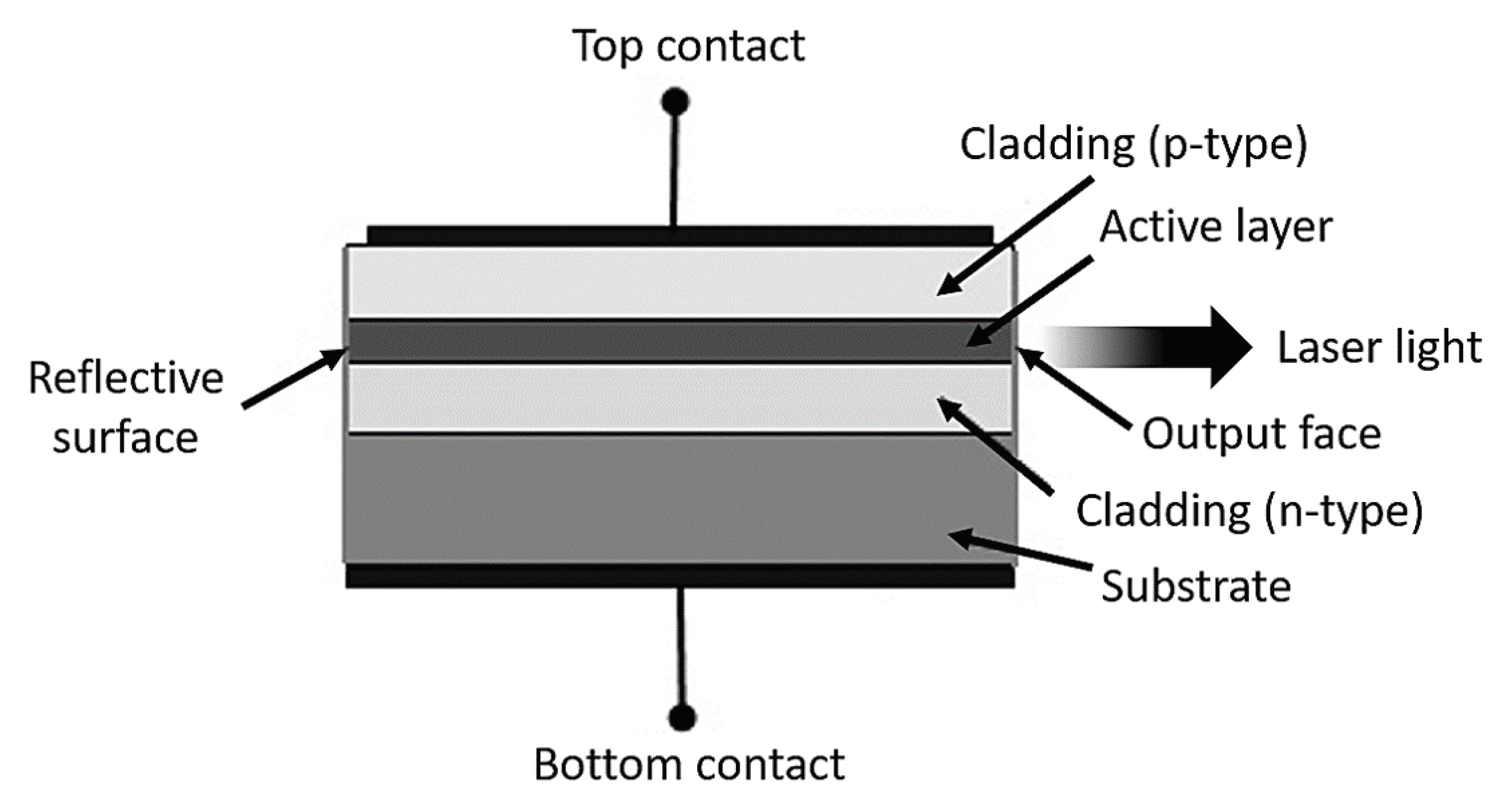

Interband DLs are electrically pumped semiconductor lasers in which the gain is generated by an electrical current flowing through a PN junction or, more frequently, through a P-doped/intrinsic/N-doped (PIN) structure which improves performance. A properly biased PIN junction makes the I-region the active medium by expanding the depletion zone, where carriers can recombine releasing energy as photons, while at the same time acting as a waveguide for the generated photons. The goal is to recombine all electrons and holes to create light confined by mirrors at the edges of the I-region, one of them partially reflective so that the radiation can escape from the cavity. This process can be spontaneous, but it can also be stimulated by incident photons, effectively leading to optical amplification and to laser emission [89,107,108,109].

Interband lasers are available in a number of geometries that correspond to systems operating in very different regimes of optical output power, wavelength, bandwidth, and other properties. Some examples include edge-emitting lasers (EEL, Figure 11), vertical-cavity surface-emitting lasers (VCSEL), distributed feedback lasers (DFB), high-power stacked diode bars and external-cavity DLs. Many excellent reviews exist discussing the characteristics and applications of each of these types of laser [45,110,111,112,113,114].

Figure 11.

Schematic diagram of an edge-emitting (EEL) diode laser.

Regarding lidar applications, DLs have the advantage of being cheap and compact, two key properties in autonomous vehicle applications. In particular, DLs perform extremely well in terms of cost per unit of peak power. Although they provide less peak power than fiber or microchip lasers, it is still enough for several lidar applications (maximum peak power may be up to the 10 kW range in optimal conditions) [115]. However, they are limited by a reduced pulse repetition rate (∼100 kHz) and an increased pulse width (∼100 ns). Furthermore, high-power DLs usually come in the EEL configuration, which yields a degraded beam quality with a fast and a slow axis diverging differently, negatively affecting the laser footprint and the spatial resolution. Nevertheless, they are currently used in almost every lidar, either as a direct source or as optical pumps in SSLs [116].

Table 3 presents a brief summary of the key characteristics of the different laser sources described when used in lidar applications.

Table 3.

Summary: main features of sources for lidar.

3.2. Photodetectors

Along with light sources, photodetectors are the other main component of a lidar system that has dedicated features. The photodetector is the critical photon-sensing device in an active receiver, and it is what enables the TOF measurement. It needs to have a high sensitivity for direct detection of intensity, and it also has to be able to detect short pulses, thus requiring high bandwidth. A wide variety of detectors are available for lidar imaging, ranging from single-element detectors to 2D detector arrays, which may build an image with a single pulse [117].

The material of the detector defines its sensitivity to the wavelength of interest. Currently, Si-based detectors are used for the waveband between 0.3 μm and 1.1 μm, while InGaAs detectors are used above 1.1 μm, although they have acceptable sensitivities from 0.7 μm and beyond [118]. InP detectors and InGaAs/InP heterostructures have also been proposed as detectors in the mid-infrared [119], although their use in commercial lidar systems is rare due to their high cost when outside telecommunications standards and, in some cases, the need to cool them to reduce their noise figure.
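As a minimal sketch of how these wavebands translate into a choice of detector material, the lookup below encodes the Si and InGaAs ranges quoted above. The upper limit used for InGaAs (1.7 μm, a typical cutoff for standard devices) is an assumption that does not come from the text, and the function and constant names are hypothetical.

```python
SI_BAND_UM = (0.3, 1.1)            # Si waveband quoted in the text
INGAAS_ACCEPTABLE_FROM_UM = 0.7    # InGaAs acceptable sensitivity starts here
INGAAS_UPPER_UM = 1.7              # assumption: typical standard InGaAs cutoff

def candidate_detector_materials(wavelength_um):
    """Return the detector materials whose waveband covers wavelength_um."""
    candidates = []
    if SI_BAND_UM[0] <= wavelength_um <= SI_BAND_UM[1]:
        candidates.append("Si")
    if INGAAS_ACCEPTABLE_FROM_UM <= wavelength_um <= INGAAS_UPPER_UM:
        candidates.append("InGaAs")
    return candidates

# Illustrative wavelengths in micrometers
for wl in (0.532, 0.905, 1.064, 1.55):
    print(f"{wl:.3f} um -> {candidate_detector_materials(wl)}")
```

In the overlap region between 0.7 μm and 1.1 μm both materials appear as candidates, and the final choice depends on cost and noise considerations such as those discussed above.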

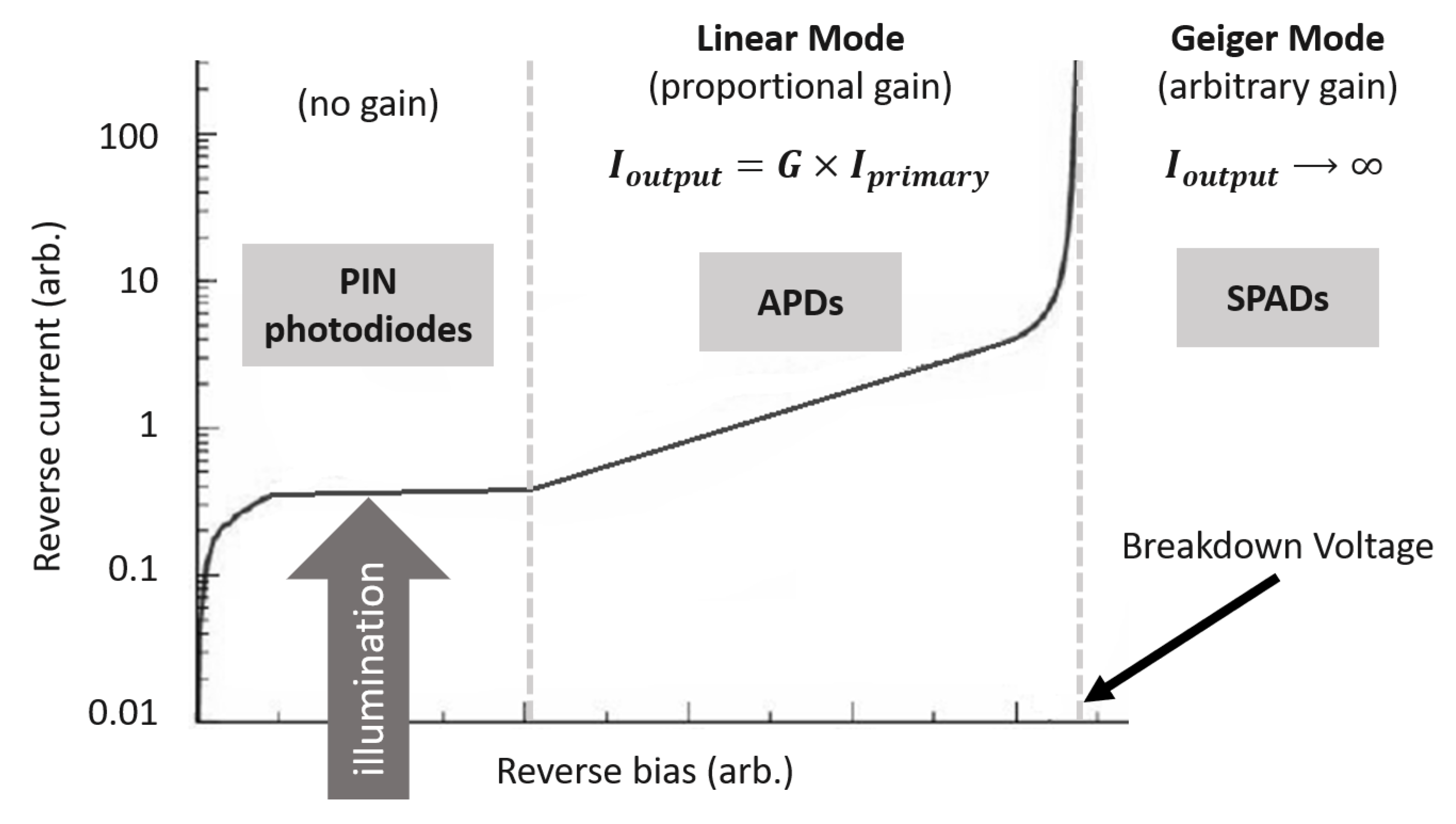

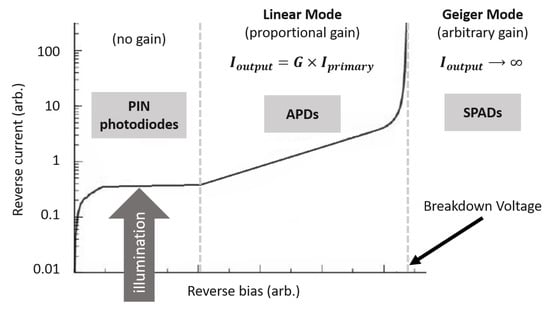

Light detection in lidar imaging systems usually relies on five different types of detectors: PIN diodes, avalanche photodiodes (APDs), single-photon avalanche diodes (SPADs), multi-pixel photon counters (MPPCs) and, occasionally, photomultiplier tubes (PMTs). Each one may be built from the material which addresses the wavelength of interest. The most widely used single-element detectors are PIN photodiodes, which can detect fast light events provided they have enough sensitivity for the application, but do not provide any internal gain, so in optimal efficiency conditions each photon creates a single photoelectron. For applications that need moderate to high sensitivity and can tolerate bandwidths just below the GHz regime typical of PIN diodes, APDs are the most useful receivers, since their structure multiplies the current generated by the photons incident on the sensitive area, providing a gain usually around two orders of magnitude. Their gain is set by the reverse bias applied, so below breakdown they are linear devices with adjustable gain which provide a current output proportional to the optical power received. SPADs are essentially APDs biased beyond the breakdown voltage, with their internal structure arranged to repeatedly withstand large avalanche events. Whereas in an APD a single photon can produce on the order of tens to a few hundred electrons, in a SPAD a single photon produces a large avalanche of thousands of electrons, which results in detectable photocurrents.
An interesting arrangement of SPADs has been proposed recently with MPPC, which are pixelated devices formed by an array of SPADs where all pixel outputs are added together in a single analog output, effectively enabling photon-counting through the measurement of the fired intensity [45,120,121,122]. Finally, PMTs are based on the external photoelectric effect and the emission of electrons within a vacuum tube which brings them to collision with cascaded dynodes, resulting in a true avalanche of electrons. Although they are not solid-state, they still provide the largest gains available for single-photon detection and are sensitive to UV, which may make them useful in very specific applications. Their use in autonomous vehicles is rare, but they have been the historical detector of reference in atmospheric or remote sensing lidar, so we include them here for completeness.
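The photon-counting principle of an MPPC can be illustrated with a toy model in which every fired SPAD pixel contributes an identical pulse to the summed analog output. This is a simplified sketch under that assumption: it ignores crosstalk, afterpulsing and the fact that two photons hitting the same pixel fire it only once, and all names and amplitude values are hypothetical.

```python
def mppc_output(fired_pixels, single_pixel_amplitude=1.0):
    """Summed analog output: one identical pulse per fired pixel."""
    return sum(single_pixel_amplitude for fired in fired_pixels if fired)

def estimate_fired_count(output_amplitude, single_pixel_amplitude=1.0):
    """Recover the number of fired pixels from the summed amplitude."""
    return round(output_amplitude / single_pixel_amplitude)

# Toy array of 8 pixels, three of which fired during the pulse
pixels = [True, False, False, True, False, True, False, False]
amplitude = mppc_output(pixels, single_pixel_amplitude=0.5)  # arbitrary units

print("summed output:", amplitude)
print("estimated fired pixels:", estimate_fired_count(amplitude, 0.5))
```

The amplitude of the single output therefore acts as a counter of fired pixels, which is what allows an array of binary detectors to deliver a quasi-analog measurement of the received intensity.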

Several complete overviews of photodetection, photodetector types, and their key performance parameters may be found elsewhere and go beyond the scope of this paper [45,123,124,125,126]. Here we will focus only on the main photodetectors used in lidar imaging. Given the relevance of the concepts of gain and noise in lidar, where the SNR in detection is usually small, a brief discussion of these concepts is introduced here before getting into the detailed description of the different detector families of interest.

3.2.1. Gain and Noise

As described above, gain is a critical feature of a photodetector in low SNR conditions, as it increases the available signal for an equivalent input. Gain increases the power or amplitude of a signal from the input (in lidar, the initial number of photoelectrons generated by the incoming photons which are absorbed) to the output (the final number of photoelectrons sent to digitization) by adding energy to the signal. It is usually defined as the mean ratio of the output signal to the input signal, so a gain greater than 1 indicates amplification. On the other hand, noise includes all the unwanted, irregular fluctuations introduced by the signal itself, the detector and the associated electronics, which accompany the signal and perturb its detection by obscuring it. Photodetection noise may arise from different sources, which are well described in [127].

Gain is a relevant feature of any photodetector in low SNR applications. Conventionally, a photon striking the detector surface has some probability of producing a photoelectron, which in turn produces a current within the detector which usually is converted to voltage and digitized after some amplification circuitry. The gain of the photodetector dictates how many electrons are produced by each photon that is successfully converted into a useful signal. The effect of these detectors in the signal-to-noise ratio of the system is to add a gain factor G to both the signal and certain noise terms, which may also be amplified by the gain.
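The effect of the gain factor G on the signal-to-noise ratio can be sketched with a simplified model in which G multiplies both the signal and its shot noise, while a readout noise term added after the detector is left unamplified. This is a sketch under stated assumptions, not the model used in the references: shot noise is taken as Poissonian, independent noise terms are combined in quadrature, the excess noise of the multiplication process and the dark current are ignored, and all names and sample values are hypothetical.

```python
import math

def snr_with_gain(signal_photoelectrons, gain, readout_noise_electrons):
    """SNR when gain amplifies signal and shot noise but not readout noise."""
    signal_out = gain * signal_photoelectrons
    # Poisson shot noise of the primary photoelectrons, amplified by the gain
    shot_noise_out = gain * math.sqrt(signal_photoelectrons)
    # Independent noise terms combined in quadrature
    total_noise = math.sqrt(shot_noise_out**2 + readout_noise_electrons**2)
    return signal_out / total_noise

# Illustrative case: 100 primary photoelectrons, 200 electrons of readout noise
for g in (1, 10, 100):
    print(f"G = {g:3d} -> SNR = {snr_with_gain(100, g, 200):.2f}")
print(f"shot-noise limit: {math.sqrt(100):.2f}")
```

In this toy case the SNR grows from below 1 without gain to close to the shot-noise limit of 10 for G = 100, which illustrates why internal gain matters most when the noise of the electronics dominates.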

Noise is, in fact, a deviation of the response from the ideal signal, so it is represented by a standard deviation (σ). A combination of independent noise sources is then the accumulation, in quadrature, of their standard deviations, which means that a dominant noise term is likely to appear: