Recognition and Positioning of Strawberries Based on Improved YOLOv7 and RGB-D Sensing

Abstract

1. Introduction

2. Materials and Methods

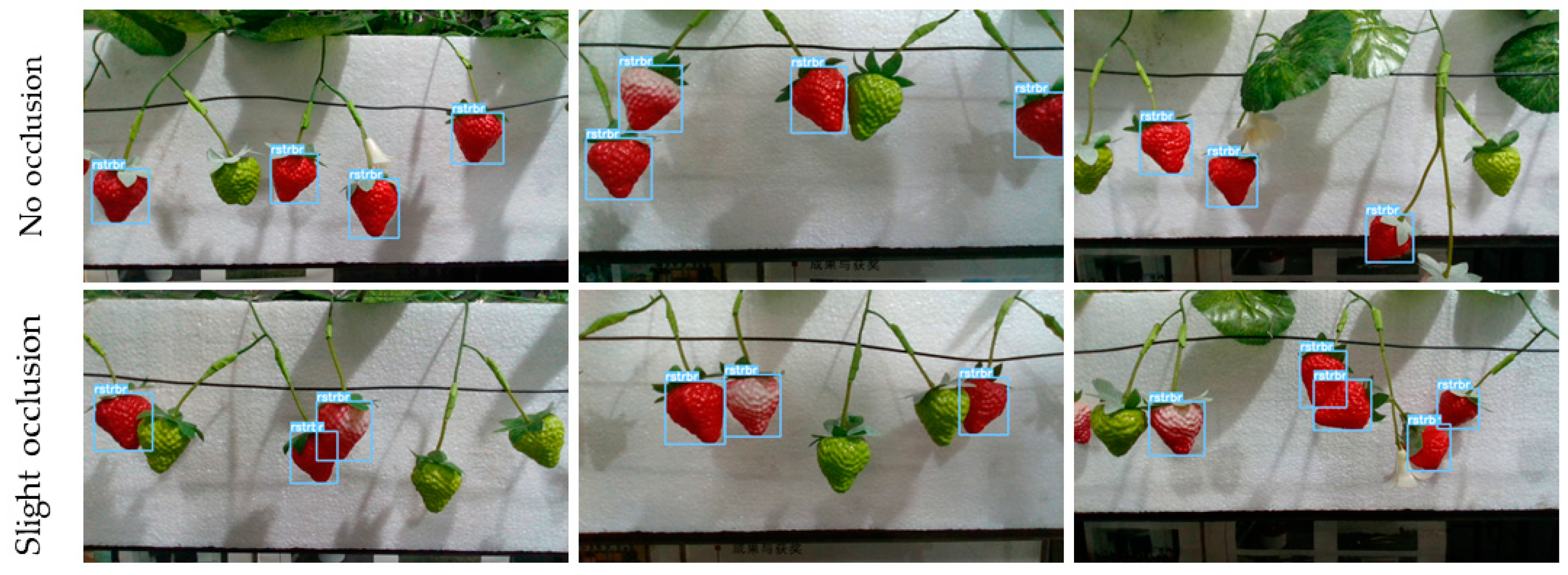

2.1. Image Acquisition and Dataset Construction

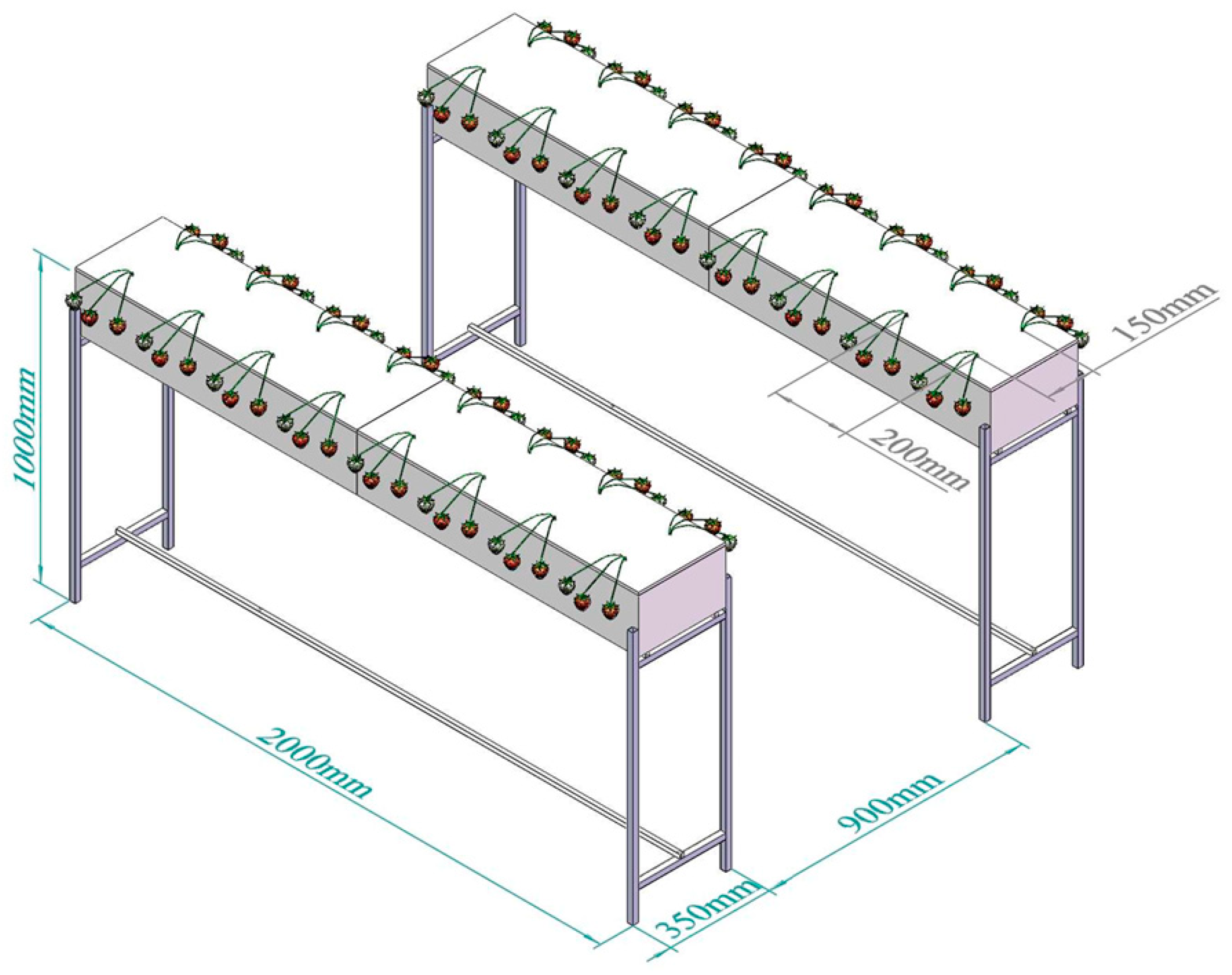

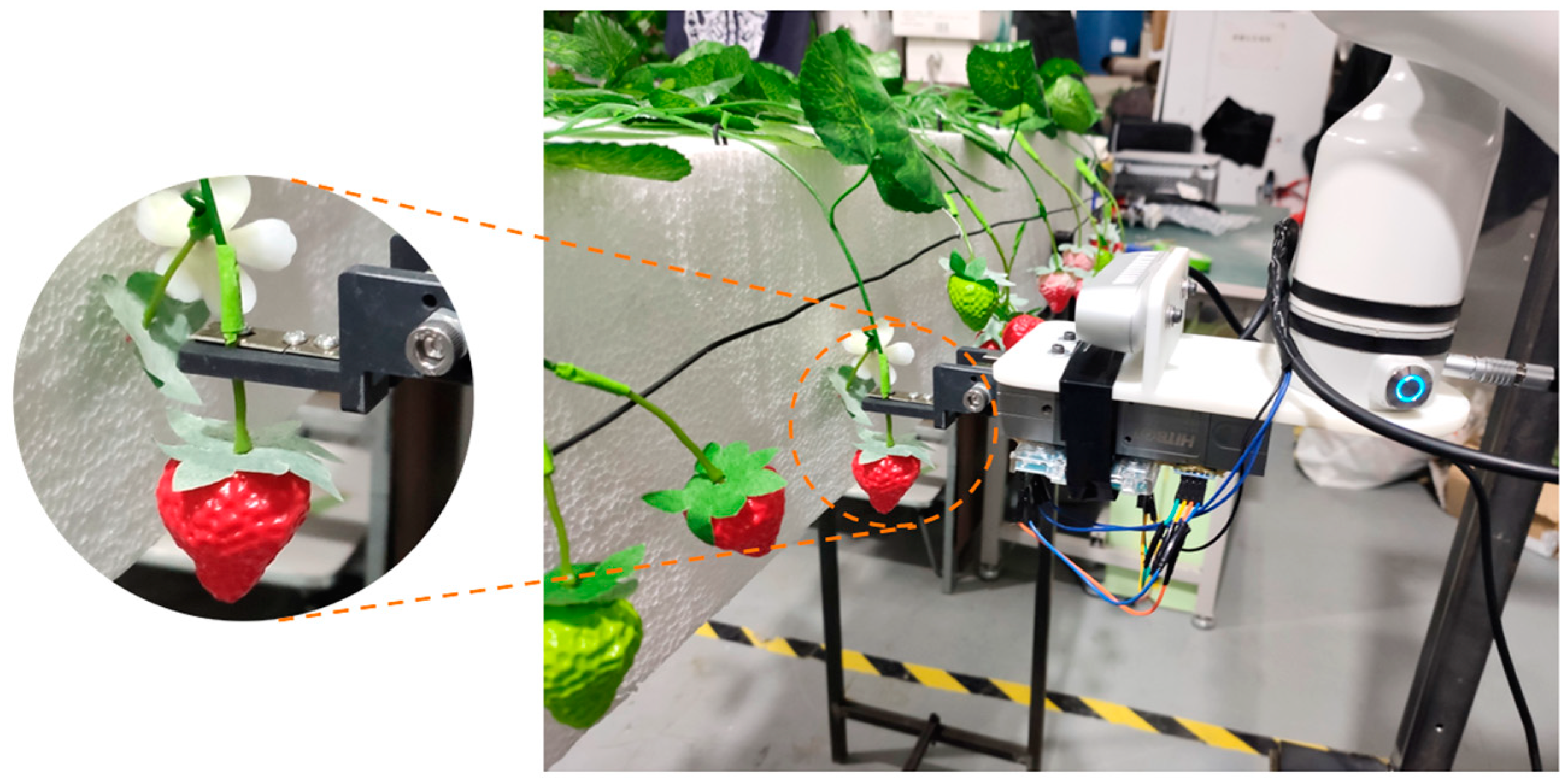

2.1.1. Strawberry Scene

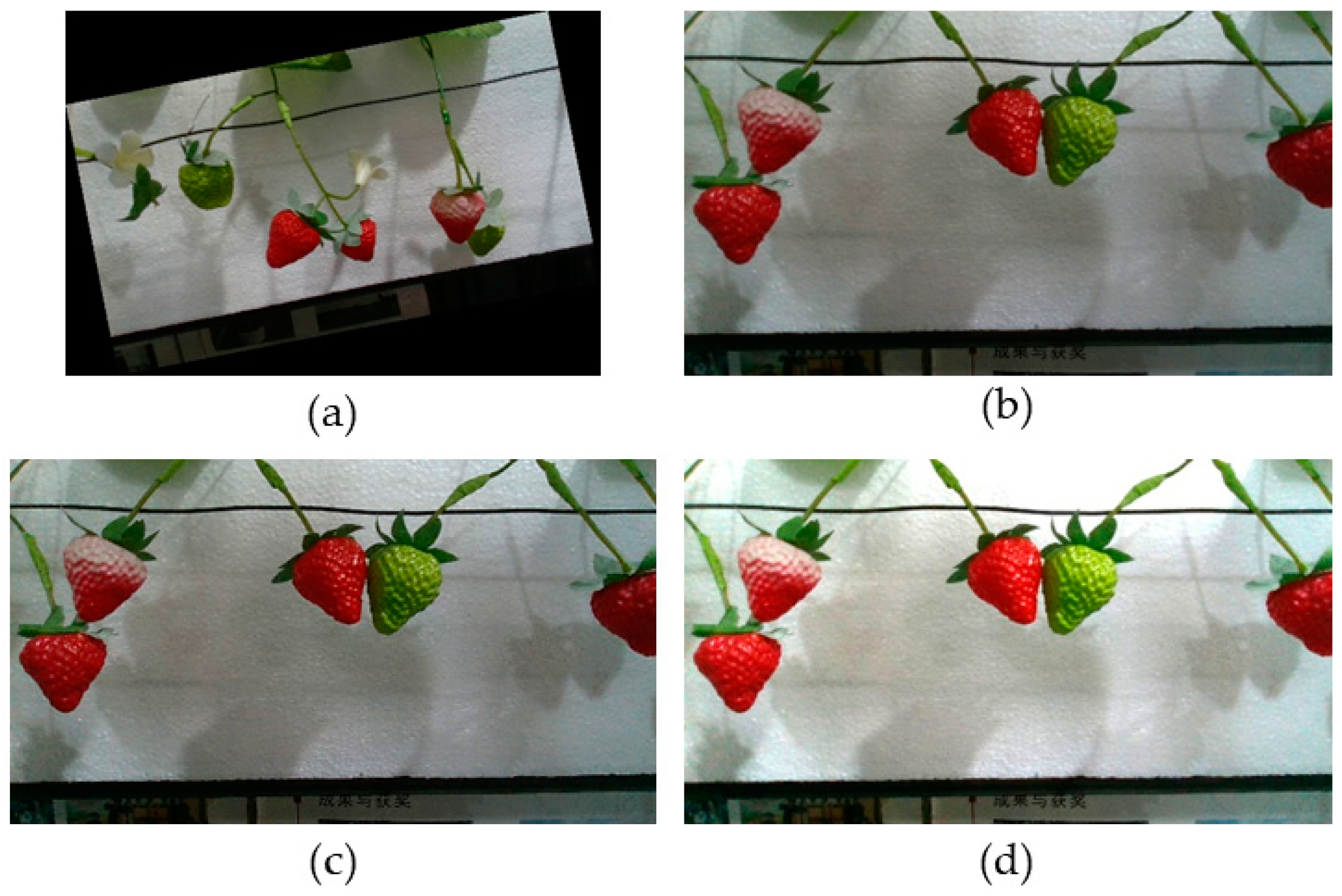

2.1.2. Image Acquisition

2.1.3. Training Environment

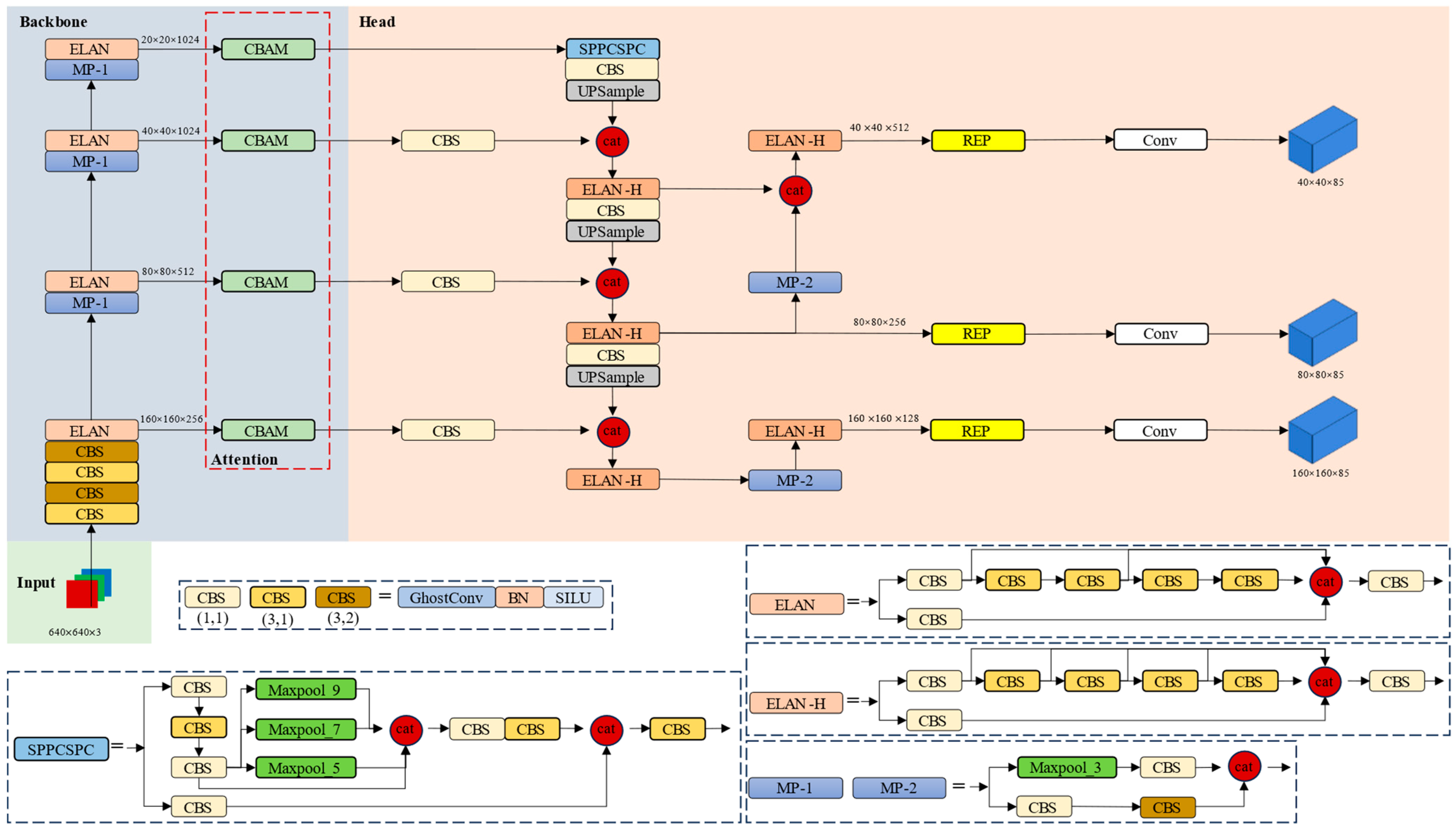

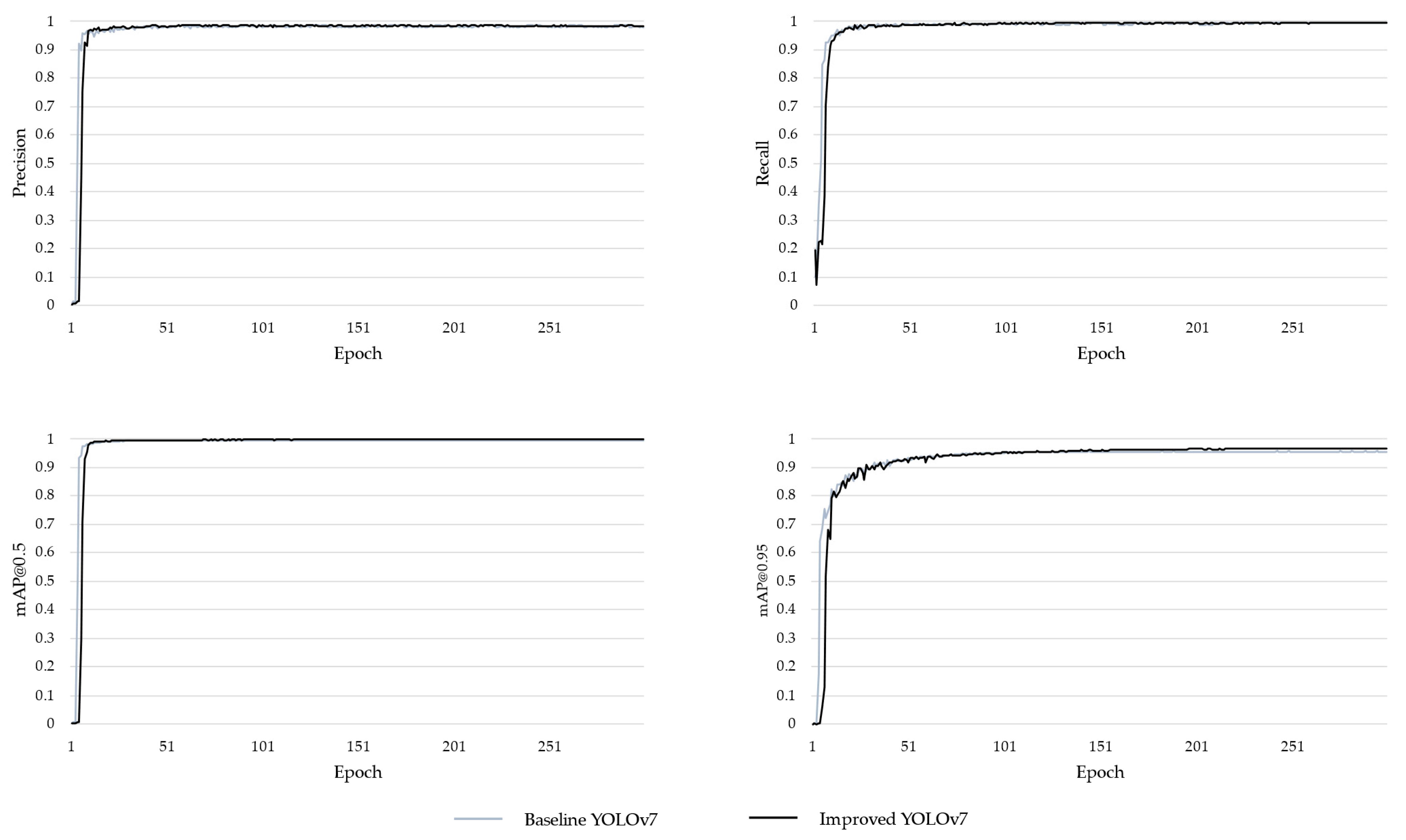

2.2. Strawberry Recognition

2.2.1. Baseline YOLOv7 Network

2.2.2. Improved YOLOv7 Network

2.2.3. Performance Evaluation Index

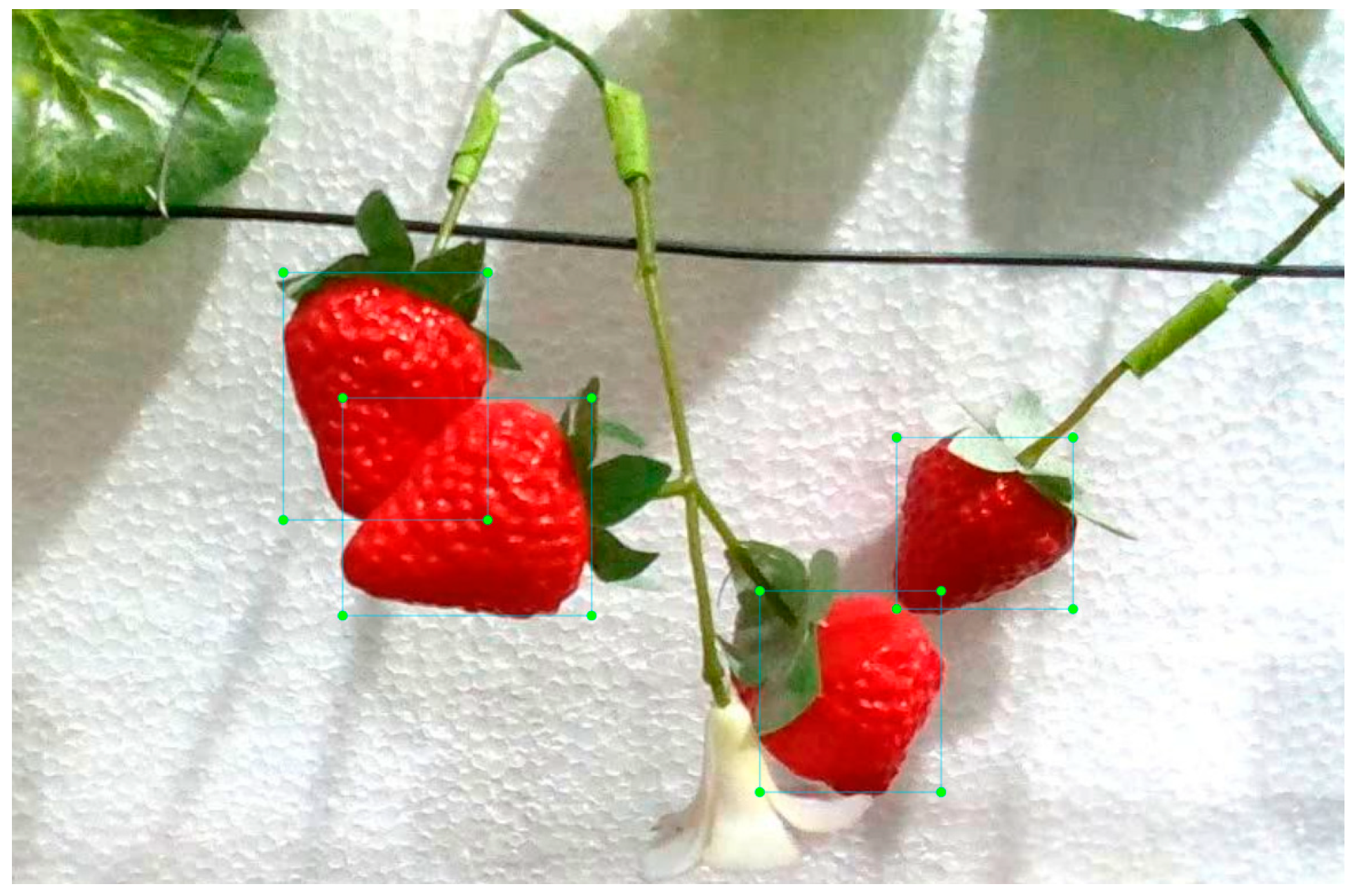

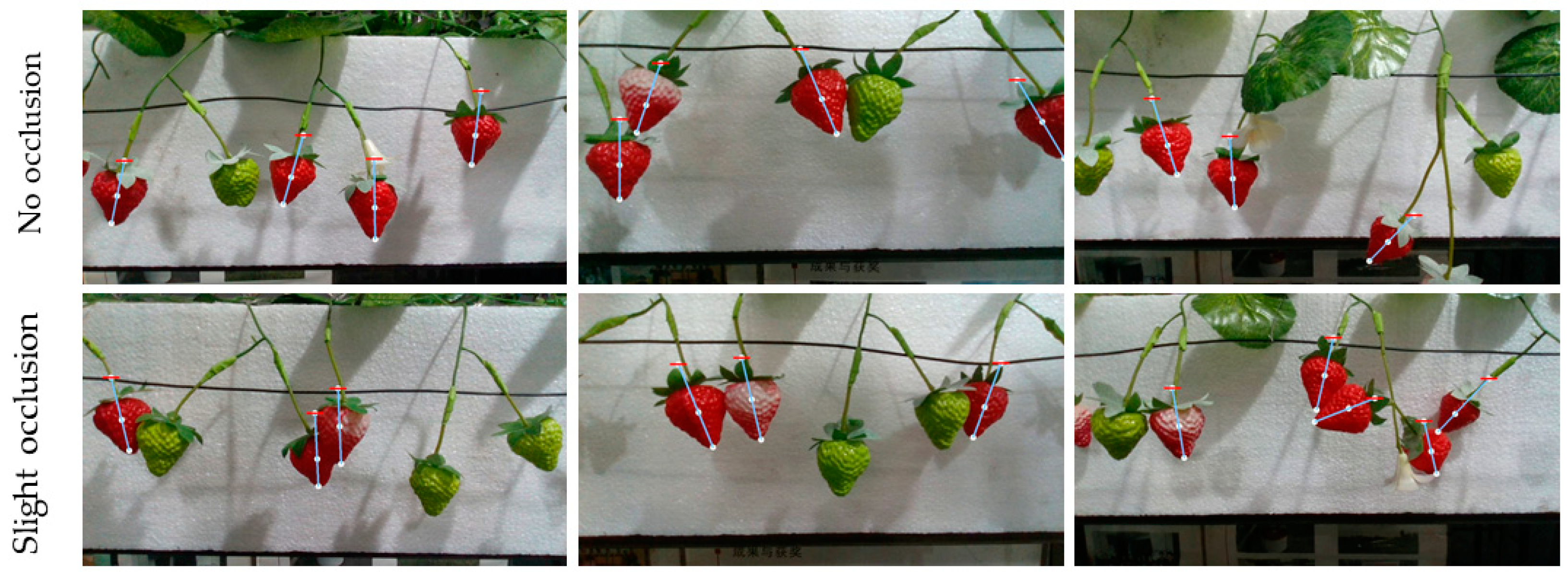

2.3. Position of Picking Points

2.3.1. Positioning Method

2.3.2. Picking Point Positioning Evaluation

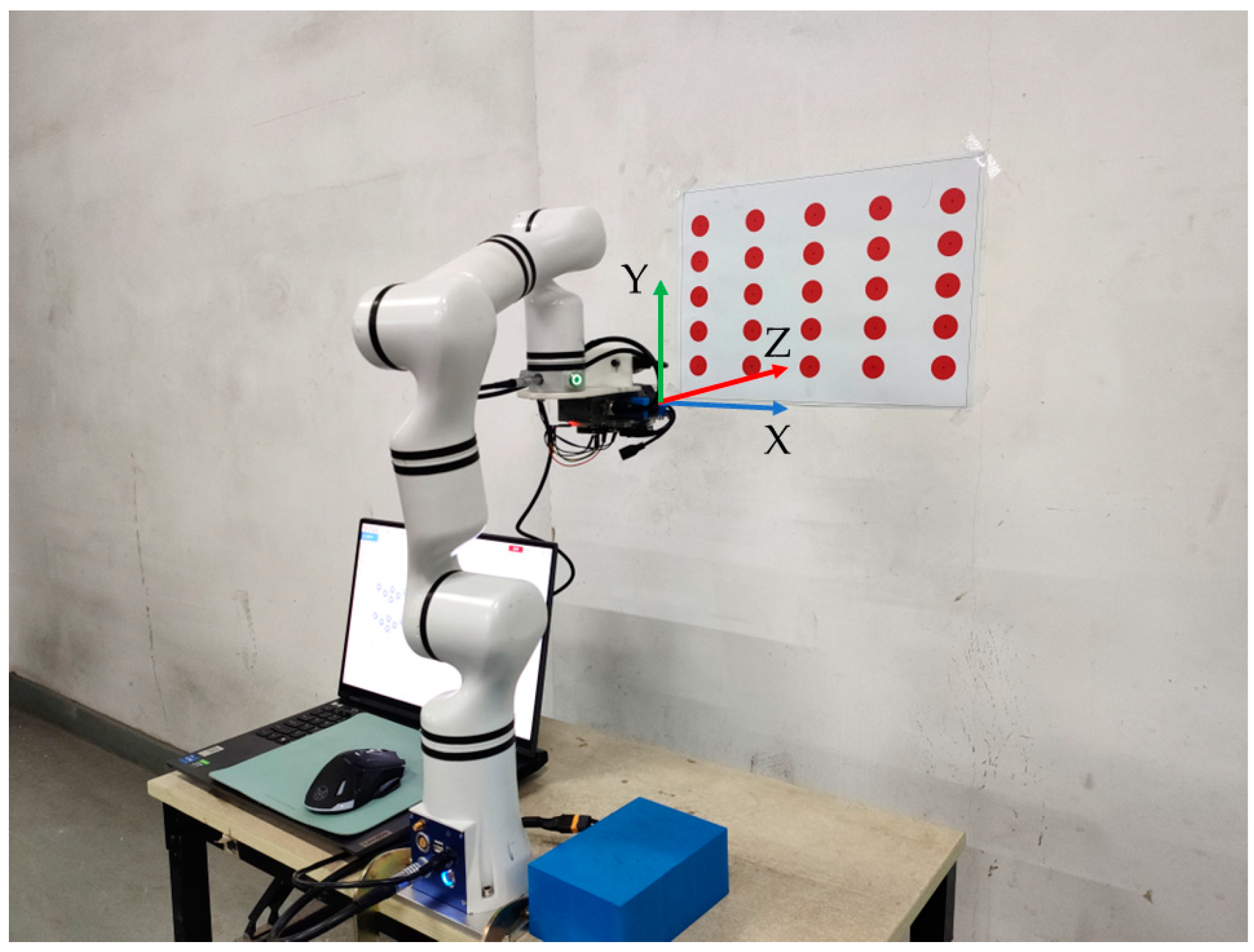

2.4. Hand–Eye Calibration

2.4.1. Calibration Method

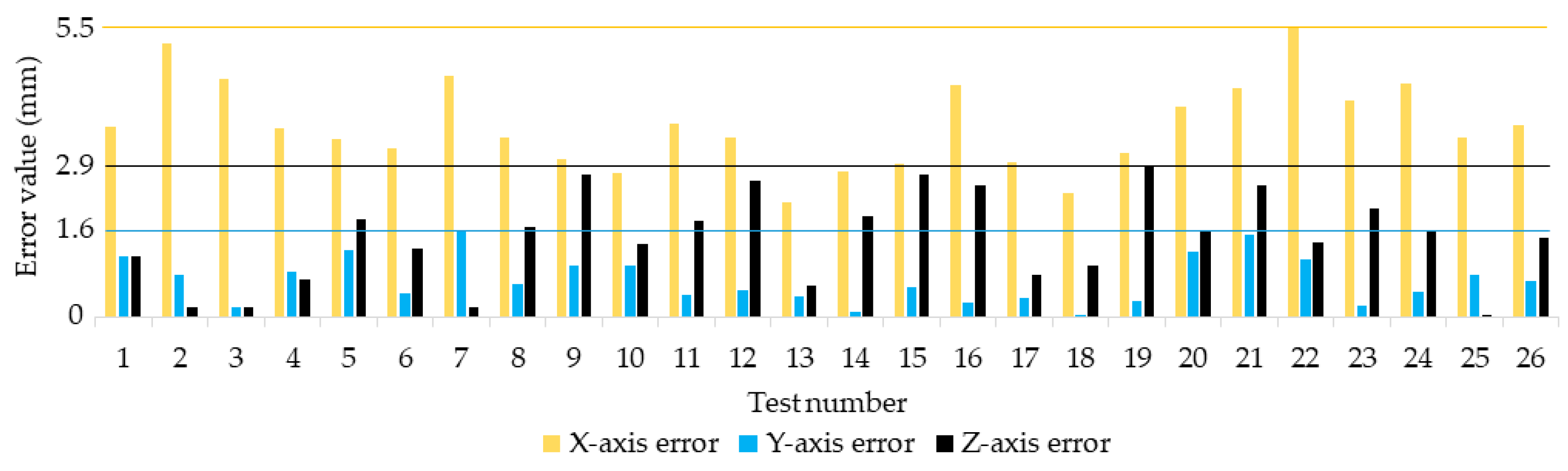

2.4.2. Calibration Error

3. Results and Discussion

3.1. Strawberry Detection

3.2. Position of Picking Points

3.3. Calibration Error

3.4. Robot Picking

4. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest


| Parameter | Value |
|---|---|
| Dimensions (mm) | 90 × 25 × 25 |
| Depth image resolution (pixels) | 848 × 480 |
| Depth field of view (°) | 87 × 58 |
| RGB image resolution (pixels) | 1280 × 720 |
| RGB field of view (°) | 69 × 42 |
| Frame rate (FPS) | 30 |
| Service distance (m) | 0.1–10 |

| Component | Description |
|---|---|
| CPU | Intel Core i7-11800H (2.30 GHz) |
| GPU hardware | NVIDIA GeForce RTX 3070 Laptop |
| GPU programming library | CUDA 11.6 and cuDNN 8.9 |
| Integrated development environment | PyCharm 2022.2.2 |
| Operating system | Windows 11 |

| Model | Parameters (millions) | Model Size (MB) | Frame Rate (FPS) |
|---|---|---|---|
| Baseline YOLOv7 model | 37.2 | 74.8 | 18.7 |
| Improved YOLOv7 model | 15.0 | 30.5 | 22.3 |
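
The relative savings implied by the comparison table can be checked with a few lines of arithmetic. The following is only an illustrative sketch: the helper name `relative_change` and the dictionary keys are assumptions introduced here, and the numbers are copied from the table.

```python
def relative_change(baseline: float, improved: float) -> float:
    """Return the signed percentage change from baseline to improved."""
    return (improved - baseline) / baseline * 100.0


# Values copied from the model comparison table (hypothetical key names).
baseline = {"params_m": 37.2, "size_mb": 74.8, "fps": 18.7}
improved = {"params_m": 15.0, "size_mb": 30.5, "fps": 22.3}

# Percentage change of each metric relative to the baseline model.
changes = {key: relative_change(baseline[key], improved[key]) for key in baseline}

for key, pct in changes.items():
    print(f"{key}: {pct:+.1f}%")
```

On these figures the improved model has roughly 60% fewer parameters, is roughly 59% smaller on disk, and runs at a roughly 19% higher frame rate than the baseline.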

| Test Number | Theoretical Coordinate (mm) | Actual Coordinate (mm) | Test Number | Theoretical Coordinate (mm) | Actual Coordinate (mm) |
|---|---|---|---|---|---|
| 1 | (−270.1, −523.2, −444.0) | (−266.5, −524.4, −442.9) | 14 | (−94.5, −664.6, −439.6) | (−91.8, −664.5, −441.5) |
| 2 | (−270.2, −571.0, −443.0) | (−265.0, −571.8, −443.1) | 15 | (−92.8, −713.6, −439.1) | (−89.9, −713.1, −441.8) |
| 3 | (−269.1, −620.1, −442.3) | (−264.6, −619.9, −442.4) | 16 | (−10.3, −517.2, −441.5) | (−5.9, −516.9, −439.0) |
| 4 | (−267.7, −668.3, −442.4) | (−264.1, −667.4, −441.7) | 17 | (−8.7, −566.1, −441.1) | (−5.7, −565.8, −440.3) |
| 5 | (−266.3, −717.1, −443.9) | (−262.9, −715.9, −442.0) | 18 | (−7.9, −615.4, −440.6) | (−5.6, −615.4, −441.6) |
| 6 | (−183.5, −520.6, −442.0) | (−180.3, −521.0, −443.3) | 19 | (−6.9, −662.9, −440.0) | (−3.8, −663.2, −442.9) |
| 7 | (−183.2, −569.1, −441.0) | (−178.6, −567.5, −441.1) | 20 | (−6.5, −711.2, −439.5) | (−2.6, −712.4, −441.2) |
| 8 | (−181.6, −618.0, −439.1) | (−178.2, −618.6, −440.8) | 21 | (77.3, −517.0, −441.8) | (81.6, −515.4, −439.3) |
| 9 | (−180.6, −666.5, −439.4) | (−177.6, −665.5, −442.1) | 22 | (78.4, −565.2, −441.2) | (83.9, −564.2, −442.6) |
| 10 | (−179.6, −715.4, −440.0) | (−176.8, −714.5, −441.4) | 23 | (79.6, −613.3, −440.8) | (83.7, −613.5, −442.9) |
| 11 | (−98.0, −519.4, −441.5) | (−94.3, −519.8, −439.7) | 24 | (80.2, −661.5, −440.6) | (84.6, −662.0, −442.2) |
| 12 | (−95.9, −568.0, −442.5) | (−92.5, −568.5, −439.9) | 25 | (81.5, −710.1, −440.5) | (84.9, −710.9, −440.5) |
| 13 | (−94.8, −616.2, −440.7) | (−92.6, −616.6, −411.2) | | | |
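
One way to summarise the gap between the theoretical and actual coordinates is a per-axis absolute error together with the straight-line (Euclidean) distance for each test point. The sketch below is an assumption about how such a summary could be computed, not the error definition used in the study; the function names are hypothetical, and only the first three rows of the table are included to keep the example short.

```python
import math
from statistics import mean
from typing import List, Tuple

Point = Tuple[float, float, float]


def axis_errors(theoretical: Point, actual: Point) -> Tuple[float, ...]:
    """Absolute difference along x, y and z, in mm."""
    return tuple(abs(a - t) for t, a in zip(theoretical, actual))


def euclidean_error(theoretical: Point, actual: Point) -> float:
    """Straight-line distance between the two points, in mm."""
    return math.dist(theoretical, actual)


# (theoretical, actual) pairs for test numbers 1-3, copied from the table.
pairs: List[Tuple[Point, Point]] = [
    ((-270.1, -523.2, -444.0), (-266.5, -524.4, -442.9)),
    ((-270.2, -571.0, -443.0), (-265.0, -571.8, -443.1)),
    ((-269.1, -620.1, -442.3), (-264.6, -619.9, -442.4)),
]

per_axis = [axis_errors(t, a) for t, a in pairs]
distances = [euclidean_error(t, a) for t, a in pairs]

# Mean absolute error per axis and mean Euclidean error over the sample.
mean_axis = tuple(mean(column) for column in zip(*per_axis))
mean_distance = mean(distances)

print("mean |dx|, |dy|, |dz| (mm):", [round(v, 2) for v in mean_axis])
print("mean Euclidean error (mm):", round(mean_distance, 2))
```

Extending `pairs` with the remaining rows gives the same summary over all 25 test points. A per-axis breakdown is useful here because it shows whether the offset is concentrated in one direction.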

| Experiment Number | Number of Ripe Strawberries | Number Successfully Picked | Picking Success Rate |
|---|---|---|---|
| 1 | 28 | 27 | 96.4% |
| 2 | 24 | 21 | 87.5% |
| 3 | 26 | 23 | 88.5% |
| 4 | 20 | 18 | 90.0% |
| Total | 98 | 89 | 90.8% |
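
The success rates in the table follow directly from the counts. As a minimal sketch (the names `trials` and `success_rate` are illustrative assumptions), the per-experiment and pooled rates can be recomputed as follows:

```python
def success_rate(ripe: int, picked: int) -> float:
    """Percentage of ripe strawberries that were picked successfully."""
    return picked / ripe * 100.0


# (ripe strawberries, successful picks) for experiments 1-4, from the table.
trials = [(28, 27), (24, 21), (26, 23), (20, 18)]

rates = [success_rate(ripe, picked) for ripe, picked in trials]

# The overall rate pools all fruit rather than averaging the four rates.
total_ripe = sum(ripe for ripe, _ in trials)
total_picked = sum(picked for _, picked in trials)
overall = success_rate(total_ripe, total_picked)

for number, rate in enumerate(rates, start=1):
    print(f"experiment {number}: {rate:.1f}%")
print(f"total: {total_picked}/{total_ripe} = {overall:.1f}%")
```

Pooling the counts weights each experiment by the number of fruit it contained, so the total can differ slightly from the unweighted mean of the four per-experiment rates.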

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Li, Y.; Wang, W.; Guo, X.; Wang, X.; Liu, Y.; Wang, D. Recognition and Positioning of Strawberries Based on Improved YOLOv7 and RGB-D Sensing. Agriculture 2024, 14, 624. https://doi.org/10.3390/agriculture14040624
