A Zero-Shot Image Classification Method of Ship Coating Defects Based on IDATLWGAN

Abstract

1. Introduction

- (1) This paper proposes a new zero-shot classification method for ship painting defects, based on deep adversarial transfer learning with a Wasserstein GAN (IDATLWGAN), to identify new, unknown classes of painting defects in the target domain.

- (2)

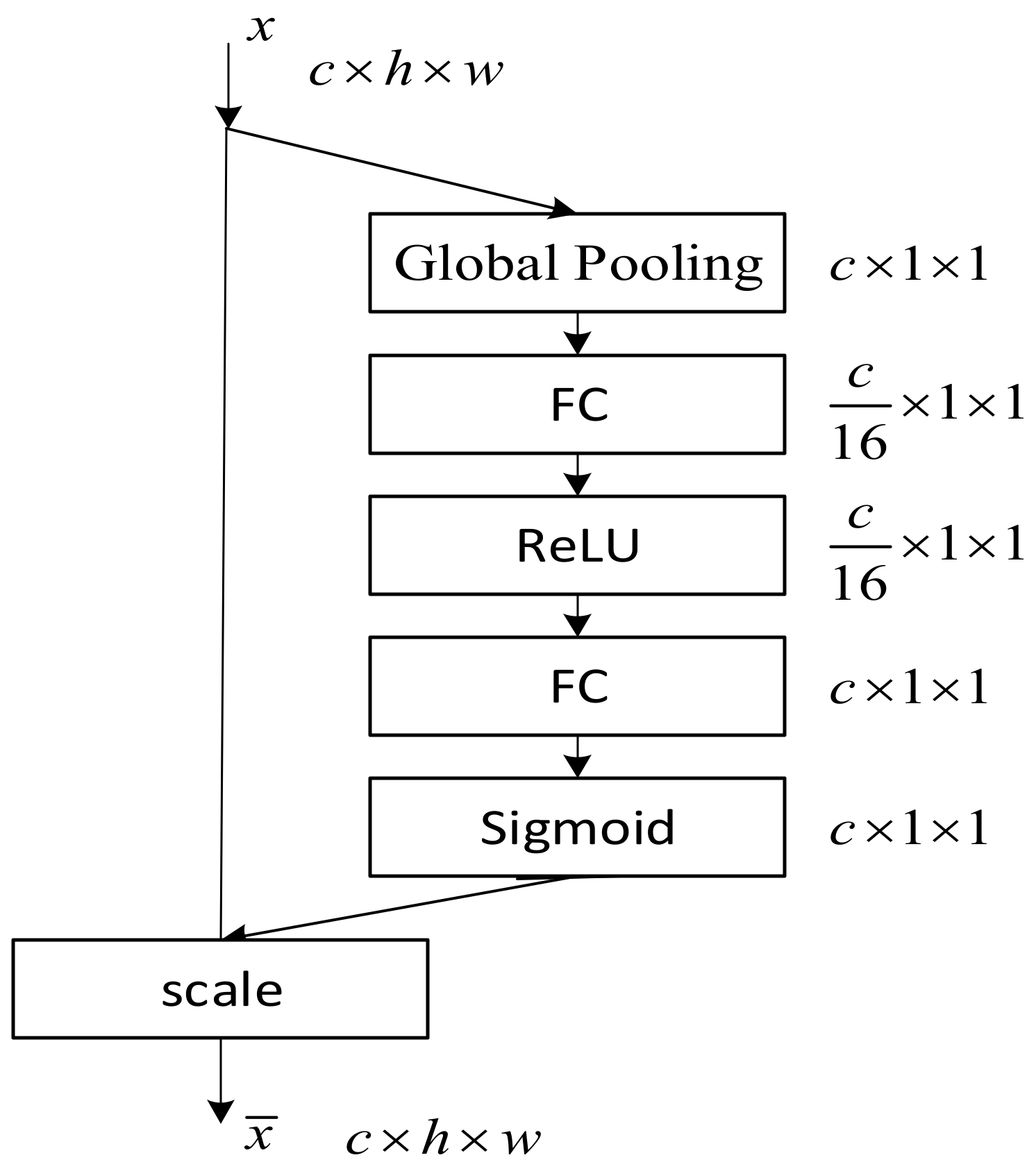

- In this paper, the Squeeze-and-Excitation (SE) module is introduced in the domain-invariant feature extractor and used in the transfer learning task, which is more capable of using global information to acquire important domain-invariant features selectively and suppress less useful ones.

- (3) Domain alignment discriminators are introduced into the deep transfer model, which learns domain-invariant and class-separable features through two-stage adversarial training in order to classify defects accurately.

2. Theoretical Background

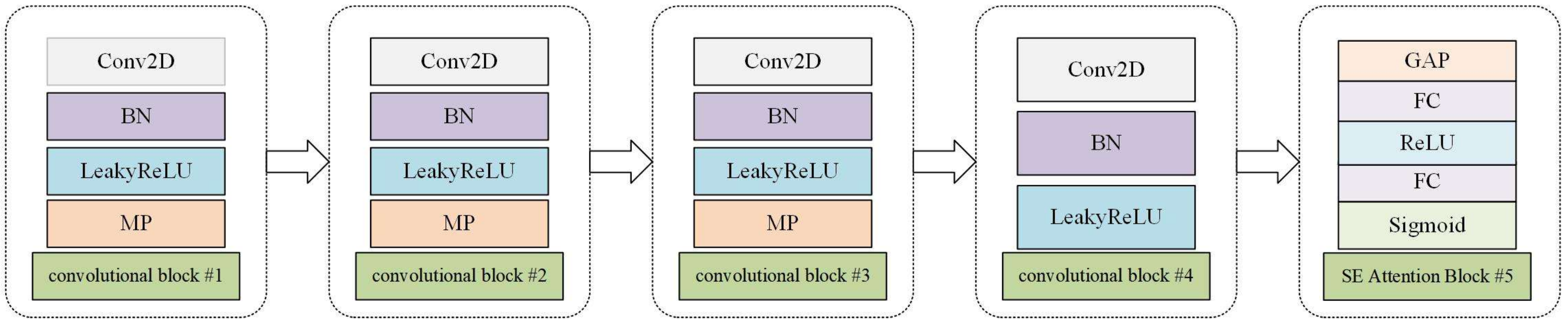

2.1. Classification Domain CNN Structure

2.2. SE Attention Mechanisms

2.3. Adversarial-Based Domain Adaptation Training

3. Proposed Methodology

3.1. Problem Definition and Symbolic Description

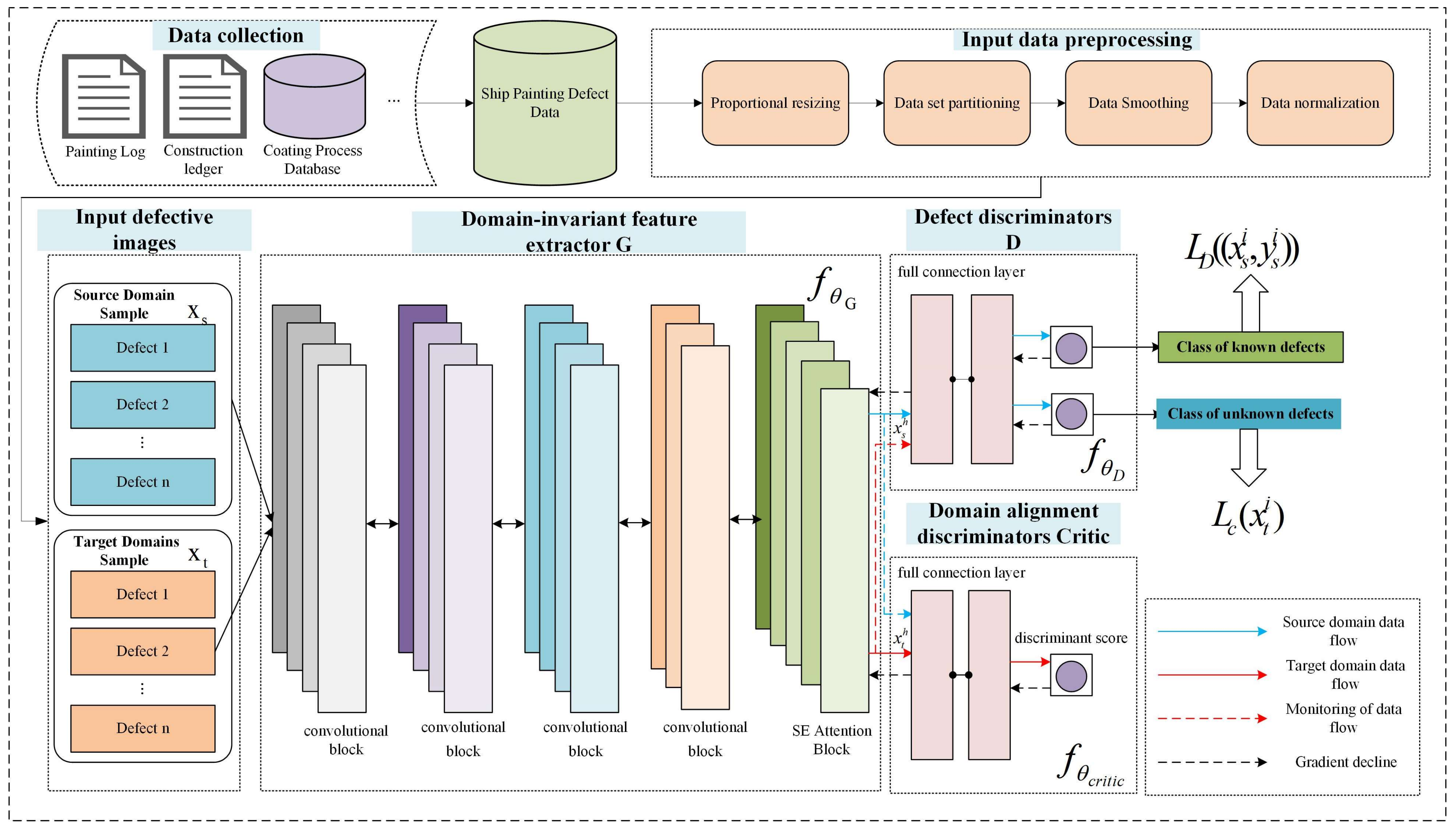

3.2. Overall Network Framework

3.3. Loss Function

3.4. Training Process Optimization and Implementation Details

3.5. Training Process

- STEP1: Data Collection: Original ship painting defect datasets are collected from the shipyard’s painting logs, construction ledgers, and painting process database.

- STEP2: Data Preprocessing: Image preprocessing consists of four steps: proportional resizing, dataset partitioning, data smoothing, and data normalization.

- STEP3: The ship painting defect training set obtained in STEP2 is divided into source domain and target domain data.

- STEP4: The source and target domain defect training sets are fed together into the domain-invariant feature extractor G, which extracts high-level domain-invariant features from the defect images of both domains.

- STEP5: Using the learned high-level domain-invariant features, the defect discriminator D classifies the known and unknown unlabeled defects in the target domain, while the domain alignment discriminators (critic) further reduce the difference between the marginal probability distributions of the source and target domains.

- STEP6: Update the parameters of the domain-invariant feature extractor G, the defect discriminator model D, and the domain alignment discriminators (critic).

- STEP7: Repeat STEP4 to STEP6, iteratively updating the parameters of each module with the adversarial training strategy until convergence, then store all parameters as the trained optimal parameter estimates.

- STEP8: All test datasets are used to evaluate the trained IDATLWGAN model and validate its effectiveness.
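STEP2 names four preprocessing operations but not the operators behind them. The following is a minimal NumPy sketch of one plausible realization, under assumptions that are not from the paper: nearest-neighbour scaling of the longer side to 128 pixels with zero padding (chosen to match the 3 × 128 × 128 input layer of the feature extractor), a 3 × 3 mean filter for smoothing, per-image min-max normalization to [0, 1], and a seeded random train/test split. The function names (`resize_keep_ratio`, `mean_filter3`, `min_max_normalize`, `preprocess`, `split_indices`) are hypothetical.

```python
import numpy as np

def resize_keep_ratio(img, size=128):
    """Proportional resize (assumed nearest-neighbour): scale the longer side
    to `size`, then zero-pad the shorter side to a size x size image."""
    h, w, c = img.shape
    scale = size / max(h, w)
    nh = min(size, max(1, round(h * scale)))
    nw = min(size, max(1, round(w * scale)))
    rows = np.minimum((np.arange(nh) / scale).astype(int), h - 1)
    cols = np.minimum((np.arange(nw) / scale).astype(int), w - 1)
    out = np.zeros((size, size, c), dtype=np.float64)
    top, left = (size - nh) // 2, (size - nw) // 2
    out[top:top + nh, left:left + nw] = img[rows][:, cols]
    return out

def mean_filter3(img):
    """Smoothing (assumed): 3 x 3 mean filter per channel, edge-replicated borders."""
    h, w, c = img.shape
    padded = np.pad(img, ((1, 1), (1, 1), (0, 0)), mode="edge")
    acc = np.zeros((h, w, c), dtype=np.float64)
    for dy in range(3):
        for dx in range(3):
            acc += padded[dy:dy + h, dx:dx + w]
    return acc / 9.0

def min_max_normalize(img):
    """Normalization (assumed): rescale pixel values of one image to [0, 1]."""
    lo, hi = img.min(), img.max()
    if hi == lo:
        return np.zeros_like(img, dtype=np.float64)
    return (img - lo) / (hi - lo)

def preprocess(img):
    """HWC image -> CHW float array in [0, 1] with shape (3, 128, 128)."""
    x = min_max_normalize(mean_filter3(resize_keep_ratio(img.astype(np.float64))))
    return x.transpose(2, 0, 1)

def split_indices(n, test_fraction=0.5, seed=0):
    """Dataset partitioning (assumed): seeded shuffle, then train/test split."""
    idx = np.random.default_rng(seed).permutation(n)
    n_test = int(round(n * test_fraction))
    return idx[n_test:], idx[:n_test]

rng = np.random.default_rng(1)
image = rng.integers(0, 256, size=(200, 100, 3)).astype(np.uint8)
x = preprocess(image)
train_idx, test_idx = split_indices(300, test_fraction=0.5)
print(x.shape)                        # (3, 128, 128)
print(len(train_idx), len(test_idx))  # 150 150
```

The 0.5 test fraction in the usage lines only mirrors the 150/150 target-domain train/test split reported for each defect class; the paper does not state how the split was drawn.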

| Algorithm 1: The overall training process for the IDATLWGAN model |
|---|
| Require: source and target domain datasets and ; mini-batch size n; learning rates α1, α2; number of updates of the domain alignment discriminators (critic) in each iteration; and weight balance coefficients λ, η, and ρ for each loss function. |
| Initialize the parameters of the different networks , , and . |
| While i < maximum number of iterations and the parameters of each module have not converged, do |
| 1: ← randomly sampled mini-batch from the real ship painting defect source domain dataset. |
| 2: ← randomly sampled mini-batch from the real ship painting defect target domain dataset. |
| 3: For i = 1, …, do |
| 4: , |
| 5: Sample from the pairs and yield |
| 6: |
| 7: |
| 8: |
| 9: |
| 10: |
| 11: End For |
| 12: |
| 13: End While |
| Output: optimal parameter estimates for each module , , and . |
| End |
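Only the structure of Algorithm 1 survives here: an inner loop that updates the domain alignment critic several times per iteration before G and D are updated. The following toy sketch illustrates that inner critic loop alone, under simplifying assumptions that are not the authors' design: the features are fixed, the critic is linear, f(h) = w · h, and the 1-Lipschitz constraint is enforced by projecting w onto the unit ball (a stand-in for whatever weight clipping or gradient penalty the authors used). By Kantorovich-Rubinstein duality, maximizing mean f(h_s) - mean f(h_t) over 1-Lipschitz critics gives the Wasserstein-1 distance; restricting f to linear functions gives a lower bound that equals the distance between the batch means and is tight when the two feature distributions differ only by a translation. The names `critic_updates` and `n_critic` are hypothetical; `n_critic` plays the role of the per-iteration critic update count in Require.

```python
import numpy as np

def critic_updates(h_s, h_t, w, n_critic=50, lr=0.5):
    """Projected gradient ascent for a linear critic f(h) = w @ h.

    Objective: mean f(h_s) - mean f(h_t) = w @ (mean(h_s) - mean(h_t)).
    Keeping ||w|| <= 1 makes f 1-Lipschitz, so the objective is a lower
    bound on the Wasserstein-1 distance between the two feature batches.
    """
    grad = h_s.mean(axis=0) - h_t.mean(axis=0)  # gradient does not depend on w
    for _ in range(n_critic):
        w = w + lr * grad                         # ascent step
        norm = np.linalg.norm(w)
        if norm > 1.0:                            # project back onto the unit ball
            w = w / norm
    return w, float(w @ grad)                     # critic weights, distance estimate

rng = np.random.default_rng(0)
h_s = rng.normal(size=(256, 8))                       # toy "source" features
h_t = rng.normal(size=(256, 8)) + 1.5 * np.eye(8)[0]  # "target" shifted along axis 0
w, estimate = critic_updates(h_s, h_t, w=0.1 * rng.normal(size=8))
gap = np.linalg.norm(h_s.mean(axis=0) - h_t.mean(axis=0))
print(abs(estimate - gap) < 1e-9)  # True
```

In a full iteration, G would then take a step that reduces this estimate (weighted by the balance coefficients) and D a step on the source classification loss; those updates are left out because the corresponding loss expressions are not given here.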

4. Experiments Setup and Results

4.1. Description of the Dataset

4.2. Experimental Environment

4.3. Evaluation Metrics

4.4. Experimental Results and Analysis

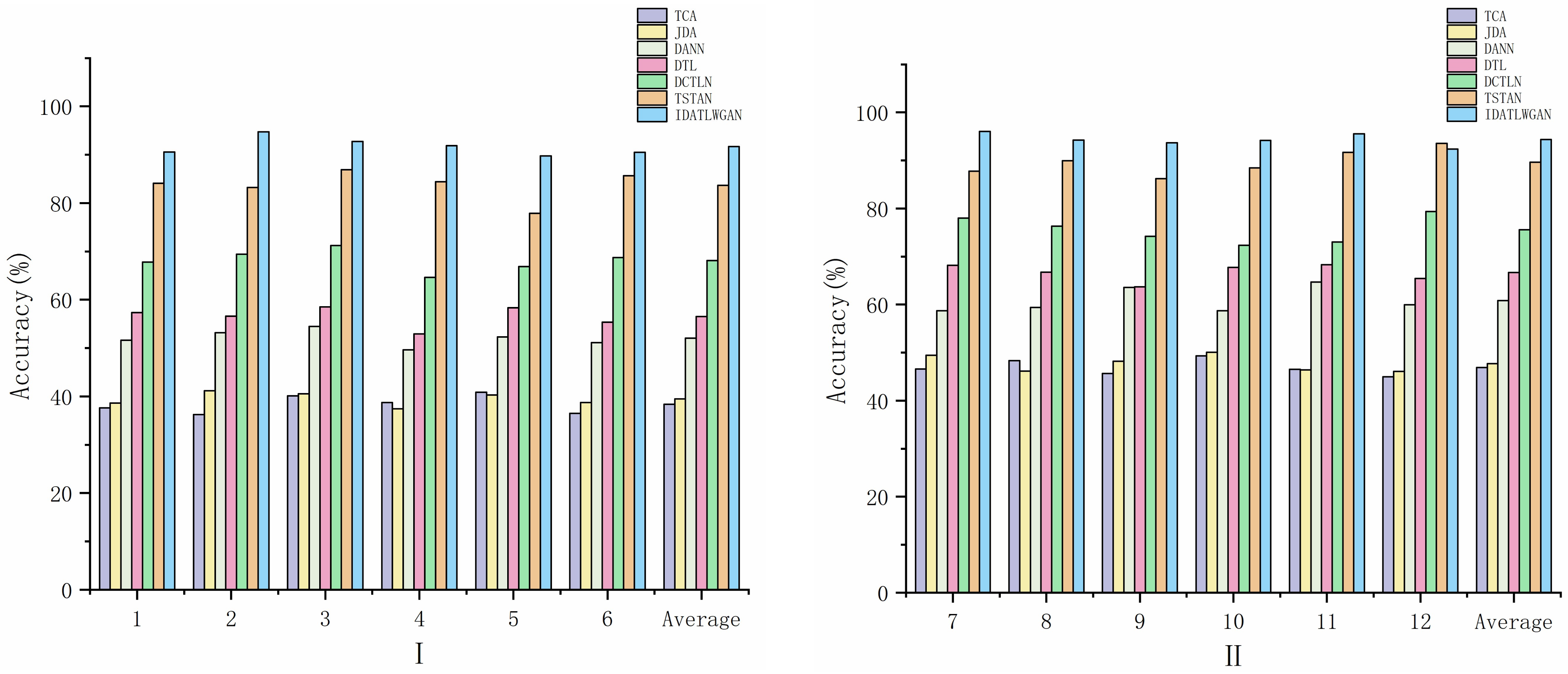

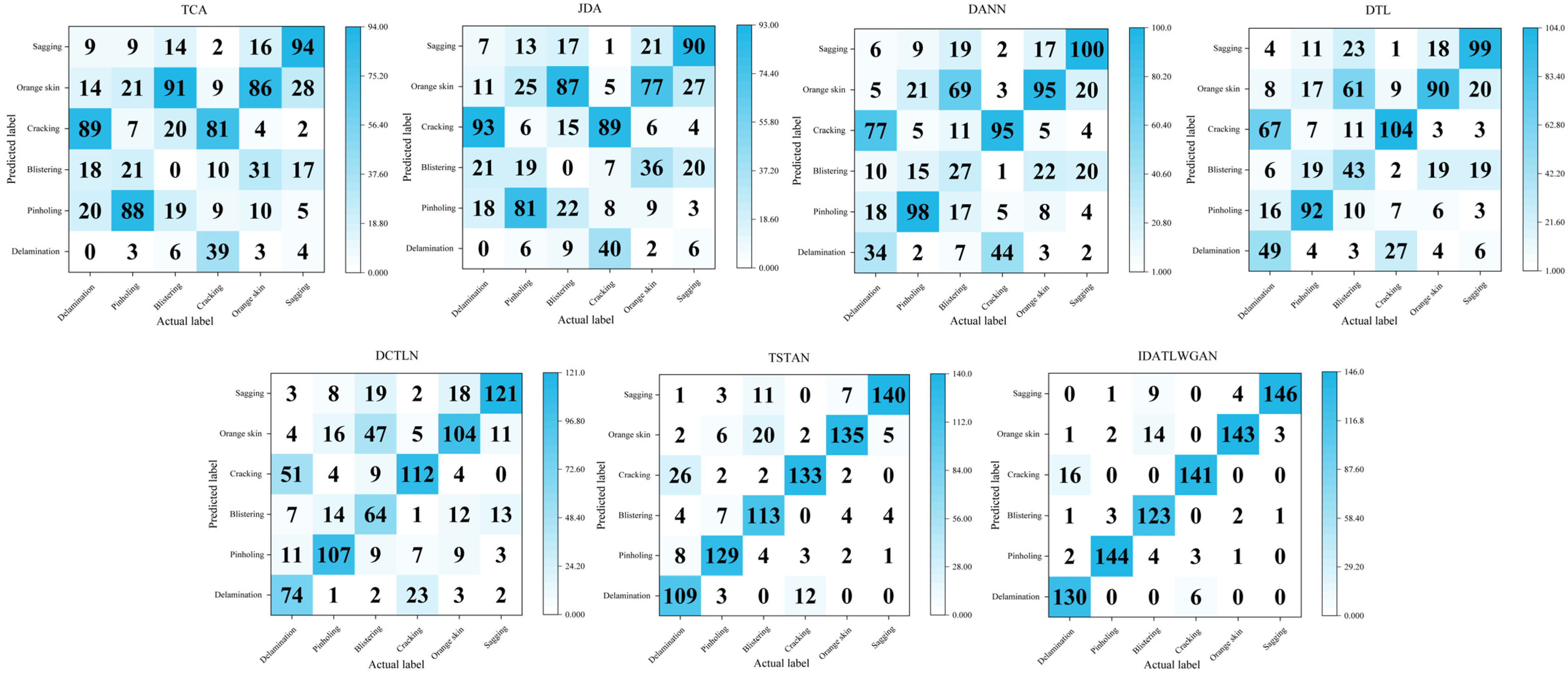

4.4.1. Performance Comparison of Different Transfer Learning Models on Different Painting Defect Categories

4.4.2. Performance Comparison of Different Transfer Learning Models on Different Transfer Tasks

5. Conclusions and Future Work

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest


| Variable and Function Symbols | Define |

|---|---|

| , | Source and target domain datasets |

| , | High-level domain invariant features of the source and target domains |

| , | Marginal probability distributions for source and target domains |

| Domain-invariant feature extractor | |

| Defect discriminators modeling | |

| Domain alignment discriminators | |

| Set of weight parameters and bias parameters for each layer in the domain-invariant feature extractor | |

| Set of weight parameters and bias parameters for each layer in the Defect discriminators model | |

| Set of weight parameters and bias parameters for each layer in the domain alignment discriminators | |

| α | Penalty coefficients for the gradient inversion layer |

| λ | Weight balance coefficients for the Defect discriminators model |

| η, ρ | Weight balance coefficients for domain alignment discriminators |

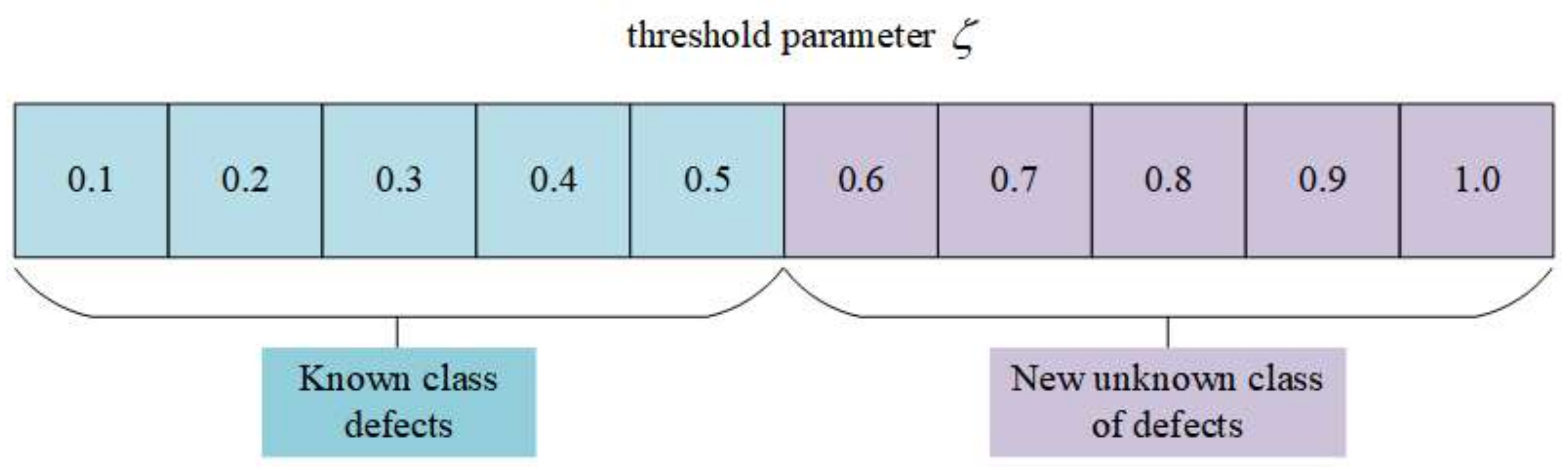

| Thresholding parameters for Defect discriminator models | |

| α1 | The learning rate of domain-invariant feature extractor and Defect discriminator models |

| α2 | The learning rate of domain alignment discriminators |

| Layer | Filter Size | Stride | Padding | Output Size |
|---|---|---|---|---|
| Input layer (real samples x) | — | — | — | 3 × 128 × 128 |
| Conv2D + BN + LeakyReLU | 3 × 3 | 1 | 1 | 128 × 128 × 128 |
| Max pooling layer | 2 × 2 | 2 | — | 128 × 64 × 64 |
| Conv2D + BN + LeakyReLU | 3 × 3 | 1 | 1 | 256 × 64 × 64 |
| Max pooling layer | 2 × 2 | 2 | — | 256 × 32 × 32 |
| Conv2D + BN + LeakyReLU | 3 × 3 | 1 | 1 | 512 × 32 × 32 |
| Max pooling layer | 2 × 2 | 2 | — | 512 × 16 × 16 |
| Conv2D + BN + LeakyReLU | 3 × 3 | 1 | 1 | 1024 × 16 × 16 |
| Global average pooling layer | — | — | — | 1024 × 1 × 1 |
| FC + ReLU | — | — | — | 64 × 1 × 1 |
| FC + sigmoid | — | — | — | 1024 × 1 × 1 |
| Output layer | — | — | — | 1024 × 16 × 16 |
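The last four rows of the table follow the Squeeze-and-Excitation pattern: global average pooling squeezes the 1024 × 16 × 16 map to a 1024-dimensional channel descriptor, FC + ReLU reduces it to 64 (a reduction ratio of 16), FC + sigmoid expands it back to 1024 channel weights in (0, 1), and the output rescales each input channel, which is why it keeps the 1024 × 16 × 16 shape. The NumPy forward pass below is a sketch of that computation with random weights; the names `conv_out` and `se_forward`, the weight initialization, and the use of bias terms are assumptions. It also checks the spatial sizes of the convolution and pooling stack with the standard output-size formula.

```python
import numpy as np

def conv_out(size, kernel, stride, padding=0):
    """Spatial output size of a convolution or pooling layer."""
    return (size + 2 * padding - kernel) // stride + 1

def se_forward(x, w1, b1, w2, b2):
    """Squeeze-and-Excitation forward pass on a (C, H, W) feature map."""
    z = x.mean(axis=(1, 2))                   # squeeze: global average pooling -> (C,)
    s = np.maximum(w1 @ z + b1, 0.0)          # FC + ReLU -> (C // r,)
    a = 1.0 / (1.0 + np.exp(-(w2 @ s + b2)))  # FC + sigmoid -> (C,), weights in (0, 1)
    return x * a[:, None, None]               # rescale each channel

# Four 3x3 convolutions (stride 1, padding 1); a 2x2, stride-2 max pool
# follows each of the first three, as in the table.
size = 128
for i in range(4):
    size = conv_out(size, 3, 1, 1)
    if i < 3:
        size = conv_out(size, 2, 2)
print(size)  # 16

C, r = 1024, 16
rng = np.random.default_rng(0)
x = rng.normal(size=(C, 16, 16))
w1, b1 = rng.normal(scale=0.02, size=(C // r, C)), np.zeros(C // r)
w2, b2 = rng.normal(scale=0.02, size=(C, C // r)), np.zeros(C)
y = se_forward(x, w1, b1, w2, b2)
print(y.shape)  # (1024, 16, 16)
```

Because each channel weight lies in (0, 1), the block can only attenuate channels relative to one another, which is the "emphasize useful features, suppress less useful ones" behaviour described for the SE module.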

| Defect Class | Sample Image | Source Domain Samples | Target Domain Training Samples | Target Domain Test Samples | Label |
|---|---|---|---|---|---|
| Sagging |  | 300 | 150 | 150 | SA |
| Orange skin |  | 300 | 150 | 150 | OS |
| Cracking |  | 300 | 150 | 150 | CR |
| Blistering |  | 300 | 150 | 150 | BL |
| Pinholing |  | 300 | 150 | 150 | PH |
| Delamination |  | 300 | 150 | 150 | DF |

| Class | Predicted Positive Class | Predicted Negative Class |
|---|---|---|
| Actual positive class | TP | FN |
| Actual negative class | FP | TN |
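The confusion-matrix entries give the usual definitions Precision = TP / (TP + FP), Recall = TP / (TP + FN), and F1 = 2 · Precision · Recall / (Precision + Recall). With six defect classes, each class is treated one-vs-rest. The sketch below computes per-class values from a multiclass confusion matrix (rows are actual classes, columns are predicted classes) and their macro average; whether the Precision and Recall columns reported below are macro-averaged is an assumption, and the function names are hypothetical.

```python
def per_class_metrics(cm):
    """cm[i][j] = number of samples of actual class i predicted as class j."""
    k = len(cm)
    results = []
    for c in range(k):
        tp = cm[c][c]
        fn = sum(cm[c]) - tp                       # actually c, predicted as another class
        fp = sum(cm[r][c] for r in range(k)) - tp  # predicted c, actually another class
        precision = tp / (tp + fp) if tp + fp else 0.0
        recall = tp / (tp + fn) if tp + fn else 0.0
        f1 = (2 * precision * recall / (precision + recall)
              if precision + recall else 0.0)
        results.append((precision, recall, f1))
    return results

def macro_average(results):
    """Unweighted mean of precision, recall, and F1 over classes."""
    n = len(results)
    return tuple(sum(m[i] for m in results) / n for i in range(3))

cm = [[8, 2],
      [1, 9]]
per_class = per_class_metrics(cm)
# class 0: precision 8/9, recall 8/10, F1 16/19
# class 1: precision 9/11, recall 9/10, F1 18/21
macro = macro_average(per_class)
```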

| Model | Parameters | Time |
|---|---|---|
| TCA | 182 k | 1124 s |
| JDA | 173 k | 973 s |
| DANN | 227 k | 572 s |
| DTL | 235 k | 721 s |
| DCTLN | 166 k | 1028 s |
| TSTAN | 112 k | 677 s |
| IDATLWGAN | 91 k | 559 s |

| Model | F1-Score (SA) | F1-Score (OS) | F1-Score (BL) | F1-Score (CR) | F1-Score (PH) | F1-Score (DF) | Precision | Recall |
|---|---|---|---|---|---|---|---|---|
| TCA | 0.695 | 0.676 | 0.648 | 0.712 | 0.768 | 0.789 | 0.634 | 0.637 |
| JDA | 0.487 | 0.497 | 0.573 | 0.539 | 0.572 | 0.625 | 0.519 | 0.485 |
| DANN | 0.893 | 0.735 | 0.826 | 0.867 | 0.848 | 0.864 | 0.745 | 0.812 |
| DTL | 0.805 | 0.756 | 0.874 | 0.882 | 0.865 | 0.895 | 0.796 | 0.854 |
| DCTLN | 0.823 | 0.628 | 0.889 | 0.943 | 0.874 | 0.913 | 0.832 | 0.897 |
| TSTAN | 0.945 | 0.784 | 0.876 | 0.834 | 0.865 | 0.876 | 0.873 | 0.749 |
| IDATLWGAN | 0.932 | 0.912 | 0.957 | 0.935 | 0.881 | 0.943 | 0.994 | 0.995 |

| Task Group | Task | Source Domain→Target Domain | Source Domain Dataset Defect Classes | Target Domain Dataset Defect Classes |
|---|---|---|---|---|
| I | 1 | A→C | SA, OS, CR, PH | SA, OS, CR, PH, BL, DF |
| | 2 | C→A | SA, OS, CR, PH | SA, OS, CR, PH, BL, DF |
| | 3 | B→E | SA, OS, CR, PH | SA, OS, CR, PH, BL, DF |
| | 4 | E→B | SA, OS, CR, PH | SA, OS, CR, PH, BL, DF |
| | 5 | C→D | SA, OS, CR, PH | SA, OS, CR, PH, BL, DF |
| | 6 | D→C | SA, OS, CR, PH | SA, OS, CR, PH, BL, DF |
| II | 7 | B→A | SA, OS, CR, PH | SA, OS, CR, PH, BL |
| | 8 | B→A | SA, OS, CR, PH | SA, OS, CR, PH, DF |
| | 9 | D→E | SA, OS, CR, PH | SA, OS, CR, PH, BL |
| | 10 | D→E | SA, OS, CR, PH | SA, OS, CR, PH, DF |
| | 11 | F→B | SA, OS, CR, PH | SA, OS, CR, PH, BL |
| | 12 | F→B | SA, OS, CR, PH | SA, OS, CR, PH, DF |

| Painting Condition | Air Temperature (°C) | Relative Humidity (%) |
|---|---|---|
| A | 15 | 50 |
| B | 15 | 60 |
| C | 25 | 50 |
| D | 25 | 60 |
| E | 35 | 50 |
| F | 35 | 60 |
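In the zero-shot setting, the unknown classes of a task are the target-domain classes that never appear in the source domain, and the domain shift of each task can be read off the painting conditions. The snippet below encodes the task and painting-condition tables as given and derives both for every task; the data structures and the helper name `describe` are illustrative, not part of the original method.

```python
CONDITIONS = {  # painting condition -> (air temperature in °C, relative humidity in %)
    "A": (15, 50), "B": (15, 60), "C": (25, 50),
    "D": (25, 60), "E": (35, 50), "F": (35, 60),
}
KNOWN = ["SA", "OS", "CR", "PH"]  # source-domain classes, identical in every task
TASKS = {  # task -> (source condition, target condition, target-domain classes)
    1: ("A", "C", KNOWN + ["BL", "DF"]), 2: ("C", "A", KNOWN + ["BL", "DF"]),
    3: ("B", "E", KNOWN + ["BL", "DF"]), 4: ("E", "B", KNOWN + ["BL", "DF"]),
    5: ("C", "D", KNOWN + ["BL", "DF"]), 6: ("D", "C", KNOWN + ["BL", "DF"]),
    7: ("B", "A", KNOWN + ["BL"]), 8: ("B", "A", KNOWN + ["DF"]),
    9: ("D", "E", KNOWN + ["BL"]), 10: ("D", "E", KNOWN + ["DF"]),
    11: ("F", "B", KNOWN + ["BL"]), 12: ("F", "B", KNOWN + ["DF"]),
}

def describe(task):
    """Return the unknown (zero-shot) classes and the condition change of a task."""
    src, tgt, target_classes = TASKS[task]
    unknown = sorted(set(target_classes) - set(KNOWN))
    (temp_s, rh_s), (temp_t, rh_t) = CONDITIONS[src], CONDITIONS[tgt]
    shift = {"temperature": temp_t - temp_s, "humidity": rh_t - rh_s}
    return unknown, shift

for task in TASKS:
    unknown, shift = describe(task)
    print(task, f"{TASKS[task][0]}->{TASKS[task][1]}", unknown, shift)
```

Read this way, group I asks the model to recognize two unseen classes at once, while group II introduces one unseen class per task; the condition shifts range from a temperature-only change (A→C) to a combined temperature and humidity change (B→E).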

| Task Group | Task | TCA | JDA | DANN | DTL | DCTLN | TSTAN | IDATLWGAN |
|---|---|---|---|---|---|---|---|---|
| I | 1 | 37.65 | 38.64 | 51.63 | 57.34 | 67.78 | 84.08 | 90.56 |
| | 2 | 36.24 | 41.18 | 53.15 | 56.61 | 69.41 | 83.23 | 94.74 |
| | 3 | 40.14 | 40.56 | 54.48 | 58.56 | 71.22 | 86.89 | 92.78 |
| | 4 | 38.78 | 37.44 | 49.67 | 53.00 | 64.67 | 84.33 | 91.89 |
| | 5 | 40.86 | 40.33 | 52.29 | 58.37 | 66.87 | 77.87 | 89.77 |
| | 6 | 36.51 | 38.72 | 51.11 | 55.35 | 68.73 | 85.63 | 90.51 |
| | Average | 38.36 | 39.48 | 52.05 | 56.54 | 68.11 | 83.67 | 91.71 |
| II | 7 | 46.56 | 49.45 | 58.73 | 68.18 | 78.02 | 87.75 | 96.03 |
| | 8 | 48.31 | 46.14 | 59.41 | 66.73 | 76.32 | 89.98 | 94.25 |
| | 9 | 45.68 | 48.21 | 63.59 | 63.68 | 74.21 | 86.23 | 93.67 |
| | 10 | 49.31 | 50.08 | 58.73 | 67.74 | 72.36 | 88.43 | 94.21 |
| | 11 | 46.54 | 46.37 | 64.67 | 68.29 | 73.03 | 91.71 | 95.57 |
| | 12 | 44.95 | 46.08 | 59.96 | 65.43 | 79.37 | 93.58 | 92.39 |
| | Average | 46.89 | 47.72 | 60.85 | 66.68 | 75.55 | 89.61 | 94.35 |
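The Average rows can be checked against the per-task values. The snippet below copies the table values, recomputes each group mean, asserts that it agrees with the reported average to within rounding (0.005 plus a small floating-point margin), and counts the tasks on which IDATLWGAN has the highest value; the only exception is task 12, where TSTAN is higher.

```python
MODELS = ["TCA", "JDA", "DANN", "DTL", "DCTLN", "TSTAN", "IDATLWGAN"]
GROUP_I = [  # tasks 1-6, columns in MODELS order
    [37.65, 38.64, 51.63, 57.34, 67.78, 84.08, 90.56],
    [36.24, 41.18, 53.15, 56.61, 69.41, 83.23, 94.74],
    [40.14, 40.56, 54.48, 58.56, 71.22, 86.89, 92.78],
    [38.78, 37.44, 49.67, 53.00, 64.67, 84.33, 91.89],
    [40.86, 40.33, 52.29, 58.37, 66.87, 77.87, 89.77],
    [36.51, 38.72, 51.11, 55.35, 68.73, 85.63, 90.51],
]
GROUP_II = [  # tasks 7-12
    [46.56, 49.45, 58.73, 68.18, 78.02, 87.75, 96.03],
    [48.31, 46.14, 59.41, 66.73, 76.32, 89.98, 94.25],
    [45.68, 48.21, 63.59, 63.68, 74.21, 86.23, 93.67],
    [49.31, 50.08, 58.73, 67.74, 72.36, 88.43, 94.21],
    [46.54, 46.37, 64.67, 68.29, 73.03, 91.71, 95.57],
    [44.95, 46.08, 59.96, 65.43, 79.37, 93.58, 92.39],
]
REPORTED = {
    "I": [38.36, 39.48, 52.05, 56.54, 68.11, 83.67, 91.71],
    "II": [46.89, 47.72, 60.85, 66.68, 75.55, 89.61, 94.35],
}

def column_means(rows):
    """Mean of each column (one column per model)."""
    return [sum(col) / len(rows) for col in zip(*rows)]

for name, rows in (("I", GROUP_I), ("II", GROUP_II)):
    for model, mean, reported in zip(MODELS, column_means(rows), REPORTED[name]):
        assert abs(mean - reported) <= 0.0051, (name, model, mean)

# Number of tasks where IDATLWGAN (last column) has the highest value.
best = sum(max(row) == row[-1] for row in GROUP_I + GROUP_II)
print(best)  # 11
```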

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Bu, H.; Yang, T.; Hu, C.; Zhu, X.; Ge, Z.; Yan, Z.; Tang, Y. A Zero-Shot Image Classification Method of Ship Coating Defects Based on IDATLWGAN. Coatings 2024, 14, 464. https://doi.org/10.3390/coatings14040464

Bu H, Yang T, Hu C, Zhu X, Ge Z, Yan Z, Tang Y. A Zero-Shot Image Classification Method of Ship Coating Defects Based on IDATLWGAN. Coatings. 2024; 14(4):464. https://doi.org/10.3390/coatings14040464

Chicago/Turabian Style: Bu, Henan, Teng Yang, Changzhou Hu, Xianpeng Zhu, Zikang Ge, Zhuwen Yan, and Yingxin Tang. 2024. "A Zero-Shot Image Classification Method of Ship Coating Defects Based on IDATLWGAN" Coatings 14, no. 4: 464. https://doi.org/10.3390/coatings14040464

APA Style: Bu, H., Yang, T., Hu, C., Zhu, X., Ge, Z., Yan, Z., & Tang, Y. (2024). A Zero-Shot Image Classification Method of Ship Coating Defects Based on IDATLWGAN. Coatings, 14(4), 464. https://doi.org/10.3390/coatings14040464