Simple Summary

Humans have the remarkable ability to make thousands of different facial expressions thanks to two different brain pathways for facial expressions: the Voluntary Pathway, which controls intentional expressions, and the Involuntary Pathway, which is activated for spontaneous expressions. These two pathways could also influence the left and right sides of the face differently when we make a posed or a spontaneous smile, an issue that has not been studied carefully before. In two experiments, we found a double-peak pattern. Compared with the felt smile, the posed smile involves a faster and wider movement of the left corner of the mouth. The right corner, in contrast, decelerates early in the second phase of the movement, after the speed peak. Our findings help clarify the lateralized bases of emotional expression.

Abstract

Humans can recombine thousands of different facial expressions. This variability is due to the ability to voluntarily or involuntarily modulate emotional expressions, which, in turn, depends on the existence of two anatomically separate pathways. The Voluntary (VP) and Involuntary (IP) pathways mediate the production of posed and spontaneous facial expressions, respectively, and might also affect the left and right sides of the face differently. This aspect has been neglected in the literature on emotion, where posed rather than genuine expressions are often used as stimuli. Two experiments with different induction methods were specifically designed to investigate the unfolding of spontaneous and posed facial expressions of happiness along the facial vertical axis (left, right) with a high-definition 3-D optoelectronic system. In both experiments, reliable spatial and velocity kinematic patterns distinguished spontaneous expressions from posed facial movements. Moreover, VP activation produced a lateralization effect: compared with the felt smile, the posed smile involved an initial acceleration of the left corner of the mouth, while an early deceleration of the right corner occurred in the second phase of the movement, after the velocity peak.

1. Introduction

The human face has 43 muscles, which can stretch, lift, and contort into thousands of combinations involving different muscles at different times and with different intensities [1]. In neuroanatomical terms, movement of the human face is controlled by two cranial nerves, the facial nerve (cranial nerve VII) and the trigeminal nerve (cranial nerve V). The facial nerve originates in the two facial nuclei, located on either side of the midline in the pons. It controls the superficial muscles attached to the skin, which are primarily responsible for facial expressions. These nuclei do not communicate directly with each other, which is why emotional expression can vary in intensity across a vertical axis (i.e., left vs. right). Differences can also occur across a horizontal axis (i.e., upper vs. lower area), given that the facial nerve has five major branches, each serving a different portion of the face. In particular, the upper face (i.e., eye area) is controlled differently from the lower face (i.e., mouth area [2,3]). The upper part of the face receives bilateral (ipsilateral and contralateral) input, whereas the lower part is controlled primarily by contralateral input [4,5]. Differences in the lateralization of facial expressions may therefore result from a lack of communication between the facial nuclei. These differences are mainly observed in the lower part of the face, given the predominantly contralateral control of the facial nerve branch responsible for contracting the muscles around the mouth. These asymmetries should be particularly amplified during posed expressions of happiness, which are controlled by the Voluntary Pathway. Indeed, emotional expressions can be voluntarily or involuntarily modulated depending on the recruitment of two anatomically separate pathways for the production of facial expressions: the Voluntary Pathway (VP) and the Involuntary Pathway (IP [6]).
The former involves input from the primary motor cortex and is primarily responsible for voluntary expression. The latter is a subcortical system that is primarily responsible for spontaneous expression. The contraction of facial muscles related to genuine emotion originates from subcortical brain areas that provide excitatory input to the facial nerve nucleus via extrapyramidal motor tracts. In contrast, posed expressions are controlled by impulses of the pyramidal tracts from the primary motor cortex [7,8,9]. Therefore, small changes in the dynamic development of a facial display may characterize and distinguish genuine from posed facial expressions in each of the four quadrants resulting from the intersection of the vertical and horizontal axes, a topic so far neglected (but see [5,10]).

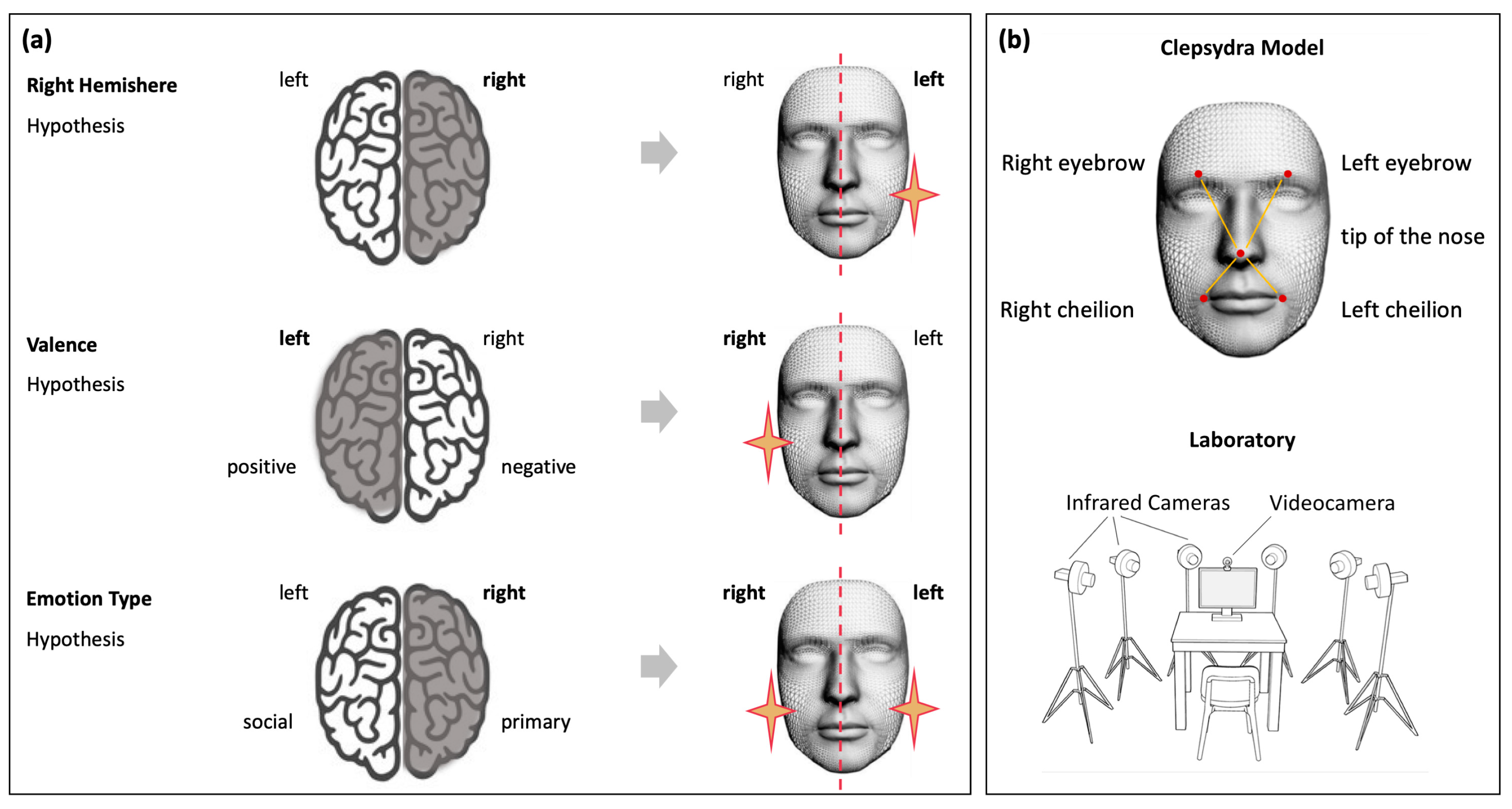

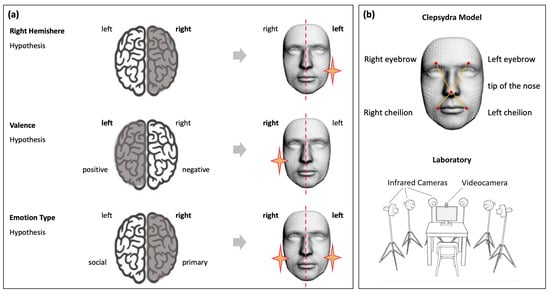

In this respect, three major models of emotional processing address the so-called “hemispheric lateralization of emotion” in humans [11,12]: the Right-Hemisphere Hypothesis [8], the Valence-Specific Hypothesis [13], and the Emotion-type Hypothesis [5,14]. Analysis of facial expressions has traditionally been used to infer the hemispheric lateralization of emotions. This is done by measuring expressive differences between the left and right hemifaces, on the assumption that the right hemisphere controls the left side of the face and the left hemisphere controls the right side [14,15,16,17,18,19,20]. The Right-Hemisphere Hypothesis [8] states that all emotions are a dominant, lateralized function of the right hemisphere, regardless of their valence or the emotional feeling processed. Their associated expressions would therefore be lateralized to the left side of the face. The Valence-Specific Hypothesis [13] states that negative, avoidance, or withdrawal-type emotions are lateralized to the right hemisphere (the associated expressions would then be lateralized to the left hemiface), whereas positive emotions, such as happiness, are lateralized to the left hemisphere (with expressions lateralized to the right hemiface). Finally, the Emotion-type Hypothesis [21] states that primary emotional responses are initiated by the right hemisphere and expressed on the left side of the face, whereas social-emotional responses are initiated by the left hemisphere and expressed on the right side of the face. Primary emotions include happiness, sadness, anger, fear, disgust, and surprise. Social emotions, such as embarrassment, envy, guilt, and shame, are instead acquired through parental socialization and during play, school, and religious-cultural activities [21].

Based on these theories, various patterns of facial lateralization of emotion expressions can be hypothesized (for a schematic representation of the different hypotheses and related predictions for happiness expressions, see Figure 1a). However, many replication studies exploring these hypotheses provided inconsistent results [11,12]. One possible explanation for these contradictory data is that previous studies did not distinguish between genuine and posed expressions. This issue is particularly relevant for expressions of happiness. Duchenne and non-Duchenne are terms used to classify whether a smile reflects a truly felt emotion or is a false smile [22,23,24]. A felt (Duchenne) smile is very expressive. It is classically described as causing the cheeks to lift, the eyes to narrow, and the skin to wrinkle, producing crow’s feet. A false (non-Duchenne) smile, instead, would only involve the lower face area. However, recent research using high-speed videography has shown that the difference between a felt (Duchenne) and a fake smile might in fact relate to the side of the face initiating the smile [25]. This would provide observers with a fundamental, yet untested, cue for detecting emotional authenticity. Temporal features (i.e., time of onset vs. maximum expression) could play a key role in investigating the lateralization of facial displays and inferring hemispheric lateralization, but this role has been largely neglected. A rigorous methodological approach able to track the full unfolding of an expression over time and across the two spatial axes is therefore necessary to characterize and distinguish spontaneous from posed smiles and to provide crucial cues for the hemispheric lateralization debate.

Figure 1.

Schematic representation of the three main hypotheses of emotional processing and related patterns of facial lateralization (panel (a)). The Clepsydra Model (panel (b), top image) was adopted to test these hypotheses. Five markers were applied to the left and right eyebrows, the left and right cheilions, and the tip of the nose. The experimental setup was equipped with six infrared cameras placed in a semicircle (bottom image).

A major drawback of the existing literature on facial expressions is that, to collect reliable and controlled databases, researchers typically showed participants static images of posed expressions (for reviews, see [15,26]). Adopted stimuli were neither dynamic nor genuine, and the induction method [27] did not differentiate between Emotional Induction (i.e., the transmission of emotions from one individual to another [28,29]) and Motor Contagion (i.e., the automatic reproduction of the motor patterns of another individual [30,31]). To conclude, inconsistencies in the major models of hemispheric lateralization of emotion and arbitrary use of different experimental stimuli and elicitation methods are all sources of poor consensus in the literature on facial expressions of emotion.

The objective of this study was to investigate lateralized patterns of movement in the expression of happiness. It also examined the possible impact of dynamic stimuli with different induction methods (i.e., Emotional Induction, Motor Contagion) on spontaneous expressions. In particular, we hypothesized that lateralized kinematic patterns would emerge in the lower part of the face for posed expressions, which are innervated contralaterally by the VP. We also hypothesized that Motor Contagion would modify the choreography of spontaneous expressions.

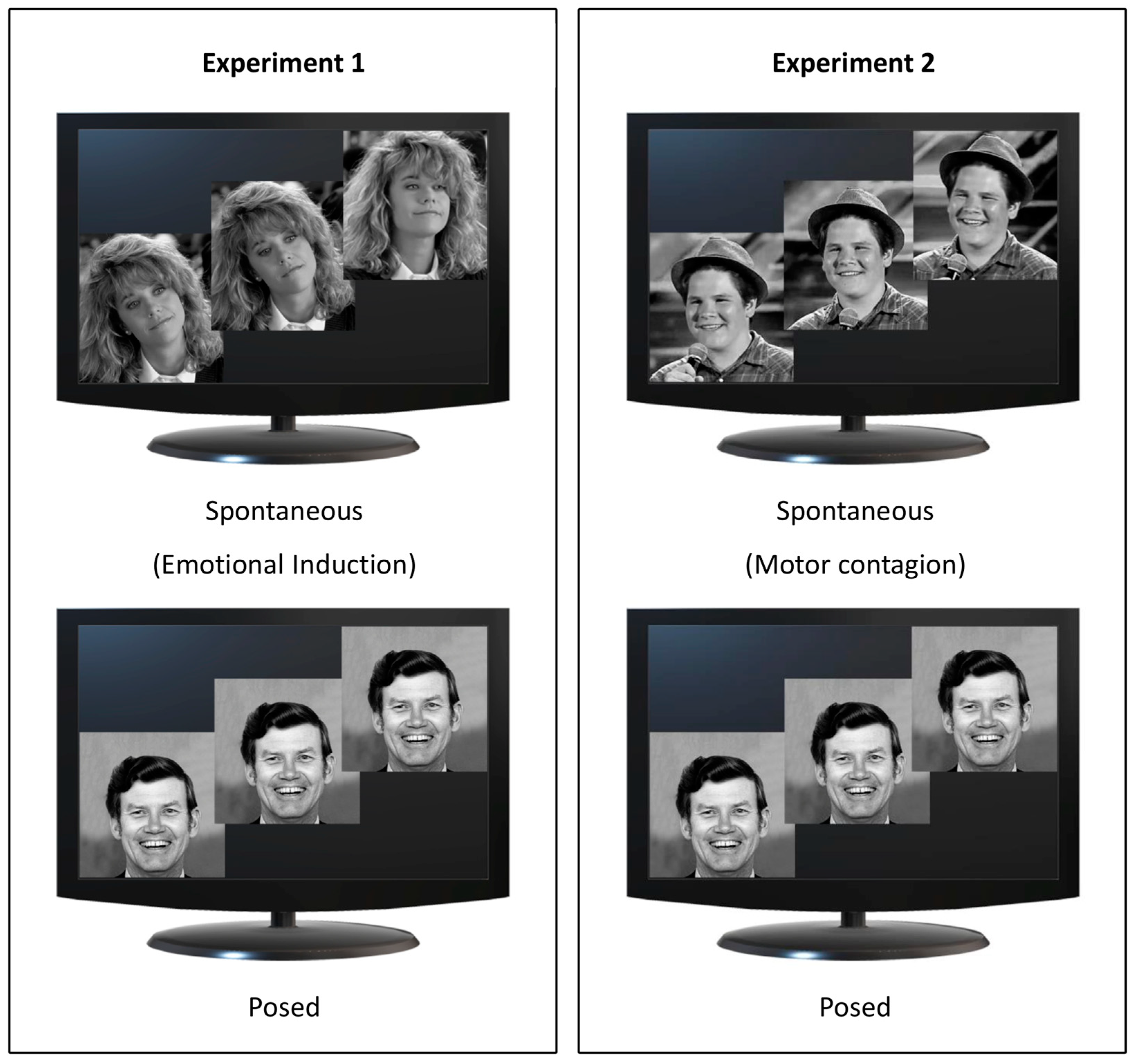

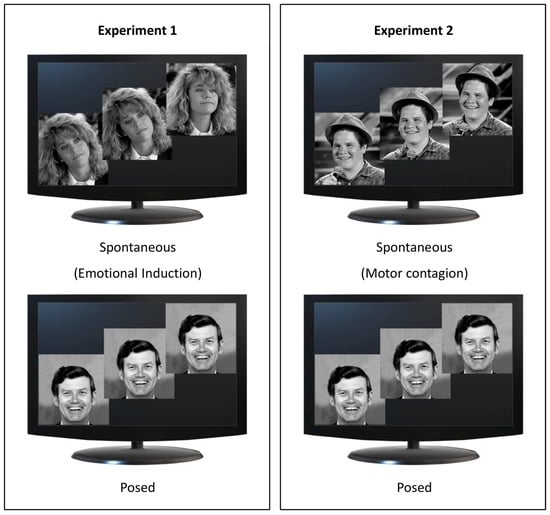

To investigate these hypotheses, in Experiment 1, we presented two sets of stimuli: (i) videoclips extracted from popular comedies that produced hilarity without showing smiling faces (Spontaneous condition, Emotional Induction), and (ii) static pictures of smiles (Posed condition). In Experiment 2, we showed videos of people shot frontally while manifesting the expression of happiness (Spontaneous condition, Motor Contagion). For the Posed condition, we maintained the same procedure as in Experiment 1.

To test the spatiotemporal dynamics of facial movements, we capitalized on a method recently developed in our laboratory [32], which combines an ultra-high definition optoelectronic system with a Facial Action Coding System (FACS, a comprehensive, anatomically based system for describing all visually discernible facial movement) [33]. This method proved to be remarkably accurate in the quantitative capture of facial motion.

Using this method, in Experiments 1 and 2 we expected to find lateralized kinematic patterns in the lower part of the face for posed compared with spontaneous expressions. In addition, we expected spontaneous expressions to differ across the two experiments depending on the induction method.

Finally, we sought to determine which hypothesis on the hemispheric lateralization of emotion expressions best accounts for the observed data.

2. General Methods

The data for Experiments 1 and 2 were collected at the Department of General Psychology—University of Padova.

2.1. Ethics Statement

All experiments were conducted in accordance with the Declaration of Helsinki and approved by the Ethics Committee of the University of Padova (protocols no. 3580, 4539). All participants were naïve to the purposes of the experiment and gave their written informed consent for their participation.

2.2. Apparatus

Participants were tested individually in a dimly lit room. Their faces were recorded frontally with a video camera (Logitech C920 HD Pro Webcam, Full HD 1080p/30fps; Logitech, Lausanne, CH) positioned above the monitor for the FACS validation procedure. Stimulus presentation was implemented using E-Prime V2.0. For the kinematic analysis, five infrared reflective markers (i.e., ultra-light semi-spheres 3 mm in diameter) were applied to the participants' faces according to the Clepsydra Model (Figure 1b, top picture [34]). We selected the minimum number of markers adopted in the literature as a common denominator to compare our findings with previous results [35,36]. Markers were taped to the left and right eyebrows and to the left and right cheilions to test the facial nerve branches that specifically innervate the upper and the lower parts of the face, respectively. A further marker was placed on the tip of the nose to serve as the reference point for a detailed analysis of the lateralized movements of each marker. The advantage of applying kinematic analysis to pairs of markers rather than individual markers is that it accounts for any head movement [37]. Because of its simplicity, the Clepsydra model can be validated and replicated by different laboratories around the world [38]. Six infrared cameras (sampling rate 140 Hz) captured the relative position of the markers. They were placed in a semicircle at a distance of 1–1.2 m from the center of the room (Figure 1b, bottom picture). Facial movements were recorded using a 3-D motion analysis system (SMART-D, Bioengineering Technology and Systems [B|T|S], Milano, Italy). The coordinates of the markers were reconstructed with an accuracy of 0.2 mm over the field of view. The standard deviation of the reconstruction error was 0.2 mm for the vertical (Y) axis and 0.3 mm for the two horizontal (X and Z) axes.
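A short Python/NumPy sketch can check the claim that marker pairs absorb head movement. It assumes that the head moves as a rigid body, with the same rotation and translation applied to every marker, which real faces only approximate. It also uses made-up coordinates. The helpers `pair_distance` and `rotation_about_y` are illustrative only and are not part of the SMART-D software.

```python
import numpy as np

def pair_distance(a: np.ndarray, b: np.ndarray) -> np.ndarray:
    """Frame-by-frame Euclidean distance (mm) between two markers of shape (n_frames, 3)."""
    return np.linalg.norm(a - b, axis=1)

def rotation_about_y(theta: float) -> np.ndarray:
    """Rotation matrix about the vertical (Y) axis, i.e., a head turn; theta in radians."""
    c, s = np.cos(theta), np.sin(theta)
    return np.array([[c, 0.0, s], [0.0, 1.0, 0.0], [-s, 0.0, c]])

rng = np.random.default_rng(0)
n_frames = 140  # one second at 140 Hz
# Hypothetical x, y, z coordinates (mm) of the left cheilion and the nose tip.
left_ch = rng.normal([30.0, -40.0, 0.0], 1.0, size=(n_frames, 3))
nose = rng.normal([0.0, 0.0, 20.0], 1.0, size=(n_frames, 3))

# Rigid head movement: the same rotation and translation applied to every marker.
R = rotation_about_y(np.deg2rad(15.0))
shift = np.array([5.0, -3.0, 12.0])
left_ch_moved = left_ch @ R.T + shift
nose_moved = nose @ R.T + shift

# Individual marker positions change, but the pair distance does not.
print(np.allclose(left_ch, left_ch_moved))  # False
print(np.allclose(pair_distance(left_ch, nose),
                  pair_distance(left_ch_moved, nose_moved)))  # True
```

The invariance holds because the translation cancels in the difference of the two markers, and a rotation preserves vector length. Head movements that deform the face, by contrast, would not cancel.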

2.3. Procedure

Each participant underwent a single experimental session (Experiment 1 or 2) lasting approximately 20 min. Participants were seated on a height-adjustable chair in front of a monitor (40 cm from the edge of the table). They were free to move while observing the selected stimuli displayed on the monitor (Figure 2). Facial movements were recorded under two experimental conditions. In the Spontaneous condition, participants watched happiness-inducing videos and reacted freely (i.e., they were given no instructions). In the Posed condition, participants produced a voluntary expression of happiness while a posed image of happiness was shown on the monitor. The two experimental conditions within each experiment (Spontaneous and Posed) were specifically adopted to activate the two pathways (Involuntary and Voluntary, respectively). Crucially, we tested the two methods of spontaneous induction in two separate experiments to avoid possible carry-over effects between them, while comparing both with the same Posed condition. This condition served, on the one hand, to define each participant's expressive baseline as an intra-individual reference and, on the other hand, to test the specific role of the Voluntary versus the Involuntary Pathway. For the Posed condition, we chose a classic image of happiness from Ekman's dataset for three reasons: (i) for comparison with previous literature [39]; (ii) for relevance to our experimental manipulation (being a prototypical non-genuine expression of happiness); and (iii) to keep participants' attention fixed on the monitor. Participants were instructed to mime the happiness expression three times to obtain a sufficient number of repetitions. No instruction whatsoever was given on the duration of the expression. As in the Spontaneous condition, this procedure aimed to generate expressions without forcing participants to respect time constraints [40].
To avoid possible carry-over effects between trials due to the Emotional Induction from the videos used in the Spontaneous condition, we followed the procedure adopted by Sowden and colleagues [35]. The trials were divided into two separate blocks: first the spontaneous block, then, after a brief pause, the posed block. The interstimulus interval was between 30 and 60 s.

Figure 2.

Experimental design. Spontaneous conditions for Experiments 1 and 2 are represented in the top panels and Posed conditions in the bottom panels. In Experiment 1 (left panel), participants viewed comedy video excerpts for Emotional Induction (Spontaneous condition, upper image; source: YouTube) and a static image of happiness (Posed condition, lower image; source: Pictures of Facial Affect [39]). In Experiment 2 (right panel), participants viewed videos showing happy faces inducing Motor Contagion (Spontaneous condition, upper image; source: YouTube) and the same static image of happiness adopted in Experiment 1 (Posed condition, lower image; Pictures of Facial Affect [39]).

2.4. Expression Extraction and FACS Validation Procedure

All repeated expressions of happiness within a single trial were included in the analysis. A two-step procedure was adopted to ensure the correct selection of each expression. First, we manually identified every single epoch, that is, the beginning and end of each smile, according to FACS criteria (e.g., Action Units 6 and 12, the Cheek Raiser and the Lip Corner Puller). Although automated FACS coding exists, we applied manual coding because it has been shown to have strong concurrent validity with automated coding, which supports the reliability of the method. Furthermore, manual coding has recently been shown to outperform automated coding [41]. Second, we applied a kinematic algorithm that automatically identified the beginning and end of each smile from a velocity threshold on the cheilion markers. The onsets and ends identified by the two methods matched in 100% of cases.
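The kinematic step can be illustrated with a minimal Python/NumPy sketch of the velocity-threshold criterion detailed in Section 2.5.2. Onset is the point at which speed rises above 0.2 mm/s and stays there for more than 100 ms, and the end is the point at which speed falls back below 0.2 mm/s. This is not the authors' implementation. The function name `detect_movement`, the finite-difference speed estimate via `np.gradient`, and the synthetic distance trace are assumptions made for illustration. Real recordings would normally be filtered before differentiation.

```python
import numpy as np

def detect_movement(distance_mm, fs=140.0, threshold=0.2, min_dur_s=0.1):
    """Return (onset, end) frame indices of a widening movement, or None.

    onset: first frame whose speed exceeds `threshold` (mm/s) and stays above it
           for longer than `min_dur_s` seconds.
    end:   first subsequent frame whose speed is back below the threshold.
    """
    speed = np.gradient(np.asarray(distance_mm, dtype=float)) * fs  # mm/s
    above = speed > threshold
    min_frames = int(round(min_dur_s * fs))  # 100 ms -> 14 frames at 140 Hz
    n = len(speed)
    i = 0
    while i < n:
        if not above[i]:
            i += 1
            continue
        j = i
        while j < n and above[j]:  # walk to the end of this supra-threshold run
            j += 1
        if j - i > min_frames:  # run lasted longer than min_dur_s
            return i, min(j, n - 1)
        i = j  # run too brief (e.g., a twitch): keep searching
    return None

# Hypothetical trace: still for 70 frames, widens at 10 mm/s for 70 frames, then holds.
frames = np.arange(280)
d = 50.0 + (10.0 / 140.0) * np.clip(frames - 70, 0, 70)
print(detect_movement(d))  # (70, 141)
```

Requiring the speed to stay above threshold for a minimum duration is what makes the detector ignore brief twitches. A blip lasting only a few frames returns `None`.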

2.5. Data Acquisition

2.5.1. Kinematic 3-D Tracking

Following kinematic data collection, the SMART-D Tracker software package (Bioengineering Technology and Systems, B|T|S) was employed to automatically reconstruct the 3-D marker positions as a function of time. Then, each clip was individually checked for correct marker identification.

2.5.2. Kinematic 3-D Analysis

To investigate key spatial, velocity, and temporal kinematic parameters in both the upper and lower face, we considered the relative movement of two pairs of markers for each part of the face:

- Lower part of the face:
  - Left cheilion and the tip of the nose (Left-CH);
  - Right cheilion and the tip of the nose (Right-CH).
- Upper part of the face:
  - Left eyebrow and the tip of the nose (Left-EB);
  - Right eyebrow and the tip of the nose (Right-EB).

Each expression was analyzed from onset to apex (i.e., the peak). Movement onset was defined as the first time point at which the mouth widening speed crossed a 0.2 mm/s threshold and remained above it for longer than 100 ms. Movement end was defined as the time at which the lip corners reached their maximum distance, that is, when the mouth widening speed dropped back below the 0.2 mm/s threshold. Movement time was calculated as the interval between movement onset and movement end. We measured morphological (i.e., spatial) and dynamic (i.e., velocity and temporal) characteristics of each expression on each pair of markers [42]:

- Spatial parameters:

- Maximum Distance (MD, mm) is the maximum distance reached by the 3-D coordinates (x,y,z) of two markers.

- Delta Distance (DD, mm) is the difference between the maximum and the minimum distance reached by two markers, to account for functional and anatomical differences across participants.

- Velocity parameters:

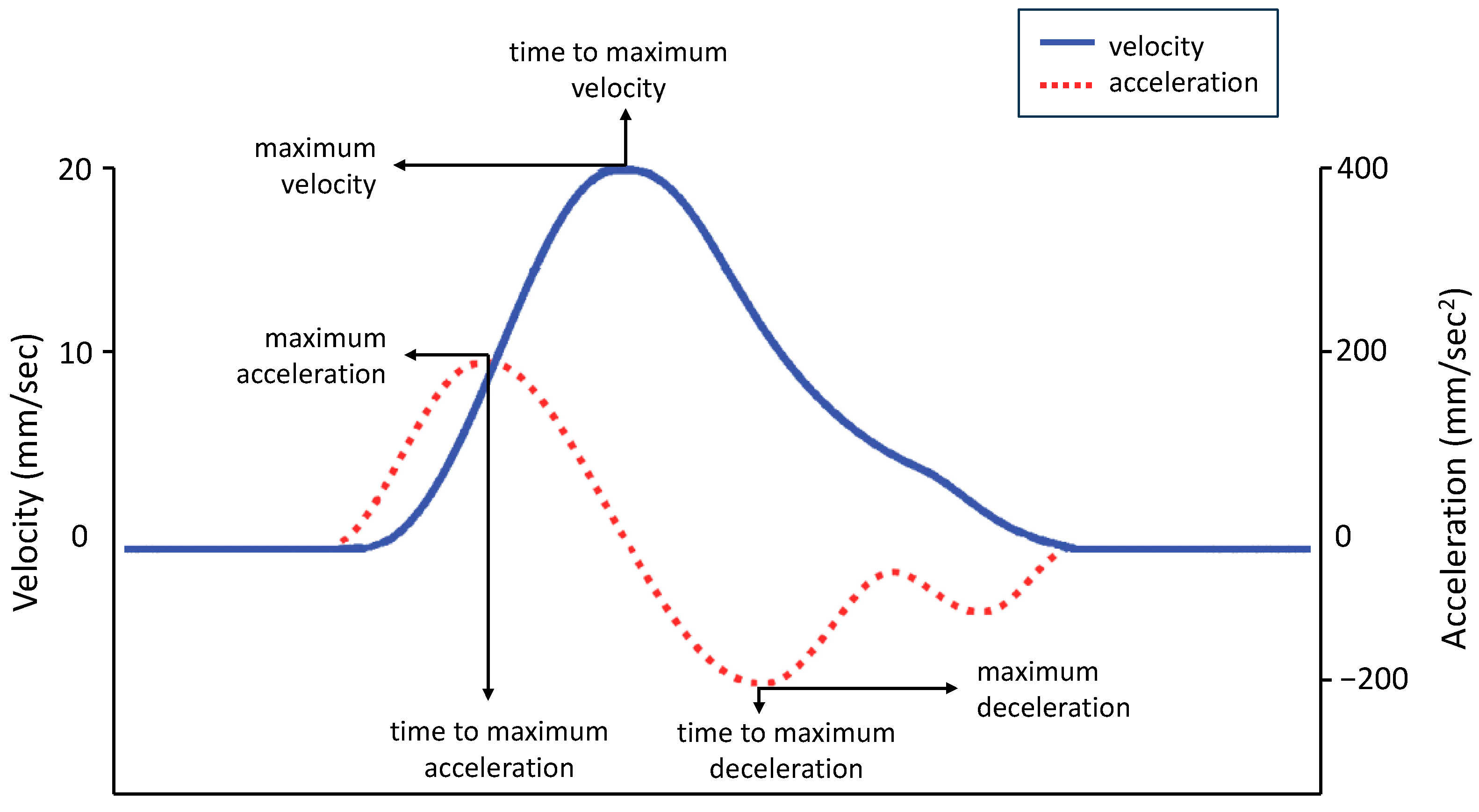

- Maximum Velocity (MV, mm/s) is the maximum velocity reached by the 3-D coordinates (x,y,z) of each pair of markers. Velocity is defined as V = Δd/Δt, where d is the distance between the two markers and t is the time. It is calculated instant by instant as the change in inter-marker distance divided by the time required for that change. The maximum velocity was the highest of these values and reflects the fastest rate at which the two markers moved apart (see Figure 3, blue line).

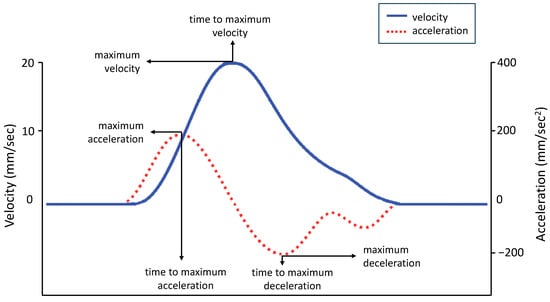

Figure 3. Graphical representation of experimental variables. The graphs of velocity (solid blue line) and acceleration (dashed red line) show the average amplitude and time sequence of the different peaks. Velocity events performed by the body classically occur in a predefined order: acceleration to peak velocity followed by deceleration. The same pattern is shown by the hand during a reaching task (for a comparison, see [43]).

- Maximum Acceleration (MA, mm/s²) is the maximum acceleration reached by the 3-D coordinates (x,y,z) of each pair of markers. Acceleration is defined as A = Δv/Δt, where v is the velocity and t is the time. It is calculated moment by moment as the rate of change of the velocity of a pair of markers. The maximum acceleration was the highest of these values (see Figure 3, red dashed line).

- Maximum Deceleration (MDec, mm/s²) is the maximum deceleration reached by the 3-D coordinates (x,y,z) of each pair of markers. Deceleration is a negative acceleration. It is calculated moment by moment as the rate of change of the velocity of a pair of markers as their speed decreases. The maximum deceleration was the most negative of these values, reported here in absolute value for graphical purposes (see Figure 3, red dashed line).
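Here is a minimal sketch of how the spatial and velocity parameters above could be computed for one expression. It assumes that the inter-marker distance has already been cut from onset to end and sampled at 140 Hz. Velocity and acceleration are estimated as first and second finite-difference derivatives of the distance (`np.gradient`). The text does not specify the smoothing and differentiation scheme used in the actual processing, so this choice is an assumption. The function name `kinematic_peaks` and the minimum-jerk test trace are hypothetical.

```python
import numpy as np

def kinematic_peaks(distance_mm, fs=140.0):
    """Spatial and velocity parameters of one expression (onset to end).

    distance_mm: inter-marker distance per frame (mm), already cut to the movement.
    """
    d = np.asarray(distance_mm, dtype=float)
    v = np.gradient(d) * fs  # mm/s: rate of change of the distance
    a = np.gradient(v) * fs  # mm/s^2: rate of change of the velocity
    return {
        "MD": float(d.max()),            # Maximum Distance
        "DD": float(d.max() - d.min()),  # Delta Distance
        "MV": float(v.max()),            # Maximum Velocity
        "MA": float(a.max()),            # Maximum Acceleration
        "MDec": float(abs(a.min())),     # Maximum Deceleration, absolute value
    }

# Hypothetical smooth (minimum-jerk) widening of 10 mm over 98 frames (0.7 s at 140 Hz).
tau = np.linspace(0.0, 1.0, 99)
d = 50.0 + 10.0 * (10 * tau**3 - 15 * tau**4 + 6 * tau**5)
params = kinematic_peaks(d)
print({name: round(value, 2) for name, value in params.items()})
```

For this synthetic trace, MD is 60 mm and DD is 10 mm by construction. MV is close to the analytic peak of 1.875·A/T ≈ 26.8 mm/s. MA and MDec are nearly equal because the velocity profile is symmetric. Real smiles need not be symmetric, and these parameters are designed to capture exactly that asymmetry.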

The temporal parameters were calculated as the times, after movement onset, at which the spatial and velocity parameters described above reached their peaks. Each temporal value was then normalized (i.e., divided by the corresponding total movement time) to account for individual speed differences:

- Time to Maximum Distance (TMD%, the proportion of time at which a pair of markers reached a maximum distance, calculated from movement onset)

- Time to Maximum Velocity (TMV%, the proportion of time at which a pair of markers reached a peak velocity, calculated from movement onset)

- Time to Maximum Acceleration (TMA%, the proportion of time at which a pair of markers reached a peak acceleration, calculated from movement onset)

- Time to Maximum Deceleration (TMDec%, the proportion of time at which a pair of markers reached a peak deceleration, calculated from movement onset)
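The normalization can be sketched in the same way. Each peak's frame index, counted from movement onset, is divided by the number of frames spanned by the movement and expressed as a percentage. As in the previous sketch, this assumes a distance trace already cut from onset to end, finite-difference derivatives, and a hypothetical function name (`normalized_peak_times`).

```python
import numpy as np

def normalized_peak_times(distance_mm, fs=140.0):
    """Time of each peak as a percentage of movement time (onset 0 %, end 100 %)."""
    d = np.asarray(distance_mm, dtype=float)
    v = np.gradient(d) * fs  # velocity, mm/s
    a = np.gradient(v) * fs  # acceleration, mm/s^2
    last = len(d) - 1        # number of frame intervals from onset to end

    def pct(index):
        return 100.0 * int(index) / last

    return {
        "TMD%": pct(np.argmax(d)),    # Time to Maximum Distance
        "TMV%": pct(np.argmax(v)),    # Time to Maximum Velocity
        "TMA%": pct(np.argmax(a)),    # Time to Maximum Acceleration
        "TMDec%": pct(np.argmin(a)),  # Time to Maximum Deceleration
    }

# Hypothetical minimum-jerk widening trace (99 frames, onset to end).
tau = np.linspace(0.0, 1.0, 99)
d = 50.0 + 10.0 * (10 * tau**3 - 15 * tau**4 + 6 * tau**5)
times = normalized_peak_times(d)
print(times)
```

For a symmetric minimum-jerk trace, the velocity peak falls at 50% and the distance peak at 100% of movement time. An earlier deceleration peak, such as the one reported for the right cheilion in posed smiles, would appear as a lower TMDec% value.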

2.6. Statistical Approach

All behavioral data were analyzed using JASP version 0.16 [44]. The analysis had two aims. The first was to test whether facial motion differs across the vertical axis (i.e., Left-CH vs. Right-CH and Left-EB vs. Right-EB) for spontaneous and posed emotional expressions. The second was to explore differences between the induction methods. For the first aim, a repeated-measures ANOVA was performed for each experiment, with condition (Spontaneous, Posed) and side of the face (left, right) as within-subjects variables, together with planned orthogonal contrasts. The Vovk–Sellke Maximum p-Ratio (VS-MPR [45]) was also computed on the two-sided p-value to quantify the maximum possible odds in favor of the alternative hypothesis over the null. Finally, we explored the possible differences triggered by the different induction methods in the expression of happiness (posed and spontaneous). To this end, we conducted a mixed analysis of variance with Experiment (1, 2) as the between-subjects factor and condition (Spontaneous, Posed) and side of the face (left, right) as within-subjects factors. For all statistical analyses, the significance threshold was set at p < 0.05, and Bonferroni correction was applied to post hoc contrasts.
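The VS-MPR that JASP reports has a closed form. For p < 1/e it equals 1/(-e·p·ln p), and it is 1 otherwise. The short Python sketch below makes this conversion explicit. The function name `vs_mpr` is ours and is only illustrative. For example, p = 0.05 corresponds to maximum odds of about 2.46 in favor of the alternative hypothesis.

```python
import math

def vs_mpr(p: float) -> float:
    """Vovk-Sellke maximum p-ratio for a (two-sided) p-value.

    Upper bound on the odds in favor of H1 over H0 implied by p:
    1 / (-e * p * ln p) when p < 1/e, and 1 otherwise.
    """
    if not 0.0 < p <= 1.0:
        raise ValueError("p must lie in (0, 1]")
    if p >= 1.0 / math.e:
        return 1.0  # p-values this large carry no evidence against H0
    return 1.0 / (-math.e * p * math.log(p))

for p in (0.05, 0.01, 0.001):
    print(f"p = {p}: VS-MPR = {vs_mpr(p):.2f}")
```

Because the bound grows only slowly as p shrinks, the VS-MPR is a useful reminder that p-values close to 0.05 correspond to modest evidence.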

Sample size was determined with G*Power 3.1 [46] based on previous literature [47]. Since Experiments 1 and 2 used a repeated-measures design, we assumed an effect size f of 0.25, alpha = 0.05, and power = 0.8. With this effect size, the projected sample size needed for within-group comparisons was N = 20 in each experiment. For the comparison analysis, the sample was the combined sample of Experiments 1 and 2 and was therefore not estimated a priori. A post hoc power analysis showed that power was high (> 0.95) even for small effects.

3. Experiment 1

3.1. Participants

Twenty participants were recruited to take part in the experiment. Three participants were subsequently excluded due to technical or recording problems. A final sample of seventeen participants (13 females, 4 males) aged between 21 and 32 years (M = 24.8, SD = 3) was therefore included in the analysis.

3.2. Stimuli

For the Spontaneous condition, we selected two emotion-inducing videos from a recently validated dataset structured to elicit genuine facial expressions [40]. The videoclips were extracted from popular comedy movies in which actors produced hilarity without showing smiling faces (e.g., jokes by professional comedians). Videoclips lasted an average of 2 min and 55 s (video 1 = 3 min 49 s; video 2 = 2 min 2 s). This length is within the recommended maximum of 5 min for emotional videos [48]. Each video was presented once, without repetition, to avoid possible habituation effects. At the end of each presentation, participants rated the intensity of the emotion felt while watching the video on a 9-point Likert scale, where 1 was negative, 5 was neutral, and 9 was positive. The mean score assigned to the stimuli (6; SD = 1.286) was significantly higher than the central value of the Likert scale (i.e., 5; t17 = 2.383; p = 0.015).

3.3. Results

Participants produced 3–5 expressions of happiness per trial in the Spontaneous condition and three in the Posed condition.

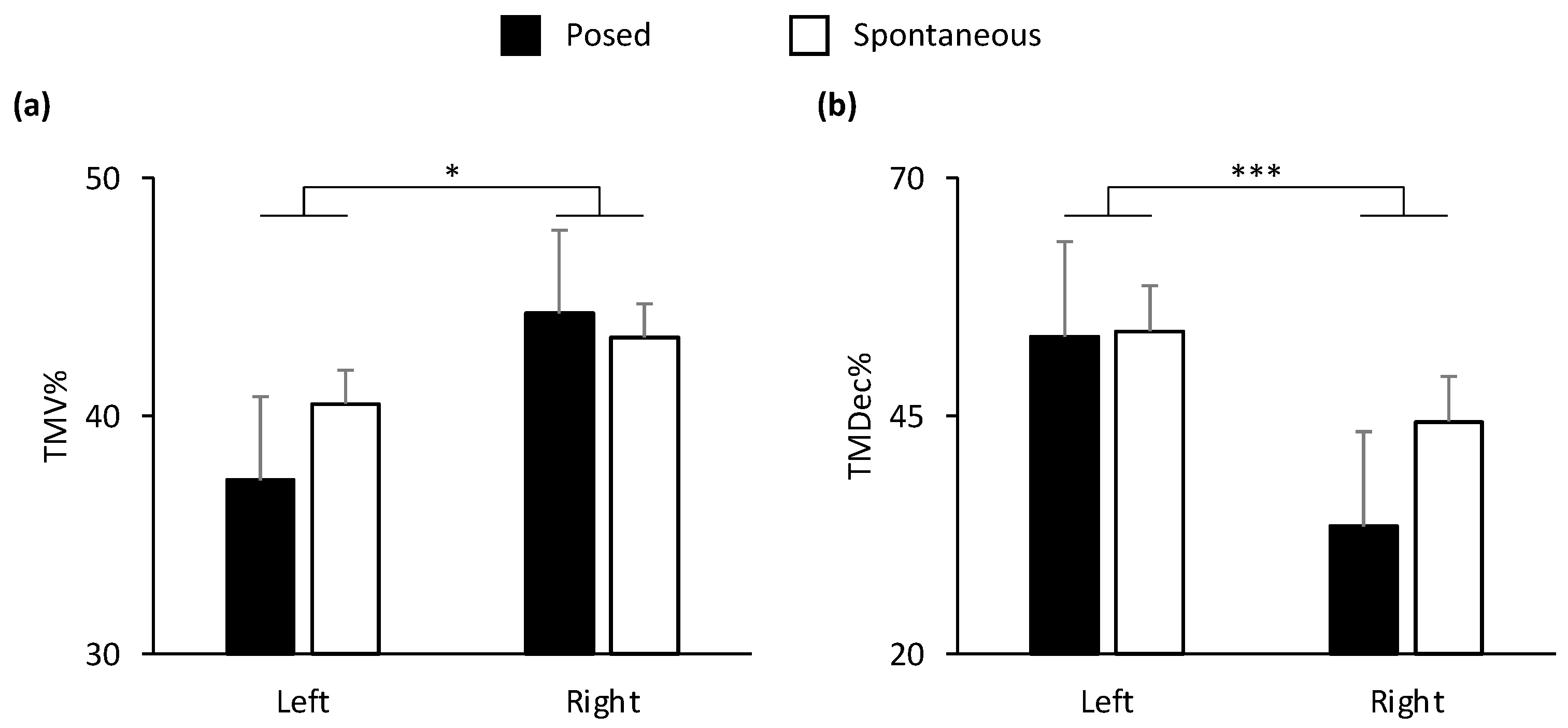

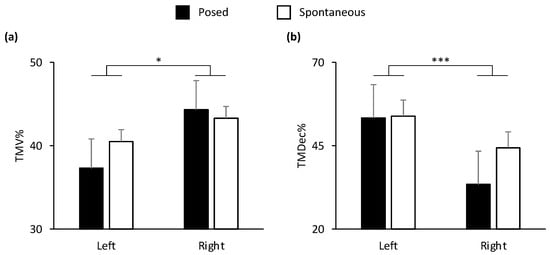

Repeated-Measures ANOVA

In the lower part of the face, all the spatial and velocity kinematic parameters, together with two of the temporal parameters (TMD%, TMA%), showed a main effect of condition (Posed vs. Spontaneous). In the upper part of the face, MD and TMV% showed a main effect of condition (Table 1). In general, posed expressions showed an amplified choreography compared with spontaneous expressions in spatial, velocity, and temporal terms. Posed smiles were wider and quicker than spontaneous smiles, and they peaked earlier (see graphical representation in Figure S1, Supplementary Materials). A main effect of side of the face (Left vs. Right) was found in the lower part of the face for TMV% and TMDec% (Figure 4). In particular, in both conditions the left cheilion reached its peak Velocity earlier than the right cheilion, and the right cheilion reached its Maximum Deceleration earlier than the left cheilion.

Table 1.

Results of Repeated-measures ANOVA for Experiment 1. Only parameters with at least one significant result were reported. Results on the main effect of condition are graphically represented in Figure S1 enclosed in Supplementary Materials. Two main effects of side of the face were found in the lower part of the face (see Figure 4). No interaction was statistically significant.

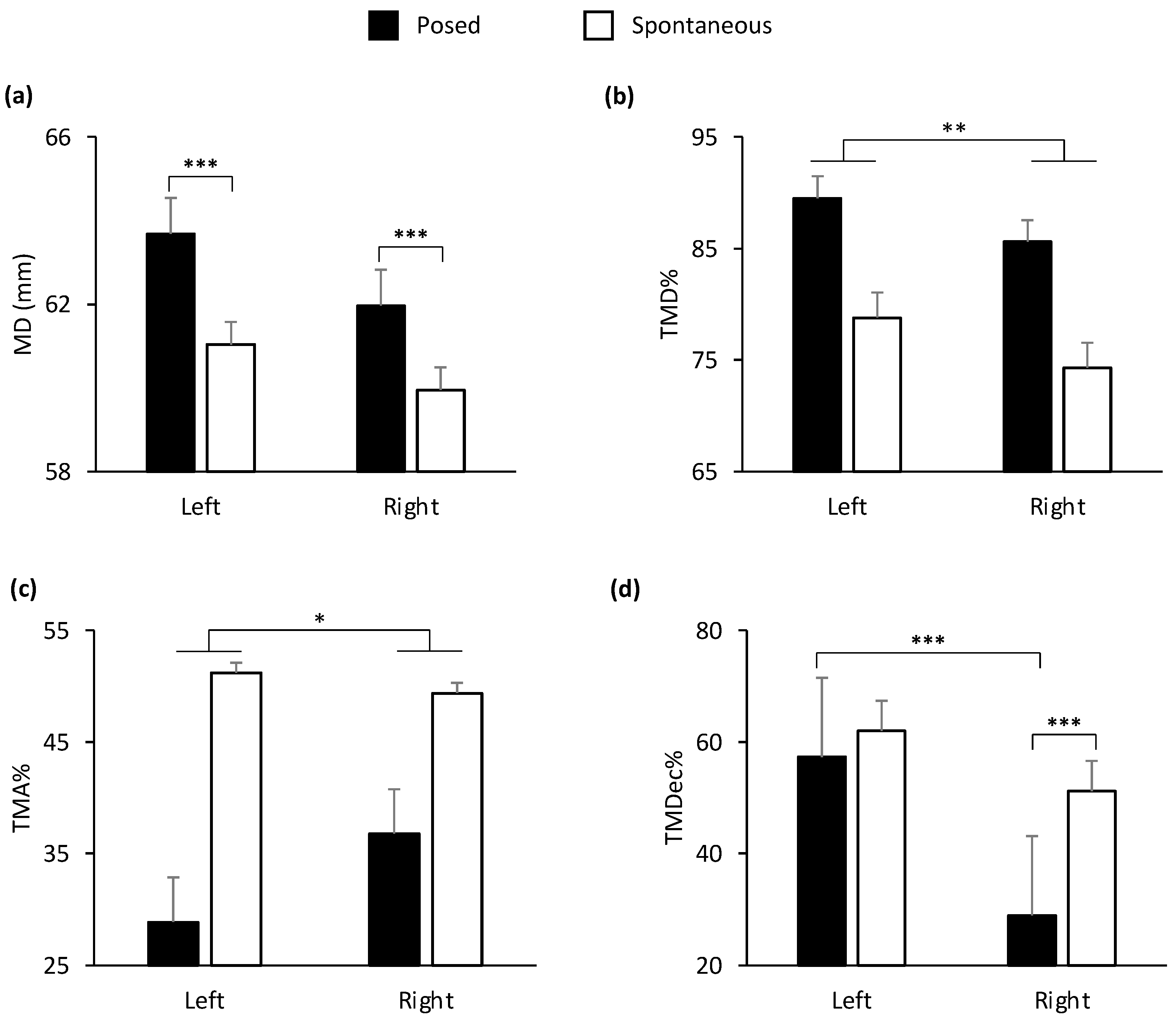

Figure 4.

Graphical representation of temporal components of movement in the lower part (i.e., cheilion markers, CH) of the face during posed and spontaneous expressions of happiness. A significant main effect of side of the face was found for: (a) Time to Maximum Velocity (TMV%) and (b) Time to Maximum Deceleration (TMDec%) in the lower part of the face. TMV% was earlier in the left side of the face, and TMDec% was earlier in the right side of the face. Error bars represent standard error. Asterisks indicate statistically significant comparisons (* = p < 0.05; *** = p < 0.001).

4. Experiment 2

In this experiment, we specifically manipulated the induction method to evaluate the effect of Motor Contagion (i.e., the automatic reproduction of the motor patterns of another individual) on spontaneous expressions of happiness. In Experiment 1, happiness was induced with movie scenes in which professional actors performed hilarious scenes without exhibiting smiling faces. In Experiment 2, we instead selected videos from YouTube in which people were shot frontally while being particularly happy and expressing uncontrollable laughter.

4.1. Participants

Twenty participants (15 females, 5 males) aged between 21 and 27 years (M = 23, SD = 1.8) were recruited to take part in the experiment.

4.2. Stimuli

The image adopted for the Posed condition was the same as in Experiment 1. Spontaneous happiness was instead elicited with three emotion-inducing videos extracted from YouTube and already validated in a previous study from our laboratory [32]. The Experiment 1 videos were longer because the actor needed time to deliver the hilarious joke. The Experiment 2 videos were shorter because only the expression of happiness was presented. As a result, participants had less time to smile spontaneously while watching the videos, so we increased the number of stimuli from two to three to collect enough observations. Videoclips lasted an average of 49 s (video 1 = 31 s; video 2 = 57 s; video 3 = 59 s). Each video was presented once, without repetition, to avoid possible habituation effects. As in Experiment 1, at the end of each presentation, participants rated the intensity of the emotion felt while watching the videos on a 9-point Likert scale, where 1 was negative, 5 was neutral, and 9 was positive. The mean score assigned to the stimuli (7; SD = 1.457) was significantly higher than the central value of the Likert scale (i.e., 5; t19 = 5.679; p < 0.001) and higher than the score reported in Experiment 1 (t36 = −2.535; p = 0.008).

4.3. Results

Participants produced 3–5 expressions of happiness per trial in the Spontaneous condition and three in the Posed condition.

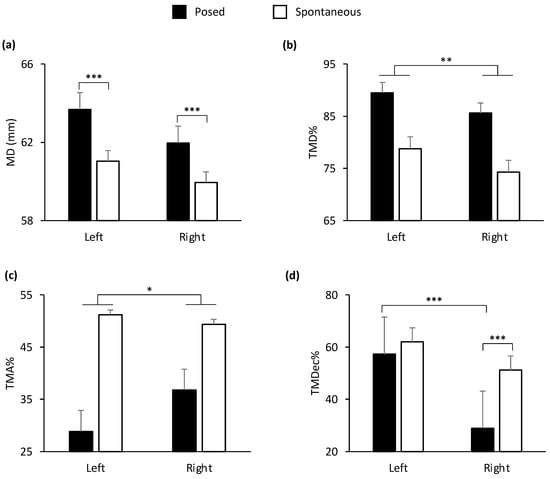

Repeated-Measures ANOVA

In the lower part of the face, all the kinematic parameters showed a main effect of condition (Posed vs. Spontaneous), except for TMV%. In the upper part of the face, no parameters showed any statistically significant effect (all ps > 0.05; Table 2). In general, the results confirmed the amplified choreography for posed expressions found in Experiment 1 for spatial, velocity, and temporal parameters compared to spontaneous expressions (for a graphical representation of the main effects of condition, see Figure S2 in the Supplementary Materials). A main effect of side of the face (Left vs. Right) was shown for TMD%, TMA%, and TMDec%, and a statistically significant interaction condition by side of the face was found for MD and TMDec% (Figure 5 and Table 2). The results of the interaction showed that the left cheilion during posed expressions was more distal than the right cheilion during spontaneous expressions (Figure 5a). Crucially, the peak Deceleration of the right cheilion during posed expressions occurred earlier than during spontaneous expressions, and earlier than the peak of the left cheilion during posed smiles (Figure 5d). The results of the main effects showed that the left cheilion reached its Maximum Acceleration earlier than the right cheilion in both conditions (Figure 5c), but it reached its Maximum Distance later than the right cheilion in both conditions (Figure 5b).

Table 2.

Results of Repeated-measures ANOVA for Experiment 2. Only parameters with at least one significant result were reported. Results on the main effect of condition are graphically represented in Figure S2 enclosed in Supplementary Materials. Four main effects of side of the face and two interactions were found in the lower part of the face (see Figure 5).

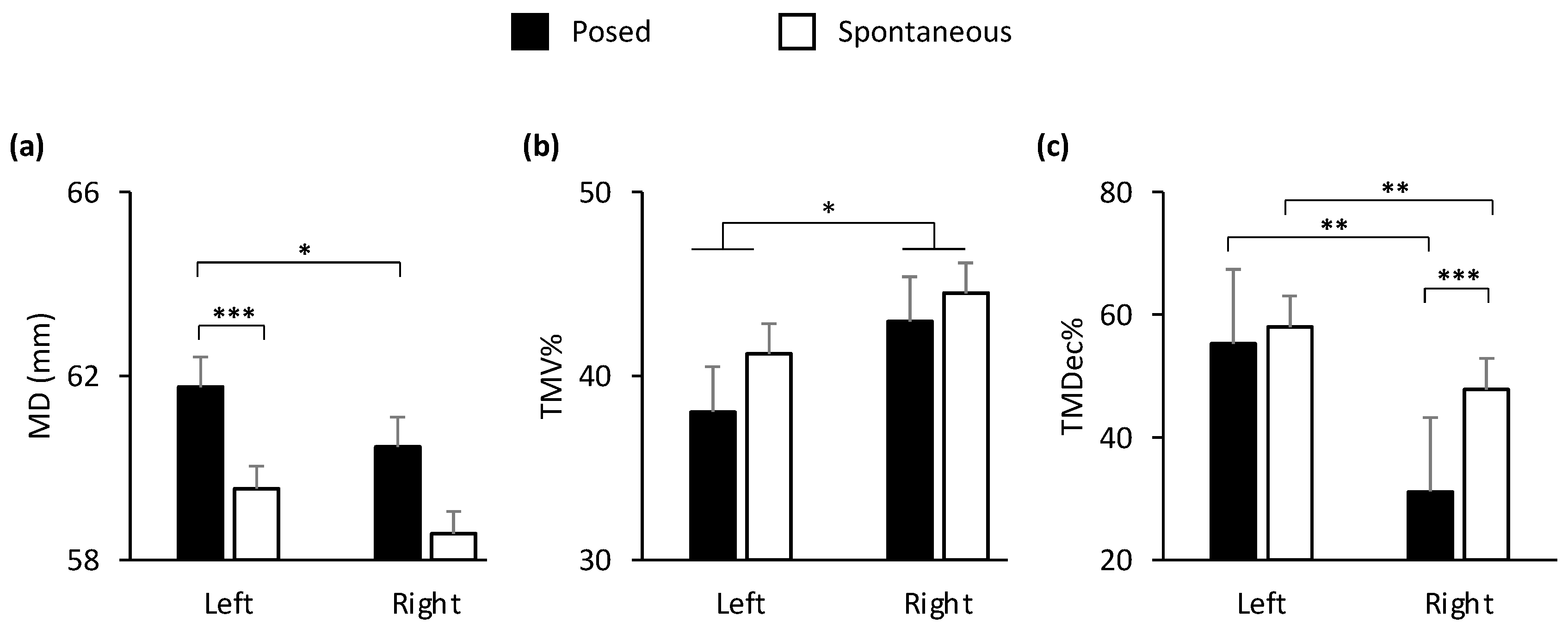

Figure 5.

Graphical representation of spatial and temporal components of movement in the lower part of the face (i.e., cheilion markers, CH) during posed and spontaneous expressions of happiness. A main effect of side of the face (Left vs. Right) was found for (b) Time to Maximum Distance (TMD%) and (c) Time to Maximum Acceleration (TMA%): TMD% was earlier on the right side of the face, and TMA% was earlier on the left side. A statistically significant condition × side of the face interaction was found for (a) Maximum Distance (MD) and (d) Time to Maximum Deceleration (TMDec%). MD was wider during posed than during spontaneous smiles on both the left and right sides of the face. TMDec% of the right cheilion during posed smiles was the earliest, compared with both the left cheilion and the same marker during spontaneous smiles. Error bars represent standard error. Asterisks indicate statistically significant comparisons (* = p < 0.05; ** = p < 0.01; *** = p < 0.001).

5. Comparison Analysis (Experiment 1 vs. 2)

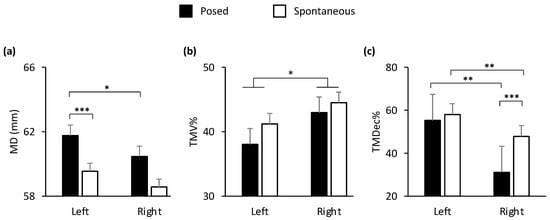

Mixed ANOVA: Posed vs. Spontaneous, Left vs. Right, and Experiment 1 vs. 2

When directly comparing the possible differences triggered by the two induction methods in the expression of happiness (posed and spontaneous), the factor Experiment (1 vs. 2) was never significant, nor was the three-way interaction between condition, side of the face, and experiment, for any of the investigated variables. However, a statistically significant main effect of condition (Posed vs. Spontaneous) was found in the lower part of the face for all kinematic parameters except TMV%. In the upper part of the face, only MD showed a main effect of condition (see Table 3). Overall, considering Experiments 1 and 2 together, the results confirmed the amplified choreography of posed relative to spontaneous expressions for spatial, velocity, and temporal parameters (for a graphical representation of the main effects of condition, see Figure S3 in the Supplementary Materials). A main effect of side of the face (Left vs. Right) was found for MD, TMV%, and TMDec%, and a statistically significant condition × side of the face interaction was found for MD and TMDec% (Figure 6 and Table 3). The interaction showed that, during posed expressions, the left cheilion was more distal than the right cheilion (Figure 6a). Crucially, the peak Deceleration of the right cheilion during posed expressions occurred earlier than during spontaneous expressions, and earlier than the peak of the left cheilion during posed smiles (Figure 6c). Moreover, during spontaneous expressions, the peak Deceleration of the right cheilion occurred earlier than that of the left cheilion (Figure 6c). The main effect of side of the face on TMV% showed that the left cheilion reached its Maximum Velocity earlier than the right cheilion (Figure 6b).
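With two levels per factor, the condition × side × experiment interaction in this mixed design reduces to comparing each participant's condition × side contrast score between Experiment 1 and Experiment 2 with a pooled-variance (Student) t-test, whose square is the F(1, N − 2) of the three-way interaction. The sketch below, with invented contrast scores and hypothetical function names, illustrates this equivalence; it is not the software or code used for the reported analysis.

```python
import math
import statistics


def pooled_t(group1, group2):
    """Student's independent-samples t-test with pooled variance; returns (t, df)."""
    n1, n2 = len(group1), len(group2)
    pooled_var = ((n1 - 1) * statistics.variance(group1)
                  + (n2 - 1) * statistics.variance(group2)) / (n1 + n2 - 2)
    se = math.sqrt(pooled_var * (1 / n1 + 1 / n2))
    return (statistics.fmean(group1) - statistics.fmean(group2)) / se, n1 + n2 - 2


def three_way_interaction_f(contrasts_exp1, contrasts_exp2):
    """F(1, N - 2) for condition x side x experiment from per-participant 2 x 2 contrasts.

    Each contrast is (Posed L - Posed R) - (Spontaneous L - Spontaneous R) for one participant.
    """
    t, df = pooled_t(contrasts_exp1, contrasts_exp2)
    return t * t, (1, df)


# Hypothetical contrast scores for four participants per experiment (not the study's data).
f, df = three_way_interaction_f([2, 2, 1, 2], [1, 0, 1, 2])
print(f"F{df} = {f:.3f}")
```

The pooled variance matters here: the mixed-ANOVA error term for this effect pools the within-group variability of the contrast scores, which is what Student's (rather than Welch's) t-test does.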

Table 3.

Results of Mixed ANOVA (comparison analysis). Only parameters with at least one significant result were reported. Results on the main effect of condition are graphically represented in Figure S3 enclosed in Supplementary Materials. Three main effects of side of the face and two interactions were found in the lower part of the face (see Figure 6).

Figure 6.

Graphical representation of spatial and temporal components of movement in the lower part of the face (i.e., cheilion markers, CH) during posed and spontaneous expressions of happiness. A main effect of side of the face (Left vs. Right) was found for (b) Time to Maximum Velocity (TMV%): TMV% was reached earlier by the left than by the right cheilion. A statistically significant condition × side of the face interaction was found for (a) Maximum Distance (MD) and (c) Time to Maximum Deceleration (TMDec%). MD of the left cheilion during posed smiles was the widest, compared with both the right cheilion and the same marker during spontaneous smiles (a). During spontaneous smiles, TMDec% was earlier on the right than on the left side of the face (c). TMDec% of the right cheilion during posed smiles was the earliest, compared with both the left cheilion and the same marker during spontaneous smiles (c). Error bars represent standard error. Asterisks indicate statistically significant comparisons (* = p < 0.05; ** = p < 0.01; *** = p < 0.001).

6. Discussion

Facial expressions are a mosaic phenomenon, with independent motor control of the upper and lower face and partially independent hemispheric motor control of the right and left sides [5]. Across two experiments, we reliably confirmed that facial movements provide relevant and consistent details to characterize and distinguish between spontaneous and posed expressions. In particular, the comparison analysis showed that, compared with a spontaneous expression, a posed expression of happiness is characterized by a greater peak distance of both the cheilions and the eyebrows, and by higher peak velocity, acceleration, and deceleration of the cheilions. In temporal terms, posed smiles show earlier acceleration and deceleration peaks and a delayed peak distance compared with spontaneous smiles. We extended these results by also showing a spatiotemporal lateralization pattern for posed expressions. The peak Distance, the Time to peak Velocity, and the Time to peak Deceleration appear to be reliable markers of differences across the facial vertical axis. The peak Distance was greater, and the Velocity peak was reached earlier, on the left side of the mouth than on the right side, whereas in the second phase of the movement, after the velocity peak, an early Deceleration occurred in the right corner of the mouth. These data suggest that the complex choreography of a fake smile involves a spatial amplification of the movement in the left hemiface, which then cascades into a slowdown in the final phase. More importantly, this effect showed a double peak across the two hemifaces, with the right corner of the mouth reaching peak Deceleration earlier. In the case of a spontaneous smile, we found a lateralized effect for only one temporal component: the right corner of the mouth reached the Deceleration peak earlier than the left corner.
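The spatial and temporal markers discussed above can be made concrete with a short Python sketch. It assumes, for illustration only, that a marker's Maximum Distance (MD) is its peak displacement from its position at movement onset, that velocity and acceleration are obtained by central finite differences, and that TMD%, TMV%, TMA%, and TMDec% are the times of the corresponding peaks expressed as a percentage of movement duration. The minimum-jerk trajectory used as input is a textbook stand-in for a smooth movement, not recorded data, and the function name is hypothetical.

```python
def kinematic_landmarks(distance, dt):
    """Maximum Distance (MD) and time-to-peak landmarks for one marker.

    distance: marker displacement (mm) from its position at movement onset, sampled every
              dt seconds from movement onset (index 0) to movement end (last index).
    Times are returned as a percentage of movement duration (0-100).
    """
    n = len(distance)
    inner = range(1, n - 1)  # samples where central differences are defined
    # Central-difference estimates of velocity (mm/s) and acceleration (mm/s^2).
    velocity = {i: (distance[i + 1] - distance[i - 1]) / (2 * dt) for i in inner}
    accel = {i: (distance[i + 1] - 2 * distance[i] + distance[i - 1]) / dt**2 for i in inner}

    def pct(i):
        return 100.0 * i / (n - 1)  # sample index -> % of movement duration

    i_md = max(range(n), key=lambda i: distance[i])
    return {
        "MD": distance[i_md],
        "TMD%": pct(i_md),
        "TMV%": pct(max(velocity, key=velocity.get)),
        "TMA%": pct(max(accel, key=accel.get)),    # peak acceleration
        "TMDec%": pct(min(accel, key=accel.get)),  # peak deceleration = most negative acceleration
    }


# Hypothetical 1-s movement sampled at 100 Hz with a minimum-jerk profile of 12 mm amplitude.
n = 101
profile = [12.0 * (10 * s**3 - 15 * s**4 + 6 * s**5) for s in (i / (n - 1) for i in range(n))]
print(kinematic_landmarks(profile, dt=1.0 / (n - 1)))
```

On this symmetric test profile, velocity peaks at mid-movement and the acceleration and deceleration peaks fall at about 21% and 79% of the movement. With real marker data, some smoothing before differentiation is usually needed, because finite differences amplify measurement noise.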

6.1. Left vs. Right

In 2016, Ross and colleagues [5] were the first to describe a double-peak phenomenon. They reported that, in some cases, emotion-related expressions showed a slight relaxation before continuing to the final peak and that, in about one third of these expressions, the second innervation started on the contralateral side of the face. These qualitative observations led the authors to propose that such expressions resulted from two innervations, a “double-peak vertical blend”, indicating that the expression of interest had two independent motor components driven by opposite hemispheres. In the present study, in line with the hypothesis by Ross and colleagues [21], we found a consistent lateralization pattern in the lower face specific to posed expressions of happiness: an acceleration that began first in the left corner of the mouth and lasted until the peak of maximum speed, beyond which an earlier peak of Deceleration occurred in the right corner of the mouth.

The adoption of a novel 3-D kinematic approach allowed us to investigate the morphological and dynamic characteristics of lateralized expressions in each of the four quadrants defined by the vertical and horizontal axes. Notably, previous studies using 2-D automated facial image analysis (e.g., [49]) found no evidence of asymmetry between the left and right sides of a smile, likely because of a methodological limitation: accurate assessment of asymmetry requires either a frontal view of the face or precise 3-D registration. Moreover, a 3-D dynamic analysis is also required to exclude asymmetries that merely reflect baseline differences in face shape.
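To illustrate why baseline differences in face shape must be removed, the sketch below compares raw lateral marker positions with each marker's 3-D displacement from its own neutral position. The coordinates are invented, the function name `peak_displacement` is hypothetical, and the sketch assumes that positions are already expressed in a head-fixed reference frame (i.e., head movement has been removed).

```python
import math


def peak_displacement(frames, baseline):
    """Largest 3-D Euclidean distance (mm) of a marker from its own neutral (baseline) position."""
    return max(math.dist(p, baseline) for p in frames)


# Hypothetical cheilion coordinates in mm (x = lateral, y = vertical, z = depth).
# At rest the right corner sits 2 mm further from the midline (a static shape asymmetry),
# but both corners then perform the same mirror-image movement.
left_rest, right_rest = (-25.0, 0.0, 0.0), (27.0, 0.0, 0.0)
motion = [(0.0, 0.0, 0.0), (1.5, 1.0, 0.5), (3.0, 2.0, 1.0), (4.0, 3.0, 0.0)]
left = [(left_rest[0] - dx, left_rest[1] + dy, left_rest[2] + dz) for dx, dy, dz in motion]
right = [(right_rest[0] + dx, right_rest[1] + dy, right_rest[2] + dz) for dx, dy, dz in motion]

# Raw lateral extent: the right corner looks "wider" only because of its resting position.
print(max(abs(p[0]) for p in left), max(abs(p[0]) for p in right))  # 29.0 31.0
# Baseline-corrected 3-D displacement: identical on both sides.
print(peak_displacement(left, left_rest), peak_displacement(right, right_rest))  # 5.0 5.0
```

Referencing each marker to its own neutral position makes a static left-right difference in face shape cancel out, so any remaining asymmetry reflects the movement itself.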

In light of these results, we suggest that, before firm conclusions can be drawn, emotional expressions should be studied in each of the four quadrants defined by the horizontal and vertical axes, distinguishing between spontaneous and posed displays and considering both the function for which they are expressed and the anatomical pathway (i.e., Voluntary vs. Involuntary) underlying them. Recent research indeed points to the existence of multiple interrelated networks, each associated with the processing of a specific component of emotion (i.e., generation, perception, regulation), which do not necessarily share the same lateralization patterns [50]. A recent meta-analysis revealed that the perception, experience, and expression of emotion are each subserved by a distinct network [51]. Hence, the lateralization of emotion is a multi-layered phenomenon and should be treated as such.

6.2. Posed vs. Spontaneous

Results from two experiments demonstrated that facial movements provide relevant and consistent details to characterize and distinguish between spontaneous and posed expressions. In line with our predictions, the speed and amplitude of the mouth as it widens into a smile were greater in posed than in genuine happiness. In particular, a posed smile is characterized by an increase in smile amplitude, speed, and deceleration, as indicated by the cheilion pair of markers. As for the upper part of the face, the results showed a similar increase in the Maximum Distance of the eyebrows when participants performed a posed smile compared with when they smiled spontaneously. These findings confirm and extend previous literature [35,37,52,53] by showing that performing a fake smile entails a speeded choreography of amplified movements in both the lower and upper parts of the face.

The main limitation of our experiment is that it was not possible to extract the reaction times of the individual side markers (e.g., the right vs. the left cheilion), because the video clips triggered several smiles in sequence, and it was not easy to define the end of one smile and the beginning of the next. This is a widespread problem with ecological paradigms. We therefore focused on the speed at which the pair of mouth markers moved apart: we defined movement onset as the moment at which this speed crossed a 0.2 mm/s threshold and remained above it for longer than 100 ms. This parameter ensured replicability and rigor, as it was not influenced by possible random sub-movements of a single marker, and it accounted for any head movement. However, this procedure did not allow us to determine on which side of the face posed and spontaneous smiles began. Further studies are needed to clarify this aspect. Another limitation is that dynamic stimuli (video clips) could not also be adopted for the Posed condition, because the voluntarily generated expressions would otherwise have overlapped and mixed with the genuine ones, making it impossible to tell them apart. In the past, the use of static, posed, and archetypical stimuli, such as the one we adopted here for the Posed condition, has provided high scientific control and repeatability, but at the cost of ecological validity [54]. Further studies should therefore adopt ecologically valid paradigms: in everyday life, posed expressions are produced to be perceived by another person (e.g., when mothers exaggerate their facial movements to be recognized by their infants). The next step will therefore be to use real contexts to induce posed emotional displays.
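The onset criterion described above can be sketched in Python as follows. The sampling rate, the convention that each sample lasts 1/fs seconds, and the function name `movement_onset` are assumptions made for illustration; the input is taken to be the already-computed speed signal of the cheilion pair.

```python
def movement_onset(speed, fs_hz, threshold=0.2, min_ms=100):
    """Index of movement onset, or None if no onset is found.

    Onset is the first sample at which `speed` (mm/s) exceeds `threshold` and then stays
    above it for longer than `min_ms` milliseconds, treating each sample as lasting 1/fs_hz s.
    """
    # Smallest run length (in samples) whose duration is strictly longer than min_ms.
    needed = (min_ms * fs_hz) // 1000 + 1
    run_start, run_len = None, 0
    for i, v in enumerate(speed):
        if v > threshold:
            if run_len == 0:
                run_start = i  # a new supra-threshold run begins here
            run_len += 1
            if run_len >= needed:
                return run_start
        else:
            run_len = 0  # crossings that last too briefly are discarded
    return None


# Hypothetical speed trace at 100 Hz: a 50-ms spike, then a sustained 120-ms movement.
speed = [0.0] * 5 + [0.5] * 5 + [0.0] * 3 + [0.3] * 12 + [0.0] * 2
print(movement_onset(speed, fs_hz=100))  # 13
```

Requiring the speed to stay above threshold, rather than accepting the first crossing, is what makes the criterion robust to brief noise spikes, at the cost of needing enough samples after onset to confirm it.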

This approach raises two questions: What if posed expressions that often occur in everyday life are nonetheless genuine? At what point do the benefits of ecologically valid paradigms outweigh the increase in inter-individual variability? Emotion science now faces a classic trade-off. We believe, however, that if the science of emotions remained anchored to prototypical displays and static induction methods, it would fall short of understanding the processes that evolved in response to real social contexts during the phylogenetic development of the human species. A comprehensive taxonomy of real emotional expressions will help to formulate new theories with a greater degree of complexity (for a review, see [15]).

6.3. Emotional Induction vs. Motor Contagion

Our Likert scale data indicate that both Emotional Induction and Motor Contagion were effective in eliciting a felt emotion of happiness. Moreover, the videos adopted for Motor Contagion were rated as more intense than those adopted for Emotional Induction. However, the comparison analysis of the two experiments showed no kinematic differences in spontaneous expressions depending on the induction method. Spontaneous expressions therefore seem to be conveyed by an automatic pathway that is difficult to modify. This result is in line with the notion that a genuine emotion originates from subcortical brain areas that provide excitatory input to the facial nerve nucleus via extrapyramidal motor tracts (i.e., the Involuntary Pathway). Future studies should apply this methodology to other emotions in order to investigate the full range of subtle differences in facial expressions and the role played by the Involuntary Pathway in emotion expression.

6.4. Clinical Applications

The possibility of discriminating between spontaneous and posed expressions of emotion through sophisticated analysis of facial movements has potential for future clinical applications. One example is the application of this technique to patients with Parkinson’s disease (PD), who show a deficit in the expression of genuine emotions (i.e., amimia) but are still able to produce emotional expressions intentionally [55], thus manifesting the automatic–voluntary dissociation that underlies the distinction between the Voluntary and Involuntary Pathways. From a neuroanatomical point of view, patients with PD present defective functioning of the basal ganglia [56]. The connections between the basal ganglia and the cerebral cortex form extrapyramidal circuits, which are divided into five parallel networks connected to the motor and oculomotor frontal cortices, the dorsolateral prefrontal cortex, the anterior cingulate cortex, and the orbitofrontal cortex [57]. Connections with the orbitofrontal and cingulate cortices constitute the limbic circuits, which also involve subcortical structures such as the amygdala and hippocampus [58]. This rich neural network between the basal ganglia and the structures of the limbic system links the perception of emotions, their motor production through facial expressions, and their recognition [59]. An in-depth investigation of the ability of PD patients to produce spontaneous and posed expressions, using an advanced and validated protocol for emotion induction and a sophisticated technique for data acquisition and analysis, could therefore be used to investigate the emotion expression deficits of these patients. Furthermore, future research could investigate the correlation between the deficit in emotion production and functional/structural brain alterations in PD patients, in order to identify a behavioral biomarker of disease severity.

In applied terms, once the Clepsydra model has been tested on all basic emotions and validated in a large population including people of different ethnicities, it will be possible to use it through the automatic facial recognition applications already present in the latest smartphones. This would allow the model to be applied on a large scale and to reveal subtle changes in the expression of mixed emotions (e.g., happiness and surprise).

7. Conclusions

Despite the importance of emotions in human functioning, scientists have not reached a consensus on the debated issue of the lateralization of emotions. Our research, conducted with a 3-D high-definition optoelectronic system in conjunction with FACS, showed that posed smiles were more amplified than spontaneous smiles, with maximum acceleration occurring first in the left hemiface, followed by an earlier deceleration peak in the right corner of the mouth. This result is in line with the hypothesis that the temporal dynamics of movement distinguish a posed smile from a spontaneous one: according to Ross and colleagues, posed expressions begin on the right side of the face [25]. Integrating this knowledge with our new data suggests that posed smiles might begin in the right facial area, then rapidly expand into the contralateral hemiface, before completing the movement on the right side of the face. This loop seems to indicate dual hemispheric innervation along the vertical axis, suggesting that both hemispheres exert motor control to produce an apparently unified expression [10].

Our findings may be key to resolving the apparent conflict between the various theories that have attempted to discriminate between true and false expressions of happiness, and they may help clarify the hemispheric bases of emotion expression. We believe that investigating the dynamic pattern of facial expressions of emotion, which can be consciously controlled only in part, would provide a useful operational test for comparing the predictions of the different lateralization hypotheses, and could thus help solve this long-standing conundrum.

Supplementary Materials

The following supporting information can be downloaded at: https://www.mdpi.com/article/10.3390/biology12091160/s1. Figure S1: Experiment 1. Graphical representation of spatial, speed and temporal components of movement of the face during Posed and Spontaneous expressions of happiness. Figure S2: Experiment 2. Graphical representation of spatial, speed and temporal components of movement of the face during Posed and Spontaneous expressions of happiness. Figure S3: Mixed ANOVA (comparison analysis). Graphical representation of spatial, speed and temporal components of movement of the face during Posed and Spontaneous expressions of happiness.

Author Contributions

Conceptualization, E.S., C.S. and L.S.; methodology, C.S. and L.S.; software, E.S.; validation, E.S., C.S., S.B. and L.S.; formal analysis, E.S. and A.S.; investigation, E.S., S.B. and B.C.B.; resources, L.S.; data curation, E.S. and A.S.; writing—original draft preparation, E.S. and L.S.; writing—review and editing, E.S., C.S., S.B. and L.S.; visualization, E.S., C.S., A.S., S.B., B.C.B. and L.S.; supervision, L.S.; project administration, L.S.; funding acquisition, L.S. All authors have read and agreed to the published version of the manuscript.

Funding

The study was supported by a grant from MIUR (Dipartimenti di Eccellenza DM11/05/2017 no. 262) to the Department of General Psychology. The present study was partially supported by a grant from the University of Padova (Supporting TAlent in ReSearch @ University of Padova—STARS Grants no. 2017) to C.S.

Institutional Review Board Statement

The study was conducted in accordance with the Declaration of Helsinki and approved by the Ethics Committee of University of Padova (protocol n. 3580 and date of approval 1 June 2020; protocol n. 4539 and date of approval 31 December 2021).

Informed Consent Statement

Informed consent was obtained from all subjects involved in the study.

Data Availability Statement

The dataset has been uploaded in the Supplementary Materials section.

Acknowledgments

We thank Alessio Miolla for technical support with the FACS coding.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Ekman, P. Lie Catching and Microexpressions. In The Philosophy of Deception; Martin, C.W., Ed.; Oxford University Press: New York, NY, USA, 2009; pp. 118–136. ISBN 978-0-19-532793-9.

- Koff, E.; Borod, J.; Strauss, E. Development of Hemiface Size Asymmetry. Cortex 1985, 21, 153–156.

- Rinn, W.E. The Neuropsychology of Facial Expression: A Review of the Neurological and Psychological Mechanisms for Producing Facial Expressions. Psychol. Bull. 1984, 95, 52–77.

- Morecraft, R.J.; Stilwell-Morecraft, K.S.; Rossing, W.R. The Motor Cortex and Facial Expression: New Insights From Neuroscience. Neurologist 2004, 10, 235–249.

- Ross, E.D.; Gupta, S.S.; Adnan, A.M.; Holden, T.L.; Havlicek, J.; Radhakrishnan, S. Neurophysiology of Spontaneous Facial Expressions: I. Motor Control of the Upper and Lower Face Is Behaviorally Independent in Adults. Cortex J. Devoted Study Nerv. Syst. Behav. 2016, 76, 28–42.

- Morecraft, R.J.; Louie, J.L.; Herrick, J.L.; Stilwell-Morecraft, K.S. Cortical Innervation of the Facial Nucleus in the Non-Human Primate: A New Interpretation of the Effects of Stroke and Related Subtotal Brain Trauma on the Muscles of Facial Expression. Brain J. Neurol. 2001, 124, 176–208.

- Gazzaniga, M.S.; Smylie, C.S. Hemispheric Mechanisms Controlling Voluntary and Spontaneous Facial Expressions. J. Cogn. Neurosci. 1990, 2, 239–245.

- Hopf, H.C.; Müller-Forell, W.; Hopf, N.J. Localization of Emotional and Volitional Facial Paresis. Neurology 1992, 42, 1918.

- Krippl, M.; Karim, A.A.; Brechmann, A. Neuronal Correlates of Voluntary Facial Movements. Front. Hum. Neurosci. 2015, 9, 598.

- Ross, E.D.; Gupta, S.S.; Adnan, A.M.; Holden, T.L.; Havlicek, J.; Radhakrishnan, S. Neurophysiology of Spontaneous Facial Expressions: II. Motor Control of the Right and Left Face Is Partially Independent in Adults. Cortex 2019, 111, 164–182.

- Demaree, H.A.; Everhart, D.E.; Youngstrom, E.A.; Harrison, D.W. Brain Lateralization of Emotional Processing: Historical Roots and a Future Incorporating “Dominance”. Behav. Cogn. Neurosci. Rev. 2005, 4, 3–20.

- Killgore, W.D.S.; Yurgelun-Todd, D.A. The Right-Hemisphere and Valence Hypotheses: Could They Both Be Right (and Sometimes Left)? Soc. Cogn. Affect. Neurosci. 2007, 2, 240–250.

- Davidson, R.J. Affect, Cognition, and Hemispheric Specialization. In Emotions, Cognition, and Behavior; Cambridge University Press: New York, NY, USA, 1985; pp. 320–365. ISBN 978-0-521-25601-8.

- Ross, E.D.; Prodan, C.I.; Monnot, M. Human Facial Expressions Are Organized Functionally Across the Upper-Lower Facial Axis. Neuroscientist 2007, 13, 433–446.

- Straulino, E.; Scarpazza, C.; Sartori, L. What Is Missing in the Study of Emotion Expression? Front. Psychol. 2023, 14, 1158136.

- Sackeim, H.A.; Gur, R.C.; Saucy, M.C. Emotions Are Expressed More Intensely on the Left Side of the Face. Science 1978, 202, 434–436.

- Ekman, P.; Hager, J.C.; Friesen, W.V. The Symmetry of Emotional and Deliberate Facial Actions. Psychophysiology 1981, 18, 101–106.

- Borod, J.C.; Cicero, B.A.; Obler, L.K.; Welkowitz, J.; Erhan, H.M.; Santschi, C.; Grunwald, I.S.; Agosti, R.M.; Whalen, J.R. Right Hemisphere Emotional Perception: Evidence across Multiple Channels. Neuropsychology 1998, 12, 446–458.

- Borod, J.C.; Koff, E.; White, B. Facial Asymmetry in Posed and Spontaneous Expressions of Emotion. Brain Cogn. 1983, 2, 165–175.

- Thompson, J.K. Right Brain, Left Brain; Left Face, Right Face: Hemisphericity and the Expression of Facial Emotion. Cortex J. Devoted Study Nerv. Syst. Behav. 1985, 21, 281–299.

- Ross, E.D. Differential Hemispheric Lateralization of Emotions and Related Display Behaviors: Emotion-Type Hypothesis. Brain Sci. 2021, 11, 1034.

- Duchenne de Boulogne, G.-B. The Mechanism of Human Facial Expression; Cuthbertson, R.A., Ed.; Studies in Emotion and Social Interaction; Cambridge University Press: Cambridge, UK, 1990; ISBN 978-0-521-36392-1.

- Ekman, P.; Friesen, W.V. Felt, False, and Miserable Smiles. J. Nonverbal Behav. 1982, 6, 238–252.

- Ekman, P.; Friesen, W.V.; O’Sullivan, M. Smiles When Lying. J. Pers. Soc. Psychol. 1988, 54, 414–420.

- Ross, E.D.; Pulusu, V.K. Posed versus Spontaneous Facial Expressions Are Modulated by Opposite Cerebral Hemispheres. Cortex 2013, 49, 1280–1291.

- Barrett, L.F.; Adolphs, R.; Marsella, S.; Martinez, A.M.; Pollak, S.D. Emotional Expressions Reconsidered: Challenges to Inferring Emotion From Human Facial Movements. Psychol. Sci. Public Interest J. Am. Psychol. Soc. 2019, 20, 1–68.

- Siedlecka, E.; Denson, T.F. Experimental Methods for Inducing Basic Emotions: A Qualitative Review. Emot. Rev. 2019, 11, 87–97.

- Kavanagh, L.C.; Winkielman, P. The Functionality of Spontaneous Mimicry and Its Influences on Affiliation: An Implicit Socialization Account. Front. Psychol. 2016, 7, 458.

- Prochazkova, E.; Kret, M.E. Connecting Minds and Sharing Emotions through Mimicry: A Neurocognitive Model of Emotional Contagion. Neurosci. Biobehav. Rev. 2017, 80, 99–114.

- Rizzolatti, G.; Fogassi, L.; Gallese, V. Neurophysiological Mechanisms Underlying the Understanding and Imitation of Action. Nat. Rev. Neurosci. 2001, 2, 661–670.

- Hess, U.; Fischer, A. Emotional Mimicry: Why and When We Mimic Emotions. Soc. Personal. Psychol. Compass 2014, 8, 45–57.

- Straulino, E.; Scarpazza, C.; Miolla, A.; Spoto, A.; Betti, S.; Sartori, L. Different Induction Methods Reveal the Kinematic Features of Posed vs. Spontaneous Expressions. under review.

- Ekman, P.; Friesen, W. Facial Action Coding System: A Technique for the Measurement of Facial Movement; Consulting Psychologists Press: Palo Alto, CA, USA, 1978.

- Straulino, E.; Miolla, A.; Scarpazza, C.; Sartori, L. Facial Kinematics of Spontaneous and Posed Expression of Emotions. In Proceedings of the International Society for Research on Emotion—ISRE 2022, Los Angeles, CA, USA, 15–18 July 2022.

- Sowden, S.; Schuster, B.A.; Keating, C.T.; Fraser, D.S.; Cook, J.L. The Role of Movement Kinematics in Facial Emotion Expression Production and Recognition. Emot. Wash. DC 2021, 21, 1041–1061.

- Zane, E.; Yang, Z.; Pozzan, L.; Guha, T.; Narayanan, S.; Grossman, R.B. Motion-Capture Patterns of Voluntarily Mimicked Dynamic Facial Expressions in Children and Adolescents With and Without ASD. J. Autism Dev. Disord. 2019, 49, 1062–1079.

- Guo, H.; Zhang, X.-H.; Liang, J.; Yan, W.-J. The Dynamic Features of Lip Corners in Genuine and Posed Smiles. Front. Psychol. 2018, 9, 202.

- Fox, D. The Scientists Studying Facial Expressions. Nature 2020. Online ahead of print.

- Ekman, P.; Friesen, W.V. Pictures of Facial Affect; Consulting Psychologists Press: Palo Alto, CA, USA, 1976.

- Miolla, A.; Cardaioli, M.; Scarpazza, C. Padova Emotional Dataset of Facial Expressions (PEDFE): A Unique Dataset of Genuine and Posed Emotional Facial Expressions. Behav. Res. Methods 2022.

- Le Mau, T.; Hoemann, K.; Lyons, S.H.; Fugate, J.M.B.; Brown, E.N.; Gendron, M.; Barrett, L.F. Professional Actors Demonstrate Variability, Not Stereotypical Expressions, When Portraying Emotional States in Photographs. Nat. Commun. 2021, 12, 5037.

- Vimercati, S.L.; Rigoldi, C.; Albertini, G.; Crivellini, M.; Galli, M. Quantitative Evaluation of Facial Movement and Morphology. Ann. Otol. Rhinol. Laryngol. 2012, 121, 246–252.

- Ceccarini, F.; Castiello, U. The Grasping Side of Post-Error Slowing. Cognition 2018, 179, 1–13.

- JASP Team. JASP (Version 0.16.4) [Computer Software]; 2022. Available online: https://jasp-stats.org/faq/how-do-i-cite-jasp/ (accessed on 18 July 2023).

- Sellke, T.; Bayarri, M.J.; Berger, J.O. Calibration of p Values for Testing Precise Null Hypotheses. Am. Stat. 2001, 55, 62–71.

- Erdfelder, E.; Faul, F.; Buchner, A. GPOWER: A General Power Analysis Program. Behav. Res. Methods Instrum. Comput. 1996, 28, 1–11.

- Sidequersky, F.V.; Mapelli, A.; Annoni, I.; Zago, M.; De Felício, C.M.; Sforza, C. Three-Dimensional Motion Analysis of Facial Movement during Verbal and Nonverbal Expressions in Healthy Subjects. Clin. Anat. 2016, 29, 991–997.

- Gross, J.J.; Levenson, R.W. Emotion Elicitation Using Films. Cogn. Emot. 1995, 9, 87–108.

- Ambadar, Z.; Cohn, J.F.; Reed, L.I. All Smiles Are Not Created Equal: Morphology and Timing of Smiles Perceived as Amused, Polite, and Embarrassed/Nervous. J. Nonverbal Behav. 2009, 33, 17–34.

- Palomero-Gallagher, N.; Amunts, K. A Short Review on Emotion Processing: A Lateralized Network of Neuronal Networks. Brain Struct. Funct. 2022, 227, 673–684.

- Morawetz, C.; Riedel, M.C.; Salo, T.; Berboth, S.; Eickhoff, S.B.; Laird, A.R.; Kohn, N. Multiple Large-Scale Neural Networks Underlying Emotion Regulation. Neurosci. Biobehav. Rev. 2020, 116, 382–395.

- Schmidt, K.L.; Ambadar, Z.; Cohn, J.F.; Reed, L.I. Movement Differences between Deliberate and Spontaneous Facial Expressions: Zygomaticus Major Action in Smiling. J. Nonverbal Behav. 2006, 30, 37–52.

- Schmidt, K.L.; Bhattacharya, S.; Denlinger, R. Comparison of Deliberate and Spontaneous Facial Movement in Smiles and Eyebrow Raises. J. Nonverbal Behav. 2009, 33, 35–45.

- Kret, M.E.; De Gelder, B. A Review on Sex Differences in Processing Emotional Signals. Neuropsychologia 2012, 50, 1211–1221.

- Smith, M.C.; Smith, M.K.; Ellgring, H. Spontaneous and Posed Facial Expression in Parkinson’s Disease. J. Int. Neuropsychol. Soc. JINS 1996, 2, 383–391.

- Blandini, F.; Nappi, G.; Tassorelli, C.; Martignoni, E. Functional Changes of the Basal Ganglia Circuitry in Parkinson’s Disease. Prog. Neurobiol. 2000, 62, 63–88.

- Alexander, G.E.; Crutcher, M.D. Functional Architecture of Basal Ganglia Circuits: Neural Substrates of Parallel Processing. Trends Neurosci. 1990, 13, 266–271.

- McDonald, A.J. Organization of Amygdaloid Projections to the Prefrontal Cortex and Associated Striatum in the Rat. Neuroscience 1991, 44, 1–14.

- Adolphs, R. Recognizing Emotion from Facial Expressions: Psychological and Neurological Mechanisms. Behav. Cogn. Neurosci. Rev. 2002, 1, 21–62.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).