Cardiac Healthcare Digital Twins Supported by Artificial Intelligence-Based Algorithms and Extended Reality—A Systematic Review

Abstract

1. Introduction

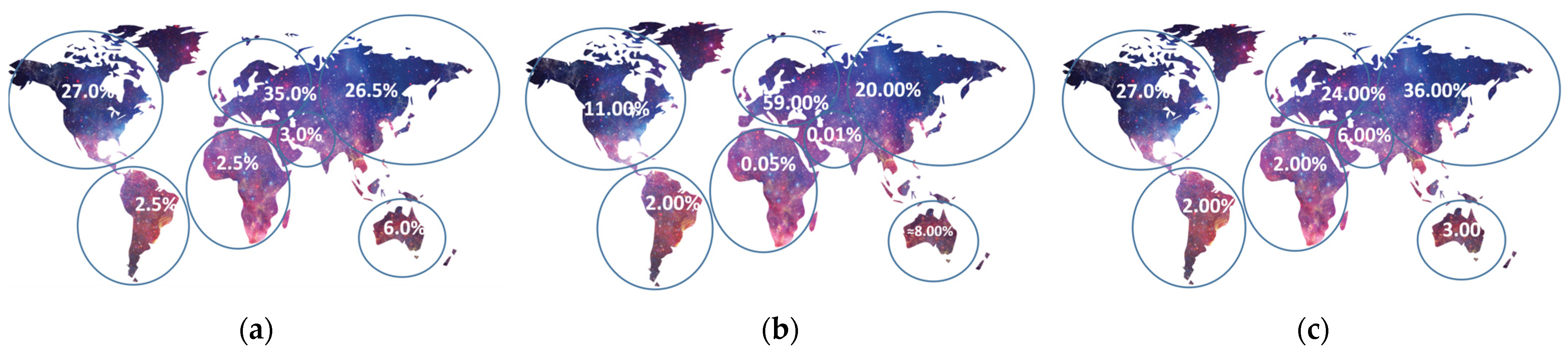

2. Materials and Methods

- (1)

- Duplicate and non-relevant records were removed;

- (2)

- Resources whose titles and abstracts were not relevant to the topic were excluded;

- (3)

- Non-retrieved resources were removed;

- (4)

- Conference papers, reviews, Ph.D. theses, and sources that did not contain information about the Metaverse, AI, and XR in the context of cardiology used were excluded.

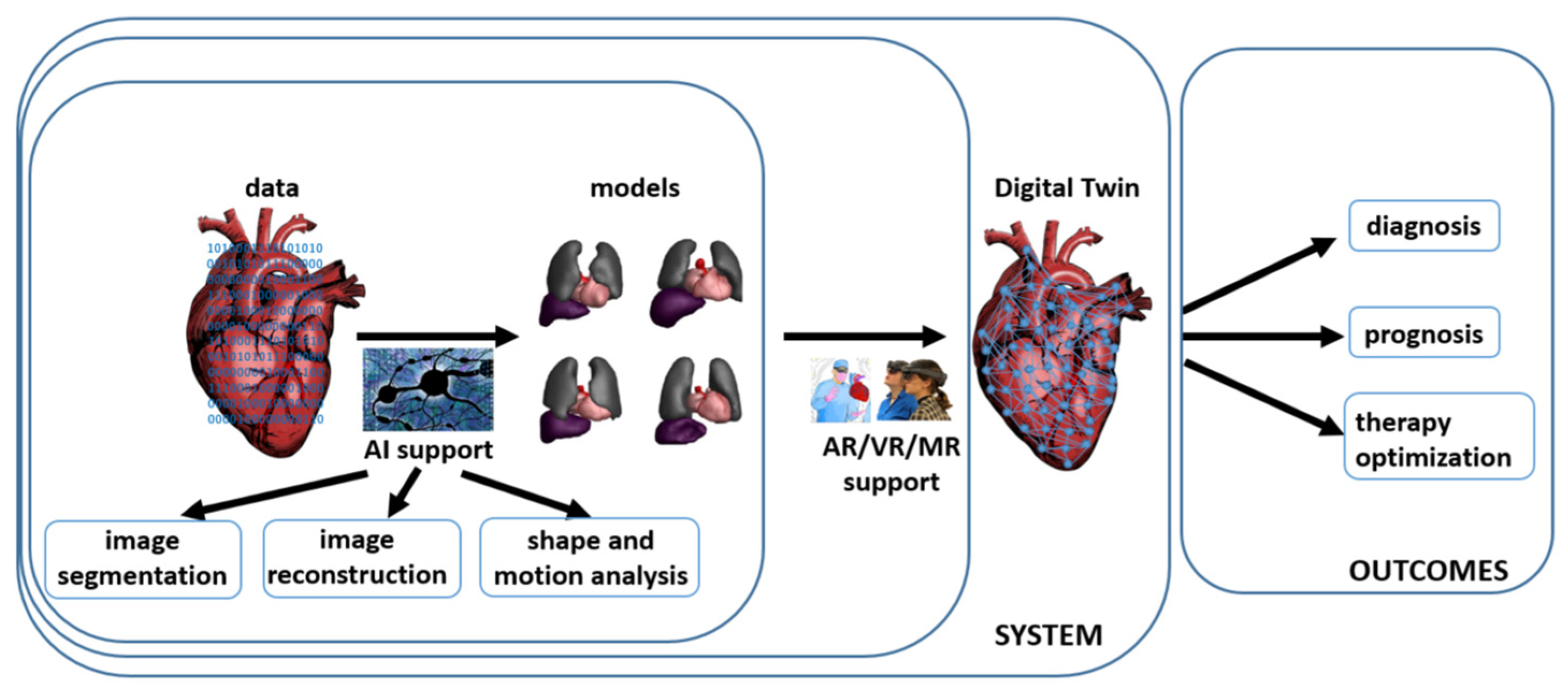

3. Digital Twins in Cardiology—The Heart Digital Twin

4. Extended Reality in Cardiology

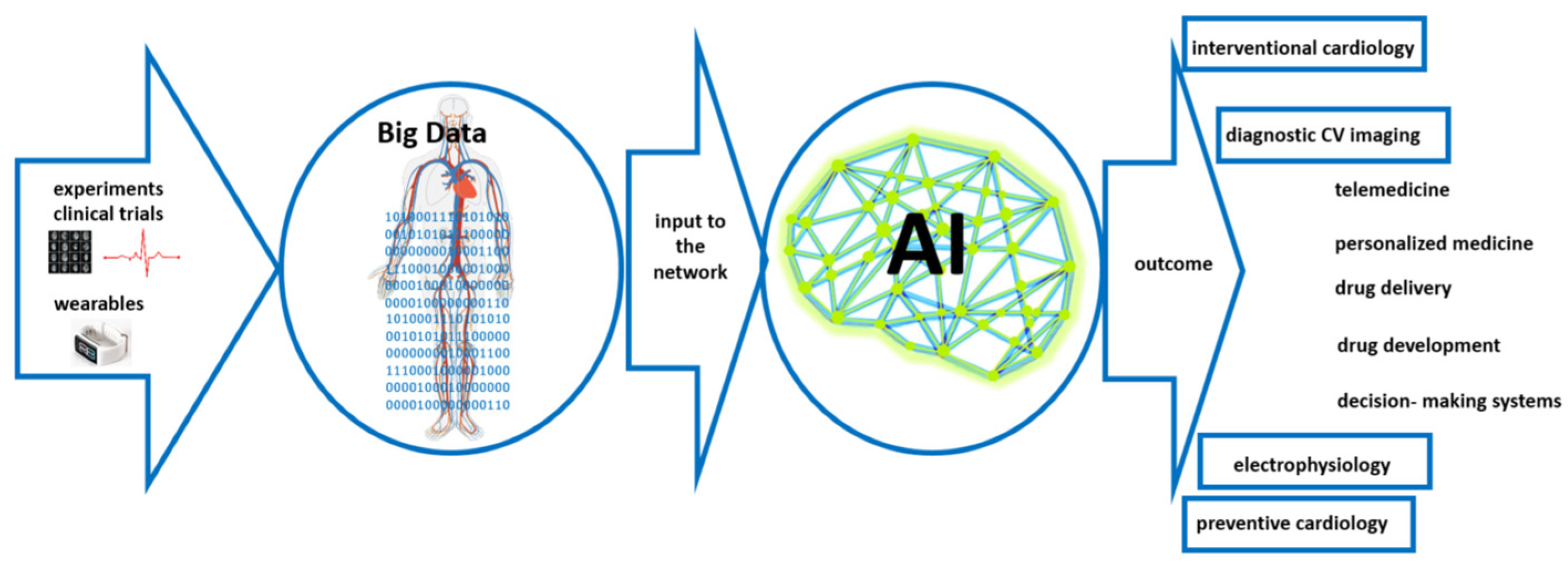

5. Artificial Intelligence-Based Support in Cardiology

5.1. Application of the You-Only-Look-Once (YOLO) Algorithm

5.2. Genetic Algorithms

5.3. Artificial Neural Networks

5.4. Convolutional Neural Networks

5.5. Recurrent Neural Networks

5.6. Spiking Neural Networks

5.7. Generative Adversarial Networks

5.8. Graph Neural Networks

5.9. Transformers

5.10. Quantum Neural Networks

5.11. Evaluation Metrics in Medical Image Segmentation

6. Data and Data Security Issues Connected with the Metaverse and Artificial Intelligence

7. Ethical Issues Connected with the Metaverse and Artificial Intelligence

8. Discussion and Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

References

- Kamel Boulos, M.N.; Zhang, P. Digital Twins: From Personalised Medicine to Precision Public Health. J. Pers. Med. 2021, 11, 745. [Google Scholar] [CrossRef] [PubMed]

- Sabri, A.; Sönmez, U. Digital Twin in Health Care. In Digital Twin Driven Intelligent Systems and Emerging Metaverse; Enis, K., Aydin, Ö., Cali, Ü., Challenger, M., Eds.; Springer Nature: Singapore, 2023; pp. 209–231. [Google Scholar] [CrossRef]

- Venkatesh, K.P.; Brito, G.; Kamel Boulos, M.N. Health Digital Twins in Life Science and Health Care Innovation. Annu. Rev. Pharmacol. Toxicol. 2024, 64, 159–170. [Google Scholar] [CrossRef] [PubMed]

- Tortorella, G.L.; Fogliatto, F.S.; Mac Cawley Vergara, A.; Vassolo, R.; Sawhney, R. Healthcare 4.0: Trends, Challenges and Research Directions. Prod. Plan. Control 2020, 31, 1245–1260. [Google Scholar] [CrossRef]

- Duque, R.; Bravo, C.; Bringas, S.; Postigo, D. Leveraging a Visual Language for the Awareness-Based Design of Interaction Requirements in Digital Twins. Future Gener. Comput. Syst. 2024, 153, 41–51. [Google Scholar] [CrossRef]

- Logeswaran, A.; Munsch, C.; Chong, Y.J.; Ralph, N.; McCrossnan, J. The Role of Extended Reality Technology in Healthcare Education: Towards a Learner-Centred Approach. Future Health J. 2021, 8, e79–e84. [Google Scholar] [CrossRef]

- Castille, J.; Remy, S.; Vermue, H.; Victor, J. The Use of Virtual Reality to Assess the Bony Landmarks at the Knee Joint—The Role of Imaging Modality and the Assessor’s Experience. Knee 2024, 46, 41–51. [Google Scholar] [CrossRef]

- Marrone, S.; Costanzo, R.; Campisi, B.M.; Avallone, C.; Buscemi, F.; Cusimano, L.M.; Bonosi, L.; Brunasso, L.; Scalia, G.; Iacopino, D.G.; et al. The Role of Extended Reality in Eloquent Area Lesions: A Systematic Review. Neurosurg. Focus 2023, 56, E16. [Google Scholar] [CrossRef]

- Cai, Y.; Wu, X.; Cao, Q.; Zhang, X.; Pregowska, A.; Osial, M.; Dolega-Dolegowski, D.; Kolecki, R.; Proniewska, K. Information and Communication Technologies Combined with Mixed Reality as Supporting Tools in Medical Education. Electronics 2022, 11, 3778. [Google Scholar] [CrossRef]

- Garlinska, M.; Osial, M.; Proniewska, K.; Pregowska, A. The Influence of Emerging Technologies on Distance Education. Electronics 2023, 12, 1550. [Google Scholar] [CrossRef]

- Hasan, M.M.; Islam, M.U.; Sadeq, M.J.; Fung, W.-K.; Uddin, J. Review on the Evaluation and Development of Artificial Intelligence for COVID-19 Containment. Sensors 2023, 23, 527. [Google Scholar] [CrossRef] [PubMed]

- Hosny, K.M.; Elshoura, D.; Mohamed, E.R.; Vrochidou, E.; Papakostas, G.A. Deep Learning and Optimization-Based Methods for Skin Lesions Segmentation: A Review. IEEE Access 2023, 11, 85467–85488. [Google Scholar] [CrossRef]

- Young, M.R.; Abrams, N.; Ghosh, S.; Rinaudo, J.A.S.; Marquez, G.; Srivastava, S. Prediagnostic Image Data, Artificial Intelligence, and Pancreatic Cancer: A Tell-Tale Sign to Early Detection. Pancreas 2020, 49, 882–886. [Google Scholar] [CrossRef]

- Khayyam, H.; Madani, A.; Kafieh, R.; Hekmatnia, A.; Hameed, B.S.; Krishnan, U.M. Artificial Intelligence-Driven Diagnosis of Pancreatic Cancer. Cancers 2022, 14, 5382. [Google Scholar] [CrossRef]

- Granata, V.; Fusco, R.; Setola, S.V.; Galdiero, R.; Maggialetti, N.; Silvestro, L.; De Bellis, M.; Di Girolamo, E.; Grazzini, G.; Chiti, G.; et al. Risk Assessment and Pancreatic Cancer: Diagnostic Management and Artificial Intelligence. Cancers 2023, 15, 351. [Google Scholar] [CrossRef]

- Rethlefsen, M.L.; Kirtley, S.; Waffenschmidt, S.; Ayala, A.P.; Moher, D.; Page, M.J.; Koffel, J.B.; Group, P.-S. PRISMA-S: An Extension to the PRISMA Statement for Reporting Literature Searches in Systematic Reviews. Syst. Rev. 2021, 10, 39. [Google Scholar] [CrossRef] [PubMed]

- Garg, A.; Mou, J.; Su, S.; Gao, L. Reconfigurable Battery Systems: Challenges and Safety Solutions Using Intelligent System Framework Based on Digital Twins. IET Collab. Intell. Manuf. 2022, 4, 232–248. [Google Scholar] [CrossRef]

- Subasi, A.; Subasi, M.E. Digital Twins in Healthcare and Biomedicine. In Artificial Intelligence, Big Data, Blockchain and 5G for the Digital Transformation of the Healthcare Industry: A Movement Toward More Resilient and Inclusive Societies; Academic Press: London, UK, 2024; pp. 365–401. [Google Scholar] [CrossRef]

- Banerjee, S.; Jesubalan, N.G.; Kulkarni, A.; Agarwal, A.; Rathore, A.S. Developing cyber-physical system and digital twin for smart manufacturing: Methodology and case study of continuous clarification. J. Ind. Inf. Integr. 2024, 38, 100577. [Google Scholar] [CrossRef]

- Capriulo, M.; Pizzolla, I.; Briganti, G. On the Use of Patient-Reported Measures in Digital Medicine to Increase Healthcare Resilience. In Artificial Intelligence, Big Data, Blockchain and 5G for the Digital Transformation of the Healthcare Industry: A Movement Toward More Resilient and Inclusive Societies; Academic Press: London, UK, 2024; pp. 41–66. [Google Scholar] [CrossRef]

- Jarrett, A.M.; Hormuth, D.A.; Wu, C.; Kazerouni, A.S.; Ekrut, D.A.; Virostko, J.; Sorace, A.G.; DiCarlo, J.C.; Kowalski, J.; Patt, D.; et al. Evaluating Patient-Specific Neoadjuvant Regimens for Breast Cancer via a Mathematical Model Constrained by Quantitative Magnetic Resonance Imaging Data. Neoplasia 2020, 22, 820–830. [Google Scholar] [CrossRef] [PubMed]

- Scheufele, K.; Subramanian, S.; Biros, G. Fully Automatic Calibration of Tumor-Growth Models Using a Single MpMRI Scan. IEEE Trans. Med. Imaging 2020, 40, 193–204. [Google Scholar] [CrossRef]

- Lorenzo, G.; Heiselman, J.S.; Liss, M.A.; Miga, M.I.; Gomez, H.; Yankeelov, T.E.; Reali, A.; Hughes, T.J.R.; Lorenzo, G. Patient-Specific Computational Forecasting of Prostate Cancer Growth during Active Surveillance Using an Imaging-Informed Biomechanistic Model. arXiv 2023, arXiv:2310.00060. [Google Scholar]

- Wu, C.; Jarrett, A.M.; Zhou, Z.; Elshafeey, N.; Adrada, B.E.; Candelaria, R.P.; Mohamed, R.M.M.; Boge, M.; Huo, L.; White, J.B.; et al. MRI-Based Digital Models Forecast Patient-Specific Treatment Responses to Neoadjuvant Chemotherapy in Triple-Negative Breast Cancer. Cancer Res. 2022, 82, 3394–3404. [Google Scholar] [CrossRef] [PubMed]

- Azzaoui, A.E.; Kim, T.W.; Loia, V.; Park, J.H. Blockchain-Based Secure Digital Twin Framework for Smart Healthy City. In Advanced Multimedia and Ubiquitous Engineering; Park, J.J., Loia, V., Pan, Y., Sung, Y., Eds.; Springer: Singapore, 2021; pp. 107–113. [Google Scholar]

- Croatti, A.; Gabellini, M.; Montagna, S.; Ricci, A. On the Integration of Agents and Digital Twins in Healthcare. J. Med. Syst. 2020, 44, 161. [Google Scholar] [CrossRef] [PubMed]

- Corral-Acero, J.; Margara, F.; Marciniak, M.; Rodero, C.; Loncaric, F.; Feng, Y.; Gilbert, A.; Fernandes, J.F.; Bukhari, H.A.; Wajdan, A.; et al. The “Digital Twin” to Enable the Vision of Precision Cardiology. Eur. Heart J. 2020, 41, 4556–4564B. [Google Scholar] [CrossRef] [PubMed]

- Gerach, T.; Schuler, S.; Fröhlich, J.; Lindner, L.; Kovacheva, E.; Moss, R.; Wülfers, E.M.; Seemann, G.; Wieners, C.; Loewe, A. Mathematics Electro-Mechanical Whole-Heart Digital Twins: A Fully Coupled Multi-Physics Approach. Mathematics 2021, 9, 1247. [Google Scholar] [CrossRef]

- Laita, N.; Rosales, R.M.; Wu, M.; Claus, P.; Janssens, S.; Martínez, M.Á.; Doblaré, M.; Peña, E. On Modeling the in Vivo Ventricular Passive Mechanical Behavior from in Vitro Experimental Properties in Porcine Hearts. Comput. Struct. 2024, 292, 107241. [Google Scholar] [CrossRef]

- Chen, Y.C.; Zheng, G.; Donner, D.G.; Wright, D.K.; Greenwood, J.P.; Marwick, T.H.; McMullen, J.R. Cardiovascular Magnetic Resonance Imaging for Sequential Assessment of Cardiac Fibrosis in Mice: Technical Advancements and Reverse Translation. Am. J. Physiol. Heart Circ. Physiol. 2024, 326, H1–H24. [Google Scholar] [CrossRef] [PubMed]

- Kouzehkonan, V.G.; Paul Finn, J. Artificial Intelligence in Cardiac MRI. In Intelligence-Based Cardiology and Cardiac Surgery: Artificial Intelligence and Human Cognition in Cardiovascular Medicine; Academic Press: London, UK, 2024; pp. 191–199. [Google Scholar] [CrossRef]

- Brent Woodland, M.; Ong, J.; Zaman, N.; Hirzallah, M.; Waisberg, E.; Masalkhi, M.; Kamran, S.A.; Lee, A.G.; Tavakkoli, A. Applications of Extended Reality in Spaceflight for Human Health and Performance. Acta Astronaut. 2024, 214, 748–756. [Google Scholar] [CrossRef]

- Schöne, B.; Kisker, J.; Lange, L.; Gruber, T.; Sylvester, S.; Osinsky, R. The Reality of Virtual Reality. Front. Psychol. 2023, 14, 1093014. [Google Scholar] [CrossRef]

- Chessa, M.; Van De Bruaene, A.; Farooqi, K.; Valverde, I.; Jung, C.; Votta, E.; Sturla, F.; Diller, G.P.; Brida, M.; Sun, Z.; et al. Three-Dimensional Printing, Holograms, Computational Modelling, and Artificial Intelligence for Adult Congenital Heart Disease Care: An Exciting Future. Eur. Heart J. 2022, 43, 2672–2684. [Google Scholar] [CrossRef]

- Willaert, W.I.M.; Aggarwal, R.; Van Herzeele, I.; Cheshire, N.J.; Vermassen, F.E. Recent Advancements in Medical Simulation: Patient-Specific Virtual Reality Simulation. World J. Surg. 2012, 36, 1703–1712. [Google Scholar] [CrossRef]

- Rad, A.A.; Vardanyan, R.; Lopuszko, A.; Alt, C.; Stoffels, I.; Schmack, B.; Ruhparwar, A.; Zhigalov, K.; Zubarevich, A.; Weymann, A. Virtual and Augmented Reality in Cardiac Surgery. Braz. J. Cardiovasc. Surg. 2022, 37, 123–127. [Google Scholar] [CrossRef]

- Iannotta, M.; Angelo d’Aiello, F.; Van De Bruaene, A.; Caruso, R.; Conte, G.; Ferrero, P.; Bassareo, P.P.; Pasqualin, G.; Chiarello, C.; Militaru, C.; et al. Modern Tools in Congenital Heart Disease Imaging and Procedure Planning: A European Survey. J. Cardiovasc. Med. 2024, 25, 76–87. [Google Scholar] [CrossRef]

- Gałeczka, M.; Smerdziński, S.; Tyc, F.; Fiszer, R. Virtual Reality for Transcatheter Procedure Planning in Congenital Heart Disease. Kardiol. Pol. 2023, 81, 1026–1027. [Google Scholar] [CrossRef] [PubMed]

- Priya, S.; La Russa, D.; Walling, A.; Goetz, S.; Hartig, T.; Khayat, A.; Gupta, P.; Nagpal, P.; Ashwath, R. “From Vision to Reality: Virtual Reality’s Impact on Baffle Planning in Congenital Heart Disease”. Pediatr. Cardiol. 2023, 45, 165–174. [Google Scholar] [CrossRef]

- Stepanenko, A.; Perez, L.M.; Ferre, J.C.; Ybarra Falcón, C.; Pérez de la Sota, E.; San Roman, J.A.; Redondo Diéguez, A.; Baladron, C. 3D Virtual Modelling, 3D Printing and Extended Reality for Planning of Implant Procedure of Short-Term and Long-Term Mechanical Circulatory Support Devices and Heart Transplantation. Front. Cardiovasc. Med. 2023, 10, 1191705. [Google Scholar] [CrossRef]

- Ghosh, R.M.; Mascio, C.E.; Rome, J.J.; Jolley, M.A.; Whitehead, K.K. Use of Virtual Reality for Hybrid Closure of Multiple Ventricular Septal Defects. JACC Case Rep. 2021, 3, 1579–1583. [Google Scholar] [CrossRef] [PubMed]

- Battal, A.; Taşdelen, A. The Use of Virtual Worlds in the Field of Education: A Bibliometric Study. Particip. Educ. Res. 2023, 10, 408–423. [Google Scholar] [CrossRef]

- Eves, J.; Sudarsanam, A.; Shalhoub, J.; Amiras, D. Augmented Reality in Vascular and Endovascular Surgery: Scoping Review. JMIR Serious Games 2022, 10, e34501. [Google Scholar] [CrossRef]

- Chahine, J.; Mascarenhas, L.; George, S.A.; Bartos, J.; Yannopoulos, D.; Raveendran, G.; Gurevich, S. Effects of a Mixed-Reality Headset on Procedural Outcomes in the Cardiac Catheterization Laboratory. Cardiovasc. Revascularization Med. 2022, 45, 3–8. [Google Scholar] [CrossRef]

- Ghlichi Moghaddam, N.; Namazinia, M.; Hajiabadi, F.; Mazlum, S.R. The Efficacy of Phase I Cardiac Rehabilitation Training Based on Augmented Reality on the Self-Efficacy of Patients Undergoing Coronary Artery Bypass Graft Surgery: A Randomized Clinical Trial. BMC Sports Sci. Med. Rehabil. 2023, 15, 156. [Google Scholar] [CrossRef]

- Vernemmen, I.; Van Steenkiste, G.; Hauspie, S.; De Lange, L.; Buschmann, E.; Schauvliege, S.; Van den Broeck, W.; Decloedt, A.; Vanderperren, K.; van Loon, G. Development of a Three-Dimensional Computer Model of the Equine Heart Using a Polyurethane Casting Technique and in Vivo Contrast-Enhanced Computed Tomography. J. Vet. Cardiol. 2024, 51, 72–85. [Google Scholar] [CrossRef]

- Alonso-Felipe, M.; Aguiar-Pérez, J.M.; Pérez-Juárez, M.Á.; Baladrón, C.; Peral-Oliveira, J.; Amat-Santos, I.J. Application of Mixed Reality to Ultrasound-Guided Femoral Arterial Cannulation During Real-Time Practice in Cardiac Interventions. J. Health Inf. Res. 2023, 7, 527–541. [Google Scholar] [CrossRef]

- Bloom, D.; Colombo, J.N.; Miller, N.; Southworth, M.K.; Andrews, C.; Henry, A.; Orr, W.B.; Silva, J.R.; Avari Silva, J.N. Early Preclinical Experience of a Mixed Reality Ultrasound System with Active GUIDance for NEedle-Based Interventions: The GUIDE Study. Cardiovasc. Digit. Health J. 2022, 3, 232–240. [Google Scholar] [CrossRef]

- Syahputra, M.F.; Zanury, R.; Andayani, U.; Hardi, S.M. Heart Disease Simulation with Mixed Reality Technology. J. Phys. Conf. Ser. 2021, 1898, 012025. [Google Scholar] [CrossRef]

- Proniewska, K.; Khokhar, A.A.; Dudek, D. Advanced Imaging in Interventional Cardiology: Mixed Reality to Optimize Preprocedural Planning and Intraprocedural Monitoring. Kardiol. Pol. 2021, 79, 331–335. [Google Scholar] [CrossRef]

- Brun, H.; Bugge, R.A.B.; Suther, L.K.R.; Birkeland, S.; Kumar, R.; Pelanis, E.; Elle, O.J. Mixed Reality Holograms for Heart Surgery Planning: First User Experience in Congenital Heart Disease. Eur. Heart J. Cardiovasc. Imaging 2019, 20, 883–888. [Google Scholar] [CrossRef] [PubMed]

- Southworth, M.K.; Silva, J.R.; Silva, J.N.A. Use of Extended Realities in Cardiology. In Trends in Cardiovascular Medicine; Elsevier Inc.: Amsterdam, The Netherlands, 2020; pp. 143–148. [Google Scholar] [CrossRef]

- Salavitabar, A.; Zampi, J.D.; Thomas, C.; Zanaboni, D.; Les, A.; Lowery, R.; Yu, S.; Whiteside, W. Augmented Reality Visualization of 3D Rotational Angiography in Congenital Heart Disease: A Comparative Study to Standard Computer Visualization. Pediatr. Cardiol. 2023, 1–8. [Google Scholar] [CrossRef] [PubMed]

- Hemanth, J.D.; Kose, U.; Deperlioglu, O.; de Albuquerque, V.H.C. An Augmented Reality-Supported Mobile Application for Diagnosis of Heart Diseases. J. Supercomput. 2020, 76, 1242–1267. [Google Scholar] [CrossRef]

- Yhdego, H.; Kidane, N.; Mckenzie, F.; Audette, M. Development of Deep-Learning Models for a Hybrid Simulation of Auscultation Training on Standard Patients Using an ECG-Based Virtual Pathology Stethoscope. Simulation 2023, 99, 903–915. [Google Scholar] [CrossRef]

- Tahri, S.M.; Al-Thani, D.; Elshazly, M.B.; Al-Hijji, M. A Blueprint for an AI & AR-Based Eye Tracking System to Train Cardiology Professionals Better Interpret Electrocardiograms. In Persuasive Technology; Baghaei, N., Vassileva, J., Ali, R., Oyibo, K., Eds.; Springer International Publishing: Berlin/Heidelberg, Germany, 2022; pp. 221–229. [Google Scholar]

- Bamps, K.; De Buck, S.; Ector, J. Deep Learning Based Tracked X-Ray for Surgery Guidance. Comput. Methods Biomech. Biomed. Eng. Imaging Vis. 2022, 10, 339–347. [Google Scholar] [CrossRef]

- Kieu, V.; Sumski, C.; Cohen, S.; Reinhardt, E.; Axelrod, D.M.; Handler, S.S. The Use of Virtual Reality Learning on Transition Education in Adolescents with Congenital Heart Disease. Pediatr. Cardiol. 2023, 44, 1856–1860. [Google Scholar] [CrossRef]

- Pham, J.; Kong, F.; James, D.L.; Marsden, A.L. Virtual shape-editing of patient-specific vascular models using Regularized Kelvinlets. IEEE Trans. Biomed. Eng. 2024, 1–14. [Google Scholar] [CrossRef] [PubMed]

- Skalidis, I.; Salihu, A.; Kachrimanidis, I.; Koliastasis, L.; Maurizi, N.; Dayer, N.; Muller, O.; Fournier, S.; Hamilos, M.; Skalidis, E. Meta-CathLab: A Paradigm Shift in Interventional Cardiology Within the Metaverse. Can. J. Cardiol. 2023, 39, 1549–1552. [Google Scholar] [CrossRef]

- Huang, J.; Ren, L.; Feng, L.; Yang, F.; Yang, L.; Yan, K. AI Empowered Virtual Reality Integrated Systems for Sleep Stage Classification and Quality Enhancement. IEEE Trans. Neural. Syst. Rehabil. Eng. 2022, 30, 1494–1503. [Google Scholar] [CrossRef]

- García Fierros, F.J.; Moreno Escobar, J.J.; Sepúlveda Cervantes, G.; Morales Matamoros, O.; Tejeida Padilla, R. VirtualCPR: Virtual Reality Mobile Application for Training in Cardiopulmonary Resuscitation Techniques. Sensors 2021, 21, 2504. [Google Scholar] [CrossRef] [PubMed]

- Fan, M.; Yang, X.; Ding, T.; Cao, Y.; Si, Q.; Bai, J.; Lin, Y.; Zhao, X. Application of Ultrasound Virtual Reality in the Diagnosis and Treatment of Cardiovascular Diseases. J. Health Eng. 2021, 9999654. [Google Scholar] [CrossRef]

- Mocan, B.; Mocan, M.; Fulea, M.; Murar, M.; Feier, H. Home-Based Robotic Upper Limbs Cardiac Telerehabilitation System. Int. J. Env. Res. Public Health 2022, 19, 11628. [Google Scholar] [CrossRef]

- Serfözö, P.D.; Sandkühler, R.; Blümke, B.; Matthisson, E.; Meier, J.; Odermatt, J.; Badertscher, P.; Sticherling, C.; Strebel, I.; Cattin, P.C.; et al. An Augmented Reality-Based Method to Assess Precordial Electrocardiogram Leads: A Feasibility Trial. Eur. Heart J. Digit. Health 2023, 4, 420–427. [Google Scholar] [CrossRef] [PubMed]

- Groninger, H.; Stewart, D.; Fisher, J.M.; Tefera, E.; Cowgill, J.; Mete, M. Virtual Reality for Pain Management in Advanced Heart Failure: A Randomized Controlled Study. Palliat. Med. 2021, 35, 2008–2016. [Google Scholar] [CrossRef]

- Pagano, T.P.; dos Santos, L.L.; Santos, V.R.; Sá, P.H.M.; da Bonfim, Y.S.; Paranhos, J.V.D.; Ortega, L.L.; Nascimento, L.F.S.; Santos, A.; Rönnau, M.M.; et al. Remote Heart Rate Prediction in Virtual Reality Head-Mounted Displays Using Machine Learning Techniques. Sensors 2022, 22, 9486. [Google Scholar] [CrossRef]

- Perrotta, A.; Alexandra Silva, P.; Martins, P.; Sainsbury, B.; Wilz, O.; Ren, J.; Green, M.; Fergie, M.; Rossa, C. Preoperative Virtual Reality Surgical Rehearsal of Renal Access during Percutaneous Nephrolithotomy: A Pilot Study. Electronics 2022, 11, 1562. [Google Scholar] [CrossRef]

- Lau, I.; Gupta, A.; Ihdayhid, A.; Sun, Z. Clinical Applications of Mixed Reality and 3D Printing in Congenital Heart Disease. Biomolecules 2022, 12, 1548. [Google Scholar] [CrossRef] [PubMed]

- Lopez-Espada, C.; Linares-Palomino, J. Mixed Reality: A Promising Technology for Therapeutic Patient Education. Vasa 2023, 52, 160–168. [Google Scholar] [CrossRef]

- El Ali, A.; Ney, R.; van Berlo, Z.M.C.; Cesar, P. Is That My Heartbeat? Measuring and Understanding Modality-Dependent Cardiac Interoception in Virtual Reality. IEEE Trans. Vis. Comput. Graph. 2023, 29, 4805–4815. [Google Scholar] [CrossRef]

- Chiang, P.; Zheng, J.; Yu, Y.; Mak, K.H.; Chui, C.K.; Cai, Y. A VR Simulator for Intracardiac Intervention. IEEE Comput. Graph. Appl. 2013, 33, 44–57. [Google Scholar] [CrossRef] [PubMed]

- Patel, N.; Costa, A.; Sanders, S.P.; Ezon, D. Stereoscopic Virtual Reality Does Not Improve Knowledge Acquisition of Congenital Heart Disease. Int. J. Cardiovasc. Imaging 2021, 37, 2283–2290. [Google Scholar] [CrossRef]

- Lim, T.R.; Wilson, H.C.; Axelrod, D.M.; Werho, D.K.; Handler, S.S.; Yu, S.; Afton, K.; Lowery, R.; Mullan, P.B.; Cooke, J.; et al. Virtual Reality Curriculum Increases Paediatric Residents’ Knowledge of CHDs. Cardiol. Young 2023, 33, 410–414. [Google Scholar] [CrossRef]

- O’Sullivan, D.M.; Foley, R.; Proctor, K.; Gallagher, S.; Deery, A.; Eidem, B.W.; McMahon, C.J. The Use of Virtual Reality Echocardiography in Medical Education. Pediatr. Cardiol. 2021, 42, 723–726. [Google Scholar] [CrossRef]

- Choi, S.; Nah, S.; Cho, Y.S.; Moon, I.; Lee, J.W.; Lee, C.A.; Moon, J.E.; Han, S. Accuracy of Visual Estimation of Ejection Fraction in Patients with Heart Failure Using Augmented Reality Glasses. Heart 2023, heartjnl-2023-323067. [Google Scholar] [CrossRef]

- Gladding, P.A.; Loader, S.; Smith, K.; Zarate, E.; Green, S.; Villas-Boas, S.; Shepherd, P.; Kakadiya, P.; Hewitt, W.; Thorstensen, E.; et al. Multiomics, Virtual Reality and Artificial Intelligence in Heart Failure. Future Cardiol 2021, 17, 1335–1347. [Google Scholar] [CrossRef]

- Boonstra, M.J.; Oostendorp, T.F.; Roudijk, R.W.; Kloosterman, M.; Asselbergs, F.W.; Loh, P.; Van Dam, P.M. Incorporating Structural Abnormalities in Equivalent Dipole Layer Based ECG Simulations. Front. Physiol. 2022, 13, 2690. [Google Scholar] [CrossRef] [PubMed]

- He, B.; Hu, W.; Zhang, K.; Yuan, S.; Han, X.; Su, C.; Zhao, J.; Wang, G.; Wang, G.; Zhang, L. Image Segmentation Algorithm of Lung Cancer Based on Neural Network Model. Expert Syst. 2021, 39, e12822. [Google Scholar] [CrossRef]

- Evans, L.M.; Sözümert, E.; Keenan, B.E.; Wood, C.E.; du Plessis, A. A Review of Image-Based Simulation Applications in High-Value Manufacturing. Arch. Comput. Methods Eng. 2023, 30, 1495–1552. [Google Scholar] [CrossRef]

- Arafin, P.; Billah, A.M.; Issa, A. Deep Learning-Based Concrete Defects Classification and Detection Using Semantic Segmentation. Struct. Health Monit. 2024, 23, 383–409. [Google Scholar] [CrossRef]

- Ye-Bin, M.; Choi, D.; Kwon, Y.; Kim, J.; Oh, T.H. ENInst: Enhancing Weakly-Supervised Low-Shot Instance Segmentation. Pattern. Recognit. 2024, 145, 109888. [Google Scholar] [CrossRef]

- Hong, F.; Kong, L.; Zhou, H.; Zhu, X.; Li, H.; Liu, Z. Unified 3D and 4D Panoptic Segmentation via Dynamic Shifting Networks. IEEE Trans. Pattern Anal. Mach. Intell. 2024, 1–16. [Google Scholar] [CrossRef] [PubMed]

- Rudnicka, Z.; Szczepanski, J.; Pregowska, A. Artificial Intelligence-Based Algorithms in Medical Image Scan Seg-Mentation and Intelligent Visual-Content Generation-a Concise over-View. Electronics 2024, 13, 746. [Google Scholar] [CrossRef]

- Sammani, A.; Bagheri, A.; Van Der Heijden, P.G.M.; Te Riele, A.S.J.M.; Baas, A.F.; Oosters, C.A.J.; Oberski, D.; Asselbergs, F.W. Automatic Multilabel Detection of ICD10 Codes in Dutch Cardiology Discharge Letters Using Neural Networks. NPJ Digit. Med. 2021, 4, 37. [Google Scholar] [CrossRef]

- Muscogiuri, G.; Van Assen, M.; Tesche, C.; De Cecco, C.N.; Chiesa, M.; Scafuri, S.; Guglielmo, M.; Baggiano, A.; Fusini, L.; Guaricci, A.I.; et al. Review Article Artificial Intelligence in Coronary Computed Tomography Angiography: From Anatomy to Prognosis. BioMed Res. Int. 2020, 2020, 6649410. [Google Scholar] [CrossRef]

- Yasmin, F.; Shah, S.M.I.; Naeem, A.; Shujauddin, S.M.; Jabeen, A.; Kazmi, S.; Siddiqui, S.A.; Kumar, P.; Salman, S.; Hassan, S.A.; et al. Artificial Intelligence in the Diagnosis and Detection of Heart Failure: The Past, Present, and Future. Rev. Cardiovasc. Med. 2021, 22, 1095–1113. [Google Scholar] [CrossRef]

- Samieiyeganeh, M.E.; Rahmat, R.W.; Khalid, F.B.; Kasmiran, K.B. An overview of deep learning techniques in echocardiography image segmentation. J. Theor. Appl. Inf. Technol. 2020, 98, 3561–3572. [Google Scholar]

- Wahlang, I.; Kumar Maji, A.; Saha, G.; Chakrabarti, P.; Jasinski, M.; Leonowicz, Z.; Jasinska, E.; Dimauro, G.; Bevilacqua, V.; Pecchia, L. Electronics Article. Electronics 2021, 10, 495. [Google Scholar] [CrossRef]

- Muraki, R.; Teramoto Id, A.; Sugimoto, K.; Sugimoto, K.; Yamada, A.; Watanabe, E. Automated Detection Scheme for Acute Myocardial Infarction Using Convolutional Neural Network and Long Short-Term Memory. PLoS ONE 2022, 17, e0264002. [Google Scholar] [CrossRef] [PubMed]

- Roy, S.S.; Hsu, C.H.; Samaran, A.; Goyal, R.; Pande, A.; Balas, V.E. Vessels Segmentation in Angiograms Using Convolutional Neural Network: A Deep Learning Based Approach. CMES Comput. Model. Eng. Sci. 2023, 136, 241–255. [Google Scholar] [CrossRef]

- Liu, J.; Yuan, G.; Yang, C.; Song, H.; Luo, L. An Interpretable CNN for the Segmentation of the Left Ventricle in Cardiac MRI by Real-Time Visualization. CMES Comput. Model. Eng. Sci. 2023, 135, 1571–1587. [Google Scholar] [CrossRef]

- Tandon, A. Artificial Intelligence in Pediatric and Congenital Cardiac Magnetic Resonance Imaging. In Intelligence-Based Cardiology and Cardiac Surgery: Artificial Intelligence and Human Cognition in Cardiovascular Medicine; Academic Press: London, UK, 2024; pp. 201–209. [Google Scholar] [CrossRef]

- Candemir, S.; White, R.D.; Demirer, M.; Gupta, V.; Bigelow, M.T.; Prevedello, L.M.; Erdal, B.S. Automated Coronary Artery Atherosclerosis Detection and Weakly Supervised Localization on Coronary CT Angiography with a Deep 3-Dimensional Convolutional Neural Network. Comput. Med. Imaging Graph. 2020, 83, 101721. [Google Scholar] [CrossRef]

- Singh, N.; Gunjan, V.K.; Shaik, F.; Roy, S. Detection of Cardio Vascular Abnormalities Using Gradient Descent Optimization and CNN. Health Technol. 2024, 14, 155–168. [Google Scholar] [CrossRef]

- Banerjee, D.; Dey, S.; Pal, A. An SNN Based ECG Classifier for Wearable Edge Devices. In Proceedings of the NeurIPS 2022 Workshop on Learning from Time Series for Health, New Orleans, LA, USA, 2 December 2022. [Google Scholar]

- Ullah, U.; García, A.; Jurado, O.; Diez Gonzalez, I.; Garcia-Zapirain, B. A Fully Connected Quantum Convolutional Neural Network for Classifying Ischemic Cardiopathy. IEEE Access 2022, 10, 134592–134605. [Google Scholar] [CrossRef]

- Çınar, A.; Tuncer, S.A. Classification of Normal Sinus Rhythm, Abnormal Arrhythmia and Congestive Heart Failure ECG Signals Using LSTM and Hybrid CNN-SVM Deep Neural Networks. Comput. Methods Biomech. Biomed. Engin. 2021, 24, 203–214. [Google Scholar] [CrossRef]

- Fradi, M.; Khriji, L.; Machhout, M. Real-Time Arrhythmia Heart Disease Detection System Using CNN Architecture Based Various Optimizers-Networks. Multimed. Tools Appl. 2022, 81, 41711–41732. [Google Scholar] [CrossRef]

- Rahul, J.; Sharma, L.D. Automatic Cardiac Arrhythmia Classification Based on Hybrid 1-D CNN and Bi-LSTM Model. Biocybern. Biomed. Eng. 2022, 42, 312–324. [Google Scholar] [CrossRef]

- Ahmed, A.A.; Ali, W.; Abdullah, T.A.A.; Malebary, S.J.; Yao, Y.; Huang, X.; Ahmed, A.A.; Ali, W.; Abdullah, T.A.A.; Malebary, S.J. Citation: Classifying Cardiac Arrhythmia from ECG Signal Using 1D CNN Deep Learning Model. Mathematics 2023, 11, 562. [Google Scholar] [CrossRef]

- Eltrass, A.S.; Tayel, M.B.; Ammar, A.I. A New Automated CNN Deep Learning Approach for Identification of ECG Congestive Heart Failure and Arrhythmia Using Constant-Q Non-Stationary Gabor Transform. Biomed. Signal Process. Control 2021, 65, 102326. [Google Scholar] [CrossRef]

- Zheng, Z.; Chen, Z.; Hu, F.; Zhu, J.; Tang, Q.; Liang, Y. Electronics Article. Electronics 2020, 9, 121. [Google Scholar] [CrossRef]

- Cheng, J.; Zou, Q.; Zhao, Y. ECG Signal Classification Based on Deep CNN and BiLSTM. BMC Med. Inf. Decis. Mak. 2021, 21, 365. [Google Scholar] [CrossRef] [PubMed]

- Rawal, V.; Prajapati, P.; Darji, A. Hardware Implementation of 1D-CNN Architecture for ECG Arrhythmia Classification. Biomed. Signal Process. Control 2023, 85, 104865. [Google Scholar] [CrossRef]

- Zhang, Y.; Liu, S.; He, Z.; Zhang, Y.; Wang, C. A CNN Model for Cardiac Arrhythmias Classification Based on Individual ECG Signals. Cardiovasc. Eng. Technol. 2022, 13, 548–557. [Google Scholar] [CrossRef]

- Khozeimeh, F.; Sharifrazi, D.; Izadi, N.H.; Hassannataj Joloudari, J.; Shoeibi, A.; Alizadehsani, R.; Tartibi, M.; Hussain, S.; Sani, Z.A.; Khodatars, M.; et al. RF-CNN-F: Random Forest with Convolutional Neural Network Features for Coronary Artery Disease Diagnosis Based on Cardiac Magnetic Resonance. Sci. Rep. 2022, 12, 17. [Google Scholar] [CrossRef]

- Aslan, M.F.; Sabanci, K.; Durdu, A. A CNN-Based Novel Solution for Determining the Survival Status of Heart Failure Patients with Clinical Record Data: Numeric to Image. Biomed. Signal Process. Control 2021, 68, 102716. [Google Scholar] [CrossRef]

- Yoon, T.; Kang, D. Bimodal CNN for Cardiovascular Disease Classification by Co-Training ECG Grayscale Images and Scalograms. Sci. Rep. 2023, 13, 2937. [Google Scholar] [CrossRef]

- Sun, L.; Shang, D.; Wang, Z.; Jiang, J.; Tian, F.; Liang, J.; Shen, Z.; Liu, Y.; Zheng, J.; Wu, H.; et al. MSPAN: A Memristive Spike-Based Computing Engine With Adaptive Neuron for Edge Arrhythmia Detection. Front. Neurosci. 2021, 15, 761127. [Google Scholar] [CrossRef]

- Wang, J.; Zang, J.; Yao, S.; Zhang, Z.; Xue, C. Multiclassification for Heart Sound Signals under Multiple Networks and Multi-View Feature. Measurement 2024, 225, 114022. [Google Scholar] [CrossRef]

- Wang, L.-H.; Ding, L.-J.; Xie, C.-X.; Jiang, S.-Y.; Kuo, I.-C.; Wang, X.-K.; Gao, J.; Huang, P.-C.; Patricia, A.; Abu, A.R. Automated Classification Model With OTSU and CNN Method for Premature Ventricular Contraction Detection. IEEE Access 2021, 9, 156581–156591. [Google Scholar] [CrossRef]

- Jungiewicz, M.; Jastrzębski, P.; Wawryka, P.; Przystalski, K.; Sabatowski, K.; Bartuś, S. Vision Transformer in Stenosis Detection of Coronary Arteries. Expert Syst. Appl. 2023, 228, 120234. [Google Scholar] [CrossRef]

- Zhang, Y.; Luo, G.; Wang, W.; Cao, S.; Dong, S.; Yu, D.; Wang, X.; Wang, K. TTN: Topological Transformer Network for Automated Coronary Artery Branch Labeling in Cardiac CT Angiography. IEEE J. Transl. Eng. Health Med. 2023, 12, 129–139. [Google Scholar] [CrossRef] [PubMed]

- Rao, S.; Li, Y.; Ramakrishnan, R.; Hassaine, A.; Canoy, D.; Cleland, J.; Lukasiewicz, T.; Salimi-Khorshidi, G.; Rahimi, K.; Shishir Rao, O. An Explainable Transformer-Based Deep Learning Model for the Prediction of Incident Heart Failure. IEEE J. Biomed. Health Inf. 2022, 26, 3362–3372. [Google Scholar] [CrossRef] [PubMed]

- Wang, Y.; Zhang, W. A Dense RNN for Sequential Four-Chamber View Left Ventricle Wall Segmentation and Cardiac State Estimation. Front. Bioeng. Biotechnol. 2021, 9, 696227. [Google Scholar] [CrossRef]

- Ding, C.; Wang, S.; Jin, X.; Wang, Z.; Wang, J. A Novel Transformer-Based ECG Dimensionality Reduction Stacked Auto-Encoders for Arrhythmia Beat Detection. Med. Phys. 2023, 50, 5897–5912. [Google Scholar] [CrossRef]

- Ding, Y.; Xie, W.; Wong, K.K.L.; Liao, Z. DE-MRI Myocardial Fibrosis Segmentation and Classification Model Based on Multi-Scale Self-Supervision and Transformer. Comput. Methods Programs Biomed. 2022, 226, 107049. [Google Scholar] [CrossRef]

- Hu, R.; Chen, J.; Zhou, L. A Transformer-Based Deep Neural Network for Arrhythmia Detection Using Continuous ECG Signals. Comput. Biol. Med. 2022, 144, 105325. [Google Scholar] [CrossRef]

- Gaudilliere, P.L.; Sigurthorsdottir, H.; Aguet, C.; Van Zaen, J.; Lemay, M.; Delgado-Gonzalo, R. Generative Pre-Trained Transformer for Cardiac Abnormality Detection. Available online: https://physionet.org/content/mitdb/1.0.0/ (accessed on 20 February 2024).

- Lecesne, E.; Simon, A.; Garreau, M.; Barone-Rochette, G.; Fouard, C. Segmentation of Cardiac Infarction in Delayed-Enhancement MRI Using Probability Map and Transformers-Based Neural Networks. Comput. Methods Programs Biomed. 2023, 242, 107841. [Google Scholar] [CrossRef]

- Ahmadi, N.; Tsang, M.Y.; Gu, A.N.; Tsang, T.S.M.; Abolmaesumi, P. Transformer-Based Spatio-Temporal Analysis for Classification of Aortic Stenosis Severity from Echocardiography Cine Series. IEEE Trans. Med. Imaging 2024, 43, 366–376. [Google Scholar] [CrossRef] [PubMed]

- Han, T.; Ai, D.; Li, X.; Fan, J.; Song, H.; Wang, Y.; Yang, J. Coronary Artery Stenosis Detection via Proposal-Shifted Spatial-Temporal Transformer in X-Ray Angiography. Comput. Biol. Med. 2023, 153, 106546. [Google Scholar] [CrossRef] [PubMed]

- Ning, Y.; Zhang, S.; Xi, X.; Guo, J.; Liu, P.; Zhang, C. CAC-EMVT: Efficient Coronary Artery Calcium Segmentation with Multi-Scale Vision Transformers. In Proceedings of the 2021 IEEE International Conference on Bioinformatics and Biomedicine (BIBM), Houston, TX, USA, 9–12 December 2021; pp. 1462–1467. [Google Scholar] [CrossRef]

- Deng, K.; Meng, Y.; Gao, D.; Bridge, J.; Shen, Y.; Lip, G.; Zhao, Y.; Zheng, Y. TransBridge: A Lightweight Transformer for Left Ventricle Segmentation in Echocardiography. In Simplifying Medical Ultrasound; Noble, J.A., Aylward, S., Grimwood, A., Min, Z., Lee, S.-L., Hu, Y., Eds.; Springer International Publishing: Cham, Switzerland, 2021; pp. 63–72. [Google Scholar]

- Alkhodari, M.; Kamarul Azman, S.; Hadjileontiadis, L.J.; Khandoker, A.H. Ensemble Transformer-Based Neural Networks Detect Heart Murmur in Phonocardiogram Recordings. In Proceedings of the 2022 Computing in Cardiology (CinC), Tampere, Finland, 4–7 September 2022. [Google Scholar]

- Meng, L.; Tan, W.; Ma, J.; Wang, R.; Yin, X.; Zhang, Y. Enhancing Dynamic ECG Heartbeat Classification with Lightweight Transformer Model. Artif. Intell. Med. 2022, 124, 102236. [Google Scholar] [CrossRef]

- Chu, M.; Wu, P.; Li, G.; Yang, W.; Gutiérrez-Chico, J.L.; Tu, S. Advances in Diagnosis, Therapy, and Prognosis of Coronary Artery Disease Powered by Deep Learning Algorithms. JACC Asia 2023, 3, 1–14. [Google Scholar] [CrossRef]

- Feng, Y.; Geng, S.; Chu, J.; Fu, Z.; Hong, S. Building and Training a Deep Spiking Neural Network for ECG Classification. Biomed. Signal Process. Control 2022, 77, 103749. [Google Scholar] [CrossRef]

- Yan, Z.; Zhou, J.; Wong, W.F. Energy Efficient ECG Classification with Spiking Neural Network. Biomed. Signal Process. Control 2021, 63, 102170. [Google Scholar] [CrossRef]

- Kovács, P.; Samiee, K. Arrhythmia Detection Using Spiking Variable Projection Neural Networks. In Proceedings of the 2022 Computing in Cardiology (CinC), Tampere, Finland, 4–7 September 2022; Volume 49. [Google Scholar] [CrossRef]

- Singhal, S.; Kumar, M. GSMD-SRST: Group Sparse Mode Decomposition and Superlet Transform Based Technique for Multi-Level Classification of Cardiac Arrhythmia. IEEE Sens. J. 2024. [Google Scholar] [CrossRef]

- Kiladze, M.R.; Lyakhova, U.A.; Lyakhov, P.A.; Nagornov, N.N.; Vahabi, M. Multimodal Neural Network for Recognition of Cardiac Arrhythmias Based on 12-Load Electrocardiogram Signals. IEEE Access 2023, 11, 133744–133754. [Google Scholar] [CrossRef]

- Li, Z.; Calvet, L.E. Extraction of ECG Features with Spiking Neurons for Decreased Power Consumption in Embedded Devices Extraction of ECG Features with Spiking Neurons for Decreased Power Consumption in Embedded Devices Extraction of ECG Features with Spiking Neurons for Decreased Power Consumption in Embedded Devices. In Proceedings of the 2023 19th International Conference on Synthesis, Modeling, Analysis and Simulation Methods and Applications to Circuit Design (SMACD), Funchal, Portugal, 3–5 July 2023. [Google Scholar] [CrossRef]

- Revathi, T.K.; Sathiyabhama, B.; Sankar, S. Diagnosing Cardio Vascular Disease (CVD) Using Generative Adversarial Network (GAN) in Retinal Fundus Images. Ann. Rom. Soc. Cell Biol. 2021, 25, 2563–2572. Available online: http://annalsofrscb.ro (accessed on 20 February 2024).

- Chen, J.; Yang, G.; Khan, H.R.; Zhang, H.; Zhang, Y.; Zhao, S.; Mohiaddin, R.H.; Wong, T.; Firmin, D.N.; Keegan, J. JAS-GAN: Generative Adversarial Network Based Joint Atrium and Scar Segmentations on Unbalanced Atrial Targets. IEEE J. Biomed. Health Inf. 2021, 26, 103–114. [Google Scholar] [CrossRef]

- Zhang, Y.; Feng, J.; Guo, X.; Ren, Y. Comparative Analysis of U-Net and TLMDB GAN for the Cardiovascular Segmentation of the Ventricles in the Heart. Comput. Methods Programs Biomed. 2022, 215, 106614. [Google Scholar] [CrossRef] [PubMed]

- Decourt, C.; Duong, L. Semi-Supervised Generative Adversarial Networks for the Segmentation of the Left Ventricle in Pediatric MRI. Comput. Biol. Med. 2020, 123, 103884. [Google Scholar] [CrossRef] [PubMed]

- Diller, G.P.; Vahle, J.; Radke, R.; Vidal, M.L.B.; Fischer, A.J.; Bauer, U.M.M.; Sarikouch, S.; Berger, F.; Beerbaum, P.; Baumgartner, H.; et al. Utility of Deep Learning Networks for the Generation of Artificial Cardiac Magnetic Resonance Images in Congenital Heart Disease. BMC Med. Imaging 2020, 20, 113. [Google Scholar] [CrossRef] [PubMed]

- Rizwan, I.; Haque, I.; Neubert, J. Deep Learning Approaches to Biomedical Image Segmentation. Inf. Med. Unlocked 2020, 18, 100297. [Google Scholar] [CrossRef]

- Van Lieshout, F.E.; Klein, R.C.; Kolk, M.Z.; Van Geijtenbeek, K.; Vos, R.; Ruiperez-Campillo, S.; Feng, R.; Deb, B.; Ganesan, P.; Knops, R.; et al. Deep Learning for Ventricular Arrhythmia Prediction Using Fibrosis Segmentations on Cardiac MRI Data. In Proceedings of the 2022 Computing in Cardiology (CinC), Tampere, Finland, 4–7 September 2022. [Google Scholar] [CrossRef]

- Liu, X.; He, L.; Yan, J.; Huang, Y.; Wang, Y.; Lin, C.; Huang, Y.; Liu, X. A Neural Network for High-Precise and Well-Interpretable Electrocardiogram Classification. bioRxiv 2023. [Google Scholar] [CrossRef]

- Lu, P.; Bai, W.; Rueckert, D.; Noble, J.A. Modelling Cardiac Motion via Spatio-Temporal Graph Convolutional Networks to Boost the Diagnosis of Heart Conditions. In Statistical Atlases and Computational Models of the Heart; Springer: Berlin/Heidelberg, Germany, 2020. [Google Scholar]

- Yang, H.; Zhen, X.; Chi, Y.; Zhang, L.; Hua, X.-S. CPR-GCN: Conditional Partial-Residual Graph Convolutional Network in Automated Anatomical Labeling of Coronary Arteries. In Proceedings of the 2020 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Seattle, WA, USA, 13–19 June 2020. [Google Scholar]

- Huang, F.; Lian, J.; Ng, K.-S.; Shih, K.; Vardhanabhuti, V.; Huang, F.; Lian, J.; Ng, K.-S.; Shih, K.; Vardhanabhuti, V. Citation: Predicting CT-Based Coronary Artery Disease Using Vascular Biomarkers Derived from Fundus Photographs with a Graph Convolutional Neural Network. Diagnostics 2022, 12, 1390. [Google Scholar] [CrossRef]

- Gao, R.; Hou, Z.; Li, J.; Han, H.; Lu, B.; Zhou, S.K. Joint Coronary Centerline Extraction And Lumen Segmentation From Ccta Using Cnntracker And Vascular Graph Convolutional Network. In Proceedings of the 2021 IEEE 18th International Symposium on Biomedical Imaging (ISBI), Nice, France, 13–16 April 2021; pp. 1897–1901. [Google Scholar]

- Chakravarty, A.; Sarkar, T.; Ghosh, N.; Sethuraman, R.; Sheet, D. Learning Decision Ensemble Using a Graph Neural Network for Comorbidity Aware Chest Radiograph Screening. In Proceedings of the 2020 42nd Annual International Conference of the IEEE Engineering in Medicine & Biology Society (EMBC), Montreal, QC, Canada, 20–24 July 2020; pp. 1234–1237. [Google Scholar] [CrossRef]

- Reddy, G.T.; Praveen, M.; Reddy, K.; Lakshmanna, K.; Rajput, D.S.; Kaluri, R.; Gautam, S. Hybrid Genetic Algorithm and a Fuzzy Logic Classifier for Heart Disease Diagnosis. Evol. Intell. 2020, 13, 185–196. [Google Scholar] [CrossRef]

- Priyanka; Baranwal, N.; Singh, K.N.; Singh, A.K. YOLO-Based ROI Selection for Joint Encryption and Compression of Medical Images with Reconstruction through Super-Resolution Network. Future Gener. Comput. Syst. 2024, 150, 1–9. [Google Scholar] [CrossRef]

- Wang, C.-Y.; Bochkovskiy, A.; Liao, H.-Y.M. YOLOv7: Trainable Bag-of-Freebies Sets New State-of-the-Art for Real-Time Object Detectors. arXiv 2022, arXiv:2207.02696. [Google Scholar]

- Zhuang, Z.; Jin, P.; Joseph Raj, A.N.; Yuan, Y.; Zhuang, S. Automatic Segmentation of Left Ventricle in Echocardiography Based on YOLOv3 Model to Achieve Constraint and Positioning. Comput. Math. Methods Med. 2021, 2021, 3772129. [Google Scholar] [CrossRef] [PubMed]

- Alamelu, V.; Thilagamani, S. Lion Based Butterfly Optimization with Improved YOLO-v4 for Heart Disease Prediction Using IoMT. Inf. Technol. Control 2022, 51, 692–703. [Google Scholar] [CrossRef]

- Lee, S.; Xibin, J.; Lee, A.; Gil, H.W.; Kim, S.; Hong, M. Cardiac Detection Using YOLO-v5 with Data Preprocessing. In Proceedings of the 2022 International Conference on Computational Science and Computational Intelligence (CSCI 2022), Las Vegas, NV, USA, 14–16 December 2022. [Google Scholar] [CrossRef]

- Mortada, M.J.; Tomassini, S.; Anbar, H.; Morettini, M.; Burattini, L.; Sbrollini, A. Segmentation of Anatomical Structures of the Left Heart from Echocardiographic Images Using Deep Learning. Diagnostics 2023, 13, 1683. [Google Scholar] [CrossRef]

- Smirnov, D.; Pikunov, A.; Syunyaev, R.; Deviatiiarov, R.; Gusev, O.; Aras, K.; Gams, A.; Koppel, A.; Efimov, I.R. Correction: Genetic Algorithm-Based Personalized Models of Human Cardiac Action Potential. PLoS ONE 2020, 15, e0231695. [Google Scholar] [CrossRef]

- Kanwal, S.; Rashid, J.; Nisar, M.W.; Kim, J.; Hussain, A. An Effective Classification Algorithm for Heart Disease Prediction with Genetic Algorithm for Feature Selection. In Proceedings of the 2021 Mohammad Ali Jinnah University International Conference on Computing (MAJICC), Karachi, Pakistan, 15–17 July 2021. [Google Scholar] [CrossRef]

- Alizadehsani, R.; Roshanzamir, M.; Abdar, M.; Beykikhoshk, A.; Khosravi, A.; Nahavandi, S.; Plawiak, P.; Tan, R.S.; Acharya, U.R. Hybrid Genetic-Discretized Algorithm to Handle Data Uncertainty in Diagnosing Stenosis of Coronary Arteries. Expert Syst. 2020, 39, e12573. [Google Scholar] [CrossRef]

- Badano, L.P.; Keller, D.M.; Muraru, D.; Torlasco, C.; Parati, G. Artificial Intelligence and Cardiovascular Imaging: A Win-Win Combination. Anatol. J. Cardiol. 2020, 24, 214–223. [Google Scholar] [CrossRef] [PubMed]

- Souza Filho, E.M.; Fernandes, F.A.; Pereira, N.C.A.; Mesquita, C.T.; Gismondi, R.A. Ethics, Artificial Intelligence and Cardiology. Arq. Bras. De Cardiol. 2020, 115, 579–583. [Google Scholar] [CrossRef]

- Mathur, P.; Srivastava, S.; Xu, X.; Mehta, J.L. Artificial Intelligence, Machine Learning, and Cardiovascular Disease. Clin. Med. Insights Cardiol. 2020, 14, 1179546820927404. [Google Scholar] [CrossRef]

- Miller, D.D. Machine Intelligence in Cardiovascular Medicine. Cardiol. Rev. 2020, 28, 53–64. [Google Scholar] [CrossRef]

- Swathy, M.; Saruladha, K. A Comparative Study of Classification and Prediction of Cardio-Vascular Diseases (CVD) Using Machine Learning and Deep Learning Techniques. ICT Express 2021, 8, 109–116. [Google Scholar] [CrossRef]

- Salte, I.M.; Østvik, A.; Smistad, E.; Melichova, D.; Nguyen, T.M.; Karlsen, S.; Brunvand, H.; Haugaa, K.H.; Edvardsen, T.; Lovstakken, L.; et al. Artificial Intelligence for Automatic Measurement of Left Ventricular Strain in Echocardiography. JACC Cardiovasc. Imaging 2021, 14, 1918–1928. [Google Scholar] [CrossRef]

- Nithyakalyani, K.; Ramkumar, S.; Rajalakshmi, S.; Saravanan, K.A. Diagnosis of Cardiovascular Disorder by CT Images Using Machine Learning Technique. In Proceedings of the 2022 International Conference on Communication, Computing and Internet of Things (IC3IoT), Chennai, India, 10–11 March 2022; pp. 1–4. [Google Scholar] [CrossRef]

- Gao, Z.; Wang, L.; Soroushmehr, R.; Wood, A.; Gryak, J.; Nallamothu, B.; Najarian, K. Vessel Segmentation for X-Ray Coronary Angiography Using Ensemble Methods with Deep Learning and Filter-Based Features. BMC Med. Imaging 2022, 22, 10. [Google Scholar] [CrossRef] [PubMed]

- Galea, R.R.; Diosan, L.; Andreica, A.; Popa, L.; Manole, S.; Bálint, Z. Region-of-Interest-Based Cardiac Image Segmentation with Deep Learning. Appl. Sci. 2021, 11, 1965. [Google Scholar] [CrossRef]

- Tandon, A.; Mohan, N.; Jensen, C.; Burkhardt, B.E.U.; Gooty, V.; Castellanos, D.A.; McKenzie, P.L.; Zahr, R.A.; Bhattaru, A.; Abdulkarim, M.; et al. Retraining Convolutional Neural Networks for Specialized Cardiovascular Imaging Tasks: Lessons from Tetralogy of Fallot. Pediatr. Cardiol. 2021, 42, 578–589. [Google Scholar] [CrossRef] [PubMed]

- Stough, J.V.; Raghunath, S.; Zhang, X.; Pfeifer, J.M.; Fornwalt, B.K.; Haggerty, C.M. Left Ventricular and Atrial Segmentation of 2D Echocardiography with Convolutional Neural Networks. In Proceedings of the Medical Imaging 2020: Image Processing, Houston, TX, USA, 10 March 2020. [Google Scholar] [CrossRef]

- Sander, J.; de Vos, B.D.; Išgum, I. Automatic Segmentation with Detection of Local Segmentation Failures in Cardiac MRI. Sci. Rep. 2020, 10, 21769. [Google Scholar] [CrossRef] [PubMed]

- Masutani, E.M.; Bahrami, N.; Hsiao, A. Deep Learning Single-Frame and Multiframe Super-Resolution for Cardiac MRI. Radiology 2020, 295, 552–561. [Google Scholar] [CrossRef] [PubMed]

- O’Brien, H.; Whitaker, J.; Singh Sidhu, B.; Gould, J.; Kurzendorfer, T.; O’Neill, M.D.; Rajani, R.; Grigoryan, K.; Rinaldi, C.A.; Taylor, J.; et al. Automated Left Ventricle Ischemic Scar Detection in CT Using Deep Neural Networks. Front. Cardiovasc. Med. 2021, 8, 655252. [Google Scholar] [CrossRef]

- Lin, A.; Chen, B.; Xu, J.; Zhang, Z.; Lu, G. DS-TransUNet: Dual Swin Transformer U-Net for Medical Image Segmentation. IEEE Trans. Instrum. Meas. 2022, 71, 4005615. [Google Scholar] [CrossRef]

- Koresh, H.J.D.; Chacko, S.; Periyanayagi, M. A Modified Capsule Network Algorithm for Oct Corneal Image Segmentation. Pattern Recognit. Lett. 2021, 143, 104–112. [Google Scholar] [CrossRef]

- Khan, M.Z.; Gajendran, M.K.; Lee, Y.; Khan, M.A. Deep Neural Architectures for Medical Image Semantic Segmentation: Review. IEEE Access 2021, 9, 83002–83024. [Google Scholar] [CrossRef]

- Fischer, A.M.; Eid, M.; De Cecco, C.N.; Gulsun, M.A.; Van Assen, M.; Nance, J.W.; Sahbaee, P.; De Santis, D.; Bauer, M.J.; Jacobs, B.E.; et al. Accuracy of an Artificial Intelligence Deep Learning Algorithm Implementing a Recurrent Neural Network with Long Short-Term Memory for the Automated Detection of Calcified Plaques from Coronary Computed Tomography Angiography. J. Thorac. Imaging 2020, 35, S49–S57. [Google Scholar] [CrossRef]

- Lyu, Q.; Shan, H.; Xie, Y.; Kwan, A.C.; Otaki, Y.; Kuronuma, K.; Li, D.; Wang, G. Cine Cardiac MRI Motion Artifact Reduction Using a Recurrent Neural Network. IEEE Trans. Med. Imaging 2021, 40, 2170–2181. [Google Scholar] [CrossRef]

- Ammar, A.; Bouattane, O.; Youssfi, M. Automatic Spatio-Temporal Deep Learning-Based Approach for Cardiac Cine MRI Segmentation. In Networking, Intelligent Systems and Security; Mohamed, B.A., Teodorescu, H.-N.L., Mazri, T., Subashini, P., Boudhir, A.A., Eds.; Springer: Singapore, 2022; pp. 59–73. [Google Scholar]

- Lu, X.H.; Liu, A.; Fuh, S.C.; Lian, Y.; Guo, L.; Yang, Y.; Marelli, A.; Li, Y. Recurrent Disease Progression Networks for Modelling Risk Trajectory of Heart Failure. PLoS ONE 2021, 16, e0245177. [Google Scholar] [CrossRef] [PubMed]

- Fu, Q.; Dong, H. An Ensemble Unsupervised Spiking Neural Network for Objective Recognition. Neurocomputing 2021, 419, 47–58. [Google Scholar] [CrossRef]

- Rana, A.; Kim, K.K. A Novel Spiking Neural Network for ECG Signal Classification. J. Sens. Sci. Technol. 2021, 30, 20–24. [Google Scholar] [CrossRef]

- Shekhawat, D.; Chaudhary, D.; Kumar, A.; Kalwar, A.; Mishra, N.; Sharma, D. Binarized Spiking Neural Network Optimized with Momentum Search Algorithm for Fetal Arrhythmia Detection and Classification from ECG Signals. Biomed. Signal Process. Control 2024, 89, 105713. [Google Scholar] [CrossRef]

- Jiang, P.; Ergu, D.; Liu, F.; Cai, Y.; Ma, B. A Review of Yolo Algorithm Developments. Procedia Comput. Sci. 2022, 199, 1066–1073. [Google Scholar] [CrossRef]

- Yan, X.; Yan, Y.; Cao, M.; Xie, W.; O’Connor, S.; Lee, J.J.; Ho, M.H. Effectiveness of Virtual Reality Distraction Interventions to Reduce Dental Anxiety in Paediatric Patients: A Systematic Review and Meta-Analysis. J. Dent. 2023, 132, 104455. [Google Scholar] [CrossRef]

- Kim, Y.; Panda, P. Visual Explanations from Spiking Neural Networks Using Inter-Spike Intervals. Sci. Rep. 2021, 11, 19037. [Google Scholar] [CrossRef] [PubMed]

- Kazeminia, S.; Baur, C.; Kuijper, A.; van Ginneken, B.; Navab, N.; Albarqouni, S.; Mukhopadhyay, A. GANs for Medical Image Analysis. Artif. Intell. Med. 2020, 109, 101938. [Google Scholar] [CrossRef]

- Wu, C.; Zhang, H.; Chen, J.; Gao, Z.; Zhang, P.; Muhammad, K.; Del Ser, J. Vessel-GAN: Angiographic Reconstructions from Myocardial CT Perfusion with Explainable Generative Adversarial Networks. Future Gener. Comput. Syst. 2022, 130, 128–139. [Google Scholar] [CrossRef]

- Laidi, A.; Ammar, M.; El Habib Daho, M.; Mahmoudi, S. GAN Data Augmentation for Improved Automated Atherosclerosis Screening from Coronary CT Angiography. EAI Endorsed. Trans. Scalable. Inf. Syst. 2023, 10, e4. [Google Scholar] [CrossRef]

- Olender, M.L.; Nezami, F.R.; Athanasiou, L.S.; De La Torre Hernández, J.M.; Edelman, E.R. Translational Challenges for Synthetic Imaging in Cardiology. Eur. Heart J. Digit. Health 2021, 2, 559–560. [Google Scholar] [CrossRef]

- Wieneke, H.; Voigt, I. Principles of Artificial Intelligence and Its Application in Cardiovascular Medicine. Clin. Cardiol. 2023, 47, e24148. [Google Scholar] [CrossRef]

- Hasan Rafi, T.; Shubair, R.M.; Farhan, F.; Hoque, Z.; Mohd Quayyum, F. Recent Advances in Computer-Aided Medical Diagnosis Using Machine Learning Algorithms with Optimization Techniques. IEEE Access 2021, 9, 137847–137868. [Google Scholar] [CrossRef]

- Chiu, J.-C.; Sarkar, E.; Liu, Y.-M.; Dong, X.; Lei, Y.; Wang, T.; Liu, L.; Wolterink, J.M.; Brune, C.; J Veldhuis, R.N. Physics in Medicine & Biology Anatomy-Aided Deep Learning for Medical Image Segmentation: A Review Deep Learning-Based Attenuation Correction in the Absence of Structural Information for Whole-Body Positron Emission Tomography Imaging Anatomy-Aided Deep Learning for Medical Image Segmentation: A Review. Phys. Med. Biol. 2021, 66, 11–12. [Google Scholar] [CrossRef]

- Banta, A.; Cosentino, R.; John, M.M.; Post, A.; Buchan, S.; Razavi, M.; Aazhang, B. Nonlinear Regression with a Convolutional Encoder-Decoder for Remote Monitoring of Surface Electrocardiograms. arXiv 2020, arXiv:2012.06003. [Google Scholar]

- Carion, N.; Massa, F.; Synnaeve, G.; Usunier, N.; Kirillov, A.; Zagoruyko, S. End-to-End Object Detection with Transformers. Available online: https://github.com/facebookresearch/detr (accessed on 20 February 2024).

- Jannatul Ferdous, G.; Akhter Sathi, K.; Hossain, A.; Moshiul Hoque, M.; Member, S.; Ali Akber Dewan, M. LCDEiT: A Linear Complexity Data-Efficient Image Transformer for MRI Brain Tumor Classification. IEEE Access 2017, 11, 20337–20350. [Google Scholar] [CrossRef]

- Henein, M.; Liao, M.; Lian, Y.; Yao, Y.; Chen, L.; Gao, F.; Xu, L.; Huang, X.; Feng, X.; Guo, S. Left Ventricle Segmentation in Echocardiography with Transformer. Diagnostics 2023, 13, 2365. [Google Scholar] [CrossRef]

- Ahn, S.S.; Ta, K.; Thorn, S.L.; Onofrey, J.A.; Melvinsdottir, I.H.; Lee, S.; Langdon, J.; Sinusas, A.J.; Duncan, J.S. Co-Attention Spatial Transformer Network for Unsupervised Motion Tracking and Cardiac Strain Analysis in 3D Echocardiography. Med. Image Anal. 2023, 84, 102711. [Google Scholar] [CrossRef]

- Fazry, L.; Haryono, A.; Nissa, N.K.; Sunarno; Hirzi, N.M.; Rachmadi, M.F.; Jatmiko, W. Hierarchical Vision Transformers for Cardiac Ejection Fraction Estimation. In Proceedings of the 2022 7th International Workshop on Big Data and Information Security (IWBIS), Depok, Indonesia, 1–3 October 2022; pp. 39–44. [Google Scholar] [CrossRef]

- Upendra, R.R.; Simon, R.; Shontz, S.M.; Linte, C.A. Deformable Image Registration Using Vision Transformers for Cardiac Motion Estimation from Cine Cardiac MRI Images. In Functional Imaging and Modeling of the Heart; Olivier, B., Clarysse, P., Duchateau, N., Ohayon, J., Viallon, M., Eds.; Springer Nature: Cham, Switzerland, 2023; pp. 375–383. [Google Scholar]

- Beer, K.; Bondarenko, D.; Farrelly, T.; Osborne, T.J.; Salzmann, R.; Scheiermann, D.; Wolf, R. Training Deep Quantum Neural Networks. Nat. Commun. 2020, 11, 808. [Google Scholar] [CrossRef] [PubMed]

- Landman, J.; Mathur, N.; Li, Y.Y.; Strahm, M.; Kazdaghli, S.; Prakash, A.; Kerenidis, I. Quantum Methods for Neural Networks and Application to Medical Image Classification. Quantum 2022, 6, 881. [Google Scholar] [CrossRef]

- Shahwar, T.; Zafar, J.; Almogren, A.; Zafar, H.; Rehman, A.U.; Shafiq, M.; Hamam, H. Automated Detection of Alzheimer’s via Hybrid Classical Quantum Neural Networks. Electronics 2022, 11, 721. [Google Scholar] [CrossRef]

- Ovalle-Magallanes, E.; Avina-Cervantes, J.G.; Cruz-Aceves, I.; Ruiz-Pinales, J. Hybrid Classical–Quantum Convolutional Neural Network for Stenosis Detection in X-Ray Coronary Angiography. Expert Syst. Appl. 2022, 189, 116112. [Google Scholar] [CrossRef]

- Kumar, A.; Choudhary, A.; Tiwari, A.; James, C.; Kumar, H.; Kumar Arora, P.; Akhtar Khan, S. An Investigation on Wear Characteristics of Additive Manufacturing Materials. Mater. Today Proc. 2021, 47, 3654–3660. [Google Scholar] [CrossRef]

- Belli, C.; Sagingalieva, A.; Kordzanganeh, M.; Kenbayev, N.; Kosichkina, D.; Tomashuk, T.; Melnikov, A. Hybrid Quantum Neural Network For Drug Response Prediction. Cancers 2023, 15, 2705. [Google Scholar] [CrossRef]

- Pregowska, A.; Perkins, M. Artificial Intelligence in Medical Education: Technology and Ethical Risk. Available online: https://ssrn.com/abstract=4643763 (accessed on 20 February 2024).

- Rastogi, D.; Johri, P.; Tiwari, V.; Elngar, A.A. Multi-Class Classification of Brain Tumour Magnetic Resonance Images Using Multi-Branch Network with Inception Block and Five-Fold Cross Validation Deep Learning Framework. Biomed. Signal Process. Control 2024, 88, 105602. [Google Scholar] [CrossRef]

- Fotiadou, E.; van Sloun, R.J.; van Laar, J.O.; Vullings, R. Physiological Measurement A Dilated Inception CNN-LSTM Network for Fetal Heart Rate Estimation. Physiol. Meas. 2021, 42, 045007. [Google Scholar] [CrossRef]

- Tariq, Z.; Shah, S.K.; Lee, Y.; Shah, Z.; Lee, S.K.; Tariq, Y. Feature-Based Fusion Using CNN for Lung and Heart Sound Classification. Feature-Based Fusion Using CNN for Lung and Heart Sound Classification. Sensors 2022, 22, 1521. [Google Scholar] [CrossRef] [PubMed]

- Sudarsanan, S.; Aravinth, J. Classification of Heart Murmur Using CNN. In Proceedings of the 2020 5th International Conference on Communication and Electronics Systems (ICCES), Coimbatore, India, 10–12 June 2020; pp. 818–822. [Google Scholar] [CrossRef]

- Fakhry, M.; Gallardo-Antolín, A. Elastic Net Regularization and Gabor Dictionary for Classification of Heart Sound Signals Using Deep Learning. Eng. Appl. Artif. Intell. 2024, 127, 107406. [Google Scholar] [CrossRef]

- Ainiwaer, A.; Hou, W.Q.; Qi, Q.; Kadier, K.; Qin, L.; Rehemuding, R.; Mei, M.; Wang, D.; Ma, X.; Dai, J.G.; et al. Deep Learning of Heart-Sound Signals for Efficient Prediction of Obstructive Coronary Artery Disease. Heliyon 2024, 10, e23354. [Google Scholar] [CrossRef] [PubMed]

- Chunduru, A.; Kishore, A.R.; Sasapu, B.K.; Seepana, K. Multi Chronic Disease Prediction System Using CNN and Random Forest. SN Comput. Sci. 2024, 5, 157. [Google Scholar] [CrossRef]

- Anggraeni, W.; Kusuma, M.F.; Riksakomara, E.; Wibowo, R.P.; Sumpeno, S. Combination of BERT and Hybrid CNN-LSTM Models for Indonesia Dengue Tweets Classification. Int. J. Intell. Eng. Syst. 2024, 17, 813–826. [Google Scholar] [CrossRef]

- Kusuma, S.; Jothi, K.R. ECG Signals-Based Automated Diagnosis of Congestive Heart Failure Using Deep CNN and LSTM Architecture. Biocybern. Biomed. Eng. 2022, 42, 247–257. [Google Scholar] [CrossRef]

- Shrivastava, P.K.; Sharma, M.; Sharma, P.; Kumar, A. HCBiLSTM: A Hybrid Model for Predicting Heart Disease Using CNN and BiLSTM Algorithms. Meas. Sens. 2023, 25, 100657. [Google Scholar] [CrossRef]

- Shaker, A.M.; Tantawi, M.; Shedeed, H.A.; Tolba, M.F. Generalization of Convolutional Neural Networks for ECG Classification Using Generative Adversarial Networks. IEEE Access 2020, 8, 35592–35605. [Google Scholar] [CrossRef]

- Wang, Z.; Stavrakis, S.; Yao, B. Hierarchical Deep Learning with Generative Adversarial Network for Automatic Cardiac Diagnosis from ECG Signals. Comput. Biol. Med. 2023, 155, 106641. [Google Scholar] [CrossRef]

- Puspitasari, R.D.I.; Ma’sum, M.A.; Alhamidi, M.R.; Kurnianingsih; Jatmiko, W. Generative Adversarial Networks for Unbalanced Fetal Heart Rate Signal Classification. ICT Express 2022, 8, 239–243. [Google Scholar] [CrossRef]

- Rahman, A.U.; Alsenani, Y.; Zafar, A.; Ullah, K.; Rabie, K.; Shongwe, T. Enhancing Heart Disease Prediction Using a Self-Attention-Based Transformer Model. Sci. Rep. 2024, 14, 514. [Google Scholar] [CrossRef]

- Wang, Q.; Zhao, C.; Sun, Y.; Xu, R.; Li, C.; Wang, C.; Liu, W.; Gu, J.; Shi, Y.; Yang, L.; et al. Synaptic Transistor with Multiple Biological Functions Based on Metal-Organic Frameworks Combined with the LIF Model of a Spiking Neural Network to Recognize Temporal Information. Microsyst. Nanoeng. 2023, 9, 96. [Google Scholar] [CrossRef]

- Ji, W.; Li, J.; Bi, Q.; Liu, T.; Li, W.; Cheng, L. Segment Anything Is Not Always Perfect: An Investigation of SAM on Different Real-World Applications. arXiv 2023, arXiv:2304.05750. Available online: https://segment-anything.com (accessed on 20 February 2024).

- El-Ghaish, H.; Eldele, E. ECGTransForm: Empowering Adaptive ECG Arrhythmia Classification Framework with Bidirectional Transformer. Biomed. Signal Process. Control 2024, 89, 105714. [Google Scholar] [CrossRef]

- Akan, T.; Alp, S.; Alfrad, M.; Bhuiyan, N. ECGformer: Leveraging Transformer for ECG Heartbeat Arrhythmia Classification. arXiv 2024, arXiv:2401.05434. [Google Scholar]

- Pawłowska, A.; Karwat, P.; Żołek, N. Letter to the Editor. Re: “[Dataset of Breast Ultrasound Images by W. Al-Dhabyani, M.; Gomaa, H. Khaled & A. Fahmy, Data in Brief, 2020, 28, 104863]”. Data Brief 2023, 48, 109247. [Google Scholar] [CrossRef] [PubMed]

- Pawłowska, A.; Ćwierz-Pieńkowska, A.; Domalik, A.; Jaguś, D.; Kasprzak, P.; Matkowski, R.; Fura, Ł.; Nowicki, A.; Żołek, N. Curated Benchmark Dataset for Ultrasound Based Breast Lesion Analysis. Sci. Data 2024, 11, 148. [Google Scholar] [CrossRef] [PubMed]

- Johnson, J.M.; Khoshgoftaar, T.M. Survey on Deep Learning with Class Imbalance. J. Big Data. 2019, 6, 27. [Google Scholar] [CrossRef]

- Fernando, K.R.M.; Tsokos, C.P. Dynamically Weighted Balanced Loss: Class Imbalanced Learning and Confidence Calibration of Deep Neural Networks. IEEE Trans. Neural Netw. Learn. Syst. 2022, 33, 2940–2951. [Google Scholar] [CrossRef] [PubMed]

- Habbal, A.; Ali, M.K.; Abuzaraida, M.A. Artificial Intelligence Trust, Risk and Security Management (AI TRiSM): Frameworks, Applications, Challenges and Future Research Directions. Expert Syst. Appl. 2024, 240, 122442. [Google Scholar] [CrossRef]

- Ghubaish, A.; Salman, T.; Zolanvari, M.; Unal, D.; Al-Ali, A.; Jain, R. Recent Advances in the Internet-of-Medical-Things (IoMT) Systems Security. IEEE Internet Things J. 2021, 8, 8707–8718. [Google Scholar] [CrossRef]

- Avinashiappan, A.; Mayilsamy, B. Internet of Medical Things: Security Threats, Security Challenges, and Potential Solutions. In Internet of Medical Things: Remote Healthcare Systems and Applications; Hemanth, D.J., Anitha, J., Tsihrintzis, G.A., Eds.; Springer International Publishing: Cham, Switzerland, 2021; pp. 1–16. [Google Scholar] [CrossRef]

- Al-Hawawreh, M.; Hossain, M.S. A Privacy-Aware Framework for Detecting Cyber Attacks on Internet of Medical Things Systems Using Data Fusion and Quantum Deep Learning. Inf. Fusion 2023, 99, 101889. [Google Scholar] [CrossRef]

- Bradford, L.; Aboy, M.; Liddell, K. International Transfers of Health Data between the EU and USA: A Sector-Speciic Approach for the USA to Ensure an “adequate” Level of Protection. J. Law Biosci. 2020, 7, 1–33. [Google Scholar] [CrossRef]

- Scheibner, J.; Raisaro, J.L.; Troncoso-Pastoriza, J.R.; Ienca, M.; Fellay, J.; Vayena, E.; Hubaux, J.-P. Revolutionizing Medical Data Sharing Using Advanced Privacy-Enhancing Technologies: Technical, Legal, and Ethical Synthesis. J. Med. Internet Res. 2021, 23, e25120. [Google Scholar] [CrossRef]

- Proniewska, K.; Dołęga-Dołęgowski, D.; Pręgowska, A.; Walecki, P.; Dudek, D. Holography as a Progressive Revolution in Medicine. In Simulations in Medicine: Computer-Aided Diagnostics and Therapy; De Gruyter: Berlin, Germany; Boston, MA, USA, 2020. [Google Scholar] [CrossRef]

- Bui, T.X. Proceedings of the 56th Hawaii International Conference on System Sciences (HICCS), Hyatt Regency Maui, 3–6 January 2023; University of Hawaii at Manoa: Honolulu, HI, USA, 2023. [Google Scholar]

- Grinbaum, A.; Adomaitis, L. Moral Equivalence in the Metaverse. Nanoethics 2022, 16, 257–270. [Google Scholar] [CrossRef]

- Todorović, D.; Matić, Z.; Blagojević, M. Religion in Late Modern Society; A Thematic Collection of Papers of International Significance; Yugoslav Society for the Scientific Study of Religion (YSSSR): Niš, Serbia; Committee of Education and Culture of the Diocese of Požarevac and Braničevo: Požarevac, Serbia, 2022.

- Available online: https://www.scu.edu/ethics/metaverse/#:~:text=Do%20no%20Harm%E2%80%94Take%20no,and%20concern%20for%20other%20people (accessed on 20 February 2024).

- Viola, F.; Del Corso, G.; De Paulis, R.; Verzicco, R. GPU Accelerated Digital Twins of the Human Heart Open New Routes for Cardiovascular Research. Sci. Rep. 2023, 13, 8230. [Google Scholar] [CrossRef] [PubMed]

- Anshari, M.; Syafrudin, M.; Fitriyani, N.L.; Razzaq, A. Ethical Responsibility and Sustainability (ERS) Development in a Metaverse Business Model. Sustainability 2022, 14, 15805. [Google Scholar] [CrossRef]

- Chen, M. The Philosophy of the Metaverse. Ethics Inf. Technol. 2023, 25, 41. [Google Scholar] [CrossRef]

- Armeni, P.; Polat, I.; De Rossi, L.M.; Diaferia, L.; Meregalli, S.; Gatti, A. Digital Twins in Healthcare: Is It the Beginning of a New Era of Evidence-Based Medicine? A Critical Review. J. Pers. Med. 2022, 12, 1255. [Google Scholar] [CrossRef]

- Braun, M.; Krutzinna, J. Digital Twins and the Ethics of Health Decision-Making Concerning Children. Patterns 2022, 3, 100469. [Google Scholar] [CrossRef]

- Leonelli, S.; Tempini, N. Data Journeys in the Sciences; Springer: Berlin/Heidelberg, Germany, 2020. [Google Scholar]

- Coorey, G.; Figtree, G.A.; Fletcher, D.F.; Snelson, V.J.; Vernon, S.T.; Winlaw, D.; Grieve, S.M.; Mcewan, A.; Yee, J.; Yang, H.; et al. The Health Digital Twin to Tackle Cardiovascular Disease-a Review of an Emerging Interdisciplinary Field. Npj Digit. Med. 2022, 5, 126. [Google Scholar] [CrossRef]

- Albahri, A.S.; Duhaim, A.M.; Fadhel, M.A.; Alnoor, A.; Baqer, N.S.; Alzubaidi, L.; Albahri, O.S.; Alamoodi, A.H.; Bai, J.; Salhi, A.; et al. A Systematic Review of Trustworthy and Explainable Artificial Intelligence in Healthcare: Assessment of Quality, Bias Risk, and Data Fusion. Inf. Fusion 2023, 96, 156–191. [Google Scholar] [CrossRef]

- Eprs. BRIEFING EU Legislation in Progress. Available online: https://epthinktank.eu/eu-legislation-in-progress/ (accessed on 20 February 2024).

- Jalilvand, I.; Jang, J.; Gopaluni, B.; Milani, A.S. VR/MR Systems Integrated with Heat Transfer Simulation for Training of Thermoforming: A Multicriteria Decision-Making User Study. J. Manuf. Syst. 2024, 72, 338–359. [Google Scholar] [CrossRef]

- Jung, C.; Wolff, G.; Wernly, B.; Bruno, R.R.; Franz, M.; Schulze, P.C.; Silva, J.N.A.; Silva, J.R.; Bhatt, D.L.; Kelm, M. Virtual and Augmented Reality in Cardiovascular Care: State-of-the-Art and Future Perspectives. JACC Cardiovasc. Imaging 2022, 15, 519–532. [Google Scholar] [CrossRef] [PubMed]

- Arshad, I.; de Mello, P.; Ender, M.; McEwen, J.D.; Ferré, E.R. Reducing Cybersickness in 360-Degree Virtual Reality. Multisens. Res. 2021, 35, 203–219. [Google Scholar] [CrossRef] [PubMed]

- Daling, L.M.; Schlittmeier, S.J. Effects of Augmented Reality-, Virtual Reality-, and Mixed Reality–Based Training on Objective Performance Measures and Subjective Evaluations in Manual Assembly Tasks: A Scoping Review. Hum. Factors 2022, 66, 589–626. [Google Scholar] [CrossRef]

- Kimmatudawage, S.P.; Srivastava, R.; Kachroo, K.; Badhal, S.; Balivada, S. Toward Global Use of Rehabilitation Robots and Future Considerations. In Rehabilitation Robots for Neurorehabilitation in High-, Low-, and Middle-Income Countries: Current Practice, Barriers, and Future Directions; Academic Press: London, UK, 2024; pp. 499–516. [Google Scholar] [CrossRef]

- Salvador, M.; Regazzoni, F.; Dede’, L.; Quarteroni, A. Fast and Robust Parameter Estimation with Uncertainty Quantification for the Cardiac Function. Comput. Methods Programs Biomed. 2023, 231, 107402. [Google Scholar] [CrossRef]

| XR Technology Type | HDM Type | AI Support | Perception of Real Surrounding | Application Field | References |

|---|---|---|---|---|---|

| MR | HoloLens 2 | No | Yes | Visualization of ultrasound-guided femoral arterial cannulations | [47] |

| MR | HoloLens 2 | No | Yes | USG visualization | [48] |

| MR | HoloLens 2 | No | Yes | Visualization of heart structures | [49] |

| MR | HoloLens 2 | No | Yes | Operation planning | [50] |

| MR | HoloLens | No | Yes | Operation planning | [51] |

| MR | HoloLens 2 | No | Yes | Visualization of heart structures | [52] |

| MR | HoloLens 2 | No | Yes | Visualization of heart structures | [53] |

| AR | mobile phone | No | Yes | Diagnosis of the heart | [54] |

| AR | none | No | Yes | Virtual pathology stethoscope detection | [55] |

| AR | none | Yes | Yes | Eye-tracking system | [56] |

| AR | none | Yes | Yes | Detection of semi-opaque markers in fluoroscopy | [57] |

| VR | Simulator Stanford Virtual Heart | No | No | Visualization of heart structures | [58] |

| VR | Simulator Stanford Virtual Heart | No | No | Visualization of heart structures | [59] |

| VR | Meta-CathLab (concept) | No | No | Merging interventional cardiology with the Metaverse | [60] |

| VR | VR glasses | Yes | No | Sleep stage classification—concept | [61] |

| VR | Virtualcpr: mobile application | Yes | No | Training in cardiopulmonary resuscitation techniques | [62] |

| VR | none | Yes | No | Diagnostic of cardiovascular diseases—visualization | [63] |

| VR | none | No | No | Cardiovascular education | [61] |

| AI/ML Model | Application Fields (In General) | Application Fields (In Cardiology) | References |

|---|---|---|---|

| ANNs | classification, pattern recognition, image recognition, natural language processing (NLP), speech recognition, recommendation systems, prediction, cybersecurity, object manipulation, path planning, sensor fusion | prediction of atrial fibrillation, acute myocardial infarctions, and dilated cardiomyopathy detection of the structural abnormalities in heart tissues | [85] [86] |

| RNNs | ordinal or temporal problems (language translation, speech recognition, NLP image captioning), time series prediction, music generation, video analysis, patient monitoring, disease progression prediction | segmentation of the heart and subtle structural changes cardiac MRI segmentation | [87] [88] |

| LSTMs | ordinal or temporal problems (language translation, speech recognition, NLP, image captioning), time series prediction, music generation, video analysis, patient monitoring, disease progression prediction | segmentation and classification of 2D echo images segmentation and classification of 3D Doppler images segmentation and classification of video graphics images and detection of the AMI in echocardiography | [89] [90] |

| CNNs | pattern recognition, segmentation/classification, object detection, semantic segmentation, facial recognition, medical imaging, gesture recognition, video analysis | cardiac image segmentation to diagnose CAD cardiac image segmentation to diagnose Tetralogy of Fallot localization of the coronary artery atherosclerosis detection of cardiovascular abnormalities detection of arrhythmia detection of coronary artery disease prediction of the survival status of heart failure patients prediction of cardiovascular disease LV dysfunction screening prediction of premature ventricular contraction detection | [91,92] [93] [94] [95] [96,97,98,99,100,101,102,103,104,105,106,107] [108] [109] [110] [111,112] |

| Transformers | NLP, speech processing, computer vision, graph-based tasks, electronic health records, building conversational AI systems and chatbots | coronary artery labeling prediction of incident heart failure arrhythmia classification cardiac abnormality detection segmentation of MRI in case of cardiac infarction classification of aortic stenosis severity LV segmentation heart murmur detection myocardial fibrosis segmentation ECG classification | [113,114] [115] [116,117,118,119] [120] [121] [122,123] [118,124,125] [126] [118] [127] |

| SNNs | pattern recognition, cognitive robotics, SNN hardware, brain–machine interfaces, neuromorphic computing | ECG classification detection of arrhythmia extraction of ECG features | [128,129,130] [131,132,133] [134] |

| GANs | image-to-image translation, image synthesis and generation, data generation for training, data augmentation, creating realistic scenes | CVD diagnosis segmentation of the LA and atrial scars in LGE CMR images segmentation of ventricles based on MRI scans left ventricle segmentation in pediatric MRI scans generation of synthetic cardiac MRI images for congenital heart disease research | [135] [136] [137] [138] [139] |

| GNNs | graph/node classification, link prediction, graph generation, social/biological network analysis, fraud detection, recommendation systems | classification of polar maps in cardiac perfusion imaging analysis of CT/MRI scans prediction of ventricular arrhythmia segmentation of cardiac fibrosis diagnosis of cardiac condition: LV motion in cardiac MR cine images automated anatomical labeling of coronary arteries prediction of CAD automation of coronary artery analysis using CCTA screening of cardio, thoracic, and pulmonary conditions in chest radiograph | [140,141] [142] [141] [141] [143] [144] [145] [146] [147] |

| QNNs | optimization of hardware operations, user interfaces | classification of ischemic heart disease | [97] |

| GA | optimization techniques, risk prediction, gene therapies, medicine development | classification of heart disease | [148] |

| Network Type | Evaluation Metrics | Input | Output | Xr Connection | Dt Contention | Reference |

|---|---|---|---|---|---|---|

| ANN | accuracy 94.32% | ECG recordings | binary classification of normal and ventricular ectopic beats | No | No | [131] |

| ROI 89.00% | Echocardiography | Automatic measurement of left ventricular strain | No | No | [163] | |

| accuracy 91.00% | Electronic health records | classification and prediction of cardiovascular diseases | No | No | [162] | |

| RNN-LSTM | accuracy 80.00% F1 score 84.00% | 3D Doppler images | heart abnormalities classification | No | No | [89] |

| accuracy 97.00% F1 score 97.00% | 2D echo images | heart abnormalities classification | No | No | [89] | |

| (1) accuracy 85.10% (2) accuracy 83.20% | echocardiography images | automated classification of acute myocardial infarction: (1) classification of the left ventricular long-axis view; (2) classification of short-axis view (papillary muscle level) | No | No | [90] | |

| accuracy 93.10% | coronary computed tomography angiography | diagnostic of the coronary artery calcium | No | No | [175] | |

| accuracy 90.67% | ECG recordings | prediction of the arrhythmia | No | No | [98] | |

| RNN | IoU factor 92.13% | MRI cardiac images | estimation of the cardiac state: sequential four-chamber view left ventricle wall segmentation | No | No | [116] |

| CNN | accuracy 95.92% | ECG recordings | binary classification of normal and ventricular ectopic beats | No | No | [131] |

| IoU factor 61.75% | MRI cardiac images | estimation of the cardiac state: sequential four-chamber view left ventricle wall segmentation | No | No | [116] | |

| accuracy 94.00% F1 score 95.00% | 2D echo images | heart abnormalities classification | No | No | [89] | |

| accuracy 98.00% F1 score 98.00% | 3D Doppler images | heart abnormalities classification | No | No | [89] | |

| accuracy 92.00% | ECG recordings | ECG classification | No | No | [183] | |

| accuracy 88.00% | Electronic health records | heart disease prediction | No | No | [135] | |

| accuracy 98.82% | ECG recordings | prediction of heart failure and arrhythmia | No | No | [102] | |

| accuracy 95.13% | Electronic health records | prediction of the survival status of heart failure patients | No | No | [108] | |

| accuracy 99.60% | ECG recordings | estimation of the fetal heart rate | No | No | [207] | |

| accuracy 99.10% | heart audio recordings | heart disease classification | No | No | [208] | |

| accuracy 97.00% | heart sound signals | classification of heart murmur | No | No | [209] | |

| accuracy 98.95% | heart sound signals | classification of heart sound signals | No | No | [210] | |

| ROC curve 0.834 | heart sound signals | prediction of obstructive coronary artery disease | No | No | [211] | |

| accuracy 85.25% | MRI image scans | chronic disease prediction | No | No | [212] | |

| accuracy 99.10% | heart sound signals | diagnosis of cardiovascular disease | No | No | [213] | |

| CNN-LSTM | accuracy 99.52% Dice coef. 0.989 ROC curve 0.999 | ECG recordings | prediction of congestive heart failure | No | No | [214] |

| accuracy 96.66% | Heart disease Cleveland UCI dataset | prediction of the heart disease | No | No | [215] | |

| accuracy 99.00% | ECG recordings | prediction of the heart failure | No | No | [106] | |

| SNN | ROC curve 0.99 | ECG recordings | ECG classification | No | No | [181] |

| accuracy 97.16% | ECG recordings | binary classification of normal and ventricular ectopic beats | No | No | [131] | |

| accuracy 93.60% | ECG recordings | ECG classification | No | No | [182] | |

| accuracy 85.00% | ECG recordings | ECG classification | No | No | [180] | |

| accuracy 84.41% | ECG recordings | ECG classification | No | No | [129] | |

| accuracy 91.00% | ECG recordings | ECG classification | No | No | [183] | |

| GNN | Dice coef. 0.82 | cardiac MRI images | prediction diverticular arrhythmia | No | No | [141] |

| ROC curve 0.739 | CT image scan | prediction of coronary artery disease | No | No | [145] | |

| AUC 0.821 | chest radiographs | screening of cardio, thoracic, and pulmonary conditions | No | No | [147] | |

| ROC area 0.98 | 12-lead ECG record | remote monitoring of surface electrocardiograms | No | No | [192] | |

| GAN | accuracy 99.08% Dice coef. 0.987 | CT image scan | cardiac fat segmentation | No | No | [52] |

| accuracy 98.00% | ECG recordings | ECG classification | No | No | [216] | |

| accuracy 95.40% | ECG recordings | ECG classification | No | No | [217] | |

| accuracy 68.07% | CTG signal dataset | fetal heart rate signal classification | No | No | [218] | |

| Dice coef. 0.880 | MRI image scans | segmentation of the left ventricle | No | No | [138] | |

| Transformers | accuracy 96.51% | Cleveland dataset | prediction of cardiovascular diseases | No | No | [219] |

| accuracy 98.70% | heart sound signals—Mel-spectrogram, bispectral analysis, and Phonocardiogram | heart sound classification | No | No | [220] | |

| Dice coef. 0.861 | 12-lead ECG record | arrhythmia classification | No | No | [221] | |

| Dice coef. 0.0004 | ECG recordings | arrhythmia classification | No | No | [222] | |

| Dice coef. 0.980 | ECG recordings | arrhythmia classification | No | No | [223] | |

| Dice coef. 0.911 | ECG recordings | classification of ECG recordings | No | No | [134] | |

| GA | - | laboratory data, patient medical history, ECG, physical examinations, and echocardiogram (Z-Alizadeh Sani dataset) | determination of the parameters to prediction of the coronary artery disease (next SVM-based classifier was applied) | No | No | [157] |

| QNN | accuracy 84.60% | Electronic health records | classification of ischemic cardiopathy | No | No | [97] |

| accuracy 91.80% Dice coef. 0.918 | X-ray coronary angiography | stenosis detection | No | No | [202] |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content. |

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Rudnicka, Z.; Proniewska, K.; Perkins, M.; Pregowska, A. Cardiac Healthcare Digital Twins Supported by Artificial Intelligence-Based Algorithms and Extended Reality—A Systematic Review. Electronics 2024, 13, 866. https://doi.org/10.3390/electronics13050866

Rudnicka Z, Proniewska K, Perkins M, Pregowska A. Cardiac Healthcare Digital Twins Supported by Artificial Intelligence-Based Algorithms and Extended Reality—A Systematic Review. Electronics. 2024; 13(5):866. https://doi.org/10.3390/electronics13050866