Abstract

The landscape of neurorehabilitation is undergoing a profound transformation with the integration of artificial intelligence (AI)-driven robotics. This review addresses the pressing need for advances in pediatric neurorehabilitation and highlights the pivotal role of AI-driven robotics in closing existing gaps. By leveraging AI technologies, robotic systems can transcend the limitations of preprogrammed guidelines and adapt to individual patient needs, thereby fostering patient-centric care. This review explores recent strides in social and diagnostic robotics, physical therapy, assistive robotics, smart interfaces, and cognitive training within the context of pediatric neurorehabilitation. Furthermore, it examines the impact of emerging AI techniques, including artificial emotional intelligence, interactive reinforcement learning, and natural language processing, on enhancing cooperative neurorehabilitation outcomes. Importantly, the review stresses the need for responsible AI deployment and the significance of unbiased, explainable, and interpretable models in fostering adaptability and effectiveness in pediatric neurorehabilitation settings. In conclusion, this review provides a comprehensive overview of the evolving landscape of AI-driven robotics in pediatric neurorehabilitation and offers insights for clinicians, researchers, and policymakers.

1. Introduction

Neurorehabilitation is a multidimensional profession that addresses the physical, cognitive, psychological, and social aspects of human disabilities. It usually involves a multidisciplinary team providing personalized physical therapy, occupational therapy, nursing, neuropsychological support, nutrition guidance, speech–language therapy, and education [1]. Pediatric neurorehabilitation is a multifaceted trans-discipline that aims to support the needs arising from neural deficiencies and irregularities in the population under the age of 18. Pediatric neural deficiencies are commonly associated with neuromuscular diseases such as cerebral palsy (CP), spina bifida, and muscular dystrophy, as well as with autism spectrum disorder (ASD), brain tumors and strokes, autoimmune brain and neuromuscular diseases, traumatic brain injuries (TBIs), and spinal cord injuries [2].

Robotics is currently experiencing a Cambrian explosion [3], as robots have proven immensely useful both in and outside the medical field [4]. However, the introduction of robots into pediatric neurorehabilitation still faces numerous challenges [5]:

- The starting point for rehabilitative care covers an extremely wide spectrum of conditions. For example, following moderate-to-severe TBIs, the disability spectrum ranges from mild cognitive and physical impairments to deep coma.

- Although neurorehabilitation programs exist, there are currently no standard protocols, only general guidelines; protocols therefore vary across rehabilitation centers and across patients. Moreover, neurorehabilitation is often personalized to each patient’s injury and symptom profile.

- Aware of the limits of what they can offer, specialized neurorehabilitation centers define their admission criteria based on the likelihood of a successful outcome. Clinical stability has traditionally been a key requirement for initiating rehabilitation; however, emerging trends favor early intervention, so centers may no longer strictly require clinical stability for admission. Instead, they may focus on criteria such as the patient’s ability to participate actively in a daily rehabilitation program, potential for progress, support network (family and friends), and means to finance a prolonged stay at the center. Wide-ranging assessment and progress monitoring remain essential. However, because medical diagnostic tools (e.g., imaging) often cannot fully predict functional disruption or the rehabilitation outcome, assessments often involve comprehensive expert-led neuropsychological, pedagogical, and emotional testing.

- The efficiency of neurorehabilitation programs is hard to evaluate. There is consensus on the importance of early-onset rehabilitation, and a growing number of studies have begun to confirm the effectiveness of such programs. However, evidence-based studies remain difficult to conduct because of ethical concerns about the feasibility of randomized controlled trials in neurorehabilitation. Consequently, determining the most suitable long-term treatment for each patient remains a complex and intractable task [6].

- The disciplines comprising neurorehabilitation care generally require practitioners with well-developed emotional intelligence, who can provide optimal treatment together with empathy and psychological containment. One of the most important qualities of successful treatment is the clinician’s ability to harness the patient’s intrinsic motivation to change [7].

At first glance, these challenges may seem inapplicable to robotic systems, which are traditionally preprogrammed to follow specific guidelines under known operating conditions. However, advances at the intersection of robotics, artificial intelligence (AI), and control theory over the past two decades point to the yet-to-be-realized potential of integrating intelligent robots into pediatric neurorehabilitation. AI-powered robots, or intelligent robots, can enhance neurorehabilitation care and, importantly, decentralize and democratize quality care. Intelligent robots driven by machine learning (ML) offer many advantages, including the use of historical and real-time patient data to provide heuristic-driven, personalized, continuing cognitive care, social interaction, and powered assistance, as well as to suggest treatment strategies.

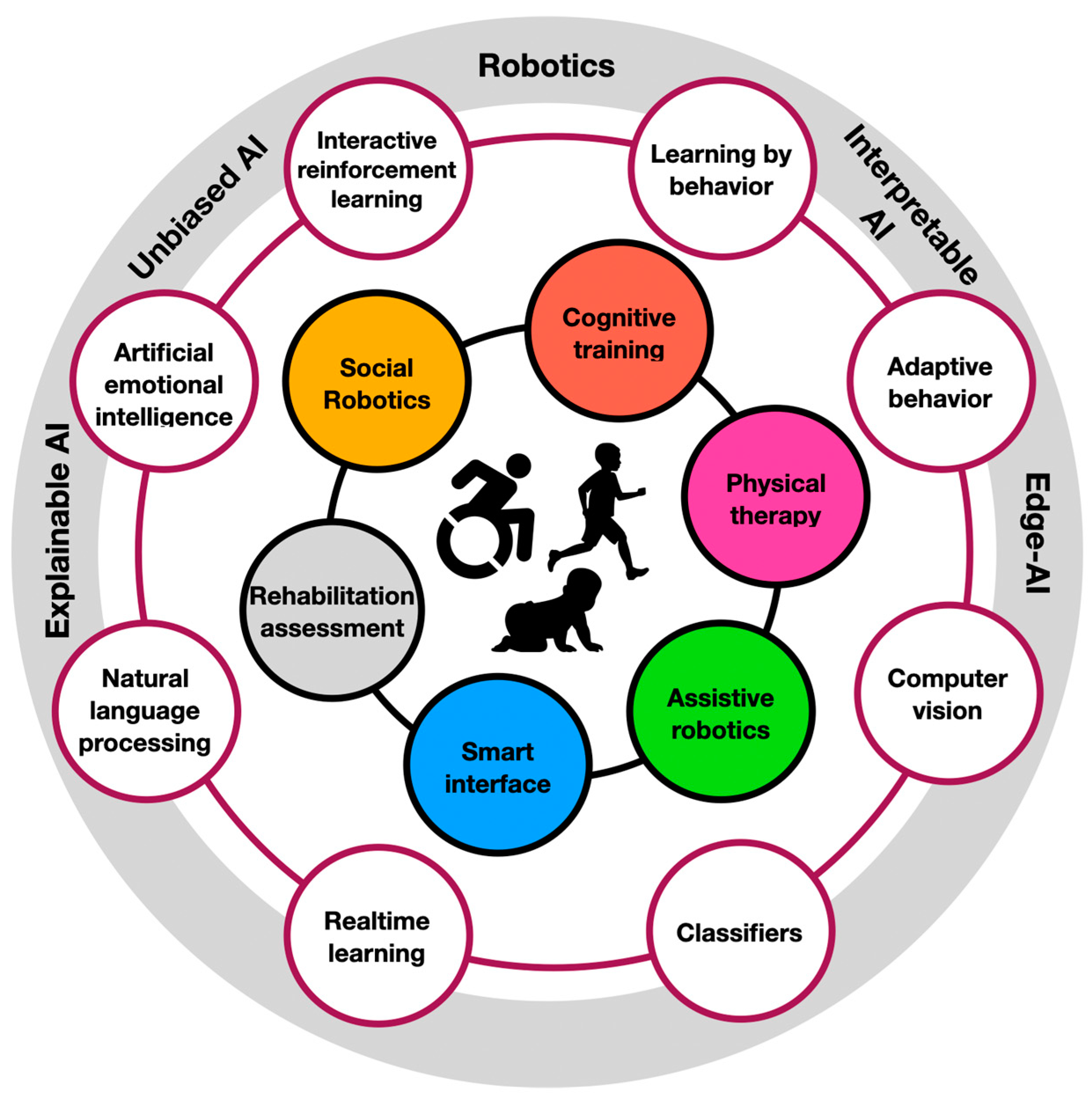

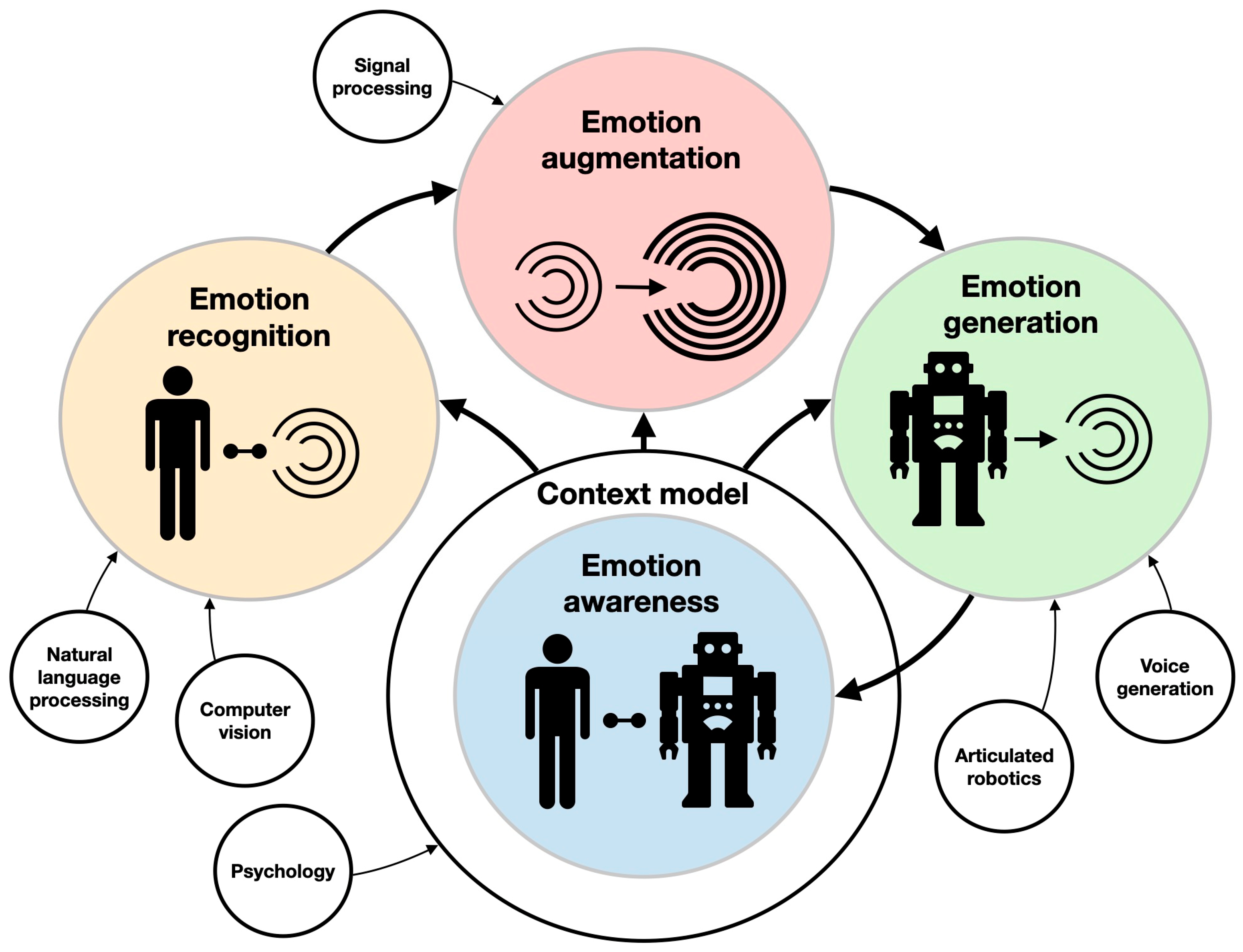

Numerous AI-powered rehabilitation technologies have been proposed in pediatric neurorehabilitation, including virtual reality and intelligent games [8,9]. AI has even been used to optimize the mechanical designs of neurorehabilitation robots [10]. In this review, we examine the state of the art in intelligent robotics by focusing on key aspects of personalized pediatric neurorehabilitation: (1) social interaction; (2) rehabilitation outcome prediction and condition assessment; (3) physical therapy; (4) assistive robotics; (5) smart interfaces; and (6) cognitive training. While most available reviews have tackled one or two of these frontiers, we aim to provide a bird’s-eye view of intelligent robotics in pediatric neurorehabilitation to enable an integrated grasp of the field’s potential. This review highlights the key developments in intelligent robotics that are crucial for pediatric neurorehabilitation. These include advances in artificial emotional intelligence (AEI), interactive reinforcement learning (IRL), probabilistic models, policy learning, natural language processing (NLP), facial expression analysis with computer vision, real-time learning for adaptive behavior, classifiers for the identification of intended behavior, and learning by demonstration (LbD). Other important developments in unbiased, explainable, and interpretable AI are also briefly discussed, since they are likely to contribute to the adaptability of AI-powered neurorehabilitation. Finally, because many neurorehabilitation robots operate in energy-constrained environments (e.g., translational robots), we also review edge AI, in which AI models are deployed on microprocessors. The AI-driven neurorehabilitation ecosystem is illustrated in Figure 1. After describing our methods, we concisely address the common algorithmic approaches underlying ML. We then discuss the role and potential impact of intelligent robotics in pediatric neurorehabilitation and describe how these approaches contribute to advances in intelligent robotics for neurorehabilitation. This is followed by projections and hopes for the future development of the field.

Figure 1.

The ecosystem of AI-driven robotic neurorehabilitation. At the innermost circle are the people facing neural deficiencies. Their immediate treatment areas (e.g., cognitive training and physical therapy) are described in their surrounding circles. Those are supported by AI-driven technologies (e.g., computer vision and adaptive behavior). At the outermost circle are the research areas in which those AI-driven technologies are commonly developed (e.g., robotics and edge AI).

2. Methods

The literature review was conducted in accordance with the Preferred Reporting Items for Systematic Reviews and Meta-Analyses (PRISMA) methodology, which is described at length in [11]. Eligible manuscripts were written in English, were published between 2016 and 2022 (older references were used only to highlight specific topics, such as deep learning and the importance of neurorehabilitation), and reported the use of (1) artificial emotional intelligence, (2) interactive reinforcement learning, (3) probabilistic models, (4) policy learning, (5) natural language processing, (6) facial expression analysis, (7) real-time learning for adaptive behavior, (8) classifiers, (9) learning by demonstration, (10) unbiased AI, (11) explainable AI, or (12) interpretable AI for (1) social interaction, (2) rehabilitation outcome prediction and condition assessment, (3) physical therapy, (4) assistive robotics, (5) smart interfaces, (6) cognitive training, or (7) identification of intended behavior. The search was restricted to the fields of engineering, computer science, and medicine. Eligible manuscripts were regular research papers or reviews published in academic journals or conference proceedings, with the full text available. The literature search was carried out in the Scopus, IEEE Xplore, Web of Science, Google Scholar, and PubMed databases. Search queries combined multiple keywords drawn from the topics listed above as eligibility criteria and were applied to the title, abstract, and keyword fields. All retrieved manuscripts were manually screened against the eligibility criteria.
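Read as a set of conjunctive filters, the eligibility criteria above can be expressed compactly. The following is a minimal sketch only: screening in this review was performed manually, and the `Record` structure, its field names, and the abbreviated keyword sets are hypothetical placeholders rather than the actual search queries. It encodes one reading of the topical criterion, namely that a record must mention at least one technique and at least one application.

```python
from dataclasses import dataclass, field

@dataclass
class Record:
    """Hypothetical bibliographic record; field names are illustrative."""
    title: str
    language: str
    year: int
    doc_type: str                 # "research" or "review"
    venue: str                    # "journal" or "conference"
    full_text: bool
    fields: set = field(default_factory=set)
    keywords: set = field(default_factory=set)

# Abbreviated placeholder keyword sets (not the review's real queries).
TECHNIQUES = {"reinforcement learning", "natural language processing", "explainable ai"}
APPLICATIONS = {"physical therapy", "assistive robotics", "cognitive training"}
FIELDS = {"engineering", "computer science", "medicine"}

def is_eligible(r: Record) -> bool:
    """Return True only if the record satisfies every eligibility criterion."""
    return (
        r.language == "English"
        and 2016 <= r.year <= 2022
        and r.doc_type in {"research", "review"}
        and r.venue in {"journal", "conference"}
        and r.full_text
        and bool(r.fields & FIELDS)
        # Topical criterion: at least one technique AND one application.
        and bool(r.keywords & TECHNIQUES)
        and bool(r.keywords & APPLICATIONS)
    )

records = [
    Record("A", "English", 2020, "research", "journal", True,
           {"engineering"}, {"reinforcement learning", "physical therapy"}),
    Record("B", "English", 2014, "review", "journal", True,
           {"medicine"}, {"explainable ai", "cognitive training"}),
]
screened = [r.title for r in records if is_eligible(r)]
print(screened)  # ['A']  (record B falls outside the 2016-2022 window)
```

Expressing each criterion as a separate boolean term keeps the screening auditable, since the term that excluded a record can be reported directly in a PRISMA flow diagram.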

3. Theoretical Background: AI Techniques in a Nutshell

ML-powered AI comprises a vast spectrum of approaches. Here, we briefly describe some of the most common methodologies associated with ML to give readers a better understanding of the following sections. This is by no means a comprehensive description of ML but rather a concise definition of the computational rationale behind different techniques underlying it, limited by the breadth of the discussion below.

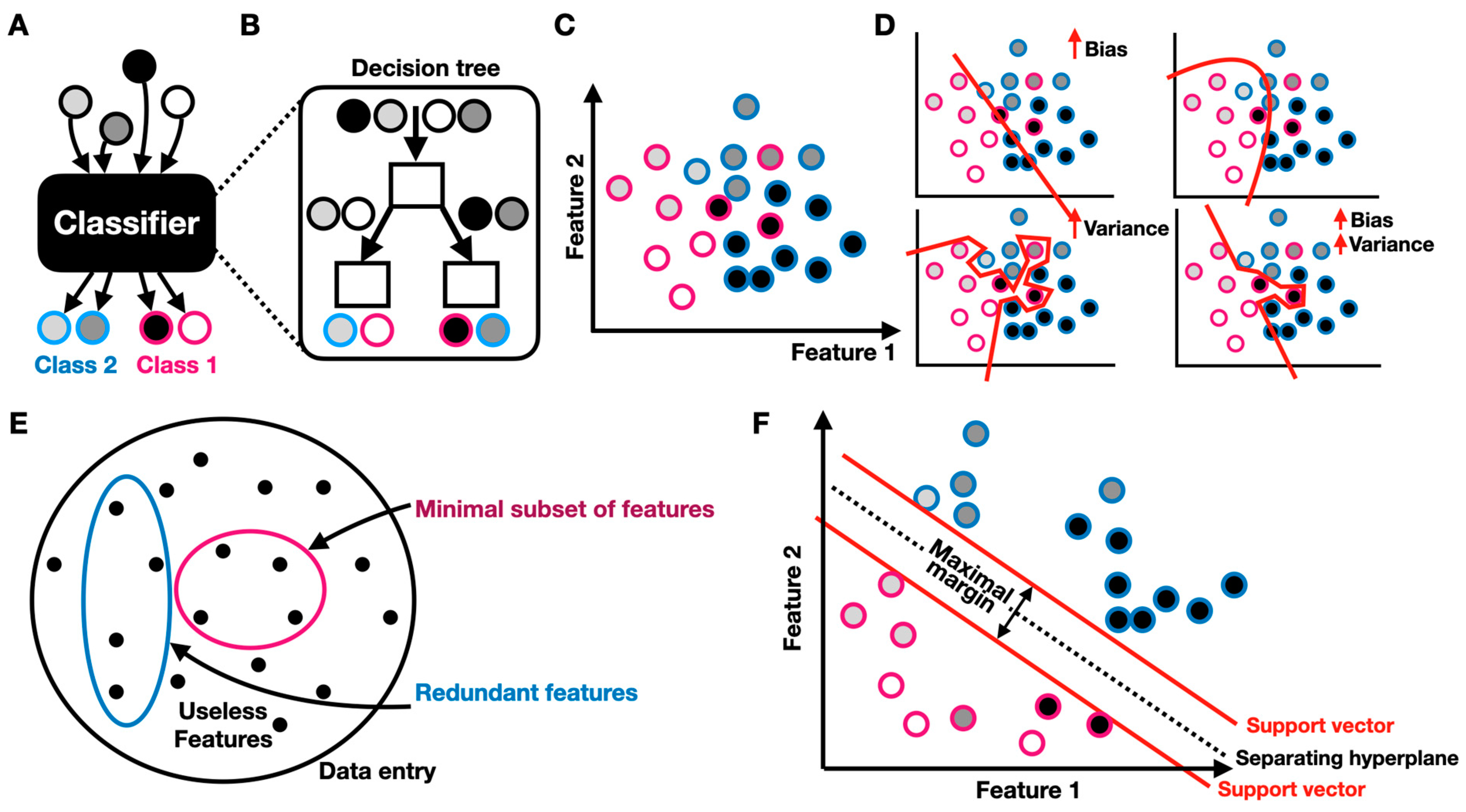

3.1. Classification and Feature Selection

Classification is one of the key objectives of AI. A classifier is a predictive model that assigns an item to one of several classes (Figure 2A). One popular representation of a classifier is the decision tree, a flow chart with a tree structure in which nodes represent decisions and edges represent their possible outcomes [12] (Figure 2B). Classification is made with respect to features, which represent important aspects of the classified data (Figure 2C). Compared with an ideal categorization, classifiers are prone to bias and/or variance (Figure 2D). When the model is ideal (truth), the error is low on both the training and test datasets. When the bias is high, the model is “underfitted”, i.e., it is not expressive enough to represent the required categorization. When the variance is high, the model is “overfitted”: it fits the training data with high accuracy but fails to do so on the test data. A model can also exhibit both high bias and high variance. Supervised learning (see next section) is therefore inherently limited by the availability, quality, and nature of the training data, as well as by the model’s expressivity, trainability, and generalizability. Feature selection is one of the prime challenges, especially when the examined dataset is vast and governed by numerous variables (Figure 2E). Features can be irrelevant or redundant (highly correlated with other features). It is therefore important to choose the right (ideally minimal) subset of features with which the data can be accurately classified. There are numerous manual and algorithmic approaches to feature selection. For example, a classifier was trained to identify children with autism using EEG and eye-tracking data [13]. To train the classifier, the researchers used the minimum redundancy maximum relevance (MRMR) feature selection algorithm.
MRMR identifies a minimal and optimal subset of features with which a predictive classification model can be designed: it selects features that are highly relevant to the target variable while remaining minimally redundant with one another [14]. In this case, the selected features included eye fixation time in areas of interest (e.g., mouth and body) and the relative power of the delta, theta, alpha, beta, and gamma bands over the chosen EEG electrodes. The support vector machine (SVM) is another central classification technique, used in numerous supervised AI-driven applications. To discriminate data that are not linearly separable in their original space, an SVM implicitly projects them (via a kernel function) into a higher-dimensional feature space, where the classes become linearly separable (hard margin) or nearly so, with a limited number of margin violations tolerated (soft margin) [15]. Given a set of labeled data points $\{(\mathbf{x}_i, y_i)\}_{i=1}^{N}$ with $y_i \in \{-1, +1\}$, the SVM seeks a hyperplane $\mathbf{w}^\top \mathbf{x} + b = 0$ that separates the points into their labeled categories such that the distance between the hyperplane and the nearest data point (the margin) is maximized. For example, SVMs are routinely used to classify features extracted from electromyography (EMG) recordings of various muscle groups to identify patients’ motion intentions, for use in assistive robotics [16,17].
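To make the MRMR-then-SVM pipeline concrete, the following is a minimal, standard-library-only sketch under simplifying assumptions. Relevance and redundancy are measured with absolute Pearson correlation, a common MRMR variant (the original MRMR formulation uses mutual information). The classifier is a linear soft-margin SVM without a kernel and without a bias term, trained with Pegasos-style stochastic subgradient descent. The toy data are synthetic and are not taken from [13].

```python
import random
from statistics import mean, pstdev

def pearson(a, b):
    """Pearson correlation coefficient; 0.0 if either input is constant."""
    sa, sb = pstdev(a), pstdev(b)
    if sa == 0 or sb == 0:
        return 0.0
    ma, mb = mean(a), mean(b)
    return mean((u - ma) * (v - mb) for u, v in zip(a, b)) / (sa * sb)

def mrmr(X, y, k):
    """Greedy MRMR: pick features maximizing relevance minus mean redundancy."""
    cols = list(zip(*X))                           # one tuple per feature
    relevance = [abs(pearson(c, y)) for c in cols]
    selected = [max(range(len(cols)), key=lambda j: relevance[j])]
    while len(selected) < k:
        remaining = [j for j in range(len(cols)) if j not in selected]
        def score(j):
            redundancy = mean(abs(pearson(cols[j], cols[s])) for s in selected)
            return relevance[j] - redundancy
        selected.append(max(remaining, key=score))
    return selected

def train_linear_svm(X, y, lam=0.01, epochs=100, seed=0):
    """Linear soft-margin SVM without a bias term, trained by Pegasos-style
    stochastic subgradient descent on the L2-regularized hinge loss."""
    rng = random.Random(seed)
    w = [0.0] * len(X[0])
    t = 0
    for _ in range(epochs):
        for i in rng.sample(range(len(X)), len(X)):   # shuffled pass
            t += 1
            eta = 1.0 / (lam * t)                       # decaying step size
            margin = y[i] * sum(wj * xj for wj, xj in zip(w, X[i]))
            w = [(1 - eta * lam) * wj for wj in w]      # regularization shrink
            if margin < 1:                              # hinge loss is active
                w = [wj + eta * y[i] * xj for wj, xj in zip(w, X[i])]
    return w

def predict(w, x):
    return 1 if sum(wj * xj for wj, xj in zip(w, x)) >= 0 else -1

# Synthetic toy data: f1 duplicates f0; f2 adds complementary information.
y = [1, 1, 1, 1, -1, -1, -1, -1]
f0 = [1.5, 0.5, 1.5, 0.5, -0.5, -1.5, -0.5, -1.5]
f1 = list(f0)
f2 = [2, 2, 0, 0, 0, 0, -2, -2]
X = [list(row) for row in zip(f0, f1, f2)]

selected = mrmr(X, y, k=2)
print(selected)  # [0, 2]: the redundant duplicate f1 is skipped
X_sel = [[row[j] for j in selected] for row in X]
w = train_linear_svm(X_sel, y)
acc = sum(predict(w, x) == t for x, t in zip(X_sel, y)) / len(y)
print(acc)  # 1.0
```

Although f1 is exactly as relevant as f0, MRMR skips it because it adds no new information. Omitting the bias term is a simplification that works here because the toy data are symmetric about the origin; practical SVM libraries include a bias and support nonlinear kernels.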

Figure 2.

Classification and feature selection: (A) classifier schematic; circles correspond to data entries and the border color indicates the class label; (B) decision tree schematic; (C) feature space; (D) schematic of bias and variance in underfitted (top left), truth (top right), and overfitted (bottom left) classifiers; (E) feature selection schematic; (F) SVM-based classification.

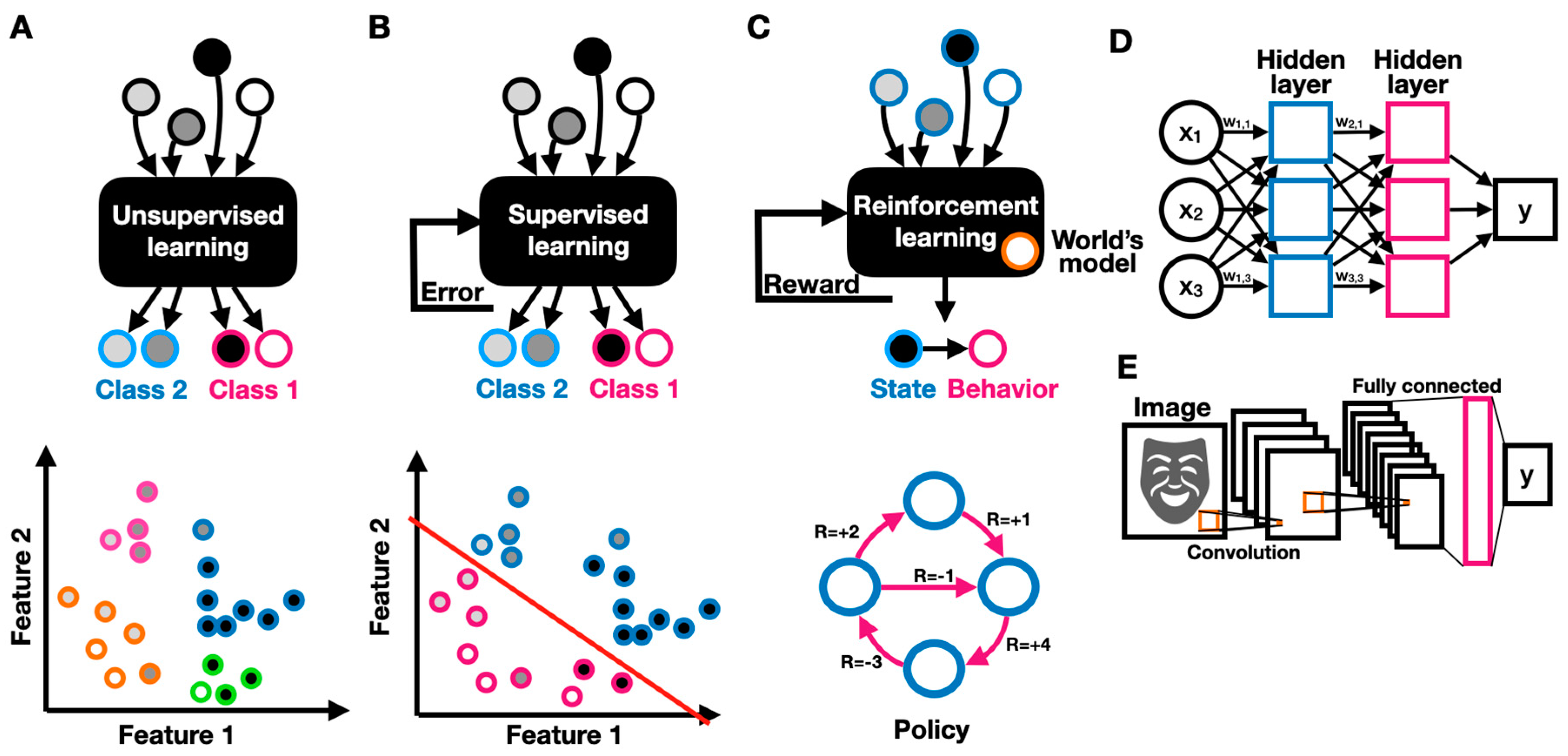

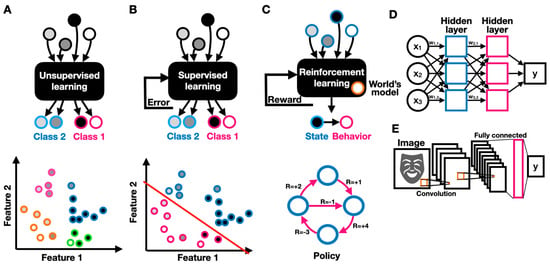

3.2. Unsupervised, Supervised, and Reinforcement Learning

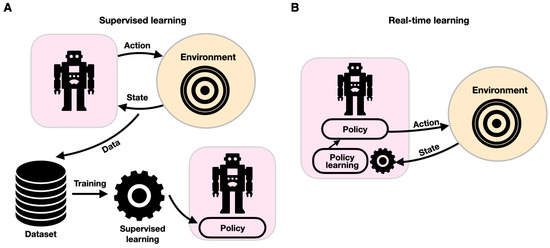

Unsupervised learning refers to the task of identifying patterns in unlabeled data, that is, data entries that are not assigned to a particular category or label of interest (Figure 3A). Unsupervised algorithms typically uncover such patterns by modeling the underlying probability density of the data or by grouping similar entries. For example, wearable sensing systems have been developed to monitor the progression of neurodegenerative processes through the continuous monitoring of a patient’s lower and upper limb activity [18]. In this study, the researchers used unsupervised learning algorithms to group motor-performance data, derive performance measures, and identify mild, moderate, and severe motor deficiencies. The system provides an objective, personalized, and unattended neuro-assessment of motor performance through motion analysis. In supervised learning, labeled data are used to discover the underlying relationships between the data and their labels (Figure 3B). A trained model can then predict the labels of new, unlabeled data. For example, the EEG and eye fixation-based autistic/non-autistic discrimination model described above was developed using supervised learning [13]. The researchers built a labeled dataset containing data recorded from 97 children, 49 of whom were diagnosed with ASD, and used it to build an SVM-based classification model. Reinforcement learning is another important ML paradigm, in which an AI-driven robot (agent) learns to improve its performance, measured by a reward function, by interacting with its environment (Figure 3C). Reinforcement learning differs from supervised learning in that it relies not on labeled data but on the reward the agent earns when it chooses a particular behavior. The agent’s behavior is guided by a policy, and an optimal policy maximizes the agent’s expected cumulative reward.
In reinforcement learning, the agent interacts with the environment and uses its collected rewards (or outcomes) to improve its policy; the problem is predominantly formalized as a Markov decision process (MDP) [19]. The agent learns from observable rewards rather than requiring a complete model of the world, which allows context-dependent personalization. An MDP can be specified by a set of states $S$; a set of actions $A$; a transition function $T(s' \mid s, a)$ that specifies the probability of reaching state $s'$ from state $s$ by taking action $a$; a reward function $R(s, a)$ specifying the reward obtained by taking action $a$ in state $s$; and a discount factor $\gamma \in [0, 1)$ that reflects how strongly the agent favors immediate over longer-term rewards. A central concern in reinforcement learning is exploration: the agent needs to experience the environment sufficiently, balanced against exploiting what it has already learned, such that an optimal policy $\pi^*$ specifies the best action $a \in A$ for each state $s \in S$. A remarkably diverse body of research has been devoted to finding optimal policies in various environments, including stochastic and partially observable ones [12]. In neurorehabilitation, reinforcement learning has notably been applied to functional electrical stimulation (FES) for neuroprostheses, in which electrical stimulation patterns govern the actuation of desired movements. Reinforcement learning can be employed as a control strategy in which a “human in the loop” provides reward signals as inputs, allowing the controller to adapt to the user’s specific physiological characteristics, reaching range, and preferences [20].
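The MDP ingredients (states, actions, transition function, reward function, and discount factor) and the role of exploration can be illustrated with tabular Q-learning, a model-free method that learns from observed rewards rather than from a complete model of the world. The sketch below is illustrative only: the five-state “chain” environment, its reward, and the hyperparameters (`alpha`, `gamma`, `epsilon`) are assumptions chosen for clarity and do not model a rehabilitation task.

```python
import random

# Hypothetical 5-state "chain" MDP: states 0..4, actions 0 = left, 1 = right.
# Reaching state 4 (the goal) yields reward 1 and ends the episode.
N_STATES, GOAL = 5, 4
ACTIONS = (0, 1)

def step(s, a):
    """Deterministic transition function and reward function of the toy MDP."""
    s_next = max(0, s - 1) if a == 0 else min(GOAL, s + 1)
    reward = 1.0 if s_next == GOAL else 0.0
    return s_next, reward, s_next == GOAL

def q_learning(episodes=500, alpha=0.5, gamma=0.9, epsilon=0.1, seed=0):
    """Tabular Q-learning with epsilon-greedy exploration."""
    rng = random.Random(seed)
    Q = [[0.0, 0.0] for _ in range(N_STATES)]
    for _ in range(episodes):
        s, done = 0, False
        while not done:
            # Explore with probability epsilon (or on ties); otherwise exploit.
            if rng.random() < epsilon or Q[s][0] == Q[s][1]:
                a = rng.choice(ACTIONS)
            else:
                a = 0 if Q[s][0] > Q[s][1] else 1
            s_next, r, done = step(s, a)
            # Temporal-difference target; gamma discounts future value.
            target = r if done else r + gamma * max(Q[s_next])
            Q[s][a] += alpha * (target - Q[s][a])
            s = s_next
    return Q

Q = q_learning()
policy = [max(ACTIONS, key=lambda a: Q[s][a]) for s in range(GOAL)]
print(policy)  # [1, 1, 1, 1]: move right in every non-goal state
```

The small `epsilon` keeps the agent occasionally trying the left action even after it has learned that moving right pays off, which is the exploration-exploitation balance in its simplest form. A smaller `gamma` would shrink the value of distant rewards.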

Figure 3.

Machine learning models. Schematics of (A) unsupervised, (B) supervised, and (C) reinforcement learning, describing the models’ inputs, outputs, and feedback; (D) schematic of a deep feed-forward two-layer neural network; (E) schematic of a convolutional neural network with two convolutional layers and one feed-forward fully connected layer.

3.3. Deep, Convolutional, and Recurrent Neural Networks

Deep neural networks (DNNs) have become a cornerstone of ML owing to their superior performance on various pattern recognition tasks. In a neural network, neurons, which are simple computing elements, are interconnected through adjustable weighted connections to form a network through which signals propagate (Figure 3D). The weights are optimized through iterative updates (training), and the trained network is then used as a predictive model (inference). In a fully connected DNN, neurons are organized in layers, and each neuron in one layer is connected to every neuron in the next layer. DNNs have proven extremely useful in a wide variety of neurorehabilitation applications. For example, a DNN was used to automate the quality assessment of physical rehabilitation exercises by providing a numerical score for movement performance [21]. In convolutional neural networks (CNNs), convolutional and pooling (subsampling) layers are stacked and followed by a DNN-like, fully connected feed-forward classifier to support image- and vision-related tasks (Figure 3E). A convolutional layer comprises several feature maps, each detecting a specific spatial pattern (e.g., an edge) at different locations across the input. CNNs are often used in vision-based applications, such as the recognition of human behavior [22]. However, CNNs are not limited to conventional frame-based visual scenes. For example, a CNN was used to identify hand gestures from surface EMG spectrograms, enabling a gesture-based interface for assistive robotics [23]. Recurrent neural networks (RNNs) are neural networks with feedback connections, in which neurons are recurrently connected to themselves or to other neurons. Through these recurrent connections, the network’s state depends on both the current input and its previous states, making the network sensitive to sequential patterns. RNNs are therefore widely used for sequential pattern recognition in text, audio, and video.
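The recurrence described above can be made concrete with a minimal Elman-style RNN with a single hidden unit. The weights `W_X`, `W_H`, and `B` below are hypothetical, hand-picked values (real networks learn them, e.g., by backpropagation through time). The sketch shows that two sequences ending with the same input produce different final states, because the hidden state carries information from earlier inputs.

```python
import math

def rnn_forward(inputs, w_x, w_h, b):
    """Single-unit Elman RNN: h_t = tanh(w_x * x_t + w_h * h_{t-1} + b)."""
    h = 0.0                                      # initial hidden state h_0
    states = []
    for x in inputs:
        h = math.tanh(w_x * x + w_h * h + b)     # new state mixes input and past state
        states.append(h)
    return states

# Hypothetical, hand-picked weights (in practice they are learned).
W_X, W_H, B = 1.0, 0.8, 0.0
seq_a = rnn_forward([1.0, 0.0, 0.5], W_X, W_H, B)
seq_b = rnn_forward([-1.0, 0.0, 0.5], W_X, W_H, B)

# Both sequences end with the same input (0.5), yet the final states differ,
# because the recurrent weight W_H carries information from earlier steps.
print(round(seq_a[-1], 3), round(seq_b[-1], 3))
```

Because the influence of early inputs is repeatedly multiplied by `W_H` and squashed by `tanh`, it fades over long sequences, which is the limitation that motivates the LSTM and attention mechanisms discussed next.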
While RNNs can efficiently handle temporally short sequences, they often fail to relate distant elements of the data. Long short-term memory (LSTM) networks were developed to capture patterns over longer periods of time. LSTMs have proved to be very powerful models and have been at the core of many state-of-the-art temporal models (e.g., sentiment analysis in artificial emotional intelligence [24]). Comprehensive guides to artificial neural networks are available [25,26]. More recently, attention-based RNNs were shown to be highly effective in applications ranging from handwriting synthesis to speech recognition [27]. Attention-based models iteratively focus on selected parts of the incoming data while reducing the weight given to less important parts. Attention was shown to significantly improve the ability of RNNs to capture long-range dependencies. A key step in sequential modeling is data preprocessing, in which vector embedding is applied. Vector embedding encodes the parts of a sequence (e.g., words) together with their context, representing each entity as a point in a conceptual space and thereby allowing for context-dependent understanding [28]. All of these models have been considerably enhanced by the advent of transfer learning, in which a pretrained model is used as the initial configuration and then retrained to perform a related task [29].
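The idea of “focusing” on parts of a sequence can be illustrated with scaled dot-product attention. Note that this is a swapped-in example: it is the form popularized by Transformer models, and the attention-based RNNs cited above may use different attention mechanisms. The keys, values, and query below are hypothetical two-dimensional embeddings for a three-step sequence.

```python
import math

def softmax(scores):
    m = max(scores)                               # subtract max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def attention(query, keys, values):
    """Scaled dot-product attention for a single query vector."""
    d = len(query)
    scores = [sum(q * k for q, k in zip(query, key)) / math.sqrt(d) for key in keys]
    weights = softmax(scores)                     # "focus" placed on each position
    dim = len(values[0])
    output = [sum(w * v[j] for w, v in zip(weights, values)) for j in range(dim)]
    return output, weights

# Hypothetical 2-D keys/values for a three-step sequence.
keys = [[1.0, 0.0], [0.0, 1.0], [-1.0, 0.0]]
values = [[10.0, 0.0], [0.0, 10.0], [0.0, 0.0]]
query = [3.0, 0.0]                                # most similar to the first key
output, weights = attention(query, keys, values)
print([round(w, 3) for w in weights])
```

Because every position is scored directly against the query, a relevant element far back in the sequence can receive a large weight without its signal having to survive many recurrent steps.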

4. Intelligent Robotics in Personalized Pediatric Neurorehabilitation

4.1. Diagnostic Robots

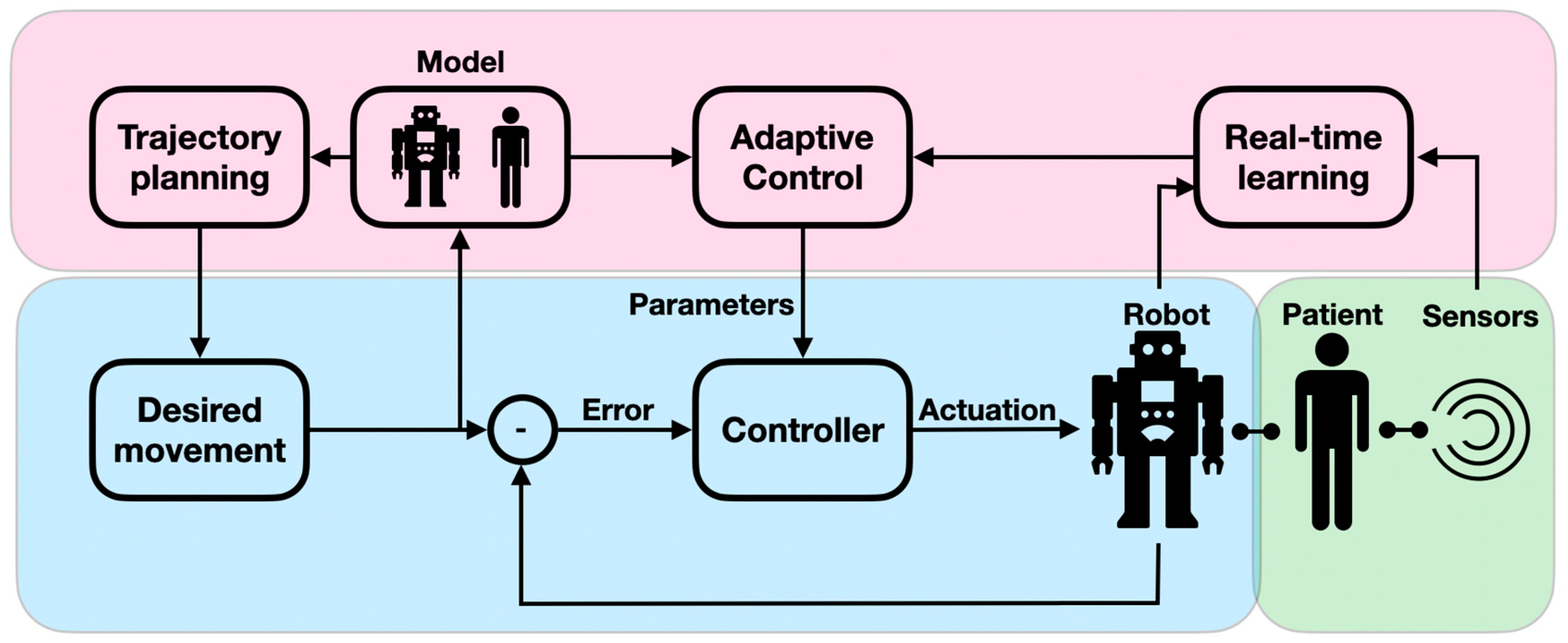

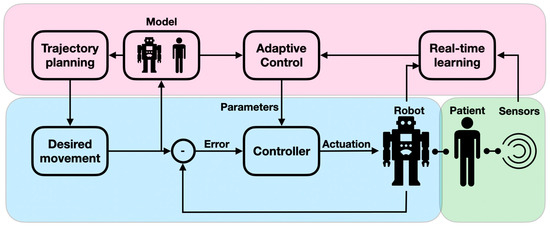

Patients undergoing neurorehabilitation need periodic, quantitative, and reliable assessment of their physical, cognitive, and behavioral capacities. When assessment is performed entirely manually, the assessment intervals, result interpretation, and procedural reliability are suboptimal. Neuro-assessment is a sensitive and important task, as it provides an initial evaluation of the severity of impaired functions and is used to monitor the recovery process and treatment effectiveness. Diagnostic robots can therefore play a paramount role in neuro-assessment. Smart digital neuro-assessments were recently shown to be key for monitoring chronic conditions, and they are even more crucial during periods of epidemic-related confinement [30]. In the context of physical performance, diagnostic robots can accurately measure the user’s body posture and applied force. By reliably reproducing stress stimuli, the robot can precisely assess the user’s sensation levels and muscle tone. Diagnostic robots are often designed to measure a range of conditions within a single modular system, which permits the derivation of a complete functional profile (active workspace; coordination levels; arm impedance; and movement velocity accuracy, smoothness, and efficacy) [31,32]. For example, robots were used to assess the proprioceptive, motor, and sensorimotor impairment of proximal joints [33] and to provide a clinical evaluation of motor paralysis following stroke [16]. Despite their importance for the accurate assessment of motor functions, traditional control modules assume predefined conditions because they follow scripted behaviors. However, a patient interacting with an assessment robot also interacts with the device’s own dynamics. To provide an accurate assessment (e.g., of arm impedance), the robot must therefore adaptively compensate for joint friction, mass, and inertia, as well as account for backlash (Figure 4).
These adaptive admittance controllers are critical for tasks in which the robot guides a motion trajectory: the robot needs to adjust its course of motion to the patient’s anomalous muscle tone and range of motion so that the muscle is not injured [34]. An intelligent adaptive robot could further improve child–robot alignment and, with it, assessment accuracy [35], because non-adaptive controllers distort the assessment, particularly with weak patients, as is often the case in pediatric neuro-assessment. Diagnostic robots have also been used for behavioral assessment. For example, robots have been used to characterize autistic children’s social behavior by monitoring their eye movements, gestures, voice variations, and facial expressions [36]. AI-powered computer vision and video summarization algorithms embedded in a responsive robot enable faster diagnoses of ASD and CP, supporting early therapeutic intervention and monitoring [37]. For example, EEG and eye-tracking data were used to identify children with ASD via an SVM classifier [13] and logistic regression (LR) [38]. AI-driven computer vision was also used to detect ocular abnormalities (eye misalignment, e.g., esotropia and hypertropia) in images acquired during periodic assessments of children with CP [39].
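The control loop of Figure 4 can be illustrated with a minimal one-degree-of-freedom admittance controller, which turns the measured interaction force into a commanded motion through virtual mass-damper-spring dynamics. This is a sketch under stated assumptions: the virtual parameters `M`, `B`, `K`, and the time step `dt` are hypothetical, and `adapt_stiffness` is an invented example of an “adaptive” component rather than a published method. A real controller would also compensate for friction, inertia, and backlash and would run inside the robot’s position-control loop.

```python
def admittance_step(x, v, f_ext, x_ref, M=1.0, B=8.0, K=20.0, dt=0.01):
    """One step of a 1-DoF admittance law: M*a + B*v + K*(x - x_ref) = f_ext.

    The measured interaction force f_ext is mapped to a commanded motion, so
    the robot yields to the patient instead of rigidly tracking x_ref.
    """
    a = (f_ext - B * v - K * (x - x_ref)) / M
    v = v + a * dt                 # semi-implicit (symplectic) Euler integration
    x = x + v * dt
    return x, v

def adapt_stiffness(K, f_ext, f_limit=5.0, rate=0.5, K_min=2.0):
    """Hypothetical adaptation rule: soften the virtual spring when the patient
    pushes back harder than f_limit (e.g., high muscle tone), never below K_min."""
    if abs(f_ext) > f_limit:
        K = max(K_min, K - rate * (abs(f_ext) - f_limit))
    return K

x, v, x_ref, K = 0.0, 0.0, 0.1, 20.0   # position (m), velocity (m/s), target (m), stiffness (N/m)
for _ in range(2000):                   # 20 s of simulated time at dt = 0.01 s
    f_ext = 2.0                         # constant push from the patient (N)
    K = adapt_stiffness(K, f_ext)       # below f_limit, so K stays at 20.0
    x, v = admittance_step(x, v, f_ext, x_ref, K=K)
print(round(x, 4))                      # 0.2, i.e., x_ref + f_ext / K
```

At steady state, the commanded position settles where the virtual spring balances the patient’s force (x_ref + f_ext/K). This is why lowering `K` in response to strong resistance lets the guided trajectory give way instead of forcing the limb.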

Figure 4.

Adaptive admittance controllers: The conventional controller, comprising the desired state, the controller, and the error feedback loop, is shaded in blue; the adaptive feedback component, comprising a model of the robot–patient behavior, a mechanism for real-time learning, and a control module that drives correcting signals, is shaded in purple; and the human-in-the-loop component, comprising the patient and the retrieved data (via sensors), is shaded in green.

4.2. Physical Therapy

Motor impairments are frequent in neurological disorders. To maintain and improve the motor function of people with neural deficiencies and irregularities, neurorehabilitation usually includes physical therapy. Physical therapy is particularly important for disabled children, since mobility at an early age is crucial to avoid “learned helplessness” [40]. Physical therapy is usually guided by an expert physiotherapist who designs a personalized, goal- or performance-based rehabilitation plan comprising a physical examination, diagnosis, prognosis, education, and, ultimately, an intervention that usually involves progressive training schemes (progressive task regulation). Carefully setting a gradual difficulty profile is important for effective neurorehabilitation. Robot-assisted physical therapy allows for a precise definition of task difficulty, and when combined with neuro-assessment, the difficulty level can be dynamically adjusted to match the patient’s capabilities (shared control), thus promoting active participation and motivation [32]. Because it can detect faster, more stable, and smoother movements, an adaptive robot can gradually reduce assistance during or after training sessions (motor synergy relaxation), enabling personalized robotic intervention that autonomously provides guided physical treatment [41] (Figure 4). For example, children with CP showed greatly improved motor function and development after a 6-week training program with dynamic weight assistance technology [42]; infants with CP were able to use assistive robots to learn how to crawl [43]; and an adaptive ankle resistance robot (providing resistance proportional to the ankle moment during walking) was shown to improve muscle recruitment in children with CP [44]. Recent advances in probabilistic AI make it possible to achieve intelligent shared control in which the user gradually attains more control while safety is maintained, which can lead to improved user performance.
For example, AI-based LbD was used to learn assistive policies via a probabilistic model that provided efficient, real-time training strategies [45]. Smart AI-driven control can also harness classifiers or pattern recognition algorithms. For example, SVM and K-nearest neighbors (KNN) were used to recognize rehabilitative hand gestures captured with the Leap Motion sensor (comprising infrared (IR) cameras and LEDs) [46]. KNN, logistic regression, and decision trees (DTs) were used to classify upper-body posture in patients wearing strain sensors [47], and probabilistic (hidden Markov model-based) classifiers were used to detect gait phases in an active knee orthosis, providing shared control during training in children with CP [48]. As with diagnostic robots in the physical assessment phase, personalized robotic care can benefit from advances in real-time learning. An important, relatively new paradigm for physical therapy is the use of wearable robots, known as exoskeletons, to provide natural movement patterns, as discussed below.
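The specific model used in [48] is not detailed here. As an illustration only, the sketch below shows how a hidden Markov model can label gait phases: Viterbi decoding recovers the most likely phase sequence from noisy sensor readings. The two phases, the binarized foot-pressure observations, and all probabilities are hypothetical.

```python
def viterbi(obs, states, start_p, trans_p, emit_p):
    """Return the most likely hidden-state sequence of an HMM (Viterbi)."""
    # best[t][s] = (probability of the best path ending in s at t, predecessor)
    best = [{s: (start_p[s] * emit_p[s][obs[0]], None) for s in states}]
    for o in obs[1:]:
        prev = best[-1]
        layer = {}
        for s in states:
            p, arg = max((prev[r][0] * trans_p[r][s], r) for r in states)
            layer[s] = (p * emit_p[s][o], arg)
        best.append(layer)
    state = max(states, key=lambda s: best[-1][s][0])   # most probable end state
    path = [state]
    for layer in reversed(best[1:]):                     # follow back-pointers
        state = layer[state][1]
        path.append(state)
    return path[::-1]

# Hypothetical two-phase gait model with a binarized foot-pressure sensor.
STATES = ("stance", "swing")
START = {"stance": 0.6, "swing": 0.4}
TRANS = {"stance": {"stance": 0.8, "swing": 0.2},
         "swing": {"stance": 0.3, "swing": 0.7}}
EMIT = {"stance": {"high": 0.9, "low": 0.1},
        "swing": {"high": 0.2, "low": 0.8}}

phases = viterbi(["high", "high", "low", "low", "high"], STATES, START, TRANS, EMIT)
print(phases)  # ['stance', 'stance', 'swing', 'swing', 'stance']
```

The transition probabilities encode the fact that gait phases persist for several samples, which lets the decoder smooth over isolated misleading sensor readings. For long recordings, log probabilities should replace raw products to avoid numerical underflow.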

4.3. Assistive Robotics

As described above, supporting the mobility of people with neural deficiencies and irregularities is important, as it empowers weakened or paralyzed children during activities of daily living (ADL) [49]. Personal assistance is time-consuming and expensive and can undermine the patient’s sense of independence, whereas simple mechanical aids (canes, mechanical prosthetics, etc.) are often limited in functionality. Smart assistive robots were first developed four decades ago [50]. They represent one of the most important frontiers in neurorehabilitation, contributing to children’s sense of independence and well-being. Assistive robots can take the form of robotic walkers, exoskeletons, prostheses, powered wheelchairs, and wheelchair-mounted robotic arms, and they provide structure, support, and energy for ADL. Shared control plays an important role when assistive robots are used for ADL. For example, a recently proposed adaptive robotic walker for disabled children dynamically actuates its base wheels to match the child’s walking gait (as inferred from infrared (IR) position sensors), significantly reducing the energy required for walking [51]. Other recent developments use inertial measurement units (IMUs), laser range finders (LRFs), and haptic feedback to give robotic walkers advanced capabilities such as walking guidance and autonomous navigation [52,53,54]. Exoskeletons hold great promise in assistive robotics, since they provide embodied sensing and actuation that supports natural movements. For example, lower limb multijoint exoskeletons were shown to improve ankle–knee movement dynamics [55,56,57], and upper limb exoskeletons were shown to improve arm–shoulder and elbow movements in children with CP [58,59]. Advances in AI improve the performance of assistive robots and broaden the range of applications they can support [60].
For example, AI-driven gaming agents were used to cooperatively control a lower limb exoskeleton in a video game, increasing the participation rate [61]. AI-based detection of motion intentions was used to design exoskeletons with varying degrees of autonomy for the lower [62] and upper limbs [63]. As in robot-assisted physical therapy, shared control can be enhanced with human-in-the-loop optimization, allowing the assistance level to be personalized and optimized by the user. Whereas some patients prefer to retain control during task execution, others may favor task performance [64].
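One common way to realize shared control is linear arbitration, in which the executed command is a weighted blend of the user’s command and the robot’s autonomous command. The sketch below is illustrative: the `arbitration` rule, which gives the robot more authority when it is confident about the user’s goal and when the user wants more assistance, is a hypothetical example, and the velocity commands are made-up values.

```python
def blend(u_user, u_robot, alpha):
    """Linear arbitration: alpha = 1 gives the user full control, 0 the robot."""
    if not 0.0 <= alpha <= 1.0:
        raise ValueError("alpha must lie in [0, 1]")
    return [alpha * uu + (1.0 - alpha) * ur for uu, ur in zip(u_user, u_robot)]

def arbitration(confidence, preference):
    """Hypothetical rule: the robot's share of control grows with its confidence
    in the user's goal, scaled by how much assistance the user wants (both in [0, 1])."""
    return 1.0 - preference * confidence

u_user = [0.20, 0.00]    # user's joystick velocity command (m/s)
u_robot = [0.00, 0.20]   # robot's planned velocity toward the inferred goal (m/s)

# A user who prefers to retain control vs. one who favors task performance.
for preference in (0.2, 0.9):
    alpha = arbitration(confidence=0.8, preference=preference)
    print(preference, round(alpha, 2), [round(c, 3) for c in blend(u_user, u_robot, alpha)])
```

Exposing the preference as an explicit parameter is one way to accommodate the difference noted above between patients who want to retain control and those who favor task performance; human-in-the-loop optimization could tune it per user.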

4.4. Smart Interfaces

Conventional input devices (e.g., a computer mouse or keyboard), which provide the feedback channel required for shared autonomy, are often inaccessible to people with neural deficiencies and irregularities because of impaired mobility. This dramatically limits their quality of life and the range of technologies they can engage with, making a smart user–robot interface an important hallmark of neurorehabilitation. Numerous devices and innovative approaches, ranging from simple force sensors to brain–computer interfaces, have been proposed to enable a robot to decipher user intent in real time. Smart interfaces involve data acquisition and an inference algorithm. A wide range of sensors have been used for data acquisition in neurorehabilitation, including hand-operated joysticks, eye trackers, tongue-operated force-sensitive surfaces (tongue–machine interfaces (TMIs)), bend-sensitive optical fibers [65], voice commands, electromyography (EMG), touch screen displays, Leap Motion, and IMUs. Brain–computer interfaces have attracted growing interest [66] and, together with technologies such as electrocorticography (ECoG), electroencephalography (EEG), magnetoencephalography (MEG), electrooculography (EOG), cortical recordings of neural activity, functional magnetic resonance imaging (fMRI), and functional near-infrared spectroscopy (fNIRS), have opened exciting research directions. For example, EMG [15], EOG [67], and NLP [68] are used extensively to drive wheelchairs. In many cases, advances in AI are what make it possible to infer user states and intentions from such signals. For example, EEG and physiological data (blood volume pulse, skin temperature, skin conductance, etc.) have been used to infer emotions with AI-powered algorithms such as gradient boosting machines (GBMs) and CNNs [69].
Cameras and eye-tracking devices [70], TMIs [71], and neural interfaces [72] have been used to control assistive robotic arms with various AI-powered algorithms, such as linear discriminant classifiers and A* graph traversal. Smart interfaces have also driven a variety of smart wheelchair designs. For example, EMG and IMUs were used to design a smart wheelchair operated by hand gestures identified with an SVM [73].
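A* graph traversal, one of the planning methods mentioned above, can be sketched on a discretized workspace. The minimal Python example below assumes a hypothetical 2-D occupancy grid (0 = free, 1 = obstacle) with unit-cost, four-connected moves and a Manhattan-distance heuristic. The cited arm-control systems may plan over different graphs (e.g., joint-space or task-level states) with other costs.

```python
import heapq


def astar(grid, start, goal):
    """A* search on a 4-connected occupancy grid (0 = free, 1 = obstacle).
    Returns the list of cells from start to goal, or None if unreachable."""
    rows, cols = len(grid), len(grid[0])

    def h(cell):  # Manhattan distance: admissible for unit-cost 4-connected moves
        return abs(cell[0] - goal[0]) + abs(cell[1] - goal[1])

    open_heap = [(h(start), 0, start)]  # entries are (f = g + h, g, cell)
    came_from = {start: None}
    g_score = {start: 0}
    while open_heap:
        _, g, current = heapq.heappop(open_heap)
        if current == goal:
            path = []
            while current is not None:  # walk parent links back to the start
                path.append(current)
                current = came_from[current]
            return path[::-1]
        if g > g_score[current]:
            continue  # stale heap entry superseded by a cheaper route
        r, c = current
        for nxt in ((r + 1, c), (r - 1, c), (r, c + 1), (r, c - 1)):
            nr, nc = nxt
            if 0 <= nr < rows and 0 <= nc < cols and grid[nr][nc] == 0:
                new_g = g + 1
                if new_g < g_score.get(nxt, float("inf")):
                    g_score[nxt] = new_g
                    came_from[nxt] = current
                    heapq.heappush(open_heap, (new_g + h(nxt), new_g, nxt))
    return None


# Hypothetical workspace: a wall forces the path around its right end
grid = [
    [0, 0, 0, 0],
    [1, 1, 1, 0],
    [0, 0, 0, 0],
]
print(astar(grid, (0, 0), (2, 0)))
```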

4.5. Cognitive Training

People with cognitive impairment have a “hidden disability that often manifests as a deficient mental capacity to handle conceptualization efficiently and adaptively, including symbol manipulation, executive functions, memory, and the interpretation of social cues” [74]. Cognitive training is a diverse, widely debated field [75]. It includes the enhancement of interpretation and attention via cognitive bias modification (CBM) techniques, improvement in inhibitory and regulatory behavior via inhibitory training, and memory sharpening via attentional allocation training [76]. Cognitive training has been applied in the treatment of children with ASD [77] and CP [78]. Cognitive training can be led by a trained expert. Such intervention, however, is expensive and limited in duration, scope, and availability, and it relies heavily on the expertise of the caregiver. Interestingly, robot-assisted cognitive training was shown to improve training efficacy. While a 12-week cognitive training program (involving memory, language, calculation, visuospatial function, and executive function) attenuated age-related cortical thinning in the frontotemporal association cortices, robot-assisted training yielded better results, with less cortical thinning in the anterior cingulate cortices. These improvements have been attributed to instantaneous feedback, enhanced motivation, and the unique interactive situation [79]. Robot-assisted cognitive training has proven valuable even when the robot is used solely to provide encouragement and hints while a user is engaged in a cognitive task [80]. Like social robotics, which intersects with robot-assisted cognitive training on several fronts (discussed below), this technology is tightly linked to AI, since it often entails developing a model of the person (e.g., their personality or preferences) to guide the robot’s interaction scheme [81].
Robots can use sensors for low-level data perception (e.g., speech, gestures, and physiological signals) to infer users’ high-level states (e.g., mood, engagement, and cognitive abilities). They can then use the inferred state to personalize high-level aspects of the interaction (e.g., training adjustments and encouragement) [82]. For example, self-organizing maps, a form of ANN, were used to identify distinct trajectories of change in children who underwent intensive working memory training. This enabled the derivation of different profiles of response to training, which can be used to optimize the training process [83]. One AI approach geared toward personalized cognitive training is interactive reinforcement learning (IRL), an incremental learning approach [84]. IRL is used to design interactive robotic games in which the system adjusts its level of difficulty at each interactive step by dynamically updating the robot’s game strategy (or policy) [85]. For example, in the “nut catcher” game, the user, equipped with a robotic joystick, collects nuts falling from trees, and the task difficulty is adjusted as a function of performance [86]. The rise of cognitive robotics, fueled by advances in reinforcement learning and computer vision, is likely to lead to major breakthroughs in supported applications over the next few years [87], in particular in robot-assisted cognitive training.
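The self-organizing map idea can be illustrated with a small NumPy sketch that groups per-child score trajectories into response profiles. The data are synthetic and hypothetical: two made-up profiles, steady improvers and non-responders, each scored over four sessions. The one-dimensional map size, learning-rate schedule, and neighborhood schedule are illustrative choices rather than those of the cited study.

```python
import numpy as np


def train_som(data, n_nodes=3, epochs=100, lr0=0.5, sigma0=1.0, seed=0):
    """Train a 1-D self-organizing map; returns one prototype vector per node."""
    rng = np.random.default_rng(seed)
    weights = data[rng.choice(len(data), n_nodes, replace=False)].astype(float)
    positions = np.arange(n_nodes)  # node coordinates on the 1-D map
    total_steps = epochs * len(data)
    step = 0
    for _ in range(epochs):
        for x in data[rng.permutation(len(data))]:
            frac = step / total_steps
            lr = lr0 * (1.0 - frac)               # learning rate decays linearly
            sigma = sigma0 * (1.0 - frac) + 1e-3  # neighborhood shrinks over time
            bmu = np.argmin(np.linalg.norm(weights - x, axis=1))
            # Nodes close to the best-matching unit on the map move toward x
            influence = np.exp(-((positions - bmu) ** 2) / (2.0 * sigma ** 2))
            weights += lr * influence[:, None] * (x - weights)
            step += 1
    return weights


def best_matching_unit(weights, x):
    return int(np.argmin(np.linalg.norm(weights - x, axis=1)))


# Hypothetical working-memory scores for 10 + 10 children over four sessions
rng = np.random.default_rng(1)
improvers = np.array([1.0, 2.0, 3.0, 4.0]) + rng.normal(0.0, 0.1, (10, 4))
flat = np.array([1.0, 1.1, 1.2, 1.2]) + rng.normal(0.0, 0.1, (10, 4))
som = train_som(np.vstack([improvers, flat]))

profile_improvers = {best_matching_unit(som, x) for x in improvers}
profile_flat = {best_matching_unit(som, x) for x in flat}
print(profile_improvers, profile_flat)
```

Each map node acts as a prototype trajectory, so children who map to the same node share a response profile.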

4.6. Social Robots

Neurorehabilitation often involves procedural pain and distress. In pediatric rehabilitation, these difficulties are commonly addressed through breathing exercises, social distraction, cognitive–behavioral interactions, and games, as well as music, animal-assisted, and art therapy [88]. An important aspect of treatment in this space is psychological intervention, which is inherently expert-led, expensive, and often not readily available. Social robots (SRs) were developed to alleviate distress by providing psychological support during painful procedures through continually available interactions and therapeutic augmentation. An SR should be able to direct activities while drawing on the child’s performance and engagement to improve participatory motivation. Furthermore, SRs can provide continuing at-home care. SRs have been shown to be useful for children with autism [36] and CP [89]. With autistic children, SRs have been used to improve social interaction skills (understanding facial expressions and developing turn-taking skills), maintain focus, and improve physical gestures and speech (via imitation). With children with CP, SRs have been used to improve physical balance (via exercise imitation), emotion recognition, and participatory motivation. While beneficial, current SRs typically adhere to scripted behavior and have limited autonomy and unrealistic responsiveness, which limits their capacity for personalized and adaptive behavior. Advances in AI, in particular NLP-driven models of human language, fast recognition of facial and physical expressions via computer vision, and new models for AEI, should enable simultaneous monitoring of, and adaptive responses to, sensed behavior.

5. Advances in AI-Driven Personalized Neurorehabilitation Technologies

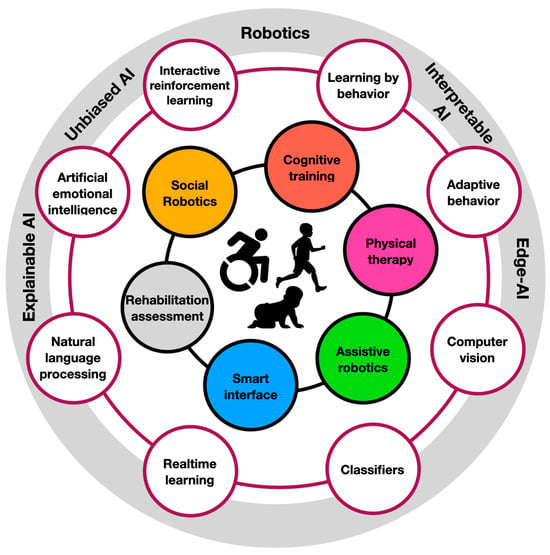

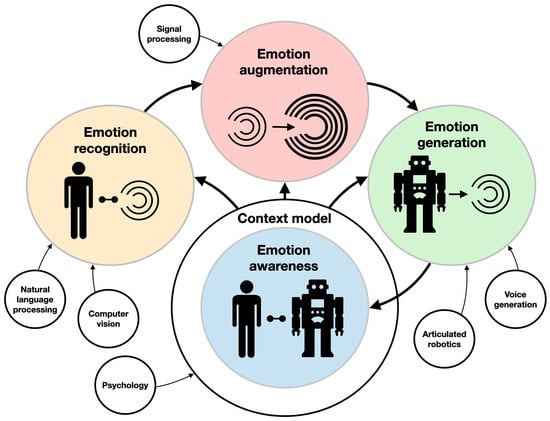

5.1. Artificial Emotional Intelligence (AEI)

Social robotics can enhance the psychological intervention aspect of neurorehabilitation. However, to be effective, these robots should exhibit some aspects of emotional intelligence. Many consider AEI to be futuristic, “Space Odyssey”-like wishful thinking [90]. However, it has potential value for the design of personalized robot-assisted neurorehabilitation, above all for pediatric patients. AEI is closely connected to recent developments in AI. It integrates emotion recognition, emotion augmentation (planning, creativity, and reasoning), and emotion generation, all under a contextual model (the state of the user, interaction, system, and world) [91] (Figure 5). AEI underlies AI-enabled emotion-aware robotics [92]. Emotion recognition (sentiment analysis) from voice acoustics (accounting for arousal and dominance), facial expressions, body poses and kinematics, brain activity, physiological signals [93], and even skin temperature [94] is currently being extensively explored in the context of robot–human interaction. For example, ML algorithms can capture verbal and non-verbal (e.g., laughing and crying) cues, which are then used to recognize seven emotional states (e.g., anger, anxiety, and boredom) with 52% accuracy [24]. The researchers used an SVM to extract sound segments. Each segment was fed to a CNN to extract features, which were in turn fed to an LSTM that output the identified emotion. Another example is End4You, a Python-based library [95] with a deep learning-based capacity to identify emotions (anger, happiness, neutral, etc.) from raw data (audio, visual, physiological, etc.) [96]. Emotion generation is also extensively researched in the context of human–machine interaction. A robot exhibiting neutral, happy, angry, sad, surprised, fearful, and worried facial expressions has been tested in various systems [97].
In pediatric rehabilitation, these robotic systems have been successfully applied to autistic children, who were encouraged to use gestures by imitating the robot’s expressions [98].

Figure 5.

Components of artificial emotional intelligence. The main areas of research (e.g., emotion generation and emotion augmentation) and their interrelations are described with colored circles and thick arrows. The supporting technologies (e.g., computer vision and voice generation) are described with clear circles and thin arrows.

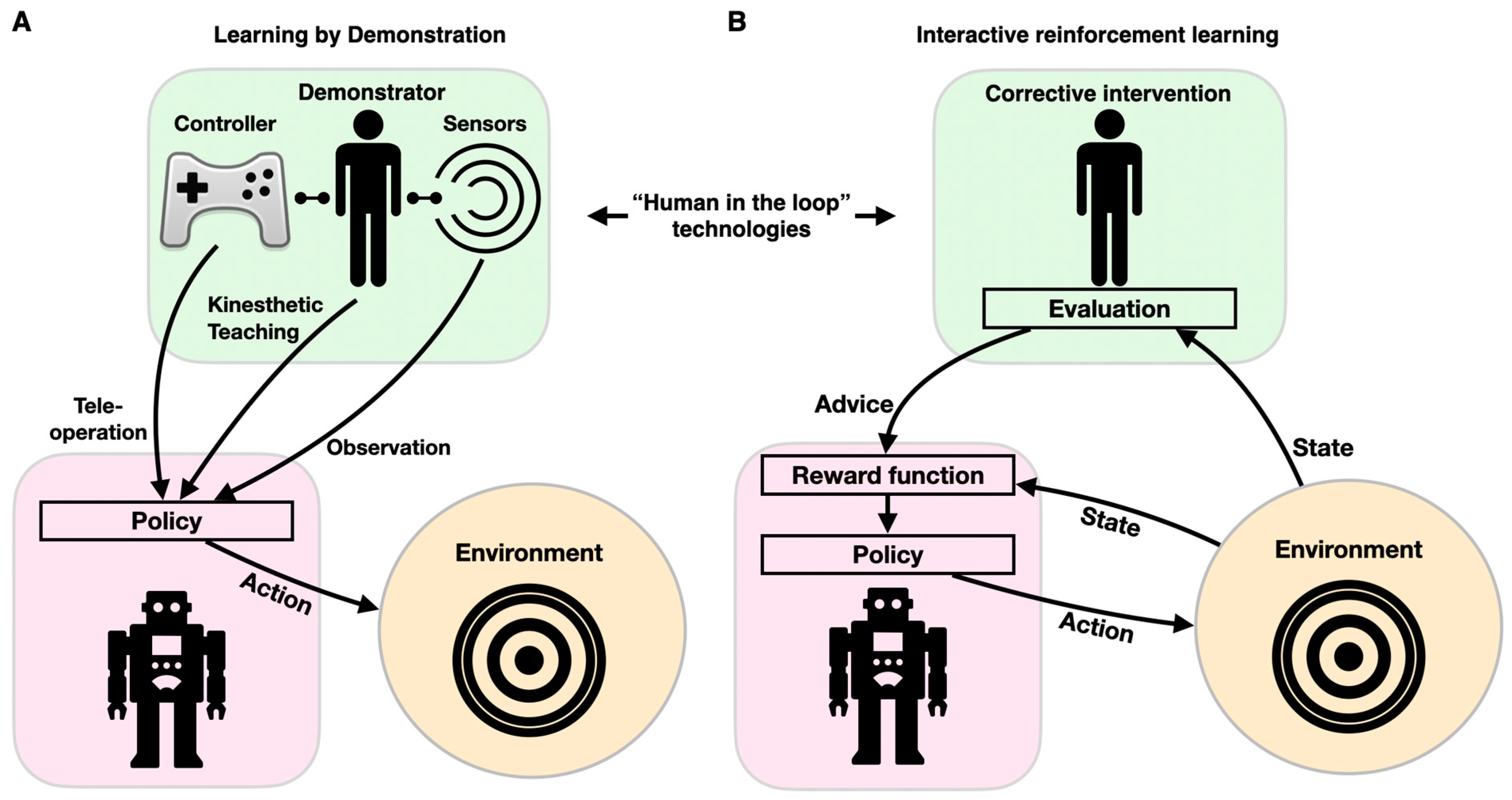

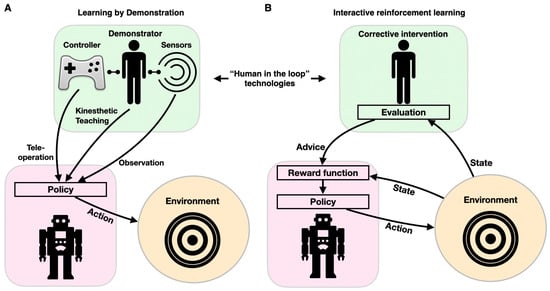

5.2. Learning by Demonstration

As described above, robots for diagnostics, physical therapy, and assistance in ADL must be controlled to execute the desired motion. This is traditionally achieved through conventional programming, in which a behavior is predefined to respond to a specific set of stimuli. However, instead of being programmed to follow predefined trajectories or to respond to a stimulus with a preset pattern of behaviors (via supervised, reinforcement, and unsupervised learning), robots can learn appropriate behaviors by imitating a (human) expert through LbD (also known as imitation learning, programming by demonstration, or behavioral cloning). LbD enables robots to acquire adaptive behaviors by implicitly learning task constraints and requirements through observation, without a predefined reward function or “ideal behavior” [99]. Such adaptive robots can perform better in new, complex, stochastic, and unstructured environments without the need for reprogramming. LbD can be executed via (1) kinesthetic teaching, where a human expert physically manipulates the robot’s joints to achieve the desired motions; (2) teleoperation, where the robot is guided through an external interface such as a joystick; and (3) observation, where a motion is demonstrated by a human (or a different robot) and tracked with sensors (Figure 6A). In assistive robotics, LbD has been used for a wide spectrum of applications. For example, a dataset comprising haptic and motion signals acquired while human participants manipulated food items with a fork was used to guide a robotic arm for autonomous feeding, enabling it to generalize to food items of various compliances, sizes, and shapes [100,101]. Through kinesthetic teaching, a robotic arm used LbD to learn a physiotherapist’s behavior while the therapist interacted with a patient, allowing the patient to continue practicing the exercise with the robotic system afterward [102,103].
A similar approach was taken to provide robotic assistance to children with CP through “pick and place” playing, thus increasing their engagement in meaningful play [104]. In conjunction with reinforcement learning, LbD can also be used to personalize socially assistive robots (e.g., instruct and aid a cognitively impaired person to prepare tea through a set of instructions, social conversations, interactions, and re-engagement activities) [105].
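In its simplest form, behavioral cloning reduces to supervised regression from demonstrated states to demonstrated actions. The NumPy sketch below fits a linear policy by least squares to synthetic demonstrations from a hypothetical expert. `K_true`, `b_true`, the two-dimensional joint-error state, and the noise level are all assumptions. Practical LbD systems such as those cited typically use richer models (e.g., dynamic movement primitives, Gaussian mixture regression, or neural networks), so this only illustrates the principle.

```python
import numpy as np


def fit_bc_policy(states, actions):
    """Behavioral cloning by least squares: find K, b with action ≈ K @ state + b."""
    X = np.hstack([states, np.ones((len(states), 1))])  # append a bias column
    theta, *_ = np.linalg.lstsq(X, actions, rcond=None)
    return theta[:-1].T, theta[-1]


def bc_policy(K, b, state):
    """Imitated controller: map the current state to an action."""
    return K @ state + b


# Synthetic demonstrations from a hypothetical expert that drives joint errors to zero
rng = np.random.default_rng(0)
K_true = np.array([[-0.8, 0.0], [0.0, -0.5]])
b_true = np.array([0.1, -0.2])
states = rng.uniform(-1.0, 1.0, size=(200, 2))
actions = states @ K_true.T + b_true + rng.normal(0.0, 0.01, size=(200, 2))

K, b = fit_bc_policy(states, actions)
print(np.round(K, 2), np.round(b, 2))
print(bc_policy(K, b, np.array([0.5, -0.5])))
```

No reward function appears anywhere. The policy is recovered purely from the expert’s state–action pairs, which is the defining feature of LbD noted above.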

Figure 6.

Components of human-in-the-loop technologies: learning by demonstration (A) and interactive reinforcement learning (B). Both paradigms affect the robot’s behavior (purple shape) through its interaction with the environment (orange circles) via feedback from a human (green shape).

5.3. Interactive Reinforcement Learning (IRL)

Cognitive training and smart interfaces should be interactive and highly adaptive. Programming adaptive and interactive frameworks is difficult, because the variety of inputs is enormous and creative responses are intractable with conventional if–else logic. The reinforcement learning paradigm is used to design adaptable, personalized models for cognitive training and robot–human interactions. To provide personalized robotic behavior, ML can be enhanced by incorporating a human-in-the-loop component that uses interaction to optimize the agent’s policy. IRL can be implemented with various learning approaches. Most often, user feedback in response to an action is used as a reinforcement signal (learning from feedback), or a corrective intervention is selected before the agent acts (learning from guidance) [106] (Figure 6B). Because feedback is central to IRL, various feedback sources have been developed and tested. Feedback can be unimodal or multimodal. Unimodal feedback can be delivered via dedicated hardware (e.g., clicking a mouse or pressing a button) or natural interactions (facial expressions, audible cues, or gestures). Multimodal feedback can integrate speech and gestures, as well as a laugh or a smile [107]. IRL was first proposed with the COBOT system, which was embedded within a social chat environment [108]. COBOT learned to adapt its behavior according to data it collected from numerous users, thus maximizing its rewards and learning individual and communal action preferences (such as proposing a topic for conversation, introducing two human users to each other, or engaging in certain wordplay routines) [109]. IRL was later implemented in specialized simulation frameworks to evaluate various learning methodologies [110]. More recently, IRL was used to drive adaptive robot-guided therapy in which the user performs a set of cognitive or physical tasks (e.g., the “nut catcher”) [111].
In this work, the robot selects an action (the next difficulty level or task switching) according to its policy. The robot keeps track of the task duration and the user’s score while playing with the user and updates its policy in real time.
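The difficulty-adaptation loop described above can be approximated, in deliberately simplified form, as a multi-armed bandit. Each difficulty level is an action, and the user’s feedback is the reinforcement signal. The Python sketch below uses an epsilon-greedy value update. The stateless formulation, the hypothetical `simulated_feedback` function (standing in for a child’s engagement or score), and all parameters are assumptions. The cited system also tracks task duration and score as state, which a full reinforcement learning formulation would include.

```python
import random


def adapt_difficulty(feedback, n_levels=5, steps=500, epsilon=0.2, lr=0.1, seed=0):
    """Epsilon-greedy value learning over difficulty levels driven by user feedback."""
    rng = random.Random(seed)
    q = [0.0] * n_levels  # estimated value of each difficulty level
    for _ in range(steps):
        if rng.random() < epsilon:
            level = rng.randrange(n_levels)                  # explore a random level
        else:
            level = max(range(n_levels), key=q.__getitem__)  # exploit best estimate
        reward = feedback(level)              # human feedback as reinforcement signal
        q[level] += lr * (reward - q[level])  # incremental policy update
    return q


def simulated_feedback(level):
    """Hypothetical user: most engaged at level 2, bored below it, frustrated above it."""
    return 1.0 - 0.4 * abs(level - 2)


q = adapt_difficulty(simulated_feedback)
print("preferred level:", q.index(max(q)))
```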

5.4. Natural Language Processing (NLP)

AI-driven natural language processing (NLP) makes it possible for an algorithm, as an artificial agent, to interpret unstructured human language and thus interact with humans. This technology is particularly important for cognitive training, social robotics, and smart interfaces. NLP is powered by RNNs, CNNs, and attention-based models, which can identify complex linguistic relationships. Advances in NLP are also driven by hardware developments, the increased availability of databases and benchmarks, and the development of domain-specific language models [28]. Today, NLP is routinely used for language tagging, text classification, machine translation, sentiment analysis, human language understanding, artificial conversational companions, and virtual personal assistants [112,113]. In clinical settings, NLP has proved invaluable in numerous applications, including deriving consequential knowledge from healthcare incident reports [114], inferring personalized medical diagnoses [115], performing risk assessment [116], and providing follow-up recommendations [117]. Importantly, NLP is also emerging as a key technology in recent governmental efforts to reduce national health inequality [28]. NLP has powered recent attempts to efficiently screen large pools of electronic health records for patients with chronic mobility disabilities [118]. While useful, such algorithmic screening depends on suitable confirmation of NLP-flagged cases. There is still a long way to go in designing equitable and inclusive NLP-driven models. Models are inherently limited by the social attributes of the data on which they were trained. Recent work showed that NLP models used for toxicity prediction and sentiment analysis contain social biases that create barriers for people with disabilities [119].
These biases are reflected in the overrepresentation of gun violence, homelessness, and drug addiction in texts discussing mental disorders. Designing a robot that can naturally communicate with pediatric patients in real-life, stochastic environments is also an immense challenge. Such a robot would have to support a symbolic system in which signs are interpreted in the context of a specific environment and understood subjectively by a young population. Supporting a shared “belief system” between a child and a robot is indispensable for effective neurorehabilitation. Interestingly, robotics has been used to advance the current capacity of NLP by providing it with a notion of embodiment: sensors and motors can provide the basis for grounding language in a physical, interactive world [120]. In robotic neurorehabilitation, NLP has been employed for personalized cognitive assessment [30] and smart human–robot interactions [121]. NLP underlies many recent attempts to personalize social robots, allowing them to convey empathy through gestures, gaze, and spatial behavior informed by NLP-powered automatic speech recognition and scene understanding [122].

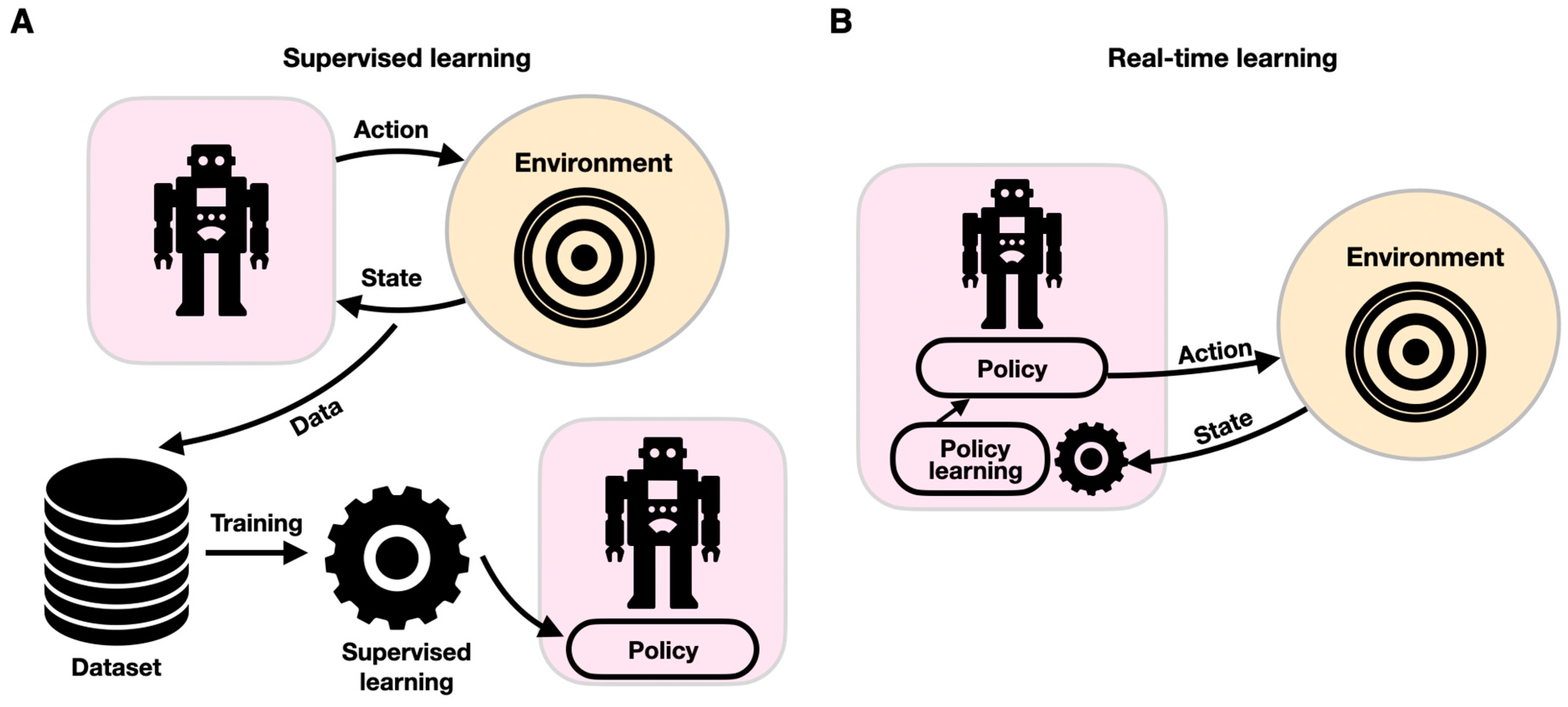

5.5. Real-Time Learning for Adaptive Behavior

Computational motion planning is fundamental to autonomous systems such as the diagnostic, assistive, and social robots described above. Conventional computational motion planning uses a set of predetermined parameters, such as mass matrices and forward and inverse kinematic models, to generate motion trajectories [123,124]. While such conventional controllers have been shown to handle intricate robotic maneuvers in challenging, complex settings, they often struggle in stochastic, uncertain environments that require adaptive control. Adaptive robotics is key in assistive and diagnostic rehabilitation robotics. Real-time, ML-powered adaptive control schemes enable robotic systems to respond dynamically to changing conditions (Figure 7). Adaptive motor control is commonly mediated by neural networks that provide real-time, error-correcting adaptive signals driven by vision and proprioception [125]. In sharp contrast to traditional ML schemes, in which a model is trained offline on historical data (supervised learning), real-time learning is governed by a live stream of data that propagates through the model and changes it continuously. Real-time ML is an event-driven framework that adheres more closely to the principles of biological learning. Whereas ANNs are modulated via global learning rules and propagate differential error signals, biological learning is characterized by local learning rules and spike-based neural communication. Biological adaptive motor control is known to be mediated by projection neurons involving the basal ganglia and the neocortex [125]. Failure to generate these error-correcting signals can manifest as Parkinson’s [126] or Huntington’s [127] disease. Notably, while adaptive control has been implemented in conventional neural computational frameworks [128], in recent years it has also been implemented with spiking neural networks (SNNs).
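The contrast between offline training and learning from a live stream can be illustrated with the least-mean-squares (LMS) rule, one of the simplest online learners. In the NumPy sketch below, the model is updated after every incoming sample and never revisits past data, so it tracks a hypothetical drift in the underlying mapping halfway through the stream. LMS is used here only as a minimal stand-in for the neural adaptive controllers discussed in this section. The weights, step size, and noise level are assumptions.

```python
import numpy as np


def lms_online(stream, n_features, mu=0.05):
    """Least-mean-squares: one gradient step per incoming (x, y) sample."""
    w = np.zeros(n_features)
    for x, y in stream:
        error = y - w @ x    # prediction error on the newest sample only
        w += mu * error * x  # step against the instantaneous squared-error gradient
    return w


def drifting_stream(rng, n=2000):
    """Hypothetical plant whose input-output mapping changes halfway through."""
    for t in range(n):
        w_true = np.array([0.5, -1.0]) if t < n // 2 else np.array([1.5, 0.2])
        x = rng.normal(size=2)
        yield x, w_true @ x + rng.normal(scale=0.01)


w = lms_online(drifting_stream(np.random.default_rng(0)), n_features=2)
print(np.round(w, 2))  # close to the post-drift weights [1.5, 0.2]
```

A model fitted once on the first half of the data would keep the outdated mapping. The streaming update instead follows the change without being retrained.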
A typical SNN comprises a densely connected fabric of spike-generating neurons linked by weighted connections through which spikes propagate, thus closely emulating biological neural networks [129]. SNNs can provide efficient adaptive control of robotic systems [130], and their architecture usually mimics brain circuitry. SNNs have also been shown to shed new light on cognitive processes, particularly visual cognition [131,132]. Spiking neuronal architectures can enhance performance with lower energy consumption [133,134] and support the sensing–moving embodiment of robotic systems [135]. An SNN-based framework was devised to control a robotic arm by organizing the spiking neurons anatomically, so that signals flow downstream through the premotor, primary motor, and cerebellar cortices [136]. Recently, SNNs were reported to enable motion guidance and adaptive behavior in a wheelchair-mounted robotic arm supporting ADL tasks such as drinking from a cup and lifting objects from shelves [137].
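The spike-based communication that SNNs rely on can be illustrated with a single leaky integrate-and-fire (LIF) neuron, a common SNN building block. The plain-Python sketch below integrates the membrane equation with forward Euler and emits a spike, followed by a reset, whenever the membrane potential crosses threshold. The time constant, threshold, and input values are illustrative assumptions. The cited frameworks use networks of such neurons with learning rules and, often, neuromorphic hardware.

```python
def simulate_lif(current, t_steps=200, dt=1.0, tau=20.0,
                 v_rest=0.0, v_th=1.0, v_reset=0.0, r_m=1.0):
    """Leaky integrate-and-fire neuron driven by a constant input current.
    Returns the time steps at which the neuron spiked."""
    v = v_rest
    spikes = []
    for t in range(t_steps):
        # tau * dv/dt = -(v - v_rest) + R * I, integrated with forward Euler
        v += dt * (-(v - v_rest) + r_m * current) / tau
        if v >= v_th:
            spikes.append(t)
            v = v_reset  # reset the membrane potential after each spike
    return spikes


for current in (0.5, 1.5, 3.0):
    print(current, len(simulate_lif(current)))
```

Information is carried by spike timing and rate: a subthreshold input never fires, and a stronger input fires more often. Downstream neurons in an SNN integrate these events locally rather than receiving continuous activations.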

Figure 7.

Components of supervised (A) and real-time learning (B). In each paradigm, the robotic system is depicted within a purple shape, and the environment within an orange circle. With supervised learning, the robot’s policy is updated offline; with real-time learning, the robot–environment interaction continuously changes the robot’s policy.

5.6. Classifiers for the Identification of Intended Behavior

The identification of intended behavior is key to designing intelligent robotic systems that support physical therapy and assistive robotics. For example, in 2005, the MIT Media Lab developed LiveNet, a robust wearable system that supports health monitoring through real-time event data streaming with context classification [138]. Through behavioral classification, LiveNet was proposed to support a wide range of clinical applications. These included Parkinson’s disease monitoring (using accelerometers to detect dyskinesia, hypokinesia, and tremors) [139]; seizure detection (unlike standard EEG- and EMG-based classification, LiveNet used accelerometers to classify the motions characteristic of epileptic seizures); depression therapy (using heart rate variability, motor activity, vocal features, and movement patterns to monitor the impact of electroconvulsive therapy on depressed patients); and the quantification of social engagement (using infrared (IR) tags and voice features) [140]. LiveNet served as a multimodal, feedback-powered approach to neurorehabilitation that provided an instantaneous classification of the patient’s state and context, thus significantly reducing feedback time [140]. Behavioral classification, such as that used to monitor the progression of Parkinson’s disease, can be implemented with neural networks and tree-based classification models [139]. For example, motion patterns (e.g., extending the arm forward) can be classified from accelerometric features using SVMs, Bayesian classifiers, nearest-neighbor classifiers, and neural networks trained on those specific patterns. The accelerometric data can be acquired synchronously from distributed sensors located on various parts of the body of both healthy and motor-impaired individuals. Features can then be derived from the accelerometric data segments using a discrete Fourier transform, which provides the mean power in each frequency band.
In recent work, a neural network assessing ataxic gait achieved accuracy rates of 78.9%, 89.9%, 98.0%, and 98.5% using frequency-band power features of 201 accelerometric signal segments acquired from the feet, legs, shoulders, and head/spine, respectively [141]. By combining a neural network with a decision tree, researchers correctly classified physiological tremors with an accuracy of ~85% using acceleration and surface electromyography data [142]. Similarly, ML has been used to classify movement patterns in gymnastics [143], eye movements [144], and gait patterns during load carriage [145].
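The band-power pipeline described above can be sketched in NumPy: take the DFT of each accelerometer segment, compute the mean power per frequency band, then classify. Everything specific here is an assumption for illustration. This includes the 50 Hz sampling rate, the three bands, and the synthetic 1 Hz “voluntary” and 5 Hz “tremor” signals. A simple nearest-centroid classifier also stands in for the SVM, Bayesian, nearest-neighbor, or neural network classifiers mentioned in the text.

```python
import numpy as np

BANDS_HZ = [(0.5, 3.0), (3.0, 8.0), (8.0, 15.0)]  # hypothetical frequency bands


def band_powers(segment, fs):
    """Mean DFT power of a 1-D accelerometer segment in each frequency band."""
    segment = np.asarray(segment, dtype=float)
    segment = segment - segment.mean()  # remove the DC (e.g., gravity) component
    power = np.abs(np.fft.rfft(segment)) ** 2
    freqs = np.fft.rfftfreq(len(segment), d=1.0 / fs)
    return np.array([power[(freqs >= lo) & (freqs < hi)].mean() for lo, hi in BANDS_HZ])


def features(segment, fs):
    return np.log10(band_powers(segment, fs))  # log scale tames the dynamic range


def fit_centroids(X, labels):
    """Nearest-centroid 'training': the mean feature vector of each class."""
    return {lab: X[labels == lab].mean(axis=0) for lab in np.unique(labels)}


def predict(centroids, x):
    return min(centroids, key=lambda lab: np.linalg.norm(x - centroids[lab]))


# Synthetic 4 s segments sampled at 50 Hz
rng = np.random.default_rng(0)
fs, n = 50.0, 200
t = np.arange(n) / fs


def synth(freq_hz):
    phase = rng.uniform(0.0, 2.0 * np.pi)
    return np.sin(2.0 * np.pi * freq_hz * t + phase) + rng.normal(0.0, 0.2, n)


X = np.array([features(synth(f), fs) for f in [1.0] * 10 + [5.0] * 10])
y = np.array(["voluntary"] * 10 + ["tremor"] * 10)
centroids = fit_centroids(X, y)
print(predict(centroids, features(synth(5.0), fs)))  # tremor
```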

5.7. AI on the Edge

Smart assistive rehabilitation robotics is driven by AI algorithms, which are usually deployed on the edge (rather than on centralized data-processing computing devices). This imposes considerable space, energy, and latency (time-to-response) constraints on the computing hardware, particularly when the robot is mobile (e.g., involving wearable or wheelchair-mounted sensors). Edge computing therefore faces a tremendous challenge, since neural networks and ML models are rapidly increasing in size and complexity and are most often deployed in large data centers and servers. One way to access large-scale ML models is via the cloud, which requires only a sensor and a wireless communication channel on the edge to stream data to a remote computing service. This approach, however, is limited by latency requirements and network bandwidth [146]. Edge computing is often divided into edge training (for real-time learning) and edge inference (using pretrained AI models); a recent comprehensive review covers both [147]. The importance of edge computing is also reflected in the growing range of graphics processing unit (GPU)-equipped edge hardware [148] and dedicated software libraries [149]. Having GPUs (or other ML acceleration hardware) on the edge is important because they enable efficient neural network-driven inference and training [150]. For example, NVIDIA developed the Jetson family of embedded modules, which integrate GPUs of varying capabilities and on which various robotic [151] and vision [152] edge applications can be deployed. Real-time learning with SNNs requires dedicated (silicon neuron-based) hardware, such as Intel’s Loihi or OZ [153]. Computing at the edge also has the added advantage of more secure data processing, which is crucial in medical applications. There is an ongoing debate on the tradeoff between low latency and high data security in medical edge devices.
This topic is discussed in detail in [154].
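The latency argument for edge deployment can be made concrete with back-of-the-envelope arithmetic. The Python sketch below compares a cloud round trip (upload time + network round-trip time + server inference) against on-device inference under a fixed deadline. All numbers are hypothetical: the payload size, uplink bandwidth, round-trip time, inference times, and the 50 ms deadline were chosen only to show how bandwidth and latency enter the budget.

```python
def cloud_latency_ms(payload_kb, uplink_mbps, rtt_ms, server_infer_ms):
    """Cloud path: upload + network round trip + remote inference (milliseconds)."""
    upload_ms = payload_kb * 8.0 / uplink_mbps  # kilobits / (megabits per second) = ms
    return upload_ms + rtt_ms + server_infer_ms


def meets_deadline(latency_ms, deadline_ms):
    return latency_ms <= deadline_ms


DEADLINE_MS = 50.0  # hypothetical control-loop deadline
cloud = cloud_latency_ms(payload_kb=100, uplink_mbps=10, rtt_ms=40, server_infer_ms=5)
edge = 30.0  # hypothetical on-device inference time on an embedded accelerator
print(cloud, meets_deadline(cloud, DEADLINE_MS))  # 125.0 False
print(edge, meets_deadline(edge, DEADLINE_MS))    # 30.0 True
```

Under these assumptions, even a fast server cannot offset upload time and network round trip. Shrinking the payload (e.g., streaming extracted features instead of raw frames) or moving inference on-device is what changes the outcome.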

In rehabilitation, many applications require wearable sensors and processors. To eliminate the need for multichip on-body communication with AI-capable hardware, several specialized systems have been designed. For example, a neural processor designed for an AI-driven wearable rehabilitation system achieved a network operation latency of 5 ms with a power consumption of only 20 μW [155]. Another important piece of hardware for smart edge computing is Google’s tensor processing unit (TPU), whose Edge TPU variant is specifically designed to accelerate inference on the edge. A study showed that a TPU could efficiently infer body posture during knee injury rehabilitation [156].

5.8. Unbiased, Explainable, and Interpretable AI

AI is often regarded as a black box that cannot provide explainable, high-level reasoning about its decisions (classifications, chosen behaviors, etc.). While AI holds great promise for clinical work, it introduces a new dynamic into patient–healthcare provider interactions. Traditionally, patients discuss their condition with their physician, who makes the clinical decision after the patient gives informed consent. AI in its current form could introduce automated, often unexplainable biases into the decision-making process. To overcome these hurdles, clinicians are expected to oversee and take responsibility for AI inferences. Although the engineer who designed the AI system would, in most cases, not be present at the patient’s bedside, the clinician would still be accountable for the outcome of the AI decision process. At the very least, AI must adhere to “common norms which govern conduct”, which entail unbiased, explainable, interpretable, responsible, and transparent AI [157]. Biased AI systems have become a major concern, particularly in the medical field, and as a result, major companies such as IBM and Microsoft have made public commitments to “de-bias” their technologies [158]. Although AI largely remains a black box, building transparent AI systems has become an important goal to reach before AI can be adopted, as evidenced by the growing body of relevant literature and dedicated conferences [159]. This is particularly true in the clinical field. A survey of the algorithms and techniques developed for explainable AI in the medical field can be found in [160]. One way of increasing the interpretability and explainability of an AI model is through visualization. The SHapley Additive exPlanations (SHAP) visualization tool is a widely adopted technique [161]. Recently, SHAP was used to provide explainable AI for predicting readmission risk for patients after discharge to rehabilitation centers [162].
In the rehabilitation space, having transparent AI can also improve users’ engagement and cooperation. While the design of explainable AI in rehabilitation is still in its infancy, transparent AI is a reality in the context of home rehabilitation [163] and is used in the assessment of stroke rehabilitation exercises [164], as well as for detecting the development of neurological disorders [165] and identifying the biomechanical parameters of gait [166].
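SHAP builds on Shapley values from cooperative game theory: each feature’s attribution is its average marginal contribution to the model output over all subsets of the other features. The plain-Python sketch below computes exact Shapley values for a hypothetical three-feature risk score, replacing “absent” features with baseline values. Exact enumeration scales exponentially with the number of features, which is why practical SHAP implementations rely on approximations or model-specific algorithms. The model, inputs, and baseline here are illustrative assumptions.

```python
from itertools import combinations
from math import factorial


def shapley_values(model, x, baseline):
    """Exact Shapley values; features outside a coalition take baseline values."""
    n = len(x)

    def value(coalition):
        z = [x[i] if i in coalition else baseline[i] for i in range(n)]
        return model(z)

    phi = [0.0] * n
    for i in range(n):
        others = [j for j in range(n) if j != i]
        for size in range(n):
            for subset in combinations(others, size):
                s = set(subset)
                # Shapley weight |S|! (n - |S| - 1)! / n!
                weight = factorial(size) * factorial(n - size - 1) / factorial(n)
                phi[i] += weight * (value(s | {i}) - value(s))
    return phi


def risk_score(z):
    """Hypothetical model: two additive effects plus an interaction of z[0] and z[2]."""
    return 0.3 * z[0] + 0.5 * z[1] + 0.2 * z[0] * z[2]


phi = shapley_values(risk_score, x=[1.0, 1.0, 1.0], baseline=[0.0, 0.0, 0.0])
print([round(p, 3) for p in phi])  # [0.4, 0.5, 0.1]
```

The attributions sum to the difference between the prediction and the baseline prediction (here 1.0). The interaction effect is split equally between the two interacting features, which is what makes the explanation additive and comparable across features.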

6. Conclusions

Revisiting the challenges listed in the introduction sheds light on the current state of the art in pediatric robotic rehabilitation and points to open research directions.

- Rehabilitative care covers an extremely wide spectrum of conditions. Therefore, a preprogrammed robotic system would struggle to add real value over expert-led therapy. The high requirements expected of such robotic systems long made the transition from lab to clinic unfeasible, and the introduction of intelligent robotics into neurorehabilitation has been a topic of heated debate for several decades. A rehabilitation robot is expected to have high mechanical compliance, adaptive assistance levels, soft interactions for proprioceptive awareness, interactive (bio)feedback, and precisely controlled movement trajectories while supporting objective and quantifiable measures of performance [167]. This implies a paradox in which a rehabilitation robot needs to support standardized treatment while being adaptable and offering patient-tailored care [168]. A human healthcare provider can handle this paradox effectively, but it requires a level of agility that surpasses traditional robotics. The research highlighted above points toward real-time and reinforcement learning, as well as adaptive control, as a means of building robots that adapt themselves in real time to new conditions. These approaches have proved useful for mechanical aid, such as in diagnostic and assistive robotics, as well as for designing social robots. This point was recently highlighted as a need for precision rehabilitation, which has the potential to revolutionize clinical care, optimize function for individuals, and magnify the value of rehabilitation in healthcare [169]. Real-time learning still needs further improvement before it can be applied to high-level, behavioral, and cognitive training.

- Recent advances in the use of neuromorphic designs for adaptive robotic control show great promise in various applications, such as classical inverse kinematic calculations in joint-based systems with low [123] and high [170] degrees of freedom, as well as free-moving autonomous vehicular systems [171]. Neuromorphic control was recently implemented for the first time in a clinical rehabilitation setting, where it was used to control a wheelchair-mounted robotic arm, providing accurate, responsive control with low energy requirements and a high level of adaptability [137]. Neuromorphic systems for neurorehabilitation are still under development in research facilities, and the extent to which they might contribute to clinical applications remains to be seen.

- Neurological impairments are inherently multidimensional, encompassing physical, sensory, cognitive, and psychological aspects, which makes adequate autonomous robot-driven assessment challenging. While a one-stop robotic solution for a complete neurological assessment might be the holy grail of rehabilitation robotics, it currently seems out of reach. For now, therefore, neurological assessments should incorporate multiple robots and complementary assessment methods to comprehensively evaluate the different aspects of neurological impairment.

- Neurorehabilitation protocols vary across rehabilitation centers and patients. This challenge can be addressed by adopting user-centered, AI-driven robotic systems. Because neurorehabilitation protocols can quickly become monotonous, with exercises repeating the same cognitive and physiological tests, a robotic system can provide the patient with motivation and a sense of continuous adaptation and improvement [172]. The challenge currently lies in the adoption of new technologies in this area. Developments in unbiased and interpretable AI are crucial to allow experts and centers to rely on AI over expert-led intervention. As mentioned above, this research direction is being actively explored and remains an important open question. One of the most crucial upcoming milestones is the adoption of AI-driven systems in medical care, which involves overcoming four key challenges: regulation, interpretability, interoperability, and the need for structured data and evidence [173]. Recent developments in transparent, explainable, unbiased, and responsible AI may be able to bridge the “trust gap” between humans and machines [174,175]. The trust gap in the unique patient–clinician–robot triad was highlighted in a call for the development of design features that foster trust, encouraging the rehabilitation community to actively pursue this goal [176]. Although there are no AI-specific guidelines, the FDA has begun to clear AI software for clinical use [177]. For example, all AI-driven clinical decision support systems (CDSSs) (e.g., the diagnostic robots discussed above) must be validated for safe use and effectiveness [173]. However, because the role of intelligent robotics in rehabilitation is multidimensional, the regulatory process differs for each robotic application and should be addressed carefully.

- Specialized neurorehabilitation centers may require the patient to be medically stable, to be able to participate actively in a daily rehabilitation program, to demonstrate an ability to make progress, to have a social support system, and to be able to finance a prolonged stay at the center. Robot-assisted neurorehabilitation could substantially lower this barrier to admission by reducing the associated costs. For example, a single physiotherapist was shown to be able to supervise four robots simultaneously, which quadruples the therapist's capacity in post-stroke rehabilitation of the upper and lower limbs [178], and robot-assisted therapy was shown to cost ~35% less than the hourly physiotherapy rate [179]. The economic case for robotic rehabilitation is nevertheless complicated, since it depends on each national healthcare system’s reimbursement strategy [180], which in many cases does not fully support robotic solutions.

- The efficiency of neurorehabilitation programs is hard to evaluate. Robot-assisted diagnosis that periodically produces reliable progress reports would allow a neurorehabilitation treatment protocol to be readily evaluated. Current technologies, however, are limited to physical therapy.

- The disciplines comprising neurorehabilitation care generally require practitioners who consciously exercise emotional intelligence to provide optimal treatment. This is particularly true when the target population is young, because treatment involves gaining the trust of both parents and children while remaining sensitive to the child’s special emotional and physiological needs. While advances in AEI are impressive, they are still limited to basic social robots, and the field needs substantial improvement before it can be applied in neurorehabilitation.
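The first point in the list above, that no single robot can currently assess every impairment domain, can be made concrete with a small coverage check. The sketch below is purely illustrative: the tool names (`upper_limb_robot`, `social_robot`, `eye_tracker`) and the domains assigned to them are hypothetical assumptions, not a catalogue of real devices. It reports which domains a chosen battery leaves unassessed and uses a greedy set-cover heuristic to assemble a battery that covers all domains.

```python
from typing import Dict, List, Set

# Hypothetical impairment domains and assessment tools; the tool names and the
# domains assigned to them are illustrative assumptions, not real devices.
DOMAINS: Set[str] = {"physical", "sensory", "cognitive", "psychological"}
TOOLS: Dict[str, Set[str]] = {
    "upper_limb_robot": {"physical", "sensory"},
    "social_robot": {"cognitive", "psychological"},
    "eye_tracker": {"cognitive"},
}


def uncovered_domains(selected: List[str]) -> Set[str]:
    """Return the domains that none of the selected tools assesses."""
    covered: Set[str] = set()
    for name in selected:
        covered |= TOOLS[name]
    return DOMAINS - covered


def greedy_battery() -> List[str]:
    """Greedy set cover: keep adding the tool that covers the most uncovered domains."""
    remaining = set(DOMAINS)
    battery: List[str] = []
    while remaining:
        # Ties are broken by dictionary order, so the result is deterministic.
        best = max(TOOLS, key=lambda name: len(TOOLS[name] & remaining))
        gain = TOOLS[best] & remaining
        if not gain:  # no tool assesses the leftover domains
            break
        battery.append(best)
        remaining -= gain
    return battery


print(greedy_battery())  # ['upper_limb_robot', 'social_robot']
```

Greedy set cover is a heuristic and is not guaranteed to find the smallest battery; with a handful of tools, exhaustive search would also be feasible.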
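The list above notes that repetitive protocols become monotonous and that an adaptive robot can sustain motivation. One very simple way to adapt exercise difficulty, shown here as an assumption-laden sketch, is a 2-down/1-up staircase rule borrowed from psychophysics: difficulty rises after two consecutive successes and falls after any failure, which tends to hold the success rate near 71%. This is a deliberately simpler stand-in, not the interactive reinforcement learning discussed in the review, and the class name `Staircase` and the level bounds are hypothetical.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class Staircase:
    """Hypothetical 2-down/1-up rule for adjusting exercise difficulty.

    Difficulty goes up one level after two consecutive successes and down one
    level after any failure. Level bounds are illustrative assumptions.
    """
    level: int = 1
    min_level: int = 1
    max_level: int = 10
    streak: int = field(default=0, init=False)  # consecutive successes so far

    def update(self, success: bool) -> int:
        if success:
            self.streak += 1
            if self.streak == 2:
                self.level = min(self.level + 1, self.max_level)
                self.streak = 0
        else:
            self.level = max(self.level - 1, self.min_level)
            self.streak = 0
        return self.level


def run_session(outcomes: List[bool], start: int = 1) -> List[int]:
    """Return the difficulty level after each trial outcome."""
    stairs = Staircase(level=start)
    return [stairs.update(outcome) for outcome in outcomes]


print(run_session([True, True, True, True, False, True, True], start=3))
# [3, 4, 4, 5, 4, 4, 5]
```

A learning-based controller could replace the fixed rule, but the staircase makes the adaptation transparent to clinicians, which speaks to the interpretability concern raised above.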
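The cost argument in the list above rests on simple arithmetic: when one physiotherapist supervises several robots at once, the therapist's hourly rate is shared across patients, while each patient also incurs the robot's running cost. The sketch below encodes that reasoning under stated assumptions. The function names are hypothetical, the numbers are illustrative units rather than figures from the cited studies, and the model ignores purchase costs and reimbursement rules, which the text identifies as the real complication.

```python
def cost_per_patient_hour(therapist_rate: float, robots_per_therapist: int,
                          robot_cost_per_hour: float) -> float:
    """Therapist time shared across concurrently supervised robots, plus robot cost."""
    if robots_per_therapist < 1:
        raise ValueError("a therapist must supervise at least one robot")
    return therapist_rate / robots_per_therapist + robot_cost_per_hour


def relative_saving(therapist_rate: float, robots_per_therapist: int,
                    robot_cost_per_hour: float) -> float:
    """Fractional saving versus one-to-one therapy billed at therapist_rate."""
    robotic = cost_per_patient_hour(therapist_rate, robots_per_therapist,
                                    robot_cost_per_hour)
    return 1.0 - robotic / therapist_rate


def break_even_robot_cost(therapist_rate: float, robots_per_therapist: int) -> float:
    """Robot cost per hour at which robot-assisted therapy stops being cheaper."""
    return therapist_rate * (1.0 - 1.0 / robots_per_therapist)


# Illustrative figures in arbitrary currency units (not data from the cited studies).
print(cost_per_patient_hour(80.0, 4, 30.0))  # 50.0
print(relative_saving(80.0, 4, 30.0))        # 0.375
print(break_even_robot_cost(80.0, 4))        # 60.0
```

The break-even function shows why the supervision ratio matters: with one robot per therapist, any positive robot cost makes robotic therapy more expensive under this model.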
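The list above also suggests that periodic robot-assisted assessments could turn into progress reports that make a protocol easier to evaluate. A minimal sketch, assuming each session yields a single numeric score, is to fit an ordinary least-squares line through the scores and label the trend by its slope. The function names, the score scale, and the `min_change` threshold are hypothetical choices for illustration.

```python
from typing import Sequence, Tuple


def progress_trend(sessions: Sequence[float], scores: Sequence[float]) -> Tuple[float, float]:
    """Ordinary least-squares fit: score = slope * session + intercept."""
    n = len(scores)
    if n < 2 or n != len(sessions):
        raise ValueError("need at least two paired observations")
    mean_x = sum(sessions) / n
    mean_y = sum(scores) / n
    sxx = sum((x - mean_x) ** 2 for x in sessions)
    if sxx == 0:
        raise ValueError("sessions must not all be identical")
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(sessions, scores))
    slope = sxy / sxx
    return slope, mean_y - slope * mean_x


def progress_report(sessions: Sequence[float], scores: Sequence[float],
                    min_change: float = 0.5) -> str:
    """Label the trend; min_change (points per session) is a hypothetical threshold."""
    slope, _ = progress_trend(sessions, scores)
    if slope >= min_change:
        status = "improving"
    elif slope <= -min_change:
        status = "declining"
    else:
        status = "plateau"
    return f"slope={slope:.2f} points/session ({status})"


print(progress_report([1, 2, 3, 4, 5], [40, 42, 43, 46, 49]))
# slope=2.20 points/session (improving)
```

A linear trend is the simplest possible summary; real recovery curves often saturate, so a clinical tool would need a model and threshold validated for the specific assessment.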

To conclude, recent developments in AI, particularly in artificial emotional intelligence, interactive reinforcement learning, natural language processing, real-time learning, computer vision, and adaptive behavior, suggest that AI-driven robotics is moving closer to providing individualized, intensive care during neurorehabilitation.

Author Contributions

E.E.T. and O.E. jointly wrote and revised the review. All authors have read and agreed to the published version of the manuscript.

Funding

This work was supported by a research grant from the Open University of Israel.

Data Availability Statement

The raw data supporting the conclusions of this article will be made available by the authors upon request.

Acknowledgments

The authors would like to thank the students of the Neuro and Biomorphic Engineering Lab at the Open University of Israel for the fruitful discussions.

Conflicts of Interest

The authors declare no competing interests.

References

- Karol, R.L. Team models in neurorehabilitation: Structure, function, and culture change. NeuroRehabilitation 2014, 34, 655–669.

- Locascio, G. Cognitive Rehabilitation for Pediatric Neurological Disorders; Cambridge University Press: Cambridge, UK, 2018.

- Pratt, G. Is a Cambrian explosion coming for robotics? J. Econ. Perspect. 2015, 29, 51–60.

- Dixit, P.; Payal, M.; Goyal, N.; Dutt, V. Robotics, AI and IoT in Medical and Healthcare Applications. In AI and IoT-Based Intelligent Automation in Robotics; Wiley: Hoboken, NJ, USA, 2021; pp. 53–73.

- Greenwald, B.D.; Rigg, J.L. Neurorehabilitation in traumatic brain injury: Does it make a difference? Mt. Sinai J. Med. J. Transl. Pers. Med. 2009, 76, 182–189.

- Oberholzer, M.; Müri, R.M. Neurorehabilitation of traumatic brain injury (TBI): A clinical review. Med. Sci. 2019, 7, 47.

- Van den Broek, M.D. Why does neurorehabilitation fail? J. Head Trauma Rehabil. 2005, 20, 464–473.

- Georgiev, D.D.; Georgieva, I.; Gong, Z.; Nanjappan, V.; Georgiev, G.V. Virtual reality for neurorehabilitation and cognitive enhancement. Brain Sci. 2021, 11, 221.

- Esfahlani, S.S.; Butt, J.; Shirvani, H. Fusion of artificial intelligence in neuro-rehabilitation video games. IEEE Access 2019, 7, 102617–102627.

- Yang, J.; Zhao, Z.; Du, C.; Wang, W.; Peng, Q.; Qiu, J.; Wang, G. The realization of robotic neurorehabilitation in clinical: Use of computational intelligence and future prospects analysis. Expert Rev. Med. Devices 2020, 17, 1311–1322.

- Liberati, A.; Altman, D.G.; Tetzlaff, J.; Mulrow, C.; Gøtzsche, P.C.; Ioannidis, J.P.; Clarke, M.; Devereaux, P.J.; Kleijnen, J.; Moher, D. The PRISMA statement for reporting systematic reviews and meta-analyses of studies that evaluate health care interventions: Explanation and elaboration. J. Clin. Epidemiol. 2009, 62, e1–e34.

- Russell, S.; Norvig, P. Artificial Intelligence: A Modern Approach; Pearson: London, UK, 2020.

- Kang, J.; Han, X.; Song, J.; Niu, Z.; Li, X. The identification of children with autism spectrum disorder by SVM approach on EEG and eye-tracking data. Comput. Biol. Med. 2020, 120, 103722.

- Zhao, Z.; Anand, R.; Wang, M. Maximum relevance and minimum redundancy feature selection methods for a marketing machine learning platform. In Proceedings of the IEEE International Conference on Data Science and Advanced Analytics, Washington, DC, USA, 5–8 October 2019.

- Noble, W.S. What is a support vector machine? Nat. Biotechnol. 2006, 24, 1565–1567.

- Ceseracciu, E.; Reggiani, M.; Sawacha, Z.; Sartori, M.; Spolaor, F.; Cobelli, C.; Pagello, E. SVM classification of locomotion modes using surface electromyography for applications in rehabilitation robotics. In Proceedings of the 19th International Symposium in Robot and Human Interactive Communication, Viareggio, Italy, 13–15 September 2010.

- Hamaguchi, T.; Saito, T.; Suzuki, M.; Ishioka, T.; Tomisawa, Y.; Nakaya, N.; Abo, M. Support vector machine-based classifier for the assessment of finger movement of stroke patients undergoing rehabilitation. J. Med. Biol. Eng. 2020, 40, 91–100.

- Rovini, E.; Fiorini, L.; Esposito, D.; Maremmani, C.; Cavallo, F. Fine motor assessment with unsupervised learning for personalized rehabilitation in Parkinson disease. In Proceedings of the 16th International Conference on Rehabilitation Robotics (ICORR), Toronto, ON, Canada, 24–28 June 2019.

- Garcia, F.; Rachelson, E. Markov decision processes. In Markov Decision Processes in Artificial Intelligence; Wiley Online Library: Hoboken, NJ, USA, 2013; pp. 1–38.

- Jagodnik, K.M.; Thomas, P.S.; Bogert, A.J.V.D.; Branicky, M.S.; Kirsch, R.F. Training an actor-critic reinforcement learning controller for arm movement using human-generated rewards. IEEE Trans. Neural Syst. Rehabil. Eng. 2017, 25, 1892–1905.

- Liao, Y.; Vakanski, A.; Xian, M. A deep learning framework for assessing physical rehabilitation exercises. IEEE Trans. Neural Syst. Rehabil. Eng. 2020, 28, 468–477.

- Ji, S.; Xu, W.; Yang, M.; Yu, K. 3D convolutional neural networks for human action recognition. IEEE Trans. Pattern Anal. Mach. Intell. 2012, 35, 221–231.

- Duan, N.; Liu, L.-Z.; Yu, X.-J.; Li, Q.; Yeh, S.-C. Classification of multichannel surface-electromyography signals based on convolutional neural networks. J. Ind. Inf. Integr. 2019, 15, 201–206.

- Huang, K.-Y.; Wu, C.-H.; Hong, Q.-B.; Su, M.-H.; Chen, Y.-H. Speech emotion recognition using deep neural network considering verbal and nonverbal speech sounds. In Proceedings of the IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Brighton, UK, 12–17 May 2019.

- Jain, A.K.; Mao, J.; Mohiuddin, K.M. Artificial neural networks: A tutorial. Computer 1996, 29, 31–44.

- Yang, G.R.; Wang, X.-J. Artificial neural networks for neuroscientists: A primer. arXiv 2020, arXiv:2006.01001.

- Chorowski, J.K.; Bahdanau, D.; Serdyuk, D.; Cho, K.; Bengio, Y. Attention-based models for speech recognition. In Proceedings of the Advances in Neural Information Processing Systems, Montreal, QC, Canada, 7–12 December 2015.

- Baclic, O.; Tunis, M.; Young, K.; Doan, C.; Swerdfeger, H.; Schonfeld, J. Artificial intelligence in public health: Challenges and opportunities for public health made possible by advances in natural language processing. Can. Commun. Dis. Rep. 2020, 46, 161.

- Pan, S.J.; Yang, Q. A survey on transfer learning. IEEE Trans. Knowl. Data Eng. 2009, 22, 1345–1359.

- Moret-Tatay, C.; Iborra-Marmolejo, I.; Jorques-Infante, M.J.; Esteve-Rodrigo, J.V.; Schwanke, C.H.; Irigaray, T.Q. Can Virtual Assistants Perform Cognitive Assessment in Older Adults? A Review. Medicina 2021, 57, 1310.

- Zbytniewska, M.; Kanzler, C.M.; Jordan, L.; Salzmann, C.; Liepert, J.; Lambercy, O.; Gassert, R. Reliable and valid robot-assisted assessments of hand proprioceptive, motor and sensorimotor impairments after stroke. J. NeuroEng. Rehabil. 2021, 18, 115.

- Colombo, R. Performance measures in robot assisted assessment of sensorimotor functions. In Rehabilitation Robotics; Academic Press: Cambridge, MA, USA, 2018; pp. 101–115.

- Hussain, S.; Jamwal, P.K.; Vliet, P.V.; Brown, N.A. Robot Assisted Ankle Neuro-Rehabilitation: State of the art and Future Challenges. Expert Rev. Neurother. 2021, 21, 111–121.

- Sheng, B.; Tang, L.; Moosman, O.M.; Deng, C.; Xie, S.; Zhang, Y. Development of a biological signal-based evaluator for robot-assisted upper-limb rehabilitation: A pilot study. Australas. Phys. Eng. Sci. Med. 2019, 42, 789–801.

- Zhang, M.; McDaid, A.; Zhang, S.; Zhang, Y.; Xie, S.Q. Automated robot-assisted assessment for wrist active ranges of motion. Med. Eng. Phys. 2019, 71, 98–101.

- Saleh, M.A.; Hanapiah, F.A.; Hashim, H. Robot applications for autism: A comprehensive review. Disabil. Rehabil. Assist. Technol. 2021, 16, 580–602.

- Ramirez-Duque, A.A.; Frizera-Neto, A.; Bastos, T.F. Robot-assisted autism spectrum disorder diagnostic based on artificial reasoning. J. Intell. Robot. Syst. 2019, 96, 267–281.

- Yaneva, V.; Eraslan, S.; Yesilada, Y.; Mitkov, R. Detecting high-functioning autism in adults using eye tracking and machine learning. IEEE Trans. Neural Syst. Rehabil. Eng. 2020, 28, 1254–1261.

- Illavarason, P.; Renjit, J.A.; Kumar, P.M. Medical diagnosis of cerebral palsy rehabilitation using eye images in machine learning techniques. J. Med. Syst. 2019, 43, 278.

- Fernandes, T. Independent mobility for children with disabilities. Int. J. Ther. Rehabil. 2006, 13, 329–333.

- Colombo, R.; Sterpi, I.; Mazzone, A.; Delconte, C.; Pisano, F. Taking a lesson from patients’ recovery strategies to optimize training during robot-aided rehabilitation. IEEE Trans. Neural Syst. Rehabil. Eng. 2012, 20, 276–285.

- Prosser, L.A.; Ohlrich, L.B.; Curatalo, L.A.; Alter, K.E.; Damiano, D.L. Feasibility and preliminary effectiveness of a novel mobility training intervention in infants and toddlers with cerebral palsy. Dev. Neurorehabilit. 2012, 15, 259–266.

- Ghazi, M.A.; Nash, M.D.; Fagg, A.H.; Ding, L.; Kolobe, T.H.; Miller, D.P. Novel assistive device for teaching crawling skills to infants. In Field and Service Robotics; Springer: Cham, Switzerland, 2016; pp. 593–605.

- Conner, B.C.; Luque, J.; Lerner, Z.F. Adaptive ankle resistance from a wearable robotic device to improve muscle recruitment in cerebral palsy. Ann. Biomed. Eng. 2020, 48, 1309–1321.

- Soh, H.; Demiris, Y. When and how to help: An iterative probabilistic model for learning assistance by demonstration. In Proceedings of the IEEE/RSJ International Conference on Intelligent Robots and Systems, Tokyo, Japan, 3–7 November 2013.

- Li, W.-J.; Hsieh, C.-Y.; Lin, L.-F.; Chu, W.-C. Hand gesture recognition for post-stroke rehabilitation using leap motion. In Proceedings of the 2017 International Conference on Applied System Innovation (ICASI), Sapporo, Japan, 13–17 May 2017.

- Giorgino, T.; Lorussi, F.; Rossi, D.D.; Quaglini, S. Posture classification via wearable strain sensors for neurological rehabilitation. In Proceedings of the International Conference of the IEEE Engineering in Medicine and Biology Society, New York, NY, USA, 30 August–3 September 2006.

- Taborri, J.; Rossi, S.; Palermo, E.; Cappa, P. A HMM distributed classifier to control robotic knee module of an active orthosis. In Proceedings of the IEEE International Conference on Rehabilitation Robotics, Singapore, 11–14 August 2015.

- Argall, B.D. Autonomy in rehabilitation robotics: An intersection. Annu. Rev. Control Robot. Auton. Syst. 2018, 1, 441–463.