Automated Grapevine Cultivar Identification via Leaf Imaging and Deep Convolutional Neural Networks: A Proof-of-Concept Study Employing Primary Iranian Varieties

Abstract


- A CNN approach for the automatic identification of grapevine cultivars from leaf images.


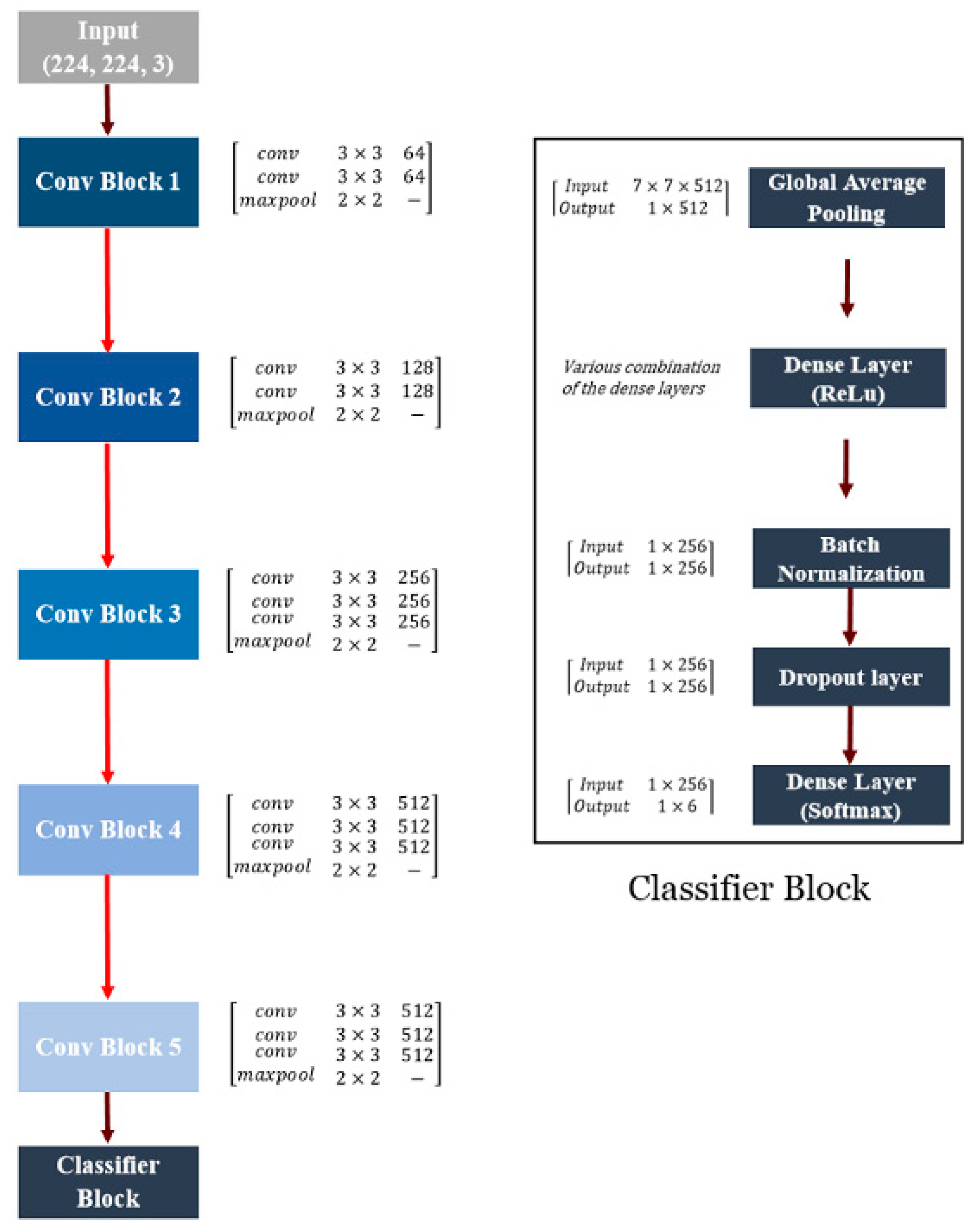

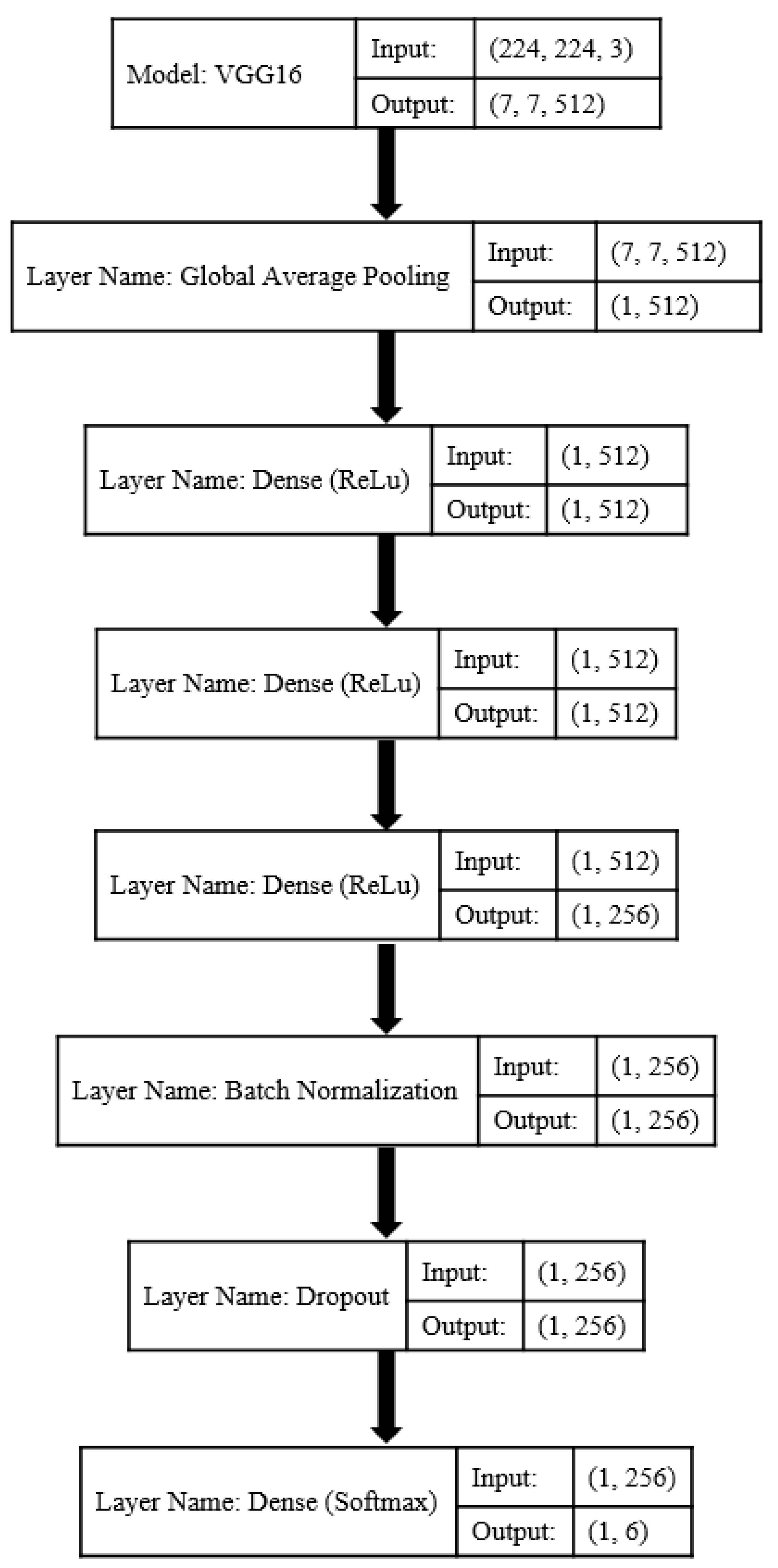

- The VGG16 architecture was modified by adding four layers.


- An average classification accuracy of over 99% was obtained.


- Rapid, low-cost and high-throughput grapevine cultivar identification was feasible.


- The obtained tool complements existing methods, assisting cultivar identification services.

1. Introduction

2. Materials and Methods

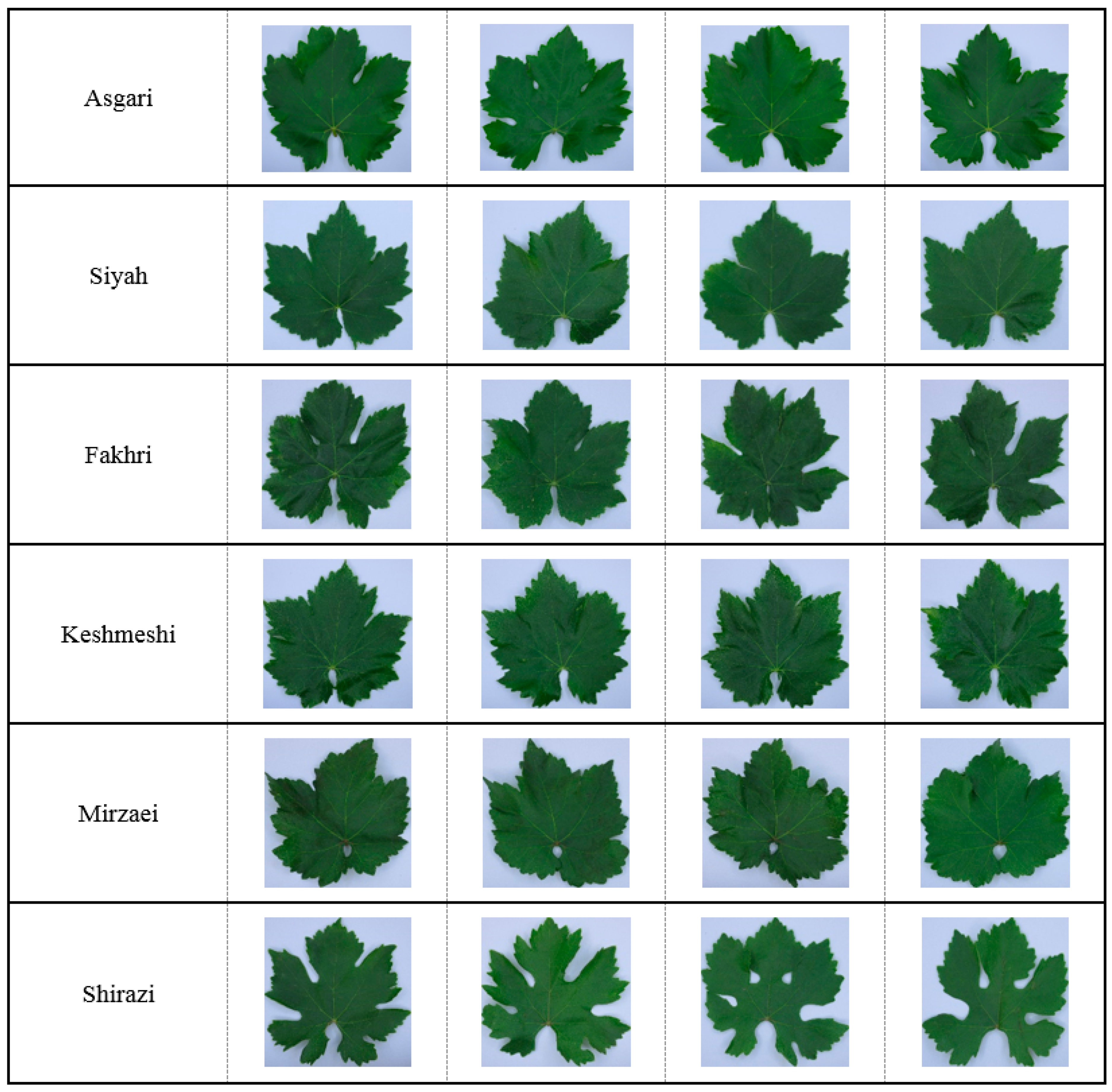

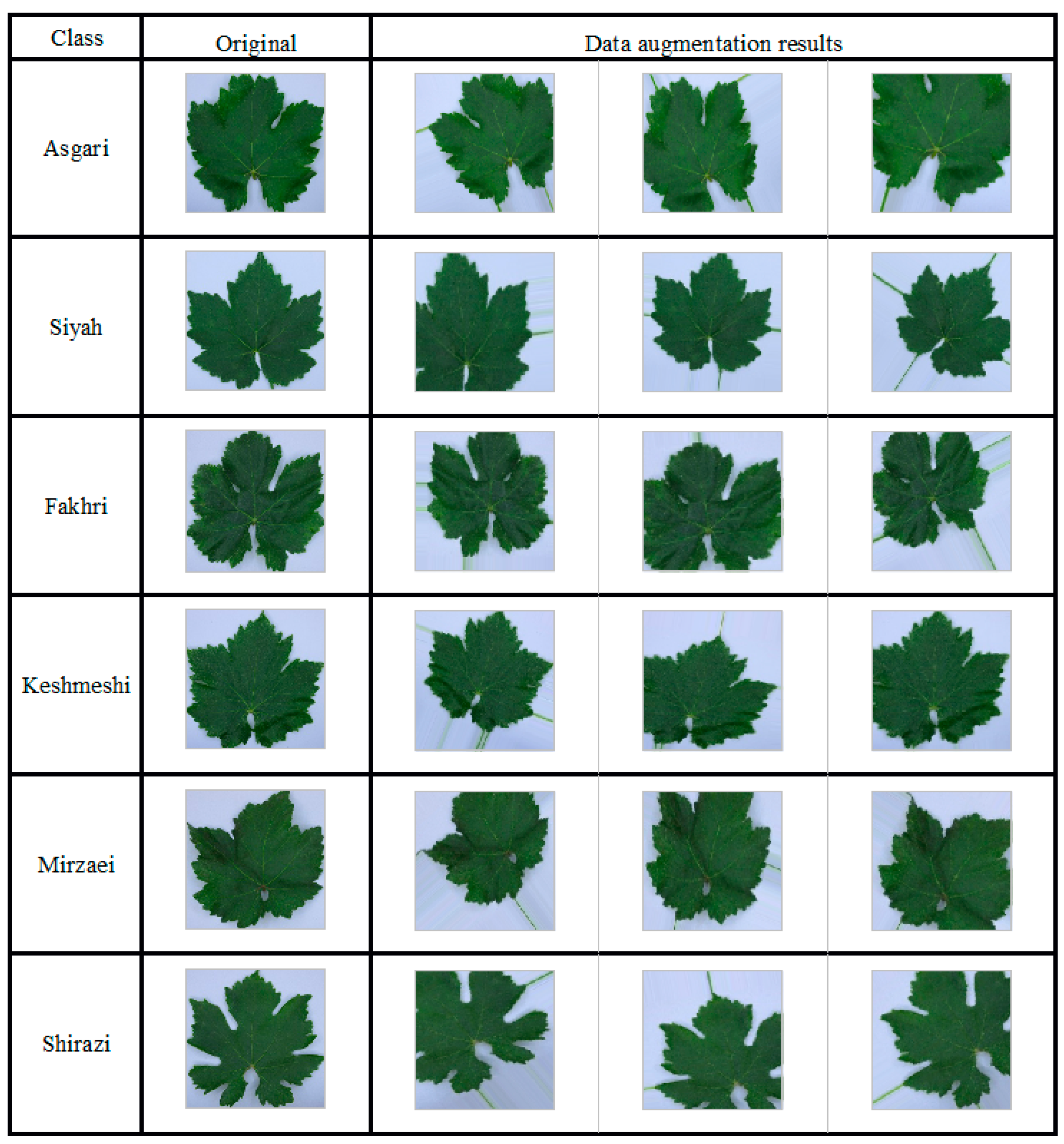

2.1. Plant Material and Sampling Protocol

2.2. Image Acquisition

2.3. Deep Convolutional Neural Network-Based Model

2.3.1. VGGNet-Based CNN Model

2.3.2. CNN Model Training

2.3.3. k-Fold Cross-Validation and Assessment of Classification Accuracy

3. Results and Discussion

3.1. Evaluation of Quantitative Classification

3.2. Qualitative Analysis

4. Conclusions

Supplementary Materials

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| ANN | artificial neural network |
| AUC | area under the curve |
| CCD | charge-coupled device |
| CNN | convolutional neural network |
| IPGRI | International Plant Genetic Resources Institute |
| OIV | International Organisation of Vine and Wine |
| ReLU | rectified linear unit |
| RGB | red, green and blue |
| RIGR | Research Institute for Grapes and Raisin |
| UPOV | International Union for the Protection of New Varieties of Plants |
| VGGNet | Visual Geometry Group network |
| VIVC | Vitis International Variety Catalogue |


| Model | Global Average Pooling | Dense (ReLU) | Batch Normalization | Dropout | Dense (Softmax) |
|---|---|---|---|---|---|
| 1 | ✓ | [256] | ✓ | ✓ | [6] |
| 2 | ✓ | [512] | ✓ | ✓ | [6] |
| 3 | ✓ | [512, 512] | ✓ | ✓ | [6] |
| 4 | ✓ | [512, 512, 256] | ✓ | ✓ | [6] |
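The layer table above lists the classification heads stacked on the VGG16 convolutional base. The NumPy sketch below illustrates an inference-time forward pass through such a head. It assumes a 7 × 7 × 512 VGG16 feature map (the size VGG16 produces for 224 × 224 inputs), one batch-normalization and dropout stage after each hidden dense layer, a batch-norm epsilon of 1e-3, and random placeholder weights; the function names `init_head` and `head_forward` are hypothetical, and none of this is the authors' trained model.

```python
import numpy as np

def relu(x):
    return np.maximum(x, 0.0)

def softmax(z):
    # Subtract the row maximum for numerical stability.
    z = z - z.max(axis=1, keepdims=True)
    e = np.exp(z)
    return e / e.sum(axis=1, keepdims=True)

def init_head(hidden_units, n_in=512, n_classes=6, seed=0):
    """Placeholder parameters for a head like those in the table (hypothetical)."""
    rng = np.random.default_rng(seed)
    layers = []
    for units in hidden_units:
        layers.append({
            "W": rng.normal(0.0, np.sqrt(2.0 / n_in), size=(n_in, units)),
            "b": np.zeros(units),
            # Batch-norm moving statistics and affine parameters (identity here).
            "mean": np.zeros(units), "var": np.ones(units),
            "gamma": np.ones(units), "beta": np.zeros(units),
        })
        n_in = units
    out = {"W": rng.normal(0.0, np.sqrt(1.0 / n_in), size=(n_in, n_classes)),
           "b": np.zeros(n_classes)}
    return layers, out

def head_forward(feature_maps, layers, out, eps=1e-3):
    """GAP -> [Dense+ReLU -> BatchNorm -> Dropout]* -> Dense+softmax, inference mode."""
    # Global average pooling over the spatial axes: (N, H, W, C) -> (N, C).
    x = feature_maps.mean(axis=(1, 2))
    for p in layers:
        x = relu(x @ p["W"] + p["b"])
        # At inference, batch normalization uses the stored moving statistics.
        x = p["gamma"] * (x - p["mean"]) / np.sqrt(p["var"] + eps) + p["beta"]
        # Dropout is the identity at inference time, so nothing is applied here.
    return softmax(x @ out["W"] + out["b"])

# Model 4: hidden dense widths [512, 512, 256] and 6 output classes.
layers, out = init_head([512, 512, 256])
features = np.random.default_rng(1).random((2, 7, 7, 512))  # stand-in VGG16 output
probs = head_forward(features, layers, out)
print(probs.shape)  # (2, 6)
```

Passing `[256]`, `[512]` or `[512, 512]` to `init_head` gives the layer stacks of Models 1 to 3; each row of `probs` is a probability distribution over the six cultivars.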

Values are average ± STD.

| Model | Training accuracy | Training loss | Validation accuracy | Validation loss | Test accuracy | Test loss |
|---|---|---|---|---|---|---|
| 1 | 0.84 ± 0.05 | 0.49 ± 0.09 | 0.9 ± 0.05 | 0.43 ± 0.18 | 0.93 ± 0.04 | 0.25 ± 0.08 |
| 2 | 0.87 ± 0.04 | 0.41 ± 0.08 | 0.91 ± 0.05 | 0.33 ± 0.15 | 0.98 ± 0.02 | 0.17 ± 0.08 |
| 3 | 0.91 ± 0.03 | 0.3 ± 0.11 | 0.93 ± 0.05 | 0.26 ± 0.17 | 0.97 ± 0.03 | 0.12 ± 0.08 |
| 4 | 0.93 ± 0.02 | 0.26 ± 0.07 | 0.95 ± 0.04 | 0.18 ± 0.12 | 0.97 ± 0.02 | 0.12 ± 0.06 |
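The training, validation and test figures above are averages and standard deviations over repeated data splits (see Section 2.3.3, k-fold cross-validation). The sketch below shows one way to produce such a summary: a stratified k-fold split that keeps the six cultivar classes balanced in every fold, plus a helper that reports the mean and the sample standard deviation. The number of folds, the use of stratification, the n − 1 denominator, the image counts, the per-fold accuracies and the function names `stratified_kfold` and `summarize` are all illustrative assumptions, not details taken from the study.

```python
import numpy as np

def stratified_kfold(labels, k=5, seed=0):
    """Yield (train_idx, test_idx) pairs; each class is spread evenly over k folds."""
    labels = np.asarray(labels)
    rng = np.random.default_rng(seed)
    fold_of = np.empty(len(labels), dtype=int)
    for cls in np.unique(labels):
        idx = np.flatnonzero(labels == cls)
        rng.shuffle(idx)
        # Deal this class's shuffled samples round-robin into the k folds.
        fold_of[idx] = np.arange(len(idx)) % k
    for f in range(k):
        yield np.flatnonzero(fold_of != f), np.flatnonzero(fold_of == f)

def summarize(per_fold_values):
    """Mean and sample standard deviation (n - 1 denominator) across folds."""
    v = np.asarray(per_fold_values, dtype=float)
    return v.mean(), v.std(ddof=1)

# Hypothetical dataset: 6 cultivars x 50 leaf images each.
labels = np.repeat(np.arange(6), 50)
splits = list(stratified_kfold(labels, k=5))
for train_idx, test_idx in splits:
    # Training size, test size, and test images per class in this fold.
    print(len(train_idx), len(test_idx), np.bincount(labels[test_idx], minlength=6))

# Hypothetical per-fold test accuracies for one model.
mean, sd = summarize([0.95, 0.97, 1.00, 0.93, 0.98])
print(f"{mean:.2f} ± {sd:.2f}")
```

Each image lands in exactly one test fold, so every sample is evaluated once; a library routine such as scikit-learn's `StratifiedKFold` could replace the hand-written splitter.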

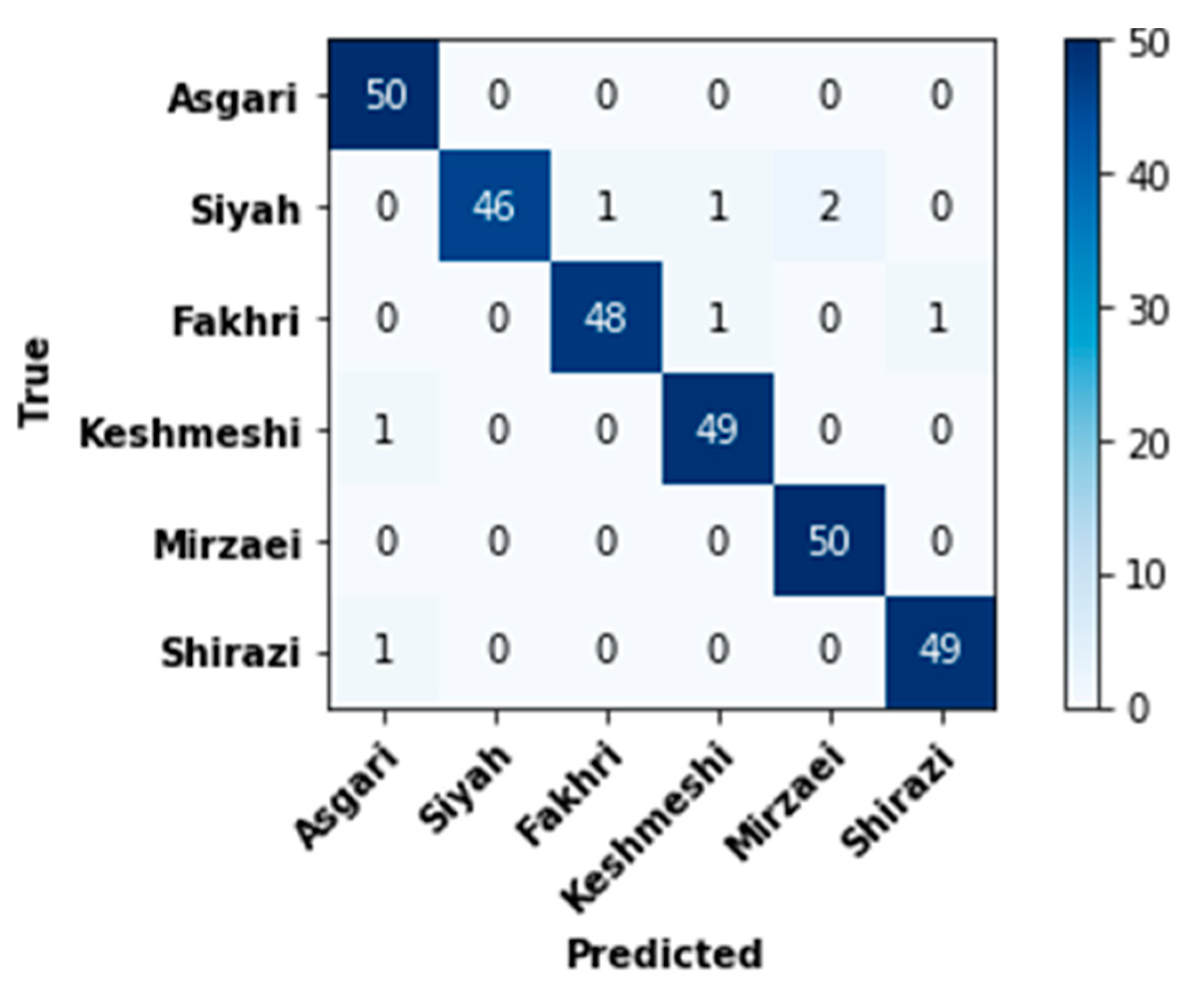

Values are average ± STD.

| Class | Accuracy (%) | Precision (%) | Sensitivity (%) | Specificity (%) | AUC (%) |
|---|---|---|---|---|---|
| Asgari | 99.34 ± 1.49 | 96.67 ± 7.45 | 100 ± 0 | 99.2 ± 1.79 | 99.6 ± 0.89 |
| Siyah | 98.67 ± 2.17 | 100 ± 0 | 92 ± 13.03 | 100 ± 0 | 96 ± 6.52 |
| Fakhri | 99 ± 0.91 | 98.18 ± 4.06 | 96 ± 5.47 | 99.6 ± 0.89 | 97.8 ± 2.58 |
| Keshmeshi | 99 ± 0.91 | 96.36 ± 4.98 | 98 ± 4.47 | 99 ± 1.09 | 98.6 ± 2.07 |
| Mirzaie | 99.34 ± 0.91 | 96.36 ± 4.98 | 100 ± 0 | 99.2 ± 1.09 | 99.6 ± 0.54 |
| Shirazi | 99.34 ± 0.91 | 98.18 ± 4.06 | 98 ± 4.47 | 99.6 ± 0.89 | 98.8 ± 2.17 |
| Average per class | 99.11 ± 0.74 | 97.62 ± 1.96 | 97.33 ± 2.23 | 99.47 ± 0.44 | 98.4 ± 1.34 |
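The per-class accuracy, precision, sensitivity, specificity and AUC above are one-vs-rest quantities. The sketch below derives them from a multi-class confusion matrix. The source does not state how AUC was computed; this sketch assumes hard class predictions, for which the ROC curve has a single operating point and its area reduces to (sensitivity + specificity) / 2 (in every row of the table the reported AUC is approximately this mean). The confusion matrix is hypothetical and `one_vs_rest_metrics` is an illustrative name.

```python
import numpy as np

def one_vs_rest_metrics(cm):
    """Per-class metrics (in %) from a confusion matrix indexed cm[true, predicted]."""
    cm = np.asarray(cm, dtype=float)
    total = cm.sum()
    tp = np.diag(cm)
    fn = cm.sum(axis=1) - tp  # samples of the class predicted as another class
    fp = cm.sum(axis=0) - tp  # samples of other classes predicted as this class
    tn = total - tp - fn - fp
    sensitivity = tp / (tp + fn)
    specificity = tn / (tn + fp)
    return {
        "accuracy": 100 * (tp + tn) / total,
        # Precision is NaN (with a warning) for a class that is never predicted.
        "precision": 100 * tp / (tp + fp),
        "sensitivity": 100 * sensitivity,
        "specificity": 100 * specificity,
        # Single ROC operating point: AUC = (sensitivity + specificity) / 2.
        "auc": 100 * (sensitivity + specificity) / 2,
    }

classes = ["Asgari", "Siyah", "Fakhri", "Keshmeshi", "Mirzaie", "Shirazi"]
# Hypothetical single-fold confusion matrix with 10 test images per class.
cm = np.array([
    [10, 0, 0, 0, 0, 0],
    [1, 9, 0, 0, 0, 0],
    [0, 0, 10, 0, 0, 0],
    [0, 0, 0, 10, 0, 0],
    [0, 0, 0, 0, 10, 0],
    [0, 0, 0, 0, 0, 10],
])
metrics = one_vs_rest_metrics(cm)
for i, name in enumerate(classes):
    print(name, {k: round(float(v[i]), 2) for k, v in metrics.items()})
```

Because one-vs-rest accuracy also counts true negatives, it tends to exceed the overall multi-class accuracy: in this toy matrix the overall accuracy is 59/60 (about 98.33%), while the mean per-class accuracy is about 99.44%.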

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Nasiri, A.; Taheri-Garavand, A.; Fanourakis, D.; Zhang, Y.-D.; Nikoloudakis, N. Automated Grapevine Cultivar Identification via Leaf Imaging and Deep Convolutional Neural Networks: A Proof-of-Concept Study Employing Primary Iranian Varieties. Plants 2021, 10, 1628. https://doi.org/10.3390/plants10081628
