Abstract

Face recognition and identification are very important applications in machine learning. Due to the increasing amount of available data, traditional approaches based on matricization and matrix PCA methods can be difficult to implement. Moreover, tensorial approaches are a natural choice, due to the very structure of the databases, for example in the case of color images. Nevertheless, even though various authors have proposed factorization strategies for tensors, the size of the considered tensors can pose serious issues. Indeed, the most demanding part of the computational effort in recognition or identification problems resides in the training process. When only a few features are needed to construct the projection space, there is no need to compute an SVD of the whole data. Two versions of the tensor Golub–Kahan algorithm are considered in this manuscript, as an alternative to the classical use of the tensor SVD, which is based on truncation strategies. In this paper, we consider the Tensor Tubal Golub–Kahan Principal Component Analysis method, whose purpose is to extract the main features of images using the tensor singular value decomposition (SVD) based on the tensor cosine product, which uses the discrete cosine transform. This approach is applied to classification and face recognition, and numerical tests show its effectiveness.

1. Introduction

An important challenge in the last few years has been the extraction of the main information from large datasets, measurements and observations that appear in signal and hyperspectral image processing, data mining and machine learning. Due to the increasing volume of data required by these applications, approximate low-rank matrix and tensor factorizations play a fundamental role in extracting latent components. The idea is to replace the original large-scale data, which may be noisy and ill conditioned, by a lower dimensional approximate representation obtained via a matrix or multi-way array factorization or decomposition. Principal Component Analysis is a widely used technique for image recognition or identification. In the matrix case, it involves the computation of eigenvalue or singular value decompositions. In the tensor case, even though various factorization techniques have been developed over the last decades (high-order SVD (HOSVD), Candecomp–Parafac (CP) and Tucker decomposition), the recent tensor SVDs (t-SVD and c-SVD), based on the use of the tensor t-product or c-product, offer a matrix-like framework for third-order tensors; see [1,2,3,4,5,6,7,8,9,10,11,12,13,14,15] for more details on recent work related to tensors and applications. In the present work, we consider third-order tensors, which can be defined as three-dimensional arrays of data. As our study is based on the cosine transform product, we limit this work to third-order tensors.

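For a given 3-mode tensor $\mathcal{X} \in \mathbb{R}^{n_1 \times n_2 \times n_3}$, we denote by $x_{i_1,i_2,i_3}$ the element $(i_1,i_2,i_3)$ of the tensor $\mathcal{X}$. A fiber is defined by fixing all the indexes except one. An element $c \in \mathbb{R}^{1 \times 1 \times n}$ is called a tubal-scalar or simply tube of length n. For more details refer to [1,2].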

2. Definitions and Notations

2.1. Discrete Cosine Transformation

In this subsection we recall some definitions and properties of the discrete cosine transformation and the c-product of tensors. In recent years, many advances have been made in order to establish a rigorous framework enabling the treatment of problems for which the data is stored in three-way tensors without having to resort to matricization [1,8]. One of the most important features of such a framework is the definition of a tensor-tensor product such as the t-product, based on the Fast Fourier Transform. For applications such as image processing, the tensor-tensor product based on the Discrete Cosine Transformation (DCT) has been shown to be an interesting alternative to the FFT. We now give some basic facts on the DCT and its associated tensor-tensor product. The DCT of a vector $v \in \mathbb{R}^{n}$ is defined by

$$\tilde{v} = C_n v \in \mathbb{R}^{n},$$

where $C_n$ is the $n \times n$ discrete cosine transform matrix with entries

$$(C_n)_{ij} = \sqrt{\frac{2-\delta_{i1}}{n}}\,\cos\left(\frac{(i-1)(2j-1)\pi}{2n}\right), \quad 1 \le i, j \le n,$$

where $\delta_{ij}$ is the Kronecker delta; see p. 150 in [16] for more details. It is known that the matrix $C_n$ is orthogonal, i.e., $C_n^T C_n = C_n C_n^T = I_n$; see [17]. Furthermore, for any vector $v \in \mathbb{R}^{n}$, the matrix vector multiplication $C_n v$ can be computed in $O(n \log n)$ operations. Moreover, the authors of [17] have shown that a certain class of Toeplitz-plus-Hankel matrices can be diagonalized by $C_n$. More precisely, we have

where

and is the diagonal matrix whose i-th diagonal element is .
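The two properties recalled above (orthogonality of the DCT matrix and the existence of a fast transform) can be checked numerically. The following is a minimal sketch, assuming the orthonormal DCT-II convention, in which entry $(i,j)$ of the matrix is $\sqrt{(2-\delta_{i1})/n}\,\cos((i-1)(2j-1)\pi/(2n))$; the helper name `dct_matrix` is hypothetical, and `scipy.fft.dct` with `norm="ortho"` is assumed to implement the same convention.

```python
import numpy as np
from scipy.fft import dct

def dct_matrix(n):
    """Dense orthonormal DCT-II matrix (hypothetical helper for illustration)."""
    i = np.arange(n).reshape(-1, 1)   # zero-based row index, i.e. (i - 1) in the formula
    j = np.arange(n).reshape(1, -1)   # zero-based column index, so 2j + 1 equals (2j - 1) one-based
    C = np.sqrt(2.0 / n) * np.cos(i * (2 * j + 1) * np.pi / (2 * n))
    C[0, :] = np.sqrt(1.0 / n)        # first row uses the factor sqrt(1/n)
    return C

n = 8
C = dct_matrix(n)
v = np.random.default_rng(0).standard_normal(n)

# Orthogonality: C^T C should be the identity up to rounding.
orthogonality_error = np.linalg.norm(C.T @ C - np.eye(n))
# The dense product C v should agree with the fast transform.
fast_vs_dense_error = np.linalg.norm(C @ v - dct(v, type=2, norm="ortho"))
```

The dense matrix is only useful for checking; in practice the fast transform is applied directly.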

2.2. Definitions and Properties of the Cosine Product

In this subsection, we briefly review some concepts and notations, which play a central role for the elaboration of the tensor global iterative methods based on the c-product; see [18] for more details on the c-product.

Let be a real valued third-order tensor, then the operations mat and its inverse ten are defined by

and the inverse operation denoted by ten is simply defined by

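Let us denote by $\widetilde{\mathcal{A}}$ the tensor obtained by applying the DCT on all the tubes of the tensor $\mathcal{A}$. This operation and its inverse are implemented in Matlab by the commands dct and idct as

$$\widetilde{\mathcal{A}} = \mathtt{dct}(\mathcal{A},[\,],3), \qquad \mathtt{idct}(\widetilde{\mathcal{A}},[\,],3) = \mathcal{A},$$

where idct denotes the Inverse Discrete Cosine Transform.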

Remark 1.

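Notice that the tensor $\widetilde{\mathcal{A}}$ can be computed by using the 3-mode product defined in [2] as follows:

$$\widetilde{\mathcal{A}} = \mathcal{A} \times_3 M,$$

where M is the $n_3 \times n_3$ invertible matrix given by

$$M = W^{-1} C_{n_3} (I + Z),$$

where $C_{n_3}$ denotes the $n_3 \times n_3$ Discrete Cosine Transform (DCT) matrix, $W = \mathrm{diag}(C_{n_3}(:,1))$ is the diagonal matrix made of the first column of the DCT matrix, Z is the $n_3 \times n_3$ circulant upshift matrix, which can be computed in MATLAB using $Z = \mathtt{diag}(\mathtt{ones}(n_3-1,1),1)$, and I is the $n_3 \times n_3$ identity matrix; see [18] for more details.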

Let be the matrix

where the matrices ’s are the frontal slices of the tensor . The block matrix can also be block diagonalized by using the DCT matrix as follows

Definition 1.

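The c-product of two tensors $\mathcal{A} \in \mathbb{R}^{n_1 \times n_2 \times n_3}$ and $\mathcal{B} \in \mathbb{R}^{n_2 \times m \times n_3}$ is the $n_1 \times m \times n_3$ tensor defined by:

$$\mathcal{A} \star_c \mathcal{B} = \mathrm{ten}\left(\mathrm{mat}(\mathcal{A})\,\mathrm{mat}(\mathcal{B})\right).$$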

Notice that from Equation (3), we can show that the product is equivalent to . Algorithm 1 allows us to compute, in an efficient way, the c-product of the tensors and , see [18].

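| Algorithm 1 Computing the c-product. |

| Inputs: $\mathcal{A} \in \mathbb{R}^{n_1 \times n_2 \times n_3}$ and $\mathcal{B} \in \mathbb{R}^{n_2 \times m \times n_3}$ |

| Output: $\mathcal{C} = \mathcal{A} \star_c \mathcal{B} \in \mathbb{R}^{n_1 \times m \times n_3}$ |

| 1. Compute $\widetilde{\mathcal{A}} = \mathtt{dct}(\mathcal{A},[\,],3)$ and $\widetilde{\mathcal{B}} = \mathtt{dct}(\mathcal{B},[\,],3)$. |

| 2. Compute each frontal slice of $\widetilde{\mathcal{C}}$ by $\widetilde{C}^{(i)} = \widetilde{A}^{(i)} \widetilde{B}^{(i)}$, $i = 1, \ldots, n_3$. |

| 3. Compute $\mathcal{C} = \mathtt{idct}(\widetilde{\mathcal{C}},[\,],3)$. |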
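The three steps of Algorithm 1 can be sketched in a few lines. This is an illustrative sketch rather than the authors' code: it assumes that the transform applied to the tubes is the orthonormal DCT-II provided by `scipy.fft.dct` with `norm="ortho"`, and the helper names `c_product` and `identity_tensor` are hypothetical.

```python
import numpy as np
from scipy.fft import dct, idct

def c_product(A, B):
    """c-product of A (n1 x n2 x n3) and B (n2 x m x n3), following Algorithm 1."""
    # Step 1: apply the DCT along the third mode (the tubes).
    A_hat = dct(A, type=2, norm="ortho", axis=2)
    B_hat = dct(B, type=2, norm="ortho", axis=2)
    # Step 2: multiply matching frontal slices in the transform domain.
    C_hat = np.einsum("ijk,jlk->ilk", A_hat, B_hat)
    # Step 3: return to the original domain with the inverse DCT.
    return idct(C_hat, type=2, norm="ortho", axis=2)

def identity_tensor(n, n3):
    """Tensor whose transformed frontal slices are all the n x n identity matrix."""
    I_hat = np.repeat(np.eye(n)[:, :, None], n3, axis=2)
    return idct(I_hat, type=2, norm="ortho", axis=2)

rng = np.random.default_rng(0)
A = rng.standard_normal((4, 3, 5))
B = rng.standard_normal((3, 2, 5))
C = c_product(A, B)
# Multiplying by the identity tensor should leave A unchanged.
identity_error = np.linalg.norm(c_product(A, identity_tensor(3, 5)) - A)
```

The cost is dominated by the $n_3$ independent slice products, which is why the transform-domain formulation is preferred over forming the block matrix explicitly.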

Next, we give some definitions and remarks on the c-product and related topics.

Definition 2.

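The identity tensor $\mathcal{I}_{n_1 n_1 n_3}$ is the tensor such that each frontal slice of $\widetilde{\mathcal{I}}_{n_1 n_1 n_3}$ is the identity matrix $I_{n_1}$.

An $n_1 \times n_1 \times n_3$ tensor $\mathcal{A}$ is said to be invertible if there exists a tensor $\mathcal{B}$ of order $n_1 \times n_1 \times n_3$ such that

$$\mathcal{A} \star_c \mathcal{B} = \mathcal{I}_{n_1 n_1 n_3} \quad \text{and} \quad \mathcal{B} \star_c \mathcal{A} = \mathcal{I}_{n_1 n_1 n_3}.$$

In that case, we denote $\mathcal{B} = \mathcal{A}^{-1}$. It is clear that $\mathcal{A}$ is invertible if and only if $\mathrm{mat}(\mathcal{A})$ is invertible.

The inner scalar product is defined by

$$\langle \mathcal{A}, \mathcal{B} \rangle = \sum_{i_1=1}^{n_1} \sum_{i_2=1}^{n_2} \sum_{i_3=1}^{n_3} a_{i_1 i_2 i_3}\, b_{i_1 i_2 i_3},$$

and its corresponding norm is given by

$$\|\mathcal{A}\|_F = \sqrt{\langle \mathcal{A}, \mathcal{A} \rangle}.$$

An $n_1 \times n_1 \times n_3$ tensor $\mathcal{Q}$ is said to be orthogonal if

$$\mathcal{Q}^T \star_c \mathcal{Q} = \mathcal{Q} \star_c \mathcal{Q}^T = \mathcal{I}_{n_1 n_1 n_3}.$$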

Definition 3

([1]). A tensor is called f-diagonal if its frontal slices are diagonal matrices. It is called upper triangular if all its frontal slices are upper triangular.

Next we recall the Tensor Singular Value Decomposition of a tensor (Algorithm 2); more details can be found in [19].

Theorem 1.

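Let $\mathcal{A}$ be an $n_1 \times n_2 \times n_3$ real-valued tensor. Then $\mathcal{A}$ can be factored as follows

$$\mathcal{A} = \mathcal{U} \star_c \mathcal{S} \star_c \mathcal{V}^T,$$

where $\mathcal{U}$ and $\mathcal{V}$ are orthogonal tensors of order $n_1 \times n_1 \times n_3$ and $n_2 \times n_2 \times n_3$, respectively, and $\mathcal{S}$ is an f-diagonal tensor of order $n_1 \times n_2 \times n_3$. This factorization is called the Tensor Singular Value Decomposition (c-SVD) of the tensor $\mathcal{A}$.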

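| Algorithm 2 The Tensor SVD (c-SVD). |

| Input: $\mathcal{A} \in \mathbb{R}^{n_1 \times n_2 \times n_3}$. Output: $\mathcal{U}$, $\mathcal{V}$ and $\mathcal{S}$. |

| 1. Compute $\widetilde{\mathcal{A}} = \mathtt{dct}(\mathcal{A},[\,],3)$. |

| 2. Compute each frontal slice of $\widetilde{\mathcal{U}}$, $\widetilde{\mathcal{V}}$ and $\widetilde{\mathcal{S}}$ from $\widetilde{\mathcal{A}}$ as follows |

| (a) for $i = 1, \ldots, n_3$: $[\widetilde{U}^{(i)}, \widetilde{S}^{(i)}, \widetilde{V}^{(i)}] = \mathtt{svd}(\widetilde{A}^{(i)})$ |

| (b) End for |

| 3. Compute $\mathcal{U} = \mathtt{idct}(\widetilde{\mathcal{U}},[\,],3)$, $\mathcal{S} = \mathtt{idct}(\widetilde{\mathcal{S}},[\,],3)$ and $\mathcal{V} = \mathtt{idct}(\widetilde{\mathcal{V}},[\,],3)$. |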
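Algorithm 2 amounts to one matrix SVD per transformed frontal slice. The sketch below illustrates this under the same assumption as before (orthonormal DCT-II along the third mode via `scipy.fft`); the function names are hypothetical, and the transpose of a tensor is taken here to be the slice-wise transpose, which is an assumption consistent with a real transform.

```python
import numpy as np
from scipy.fft import dct, idct

def to_hat(X):
    return dct(X, type=2, norm="ortho", axis=2)

def from_hat(X):
    return idct(X, type=2, norm="ortho", axis=2)

def c_product(A, B):
    return from_hat(np.einsum("ijk,jlk->ilk", to_hat(A), to_hat(B)))

def c_svd(A):
    """Slice-wise SVD in the DCT domain, mapped back to the original domain."""
    n1, n2, n3 = A.shape
    A_hat = to_hat(A)
    U_hat = np.zeros((n1, n1, n3))
    S_hat = np.zeros((n1, n2, n3))
    V_hat = np.zeros((n2, n2, n3))
    for i in range(n3):
        U, s, Vt = np.linalg.svd(A_hat[:, :, i], full_matrices=True)
        U_hat[:, :, i] = U
        S_hat[:len(s), :len(s), i] = np.diag(s)   # f-diagonal slice
        V_hat[:, :, i] = Vt.T
    return from_hat(U_hat), from_hat(S_hat), from_hat(V_hat)

rng = np.random.default_rng(1)
A = rng.standard_normal((5, 4, 3))
U, S, V = c_svd(A)
V_T = V.transpose(1, 0, 2)   # slice-wise transpose (assumed definition)
reconstruction_error = np.linalg.norm(c_product(c_product(U, S), V_T) - A)
```

Keeping only the first r columns of each transformed slice of U, S and V would give a tubal-rank-r truncation in the same way.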

Remark 2.

As for the t-product [19], we can show that if is a c-SVD of the tensor , then we have

where , , and are the frontal slices of the tensors , , and , respectively, and

Theorem 2.

Note that when $n_3 = 1$, this theorem reduces to the well known Eckart–Young theorem for matrices [20].

Definition 4

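(The tensor tubal-rank). Let $\mathcal{A}$ be an $n_1 \times n_2 \times n_3$ tensor and consider its c-SVD $\mathcal{A} = \mathcal{U} \star_c \mathcal{S} \star_c \mathcal{V}^T$. The tensor tubal rank of $\mathcal{A}$, denoted as $\mathrm{rank}_t(\mathcal{A})$, is defined to be the number of non-zero tubes of the f-diagonal tensor $\mathcal{S}$, i.e.,

$$\mathrm{rank}_t(\mathcal{A}) = \#\{\, i : \mathcal{S}(i,i,:) \neq 0 \,\}.$$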

Definition 5.

The multi-rank of the tensor is a vector with the i-th element equal to the rank of the i-th frontal slice of , i.e.,

The well known QR matrix decomposition can also be extended to the tensor case; see [19].

Theorem 3.

Let be a real-valued tensor of order . Then can be factored as follows

where is an orthogonal tensor and is an f-upper triangular tensor.

3. Tensor Principal Component Analysis for Face Recognition

Principal Component Analysis (PCA) is a widely used technique in image classification and face recognition. Many approaches involve a conversion of color images to grayscale in order to reduce the training cost. Nevertheless, for some applications, color is an important feature and tensor based approaches offer the possibility to take it into account. Moreover, especially in the case of facial recognition, it allows the treatment of enriched databases including, for instance, additional biometric information. However, one has to bear in mind that the computational cost is an important issue, as the volume of data can be very large. We first recall some background facts on the matrix based approach.

3.1. The Matrix Case

One of the simplest and most effective PCA approaches used in face recognition systems is the so-called eigenface approach. This approach transforms faces into a small set of essential characteristics, eigenfaces, which are the main components of the initial set of learning images (training set). Recognition is done by projecting a test image in the eigenface subspace, after which the person is classified by comparing its position in eigenface space with the position of known individuals. The advantage of this approach over other face recognition strategies resides in its simplicity, speed and insensitivity to small or gradual changes on the face.

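The process is defined as follows: Consider a set of training faces $I_1$, $I_2$, …, $I_p$. All the face images have the same size: $n \times m$. Each face $I_i$ is transformed into a vector $x_i$ using the operation vec: $x_i = \mathrm{vec}(I_i)$. These vectors are the columns of the $nm \times p$ matrix

$$X = [x_1, x_2, \ldots, x_p].$$

We compute the average image $\mu = \frac{1}{p}\sum_{i=1}^{p} x_i$. Set $\bar{x}_i = x_i - \mu$ and consider the new matrices

$$A = [\bar{x}_1, \bar{x}_2, \ldots, \bar{x}_p] \quad \text{and} \quad C = A A^T.$$

Notice that the $nm \times nm$ covariance matrix $C$ can be very large. Therefore, the computation of the eigenvalues and the corresponding eigenvectors (eigenfaces) can be very difficult. To circumvent this issue, we instead consider the smaller $p \times p$ matrix $L = A^T A$.

Let $v_i$ be an eigenvector of L; then $L v_i = A^T A v_i = \lambda_i v_i$ and

$$A L v_i = A A^T (A v_i) = \lambda_i (A v_i),$$

which shows that $A v_i$ is an eigenvector of the covariance matrix $C = A A^T$.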

The p eigenvectors of are then used to find the p eigenvectors of C that form the eigenface space. We keep only k eigenvectors corresponding to the largest k eigenvalues (eigenfaces corresponding to small eigenvalues can be omitted, as they explain only a small part of characteristic features of the faces.)
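The passage from the small $p \times p$ eigenproblem to the eigenfaces can be illustrated on synthetic data. This is a minimal sketch with random vectors standing in for vectorized face images; the variable names (`A` for the centred data matrix, `L` for the small Gram matrix) and the sizes are assumptions chosen for illustration.

```python
import numpy as np

rng = np.random.default_rng(0)
n_pixels, p, k = 400, 12, 5                  # synthetic sizes, not taken from the paper
X = rng.standard_normal((n_pixels, p))       # columns play the role of vectorized faces

mean_face = X.mean(axis=1, keepdims=True)
A = X - mean_face                            # centred data matrix
L = A.T @ A                                  # small p x p matrix instead of A A^T

eigvals, V = np.linalg.eigh(L)               # eigenvalues in ascending order
order = np.argsort(eigvals)[::-1][:k]        # keep the k largest
eigvals, V = eigvals[order], V[:, order]

U = A @ V                                    # eigenvectors of A A^T (the eigenfaces)
U /= np.linalg.norm(U, axis=0)               # normalize each eigenface

# Check that (A A^T) u_i = lambda_i u_i without forming A A^T explicitly.
residual = np.linalg.norm(A @ (A.T @ U) - U * eigvals)
# The same eigenvalues are the squared leading singular values of A.
leading_sv_squared = np.linalg.svd(A, compute_uv=False)[:k] ** 2
```

Only products with A and its transpose are needed, so the large $nm \times nm$ matrix is never stored.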

The next step consists of projecting each image of the training sample onto the eigenface space spanned by the orthogonal vectors :

The matrix is an orthogonal projector onto the subspace . A face image can be projected onto this face space as

We now give the steps of an image classification process based on this approach:

Let be a test vector-image and project it onto the face space to get Notice that the reconstructed image is given by

Compute the Euclidean distance

A face is classified as belonging to the class l when the minimum l is below some chosen threshold Set

and let be the distance between the original test image x and its reconstructed image : . Then

- If , then the input image is not even a face image and not recognized.

- If and for all i then the input image is a face image but it is an unknown image face.

- If and for all i then the input images are the individual face images associated with the class vector .

We now give some basic facts on the relation between the singular value decomposition (SVD) and PCA in this context:

Consider the Singular Value Decomposition of the matrix A as

where U and V are orthonormal matrices of sizes and p, respectively. The singular values are the square roots of the eigenvalues of the matrix , the ’s are the left vectors and the are the right vectors. We have

which is the eigendecomposition of the matrix L and

In the PCA method, the projected eigenface space is then generated by the first columns of the unitary matrix U derived from the SVD decomposition of the matrix .

As only a small number k of the largest singular values are needed in PCA, we can use the well known Golub–Kahan algorithm to compute these wanted singular values and the corresponding singular vectors to define the projected subspace.
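To make the idea concrete, here is a minimal sketch of the matrix Golub–Kahan bidiagonalization in its upper bidiagonal form, followed by an SVD of the small bidiagonal matrix. It is an illustration under stated assumptions, not the implementation used in the experiments: full reorthogonalization is added for numerical robustness, and the test matrix with geometrically decaying singular values is synthetic.

```python
import numpy as np

def golub_kahan(A, k, seed=0):
    """k steps of Golub-Kahan bidiagonalization: A @ V = U @ B, B upper bidiagonal."""
    m, n = A.shape
    rng = np.random.default_rng(seed)
    U, V = np.zeros((m, k)), np.zeros((n, k))
    alpha, beta = np.zeros(k), np.zeros(k - 1)
    v = rng.standard_normal(n)
    V[:, 0] = v / np.linalg.norm(v)
    for i in range(k):
        u = A @ V[:, i]
        if i > 0:
            u -= beta[i - 1] * U[:, i - 1]
        u -= U[:, :i] @ (U[:, :i].T @ u)             # full reorthogonalization
        alpha[i] = np.linalg.norm(u)
        U[:, i] = u / alpha[i]
        if i < k - 1:
            w = A.T @ U[:, i] - alpha[i] * V[:, i]
            w -= V[:, :i + 1] @ (V[:, :i + 1].T @ w)  # full reorthogonalization
            beta[i] = np.linalg.norm(w)
            V[:, i + 1] = w / beta[i]
    B = np.diag(alpha) + np.diag(beta, 1)
    return U, B, V

# Synthetic matrix with known singular values 1, 1/2, 1/4, ...
rng = np.random.default_rng(42)
Q1, _ = np.linalg.qr(rng.standard_normal((60, 40)))
Q2, _ = np.linalg.qr(rng.standard_normal((40, 40)))
sigma = 0.5 ** np.arange(40)
A = (Q1 * sigma) @ Q2.T

U, B, V = golub_kahan(A, k=15)
P, s, _ = np.linalg.svd(B)
approx_singular_values = s[:3]                # approximations of the 3 largest
approx_left_vectors = U @ P[:, :3]            # approximate left singular vectors
error = np.max(np.abs(approx_singular_values - sigma[:3]))
relation_error = np.linalg.norm(A @ V - U @ B)
```

Only k matrix-vector products with A and with its transpose are required, which is the source of the savings when k is much smaller than the dimensions of A.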

In the next section, we explain how the SVD based PCA can be extended to tensors and propose an algorithm for facial recognition in this context.

4. The Tensor Golub–Kahan Method

As explained in the previous section, it is important to take into account the potentially large size of datasets, especially for the training process. The idea of extending the matrix Golub–Kahan bidiagonalization algorithm to the tensor context has been explored in recent years for large and sparse tensors [21]. In [1], the authors established the foundations of a remarkable theoretical framework for tensor decompositions in association with the tensor-tensor t- or c-products, making it possible to generalize the main notions of linear algebra to tensors.

4.1. The Tensor C-Global Golub–Kahan Algorithm

Let be a tensor and an integer. The Tensor c-global Golub–Kahan bidiagonalization algorithm (associated to the c-product) is described in Algorithm 3.

| Algorithm 3 The Tensor Global Golub–Kahan algorithm (TGGKA). |

| 1. Choose a tensor such that and set . |

| 2. For |

| (a) , |

| (b) , |

| (c) , |

| (d) , |

| (e) . |

| (f) . |

| End |
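A global Golub–Kahan process of this kind can be sketched as follows. This is a hedged illustration, assuming the standard upper bidiagonal recurrences carried out with the c-product and with scalars obtained from the Frobenius norm; the function name `tensor_global_golub_kahan`, the block width `s` and the random starting tensor are assumptions, and the orthonormal DCT-II from `scipy.fft` is again assumed for the transform.

```python
import numpy as np
from scipy.fft import dct, idct

def c_product(A, B):
    A_hat = dct(A, type=2, norm="ortho", axis=2)
    B_hat = dct(B, type=2, norm="ortho", axis=2)
    return idct(np.einsum("ijk,jlk->ilk", A_hat, B_hat), type=2, norm="ortho", axis=2)

def tensor_global_golub_kahan(A, s, k, seed=0):
    """k global Golub-Kahan steps on the operator X -> A *c X (assumed recurrences)."""
    n1, n2, n3 = A.shape
    A_T = A.transpose(1, 0, 2)                      # slice-wise transpose
    rng = np.random.default_rng(seed)
    V1 = rng.standard_normal((n2, s, n3))
    Vs = [V1 / np.linalg.norm(V1)]                  # Frobenius-normalized start
    Us, alpha, beta = [], [], []
    U_prev, beta_prev = np.zeros((n1, s, n3)), 0.0
    for _ in range(k):
        U = c_product(A, Vs[-1]) - beta_prev * U_prev
        a = np.linalg.norm(U)                       # Frobenius norm of the 3-way array
        U = U / a
        W = c_product(A_T, U) - a * Vs[-1]
        b = np.linalg.norm(W)
        Us.append(U); Vs.append(W / b)
        alpha.append(a); beta.append(b)
        U_prev, beta_prev = U, b
    B = np.diag(alpha) + np.diag(beta[:-1], 1)      # k x k upper bidiagonal matrix
    return Us, Vs, B

rng = np.random.default_rng(3)
A = rng.standard_normal((6, 5, 3))
Us, Vs, B = tensor_global_golub_kahan(A, s=2, k=4)
# The generated tensors should be orthonormal for the Frobenius inner product.
gram = np.array([[np.sum(Ui * Uj) for Uj in Us] for Ui in Us])
# Second column of the bidiagonal relation: A *c V_2 = beta_1 U_1 + alpha_2 U_2.
recurrence_error = np.linalg.norm(c_product(A, Vs[1]) - (B[0, 1] * Us[0] + B[1, 1] * Us[1]))
```

No reorthogonalization is performed in this sketch, so it should only be trusted for a small number of steps.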

Let be the upper bidiagonal matrix defined by

Let and be the and tensors with frontal slices and , respectively, and let and be the and tensors with frontal slices and , respectively. We set

with

Then, we have the following results [13].

Proposition 1.

The tensors produced by the tensor c-global Golub–Kahan algorithm satisfy the following relations

where the product ⊛ is defined by:

We set the following notation:

where is the i-th column of the matrix .

We note that since the matrix is bidiagonal, is symmetric and tridiagonal, and thus Algorithm 3 computes the same information as the tensor global Lanczos algorithm applied to the symmetric matrix .

4.2. Tensor Tubal Golub–Kahan Bidiagonalisation Algorithm

First, we introduce some new products that will be useful in this section.

Definition 6

([13]). Let and , the tube fiber tensor product is an tensor defined by

Definition 7

([13]). Let , , and be tensors. The block tensor

is defined by compositing the frontal slices of the four tensors.

Definition 8.

Let where , we denote by TVect() the tensor vectorization operator: obtained by superposing the lateral slices of , for . In other words, for a tensor where , we have:

Remark 3.

The TVect operator transforms a given tensor into a lateral slice. It is easy to see that when we take $n_3 = 1$, the TVect operator coincides with the operation vec, which transforms a matrix into a vector.

Proposition 2.

Let be a tensor of size , we have

Definition 9.

Let where . We define the range space of denoted by as the c-linear span of the lateral slices of

Definition 10

([14]). Let and , the c-Kronecker product of and is the tensor in which the i-th frontal slice of their transformed tensor is given by:

where and are the i-th frontal slices of the tensors and , respectively.

We introduce now a normalization algorithm allowing us to decompose the non-zero tensor , such that:

where is an invertible tube fiber of size and and e is the tube fiber defined by .

This procedure is described in Algorithm 4.

| Algorithm 4 Normalization algorithm (Normalize). |

| 1. Input. and a tolerance . |

| 2. Output. The tensor and the tube fiber . |

| 3. Set |

| (a) For |

| i |

| ii if , |

| iii else ; |

| ; |

| (b) End |

| 4. , |

| 5. End |
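A normalization of this type can be sketched slice by slice in the transform domain. The code below is an assumption-laden illustration rather than a transcription of Algorithm 4: each transformed frontal slice is scaled to unit Frobenius norm, the scaling factors form the transformed tube, and a slice whose norm falls below the tolerance is replaced by a normalized random slice with a zero factor. The function name `normalize` and the default tolerance are hypothetical.

```python
import numpy as np
from scipy.fft import dct, idct

def normalize(C, tol=1e-12, seed=0):
    """Split C into a tensor Q with unit-norm transformed slices and a tube a."""
    rng = np.random.default_rng(seed)
    Q_hat = dct(C, type=2, norm="ortho", axis=2)
    n3 = C.shape[2]
    a_hat = np.zeros(n3)
    for j in range(n3):
        a_hat[j] = np.linalg.norm(Q_hat[:, :, j])       # Frobenius norm of slice j
        if a_hat[j] > tol:
            Q_hat[:, :, j] /= a_hat[j]
        else:
            R = rng.standard_normal(Q_hat[:, :, j].shape)
            Q_hat[:, :, j] = R / np.linalg.norm(R)      # arbitrary unit-norm slice
            a_hat[j] = 0.0
    Q = idct(Q_hat, type=2, norm="ortho", axis=2)
    a = idct(a_hat.reshape(1, 1, n3), type=2, norm="ortho", axis=2)
    return Q, a

rng = np.random.default_rng(5)
C = rng.standard_normal((5, 2, 4))
Q, a = normalize(C)

Q_hat = dct(Q, type=2, norm="ortho", axis=2)
slice_norms = np.array([np.linalg.norm(Q_hat[:, :, j]) for j in range(4)])
# In the transform domain the tube acts as one scalar per frontal slice.
rebuilt = idct(dct(a, type=2, norm="ortho", axis=2) * Q_hat, type=2, norm="ortho", axis=2)
```

Working in the transform domain turns the tube-valued normalization into $n_3$ ordinary scalar normalizations.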

Next, we give the Tensor Tube Global Golub–Kahan (TTGGKA) algorithm; see [13]. Let be a tensor and let be an integer. The Tensor Tube Global Golub–Kahan bidiagonalization process is described in Algorithm 5.

| Algorithm 5 The Tensor Tube Global Golub–Kahan algorithm (TTGGKA). |

| 1. Choose a tensor such that and set . |

| 2. For |

| (a) , |

| (b) . |

| (c) , |

| (d) . |

| End |

Let be the upper bidiagonal tensor (each frontal slice of is a bidiagonal matrix) and the defined by

Let and be the and tensors with frontal slices and , respectively, and let and be the and tensors with frontal slices and , respectively. We set

Then, we have the following results.

Proposition 3.

The tensors produced by the tensor TTGGKA algorithm satisfy the following relations

where with 1 in the position and zeros in the other positions, the identity tensor and is the fiber tube in the position of the tensor .

5. The Tensor Tubal PCA Method

In this section, we describe a tensor-SVD based PCA method for order 3 tensors, which naturally arise in problems involving images such as facial recognition. As in the matrix case, we consider a set of N training images, each one being encoded as an $n_1 \times n_2 \times n_3$ real tensor. In the case of RGB images, each frontal slice would contain the encoding for one color layer ($n_3 = 3$), but in order to be able to store additional features, the case $n_3 > 3$ could be contemplated.

Let us consider one training image . Each one of the frontal slices of is resized into a column vector of length and we form a tensor defined by . Applying this procedure to each training image, we obtain N tensors of size . The average image tensor is defined as and we define the training tensor , where .

Let us now consider the c-SVD decomposition of , where and are orthogonal tensors of size and , respectively, and S is an f-diagonal tensor of size .

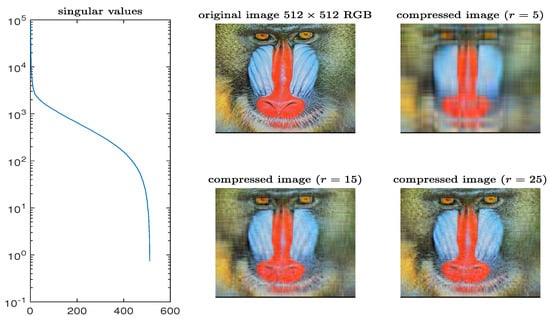

In the matrix context, it is known that just a few singular values suffice to capture the main features of an image. Therefore, applying this idea to each one of the three color layers, an RGB image can be approximated by a low tubal rank tensor. Let us consider an image tensor and its c-SVD decomposition . Choosing an integer r such that , we can approximate by the r tubal rank tensor

In Figure 1, we represented an RGB image and the images obtained for various truncation indices. On the left part, we plotted the singular values of one color layer of the RGB tensor (the exact same behaviour is observed on the two other layers). The rapid decrease of the singular values explains the good quality of the compressed images even for small truncation indices.

Applying this idea to our problem, we want to be able to obtain truncated tensor SVDs of the training tensor , without needing to compute the whole c-SVD. After k iterations of the TTGGKA algorithm (for the case ), we obtain three tensors , and as defined in Equation (21) such that

Let the c-SVD of , noticing that is much smaller than . Then the first tubal singular values and the left tubal singular tensors of are given by and , respectively, for , see [1] for more details.

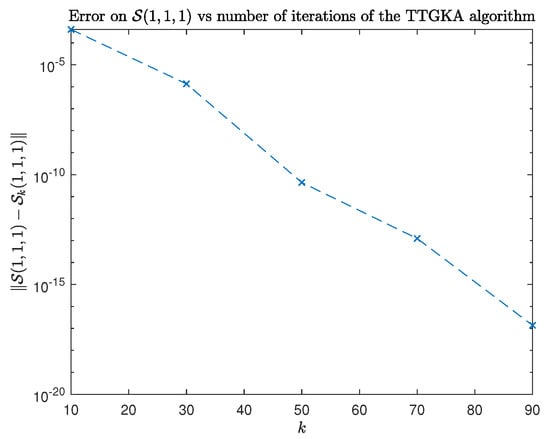

In order to illustrate the ability to approximate the first singular elements of a tensor using the TTGGKA algorithm, we considered a real tensor whose frontal slices were matrices generated by a finite difference discretization method of differential operators. In Figure 2, we displayed the error on the first diagonal coefficient of the first frontal slice as a function of the number of iterations of the Tensor Tube Golub–Kahan algorithm, where is the c-SVD of .

In Table 1, we reported the errors on the tensor Frobenius norms of the singular tubes as a function of the number k of iterations of the Tensor Tube Golub–Kahan algorithm.

The same behaviour was observed on all the other frontal slices. This example illustrates the ability of the TTGGKA algorithm to approximate the largest singular tubes.

The projection space is generated by the lateral slices of the tensor derived from the TTGGKA algorithm and the c-SVD decomposition of the bidiagonal tensor , i.e., the c-linear span of the first k lateral slices of , see [1,19] for more details.

The steps of the Tensor Tubal PCA algorithm for face recognition which finds the closest image in the training database for a given image are summarized in Algorithm 6:

| Algorithm 6 The Tensor Tubal PCA algorithm (TTPCA). |

| 1. Inputs Training Image tensor (N images), mean image tensor ,Test image , index of truncation r, k=number of iterations of the TTGGKA algorithm (). |

| 2. Output Closest image in the Training database. |

| 3. Run k iterations of the TTGGKA algorithm to obtain tensors and |

| 4. Compute c-SVD |

| 5. Compute the projection tensor , where |

| 6. Compute the projected Training tensor and projected centred test image |

| 7. Find |
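The overall recognition pipeline can be sketched on synthetic data. To keep the example short, the sketch below swaps in a direct slice-wise SVD of the centred training tensor in place of the TTGGKA iterations, so it illustrates the projection and nearest-image search only, not the Golub–Kahan step. The storage layout (one lateral slice per image), the function names and the sizes are assumptions; coordinates in the basis of the leading left singular tensor are compared, which gives the same distances as comparing the projected images because that basis is orthonormal.

```python
import numpy as np
from scipy.fft import dct, idct

def to_hat(X):
    return dct(X, type=2, norm="ortho", axis=2)

def from_hat(X):
    return idct(X, type=2, norm="ortho", axis=2)

def c_product(A, B):
    return from_hat(np.einsum("ijk,jlk->ilk", to_hat(A), to_hat(B)))

def leading_left_singular_tensor(X, r):
    """First r left singular lateral slices, from slice-wise SVDs in the DCT domain."""
    X_hat = to_hat(X)
    U_hat = np.stack(
        [np.linalg.svd(X_hat[:, :, i], full_matrices=False)[0][:, :r]
         for i in range(X.shape[2])],
        axis=2,
    )
    return from_hat(U_hat)

def closest_training_index(train, test, r):
    """train: n x N x n3 (one lateral slice per image), test: n x 1 x n3."""
    mean = train.mean(axis=1, keepdims=True)          # mean image tensor
    X = train - mean                                  # centred training tensor
    U_r = leading_left_singular_tensor(X, r)
    U_r_T = U_r.transpose(1, 0, 2)
    train_coords = c_product(U_r_T, X)                # r x N x n3
    test_coords = c_product(U_r_T, test - mean)       # r x 1 x n3
    distances = np.linalg.norm(train_coords - test_coords, axis=(0, 2))
    return int(np.argmin(distances))

rng = np.random.default_rng(7)
train = rng.standard_normal((50, 10, 3))              # 10 synthetic "images"
test = train[:, [7], :] + 1e-3 * rng.standard_normal((50, 1, 3))
match = closest_training_index(train, test, r=6)
```

In a large-scale setting, the call to `leading_left_singular_tensor` is the part that the Golub–Kahan approach is meant to replace.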

In the next section, we consider image identification problems on various databases.

6. Numerical Tests

In this section, we consider three examples of image identification. In the case of grayscale images, the global version of Golub–Kahan was used to compute the dominant singular values in order to perform a PCA on the data. For the two other situations, we used the Tensor Tubal PCA (TTPCA) method based on the Tube Global Golub–Kahan (TTGGKA) algorithm in order to perform facial recognition on RGB images. The tests were performed with Matlab 2019a, on an Intel i5 laptop with 16 GB of memory. We considered various truncation indices r for which the recognition rates were computed. We also reported the CPU time for the training process.

6.1. Example 1

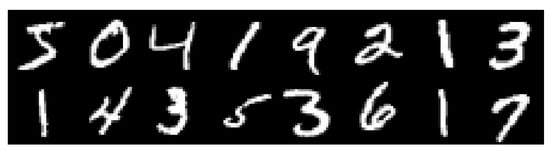

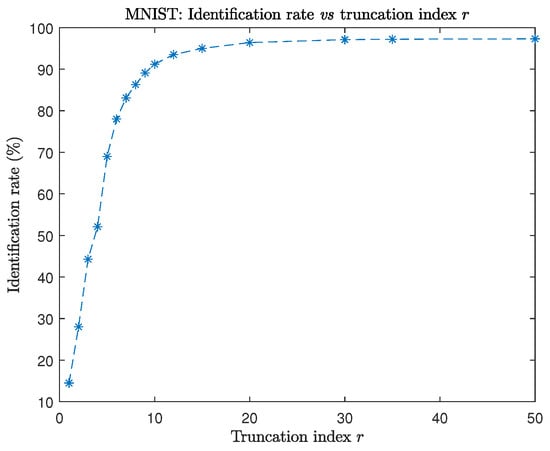

In this example, we considered the MNIST database of handwritten digits [22]. The database contains two subsets of 28 × 28 grayscale images (60,000 training images and 10,000 test images). A sample is shown in Figure 3. Each image was vectorized as a vector of length 28 × 28 = 784 and, following the process described in Section 3.1, we formed the training and the test matrices of sizes 784 × 60,000 and 784 × 10,000, respectively.

Both matrices were centred by subtracting the mean training image and the Golub–Kahan algorithm was used to generate an approximation of r dominant singular values and left singular vectors , .

Let us denote the subspace spanned by the columns of . Let t be a test image and its projection onto . The closest image in the training dataset is determined by computing

where .

For various truncation indices r, we tested each image of the test subset and computed the recognition rate (i.e., a test is successful if the digit is correctly identified). The results are plotted in Figure 4 and show that a good level of accuracy is obtained with only a few approximate singular values. Given the large size of the training matrix, this validates the interest of computing only a few singular values with the Golub–Kahan algorithm.

6.2. Example 2

In this example, we used the Georgia Tech database GTDB_crop [23], which contains 750 face images of 50 persons under different illumination conditions, facial expressions and face orientations, as shown in Figure 5. The RGB JPEG images were resized to 100 × 100 × 3 tensors.

Each image file is coded as a 100 × 100 × 3 tensor and transformed into a 10,000 × 1 × 3 tensor as explained in the previous section. We built the training and test tensors as follows: from the 15 pictures of each person in the database, five pictures were randomly chosen and stored in the test folder and the 10 remaining pictures were used for the training tensor. Hence, the database was partitioned into two subsets containing 250 and 500 items, respectively, at each iteration of the simulation.

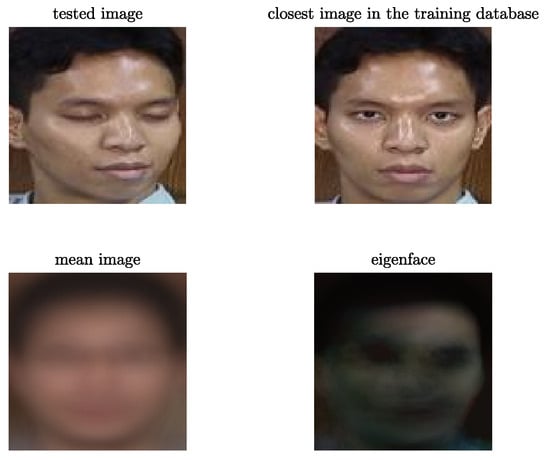

We applied the TTGGKA based Algorithm 6 for various truncation indices. In Figure 6, we represented a test image (top left position), the closest image in the database (top right), the mean image of the training database (bottom left) and the eigenface associated to the test image (bottom right).

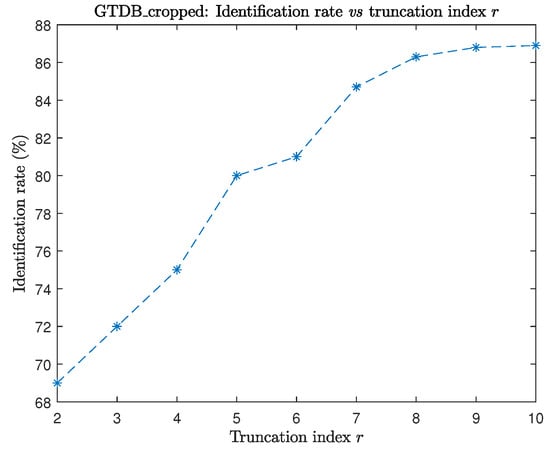

In order to compute the rate of recognition, we ran 100 simulations, obtained the number of successes (i.e., a test is successful if the person is correctly identified) and reported the best identification rates, as a function of the truncation index r, in Figure 7.

The results match the performances observed in the literature [24] for this database and it confirms that the use of a Golub–Kahan strategy is interesting especially because, in terms of training, the Tube Tensor PCA algorithm required only 5 s instead of 25 s when using a c-SVD.

6.3. Example 3

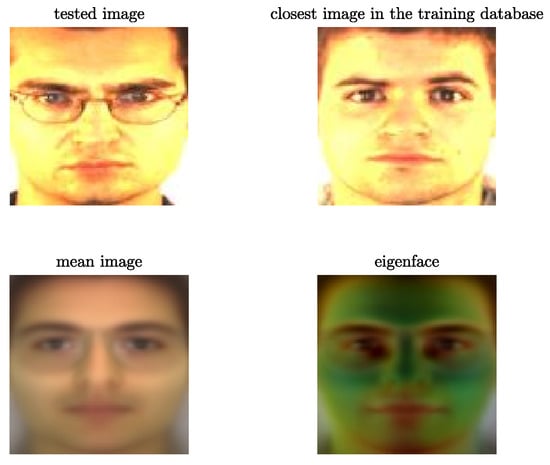

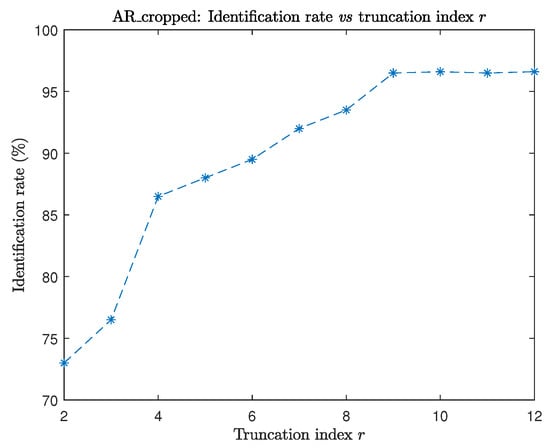

In this third example, we used the larger AR face database (cropped version) (Face crops) [9], which contains 2600 bitmap pictures of human faces (50 males and 50 females, 26 pictures per person), with different lighting conditions, facial expressions and face orientations. The bitmap pictures were resized to 100 × 100 JPEG images. The same protocol as for Example 2 was followed: we partitioned the set of images into two subsets. Out of the 26 pictures of each person, 6 pictures were randomly chosen as test images and the remaining 20 were put into the training folder. The training process took 24 s while it would have taken 81.5 s if using a c-SVD. An example of a test image, the closest match in the dataset, the mean image and its associated eigenface are shown in Figure 8.

We applied our approach (TTPCA) to the 10,000 training tensor and plotted the recognition rate as a function of the truncation index in Figure 9.

For all examples, it is worth noticing that, as expected in face identification problems, only a few of the first largest singular elements suffice to capture the main features of an image. Therefore, the Golub–Kahan based strategies such as the TTPCA method are an interesting choice.

7. Conclusions

In this manuscript, we focused on two types of Golub–Kahan factorizations. We used the recent advances in the field of tensor factorization and showed that this approach is efficient for image identification. The main feature of this approach resides in the ability of the Global Golub–Kahan algorithms to approximate the dominant singular elements of a training matrix or tensor without needing to compute the SVD. This is particularly important as the matrices and tensors involved in this type of application can be very large. Moreover, in the case for which color has to be taken into account, this approach does not involve a conversion to grayscale, which can be very important for some applications. In future work, we would like to study the feasibility of implementing the promising randomized PCA approaches in the Golub–Kahan tensor algorithm in order to reduce the computational cost of the training process in the case of very large datasets.

Author Contributions

Conceptualization, M.H., K.J., C.K. and M.M.; methodology, M.H. and K.J.; software, M.H.; validation, M.H., K.J., C.K. and M.M.; writing—original draft preparation, M.H., K.J., C.K. and M.M.; writing—review and editing, M.H., K.J., C.K. and M.M.; visualization, M.H., K.J., C.K. and M.M.; supervision, K.J.; project administration, M.H. and K.J. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

Not applicable.

Acknowledgments

Mustapha Hached acknowledges support from the Labex CEMPI (ANR-11-LABX-0007-01).

Conflicts of Interest

The authors declare no conflict of interest.

References

1. Kilmer, M.E.; Braman, K.; Hao, N.; Hoover, R.C. Third-order tensors as operators on matrices: A theoretical and computational framework with applications in imaging. SIAM J. Matrix Anal. Appl. 2013, 34, 148–172.

2. Kolda, T.G.; Bader, B.W. Tensor Decompositions and Applications. SIAM Rev. 2009, 51, 455–500.

3. Zhang, J.; Saibaba, A.K.; Kilmer, M.E.; Aeron, S. A randomized tensor singular value decomposition based on the t-product. Numer. Linear Algebra Appl. 2018, 25, e2179.

4. Cai, S.; Luo, Q.; Yang, M.; Li, W.; Xiao, M. Tensor robust principal component analysis via non-convex low rank approximation. Appl. Sci. 2019, 9, 1411.

5. Kong, H.; Xie, X.; Lin, Z. t-Schatten-p norm for low-rank tensor recovery. IEEE J. Sel. Top. Signal Process. 2018, 12, 1405–1419.

6. Lin, Z.; Chen, M.; Ma, Y. The augmented Lagrange multiplier method for exact recovery of corrupted low-rank matrices. arXiv 2010, arXiv:1009.5055.

7. Kang, Z.; Peng, C.; Cheng, Q. Robust PCA via nonconvex rank approximation. In Proceedings of the 2015 IEEE International Conference on Data Mining, Atlantic City, NJ, USA, 14–17 November 2015.

8. Lu, C.; Feng, J.; Chen, Y.; Liu, W.; Lin, Z.; Yan, S. Tensor Robust Principal Component Analysis with a New Tensor Nuclear Norm. IEEE Trans. Pattern Anal. Mach. Intell. 2020, 42, 925–938.

9. Martinez, A.M.; Kak, A.C. PCA versus LDA. IEEE Trans. Pattern Anal. Mach. Intell. 2001, 23, 228–233.

10. Guide, M.E.; Ichi, A.E.; Jbilou, K.; Sadaka, R. Tensor Krylov subspace methods via the T-product for color image processing. arXiv 2020, arXiv:2006.07133.

11. Brazell, M.; Li, N.; Navasca, C.; Tamon, C. Solving Multilinear Systems Via Tensor Inversion. SIAM J. Matrix Anal. Appl. 2013, 34, 542–570.

12. Beik, F.P.A.; Jbilou, K.; Najafi-Kalyani, M.; Reichel, L. Golub–Kahan bidiagonalization for ill-conditioned tensor equations with applications. Numer. Algorithms 2020, 84, 1535–1563.

13. Ichi, A.E.; Jbilou, K.; Sadaka, R. On some tensor tubal-Krylov subspace methods via the T-product. arXiv 2020, arXiv:2010.14063.

14. Guide, M.E.; Ichi, A.E.; Jbilou, K. Discrete cosine transform LSQR and GMRES methods for multidimensional ill-posed problems. arXiv 2021, arXiv:2103.11847.

15. Vasilescu, M.A.O.; Terzopoulos, D. Multilinear image analysis for facial recognition. In Proceedings of the Object Recognition Supported by User Interaction for Service Robots, Quebec City, QC, Canada, 11–15 August 2002; pp. 511–514.

16. Jain, A. Fundamentals of Digital Image Processing; Prentice–Hall: Englewood Cliffs, NJ, USA, 1989.

17. Ng, M.K.; Chan, R.H.; Tang, W. A fast algorithm for deblurring models with Neumann boundary conditions. SIAM J. Sci. Comput. 1999, 21, 851–866.

18. Kernfeld, E.; Kilmer, M.; Aeron, S. Tensor-tensor products with invertible linear transforms. Linear Algebra Appl. 2015, 485, 545–570.

19. Kilmer, M.E.; Martin, C.D. Factorization strategies for third-order tensors. Linear Algebra Appl. 2011, 435, 641–658.

20. Golub, G.H.; Van Loan, C.F. Matrix Computations, 3rd ed.; Johns Hopkins University Press: Baltimore, MD, USA, 1996.

21. Savas, B.; Eldén, L. Krylov-type methods for tensor computations I. Linear Algebra Appl. 2013, 438, 891–918.

22. LeCun, Y.; Cortes, C.; Burges, C. The MNIST Database. Available online: http://yann.lecun.com/exdb/mnist/ (accessed on 22 February 2021).

23. Nefian, A.V. Georgia Tech Face Database. Available online: http://www.anefian.com/research/face_reco.htm (accessed on 22 February 2021).

24. Wang, S.; Sun, M.; Chen, Y.; Pang, E.; Zhou, C. STPCA: Sparse tensor Principal Component Analysis for feature extraction. In Proceedings of the 21st International Conference on Pattern Recognition (ICPR2012), Tsukuba, Japan, 11–15 November 2012; pp. 2278–2281.

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).